State-Space Planning

- Slides: 31

State-Space Planning

Sources:

- Ch. 3
- Appendix A
- Slides from Dana Nau's lecture

Dr. Héctor Muñoz-Avila

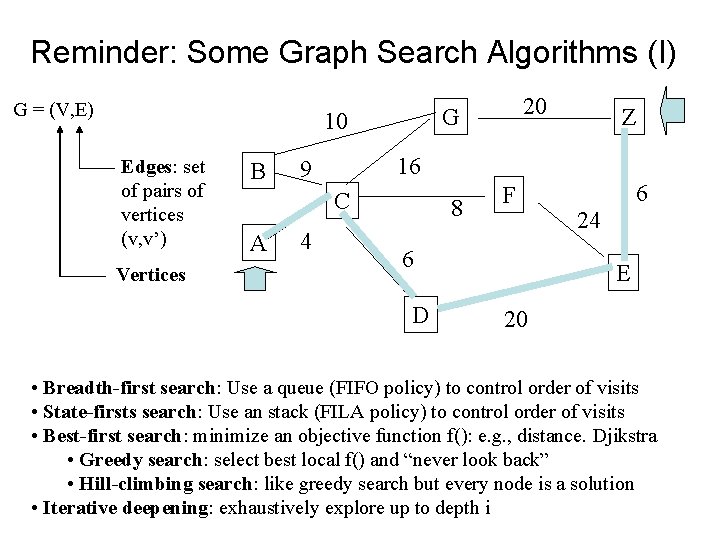

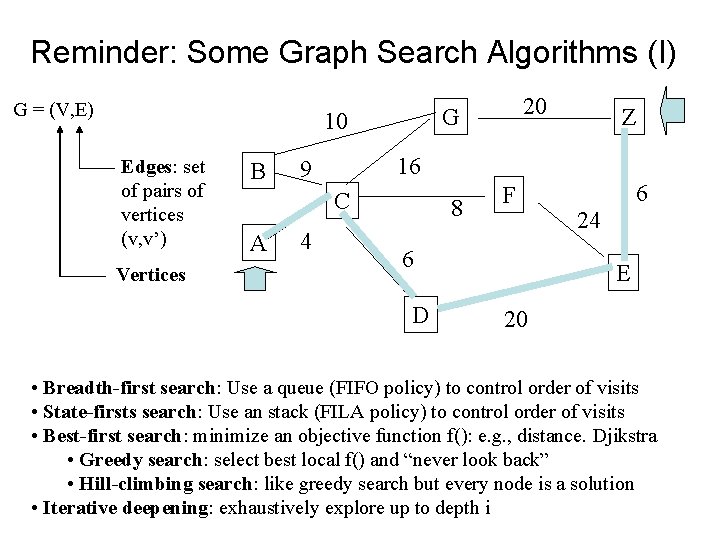

Reminder: Some Graph Search Algorithms (I)

G = (V, E), where V is the set of vertices and E is the set of edges: pairs of vertices (v, v')

[Figure: weighted graph with vertices A, B, C, D, E, F, G, Z]

- Breadth-first search: use a queue (FIFO policy) to control the order of visits
- Depth-first search: use a stack (LIFO policy) to control the order of visits
- Best-first search: minimize an objective function f(), e.g., distance (Dijkstra)
- Greedy search: select the best local f() and "never look back"
- Hill-climbing search: like greedy search, but every node is a solution
- Iterative deepening: exhaustively explore up to depth i
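The queue-versus-stack distinction can be sketched in a few lines. This is a minimal illustration, assuming a hypothetical toy graph `GRAPH` (not the graph on the slide) and a helper name `traverse` chosen here for the example.

```python
from collections import deque

def traverse(graph, start, use_queue=True):
    """Return the visit order from `start`: BFS if use_queue, else DFS."""
    frontier = deque([start])
    visited = []
    while frontier:
        # FIFO (popleft) gives breadth-first; LIFO (pop) gives depth-first.
        node = frontier.popleft() if use_queue else frontier.pop()
        if node in visited:
            continue
        visited.append(node)
        for nxt in graph.get(node, []):
            if nxt not in visited:
                frontier.append(nxt)
    return visited

# Hypothetical toy graph, for illustration only.
GRAPH = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": []}

print(traverse(GRAPH, "A", use_queue=True))   # breadth-first order
print(traverse(GRAPH, "A", use_queue=False))  # depth-first order
```

The only difference between the two searches is which end of the frontier is popped.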

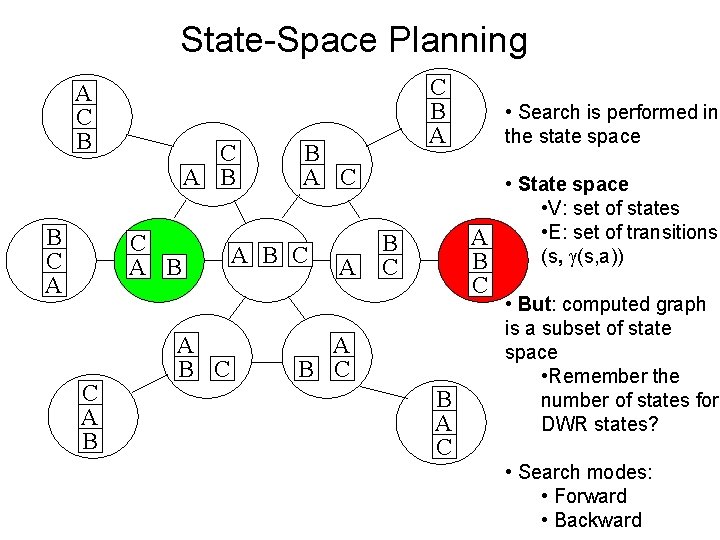

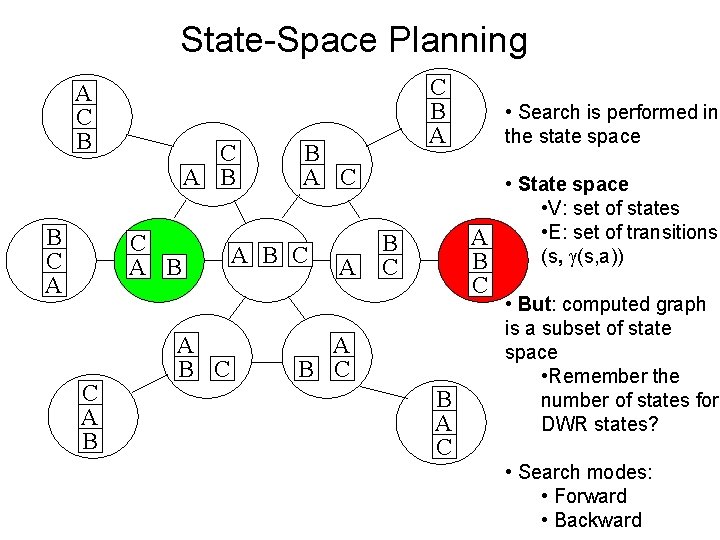

State-Space Planning

- Search is performed in the state space
- State space:
  - V: set of states
  - E: set of transitions $(s, \gamma(s, a))$
- But: the computed graph is a subset of the state space
  - Remember the number of states for DWR?
- Search modes:
  - Forward
  - Backward

[Figure: fragment of the blocks-world state space over blocks A, B, C]

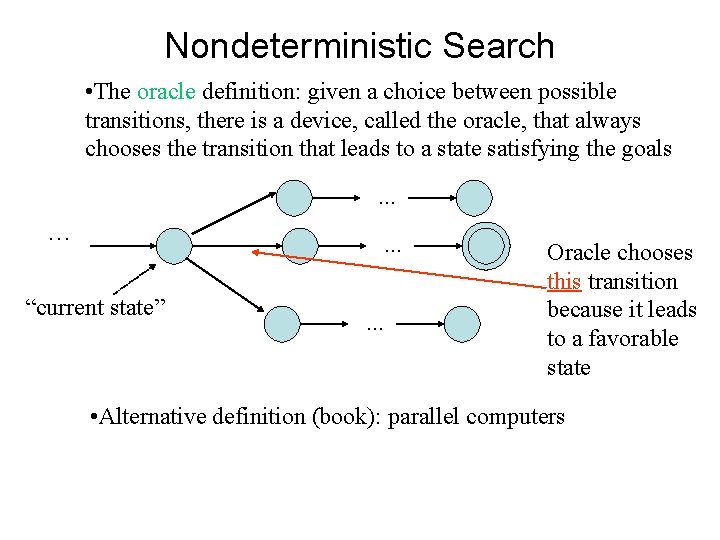

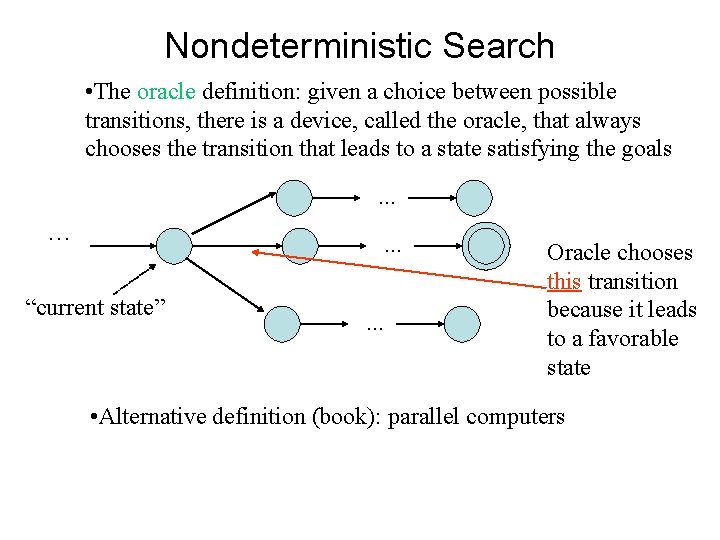

Nondeterministic Search

- The oracle definition: given a choice between possible transitions, there is a device, called the oracle, that always chooses the transition that leads to a state satisfying the goals
- Alternative definition (book): parallel computers

[Figure: several transitions leaving the "current state"; the oracle chooses one transition because it leads to a favorable state]

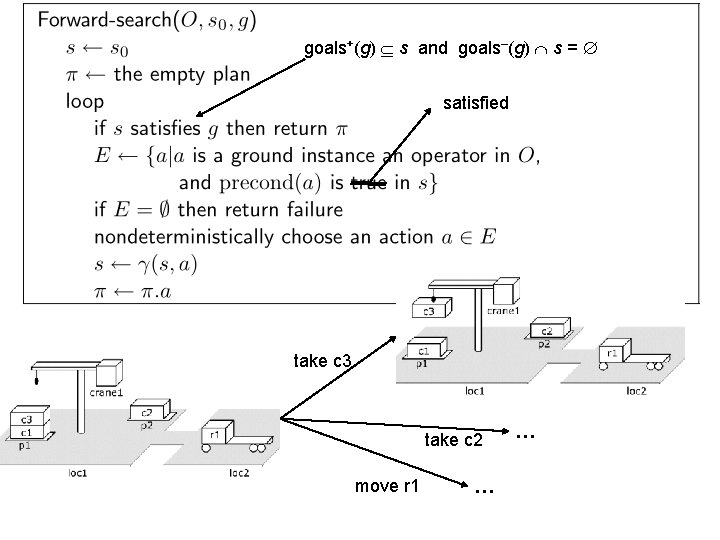

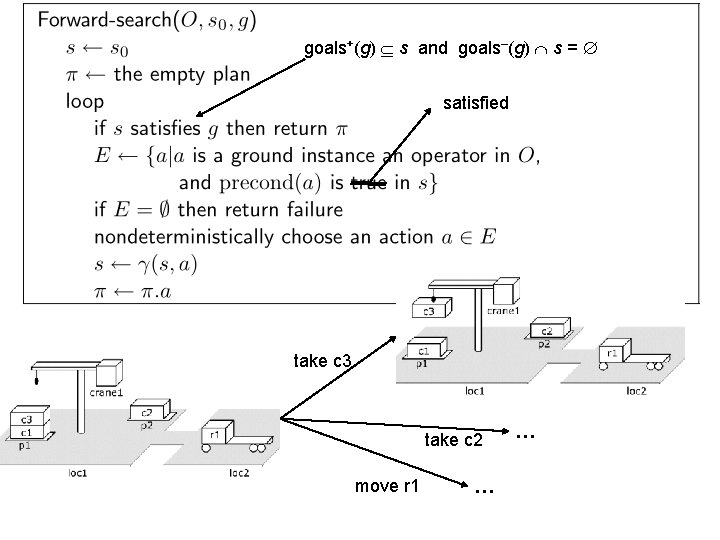

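Forward Search

- A state s satisfies the goal g when $\text{goals}^+(g) \subseteq s$ and $\text{goals}^-(g) \cap s = \emptyset$

[Figure: Forward-search procedure, with example transitions take c3, take c2, move r1, …]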
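A deterministic version of forward search can be sketched as breadth-first search over states. This is only a sketch under assumptions: states are sets of ground atoms, the `Action` tuple and the toy actions `move-l1-l2` and `take-c` are hypothetical names introduced here, and breadth-first order stands in for the nondeterministic choice.

```python
from collections import deque, namedtuple

Action = namedtuple("Action", "name precond add delete")

def satisfies(state, goal_pos, goal_neg=frozenset()):
    """goals+(g) is a subset of s and goals-(g) does not intersect s."""
    return goal_pos <= state and not (goal_neg & state)

def gamma(state, action):
    """State transition: remove negative effects, add positive effects."""
    return (state - action.delete) | action.add

def forward_search(s0, actions, goal_pos, goal_neg=frozenset()):
    start = frozenset(s0)
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, plan = frontier.popleft()
        if satisfies(state, goal_pos, goal_neg):
            return plan
        for a in actions:
            if a.precond <= state:          # a is applicable in state
                nxt = gamma(state, a)
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, plan + [a.name]))
    return None  # no plan exists

# Hypothetical toy domain, for illustration only.
ACTIONS = [
    Action("move-l1-l2", {"at-l1"}, {"at-l2"}, {"at-l1"}),
    Action("take-c", {"at-l2", "c-at-l2"}, {"holding-c"}, {"c-at-l2"}),
]
print(forward_search({"at-l1", "c-at-l2"}, ACTIONS, {"holding-c"}, frozenset({"at-l1"})))
```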

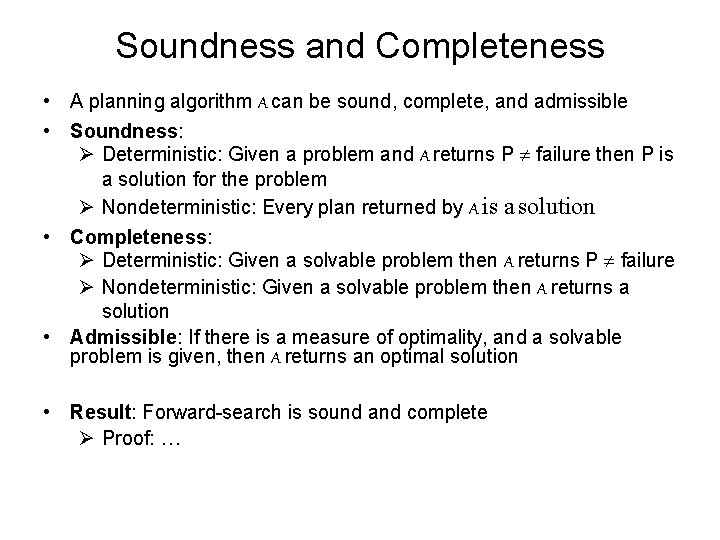

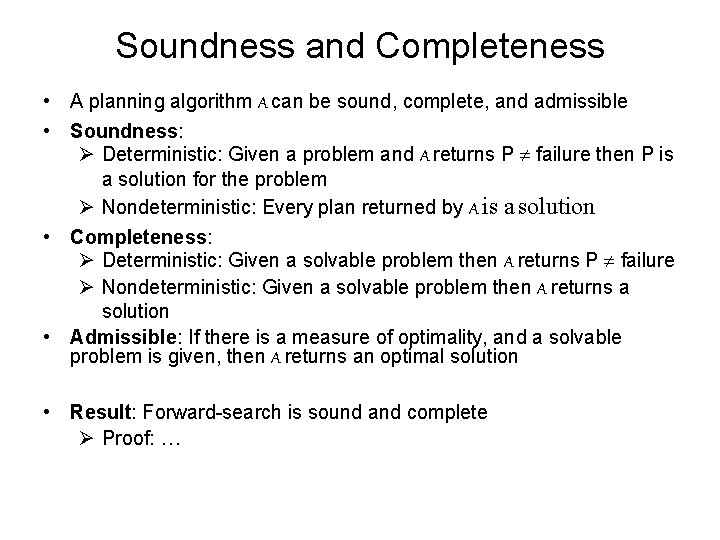

Soundness and Completeness

- A planning algorithm A can be sound, complete, and admissible
- Soundness:
  - Deterministic: given a problem, if A returns P ≠ failure, then P is a solution for the problem
  - Nondeterministic: every plan returned by A is a solution
- Completeness:
  - Deterministic: given a solvable problem, A returns P ≠ failure
  - Nondeterministic: given a solvable problem, A returns a solution
- Admissible: if there is a measure of optimality and a solvable problem is given, then A returns an optimal solution
- Result: Forward-search is sound and complete
  - Proof: …

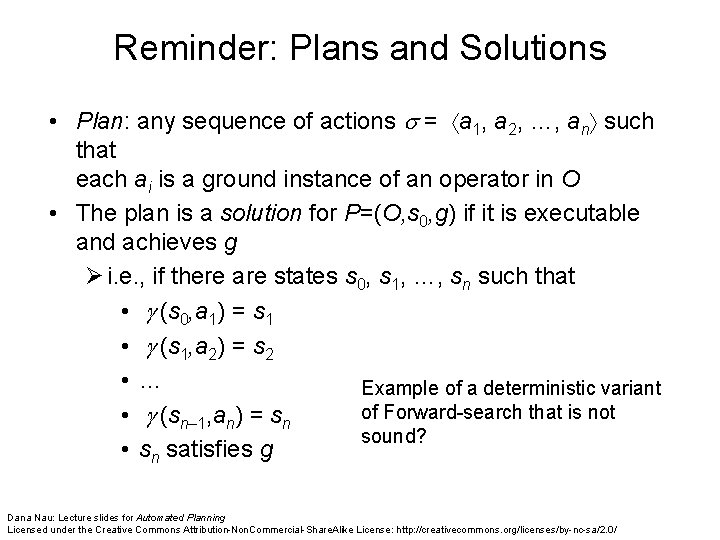

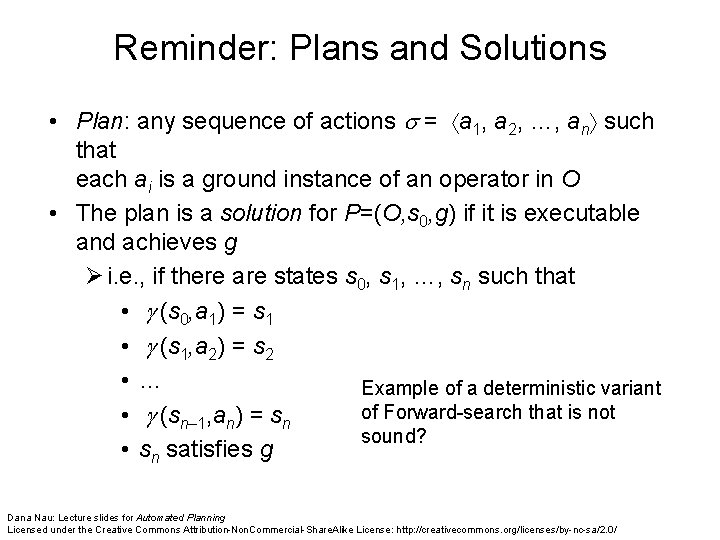

Reminder: Plans and Solutions

- Plan: any sequence of actions $\pi = \langle a_1, a_2, \ldots, a_n \rangle$ such that each $a_i$ is a ground instance of an operator in O
- The plan is a solution for $P = (O, s_0, g)$ if it is executable and achieves g
  - i.e., if there are states $s_0, s_1, \ldots, s_n$ such that
    - $\gamma(s_0, a_1) = s_1$
    - $\gamma(s_1, a_2) = s_2$
    - …
    - $\gamma(s_{n-1}, a_n) = s_n$
    - $s_n$ satisfies g
- Question: what is an example of a deterministic variant of Forward-search that is not sound?

Dana Nau: Lecture slides for Automated Planning. Licensed under the Creative Commons Attribution-NonCommercial-ShareAlike License: http://creativecommons.org/licenses/by-nc-sa/2.0/
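The solution condition above can be checked mechanically by applying $\gamma$ step by step. A minimal sketch, assuming actions are encoded as hypothetical `(precond, add, delete)` triples of atom sets; the `load` and `drive` actions are made up for the example.

```python
def gamma(state, action):
    """Apply one ground action; return None if it is not applicable."""
    precond, add, delete = action
    if not precond <= state:
        return None
    return (state - delete) | add

def is_solution(plan, s0, goal):
    """True if the plan is executable from s0 and the final state satisfies goal."""
    state = frozenset(s0)
    for action in plan:
        state = gamma(state, action)
        if state is None:       # some a_i is not applicable in s_(i-1)
            return False
    return goal <= state

# Hypothetical two-action example.
load = (frozenset({"at-dock"}), frozenset({"loaded"}), frozenset())
drive = (frozenset({"loaded", "at-dock"}), frozenset({"at-depot"}), frozenset({"at-dock"}))

print(is_solution([load, drive], {"at-dock"}, {"loaded", "at-depot"}))
print(is_solution([drive, load], {"at-dock"}, {"loaded", "at-depot"}))
```

A check like this is also a convenient way to test a planner for soundness: every plan it returns should pass `is_solution`.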

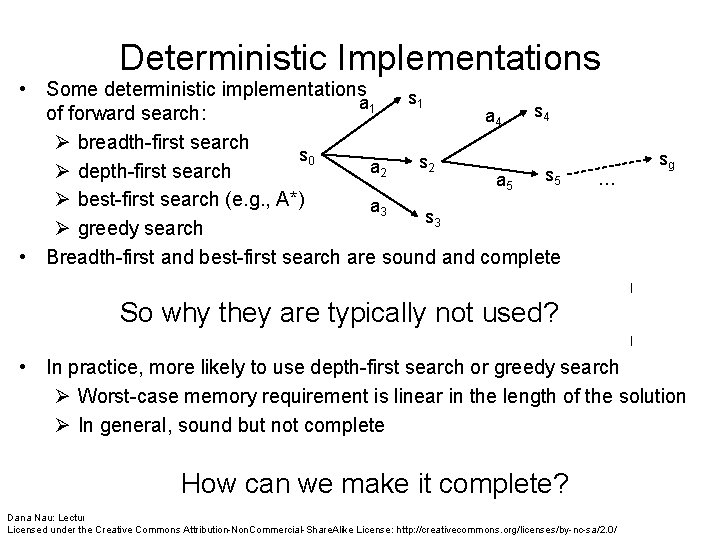

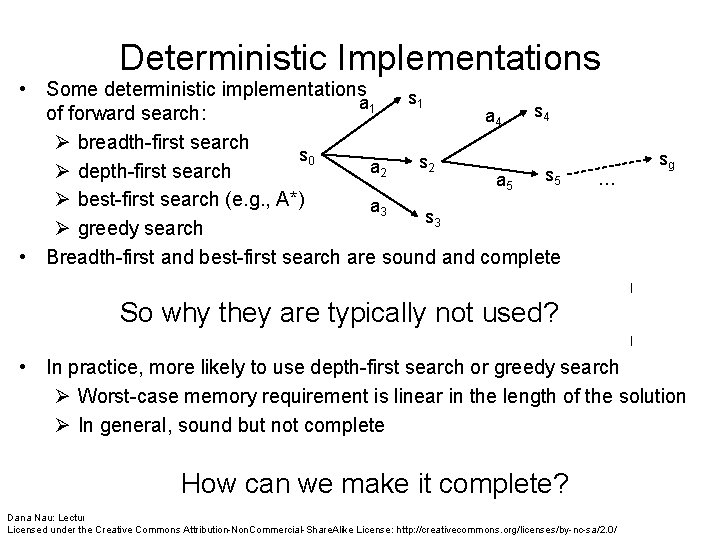

Deterministic Implementations

- Some deterministic implementations of forward search:
  - breadth-first search
  - depth-first search
  - best-first search (e.g., A*)
  - greedy search
- Breadth-first and best-first search are sound and complete
  - Question: so why are they typically not used?
  - They usually aren't practical because they require too much memory
  - Memory requirement is exponential in the length of the solution
- In practice, more likely to use depth-first search or greedy search
  - Worst-case memory requirement is linear in the length of the solution
  - In general, sound but not complete
- But classical planning has only finitely many states
  - Question: how can we make depth-first search complete?
  - Thus, can make depth-first search complete by doing loop checking

[Figure: search tree from $s_0$ with actions $a_1$ to $a_5$ leading through states $s_1$ to $s_5$ toward $s_g$]
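Loop checking can be sketched as refusing to revisit any state already on the current search path. The example below is an assumption-laden illustration: `dfs_plan`, the integer states, and the `successors` function are hypothetical and chosen so that plain depth-first search would cycle forever between states 0 and 1.

```python
def dfs_plan(state, is_goal, successors, path=()):
    """Depth-first forward search with loop checking along the current path."""
    if is_goal(state):
        return []
    for action, nxt in successors(state):
        if nxt == state or nxt in path:   # loop check: skip states already on the path
            continue
        rest = dfs_plan(nxt, is_goal, successors, path + (state,))
        if rest is not None:
            return [action] + rest
    return None

# Hypothetical domain: states 0..3 on a line; "back" is always tried first.
def successors(s):
    return [("back", max(s - 1, 0)), ("fwd", min(s + 1, 3))]

print(dfs_plan(0, lambda s: s == 3, successors))
```

Memory use stays linear in the path length, since only the current path is stored.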

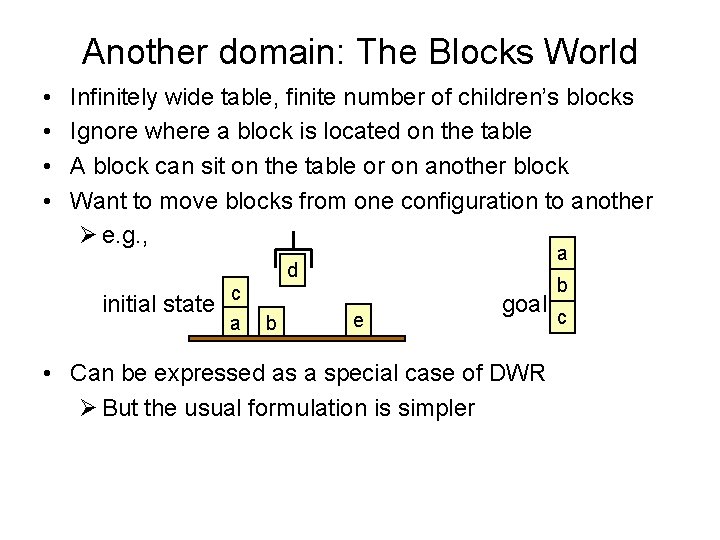

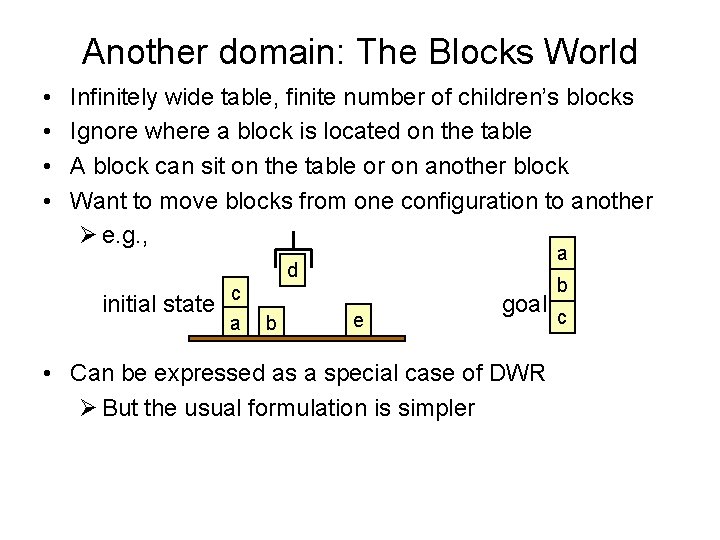

Another domain: The Blocks World

- Infinitely wide table, finite number of children's blocks
- Ignore where a block is located on the table
- A block can sit on the table or on another block
- Want to move blocks from one configuration to another
  - e.g., [Figure: an initial state and a goal, each a configuration of blocks a, b, c, d, e]
- Can be expressed as a special case of DWR
  - But the usual formulation is simpler

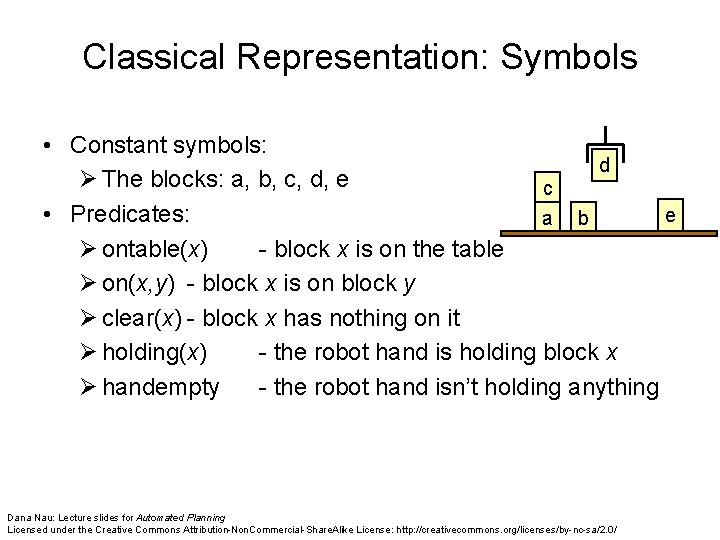

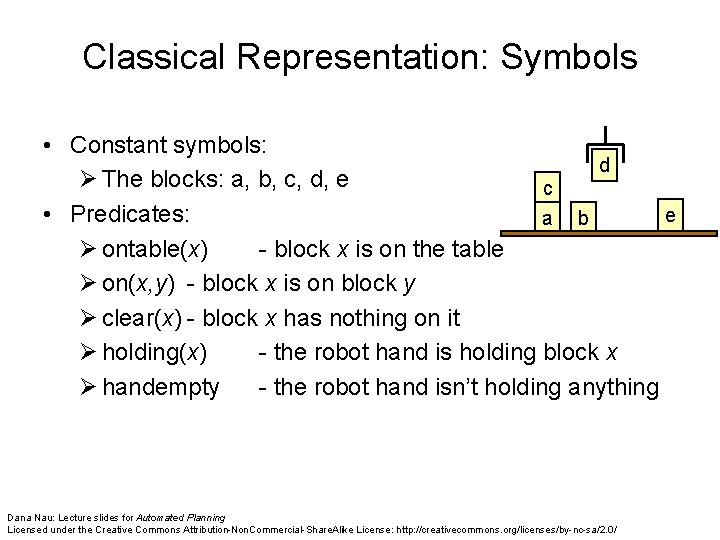

Classical Representation: Symbols

- Constant symbols:
  - The blocks: a, b, c, d, e
- Predicates:
  - ontable(x): block x is on the table
  - on(x, y): block x is on block y
  - clear(x): block x has nothing on it
  - holding(x): the robot hand is holding block x
  - handempty: the robot hand isn't holding anything

[Figure: example configuration of blocks a, b, c, d, e]

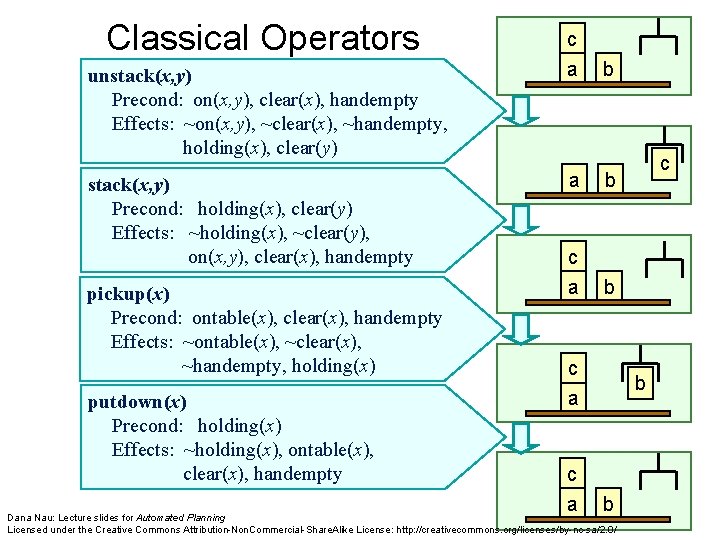

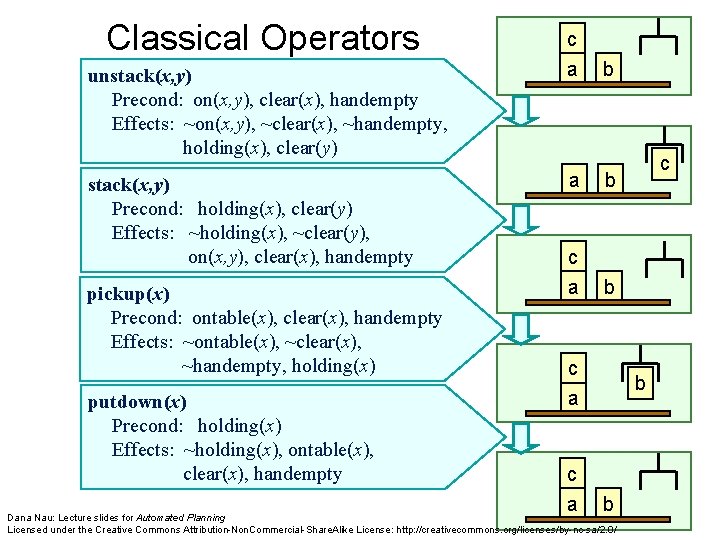

Classical Operators

- unstack(x, y)
  - Precond: on(x, y), clear(x), handempty
  - Effects: ~on(x, y), ~clear(x), ~handempty, holding(x), clear(y)
- stack(x, y)
  - Precond: holding(x), clear(y)
  - Effects: ~holding(x), ~clear(y), on(x, y), clear(x), handempty
- pickup(x)
  - Precond: ontable(x), clear(x), handempty
  - Effects: ~ontable(x), ~clear(x), ~handempty, holding(x)
- putdown(x)
  - Precond: holding(x)
  - Effects: ~holding(x), ontable(x), clear(x), handempty

[Figure: block configurations over a, b, c before and after applying the operators]
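The four operators translate directly into data. The sketch below assumes a string encoding of atoms and a hypothetical `Action` tuple; negative effects (`~p`) are stored in `delete` and positive effects in `add`.

```python
from collections import namedtuple

Action = namedtuple("Action", "name precond add delete")

def unstack(x, y):
    return Action(f"unstack({x},{y})",
                  precond={f"on({x},{y})", f"clear({x})", "handempty"},
                  add={f"holding({x})", f"clear({y})"},
                  delete={f"on({x},{y})", f"clear({x})", "handempty"})

def stack(x, y):
    return Action(f"stack({x},{y})",
                  precond={f"holding({x})", f"clear({y})"},
                  add={f"on({x},{y})", f"clear({x})", "handempty"},
                  delete={f"holding({x})", f"clear({y})"})

def pickup(x):
    return Action(f"pickup({x})",
                  precond={f"ontable({x})", f"clear({x})", "handempty"},
                  add={f"holding({x})"},
                  delete={f"ontable({x})", f"clear({x})", "handempty"})

def putdown(x):
    return Action(f"putdown({x})",
                  precond={f"holding({x})"},
                  add={f"ontable({x})", f"clear({x})", "handempty"},
                  delete={f"holding({x})"})

def apply(state, action):
    """Return the successor state, or raise if the action is not applicable."""
    if not action.precond <= state:
        raise ValueError(f"{action.name} is not applicable")
    return (state - action.delete) | action.add

# Example state (an assumption for this sketch): c on a, with a and b on the table.
s0 = frozenset({"on(c,a)", "ontable(a)", "ontable(b)",
                "clear(c)", "clear(b)", "handempty"})
s2 = apply(apply(s0, unstack("c", "a")), putdown("c"))
print(sorted(s2))
```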

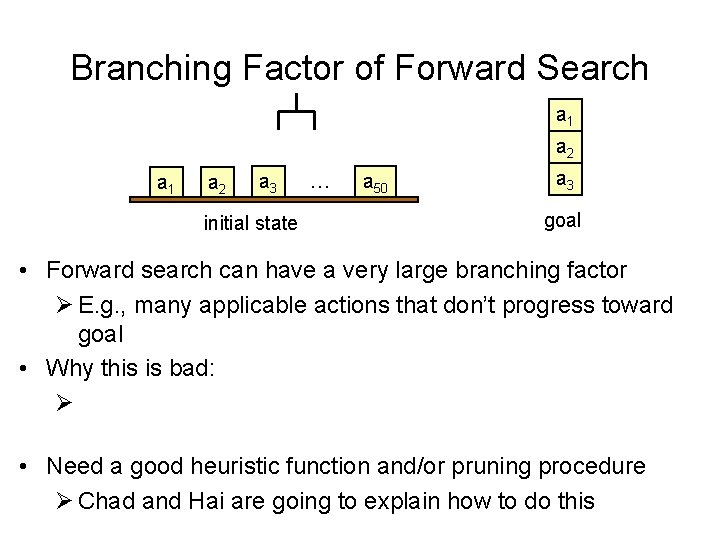

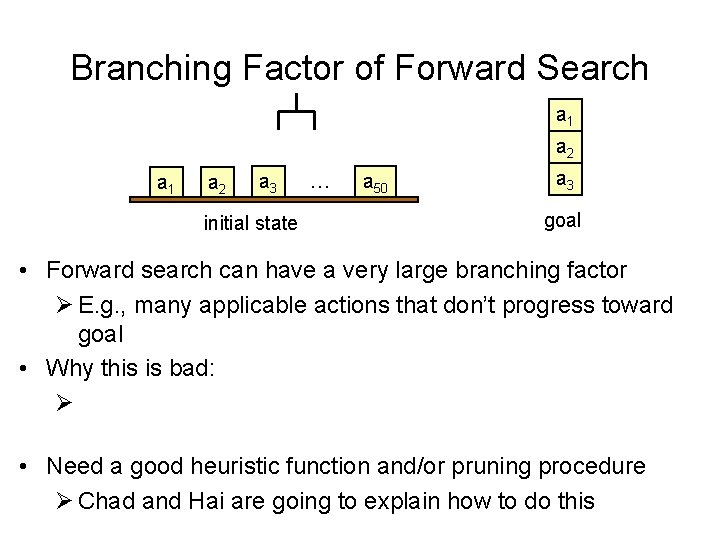

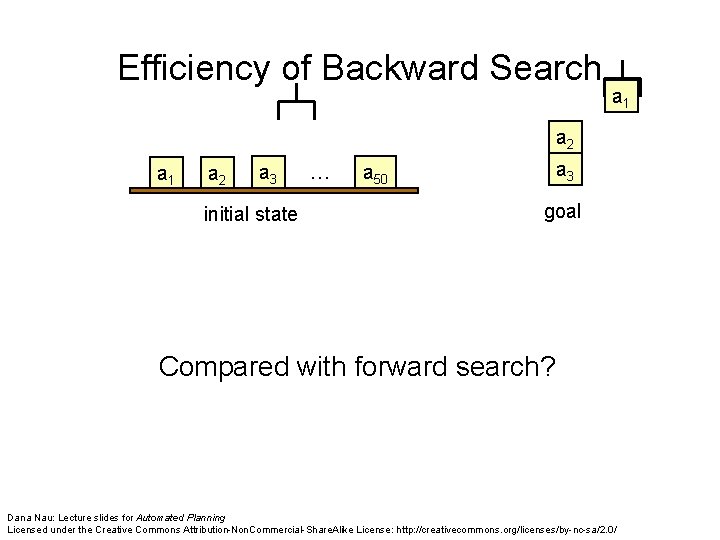

Branching Factor of Forward Search

[Figure: from the initial state, actions $a_1, a_2, a_3, \ldots, a_{50}$ branch out; $a_3$ leads to the goal]

- Forward search can have a very large branching factor
  - E.g., many applicable actions that don't progress toward the goal
- Why this is bad:
  - Deterministic implementations can waste time trying lots of irrelevant actions
- Need a good heuristic function and/or pruning procedure
  - Chad and Hai are going to explain how to do this

Backward Search

- For forward search, we started at the initial state and computed state transitions
  - new state = $\gamma(s, a)$
- For backward search, we start at the goal and compute inverse state transitions
  - new set of subgoals = $\gamma^{-1}(g, a)$
- To define $\gamma^{-1}(g, a)$, we must first define relevance:
  - An action a is relevant for a goal g if
    - a makes at least one of g's literals true: $g \cap \text{effects}(a) \neq \emptyset$
    - a does not make any of g's literals false: $g^+ \cap \text{effects}^-(a) = \emptyset$ and $g^- \cap \text{effects}^+(a) = \emptyset$
- Question: how do we write this formally?
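The relevance test can be written as a pair of set intersections. A minimal sketch, assuming goals and effects are split into positive and negative sets of atoms; the function name `is_relevant` and the string encoding are choices made for this example.

```python
def is_relevant(goal_pos, goal_neg, eff_pos, eff_neg):
    """a is relevant for g: it achieves some literal of g and destroys none."""
    makes_some_true = bool(goal_pos & eff_pos) or bool(goal_neg & eff_neg)
    makes_some_false = bool(goal_pos & eff_neg) or bool(goal_neg & eff_pos)
    return makes_some_true and not makes_some_false

g_pos = {"on(b1,b2)", "on(b2,b3)"}
g_neg = set()

# stack(b1, b2): effects on(b1,b2), clear(b1), handempty, ~holding(b1), ~clear(b2)
print(is_relevant(g_pos, g_neg,
                  {"on(b1,b2)", "clear(b1)", "handempty"},
                  {"holding(b1)", "clear(b2)"}))

# unstack(b1, b2): it deletes on(b1,b2), so it makes a goal literal false
print(is_relevant(g_pos, g_neg,
                  {"holding(b1)", "clear(b2)"},
                  {"on(b1,b2)", "clear(b1)", "handempty"}))
```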

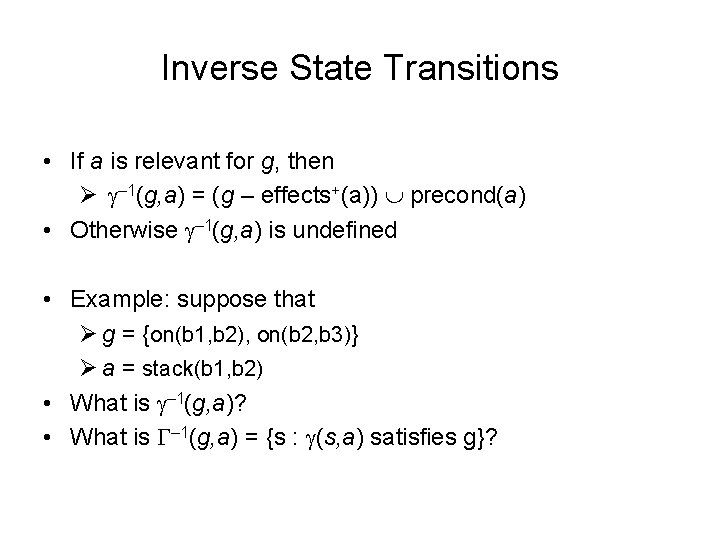

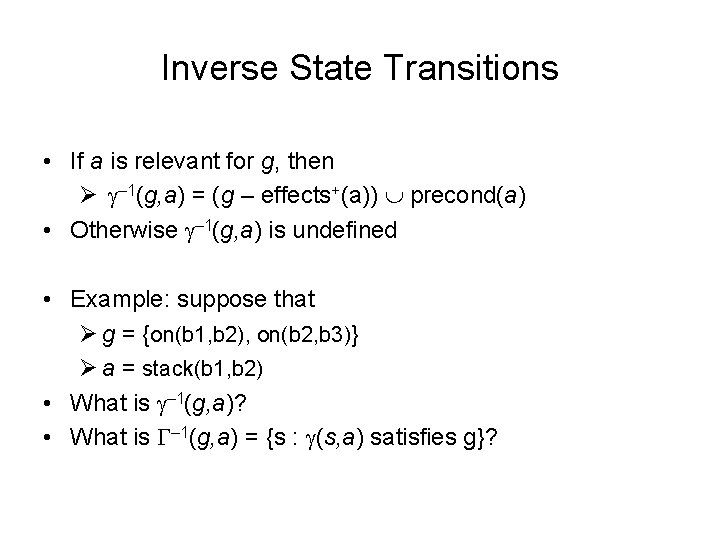

Inverse State Transitions

- If a is relevant for g, then
  - $\gamma^{-1}(g, a) = (g - \text{effects}^+(a)) \cup \text{precond}(a)$
- Otherwise $\gamma^{-1}(g, a)$ is undefined
- Example: suppose that
  - g = {on(b1, b2), on(b2, b3)}
  - a = stack(b1, b2)
- What is $\gamma^{-1}(g, a)$?
- What is the set of states $\{s : \gamma(s, a) \text{ satisfies } g\}$?
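The slide's example can be worked through with a short regression function. This is a sketch assuming a goal with positive literals only and the stack operator as defined on the Classical Operators slide; the name `regress` is introduced here for illustration.

```python
def regress(goal, eff_pos, eff_neg, precond):
    """Inverse transition (g - effects+(a)) | precond(a); None if a is not relevant."""
    relevant = bool(goal & eff_pos) and not (goal & eff_neg)
    if not relevant:
        return None
    return (goal - eff_pos) | precond

g = {"on(b1,b2)", "on(b2,b3)"}

# a = stack(b1, b2)
subgoals = regress(g,
                   eff_pos={"on(b1,b2)", "clear(b1)", "handempty"},
                   eff_neg={"holding(b1)", "clear(b2)"},
                   precond={"holding(b1)", "clear(b2)"})
print(sorted(subgoals))
```

Under these assumptions the new subgoal set keeps on(b2, b3) and replaces on(b1, b2) with the preconditions of stack(b1, b2).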

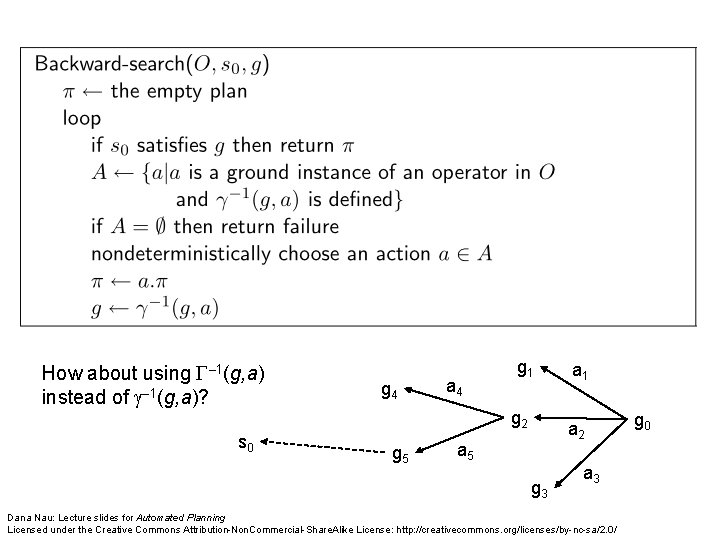

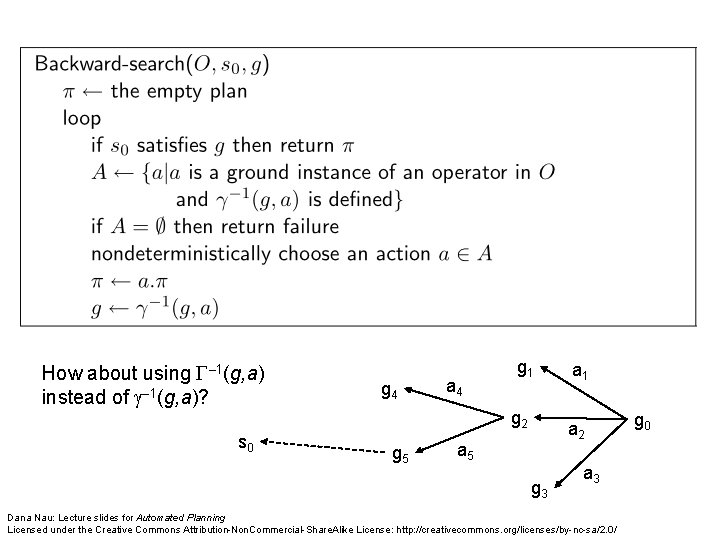

How about using the set of states $\{s : \gamma(s, a) \text{ satisfies } g\}$ instead of $\gamma^{-1}(g, a)$?

[Figure: backward search from $g_0$ toward $s_0$ through subgoals $g_1$ to $g_5$ and actions $a_1$ to $a_5$]

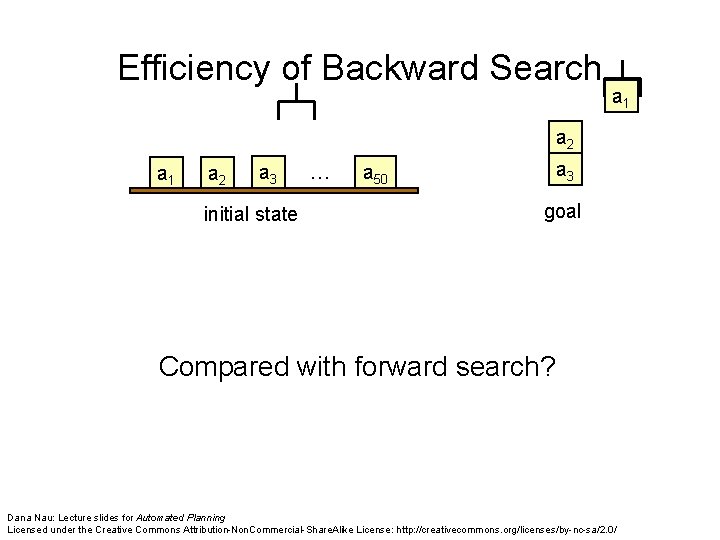

Efficiency of Backward Search

[Figure: actions $a_1, a_2, a_3, \ldots, a_{50}$ between the initial state and the goal]

- Backward search can also have a very large branching factor
  - E.g., many relevant actions that don't regress toward the initial state
  - Question: how does this compare with forward search?
- As before, deterministic implementations can waste lots of time trying all of them

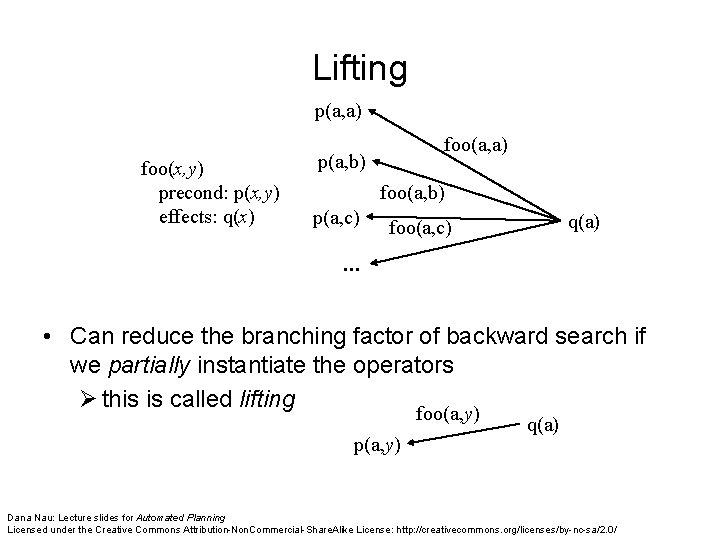

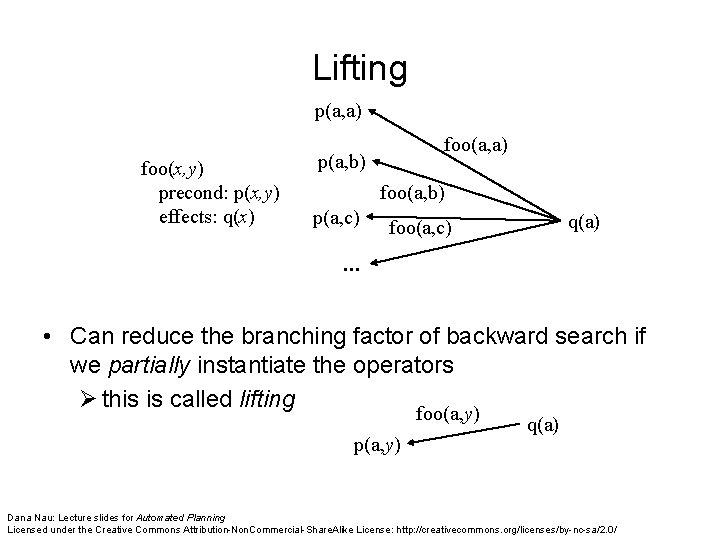

Lifting

- Operator foo(x, y)
  - precond: p(x, y)
  - effects: q(x)
- Ground backward search from q(a) must consider every instance: foo(a, a) with subgoal p(a, a), foo(a, b) with subgoal p(a, b), foo(a, c) with subgoal p(a, c), …
- Can reduce the branching factor of backward search if we partially instantiate the operators
  - this is called lifting
  - from q(a), the single lifted action foo(a, y) gives the subgoal p(a, y)
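The difference in branching factor can be illustrated by matching the effect q(x) against the goal q(a) and leaving y unbound. This is a sketch under assumptions: atoms are tuples, `unify` here is a simple one-way matcher (pattern against a ground atom) rather than full unification, and the constant set {a, b, c} is hypothetical.

```python
def unify(pattern, atom, variables):
    """Match a pattern against a ground atom; return a substitution or None."""
    if pattern[0] != atom[0] or len(pattern) != len(atom):
        return None
    subst = {}
    for p, t in zip(pattern[1:], atom[1:]):
        if p in variables:
            if subst.setdefault(p, t) != t:   # variable already bound to something else
                return None
        elif p != t:
            return None
    return subst

def substitute(atom, subst):
    return (atom[0],) + tuple(subst.get(arg, arg) for arg in atom[1:])

VARIABLES = {"x", "y"}
OPERATOR = ("foo", "x", "y")
PRECOND = ("p", "x", "y")
EFFECT = ("q", "x")
CONSTANTS = ["a", "b", "c"]   # hypothetical constant symbols

subst = unify(EFFECT, ("q", "a"), VARIABLES)          # binds x only
lifted_action = substitute(OPERATOR, subst)           # y stays a variable
lifted_subgoal = substitute(PRECOND, subst)
ground_actions = [substitute(lifted_action, {"y": c}) for c in CONSTANTS]

print(lifted_action, lifted_subgoal)
print(len(ground_actions), "ground branches versus 1 lifted branch")
```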

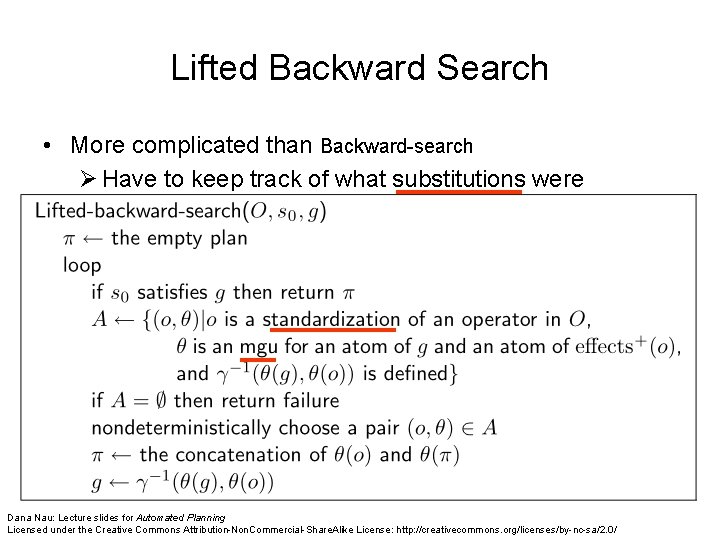

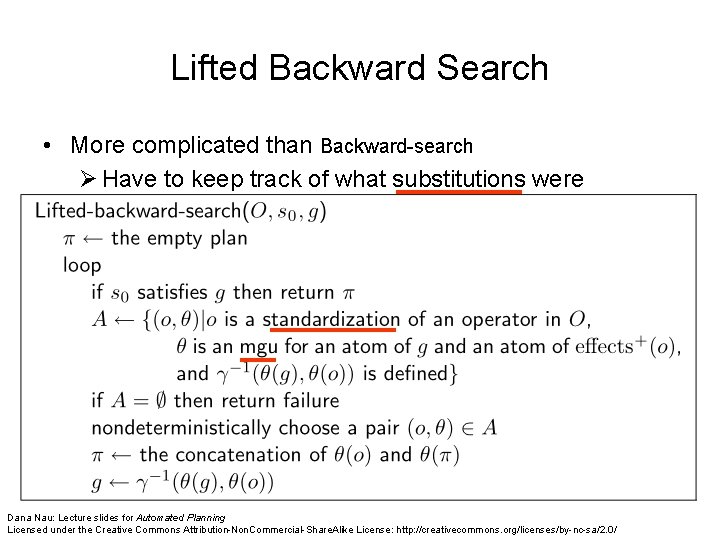

Lifted Backward Search

- More complicated than Backward-search
  - Have to keep track of what substitutions were performed
- But it has a much smaller branching factor

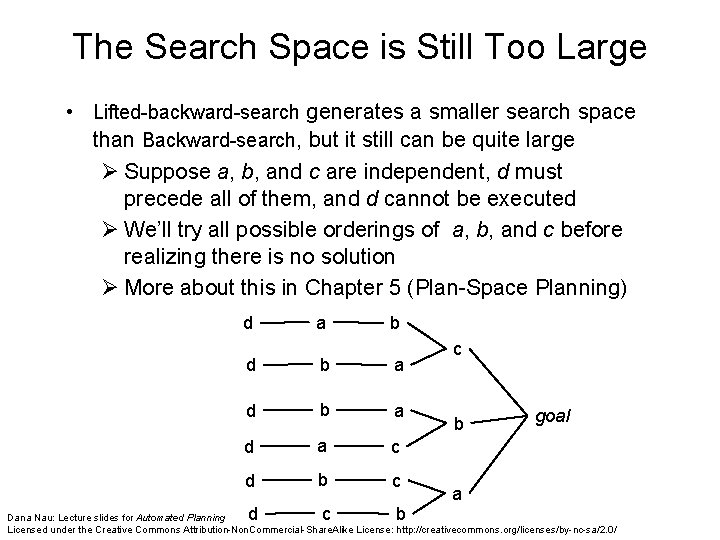

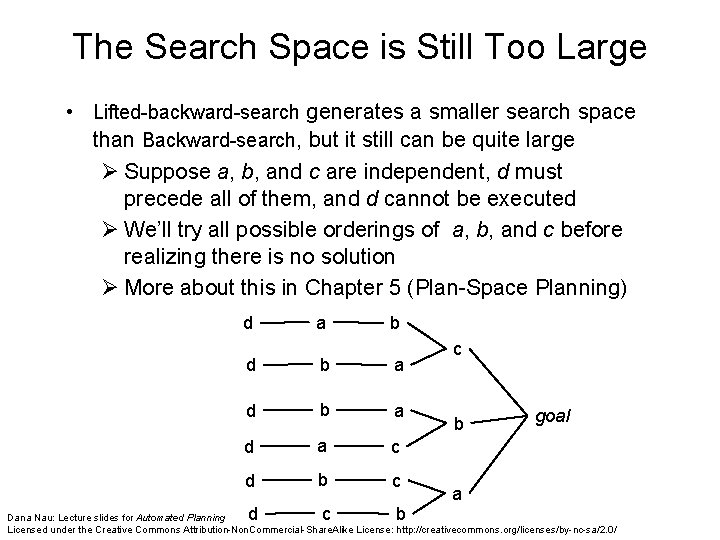

The Search Space is Still Too Large

- Lifted-backward-search generates a smaller search space than Backward-search, but it still can be quite large
  - Suppose a, b, and c are independent, d must precede all of them, and d cannot be executed
  - We'll try all possible orderings of a, b, and c before realizing there is no solution
  - More about this in Chapter 5 (Plan-Space Planning)

[Figure: backward search tree from the goal over the orderings of a, b, and c, each branch ending in d]

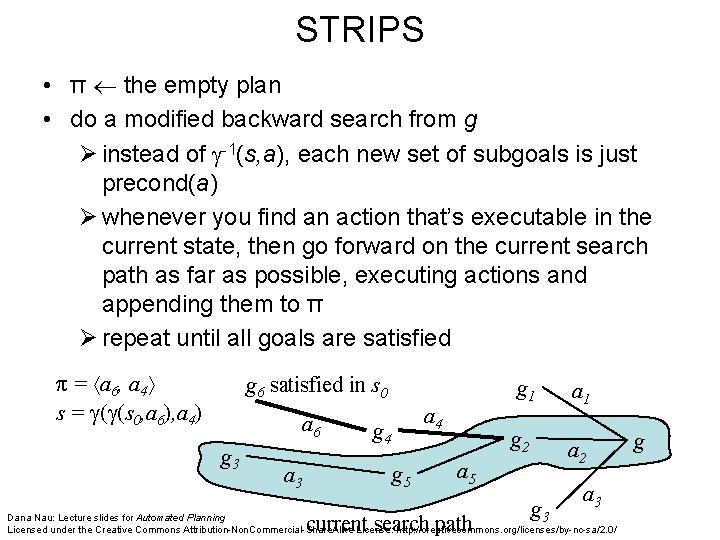

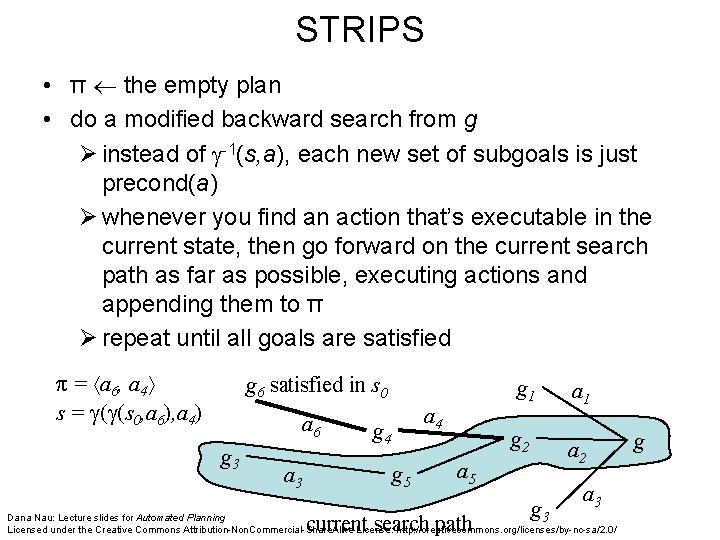

STRIPS

- $\pi \leftarrow$ the empty plan
- do a modified backward search from g
  - instead of $\gamma^{-1}(s, a)$, each new set of subgoals is just precond(a)
  - whenever you find an action that's executable in the current state, then go forward on the current search path as far as possible, executing actions and appending them to $\pi$
  - repeat until all goals are satisfied
- Example: $\pi = \langle a_6, a_4 \rangle$, $s = \gamma(\gamma(s_0, a_6), a_4)$

[Figure: backward search tree from g with subgoals $g_1$ to $g_6$ and actions $a_1$ to $a_6$; $g_6$ is satisfied in $s_0$; the current search path is highlighted]

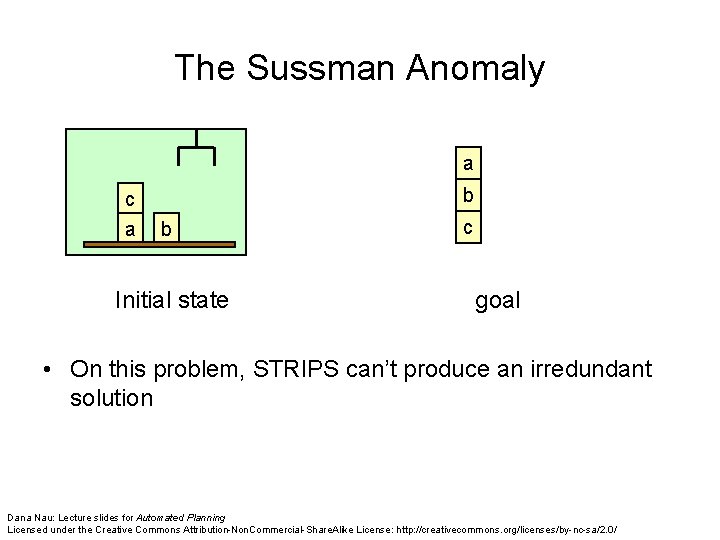

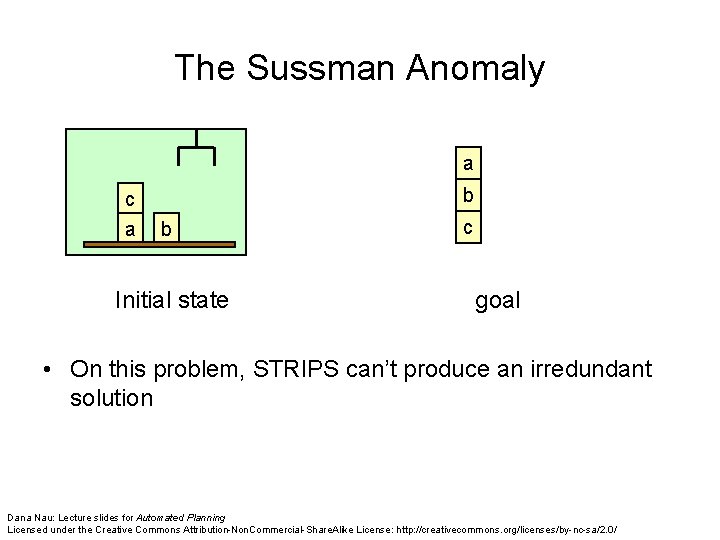

The Sussman Anomaly

[Figure: initial state: c on a, with a and b on the table; goal: a on b on c]

- On this problem, STRIPS can't produce an irredundant solution

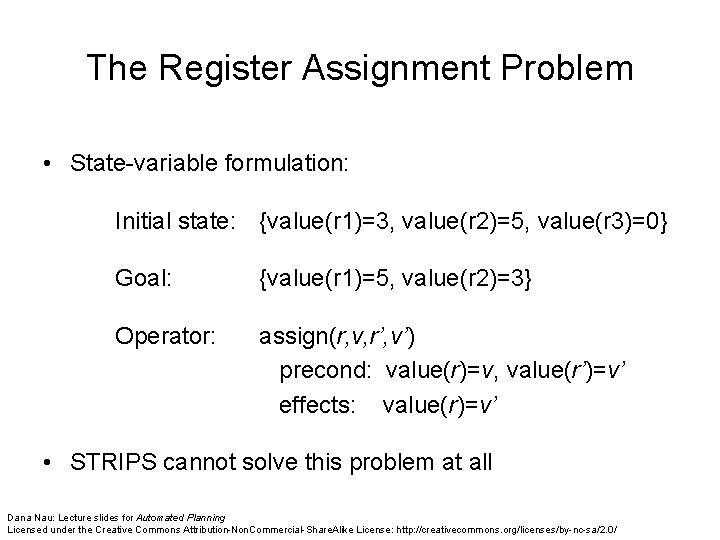

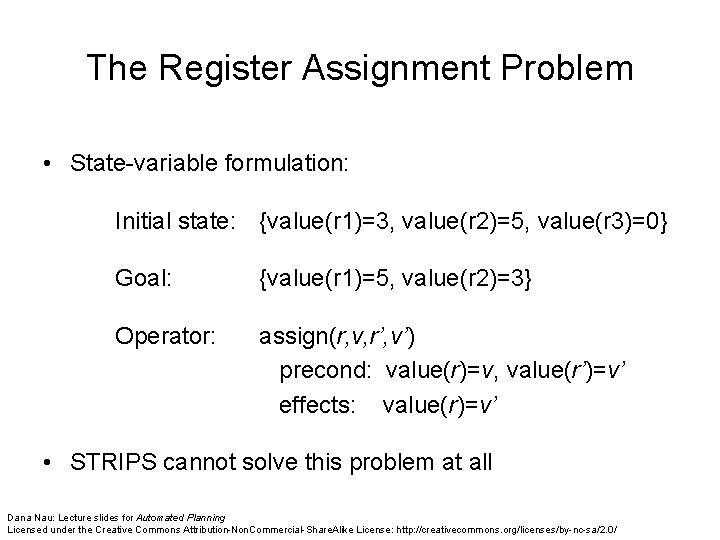

The Register Assignment Problem

- State-variable formulation:
  - Initial state: {value(r1)=3, value(r2)=5, value(r3)=0}
  - Goal: {value(r1)=5, value(r2)=3}
  - Operator: assign(r, v, r', v')
    - precond: value(r)=v, value(r')=v'
    - effects: value(r)=v'
- STRIPS cannot solve this problem at all
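For contrast, plain breadth-first forward search over the state-variable formulation finds a swap through r3. The sketch below is not the STRIPS procedure; it assumes states are tuples of register values and abbreviates assign(r, v, r', v') to a hypothetical `(dst, src)` pair meaning "copy the value of src into dst".

```python
from collections import deque
from itertools import permutations

REGS = ("r1", "r2", "r3")

def solve(initial, goal):
    """Breadth-first forward search; returns a list of (dst, src) assignments."""
    start = tuple(initial[r] for r in REGS)
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, plan = frontier.popleft()
        values = dict(zip(REGS, state))
        if all(values[r] == v for r, v in goal.items()):
            return plan
        for dst, src in permutations(REGS, 2):
            nxt = dict(values)
            nxt[dst] = values[src]          # effect: value(dst) = value(src)
            key = tuple(nxt[r] for r in REGS)
            if key not in seen:
                seen.add(key)
                frontier.append((key, plan + [(dst, src)]))
    return None

plan = solve({"r1": 3, "r2": 5, "r3": 0}, {"r1": 5, "r2": 3})
print(plan)
```

Any solution needs a third register as temporary storage, because the first assignment to r1 or r2 overwrites a value that the goal still needs.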

How to Fix?

- Several ways:
  - Do something other than state-space search
    - e.g., Chapters 5–8
  - Use forward or backward state-space search, with domain-specific knowledge to prune the search space
    - Can solve both problems quite easily this way
    - Example: block stacking using forward search

Domain-Specific Knowledge

- A blocks-world planning problem $P = (O, s_0, g)$ is solvable if $s_0$ and g satisfy some simple consistency conditions
  - g should not mention any blocks not mentioned in $s_0$
  - a block cannot be on two other blocks at once
  - etc.
  - Can check these in time O(n log n)
- If P is solvable, can easily construct a solution of length O(2m), where m is the number of blocks
  - Move all blocks to the table, then build up stacks from the bottom
  - Can do this in time O(n)
- With additional domain-specific knowledge can do even better …
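The "table first, then build" construction can be sketched as follows. Several assumptions apply: a state is a dictionary mapping each block to what it rests on (a block name or `"table"`), a move `(x, dest)` abbreviates the corresponding unstack/pickup and stack/putdown pair, and this simple version is quadratic rather than the O(n) procedure the slide mentions.

```python
def table_then_build(on, goal_on):
    """Return a list of (block, destination) moves: at most two moves per block."""
    on = dict(on)
    plan = []
    # Phase 1: repeatedly move a clear block that is not on the table to the table.
    while any(below != "table" for below in on.values()):
        for x, below in on.items():
            is_clear = x not in on.values()
            if below != "table" and is_clear:
                plan.append((x, "table"))
                on[x] = "table"
                break
    # Phase 2: build the goal stacks from the bottom up.
    placed = {x for x in on if goal_on.get(x, "table") == "table"}
    pending = [x for x in on if x not in placed]
    while pending:
        for x in pending:
            if goal_on[x] in placed:        # its support is already final
                plan.append((x, goal_on[x]))
                on[x] = goal_on[x]
                placed.add(x)
                pending.remove(x)
                break
        else:
            raise ValueError("goal is not consistent")
    return plan

# Example: c on a, with a and b on the table; goal: a on b on c.
print(table_then_build({"c": "a", "a": "table", "b": "table"},
                       {"a": "b", "b": "c"}))
```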

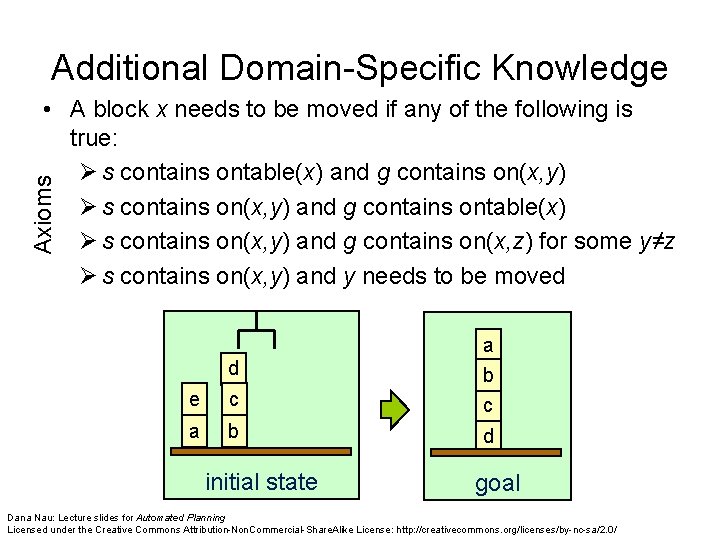

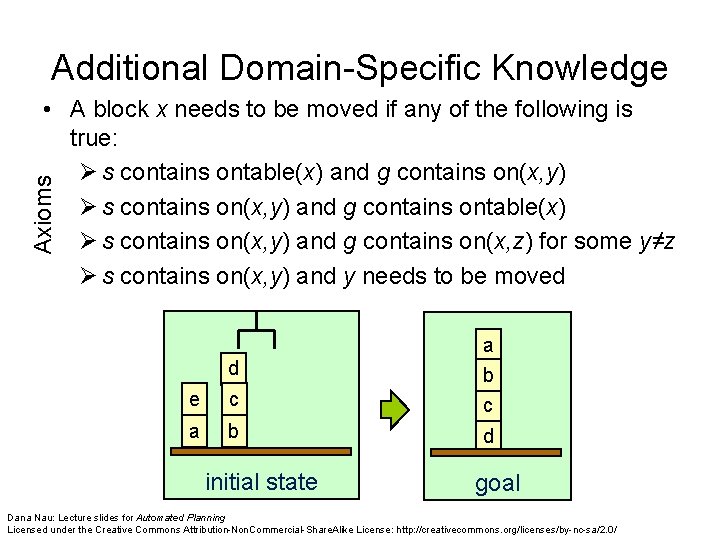

Additional Domain-Specific Knowledge

Axioms

- A block x needs to be moved if any of the following is true:
  - s contains ontable(x) and g contains on(x, y)
  - s contains on(x, y) and g contains ontable(x)
  - s contains on(x, y) and g contains on(x, z) for some y≠z
  - s contains on(x, y) and y needs to be moved

[Figure: example initial state and goal over blocks a, b, c, d, e]
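The four axioms collapse into a short recursive predicate. A minimal sketch, assuming the state and goal are dictionaries from a block to its support (`"table"` or another block), with blocks the goal does not mention simply absent from `goal_on`; the function name is chosen for this example.

```python
def needs_move(x, on, goal_on):
    """True if block x needs to be moved, per the four axioms."""
    # Axioms 1-3: the goal places x somewhere other than where it is now.
    if x in goal_on and goal_on[x] != on[x]:
        return True
    # Axiom 4: x sits on a block that itself needs to be moved.
    return on[x] != "table" and needs_move(on[x], on, goal_on)

# Example: c on a, with a and b on the table; goal: a on b on c.
on = {"c": "a", "a": "table", "b": "table"}
goal_on = {"a": "b", "b": "c"}
print({x: needs_move(x, on, goal_on) for x in on})
```

Here c is not mentioned in the goal but still needs to be moved, because it sits on a, which must go onto b.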

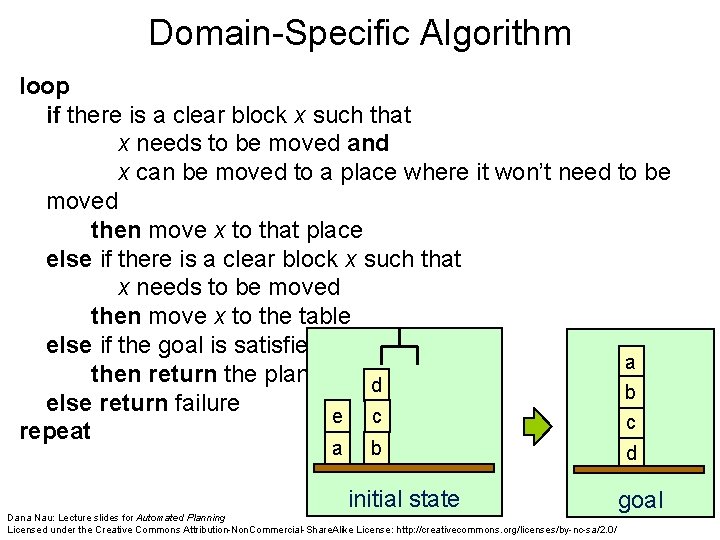

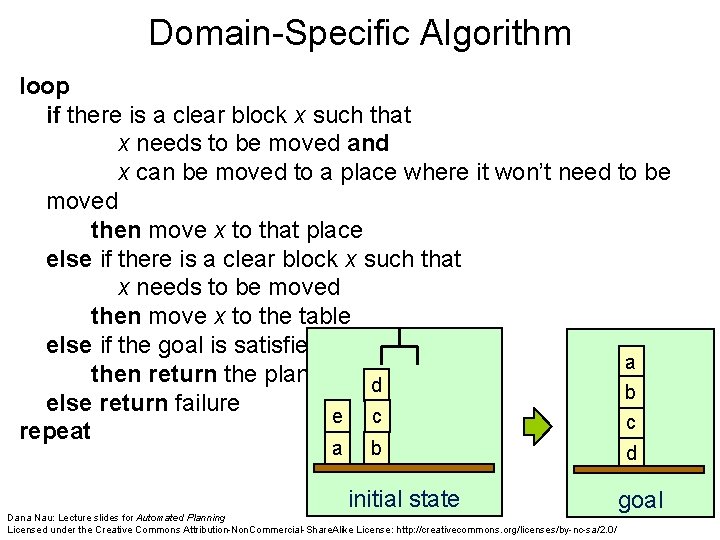

Domain-Specific Algorithm

    loop
        if there is a clear block x such that
                x needs to be moved and
                x can be moved to a place where it won't need to be moved
            then move x to that place
        else if there is a clear block x such that
                x needs to be moved
            then move x to the table
        else if the goal is satisfied
            then return the plan
        else return failure
    repeat

[Figure: example initial state and goal over blocks a, b, c, d, e]
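The loop above might be implemented along these lines. This is a sketch, not the slides' own code: it assumes states and goals are dictionaries from a block to its support, treats a move `(x, dest)` as one step, and in the second branch only picks blocks that are not already on the table (otherwise the move would be a no-op).

```python
def needs_move(x, on, goal_on):
    if x in goal_on and goal_on[x] != on[x]:
        return True
    return on[x] != "table" and needs_move(on[x], on, goal_on)

def block_stacking(on, goal_on):
    """Return a list of (block, destination) moves, or None on failure."""
    on, plan = dict(on), []
    while True:
        clear = [x for x in on if x not in on.values()]
        movable = [x for x in clear if needs_move(x, on, goal_on)]
        move = None
        # Branch 1: move x straight to a place where it will not need to be moved.
        for x in movable:
            dest = goal_on.get(x, "table")
            if dest == "table" or (dest in clear and not needs_move(dest, on, goal_on)):
                move = (x, dest)
                break
        # Branch 2: otherwise put some clear block that needs moving on the table.
        if move is None:
            for x in movable:
                if on[x] != "table":
                    move = (x, "table")
                    break
        # Branches 3 and 4: nothing to move, so report success or failure.
        if move is None:
            satisfied = all(on[x] == y for x, y in goal_on.items())
            return plan if satisfied else None
        plan.append(move)
        on[move[0]] = move[1]

# Example: the Sussman anomaly (c on a; a and b on the table; goal a on b on c).
print(block_stacking({"c": "a", "a": "table", "b": "table"},
                     {"a": "b", "b": "c"}))
```

Each block is moved at most twice (once to the table, once to its final position), which is why the loop terminates.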

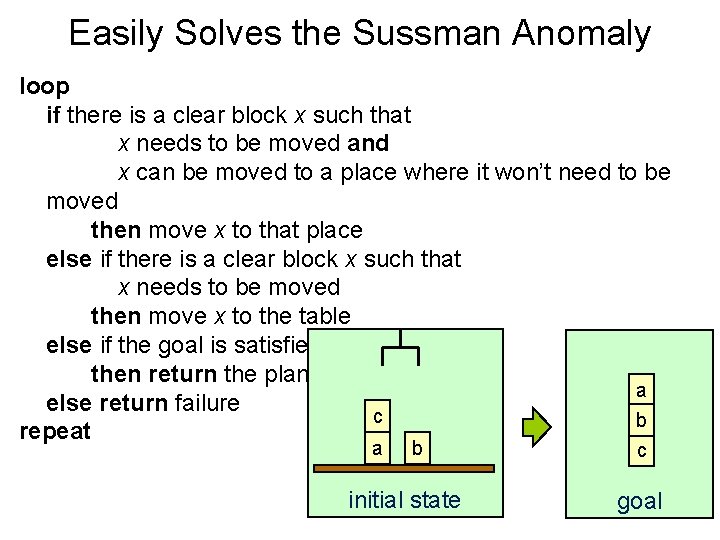

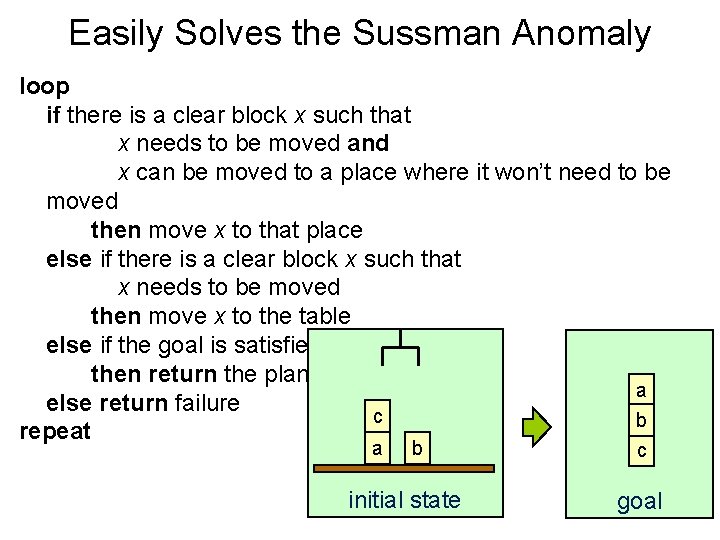

Easily Solves the Sussman Anomaly

The same loop as on the Domain-Specific Algorithm slide, applied to the Sussman anomaly.

[Figure: initial state: c on a, with a and b on the table; goal: a on b on c]

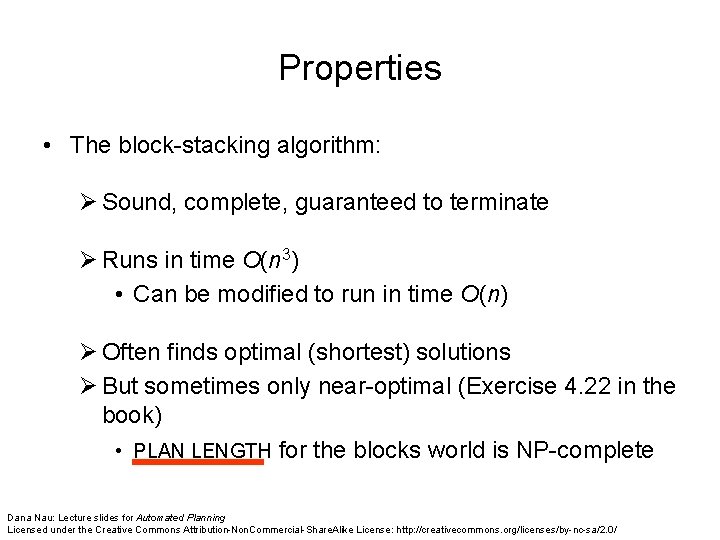

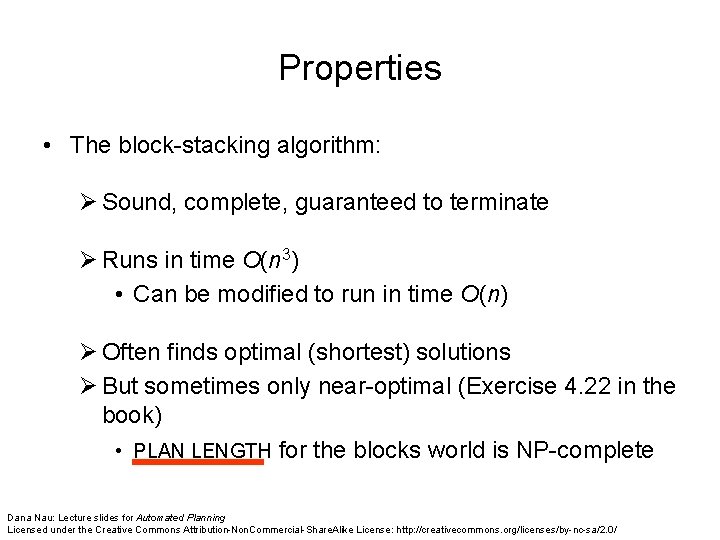

Properties

- The block-stacking algorithm:
  - Sound, complete, guaranteed to terminate
  - Runs in time $O(n^3)$
    - Can be modified to run in time O(n)
  - Often finds optimal (shortest) solutions
  - But sometimes only near-optimal (Exercise 4.22 in the book)
- PLAN LENGTH for the blocks world is NP-complete

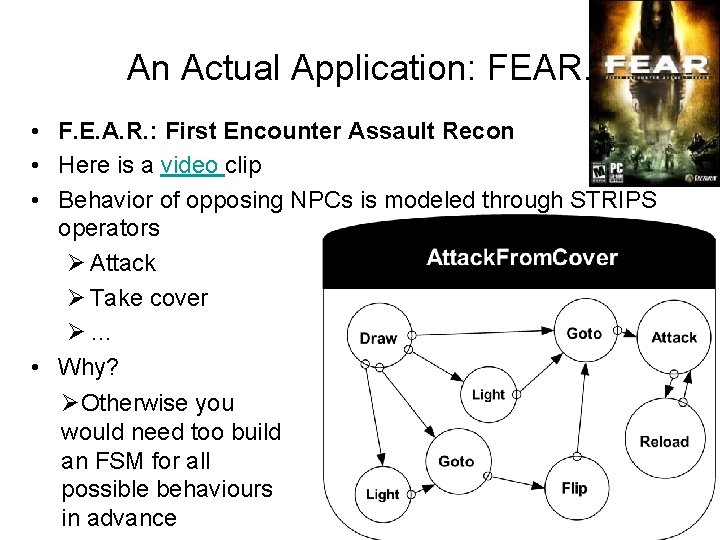

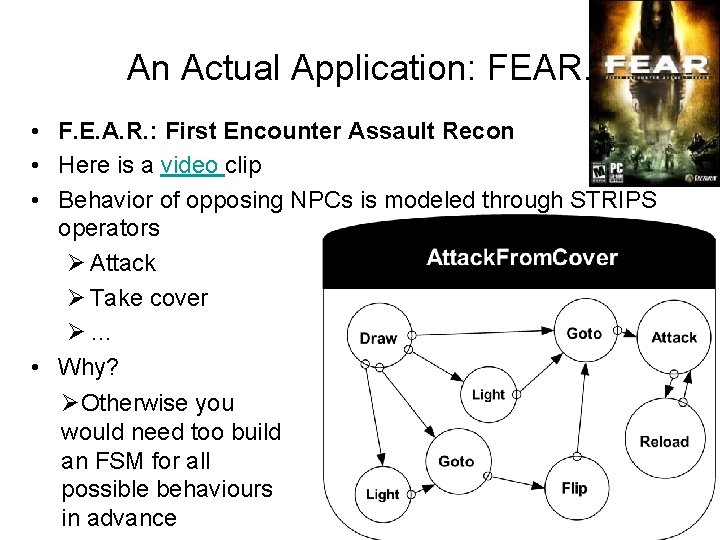

An Actual Application: F.E.A.R.

- F.E.A.R.: First Encounter Assault Recon
- Here is a video clip
- Behavior of opposing NPCs is modeled through STRIPS operators
  - Attack
  - Take cover
  - …
- Why?
  - Otherwise you would need to build an FSM for all possible behaviours in advance

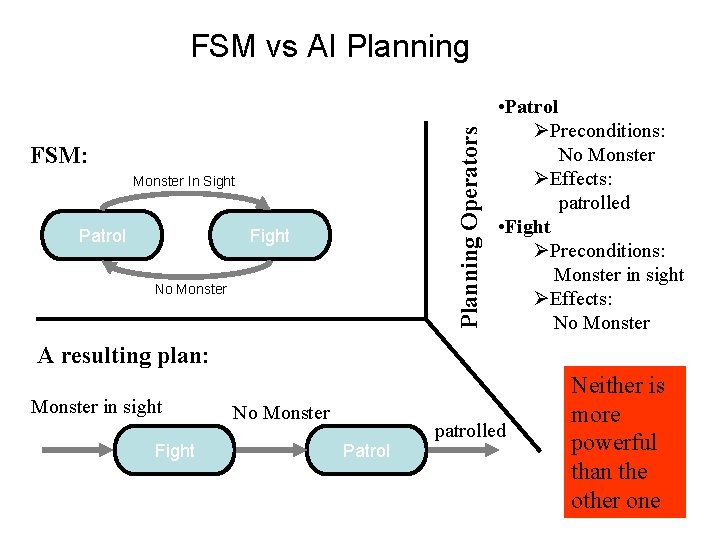

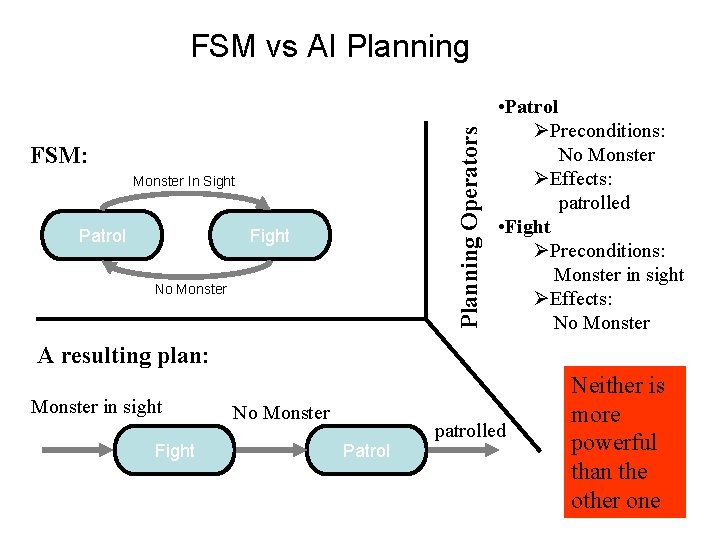

FSM vs AI Planning

- FSM:
  - States: Patrol, Fight
  - Transitions: Monster In Sight (Patrol → Fight), No Monster (Fight → Patrol)
- Planning operators:
  - Patrol
    - Preconditions: No Monster
    - Effects: patrolled
  - Fight
    - Preconditions: Monster in sight
    - Effects: No Monster
- A resulting plan: Monster in sight → Fight → No Monster → Patrol → patrolled
- Neither is more powerful than the other one

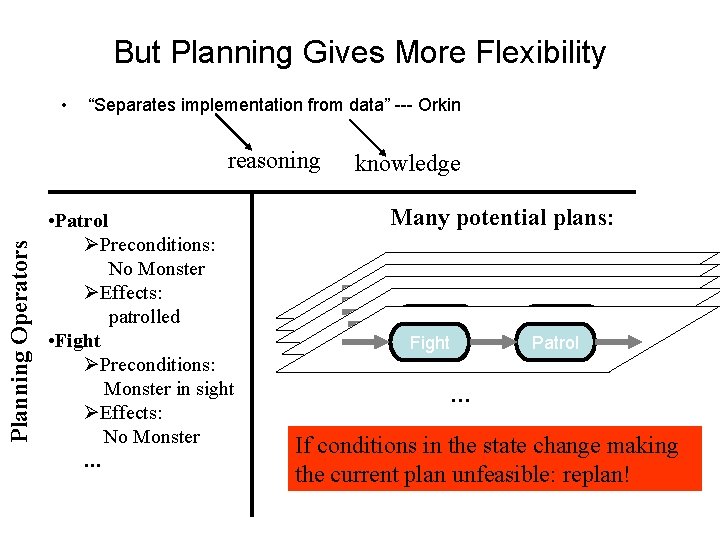

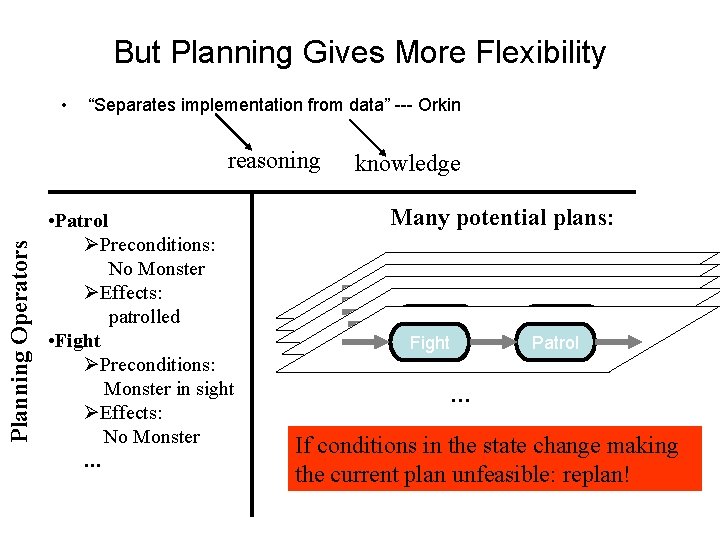

But Planning Gives More Flexibility

- "Separates implementation from data" (Orkin): the implementation is the reasoning, the data is the knowledge
- Planning operators:
  - Patrol
    - Preconditions: No Monster
    - Effects: patrolled
  - Fight
    - Preconditions: Monster in sight
    - Effects: No Monster
  - …
- Many potential plans: Fight, Fight, Patrol, Patrol, …
- If conditions in the state change, making the current plan unfeasible: replan!
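The separation of operators (data) from the planner (reasoning) can be sketched with the two operators above. The atom names such as `monster-in-sight` and the dictionary encoding are assumptions made for this example, and the planner is a generic breadth-first forward search rather than the one used in F.E.A.R.

```python
from collections import deque

# Knowledge: operators as plain data. New behaviours are added here only.
OPERATORS = {
    "Patrol": {"precond": {"no-monster"}, "add": {"patrolled"}, "delete": set()},
    "Fight": {"precond": {"monster-in-sight"}, "add": {"no-monster"},
              "delete": {"monster-in-sight"}},
}

# Reasoning: a generic planner that knows nothing about monsters.
def plan(state, goal):
    start = frozenset(state)
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        s, steps = frontier.popleft()
        if goal <= s:
            return steps
        for name, op in OPERATORS.items():
            if op["precond"] <= s:
                nxt = frozenset((s - op["delete"]) | op["add"])
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, steps + [name]))
    return None

print(plan({"monster-in-sight"}, {"patrolled"}))
# If the world changes (the monster is already gone), simply replan:
print(plan({"no-monster"}, {"patrolled"}))
```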