STAPL: An Adaptive, Generic, Parallel C++ Library

Tao Huang, Alin Jula, Jack Perdue, Tagarathi Nageswar Rao, Timmie Smith, Yuriy Solodkyy, Gabriel Tanase, Nathan Thomas, Anna Tikhonova, Olga Tkachyshyn, Nancy M. Amato, Lawrence Rauchwerger
stapl-support@tamu.edu
Parasol Lab, Department of Computer Science, Texas A&M University, http://parasol.tamu.edu/

STAPL: Standard Template Adaptive Parallel Library

STAPL Overview

The Standard Template Adaptive Parallel Library (STAPL) is a framework for parallel C++ code. Its core is a library of ISO Standard C++ components with interfaces similar to the (sequential) ISO C++ standard library (STL).

The goals of STAPL are:
• Ease of use: Shared Object Model provides consistent programming interface, regardless of actual system memory configuration (shared or distributed).
• Efficiency: Application building blocks are based on C++ STL constructs that are extended and automatically tuned for parallel execution.
• Portability: ARMI runtime system hides machine specific details and provides an efficient, uniform communication interface.

[Figure: STAPL architecture. User Application Code; Adaptive Framework; pAlgorithms, pRange, pContainers; Run-time System (ARMI Communication Library, Scheduler, Executor, Performance Monitor); Pthreads, OpenMP, MPI, Native.]

pContainers

Distributed data structure with parallel methods.
• Provides a shared-memory view of distributed data.
• Deploys an Efficient Design: Base classes implement basic functionality. New pContainers can be derived from Base classes with extended and optimized functionality.
• Easy to use defaults provided for basic users; advanced users have the flexibility to specialize and/or optimize methods.
• Supports multiple logical views of the data. For example, a pMatrix can be accessed using a row based view or a column based view.
• Views can be used to specify pContainer (re)distribution.
• Common views provided (e.g. row, column, blocked, block cyclic for pMatrix); users can build specialized views.
• pVector, pList, pHashMap, pGraph, pMatrix provided.

[Figure: pContainer data views. Non-partitioned Shared Memory View of Data; Partitioned Shared Memory View of Data; Data in Shared Memory; Data in Distributed Memory (Th 0 to Th 3). Row Based View: aligned with the distribution. Column Based View: not aligned with the distribution.]

pRange

• Provides a shared view of a distributed work space.
• Subranges of the pRange are executable tasks.
• A task consists of a function object and a description of the data to which it is applied.
• Supports parallel execution.
• Clean expression of computation as parallel task graph.
• Stores Data Dependence Graphs used in processing subranges.

[Figure: pRange defined on a pGraph across two threads. Application data stored in pGraph; Subranges 1 to 6, each paired with a Function, on Thread 1 and Thread 2.]

Applications using STAPL

• Particle Transport Computation: Efficient Massively Parallel Implementation of Discrete Ordinates Particle Transport Calculation. Oil well logging simulation.
• Motion Planning: Probabilistic Roadmap Methods for motion planning with application to protein folding, intelligent CAD, animation, robotics, etc. [Figure labels: normal, misfold, Prion Protein.]
• Seismic Ray Tracing: Simulation of propagation of seismic rays in earth's crust.

Run-time System and ARMI

ARMI Communication Library
• Provides high performance, RMI style communication between threads in program: async_rmi, standard collective operations (i.e., broadcast and reduce).
• Transparent execution using various lower level protocols such as MPI and Pthreads; also, mixed mode operation.
• Controllable tuning: message aggregation.

Effect of Aggregation in ARMI

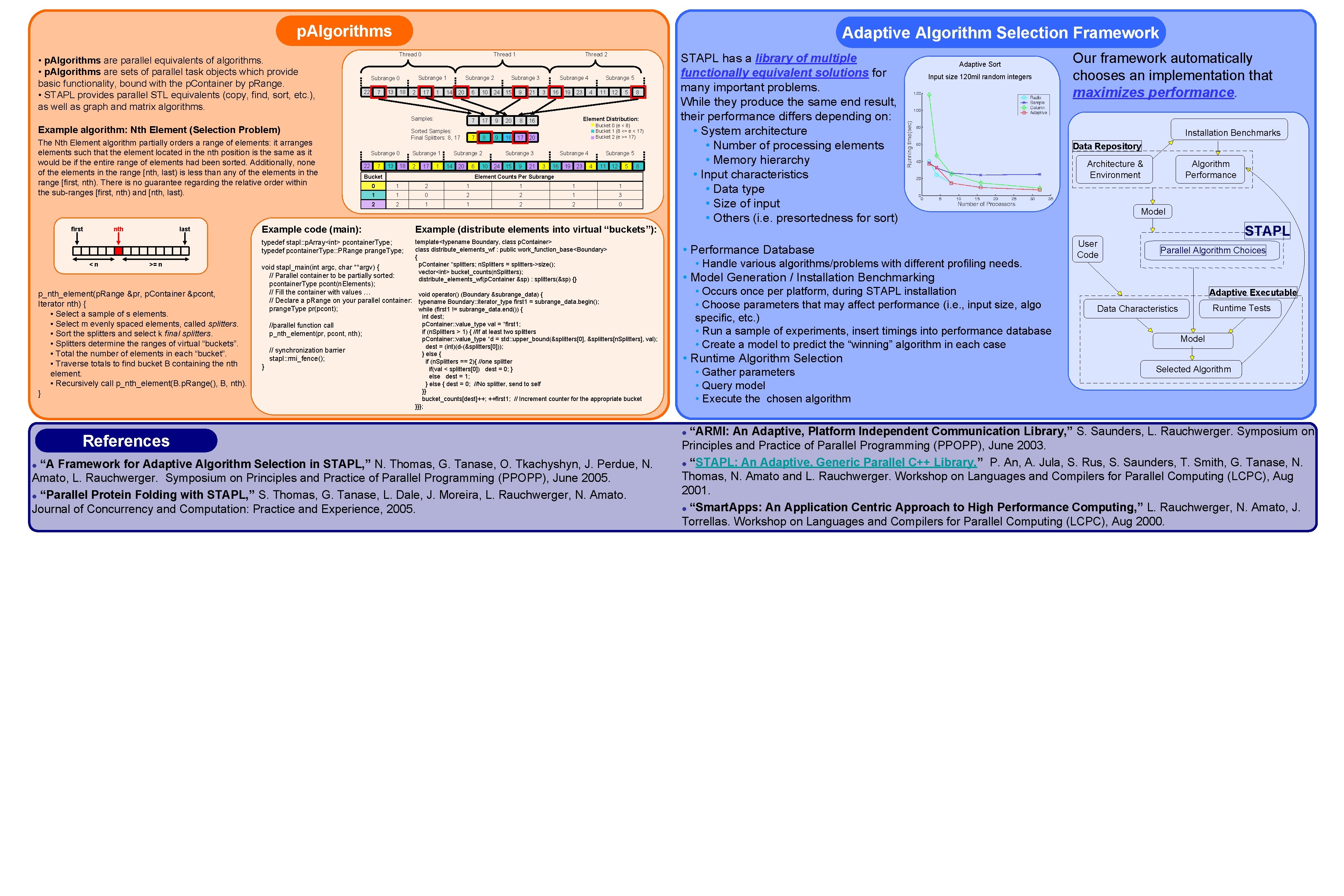

pAlgorithms

• pAlgorithms are parallel equivalents of algorithms.
• pAlgorithms are sets of parallel task objects which provide basic functionality, bound with the pContainer by pRange.
• STAPL provides parallel STL equivalents (copy, find, sort, etc.), as well as graph and matrix algorithms.

Adaptive Algorithm Selection Framework

STAPL has a library of multiple functionally equivalent solutions for many important problems. While they produce the same end result, their performance differs depending on:
• System architecture
  • Number of processing elements
  • Memory hierarchy
• Input characteristics
  • Data type
  • Size of input
  • Others (e.g. presortedness for sort)

Our framework automatically chooses an implementation that maximizes performance.

[Figure: Adaptive Sort, input size 120 million random integers.]

• Performance Database
  • Handle various algorithms/problems with different profiling needs.
• Model Generation / Installation Benchmarking
  • Occurs once per platform, during STAPL installation
  • Choose parameters that may affect performance (e.g., input size, algorithm-specific parameters)
  • Run a sample of experiments, insert timings into performance database
  • Create a model to predict the "winning" algorithm in each case
• Runtime Algorithm Selection
  • Gather parameters
  • Query model
  • Execute the chosen algorithm

[Figure: selection framework. Installation Benchmarks, Architecture & Environment, Data Repository, Algorithm Performance Model, User Code, STAPL Parallel Algorithm Choices, Adaptive Executable, Runtime Tests, Data Characteristics, Model, Selected Algorithm.]

Example algorithm: Nth Element (Selection Problem)

The Nth Element algorithm partially orders a range of elements: it arranges elements such that the element located in the nth position is the same as it would be if the entire range of elements had been sorted. Additionally, none of the elements in the range [nth, last) is less than any of the elements in the range [first, nth). There is no guarantee regarding the relative order within the sub-ranges [first, nth) and [nth, last).

[Figure: range layout after the call: first | elements < n | nth | elements >= n | last.]

p_nth_element(pRange &pr, pContainer &pcont, Iterator nth) {
  • Select a sample of s elements.
  • Select m evenly spaced elements, called splitters.
  • Sort the splitters and select k final splitters.
  • Splitters determine the ranges of virtual "buckets".
  • Total the number of elements in each "bucket".
  • Traverse totals to find bucket B containing the nth element.
  • Recursively call p_nth_element(B.pRange(), B, nth).
}

[Figure: worked example on integers distributed over Subranges 0 to 5 on Threads 0 to 2. Samples: 7, 17, 9, 20, 8, 16. Sorted Samples: 7, 8, 9, 16, 17, 20. Final Splitters: 8, 17. Element Distribution: Bucket 0 (e < 8), Bucket 1 (8 <= e < 17), Bucket 2 (e >= 17). A table of Element Counts Per Subrange and Bucket accompanies the figure.]

Example code (main):

    typedef stapl::pArray<int> pcontainerType;
    typedef pcontainerType::PRange prangeType;

    void stapl_main(int argc, char **argv) {
      // Parallel container to be partially sorted:
      pcontainerType pcont(nElements);
      // Fill the container with values
      …
      // Declare a pRange on your parallel container:
      prangeType pr(pcont);
      // Parallel function call
      p_nth_element(pr, pcont, nth);
      // Synchronization barrier
      stapl::rmi_fence();
    }

Example (distribute elements into virtual "buckets"):

    template<typename Boundary, class pContainer>
    class distribute_elements_wf : public work_function_base<Boundary> {
      pContainer *splitters;
      int nSplitters;
      vector<int> bucket_counts;  // k splitters delimit k + 1 buckets

    public:
      distribute_elements_wf(pContainer &sp)
        : splitters(&sp), nSplitters(sp.size()), bucket_counts(nSplitters + 1) {}

      void operator() (Boundary &subrange_data) {
        typename Boundary::iterator_type first1 = subrange_data.begin();
        while (first1 != subrange_data.end()) {
          int dest;
          typename pContainer::value_type val = *first1;
          if (nSplitters > 1) {  // At least two splitters
            typename pContainer::value_type *sp = &(*splitters)[0];
            typename pContainer::value_type *d = std::upper_bound(sp, sp + nSplitters, val);
            dest = (int)(d - sp);
          } else if (nSplitters == 1) {  // One splitter
            dest = (val < (*splitters)[0]) ? 0 : 1;
          } else {
            dest = 0;  // No splitter, send to self
          }
          bucket_counts[dest]++;  // Increment counter for the appropriate bucket
          ++first1;
        }
      }
    };
