Standards in DPM

- Slides: 37


Outline
- Disk Pool Manager (DPM)
- Grid Data Management
- Why Standards
- POSIX Access (NFS 4.1)
- HTTP / WebDAV

Disk Pool Manager (DPM)

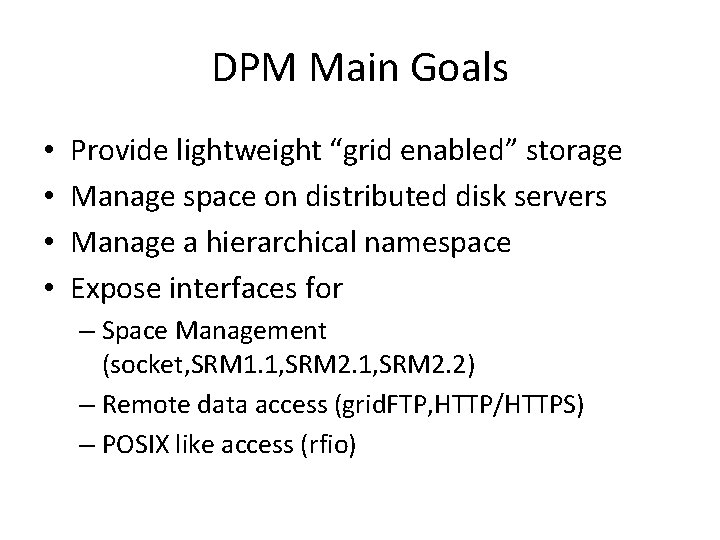

DPM Main Goals
- Provide lightweight “grid-enabled” storage
- Manage space on distributed disk servers
- Manage a hierarchical namespace
- Expose interfaces for:
  - Space management (socket, SRM 1.1, SRM 2.2)
  - Remote data access (GridFTP, HTTP/HTTPS)
  - POSIX-like access (rfio)

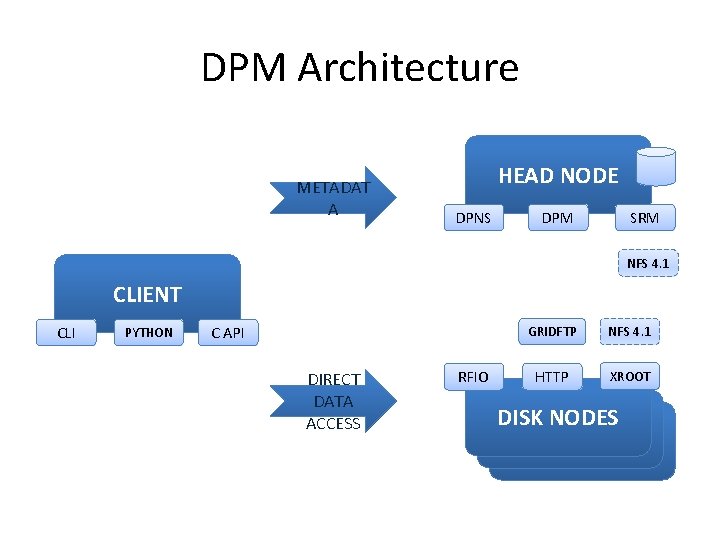

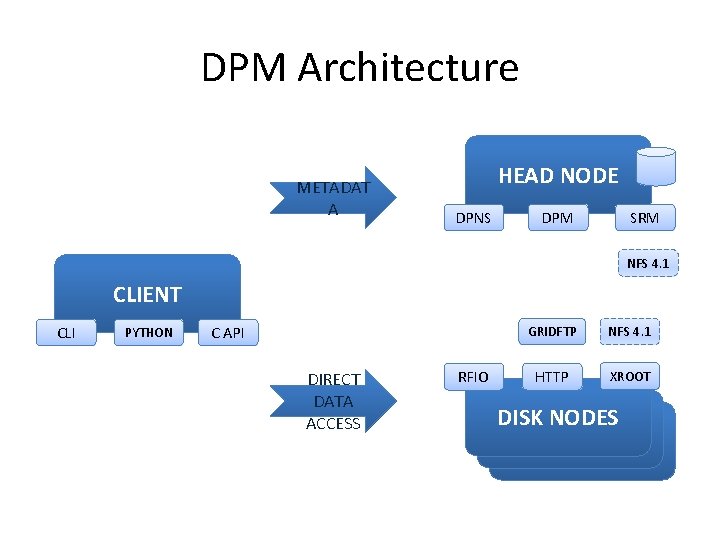

DPM Architecture
- Head node (metadata): DPNS, DPM, SRM, NFS 4.1
- Client: CLI, Python, C API
- Disk nodes (direct data access): RFIO, GridFTP, NFS 4.1, HTTP, xroot

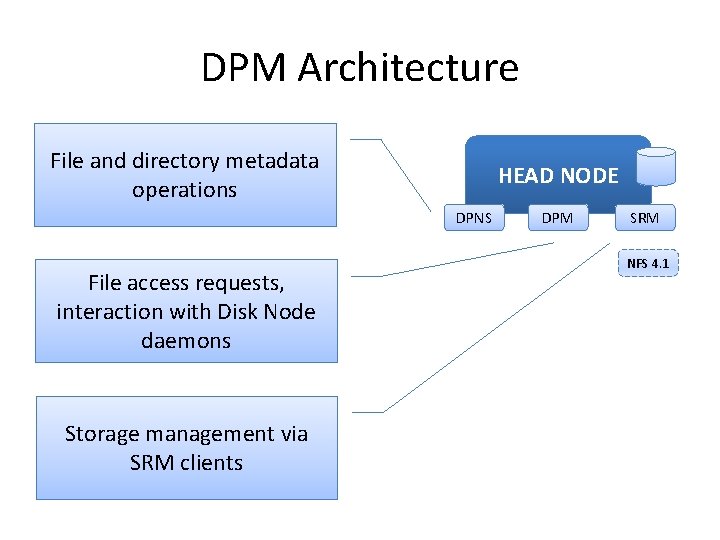

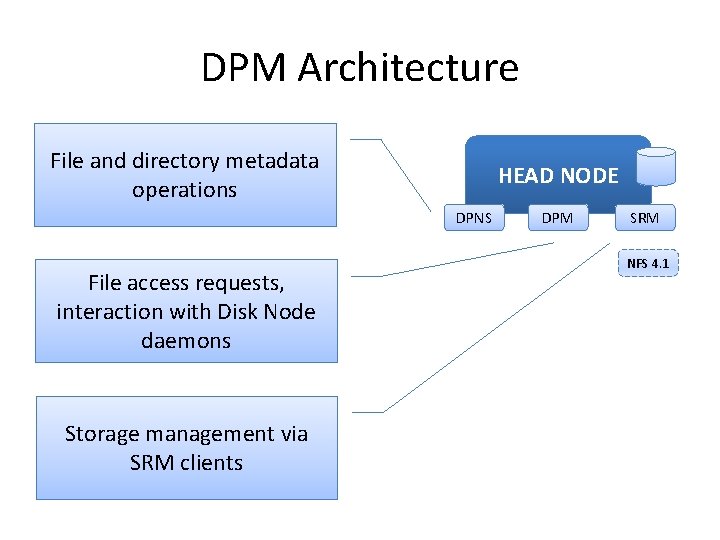

DPM Architecture: Metadata (Head Node)
- DPNS: file and directory metadata operations
- DPM: file access requests, interaction with the disk node daemons
- SRM: storage management via SRM clients
- NFS 4.1

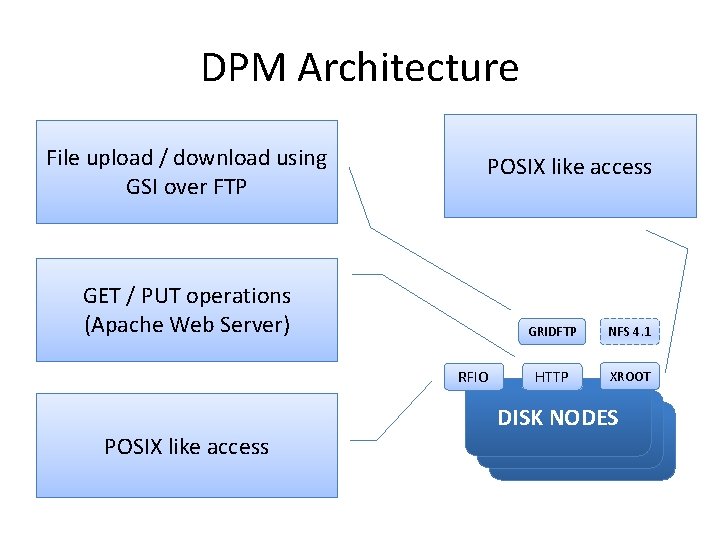

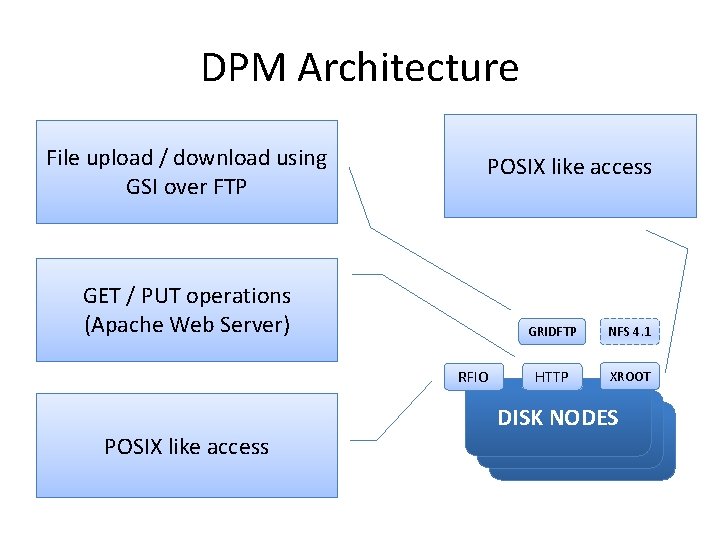

DPM Architecture: Disk Nodes
- RFIO: POSIX-like access
- GridFTP: file upload/download using GSI over FTP
- NFS 4.1: POSIX-like access
- HTTP: GET/PUT operations (Apache web server)
- xroot
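The split between a metadata head node and data-serving disk nodes can be illustrated with a toy model: the client first asks the head node where a file lives, then reads the bytes directly from the disk node. This is a minimal sketch, not DPM code; the classes `HeadNode` and `DiskNode`, the function `client_get`, and the node and path names are all hypothetical.

```python
class DiskNode:
    """Hypothetical disk node: stores file contents by physical path."""

    def __init__(self, name):
        self.name = name
        self.store = {}  # physical file name -> bytes

    def read(self, pfn):
        return self.store[pfn]


class HeadNode:
    """Hypothetical head node: holds metadata only, never file contents."""

    def __init__(self):
        self.catalog = {}  # logical path -> (disk node name, physical path)

    def register(self, lfn, node, pfn):
        self.catalog[lfn] = (node, pfn)

    def locate(self, lfn):
        if lfn not in self.catalog:
            raise FileNotFoundError(lfn)
        return self.catalog[lfn]


def client_get(head, disks, lfn):
    """Two-step access: metadata lookup, then direct data access."""
    node, pfn = head.locate(lfn)   # step 1: ask the head node
    return disks[node].read(pfn)   # step 2: read from the disk node


head = HeadNode()
disks = {"disk01": DiskNode("disk01")}
disks["disk01"].store["/data01/0001"] = b"payload"
head.register("/dpm/example.org/home/myvo/file", "disk01", "/data01/0001")
data = client_get(head, disks, "/dpm/example.org/home/myvo/file")
```

Because the head node only answers "where", adding disk nodes scales data throughput without the metadata server ever touching file contents.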

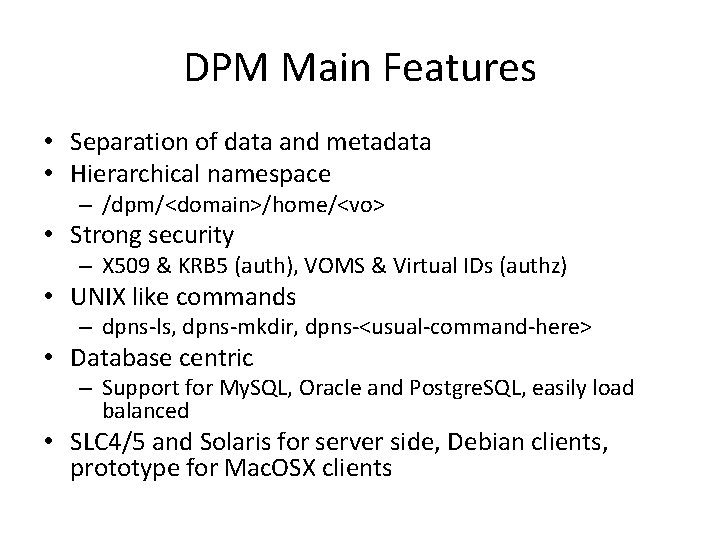

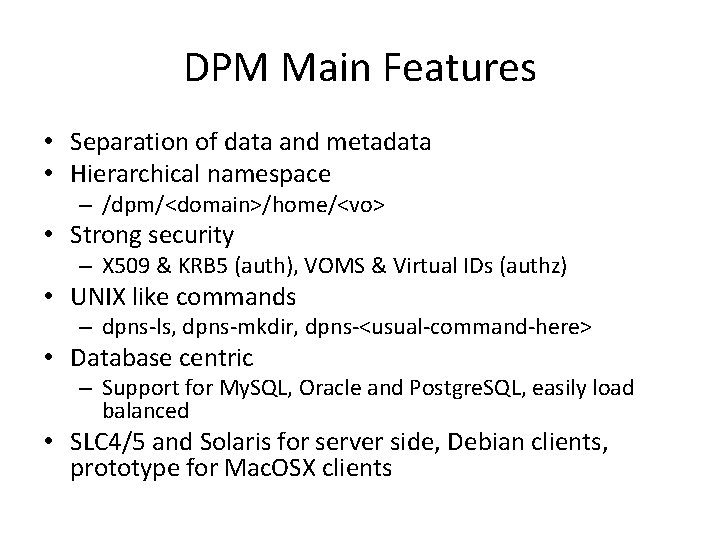

DPM Main Features
- Separation of data and metadata
- Hierarchical namespace
  - `/dpm/<domain>/home/<vo>`
- Strong security
  - X.509 and KRB5 (authentication), VOMS and virtual IDs (authorization)
- UNIX-like commands
  - `dpns-ls`, `dpns-mkdir`, `dpns-<usual-command-here>`
- Database-centric
  - Support for MySQL, Oracle and PostgreSQL; easily load-balanced
- SLC4/5 and Solaris on the server side, Debian clients, prototype Mac OS X clients
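The namespace convention above is simple enough to express as a helper. This is a minimal sketch that only encodes the `/dpm/<domain>/home/<vo>` pattern from the slide; the function names and the example domain and VO values are hypothetical.

```python
def dpm_vo_home(domain: str, vo: str) -> str:
    """Return the VO home directory following /dpm/<domain>/home/<vo>."""
    for name, value in (("domain", domain), ("vo", vo)):
        # A component containing '/' would silently change the tree depth.
        if not value or "/" in value:
            raise ValueError(f"invalid {name}: {value!r}")
    return f"/dpm/{domain}/home/{vo}"


def dpm_path(domain: str, vo: str, *parts: str) -> str:
    """Join further path components below the VO home directory."""
    return "/".join([dpm_vo_home(domain, vo), *parts])


print(dpm_path("cern.ch", "atlas", "user", "data.root"))
# prints /dpm/cern.ch/home/atlas/user/data.root
```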

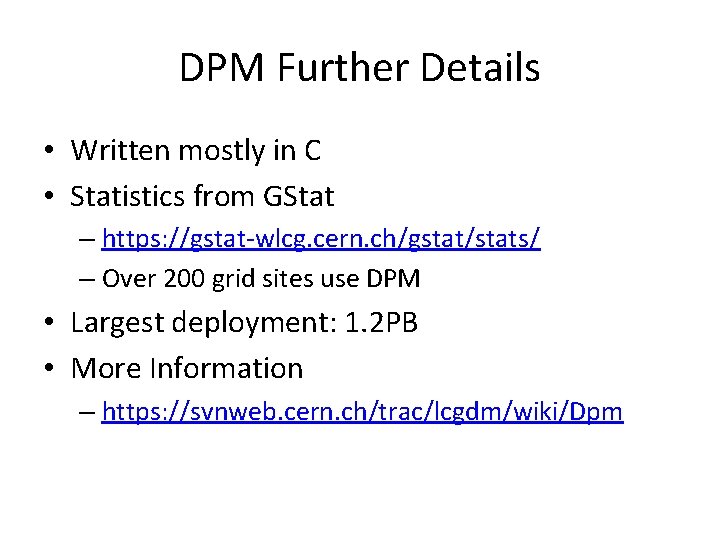

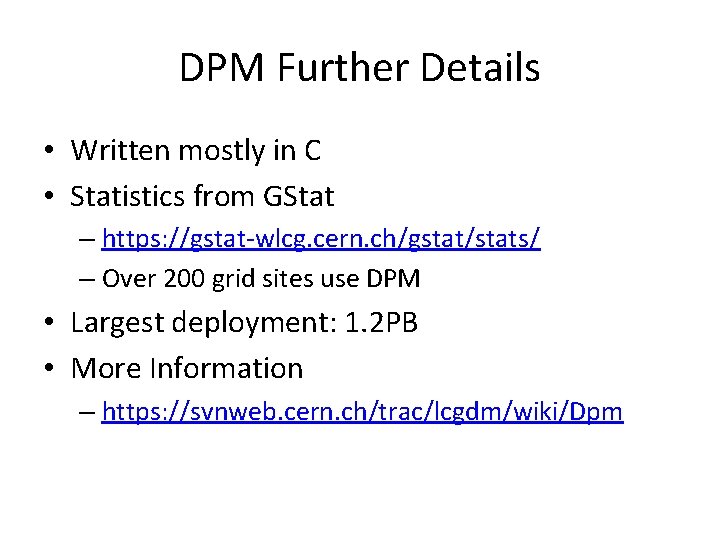

DPM Further Details
- Written mostly in C
- Statistics from GStat
  - https://gstat-wlcg.cern.ch/gstat/stats/
  - Over 200 grid sites use DPM
- Largest deployment: 1.2 PB
- More information
  - https://svnweb.cern.ch/trac/lcgdm/wiki/Dpm

Grid Data Management

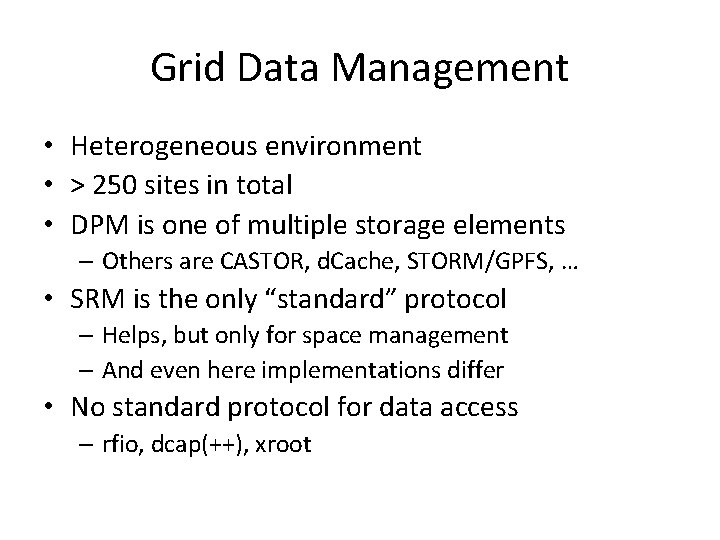

Grid Data Management
- Heterogeneous environment
- More than 250 sites in total
- DPM is one of several storage elements
  - Others are CASTOR, dCache, StoRM/GPFS, …
- SRM is the only “standard” protocol
  - It helps, but only for space management
  - And even here implementations differ
- No standard protocol for data access
  - rfio, dcap(++), xroot

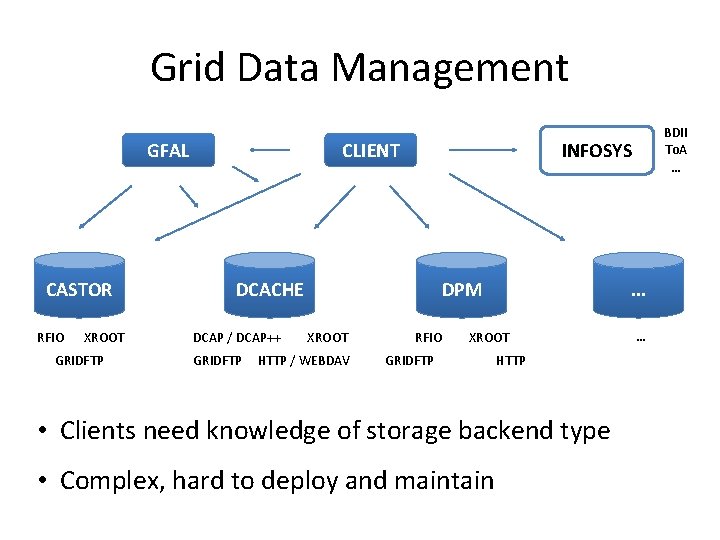

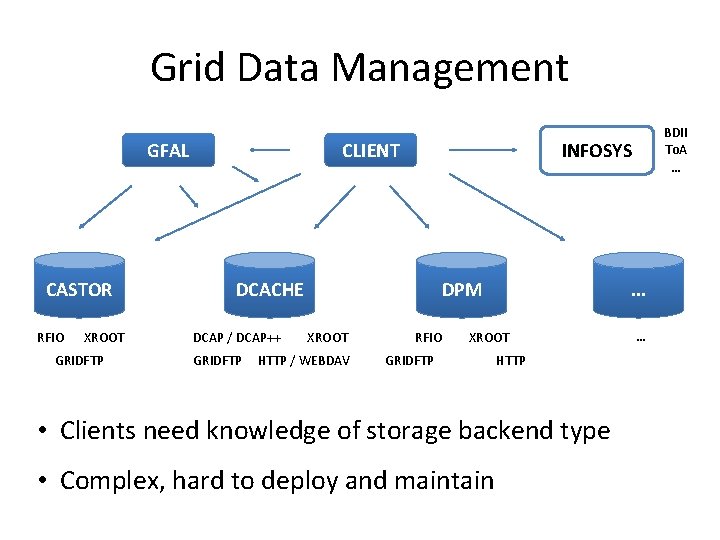

Grid Data Management

Diagram: the client (GFAL) queries the information system (BDII, ToA, …) and must talk to each storage backend (CASTOR, dCache, DPM, …) in that backend's own set of protocols (RFIO, xroot, GridFTP, dcap/dcap++, HTTP/WebDAV, …).
- Clients need knowledge of the storage backend type
- Complex, hard to deploy and maintain …
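The coupling described above can be made concrete: without a common protocol, a client must carry a per-backend table and consult it before every transfer. This is a minimal sketch; the `BACKEND_PROTOCOLS` table is an assumed, illustrative subset loosely based on the diagram labels, not a support matrix, and `pick_protocol` is a hypothetical helper.

```python
# Illustrative subset only: which protocols each backend offers is an
# assumption loosely based on the diagram labels, not a support matrix.
BACKEND_PROTOCOLS = {
    "castor": ["rfio", "xroot", "gridftp"],
    "dcache": ["dcap", "gridftp"],
    "dpm": ["rfio", "xroot", "gridftp", "http"],
}


def pick_protocol(backend: str, client_supports: list) -> str:
    """Return the first protocol offered by `backend` that the client speaks.

    The caller must already know the backend type (e.g. from the
    information system): exactly the coupling the slide criticises.
    """
    if backend not in BACKEND_PROTOCOLS:
        raise ValueError(f"unknown storage backend: {backend!r}")
    for proto in BACKEND_PROTOCOLS[backend]:
        if proto in client_supports:
            return proto
    raise RuntimeError(f"no protocol in common with {backend}")
```

Every new backend or protocol means another table entry, another client library and another round of validation; a single standard protocol removes the table altogether.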

Grid Data Management
- Library dependency issues
- Requirement for user interfaces (UIs)
  - Entry points to the grid
  - Maintained by experts
- Very hard to use “standard” distributions
  - Even the transition from SLC4 to SLC5 is problematic
- Validation takes a long time

Why Standards?
- Accessibility
  - Access is not limited to operating system X, version Y, with library Z
- Validation
  - Using common validation and test tools
- Stability
  - Evolution is discussed in a wide group
- Ease of implementation
  - Sharing of experiences, common code base
- No vendor lock-in
- …

POSIX Data Access & NFS 4.1

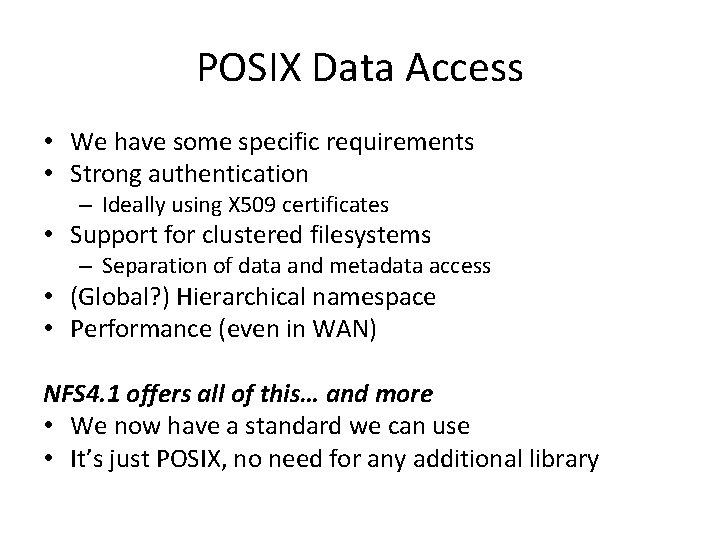

POSIX Data Access
- We have some specific requirements:
  - Strong authentication
    - Ideally using X.509 certificates
  - Support for clustered filesystems
    - Separation of data and metadata access
  - (Global?) hierarchical namespace
  - Performance (even over the WAN)
- NFS 4.1 offers all of this… and more
  - We now have a standard we can use
  - It's just POSIX, no need for any additional library
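"It's just POSIX" means client code needs nothing beyond the operating system's file API once the namespace is mounted over NFS 4.1. This is a minimal sketch using only `os.open`/`os.read`; the mount point in the usage note is a hypothetical example, and the kernel NFS client is assumed to handle protocol, security and caching underneath.

```python
import os


def read_head(path: str, size: int = 4096) -> bytes:
    """Read up to `size` bytes from the start of a file using plain POSIX calls.

    Nothing here is NFS-specific: on an NFS 4.1 mount the operating
    system's NFS client does the protocol work underneath these calls.
    """
    fd = os.open(path, os.O_RDONLY)
    try:
        chunks = []
        remaining = size
        while remaining > 0:
            chunk = os.read(fd, remaining)
            if not chunk:  # end of file reached
                break
            chunks.append(chunk)
            remaining -= len(chunk)
        return b"".join(chunks)
    finally:
        os.close(fd)
```

Usage, assuming a hypothetical mount of the DPM namespace at `/mnt/dpm`: `read_head("/mnt/dpm/example.org/home/myvo/file.root")`. The same call works unchanged on a local disk, which is the point.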

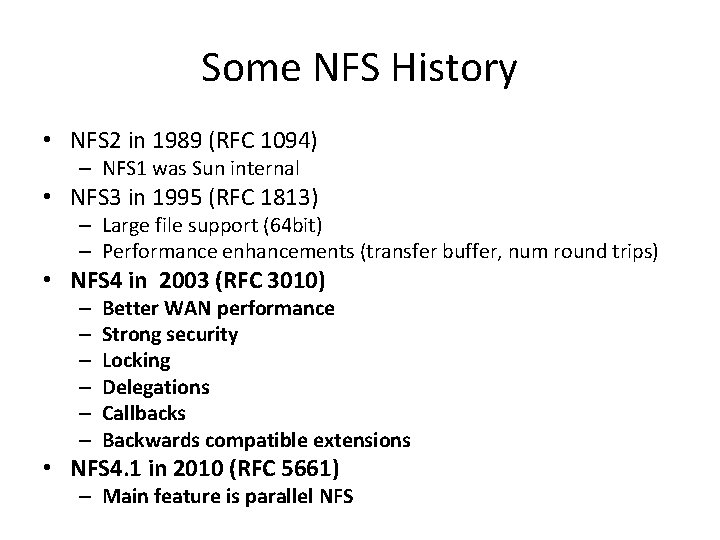

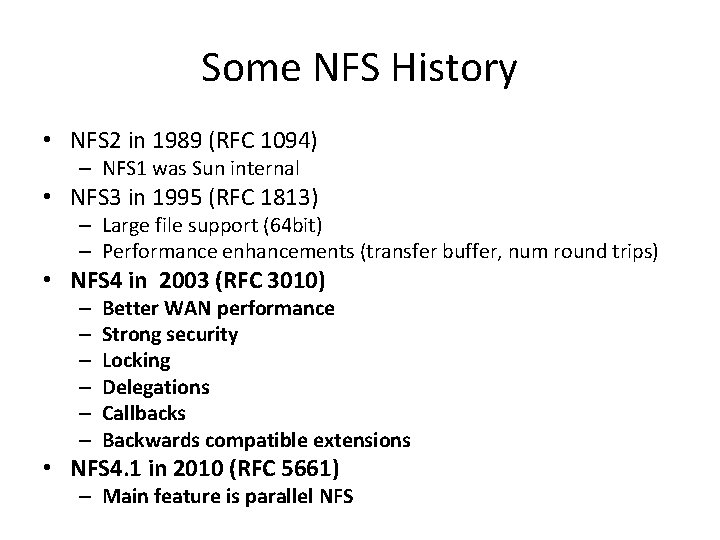

Some NFS History
- NFS 2 in 1989 (RFC 1094)
  - NFS 1 was Sun-internal
- NFS 3 in 1995 (RFC 1813)
  - Large file support (64-bit)
  - Performance enhancements (larger transfer sizes, fewer round trips)
- NFS 4 in 2000 (RFC 3010), revised in 2003 (RFC 3530)
  - Better WAN performance
  - Strong security
  - Locking
  - Delegations
  - Callbacks
  - Backwards-compatible extensions
- NFS 4.1 in 2010 (RFC 5661)
  - Main feature is parallel NFS (pNFS)

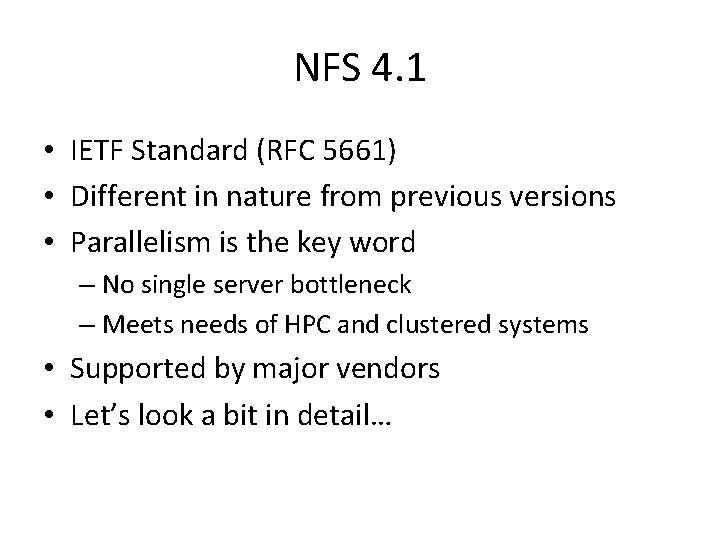

NFS 4.1
- IETF standard (RFC 5661)
- Different in nature from previous versions
- Parallelism is the key word
  - No single-server bottleneck
  - Meets the needs of HPC and clustered systems
- Supported by major vendors
- Let's look at it in a bit more detail…

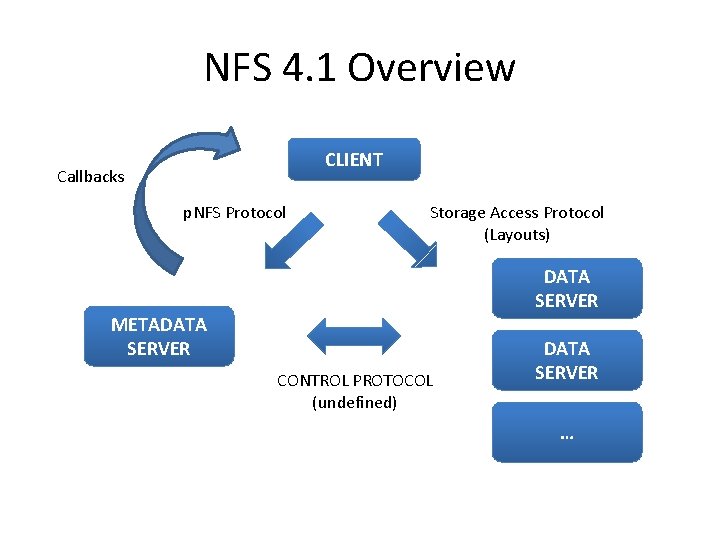

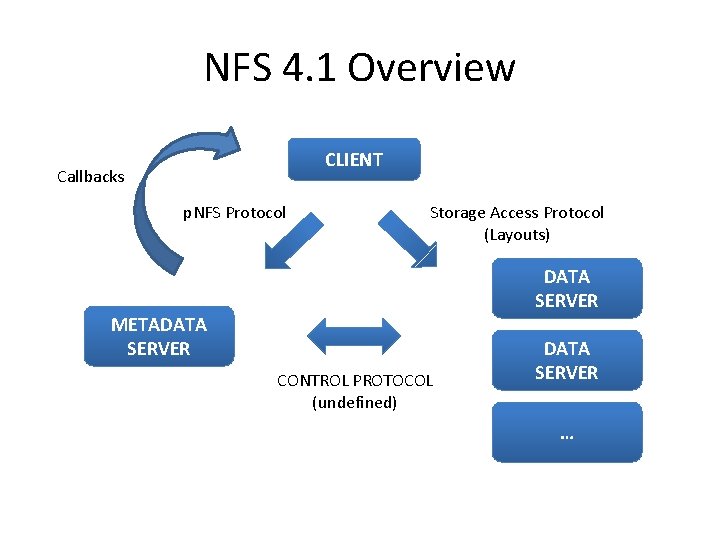

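NFS 4.1 Overview

Diagram: the client talks to the metadata server over the pNFS protocol, and the server can reach the client through callbacks; the client accesses the data servers directly over a storage access protocol described by layouts; the control protocol between the metadata server and the data servers is left undefined by the standard.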

NFS 4.1 Feature 1 – Unified Protocol
- One protocol, one port (2049)
- Previous versions required additional protocols
  - mount, lock, status, …

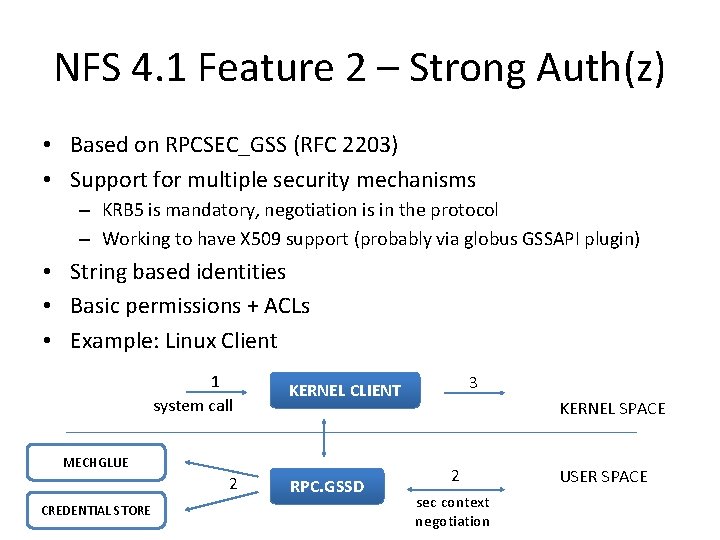

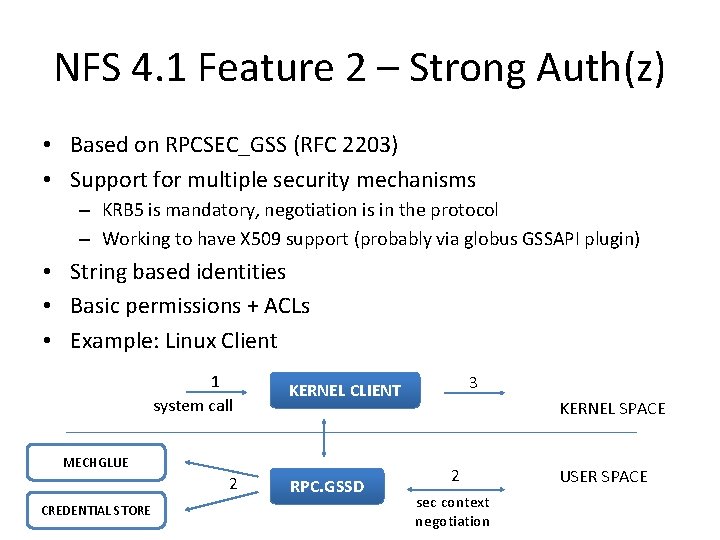

NFS 4.1 Feature 2 – Strong Auth(z)
- Based on RPCSEC_GSS (RFC 2203)
- Support for multiple security mechanisms
  - KRB5 is mandatory; negotiation is part of the protocol
  - Working on X.509 support (probably via the Globus GSSAPI plugin)
- String-based identities
- Basic permissions + ACLs
- Example, Linux client (diagram): a system call (1) reaches the kernel NFS client, which relies on rpc.gssd in user space, with the GSSAPI mechanism glue (mechglue) and the credential store, for security context negotiation (2)

NFS 4.1 Feature 3 – Bulk Operations
- The protocol defines only two procedures
  - NULL and COMPOUND
- The COMPOUND procedure holds operations
  - Open, Read, Write, Close, …
- Far fewer round trips
  - Better performance, especially over the WAN
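The round-trip saving of COMPOUND can be shown with a toy server that counts requests. This is a simplified sketch, not the wire protocol: `ToyServer`, the `"OK"`/`"ERR"` statuses and the use of file names as filehandles are hypothetical, and real NFSv4.1 compounds also carry operations such as SEQUENCE and PUTFH that are omitted here. It does keep one real COMPOUND rule: operations run in order and processing stops at the first failure.

```python
class ToyServer:
    """Hypothetical stand-in for an NFS server holding a flat set of files."""

    def __init__(self, files):
        self.files = files       # name -> contents; names double as filehandles
        self.round_trips = 0     # requests received

    def _execute(self, op, arg, fh):
        """Run one operation against the current filehandle `fh`."""
        if op == "LOOKUP" and arg in self.files:
            return "OK", arg, None
        if op == "READ" and fh in self.files:
            return "OK", fh, self.files[fh][:arg]
        return "ERR", fh, None

    def call(self, op, arg, fh=None):
        """One operation per request (chatty style)."""
        self.round_trips += 1
        return self._execute(op, arg, fh)

    def compound(self, ops):
        """Many operations per request; stop at the first failure."""
        self.round_trips += 1
        fh, results = None, []
        for op, arg in ops:
            status, fh, data = self._execute(op, arg, fh)
            results.append((op, status, data))
            if status != "OK":
                break
        return results


files = {"data.root": b"ROOTDATA"}

chatty = ToyServer(files)
status, fh, _ = chatty.call("LOOKUP", "data.root")
status, fh, data = chatty.call("READ", 4, fh)

bulk = ToyServer(files)
results = bulk.compound([("LOOKUP", "data.root"), ("READ", 4)])

print(chatty.round_trips, bulk.round_trips)  # 2 1
```

Over a WAN link with tens of milliseconds of latency, halving the number of requests for a lookup-then-read matters far more than server-side processing time.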

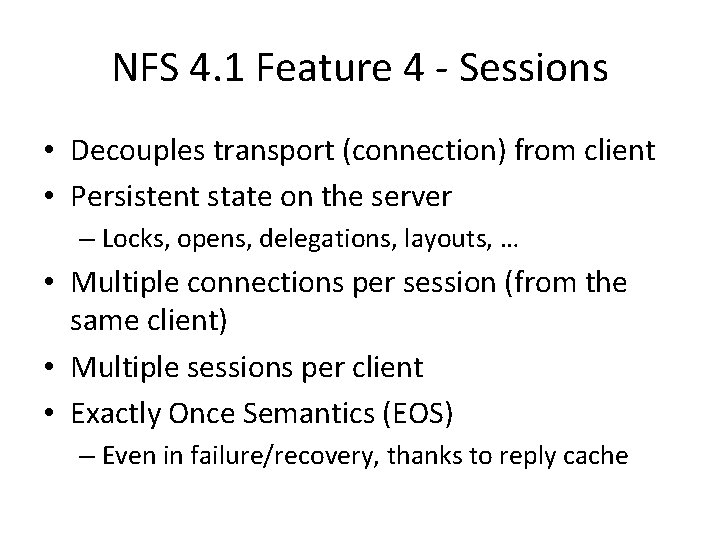

NFS 4.1 Feature 4 – Sessions
- Decouple the transport (connection) from the client
- Persistent state on the server
  - Locks, opens, delegations, layouts, …
- Multiple connections per session (from the same client)
- Multiple sessions per client
- Exactly Once Semantics (EOS)
  - Even across failure and recovery, thanks to the reply cache
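Exactly-once semantics rest on the session's slot table: each request names a slot and a sequence ID, and the server caches the last reply per slot. This is a simplified sketch of that idea, not the RFC 5661 state machine; the `Session` class and its reply strings are hypothetical, while `NFS4ERR_SEQ_MISORDERED` is the protocol's error name for an out-of-order sequence ID.

```python
class Session:
    """Toy session: a slot table with a one-entry reply cache per slot."""

    def __init__(self, num_slots):
        # Each slot remembers the last sequence ID executed and its reply.
        self.slots = [{"seqid": 0, "reply": None} for _ in range(num_slots)]
        self.executions = 0  # how many requests were actually executed

    def handle(self, slot_id, seqid, request):
        slot = self.slots[slot_id]
        if seqid == slot["seqid"] and slot["reply"] is not None:
            # Retransmission (e.g. the reply was lost with a broken
            # connection): replay the cached reply, do not execute again.
            return slot["reply"]
        if seqid != slot["seqid"] + 1:
            return "NFS4ERR_SEQ_MISORDERED"
        self.executions += 1
        reply = f"done: {request}"  # stands in for real operation results
        slot["seqid"], slot["reply"] = seqid, reply
        return reply


session = Session(num_slots=2)
first = session.handle(0, 1, "CREATE a.txt")
retry = session.handle(0, 1, "CREATE a.txt")  # same slot and seqid: a resend
```

A non-idempotent operation such as CREATE is therefore safe to resend after a reconnect: the retry returns the original result instead of failing with "file exists".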

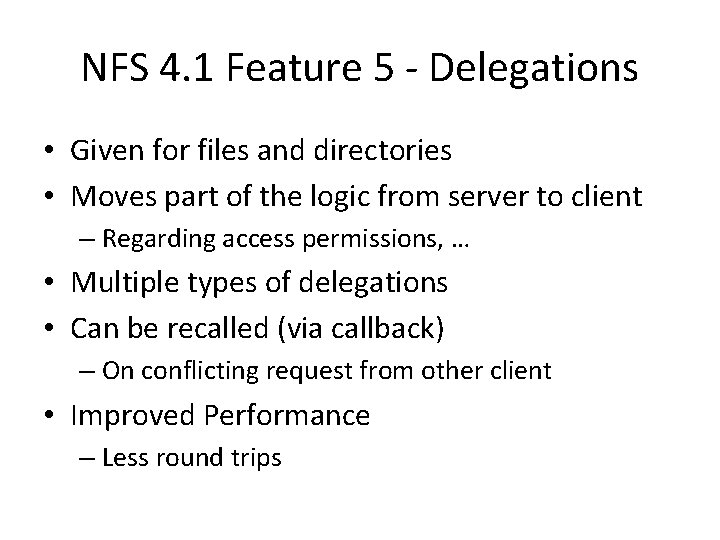

NFS 4.1 Feature 5 – Delegations
- Granted for files and directories
- Move part of the logic from the server to the client
  - E.g. access permission checks, …
- Multiple types of delegations
- Can be recalled (via callback)
  - On a conflicting request from another client
- Improved performance
  - Fewer round trips
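The grant-and-recall cycle can be sketched with a toy server that hands out a read delegation and recalls it when another client opens the file for writing. This is a simplified model under stated assumptions: `DelegationServer` is hypothetical, it allows only one read delegation per file, and write delegations are not modelled; the real recall callback is the CB_RECALL operation.

```python
class DelegationServer:
    """Toy model: one read delegation per file, recalled on conflict."""

    def __init__(self):
        self.delegations = {}  # path -> client holding a read delegation
        self.recalls = []      # (client, path) callbacks sent

    def open(self, client, path, mode):
        holder = self.delegations.get(path)
        if holder is not None and holder != client and mode == "write":
            # Conflicting request: call back the holder before proceeding.
            self.recalls.append((holder, path))
            del self.delegations[path]
        if mode == "read" and path not in self.delegations:
            self.delegations[path] = client
            return "read-delegation"
        return "no-delegation"


srv = DelegationServer()
srv.open("A", "/f", "read")   # A may now serve reads from its cache
srv.open("B", "/f", "write")  # recall sent to A before B writes
```

While it holds the delegation, client A can answer opens and reads locally without asking the server, which is where the saved round trips come from.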

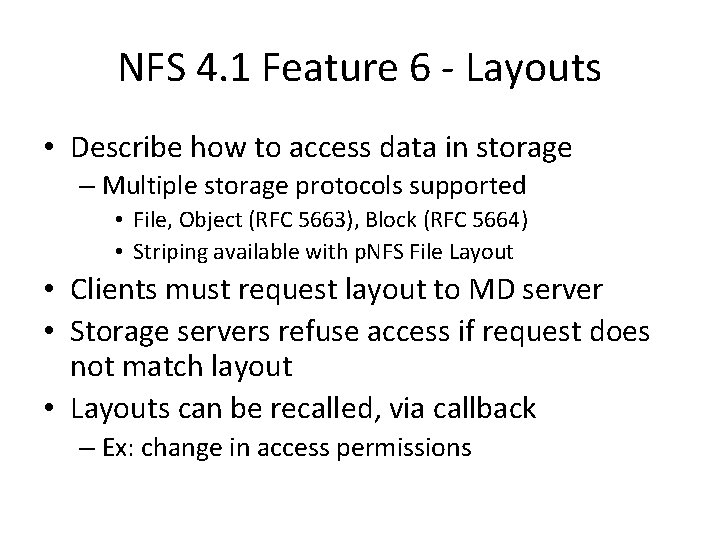

NFS 4.1 Feature 6 – Layouts
- Describe how to access data in storage
  - Multiple storage protocols supported
    - File, Object (RFC 5664), Block (RFC 5663)
- Striping available with the pNFS file layout
- Clients must request a layout from the metadata server
- Storage servers refuse access if a request does not match the layout
- Layouts can be recalled, via callback
  - E.g. on a change in access permissions
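Striping is what lets a client read from several data servers in parallel once it holds a layout. This is a minimal sketch of round-robin striping with densely packed per-server files, in the spirit of the pNFS file layout but not a transcription of RFC 5661; `locate`, `split_read` and the server names are hypothetical.

```python
def locate(offset, stripe_unit, servers):
    """Map a file offset to (data server, offset on that server)."""
    if offset < 0 or stripe_unit <= 0 or not servers:
        raise ValueError("bad layout or offset")
    stripe_index = offset // stripe_unit             # stripe unit number in the file
    server = servers[stripe_index % len(servers)]    # round-robin over servers
    # Dense packing: each server stores only its own stripe units, back to back.
    local = (stripe_index // len(servers)) * stripe_unit + offset % stripe_unit
    return server, local


def split_read(offset, length, stripe_unit, servers):
    """Split a byte range into (server, local offset, length) pieces
    that a client could fetch in parallel."""
    pieces = []
    end = offset + length
    while offset < end:
        # Read up to the end of the current stripe unit, or the range end.
        chunk = min(stripe_unit - offset % stripe_unit, end - offset)
        server, local = locate(offset, stripe_unit, servers)
        pieces.append((server, local, chunk))
        offset += chunk
    return pieces
```

For example, with a 4-byte stripe unit over `["ds1", "ds2", "ds3"]`, `split_read(2, 8, 4, ...)` yields one piece from each of the three data servers.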

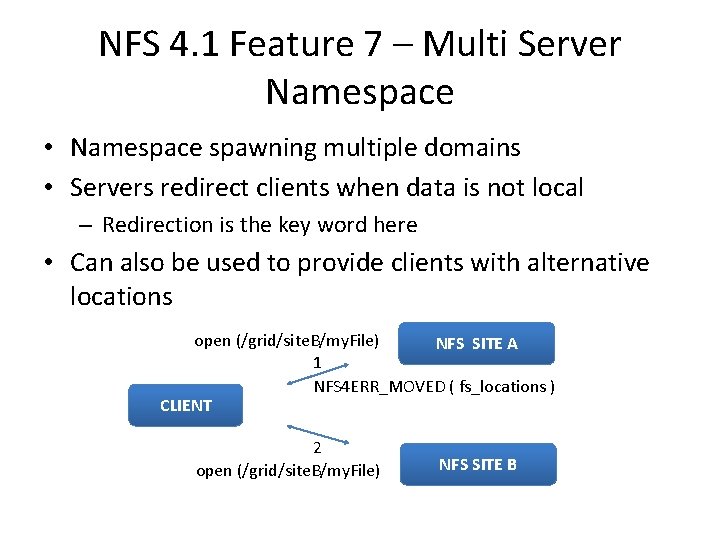

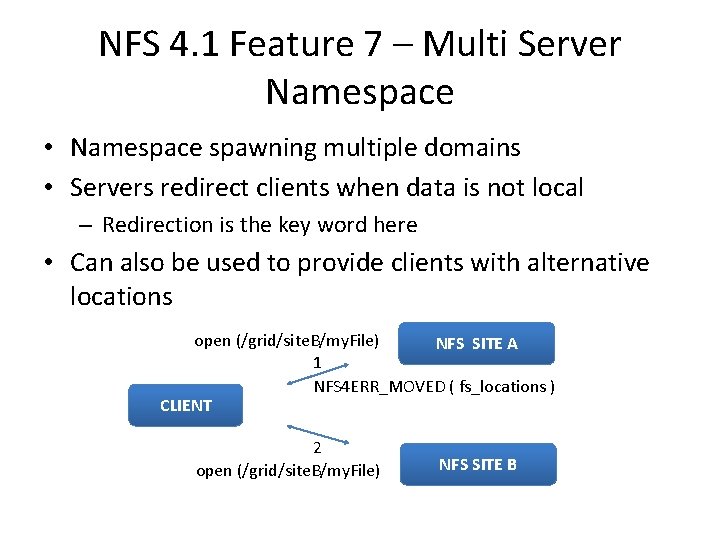

NFS 4.1 Feature 7 – Multi-Server Namespace
- A namespace spanning multiple domains
- Servers redirect clients when data is not local
  - Redirection is the key word here
- Can also be used to provide clients with alternative locations
- Example (diagram): (1) the client calls open(/grid/siteB/myFile) on the NFS server at site A, which replies NFS4ERR_MOVED (fs_locations); (2) the client repeats open(/grid/siteB/myFile) on the NFS server at site B
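The redirection in the example can be sketched as a client loop that follows NFS4ERR_MOVED replies to the next server. This is a simplified model: `ToyNfsServer` and `client_open` are hypothetical, the error here carries the new location directly (a real client retrieves the fs_locations attribute), and a hop limit guards against referral loops.

```python
class ToyNfsServer:
    """Hypothetical server with local files and referrals to other sites."""

    def __init__(self, name, local_files, referrals):
        self.name = name
        self.local_files = local_files  # path -> contents
        self.referrals = referrals      # path prefix -> server holding it

    def open(self, path):
        if path in self.local_files:
            return "NFS4_OK", self.local_files[path]
        for prefix, location in self.referrals.items():
            if path.startswith(prefix):
                return "NFS4ERR_MOVED", location  # plays the fs_locations role
        return "NFS4ERR_NOENT", None


def client_open(servers, start, path, max_hops=4):
    """Open `path` starting at server `start`, following redirections."""
    server = servers[start]
    for _ in range(max_hops):
        status, payload = server.open(path)
        if status != "NFS4ERR_MOVED":
            return server.name, status, payload
        server = servers[payload]  # retry at the location we were sent to
    raise RuntimeError("too many redirections")


servers = {
    "siteA": ToyNfsServer("siteA", {}, {"/grid/siteB/": "siteB"}),
    "siteB": ToyNfsServer("siteB", {"/grid/siteB/myFile": b"data"}, {}),
}
result = client_open(servers, "siteA", "/grid/siteB/myFile")
```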

NFS 4.1 Additional Goodies
- Clients provided by industry
  - Linux, Solaris, Windows
- Client caching for free
  - It's just there… we benefit from experts implementing caching in the OS
- Support from major industry vendors
  - NetApp, Panasas, IBM, Oracle, EMC
  - Waiting for wide client availability
  - dCache also supports NFS 4.1

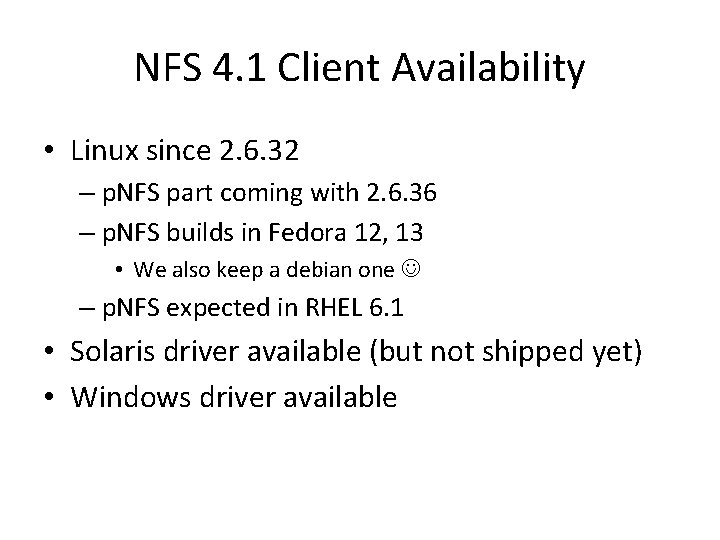

NFS 4.1 Client Availability
- Linux since 2.6.32
  - pNFS part coming with 2.6.36
  - pNFS builds in Fedora 12, 13
- We also maintain a Debian build
  - pNFS expected in RHEL 6.1
- Solaris driver available (but not shipped yet)
- Windows driver available

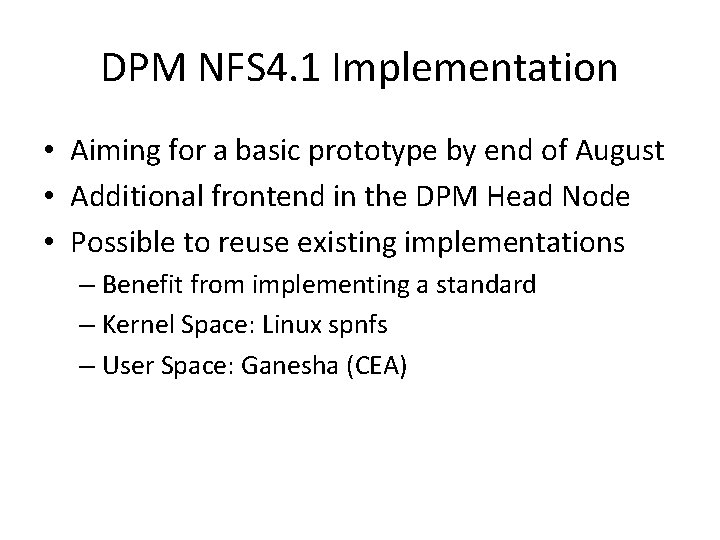

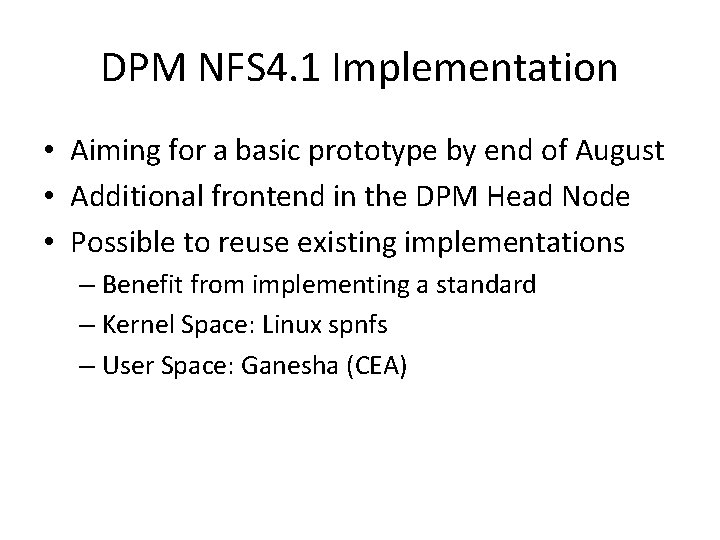

DPM NFS 4.1 Implementation
- Aiming for a basic prototype by the end of August
- Additional frontend in the DPM head node
- Possible to reuse existing implementations
  - A benefit of implementing a standard
  - Kernel space: Linux spNFS
  - User space: Ganesha (CEA)

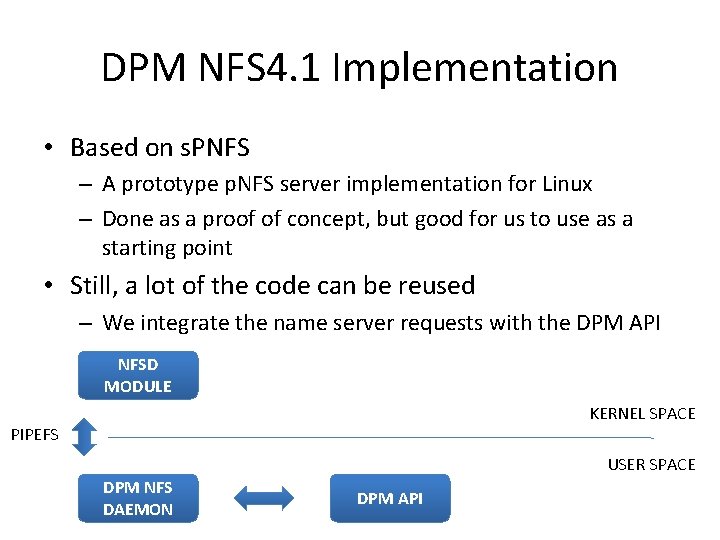

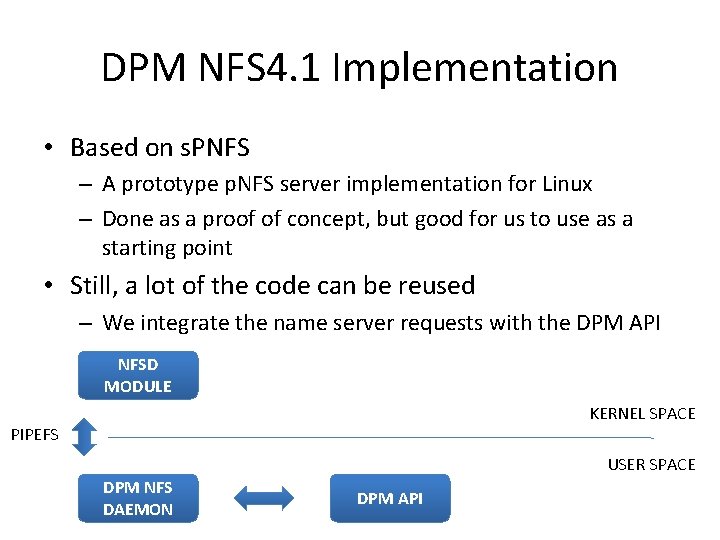

DPM NFS 4.1 Implementation
- Based on spNFS
  - A prototype pNFS server implementation for Linux
  - Done as a proof of concept, but a good starting point for us
- Still, much of the code can be reused
  - We integrate the name server requests with the DPM API
- Diagram: the nfsd module in kernel space communicates through pipefs with the DPM NFS daemon in user space, which calls the DPM API

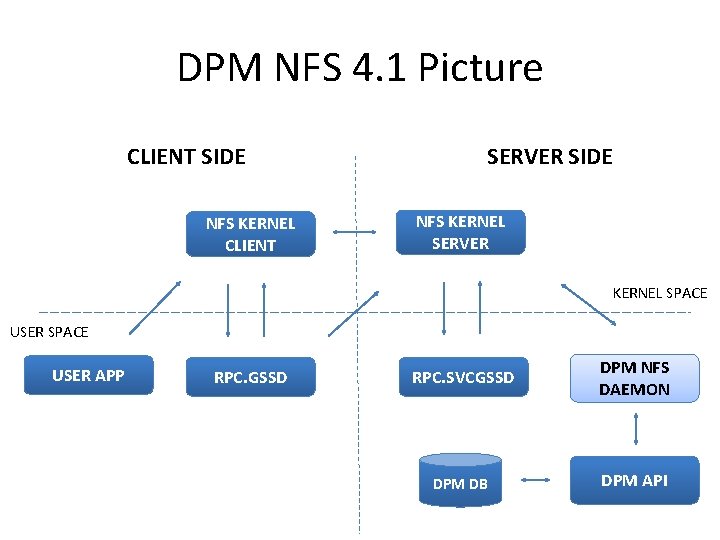

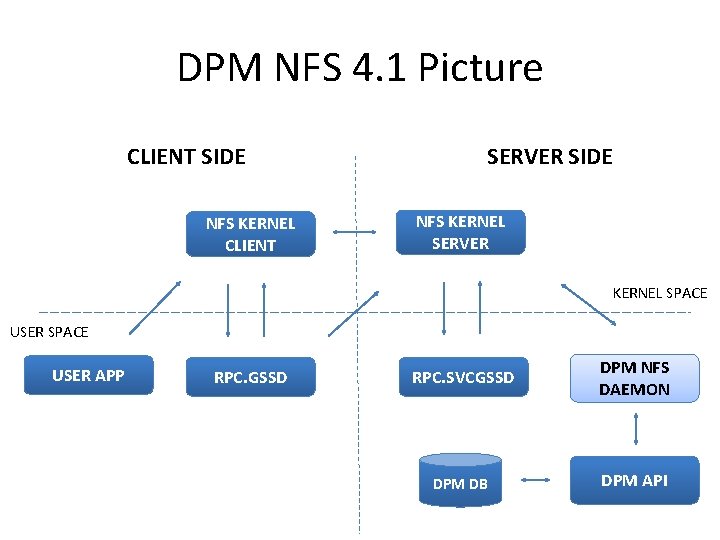

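DPM NFS 4.1 Picture

Diagram: on the client side, a user application goes through the NFS kernel client, with rpc.gssd in user space; on the server side, the NFS kernel server, with rpc.svcgssd, passes requests to the DPM NFS daemon, which uses the DPM API and the DPM database.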

More Info: https://svnweb.cern.ch/trac/lcgdm/wiki/Dpm/Dev/NFS41

Data Transfer

HTTP(S)
- DPM already supports HTTP(S)
  - As a transfer protocol
- Easy authentication/authorization
  - Newer versions of OpenSSL with X.509 proxy support make this even easier
- Implemented as an Apache module or CGI
- Firewall-friendly
- Clients? They are everywhere…
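Because plain HTTPS clients exist everywhere, a download authenticated with an X.509 proxy needs only a standard library. This is a minimal sketch, not a DPM client: the host, paths and helper names are hypothetical, the proxy location `/tmp/x509up_u<uid>` and CA directory `/etc/grid-security/certificates` are typical grid conventions assumed here, and the server must be configured to accept proxy certificates. A deployment may also answer with a redirect to a disk node, which this sketch does not follow.

```python
import http.client
import ssl


def grid_https_connection(host, port=443, proxy_pem=None, ca_dir=None):
    """Build (but do not open) an HTTPS connection that can present an
    X.509 proxy. `proxy_pem` holds the certificate chain and private key
    in one file; `ca_dir` is a hashed directory of trusted CA certificates."""
    ctx = ssl.create_default_context()
    if ca_dir:
        ctx.load_verify_locations(capath=ca_dir)
    if proxy_pem:
        # With no keyfile given, the private key is read from the same file.
        ctx.load_cert_chain(certfile=proxy_pem)
    return http.client.HTTPSConnection(host, port, context=ctx)


def download(conn, path):
    """Plain HTTP GET; raises on any status other than 200."""
    conn.request("GET", path)
    resp = conn.getresponse()
    if resp.status != 200:
        raise OSError(f"GET {path} failed: {resp.status} {resp.reason}")
    return resp.read()
```

Usage, with hypothetical names: `download(grid_https_connection("dpm-head.example.org", proxy_pem="/tmp/x509up_u1000", ca_dir="/etc/grid-security/certificates"), "/dpm/example.org/home/myvo/file")`.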

WebDAV
- Extensions to HTTP 1.1 for document management (RFC 2518)
- Enables wide collaboration
  - Locking
  - Namespace management (copy, move, …)
  - Metadata/properties on files
- Maybe not so interesting for HEP users
  - But very popular within other communities
  - dCache has had very good feedback on it
- Implementation not yet scheduled, but planned
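WebDAV's namespace and metadata features are plain HTTP methods with XML bodies, so a directory listing is a single PROPFIND request. This is a minimal sketch using the standard library; `PROPFIND_BODY`, `parse_multistatus` and `list_collection` are hypothetical helpers, and only two standard DAV properties (`getcontentlength`, `resourcetype`) are requested.

```python
import xml.etree.ElementTree as ET

DAV = "{DAV:}"  # ElementTree prefix for the DAV: XML namespace

PROPFIND_BODY = (
    '<?xml version="1.0"?>'
    '<D:propfind xmlns:D="DAV:"><D:prop>'
    "<D:getcontentlength/><D:resourcetype/>"
    "</D:prop></D:propfind>"
)


def parse_multistatus(xml_text):
    """Return {href: (is_collection, size or None)} from a 207 response body."""
    entries = {}
    for resp in ET.fromstring(xml_text).findall(f"{DAV}response"):
        href = resp.findtext(f"{DAV}href")
        is_dir, size = False, None
        # A response may contain several propstat blocks (e.g. 200 and 404).
        for prop in resp.findall(f"{DAV}propstat/{DAV}prop"):
            if prop.find(f"{DAV}resourcetype/{DAV}collection") is not None:
                is_dir = True
            text = prop.findtext(f"{DAV}getcontentlength")
            if text:
                size = int(text)
        entries[href] = (is_dir, size)
    return entries


def list_collection(conn, path):
    """List a collection one level deep over an http.client connection."""
    conn.request("PROPFIND", path, body=PROPFIND_BODY,
                 headers={"Depth": "1", "Content-Type": "application/xml"})
    resp = conn.getresponse()
    if resp.status != 207:  # 207 Multi-Status
        raise OSError(f"PROPFIND {path} failed: {resp.status}")
    return parse_multistatus(resp.read())
```

The same connection object could carry MKCOL, COPY or MOVE requests for the namespace operations listed above.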

Conclusions
- Our environment is not standards-friendly
- Standard protocols exist today that fit all our use cases
- Benefits for users, developers, admins
  - Usability, maintainability, evolution
- DPM will continue focusing on standards
  - And will soon use them for all our use cases
- Ongoing work in the same direction within the EMI data management group