STAM: Assessing Software Inspection Processes (© 2000, KPA Ltd.)

- Slides: 35

Assessing Software Inspection Processes with STAM* (Software Trouble Assessment Matrix). Ron S. Kenett, KPA Ltd. and Tel Aviv University. © 2000, KPA Ltd.

*This presentation is extracted from SOFTWARE PROCESS QUALITY: Management and Control by Kenett and Baker, Marcel Dekker Inc., 1998. It was first published as "Assessing Software development and Inspection Errors",

Presentation agenda

- Software life cycles
- Inspection processes
- Measurement programs
- Assessing software inspection processes

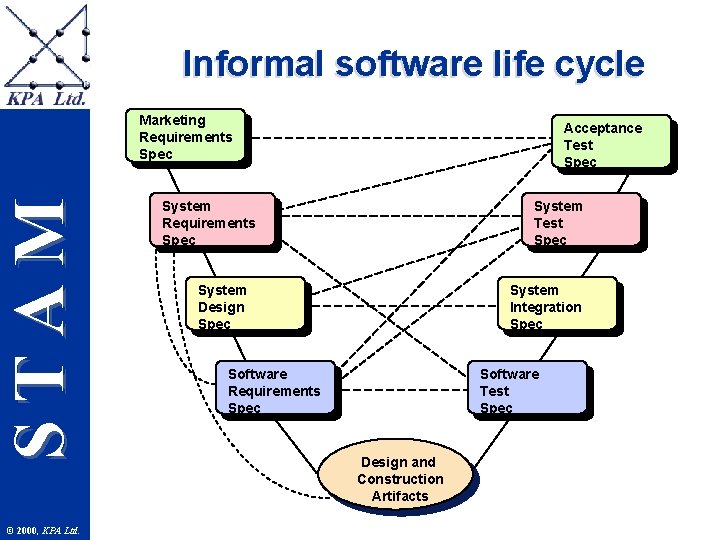

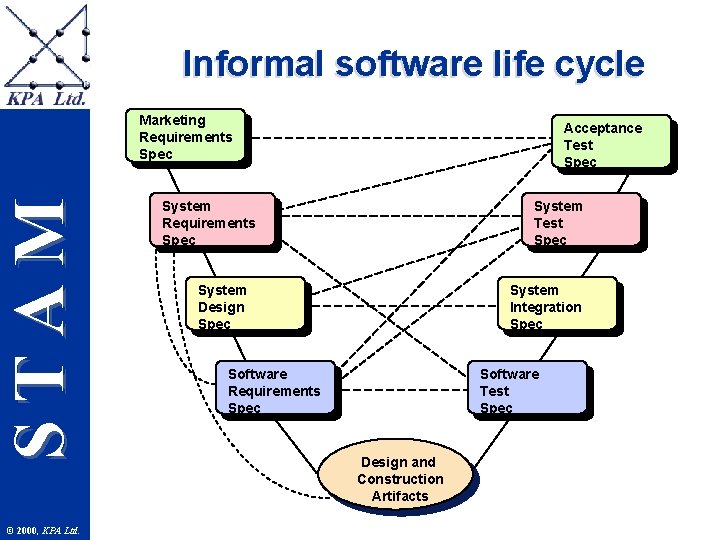

Informal software life cycle

- Marketing Requirements Spec
- Acceptance Test Spec
- System Requirements Spec
- System Design Spec
- System Integration Spec
- Software Requirements Spec
- Software Test Spec
- Design and Construction Artifacts

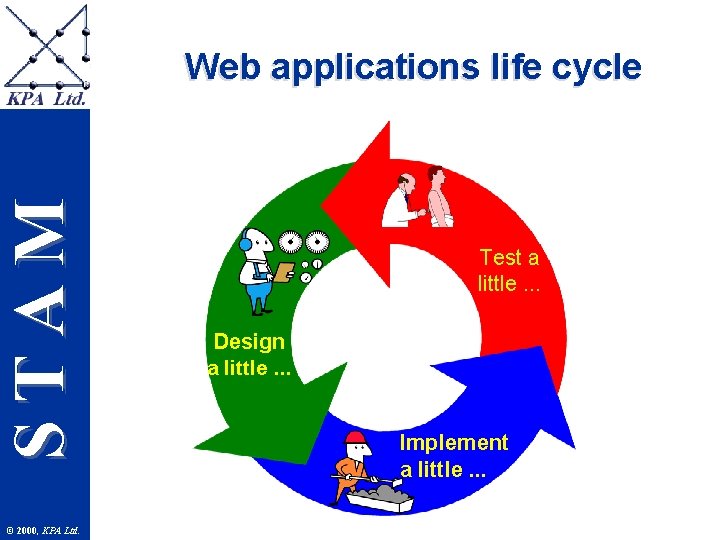

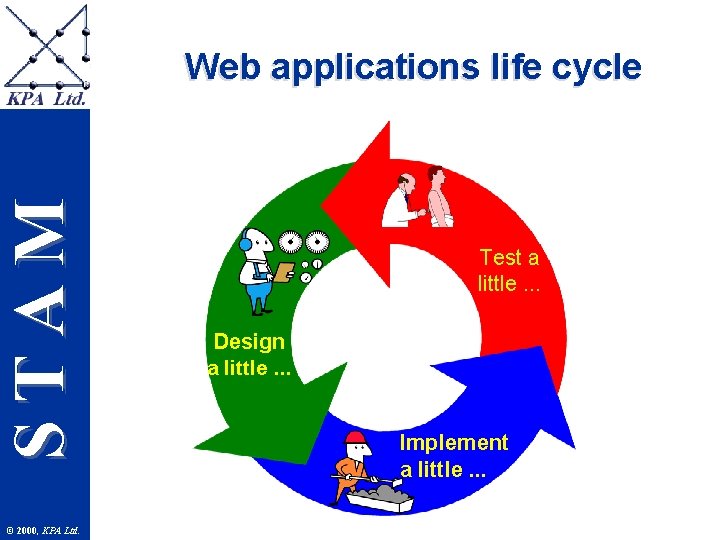

Web applications life cycle: Design a little... Implement a little... Test a little...

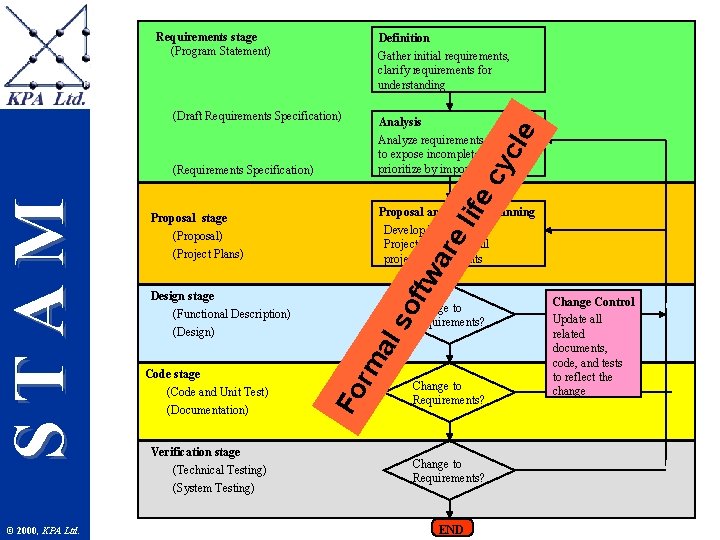

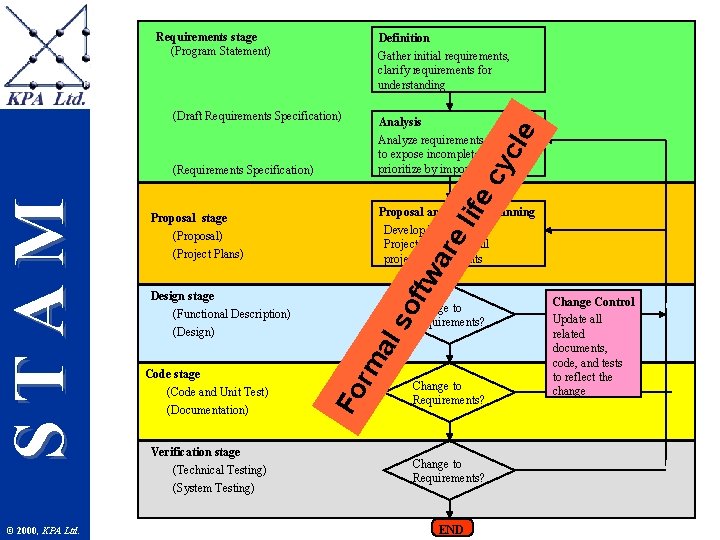

Formal software life cycle

- Proposal stage (Proposal). Proposal and Project Planning: develop proposal and project plans to fulfill project requirements. (Project Plans)
- Requirements stage (Program Statement)
  - Definition: gather initial requirements, clarify requirements for understanding. (Draft Requirements Specification)
  - Analysis: analyze requirements, categorize to expose incomplete areas, and prioritize by importance. (Requirements Specification)
- Design stage (Functional Description) (Design)
- Code stage (Code and Unit Test) (Documentation)
- Verification stage (Technical Testing) (System Testing)
- Change to Requirements? (decision point, shown twice in the diagram). Change Control: update all related documents, code, and tests to reflect the change.
- END

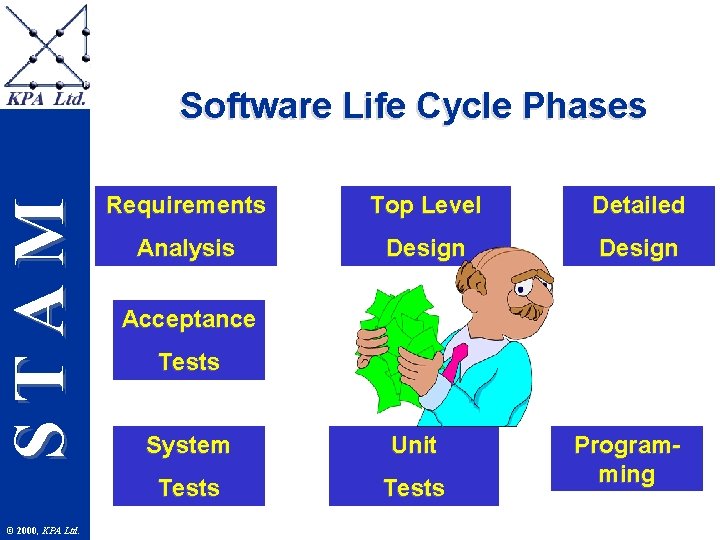

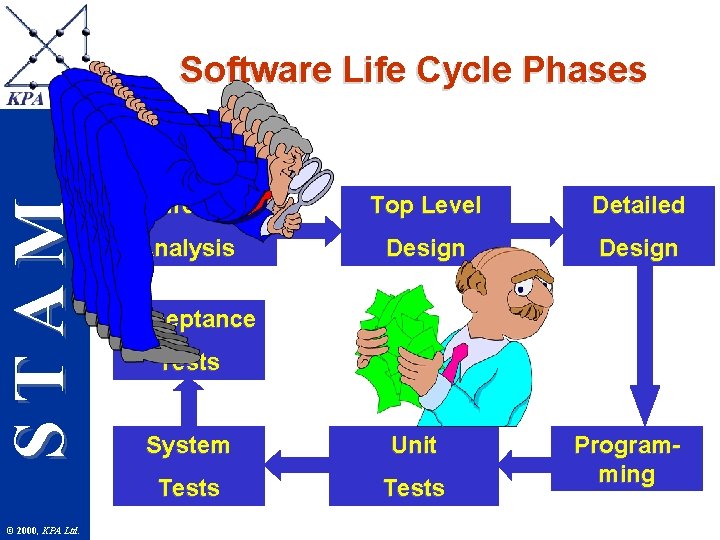

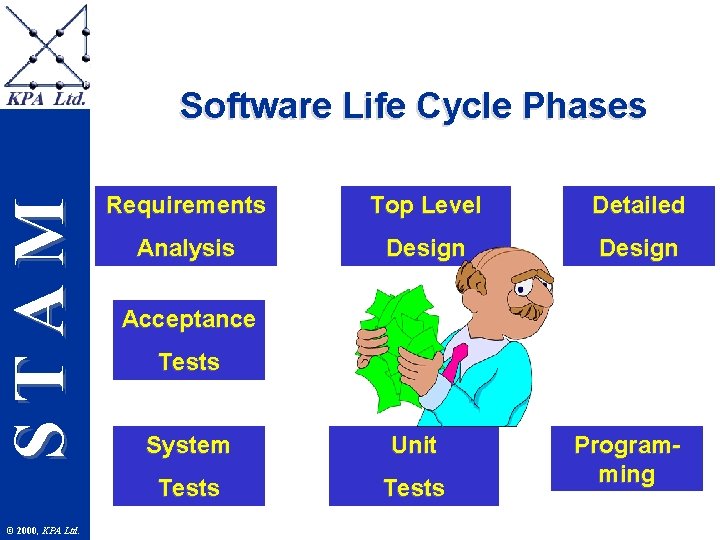

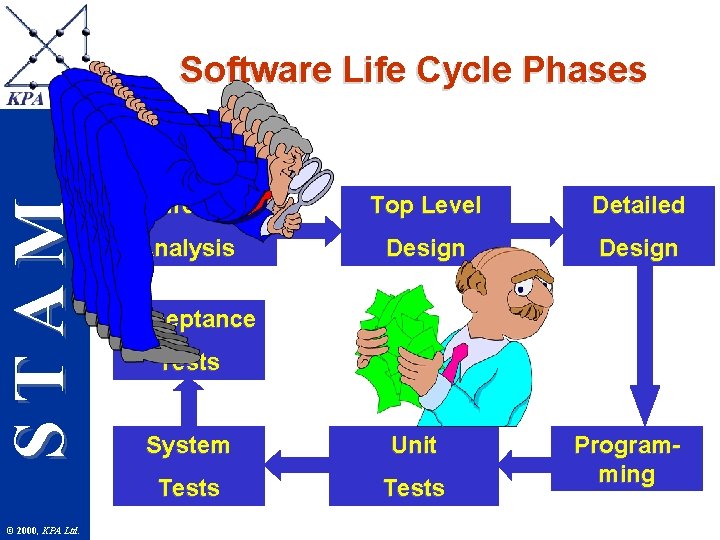

Software Life Cycle Phases

- Requirements Analysis
- Top Level Design
- Detailed Design
- Programming
- Unit Tests
- System Tests
- Acceptance Tests

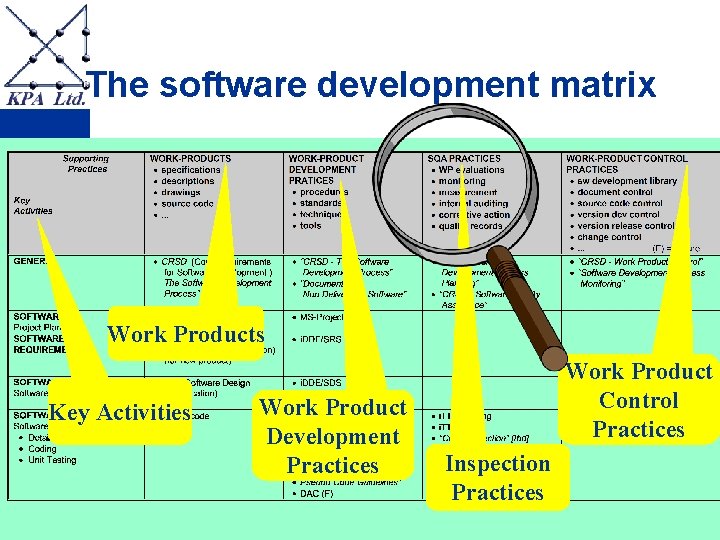

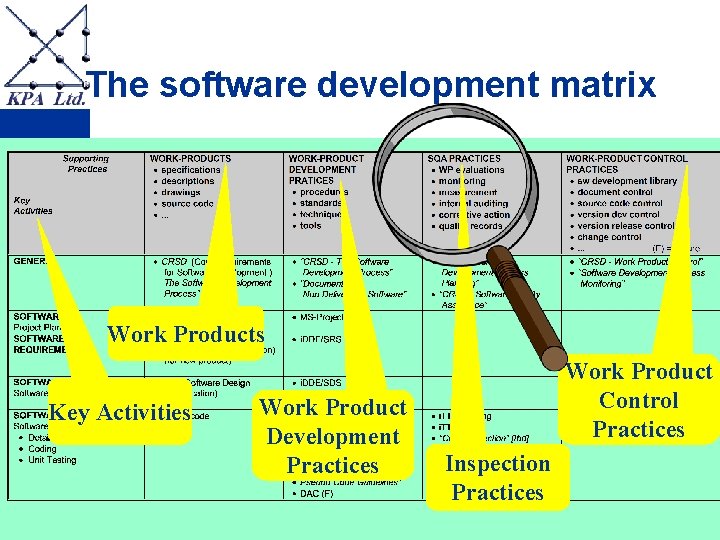

The software development matrix: Work Products, Key Activities, Work Product Development Practices, Work Product Control Practices, Inspection Practices

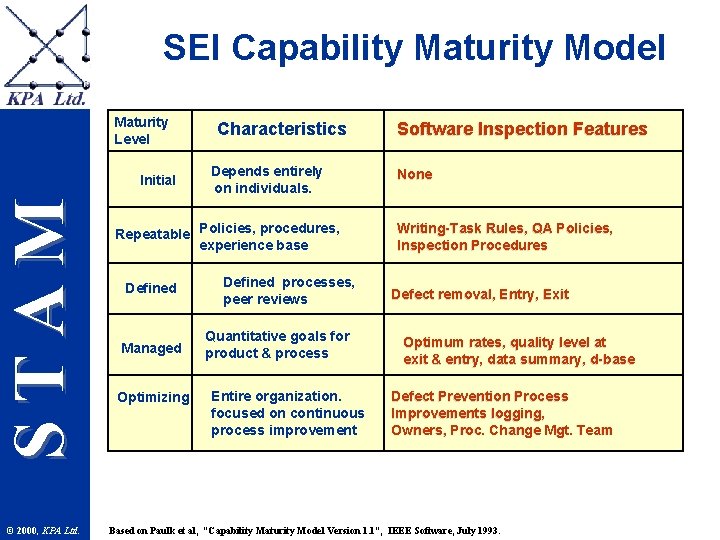

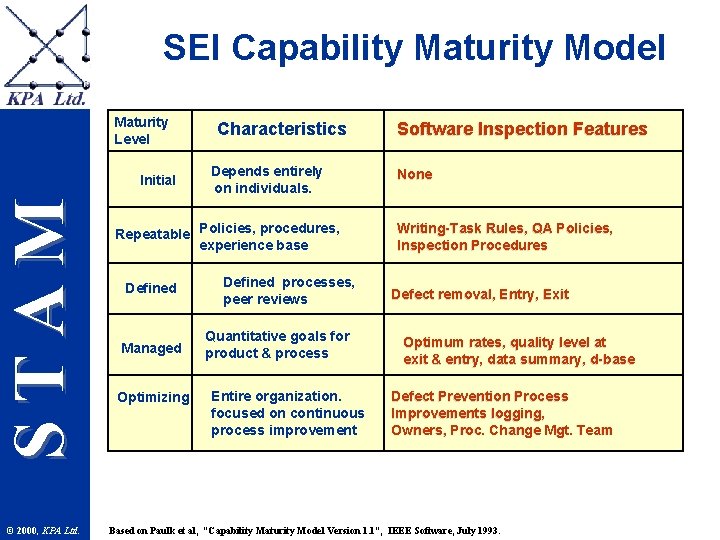

SEI Capability Maturity Model

| Maturity Level | Characteristics | Software Inspection Features |
| --- | --- | --- |
| Initial | Depends entirely on individuals | None |
| Repeatable | Policies, procedures, experience base | Writing-Task Rules, QA Policies, Inspection Procedures |
| Defined | Defined processes, peer reviews | Defect removal, Entry, Exit |
| Managed | Quantitative goals for product & process | Optimum rates, quality level at exit & entry, data summary, d-base |
| Optimizing | Entire organization focused on continuous process improvement | Defect Prevention Process Improvements logging, Owners, Proc. Change Mgt. Team |

Based on Paulk et al., “Capability Maturity Model, Version 1.1”, IEEE Software, July 1993.

Software Measurement Programs

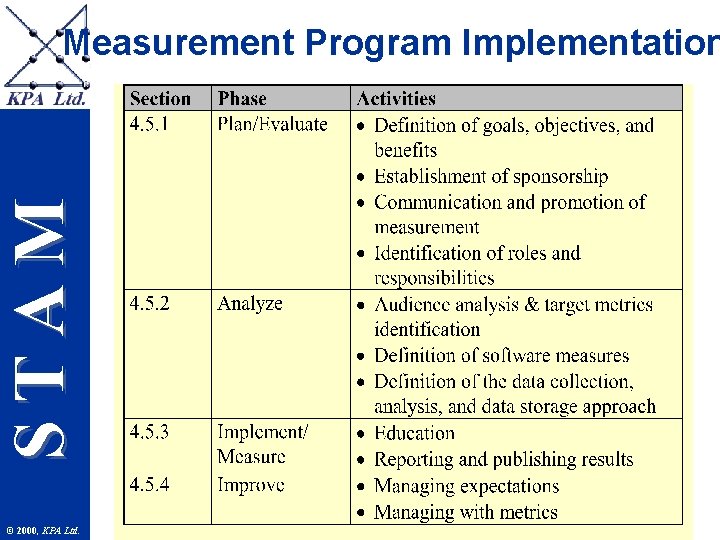

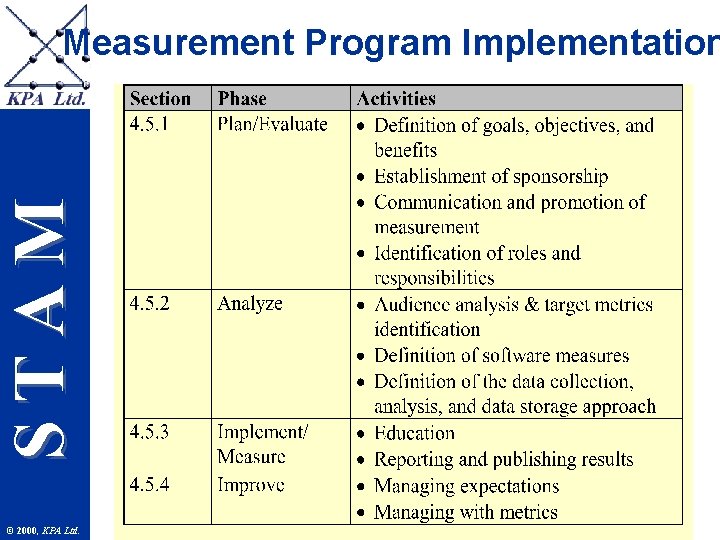

Measurement Program Implementation

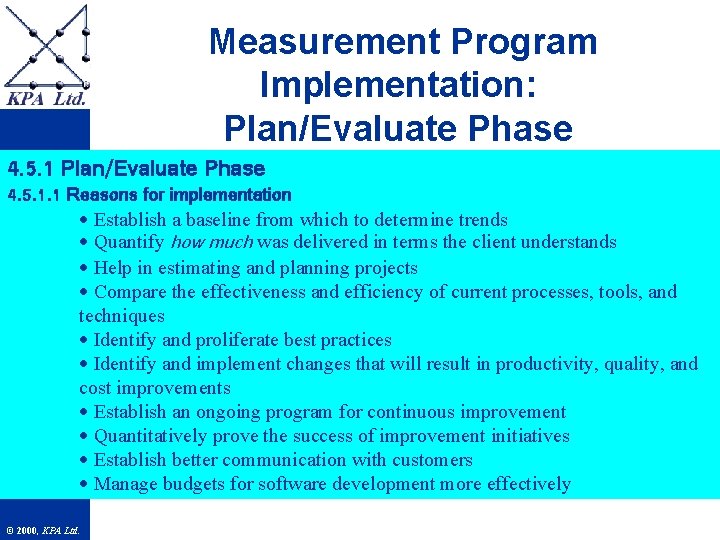

Measurement Program Implementation: Plan/Evaluate Phase

4.5.1 Plan/Evaluate Phase

4.5.1.1 Reasons for implementation

- Establish a baseline from which to determine trends
- Quantify how much was delivered in terms the client understands
- Help in estimating and planning projects
- Compare the effectiveness and efficiency of current processes, tools, and techniques
- Identify and proliferate best practices
- Identify and implement changes that will result in productivity, quality, and cost improvements
- Establish an ongoing program for continuous improvement
- Quantitatively prove the success of improvement initiatives
- Establish better communication with customers
- Manage budgets for software development more effectively

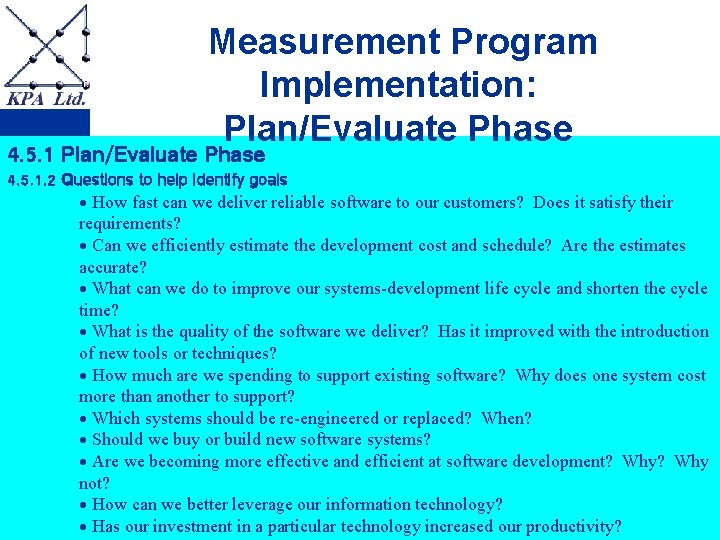

Measurement Program Implementation: Plan/Evaluate Phase

4.5.1 Plan/Evaluate Phase

4.5.1.2 Questions to help identify goals

- How fast can we deliver reliable software to our customers? Does it satisfy their requirements?
- Can we efficiently estimate the development cost and schedule? Are the estimates accurate?
- What can we do to improve our systems-development life cycle and shorten the cycle time?
- What is the quality of the software we deliver? Has it improved with the introduction of new tools or techniques?
- How much are we spending to support existing software? Why does one system cost more than another to support?
- Which systems should be re-engineered or replaced? When?
- Should we buy or build new software systems?
- Are we becoming more effective and efficient at software development? Why not?
- How can we better leverage our information technology?
- Has our investment in a particular technology increased our productivity?

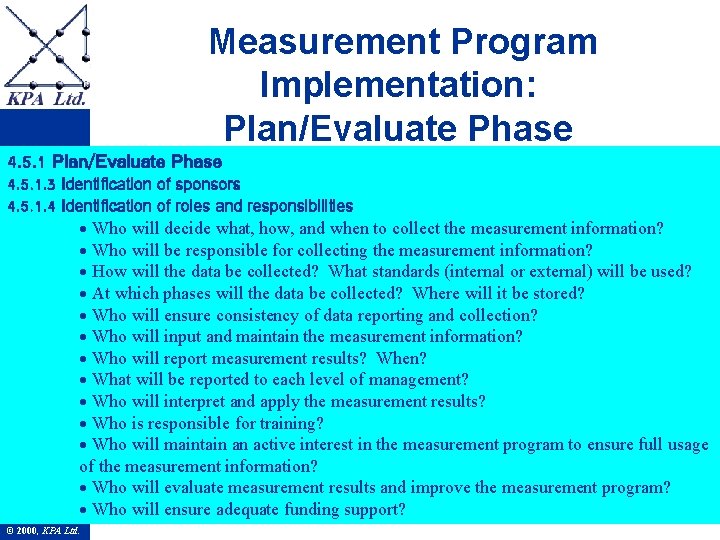

Measurement Program Implementation: Plan/Evaluate Phase

4.5.1 Plan/Evaluate Phase

4.5.1.3 Identification of sponsors

4.5.1.4 Identification of roles and responsibilities

- Who will decide what, how, and when to collect the measurement information?
- Who will be responsible for collecting the measurement information?
- How will the data be collected? What standards (internal or external) will be used?
- At which phases will the data be collected? Where will it be stored?
- Who will ensure consistency of data reporting and collection?
- Who will input and maintain the measurement information?
- Who will report measurement results? When?
- What will be reported to each level of management?
- Who will interpret and apply the measurement results?
- Who is responsible for training?
- Who will maintain an active interest in the measurement program to ensure full usage of the measurement information?
- Who will evaluate measurement results and improve the measurement program?
- Who will ensure adequate funding support?

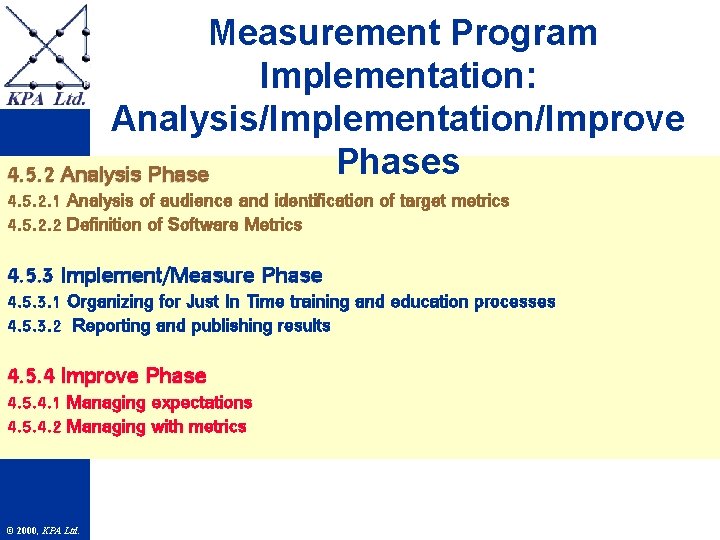

Measurement Program Implementation: Analysis/Implementation/Improve Phases

- 4.5.2 Analysis Phase
  - 4.5.2.1 Analysis of audience and identification of target metrics
  - 4.5.2.2 Definition of Software Metrics
- 4.5.3 Implement/Measure Phase
  - 4.5.3.1 Organizing for Just In Time training and education processes
  - 4.5.3.2 Reporting and publishing results
- 4.5.4 Improve Phase
  - 4.5.4.1 Managing expectations
  - 4.5.4.2 Managing with metrics

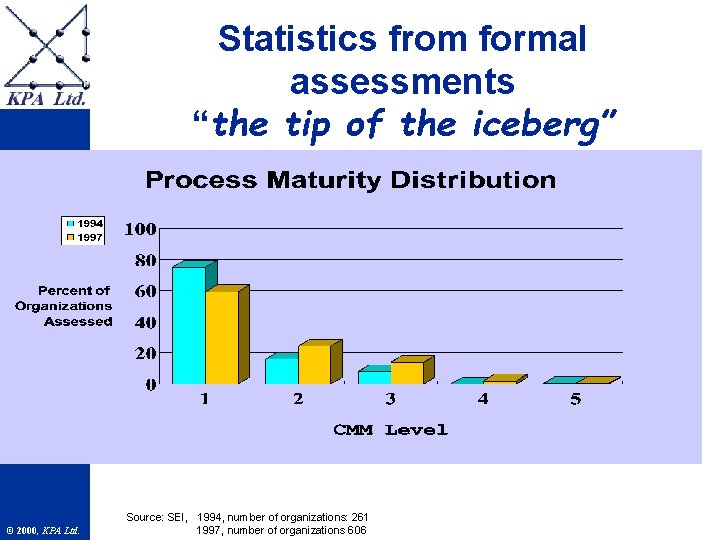

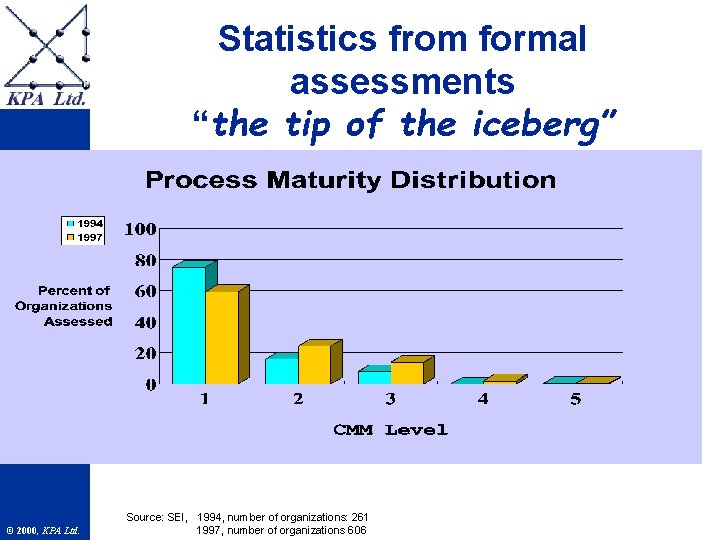

Statistics from formal assessments: “the tip of the iceberg”. Source: SEI. Number of organizations: 261 in 1994, 606 in 1997.

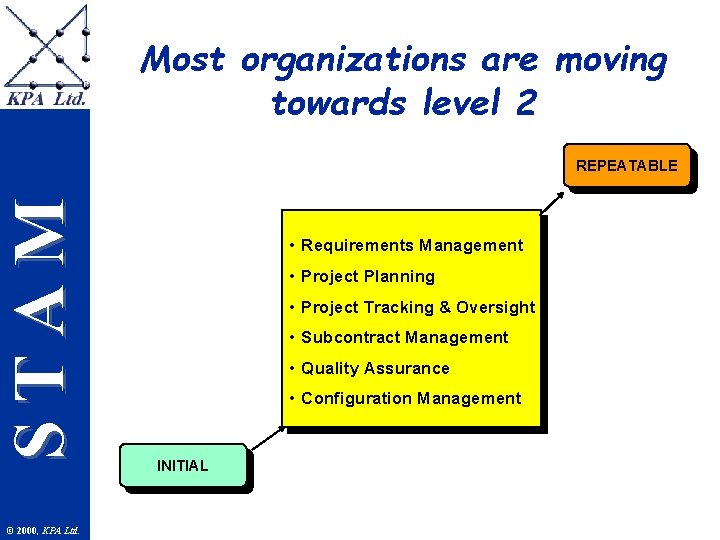

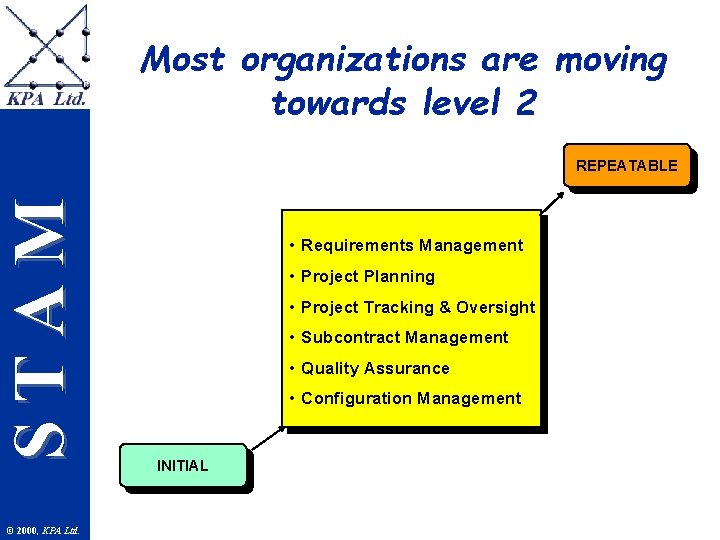

Most organizations are moving towards level 2 (from INITIAL to REPEATABLE). Key process areas at the REPEATABLE level:

- Requirements Management
- Project Planning
- Project Tracking & Oversight
- Subcontract Management
- Quality Assurance
- Configuration Management

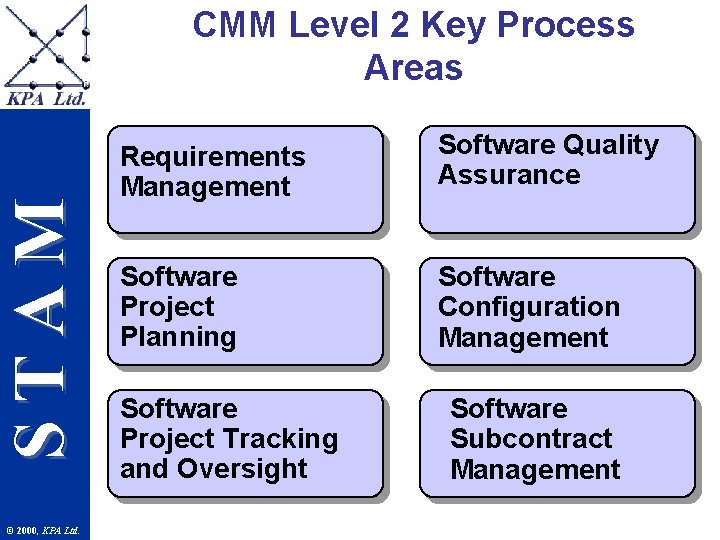

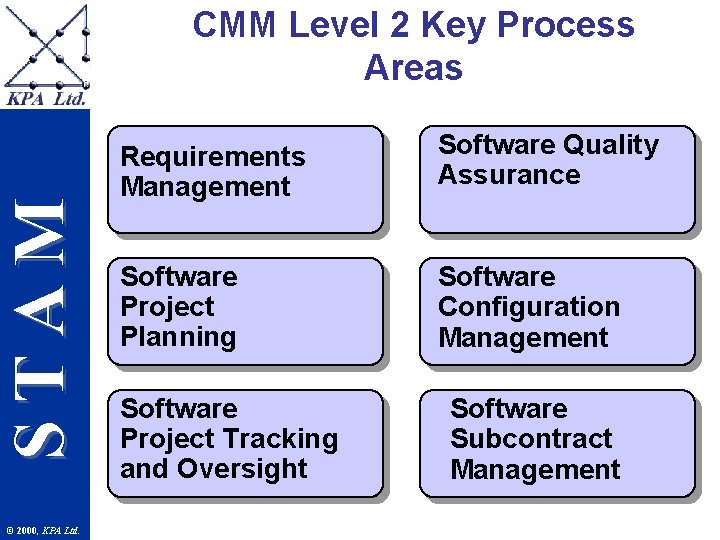

CMM Level 2 Key Process Areas

- Requirements Management
- Software Quality Assurance
- Software Project Planning
- Software Configuration Management
- Software Project Tracking and Oversight
- Software Subcontract Management

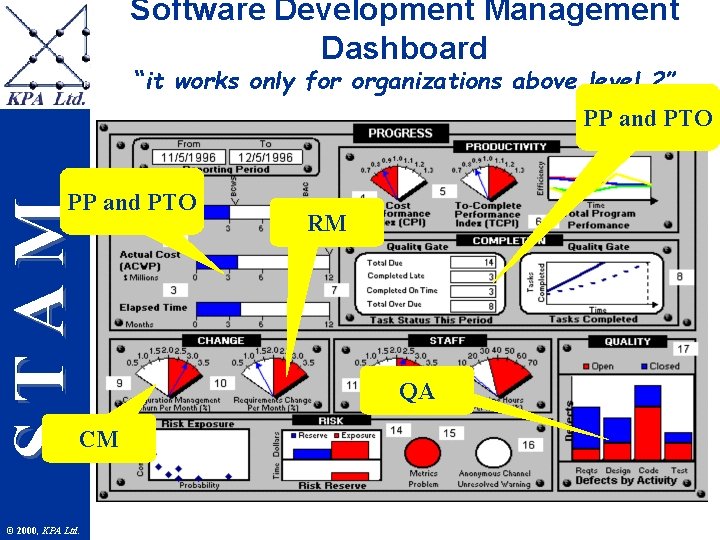

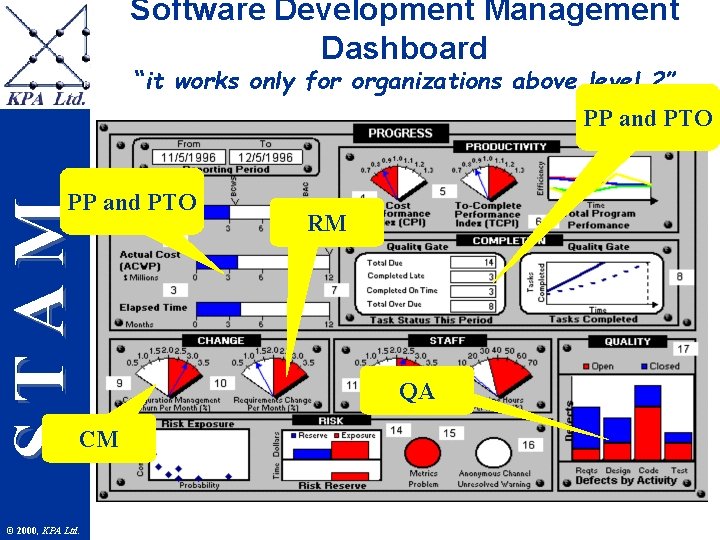

Software Development Management Dashboard: “it works only for organizations above level 2”. Dashboard panels: PP and PTO (two panels), CM, RM, QA.

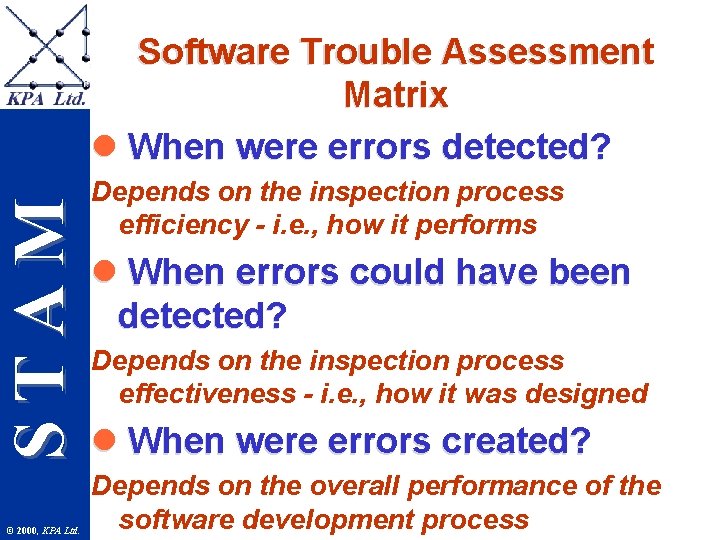

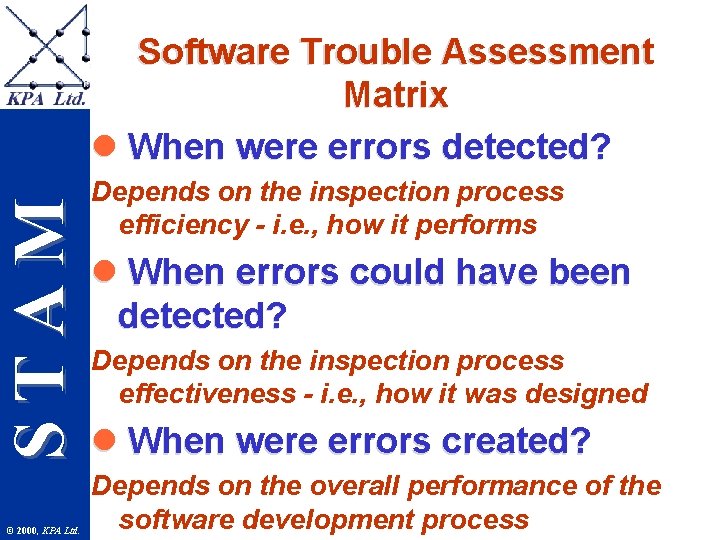

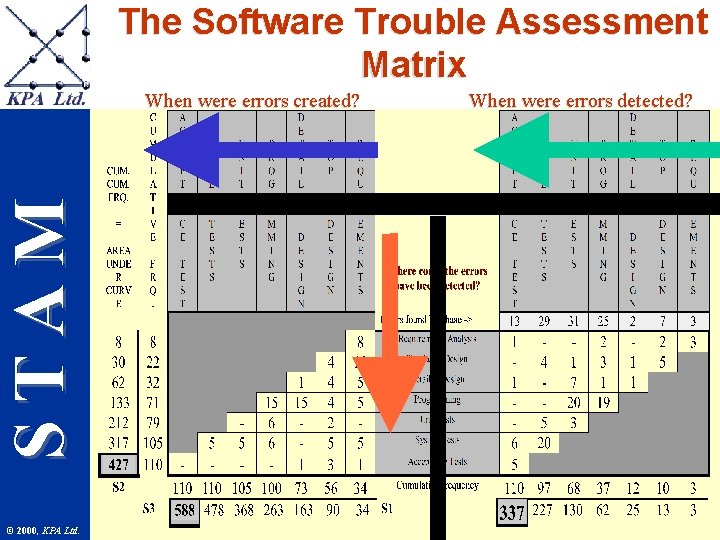

Software Trouble Assessment Matrix

- When were errors detected? Depends on the inspection process efficiency, i.e., how it performs.
- When could errors have been detected? Depends on the inspection process effectiveness, i.e., how it was designed.
- When were errors created? Depends on the overall performance of the software development process.

Software Life Cycle Phases

- Requirements Analysis
- Top Level Design
- Detailed Design
- Programming
- Unit Tests
- System Tests
- Acceptance Tests

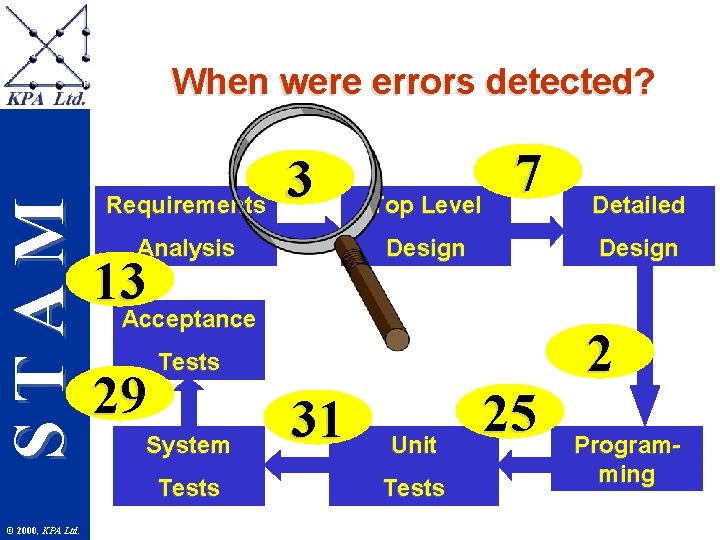

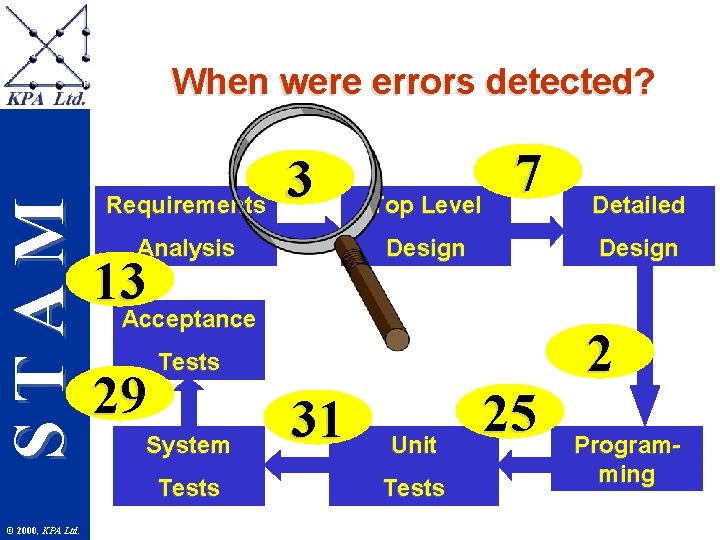

When were errors detected? (Diagram of the life cycle phases annotated with the number of errors detected in each phase.)

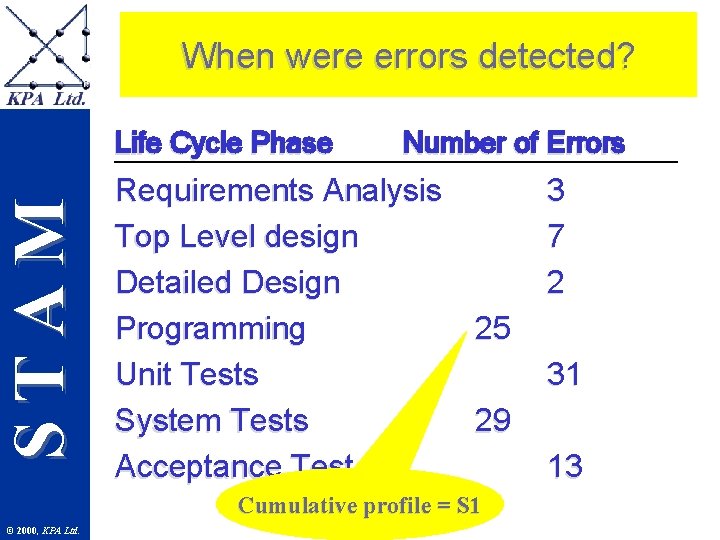

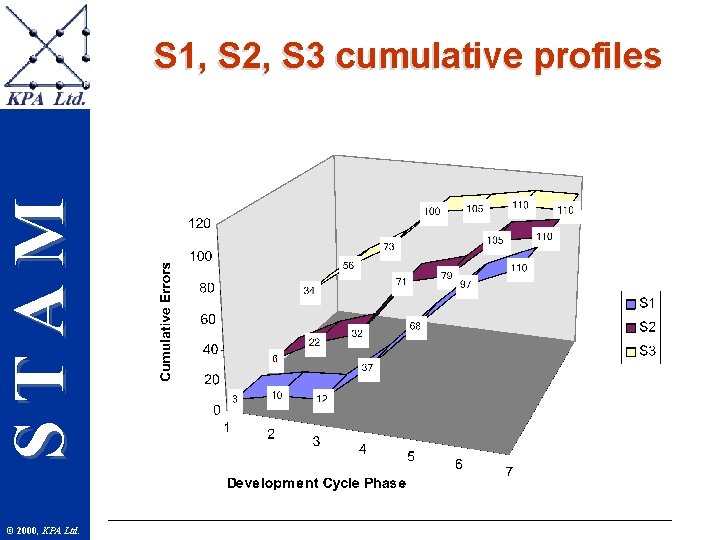

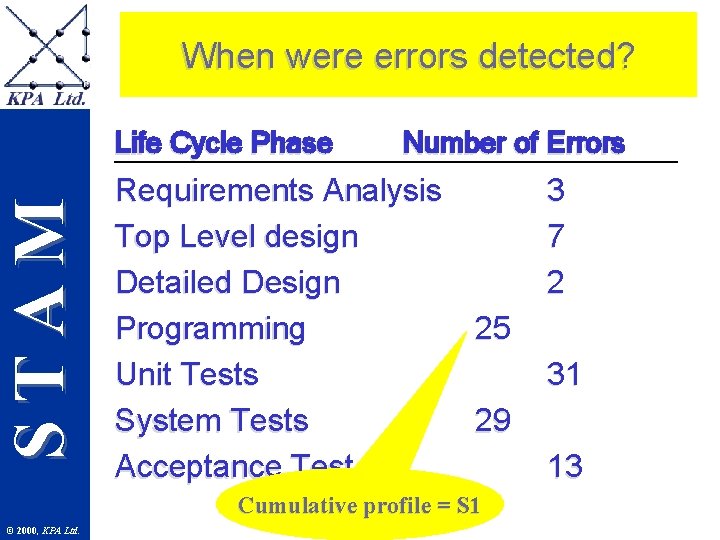

When were errors detected?

| Life Cycle Phase | Number of Errors |
| --- | --- |
| Requirements Analysis | 3 |
| Top Level Design | 7 |
| Detailed Design | 2 |
| Programming | 25 |
| Unit Tests | 31 |
| System Tests | 29 |
| Acceptance Tests | 13 |

Cumulative profile = S1

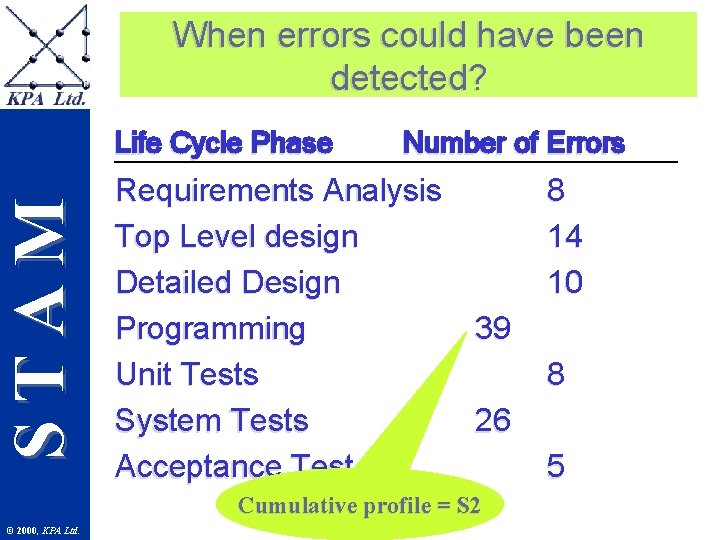

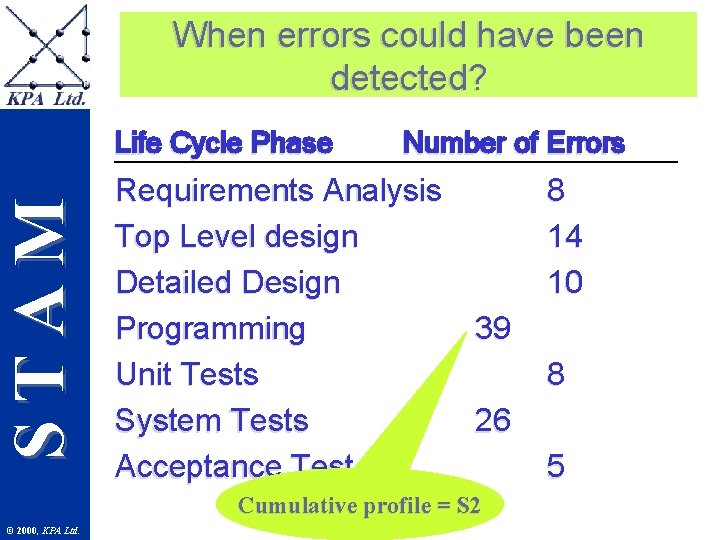

When could errors have been detected?

| Life Cycle Phase | Number of Errors |
| --- | --- |
| Requirements Analysis | 8 |
| Top Level Design | 14 |
| Detailed Design | 10 |
| Programming | 39 |
| Unit Tests | 8 |
| System Tests | 26 |
| Acceptance Tests | 5 |

Cumulative profile = S2

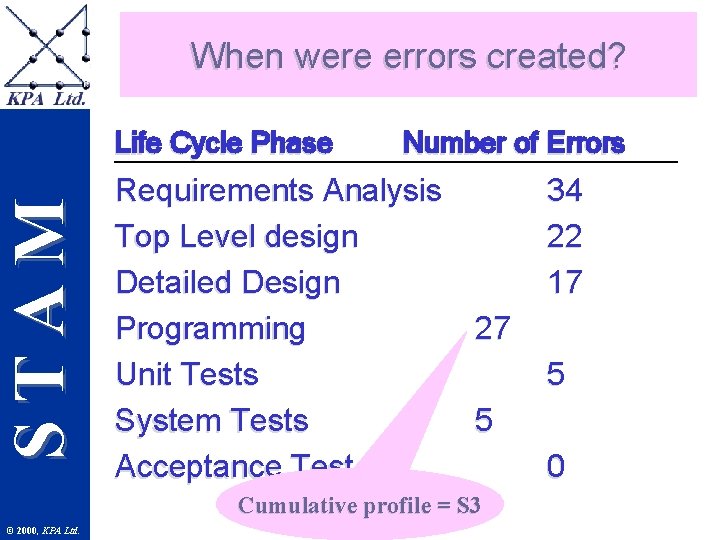

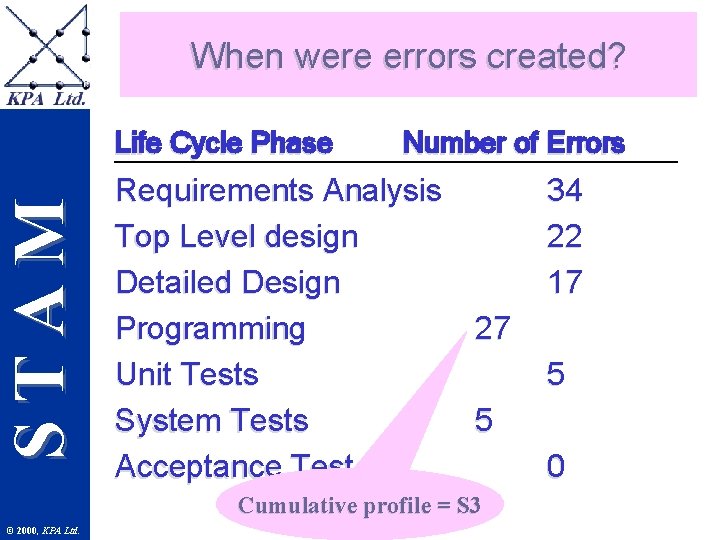

When were errors created?

| Life Cycle Phase | Number of Errors |
| --- | --- |
| Requirements Analysis | 34 |
| Top Level Design | 22 |
| Detailed Design | 17 |
| Programming | 27 |
| Unit Tests | 5 |
| System Tests | 5 |
| Acceptance Tests | 0 |

Cumulative profile = S3

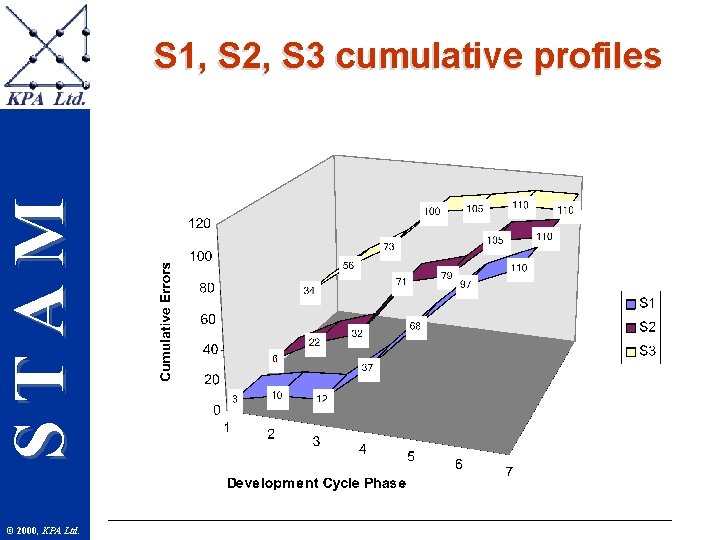

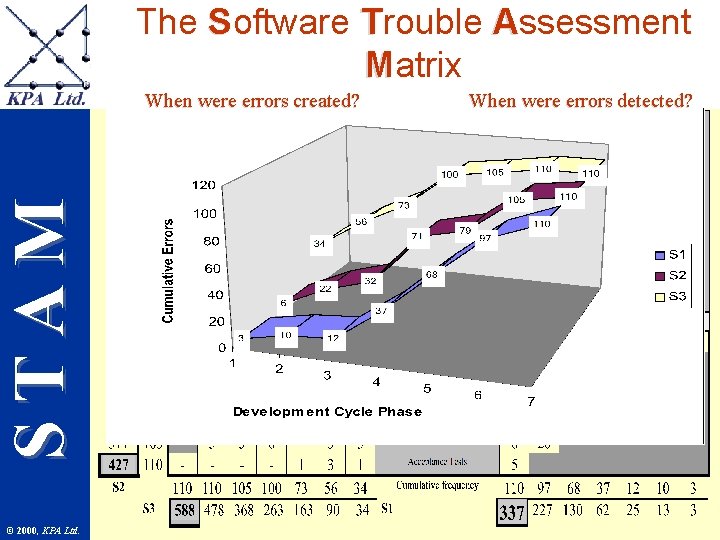

S1, S2, S3 cumulative profiles

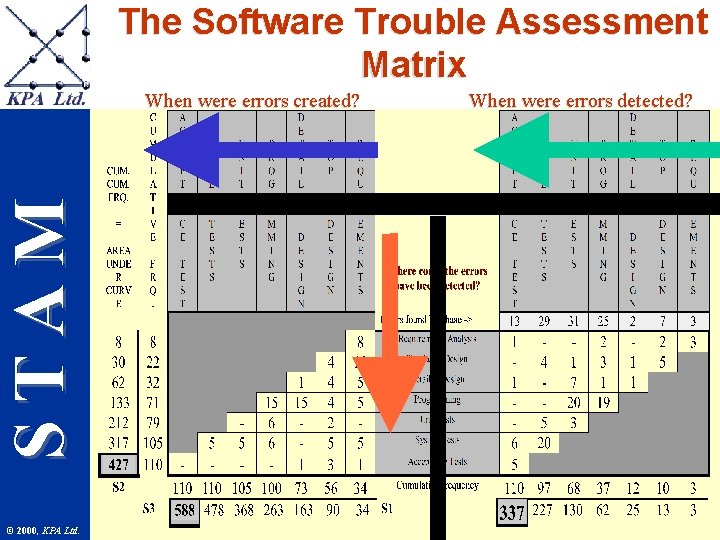
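The cumulative profiles on this slide can be reproduced from the three per-phase tables. The following is a minimal sketch in Python, assuming the error counts exactly as tabulated in the slides; the names `cumulative_profile`, `detected`, `could_detect`, and `created` are illustrative and do not come from the presentation.

```python
from itertools import accumulate

PHASES = [
    "Requirements Analysis", "Top Level Design", "Detailed Design",
    "Programming", "Unit Tests", "System Tests", "Acceptance Tests",
]

# Error counts per phase, copied from the three tables in the slides.
detected = [3, 7, 2, 25, 31, 29, 13]        # when errors were detected
could_detect = [8, 14, 10, 39, 8, 26, 5]    # when they could have been detected
created = [34, 22, 17, 27, 5, 5, 0]         # when they were created


def cumulative_profile(counts):
    """Running total of errors up to and including each phase."""
    return list(accumulate(counts))


profiles = {
    "detected": cumulative_profile(detected),
    "could have been detected": cumulative_profile(could_detect),
    "created": cumulative_profile(created),
}

for name, profile in profiles.items():
    print(f"{name:>25}: {profile}")
```

All three profiles end at the same total of 110 errors; they differ only in how early the count accumulates, which is what the STAM ratios quantify.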

The Software Trouble Assessment Matrix. Axes: When were errors created? When were errors detected?

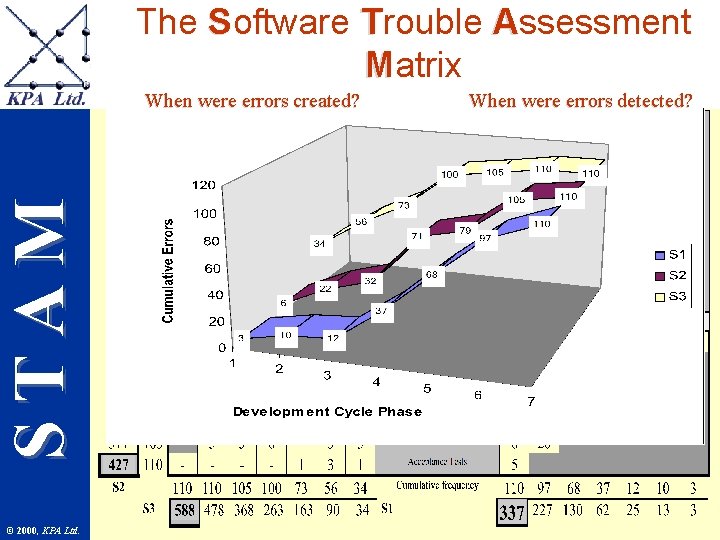

The Software Trouble Assessment Matrix (STAM). Axes: When were errors created? When were errors detected?

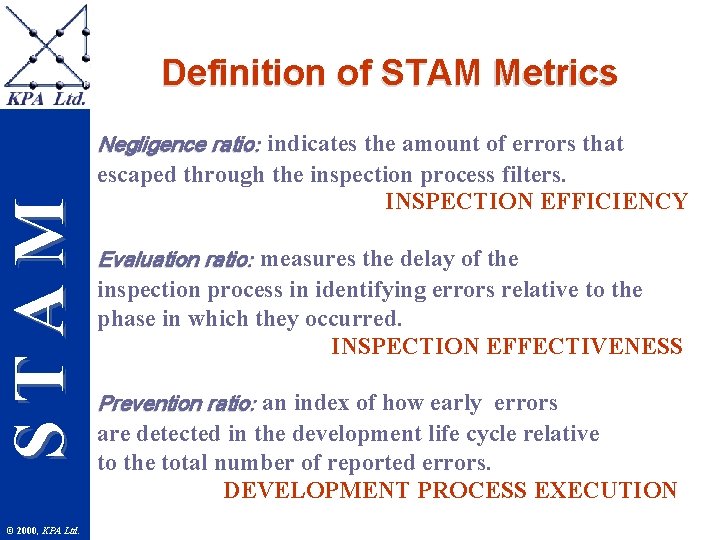
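A matrix of this kind can be viewed as a cross-tabulation of error records by the phase in which each error was created and the phase in which it was detected. The sketch below assumes rows index the phase of creation and columns the phase of detection; the orientation, the function `build_matrix`, and the sample `records` are all hypothetical and are not taken from the presentation.

```python
from collections import Counter

PHASES = [
    "Requirements Analysis", "Top Level Design", "Detailed Design",
    "Programming", "Unit Tests", "System Tests", "Acceptance Tests",
]

# Hypothetical error records: (phase created, phase detected).
# Illustrative values only, NOT data from the presentation.
records = [
    ("Requirements Analysis", "Requirements Analysis"),
    ("Requirements Analysis", "System Tests"),
    ("Top Level Design", "Programming"),
    ("Programming", "Unit Tests"),
    ("Programming", "Unit Tests"),
    ("Detailed Design", "Acceptance Tests"),
]


def build_matrix(records, phases):
    """Cross-tabulate errors: rows = phase created, columns = phase detected."""
    index = {phase: i for i, phase in enumerate(phases)}
    matrix = [[0] * len(phases) for _ in phases]
    for (created, detected), n in Counter(records).items():
        if index[detected] < index[created]:
            raise ValueError("an error cannot be detected before it is created")
        matrix[index[created]][index[detected]] = n
    return matrix


matrix = build_matrix(records, PHASES)
created_counts = [sum(row) for row in matrix]          # row sums
detected_counts = [sum(col) for col in zip(*matrix)]   # column sums

print("created per phase: ", created_counts)
print("detected per phase:", detected_counts)
```

Under this assumed layout, the row sums give the "when were errors created?" counts and the column sums give the "when were errors detected?" counts, so both per-phase profiles can be read off a single matrix.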

Definition of STAM Metrics

- Negligence ratio: indicates the number of errors that escaped through the inspection process filters. (INSPECTION EFFICIENCY)
- Evaluation ratio: measures the delay of the inspection process in identifying errors relative to the phase in which they occurred. (INSPECTION EFFECTIVENESS)
- Prevention ratio: an index of how early errors are detected in the development life cycle relative to the total number of reported errors. (DEVELOPMENT PROCESS EXECUTION)

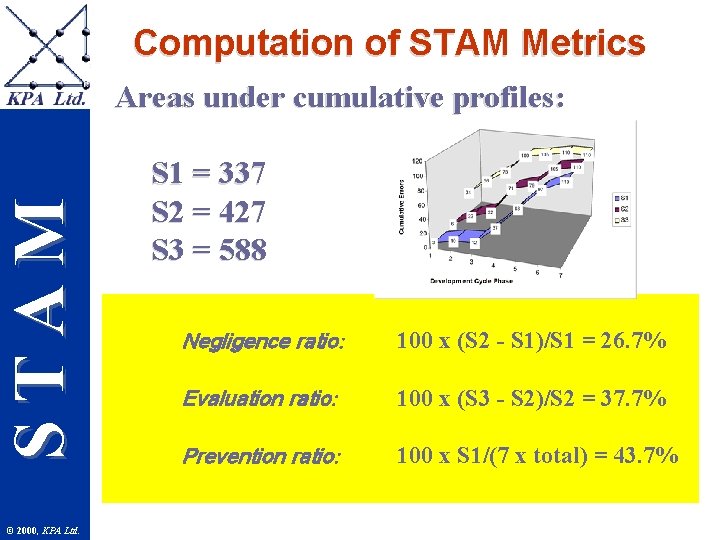

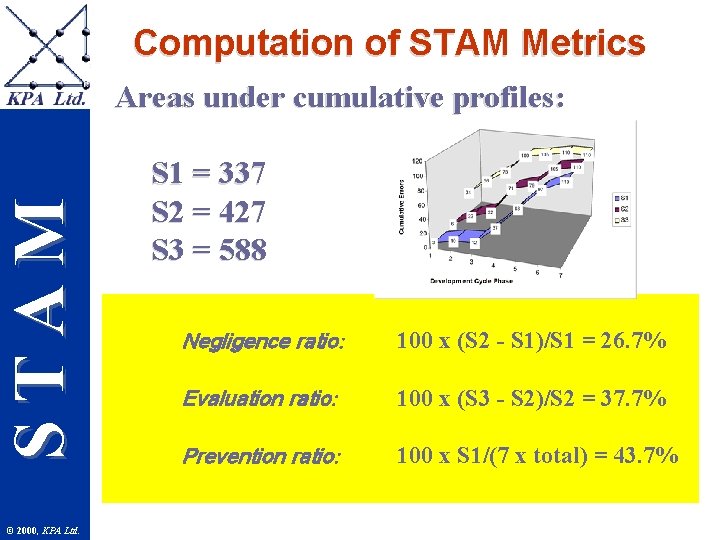

Computation of STAM Metrics

Areas under cumulative profiles: S1 = 337, S2 = 427, S3 = 588

- Negligence ratio: 100 × (S2 - S1)/S1 = 26.7%
- Evaluation ratio: 100 × (S3 - S2)/S2 = 37.7%
- Prevention ratio: 100 × S1/(7 × total) = 43.8%, where 7 is the number of life cycle phases and total = 110 is the total number of reported errors
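The arithmetic above can be checked with a short script. This is a sketch under the assumption that each area is the sum of the running totals of the per-phase counts, which reproduces the stated values of 337, 427, and 588; the function names `area_under_cumulative_profile` and `stam_metrics` are illustrative and not from the presentation.

```python
from itertools import accumulate

# Error counts per life cycle phase, from the tables in the slides.
detected = [3, 7, 2, 25, 31, 29, 13]
could_detect = [8, 14, 10, 39, 8, 26, 5]
created = [34, 22, 17, 27, 5, 5, 0]


def area_under_cumulative_profile(counts):
    """Sum of the running totals, i.e. the area under the step profile."""
    return sum(accumulate(counts))


def stam_metrics(detected, could_detect, created):
    """Return the three areas and the three STAM ratios (in percent)."""
    s1 = area_under_cumulative_profile(detected)
    s2 = area_under_cumulative_profile(could_detect)
    s3 = area_under_cumulative_profile(created)
    phases = len(detected)   # 7 life cycle phases
    total = sum(detected)    # total number of reported errors
    return {
        "S1": s1,
        "S2": s2,
        "S3": s3,
        "negligence": 100 * (s2 - s1) / s1,
        "evaluation": 100 * (s3 - s2) / s2,
        "prevention": 100 * s1 / (phases * total),
    }


metrics = stam_metrics(detected, could_detect, created)
for key, value in metrics.items():
    print(f"{key}: {value:.2f}")
```

With the slide data this yields S1 = 337, S2 = 427, and S3 = 588. The unrounded prevention ratio is 337/770, about 43.77%.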

Interpretation of STAM Metrics

1. Errors are detected 27% later than they should have been (i.e., if the inspection processes worked perfectly).
2. The design of the inspection processes implies that errors are detected 38% into the phase following their creation.
3. Ideally, all errors are requirements errors, and they are detected in the requirements phase. In this example only 44% of this ideal is materialized, implying significant opportunities for improvement.

Conclusions

- Inspection processes need to be designed in the context of a software life cycle
- Inspection processes need to be evaluated using quantitative metrics
- STAM metrics provide such an evaluation
- STAM metrics should be integrated in an overall measurement program