Spoken Language Recognition and Understanding Renato De Mori

- Slides: 106

Spoken Language Recognition and Understanding. Renato De Mori, McGill University and University of Avignon. LUNA, IST contract no 33549. IEEE DL 2009, Athens, October 17th, 2009

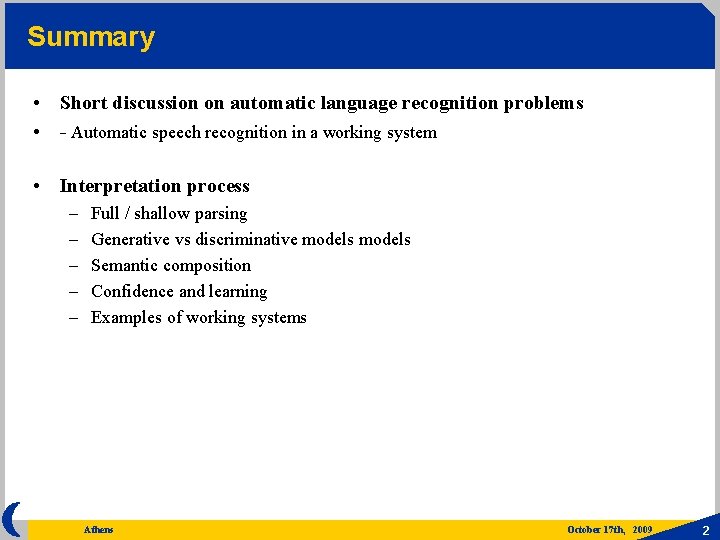

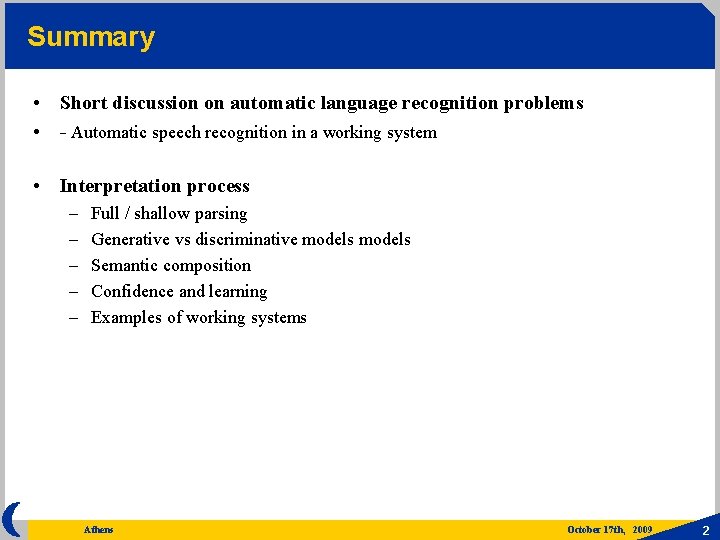

Summary

- Short discussion on automatic language recognition problems
  - Automatic speech recognition in a working system
- Interpretation process
  - Full / shallow parsing
  - Generative vs discriminative models
  - Semantic composition
- Confidence and learning
- Examples of working systems

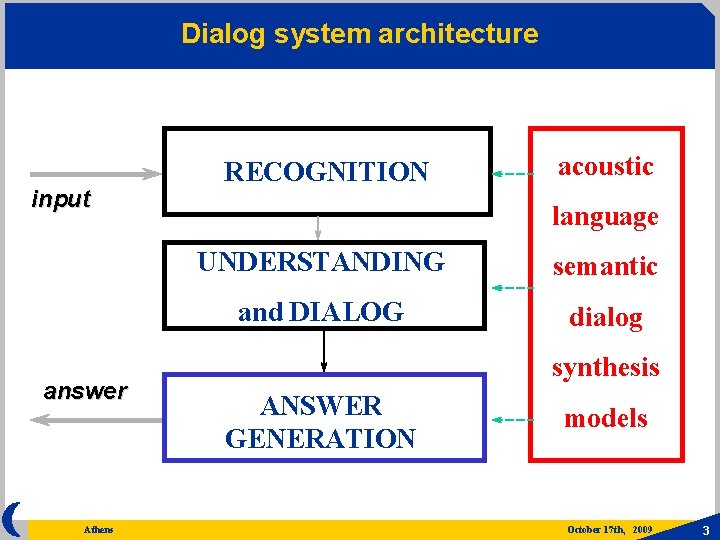

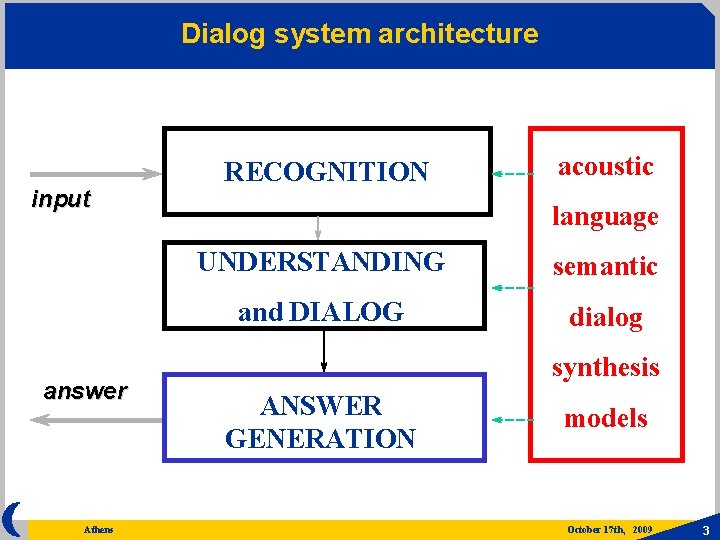

Dialog system architecture (diagram): input → RECOGNITION → UNDERSTANDING and DIALOG → ANSWER GENERATION → answer, supported by acoustic, language, semantic, dialog and synthesis models.

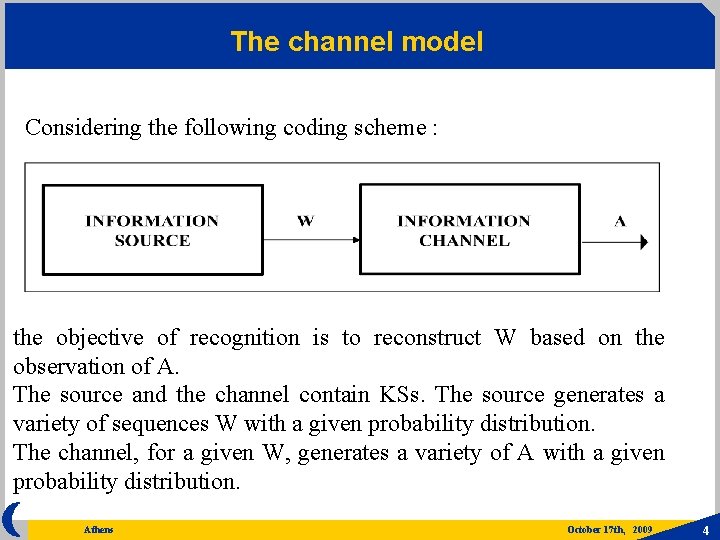

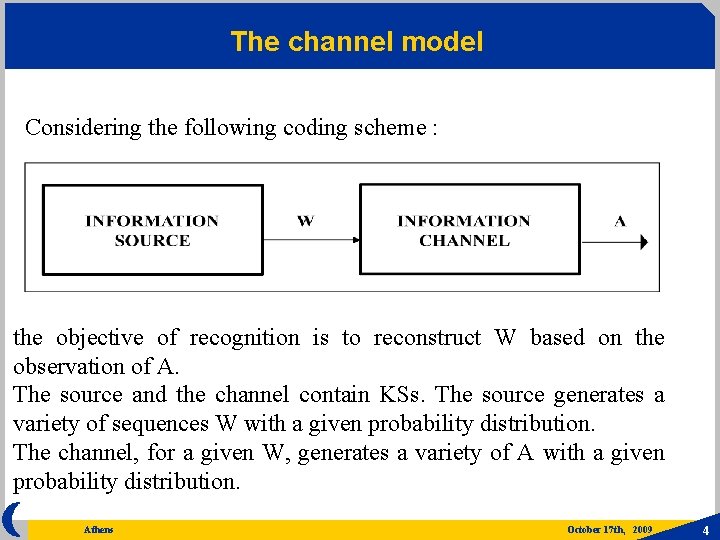

The channel model. Consider the following coding scheme: the objective of recognition is to reconstruct W based on the observation of A. The source and the channel contain knowledge sources (KSs). The source generates a variety of sequences W with a given probability distribution. The channel, for a given W, generates a variety of A with a given probability distribution.

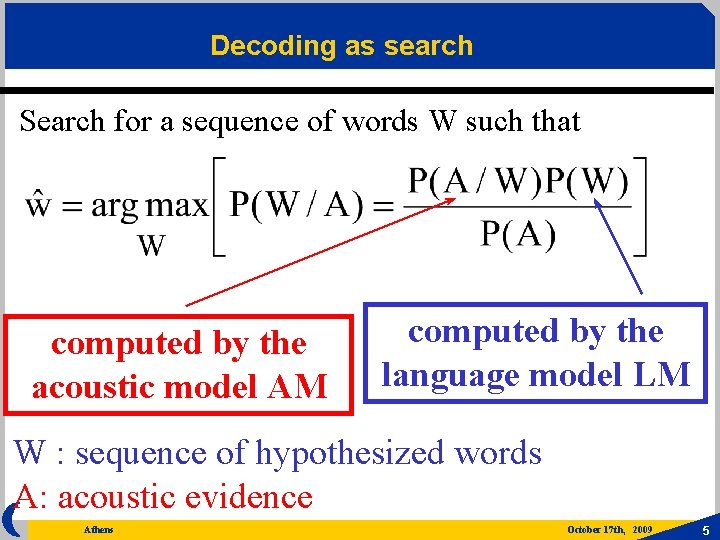

Decoding as search. Search for a sequence of words $\hat{W}$ such that $\hat{W} = \arg\max_W P(A \mid W)\,P(W)$, where $P(A \mid W)$ is computed by the acoustic model (AM) and $P(W)$ is computed by the language model (LM). W: sequence of hypothesized words. A: acoustic evidence.
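As a minimal sketch of decoding as search, the snippet below picks the hypothesis that maximizes the sum of an acoustic log score and a language-model log score. The candidate word sequences and all probabilities are invented for illustration; a real decoder searches a network rather than an explicit list.

```python
import math

def decode(hypotheses, acoustic_logprob, lm_logprob):
    """Return the word sequence W maximizing log P(A|W) + log P(W)."""
    return max(hypotheses, key=lambda w: acoustic_logprob[w] + lm_logprob[w])

# Hypothetical competing transcriptions of the same acoustic evidence A.
hyps = [
    ("recognize", "speech"),
    ("wreck", "a", "nice", "beach"),
    ("recognize", "peach"),
]
# Assumed acoustic model scores log P(A|W) and language model scores log P(W).
am = {hyps[0]: math.log(0.30), hyps[1]: math.log(0.35), hyps[2]: math.log(0.20)}
lm = {hyps[0]: math.log(0.010), hyps[1]: math.log(0.001), hyps[2]: math.log(0.0005)}

best = decode(hyps, am, lm)
# With a flat language model, the acoustically best hypothesis would win instead.
flat = {h: 0.0 for h in hyps}
best_acoustic_only = decode(hyps, am, flat)
```

The second call shows why the language model matters: the acoustic score alone prefers a different word sequence.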

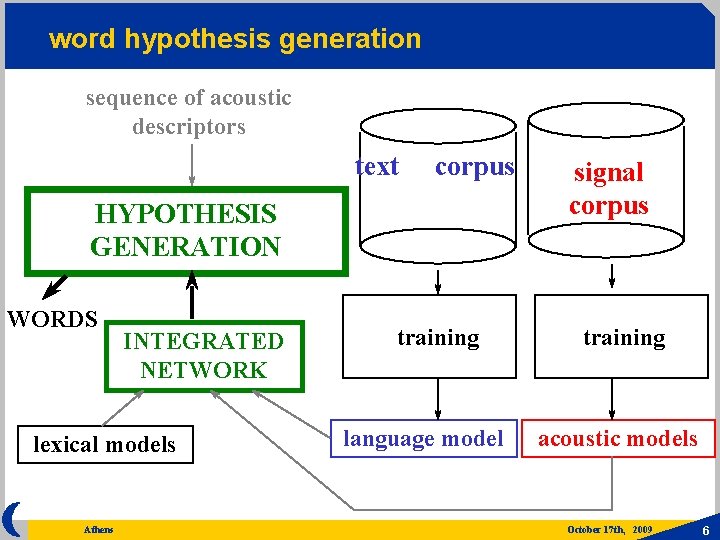

Word hypothesis generation (diagram): a sequence of acoustic descriptors enters HYPOTHESIS GENERATION, which outputs WORDS using an INTEGRATED NETWORK. The network combines lexical models, a language model trained on a text corpus, and acoustic models trained on a signal corpus.

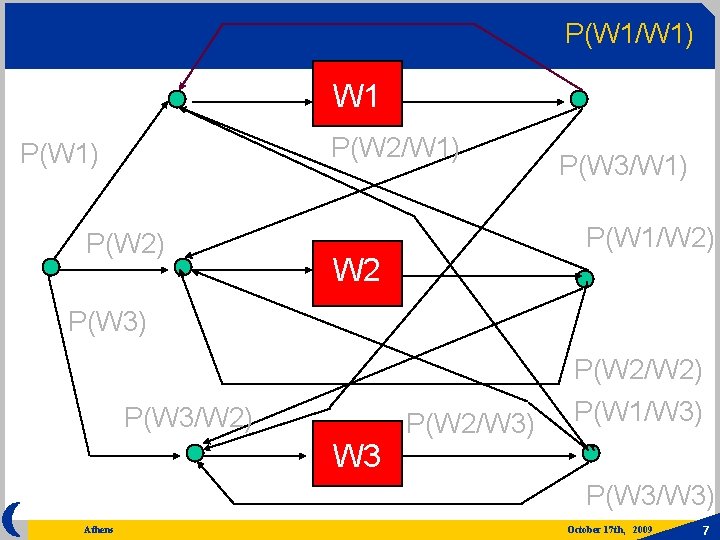

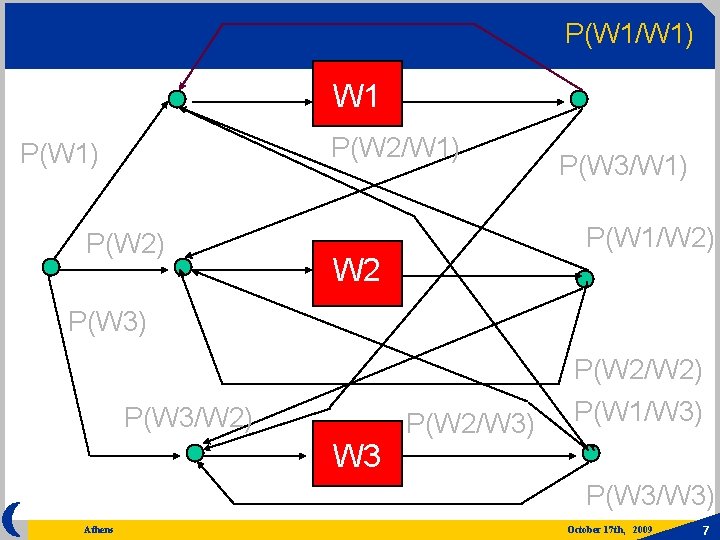

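Language model network over the words W1, W2, W3 (diagram): nodes W1, W2, W3 with entry probabilities such as P(W2) and P(W3), and arcs labeled with the transition probabilities P(Wj|Wi) for every pair i, j in {1, 2, 3}, including the self-loops P(W1|W1), P(W2|W2) and P(W3|W3).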
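The arc probabilities of such a network can be estimated from a text corpus. The following is a sketch using plain maximum-likelihood counts without smoothing, which is an assumption made here for brevity; the three-sentence corpus is invented.

```python
from collections import Counter

def bigram_probs(sentences):
    """Maximum-likelihood estimates P(w2|w1) = count(w1 w2) / count(w1 as history)."""
    history, pairs = Counter(), Counter()
    for words in sentences:
        for w1, w2 in zip(words, words[1:]):
            history[w1] += 1
            pairs[(w1, w2)] += 1
    return {(w1, w2): c / history[w1] for (w1, w2), c in pairs.items()}

def sequence_prob(words, start, bigram):
    """P(W) = P(w1) * product of P(w_k | w_{k-1}); unseen events get probability 0."""
    p = start.get(words[0], 0.0)
    for w1, w2 in zip(words, words[1:]):
        p *= bigram.get((w1, w2), 0.0)
    return p

# Toy corpus (hypothetical).
corpus = [["W1", "W2", "W3"], ["W1", "W2", "W2"], ["W2", "W1", "W3"]]
bigram = bigram_probs(corpus)
first_words = Counter(s[0] for s in corpus)
start = {w: c / len(corpus) for w, c in first_words.items()}

p = sequence_prob(["W1", "W2", "W3"], start, bigram)
```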

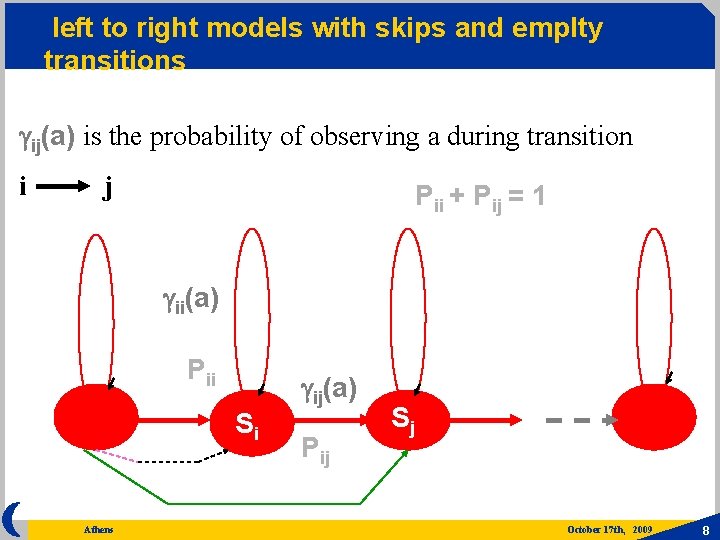

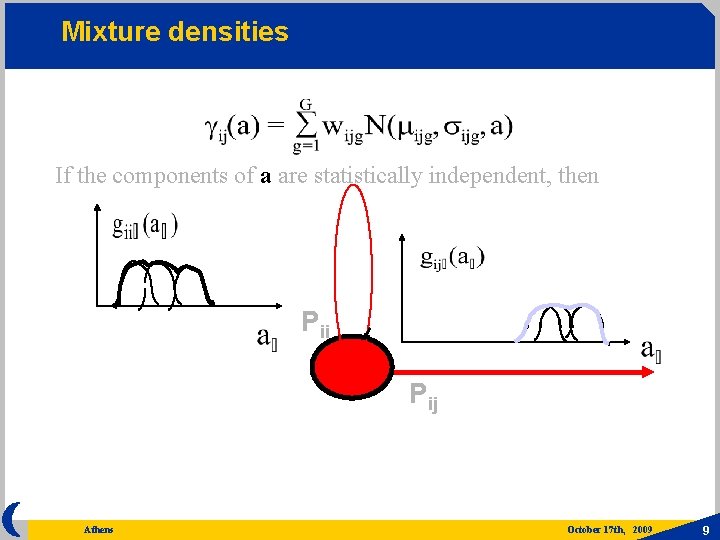

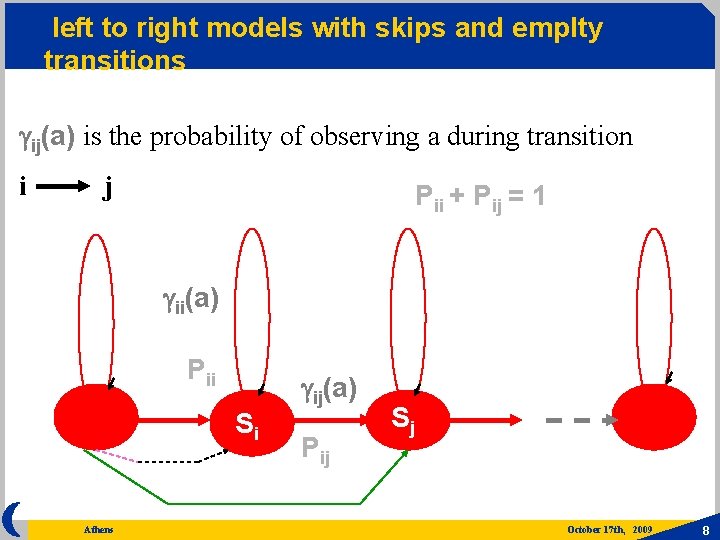

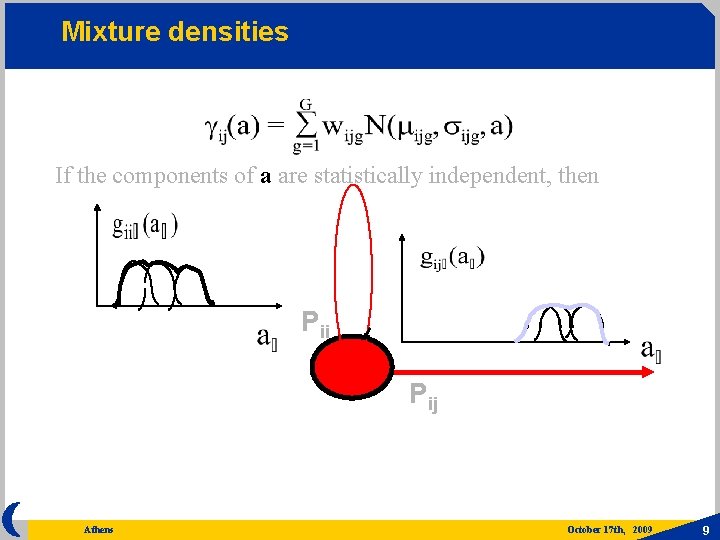

Left-to-right models with skips and empty transitions. g_ij(a) is the probability of observing a during the transition i → j. P_ii + P_ij = 1. (Diagram: state S_i with a self-loop labeled P_ii, g_ii(a) and a transition to state S_j labeled P_ij, g_ij(a).)

Mixture densities. If the components of a are statistically independent, then the joint density of a factorizes into the product of the densities of its components.

Major problems. Impressive performance but not robust enough. Some reasons:

- acoustic variability
- noise
- disfluency
- pronunciation variability
- language variety
- reduced feature size
- modeling limits
- non-admissible search

Demo LUNAVIZ OK 340. 1 HS 267 3 267 4 DISF 298 1 COM 238 6 COM 238 4

Possible applications

- Constrained environments (broadcast transcriptions)
- Partial automation (telephone services)
- Redundant messages (spoken surveys)
- Specific content extraction (understanding and dialog)

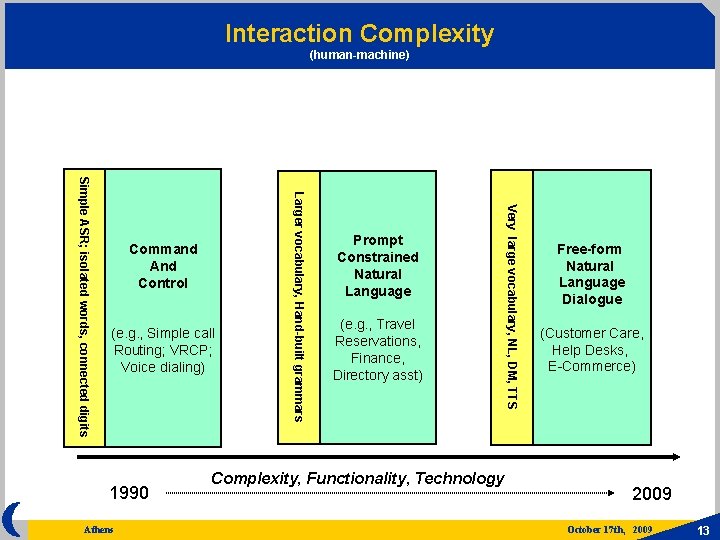

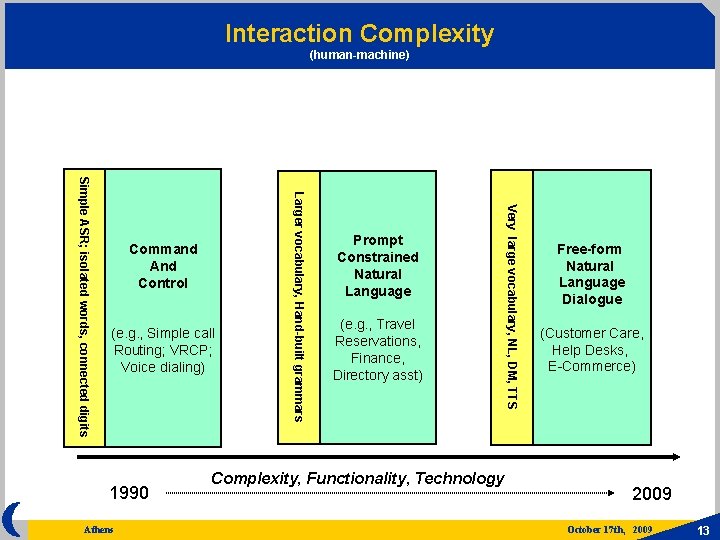

Interaction Complexity (human-machine). Complexity, functionality and technology increase from 1990 to 2009:

- Command and Control: simple ASR; isolated words, connected digits (e.g., simple call routing; VRCP; voice dialing)
- Prompt Constrained Natural Language: larger vocabulary, hand-built grammars (e.g., Travel Reservations, Finance, Directory asst)
- Free-form Natural Language Dialogue: very large vocabulary, NL, DM, TTS (Customer Care, Help Desks, E-Commerce)

COMPUTER INTERPRETATION PROCESS

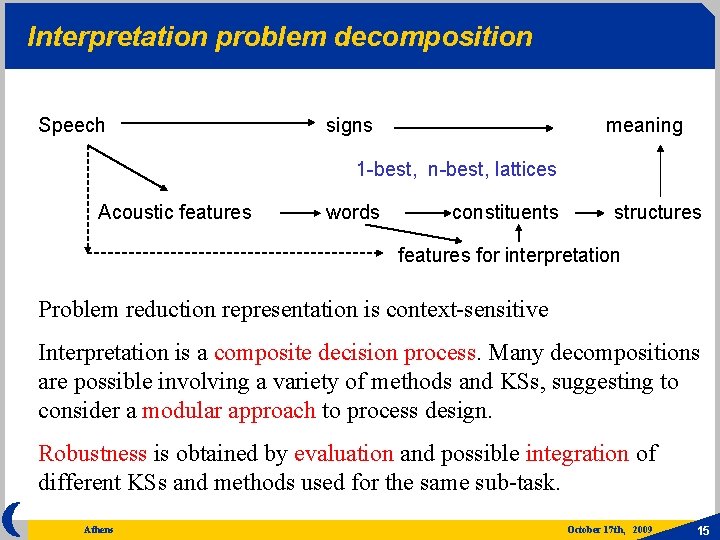

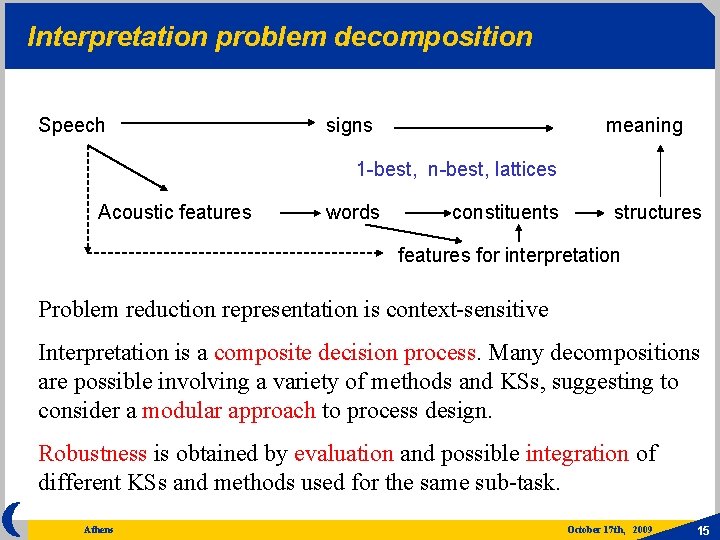

Interpretation problem decomposition. (Diagram: speech → signs → meaning; 1-best, n-best, lattices; acoustic features → words → constituents → structures; features for interpretation.) Problem reduction representation is context-sensitive. Interpretation is a composite decision process. Many decompositions are possible, involving a variety of methods and KSs, which suggests a modular approach to process design. Robustness is obtained by evaluating, and possibly integrating, different KSs and methods used for the same sub-task.

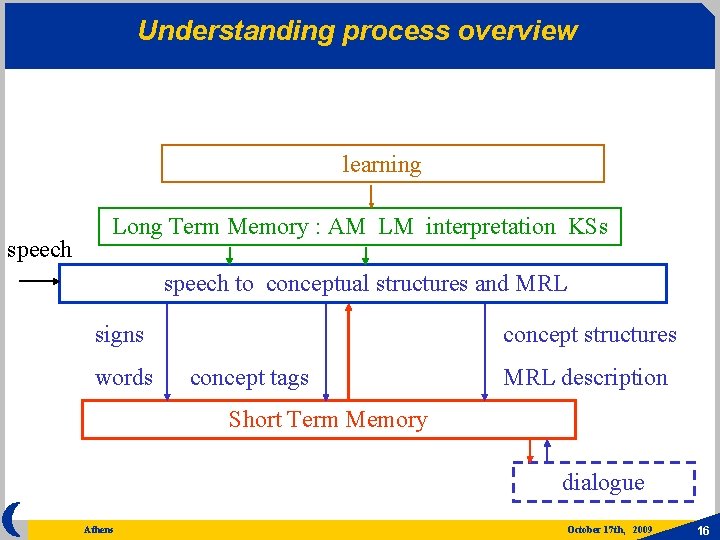

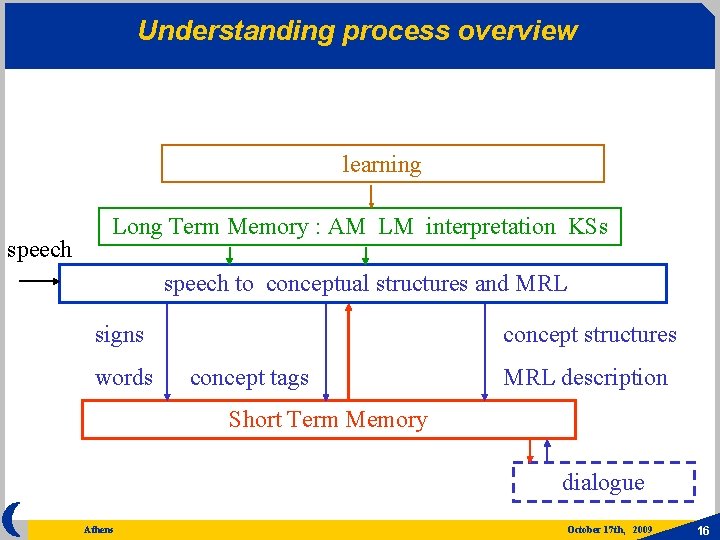

Understanding process overview (diagram): Long Term Memory holds the AM, the LM and the interpretation KSs, which are obtained by learning. Speech is mapped to conceptual structures and an MRL description through signs, words, concept tags and concept structures. Short Term Memory holds the dialogue.

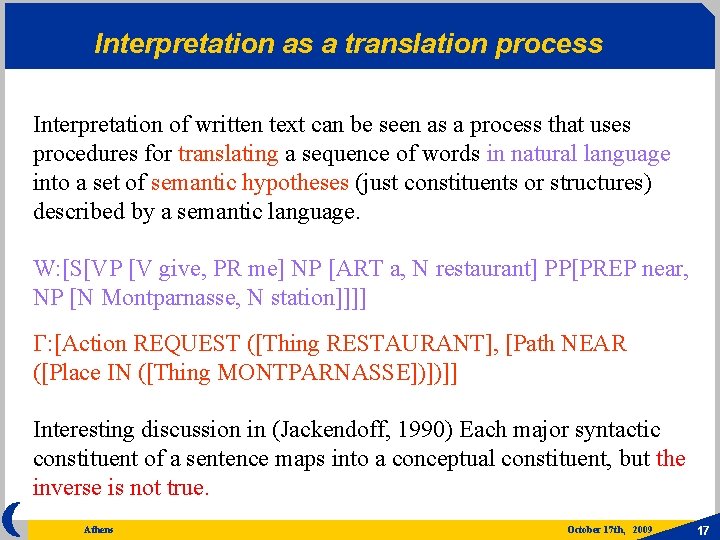

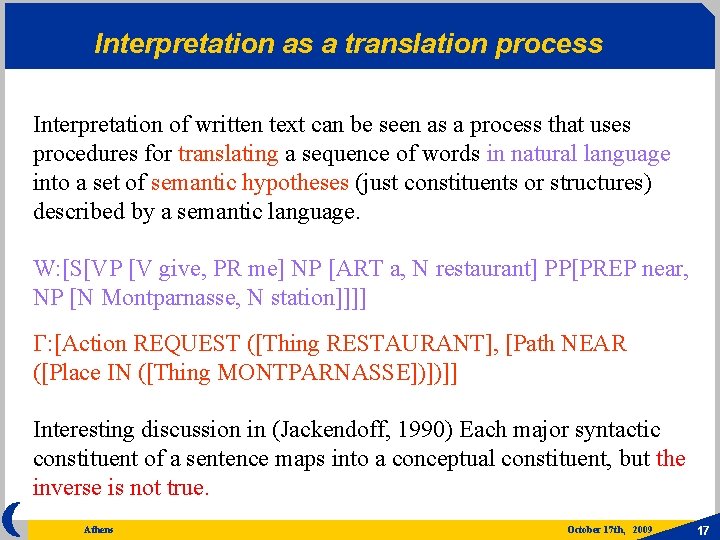

Interpretation as a translation process. Interpretation of written text can be seen as a process that uses procedures for translating a sequence of words in natural language into a set of semantic hypotheses (just constituents or structures) described by a semantic language.

W: [S[VP [V give, PR me] NP [ART a, N restaurant] PP[PREP near, NP [N Montparnasse, N station]]]]

G: [Action REQUEST ([Thing RESTAURANT], [Path NEAR ([Place IN ([Thing MONTPARNASSE])])])]

Interesting discussion in (Jackendoff, 1990): each major syntactic constituent of a sentence maps into a conceptual constituent, but the inverse is not true.

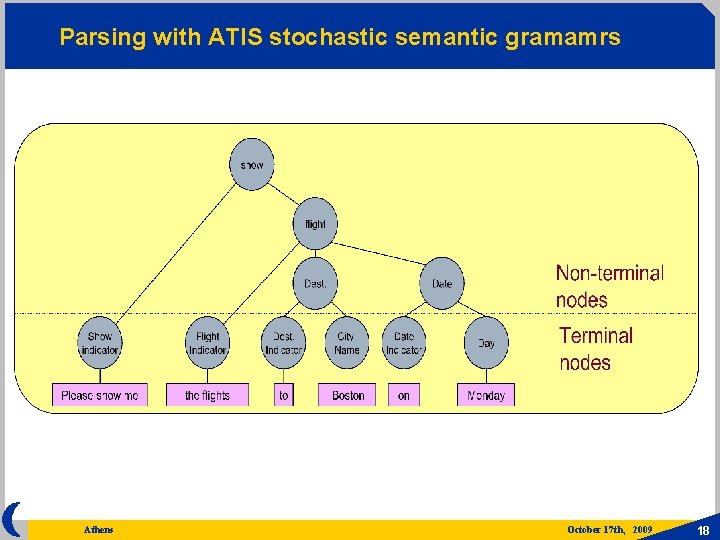

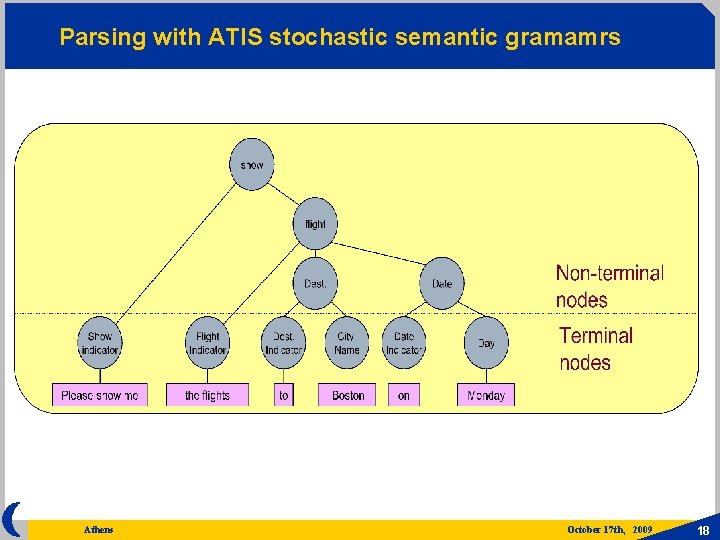

Parsing with ATIS stochastic semantic grammars

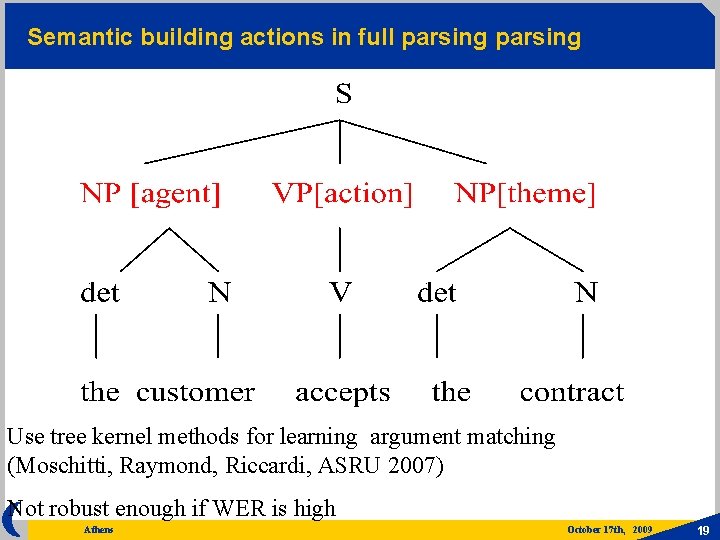

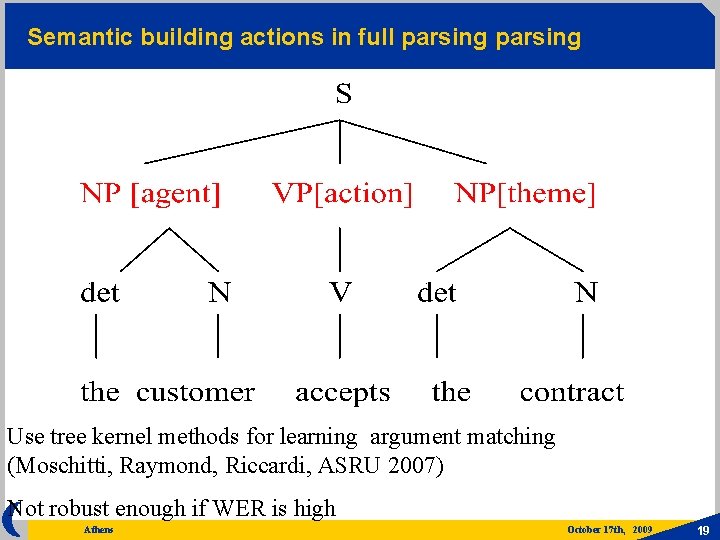

Semantic building actions in full parsing. Use tree kernel methods for learning argument matching (Moschitti, Raymond, Riccardi, ASRU 2007). Not robust enough if WER is high.

Predicate/argument structures and parsers. Recently, classifiers were proposed for detecting concepts and roles. This detection process was integrated with a stochastic parser (e.g. Charniak 2001). A solution using this parser and tree-kernel based classifiers for predicate argument detection in SLU is proposed in (Moschitti et al., ASRU 2007). Other relevant contributions on stochastic semantic parsing can be found in (Goddeau and Zue, 1992; Goodman, 1996; Chelba and Jelinek, 2000; Roark, 2002; Collins, 2003). Lattice-based parsers are reviewed in (Hall, 2005).

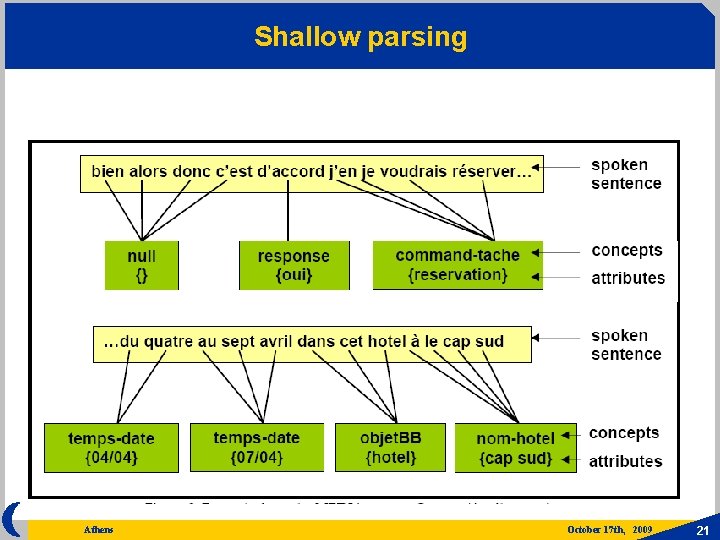

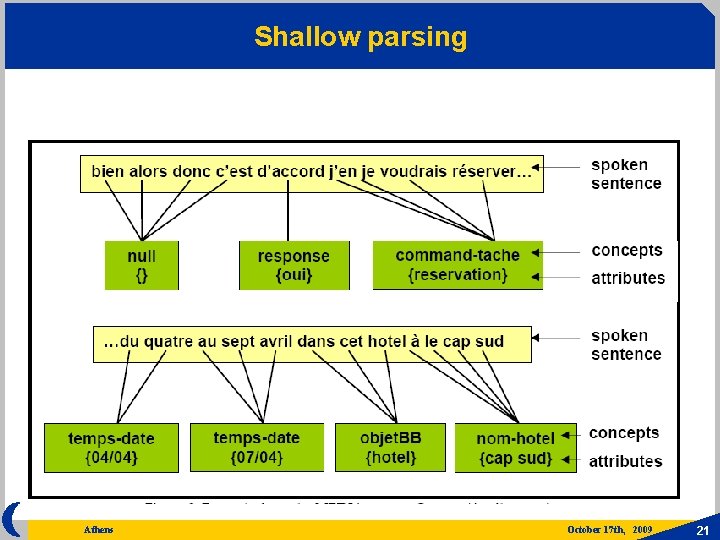

Shallow parsing

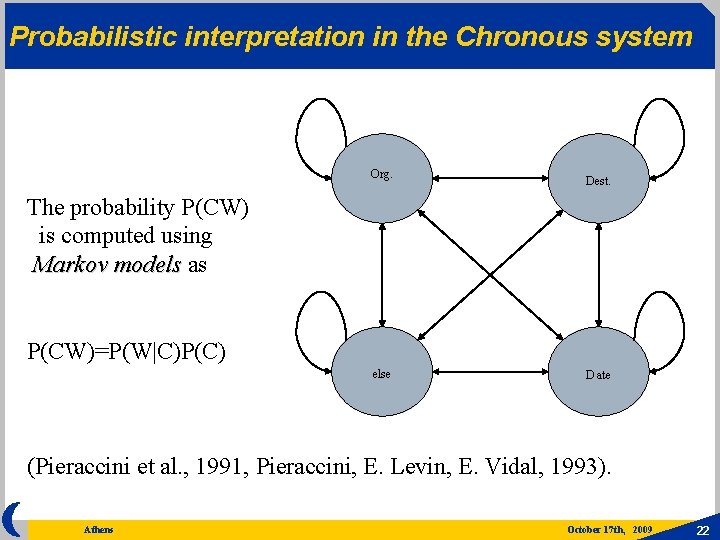

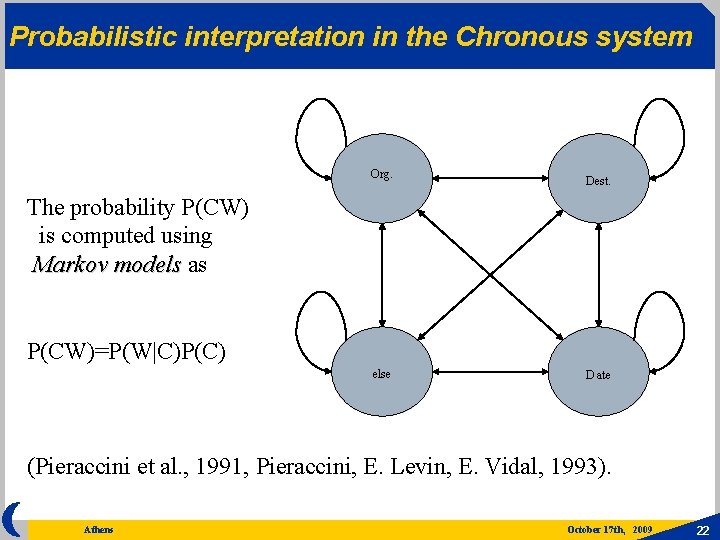

Probabilistic interpretation in the Chronus system. The probability P(C, W) is computed using Markov models as P(C, W) = P(W|C) P(C) (Pieraccini et al., 1991; Pieraccini, Levin and Vidal, 1993). (Diagram: concept states Org., Dest., Date and else.)
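To make the factorization P(C, W) = P(W|C) P(C) concrete, here is a small Viterbi sketch that finds the most likely concept sequence for a word string. It assumes a first-order Markov chain over concepts for P(C) and one emitted word per concept state for P(W|C); these modeling choices, the concept inventory and every probability value are illustrative assumptions, not the published system.

```python
import math

def viterbi(words, concepts, start, trans, emit, floor=1e-6):
    """Most likely concept sequence C for words W under P(W|C) P(C)."""
    def lp(table, key):
        return math.log(table.get(key, floor))  # floor stands in for smoothing

    # best[c] = (log score, path) of the best partial sequence ending in concept c
    best = {c: (lp(start, c) + lp(emit, (c, words[0])), [c]) for c in concepts}
    for w in words[1:]:
        new = {}
        for c in concepts:
            score, path = max(
                ((best[p][0] + lp(trans, (p, c)), best[p][1]) for p in concepts),
                key=lambda x: x[0],
            )
            new[c] = (score + lp(emit, (c, w)), path + [c])
        best = new
    return max(best.values(), key=lambda x: x[0])[1]

# Hypothetical model parameters.
concepts = ["else", "Org", "Dest"]
start = {"else": 0.8, "Org": 0.1, "Dest": 0.1}
trans = {
    ("else", "else"): 0.1, ("else", "Org"): 0.5, ("else", "Dest"): 0.4,
    ("Org", "Org"): 0.6, ("Org", "Dest"): 0.3, ("Org", "else"): 0.1,
    ("Dest", "Dest"): 0.6, ("Dest", "Org"): 0.3, ("Dest", "else"): 0.1,
}
emit = {
    ("else", "flights"): 0.5,
    ("Org", "from"): 0.5, ("Org", "Boston"): 0.3, ("Org", "Denver"): 0.1,
    ("Dest", "to"): 0.5, ("Dest", "Denver"): 0.3, ("Dest", "Boston"): 0.1,
}

tags = viterbi("flights from Boston to Denver".split(), concepts, start, trans, emit)
```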

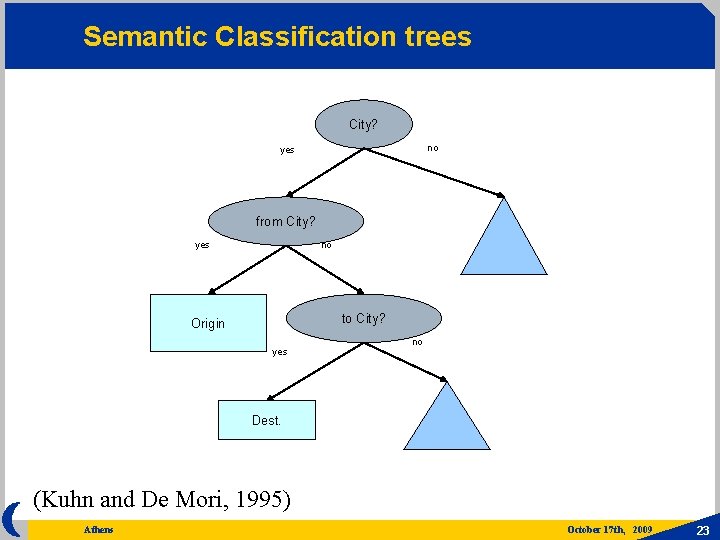

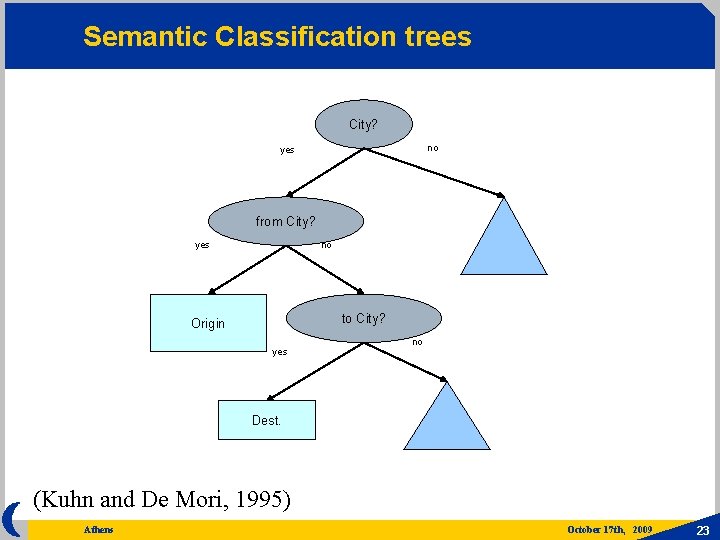

Semantic Classification Trees (Kuhn and De Mori, 1995). (Diagram: a tree of yes/no questions on word patterns such as "City?", "from City?" and "to City?", with leaves such as Origin and Dest.)
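The idea of a tree of yes/no pattern questions can be sketched as follows. The tree here is written by hand and uses plain substring tests, whereas semantic classification trees are learned from data; the labels `unknown` and `no_city` and the helper `classify` are hypothetical names introduced for this illustration.

```python
# Each internal node is (pattern, yes_branch, no_branch); a leaf is a label string.
# Hand-written tree for illustration only, not a learned one.
TREE = (
    "CITY",
    ("from CITY", "Origin", ("to CITY", "Dest", "unknown")),
    "no_city",
)

def classify(utterance, cities, node=TREE):
    """Walk the tree, answering each question by a pattern match on the utterance."""
    if isinstance(node, str):
        return node
    pattern, yes, no = node
    # Replace known city names (given in lowercase) by the generic symbol CITY.
    text = " ".join("CITY" if w in cities else w for w in utterance.lower().split())
    return classify(utterance, cities, yes if pattern in text else no)

cities = {"boston", "denver"}
label_from = classify("I leave from Boston", cities)
label_to = classify("a ticket to Denver", cities)
```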

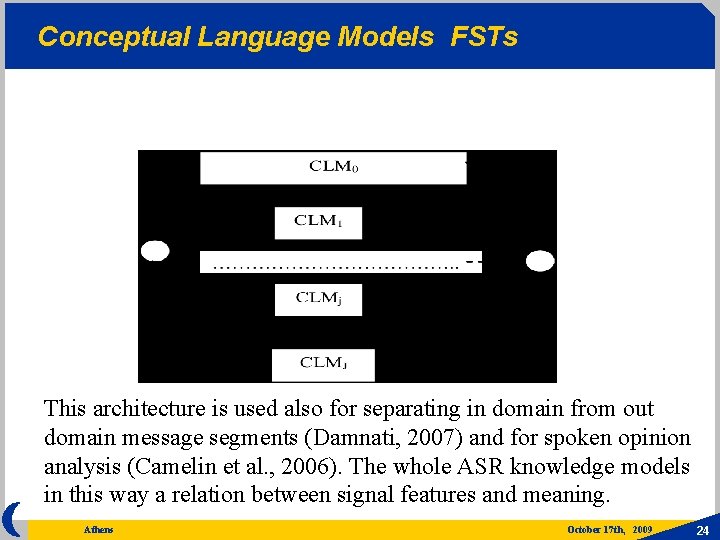

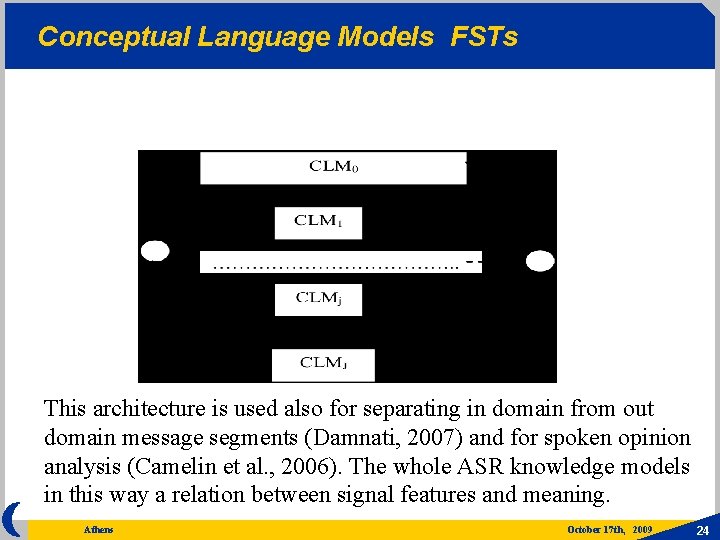

Conceptual Language Models FSTs. This architecture is also used for separating in-domain from out-of-domain message segments (Damnati, 2007) and for spoken opinion analysis (Camelin et al., 2006). In this way, the whole ASR knowledge models a relation between signal features and meaning.

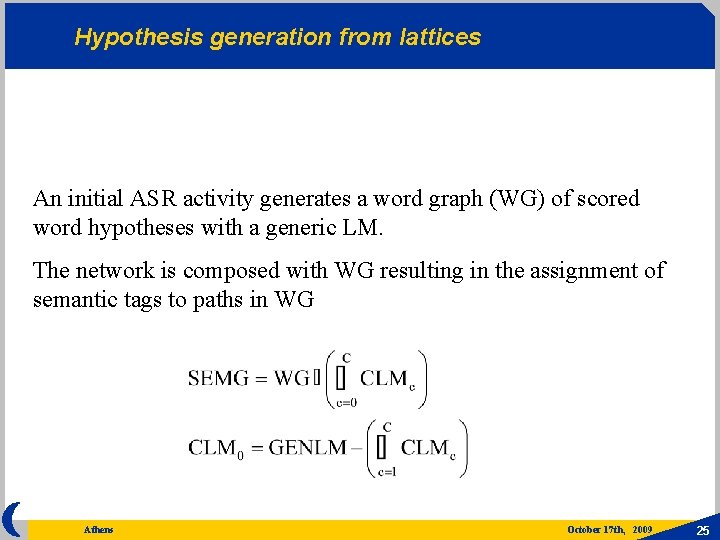

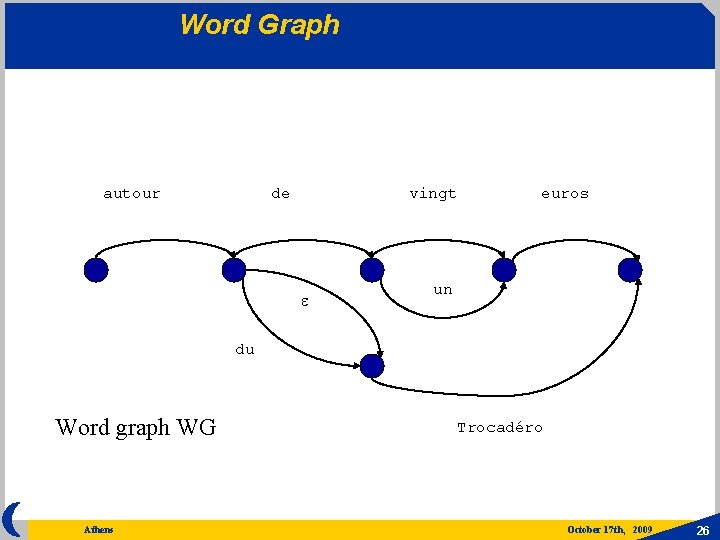

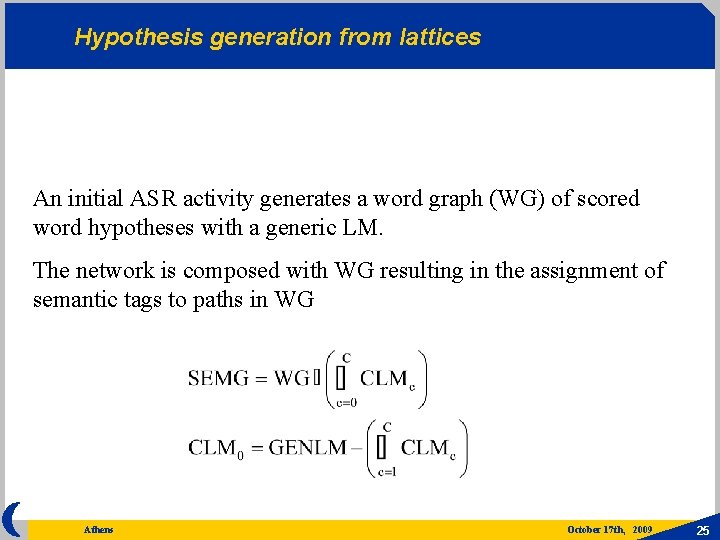

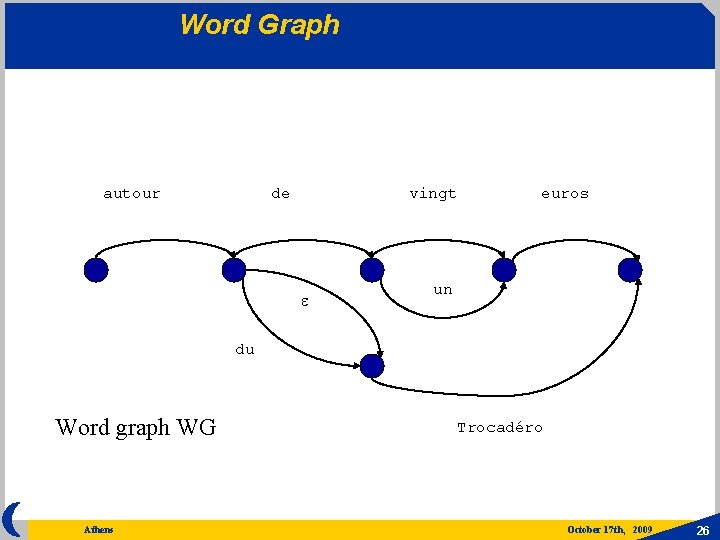

Hypothesis generation from lattices. An initial ASR activity generates a word graph (WG) of scored word hypotheses with a generic LM. The network is composed with the WG, which results in the assignment of semantic tags to paths in the WG.

Word graph WG (diagram) with arcs labeled autour, de, vingt, un, euros, du, Trocadéro and ε.

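Composition (diagram): transducer arcs pair each input word with a semantic output, e.g. autour/NEAR, autour/RANGE, de/ε, du/ε, vingt/ε, un/ε, euros/<PRICE>, Trocadéro/<PLACE>, Trocadéro/ε and ε/ε, with the markers <b(PRICE)> and <b(PLACE)> indicating the beginning of a semantic tag.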

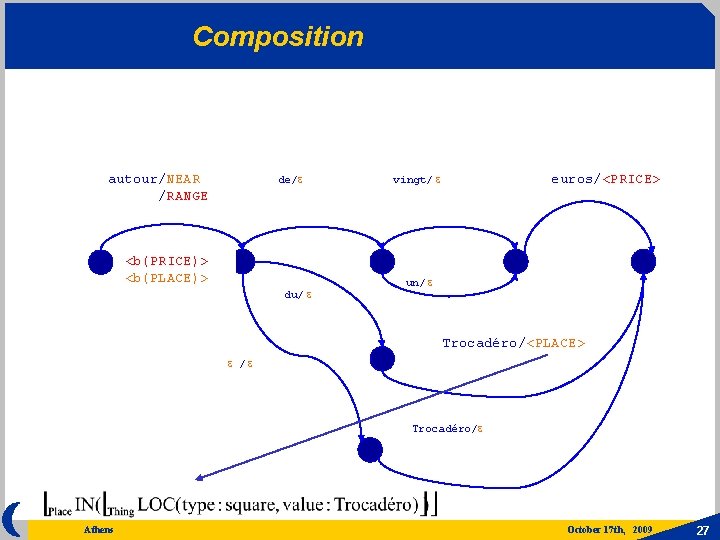

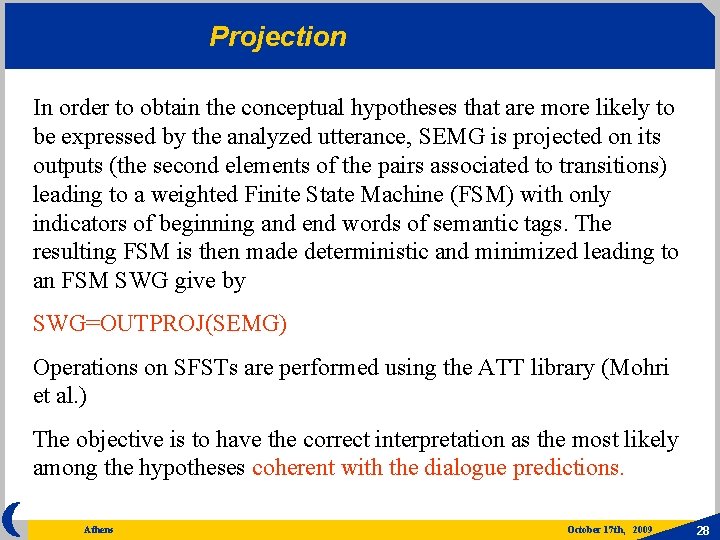

Projection. In order to obtain the conceptual hypotheses that are most likely to be expressed by the analyzed utterance, SEMG is projected on its outputs (the second elements of the pairs associated with transitions), leading to a weighted Finite State Machine (FSM) with only indicators of the beginning and end words of semantic tags. The resulting FSM is then made deterministic and minimized, leading to an FSM SWG given by SWG = OUTPROJ(SEMG). Operations on SFSTs are performed using the AT&T library (Mohri et al.). The objective is to have the correct interpretation as the most likely among the hypotheses coherent with the dialogue predictions.

Demo LUNAVIZ OK 340. 1. Best path avoids insertions.

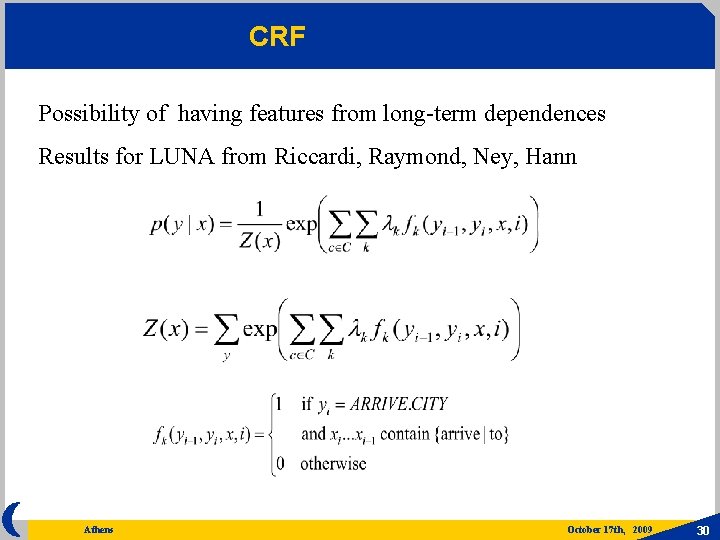

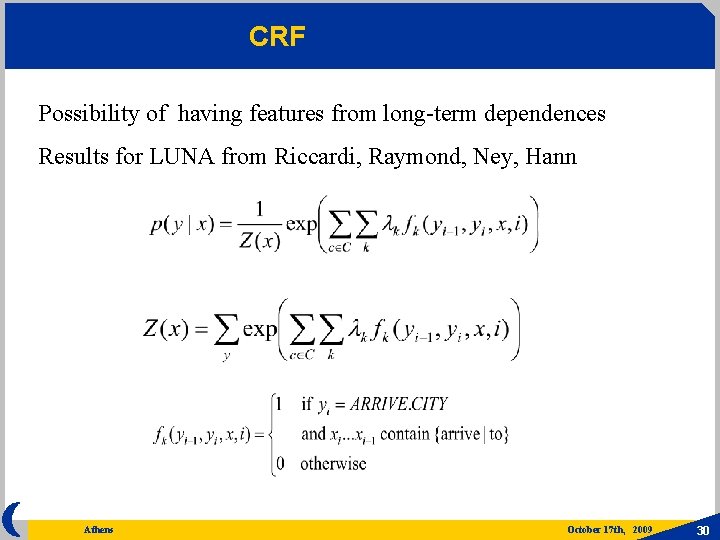

CRF. Possibility of having features from long-term dependences. Results for LUNA from Riccardi, Raymond, Ney, Hann.

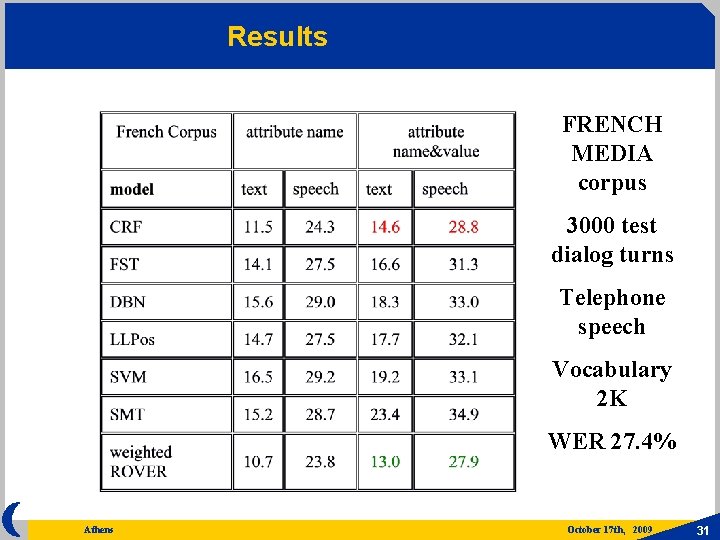

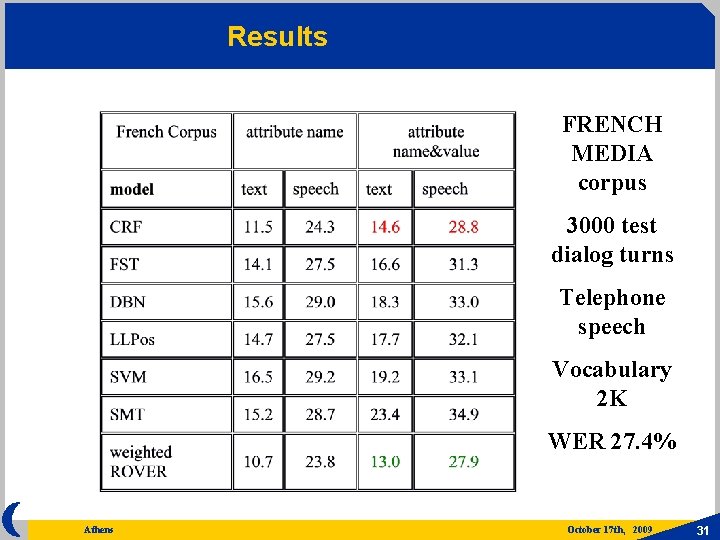

Results. French MEDIA corpus; 3000 test dialog turns; telephone speech; vocabulary 2 K; WER 27.4%.

Computational semantics. Epistemology, the science of knowledge, considers a datum as basic unit. Semantics deals with the organization of meanings and the relations between sensory signs or symbols and what they denote or mean. Computer epistemology deals with observable facts and their representation in a computer. Natural language interpretation by computers performs a conceptualization of the world using computational processes for composing a meaning representation structure from available signs and their features.

Frames as computational structures (intension). A frame scheme with defining properties represents types of conceptual structures (intension) as well as instances of them (extension). Relations with signs can be established by attached procedures (S. Young et al., 1989).

{address

- loc: TOWN (facet) … attached procedures
- area: DEPARTMENT OR PROVINCE OR STATE … attached procedures
- country: NATION … attached procedures
- street: NUMBER AND NAME … attached procedures
- zip: ORDINAL NUMBER … attached procedures

}

Frame instances (extension). A convenient way of asserting properties and reasoning about semantic knowledge is to represent it as a set of logic formulas. A frame instance (extension) can be obtained from predicates that are related and composed into a computational structure. Frame schemata can be derived from knowledge obtained by applying semantic theories. Interesting theories can be found, for example, in (Jackendoff, 1990, 2002) or in (Brachman 1978, reviewed by Woods 1985).

Frame instance. Schemata contain collections of properties and values expressing relations. A property or a role is represented by a slot filled by a value.

{a0001

- instance_of: address
- loc: Avignon
- area: Vaucluse
- country: France
- street: 1, avenue Pascal
- zip: 84000

}

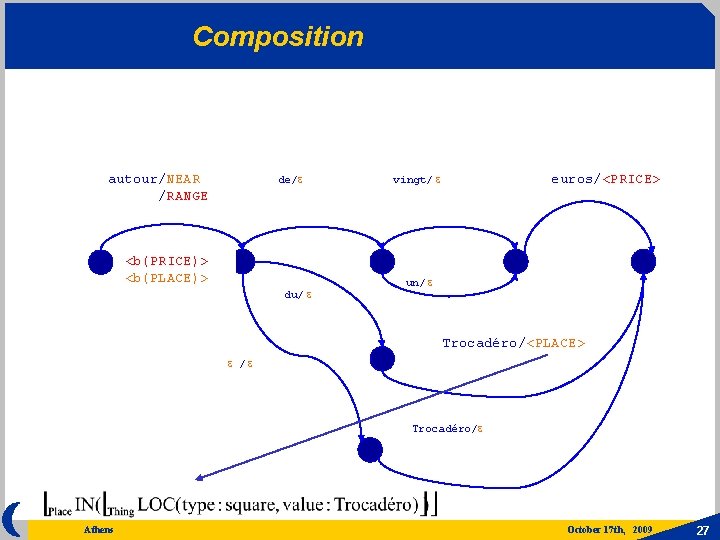

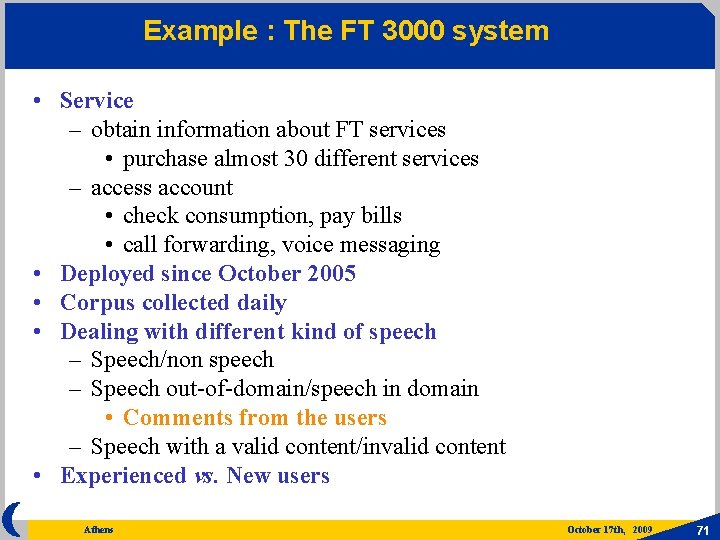

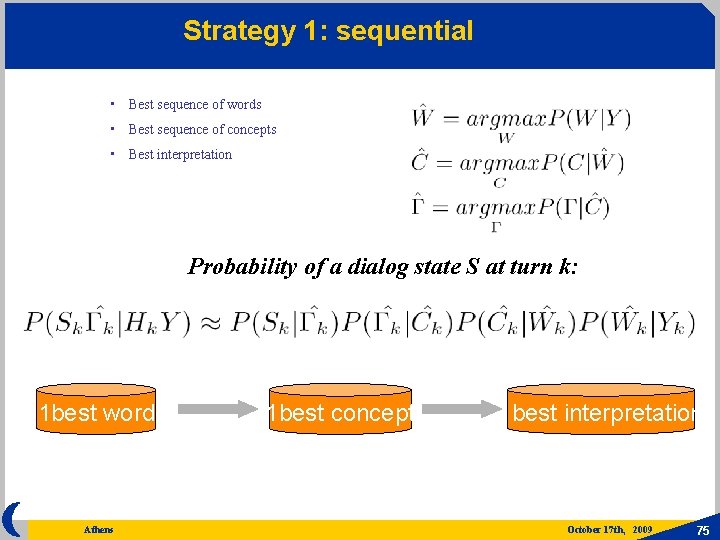

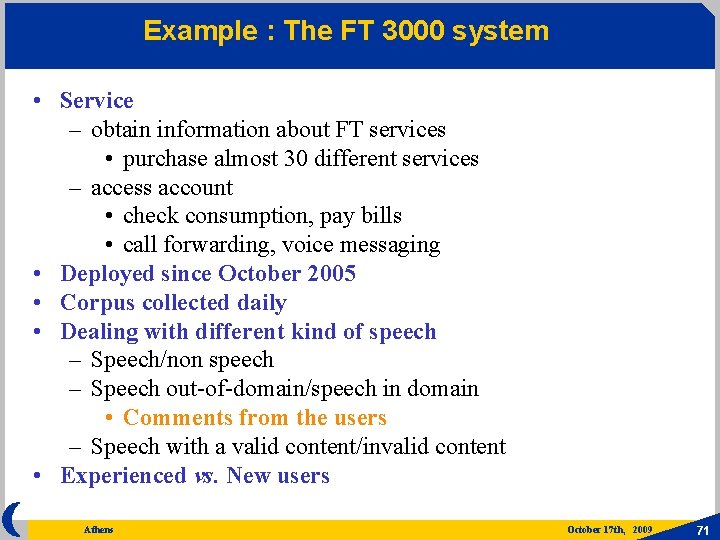

Composition process 1. Step 1: concept tags -> frame instance fragments. Concept tag: hotel-parking. Fragment: HOTEL.[hotel_facilities FACILITY.[facility_type parking]]

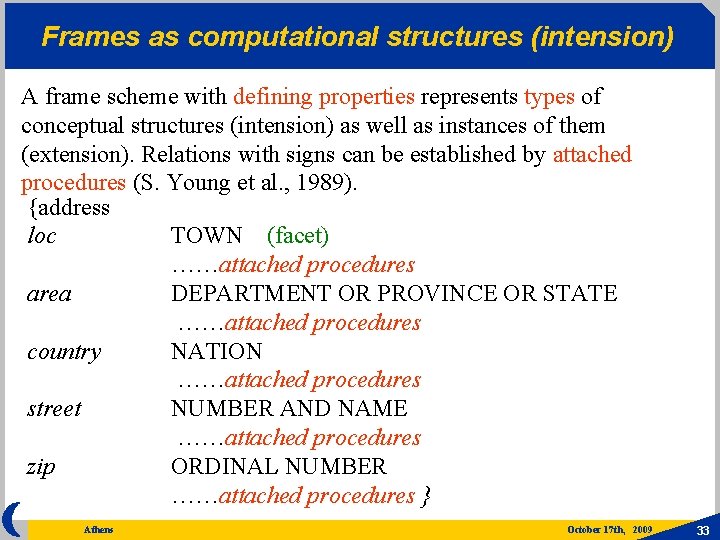

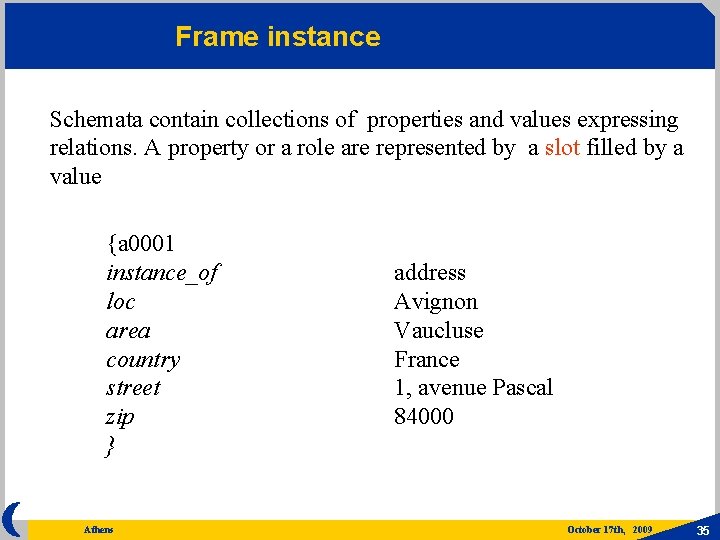

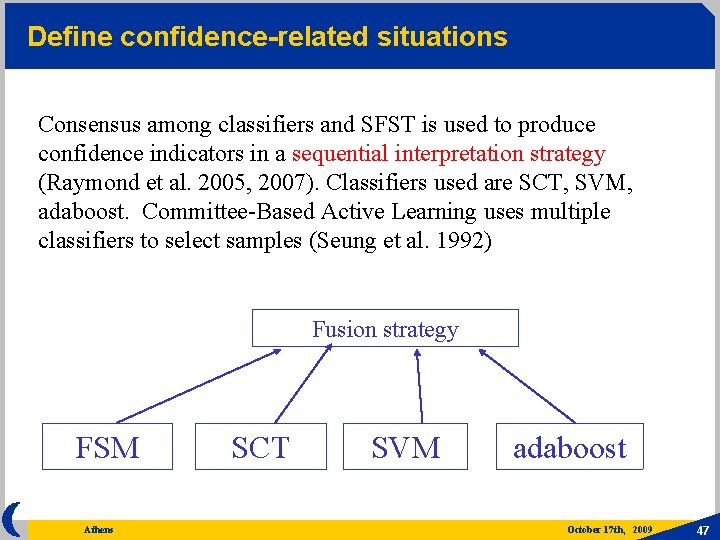

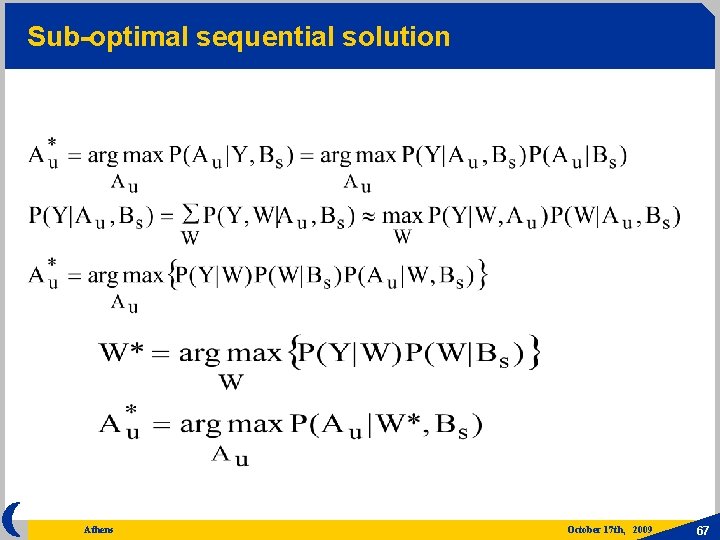

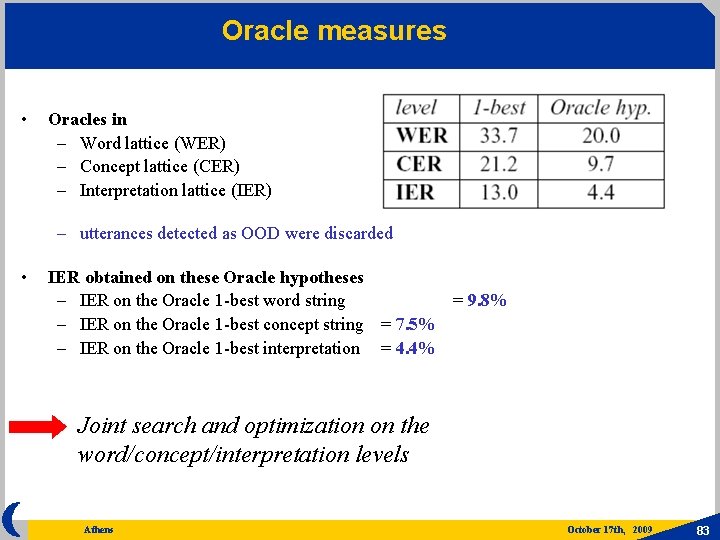

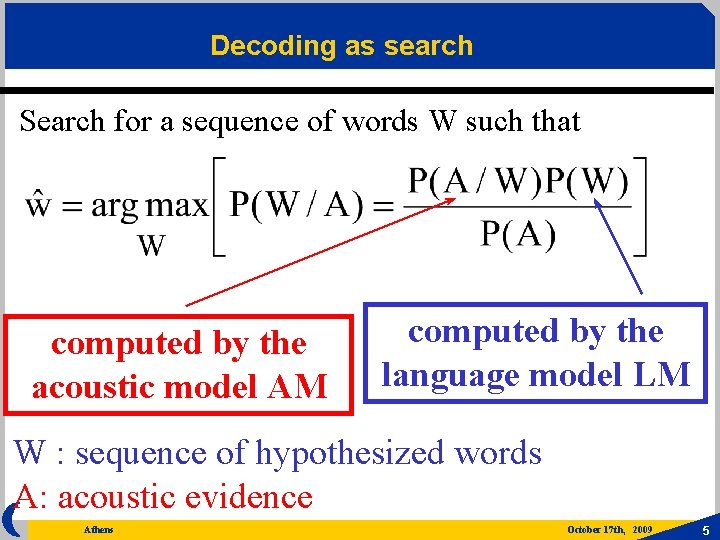

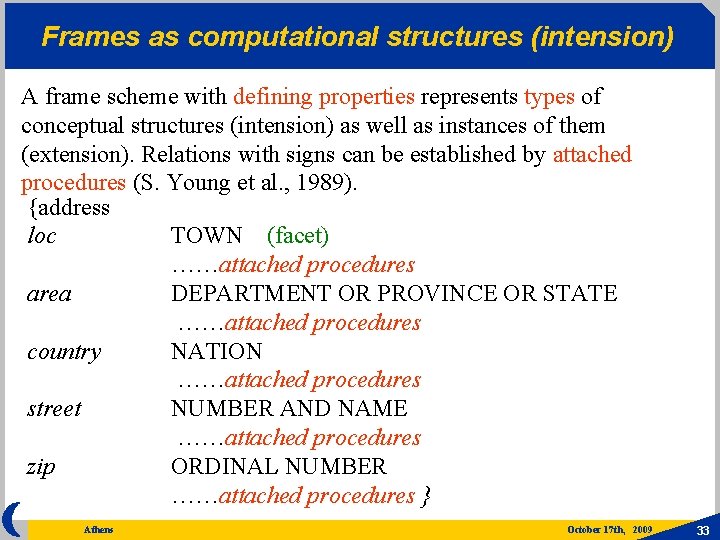

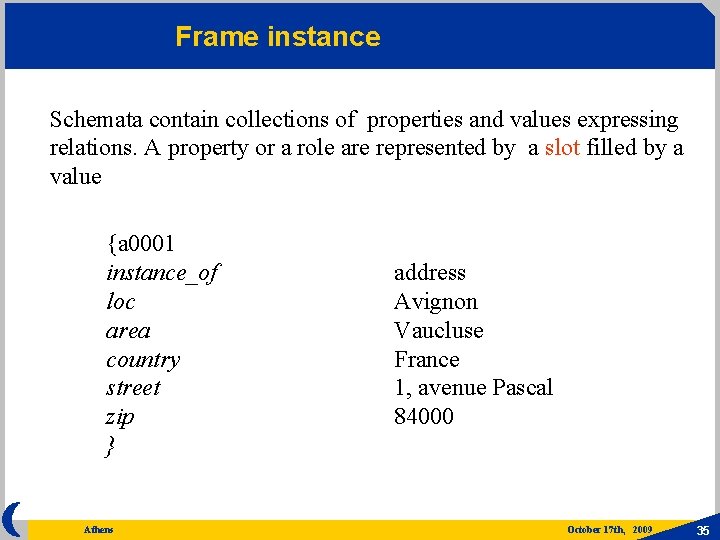

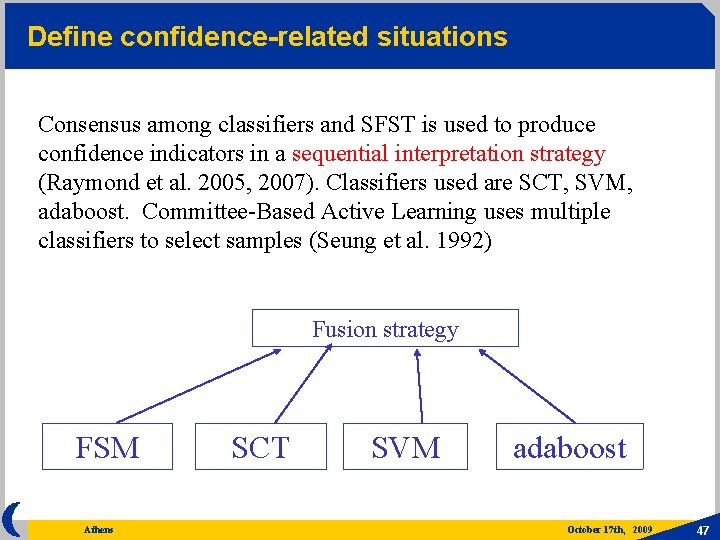

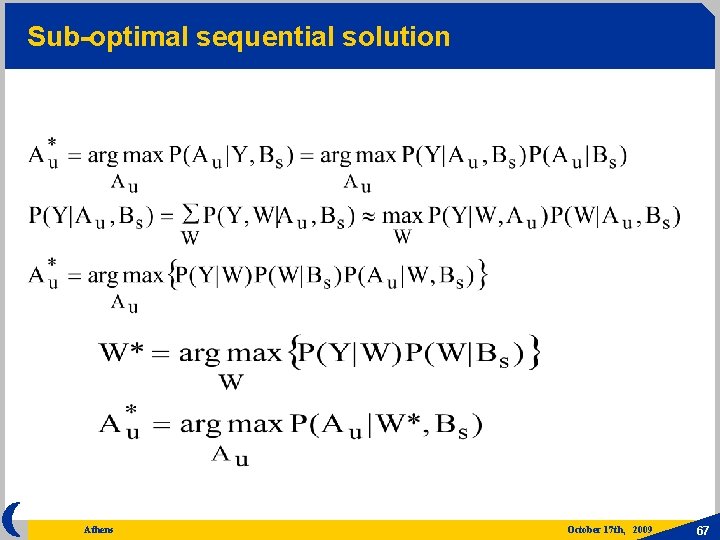

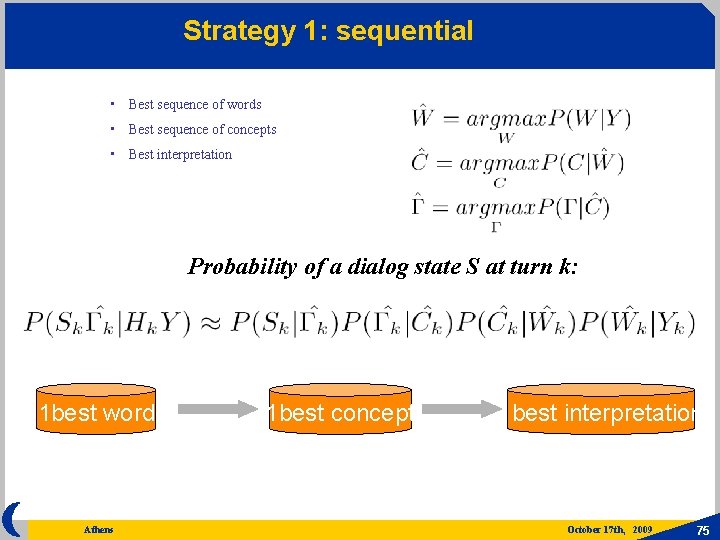

Composition process 2. Step 2: composition by fusion. Fragments: HOTEL.[luxury four_stars] and HOTEL.[h_facility FACILITY.[hotel_service tennis]]. Fusion rule result: HOTEL.[luxury four_stars, h_facility FACILITY.[hotel_service tennis]]

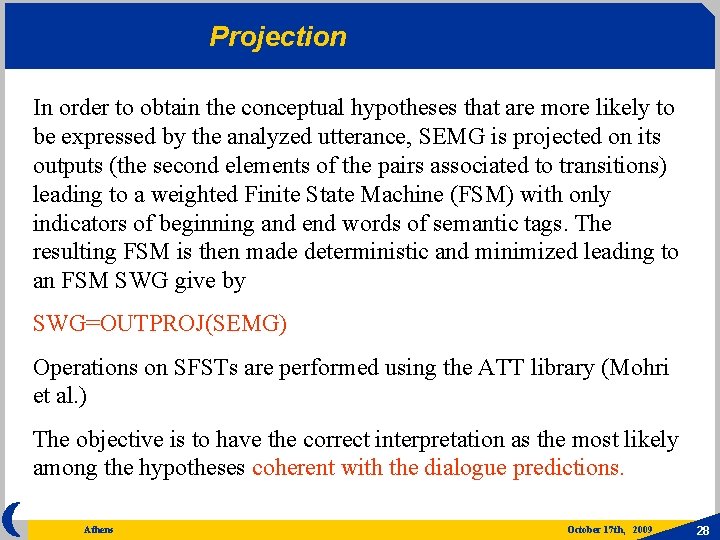

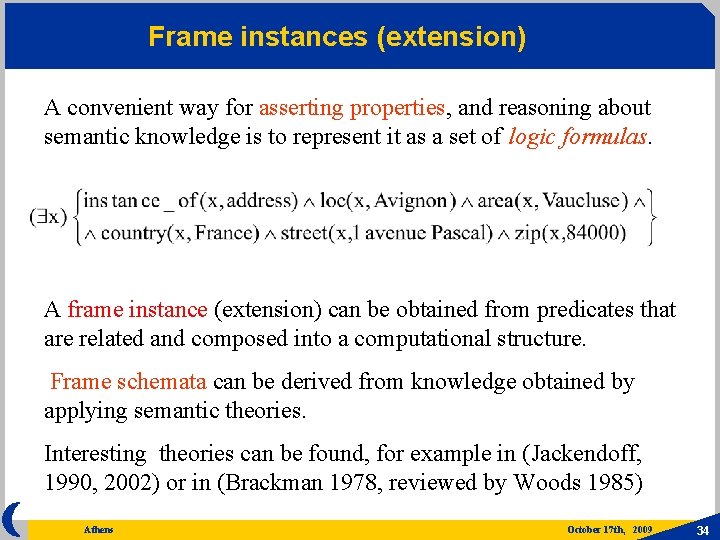

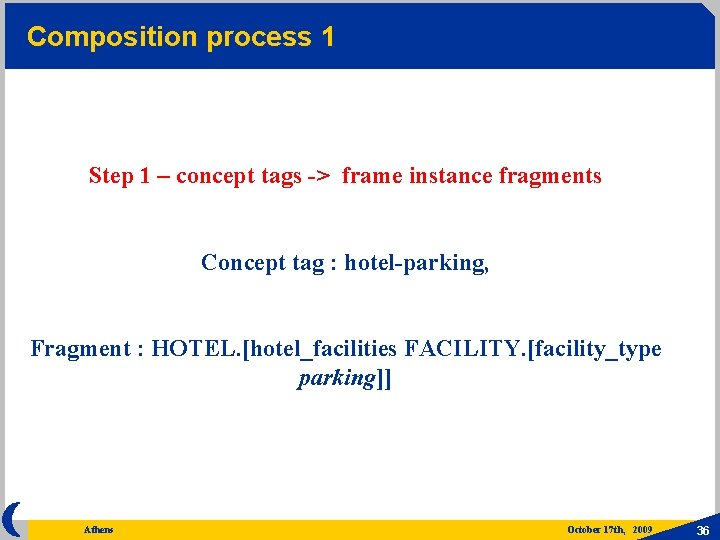

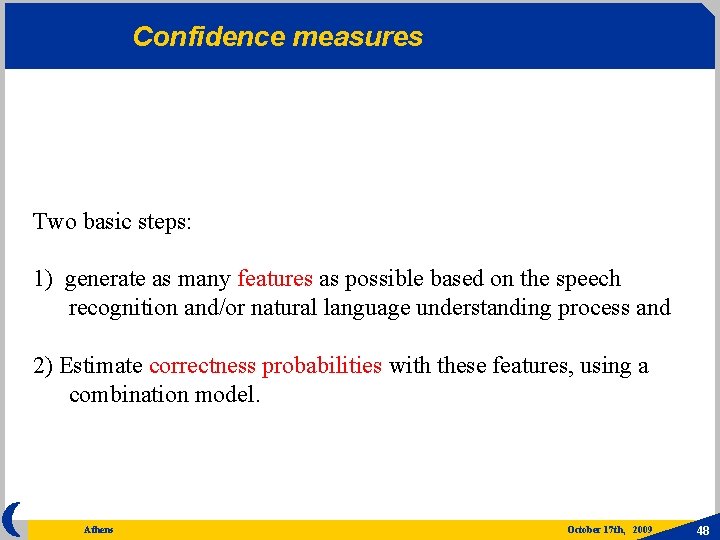

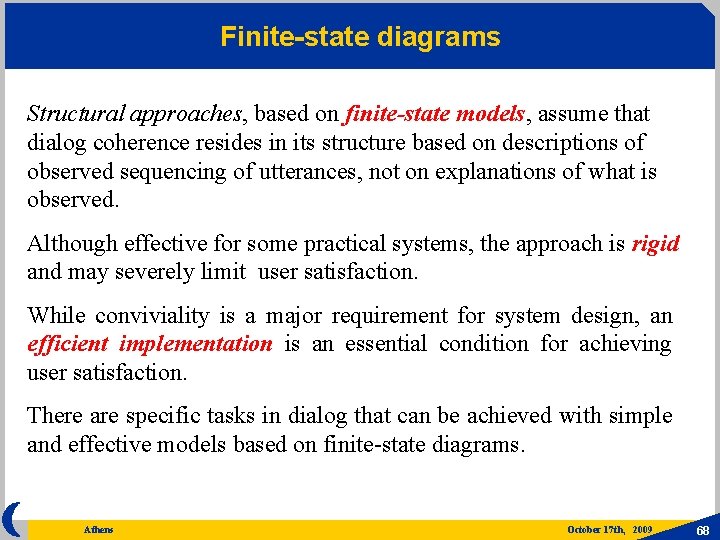

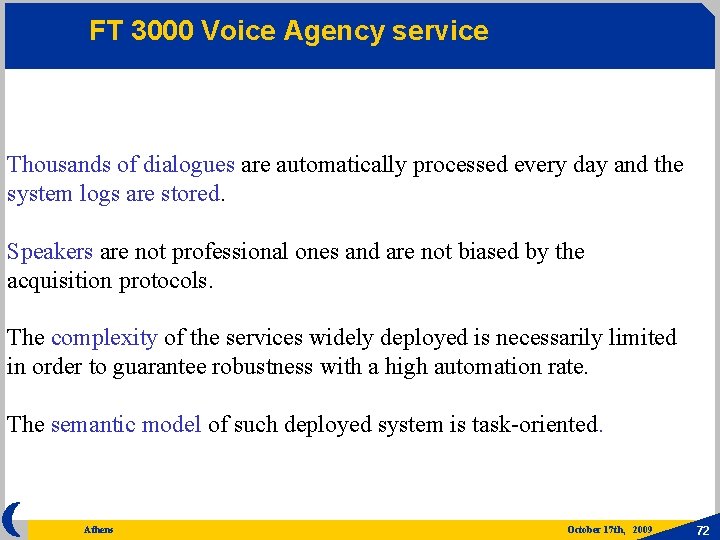

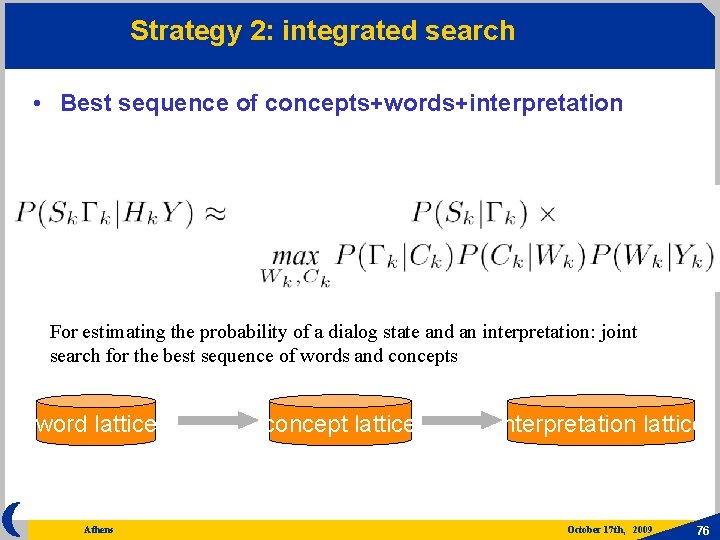

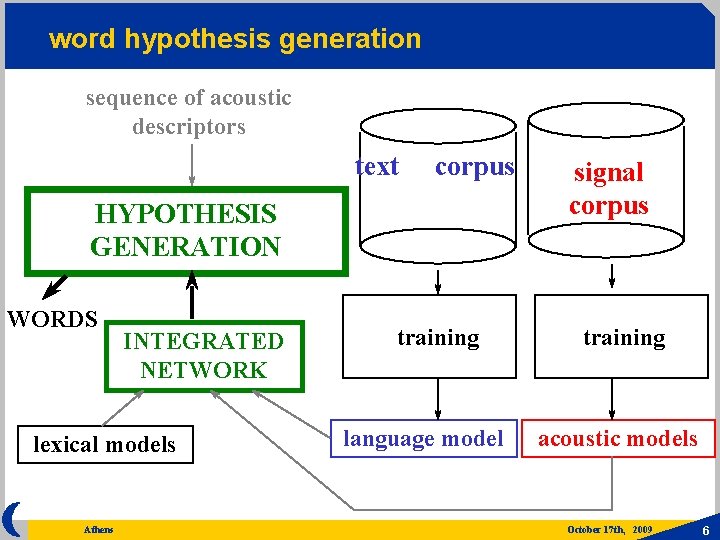

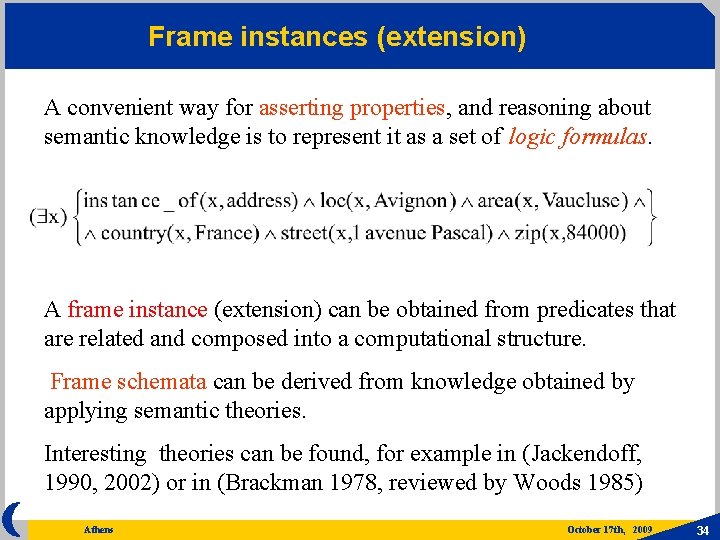

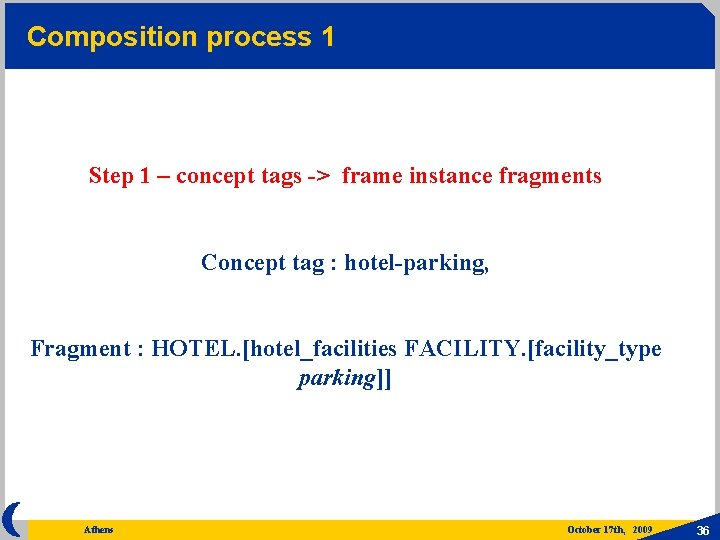

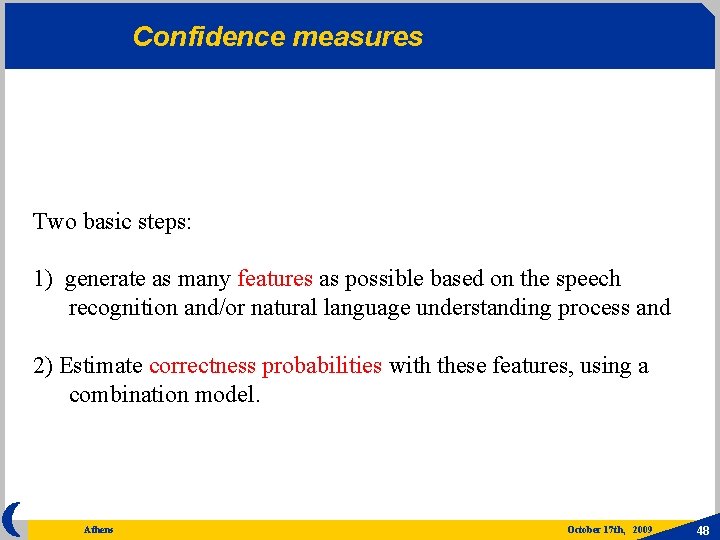

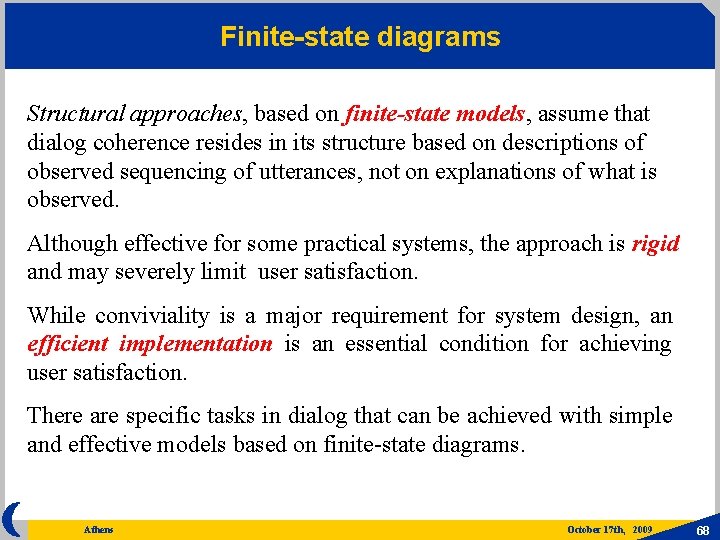

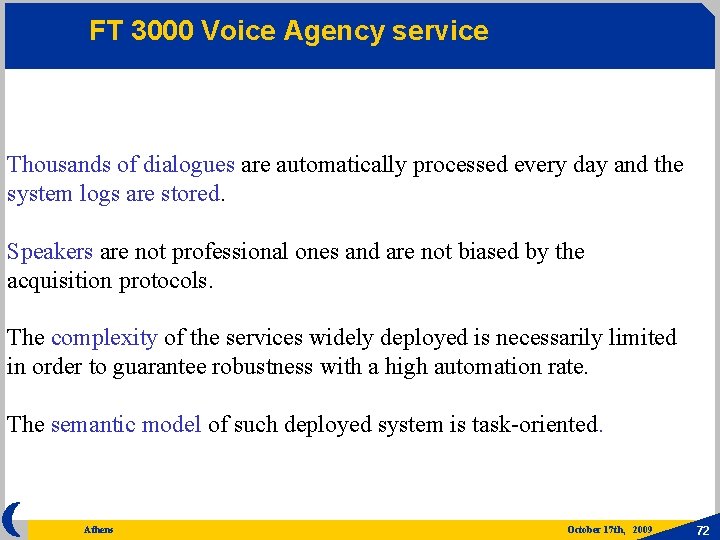

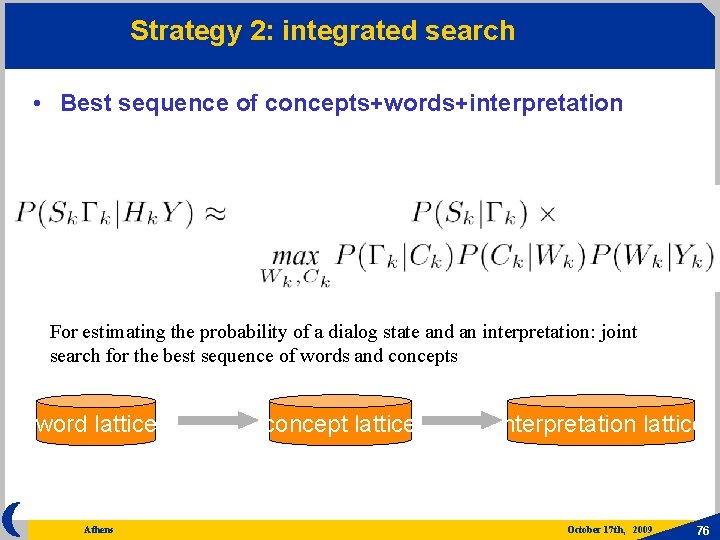

Composition process 3. Step 3: composition by attachment. New fragment: ADDRESS.[adr_city Lyon]. Composition result: HOTEL.[luxury four_stars, h_facility FACILITY.[hotel_service tennis], at_loc ADDRESS.[adr_city Lyon]]
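The fusion and attachment steps can be sketched with nested dictionaries. The representation (a `frame` name plus a `slots` mapping) and the helpers `fuse`, `attach` and `show` are assumptions made for this illustration; the slides do not specify how fragments are stored or how conflicts are handled.

```python
def fuse(a, b):
    """Fuse two fragments of the same frame by merging their slots."""
    if a["frame"] != b["frame"]:
        raise ValueError("fusion needs two fragments of the same frame")
    slots = dict(a["slots"])
    for name, value in b["slots"].items():
        if name in slots and slots[name] != value:
            raise ValueError(f"conflicting values for slot {name}")
        slots[name] = value
    return {"frame": a["frame"], "slots": slots}

def attach(host, slot, fragment):
    """Attach a fragment as the value of a still empty slot of the host frame."""
    if slot in host["slots"]:
        raise ValueError(f"slot {slot} is already filled")
    return {"frame": host["frame"], "slots": {**host["slots"], slot: fragment}}

def show(f):
    """Render a fragment in the FRAME.[slot value, ...] notation of the slides."""
    if not isinstance(f, dict):
        return str(f)
    inner = ", ".join(f"{k} {show(v)}" for k, v in f["slots"].items())
    return f"{f['frame']}.[{inner}]"

hotel_a = {"frame": "HOTEL", "slots": {"luxury": "four_stars"}}
hotel_b = {"frame": "HOTEL", "slots": {
    "h_facility": {"frame": "FACILITY", "slots": {"hotel_service": "tennis"}}}}
address = {"frame": "ADDRESS", "slots": {"adr_city": "Lyon"}}

fused = fuse(hotel_a, hotel_b)                # step 2: composition by fusion
result = attach(fused, "at_loc", address)     # step 3: composition by attachment
```

Calling `show(result)` reproduces the composition result given on the slide.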

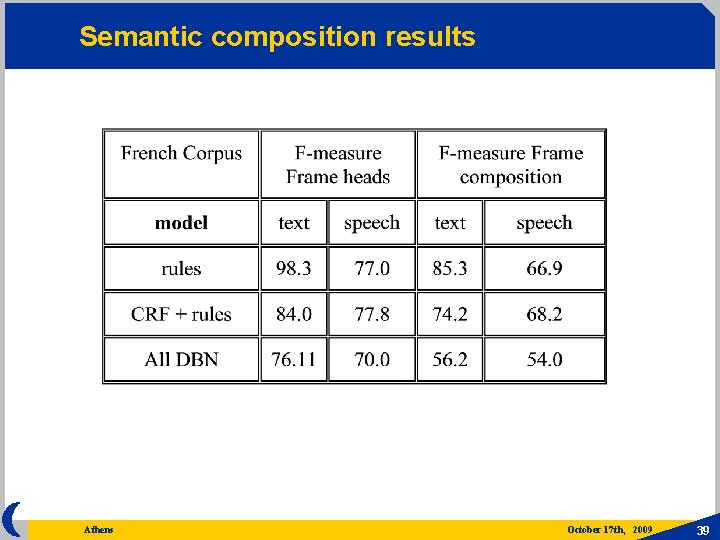

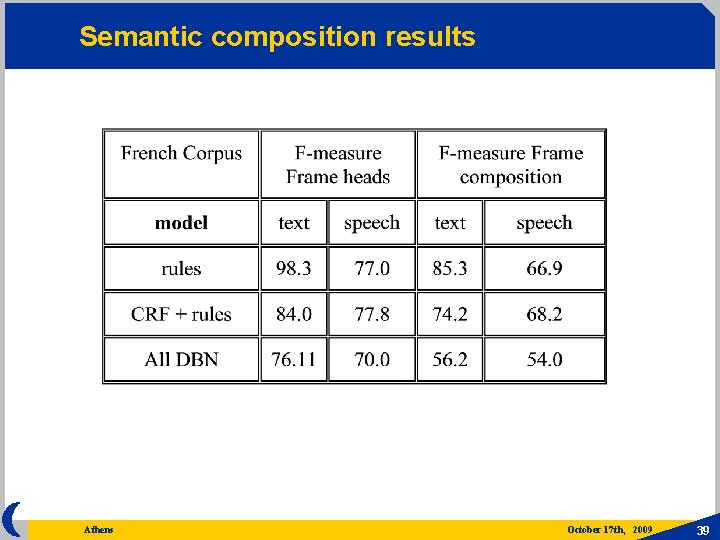

Semantic composition results

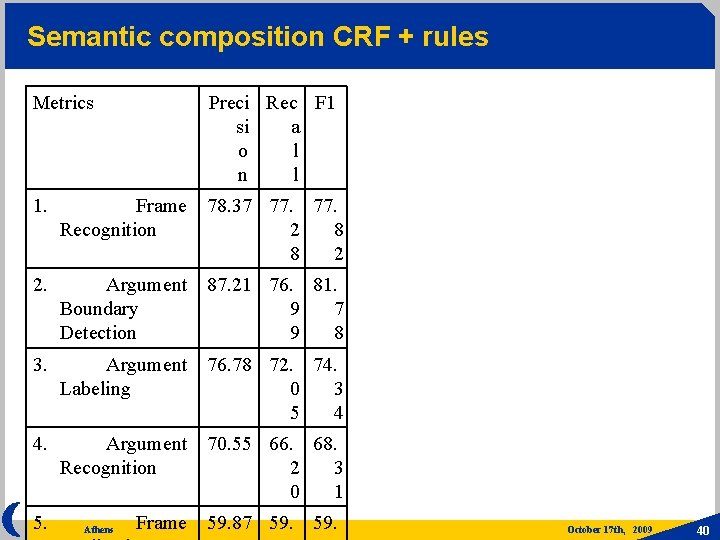

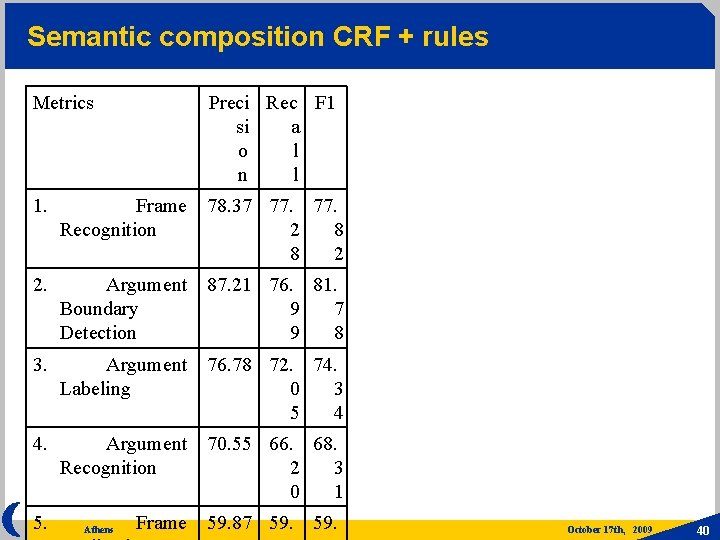

Semantic composition CRF + rules Metrics Preci Rec F 1 si a o l n l 1. Frame 78. 37 77. Recognition 2 8 8 2 2. Argument 87. 21 76. 81. Boundary 9 7 Detection 9 8 3. Argument 76. 78 72. 74. Labeling 0 3 5 4 4. Argument 70. 55 66. 68. Recognition 2 3 0 1 5. Athens Frame 59. 87 59. October 17 th, 2009 40

Demo LUNAVIZ OK 340 1

CONFIDENCE AND LEARNING

Confidence Evaluate confidence of components and compositions represents the confidence indicators or a function of them. Notice that it is difficult to compare competing interpretation hypotheses based on the probability where Y is a time sequence of acoustic features, because different semantic constituents may have been hypothesized on different time segments of stream Y. Athens October 17 th, 2009 43

Probabilistic frame-based systems. In probabilistic frame-based systems (Koller 1998), a slot S of a frame F is associated with a facet Q with value Y: Q(F, S, Y). A probability model is part of a facet, as it represents a restriction on the values Y. It is possible to have a probability model for a slot value which depends on a slot chain. It is also possible to inherit probability models from classes to subclasses, to use probability models in multiple instances, and to have probability distributions representing structural uncertainty about a set of entities.

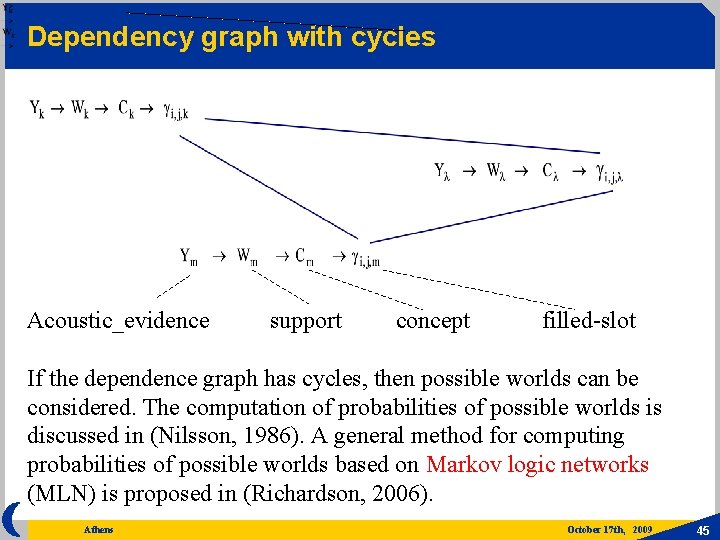

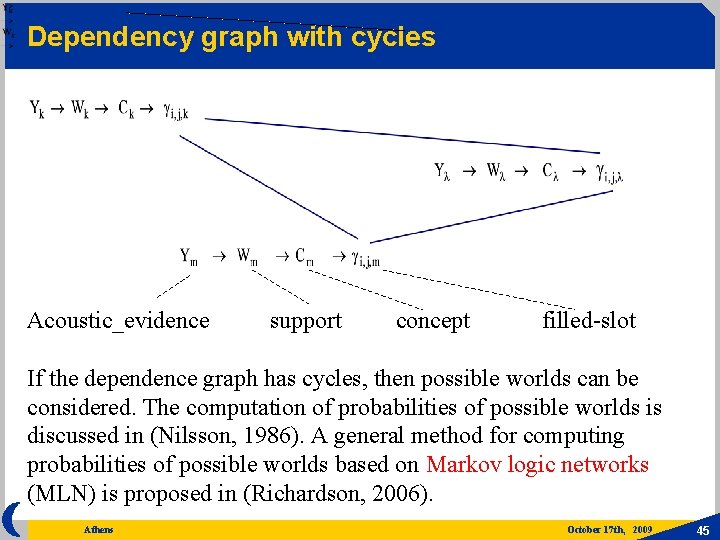

Dependency graph with cycles. (Diagram: Acoustic_evidence, support, concept, filled-slot.) If the dependence graph has cycles, then possible worlds can be considered. The computation of probabilities of possible worlds is discussed in (Nilsson, 1986). A general method for computing probabilities of possible worlds based on Markov logic networks (MLN) is proposed in (Richardson, 2006).

Probabilistic models of relational data. Relational Markov Networks (RMN) are a generalization of CRFs that allow for collective classification of a set of related entities by integrating information from features of individual entities as well as relations between them (Taskar et al., 2002). Methods for probabilistic logic learning are reviewed in (De Raedt, 2003).

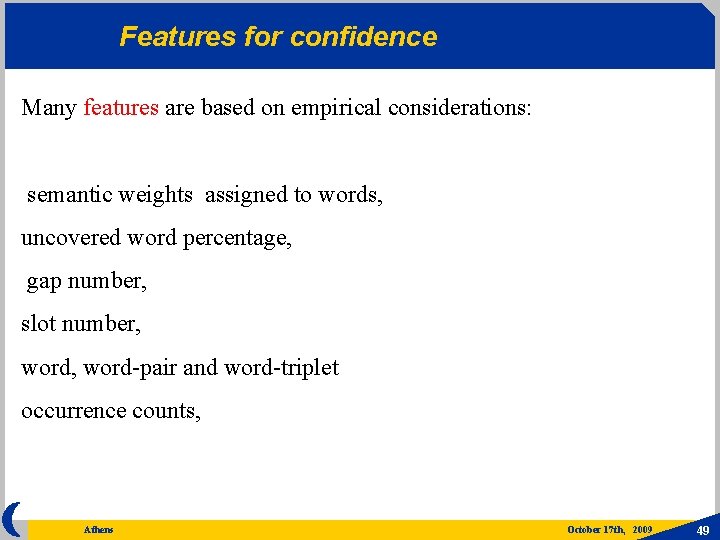

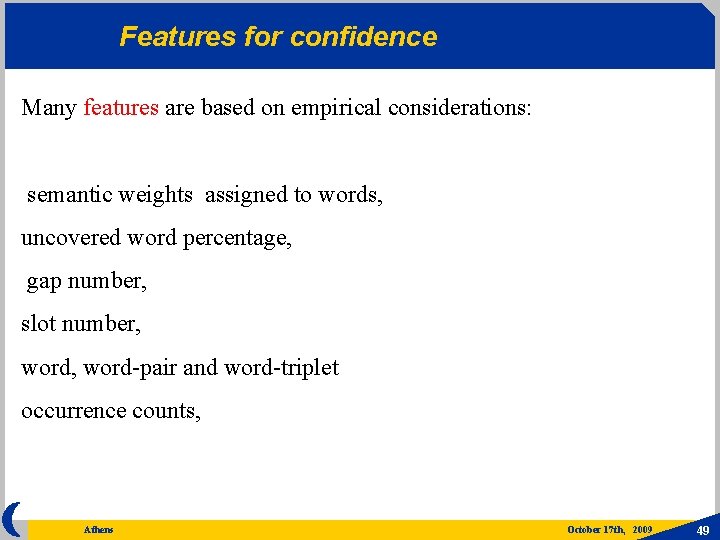

Define confidence-related situations. Consensus among classifiers and the SFST is used to produce confidence indicators in a sequential interpretation strategy (Raymond et al. 2005, 2007). The classifiers used are SCT, SVM and AdaBoost. Committee-based active learning uses multiple classifiers to select samples (Seung et al. 1992). (Diagram: a fusion strategy combining FSM, SCT, SVM and AdaBoost.)

Confidence measures. Two basic steps: 1) generate as many features as possible based on the speech recognition and/or natural language understanding process, and 2) estimate correctness probabilities with these features, using a combination model.
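A minimal sketch of the second step, assuming a logistic model as the combination model. The slides do not say which combination model is used, so this choice, the feature names and the weights are all hypothetical; in practice the weights would be fitted on annotated data.

```python
import math

def correctness_probability(features, weights, bias):
    """Combine confidence features into an estimate of P(hypothesis is correct)."""
    z = bias + sum(weights[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical weights and bias.
weights = {"asr_posterior": 3.0, "uncovered_word_pct": -4.0, "slot_number": 0.2}
bias = -1.0

reliable = {"asr_posterior": 0.9, "uncovered_word_pct": 0.1, "slot_number": 2}
doubtful = {"asr_posterior": 0.4, "uncovered_word_pct": 0.6, "slot_number": 2}

p_reliable = correctness_probability(reliable, weights, bias)
p_doubtful = correctness_probability(doubtful, weights, bias)
```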

Features for confidence. Many features are based on empirical considerations: semantic weights assigned to words, uncovered word percentage, gap number, slot number, and word, word-pair and word-triplet occurrence counts.

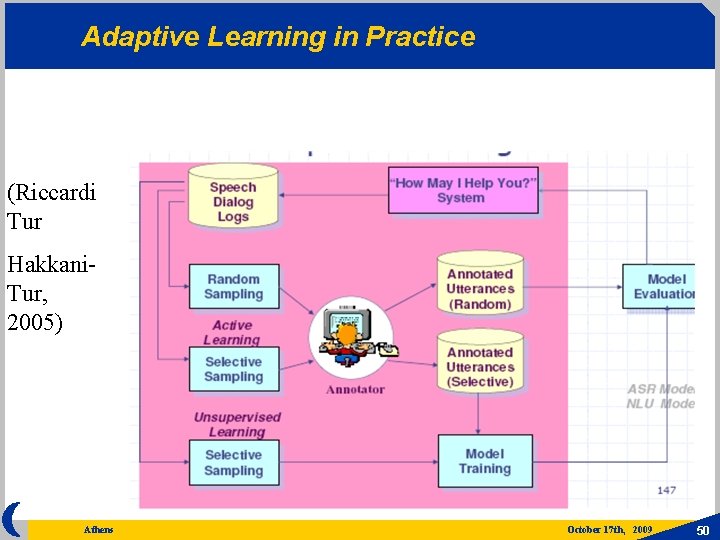

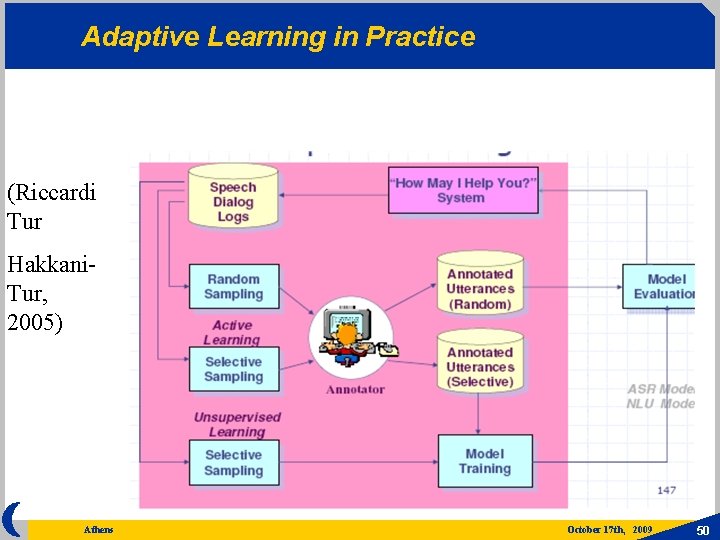

Adaptive Learning in Practice (Riccardi, Tur, Hakkani-Tur, 2005)

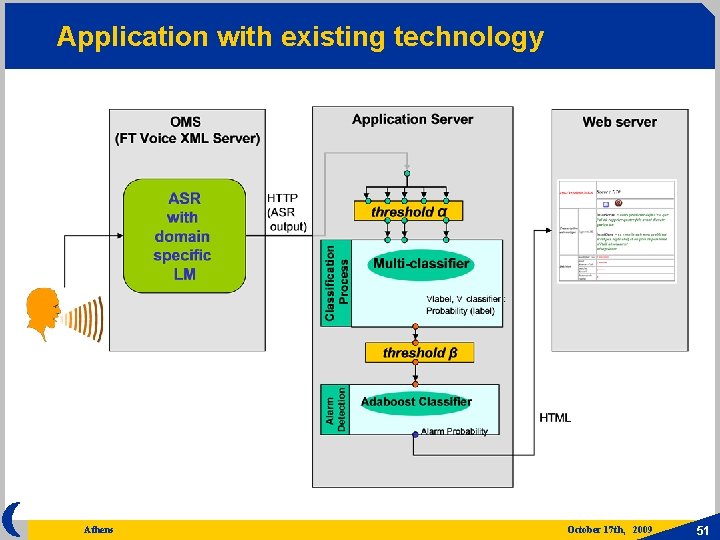

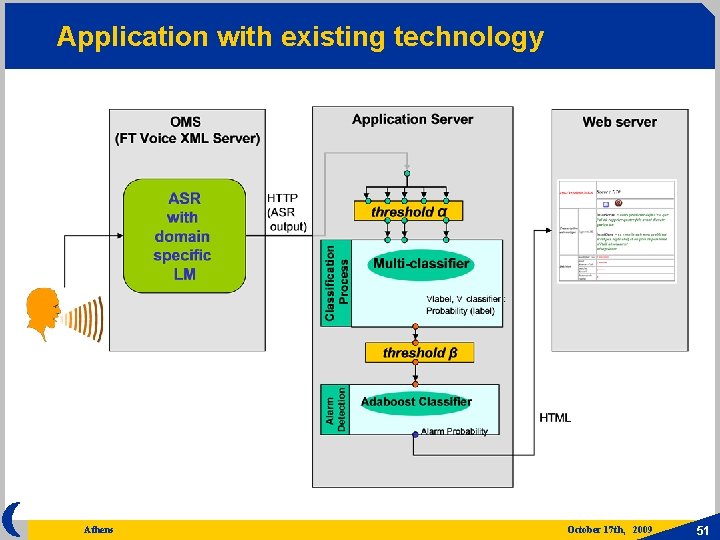

Application with existing technology

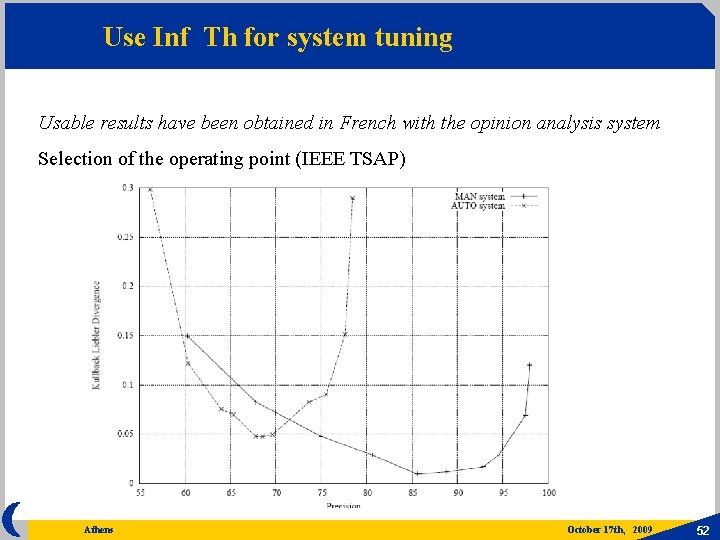

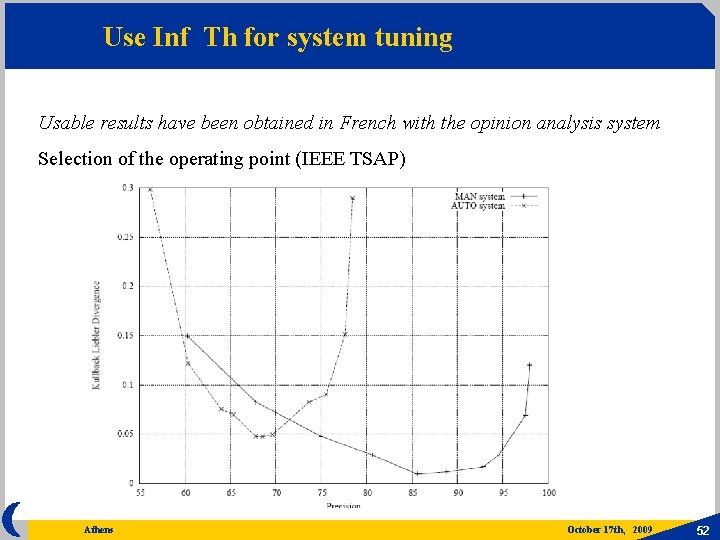

Use Inf Th for system tuning. Usable results have been obtained in French with the opinion analysis system. Selection of the operating point (IEEE TSAP).

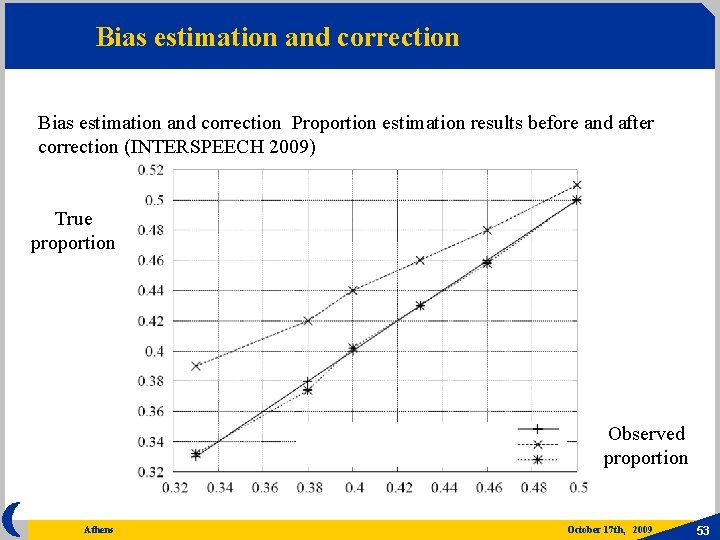

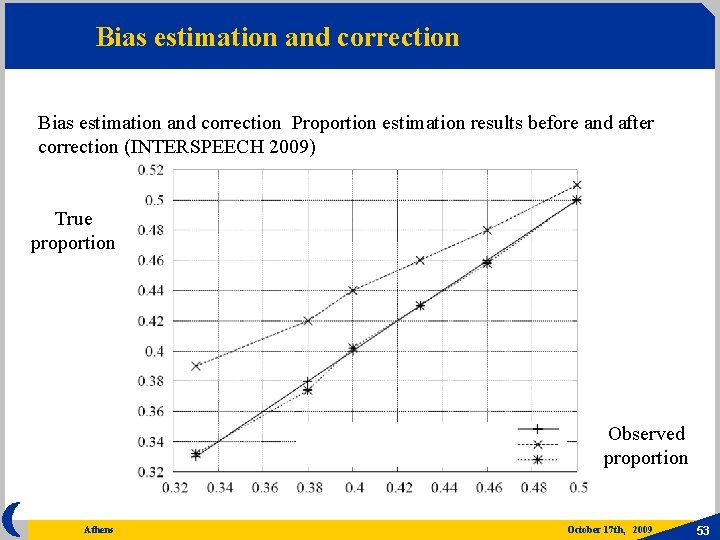

Bias estimation and correction. Proportion estimation results before and after correction (INTERSPEECH 2009). (Plot axes: true proportion, observed proportion.)

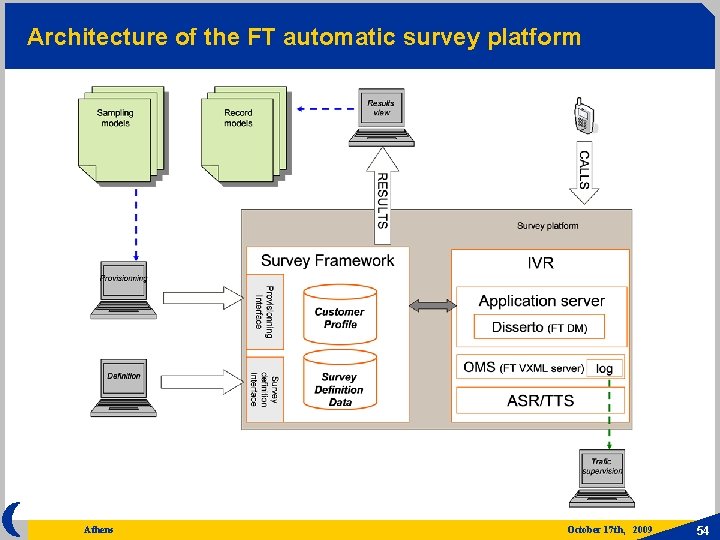

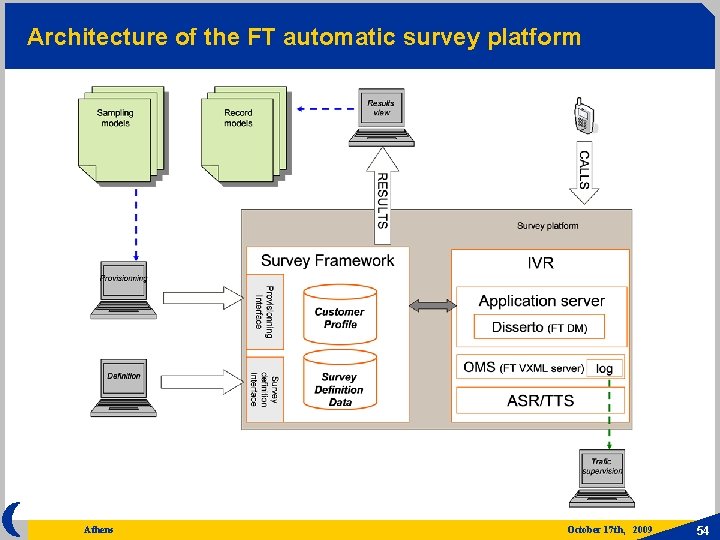

Architecture of the FT automatic survey platform

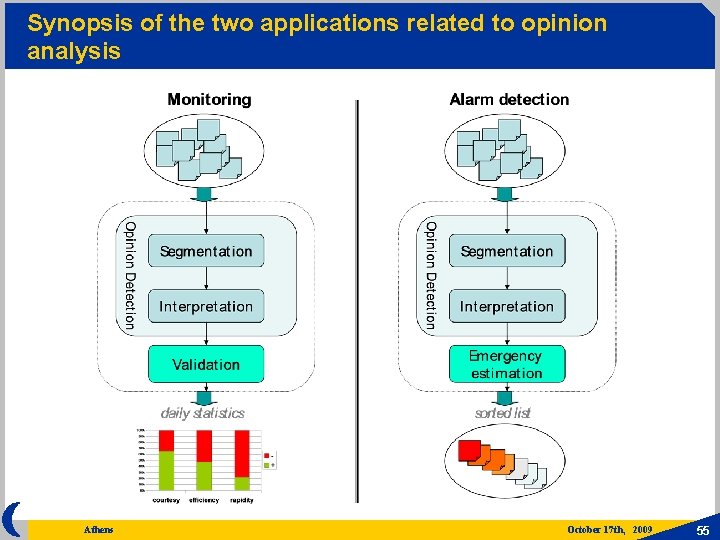

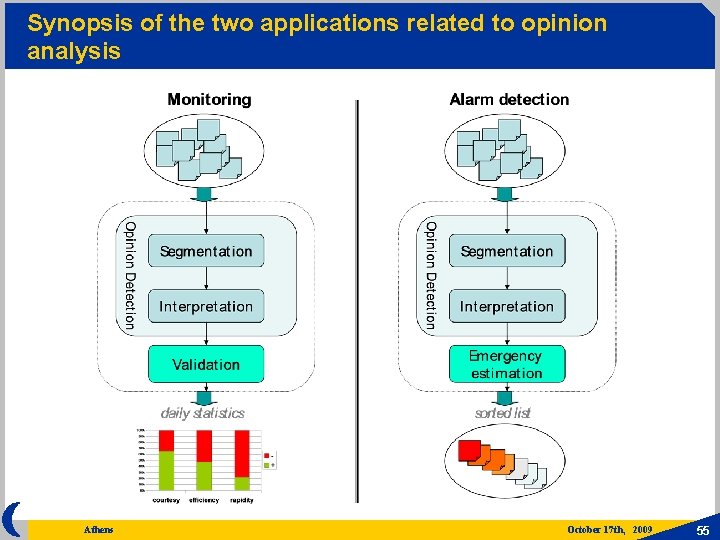

Synopsis of the two applications related to opinion analysis

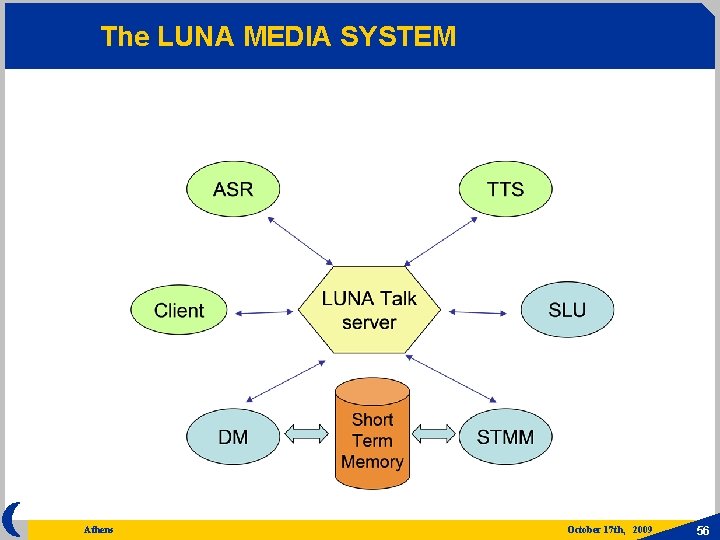

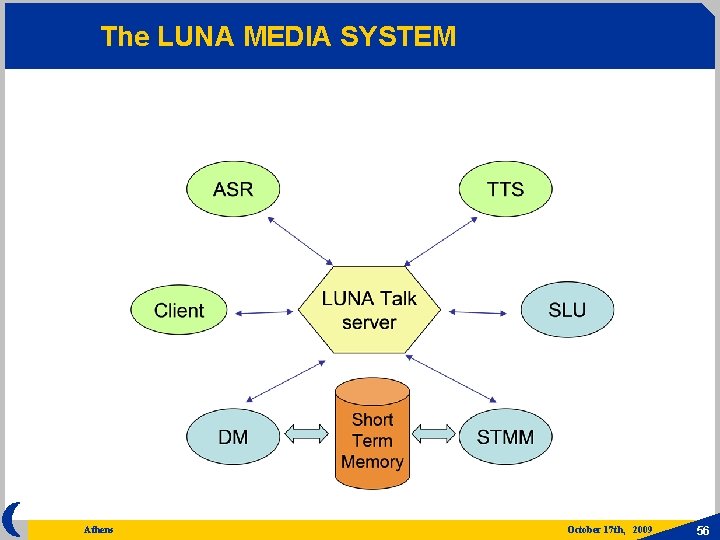

The LUNA MEDIA SYSTEM

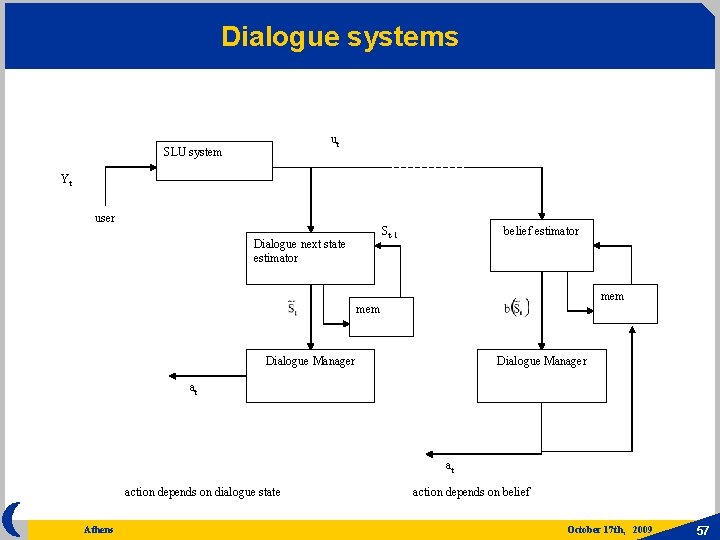

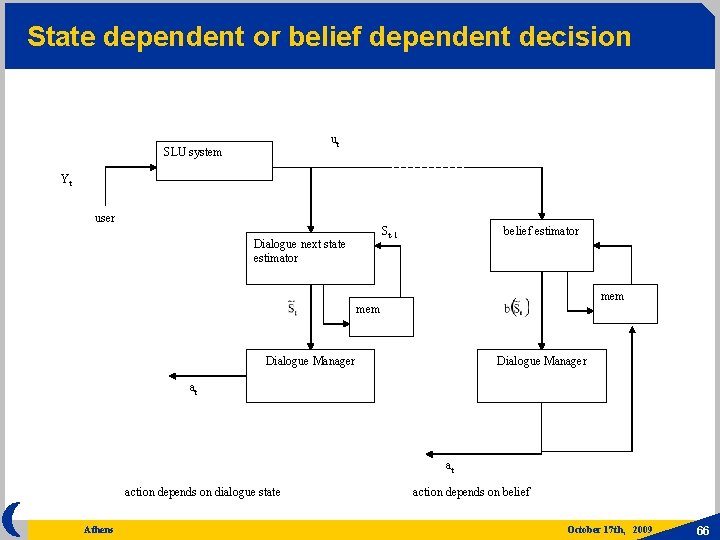

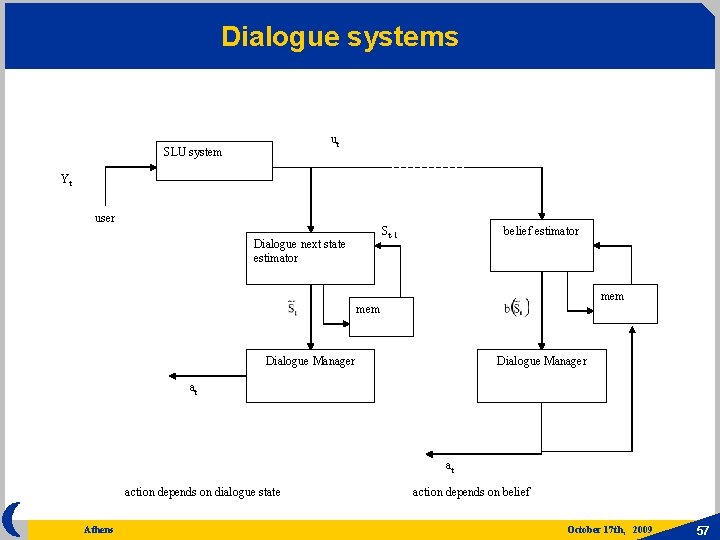

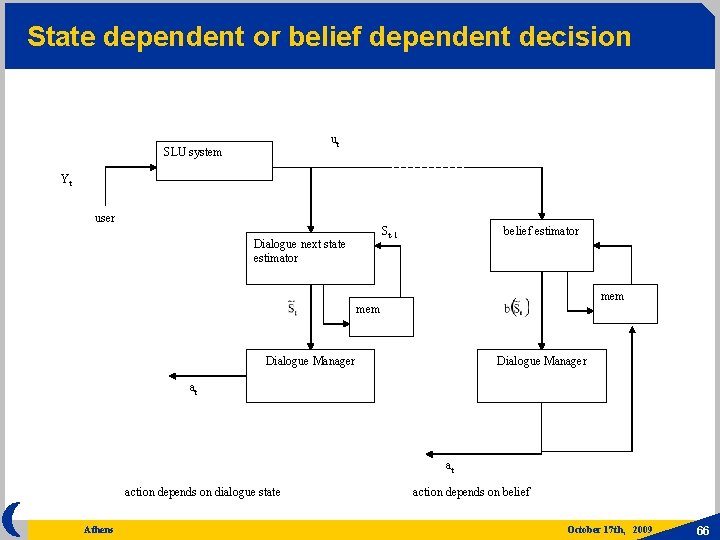

Dialogue systems (diagram). Components shown: user, SLU system (input Y_t, output u_t), belief estimator, dialogue next state estimator (with memory and S_{t-1}), and Dialogue Manager (output a_t). The action a_t depends either on the dialogue state or on the belief.

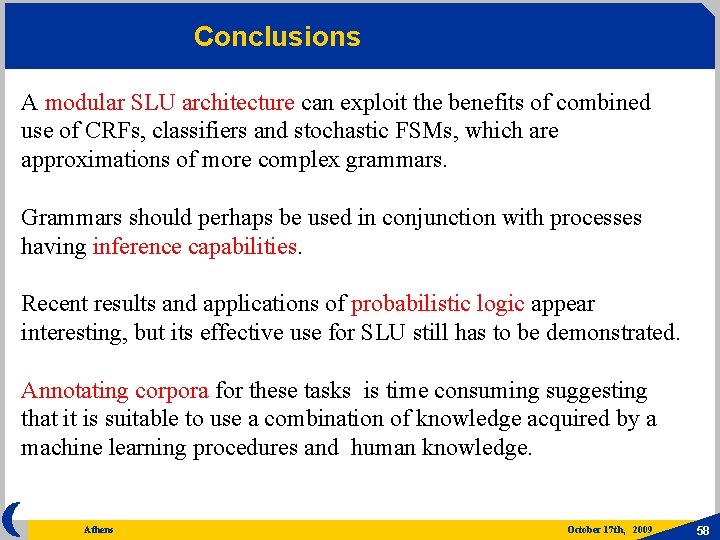

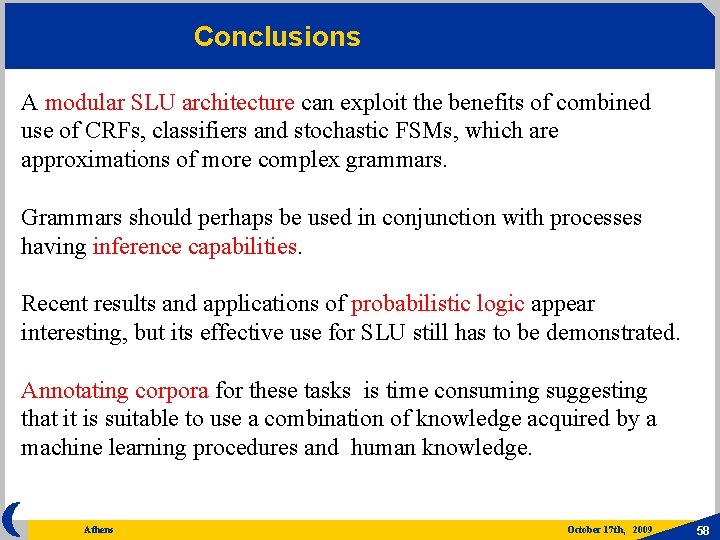

Conclusions. A modular SLU architecture can exploit the benefits of the combined use of CRFs, classifiers and stochastic FSMs, which are approximations of more complex grammars. Grammars should perhaps be used in conjunction with processes having inference capabilities. Recent results and applications of probabilistic logic appear interesting, but its effective use for SLU still has to be demonstrated. Annotating corpora for these tasks is time consuming, which suggests using a combination of knowledge acquired by machine learning procedures and human knowledge.

Conclusions. Robustness, incremental learning and portability are important and open issues. SLU is not only used in human-machine dialogs. Other applications are opinion analysis, indexing, summarization and retrieval. Some applications can tolerate a certain amount of ASR imprecision.

THANK YOU

Dialogue system requirements

- Negotiation ability: user satisfaction, new requests
- Context interpretation: anaphora, ellipses, disfluencies, synchronization
- Interaction flexibility: focus switch
- Cooperative reactions: completion, corrective, conditional, intentional answers
- Adequacy of response style

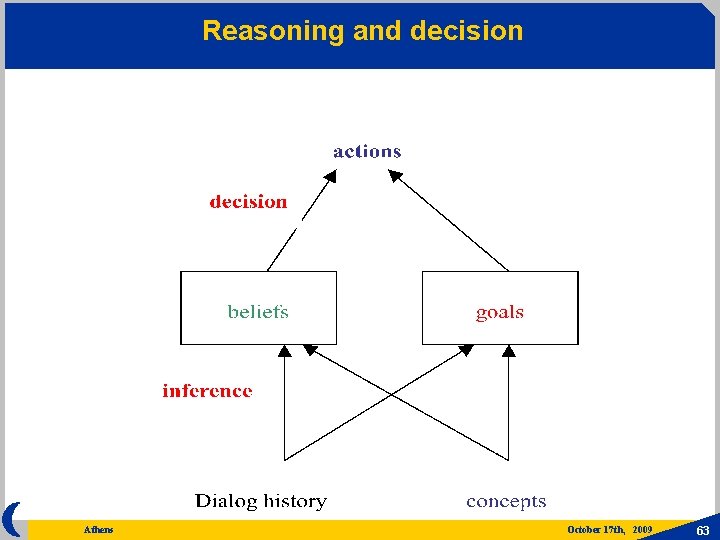

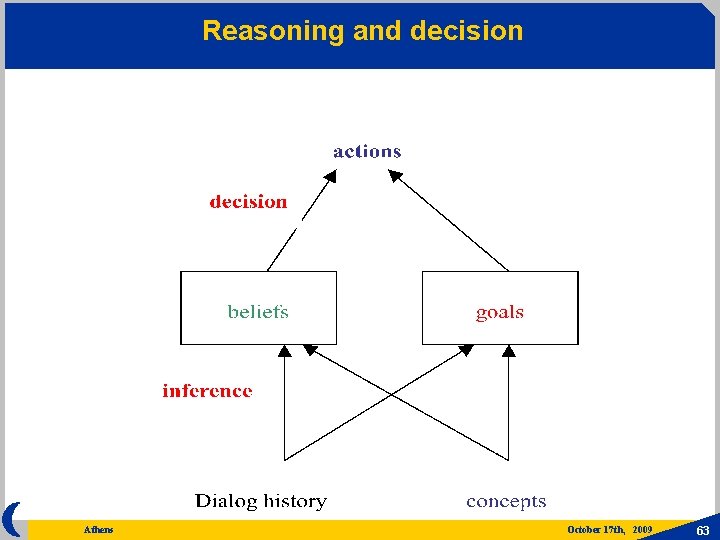

Communicative acts

- Cooperation inspires communication, which should be based on rationality.
- Communication is performed by agents which are in a mental state.
- An act depends on mental states and has the effect of modifying them.

Reasoning and decision

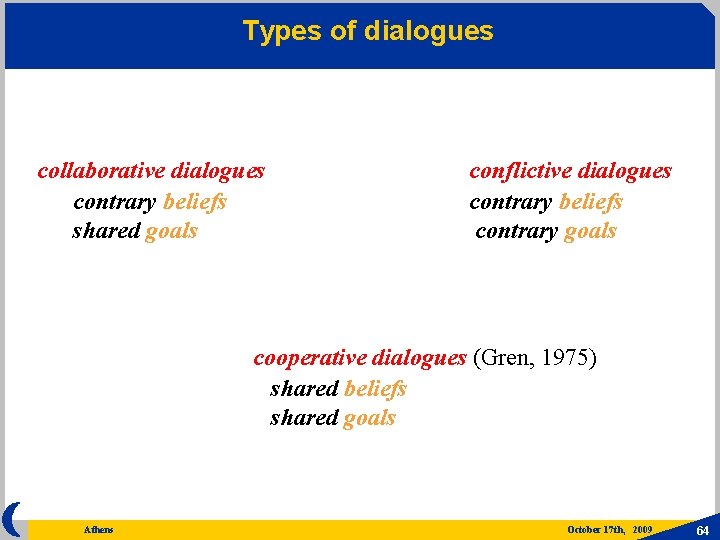

Types of dialogues

- collaborative dialogues: contrary beliefs, shared goals
- conflictive dialogues: contrary beliefs, contrary goals
- cooperative dialogues (Grice, 1975): shared beliefs, shared goals

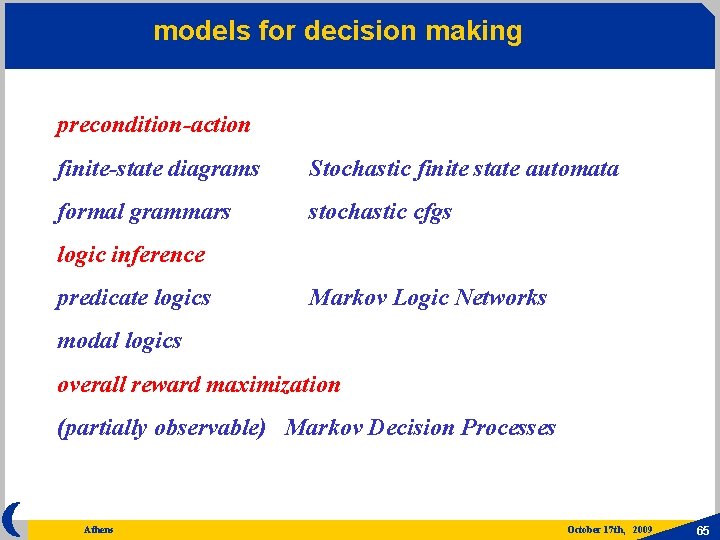

Models for decision making

- precondition-action: finite-state diagrams, stochastic finite state automata
- formal grammars: stochastic CFGs
- logic inference: predicate logics, Markov Logic Networks, modal logics
- overall reward maximization: (partially observable) Markov Decision Processes

State dependent or belief dependent decision (diagram). Components shown: user, SLU system (input Y_t, output u_t), belief estimator, dialogue next state estimator (with memory and S_{t-1}), and Dialogue Manager (output a_t). The action a_t depends either on the dialogue state or on the belief.

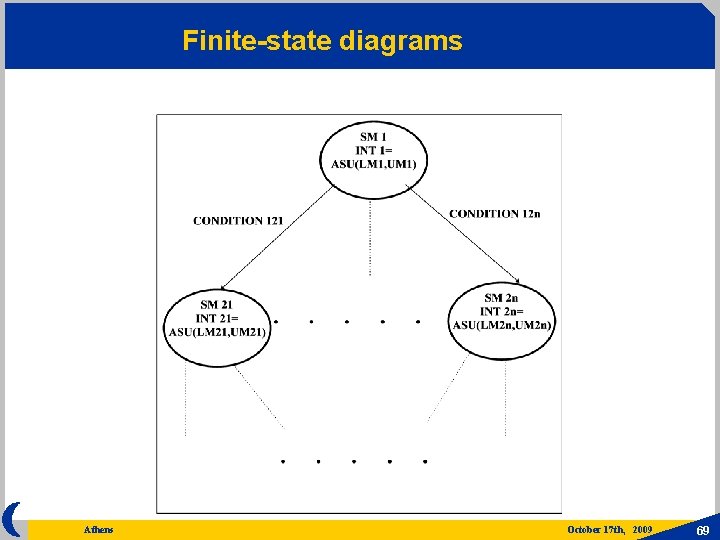

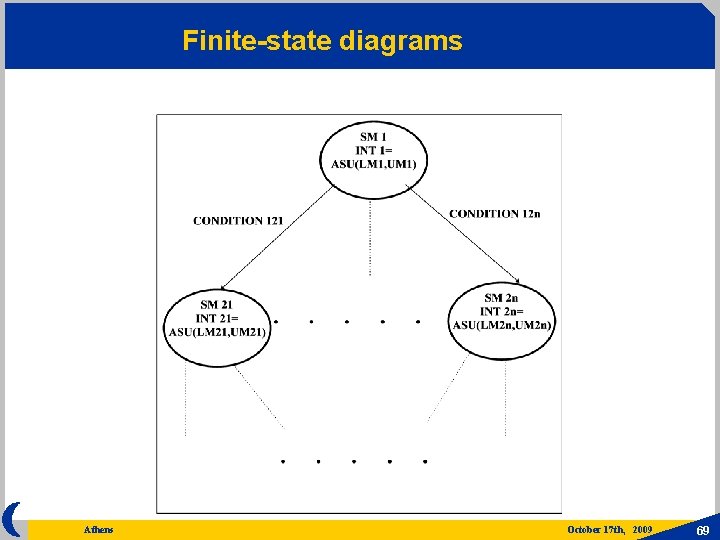

Sub-optimal sequential solution

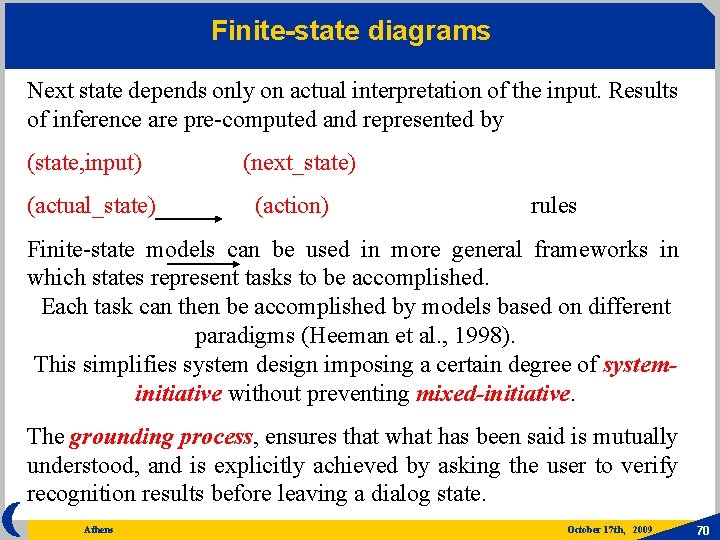

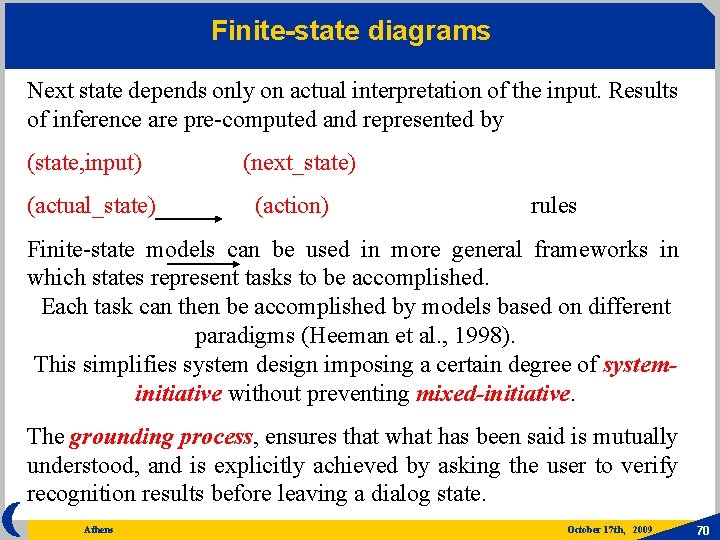

Finite-state diagrams. Structural approaches, based on finite-state models, assume that dialog coherence resides in its structure, based on descriptions of the observed sequencing of utterances, not on explanations of what is observed. Although effective for some practical systems, the approach is rigid and may severely limit user satisfaction. While conviviality is a major requirement for system design, an efficient implementation is an essential condition for achieving user satisfaction. There are specific tasks in dialog that can be achieved with simple and effective models based on finite-state diagrams.

Finite-state diagrams

Finite-state diagrams. The next state depends only on the actual interpretation of the input. Results of inference are pre-computed and represented by (state, input) → (next_state) and (actual_state) → (action) rules. Finite-state models can be used in more general frameworks in which states represent tasks to be accomplished. Each task can then be accomplished by models based on different paradigms (Heeman et al., 1998). This simplifies system design, imposing a certain degree of system initiative without preventing mixed initiative. The grounding process ensures that what has been said is mutually understood, and is explicitly achieved by asking the user to verify recognition results before leaving a dialog state.
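The two rule tables can be written down directly as lookup tables. In this sketch the states, inputs and prompts are invented; the confirmation state illustrates explicit grounding, where the user verifies the recognition result before the dialog state is left.

```python
# Hypothetical rule tables: (state, input) -> next_state and state -> action.
NEXT_STATE = {
    ("ask_service", "billing"): "confirm_billing",
    ("confirm_billing", "yes"): "route_billing",
    ("confirm_billing", "no"): "ask_service",
}
ACTION = {
    "ask_service": "prompt: which service do you need?",
    "confirm_billing": "prompt: you want billing, is that right?",
    "route_billing": "transfer to billing service",
}

def step(state, user_input):
    """Apply a (state, input) -> next_state rule, then look up the action."""
    next_state = NEXT_STATE.get((state, user_input), state)  # unknown input: stay
    return next_state, ACTION[next_state]

def run(inputs, state="ask_service"):
    """Feed a sequence of interpreted inputs and return the (state, action) trace."""
    trace = []
    for user_input in inputs:
        state, action = step(state, user_input)
        trace.append((state, action))
    return trace

trace = run(["billing", "yes"])
```

Because the next state depends only on the current state and input, the whole behaviour is fixed by the two tables, which is what makes the approach simple but rigid.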

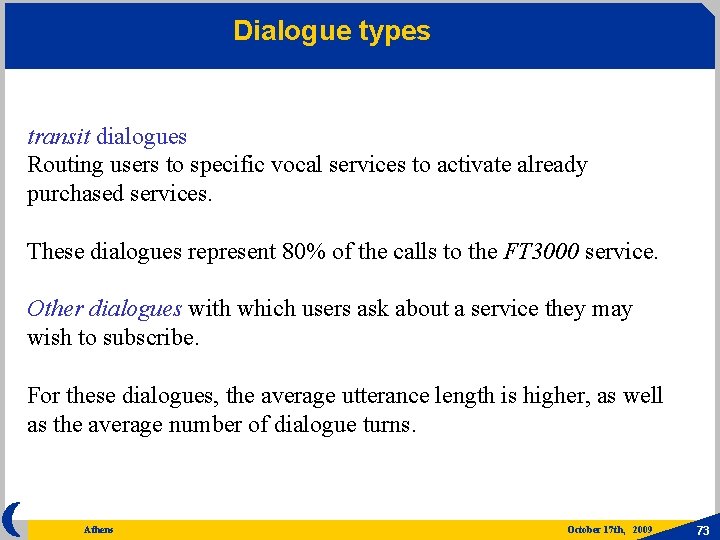

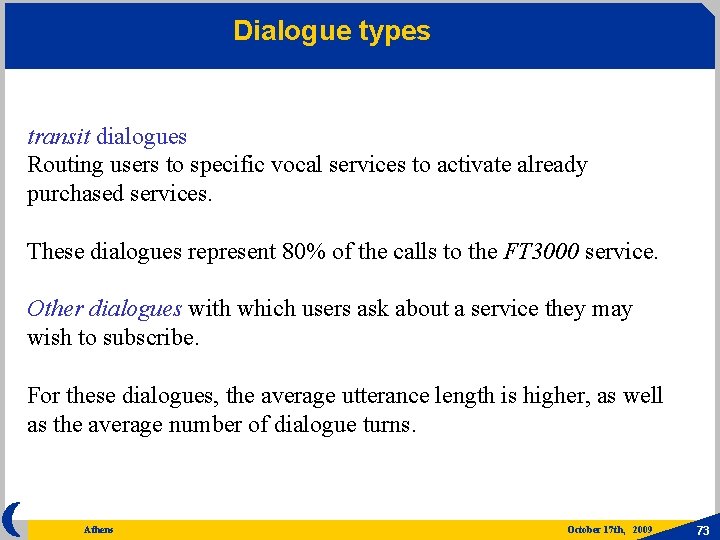

Example: the FT 3000 system

- Service
  - obtain information about FT services
    - purchase almost 30 different services
  - access account
    - check consumption, pay bills
    - call forwarding, voice messaging
- Deployed since October 2005
- Corpus collected daily
- Dealing with different kinds of speech
  - Speech / non-speech
  - Out-of-domain speech / in-domain speech
    - Comments from the users
  - Speech with valid content / invalid content
- Experienced vs. new users

FT 3000 Voice Agency service. Thousands of dialogues are automatically processed every day and the system logs are stored. Speakers are not professionals and are not biased by the acquisition protocols. The complexity of widely deployed services is necessarily limited in order to guarantee robustness with a high automation rate. The semantic model of such a deployed system is task-oriented.

Dialogue types

- Transit dialogues: routing users to specific vocal services to activate already purchased services. These dialogues represent 80% of the calls to the FT 3000 service.
- Other dialogues: users ask about a service they may wish to subscribe to. For these dialogues, the average utterance length is higher, as is the average number of dialogue turns.

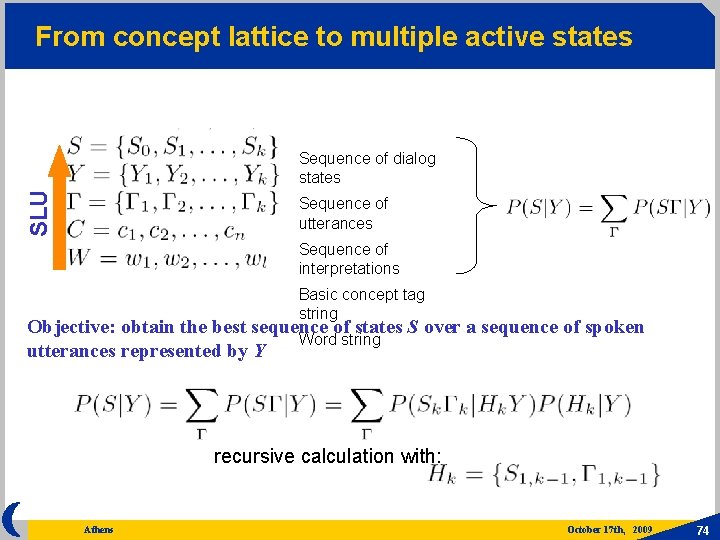

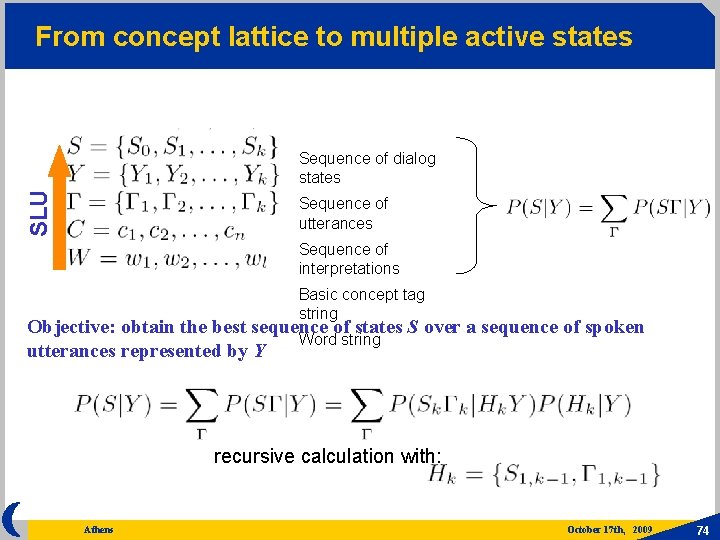

From concept lattice to multiple active states SLU. Objective: obtain the best sequence of states S over a sequence of spoken utterances represented by Y, using a recursive calculation. (Notation on the slide: sequence of dialog states, sequence of utterances, sequence of interpretations, basic concept tag string, word string.)

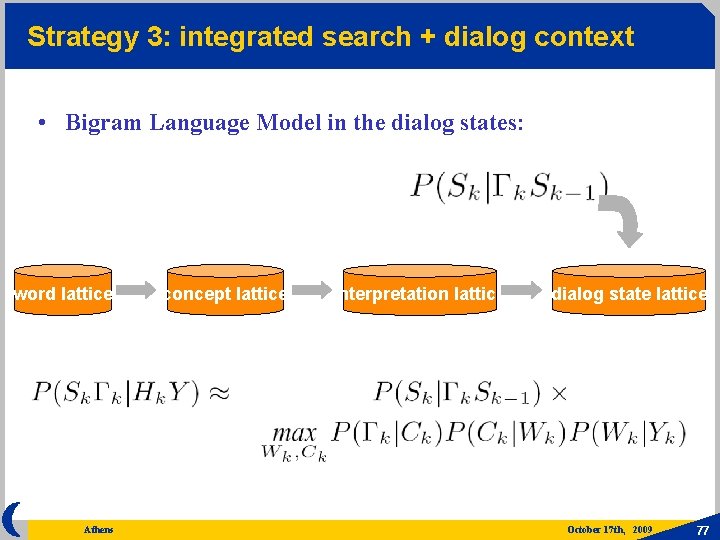

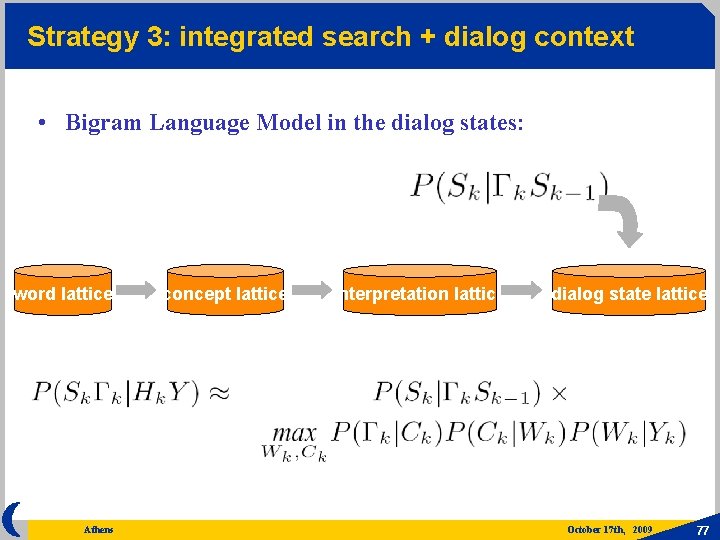

Strategy 1: sequential

- Best sequence of words
- Best sequence of concepts
- Best interpretation

The probability of a dialog state S at turn k is computed from the 1-best word string, the 1-best concept string and the 1-best interpretation.

Strategy 2: integrated search

- Best sequence of concepts + words + interpretation

For estimating the probability of a dialog state and an interpretation: joint search for the best sequence of words and concepts over the word lattice, the concept lattice and the interpretation lattice.

Strategy 3: integrated search + dialog context

- Bigram language model on the dialog states

The search uses the word lattice, the concept lattice, the interpretation lattice and the dialog state lattice.

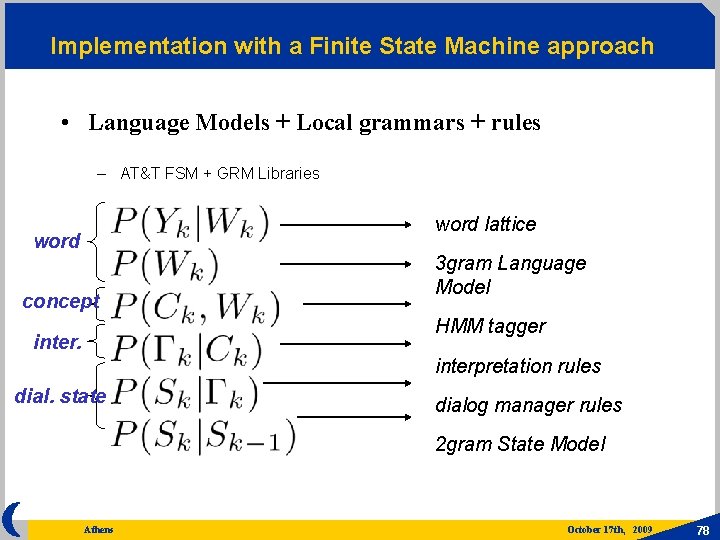

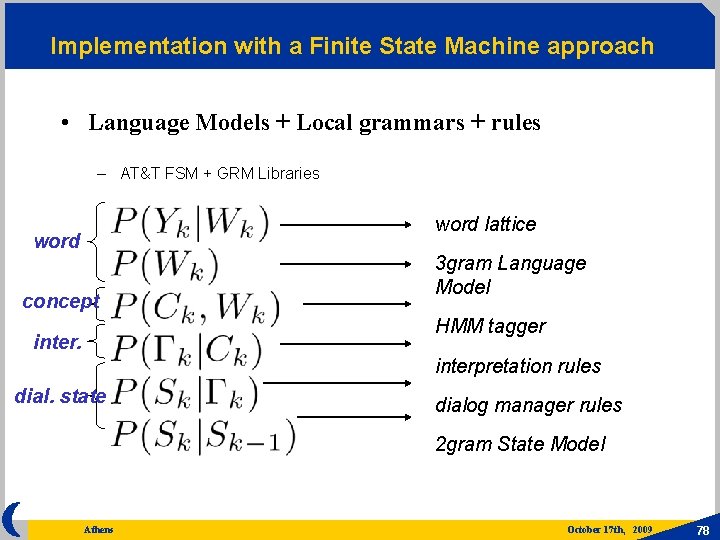

Implementation with a Finite State Machine approach

- Language models + local grammars + rules
  - AT&T FSM + GRM Libraries

Components shown (diagram): word lattice, word/concept 3-gram language model, HMM tagger, interpretation rules, dialog manager rules, 2-gram dialog state model.

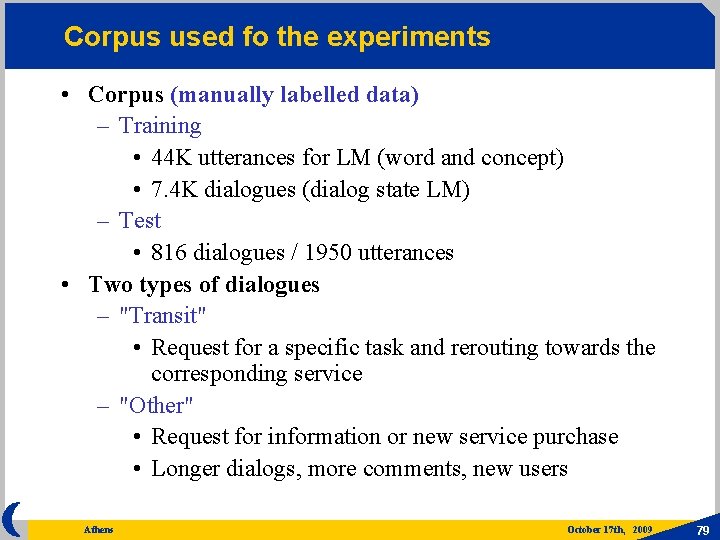

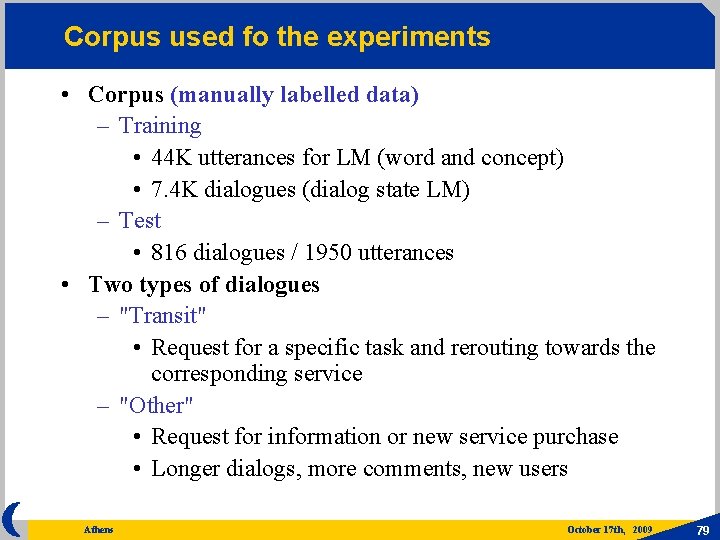

Corpus used for the experiments

- Corpus (manually labelled data)
  - Training
    - 44 K utterances for LM (word and concept)
    - 7.4 K dialogues (dialog state LM)
  - Test
    - 816 dialogues / 1950 utterances
- Two types of dialogues
  - "Transit"
    - Request for a specific task and rerouting towards the corresponding service
  - "Other"
    - Request for information or new service purchase
    - Longer dialogs, more comments, new users

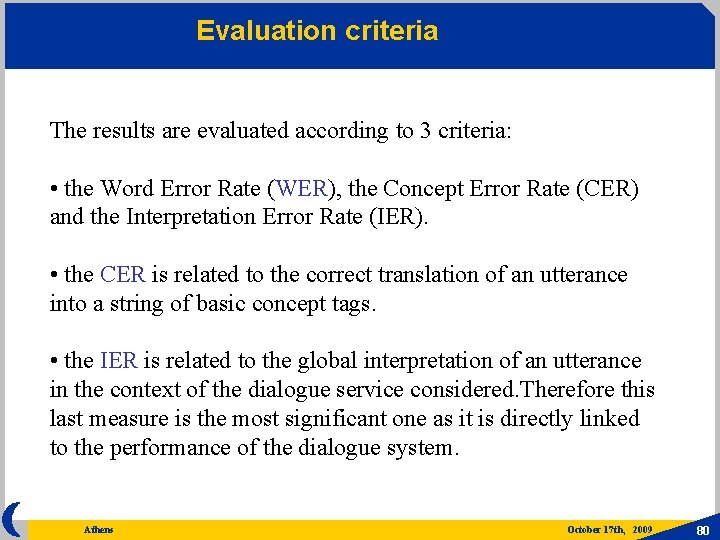

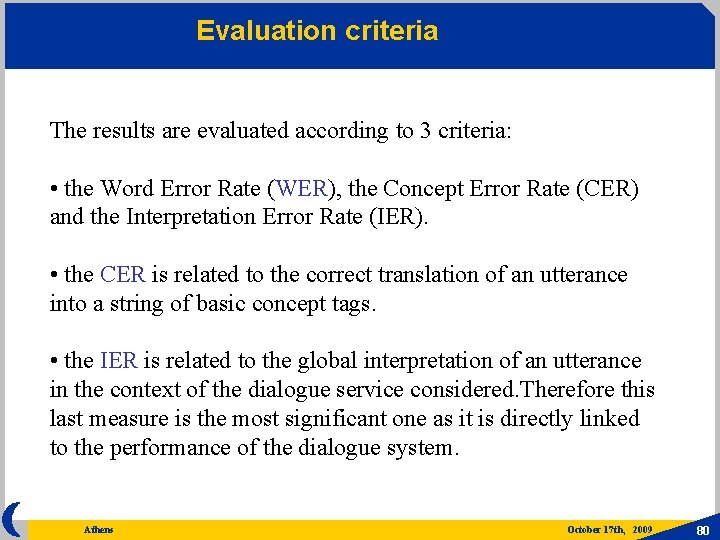

Evaluation criteria. The results are evaluated according to 3 criteria:

- the Word Error Rate (WER), the Concept Error Rate (CER) and the Interpretation Error Rate (IER).
- the CER is related to the correct translation of an utterance into a string of basic concept tags.
- the IER is related to the global interpretation of an utterance in the context of the dialogue service considered. This last measure is therefore the most significant one, as it is directly linked to the performance of the dialogue system.
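For reference, WER is conventionally computed as the edit distance (substitutions, insertions and deletions) between the reference and the hypothesis, divided by the reference length. The sketch below uses that standard definition; applying the same function to concept tag strings to obtain a CER is an assumption here, since the slides only describe the CER informally.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two token sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        cur = [i]
        for j, h in enumerate(hyp, 1):
            cur.append(min(
                prev[j] + 1,             # deletion of a reference token
                cur[j - 1] + 1,          # insertion of a hypothesis token
                prev[j - 1] + (r != h),  # match or substitution
            ))
        prev = cur
    return prev[-1]

def error_rate(ref, hyp):
    """Errors per reference token; can exceed 1.0 when there are many insertions."""
    return edit_distance(ref, hyp) / len(ref)

# Toy example: one substitution (de -> du) and one insertion (un).
wer = error_rate("autour de vingt euros".split(), "autour du vingt euros un".split())
# Same computation on concept tag strings (one deleted tag).
cer = error_rate(["PRICE", "PLACE"], ["PRICE"])
```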

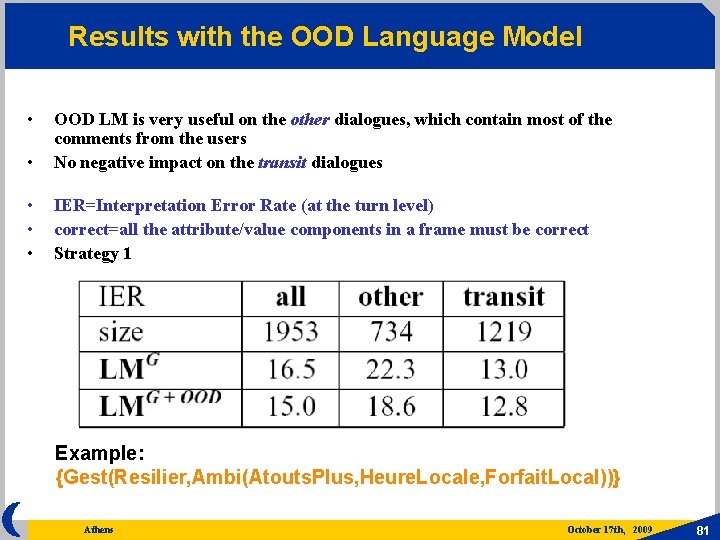

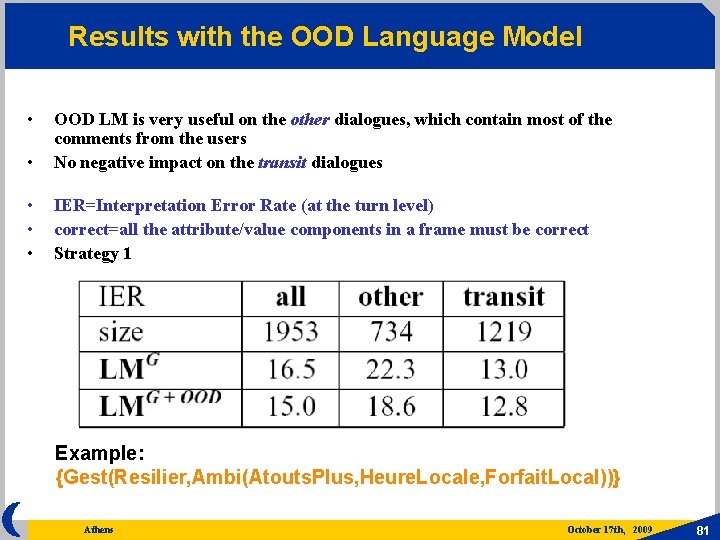

Results with the OOD Language Model

- The OOD LM is very useful on the other dialogues, which contain most of the comments from the users
- No negative impact on the transit dialogues
- IER = Interpretation Error Rate (at the turn level)
- correct = all the attribute/value components in a frame must be correct
- Strategy 1

Example: {Gest(Resilier, Ambi(Atouts. Plus, Heure. Locale, Forfait. Local))}

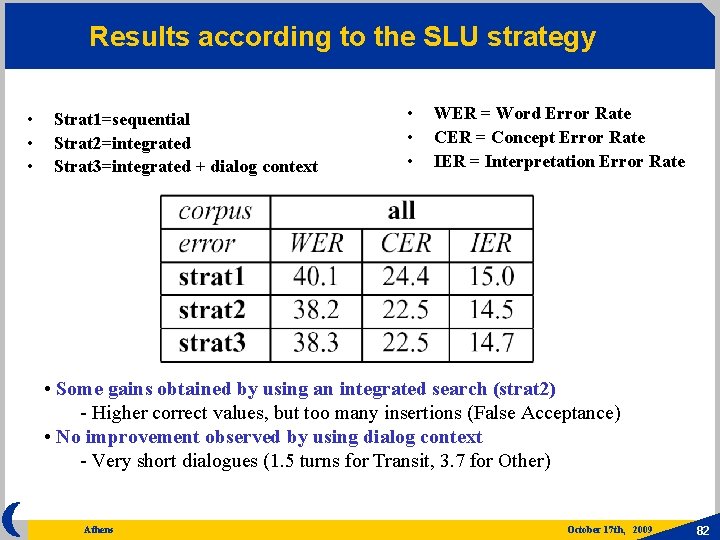

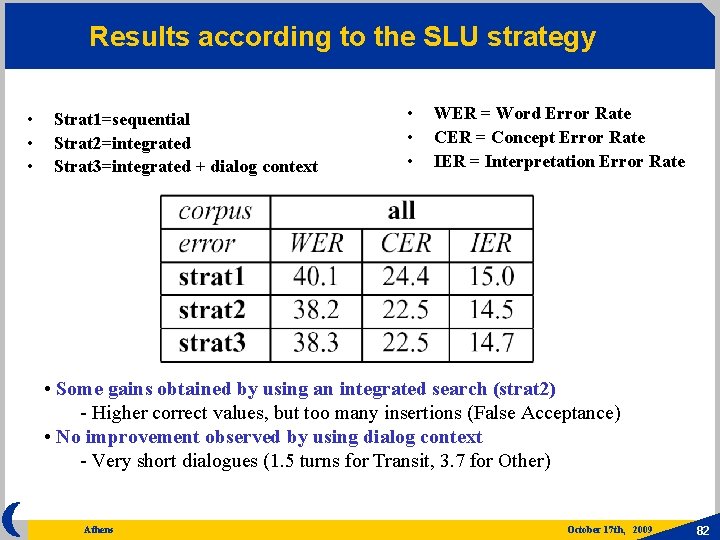

Results according to the SLU strategy

- Strat 1 = sequential
- Strat 2 = integrated
- Strat 3 = integrated + dialog context
- WER = Word Error Rate
- CER = Concept Error Rate
- IER = Interpretation Error Rate
- Some gains obtained by using an integrated search (strat 2)
  - Higher correct values, but too many insertions (False Acceptance)
- No improvement observed by using dialog context
  - Very short dialogues (1.5 turns for Transit, 3.7 for Other)

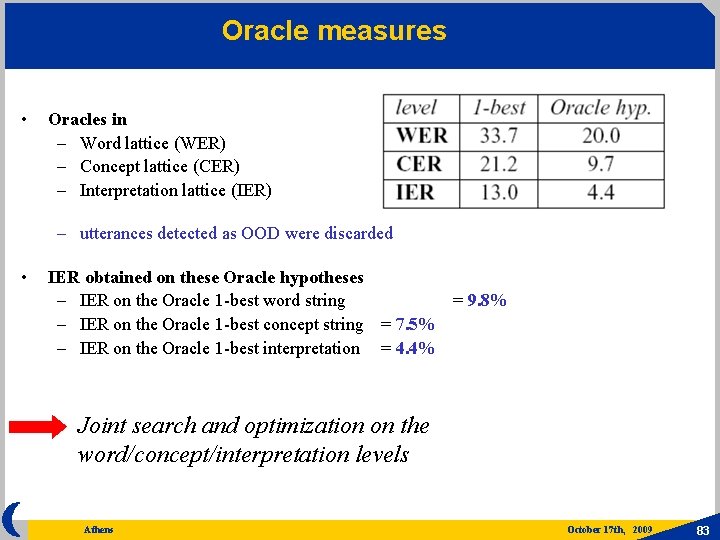

Oracle measures

- Oracles in
  - Word lattice (WER)
  - Concept lattice (CER)
  - Interpretation lattice (IER)
  - utterances detected as OOD were discarded
- IER obtained on these Oracle hypotheses
  - IER on the Oracle 1-best word string = 9.8%
  - IER on the Oracle 1-best concept string = 7.5%
  - IER on the Oracle 1-best interpretation = 4.4%

Joint search and optimization on the word/concept/interpretation levels.

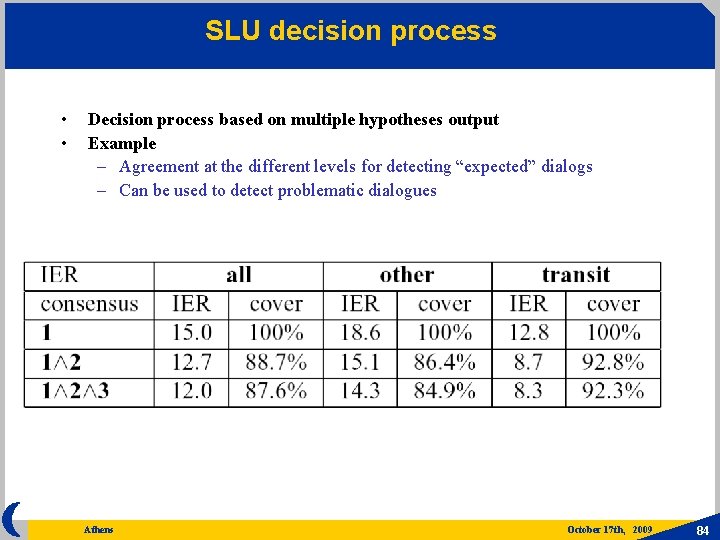

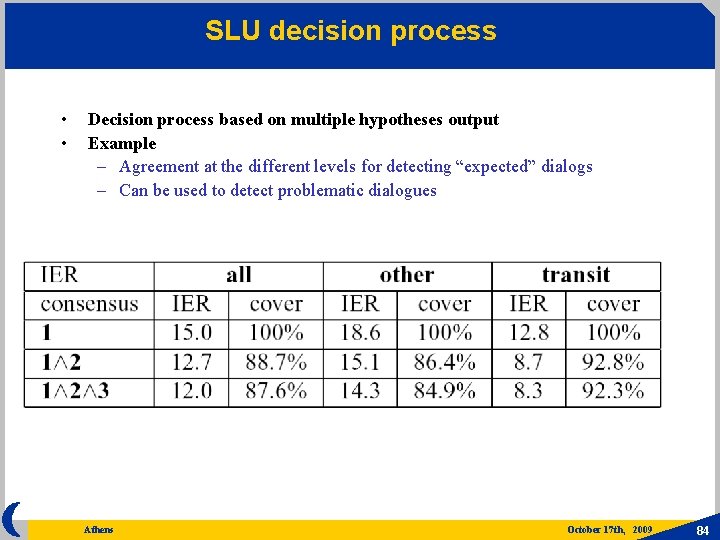

SLU decision process

- Decision process based on multiple hypotheses output
- Example
  - Agreement at the different levels for detecting "expected" dialogs
  - Can be used to detect problematic dialogues

Plan-based approaches. Communicating is acting (Austin, 1962). An utterance is also a realization of observable communicative actions: speech acts or dialog acts like inform, request, confirm, commit. Communicative actions can be planned and recognized on the basis of mental states. Dialog analysis is considered in the framework of explaining actions based on mental states, relying on general models of actions and mental attitudes.

Activities

- Identify the user goals or intentions (e.g. task goal, communicative goals such as adding information or corrections). A goal reasoner infers goals or intentions from interpretations or inferred dialog acts. It should respect an interaction model.
- Identify and update beliefs. Cooperation, rationality and other model properties are represented by formulae which are used by an inference engine. Result: dialogue state.
- Make decisions. Result: actions. Optimal (minimum risk, maximum reward) decision strategies.

Rational interaction to recognize goals and beliefs. Rational interaction is based on the assumption that an intelligent system has a communication ability, grounded on a general competence, that characterizes rational behavior (Sadek). A proposition is a belief of an agent if the agent considers that the proposition is true. Belief is the mental attitude whereby an agent has a model of the world. Reasoning involves belief reconstruction and revision. Uncertainty is a way of representing an approximate perception of the world: the agent does not believe that a proposition is certainly true, but considers it more likely than its negation.

Rational interaction. Formulae of the type B(i, p), U(i, p) and C(i, p) can be read respectively as: "i believes that p is true", "i is uncertain about the truth of p", and "i desires that p is currently true". The logical model for operator B accounts for interesting properties of a rational agent, such as consistency of beliefs and introspection capacity, formally characterized by the validity of logical schemata.

Rational interaction

- an agent cannot be uncertain of its mental attitudes
- an agent chooses the logical consequences of its choices
- an agent must choose the event courses it believes it is already in
- an agent cannot bring about a situation if it believes that it already holds
- agents are in perfect agreement with themselves about their own mental attitudes
- agents may also decide actions based on reasoning

Probability distribution of user goals. Recent systems accommodate ASR and interpretation errors by maintaining beliefs about what the user has said rather than accepting the output from the recogniser. Solutions:

- models that update a compressed representation of belief using the most likely last user utterance (Rudnicky, CMU)
- use the full set of possible user utterances to update a distribution over user goals (Young, Williams, Thompson, Cambridge Univ)

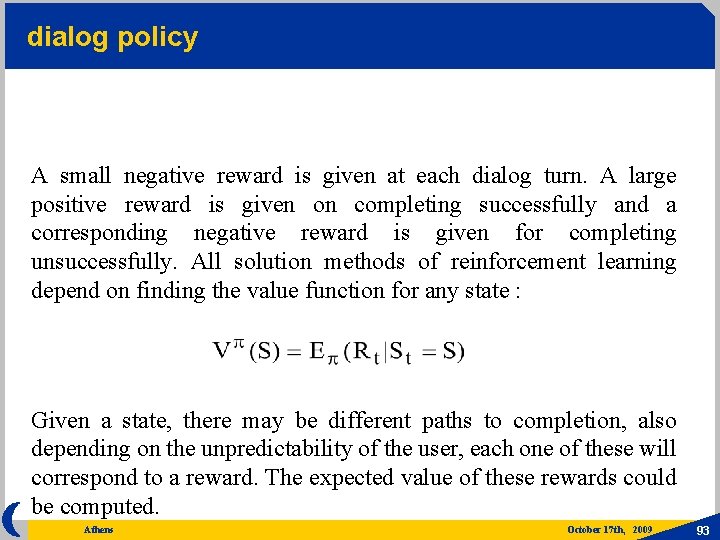

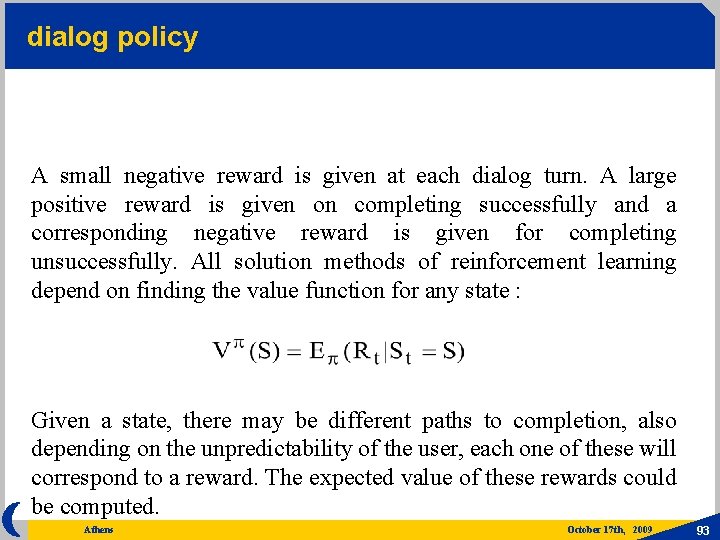

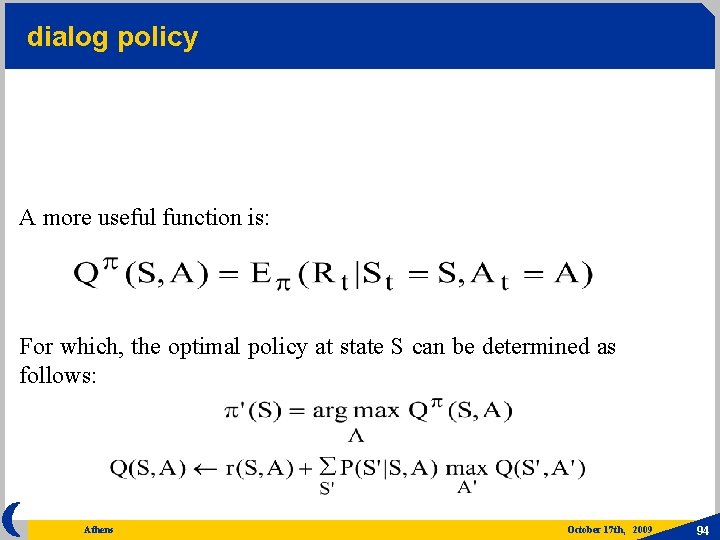

Dialog policy. The dialog policy chooses a system action based on the current dialog state. State transitions are characterized by a probability distribution over the next state, given the current state and the system action.

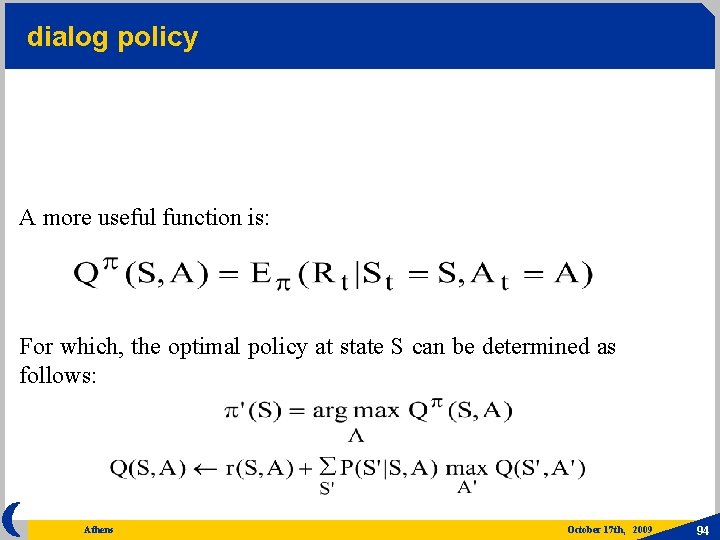

Dialog policy. The combination of action and state at time t determines the expected immediate reward. The goal is to find a policy which maximizes the total reward.

Dialog policy. A small negative reward is given at each dialog turn. A large positive reward is given for completing successfully, and a corresponding negative reward is given for completing unsuccessfully. All solution methods of reinforcement learning depend on finding the value function for any state. Given a state, there may be different paths to completion, also depending on the unpredictability of the user, and each of these paths corresponds to a reward. The expected value of these rewards can be computed.

Dialog policy. A more useful function is the value of taking a given action in a given state. From it, the optimal policy at state S can be determined by choosing the action with the highest value.
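The quantities named on the dialog policy slides can be written in standard Markov Decision Process notation. The formulas below are the usual textbook forms and are given as an assumed reconstruction; the symbols $\pi$, $r$, $V$, $Q$ and the undiscounted sum are choices made here and may differ from the notation of the original slides.

$$a_t = \pi(s_t), \qquad s_{t+1} \sim P(s_{t+1} \mid s_t, a_t), \qquad r_t = r(s_t, a_t)$$

$$R = \sum_{t} r(s_t, a_t), \qquad V^{\pi}(s) = E\Big[\sum_{k \ge t} r(s_k, a_k) \,\Big|\, s_t = s, \pi\Big]$$

$$Q^{\pi}(s, a) = r(s, a) + \sum_{s'} P(s' \mid s, a)\, V^{\pi}(s'), \qquad \pi^{*}(s) = \arg\max_{a} Q^{*}(s, a)$$

Here $V^{\pi}(s)$ is the expected total reward from state $s$ under policy $\pi$, and $Q^{*}$ is the action value function of the optimal policy.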

Partially Observable MDP. In most practical spoken dialog systems, the state space and the set of possible user dialogue acts are very large. As a result, belief monitoring is computationally very expensive and direct policy optimisation is intractable. The user belief cannot be observed and must be inferred. Thus a dialog system must base its strategy on incomplete data and has to use a Partially Observable MDP. Dialog states are summarized in system beliefs (a manageable finite set). System beliefs are obtained by using an observation vector O which encapsulates the evidence needed to update and maintain them.
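Belief maintenance can be illustrated with the generic POMDP belief update, in which the new belief in a state is proportional to the observation likelihood times the predicted probability of that state. The sketch assumes a tiny discrete state space of two user goals that never change; the state names, the `ask` action and all probabilities are hypothetical, and real systems need the approximations discussed on the following slides.

```python
def update_belief(belief, action, observation, trans, obs):
    """b'(s') is proportional to P(o | s', a) * sum_s P(s' | s, a) * b(s)."""
    new = {}
    for s2 in belief:
        predicted = sum(trans[(s, action)].get(s2, 0.0) * p for s, p in belief.items())
        new[s2] = obs[(s2, action)].get(observation, 0.0) * predicted
    total = sum(new.values())
    if total == 0:
        raise ValueError("observation has zero probability under the model")
    return {s: p / total for s, p in new.items()}

# Hypothetical model: the user goal does not change during the dialog.
trans = {("hotel", "ask"): {"hotel": 1.0}, ("restaurant", "ask"): {"restaurant": 1.0}}
# P(observation | state, action): a noisy SLU output.
obs = {
    ("hotel", "ask"): {"heard_hotel": 0.8, "heard_restaurant": 0.2},
    ("restaurant", "ask"): {"heard_hotel": 0.3, "heard_restaurant": 0.7},
}

b0 = {"hotel": 0.5, "restaurant": 0.5}
b1 = update_belief(b0, "ask", "heard_hotel", trans, obs)
b2 = update_belief(b1, "ask", "heard_hotel", trans, obs)
```

Repeated consistent observations sharpen the belief, which is how such a system can recover from a single recognition error.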

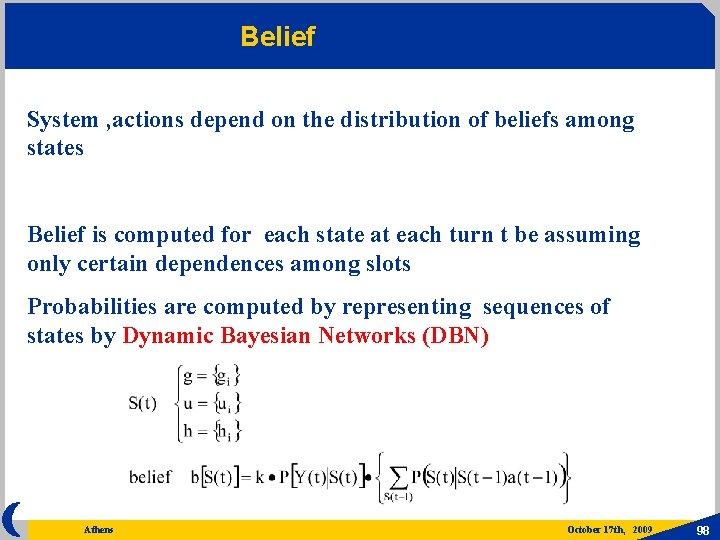

POMDP. Modelling a dialog system as a POMDP involves maintaining a probability distribution over all possible machine states. When performing policy optimisation, however, the subsequent machine action is likely to focus on just the most likely states. This suggests maintaining two coupled state spaces: the full space, called the master state space, and a much simpler space, called the summary state space. The summary state space consists of the top N user goal states from the master space.

Hidden Information State (HIS). The HIS model groups user goals into partitions which are indistinguishable given the past user utterances. In order to reduce complexity, a Bayesian Network is used to represent the state of the POMDP, where the network is factored according to the relations of the system relational database (Thompson and Young, ICASSP 2008). State S: (user goal g, user act u, history summary h). States are hidden and have to be inferred.

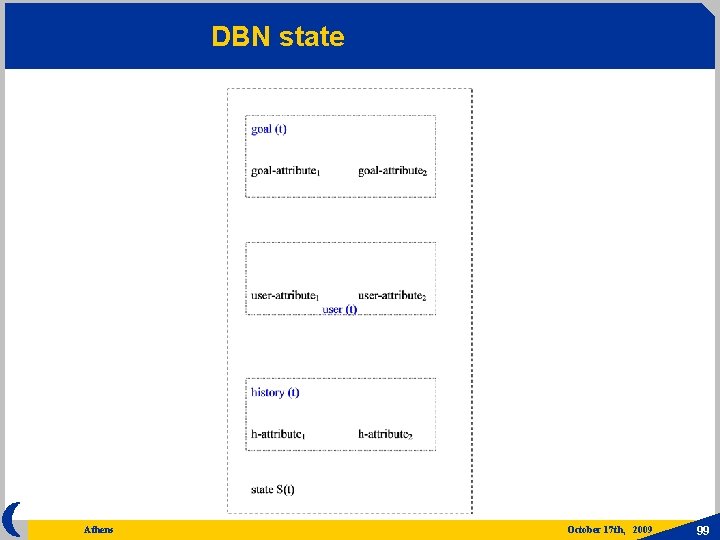

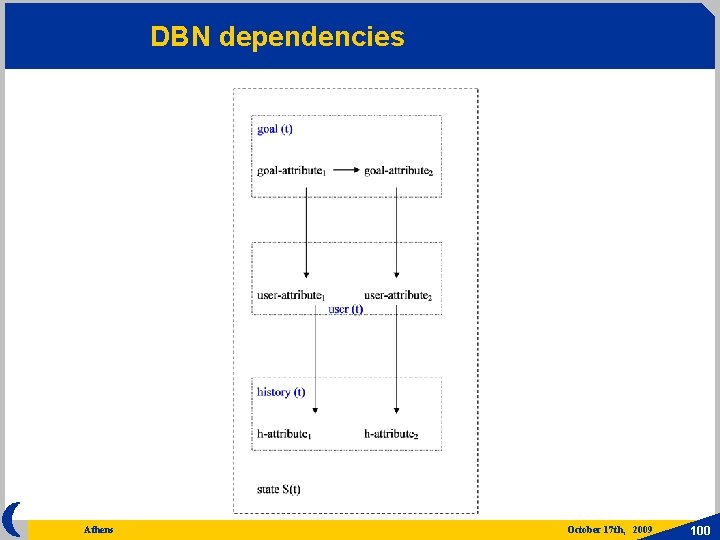

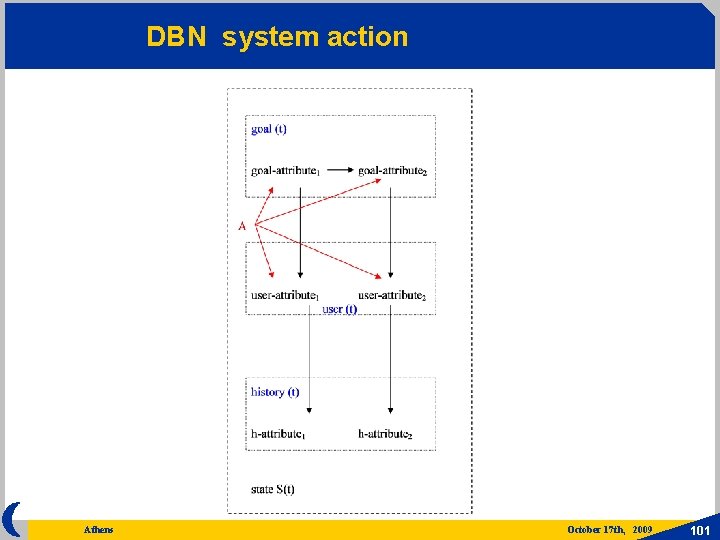

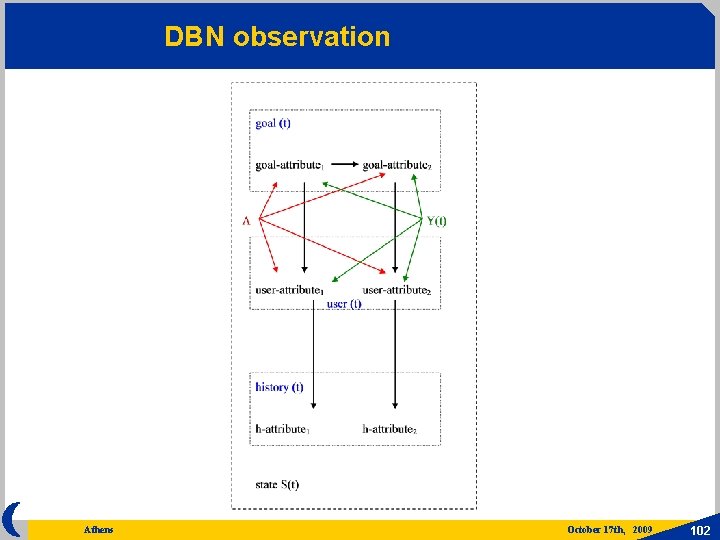

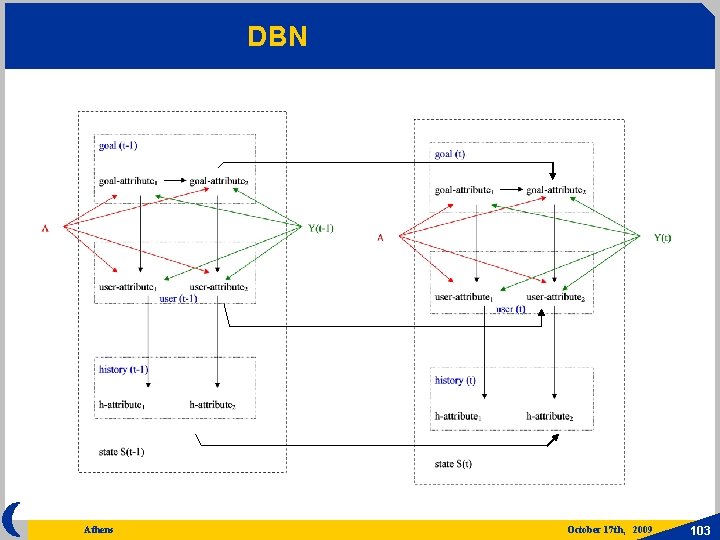

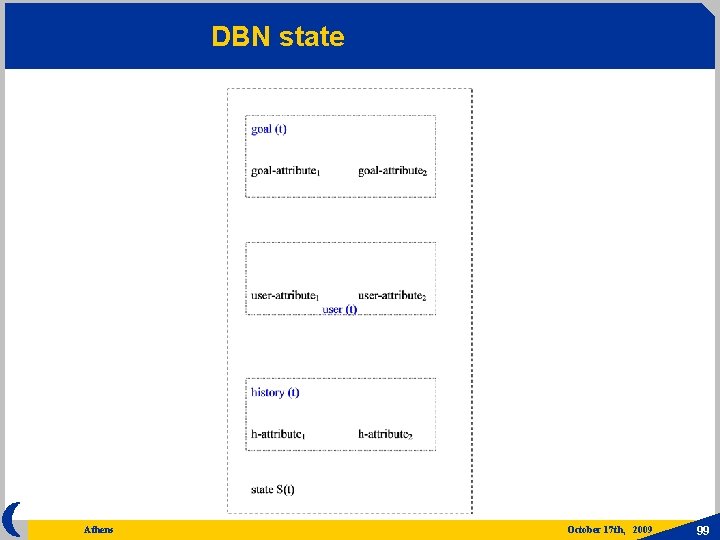

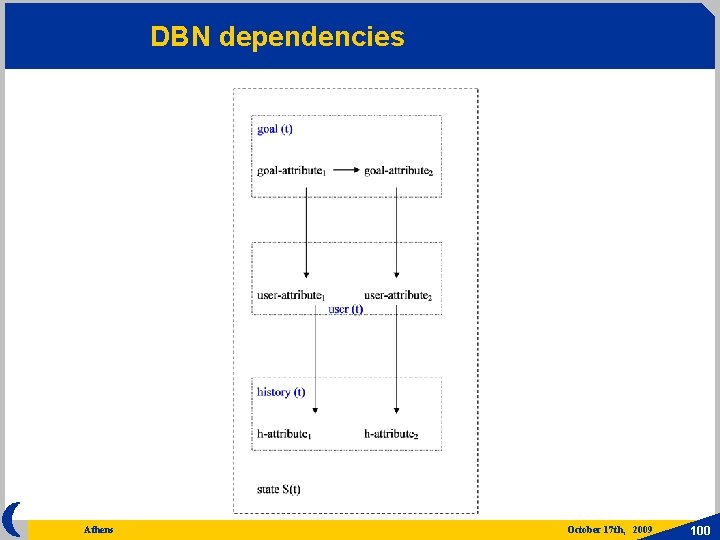

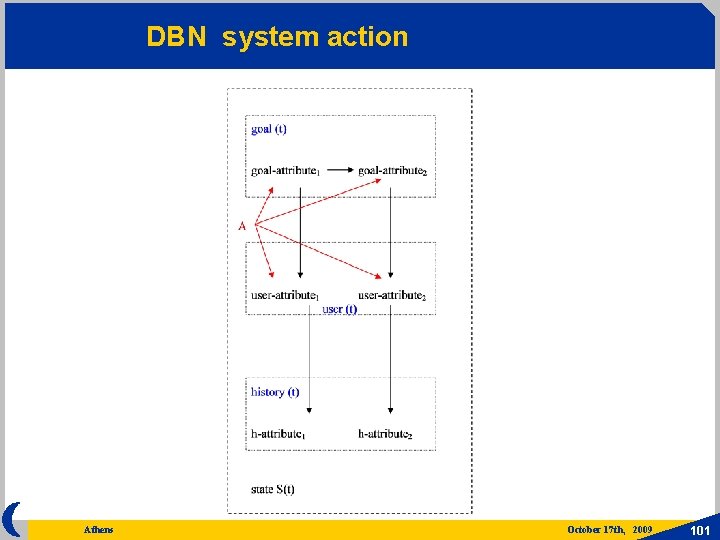

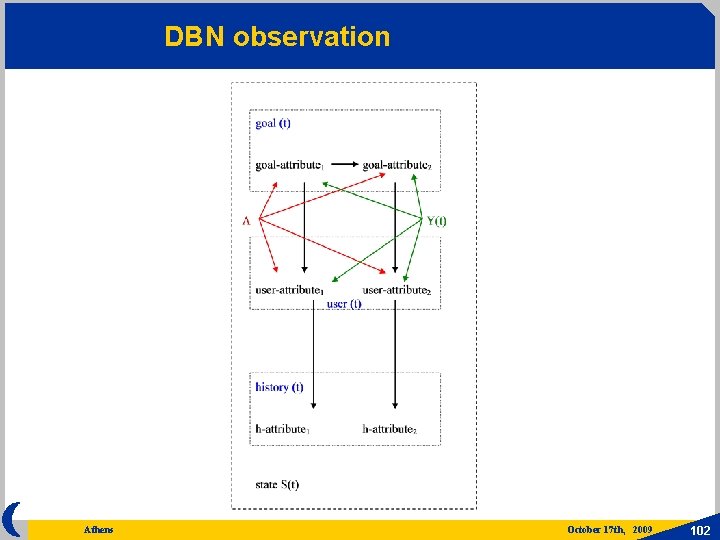

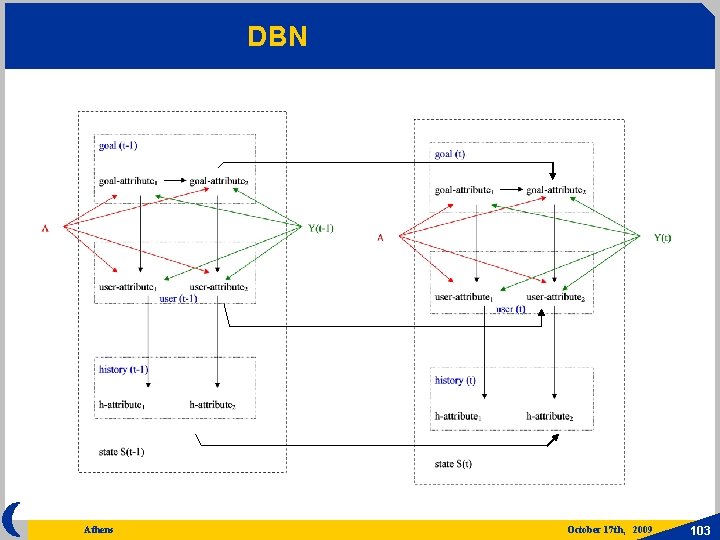

Belief. System actions depend on the distribution of beliefs among states. Belief is computed for each state at each turn t by assuming only certain dependences among slots. Probabilities are computed by representing sequences of states by Dynamic Bayesian Networks (DBN).

DBN state

DBN dependencies

DBN system action

DBN observation

DBN

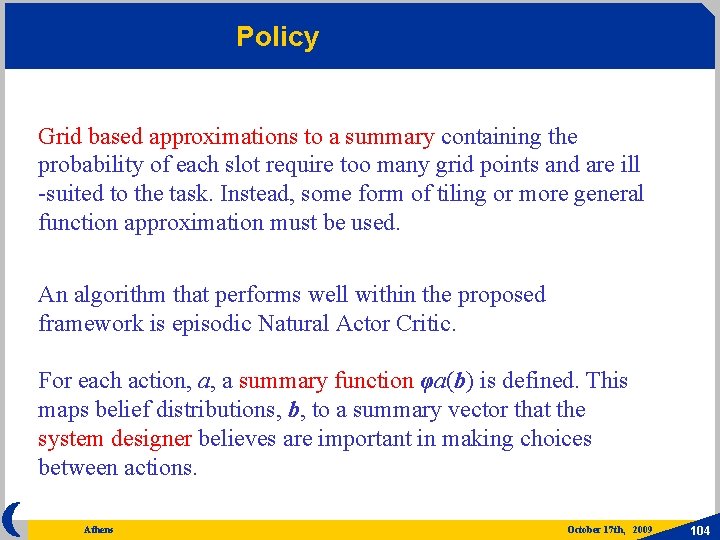

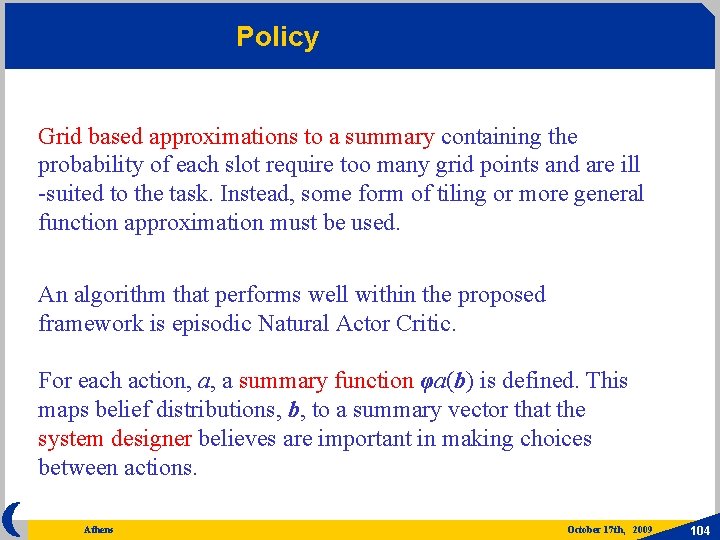

Policy. Grid-based approximations to a summary containing the probability of each slot require too many grid points and are ill-suited to the task. Instead, some form of tiling or more general function approximation must be used. An algorithm that performs well within the proposed framework is episodic Natural Actor Critic. For each action a, a summary function φa(b) is defined. This maps belief distributions b to a vector of summary features that the system designer believes are important in making choices between actions.

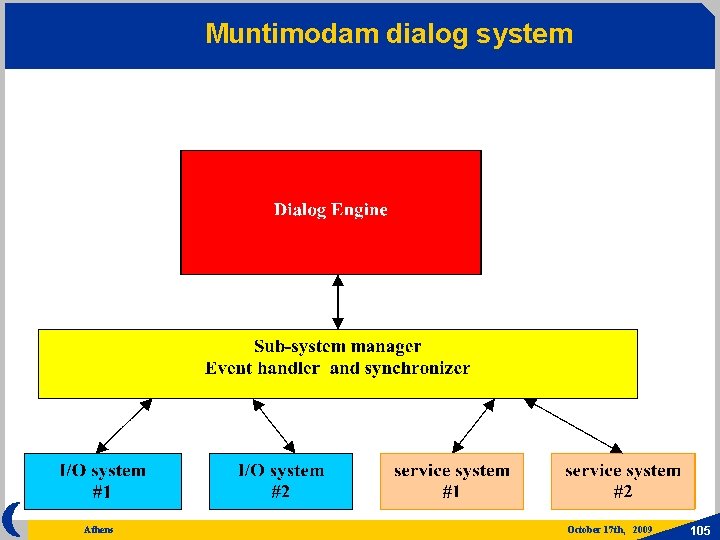

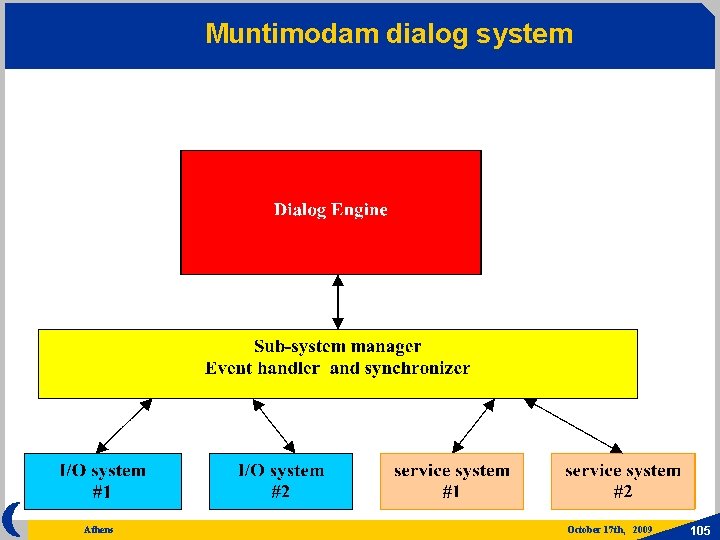

Multimodal dialog system

THANK YOU