Spoken Dialogue Systems: A Tutorial, Gautam Varma Mantena

- Slides: 46

Spoken Dialogue Systems: A Tutorial

Gautam Varma Mantena, Dr. Kishore Prahallad

Speech and Vision Lab, International Institute of Information Technology, Hyderabad

Introduction

- Interface between a human and a machine for information access.
- Some applications for information access:
  - Search engines. Examples: Google Search, Bing, etc.
  - IVR applications. Example: most customer care services.
  - Dialogue systems. Example: Google chat bots (guru@google.com).
- Dialogue systems:
  - Dialogue is a much freer mode of communication.
  - Since speech is the natural mode of communication, there is a need to build systems that communicate with users via speech.

Introduction (contd.)

- Modes of communication in a dialogue system:
  - Text
  - Speech
  - Multimodal features such as gestures, touch screens, etc.

Dialogue

- How is spoken dialogue different from other modes of communication?
  - Input: an input utterance can contain disfluencies (such as ‘uh’ and ‘hmm’), word repetitions, etc.
  - Barge-ins: the user interrupts the system before it has finished speaking.
  - Turn taking: the system should know when to speak (i.e., when to take control of the conversation).
  - Prosodic information.
  - Grounding with the user (establishing that both parties share the same understanding).

Applications of Spoken Dialogue Systems

- ARISE – Automatic Railway Information System for Europe
- RoomLine – Conference room scheduling and reservation
- Let’s Go – Bus information system
- TeamTalk – Command and control interface to a team of robots
- Communicator – Air travel planning
- Jupiter – Automated weather service
- MIS – Mandi Information System
- ...and many more

Characteristics of a Spoken Dialogue System

- Ability to understand the user's goal and to reach an appropriate and satisfactory solution.
- Ability to carry out sub-dialogues to achieve subgoals.
- Ability to pass control from one sub-dialogue to another.
- Ability to vary the dialogue initiative from system initiative to user initiative.
- Use of a user model to anticipate the user's utterances and act accordingly.
- Directing the user towards task completion.

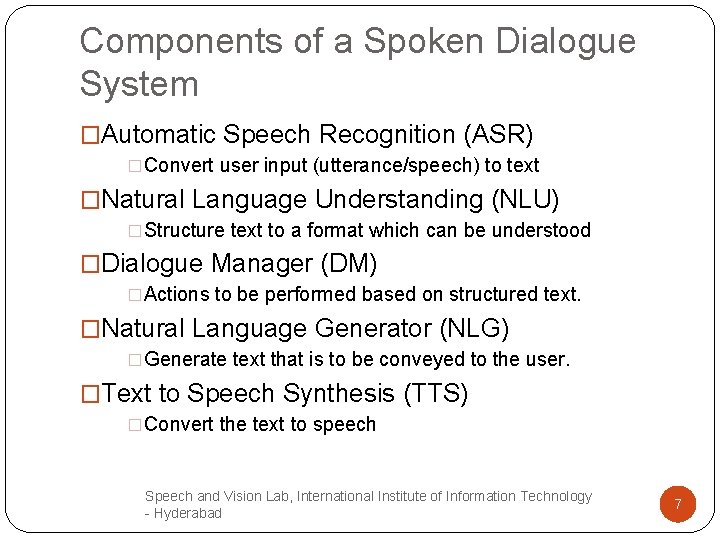

Components of a Spoken Dialogue System

- Automatic Speech Recognition (ASR)
  - Converts the user input (utterance/speech) to text.
- Natural Language Understanding (NLU)
  - Converts the text into a structured format that the system can understand.
- Dialogue Manager (DM)
  - Decides the actions to be performed based on the structured input.
- Natural Language Generator (NLG)
  - Generates the text that is to be conveyed to the user.
- Text to Speech Synthesis (TTS)
  - Converts the text to speech.
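The hand-off between these five components can be sketched in Python. This is a minimal sketch, not the MIS implementation. Every stage is a hypothetical stub: `asr`, `nlu`, `dialogue_manager`, `nlg`, `tts` and the toy `COMMODITIES` vocabulary are assumptions. The ASR and TTS stubs work on strings instead of audio, so only the data flow is shown.

```python
from typing import Dict

# Hypothetical toy vocabulary used by the stub NLU.
COMMODITIES = {"orange", "rice", "tomato"}


def asr(audio: str) -> str:
    """Stub ASR: treat the input 'audio' as an already-transcribed utterance."""
    return audio.lower().strip()


def nlu(text: str) -> Dict[str, str]:
    """Stub NLU: structure the text into a slot dictionary by keyword lookup."""
    frame: Dict[str, str] = {}
    for word in text.split():
        if word in COMMODITIES:
            frame["commodity"] = word
    return frame


def dialogue_manager(frame: Dict[str, str], state: Dict[str, str]) -> Dict[str, str]:
    """Merge new information into the dialogue state and choose the next action."""
    state.update(frame)
    if "commodity" not in state:
        return {"act": "request", "slot": "commodity"}
    return {"act": "inform", "commodity": state["commodity"]}


def nlg(action: Dict[str, str]) -> str:
    """Template-based NLG: turn the chosen action into text."""
    if action["act"] == "request":
        return f"What is the name of the {action['slot']}?"
    return f"Fetching prices for {action['commodity']}."


def tts(text: str) -> str:
    """Stub TTS: mark the text that would be synthesized."""
    return f"<speech>{text}</speech>"


def run_turn(audio: str, state: Dict[str, str]) -> str:
    """One user turn through ASR -> NLU -> DM -> NLG -> TTS."""
    return tts(nlg(dialogue_manager(nlu(asr(audio)), state)))
```

The dialogue state is kept outside the pipeline and passed into each turn. This lets the DM act on information gathered in earlier turns.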

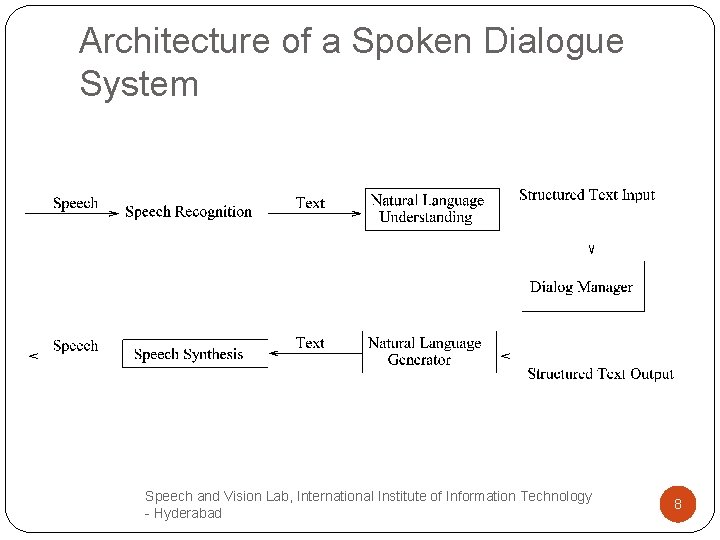

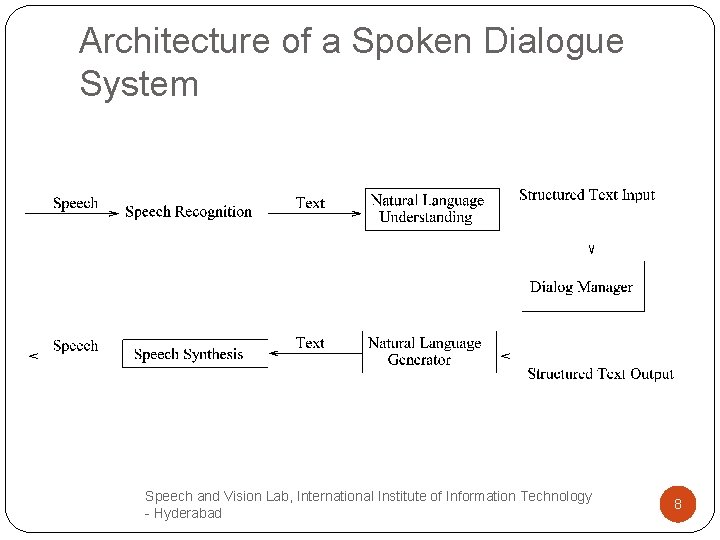

Architecture of a Spoken Dialogue System

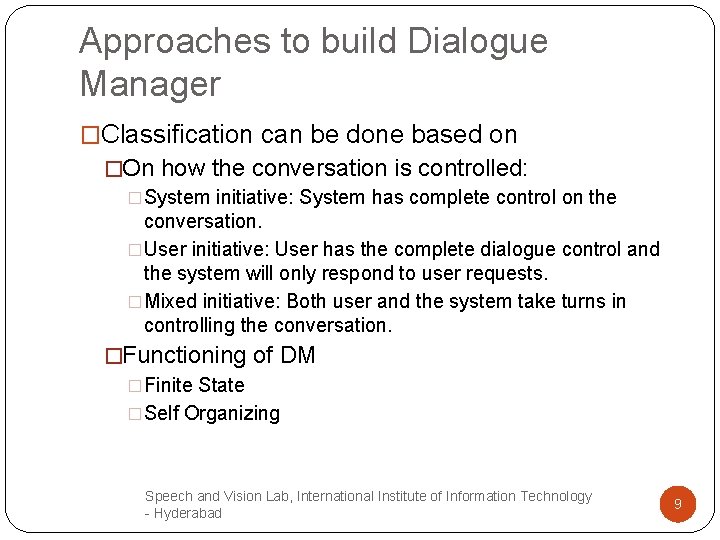

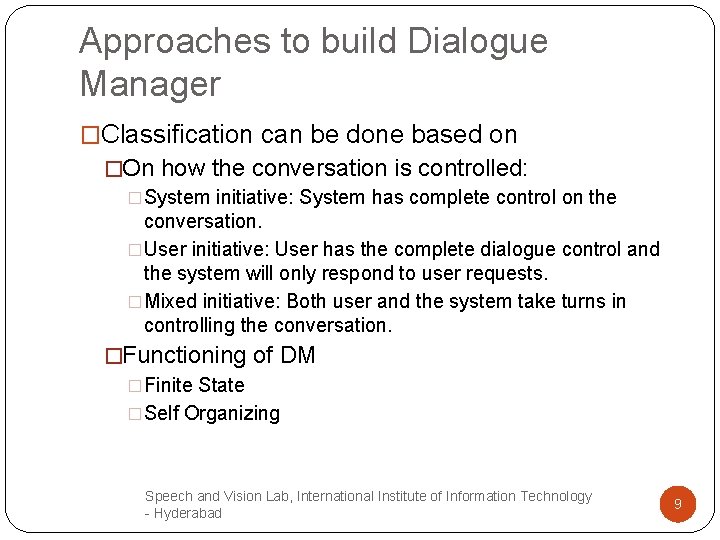

Approaches to Building a Dialogue Manager

- Dialogue managers can be classified based on:
  - How the conversation is controlled:
    - System initiative: the system has complete control over the conversation.
    - User initiative: the user has complete control over the dialogue, and the system only responds to user requests.
    - Mixed initiative: both the user and the system take turns in controlling the conversation.
  - How the DM functions:
    - Finite state
    - Self-organizing

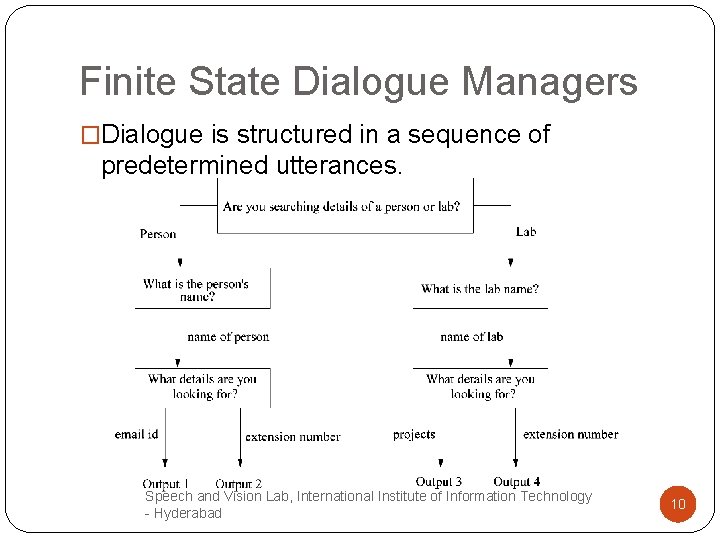

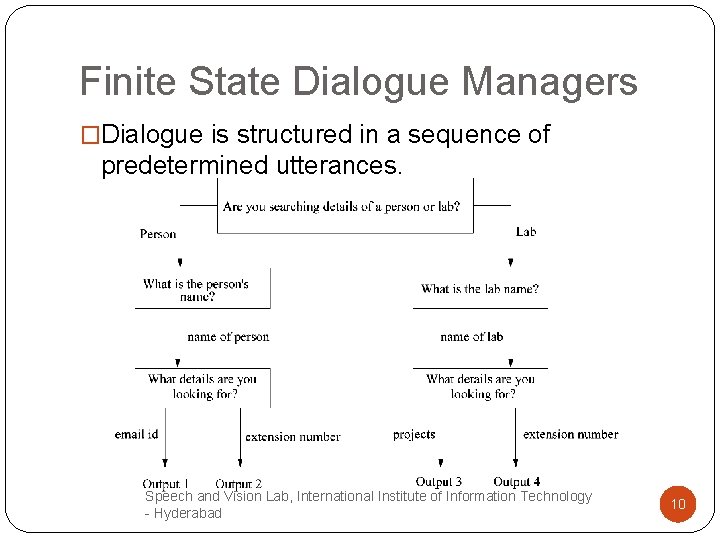

Finite State Dialogue Managers

- The dialogue is structured as a sequence of predetermined utterances.

Finite State Dialogue Managers (contd.)

- The user is expected to answer only the system's queries. Providing more information than was asked for is redundant, because the system does not use it.
- FS-DM systems are system initiative, as they restrict the user's responses.
- FS-DM systems are very robust, as the system always knows which state it is in and what the user's possible responses are.
- Useful when dealing with well-structured tasks such as small enquiry systems, questionnaires, etc.
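A finite-state DM can be sketched as a fixed state table. Each state has one prompt, one slot filled by the answer, and one predetermined successor. This is a minimal sketch under assumptions: the state names, prompts and the scripted-answer driver `run_fsm` are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical state table: state -> (prompt, slot filled by the answer, next state).
STATES: Dict[str, Tuple[str, str, str]] = {
    "ask_commodity": ("What is the name of the commodity?", "commodity", "ask_district"),
    "ask_district": ("What is the district name?", "district", "ask_market"),
    "ask_market": ("What is the market name?", "market", "done"),
}


def run_fsm(answers: List[str]) -> Tuple[List[Tuple[str, str]], Dict[str, str]]:
    """Walk the fixed dialogue path, consuming one scripted user answer per state."""
    state, slots, transcript = "ask_commodity", {}, []
    replies = iter(answers)
    while state != "done":
        prompt, slot, next_state = STATES[state]
        transcript.append(("System", prompt))
        reply = next(replies)
        transcript.append(("User", reply))
        slots[slot] = reply   # the answer fills only the slot that was asked about
        state = next_state    # the successor is fixed in advance
    return transcript, slots
```

The next state never depends on the content of the answer. This is why the system always knows where it is, and also why it cannot use volunteered extra information.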

Self-Organizing Models

- The dialogue path is not predetermined.
- The dialogue path evolves along with the user's responses to the system.
- Mixed initiative.
- Provide a much freer form of communication, as the user is not restricted.
- Types of self-organizing models:
  - Frame based
  - Agent based
  - Information state based

Frame Based Dialogue Manager

- Frame (or form) based models function like a form-filling application: they keep track of the information already present and the information still to be acquired.
- At every instant, the system chooses the question to be asked; the dialogue path is not predetermined.
- The state of the DM is defined as the content of the frame.
- A frame is a data structure that holds the concepts and the actions to be performed.

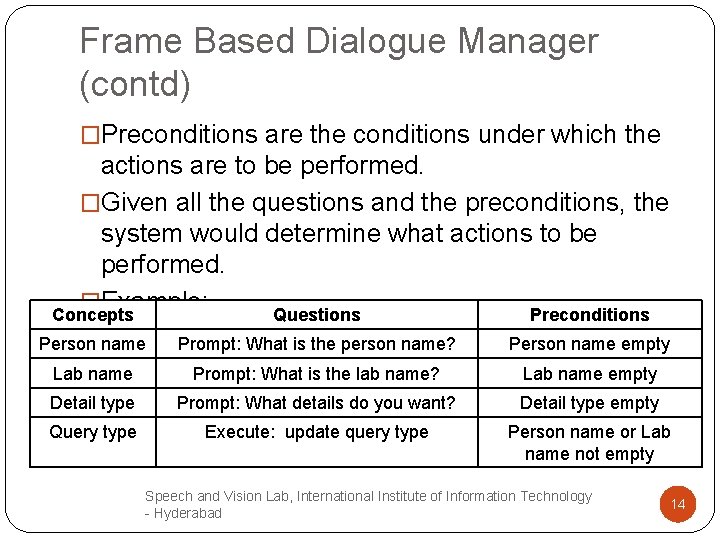

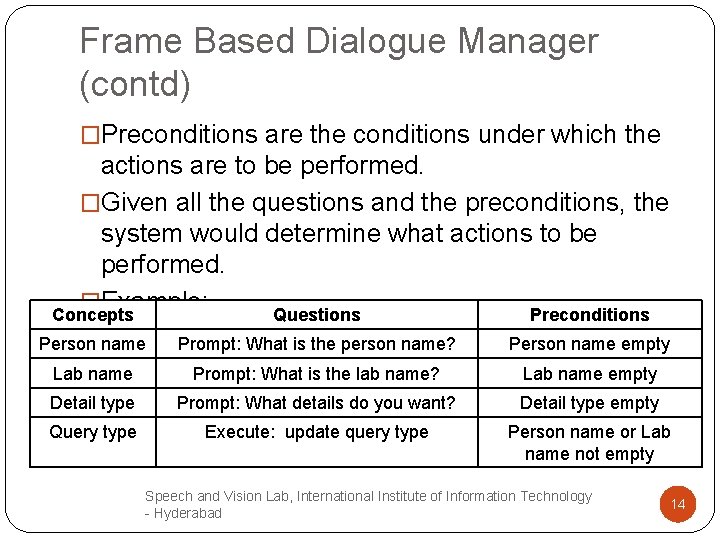

Frame Based Dialogue Manager (contd.)

- Preconditions are the conditions under which the actions are to be performed.
- Given all the questions and their preconditions, the system determines which action to perform.
- Example:

| Concept | Question / action | Precondition |
|---|---|---|
| Person name | Prompt: What is the person name? | Person name empty |
| Lab name | Prompt: What is the lab name? | Lab name empty |
| Detail type | Prompt: What details do you want? | Detail type empty |
| Query type | Execute: update query type | Person name or lab name not empty |
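The selection step of a frame-based DM can be sketched by turning each row of the example table into an (action, precondition) pair. This sketch makes two assumptions. First, the frame is a dict whose empty slots are `None`. Second, the policy of "first applicable row in table order" is chosen for illustration; the slides do not specify it.

```python
from typing import Callable, Dict, List, Optional, Tuple

Frame = Dict[str, Optional[str]]  # slot -> value, None while still empty

# Rows of the example table as (question/action, precondition) pairs.
RULES: List[Tuple[str, Callable[[Frame], bool]]] = [
    ("Prompt: What is the person name?", lambda f: f["person_name"] is None),
    ("Prompt: What is the lab name?", lambda f: f["lab_name"] is None),
    ("Prompt: What details do you want?", lambda f: f["detail_type"] is None),
    ("Execute: update query type",
     lambda f: f["person_name"] is not None or f["lab_name"] is not None),
]


def next_action(frame: Frame) -> Optional[str]:
    """Return the first row whose precondition holds for the current frame."""
    for action, precondition in RULES:
        if precondition(frame):
            return action
    return None
```

The frame is the DM state. A user who supplies several values in one utterance therefore skips the corresponding questions, and the dialogue path emerges from the frame contents instead of a fixed sequence.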

Agent Based Systems

- An agent is an entity that perceives its environment and performs the necessary actions.
- The information an agent obtains from its environment is called its percepts.
- An agent's behavior depends on the sequence of percepts it receives from the environment.
- The goal of an intelligent agent is to map percept sequences effectively to the corresponding action sequences, via a program called the agent program.
- An example model is the plan-based model.

Agent Based Systems (contd.)

- In a plan-based model, given an initial state and a goal state, the system constructs a plan, i.e., a sequence of operations to be performed.
- The actions to be performed are defined by an action schema.
- An example action schema consists of the following parameters and constraints:
  - Preconditions: the conditions necessary for successful execution of the procedure.

Agent Based Systems (contd.)

- Effect: the conditions that become true after execution of the procedure.
- Body: a set of goal states that are to be achieved in executing the procedure.
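Plan construction from action schemas can be sketched as a greedy forward search. The planner repeatedly applies a schema whose preconditions hold and whose effects are not yet true, until the goal facts are reached. This is a sketch under stated assumptions, not the planner used by any particular system. The `ActionSchema` class, the fact names and the greedy strategy are hypothetical. The body is stored as a list of sub-goals but is not expanded here.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set, Tuple


@dataclass(frozen=True)
class ActionSchema:
    name: str
    preconditions: FrozenSet[str]  # facts that must hold before execution
    effects: FrozenSet[str]        # facts that become true afterwards
    body: Tuple[str, ...] = ()     # sub-goals of the procedure (recorded, not expanded here)


# Hypothetical schemas, deliberately listed out of execution order.
SCHEMAS = [
    ActionSchema("give_price", frozenset({"market_available"}), frozenset({"price_given"})),
    ActionSchema("get_market", frozenset({"district_available"}), frozenset({"market_available"})),
    ActionSchema("get_district", frozenset({"commodity_available"}), frozenset({"district_available"})),
    ActionSchema("get_commodity", frozenset(), frozenset({"commodity_available"})),
]


def plan(initial: Set[str], goal: Set[str], schemas: List[ActionSchema],
         max_steps: int = 20) -> List[str]:
    """Apply the first schema whose preconditions hold and whose effects are new,
    until every goal fact is true."""
    state, steps = set(initial), []
    while not goal <= state:
        for schema in schemas:
            if schema.preconditions <= state and not schema.effects <= state:
                state |= schema.effects
                steps.append(schema.name)
                break
        else:
            raise ValueError("no applicable action: goal unreachable")
        if len(steps) > max_steps:
            raise ValueError("plan exceeded max_steps")
    return steps
```

The order of the resulting plan comes from the preconditions, not from the order in which the schemas are listed.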

Information State based Dialogue Systems

- Dialogue management operations are all expressed in terms of the information state.
- The information state of a dialogue represents:
  - The information currently present.
  - Information obtained from previous dialogue moves.
  - Future actions to be performed.
- The information state approach to dialogue modeling consists of:
  - A description and representation of the information components that constitute an information state. In general, these can be sub-divided into static and dynamic components.

Information State based Dialogue Systems (contd.)

- Static components are the information components that do not change during a conversation with a user, such as domain knowledge, update rules, etc.
- A set of dialogue moves that update the information state.
- A set of update rules that control how the information state is updated. The rules are governed by the current information state and the dialogue move performed.
- An update strategy for deciding which update rule to execute from the set of applicable ones.
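The four ingredients (static/dynamic components, dialogue moves, update rules, update strategy) can be sketched together. This is a minimal illustration built on hypothetical choices: the `DOMAIN` knowledge, the `(type, slot, value)` move format, the two rules, and a "fire the first applicable rule" strategy. Real information-state systems use richer structures.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Static component (hypothetical domain knowledge): fixed for the whole call.
DOMAIN: Dict[str, set] = {"commodity": {"rice", "orange"}, "district": {"karnul", "krishna"}}

Move = Tuple[str, str, str]  # dialogue move: (move type, slot, value)


def new_state() -> dict:
    """Dynamic components: what is known, what is still to be asked, rejected slots."""
    return {"known": {}, "agenda": ["commodity", "district"], "errors": []}


def _integrate(state: dict, move: Move) -> None:
    _, slot, value = move
    state["known"][slot] = value
    state["agenda"].remove(slot)


def _reject(state: dict, move: Move) -> None:
    state["errors"].append(move[1])


# Update rules (also static): (name, precondition on state and move, effect on state).
RULES: List[Tuple[str, Callable[[dict, Move], bool], Callable[[dict, Move], None]]] = [
    ("integrate_answer",
     lambda s, m: m[0] == "answer" and m[1] in s["agenda"] and m[2] in DOMAIN[m[1]],
     _integrate),
    ("reject_unknown_value",
     lambda s, m: m[0] == "answer" and m[2] not in DOMAIN.get(m[1], set()),
     _reject),
]


def update(state: dict, move: Move) -> Optional[str]:
    """Update strategy (one simple choice): fire the first applicable rule."""
    for name, precondition, effect in RULES:
        if precondition(state, move):
            effect(state, move)
            return name
    return None
```

Each rule's precondition inspects both the current information state and the incoming move, which matches the description of the update rules above.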

Stochastic Methods for Learning Dialogue Strategies

- Dialogue systems such as information state based systems are complex to build.
- The difficulty lies in incorporating the update moves and strategies.
- One approach to overcoming this complexity is to model the dialogue manager as a stochastic model and train the model on a preliminary dialogue corpus.
- In real-time systems, it is difficult to foresee all the dialogue scenarios that could occur between a user and a system. To overcome such problems, the dialogue system can be approximated by a stochastic model.

Stochastic Methods for Learning Dialogue Strategies (contd.)

- Some of the approaches used for modeling dialogue managers are:
  - Markov Decision Process (MDP)
  - Partially Observable Markov Decision Process (POMDP)

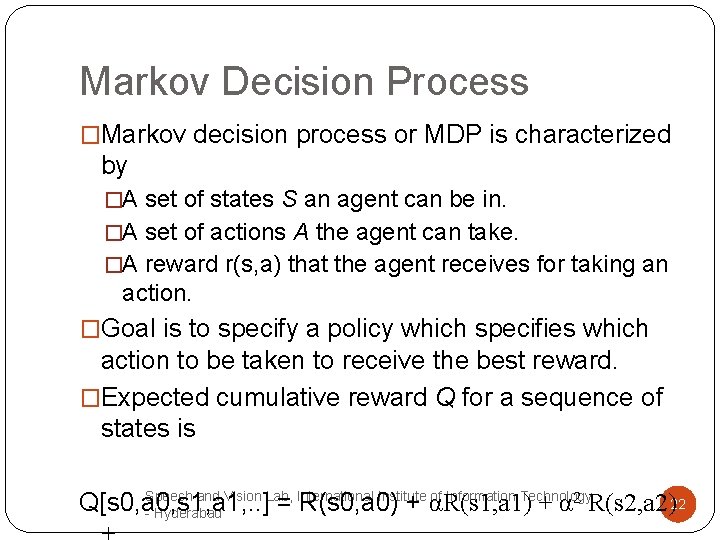

Markov Decision Process

- A Markov decision process (MDP) is characterized by:
  - A set of states $S$ the agent can be in.
  - A set of actions $A$ the agent can take.
  - A reward $R(s, a)$ that the agent receives for taking action $a$ in state $s$.
- The goal is to find a policy that specifies which action to take in each state to obtain the best reward.
- The discounted cumulative reward $Q$ for a sequence of states and actions is:

$$Q[s_0, a_0, s_1, a_1, \ldots] = R(s_0, a_0) + \alpha R(s_1, a_1) + \alpha^2 R(s_2, a_2) + \cdots$$

Markov Decision Process (contd.)

- $\alpha$ is called the discount factor and lies between 0 and 1.
- It gives more importance to the current reward than to future rewards: the further in the future a reward is, the more its value is discounted.
- Bellman equation, where $s_1$ ranges over the possible next states:

$$Q(s, a) = R(s, a) + \alpha \sum_{s_1} P(s_1 \mid s, a) \max_{a_1} Q(s_1, a_1)$$

- Hand-labeled data is required to model $R(s, a)$ and $P(s_1 \mid s, a)$.
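The Bellman equation can be solved by applying it repeatedly until the Q values stop changing (Q-value iteration). The sketch below uses a small dialogue MDP whose states, actions, rewards and transition probabilities are all hypothetical. In practice, $R(s, a)$ and $P(s_1 \mid s, a)$ would be estimated from labeled data. Terminal states have no actions and are given value 0.

```python
from typing import Dict, Tuple

State, Action = str, str

# Hypothetical toy dialogue MDP: R[(s, a)] is the reward, P[(s, a)] maps next states to probabilities.
R: Dict[Tuple[State, Action], float] = {
    ("no_info", "ask"): -1.0,
    ("unconfirmed", "confirm"): -1.0,
    ("unconfirmed", "give_answer"): 2.0,   # answering unconfirmed input risks a wrong answer
    ("confirmed", "give_answer"): 10.0,
}
P: Dict[Tuple[State, Action], Dict[State, float]] = {
    ("no_info", "ask"): {"unconfirmed": 0.8, "no_info": 0.2},
    ("unconfirmed", "confirm"): {"confirmed": 0.9, "no_info": 0.1},
    ("unconfirmed", "give_answer"): {"end": 1.0},
    ("confirmed", "give_answer"): {"end": 1.0},
}


def q_iteration(alpha: float = 0.9, iters: int = 200) -> Dict[Tuple[State, Action], float]:
    """Repeatedly apply the Bellman equation; terminal states (no actions) have value 0."""
    Q = {sa: 0.0 for sa in R}
    for _ in range(iters):
        best: Dict[State, float] = {}
        for (s, _a), q in Q.items():
            best[s] = max(best.get(s, float("-inf")), q)  # max over a1 of Q(s1, a1)
        Q = {
            (s, a): R[(s, a)] + alpha * sum(p * best.get(s1, 0.0) for s1, p in P[(s, a)].items())
            for (s, a) in R
        }
    return Q


def greedy_policy(Q: Dict[Tuple[State, Action], float]) -> Dict[State, Action]:
    """Pick, for every state, the action with the highest Q value."""
    policy: Dict[State, Action] = {}
    for (s, a), q in Q.items():
        if s not in policy or q > Q[(s, policy[s])]:
            policy[s] = a
    return policy
```

With these made-up numbers, the learned policy confirms before answering. The cost of one extra turn is outweighed by the higher reward of a confirmed answer.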

Partially Observable Markov Decision Process (POMDP)

- Problems with MDPs:
  - The system might never know exactly what state the user is in.
  - The system state might be misdirected by recognition errors.
- POMDPs are used to overcome these problems:
  - The environment is not completely observable to the agent.
  - A POMDP provides a framework for handling such errors by modeling the recognition errors explicitly.
  - It models the user's output as an observed signal generated from a hidden variable.
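Because the user's state is hidden, a POMDP keeps a probability distribution (belief) over it. After each action and observation, it updates the belief with the standard rule $b'(s_1) \propto O(o \mid s_1, a) \sum_{s} T(s_1 \mid s, a)\, b(s)$. The sketch below implements that rule. The toy model is hypothetical: the user's goal does not change during the call, and the ASR reports the true commodity with probability 0.7.

```python
from typing import Callable, Dict

Belief = Dict[str, float]


def belief_update(belief: Belief, action: str, obs: str,
                  T: Callable[[str, str, str], float],
                  O: Callable[[str, str, str], float]) -> Belief:
    """b'(s1) is proportional to O(obs | s1, action) * sum_s T(s1 | s, action) * b(s)."""
    new = {}
    for s_next in belief:
        predicted = sum(T(s_next, s, action) * p for s, p in belief.items())
        new[s_next] = O(obs, s_next, action) * predicted
    norm = sum(new.values())
    return {s: v / norm for s, v in new.items()}


def T(s_next: str, s: str, action: str) -> float:
    """Hypothetical transition model: the user's goal stays the same."""
    return 1.0 if s_next == s else 0.0


def O(obs: str, s: str, action: str) -> float:
    """Hypothetical observation model: ASR is right with probability 0.7."""
    return 0.7 if obs == s else 0.3
```

Starting from an even belief over "rice" and "orange", hearing "rice" once raises the belief in rice to 0.7, and hearing it again raises it to about 0.84. A single recognition error therefore shifts the belief without committing the system to a wrong state.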

Evaluation of Spoken Dialogue Systems

- Dialogue metrics can be classified as:
  - Objective measures: calculated automatically by a machine, without any human judgment.
  - Some objective measures:
    - Percentage of correct answers given by the system to the user.
    - Number of turns taken to complete the task.
    - Mean user response time.
    - Mean system response time.
    - Percentage of errors recognized by the dialogue system.
    - Time taken to complete the task.
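Because objective measures need no human judgment, they can be computed directly from call logs. The sketch below assumes a hypothetical per-call log format with a success flag, turn count, response times and call duration. Here task success stands in for "percentage of correct answers", which is an assumption about how correctness is logged.

```python
from statistics import mean
from typing import Dict, List


def objective_measures(calls: List[dict]) -> Dict[str, float]:
    """Aggregate per-call logs (hypothetical format) into objective measures."""
    return {
        # task success used as a proxy for "percentage of correct answers"
        "pct_correct": 100.0 * sum(c["success"] for c in calls) / len(calls),
        "mean_turns": mean(c["turns"] for c in calls),
        "mean_user_response_time": mean(t for c in calls for t in c["user_rt"]),
        "mean_system_response_time": mean(t for c in calls for t in c["system_rt"]),
        "mean_task_time": mean(c["duration"] for c in calls),
    }
```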

Evaluation of Spoken Dialogue Systems (contd.)

- Subjective measures: require human analysis and a set of ground rules for user evaluation.
- Some subjective measures:
  - System cooperation.
  - User satisfaction.
  - Percentage of correct and partially correct answers.

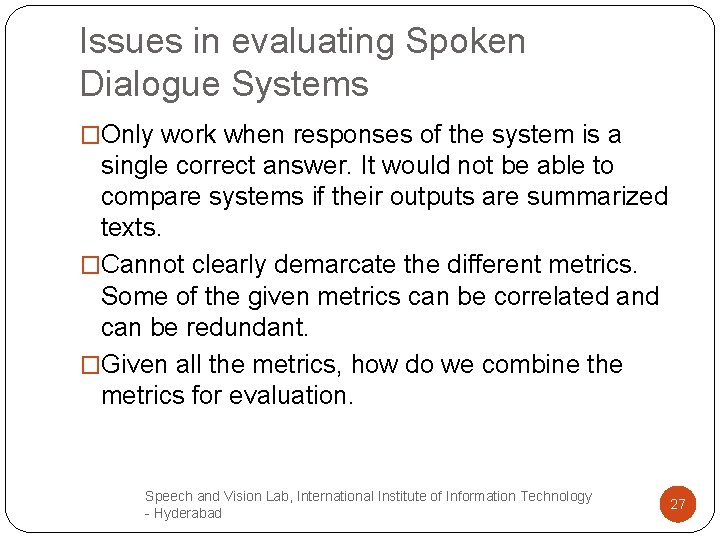

Issues in Evaluating Spoken Dialogue Systems

- These metrics only work when the system's response is a single correct answer; they cannot compare systems whose outputs are summarized texts.
- The different metrics cannot be clearly demarcated; some of them may be correlated and therefore redundant.
- Given all the metrics, how should they be combined for evaluation?

Mandi Information System

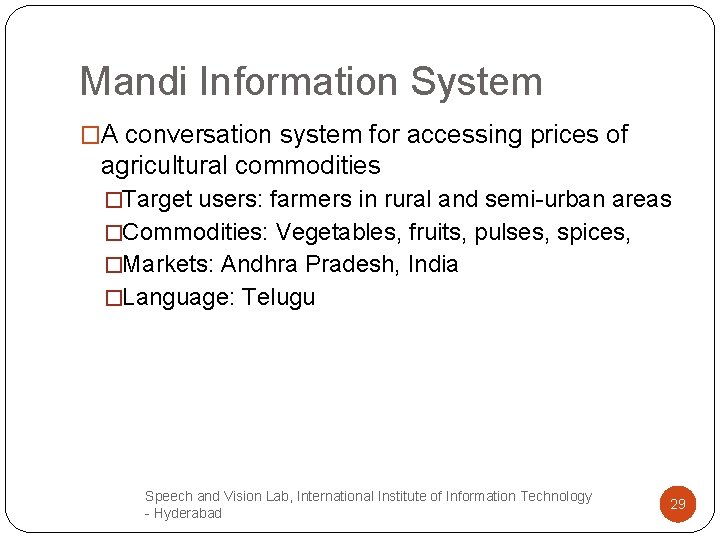

Mandi Information System

- A conversational system for accessing the prices of agricultural commodities.
- Target users: farmers in rural and semi-urban areas.
- Commodities: vegetables, fruits, pulses, spices.
- Markets: Andhra Pradesh, India.
- Language: Telugu.

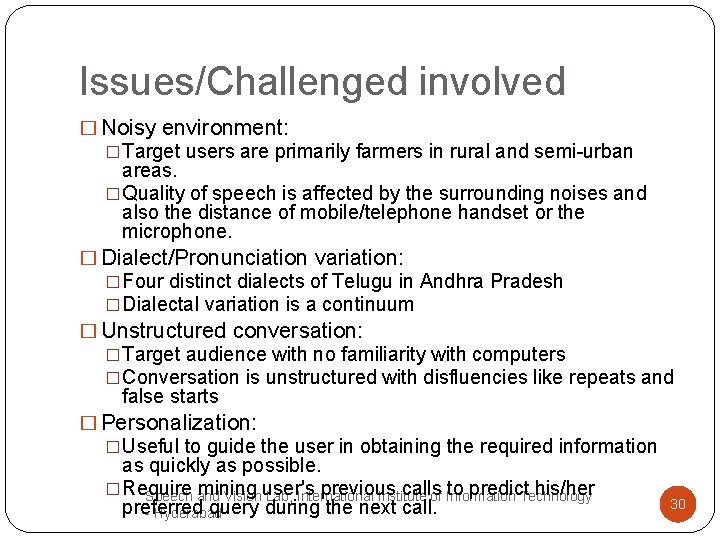

Issues/Challenges Involved

- Noisy environment:
  - The target users are primarily farmers in rural and semi-urban areas.
  - Speech quality is affected by surrounding noise and by the distance of the mobile/telephone handset or microphone from the speaker.
- Dialect/pronunciation variation:
  - There are four distinct dialects of Telugu in Andhra Pradesh.
  - Dialectal variation is a continuum.
- Unstructured conversation:
  - The target audience has no familiarity with computers.
  - Conversation is unstructured, with disfluencies such as repeats and false starts.
- Personalization:
  - Useful for guiding the user to the required information as quickly as possible.
  - Requires mining previous calls to predict the user's preferred query during the next call.

Building a baseline system

- Data collection:
  - Users were asked to read out the names of commodities, places, etc. from text provided to them.
  - Users were shown a series of pictures of agricultural commodities and asked to say the names of the commodities shown. This was done to capture dialectal variations in the commodity names.
  - Users were asked a series of questions related to agriculture and places around their locality, in order to record conversational speech.

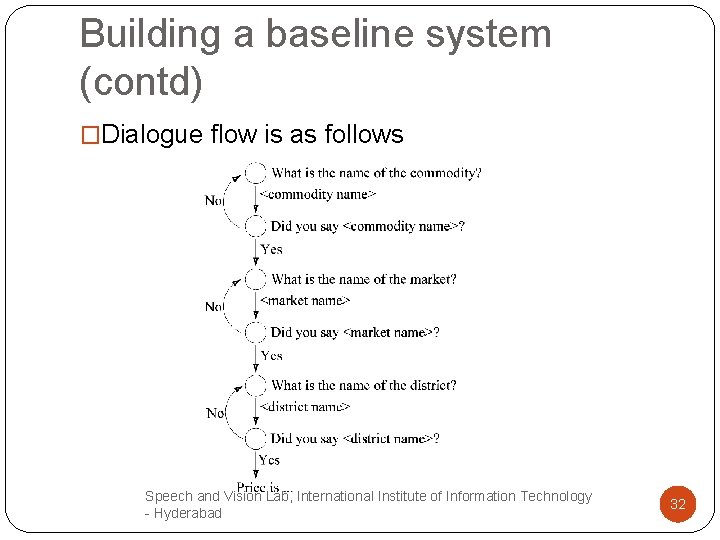

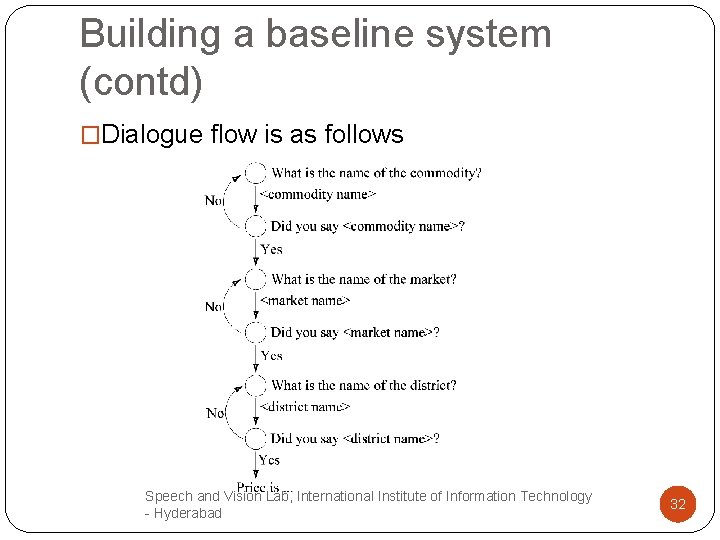

Building a baseline system (contd.)

- The dialogue flow is as follows:

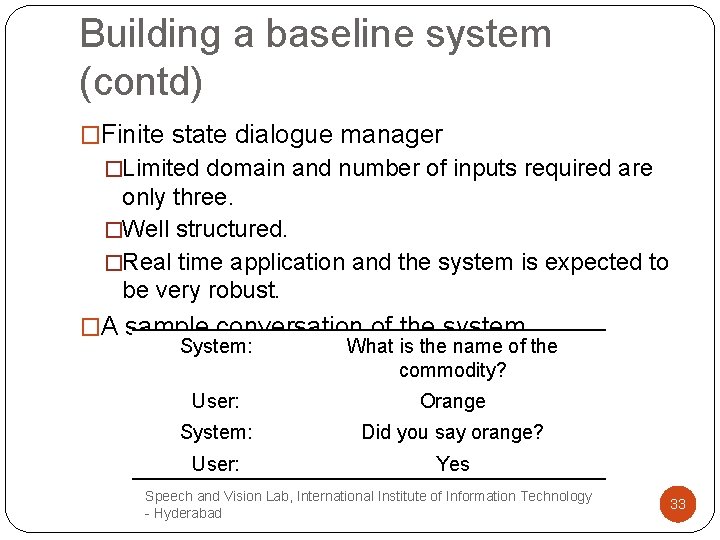

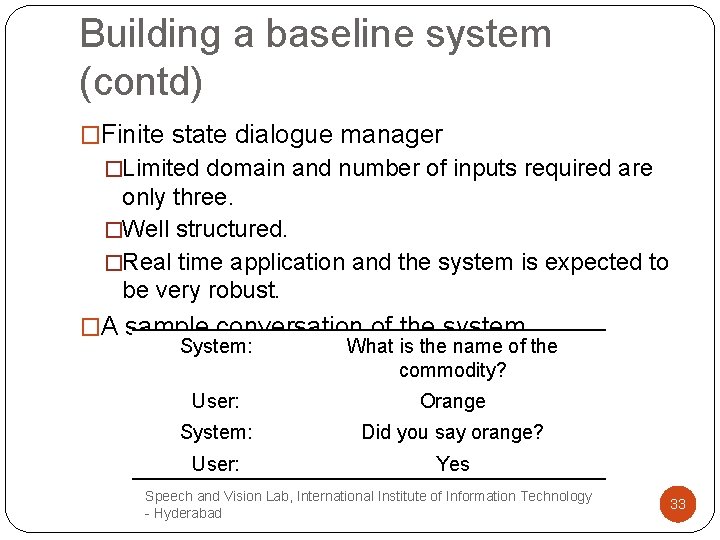

Building a baseline system (contd.)

- A finite state dialogue manager is used because:
  - The domain is limited and only three inputs are required.
  - The task is well structured.
  - It is a real-time application and the system is expected to be very robust.
- A sample conversation with the system:
  - System: What is the name of the commodity?
  - User: Orange
  - System: Did you say orange?
  - User: Yes

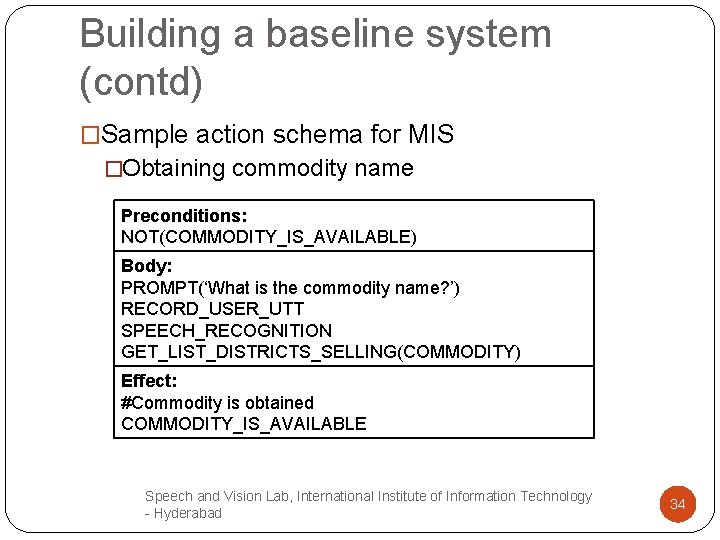

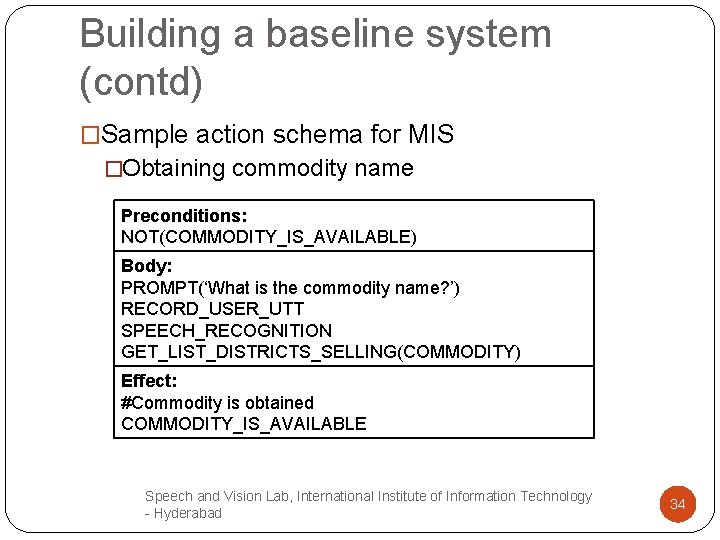

Building a baseline system (contd.)

- Sample action schema for MIS:
  - Obtaining the commodity name
    - Preconditions: NOT(COMMODITY_IS_AVAILABLE)
    - Body: PROMPT('What is the commodity name?'), RECORD_USER_UTT, SPEECH_RECOGNITION, GET_LIST_DISTRICTS_SELLING(COMMODITY)
    - Effect: COMMODITY_IS_AVAILABLE (the commodity is obtained)

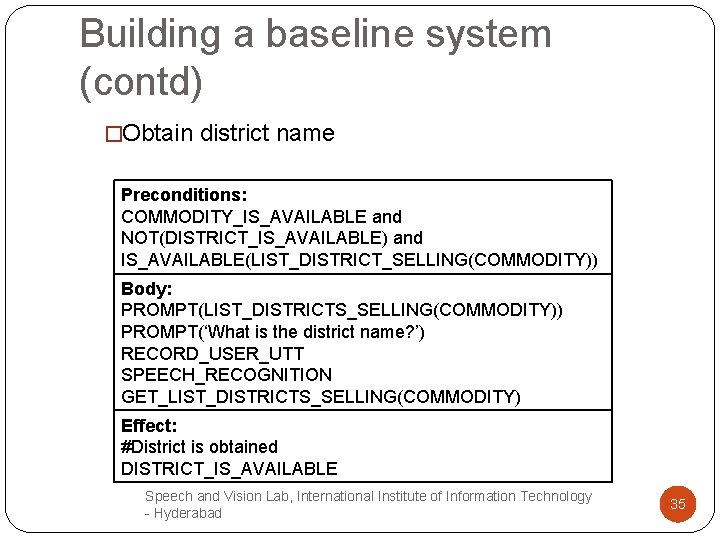

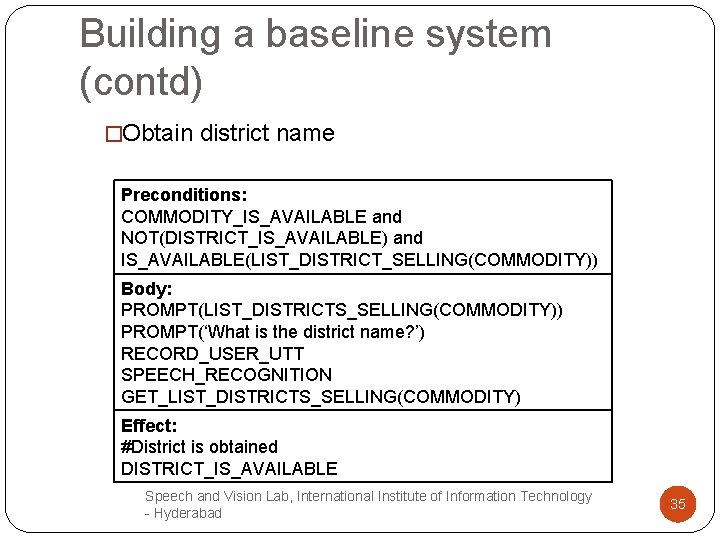

Building a baseline system (contd.)

- Obtaining the district name
  - Preconditions: COMMODITY_IS_AVAILABLE and NOT(DISTRICT_IS_AVAILABLE) and IS_AVAILABLE(LIST_DISTRICTS_SELLING(COMMODITY))
  - Body: PROMPT(LIST_DISTRICTS_SELLING(COMMODITY)), PROMPT('What is the district name?'), RECORD_USER_UTT, SPEECH_RECOGNITION, GET_LIST_DISTRICTS_SELLING(COMMODITY)
  - Effect: DISTRICT_IS_AVAILABLE (the district is obtained)
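The two MIS schemas can be sketched as functions that check their preconditions, run their body steps, and then assert their effect. This is a hypothetical rendering, not the MIS code. PROMPT is reduced to appending to a log, and RECORD_USER_UTT plus SPEECH_RECOGNITION are collapsed into a caller-supplied `recognize` function. The price database behind GET_LIST_DISTRICTS_SELLING is a made-up `SELLING` dictionary. The trailing lookup step of the district schema is omitted.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for the price database behind GET_LIST_DISTRICTS_SELLING.
SELLING: Dict[str, List[str]] = {"red gram": ["Karnul", "Krishna"]}


def obtain_commodity(state: dict, recognize: Callable[[], str]) -> bool:
    """Schema 'Obtaining commodity name'; returns False if its preconditions fail."""
    if state.get("COMMODITY_IS_AVAILABLE"):                    # NOT(COMMODITY_IS_AVAILABLE)
        return False
    state["log"].append("What is the commodity name?")         # PROMPT(...)
    state["commodity"] = recognize()                           # RECORD_USER_UTT + SPEECH_RECOGNITION
    state["districts"] = SELLING.get(state["commodity"], [])   # GET_LIST_DISTRICTS_SELLING(COMMODITY)
    state["COMMODITY_IS_AVAILABLE"] = True                     # Effect
    return True


def obtain_district(state: dict, recognize: Callable[[], str]) -> bool:
    """Schema 'Obtaining district name'; returns False if its preconditions fail."""
    if not (state.get("COMMODITY_IS_AVAILABLE")
            and not state.get("DISTRICT_IS_AVAILABLE")
            and state.get("districts")):                       # IS_AVAILABLE(LIST_DISTRICTS_SELLING(...))
        return False
    state["log"].append("Sold in: " + ", ".join(state["districts"]))  # PROMPT(LIST_DISTRICTS_SELLING(...))
    state["log"].append("What is the district name?")          # PROMPT(...)
    state["district"] = recognize()                            # RECORD_USER_UTT + SPEECH_RECOGNITION
    state["DISTRICT_IS_AVAILABLE"] = True                      # Effect
    return True
```

Calling `obtain_district` before the commodity is known simply returns False. The preconditions therefore enforce the order of the dialogue without a hard-coded sequence.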

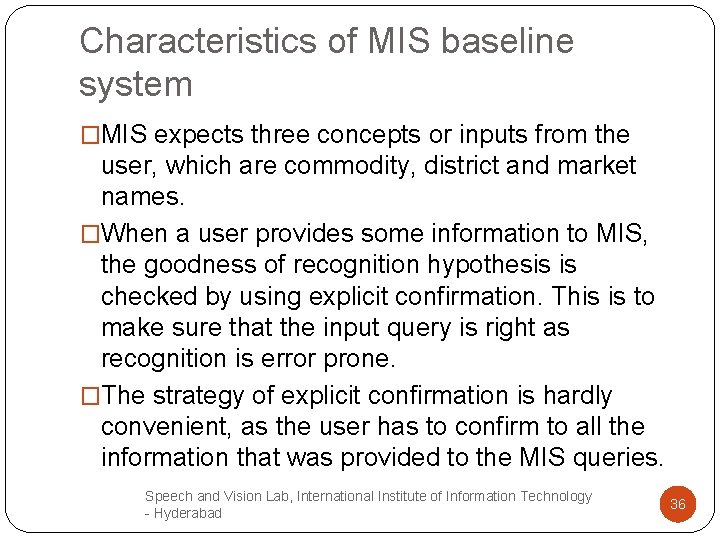

Characteristics of MIS baseline system

- MIS expects three concepts (inputs) from the user: the commodity, district and market names.
- When a user provides information to MIS, the recognition hypothesis is checked using explicit confirmation. This makes sure that the input query is right, since recognition is error-prone.
- The explicit confirmation strategy is inconvenient, as the user has to confirm every piece of information provided in response to MIS queries.

Mandi Information System II

- A spoken dialogue system should provide accurate information to a user in as few turns (interactions) as possible.
- Since speech recognition is error-prone, it is difficult to avoid user confirmations altogether; however, the objective is to limit them.
- One approach is to associate a confidence score with the recognition output of the ASR.
- Based on the confidence measure, the system decides the dialogue flow.
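One common way to let a confidence score drive the dialogue flow is to use two thresholds. Above the upper threshold, the system accepts silently. Between the thresholds, it asks for explicit confirmation. Below the lower threshold, it re-prompts. The sketch below assumes this scheme, and the threshold values 0.8 and 0.4 are hypothetical.

```python
from typing import Tuple


def next_move(hypothesis: str, confidence: float,
              accept: float = 0.8, reject: float = 0.4) -> Tuple[str, str]:
    """Choose the dialogue flow from an ASR confidence score (thresholds are hypothetical)."""
    if confidence >= accept:
        return ("accept", hypothesis)                      # no confirmation turn
    if confidence >= reject:
        return ("confirm", f"Did you say {hypothesis}?")   # explicit confirmation
    return ("reprompt", "Sorry, please repeat that.")      # likely misrecognition
```

Only the middle band costs an extra turn. Tuning the two thresholds trades fewer confirmations against more undetected recognition errors.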

Multiple Decoders and Contextual Information

- One could build multiple ASR decoders, each capturing complementary information about the speech data. This can be done by training multiple decoders using:
  - Different training datasets.
  - Different features, such as Mel-frequency cepstral coefficients and linear prediction cepstral coefficients.
- If a majority of these decoders agree on a hypothesis, the recognized output is considered reliable and the system can avoid asking the user for explicit confirmation.
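The agreement test can be sketched as a vote over the decoders' hypotheses. The sketch assumes that "majority" means strictly more than half of the decoders; the function names are hypothetical.

```python
from collections import Counter
from typing import List, Tuple


def majority_hypothesis(hypotheses: List[str]) -> Tuple[str, bool]:
    """Return the most frequent hypothesis and whether a strict majority of decoders agree on it."""
    word, count = Counter(hypotheses).most_common(1)[0]
    return word, count > len(hypotheses) / 2


def needs_confirmation(hypotheses: List[str]) -> bool:
    """Ask for explicit confirmation only when the decoders do not agree by majority."""
    return not majority_hypothesis(hypotheses)[1]
```

With two decoders, a strict majority means both must agree. This matches the two-decoder cases on the following slides.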

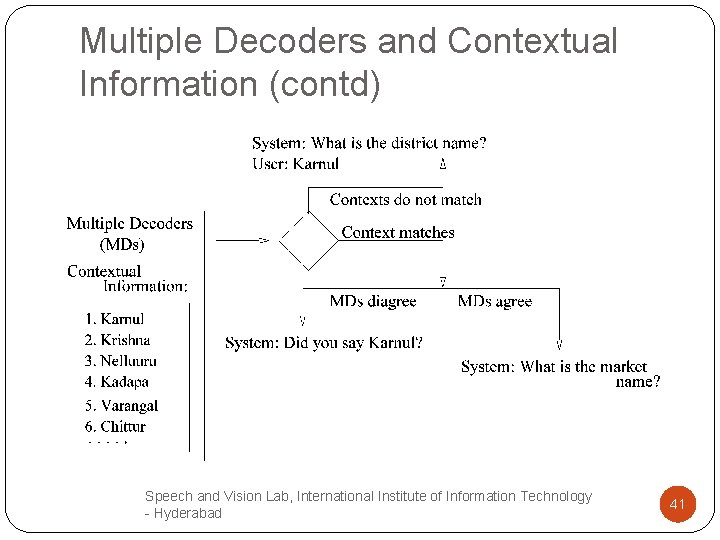

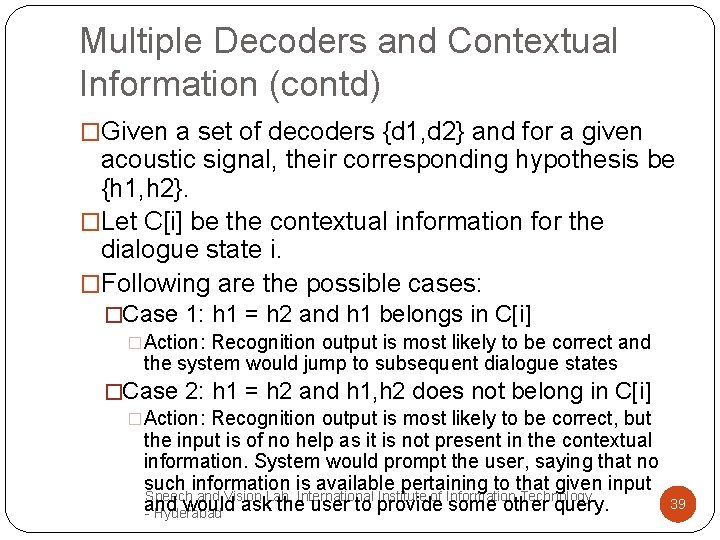

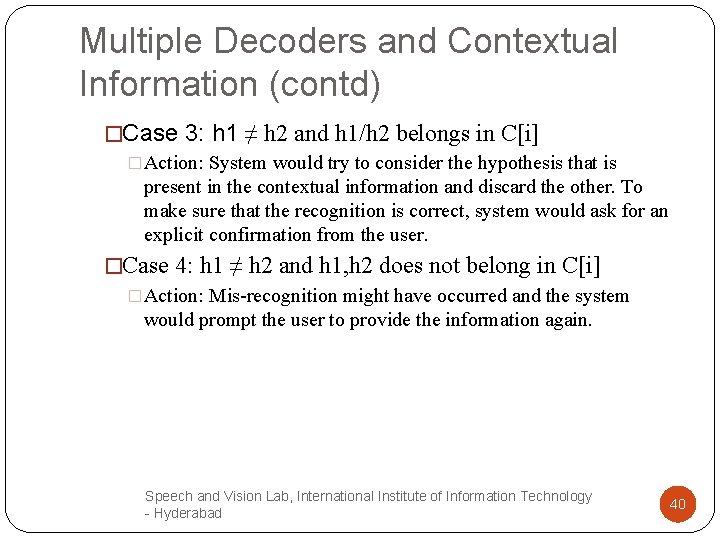

Multiple Decoders and Contextual Information (contd.)

- Let {d1, d2} be a set of decoders and, for a given acoustic signal, let {h1, h2} be their corresponding hypotheses.
- Let C[i] be the contextual information for dialogue state i.
- The possible cases are:
  - Case 1: h1 = h2 and h1 belongs to C[i].
    - Action: the recognition output is most likely correct, and the system moves on to the subsequent dialogue states.
  - Case 2: h1 = h2 and h1 does not belong to C[i].
    - Action: the recognition output is most likely correct, but the input is of no help, as it is not present in the contextual information. The system tells the user that no information is available for that input and asks the user for another query.

Multiple Decoders and Contextual Information (contd.)

- Case 3: h1 ≠ h2 and one of h1, h2 belongs to C[i].
  - Action: the system keeps the hypothesis that is present in the contextual information and discards the other. To make sure the recognition is correct, the system asks the user for explicit confirmation.
- Case 4: h1 ≠ h2 and neither h1 nor h2 belongs to C[i].
  - Action: a misrecognition has probably occurred, and the system prompts the user to provide the information again.
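The four cases translate directly into a decision function over the two hypotheses and the contextual information C[i]. This is a sketch, and the action labels are hypothetical. The slides do not say what happens when h1 ≠ h2 and both belong to C[i]. This sketch assumes decoder d1 takes precedence in that situation, and still asks for confirmation.

```python
from typing import Optional, Set, Tuple


def decide(h1: str, h2: str, context: Set[str]) -> Tuple[str, Optional[str]]:
    """Decision rule for two decoder hypotheses h1, h2 and contextual information C[i]."""
    if h1 == h2:
        if h1 in context:
            return ("accept", h1)          # Case 1: move on, no confirmation
        return ("not_available", h1)       # Case 2: tell the user, ask for another query
    in_context = [h for h in (h1, h2) if h in context]
    if in_context:
        return ("confirm", in_context[0])  # Case 3: keep it, confirm explicitly
    return ("reprompt", None)              # Case 4: ask the user to repeat
```

For the market prompt in the logged MIS II conversation, the decoders returned "Dhoni" and "Guti" with C[i] containing Adoni and Dhoni. This falls under Case 3, so the system asks "Did you say Dhoni?".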

Multiple Decoders and Contextual Information (contd.)

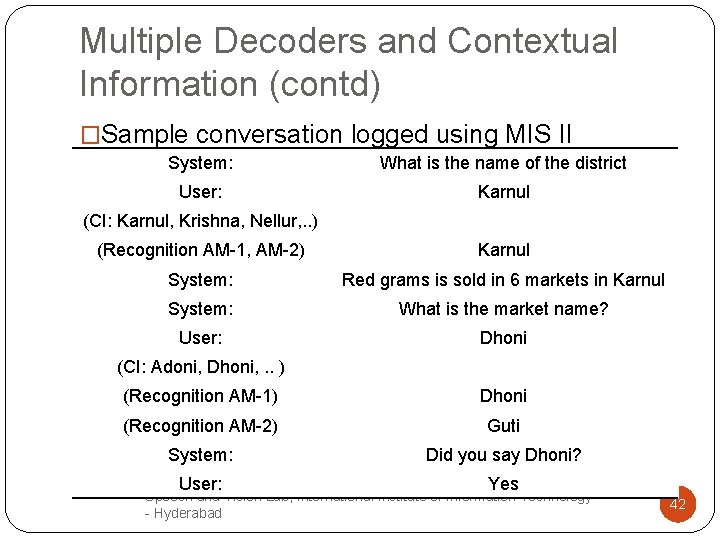

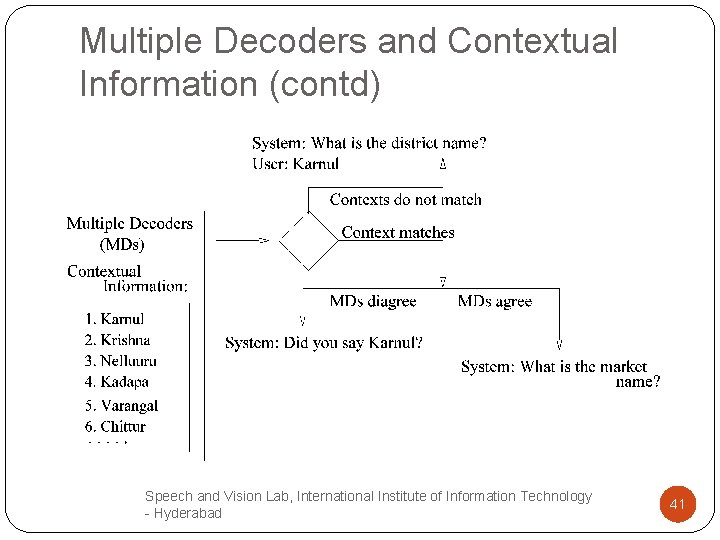

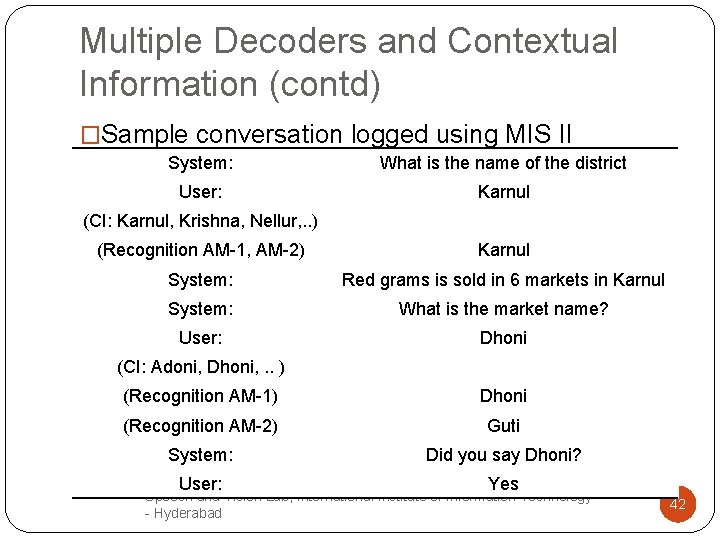

Multiple Decoders and Contextual Information (contd.)

- Sample conversation logged using MIS II (CI: contextual information; AM-1 and AM-2: the outputs of the two decoders):
  - System: What is the name of the district?
  - User: Karnul (CI: Karnul, Krishna, Nellur, ...) (Recognition AM-1, AM-2: Karnul)
  - System: Red grams is sold in 6 markets in Karnul
  - System: What is the market name?
  - User: Dhoni (CI: Adoni, Dhoni, ...) (Recognition AM-1: Dhoni; AM-2: Guti)
  - System: Did you say Dhoni?
  - User: Yes

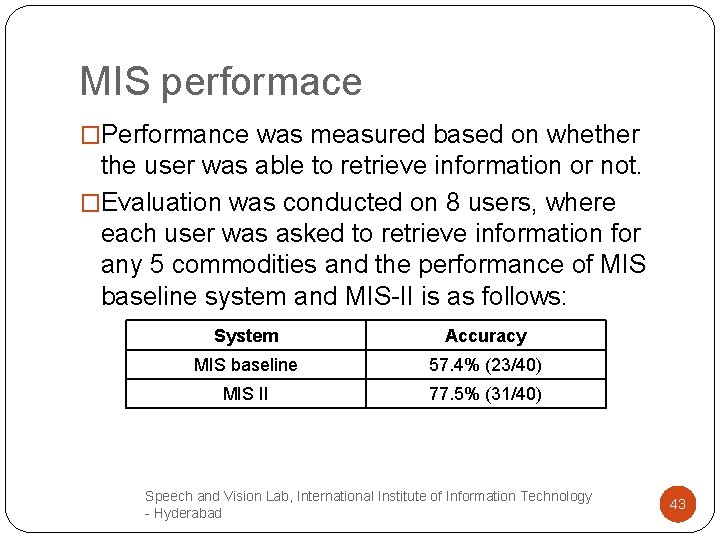

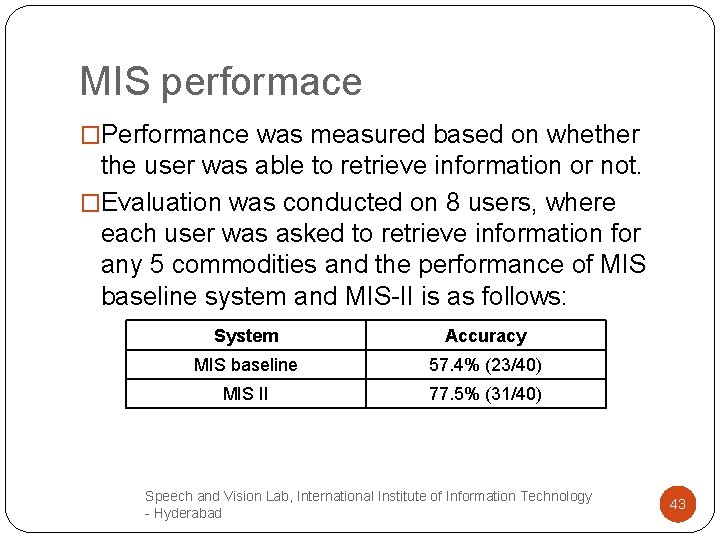

MIS Performance

- Performance was measured by whether the user was able to retrieve the information.
- The evaluation was conducted with 8 users, each asked to retrieve information for any 5 commodities (40 queries in total). The performance of the MIS baseline system and MIS II is as follows:

| System | Accuracy |
|---|---|
| MIS baseline | 57.5% (23/40) |
| MIS II | 77.5% (31/40) |

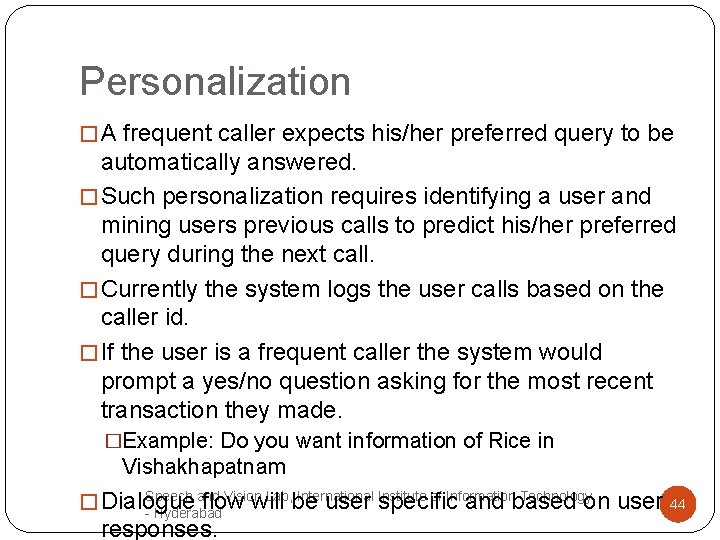

Personalization

- A frequent caller expects his/her preferred query to be answered automatically.
- Such personalization requires identifying the user and mining the user's previous calls to predict his/her preferred query during the next call.
- Currently, the system logs user calls by caller ID.
- If the user is a frequent caller, the system asks a yes/no question about the most recent transaction they made.
  - Example: "Do you want information on rice in Vishakhapatnam?"
- The dialogue flow is user-specific and based on the user's responses.
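The caller-ID logging and the yes/no opening prompt can be sketched as a small per-caller history. The class name and the call count that marks a "frequent caller" (`frequent_after`) are hypothetical. The slides do not say how frequency is decided.

```python
from collections import defaultdict
from typing import DefaultDict, List, Tuple


class CallLog:
    """Hypothetical per-caller log keyed by caller ID."""

    def __init__(self, frequent_after: int = 3) -> None:
        self.calls: DefaultDict[str, List[Tuple[str, str]]] = defaultdict(list)
        self.frequent_after = frequent_after  # calls needed to count as a frequent caller

    def record(self, caller_id: str, commodity: str, district: str) -> None:
        """Store one completed transaction for this caller."""
        self.calls[caller_id].append((commodity, district))

    def opening_prompt(self, caller_id: str) -> str:
        """Offer the most recent transaction to frequent callers, else start the normal flow."""
        history = self.calls.get(caller_id, [])
        if len(history) >= self.frequent_after:
            commodity, district = history[-1]  # most recent transaction
            return f"Do you want information on {commodity} in {district}?"
        return "What is the name of the commodity?"
```

A "no" answer would fall back to the regular dialogue flow. This keeps the personalized shortcut cheap when the prediction is wrong.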

MIS Demo Number: 66150320 extension 2

Thank You