SPMD Single Program Multiple Data Streams Hui Ren

- Slides: 13

SPMD: Single Program Multiple Data Streams
Hui Ren, Electrical & Computer Engineering, Tufts University

Background
Previously, computing performance was increased through clock speed scaling. This also increased power consumption and caused heat-dissipation problems at high clock speeds. Parallel computing allows more instructions to complete in a given time through parallel execution. Nowadays, parallel computing has entered mainstream use, following the introduction of multi-core processors.

What is SPMD?
SPMD is a parallel computing model in which all processors run the same program, but each processor operates on different data. SPMD can achieve higher computing performance by increasing the number of processors, since each processor then handles a smaller share of the data.

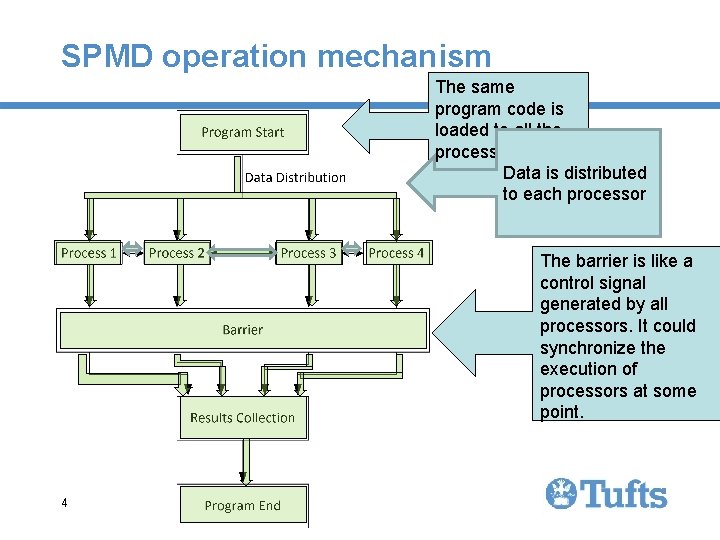

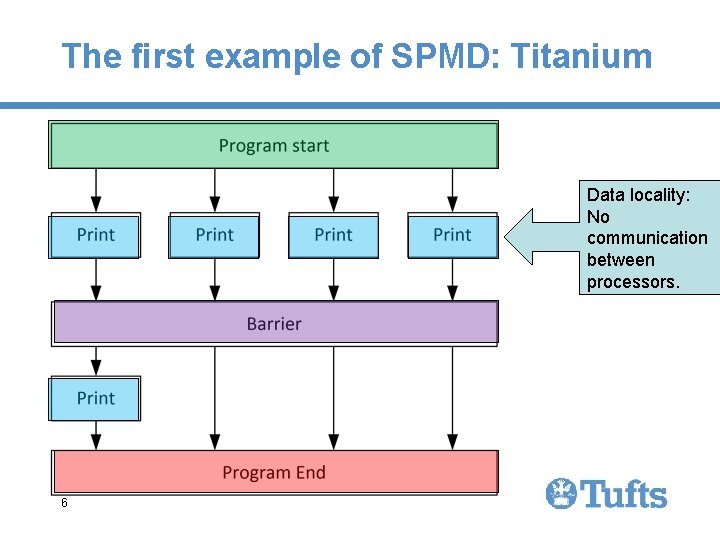

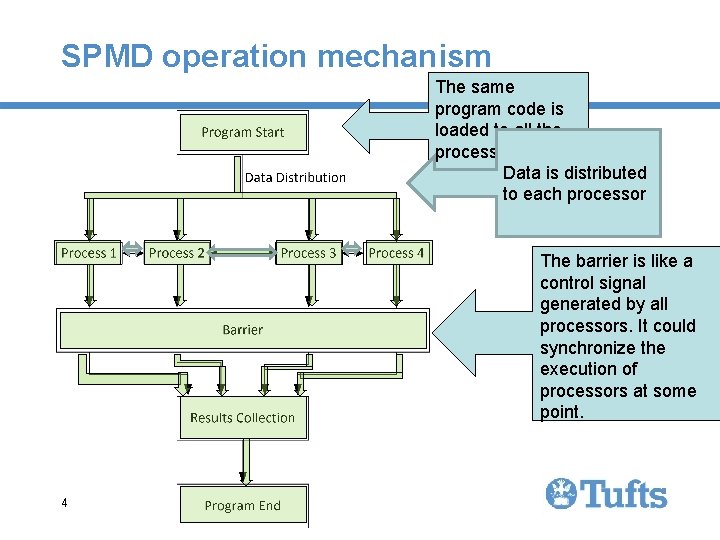
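A minimal sketch of the idea in Java, assuming that threads can stand in for processors (real SPMD systems such as MPI usually run one process per processor). The class and method names (`SpmdSum`, `spmdBody`, `parallelSum`) are hypothetical. Every worker runs the same `spmdBody` method; the only difference between workers is the rank, which selects a contiguous block of the array.

```java
// Sketch only: Java threads stand in for SPMD processors (an assumption;
// real SPMD systems such as MPI usually run one process per processor).
class SpmdSum {

    // The "single program": every worker runs this same method. Only the
    // rank differs, and the rank selects which block of data it works on.
    static long spmdBody(int rank, int nprocs, int[] data) {
        int n = data.length;
        // Block distribution: worker `rank` owns indices [lo, hi).
        int lo = (int) ((long) n * rank / nprocs);
        int hi = (int) ((long) n * (rank + 1) / nprocs);
        long partial = 0;
        for (int i = lo; i < hi; i++) {
            partial += data[i];
        }
        return partial;
    }

    // Starts nprocs workers running spmdBody, then adds up their partial sums.
    static long parallelSum(int[] data, int nprocs) {
        long[] partials = new long[nprocs];
        Thread[] workers = new Thread[nprocs];
        for (int r = 0; r < nprocs; r++) {
            final int rank = r;
            workers[r] = new Thread(() -> partials[rank] = spmdBody(rank, nprocs, data));
            workers[r].start();
        }
        long total = 0;
        for (int r = 0; r < nprocs; r++) {
            try {
                workers[r].join();   // join() also makes partials[r] visible here
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
            total += partials[r];
        }
        return total;
    }

    public static void main(String[] args) {
        int[] data = new int[100];
        for (int i = 0; i < data.length; i++) {
            data[i] = i + 1;
        }
        System.out.println(parallelSum(data, 4));   // prints 5050
    }
}
```

With 4 workers and the values 1 to 100, worker 0 sums 1 to 25, worker 1 sums 26 to 50, and so on; the partial sums add up to 5050. Adding workers shrinks each block, which is where the performance gain comes from.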

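SPMD operation mechanism
• The same program code is loaded onto all processors.
• The data is distributed among the processors.
• A barrier acts like a control signal to which all processors contribute: it synchronizes the processors at a chosen point in the program. Each processor that reaches the barrier waits until all processors have reached it, and then all of them continue.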

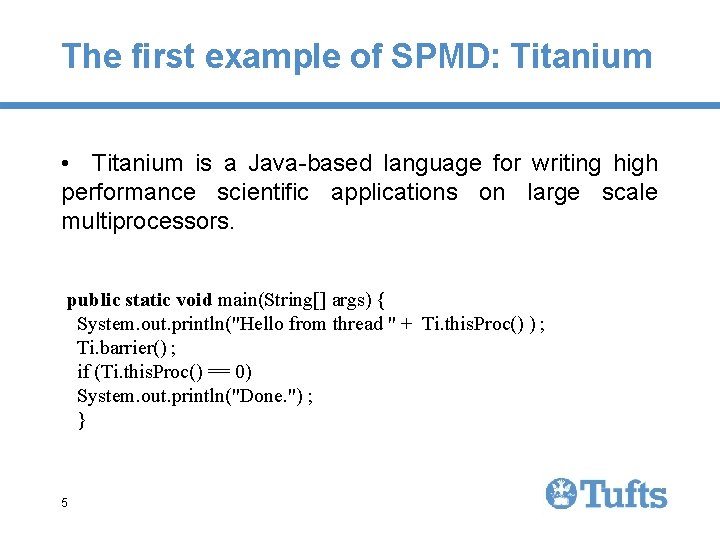

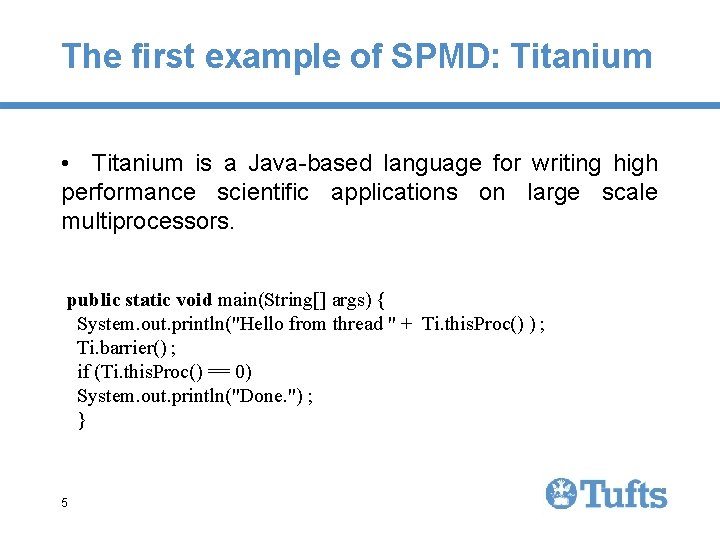
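The barrier can be illustrated with `java.util.concurrent.CyclicBarrier`, again assuming Java threads as stand-ins for processors; `BarrierDemo` and `run` are illustrative names. Each worker first writes a value into its own slot, waits at the barrier, and only then reads its neighbor's slot. Without the barrier, a worker could read a slot its neighbor has not written yet.

```java
import java.util.Arrays;
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;

// Sketch: threads stand in for processors; the names are hypothetical.
class BarrierDemo {

    // Runs nprocs workers; result[rank] is the phase-1 value written by
    // the worker to the "right" of rank (ring topology).
    static int[] run(int nprocs) {
        int[] phase1 = new int[nprocs];   // one slot per worker
        int[] result = new int[nprocs];
        CyclicBarrier barrier = new CyclicBarrier(nprocs);
        Thread[] workers = new Thread[nprocs];
        for (int r = 0; r < nprocs; r++) {
            final int rank = r;
            workers[r] = new Thread(() -> {
                // Phase 1: each worker writes only its own slot.
                phase1[rank] = rank * rank;
                try {
                    // No worker passes this point until all have arrived, so
                    // every phase-1 write is complete and visible afterwards.
                    barrier.await();
                } catch (InterruptedException | BrokenBarrierException e) {
                    throw new IllegalStateException(e);
                }
                // Phase 2: read the neighbor's phase-1 value.
                result[rank] = phase1[(rank + 1) % nprocs];
            });
            workers[r].start();
        }
        for (Thread t : workers) {
            try {
                t.join();
            } catch (InterruptedException e) {
                throw new IllegalStateException(e);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(run(4)));   // prints [1, 4, 9, 0]
    }
}
```

For 4 workers the phase-1 values are 0, 1, 4 and 9, so `run(4)` returns `[1, 4, 9, 0]`.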

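The first example of SPMD: Titanium
• Titanium is a Java-based language for writing high-performance scientific applications on large-scale multiprocessors. In the program below, every thread runs the same `main`:

```java
class HelloWorld {
    public static void main(String[] args) {
        // Ti.thisProc() returns the id of the thread executing this line.
        System.out.println("Hello from thread " + Ti.thisProc());
        // Wait until every thread has printed its greeting.
        Ti.barrier();
        // Only thread 0 reports completion.
        if (Ti.thisProc() == 0)
            System.out.println("Done.");
    }
}
```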

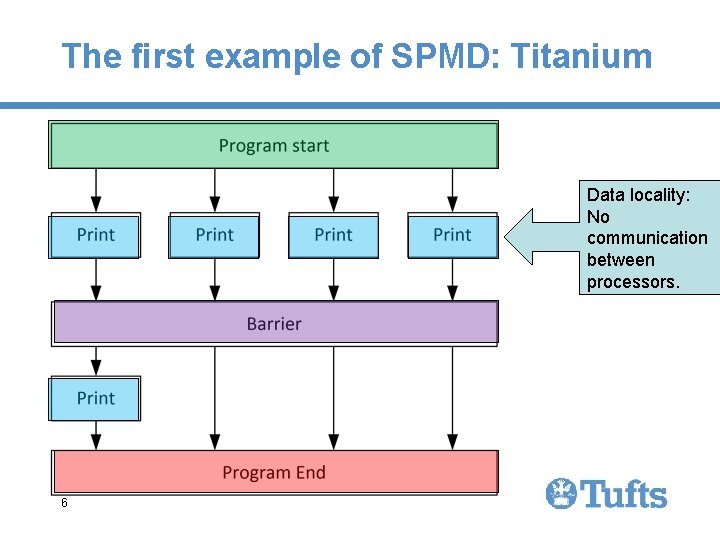
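Readers without a Titanium compiler can approximate the program above in plain Java. In this hypothetical translation (`HelloSpmd` and `run` are illustrative names), the loop index plays the role of `Ti.thisProc()` and a `CyclicBarrier` plays the role of `Ti.barrier()`; output lines are also collected into a list so their ordering can be checked. The greetings may appear in any order, but because every thread greets before reaching the barrier and only thread 0 prints after it, "Done." is always last.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;

// Sketch: plain-Java stand-in for the Titanium Hello World program.
class HelloSpmd {

    static List<String> run(int numProcs) {
        List<String> log = Collections.synchronizedList(new ArrayList<>());
        CyclicBarrier barrier = new CyclicBarrier(numProcs);   // stands in for Ti.barrier()
        Thread[] threads = new Thread[numProcs];
        for (int p = 0; p < numProcs; p++) {
            final int thisProc = p;                            // stands in for Ti.thisProc()
            threads[p] = new Thread(() -> {
                log.add("Hello from thread " + thisProc);
                System.out.println("Hello from thread " + thisProc);
                try {
                    barrier.await();
                } catch (InterruptedException | BrokenBarrierException e) {
                    throw new IllegalStateException(e);
                }
                if (thisProc == 0) {
                    log.add("Done.");
                    System.out.println("Done.");
                }
            });
            threads[p].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                throw new IllegalStateException(e);
            }
        }
        return new ArrayList<>(log);
    }

    public static void main(String[] args) {
        run(4);
    }
}
```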

The first example of SPMD: Titanium
Data locality: each thread works only on its own data, so no data needs to be communicated between processors.

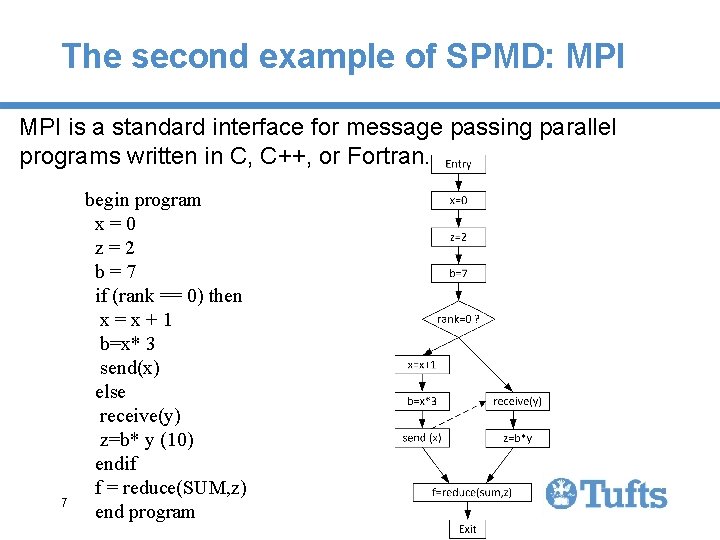

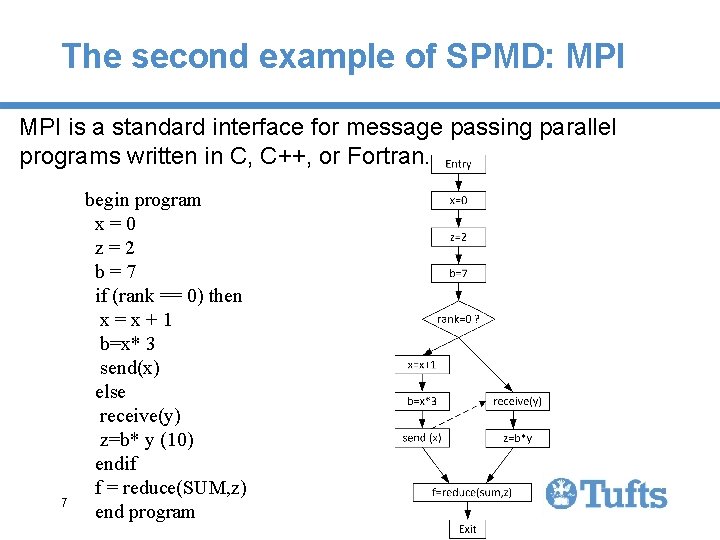

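The second example of SPMD: MPI
MPI (Message Passing Interface) is a standard interface for message-passing parallel programs written in C, C++, or Fortran. The pseudocode below is executed by every process; `rank` identifies the process.

```
begin program
  x = 0
  z = 2
  b = 7
  if (rank == 0) then
    x = x + 1
    b = x * 3
    send(x)
  else
    receive(y)
    z = b * y
  endif
  f = reduce(SUM, z)
end program
```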

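The second example of SPMD: MPI
• The variable y is assigned the constant value 1: process 0 increments x from 0 to 1 and sends it, and the receiving process stores that value in y.
• SPMD has a local view of execution: each process has its own copy of every variable, so the assignment b = x * 3 on process 0 does not change b on the receiving process, where b is still 7.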
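The pseudocode can be mimicked with two Java threads that exchange messages through `BlockingQueue` mailboxes. This is an assumption-laden sketch, not MPI: `toRank1` and `toRoot` are hypothetical stand-ins for `send`/`receive` and for `reduce`, and, as with MPI's reduce, only the root (rank 0) receives the sum. Each call to `program` has its own local variables, so rank 0's assignment `b = x * 3` leaves `b = 7` on rank 1. Rank 1 therefore computes `z = 7 * 1 = 7`, rank 0 keeps `z = 2`, and the reduction gives `f = 9`.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Sketch: two threads play the role of two MPI processes (an assumption).
class MessagePassingSketch {

    static final int NPROCS = 2;

    // The single program, executed by every rank. Each call has its own
    // local x, y, z and b, mirroring SPMD's local view of execution.
    static void program(int rank, BlockingQueue<Integer> toRank1,
                        BlockingQueue<Integer> toRoot, int[] f) throws InterruptedException {
        int x = 0, y = 0, z = 2, b = 7;
        if (rank == 0) {
            x = x + 1;
            b = x * 3;            // changes b on rank 0 only
            toRank1.put(x);       // send(x)
        } else {
            y = toRank1.take();   // receive(y): blocks until rank 0 sends
            z = b * y;            // b is still 7 on this rank
        }
        // reduce(SUM, z): every rank contributes z; the root (rank 0) sums them.
        toRoot.put(z);
        if (rank == 0) {
            int sum = 0;
            for (int i = 0; i < NPROCS; i++) {
                sum += toRoot.take();
            }
            f[0] = sum;
        }
    }

    static int run() {
        BlockingQueue<Integer> toRank1 = new LinkedBlockingQueue<>();
        BlockingQueue<Integer> toRoot = new LinkedBlockingQueue<>();
        int[] f = new int[1];   // reduction result, written by rank 0
        Thread[] ranks = new Thread[NPROCS];
        for (int r = 0; r < NPROCS; r++) {
            final int rank = r;
            ranks[r] = new Thread(() -> {
                try {
                    program(rank, toRank1, toRoot, f);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            ranks[r].start();
        }
        for (Thread t : ranks) {
            try {
                t.join();
            } catch (InterruptedException e) {
                throw new IllegalStateException(e);
            }
        }
        return f[0];
    }

    public static void main(String[] args) {
        System.out.println("f = " + run());   // prints f = 9
    }
}
```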

Advantages of SPMD
• Locality. Data locality is essential to achieving good performance on large-scale machines, where communication across the network is very expensive.
• Structured parallelism. The set of threads is fixed throughout the computation. This makes SPMD code easier for compilers to reason about, resulting in more efficient program analyses than in other models.
• Simple runtime implementation. SPMD is a subset of MIMD. Because it has a local view of execution and exposes parallelism directly to the user, compilers and runtime systems require less implementation effort than for many other MIMD models.

Disadvantages of SPMD
• SPMD is a flat model, which makes it difficult to write hierarchical code, such as divide-and-conquer algorithms, as well as programs optimized for hierarchical machines.
• It can also be hard to achieve the desired speedup with SPMD.
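One standard way to see why speedup is hard to obtain is Amdahl's law. It is not part of the original slides and is added here only as an illustration, assuming a fraction $\alpha$ of the work parallelizes perfectly over $p$ processors and the rest runs serially:

$$
S(p) = \frac{1}{(1 - \alpha) + \dfrac{\alpha}{p}}, \qquad \lim_{p \to \infty} S(p) = \frac{1}{1 - \alpha}
$$

For example, with $\alpha = 0.9$ and $p = 16$, $S = 1 / (0.1 + 0.05625) = 6.4$, and no number of processors can push the speedup beyond $1 / 0.1 = 10$.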

Outlook
§ The advantages of SPMD are clear, and SPMD remains in common use on many large-scale machines. Researchers have proposed improvements to SPMD, such as recursive SPMD, which provides hierarchical teams.
§ SPMD is therefore likely to remain a useful method for parallel computing in the future.


Thank you!