Split Snapshots and Skippy Indexing: Long Live the Past!

- Slides: 31

Split Snapshots and Skippy Indexing: Long Live the Past!
Ross Shaull <rshaull@cs.brandeis.edu>
Liuba Shrira <liuba@cs.brandeis.edu>
Brandeis University

Our Idea of a Snapshot
- A window to the past in a storage system
- Access data as it was at the time the snapshot was requested
- System-wide
- Snapshots may be kept forever
  - i.e., “long-lived” snapshots
- Snapshots are consistent
  - Whatever that means…
- High frequency (up to continuous data protection, CDP)

Why Take Snapshots?
- Fix operator errors
- Auditing
  - When did Bob’s salary change, and who made the changes?
- Analysis
  - How much capital was tied up in blue shirts at the beginning of this fiscal year?
- We don’t necessarily know what will be interesting in the future

BITE
- Give the storage system a new capability: Back-in-Time Execution
- Run read-only code against the current state and any snapshot
- After issuing a request for BITE, no special code is required to access data in the snapshot

Other Approaches: Databases
- ImmortalDB, Time-Split B-tree (Lomet)
  - Reorganizes the current state
  - Complex
- Snapshot isolation (PostgreSQL, Oracle)
  - Extension to transactions
  - Only for the recent past
- Oracle Flashback
  - Page-level copy of the recent past (not kept forever)
  - Interface seems similar to BITE

Other Approaches: File Systems
- WAFL (Hitz), ext3cow (Peterson)
  - Limited on-disk locality
  - Application-level consistency is a challenge
- VSS (Sankaran)
  - Blocks disk requests
  - Suitable for backup-type frequency

A Different Approach
- Goals:
  - Avoid declustering the current state
  - Don’t change how the current state is accessed
  - Application requests snapshots
  - Snapshots are “on-line” (not in a warehouse)
- Split Snapshots
  - Copy the past out incrementally
  - Snapshots available through a virtualized buffer manager

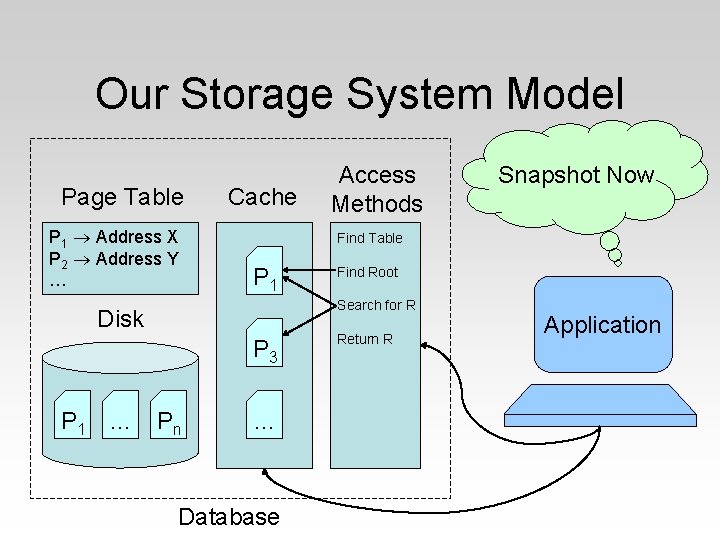

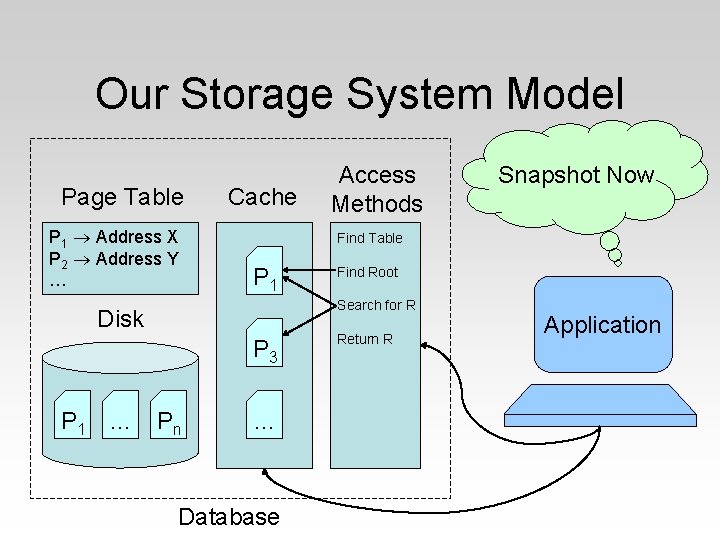

Our Storage System Model
- A “database”
  - Has transactions
  - Has a recovery log
  - Organizes data in pages on disk

Our Consistency Model
- Crash consistency
  - Imagine that a snapshot is declared, but then, before any modifications can be made, the system crashes
  - After restart, recovery kicks in and the current state is restored to *some* consistent point
  - All snapshots will have this same consistency guarantee after a crash

Our Storage System Model

[Figure: the application's request “I want record R” (Now or from a Snapshot) passes through the access methods (find table, find root, search for R) and the cache; the page table maps P1 → address X, P2 → address Y, … on disk; R is returned to the application.]

Retaining the Past Versus

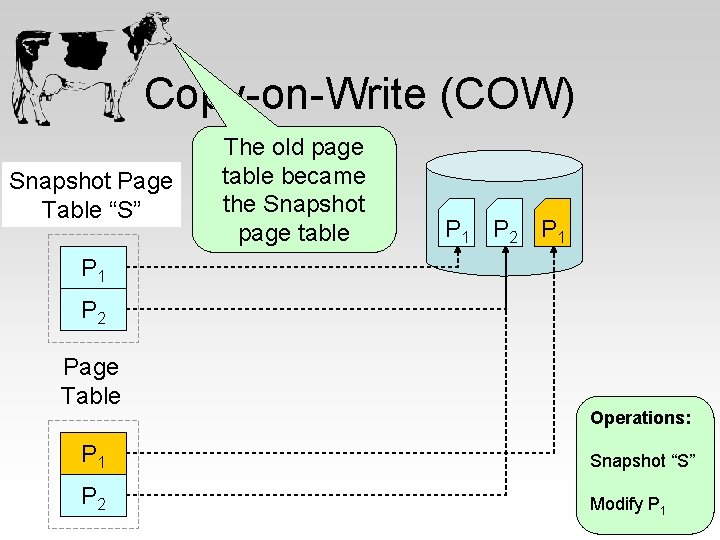

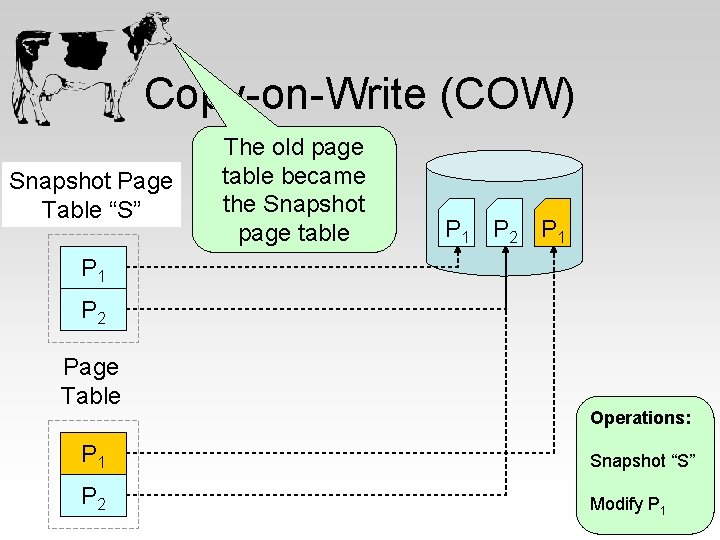

Copy-on-Write (COW)
- The old page table becomes the snapshot page table for snapshot “S”

[Figure: operations Snapshot “S”, then Modify P1, shown on the page table and the snapshot page table “S”, each mapping pages P1 and P2.]
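To make the copy-on-write idea concrete, here is a minimal Python sketch under our own assumptions: an in-memory dict stands in for the disk, and `CowStore`, `write`, `snapshot`, and `read` are hypothetical names, not any real system's API. Declaring a snapshot keeps the old page table; modifying P1 writes a new copy elsewhere and repoints only the current table.

```python
from dataclasses import dataclass, field


@dataclass
class CowStore:
    """Toy copy-on-write store: a page table maps page IDs to disk addresses."""

    disk: dict = field(default_factory=dict)        # address -> page contents
    page_table: dict = field(default_factory=dict)  # page ID -> address (current state)
    snapshots: dict = field(default_factory=dict)   # snapshot name -> page table
    next_addr: int = 0

    def _alloc(self, data):
        addr = self.next_addr
        self.next_addr += 1
        self.disk[addr] = data
        return addr

    def write(self, page, data):
        # Never overwrite in place: put the new version at a fresh address and
        # repoint only the current page table; snapshot tables keep the old address.
        self.page_table[page] = self._alloc(data)

    def snapshot(self, name):
        # The current page table becomes snapshot `name`'s page table. It is copied
        # eagerly here for simplicity; a real system would avoid copying the whole table.
        self.snapshots[name] = dict(self.page_table)

    def read(self, page, snapshot=None):
        table = self.page_table if snapshot is None else self.snapshots[snapshot]
        return self.disk[table[page]]
```

Note that the current version of P1 moves to a new address while P2 stays shared; that relocation is the declustering of the current state that the Goals slide aims to avoid.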

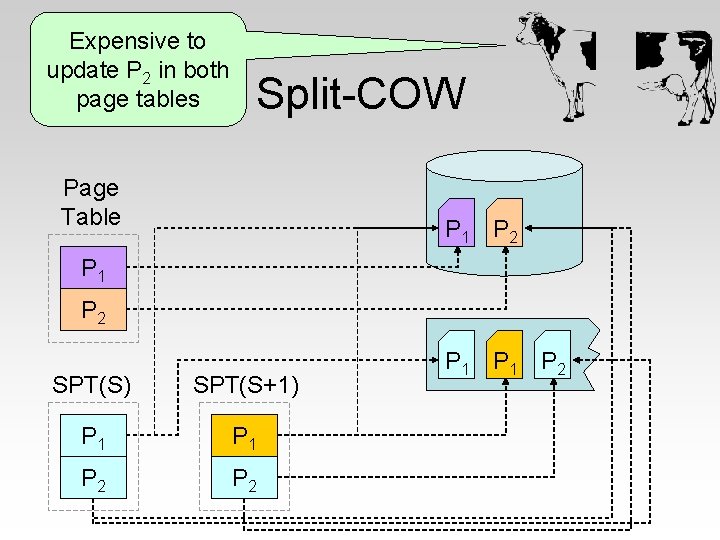

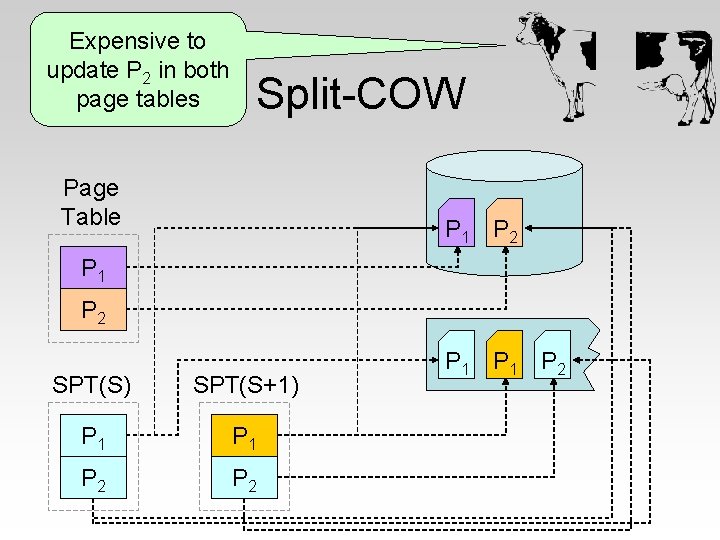

Split-COW
- Expensive to update P2 in both page tables

[Figure: the current page table and snapshot page tables SPT(S) and SPT(S+1), each mapping pages P1 and P2.]
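A minimal sketch of split-COW with one SPT per snapshot, under our own assumptions (in-memory structures, hypothetical names `SplitCowStore` and `spts`; not the authors' implementation). The current state is updated in place; the first time a page changes after a snapshot, its prestate is copied once into a separate snapstore, but every SPT that still lacks the page must be updated.

```python
class SplitCowStore:
    """Toy split-COW store with one snapshot page table (SPT) per snapshot."""

    def __init__(self):
        self.db = {}         # current state, always updated in place
        self.snapstore = []  # append-only area holding copied-out prestates
        self.spts = []       # spts[s] maps page ID -> snapstore slot for snapshot s

    def snapshot(self):
        self.spts.append({})
        return len(self.spts) - 1

    def write(self, page, data):
        # Every snapshot declared since this page was last copied out still
        # needs its prestate (None records "page did not exist yet").
        needing = [spt for spt in self.spts if page not in spt]
        if needing:
            self.snapstore.append(self.db.get(page))  # copy the prestate out once...
            slot = len(self.snapstore) - 1
            for spt in needing:                         # ...but update every SPT
                spt[page] = slot
        self.db[page] = data  # the current state never moves

    def read(self, page, snap=None):
        if snap is None or page not in self.spts[snap]:
            # Unchanged since the snapshot: the current copy is the snapshot copy.
            return self.db.get(page)
        return self.snapstore[self.spts[snap][page]]
```

Writing the same slot into every waiting SPT is the expense this slide points out, and it grows with the number of snapshots declared since the page last changed.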

What’s Next
1. How to manage the metadata?
2. How will snapshot pages be accessed?
3. Can we be non-disruptive?

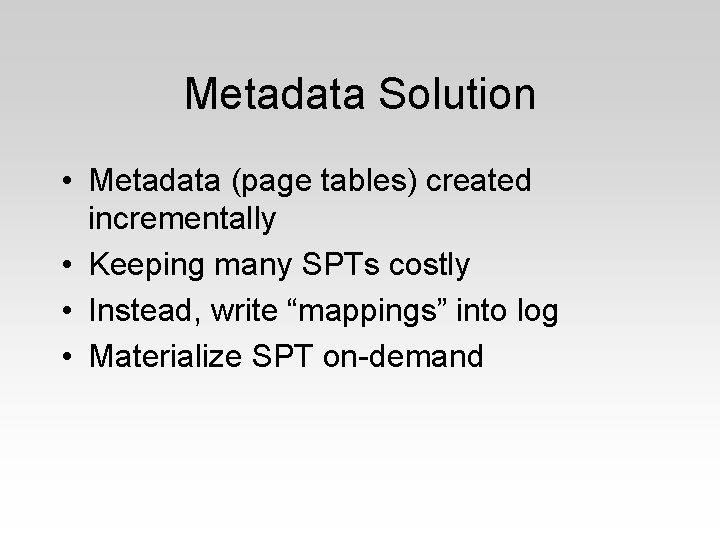

Metadata Solution
- Metadata (page tables) are created incrementally
- Keeping many snapshot page tables (SPTs) is costly
- Instead, write “mappings” into a log
- Materialize an SPT on demand

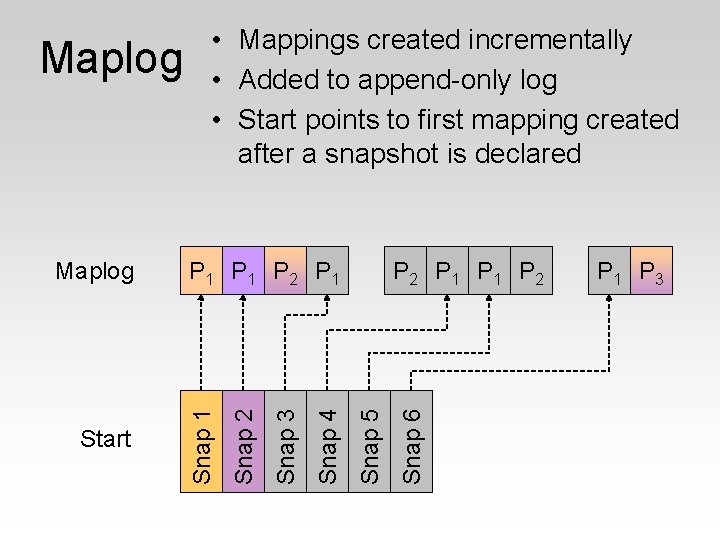

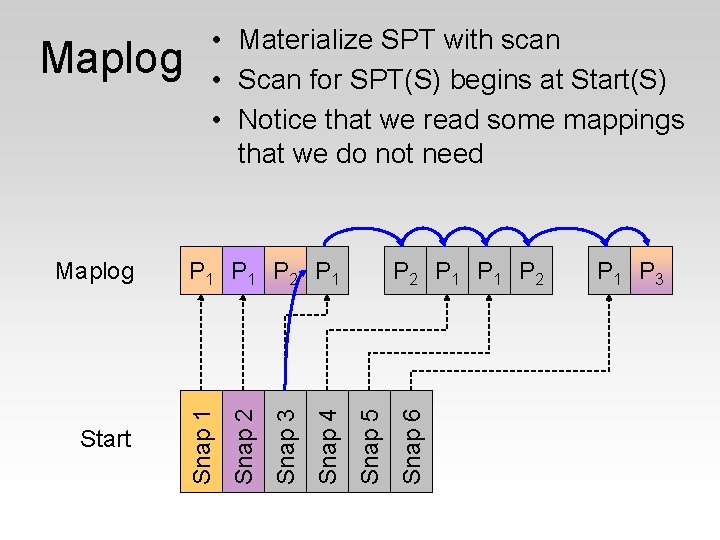

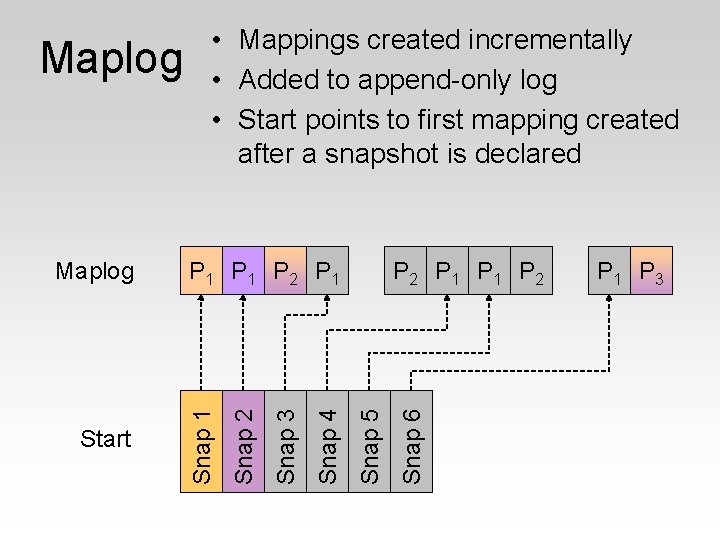

Maplog
- Mappings are created incrementally
- Added to an append-only log
- Start points to the first mapping created after a snapshot is declared

[Figure: the maplog, an append-only sequence of mappings for pages P1, P2, and P3 interleaved with snapshots Snap 1 through Snap 6, with Start pointers into the log.]

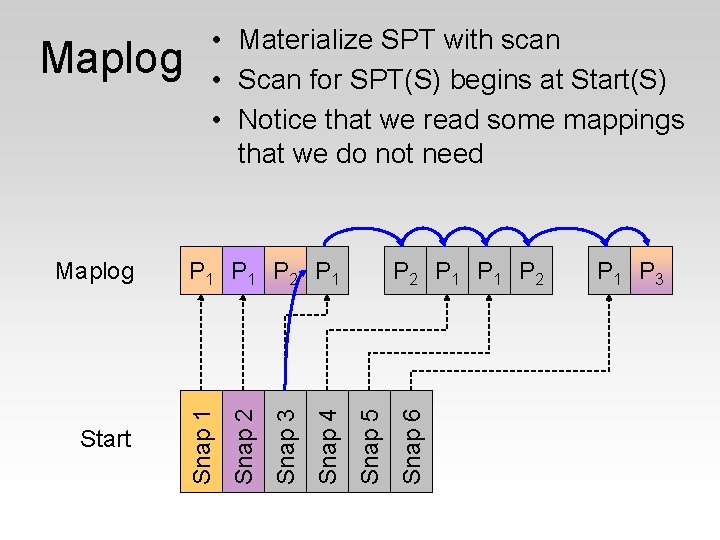

Maplog
- Materialize an SPT with a scan
- The scan for SPT(S) begins at Start(S)
- Notice that we read some mappings that we do not need

[Figure: the maplog of mappings for P1, P2, and P3 across Snap 1 through Snap 6, with Start pointers.]
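The maplog and the scan that materializes SPT(S) can be sketched as follows. This is an illustrative Python model under stated assumptions, not the paper's on-disk format: `Maplog`, `note_update`, and `materialize_spt` are hypothetical names, and we assume snapstore slots are assigned in the same order as mappings. A mapping is appended only the first time a page is updated after the latest snapshot, and the scan keeps the first mapping it sees for each page.

```python
class Maplog:
    """Append-only log of (page, snapstore slot) mappings with Start pointers."""

    def __init__(self, num_pages):
        self.num_pages = num_pages  # N: pages in the database
        self.log = []               # append-only list of (page, slot) mappings
        self.start = []             # start[s] = Start(s), a position in self.log
        self.copied = set()         # pages copied out since the latest snapshot

    def declare_snapshot(self):
        # Start(s) points at the first mapping created after s is declared.
        self.start.append(len(self.log))
        self.copied = set()
        return len(self.start) - 1

    def note_update(self, page):
        """Return the snapstore slot for this page's prestate, or None if no copy is needed."""
        if not self.start or page in self.copied:
            return None  # no snapshot yet, or already copied since the latest snapshot
        self.copied.add(page)
        slot = len(self.log)  # assumption: snapstore slots are assigned in log order
        self.log.append((page, slot))
        return slot

    def materialize_spt(self, s):
        """Scan from Start(s); the first mapping seen for each page belongs to SPT(s)."""
        spt, reads = {}, 0
        for page, slot in self.log[self.start[s]:]:
            reads += 1
            if page not in spt:
                spt[page] = slot  # later mappings for this page serve later snapshots
            if len(spt) == self.num_pages:
                break  # every page is mapped: no need to scan further
        return spt, reads
```

The `reads` count includes mappings passed over because an earlier one for the same page was already found; these are the unneeded mappings the slide mentions. The early exit once all N pages are mapped is why a scan never needs to run past one overwrite cycle.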

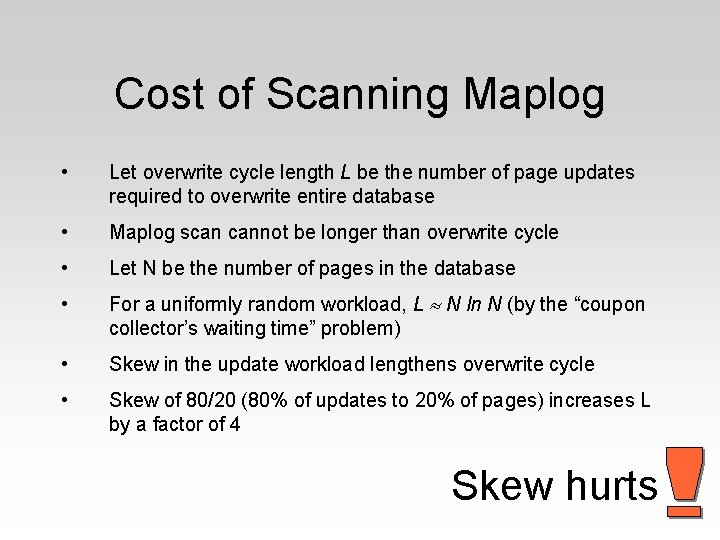

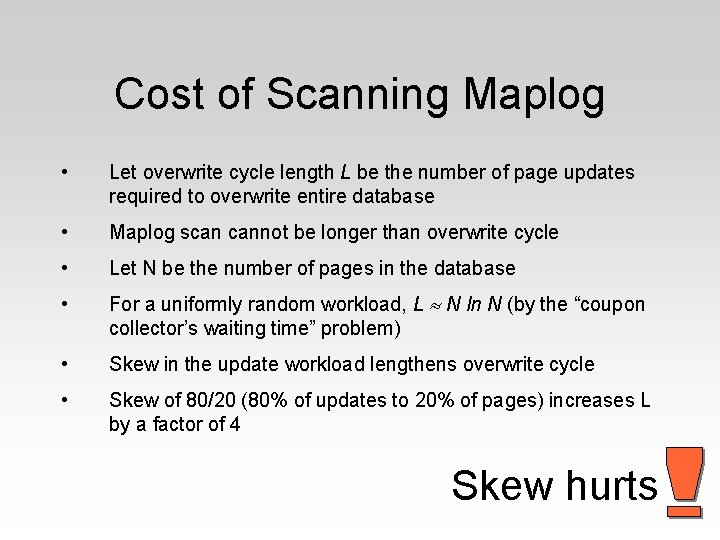

Cost of Scanning Maplog
- Let the overwrite cycle length L be the number of page updates required to overwrite the entire database
- A maplog scan cannot be longer than the overwrite cycle (after a full cycle, every page already has a mapping)
- Let N be the number of pages in the database
- For a uniformly random workload, $L \approx N \ln N$ (by the “coupon collector’s waiting time” problem)
- Skew in the update workload lengthens the overwrite cycle
- A skew of 80/20 (80% of updates go to 20% of pages) increases L by a factor of 4
- Skew hurts
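A quick Monte Carlo check of the overwrite-cycle argument, assuming each update picks a page independently, either uniformly or 80/20 with a fixed hot set of 20% of pages. The helper names are our own. The exact expectation in the uniform case is $N H_N$ ($H_N$ being the $N$-th harmonic number), whose leading term is $N \ln N$.

```python
import math
import random


def overwrite_cycle(num_pages, pick_page, rng):
    """Count updates until every page has been overwritten at least once."""
    seen = set()
    updates = 0
    while len(seen) < num_pages:
        seen.add(pick_page(rng))
        updates += 1
    return updates


def uniform(n):
    return lambda rng: rng.randrange(n)


def skewed(n, hot_frac=0.2, hot_prob=0.8):
    """Send hot_prob of updates to the first hot_frac of pages (80/20 by default)."""
    hot = max(1, int(n * hot_frac))

    def pick(rng):
        if rng.random() < hot_prob:
            return rng.randrange(hot)
        return rng.randrange(hot, n)

    return pick


def mean_cycle(n, picker, trials, seed=0):
    rng = random.Random(seed)
    return sum(overwrite_cycle(n, picker, rng) for _ in range(trials)) / trials


if __name__ == "__main__":
    n = 1000
    uni = mean_cycle(n, uniform(n), trials=40)
    sk = mean_cycle(n, skewed(n), trials=40)
    print(f"N ln N    = {n * math.log(n):.0f}")
    print(f"uniform L = {uni:.0f}")
    print(f"80/20 L   = {sk:.0f} ({sk / uni:.1f}x uniform)")
```

For the 80/20 case, the cold 80% of pages receive only 20% of updates, so each cold page is hit about a quarter as often as under a uniform workload; that is the intuition behind the roughly fourfold longer cycle.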

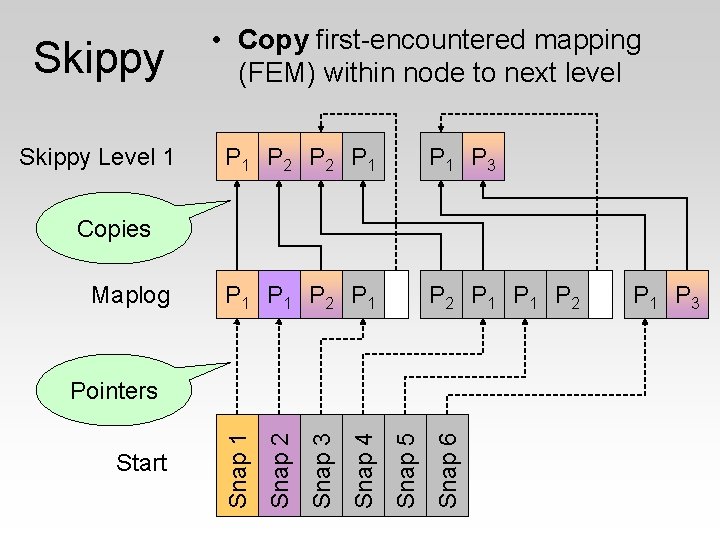

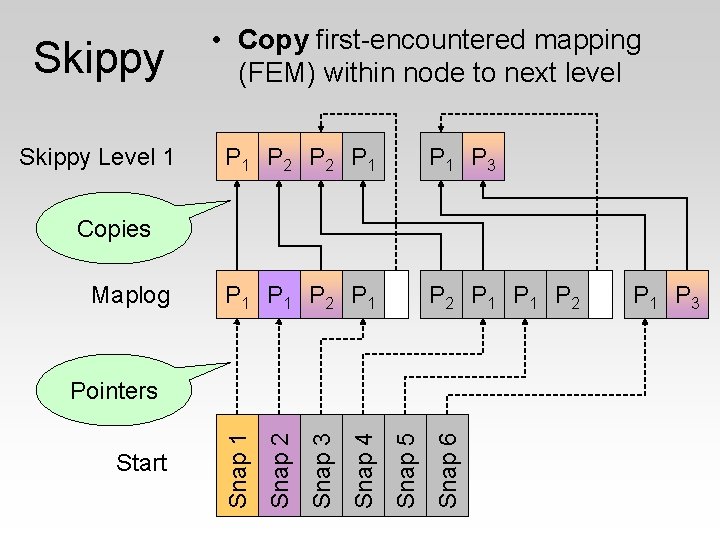

Skippy Level 1
- Copy the first-encountered mappings (FEMs) within each node to the next level

[Figure: maplog nodes holding mappings for P1, P2, and P3 across Snap 1 through Snap 6; FEMs are copied up to Skippy level 1, with pointers between the levels and a Start pointer.]

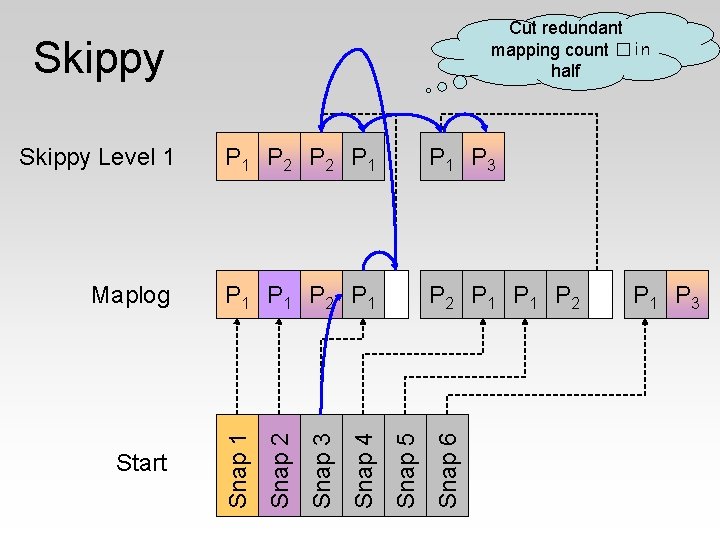

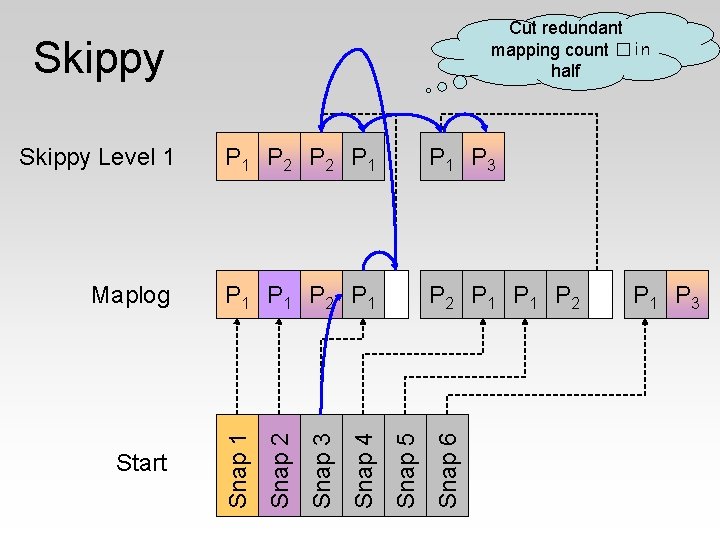

Skippy
- Cut the redundant mapping count in half

[Figure: the maplog and Skippy level 1 (mappings for P1, P2, and P3 across Snap 1 through Snap 6), with the Start pointer.]
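A single-level Skippy can be sketched on top of a maplog held as a Python list of `(page, slot)` pairs. This is a simplified illustration under our own assumptions: fixed-size nodes, level-1 entry i holding the FEMs of maplog node i, and index arithmetic in place of the pointers shown on the slide; the function names are hypothetical. Materialization scans the rest of the starting node in the maplog, then reads only FEMs for later nodes, and still yields the same SPT because the first mapping for a page in a later node is always that node's FEM for the page.

```python
def first_encountered(node):
    """Return the first-encountered mapping (FEM) for each page in a node, in order."""
    seen, fems = set(), []
    for page, slot in node:
        if page not in seen:
            seen.add(page)
            fems.append((page, slot))
    return fems


def build_level1(log, node_size):
    """Hypothetical layout: level-1 entry i holds the FEMs of maplog node i."""
    nodes = [log[i:i + node_size] for i in range(0, len(log), node_size)]
    return [first_encountered(node) for node in nodes]


def materialize(log, start, node_size, level1=None):
    """Build the SPT for a snapshot whose Start pointer is `start`; count mappings read."""
    spt, reads = {}, 0

    def visit(entries):
        nonlocal reads
        for page, slot in entries:
            reads += 1
            spt.setdefault(page, slot)  # keep only the first mapping per page

    if level1 is None:
        visit(log[start:])  # plain maplog scan
    else:
        node = start // node_size
        visit(log[start:(node + 1) * node_size])  # rest of the starting node
        for fems in level1[node + 1:]:            # later nodes: read FEMs only
            visit(fems)
    return spt, reads
```

Repeating the FEM copy on level-1 nodes would give a further level; the K-level structure builds on the same idea.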

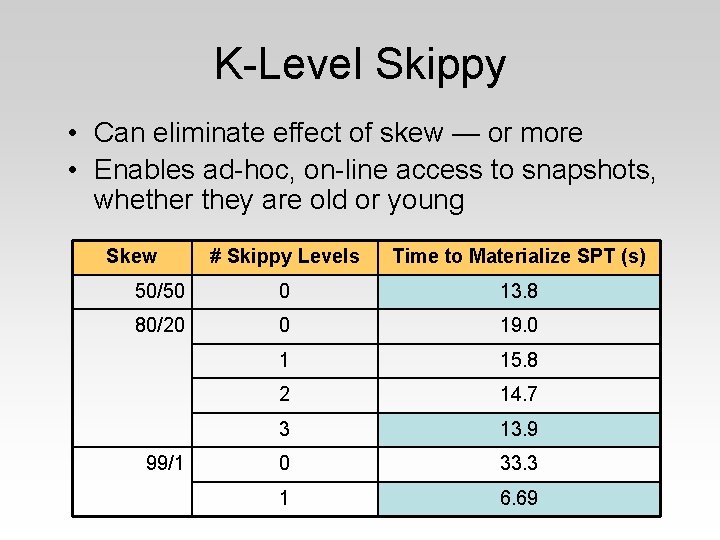

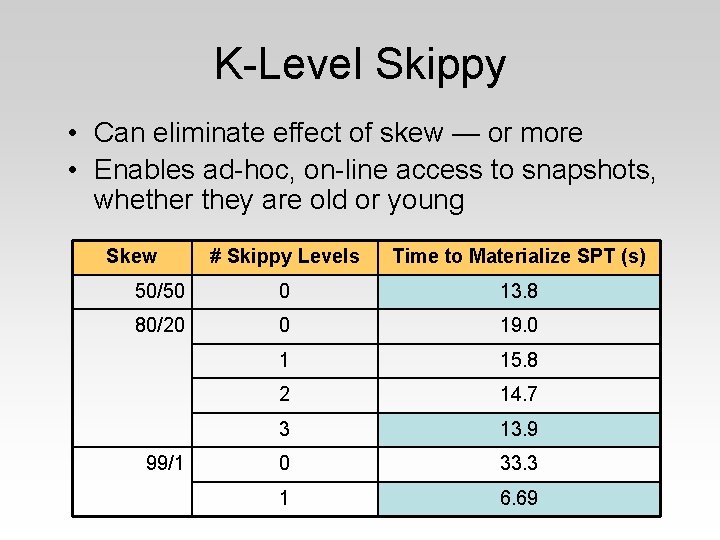

K-Level Skippy
- Can eliminate the effect of skew, or more
- Enables ad-hoc, on-line access to snapshots, whether they are old or young

| Skew | # Skippy Levels | Time to Materialize SPT (s) |
|---|---|---|
| 50/50 | 0 | 13.8 |
| 80/20 | 0 | 19.0 |
| 80/20 | 1 | 15.8 |
| 80/20 | 2 | 14.7 |
| 80/20 | 3 | 13.9 |
| 99/1 | 0 | 33.3 |
| 99/1 | 1 | 6.69 |

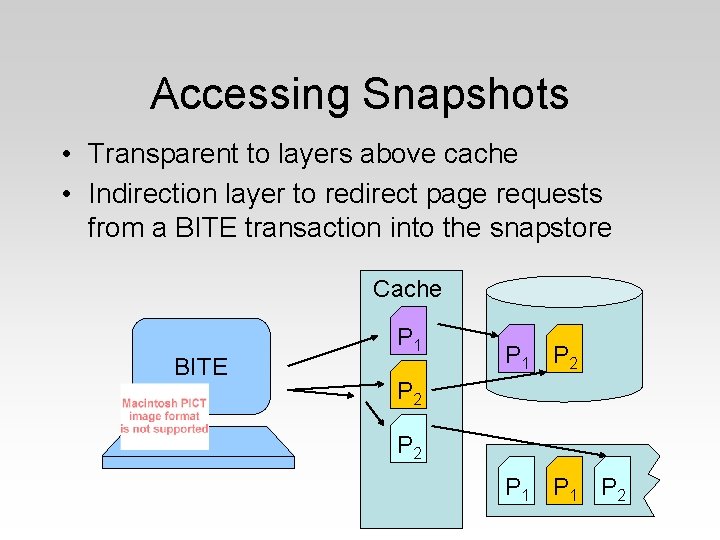

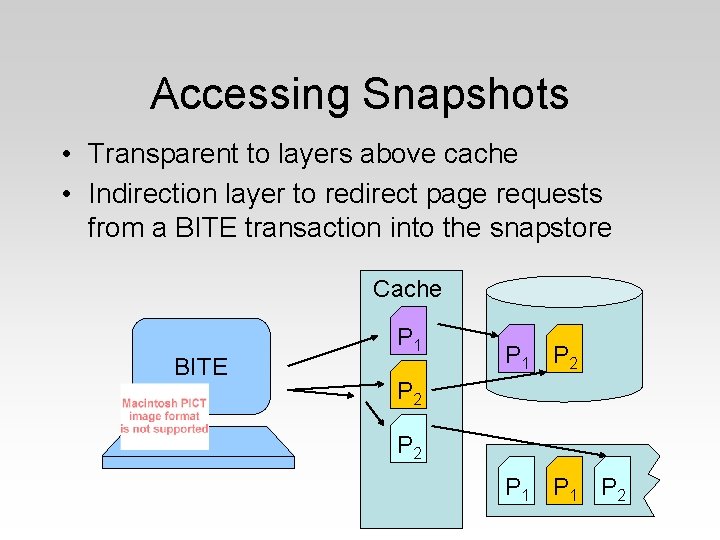

Accessing Snapshots
- Transparent to layers above the cache
- An indirection layer redirects page requests from a BITE transaction into the snapstore

[Figure: the cache serves Read Current State requests from current pages and BITE requests from snapstore copies of P1 and P2.]
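A minimal sketch of the indirection layer, assuming a snapshot's SPT maps page IDs to snapstore slots (as a maplog scan would produce); `SnapshotBufferManager` and `get_page` are hypothetical names, not Berkeley DB's API. Code above the cache just asks for a page; only this layer checks whether the request comes from a BITE transaction.

```python
class SnapshotBufferManager:
    """Hypothetical page-request layer that serves BITE reads from the snapstore."""

    def __init__(self, current_pages, snapstore, materialize_spt):
        self.current = current_pages            # page ID -> current page contents
        self.snapstore = snapstore              # slot -> copied-out prestate
        self.materialize_spt = materialize_spt  # snapshot ID -> {page ID: slot}

    def get_page(self, page, snapshot=None):
        if snapshot is None:
            return self.current[page]  # ordinary request: current state
        # Re-materialized on every call so the sketch stays correct while new
        # mappings keep arriving; a real system would cache and extend the SPT.
        slot = self.materialize_spt(snapshot).get(page)
        if slot is None:
            # No mapping: the page has not been overwritten since the snapshot,
            # so the current copy is also the snapshot copy.
            return self.current[page]
        return self.snapstore[slot]
```

Pages with no mapping are shared with the current state, so a snapshot only costs snapstore space for pages that actually changed.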

Non-Disruptiveness
- Can we create Skippy and COW prestates without disrupting the current state?
- Key idea:
  - Leverage recovery to defer all snapshot-related writes
  - Write snapshot data in the background to a secondary disk

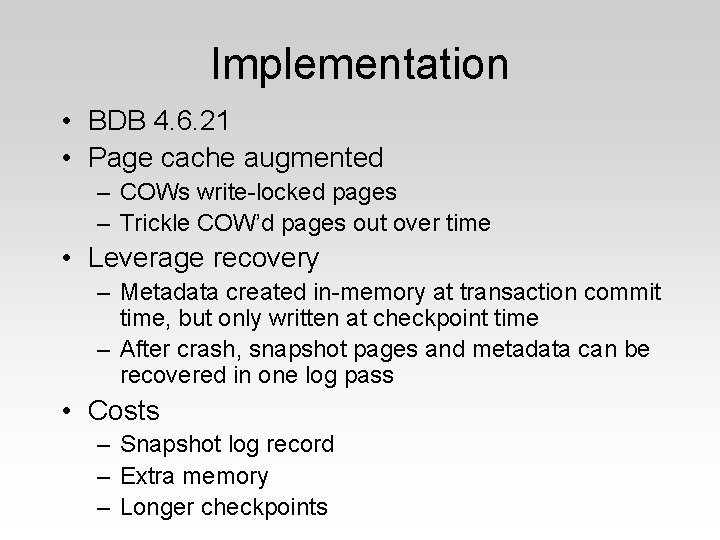

Implementation
- Berkeley DB (BDB) 4.6.21
- Page cache augmented
  - COWs write-locked pages
  - Trickles COW’d pages out over time
- Leverage recovery
  - Metadata created in memory at transaction commit time, but only written at checkpoint time
  - After a crash, snapshot pages and metadata can be recovered in one log pass
- Costs
  - Snapshot log record
  - Extra memory
  - Longer checkpoints

Early Disruptiveness Results
- Single-threaded updating workload of 100,000 transactions
- 66 M database
- We can retain a snapshot after every transaction for a 6–8% penalty to writers
- Tests with readers show little impact on sequential scans (not depicted)

Paper Trail
- Upcoming poster and short paper at ICDE 08
- “Skippy: a New Snapshot Indexing Method for Time Travel in the Storage Manager” to appear in SIGMOD 08
- Poster and workshop talks
  - NEDBDay 08, SYSTOR 08

Questions?

Backups…

Recovery Sketch 1
- Snapshots are crash consistent
- Must recover data and metadata for all snapshots since the last checkpoint
- Pages might have been trickled, so we must truncate the snapstore back to the last mapping before the previous checkpoint
- We require only that a snapshot log record be forced into the log with a group commit; no other data or metadata must be logged until checkpoint

Recovery Sketch 2
- Walk backward through the WAL, applying UNDOs
- When a snapshot record is encountered, copy the “dirty” pages and create a mapping
- The trouble is that snapshots can be concurrent with transactions
- Cope with this by “COWing” a page when an UNDO for a different transaction is applied to that page

The Future
- Sometimes we want to scrub the past
  - Running out of space?
  - Retention windows for SOX compliance
- Change the past-state representation
  - Deduplication
  - Compression