Speech-to-Speech MT in the JANUS System, Lori Levin


- Slides: 57

Speech-to-Speech MT in the JANUS System

Lori Levin and Alon Lavie, Language Technologies Institute, Carnegie Mellon University

4/24/00 UMD Seminar

Outline

- Design and Engineering of the JANUS/C-STAR speech-to-speech MT system
  - Fundamentals of our approach
  - System overview
  - Engineering a multi-domain system
- The C-STAR Travel Domain Interlingua (IF)
- Evaluation and User Studies
- Conclusions, Current and Future Research

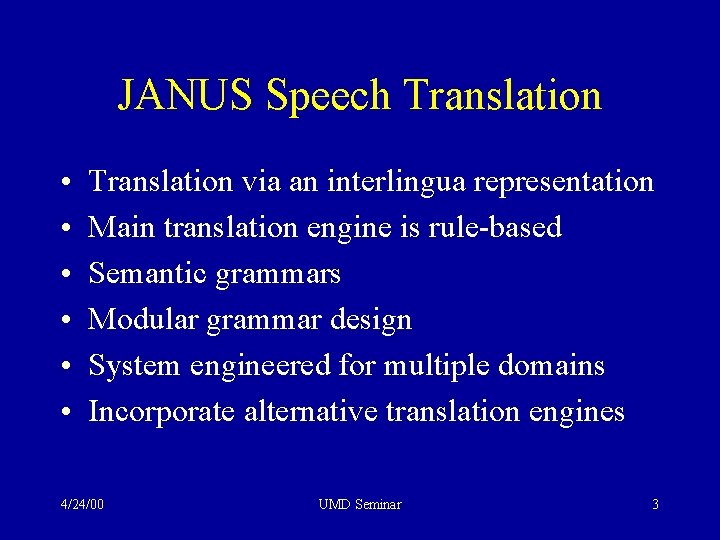

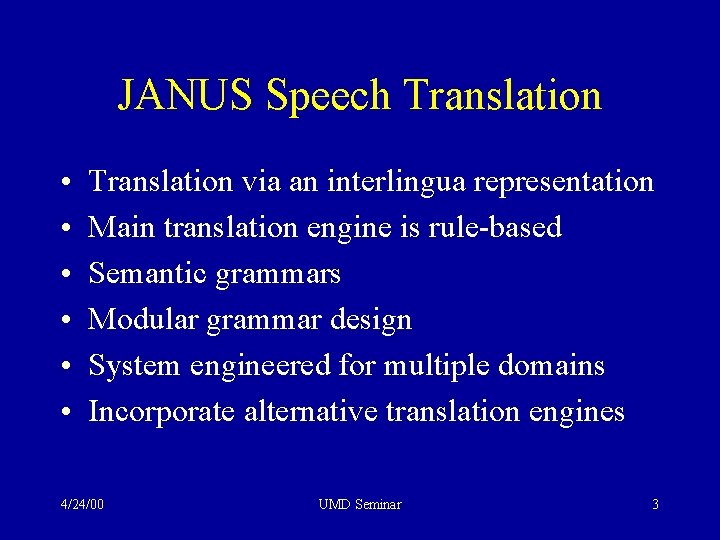

JANUS Speech Translation

- Translation via an interlingua representation
- Main translation engine is rule-based
  - Semantic grammars
  - Modular grammar design
- System engineered for multiple domains
- Incorporate alternative translation engines

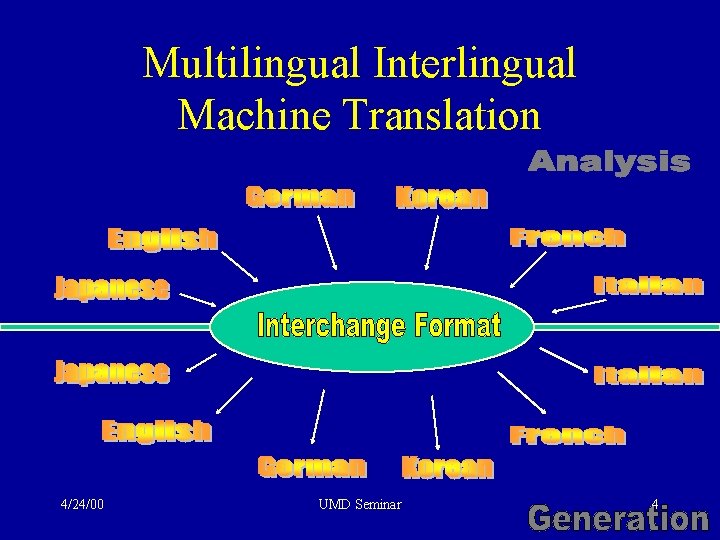

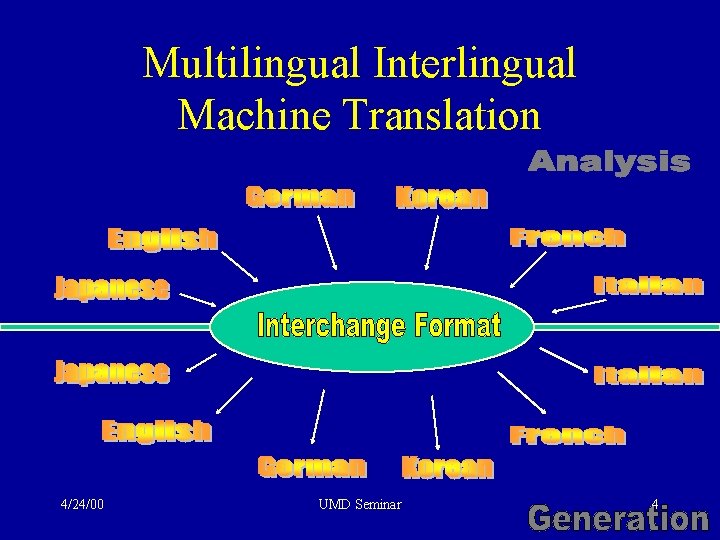

Multilingual Interlingual Machine Translation

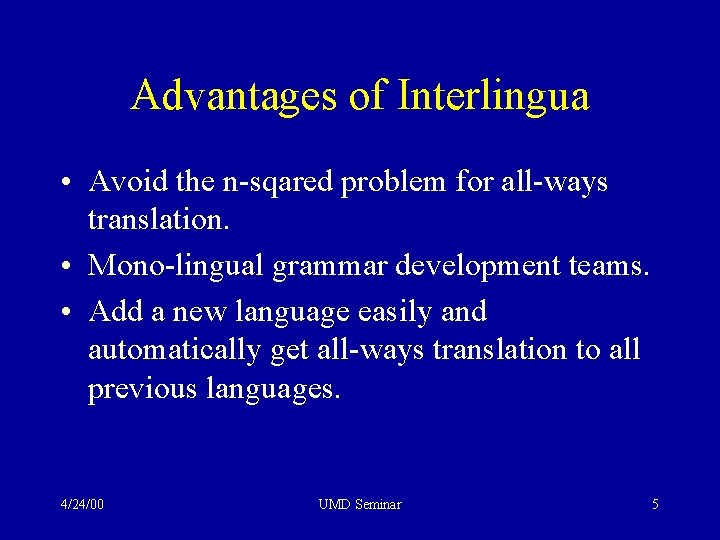

Advantages of Interlingua

- Avoid the n-squared problem for all-ways translation.
- Monolingual grammar development teams.
- Add a new language easily and automatically get all-ways translation to all previous languages.
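A quick count makes the n-squared point concrete. This is a sketch of the standard counting argument, not a figure taken from the slides: with direct or transfer-based MT every ordered language pair needs its own system, n(n-1) in total, while an interlingua system needs one analyzer into the IF and one generator out of it per language, 2n in total. The function names are illustrative.

```python
def transfer_systems(n: int) -> int:
    """Directional pair-specific systems needed for all-ways direct/transfer MT."""
    return n * (n - 1)


def interlingua_modules(n: int) -> int:
    """One analyzer (language -> IF) plus one generator (IF -> language) per language."""
    return 2 * n


def added_by_new_language(n_existing: int) -> tuple[int, int]:
    """Extra components needed when one language joins n_existing languages."""
    transfer = transfer_systems(n_existing + 1) - transfer_systems(n_existing)
    interlingua = interlingua_modules(n_existing + 1) - interlingua_modules(n_existing)
    return transfer, interlingua


if __name__ == "__main__":
    for n in (2, 4, 6, 10):
        print(f"{n} languages: {transfer_systems(n)} transfer systems "
              f"vs {interlingua_modules(n)} interlingua modules")
    print("adding a 5th language:", added_by_new_language(4))
```

With four languages the counts are 12 versus 8; adding a fifth costs 8 new transfer systems but only 2 new interlingua modules, which is the "add a new language easily" point above.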

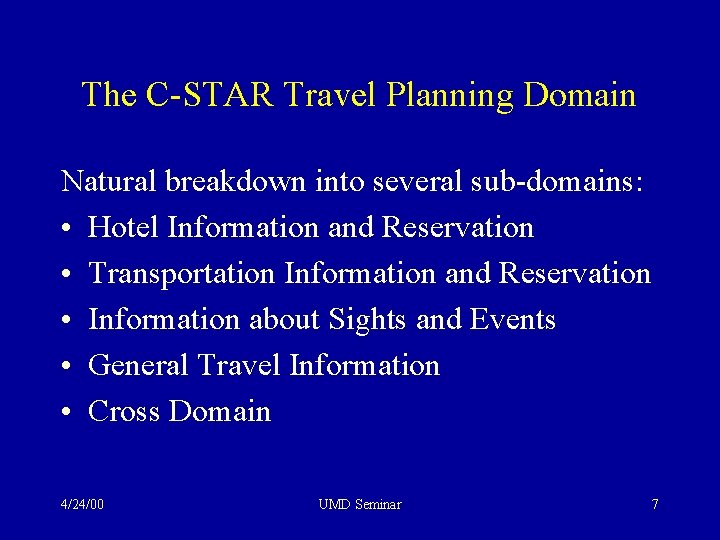

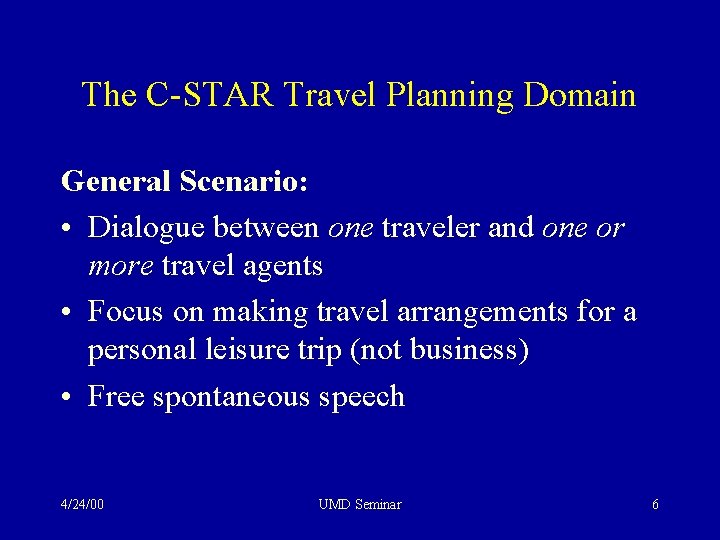

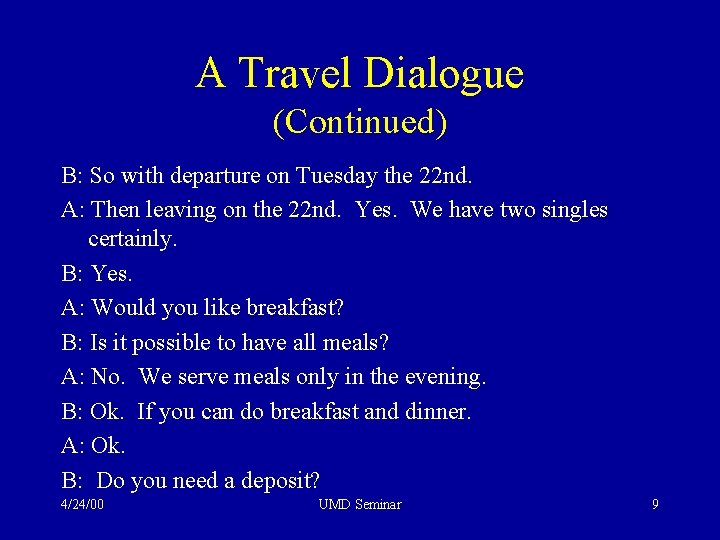

The C-STAR Travel Planning Domain

General scenario:

- Dialogue between one traveler and one or more travel agents
- Focus on making travel arrangements for a personal leisure trip (not business)
- Free spontaneous speech

The C-STAR Travel Planning Domain

Natural breakdown into several sub-domains:

- Hotel Information and Reservation
- Transportation Information and Reservation
- Information about Sights and Events
- General Travel Information
- Cross Domain

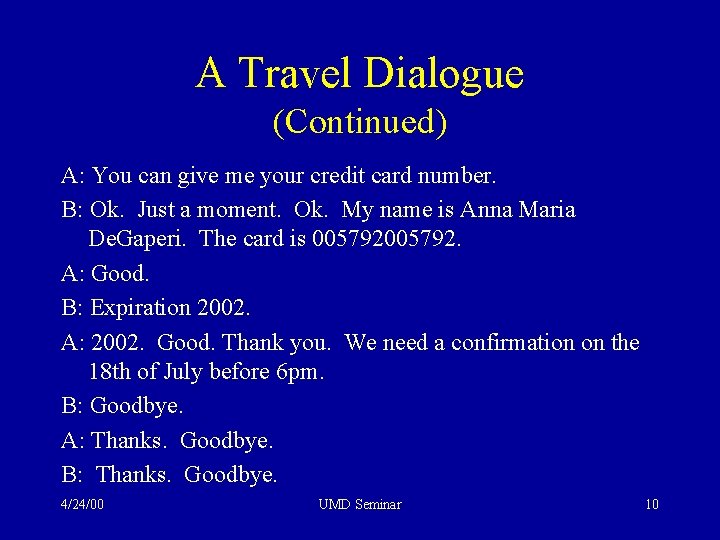

A Travel Dialogue Translated from Italian

A: Albergo Gabbia D’Oro. Good evening.

B: My name is Anna Maria De Gasperi. I’m calling from Rome. I wish to book two single rooms.

A: Yes.

B: From Monday to Friday the 18th, I’m sorry, to Monday the 21st.

A: Friday the 18th of June.

B: The 18th of July. I’m sorry.

A: Friday the 18th of July to, you were saying, Sunday.

B: No. Through Monday the 21st.

A Travel Dialogue (Continued)

B: So with departure on Tuesday the 22nd.

A: Then leaving on the 22nd. Yes. We have two singles certainly.

B: Yes.

A: Would you like breakfast?

B: Is it possible to have all meals?

A: No. We serve meals only in the evening.

B: Ok. If you can do breakfast and dinner.

A: Ok.

B: Do you need a deposit?

A Travel Dialogue (Continued)

A: You can give me your credit card number.

B: Ok. Just a moment. Ok. My name is Anna Maria De Gasperi. The card is 005792.

A: Good.

B: Expiration 2002.

A: 2002. Good. Thank you. We need a confirmation on the 18th of July before 6 pm.

B: Goodbye.

A: Thanks. Goodbye.

B: Thanks. Goodbye.

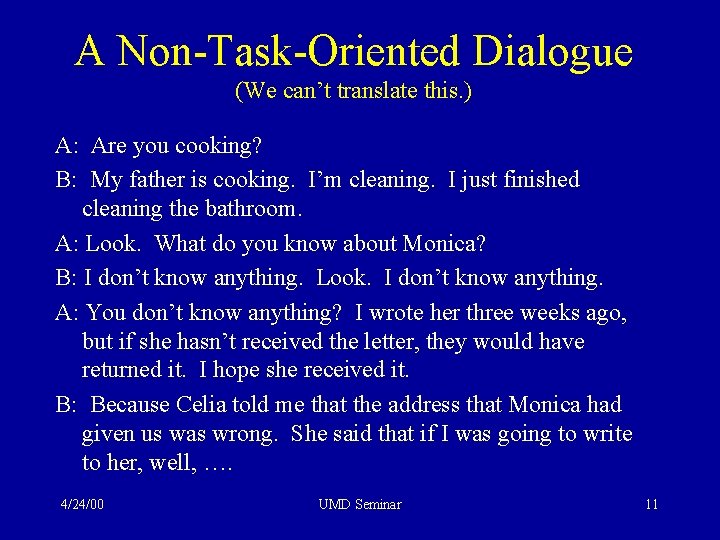

A Non-Task-Oriented Dialogue (We can’t translate this.)

A: Are you cooking?

B: My father is cooking. I’m cleaning. I just finished cleaning the bathroom.

A: Look. What do you know about Monica?

B: I don’t know anything. Look. I don’t know anything.

A: You don’t know anything? I wrote her three weeks ago, but if she hasn’t received the letter, they would have returned it. I hope she received it.

B: Because Celia told me that the address that Monica had given us was wrong. She said that if I was going to write to her, well, ….

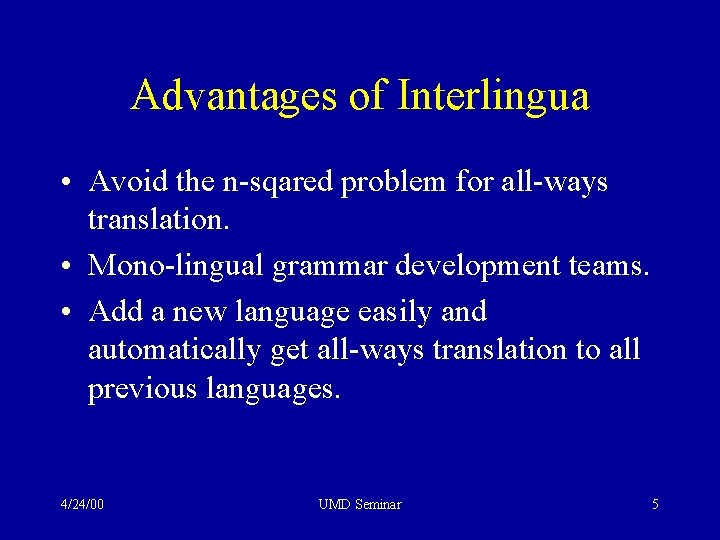

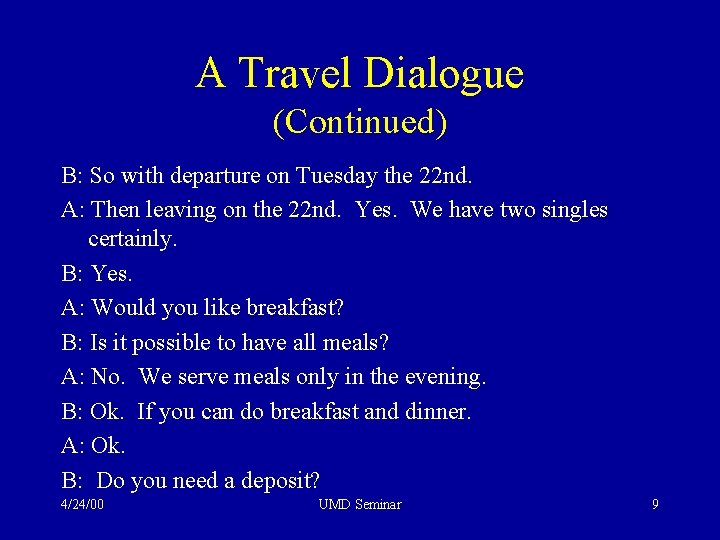

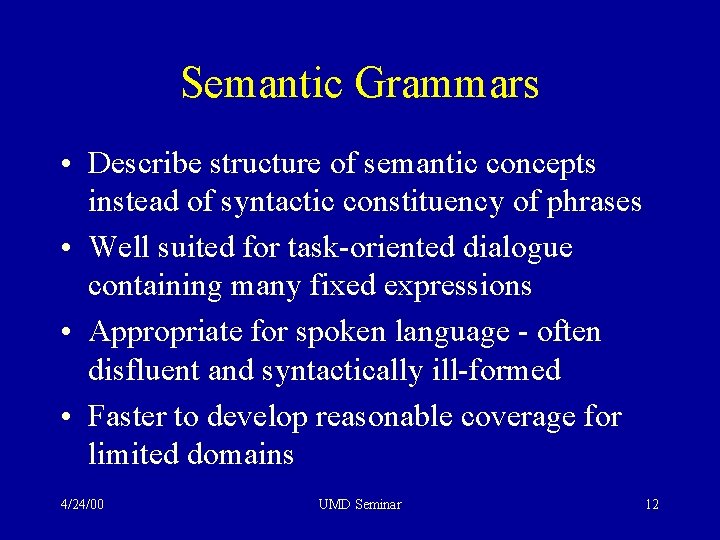

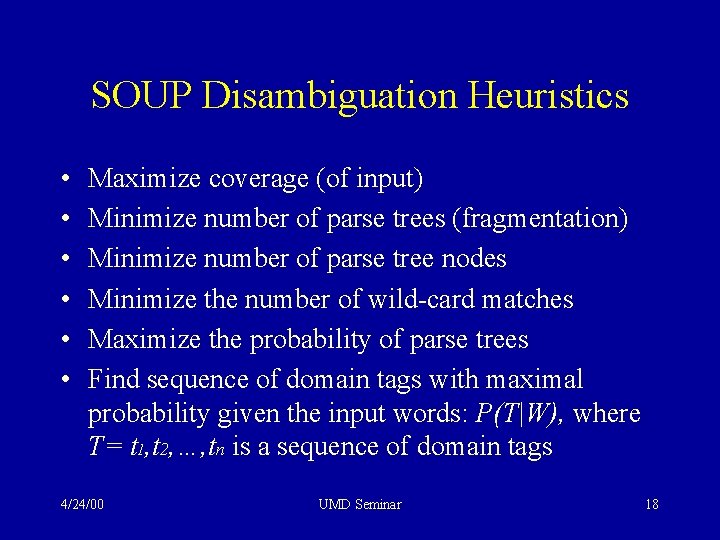

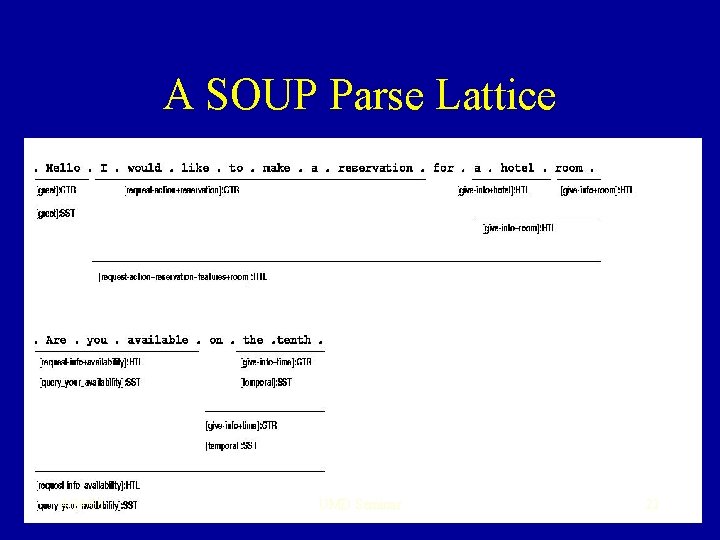

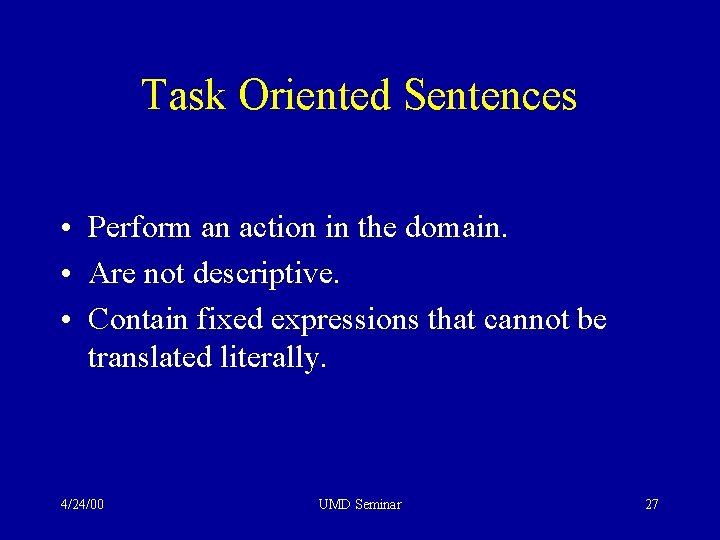

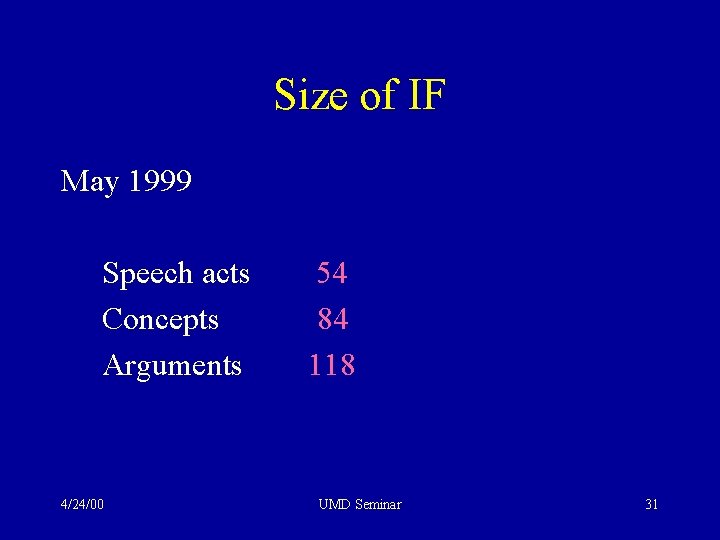

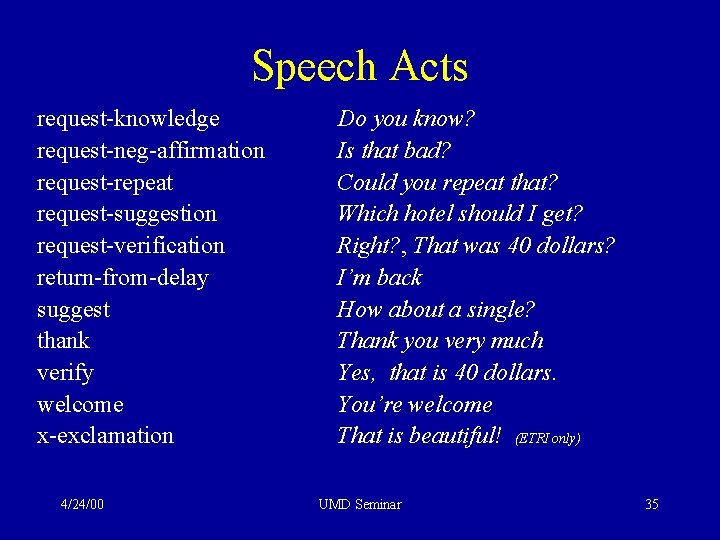

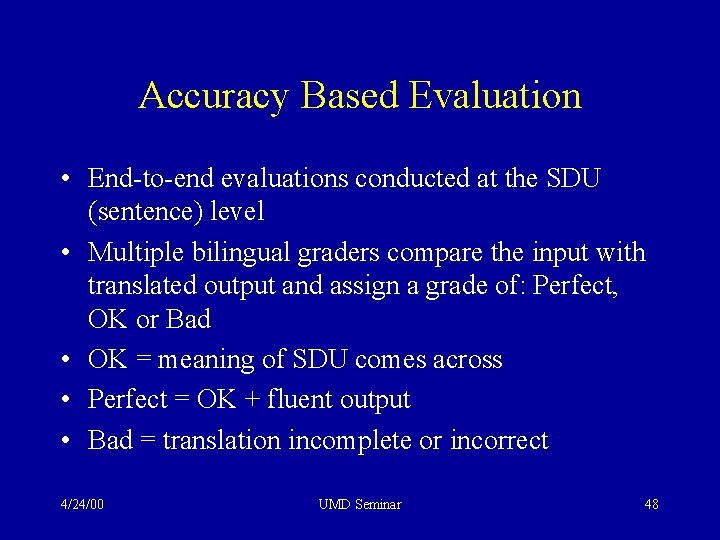

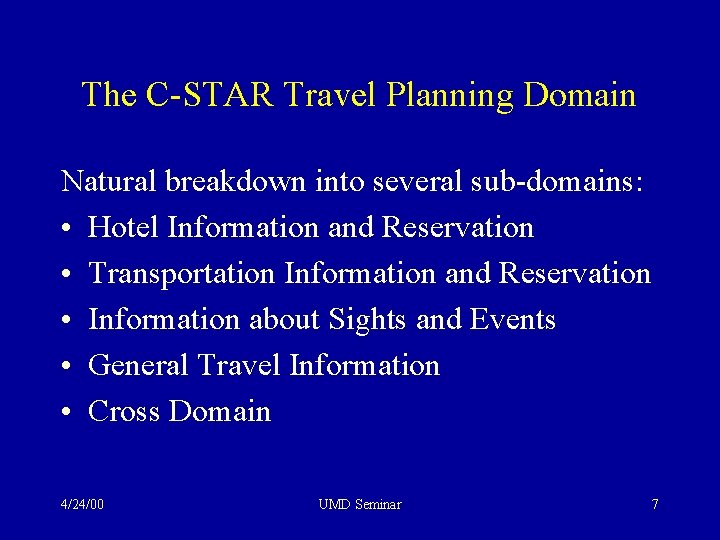

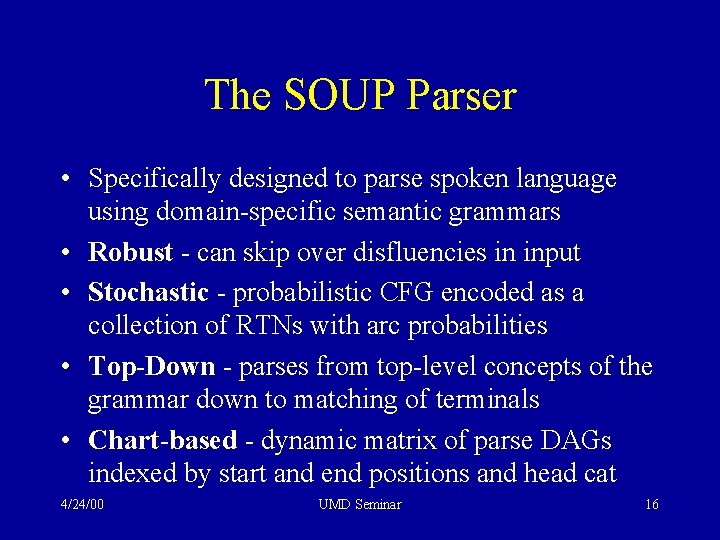

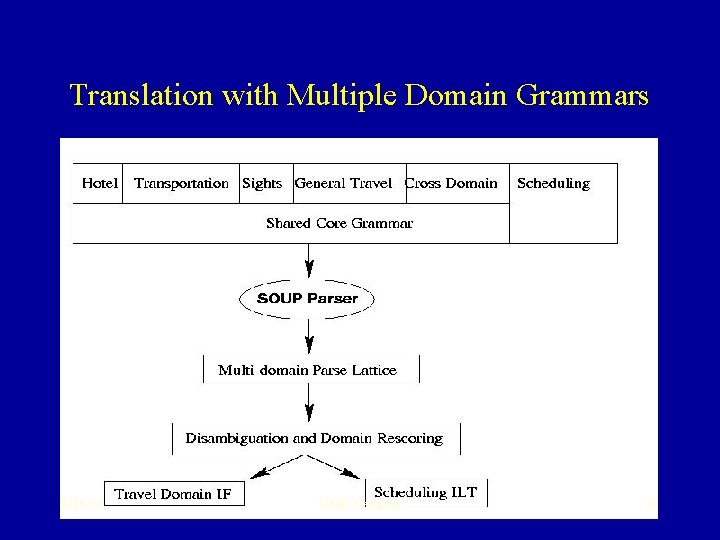

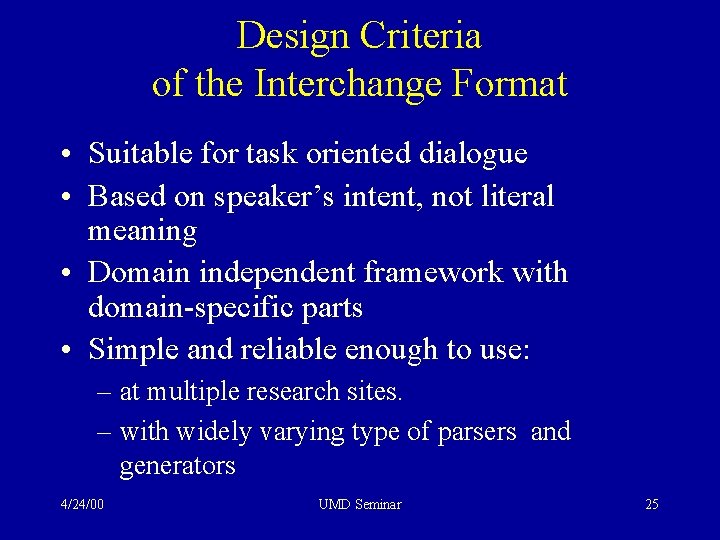

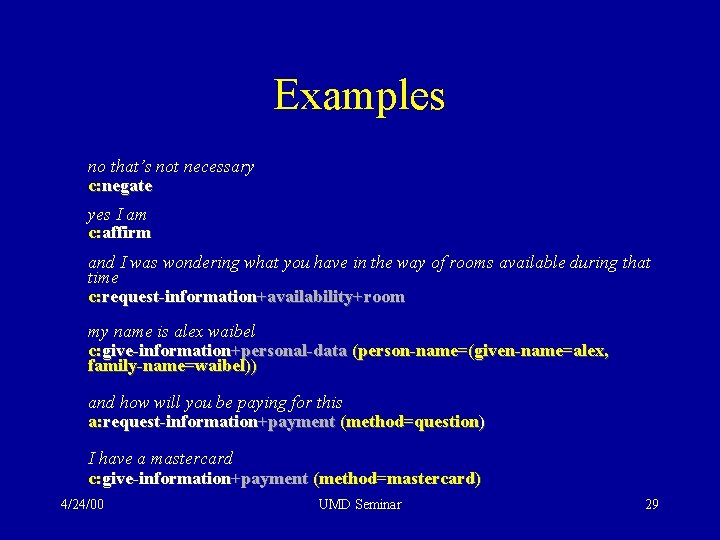

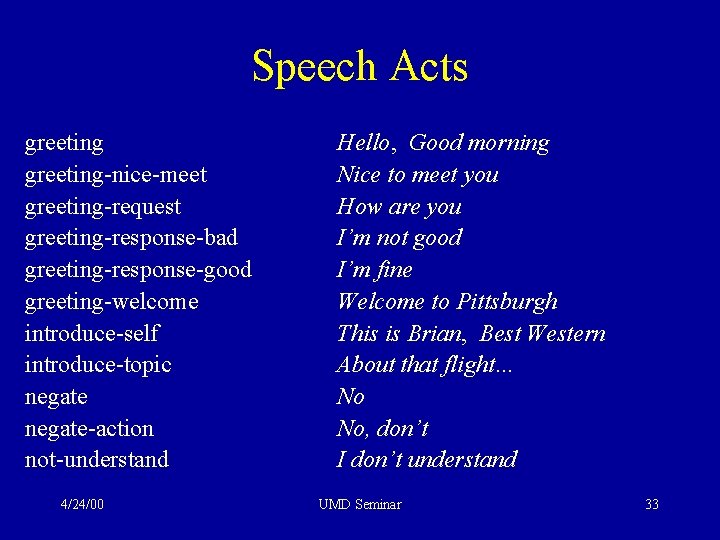

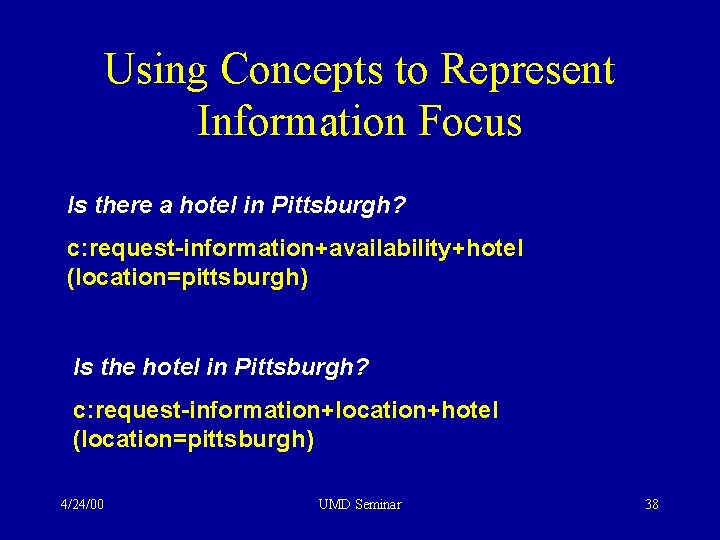

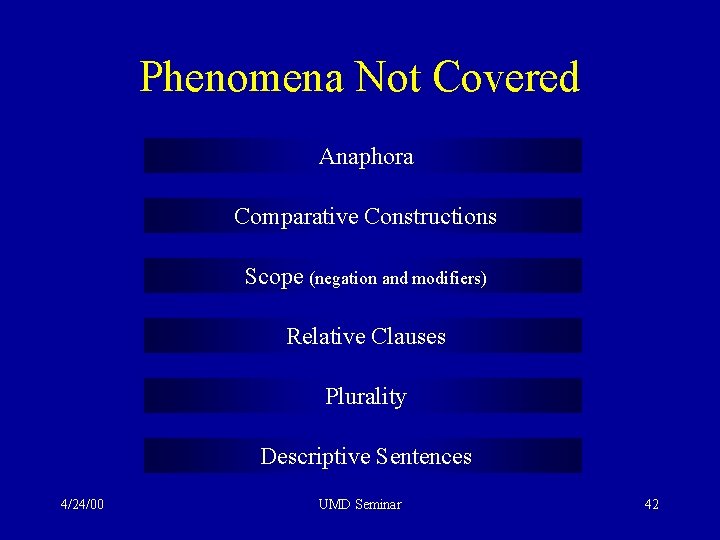

Semantic Grammars

- Describe the structure of semantic concepts instead of the syntactic constituency of phrases
- Well suited for task-oriented dialogue containing many fixed expressions
- Appropriate for spoken language, which is often disfluent and syntactically ill-formed
- Faster to develop reasonable coverage for limited domains


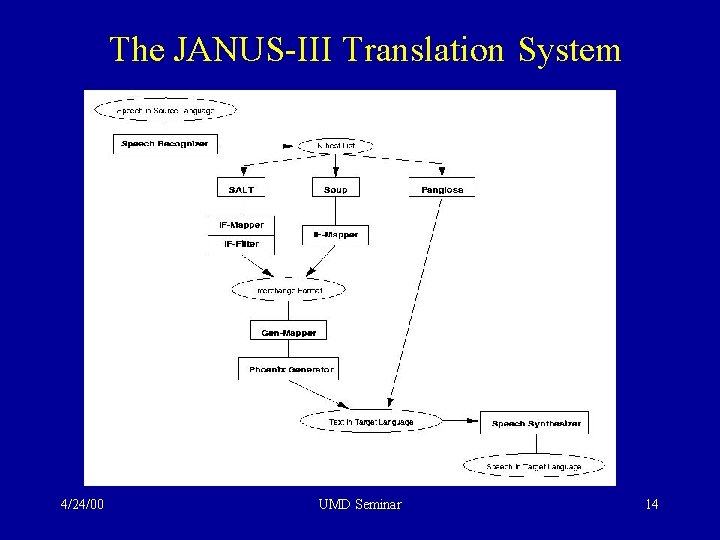

Semantic Grammars: Hotel Reservation Example

Input: we have two hotels available

Parse tree: `[give-information+availability+hotel] ( we have [hotel-type] ( [quantity=] ( two ) [hotel] ( hotels ) ) available )`
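To show how a semantic grammar yields a tree like the one for "we have two hotels available", here is a minimal top-down matcher. It is a sketch under stated assumptions: the rules in `GRAMMAR` are hypothetical toy rules modeled on the slide's example, not the actual JANUS grammar, and the matcher takes the first alternative that succeeds rather than backtracking fully. Nonterminals are concept names in square brackets; everything else is a terminal word.

```python
from typing import Optional

# Hypothetical toy rules modeled on the slide's example (concept -> alternative RHSs).
GRAMMAR: dict[str, list[list[str]]] = {
    "[give-information+availability+hotel]": [["we", "have", "[hotel-type]", "available"]],
    "[hotel-type]": [["[quantity=]", "[hotel]"]],
    "[quantity=]": [["one"], ["two"], ["three"]],
    "[hotel]": [["hotel"], ["hotels"]],
}


def is_concept(symbol: str) -> bool:
    return symbol.startswith("[") and symbol.endswith("]")


def parse(symbol: str, words: list[str], pos: int) -> Optional[tuple[str, int]]:
    """Match `symbol` top-down at words[pos]; return (bracketed tree, next position)."""
    if not is_concept(symbol):
        if pos < len(words) and words[pos] == symbol:
            return symbol, pos + 1
        return None
    for alternative in GRAMMAR.get(symbol, []):
        parts, cur = [], pos
        for child in alternative:
            result = parse(child, words, cur)
            if result is None:
                break  # this alternative fails; try the next one
            subtree, cur = result
            parts.append(subtree)
        else:  # every child matched
            return f"{symbol} ( {' '.join(parts)} )", cur
    return None


def parse_sentence(top: str, sentence: str) -> Optional[str]:
    """Return the tree only if `top` covers the whole input."""
    words = sentence.split()
    result = parse(top, words, 0)
    if result is not None and result[1] == len(words):
        return result[0]
    return None


TOP = "[give-information+availability+hotel]"
print(parse_sentence(TOP, "we have two hotels available"))
```

The printed tree has the same shape as the slide's: the top-level concept names the domain action, and the lower concepts (`[hotel-type]`, `[quantity=]`, `[hotel]`) are semantic rather than syntactic units.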

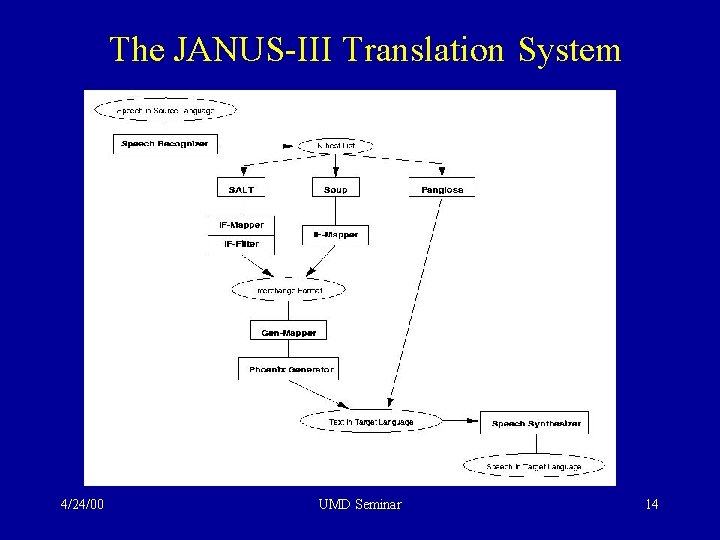

The JANUS-III Translation System

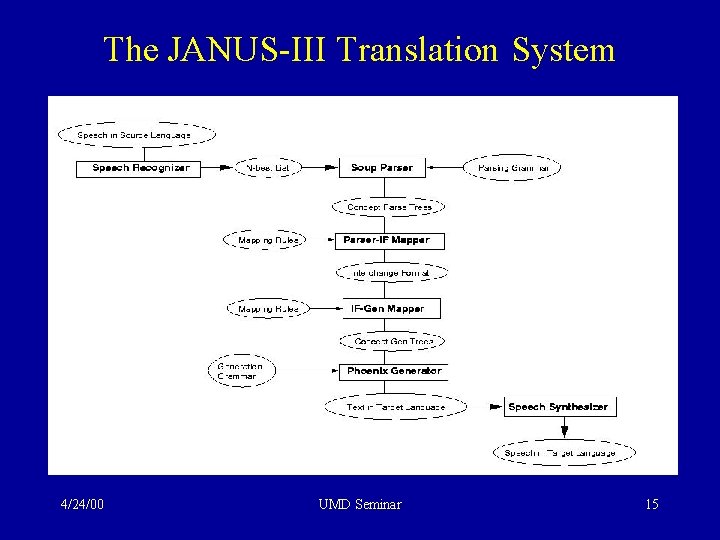

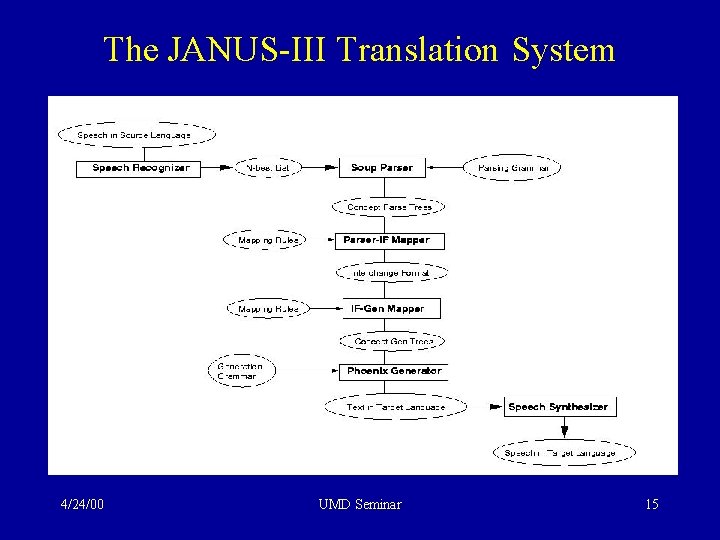

The JANUS-III Translation System

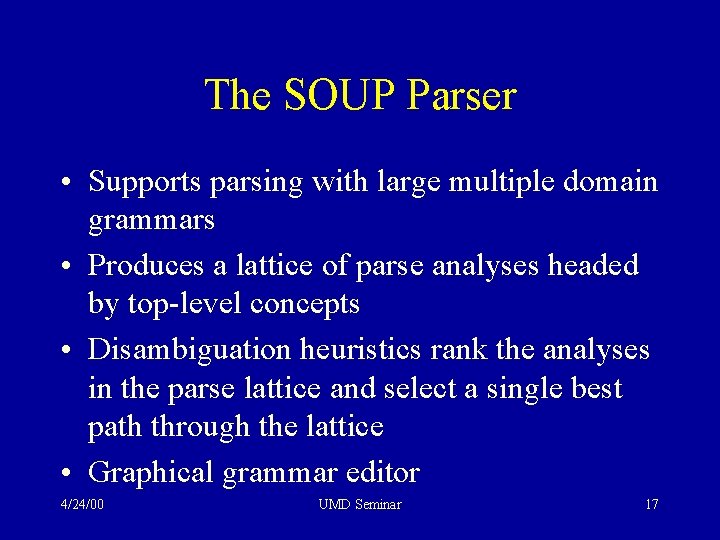

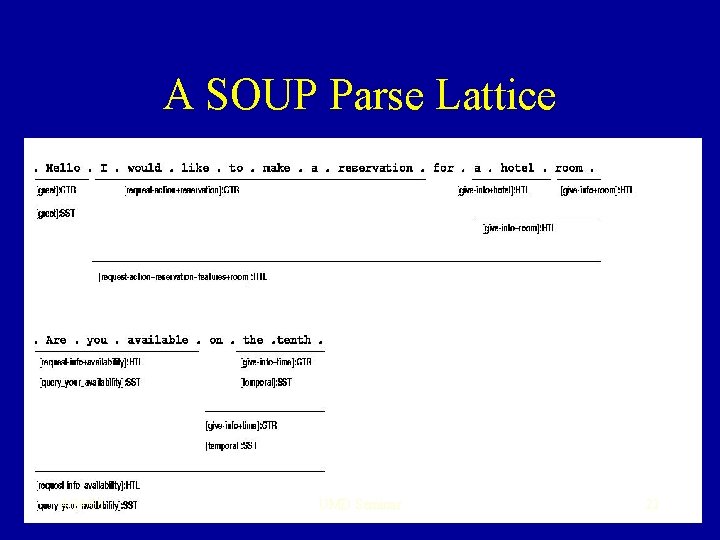

The SOUP Parser

- Specifically designed to parse spoken language using domain-specific semantic grammars
- Robust: can skip over disfluencies in the input
- Stochastic: a probabilistic CFG encoded as a collection of RTNs (recursive transition networks) with arc probabilities
- Top-down: parses from the top-level concepts of the grammar down to the matching of terminals
- Chart-based: a dynamic matrix of parse DAGs indexed by start position, end position, and head category
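The chart described above can be pictured as cells keyed by (start, end, head category). The sketch below is illustrative only: `Node` and `Chart` are hypothetical names, not SOUP's internal types. Because a stored node can be the child of several larger analyses, the analyses form a DAG rather than separate trees.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """One analysis of words[start:end] headed by concept `head` (hypothetical type)."""
    head: str
    start: int
    end: int
    children: tuple[Node, ...] = ()


class Chart:
    """Cells indexed by (start, end, head category); each cell holds alternative analyses."""

    def __init__(self) -> None:
        self.cells: defaultdict[tuple[int, int, str], list[Node]] = defaultdict(list)

    def add(self, node: Node) -> bool:
        """Store `node`; return False if an identical analysis is already in its cell."""
        cell = self.cells[(node.start, node.end, node.head)]
        if node in cell:
            return False
        cell.append(node)
        return True

    def lookup(self, start: int, end: int, head: str) -> list[Node]:
        # .get avoids creating empty cells as a side effect of lookups
        return list(self.cells.get((start, end, head), []))

    def spans_from(self, start: int) -> list[tuple[int, str]]:
        """(end, head) pairs of every stored constituent beginning at `start`."""
        return sorted((end, head) for (s, end, head) in self.cells if s == start)


# Word positions: 0 we, 1 have, 2 two, 3 hotels, 4 available
chart = Chart()
two = Node("[quantity=]", 2, 3)
hotels = Node("[hotel]", 3, 4)
for node in (two, hotels, Node("[hotel-type]", 2, 4, (two, hotels))):
    chart.add(node)
```

Rebuilding an identical `[hotel-type]` analysis later returns `False` from `add`, so the top-down search can reuse the stored constituent instead of re-deriving it.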

The SOUP Parser

- Supports parsing with large multiple-domain grammars
- Produces a lattice of parse analyses headed by top-level concepts
- Disambiguation heuristics rank the analyses in the parse lattice and select a single best path through the lattice
- Graphical grammar editor

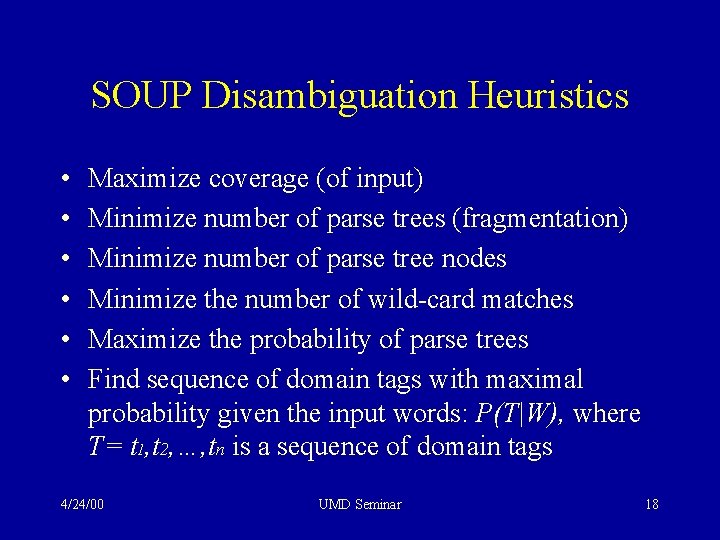

SOUP Disambiguation Heuristics

- Maximize coverage (of the input)
- Minimize the number of parse trees (fragmentation)
- Minimize the number of parse tree nodes
- Minimize the number of wild-card matches
- Maximize the probability of parse trees
- Find the sequence of domain tags with maximal probability given the input words, $\hat{T} = \arg\max_{T} P(T \mid W)$, where $T = t_1, t_2, \ldots, t_n$ is a sequence of domain tags
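The slide lists the heuristics but not how they are combined. A minimal sketch, assuming they are applied in the listed order as lexicographic tie-breakers (an assumption, not SOUP's documented behavior), looks like this. The `Analysis` fields are hypothetical summary statistics, and the domain-tag term $P(T \mid W)$ is left out.

```python
from dataclasses import dataclass


@dataclass
class Analysis:
    """Summary statistics of one candidate path through the parse lattice (hypothetical)."""
    label: str
    words_covered: int
    num_trees: int
    num_nodes: int
    num_wildcards: int
    log_prob: float


def ranking_key(a: Analysis) -> tuple[int, int, int, int, float]:
    # min() prefers smaller keys, so the quantities to be maximized are negated.
    return (-a.words_covered, a.num_trees, a.num_nodes, a.num_wildcards, -a.log_prob)


def best_analysis(candidates: list[Analysis]) -> Analysis:
    return min(candidates, key=ranking_key)


candidates = [
    Analysis("A", words_covered=5, num_trees=2, num_nodes=7, num_wildcards=0, log_prob=-3.0),
    Analysis("B", words_covered=5, num_trees=1, num_nodes=9, num_wildcards=1, log_prob=-4.5),
    Analysis("C", words_covered=4, num_trees=1, num_nodes=5, num_wildcards=0, log_prob=-1.0),
]
print(best_analysis(candidates).label)
```

Here B wins: A and B tie on coverage, which beats C, and B is less fragmented than A, so the later criteria never come into play. A weighted score would be an equally plausible reading of the slide.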

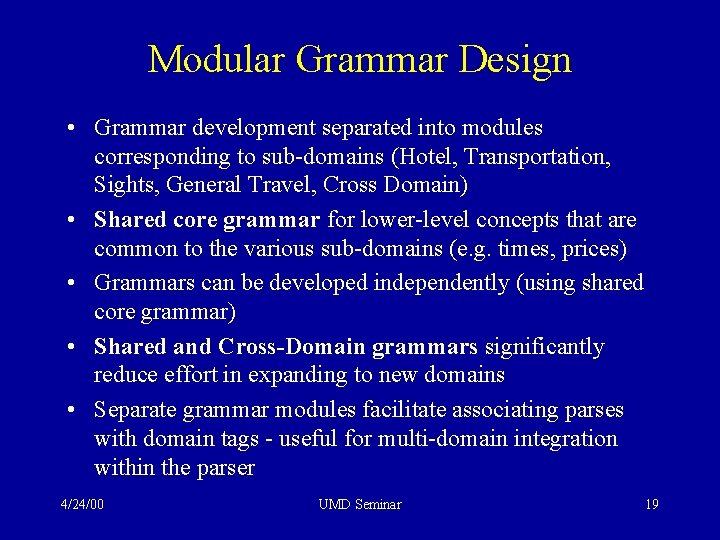

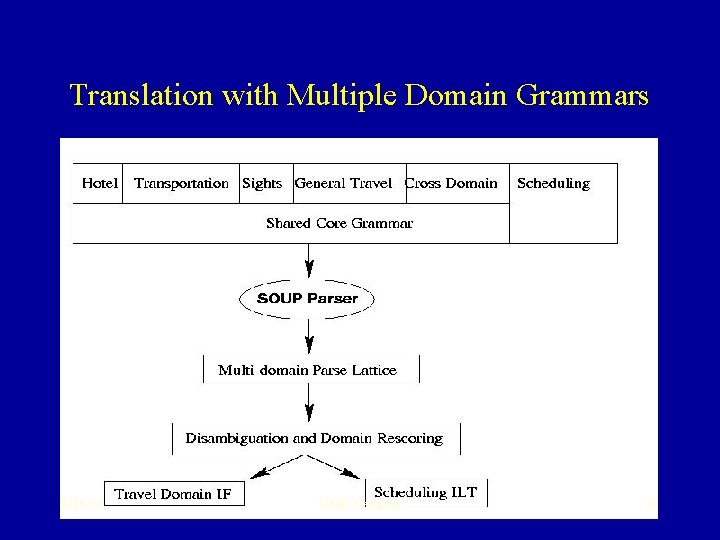

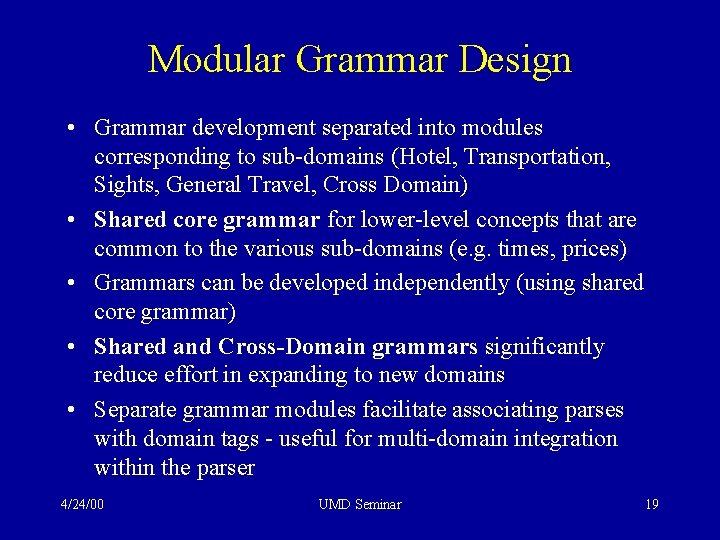

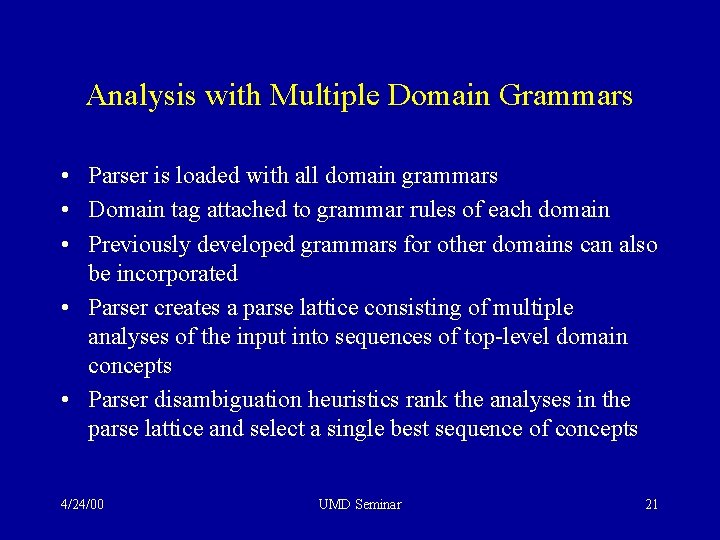

Modular Grammar Design

- Grammar development separated into modules corresponding to sub-domains (Hotel, Transportation, Sights, General Travel, Cross Domain)
- Shared core grammar for lower-level concepts that are common to the various sub-domains (e.g., times, prices)
- Grammars can be developed independently (using the shared core grammar)
- Shared and Cross-Domain grammars significantly reduce the effort of expanding to new domains
- Separate grammar modules make it easy to associate parses with domain tags, which is useful for multi-domain integration within the parser

Translation with Multiple Domain Grammars

Analysis with Multiple Domain Grammars

- The parser is loaded with all domain grammars
- A domain tag is attached to the grammar rules of each domain
- Previously developed grammars for other domains can also be incorporated
- The parser creates a parse lattice consisting of multiple analyses of the input into sequences of top-level domain concepts
- Parser disambiguation heuristics rank the analyses in the parse lattice and select a single best sequence of concepts
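Loading separate modules into one parser while keeping each rule's domain tag can be sketched as follows. The rule tables and names (`SHARED`, `HOTEL`, `TRANSPORTATION`, `TaggedRule`) are hypothetical. The example deliberately defines the same concept in two domains, which is exactly the kind of cross-domain ambiguity the parse lattice has to represent.

```python
from typing import NamedTuple


class TaggedRule(NamedTuple):
    """A grammar rule carrying the domain tag of the module it came from."""
    domain: str
    concept: str
    rhs: tuple[str, ...]


# Hypothetical rule tables (concept -> alternative right-hand sides), not the real grammars.
SHARED = {"[price]": [["[number]", "dollars"]]}
HOTEL = {
    "[give-information+availability+hotel]": [["we", "have", "[hotel-type]", "available"]],
    "[request-information+price]": [["how", "much", "is", "the", "room"]],
}
TRANSPORTATION = {
    "[request-information+price]": [["how", "much", "is", "the", "ticket"]],
}


def load_grammars(modules: dict[str, dict[str, list[list[str]]]]) -> list[TaggedRule]:
    """Load every module into one rule set, tagging each rule with its module's domain."""
    rules = []
    for domain, grammar in modules.items():
        for concept, alternatives in grammar.items():
            for rhs in alternatives:
                rules.append(TaggedRule(domain, concept, tuple(rhs)))
    return rules


def domains_for(concept: str, rules: list[TaggedRule]) -> list[str]:
    """All domains defining `concept`; more than one signals a cross-domain ambiguity."""
    return sorted({rule.domain for rule in rules if rule.concept == concept})


rules = load_grammars({"shared": SHARED, "hotel": HOTEL, "transportation": TRANSPORTATION})
print(domains_for("[request-information+price]", rules))
```

Keeping the tag on each rule, rather than merging modules into one untagged table, is what lets each analysis in the lattice carry a domain-tag sequence for the disambiguation step.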

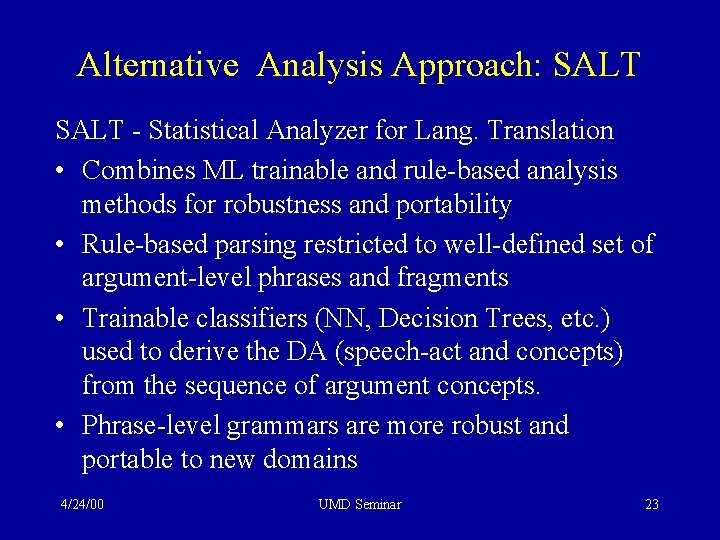

A SOUP Parse Lattice

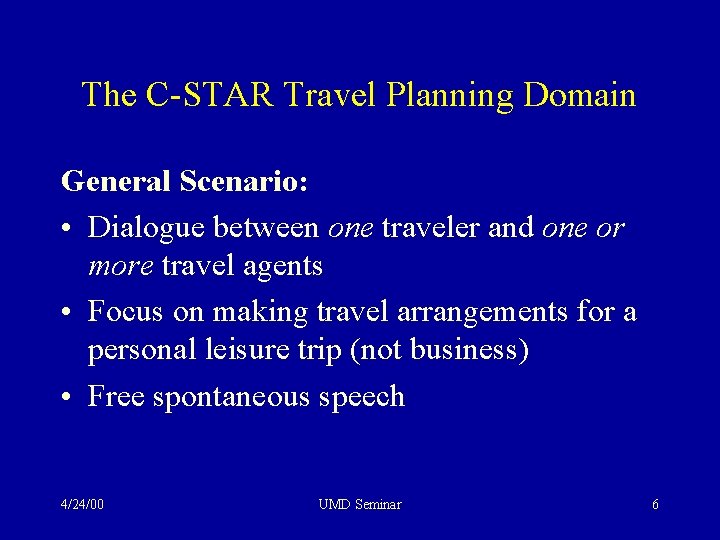

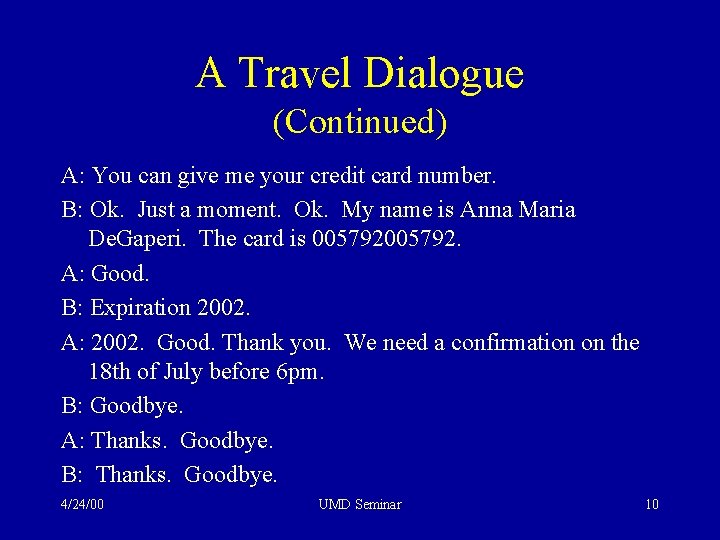

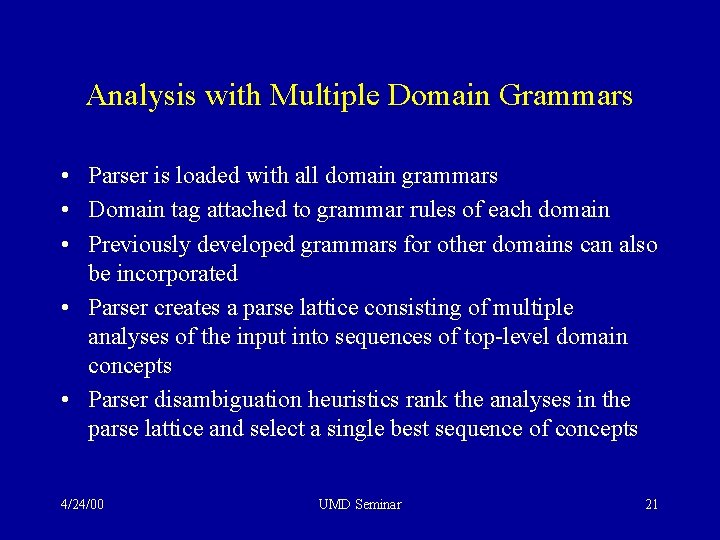

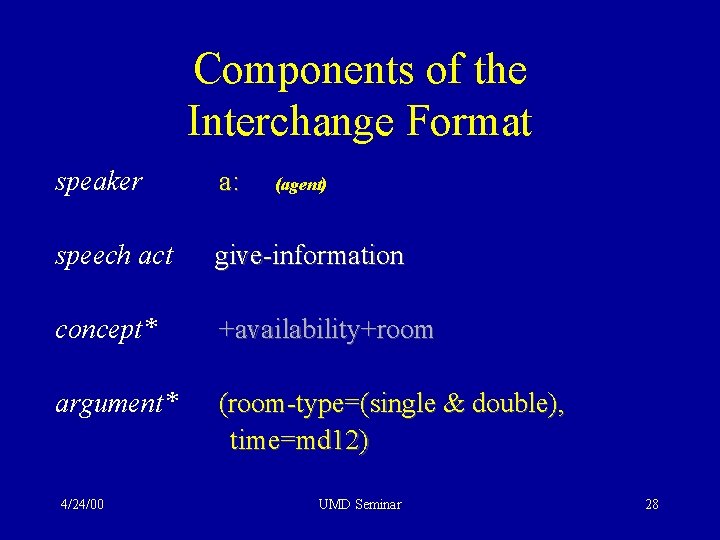

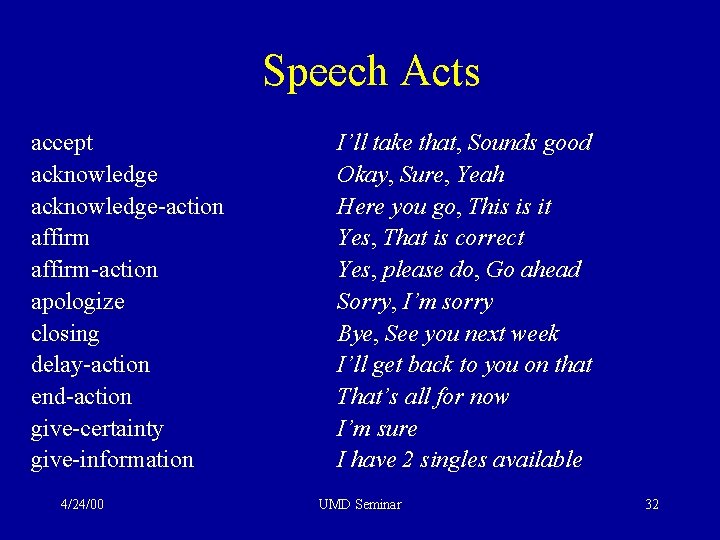

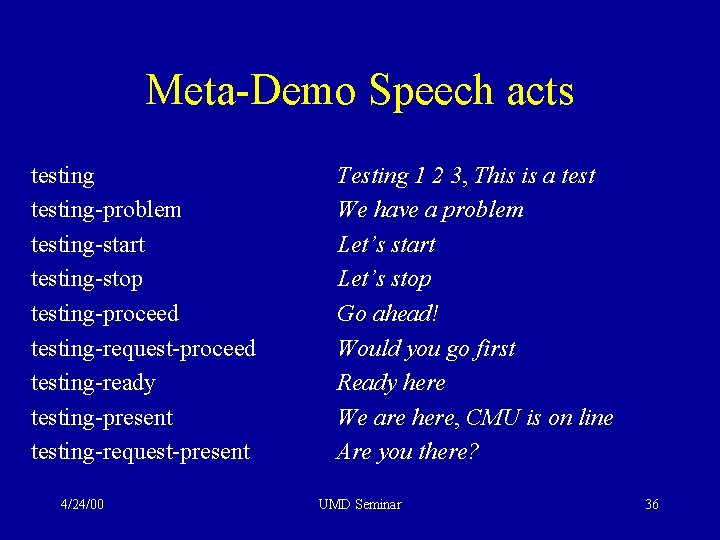

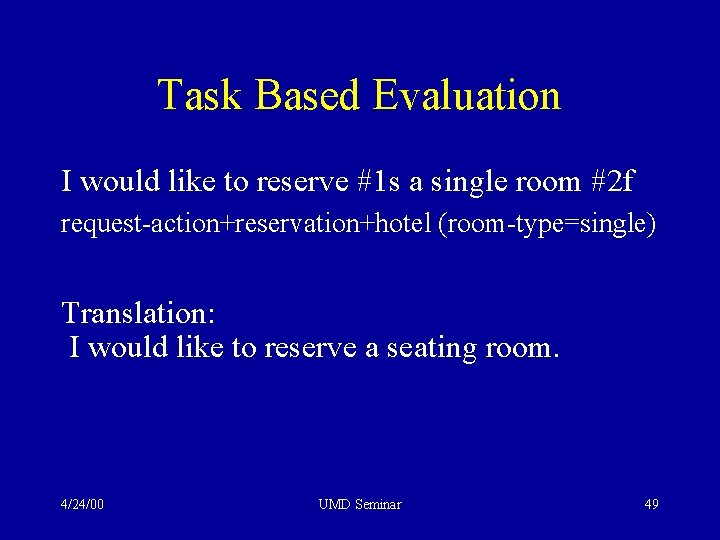

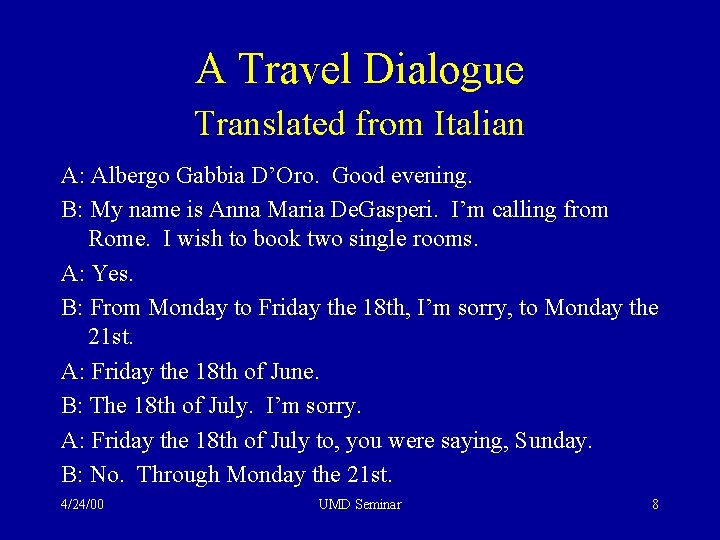

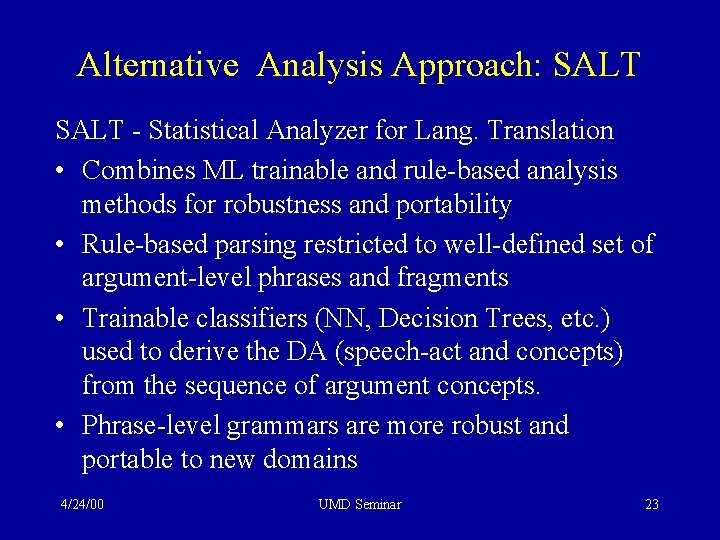

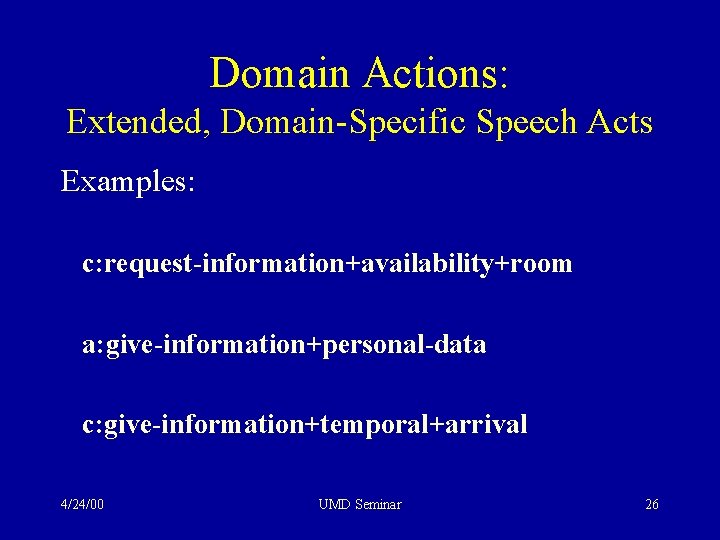

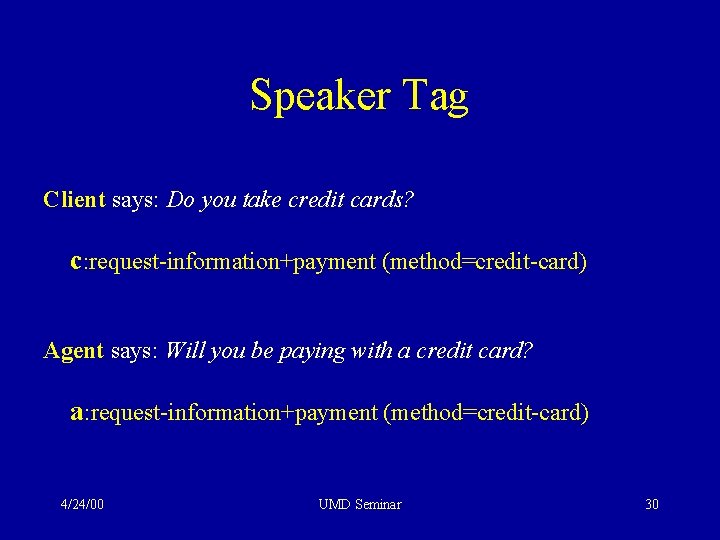

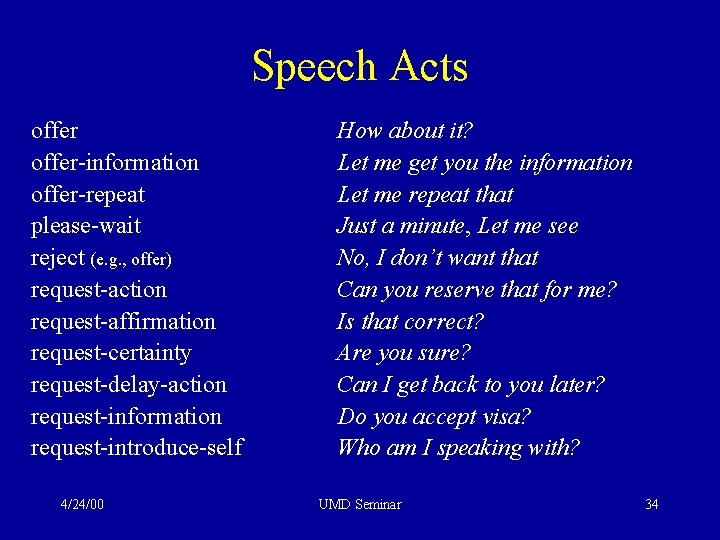

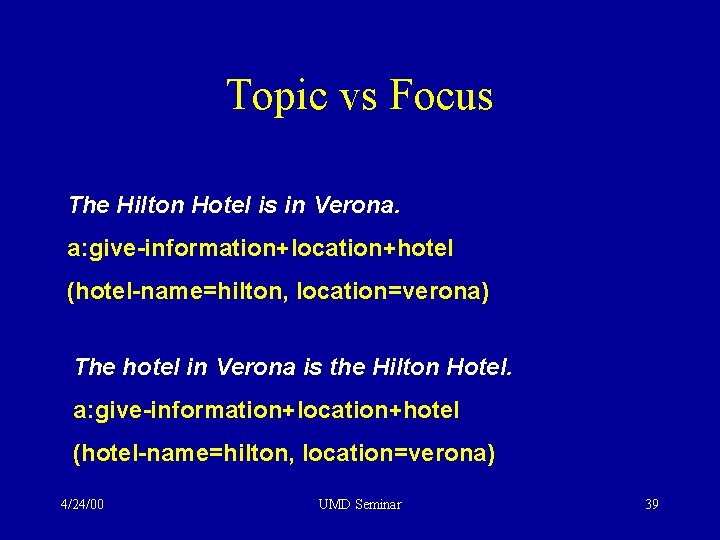

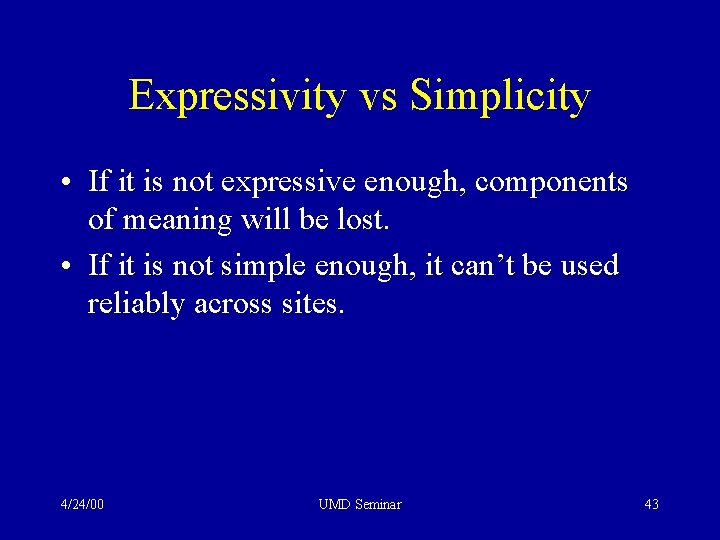

Alternative Analysis Approach: SALT (Statistical Analyzer for Language Translation)

- Combines ML-trainable and rule-based analysis methods for robustness and portability
- Rule-based parsing is restricted to a well-defined set of argument-level phrases and fragments
- Trainable classifiers (neural networks, decision trees, etc.) derive the domain action (DA: speech act and concepts) from the sequence of argument concepts
- Phrase-level grammars are more robust and more portable to new domains


SALT Approach

Example:

- Input: we have two hotels available
- Arg-SOUP: [exist] [hotel-type] [available]
- SA-Predictor: give-information
- Concept-Predictor: availability+hotel

Predictors use the SOUP argument concepts and the input words. Preliminary results are encouraging.
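The SA-Predictor step, mapping argument concepts such as `[exist] [hotel-type] [available]` to a speech act, is a small classification problem. The slides mention neural networks and decision trees; this sketch swaps in multinomial naive Bayes with add-one smoothing purely for brevity. It uses argument concepts only (not input words), and the four training pairs in `TRAIN` are hypothetical, not SALT data.

```python
from __future__ import annotations

import math
from collections import Counter, defaultdict


class NaiveBayesPredictor:
    """Multinomial naive Bayes over argument-concept labels, with add-alpha smoothing."""

    def __init__(self, alpha: float = 1.0) -> None:
        self.alpha = alpha
        self.label_counts: Counter[str] = Counter()
        self.feature_counts: defaultdict[str, Counter[str]] = defaultdict(Counter)
        self.vocab: set[str] = set()

    def fit(self, examples: list[tuple[list[str], str]]) -> NaiveBayesPredictor:
        for features, label in examples:
            self.label_counts[label] += 1
            self.feature_counts[label].update(features)
            self.vocab.update(features)
        return self

    def log_score(self, features: list[str], label: str) -> float:
        prior = self.label_counts[label] / sum(self.label_counts.values())
        counts = self.feature_counts[label]
        denom = sum(counts.values()) + self.alpha * len(self.vocab)
        score = math.log(prior)
        for f in features:
            score += math.log((counts[f] + self.alpha) / denom)
        return score

    def predict(self, features: list[str]) -> str:
        return max(self.label_counts, key=lambda label: self.log_score(features, label))


# Hypothetical training pairs: (argument concepts from the phrase-level parser, speech act).
TRAIN = [
    (["exist", "hotel-type", "available"], "give-information"),
    (["exist", "room-type"], "give-information"),
    (["question", "hotel-type", "available"], "request-information"),
    (["question", "price"], "request-information"),
]
sa_predictor = NaiveBayesPredictor().fit(TRAIN)
print(sa_predictor.predict(["exist", "hotel-type", "available"]))
```

A Concept-Predictor could reuse the same class with labels such as `availability+hotel`. Splitting the DA into two predictions keeps each label set small.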

Design Criteria of the Interchange Format

- Suitable for task-oriented dialogue
- Based on the speaker’s intent, not literal meaning
- Domain-independent framework with domain-specific parts
- Simple and reliable enough to use:
  - at multiple research sites
  - with widely varying types of parsers and generators

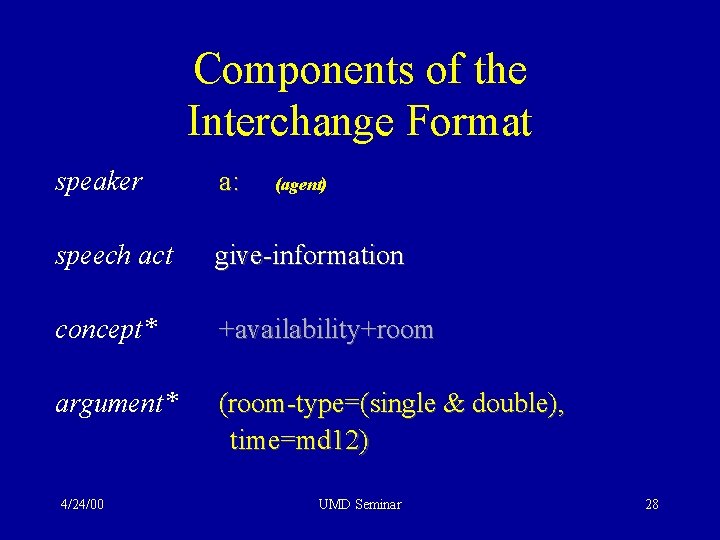

Domain Actions: Extended, Domain-Specific Speech Acts

Examples:

- `c: request-information+availability+room`
- `a: give-information+personal-data`
- `c: give-information+temporal+arrival`

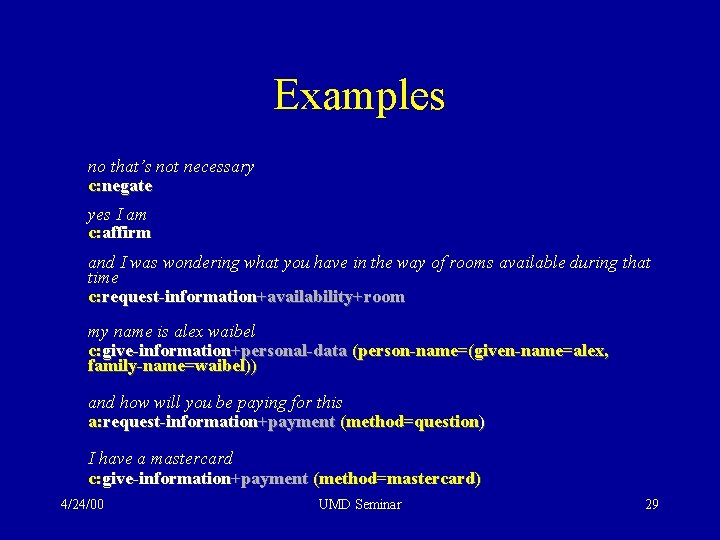

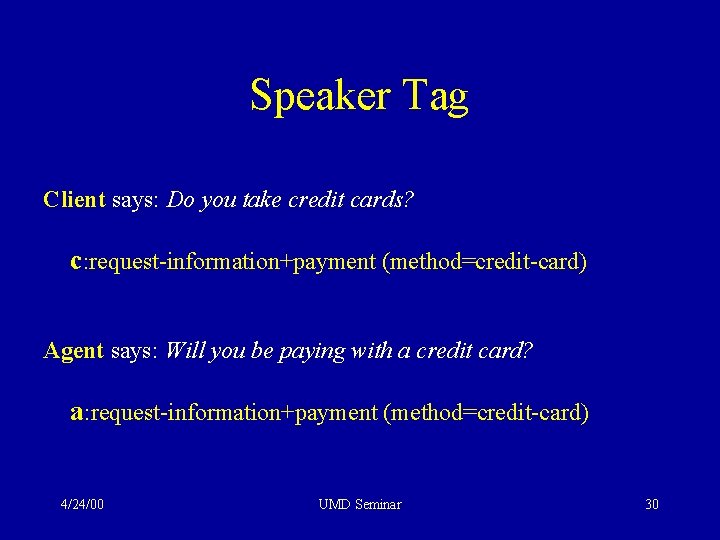

Task-Oriented Sentences

- Perform an action in the domain.
- Are not descriptive.
- Contain fixed expressions that cannot be translated literally.

Components of the Interchange Format

- Speaker: `a:` (agent)
- Speech act: `give-information`
- Concepts (zero or more): `+availability+room`
- Arguments (zero or more): `(room-type=(single & double), time=md12)`

Examples

- no that’s not necessary → `c: negate`
- yes I am → `c: affirm`
- and I was wondering what you have in the way of rooms available during that time → `c: request-information+availability+room`
- my name is alex waibel → `c: give-information+personal-data (person-name=(given-name=alex, family-name=waibel))`
- and how will you be paying for this → `a: request-information+payment (method=question)`
- I have a mastercard → `c: give-information+payment (method=mastercard)`

Speaker Tag

- Client says: Do you take credit cards? → `c: request-information+payment (method=credit-card)`
- Agent says: Will you be paying with a credit card? → `a: request-information+payment (method=credit-card)`
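Because an IF string has a fixed layout (speaker, speech act, `+`-joined concepts, then a parenthesized argument list), a reader can split it into its components mechanically. This is a minimal sketch, not the C-STAR reference tooling: `InterchangeFormat` and `parse_if` are hypothetical names, and argument values are kept as raw strings instead of being parsed into nested structures.

```python
from dataclasses import dataclass, field


@dataclass
class InterchangeFormat:
    speaker: str
    speech_act: str
    concepts: list[str] = field(default_factory=list)
    arguments: list[str] = field(default_factory=list)


def split_top_level(text: str) -> list[str]:
    """Split on commas that are not nested inside parentheses."""
    parts: list[str] = []
    current: list[str] = []
    depth = 0
    for ch in text:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
        if ch == "," and depth == 0:
            parts.append("".join(current).strip())
            current = []
        else:
            current.append(ch)
    tail = "".join(current).strip()
    if tail:
        parts.append(tail)
    return parts


def parse_if(text: str) -> InterchangeFormat:
    speaker, _, rest = text.partition(":")
    rest = rest.strip()
    paren = rest.find("(")
    if paren == -1:  # no argument list, e.g. "c: negate"
        domain_action, args = rest, ""
    else:
        domain_action, args = rest[:paren].strip(), rest[paren:].strip()
        if not args.endswith(")"):
            raise ValueError(f"unbalanced argument list: {text!r}")
        args = args[1:-1]  # drop the outer parentheses
    speech_act, *concepts = domain_action.split("+")
    return InterchangeFormat(speaker.strip(), speech_act, concepts, split_top_level(args))


print(parse_if("c: give-information+personal-data "
               "(person-name=(given-name=alex, family-name=waibel))"))
```

Parsing the two credit-card examples above gives identical speech acts, concepts, and arguments; only the `speaker` field differs, which is all the speaker tag has to carry.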

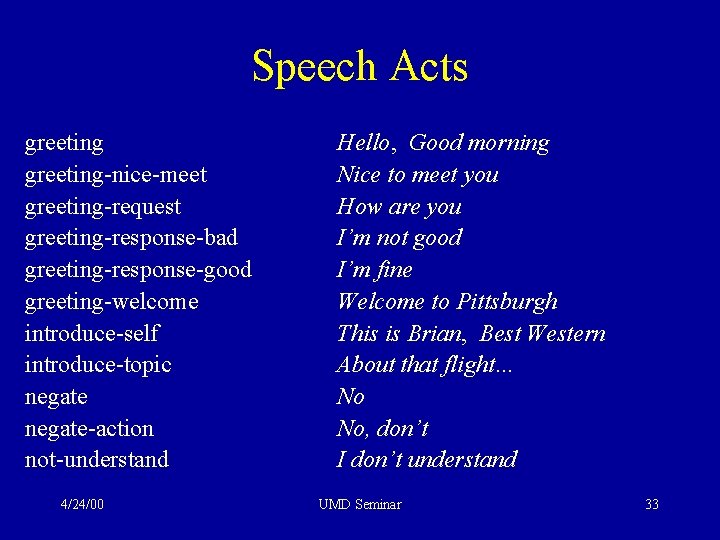

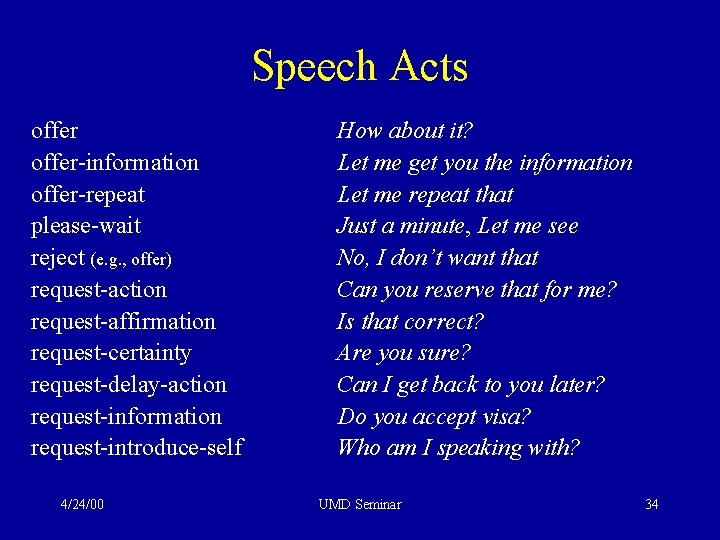

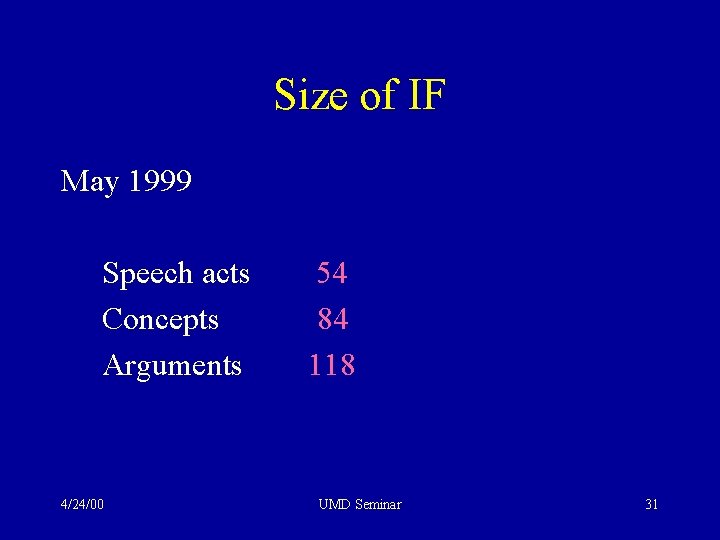

Size of IF (May 1999)

| Component | Count |
|---|---|
| Speech acts | 54 |
| Concepts | 84 |
| Arguments | 118 |

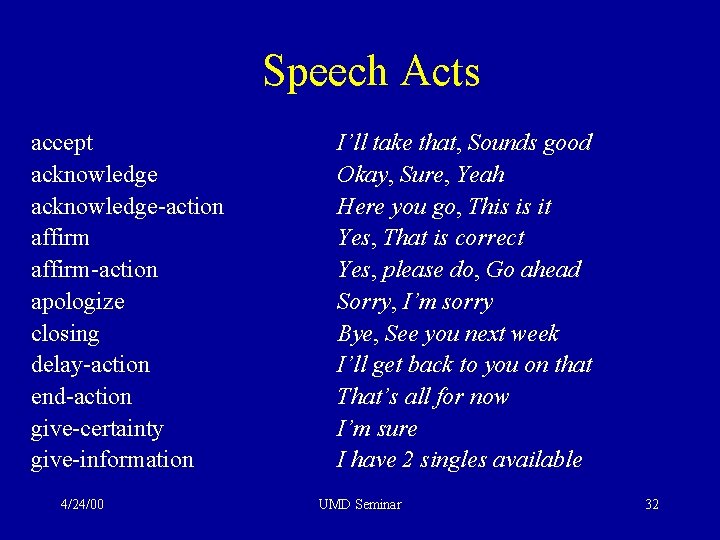

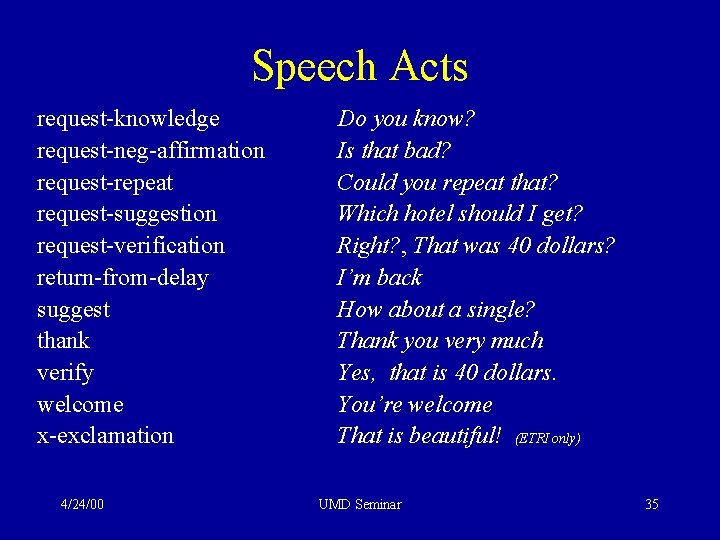

Speech Acts

| Speech act | Example |
|---|---|
| accept | I’ll take that, Sounds good |
| acknowledge | Okay, Sure, Yeah |
| acknowledge-action | Here you go, This is it |
| affirm | Yes, That is correct |
| affirm-action | Yes, please do, Go ahead |
| apologize | Sorry, I’m sorry |
| closing | Bye, See you next week |
| delay-action | I’ll get back to you on that |
| end-action | That’s all for now |
| give-certainty | I’m sure |
| give-information | I have 2 singles available |

Speech Acts

| Speech act | Example |
|---|---|
| greeting | Hello, Good morning |
| greeting-nice-meet | Nice to meet you |
| greeting-request | How are you |
| greeting-response-bad | I’m not good |
| greeting-response-good | I’m fine |
| greeting-welcome | Welcome to Pittsburgh |
| introduce-self | This is Brian, Best Western |
| introduce-topic | About that flight… |
| negate | No |
| negate-action | No, don’t |
| not-understand | I don’t understand |

Speech Acts

| Speech act | Example |
|---|---|
| offer | How about it? |
| offer-information | Let me get you the information |
| offer-repeat | Let me repeat that |
| please-wait | Just a minute, Let me see |
| reject (e.g., offer) | No, I don’t want that |
| request-action | Can you reserve that for me? |
| request-affirmation | Is that correct? |
| request-certainty | Are you sure? |
| request-delay-action | Can I get back to you later? |
| request-information | Do you accept visa? |
| request-introduce-self | Who am I speaking with? |

Speech Acts

| Speech act | Example |
|---|---|
| request-knowledge | Do you know? |
| request-neg-affirmation | Is that bad? |
| request-repeat | Could you repeat that? |
| request-suggestion | Which hotel should I get? |
| request-verification | Right?, That was 40 dollars? |
| return-from-delay | I’m back |
| suggest | How about a single? |
| thank | Thank you very much |
| verify | Yes, that is 40 dollars. |
| welcome | You’re welcome |
| x-exclamation | That is beautiful! (ETRI only) |

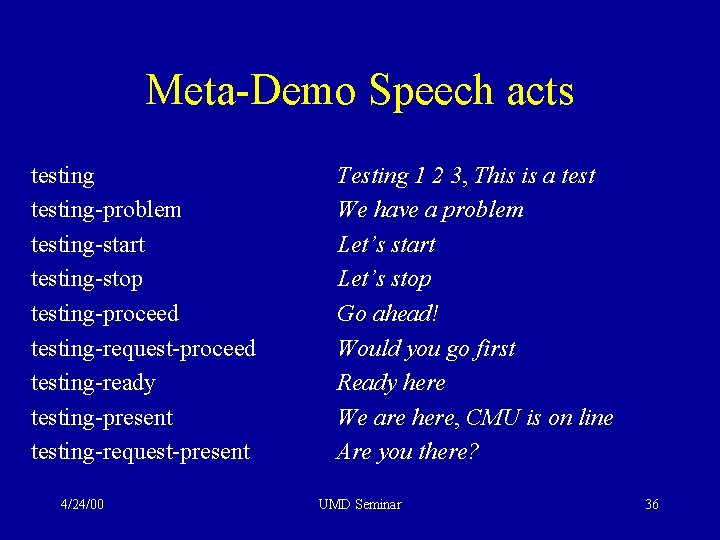

Meta-Demo Speech Acts

| Speech act | Example |
|---|---|
| testing | Testing 1 2 3, This is a test |
| testing-problem | We have a problem |
| testing-start | Let’s start |
| testing-stop | Let’s stop |
| testing-proceed | Go ahead! |
| testing-request-proceed | Would you go first |
| testing-ready | Ready here |
| testing-present | We are here, CMU is on line |
| testing-request-present | Are you there? |

Some Concepts

- Actions: change, reservation, confirmation, cancellation, help, purchase, view, display, preference
- Attributes: availability, price, temporal, location, size, features, etc.
- Objects: room, hotel, flight, tour, event, attraction, web-page, etc.
- Other: arrival, departure, numeral, expiration-date, payment

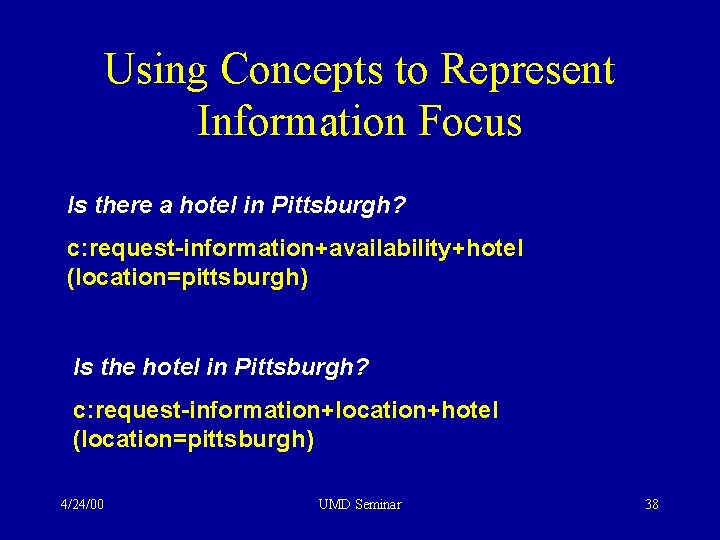

Using Concepts to Represent Information Focus

- Is there a hotel in Pittsburgh? → `c: request-information+availability+hotel (location=pittsburgh)`
- Is the hotel in Pittsburgh? → `c: request-information+location+hotel (location=pittsburgh)`

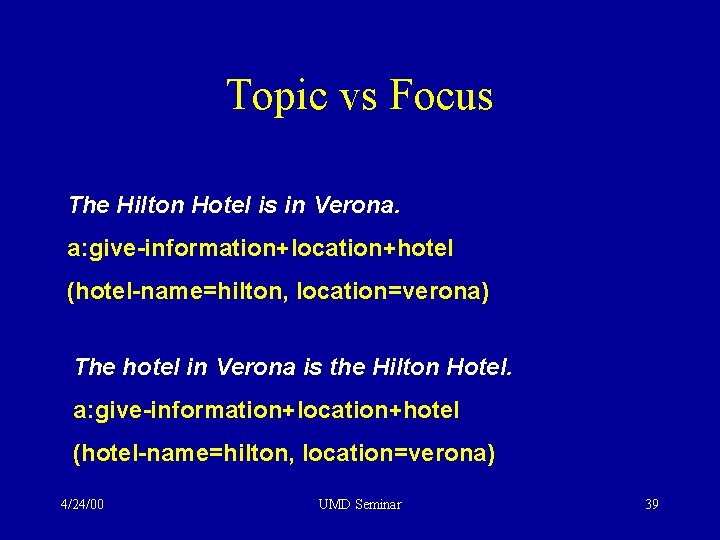

Topic vs Focus

- The Hilton Hotel is in Verona. → `a: give-information+location+hotel (hotel-name=hilton, location=verona)`
- The hotel in Verona is the Hilton Hotel. → `a: give-information+location+hotel (hotel-name=hilton, location=verona)`

Both sentences receive the same IF.

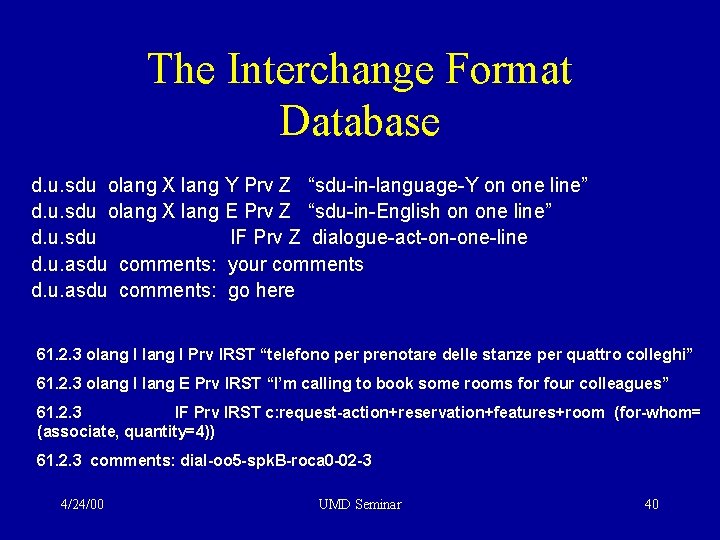

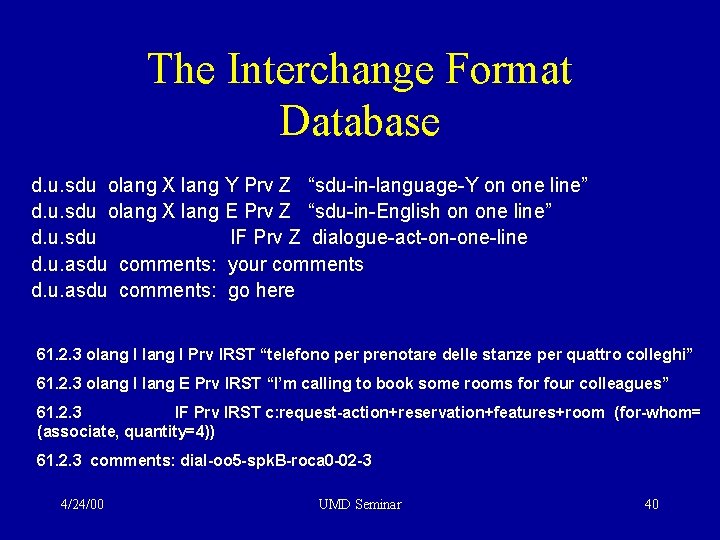

The Interchange Format Database

Record template:

- `d.u.sdu olang X lang Y Prv Z “sdu-in-language-Y on one line”`
- `d.u.sdu olang X lang E Prv Z “sdu-in-English on one line”`
- `d.u.sdu IF Prv Z dialogue-act-on-one-line`
- `d.u.asdu comments: your comments`
- `d.u.asdu comments: go here`

Example:

- `61.2.3 olang I Prv IRST “telefono per prenotare delle stanze per quattro colleghi”`
- `61.2.3 olang I lang E Prv IRST “I’m calling to book some rooms for four colleagues”`
- `61.2.3 IF Prv IRST c: request-action+reservation+features+room (for-whom=(associate, quantity=4))`
- `61.2.3 comments: dial-oo5-spk.B-roca0-02-3`
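Each database line starts with an SDU identifier followed by one field (an `olang`/`lang`/`Prv` text line, an `IF` line, or a `comments:` line), so grouping a file by SDU is a short loop. This reader is a hypothetical sketch inferred from the record template above, not a tool distributed with the database. It accepts straight or curly quotes, and the comment text in `RECORDS` is a placeholder.

```python
import re
from collections import defaultdict

# Matches 'olang X [lang Y] Prv Z "text"' after the SDU id; straight or curly quotes.
TEXT_FIELD = re.compile(r'^olang (\S+)(?: lang (\S+))? Prv (\S+) ["\u201c](.*)["\u201d]$')


def parse_records(lines: list[str]) -> dict[str, dict]:
    """Group record lines by SDU id into texts (keyed by language), IF, provider, comments."""
    sdus: dict[str, dict] = defaultdict(
        lambda: {"texts": {}, "if": None, "provider": None, "comments": []}
    )
    for raw in lines:
        line = raw.strip()
        if not line:
            continue
        sdu_id, _, rest = line.partition(" ")
        entry = sdus[sdu_id]
        if rest.startswith("comments:"):
            entry["comments"].append(rest[len("comments:"):].strip())
        elif rest.startswith("IF "):
            _, _, provider, if_text = rest.split(" ", 3)  # "IF", "Prv", site, IF text
            entry["if"], entry["provider"] = if_text, provider
        else:
            match = TEXT_FIELD.match(rest)
            if match is None:
                raise ValueError(f"unrecognized record line: {line!r}")
            olang, lang, provider, text = match.groups()
            entry["texts"][lang or olang] = text  # no "lang" field: original-language text
            entry["provider"] = provider
    return dict(sdus)


RECORDS = [
    '61.2.3 olang I Prv IRST "telefono per prenotare delle stanze per quattro colleghi"',
    "61.2.3 olang I lang E Prv IRST \"I'm calling to book some rooms for four colleagues\"",
    "61.2.3 IF Prv IRST c: request-action+reservation+features+room "
    "(for-whom=(associate, quantity=4))",
    "61.2.3 comments: placeholder comment",
]
db = parse_records(RECORDS)
print(db["61.2.3"]["texts"]["E"])
```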

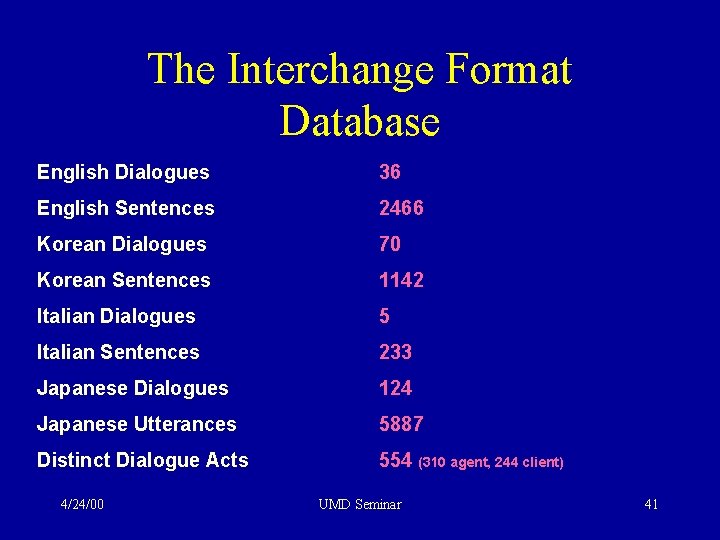

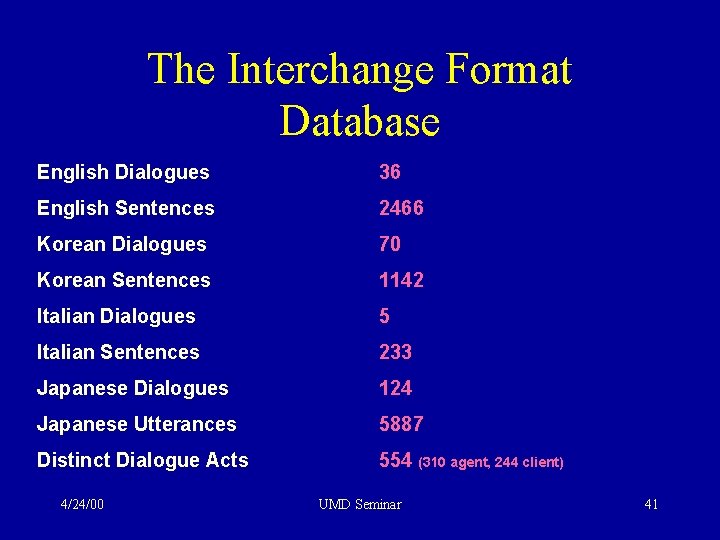

The Interchange Format Database

| Language | Dialogues | Sentences |
|---|---|---|
| English | 36 | 2466 |
| Korean | 70 | 1142 |
| Italian | 5 | 233 |
| Japanese | 124 | 5887 (utterances) |

Distinct dialogue acts: 554 (310 agent, 244 client)

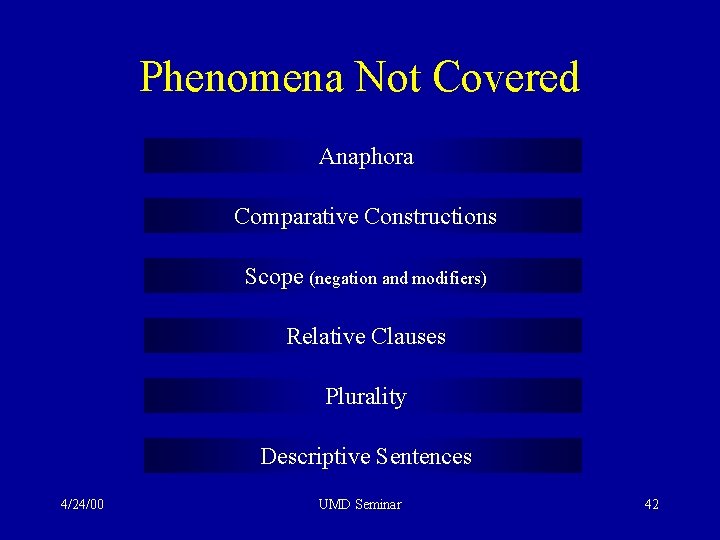

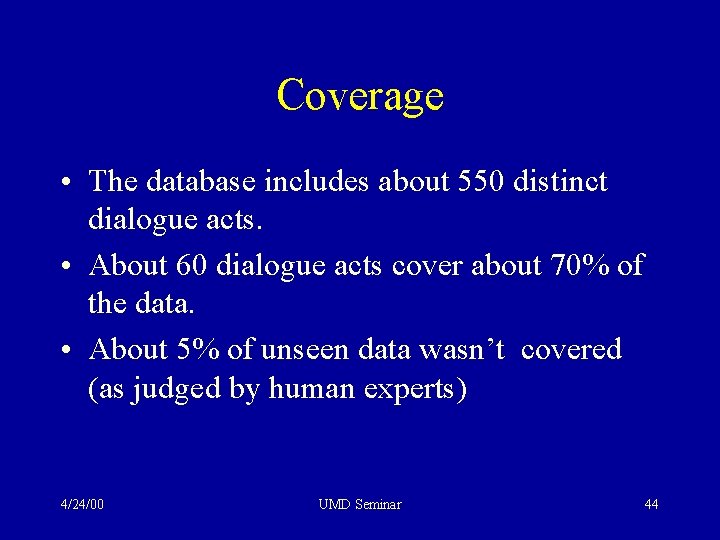

Phenomena Not Covered

- Anaphora
- Comparative constructions
- Scope (negation and modifiers)
- Relative clauses
- Plurality
- Descriptive sentences

Expressivity vs Simplicity

- If the IF is not expressive enough, components of meaning will be lost.
- If it is not simple enough, it cannot be used reliably across sites.

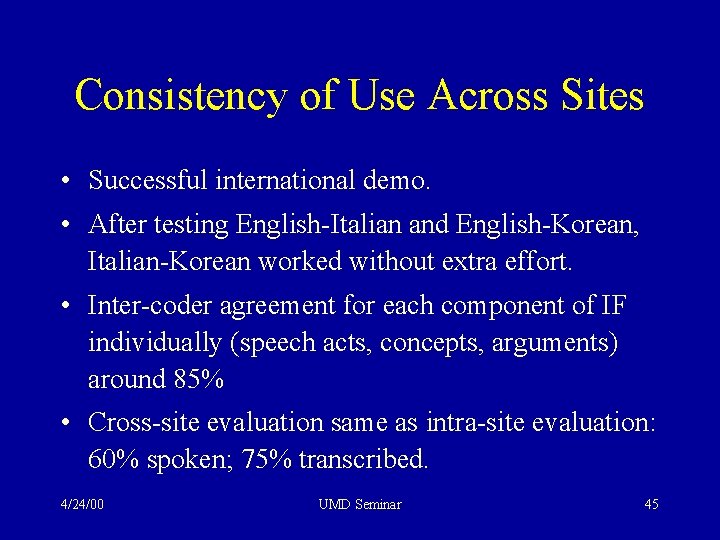

Coverage

- The database includes about 550 distinct dialogue acts.
- About 60 dialogue acts cover about 70% of the data.
- About 5% of unseen data was not covered (as judged by human experts).

Consistency of Use Across Sites

- Successful international demo.
- After testing English-Italian and English-Korean, Italian-Korean worked without extra effort.
- Inter-coder agreement for each component of the IF individually (speech acts, concepts, arguments): around 85%.
- Cross-site evaluation results were the same as intra-site results: 60% on spoken input; 75% on transcribed input.

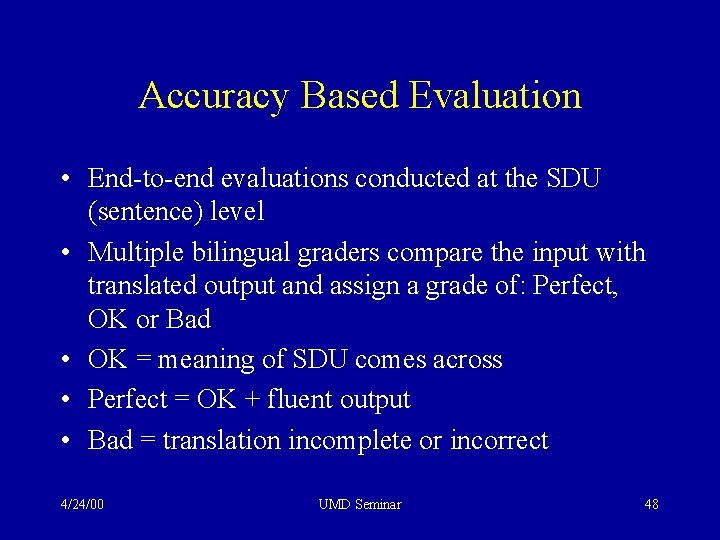

User Studies

- We conducted three sets of user tests
- The travel agent is played by an experienced system user
- The traveler is played by a novice who is given five minutes of instruction
- The traveler is given a general scenario, e.g., plan a trip to Heidelberg
- Communication takes place only via the ST system, a multi-modal interface, and a muted video connection
- Data collected is used for system evaluation, error analysis, and then grammar development

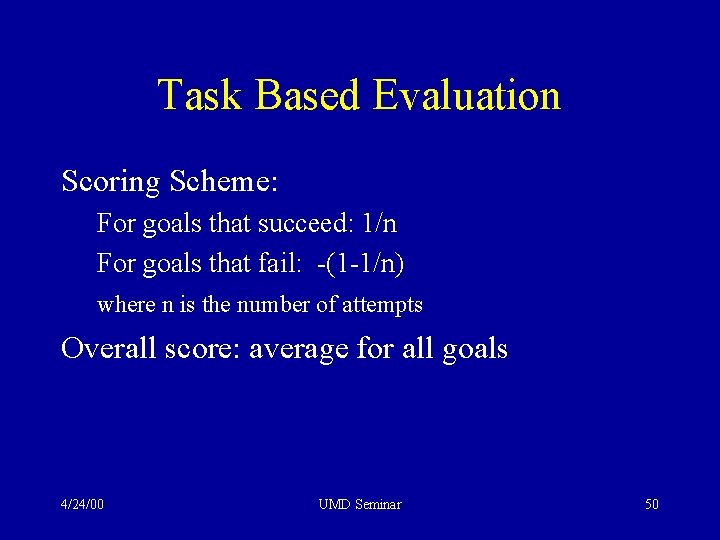

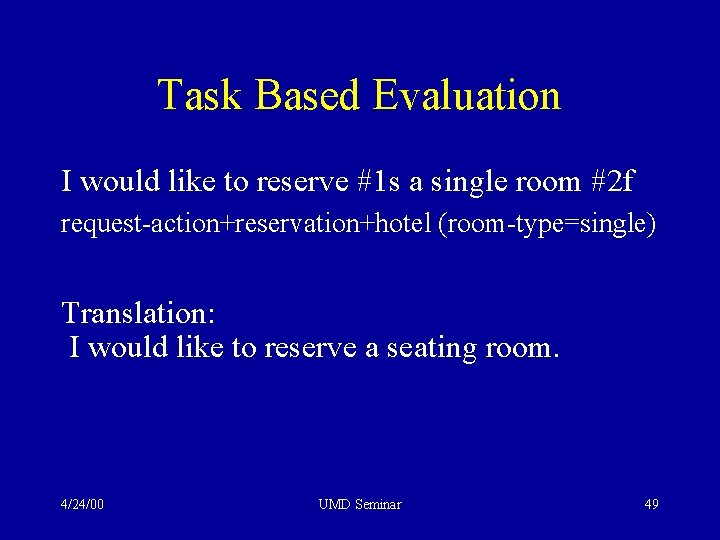

Evaluations

- Accuracy-based evaluation
  - Translation preserves original meaning
- Task-based evaluation
  - Goal success or failure
  - User effort: how many attempts before succeeding or giving up

Accuracy Based Evaluation

- End-to-end evaluations conducted at the SDU (sentence) level
- Multiple bilingual graders compare the input with the translated output and assign a grade of Perfect, OK, or Bad:
  - OK = the meaning of the SDU comes across
  - Perfect = OK + fluent output
  - Bad = translation incomplete or incorrect
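The slide does not say how the grades of several graders are combined into one score. A minimal sketch, assuming a per-SDU majority vote with ties going to the worse grade, and counting Perfect and OK together as acceptable (both assumptions), could look like this. The function names are hypothetical.

```python
from collections import Counter

GRADES = ("Perfect", "OK", "Bad")  # ordered best to worst
ACCEPTABLE = {"Perfect", "OK"}     # assumption: OK or better counts as acceptable


def sdu_grade(grader_grades: list[str]) -> str:
    """Majority grade across graders; a tie goes to the worse grade (assumption)."""
    unknown = set(grader_grades) - set(GRADES)
    if unknown:
        raise ValueError(f"unknown grades: {sorted(unknown)}")
    counts = Counter(grader_grades)
    # Higher count wins; among equal counts, the larger index (worse grade) wins.
    return max(GRADES, key=lambda g: (counts[g], GRADES.index(g)))


def acceptable_rate(all_grades: list[list[str]]) -> float:
    """Fraction of SDUs whose combined grade is Perfect or OK."""
    final = [sdu_grade(grades) for grades in all_grades]
    return sum(g in ACCEPTABLE for g in final) / len(final)


print(acceptable_rate([["Perfect", "Perfect", "OK"], ["OK", "Bad", "Bad"]]))
```

Breaking ties toward the worse grade keeps the reported figure conservative; averaging per-grader scores would be an equally defensible choice.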

Task Based Evaluation

Input: I would like to reserve #1 s a single room #2 f

IF: `request-action+reservation+hotel (room-type=single)`

Translation: I would like to reserve a seating room.

Task Based Evaluation

Scoring scheme, where $n$ is the number of attempts for a goal:

- Goals that succeed: $1/n$
- Goals that fail: $-(1 - 1/n)$

Overall score: the average over all goals.
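The scoring scheme translates directly into code. This sketch follows the slide's formulas; only the function names and the `(succeeded, attempts)` input format are illustrative choices.

```python
import math


def goal_score(succeeded: bool, attempts: int) -> float:
    """1/n for a goal that succeeds after n attempts, -(1 - 1/n) for one that fails."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    return 1 / attempts if succeeded else -(1 - 1 / attempts)


def task_score(goals: list[tuple[bool, int]]) -> float:
    """Overall score: the average of the per-goal scores."""
    return sum(goal_score(ok, n) for ok, n in goals) / len(goals)


# One first-try success, one success on the 2nd try, one goal abandoned after 4 tries.
print(task_score([(True, 1), (True, 2), (False, 4)]))
```

The scheme rewards fast success (1 on the first try) and penalizes persistent failure (a failure after one attempt scores 0, approaching -1 as attempts grow), so user effort shows up in the score and not just the binary outcome.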

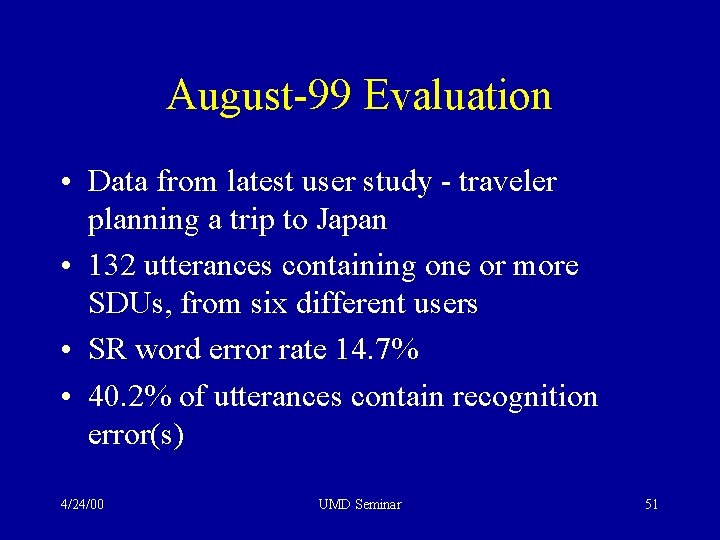

August-99 Evaluation

- Data from the latest user study: traveler planning a trip to Japan
- 132 utterances containing one or more SDUs, from six different users
- SR word error rate: 14.7%
- 40.2% of utterances contain recognition error(s)
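The two recognition figures measure different things: word error rate counts word-level edits, while the second figure counts utterances with at least one error. The sketch below computes both from (reference transcript, recognizer output) pairs using the usual word-level Levenshtein distance. The assumption that corpus WER is total edits over total reference words, rather than an average of per-utterance rates, is the common convention; the slides do not specify it.

```python
def word_edit_distance(ref: list[str], hyp: list[str]) -> int:
    """Minimum substitutions + deletions + insertions turning ref into hyp."""
    # dist[i][j] = edits needed to turn ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i
    for j in range(len(hyp) + 1):
        dist[0][j] = j
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(dist[i - 1][j] + 1,         # deletion
                             dist[i][j - 1] + 1,         # insertion
                             dist[i - 1][j - 1] + cost)  # substitution or match
    return dist[len(ref)][len(hyp)]


def corpus_wer(pairs: list[tuple[str, str]]) -> float:
    """Total word edits divided by total reference words."""
    edits = sum(word_edit_distance(r.split(), h.split()) for r, h in pairs)
    words = sum(len(r.split()) for r, _ in pairs)
    return edits / words


def fraction_with_errors(pairs: list[tuple[str, str]]) -> float:
    """Share of utterances whose recognizer output differs from the reference."""
    return sum(word_edit_distance(r.split(), h.split()) > 0 for r, h in pairs) / len(pairs)


pairs = [
    ("we have two hotels available", "we have hotels available"),
    ("i would like a single room", "i would like a single room"),
]
print(corpus_wer(pairs), fraction_with_errors(pairs))
```

Because errors cluster in some utterances, a modest word error rate can coexist with a much larger share of utterances containing at least one error, as in the figures above.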

Accuracy and Task Based Evaluations

Accuracy Based Evaluation

Evaluation: Progress Over Time

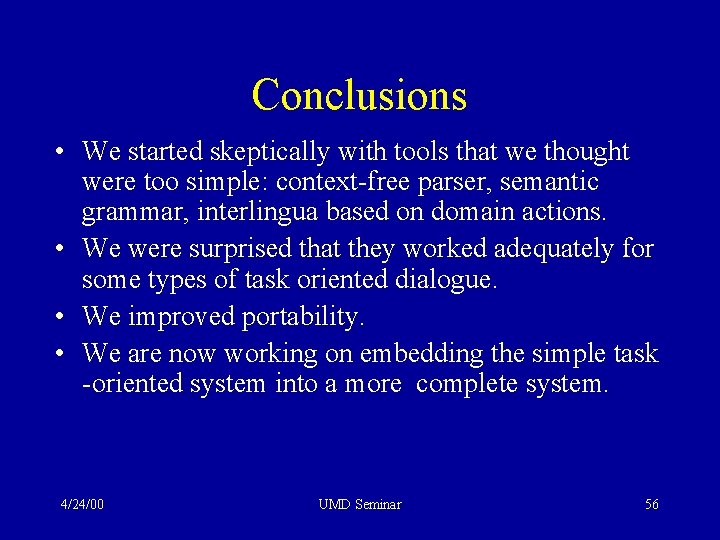

Current and Future Work

- Expanding the travel domain: covering descriptive as well as task-oriented sentences
- Development of the SALT statistical approach and expanding it to other domains
- Full integration of multiple MT approaches: SOUP, SALT, Pangloss
- Disambiguation: improved sentence-level disambiguation; applying discourse contextual information for disambiguation

Conclusions

- We started skeptically with tools that we thought were too simple: a context-free parser, semantic grammars, and an interlingua based on domain actions.
- We were surprised that they worked adequately for some types of task-oriented dialogue.
- We improved portability.
- We are now working on embedding the simple task-oriented system into a more complete system.

The JANUS/C-STAR Team

- Project Leaders: Lori Levin, Alon Lavie, Monika Woszczyna, Alex Waibel
- Grammar and Component Developers: Donna Gates, Dorcas Wallace, Taro Watanabe, Boris Bartlog, Ariadna Font-Llitjos, Marsal Gavalda, Chad Langley, Marcus Munk, Klaus Ries, Klaus Zechner, Detlef Koll, Michael Finke, Eric Carraux, Celine Morel, Alexandra Slavkovic, Susie Burger, Laura Tomokiyo, Takashi Tomokiyo, Kavita Thomas, Mirella Lapata, Matthew Broadhead, Cortis Clark, Christie Watson, Daniella Mueller, Sondra Ahlen