Spark Streaming: Large-scale near-real-time stream processing, Tathagata Das

- Slides: 37

Spark Streaming: Large-scale near-real-time stream processing. Tathagata Das (TD), UC Berkeley

Motivation

- Many important applications must process large data streams at second-scale latencies
  - Check-ins, status updates, site statistics, spam filtering, …
- Require large clusters to handle workloads
- Require latencies of a few seconds

Case study: Conviva, Inc.

- Real-time monitoring of online video metadata
- Custom-built distributed streaming system
  - 1000s of complex metrics on millions of video sessions
  - Requires many dozens of nodes for processing
- Hadoop backend for offline analysis
  - Generating daily and monthly reports
  - Similar computation as the streaming system

Painful to maintain two stacks

Goals

- Framework for large-scale stream processing
- Scalable to large clusters (~100 nodes) with near-real-time latency (~1 second)
- Efficiently recovers from faults and stragglers
- Simple programming model that integrates well with batch & interactive queries

Existing systems do not achieve all of them

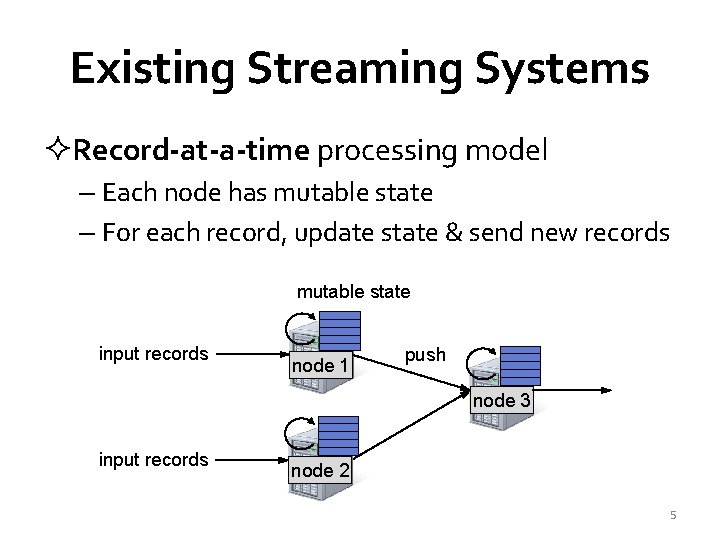

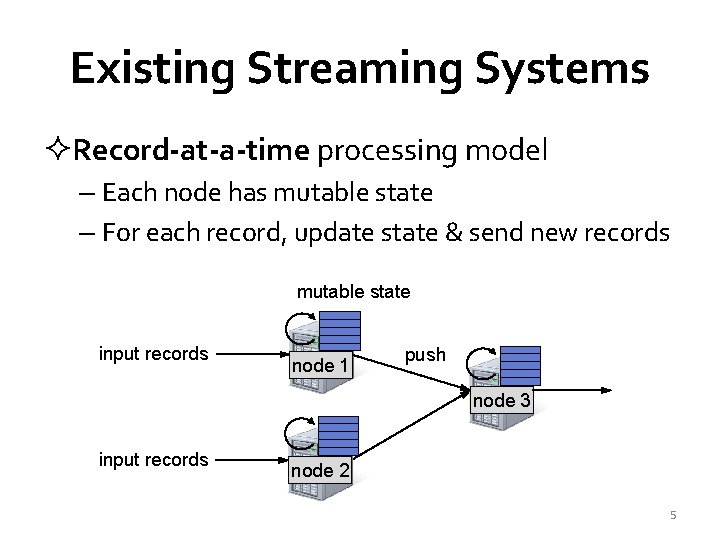

Existing Streaming Systems

- Record-at-a-time processing model
  - Each node has mutable state
  - For each record, update state & send new records

Figure: input records arrive at node 1 and node 2, which push new records to node 3; each node holds mutable state.

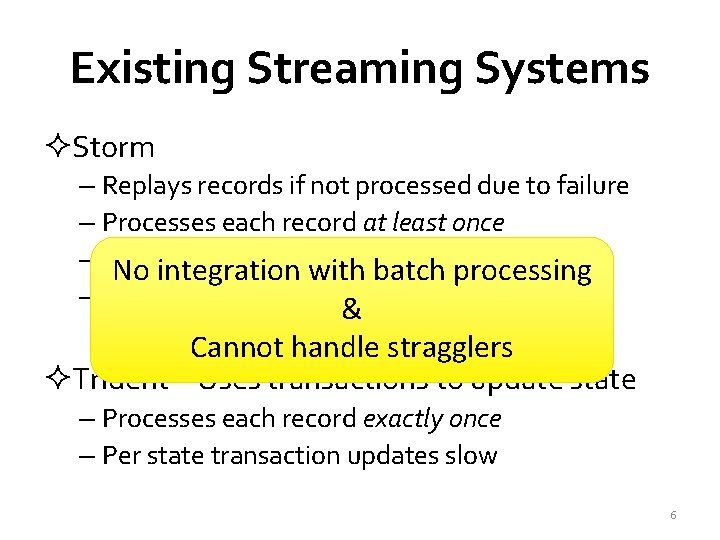

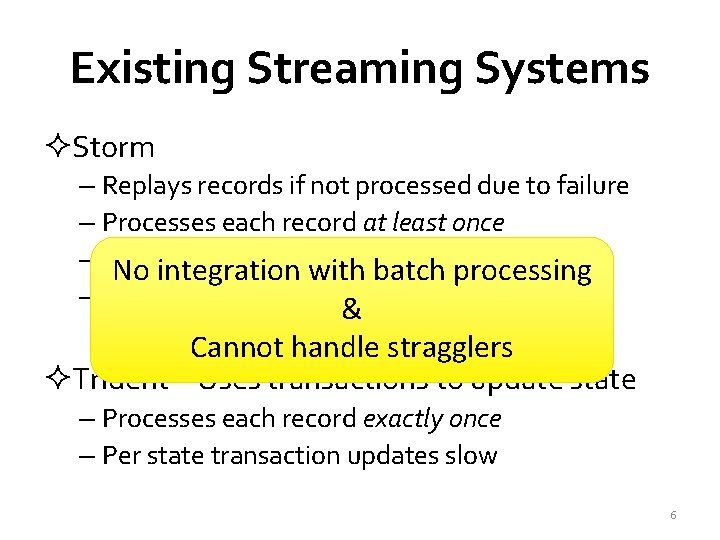

Existing Streaming Systems

- Storm
  - Replays records if not processed due to failure
  - Processes each record at least once
  - May update mutable state twice!
  - Mutable state can be lost due to failure!
- Trident
  - Uses transactions to update state
  - Processes each record exactly once
  - Per-state transaction updates are slow

No integration with batch processing & cannot handle stragglers

Spark Streaming

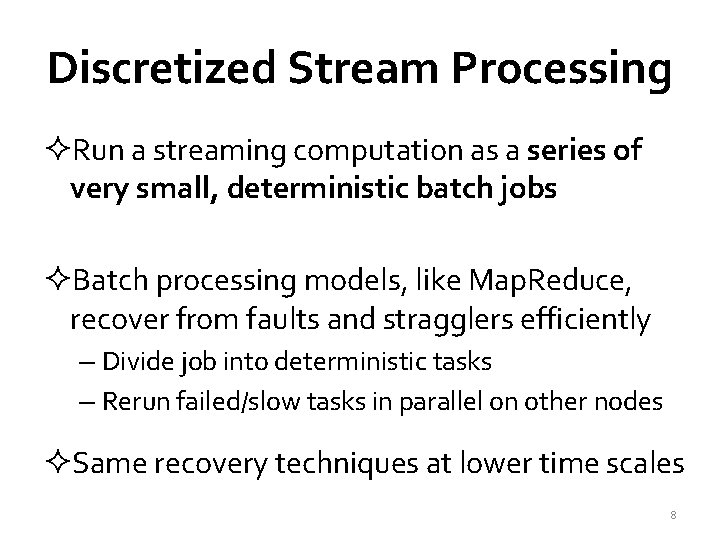

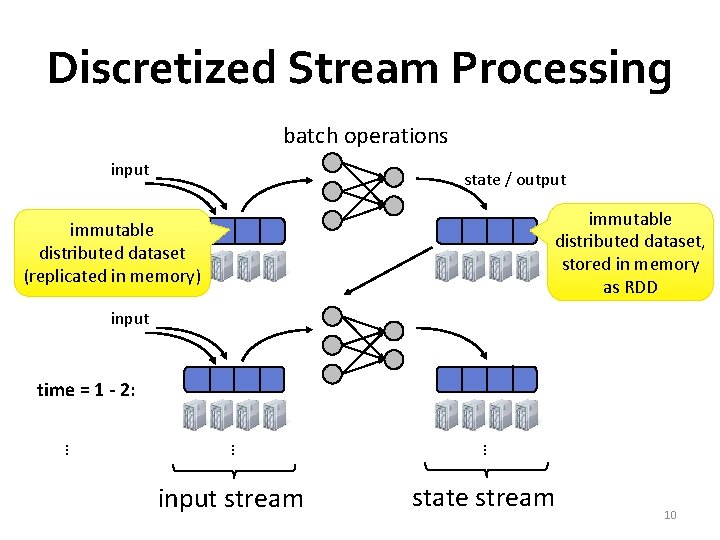

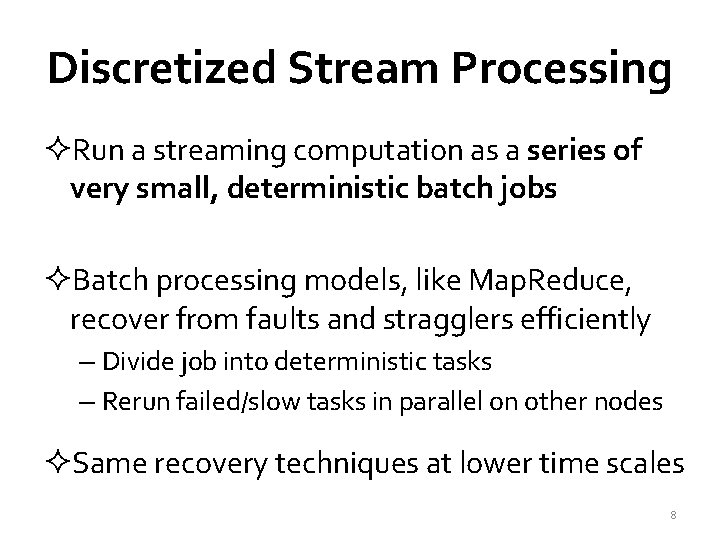

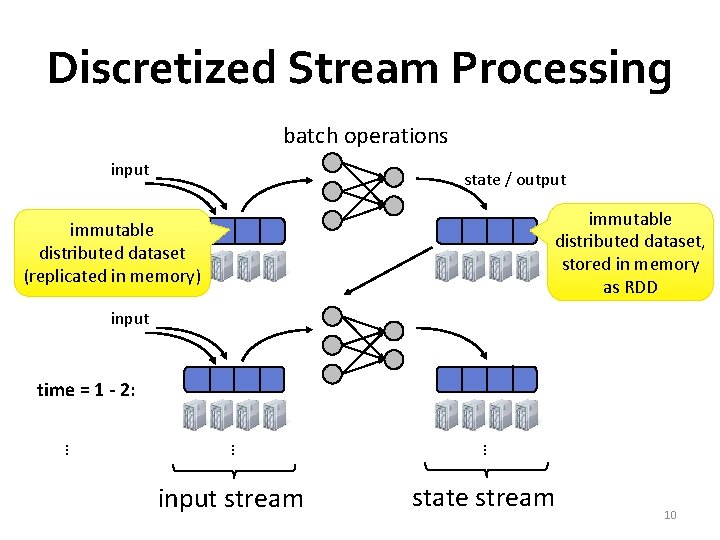

Discretized Stream Processing

- Run a streaming computation as a series of very small, deterministic batch jobs
- Batch processing models, like MapReduce, recover from faults and stragglers efficiently
  - Divide job into deterministic tasks
  - Rerun failed/slow tasks in parallel on other nodes
- Same recovery techniques at lower time scales

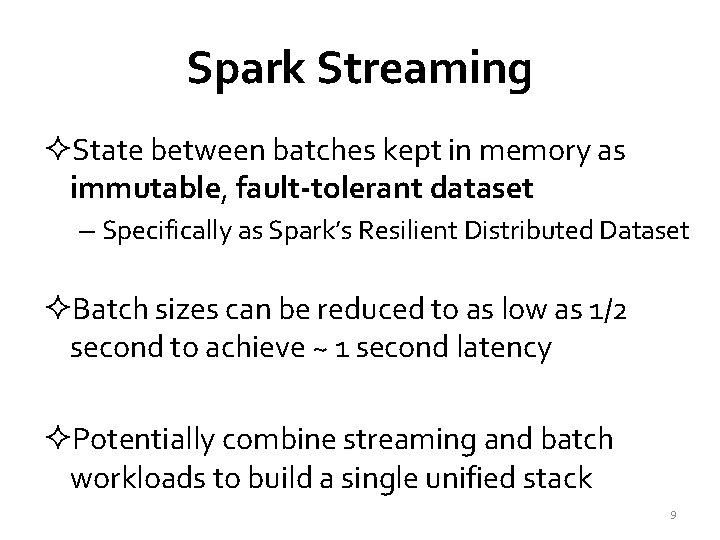

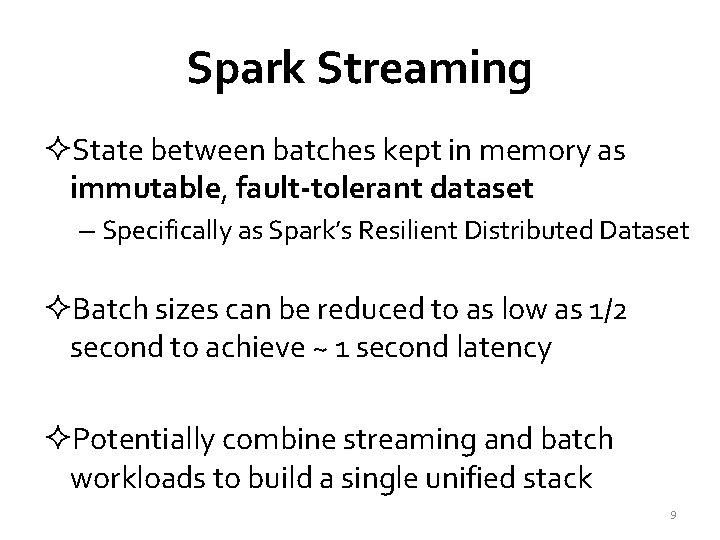

Spark Streaming

- State between batches is kept in memory as an immutable, fault-tolerant dataset
  - Specifically as Spark’s Resilient Distributed Dataset (RDD)
- Batch sizes can be reduced to as low as 1/2 second to achieve ~1 second latency
- Potentially combine streaming and batch workloads to build a single unified stack

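Discretized Stream Processing

Figure: for each time interval (time = 0 - 1, time = 1 - 2, …), batch operations turn that interval's input into state / output. The input is an immutable distributed dataset (replicated in memory); the state / output is an immutable distributed dataset, stored in memory as an RDD. The per-interval datasets form the input stream and the state stream.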
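The discretization idea can be sketched without Spark. The following is a minimal illustration on plain Scala collections, not Spark Streaming's implementation: `Record`, `discretize`, `batchJob`, and `run` are hypothetical names invented for this sketch, and a running word count stands in for the state. Records are grouped into fixed intervals, and each interval is processed by a deterministic function that takes the previous immutable state and returns a new one.

```scala
object MicroBatchSketch {
  // Hypothetical record type: an event timestamp in milliseconds and a word.
  final case class Record(timestampMs: Long, word: String)

  // Group records into consecutive intervals of `batchMs` milliseconds,
  // ordered by interval start. Intervals without records produce no batch.
  def discretize(records: Seq[Record], batchMs: Long): Seq[(Long, Seq[Record])] =
    records
      .groupBy(r => r.timestampMs / batchMs)
      .toSeq
      .sortBy(_._1)
      .map { case (interval, rs) => (interval * batchMs, rs) }

  // One deterministic batch job: fold a batch into the previous immutable state.
  def batchJob(state: Map[String, Int], batch: Seq[Record]): Map[String, Int] =
    batch.foldLeft(state) { (acc, r) =>
      acc.updated(r.word, acc.getOrElse(r.word, 0) + 1)
    }

  // Run the stream as a series of batch jobs; each step yields a new state value.
  def run(records: Seq[Record], batchMs: Long): Seq[Map[String, Int]] =
    discretize(records, batchMs)
      .scanLeft(Map.empty[String, Int]) { case (state, (_, batch)) => batchJob(state, batch) }
      .tail
}
```

Because `batchJob` is a pure function of its inputs, rerunning it on the same batch and the same prior state gives the same result, which is the property the recovery approach relies on.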

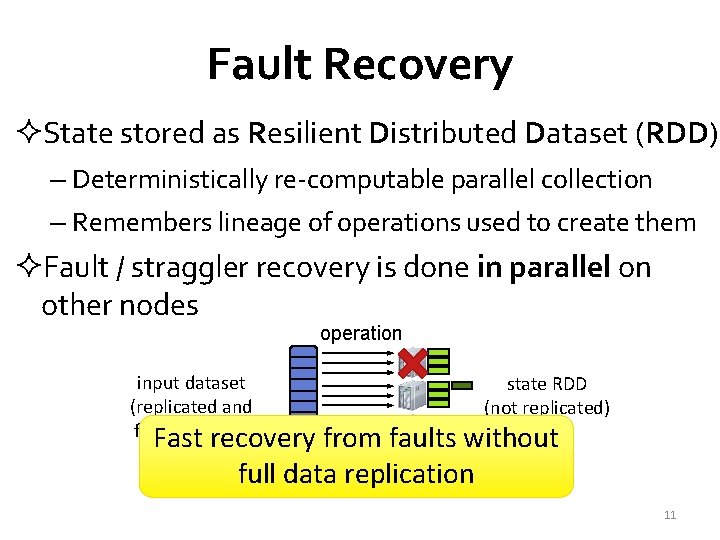

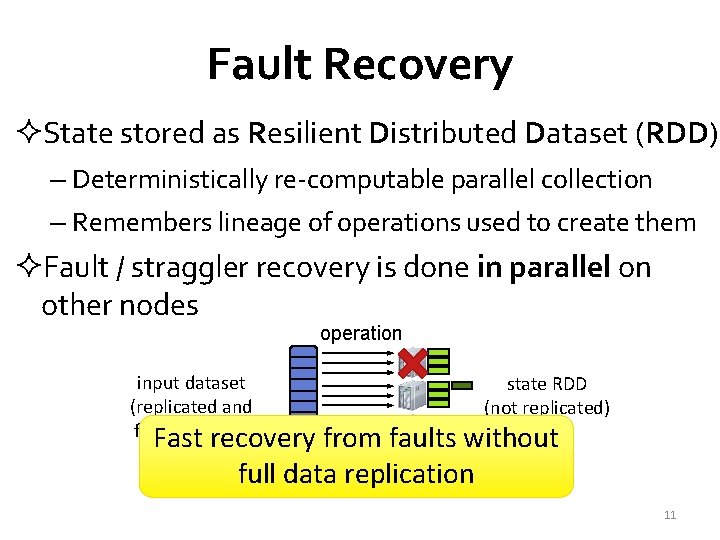

Fault Recovery

- State stored as Resilient Distributed Dataset (RDD)
  - Deterministically re-computable parallel collection
  - Remembers lineage of operations used to create them
- Fault / straggler recovery is done in parallel on other nodes

Figure: an operation derives the state RDD (not replicated) from the input dataset (replicated and fault-tolerant).

Fast recovery from faults without full data replication
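Lineage-based recovery can be illustrated with a toy model. This is a sketch under assumptions, not Spark's RDD implementation: `Dataset`, `Source`, `Mapped`, and `Cached` are hypothetical types, and recomputation here is sequential rather than parallel across nodes. A derived dataset stores only its parent and the deterministic operation applied to it, so a lost in-memory copy can be rebuilt from the replicated input.

```scala
object LineageSketch {
  // A dataset described by how to derive it (its lineage), not by stored data.
  sealed trait Dataset[A] { def compute(): Vector[A] }

  // Source data, assumed to be replicated and therefore always available.
  final case class Source[A](replicated: Vector[A]) extends Dataset[A] {
    def compute(): Vector[A] = replicated
  }

  // A derived dataset remembers its parent and the deterministic operation applied.
  final case class Mapped[A, B](parent: Dataset[A], f: A => B) extends Dataset[B] {
    def compute(): Vector[B] = parent.compute().map(f)
  }

  // An unreplicated in-memory copy of a dataset's result that can be lost.
  final class Cached[A](lineage: Dataset[A]) {
    private var data: Option[Vector[A]] = Some(lineage.compute())

    // Simulate a worker failure that drops the in-memory copy.
    def lose(): Unit = { data = None }

    def isLost: Boolean = data.isEmpty

    // Return the data, recomputing it from lineage if the copy was lost.
    def get(): Vector[A] = data.getOrElse {
      val rebuilt = lineage.compute()
      data = Some(rebuilt)
      rebuilt
    }
  }
}
```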

Programming Model

- A Discretized Stream, or DStream, is a series of RDDs representing a stream of data
  - API very similar to RDDs
- DStreams can be created…
  - Either from live streaming data
  - Or by transforming other DStreams

DStream Data Sources

- Many sources out of the box
  - HDFS
  - Kafka
  - Flume
  - Twitter
  - TCP sockets
  - Akka actor
  - ZeroMQ
- Easy to add your own

Contributed by external developers

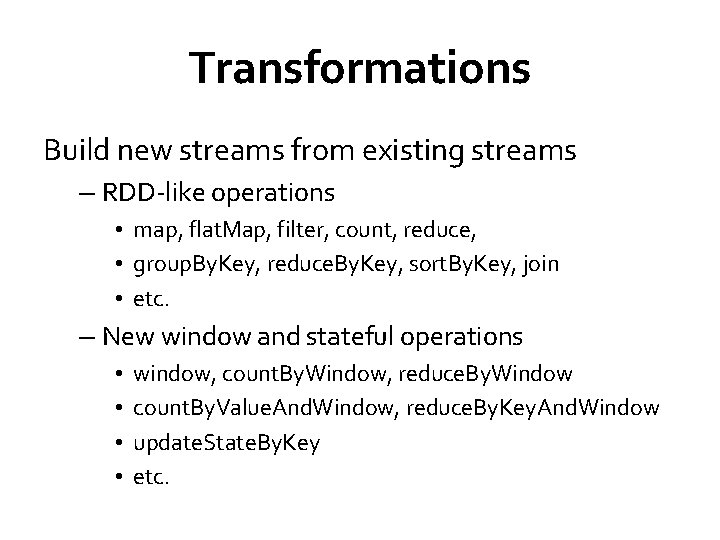

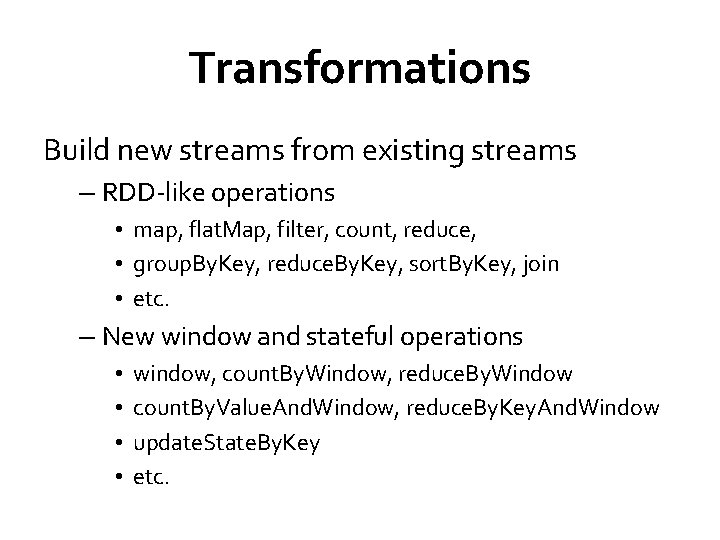

Transformations

Build new streams from existing streams

- RDD-like operations
  - map, flatMap, filter, count, reduce,
  - groupByKey, reduceByKey, sortByKey, join
  - etc.
- New window and stateful operations
  - window, countByWindow, reduceByWindow
  - countByValueAndWindow, reduceByKeyAndWindow
  - updateStateByKey
  - etc.
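To make the "series of RDDs" model and the transformation list concrete, here is a toy stand-in written on plain Scala collections. It is a sketch only: `ToyDStream` is a hypothetical type, each inner `Vector` plays the role of one RDD, and `window` takes a count of batches rather than the time durations used in the deck's code.

```scala
object DStreamSketch {
  // A toy "DStream": one Vector per batch interval, standing in for one RDD each.
  final case class ToyDStream[A](batches: Vector[Vector[A]]) {
    // RDD-like transformations apply the same operation to every batch
    // and yield a new stream; the original stream is left unchanged.
    def map[B](f: A => B): ToyDStream[B] =
      ToyDStream(batches.map(_.map(f)))

    def flatMap[B](f: A => Iterable[B]): ToyDStream[B] =
      ToyDStream(batches.map(_.flatMap(f)))

    def filter(p: A => Boolean): ToyDStream[A] =
      ToyDStream(batches.map(_.filter(p)))

    // Window: at each interval, concatenate the most recent `length` batches.
    def window(length: Int): ToyDStream[A] =
      ToyDStream(batches.indices.toVector.map { i =>
        batches.slice(math.max(0, i - length + 1), i + 1).flatten
      })
  }
}
```

For example, splitting lines into words and then windowing over two batches yields, at each interval, the words from the current and previous batch.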

Output Operations

Send data to the outside world

- saveAsHadoopFiles
- print: prints on the driver’s screen
- foreach: arbitrary operation on every RDD

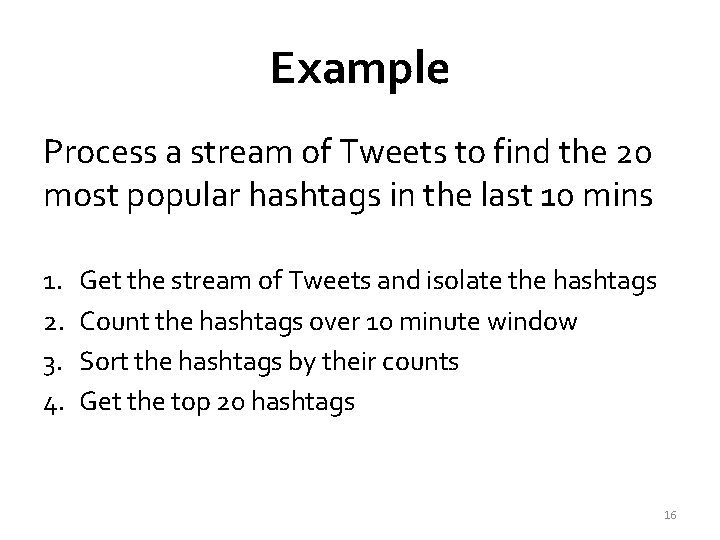

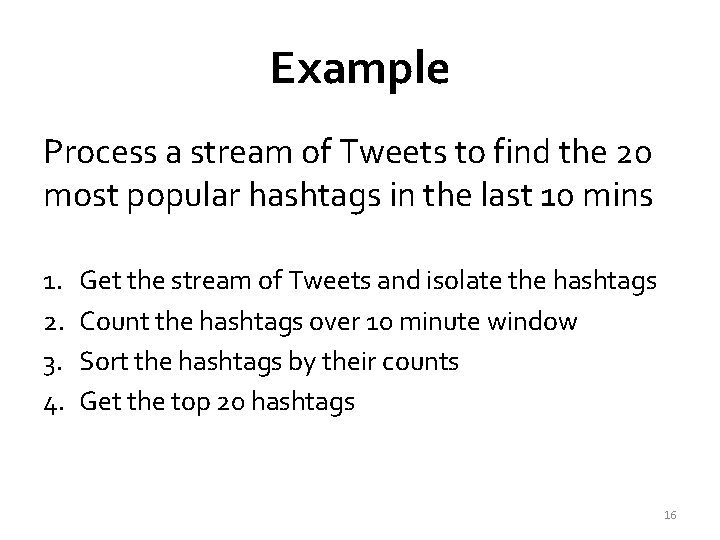

Example

Process a stream of Tweets to find the 20 most popular hashtags in the last 10 mins

1. Get the stream of Tweets and isolate the hashtags
2. Count the hashtags over a 10 minute window
3. Sort the hashtags by their counts
4. Get the top 20 hashtags

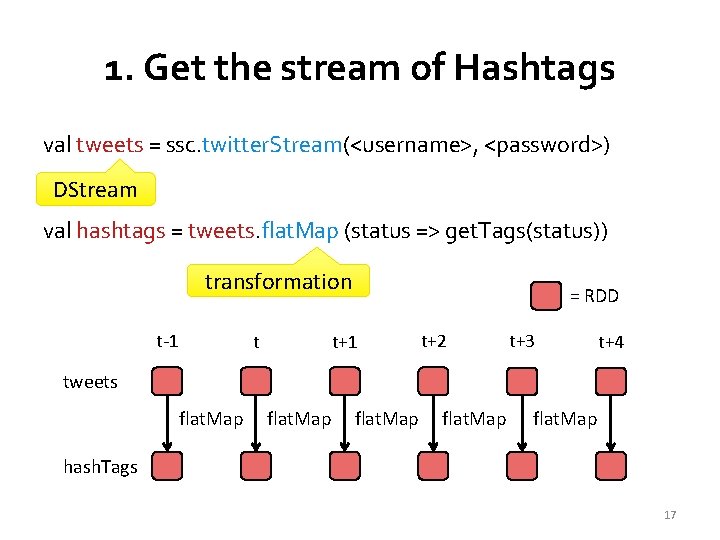

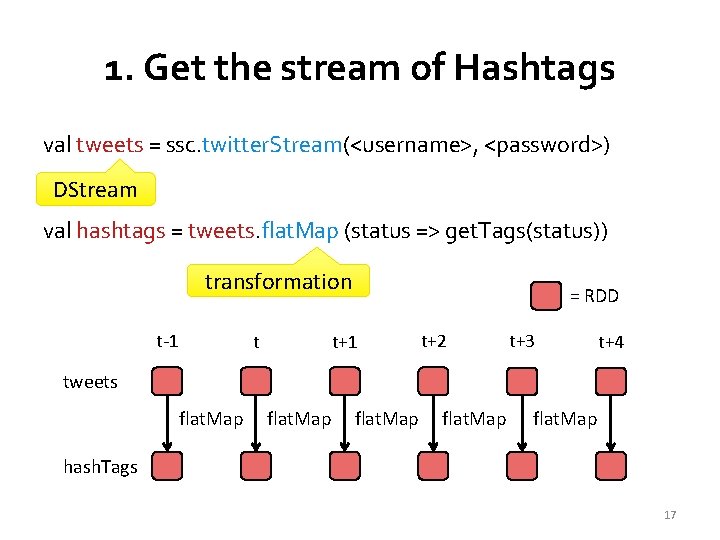

1. Get the stream of hashtags

    val tweets = ssc.twitterStream(<username>, <password>)
    val hashTags = tweets.flatMap(status => getTags(status))

- tweets is a DStream; flatMap is a transformation that creates the hashTags DStream.
- Figure: at each interval (t-1, t, t+1, t+2, t+3, t+4), flatMap turns one RDD of tweets into one RDD of hashTags.

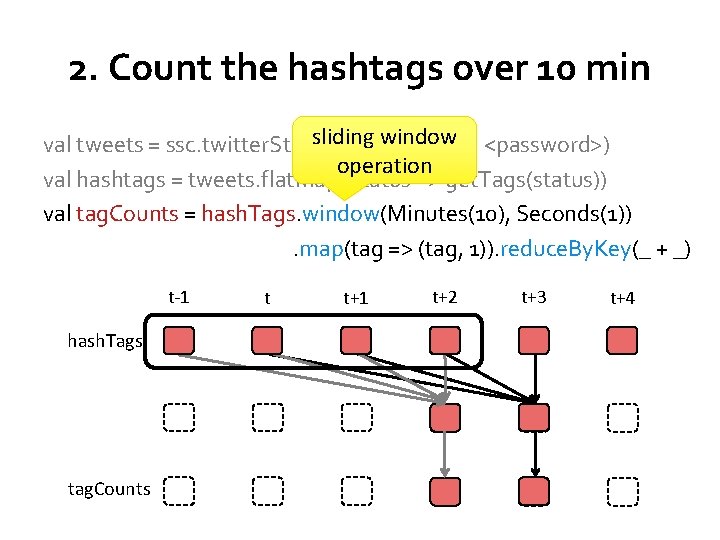

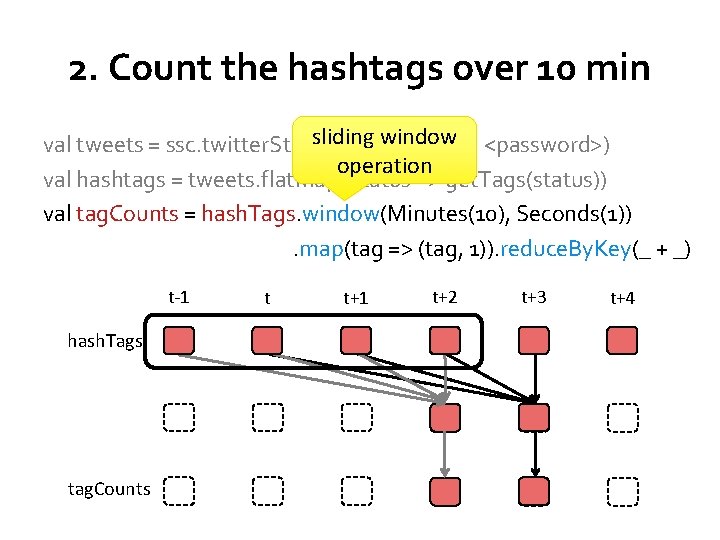

2. Count the hashtags over 10 min

    val tweets = ssc.twitterStream(<username>, <password>)
    val hashTags = tweets.flatMap(status => getTags(status))
    val tagCounts = hashTags.window(Minutes(10), Seconds(1)).map(tag => (tag, 1)).reduceByKey(_ + _)

- window is a sliding window operation.
- Figure: at each interval (t-1, t, t+1, t+2, t+3, t+4), the hashTags RDDs that fall inside the window are combined into one tagCounts RDD.

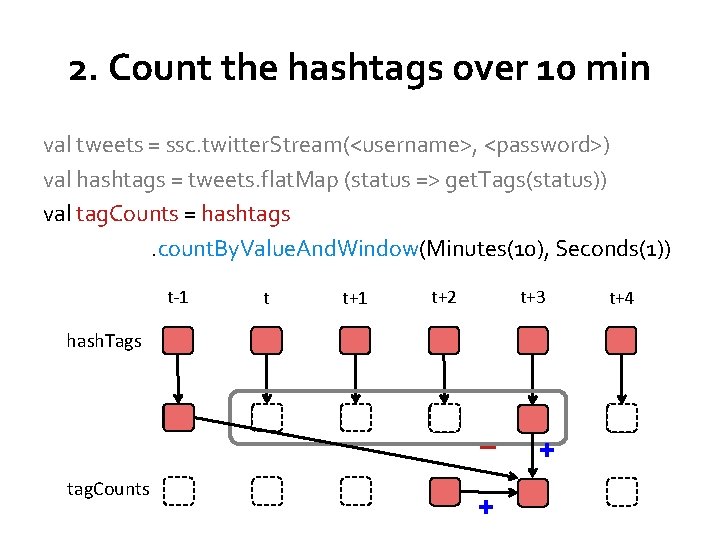

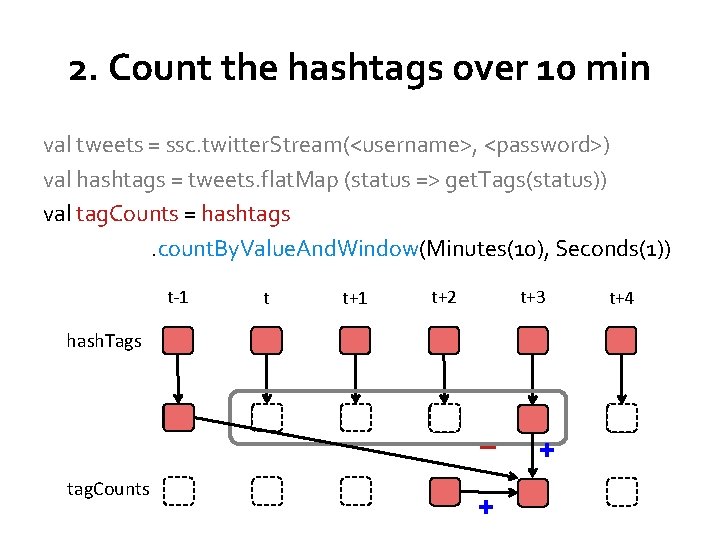

2. Count the hashtags over 10 min

    val tweets = ssc.twitterStream(<username>, <password>)
    val hashTags = tweets.flatMap(status => getTags(status))
    val tagCounts = hashTags.countByValueAndWindow(Minutes(10), Seconds(1))

- Figure: at each interval (t-1, t, t+1, t+2, t+3, t+4), tagCounts is updated from the previous tagCounts by subtracting (–) the hashTags batch that left the window and adding (+) the batch that entered it.

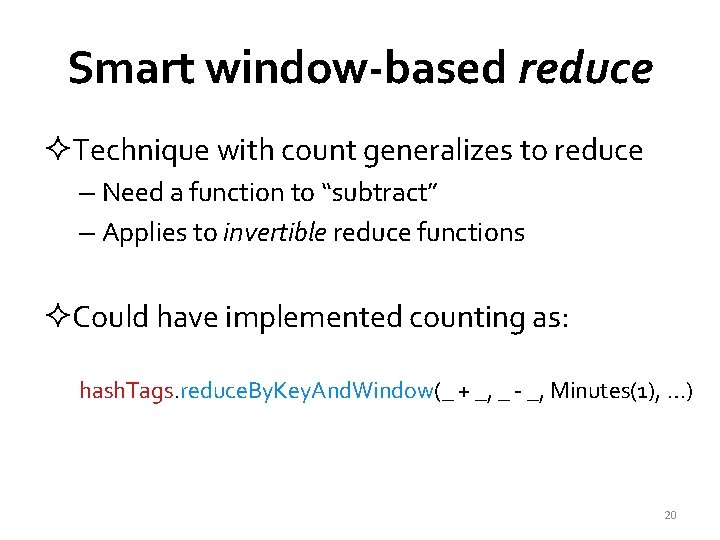

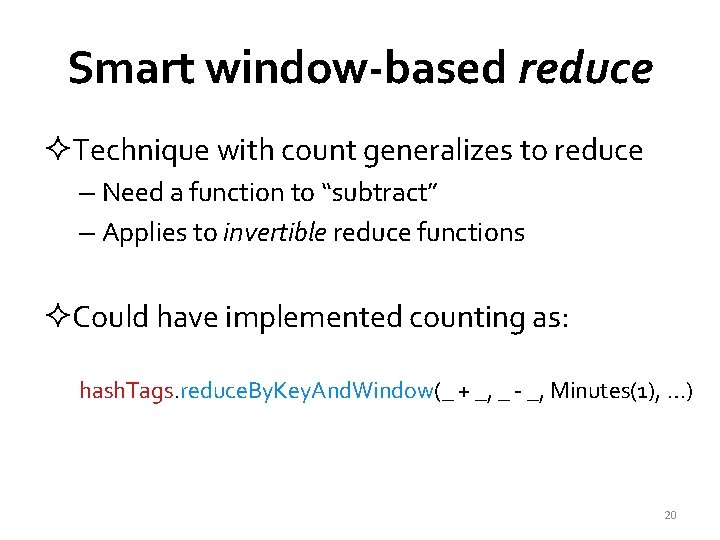

Smart window-based reduce

- Technique with count generalizes to reduce
  - Need a function to “subtract”
  - Applies to invertible reduce functions
- Could have implemented counting as:

    hashTags.reduceByKeyAndWindow(_ + _, _ - _, Minutes(1), …)
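The incremental technique can be checked against a naive recount on plain collections. The sketch below is illustrative only and does not use Spark's API: `countBatch`, `merge`, `naive`, and `incremental` are hypothetical helpers, and the window is measured in batches rather than in time. The incremental version adds the newest batch's counts with `_ + _` and removes the batch that slid out of the window with the inverse function `_ - _`.

```scala
object WindowSketch {
  type Counts = Map[String, Int]

  // Count occurrences within one batch.
  def countBatch(batch: Seq[String]): Counts =
    batch.groupBy(identity).map { case (tag, occurrences) => (tag, occurrences.size) }

  // Combine two count maps with `op`, dropping keys whose result is zero.
  def merge(a: Counts, b: Counts)(op: (Int, Int) => Int): Counts =
    (a.keySet ++ b.keySet).iterator
      .map(k => k -> op(a.getOrElse(k, 0), b.getOrElse(k, 0)))
      .filter(_._2 != 0)
      .toMap

  // Naive: at every interval, recount all batches currently in the window.
  def naive(batches: Seq[Seq[String]], window: Int): Seq[Counts] =
    batches.indices.map { i =>
      val inWindow = batches.slice(math.max(0, i - window + 1), i + 1)
      inWindow.map(countBatch).foldLeft(Map.empty[String, Int])((acc, c) => merge(acc, c)(_ + _))
    }

  // Incremental: new window = previous window + newest batch - batch that slid out.
  def incremental(batches: Seq[Seq[String]], window: Int): Seq[Counts] = {
    val perBatch = batches.map(countBatch).toVector
    perBatch.indices.scanLeft(Map.empty[String, Int]) { (prev, i) =>
      val added = merge(prev, perBatch(i))(_ + _)
      if (i >= window) merge(added, perBatch(i - window))(_ - _) else added
    }.tail
  }
}
```

The incremental version touches two batches per interval regardless of window length, whereas the naive version touches every batch in the window. It only works because addition has an inverse; a reduce such as `max` would not qualify.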

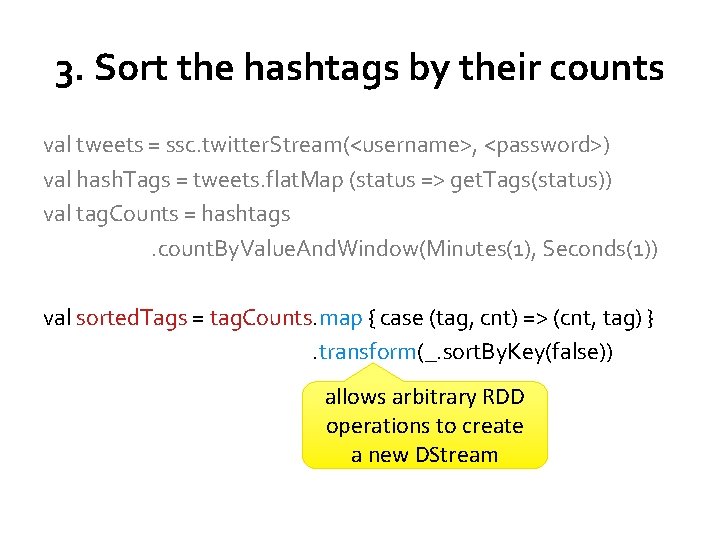

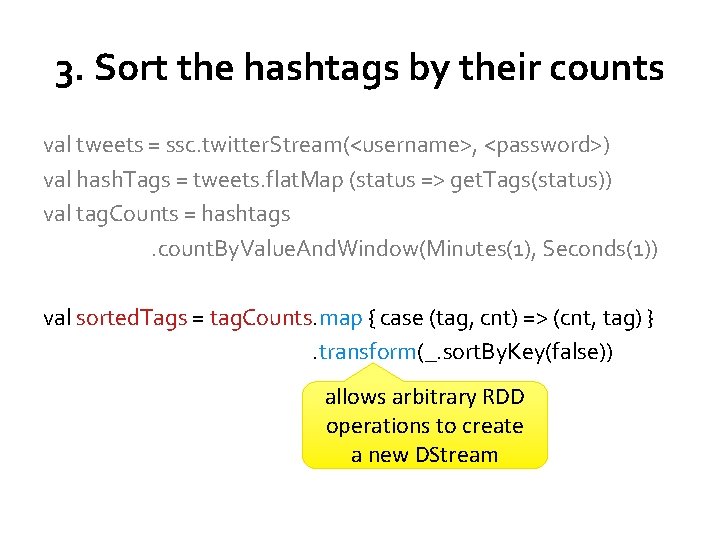

3. Sort the hashtags by their counts

    val tweets = ssc.twitterStream(<username>, <password>)
    val hashTags = tweets.flatMap(status => getTags(status))
    val tagCounts = hashTags.countByValueAndWindow(Minutes(10), Seconds(1))
    val sortedTags = tagCounts.map { case (tag, cnt) => (cnt, tag) }.transform(_.sortByKey(false))

- transform allows arbitrary RDD operations to create a new DStream.

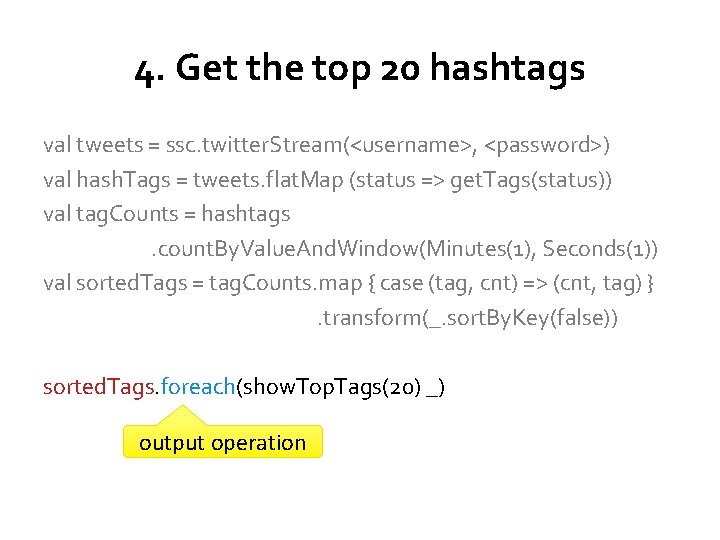

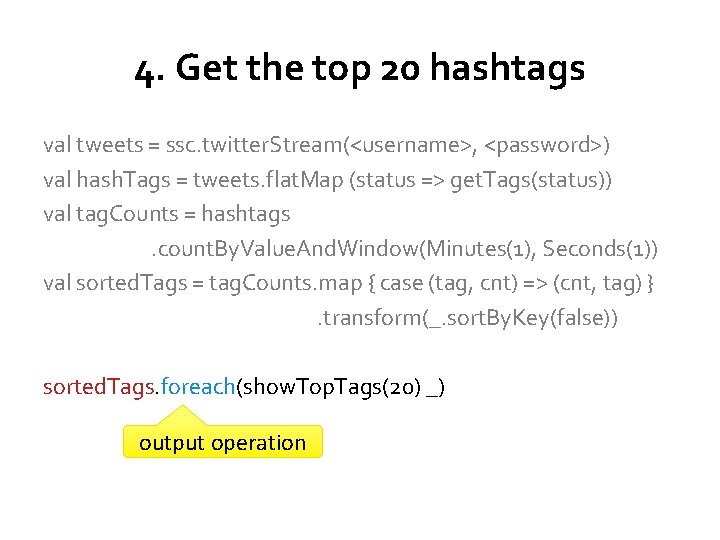

4. Get the top 20 hashtags

    val tweets = ssc.twitterStream(<username>, <password>)
    val hashTags = tweets.flatMap(status => getTags(status))
    val tagCounts = hashTags.countByValueAndWindow(Minutes(10), Seconds(1))
    val sortedTags = tagCounts.map { case (tag, cnt) => (cnt, tag) }.transform(_.sortByKey(false))
    sortedTags.foreach(showTopTags(20) _)

- foreach is an output operation.

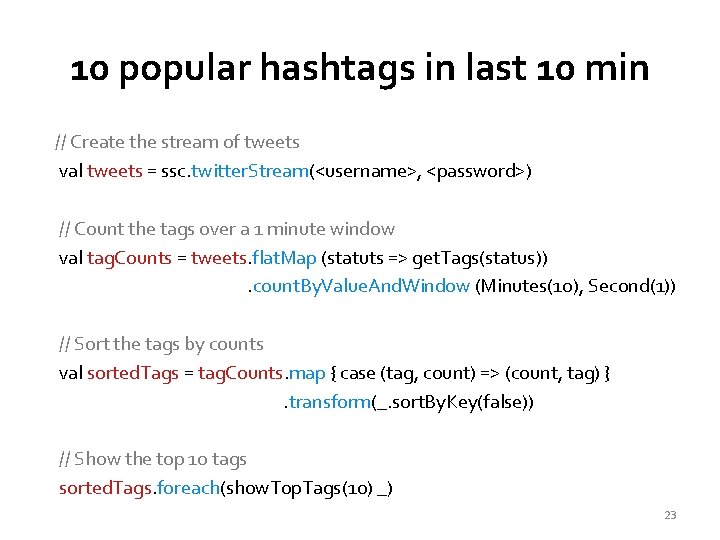

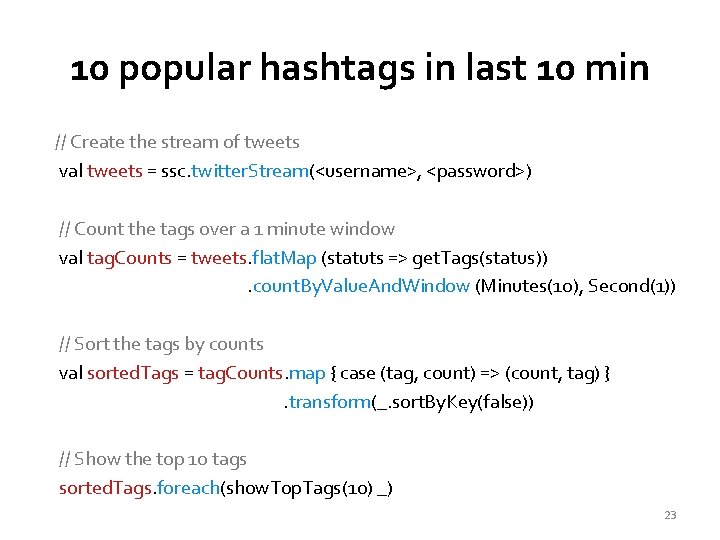

10 popular hashtags in last 10 min

    // Create the stream of tweets
    val tweets = ssc.twitterStream(<username>, <password>)

    // Count the tags over a 10 minute window
    val tagCounts = tweets.flatMap(status => getTags(status))
                          .countByValueAndWindow(Minutes(10), Seconds(1))

    // Sort the tags by counts
    val sortedTags = tagCounts.map { case (tag, count) => (count, tag) }
                              .transform(_.sortByKey(false))

    // Show the top 10 tags
    sortedTags.foreach(showTopTags(10) _)
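The same pipeline shape can be tried on an ordinary collection holding one window's worth of tweet text. This is a sketch under assumptions rather than the deck's code: the slides do not show `getTags`, so the version here (whitespace-separated tokens starting with `#`) is a hypothetical stand-in, and ties are broken alphabetically, which the slides do not specify.

```scala
object TopTagsSketch {
  // Hypothetical stand-in for the slides' getTags: extract "#tag" tokens from text.
  def getTags(status: String): Seq[String] =
    status.split("\\s+").toSeq.filter(w => w.startsWith("#") && w.length > 1)

  // Same steps as the streaming pipeline, applied to one window of tweets:
  // isolate tags, count by value, swap to (count, tag), sort descending, take k.
  def topTags(tweets: Seq[String], k: Int): Seq[(Int, String)] =
    tweets
      .flatMap(getTags)
      .groupBy(identity)
      .toSeq
      .map { case (tag, occurrences) => (occurrences.size, tag) }
      .sortBy { case (count, tag) => (-count, tag) } // ties broken by tag (assumption)
      .take(k)
}
```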

Demo

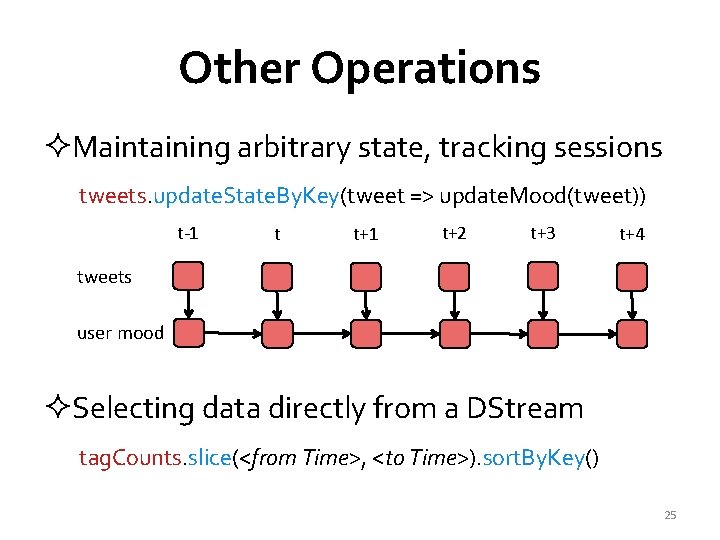

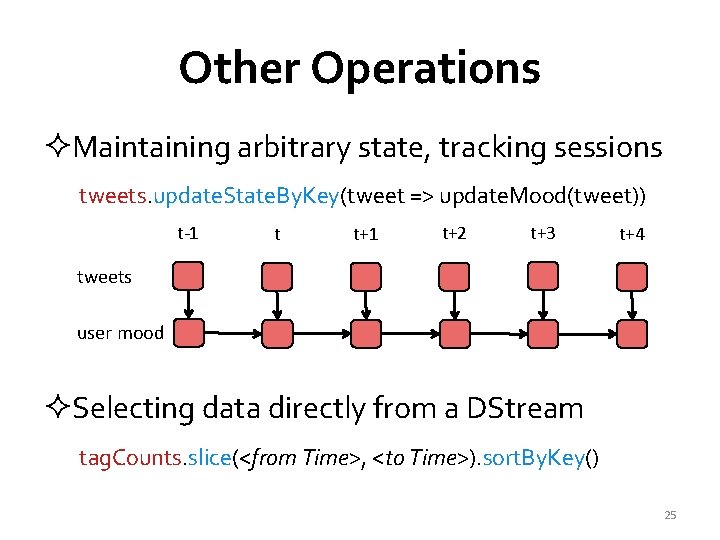

Other Operations

- Maintaining arbitrary state, tracking sessions

    tweets.updateStateByKey(tweet => updateMood(tweet))

  Figure: at each interval (t-1, t, t+1, t+2, t+3, t+4), the tweets RDD and the previous user mood RDD produce the next user mood RDD.

- Selecting data directly from a DStream

    tagCounts.slice(<from Time>, <to Time>).sortByKey()
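Per-key state maintenance can be sketched on plain collections. The helper below is hypothetical and is not Spark's implementation; it assumes an update function of the shape `(Seq[V], Option[S]) => Option[S]`, which receives the batch's new values for a key together with that key's previous state. The one-argument call on the slide is a simplification of that idea.

```scala
object StateSketch {
  // Apply one batch of (key, value) pairs to the previous state.
  // `updateFn` sees the new values for a key and the key's old state, if any;
  // returning None drops the key from the state.
  def updateStateByKey[K, V, S](
      state: Map[K, S],
      batch: Seq[(K, V)]
  )(updateFn: (Seq[V], Option[S]) => Option[S]): Map[K, S] = {
    val newValues: Map[K, Seq[V]] =
      batch.groupBy(_._1).map { case (k, kvs) => (k, kvs.map(_._2)) }

    // Visit keys with new values and keys that only have old state.
    (state.keySet ++ newValues.keySet).iterator.flatMap { k =>
      updateFn(newValues.getOrElse(k, Seq.empty[V]), state.get(k)).map(s => k -> s).iterator
    }.toMap
  }
}
```

Feeding each batch's result back in as the next call's `state` gives a running per-key value, for example a running sum per user.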

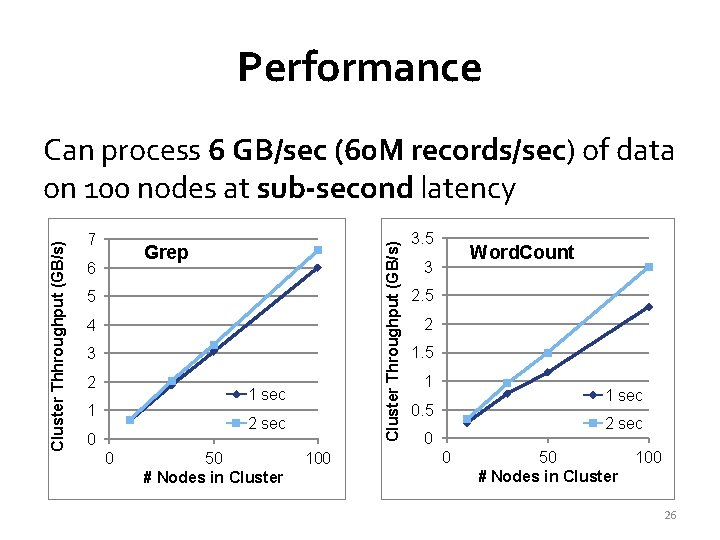

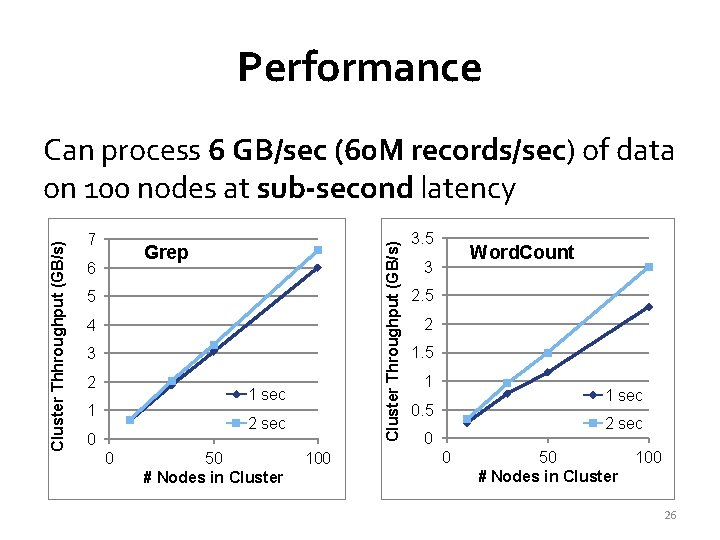

Performance

Can process 6 GB/sec (60M records/sec) of data on 100 nodes at sub-second latency

Figure: cluster throughput (GB/s) versus number of nodes in cluster (0 to 100), one panel for Grep and one for WordCount, each with series labeled 1 sec and 2 sec.

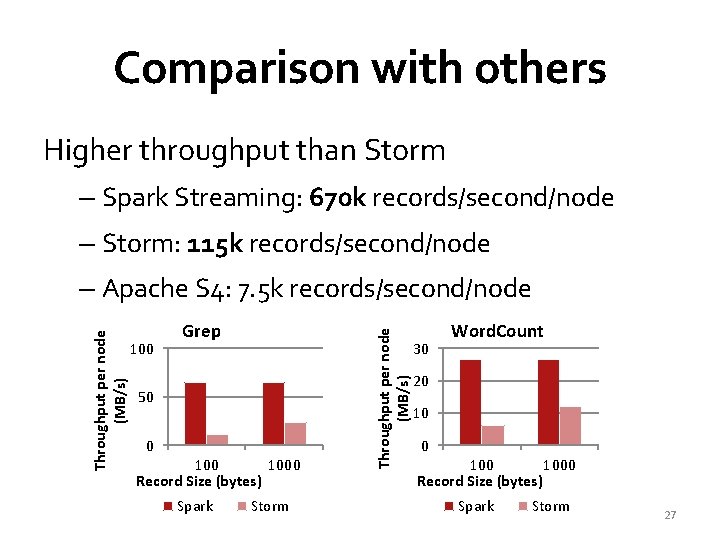

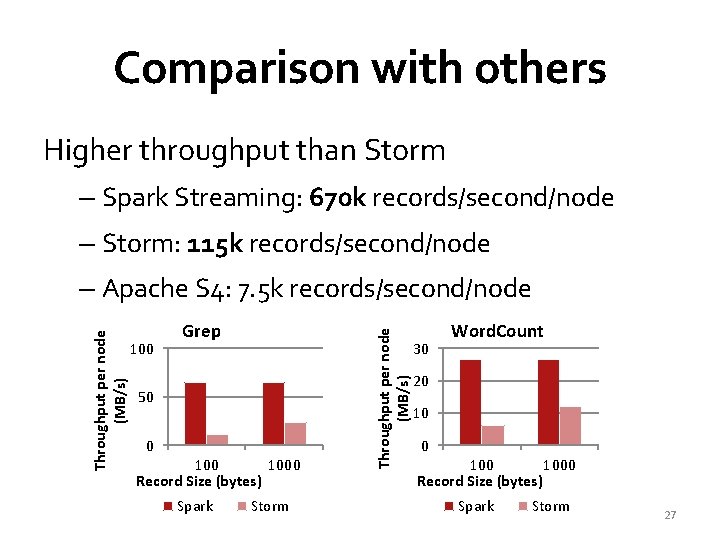

Comparison with others

Higher throughput than Storm

- Spark Streaming: 670k records/second/node
- Storm: 115k records/second/node
- Apache S4: 7.5k records/second/node

Figure: throughput per node (MB/s) versus record size (bytes), one panel for Grep and one for WordCount, comparing Spark and Storm.

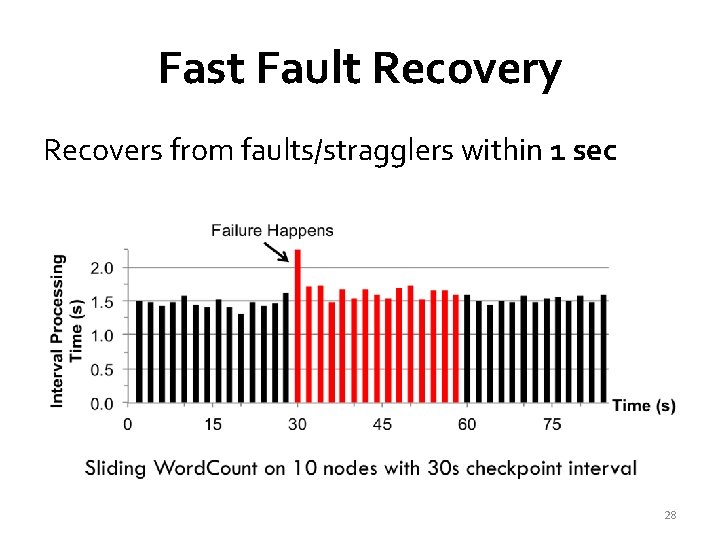

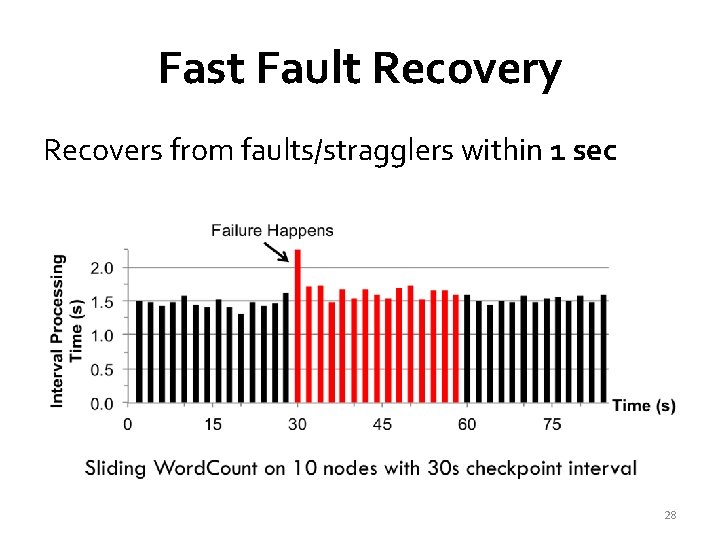

Fast Fault Recovery

Recovers from faults/stragglers within 1 sec

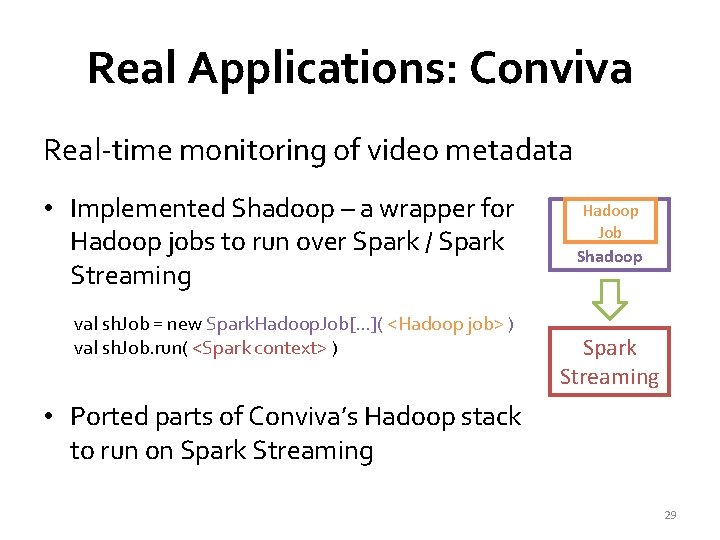

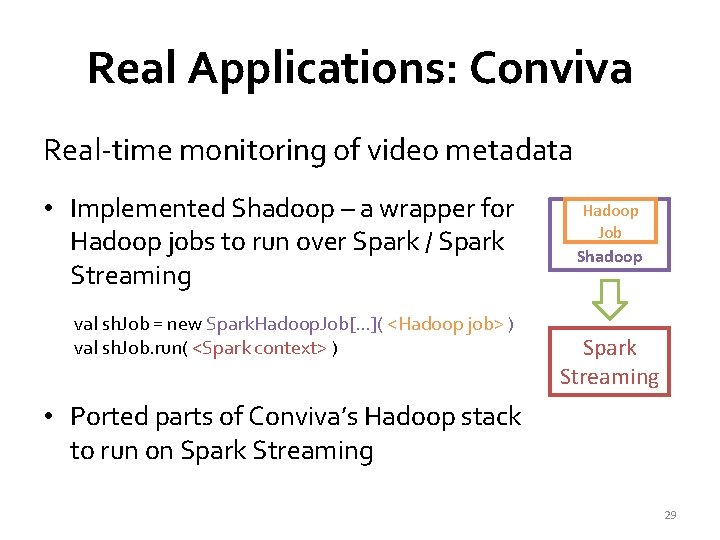

Real Applications: Conviva

Real-time monitoring of video metadata

- Implemented Shadoop, a wrapper for Hadoop jobs to run over Spark / Spark Streaming

    val shJob = new SparkHadoopJob[…]( <Hadoop job> )
    shJob.run( <Spark context> )

- Ported parts of Conviva’s Hadoop stack to run on Spark Streaming

Figure: a Hadoop Job is wrapped by Shadoop and runs on Spark Streaming.

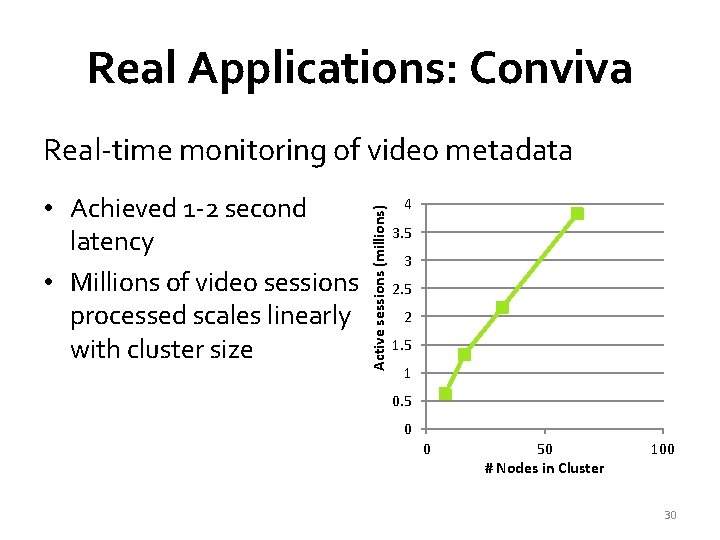

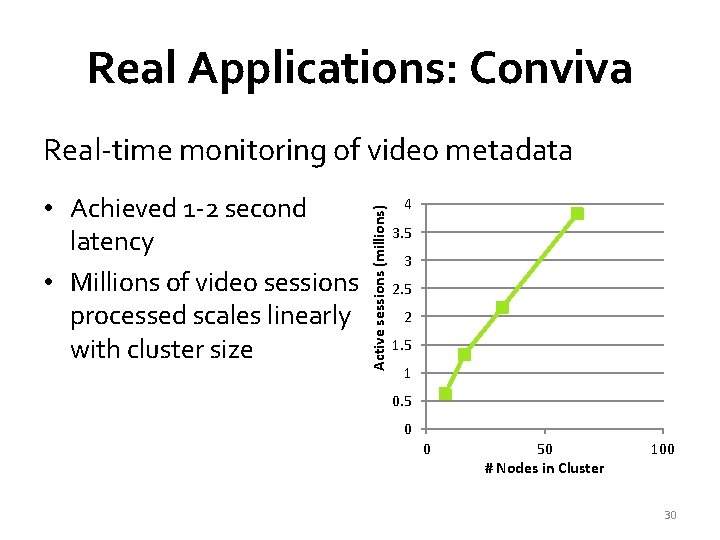

Real Applications: Conviva

Real-time monitoring of video metadata

- Achieved 1-2 second latency
- The number of video sessions processed (in millions) scales linearly with cluster size

Figure: active sessions (millions) versus number of nodes in cluster (0 to 100).

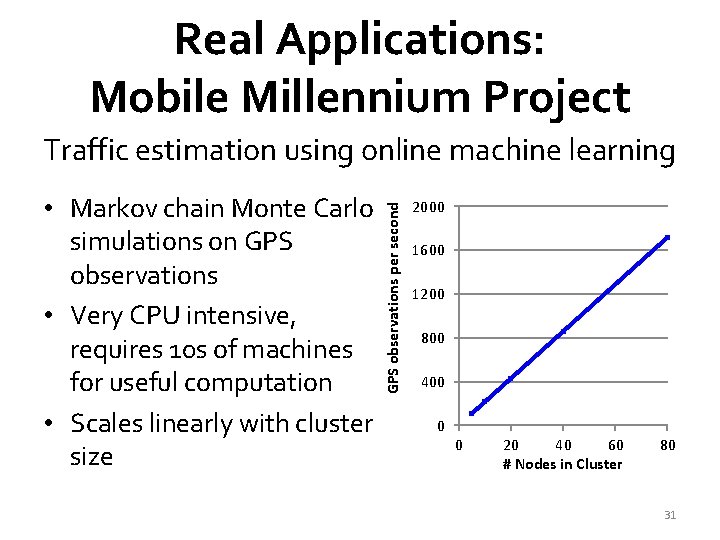

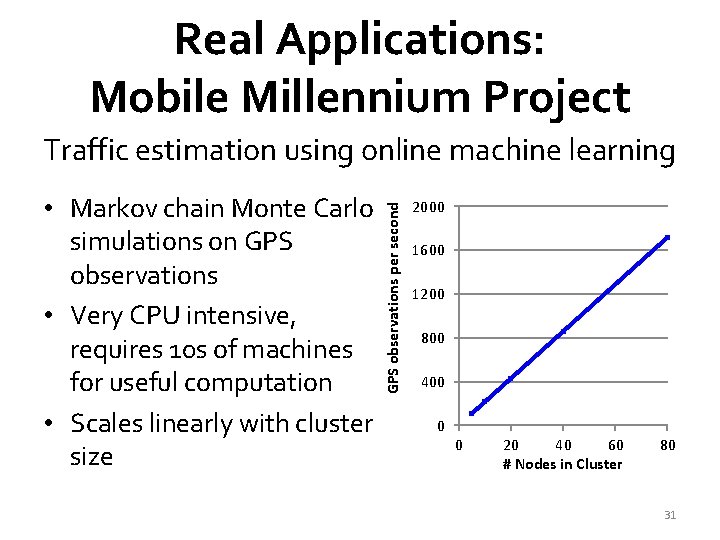

Real Applications: Mobile Millennium Project

Traffic estimation using online machine learning

- Markov chain Monte Carlo simulations on GPS observations
- Very CPU intensive, requires 10s of machines for useful computation
- Scales linearly with cluster size

Figure: GPS observations per second versus number of nodes in cluster (0 to 80).

Failure Semantics

- Input data replicated by the system
- Lineage of deterministic ops used to recompute RDD from input data if a worker node fails
- Transformations: exactly once
- Output operations: at least once
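At-least-once output means a batch's output operation may run more than once after a failure. The slides do not prescribe how to deal with that; one common approach, shown here purely as an illustration, is to make the write idempotent by keying it on the batch time. Both sink classes below are hypothetical in-memory stand-ins for an external store.

```scala
import scala.collection.mutable

object OutputSketch {
  // Hypothetical store keyed by batch time: a repeated write overwrites the same entry.
  final class IdempotentSink {
    private val store = mutable.Map.empty[Long, Seq[String]]

    def write(batchTimeMs: Long, records: Seq[String]): Unit = {
      store(batchTimeMs) = records
    }

    // All stored records, ordered by batch time.
    def all: Seq[String] = store.toSeq.sortBy(_._1).flatMap(_._2)
  }

  // Hypothetical append-only store: a repeated write duplicates the batch.
  final class AppendSink {
    private val store = mutable.ArrayBuffer.empty[String]

    def write(batchTimeMs: Long, records: Seq[String]): Unit = {
      store ++= records
      ()
    }

    def all: Seq[String] = store.toList
  }
}
```

Writing the same batch twice leaves the keyed sink with one copy and the append-only sink with two, which is the difference a downstream consumer would see under at-least-once delivery.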

Java API for Streaming

- Developed by Patrick Wendell
- Similar to Spark Java API
- Don’t need to know Scala to try streaming!

Contributors

- 5 contributors from UCB, 3 external contributors
  - Matei Zaharia, Haoyuan Li
  - Patrick Wendell
  - Denny Britz
  - Sean McNamara*
  - Prashant Sharma*
  - Nick Pentreath*
  - Tathagata Das

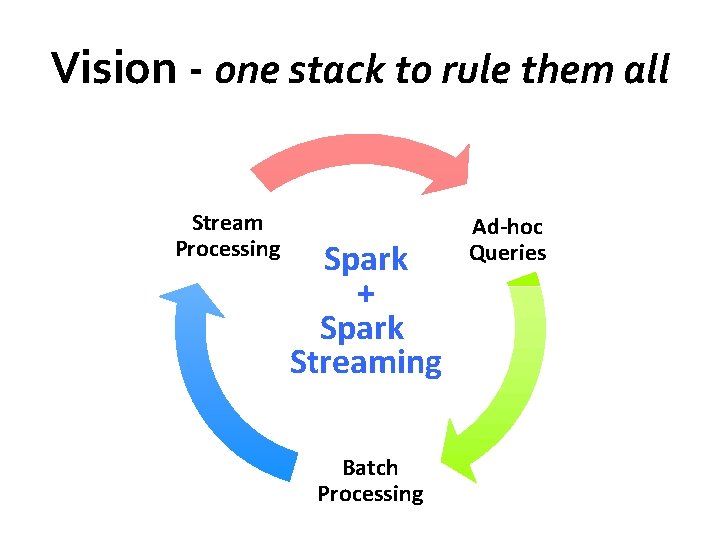

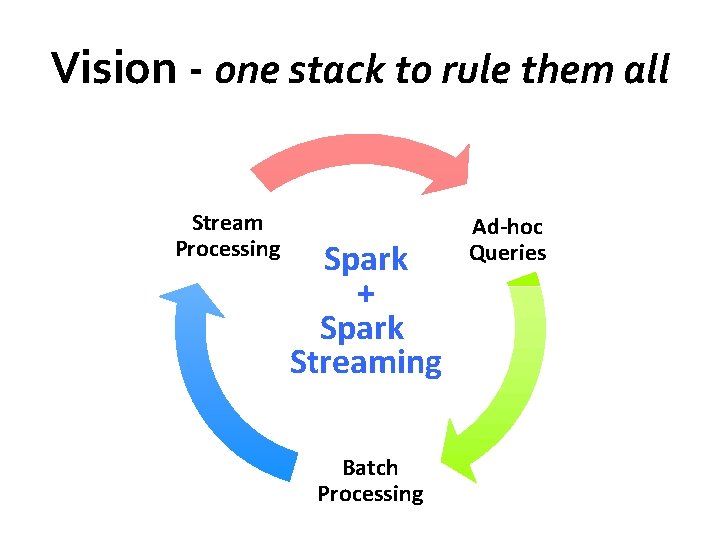

Vision - one stack to rule them all

Figure: Spark + Spark Streaming at the center of stream processing, batch processing, and ad-hoc queries.


Conclusion

- Alpha to be released with Spark 0.7 by the weekend
- Look at the new Streaming Programming Guide
- More about the Spark Streaming system in our paper: http://tinyurl.com/dstreams
- Join us at Strata on Feb 26 in Santa Clara