Sound Applications Advanced Multimedia Tamara Berg

Reminder
• HW 2 due March 13, 11:59 pm
• Questions?

Howard Leung

Audio Indexing and Retrieval
• Features for representing audio:
  – Metadata
  – Low-level features
  – High-level audio features
• Example usage cases:
  – Audio classification
  – Music retrieval

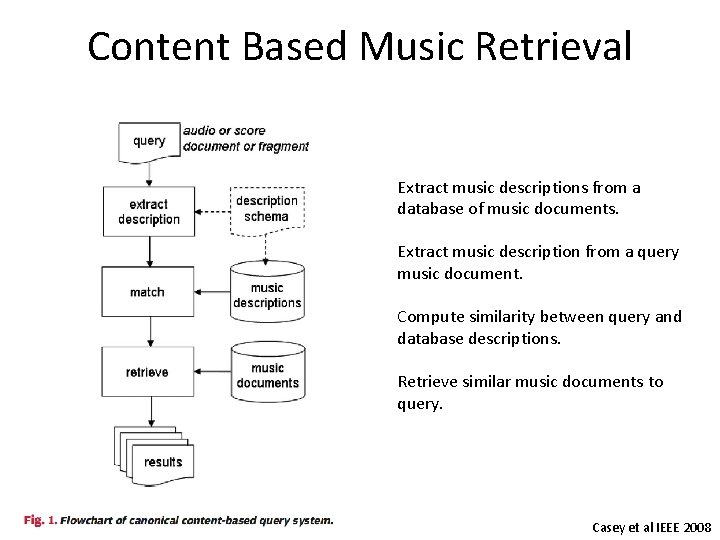

Content Based Music Retrieval
• Extract music descriptions from a database of music documents.
• Extract a music description from a query music document.
• Compute the similarity between the query description and the database descriptions.
• Retrieve the music documents most similar to the query.
Casey et al IEEE 2008
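
The four retrieval steps can be sketched in a few lines of Python. This is only an illustration: the feature vectors, the track names, and the choice of Euclidean distance as the similarity measure are assumptions made for the example, not something the slide specifies.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def retrieve(query_desc, database, k=2):
    """Return the names of the k documents whose descriptions are closest to the query."""
    ranked = sorted(database, key=lambda name: euclidean(query_desc, database[name]))
    return ranked[:k]

# Hypothetical, hand-made descriptions (three made-up feature values per track).
database = {
    "track_a": [1.20, 0.30, 0.80],
    "track_b": [0.60, 0.70, 0.20],
    "track_c": [1.18, 0.35, 0.75],
}
query = [1.21, 0.31, 0.79]

print(retrieve(query, database))
```

In a real system the descriptions would come from a feature extractor run over the audio, and the distance function would be chosen to suit the task.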

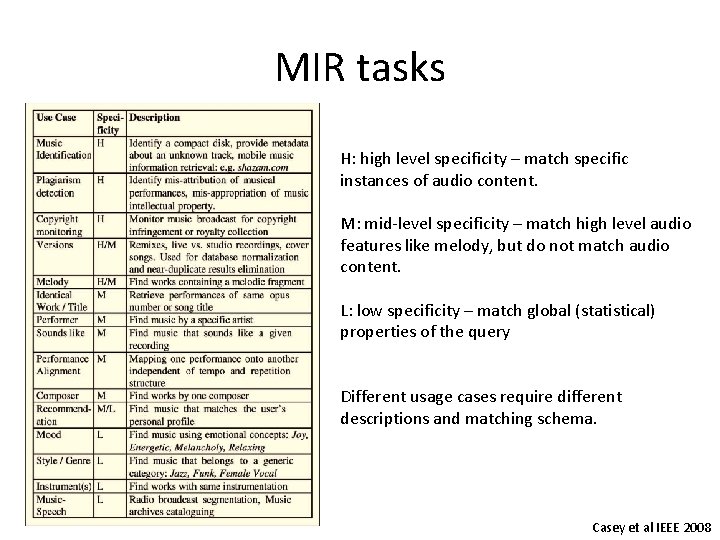

MIR tasks
• H: high specificity – match specific instances of audio content.
• M: mid specificity – match high-level audio features such as melody, but do not match the audio content itself.
• L: low specificity – match global (statistical) properties of the query.
Different usage cases require different descriptions and matching schemes.
Casey et al IEEE 2008

Metadata
• Most common method of accessing music
• Can be rich and expressive
• When catalogues become very large, it is difficult to maintain consistent metadata
• Useful for low-specificity queries
Casey et al IEEE 2008

Metadata
• Pandora.com – uses metadata to estimate artist similarity and track similarity, and creates personalized radio stations. Experts entered metadata describing musical-cultural properties (20-30 minutes of an expert's time per track; 50 person-years for 1 million tracks).
• Crowd-sourced metadata repositories (Gracenote, MusicBrainz):
  – Factual metadata (artist, album, year, title, duration)
  – Cultural metadata (mood, emotion, genre, style)
• Automatic metadata methods – generate descriptions from community metadata automatically, using language analysis to associate noun and verb phrases with musical features (Whitman & Rifkin).
Casey et al IEEE 2008

Content features
• Low level or high level
• Want features to be robust to certain changes in the audio signal:
  – Noise
  – Volume
  – Sampling
• High-level features will be more robust to changes; low-level features will be less robust.
• Low-level features will be easy to compute; high-level features will be difficult.

Low level audio features
• Low-level measurements of the audio signal that contain information about a musical work.
• Can be computed periodically (at 10-1000 ms intervals) or beat-synchronously.
In text analysis we had words; here we have to come up with our own set of features to compute from the audio signal!
Casey et al IEEE 2008
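
As a rough illustration of computing a feature periodically, the sketch below cuts a signal into short overlapping frames and computes one number per frame. The 8000 Hz sampling rate, the 25 ms frame length, the 10 ms hop, and the choice of RMS amplitude as the feature are all assumptions made for the example.

```python
import math

def frames(signal, frame_len, hop):
    """Split a list of samples into fixed-length frames, one every `hop` samples."""
    return [signal[i:i + frame_len]
            for i in range(0, len(signal) - frame_len + 1, hop)]

def rms(frame):
    """Root-mean-square amplitude of one frame (a simple low-level feature)."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))

sample_rate = 8000                       # assumed sampling rate in Hz
frame_len = sample_rate * 25 // 1000     # 25 ms -> 200 samples
hop = sample_rate * 10 // 1000           # 10 ms -> 80 samples

# One second of a 440 Hz sine wave as a stand-in for real audio.
signal = [math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(sample_rate)]

# One feature value per frame: the music is now a sequence of numbers.
features = [rms(f) for f in frames(signal, frame_len, hop)]
print(len(features), round(features[0], 3))
```

A beat-synchronous variant would replace the fixed hop with frame boundaries taken from a beat tracker, which is not shown here.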

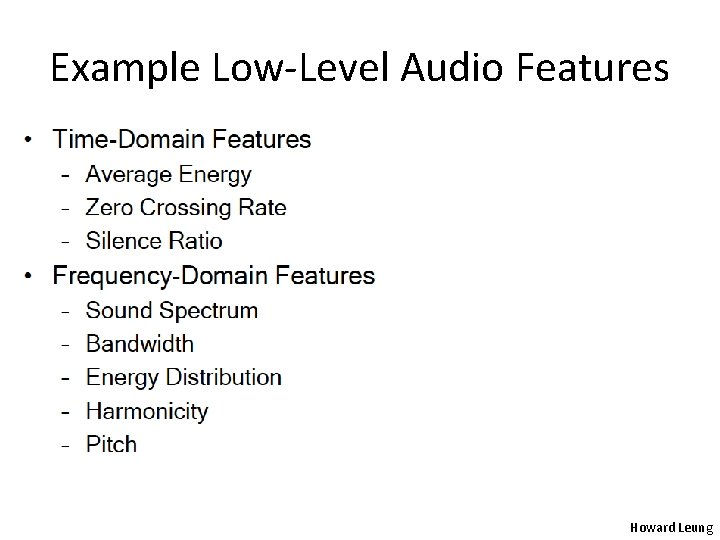

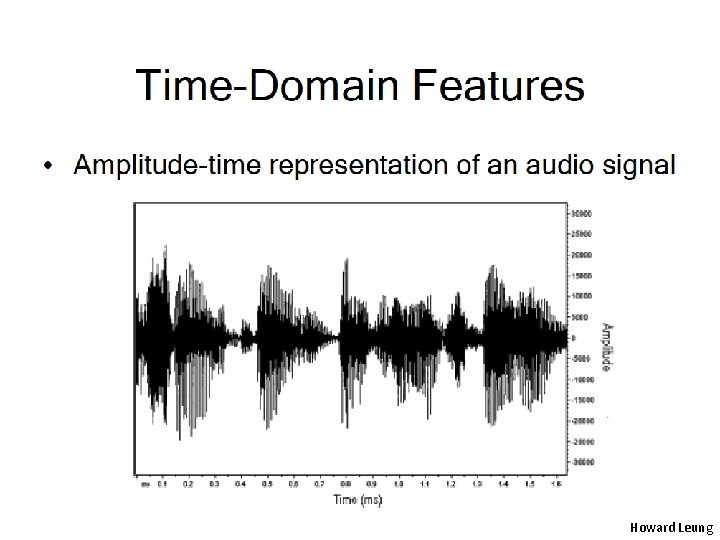

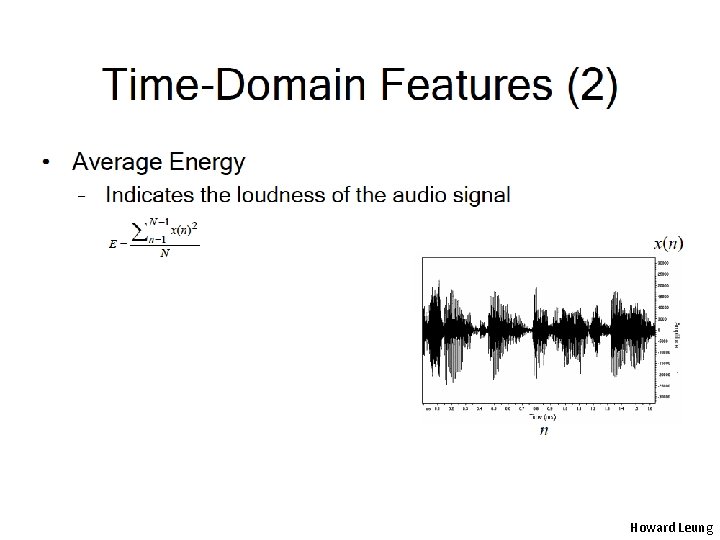

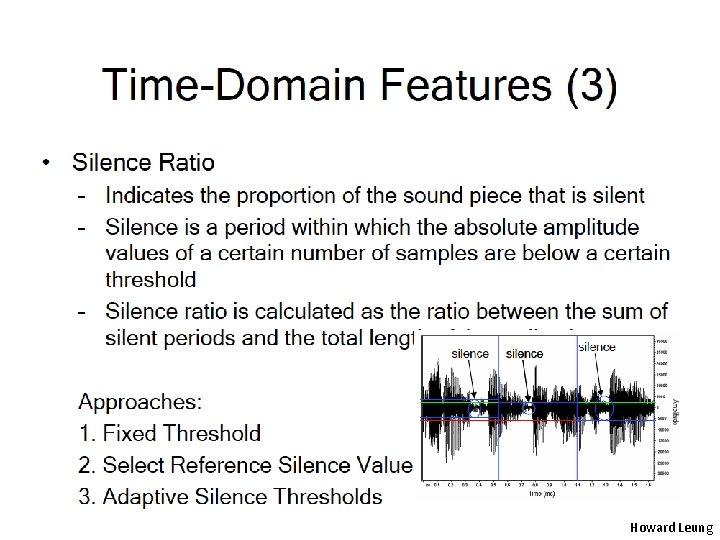

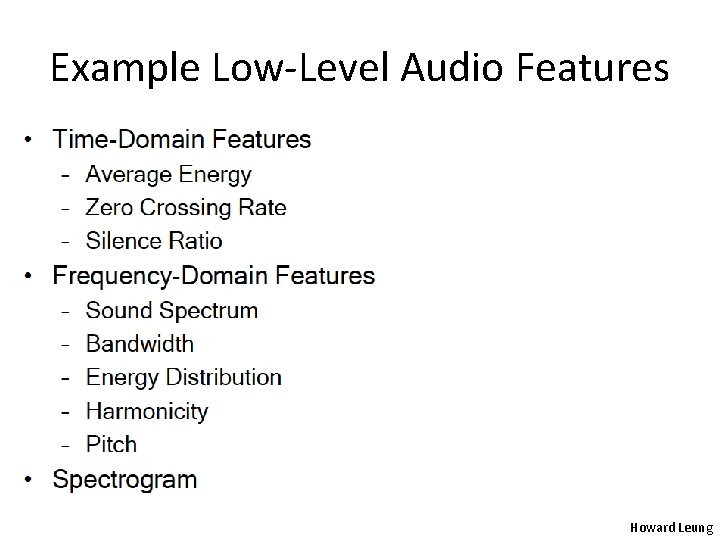

Example Low-Level Audio Features Howard Leung

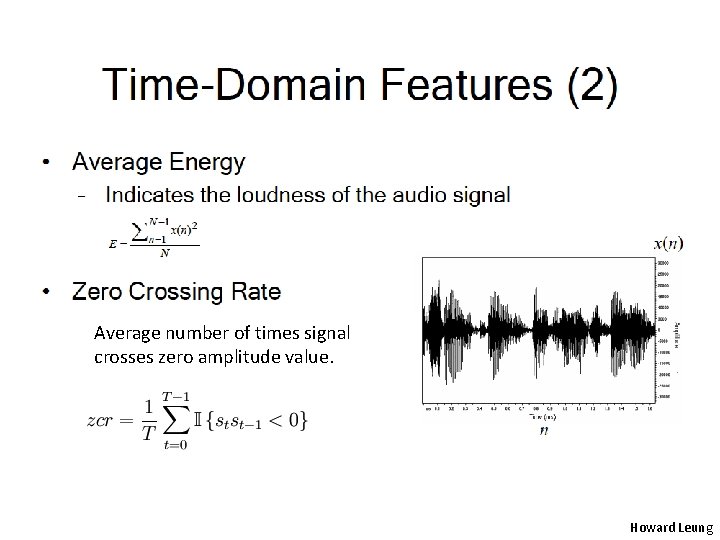

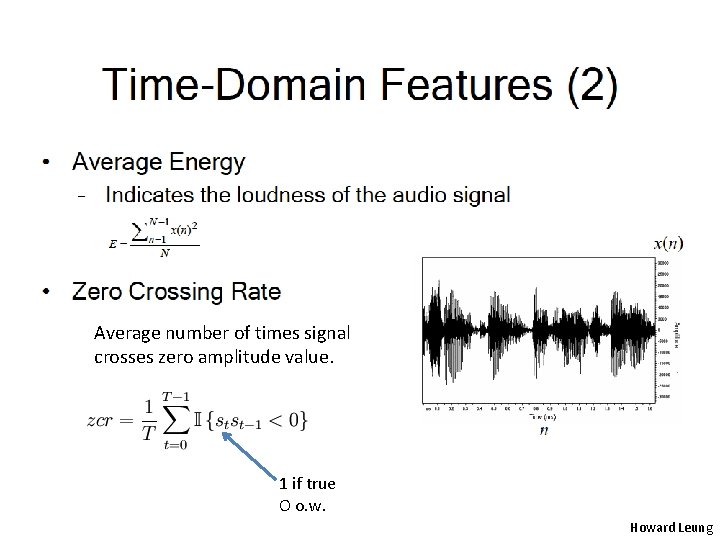

Average number of times the signal crosses the zero amplitude value (indicator term: 1 if true, 0 otherwise). Howard Leung
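
This measure is commonly called the zero-crossing rate. The slide's formula did not survive extraction, so the sketch below is one plausible reading: count the adjacent sample pairs whose signs differ and divide by the number of pairs. The normalization by the number of pairs and the treatment of exact zeros as positive are assumptions.

```python
def zero_crossing_rate(samples):
    """Fraction of adjacent sample pairs whose signs differ."""
    if len(samples) < 2:
        return 0.0
    crossings = sum(
        1 if (a >= 0) != (b >= 0) else 0   # indicator: 1 if true, 0 otherwise
        for a, b in zip(samples, samples[1:])
    )
    return crossings / (len(samples) - 1)

slow = [1, 1, 1, -1, -1, -1, 1, 1]     # changes sign twice
fast = [1, -1, 1, -1, 1, -1, 1, -1]    # changes sign at every step

print(zero_crossing_rate(slow), zero_crossing_rate(fast))
```

A signal dominated by high frequencies or noise tends to give a higher value than a slowly varying one, which is why this cheap time-domain feature is useful.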

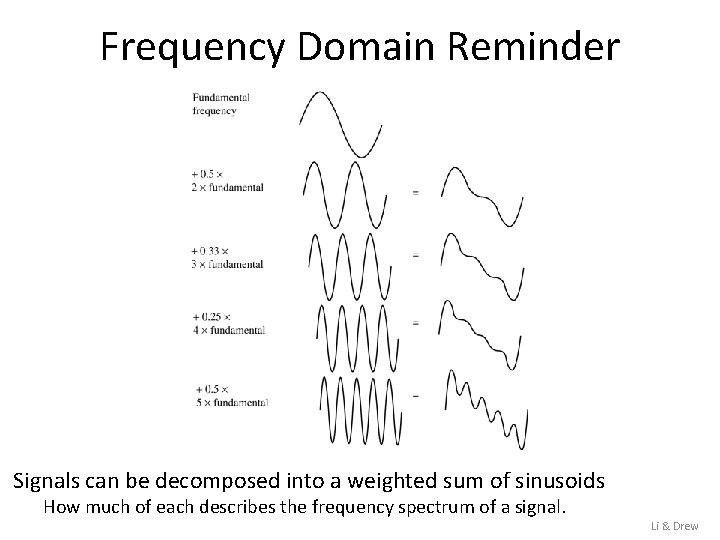

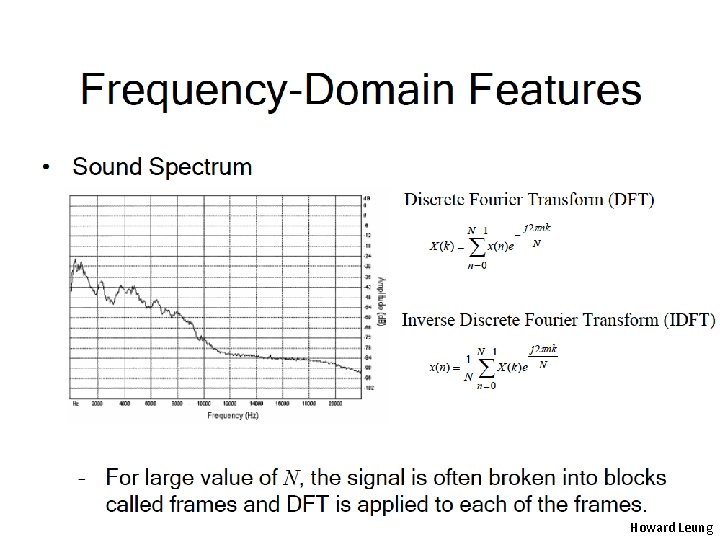

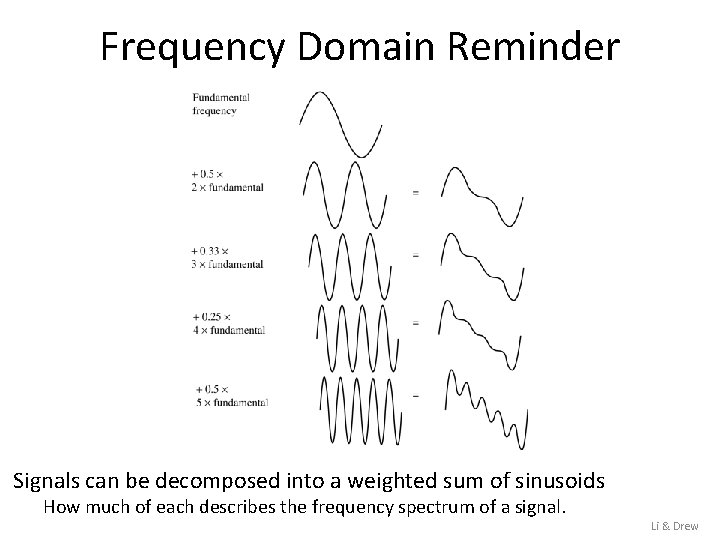

Frequency Domain Reminder
Signals can be decomposed into a weighted sum of sinusoids. How much of each sinusoid is present describes the frequency spectrum of the signal.
Li & Drew

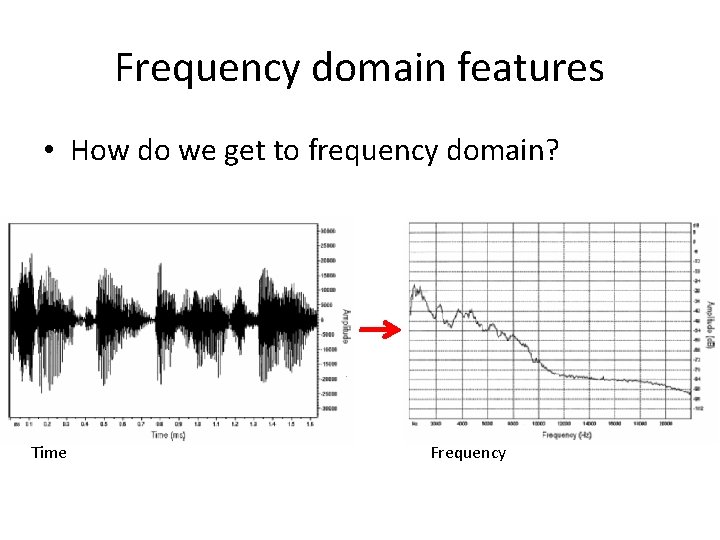

Frequency domain features
• How do we get to the frequency domain?
(Figure labels: Time, Frequency)

DFT
• Discrete Fourier Transform (DFT) of the audio: converts it to a frequency representation.
  – DFT analysis occurs in terms of a number of equally spaced 'bins'.
  – Each bin represents a particular frequency range.
  – DFT analysis gives the amount of energy in the audio signal that is present within the frequency range of each bin.
• Inverse Discrete Fourier Transform (IDFT): converts from the frequency representation back to the audio signal.

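
To make the idea of bins concrete, here is a minimal sketch that computes a DFT directly from its definition and reads off the energy per bin. It uses a slow O(N²) loop for clarity; a real system would use an FFT library. The test signal and the 64 Hz sampling rate are assumptions chosen so that each bin is exactly 1 Hz wide.

```python
import cmath
import math

def dft(x):
    """Naive O(N^2) discrete Fourier transform of a list of samples."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

sample_rate = 64    # assumed sampling rate in Hz
n = 64              # number of samples, so each bin spans sample_rate / n = 1 Hz

# A 5 Hz sinusoid plus a weaker 12 Hz sinusoid.
signal = [math.sin(2 * math.pi * 5 * t / sample_rate)
          + 0.5 * math.sin(2 * math.pi * 12 * t / sample_rate)
          for t in range(n)]

energy = [abs(c) ** 2 for c in dft(signal)]    # energy per bin
bin_width = sample_rate / n

# For a real signal only bins 0..n/2 carry distinct frequencies.
half = energy[: n // 2 + 1]
strongest = max(range(len(half)), key=half.__getitem__)
print(strongest * bin_width)
```

The strongest bin corresponds to the louder 5 Hz component, and the bin at 12 Hz holds a quarter of its energy because the second sinusoid has half the amplitude.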

Filtering
Removes frequency components from some part of the spectrum.
• Low-pass filter – removes high-frequency components from the input and leaves only the low frequencies in the output signal.
• High-pass filter – removes low-frequency components from the input and leaves only the high frequencies in the output signal.
• Band-pass filter – keeps only a band of frequencies and removes the components below and above it.

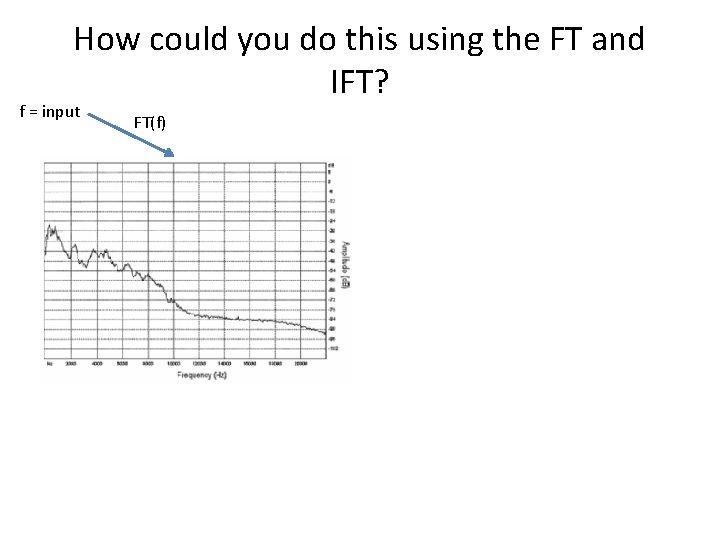

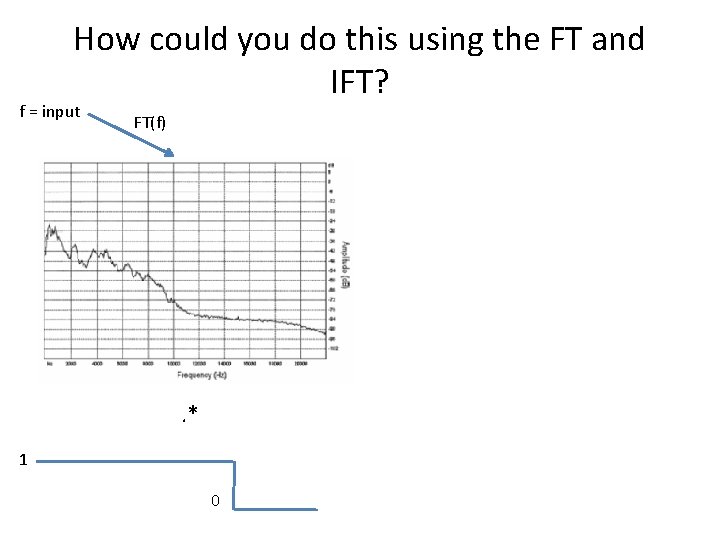

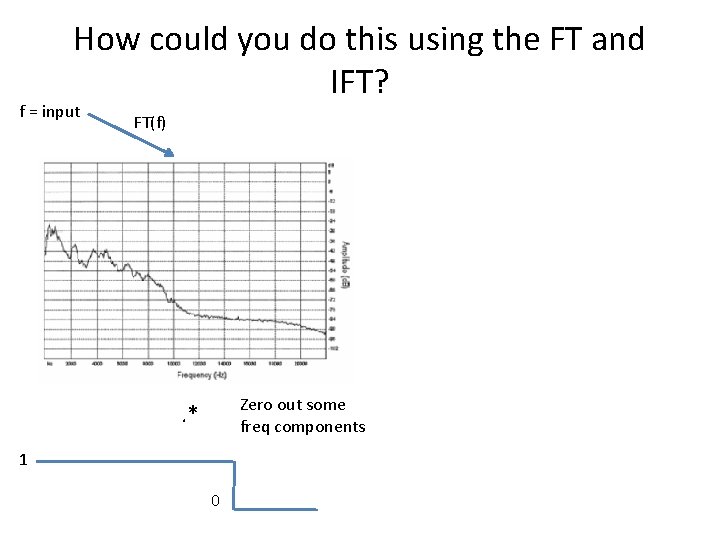

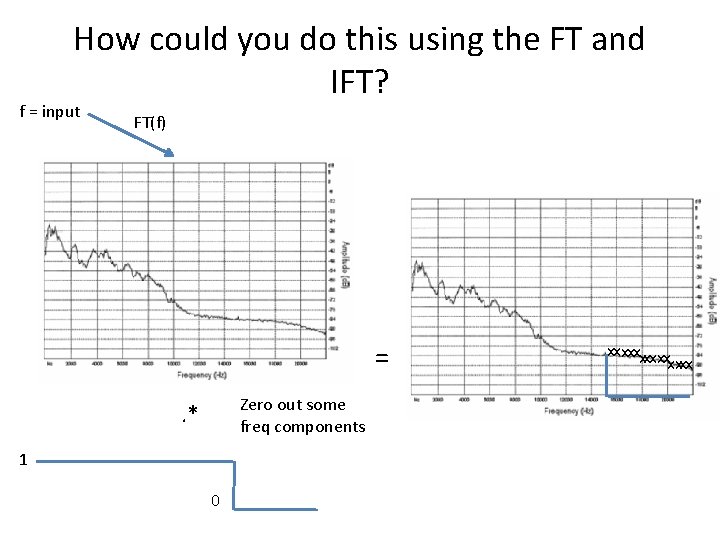

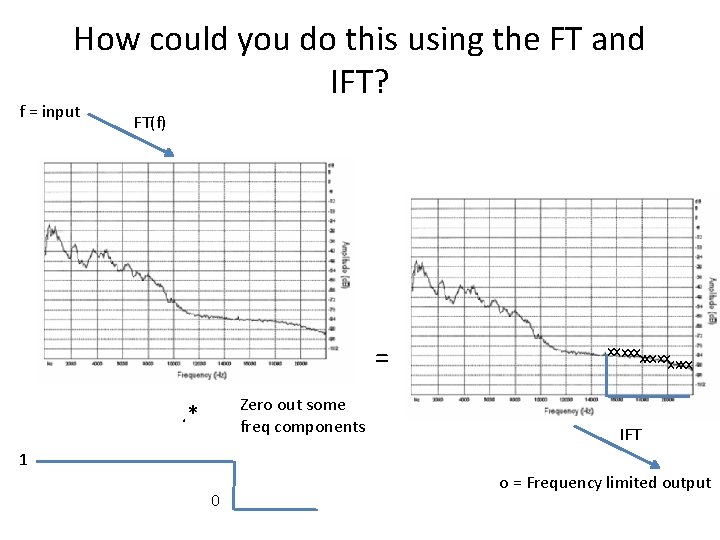

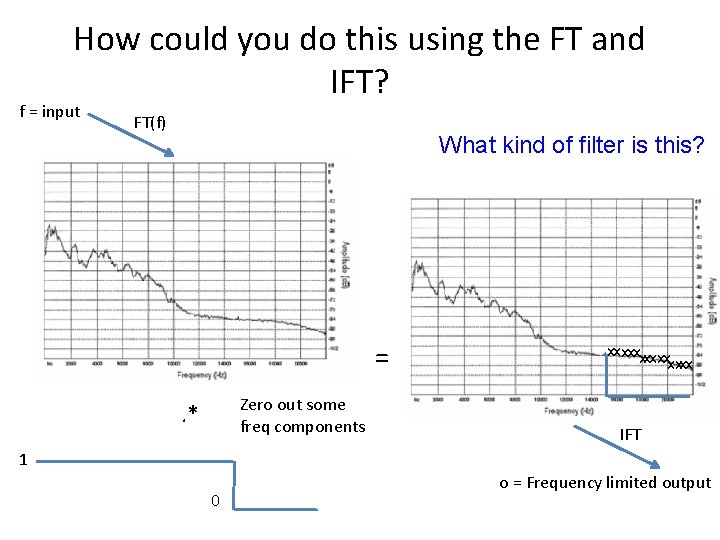

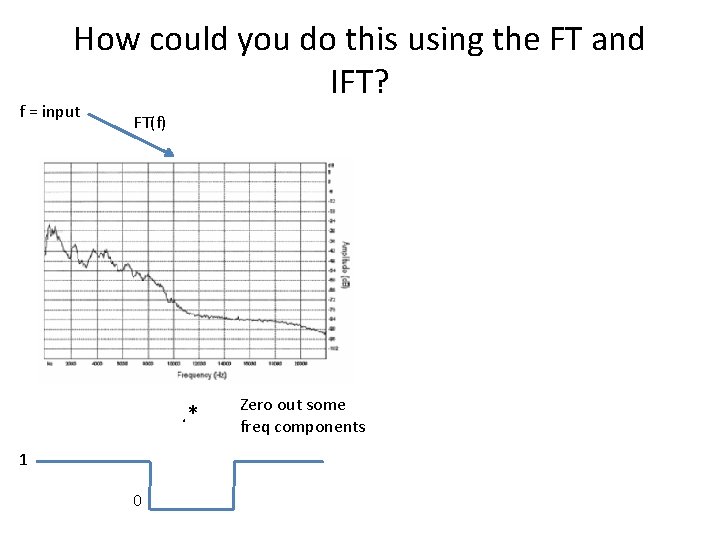

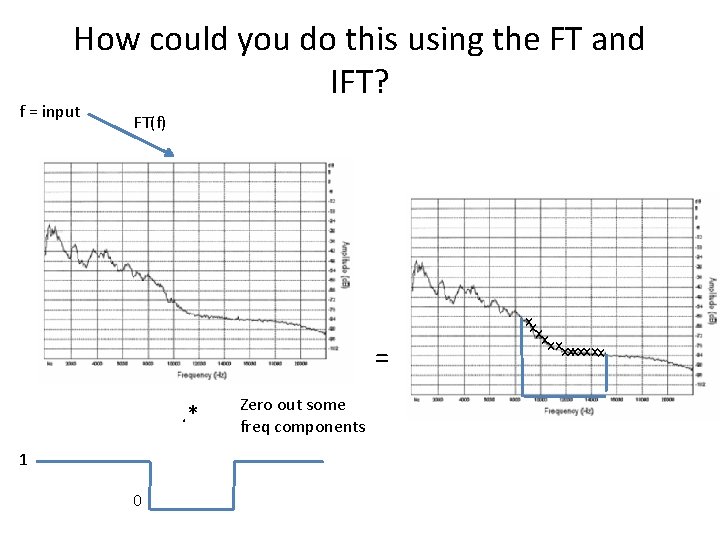

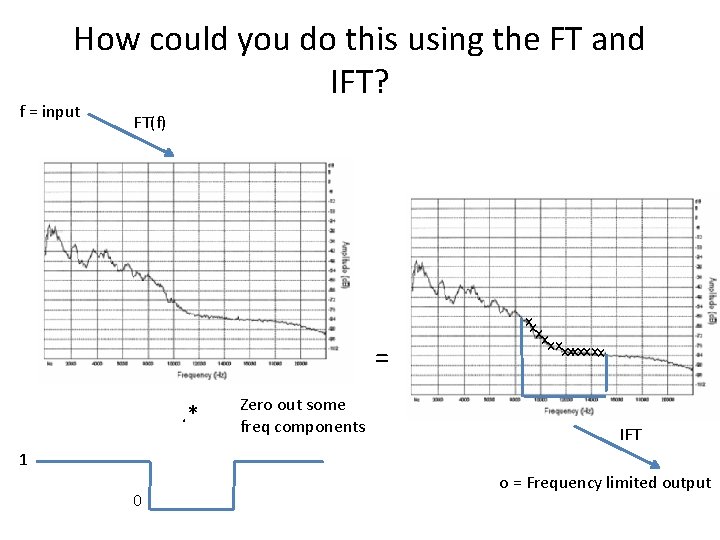

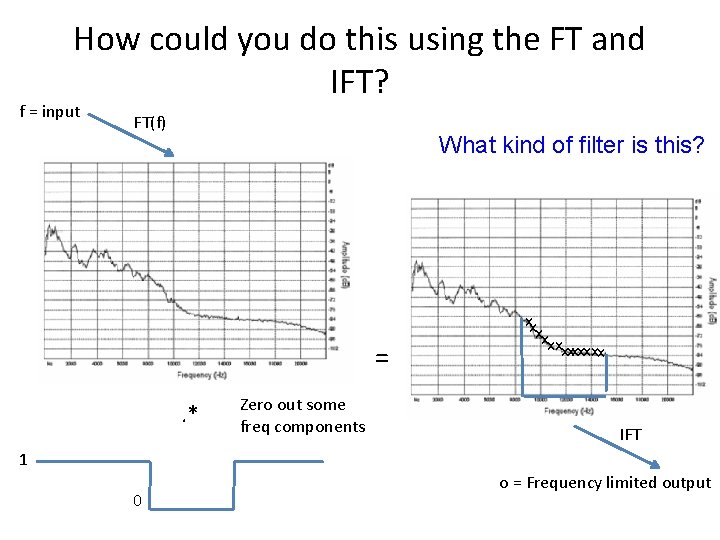

How could you do this using the FT and IFT?
• Compute the FT spectrum of the input.
• Zero out the part of the frequency spectrum that you want to filter out.
• Compute the IFT of this modified spectrum -> the output will be the input with some frequency components removed.
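
The three steps can be sketched as follows, again with a naive DFT written out from its definition. The function names, the `cutoff_bin` parameter, and the test signal are assumptions made for the example; this version keeps the low bins, so it behaves as a low-pass filter.

```python
import cmath
import math

def dft(x):
    """Naive O(N^2) discrete Fourier transform."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(spectrum):
    """Inverse DFT, returning the real part of each reconstructed sample."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n)
                for k in range(n)).real / n
            for t in range(n)]

def low_pass(signal, cutoff_bin):
    """Keep bins up to cutoff_bin (and their mirror images); zero out the rest."""
    n = len(signal)
    spectrum = dft(signal)
    # For a real signal, bin k and bin n - k describe the same frequency,
    # so the 1/0 mask has to be symmetric.
    mask = [1 if min(k, n - k) <= cutoff_bin else 0 for k in range(n)]
    return idft([c * m for c, m in zip(spectrum, mask)])

n = 64
low = [math.sin(2 * math.pi * 3 * t / n) for t in range(n)]     # falls in bin 3
high = [math.sin(2 * math.pi * 20 * t / n) for t in range(n)]   # falls in bin 20
mixed = [a + b for a, b in zip(low, high)]

filtered = low_pass(mixed, cutoff_bin=8)
print(max(abs(a - b) for a, b in zip(filtered, low)))   # tiny: only the low part is left
```

Inverting the mask would give a high-pass filter, and a mask that is 1 only between two cutoffs would give a band-pass filter. Abruptly zeroing bins works cleanly here because both components fall exactly on bin centres; on real audio it can introduce ringing.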

How could you do this using the FT and IFT? f = input

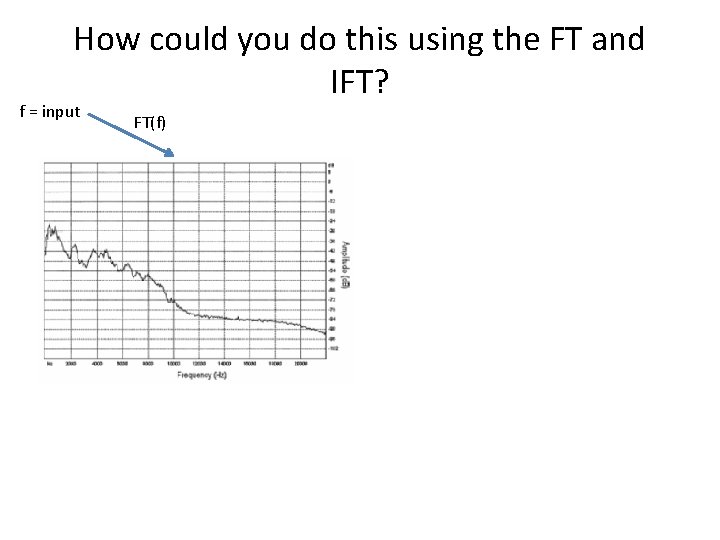

How could you do this using the FT and IFT? f = input FT(f)

How could you do this using the FT and IFT? f = input FT(f) . * 1 0

How could you do this using the FT and IFT? f = input FT(f) Zero out some freq components . * 1 0

How could you do this using the FT and IFT? f = input FT(f) = Zero out some freq components . * 1 0 xxxxx

How could you do this using the FT and IFT? f = input FT(f) = Zero out some freq components . * xxxxx IFT 1 0 o = Frequency limited output

How could you do this using the FT and IFT? f = input FT(f) What kind of filter is this? = Zero out some freq components . * xxxxx IFT 1 0 o = Frequency limited output

How could you do this using the FT and IFT? f = input

How could you do this using the FT and IFT? f = input FT(f)

How could you do this using the FT and IFT? f = input FT(f) . * 1 0

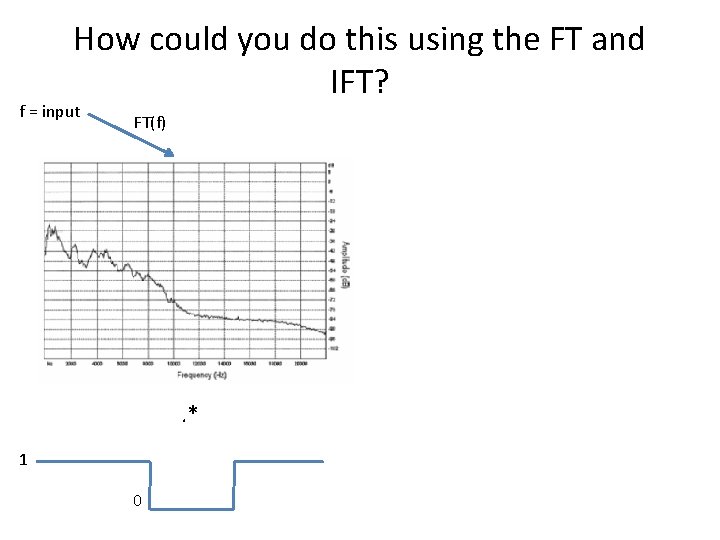

How could you do this using the FT and IFT? f = input FT(f) . * 1 0 Zero out some freq components

How could you do this using the FT and IFT? f = input FT(f) =. * 1 0 Zero out some freq components xx xx xxxxx

How could you do this using the FT and IFT? f = input FT(f) =. * Zero out some freq components xx xx xxxxx IFT 1 0 o = Frequency limited output

How could you do this using the FT and IFT? f = input FT(f) What kind of filter is this? =. * Zero out some freq components xx xx xxxxx IFT 1 0 o = Frequency limited output

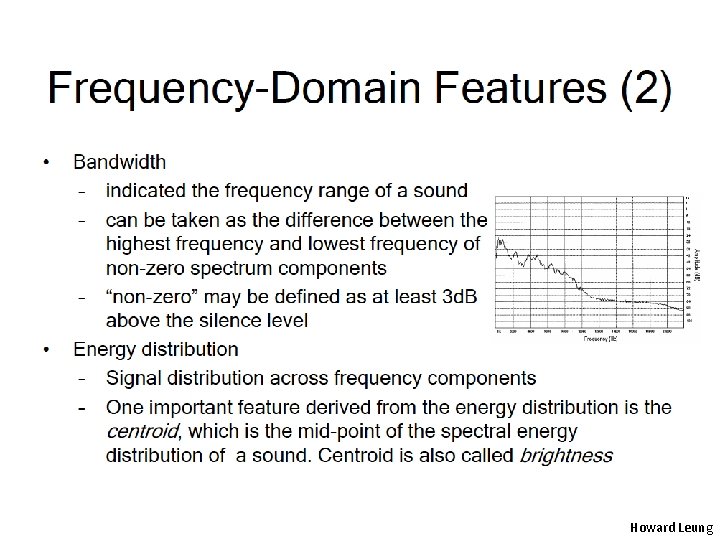

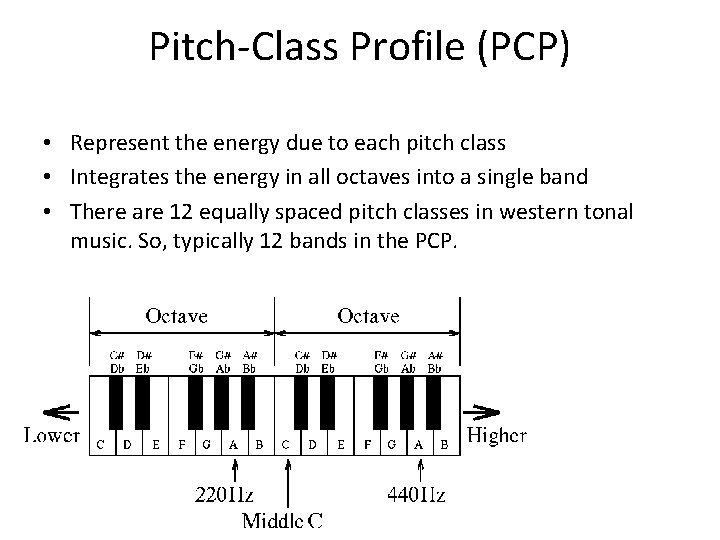

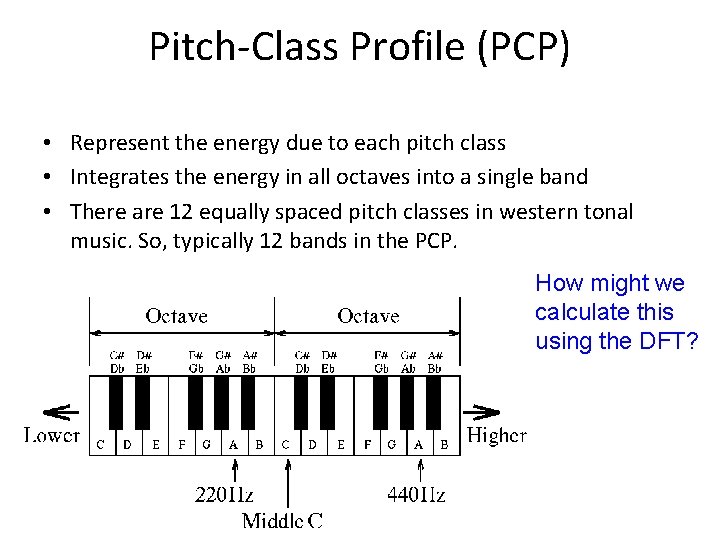

Pitch-Class Profile (PCP)
• Represents the energy due to each pitch class
• Integrates the energy in all octaves into a single band
• There are 12 equally spaced pitch classes in western tonal music, so there are typically 12 bands in the PCP.
How might we calculate this using the DFT?
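
One possible answer, sketched under stated assumptions: compute the energy in each DFT bin, convert the bin's centre frequency to the nearest equal-tempered pitch, and add the energy to that pitch's class. The A4 = 440 Hz reference, the numbering with C as class 0, the `fmin`/`fmax` limits, and the function names are all choices made for this example.

```python
import cmath
import math

def dft_bin(x, k):
    """Value of DFT bin k, computed directly from the definition."""
    n = len(x)
    return sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))

def pitch_class(freq_hz):
    """Map a frequency to a pitch class 0..11 (0 = C), assuming A4 = 440 Hz."""
    midi = 69 + 12 * math.log2(freq_hz / 440.0)
    return int(round(midi)) % 12

def pcp(signal, sample_rate, fmin=50.0, fmax=2000.0):
    """12-band pitch-class profile: bin energies folded across octaves."""
    n = len(signal)
    profile = [0.0] * 12
    for k in range(1, n // 2):
        freq = k * sample_rate / n          # centre frequency of bin k
        if fmin <= freq <= fmax:
            profile[pitch_class(freq)] += abs(dft_bin(signal, k)) ** 2
    return profile

sample_rate = 4000
n = 400    # bins are 10 Hz wide
# The note A in two different octaves (220 Hz and 440 Hz).
signal = [math.sin(2 * math.pi * 220 * t / sample_rate)
          + math.sin(2 * math.pi * 440 * t / sample_rate)
          for t in range(n)]

profile = pcp(signal, sample_rate)
print(max(range(12), key=profile.__getitem__))   # index of the strongest pitch class
```

Both tones land in the same band even though they are an octave apart, which is the octave folding the slide describes. With 10 Hz bins the low octaves are poorly resolved, since neighbouring semitones near 50 Hz are only about 3 Hz apart; practical systems use longer windows or a log-frequency transform.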

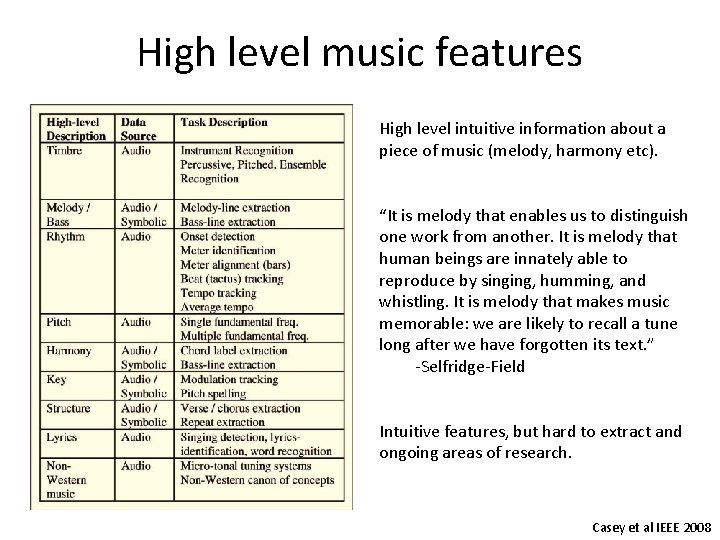

High level music features
High-level, intuitive information about a piece of music (melody, harmony, etc.).
“It is melody that enables us to distinguish one work from another. It is melody that human beings are innately able to reproduce by singing, humming, and whistling. It is melody that makes music memorable: we are likely to recall a tune long after we have forgotten its text.” - Selfridge-Field
These features are intuitive but hard to extract, and extracting them is an ongoing area of research.
Casey et al IEEE 2008

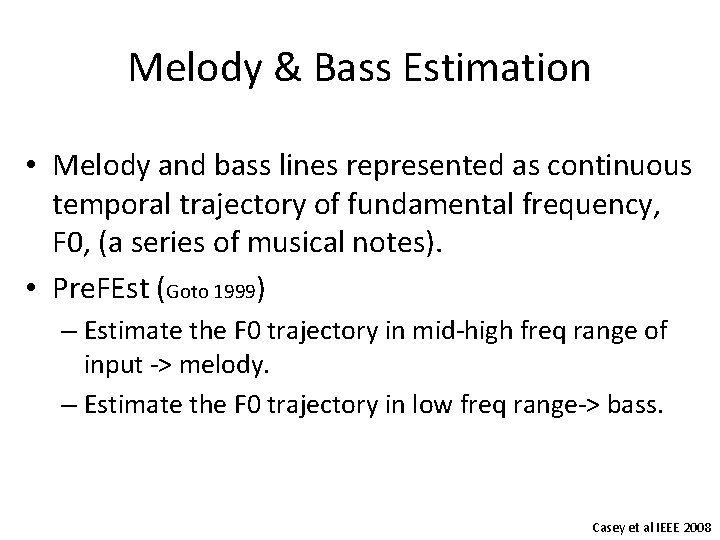

Melody & Bass Estimation
• Melody and bass lines are represented as a continuous temporal trajectory of fundamental frequency, F0 (a series of musical notes).
• PreFEst (Goto 1999):
  – Estimate the F0 trajectory in the mid-high frequency range of the input -> melody.
  – Estimate the F0 trajectory in the low frequency range -> bass.
Casey et al IEEE 2008

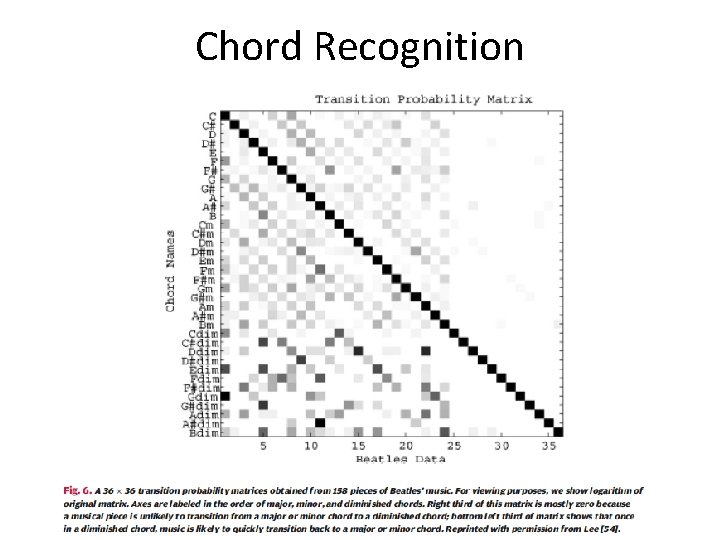

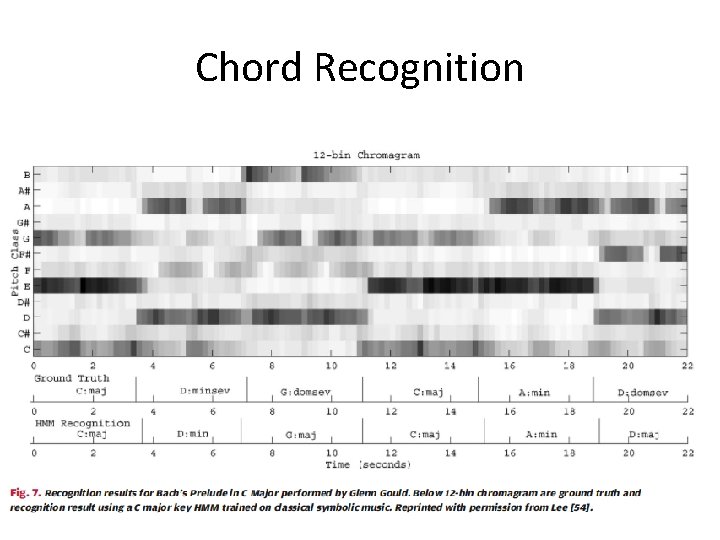

Chord Recognition
Recognize chord progressions based on:
– Estimated PCPs
– Statistics of transitions between PCPs
Casey et al IEEE 2008
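
A deliberately simplified sketch of the first ingredient only: label a single PCP by comparing it against 0/1 templates for the 24 major and minor triads. This template matching is a stand-in chosen for illustration, not the method from the cited survey, and it ignores the transition statistics entirely; those are usually handled by a sequence model over many frames. The note names, function names, and example profiles are assumptions.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def triad_template(root, minor=False):
    """12-element 0/1 vector marking the root, third and fifth of a triad."""
    third = 3 if minor else 4
    template = [0.0] * 12
    for interval in (0, third, 7):
        template[(root + interval) % 12] = 1.0
    return template

def recognize_chord(pcp):
    """Return the major or minor triad whose template overlaps the PCP most."""
    best_name, best_score = None, float("-inf")
    for root in range(12):
        for minor in (False, True):
            template = triad_template(root, minor)
            score = sum(p * t for p, t in zip(pcp, template))
            if score > best_score:
                best_name = NOTE_NAMES[root] + ("m" if minor else "")
                best_score = score
    return best_name

# Hypothetical PCPs (index 0 = C): energy concentrated on C, E, G and on A, C, E.
c_major_pcp = [1.0, 0, 0, 0, 0.8, 0, 0, 0.9, 0, 0, 0, 0]
a_minor_pcp = [0.9, 0, 0, 0, 0.8, 0, 0, 0, 0, 1.0, 0, 0]

print(recognize_chord(c_major_pcp), recognize_chord(a_minor_pcp))
```

Frame-by-frame labels like these tend to flicker on real audio, which is where the statistics of transitions between chords help.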

Music as vector of features
• Once again we represent (music) documents as a vector of numbers
  – Each entry (or set of entries) in this vector is a different feature
• To retrieve music documents given a query we can:
  – Find exact matches
  – Find nearest match
  – Find nearby matches
  – Train a classifier to recognize a given category (genre, style, etc.)

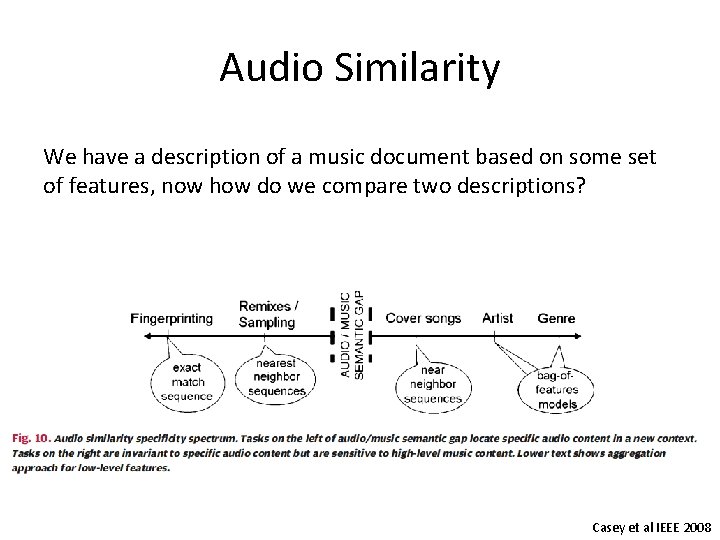

Audio Similarity
We have a description of a music document based on some set of features. Now how do we compare two descriptions?
Casey et al IEEE 2008
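
Two common ways to compare feature vectors are Euclidean distance and cosine similarity. Neither is prescribed by the slide; the sketch below, with made-up vectors, only shows how the choice changes what counts as similar.

```python
import math

def euclidean_distance(a, b):
    """Straight-line distance: small means similar; sensitive to overall scale."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cosine_similarity(a, b):
    """Cosine of the angle between the vectors: 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

quiet = [0.2, 0.1, 0.4]            # hypothetical feature vector
loud = [x * 3 for x in quiet]      # same shape, three times the magnitude
other = [0.4, 0.1, 0.2]            # similar magnitude, different shape

print(euclidean_distance(quiet, loud), cosine_similarity(quiet, loud))
print(euclidean_distance(quiet, other), cosine_similarity(quiet, other))
```

Euclidean distance treats `other` as the closer match, while cosine similarity treats `loud` as identical in shape. If the features scale with playback volume, a scale-invariant measure such as cosine similarity may be the better fit.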

Usage examples

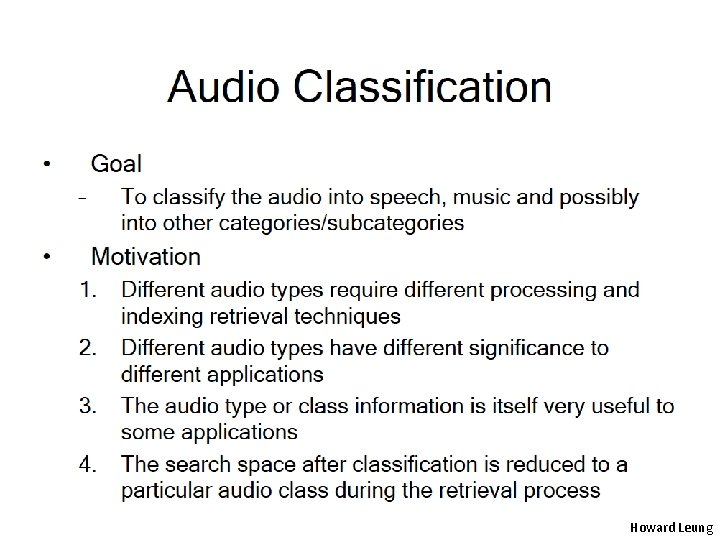

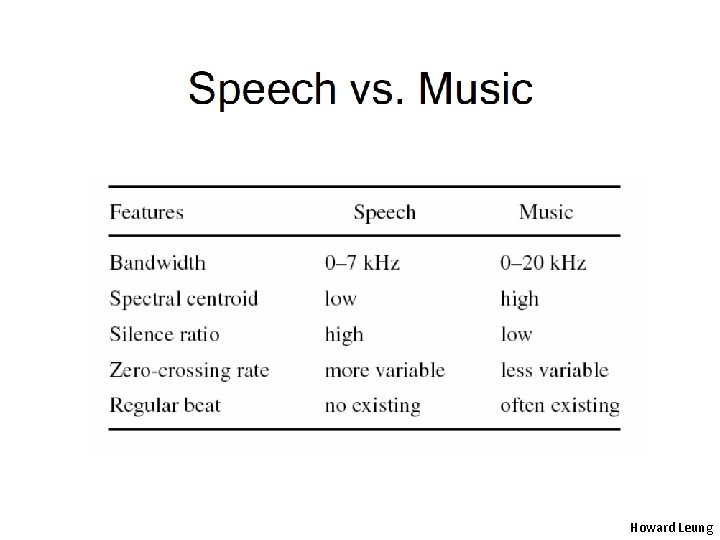

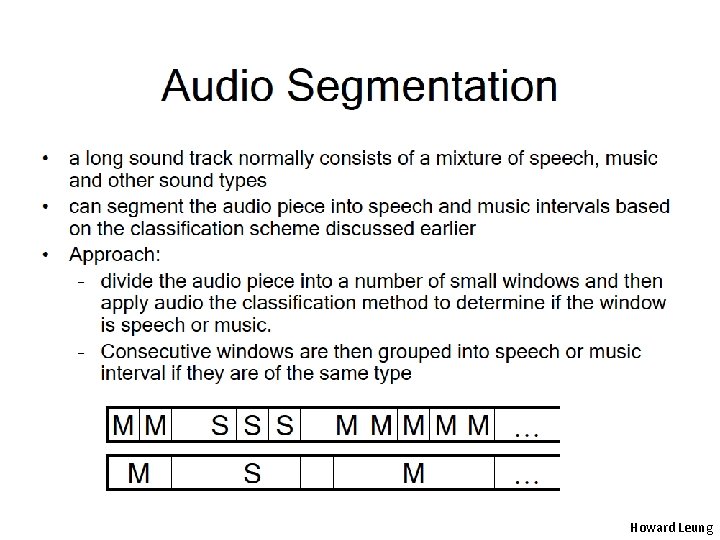

Query by humming
• Requires robustness to variation because matches will not be exact
• Extract melody from dataset of songs
• Extract melody from hum
• Match by comparing similarities of melodies (nearby matches)
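
A small sketch of the matching step only, assuming the melodies have already been extracted as sequences of MIDI note numbers. To tolerate humming in a different key, each melody is reduced to the intervals between successive notes; to tolerate missing or wrong notes, the interval sequences are compared with edit distance. Both choices, along with the song data, are assumptions made for illustration.

```python
def intervals(notes):
    """Successive pitch differences in semitones; unchanged by transposition."""
    return [b - a for a, b in zip(notes, notes[1:])]

def edit_distance(a, b):
    """Levenshtein distance between two sequences."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # delete x
                            curr[j - 1] + 1,            # insert y
                            prev[j - 1] + (x != y)))    # substitute x with y
        prev = curr
    return prev[-1]

def best_match(hum_notes, songs):
    """Name of the song whose interval sequence is closest to the hum's."""
    hum = intervals(hum_notes)
    return min(songs, key=lambda name: edit_distance(hum, intervals(songs[name])))

# Hypothetical melodies as MIDI note numbers.
songs = {
    "song_a": [60, 62, 64, 65, 67, 67],
    "song_b": [60, 60, 67, 67, 69, 69, 67],
}
# song_b hummed five semitones higher, with one note left out.
hum = [65, 65, 72, 72, 74, 72]

print(best_match(hum, songs))
```

Rhythm is ignored here, and extracting a reliable note sequence from a hum or from polyphonic audio is the hard part that this sketch assumes away.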

Copyright monitoring
• Compute fingerprints from database examples
• Compute fingerprint from query example
• Find exact matches

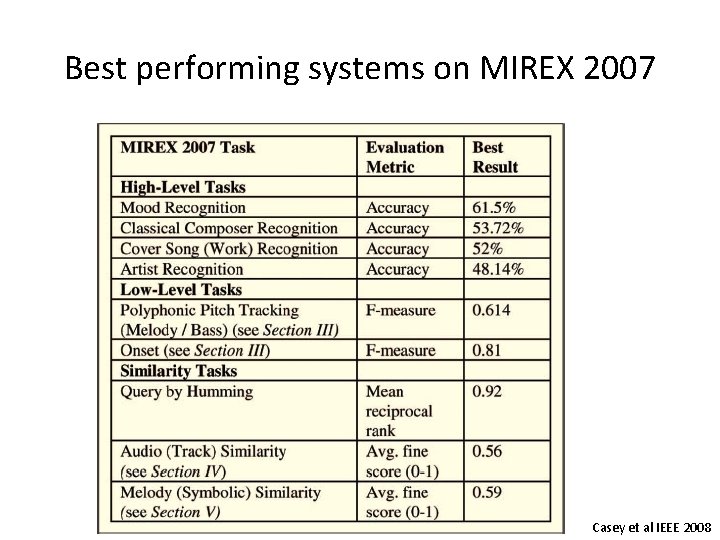

Best performing systems on MIREX 2007 Casey et al IEEE 2008

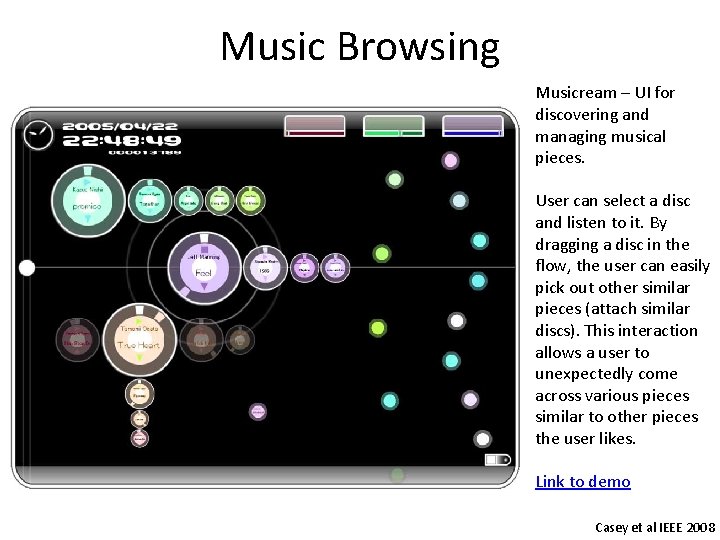

Music Browsing
Musicream – UI for discovering and managing musical pieces. The user can select a disc and listen to it. By dragging a disc in the flow, the user can easily pick out other similar pieces (attach similar discs). This interaction allows a user to unexpectedly come across various pieces similar to other pieces the user likes.
Casey et al IEEE 2008

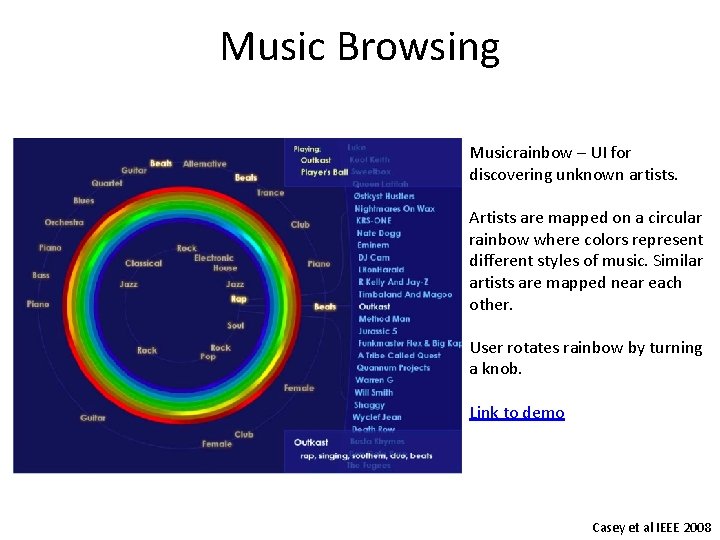

Music Browsing
Musicrainbow – UI for discovering unknown artists. Artists are mapped onto a circular rainbow where colors represent different styles of music. Similar artists are mapped near each other. The user rotates the rainbow by turning a knob.
Casey et al IEEE 2008
