


Sorting Algorithms 2

Quicksort

General quicksort algorithm:

- Select an element from the array to be the pivot.
- Rearrange the elements of the array into a left and a right subarray:
  - All values in the left subarray are ≤ pivot.
  - All values in the right subarray are > pivot.
- Independently sort the subarrays.
- No merging is required, as left and right are independent problems. [Parallelism?!?]

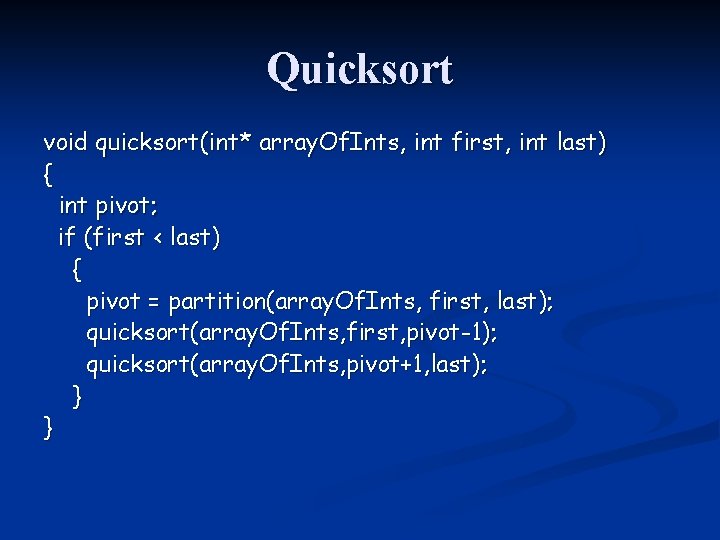

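Quicksort

```cpp
int partition(int* arrayOfInts, int first, int last);  // defined on the next slide

void quicksort(int* arrayOfInts, int first, int last)
{
    int pivot;
    if (first < last)  // ranges of 0 or 1 elements are already sorted
    {
        pivot = partition(arrayOfInts, first, last);  // final index of the pivot
        quicksort(arrayOfInts, first, pivot - 1);     // sort the left subarray
        quicksort(arrayOfInts, pivot + 1, last);      // sort the right subarray
    }
}
```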

Quicksort

```cpp
#include <utility>  // std::swap

int partition(int* arrayOfInts, int first, int last)
{
    int p = first;                                  // pivot value is arrayOfInts[first]; p tracks its final index
    for (int k = first + 1; k <= last; k++)         // for every other index
    {
        if (arrayOfInts[k] <= arrayOfInts[first])   // if data is no larger than the pivot
        {
            p = p + 1;                              // update final pivot location
            std::swap(arrayOfInts[k], arrayOfInts[p]);
        }
    }
    std::swap(arrayOfInts[p], arrayOfInts[first]);  // move the pivot into its final position
    return p;
}
```

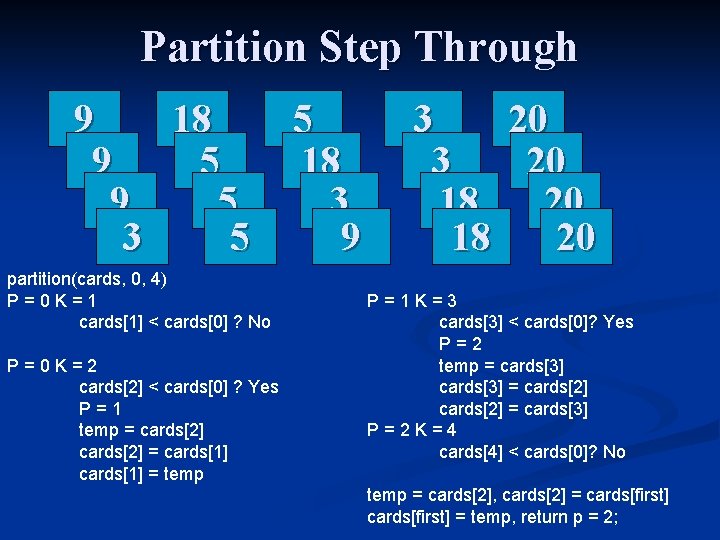

Partition Step Through

partition(cards, 0, 4), with the pivot value 9 in cards[0]:

- P=0, K=1: cards[1] < cards[0]? No.
- P=0, K=2: cards[2] < cards[0]? Yes. P=1; swap cards[2] and cards[1] (temp = cards[2]; cards[2] = cards[1]; cards[1] = temp).
- P=1, K=3: cards[3] < cards[0]? Yes. P=2; swap cards[3] and cards[2].
- P=2, K=4: cards[4] < cards[0]? No.
- Final step: swap cards[2] and cards[first] (temp = cards[2]; cards[2] = cards[first]; cards[first] = temp), then return p = 2.
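The step-through can be replayed in code. The sketch below is a minimal illustration, not the slide author's code: `partitionTraced` and its `steps` parameter are hypothetical names, and the starting order 9 18 5 3 20 is an assumption that is consistent with the Yes/No answers above.

```cpp
#include <utility>
#include <vector>

// Same scheme as the slide's partition(): the pivot is the first element and
// p tracks the last index known to hold a value <= pivot.
// `steps` is a hypothetical addition: it records (k, p) after each iteration.
int partitionTraced(std::vector<int>& a, int first, int last,
                    std::vector<std::pair<int, int>>& steps) {
    int p = first;
    for (int k = first + 1; k <= last; ++k) {
        if (a[k] <= a[first]) {
            ++p;
            std::swap(a[k], a[p]);
        }
        steps.push_back({k, p});
    }
    std::swap(a[p], a[first]);  // move the pivot into its final position
    return p;
}
```

With the assumed input {9, 18, 5, 3, 20}, the recorded values of p are 0, 1, 2, 2 for k = 1..4, and the function returns 2, matching the trace.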

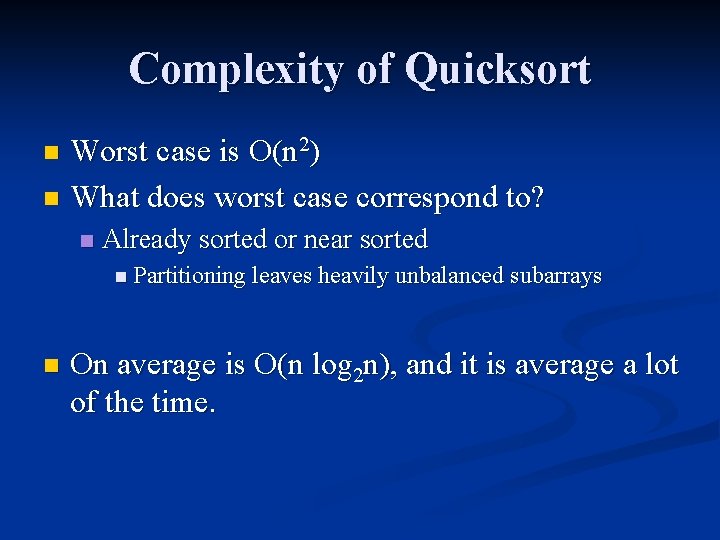

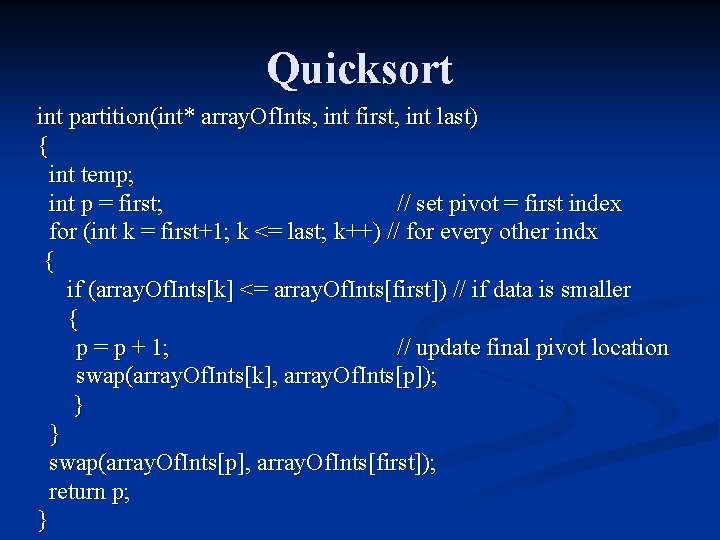

Complexity of Quicksort

- Worst case is $O(n^2)$.
- What does the worst case correspond to?
  - Already sorted or nearly sorted input.
  - Partitioning leaves heavily unbalanced subarrays.
- On average it is $O(n \log_2 n)$, and it behaves like the average case a lot of the time.
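To see the worst case concretely, one option is to count comparisons on already sorted input. The sketch below assumes the first-element pivot used in these slides; `quicksortCount` and `comparisonsOnSorted` are hypothetical helper names introduced only for this illustration.

```cpp
#include <utility>
#include <vector>

// Quicksort with the first element as pivot, returning the number of element
// comparisons made by the partition loops (a hypothetical instrumented variant).
long long quicksortCount(std::vector<int>& a, int first, int last) {
    if (first >= last) return 0;
    long long comparisons = 0;
    int p = first;
    for (int k = first + 1; k <= last; ++k) {
        ++comparisons;
        if (a[k] <= a[first]) {
            ++p;
            std::swap(a[k], a[p]);
        }
    }
    std::swap(a[p], a[first]);
    comparisons += quicksortCount(a, first, p - 1);
    comparisons += quicksortCount(a, p + 1, last);
    return comparisons;
}

// Comparisons used when the input is the already sorted sequence 0, 1, ..., n-1.
long long comparisonsOnSorted(int n) {
    std::vector<int> a(n);
    for (int i = 0; i < n; ++i) a[i] = i;
    return quicksortCount(a, 0, n - 1);
}
```

On sorted input every partition peels off a single element, so the count is (n-1) + (n-2) + ... + 1 = n(n-1)/2; for n = 100 that is 4950 comparisons.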

![Complexity of Quicksort, recurrence relation: average case](https://slidetodoc.com/presentation_image/355b37ab97cfc6c7d30c5eb8ac198339/image-7.jpg)

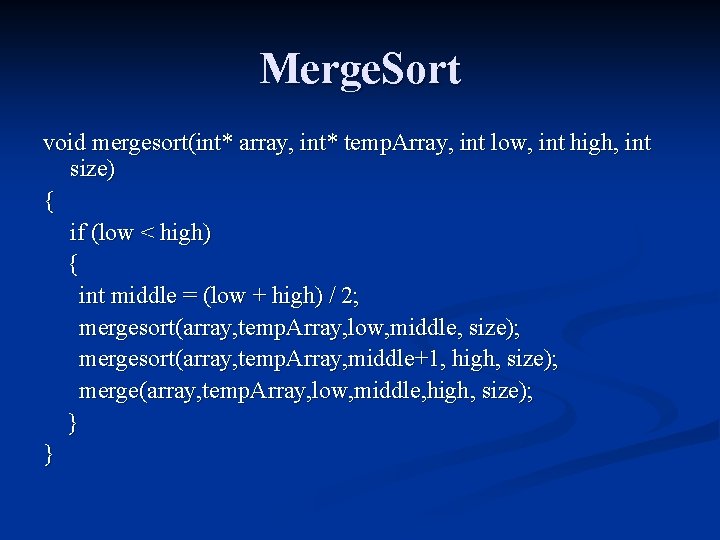

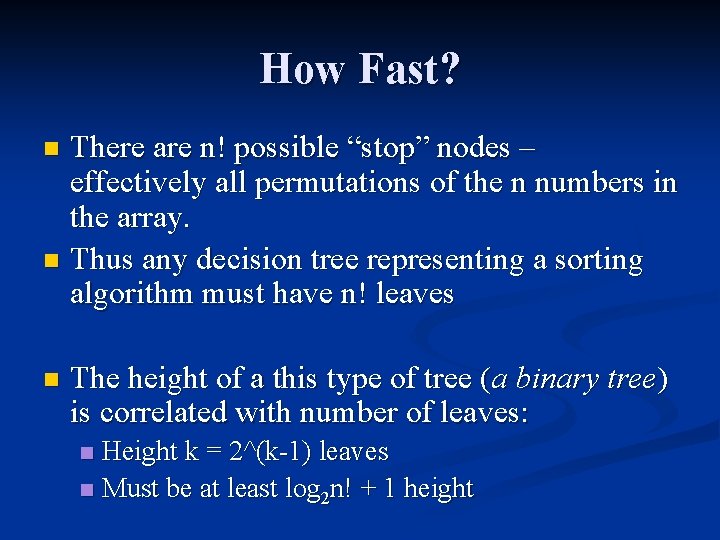

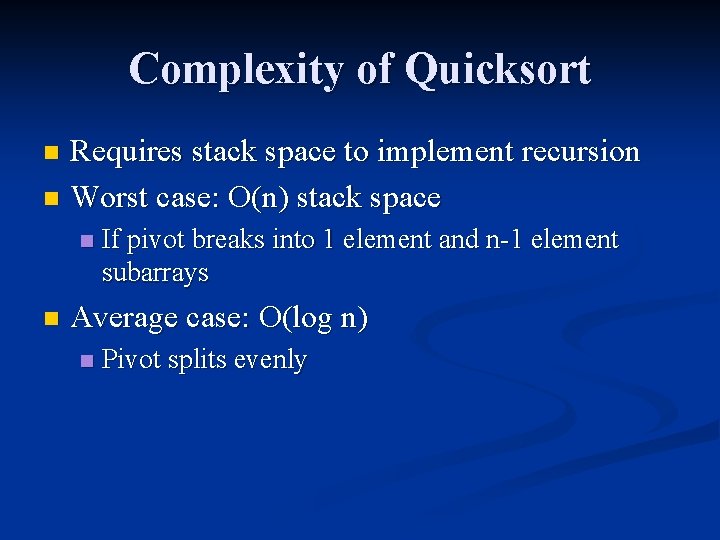

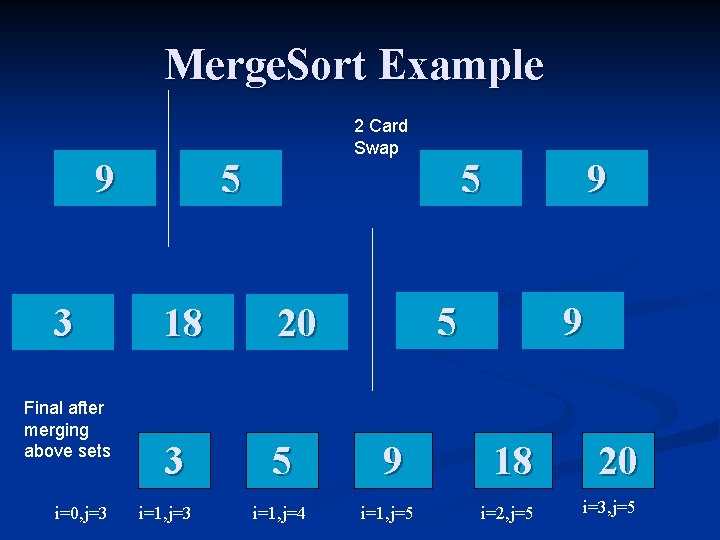

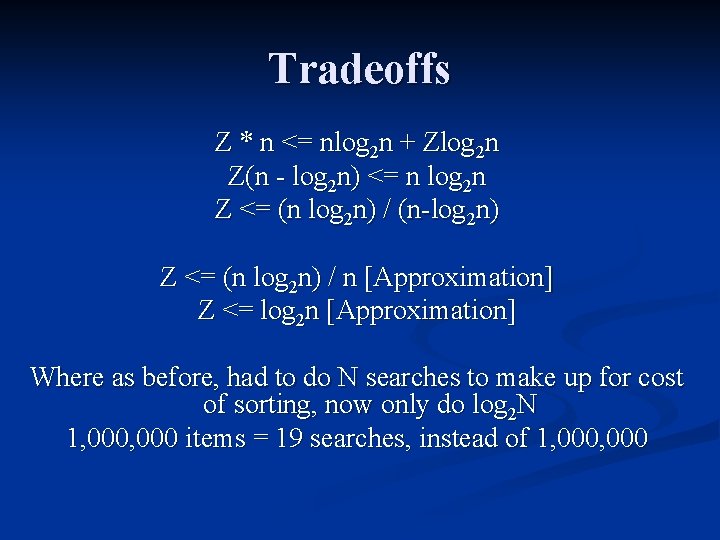

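Complexity of Quicksort

Recurrence relation [average case]:

- 2 subproblems, each ½ the size (if the pivot is good).
- Partition is $O(n)$.
- So $T(n) = 2\,T(n/2) + O(n)$, with $a = 2$, $b = 2$, $k = 1$, and $a = b^k$ ($2 = 2^1$).
- Master Theorem: $O(n \log_2 n)$.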
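The same result can be checked without the Master Theorem by unrolling the recurrence. This is a sketch under two assumptions not stated on the slide: $n$ is a power of 2, and the partition cost is exactly $cn$ for some constant $c$.

$$
\begin{aligned}
T(n) &= 2\,T(n/2) + cn \\
     &= 4\,T(n/4) + 2cn \\
     &= 8\,T(n/8) + 3cn \\
     &= 2^i\,T(n/2^i) + i\,cn \\
     &= n\,T(1) + cn \log_2 n \qquad \text{(at } i = \log_2 n\text{)} \\
     &= O(n \log_2 n)
\end{aligned}
$$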

![Complexity of Quicksort, recurrence relation: worst case](https://slidetodoc.com/presentation_image/355b37ab97cfc6c7d30c5eb8ac198339/image-8.jpg)

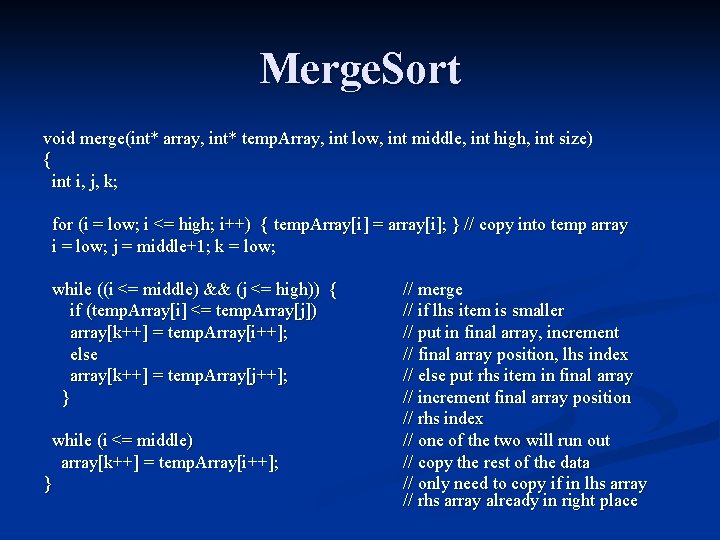

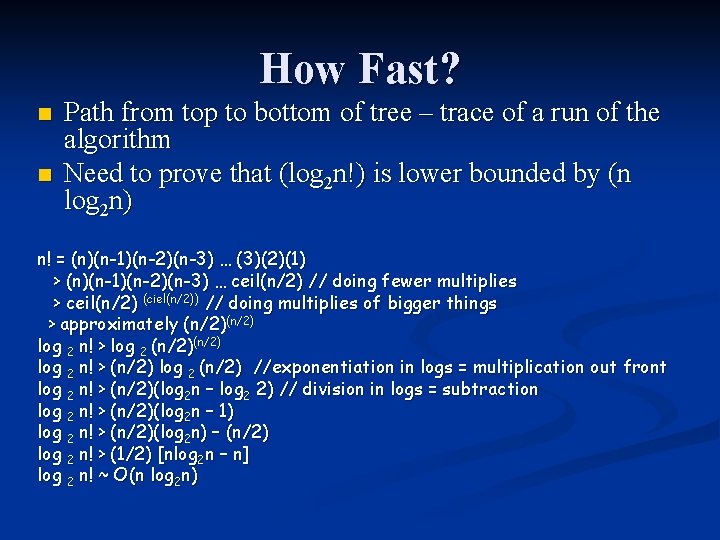

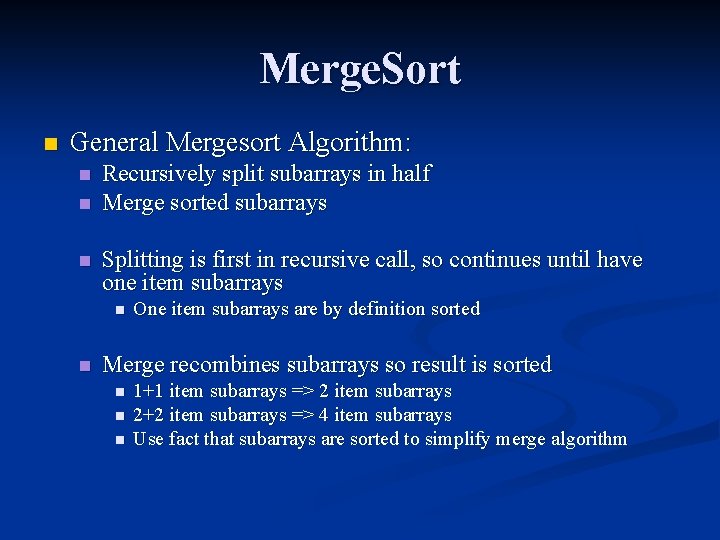

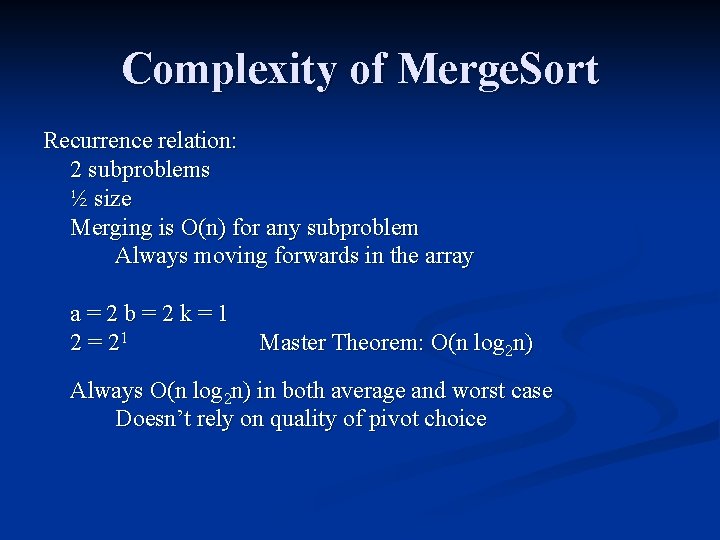

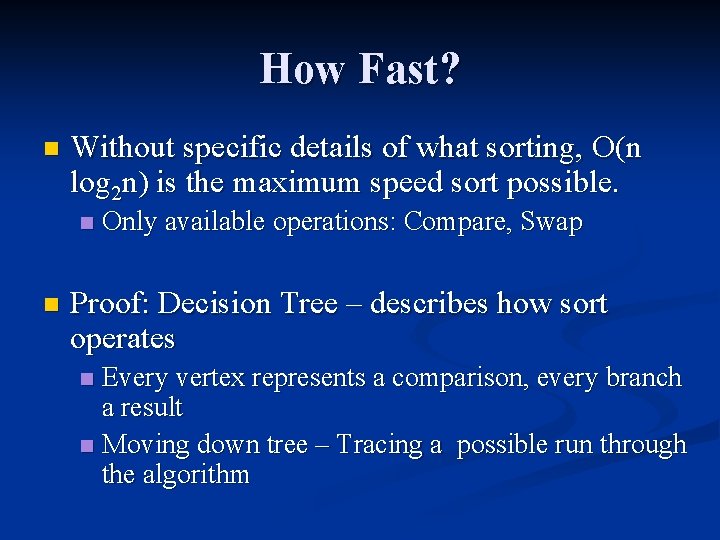

Complexity of Quicksort

Recurrence relation [worst case]:

- Partition separates the array into subarrays of size (n-1) and (1).
- Can’t use the Master Theorem: b (the subproblem size ratio) changes at every level: (n-1)/n, (n-2)/(n-1), (n-3)/(n-2), ...
- Note the sum of the partition work: $n + (n-1) + (n-2) + (n-3) + \dots + 1 = \frac{n(n+1)}{2} = O(n^2)$.

Complexity of Quicksort

- Requires stack space to implement the recursion.
- Worst case: $O(n)$ stack space, if the pivot breaks the array into 1-element and (n-1)-element subarrays.
- Average case: $O(\log n)$ stack space, when the pivot splits evenly.
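The stack usage can be illustrated by measuring how deeply the partitioning calls nest. The sketch below assumes the first-element pivot from these slides; `quicksortDepth` is a hypothetical name, and it counts only calls that actually partition (ranges with at least two elements).

```cpp
#include <algorithm>
#include <utility>
#include <vector>

// Sorts a[first..last] and returns the maximum nesting depth of the
// quicksort calls that performed a partition.
int quicksortDepth(std::vector<int>& a, int first, int last) {
    if (first >= last) return 0;  // nothing to partition
    int p = first;
    for (int k = first + 1; k <= last; ++k) {
        if (a[k] <= a[first]) {
            ++p;
            std::swap(a[k], a[p]);
        }
    }
    std::swap(a[p], a[first]);
    int left = quicksortDepth(a, first, p - 1);
    int right = quicksortDepth(a, p + 1, last);
    return 1 + std::max(left, right);
}
```

On sorted input of 64 elements the depth is 63 (linear in n), while the 7-element input {4, 2, 1, 3, 6, 5, 7}, whose first pivot splits it evenly, reaches depth 3.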

MergeSort

General mergesort algorithm:

- Recursively split subarrays in half.
- Merge sorted subarrays.
- Splitting comes first in the recursive call, so it continues until the subarrays hold one item each.
  - One-item subarrays are by definition sorted.
- Merge recombines subarrays so that the result is sorted:
  - 1+1 item subarrays => 2 item subarrays
  - 2+2 item subarrays => 4 item subarrays
  - Use the fact that the subarrays are sorted to simplify the merge algorithm.

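MergeSort

```cpp
void merge(int* array, int* tempArray, int low, int middle, int high, int size);  // defined on the next slide

void mergesort(int* array, int* tempArray, int low, int high, int size)
{
    if (low < high)  // ranges of one item are already sorted
    {
        int middle = low + (high - low) / 2;  // same as (low + high) / 2, without risk of overflow
        mergesort(array, tempArray, low, middle, size);       // sort left half
        mergesort(array, tempArray, middle + 1, high, size);  // sort right half
        merge(array, tempArray, low, middle, high, size);     // combine the sorted halves
    }
}
```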

MergeSort

```cpp
void merge(int* array, int* tempArray, int low, int middle, int high, int size)
{
    int i, j, k;
    for (i = low; i <= high; i++) { tempArray[i] = array[i]; }  // copy into temp array
    i = low; j = middle + 1; k = low;
    while ((i <= middle) && (j <= high))   // merge
    {
        if (tempArray[i] <= tempArray[j])  // if lhs item is smaller
            array[k++] = tempArray[i++];   // put in final array, increment final array position, lhs index
        else
            array[k++] = tempArray[j++];   // else put rhs item in final array, increment final array position, rhs index
    }                                      // one of the two will run out
    while (i <= middle)                    // copy the rest of the data
        array[k++] = tempArray[i++];       // only need to copy if in lhs array; rhs array already in right place
}
```
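For experimenting with the two routines above, a self-contained variant may be handy. This is a sketch, not the slide code: it uses `std::vector` instead of raw pointers, drops the unused `size` parameter, and the names `mergeRuns`, `mergeSortRange`, and `mergeSortAll` are hypothetical.

```cpp
#include <vector>

// Merge the sorted runs a[low..middle] and a[middle+1..high], using temp as scratch space.
void mergeRuns(std::vector<int>& a, std::vector<int>& temp, int low, int middle, int high) {
    for (int i = low; i <= high; ++i) temp[i] = a[i];
    int i = low, j = middle + 1, k = low;
    while (i <= middle && j <= high) {
        if (temp[i] <= temp[j]) a[k++] = temp[i++];
        else                    a[k++] = temp[j++];
    }
    while (i <= middle) a[k++] = temp[i++];  // leftover rhs items are already in place
}

// Recursively sort a[low..high].
void mergeSortRange(std::vector<int>& a, std::vector<int>& temp, int low, int high) {
    if (low < high) {
        int middle = low + (high - low) / 2;
        mergeSortRange(a, temp, low, middle);
        mergeSortRange(a, temp, middle + 1, high);
        mergeRuns(a, temp, low, middle, high);
    }
}

// Convenience wrapper that allocates the O(n) temporary array once.
void mergeSortAll(std::vector<int>& a) {
    std::vector<int> temp(a.size());
    if (!a.empty()) mergeSortRange(a, temp, 0, static_cast<int>(a.size()) - 1);
}
```

Calling `mergeSortAll` on {20, 3, 18, 9, 5}, the cards used in the example slides, leaves the vector as {3, 5, 9, 18, 20}.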

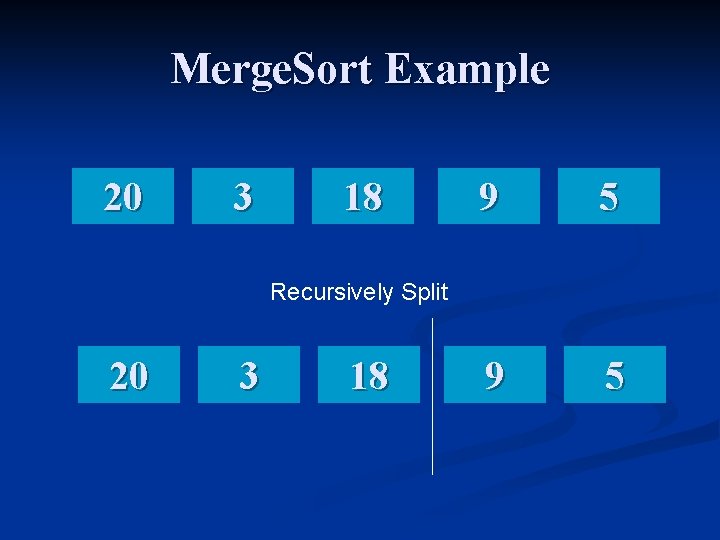

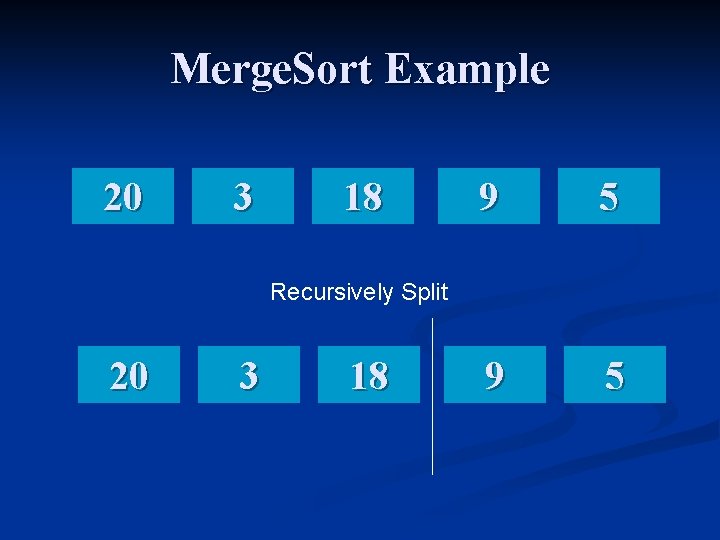

MergeSort Example

- Array: 20 3 18 9 5
- Recursively split: 20 3 18 | 9 5

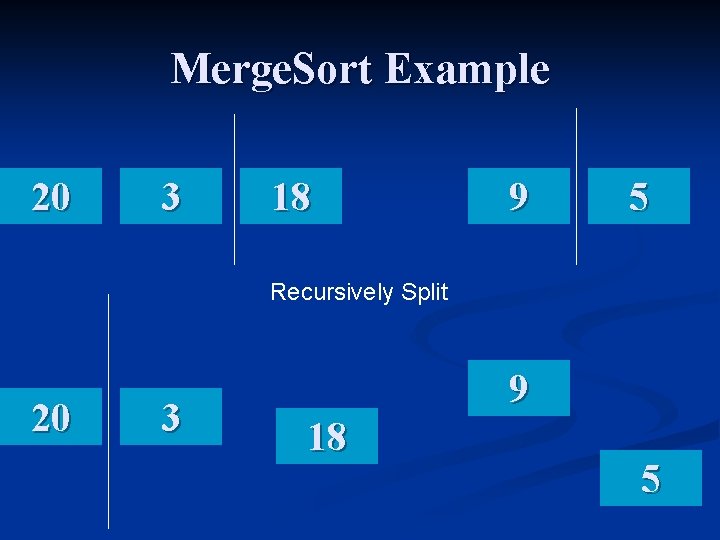

MergeSort Example

- Array: 20 3 18 9 5
- Recursively split: 20 3 | 18 | 9 | 5

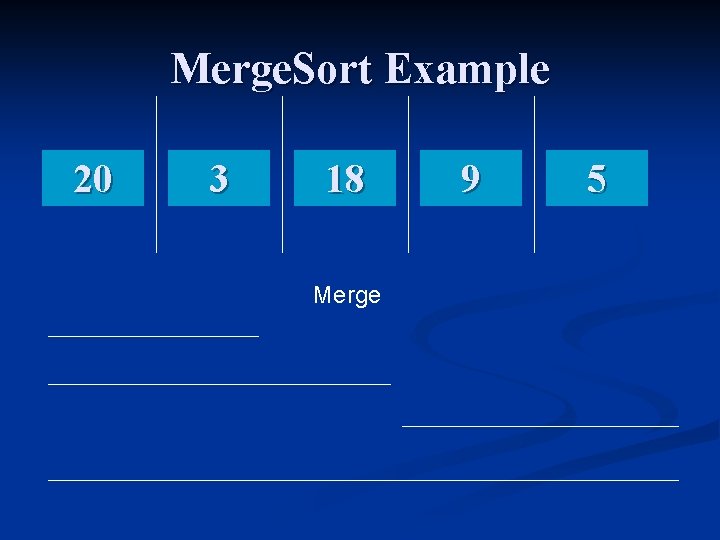

MergeSort Example

- Merge: 20 3 18 | 9 5

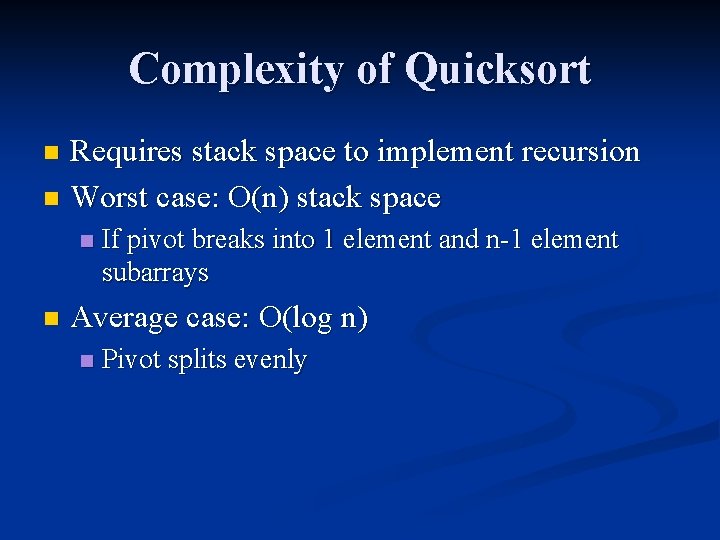

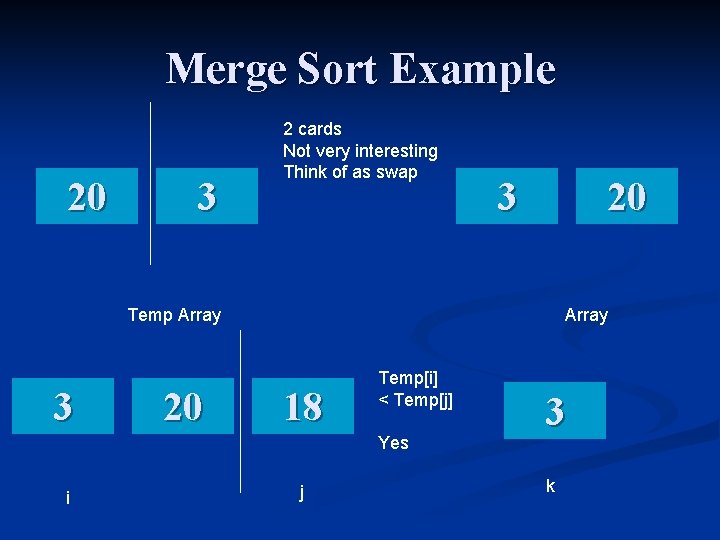

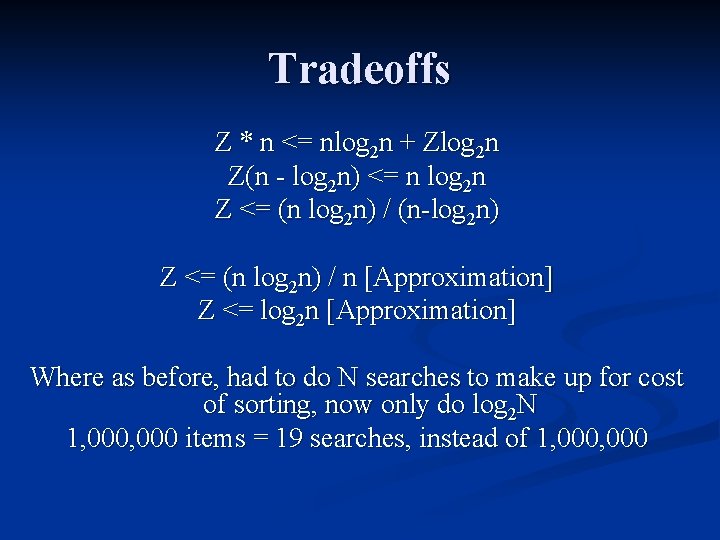

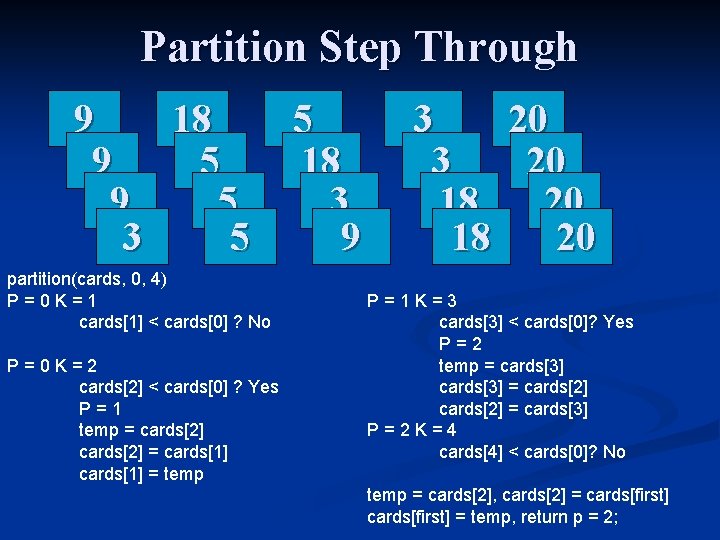

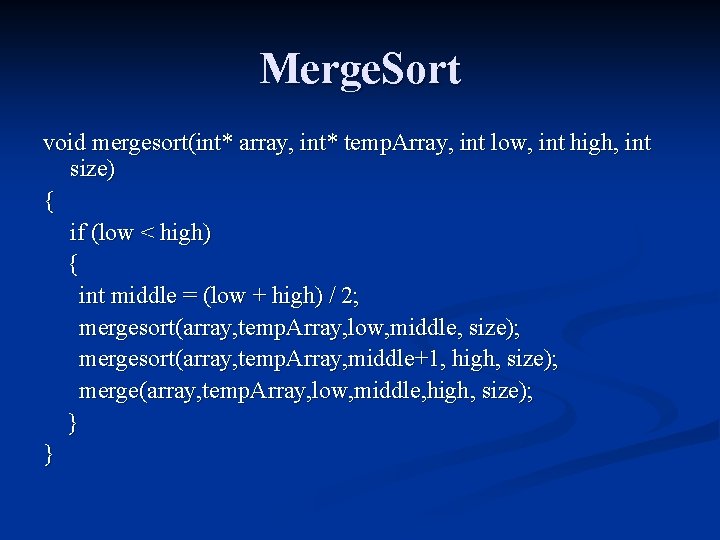

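MergeSort Example

- 20 3: with 2 cards the merge is not very interesting; think of it as a swap, giving 3 20.
- Merging 3 20 with 18: Temp Array = 3 20 18, with i at 3, j at 18, and k at the first position of Array.
- Temp[i] < Temp[j]? Yes (3 < 18), so 3 goes into Array[k].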

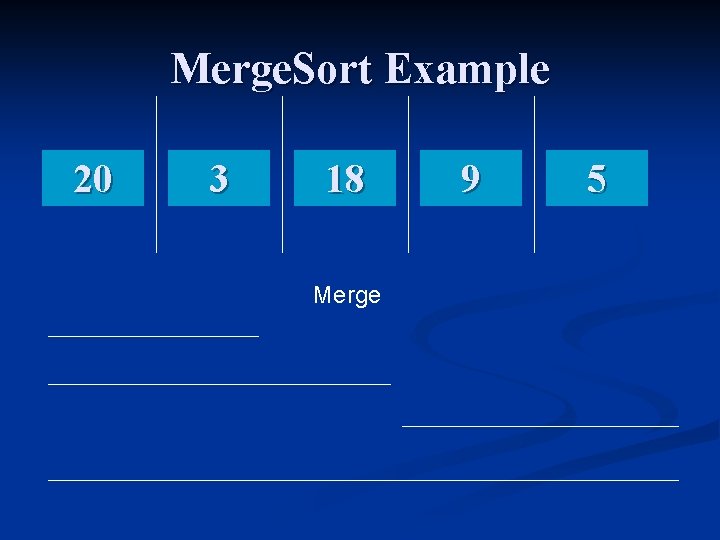

![MergeSort example: Temp[i] < Temp[j]? No](https://slidetodoc.com/presentation_image/355b37ab97cfc6c7d30c5eb8ac198339/image-17.jpg)

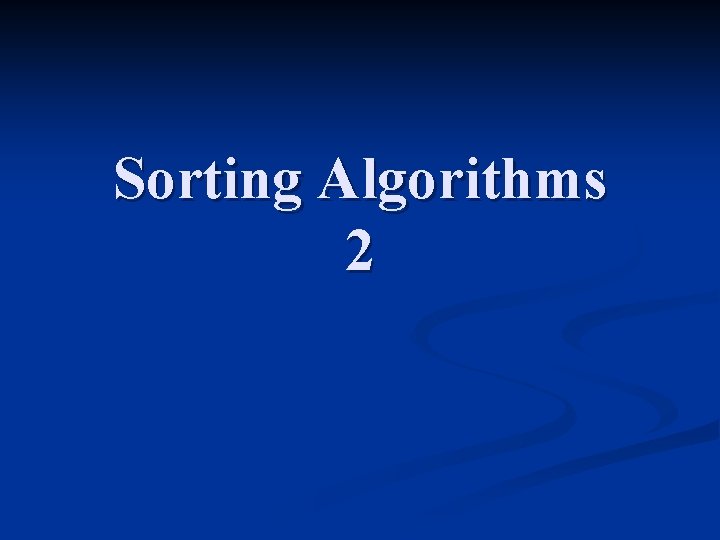

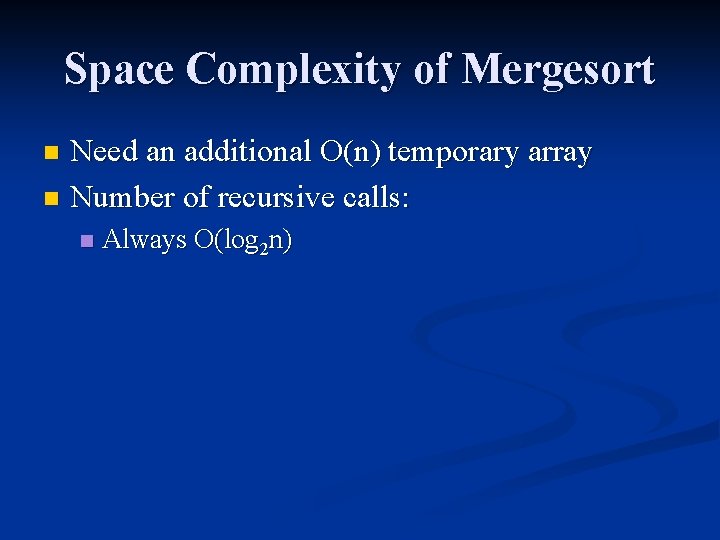

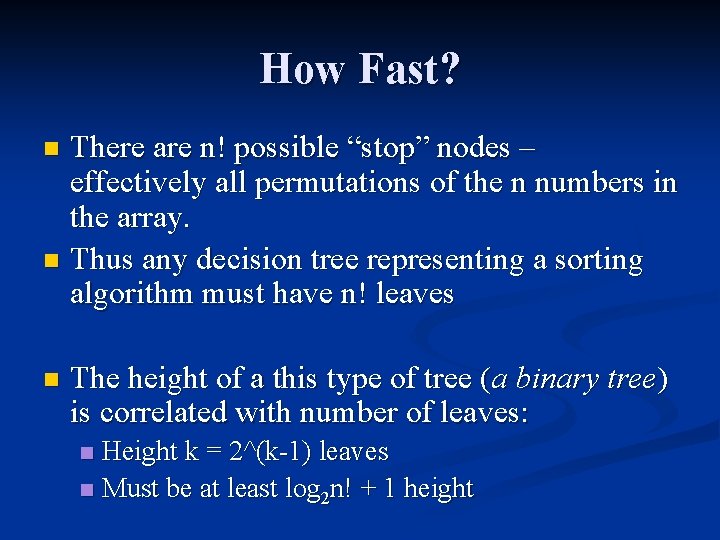

MergeSort Example

- Temp Array = 3 20 18; Array so far: 3.
- Temp[i] < Temp[j]? No (20 is not less than 18), so 18 goes into Array[k].
- Update j and k by 1 => hit the limit of the inner while loop, as j > high.
- Now copy until i > middle, which places 20.
- Array: 3 18 20

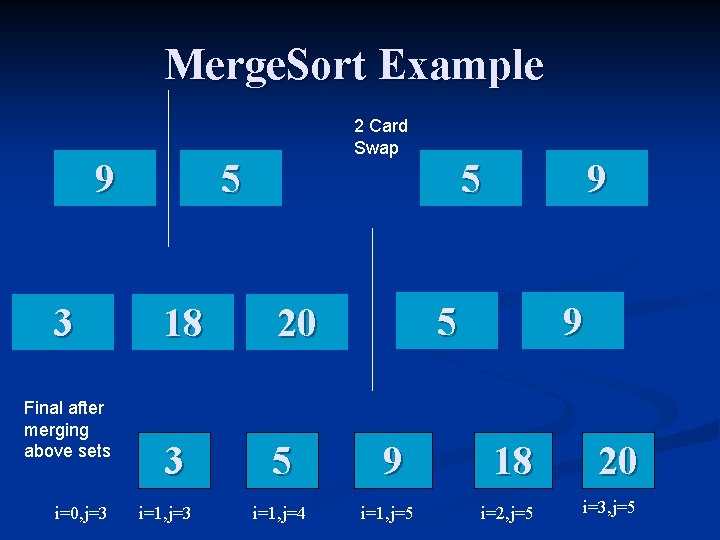

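MergeSort Example

Final merge of the sets produced above: 3 18 20 and 5 9 (9 5 becomes 5 9 by a 2-card swap).

- i=0, j=3: 3 goes into the array
- i=1, j=3: 5 goes into the array
- i=1, j=4: 9 goes into the array
- i=1, j=5: j > high, so the rest of the left side is copied; 18 goes into the array
- i=2, j=5: 20 goes into the array
- i=3, j=5: i > middle, done

Result: 3 5 9 18 20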

Complexity of MergeSort

Recurrence relation:

- 2 subproblems, each ½ the size.
- Merging is $O(n)$ for any subproblem: always moving forwards in the array.
- $a = 2$, $b = 2$, $k = 1$, and $a = b^k$ ($2 = 2^1$).
- Master Theorem: $O(n \log_2 n)$.
- Always $O(n \log_2 n)$, in both the average and the worst case.
- Doesn’t rely on the quality of a pivot choice.

Space Complexity of MergeSort

- Needs an additional $O(n)$ temporary array.
- Depth of the recursive calls: always $O(\log_2 n)$.

Tradeoffs

- When is it more useful to:
  - Just search?
  - Quicksort or mergesort, and then search?
- Assume Z searches:
  - Search on random (unsorted) data: $Z \cdot O(n)$
  - Fast sort and binary search: $O(n \log_2 n) + Z \log_2 n$

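Tradeoffs

Just searching is no more expensive than sorting first as long as:

$$
\begin{aligned}
Z \cdot n &\le n \log_2 n + Z \log_2 n \\
Z\,(n - \log_2 n) &\le n \log_2 n \\
Z &\le \frac{n \log_2 n}{n - \log_2 n} \\
Z &\le \frac{n \log_2 n}{n} && \text{[approximation]} \\
Z &\le \log_2 n && \text{[approximation]}
\end{aligned}
$$

Whereas before we had to do N searches to make up for the cost of sorting, now we only need about $\log_2 N$. For 1,000,000 items that is 19 searches, instead of 1,000,000.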
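The break-even point can also be evaluated numerically. The sketch below treats the big-O expressions as exact operation counts with constant factor 1, which is an assumption made only for illustration; the three function names are hypothetical.

```cpp
#include <cmath>

// Cost of Z linear searches over n unsorted items.
double linearSearchCost(double n, double z) {
    return z * n;
}

// Cost of one O(n log n) sort followed by Z binary searches.
double sortThenSearchCost(double n, double z) {
    return n * std::log2(n) + z * std::log2(n);
}

// Number of searches Z at which the two costs are equal:
// Z = n log2 n / (n - log2 n), which is close to log2 n for large n.
double breakEvenSearches(double n) {
    return n * std::log2(n) / (n - std::log2(n));
}
```

For n = 1,000,000 the break-even value is just under 20, so with 19 searches plain linear search is still cheaper, and from 20 searches on sorting first wins under this cost model.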

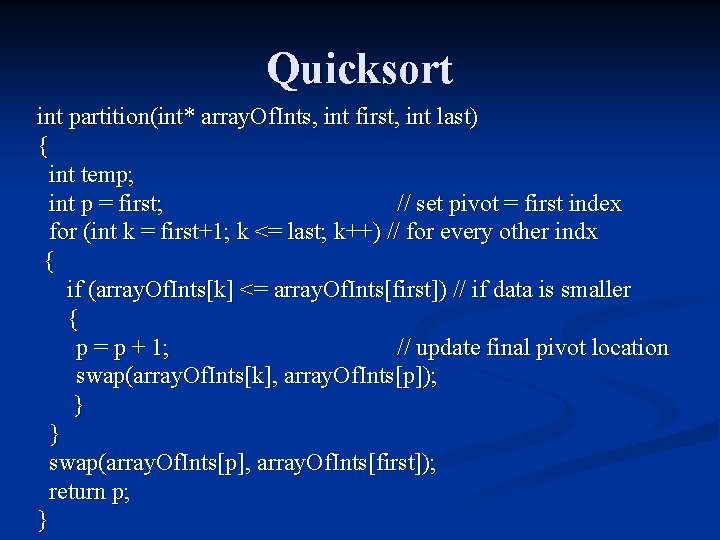

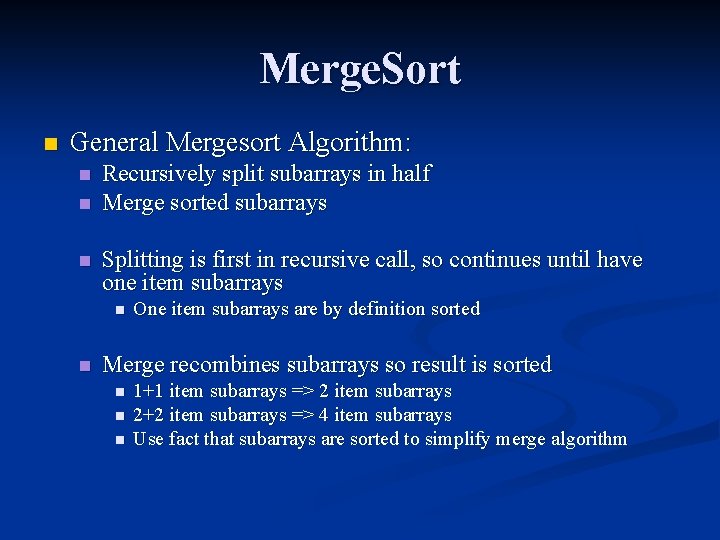

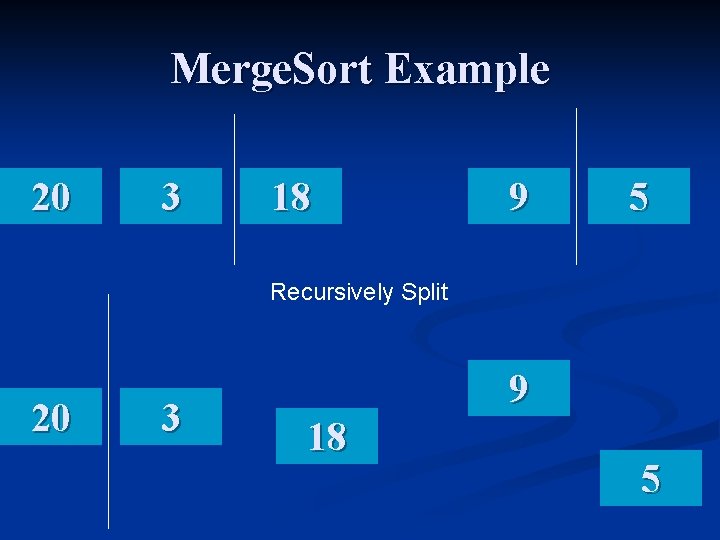

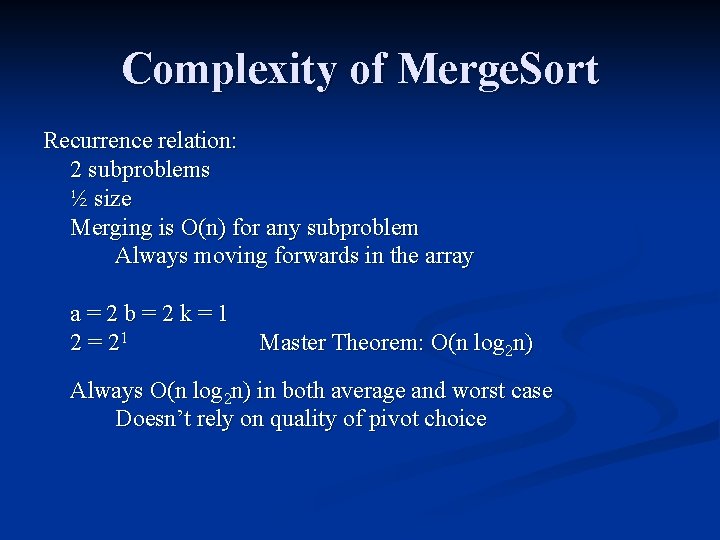

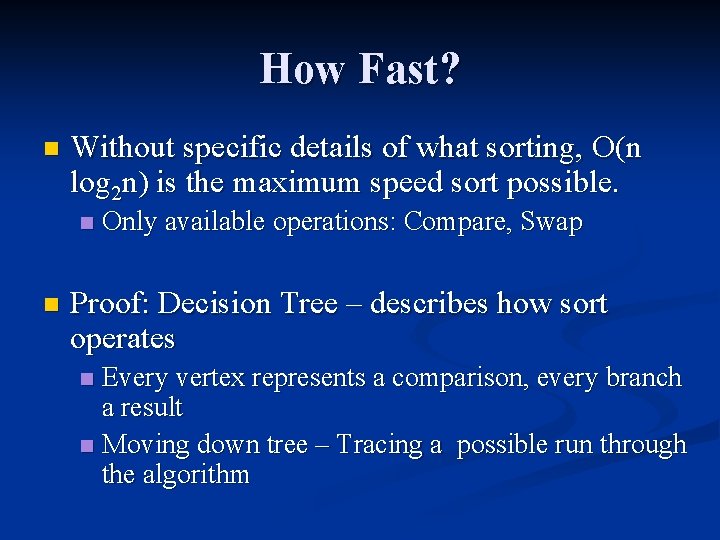

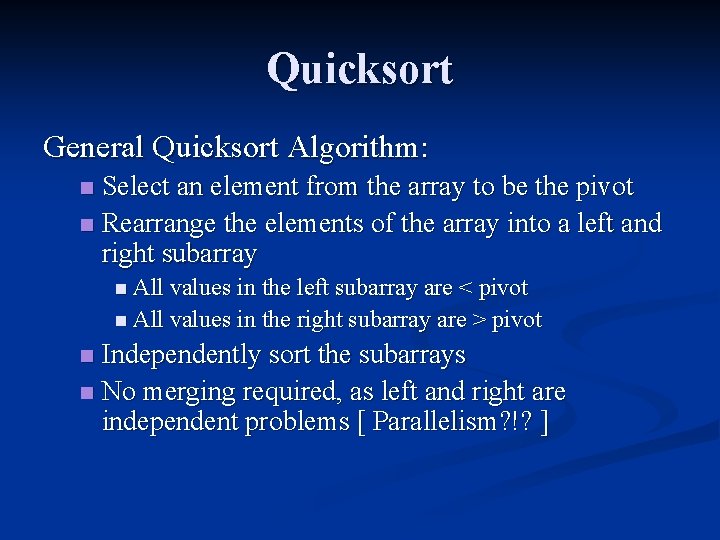

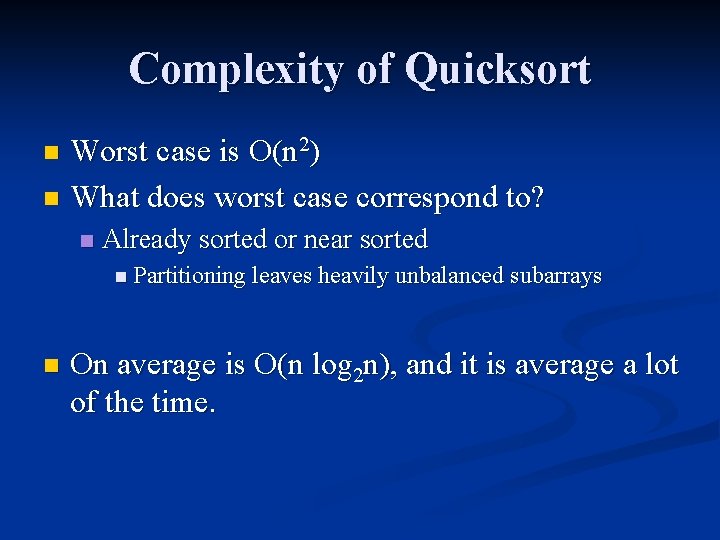

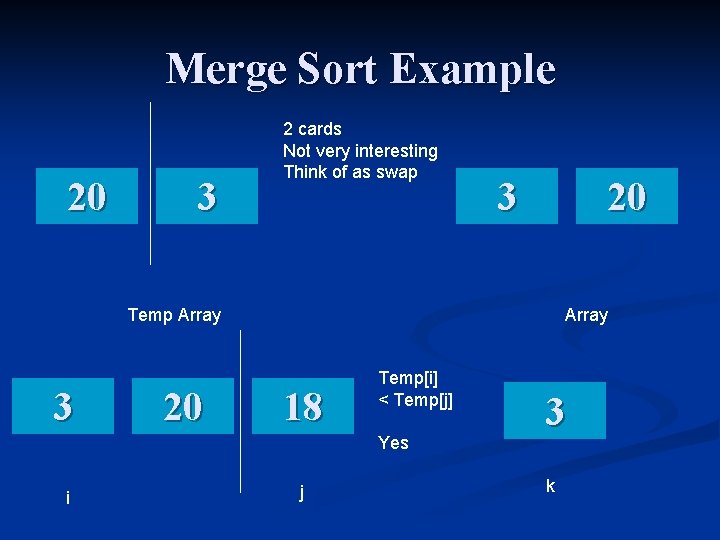

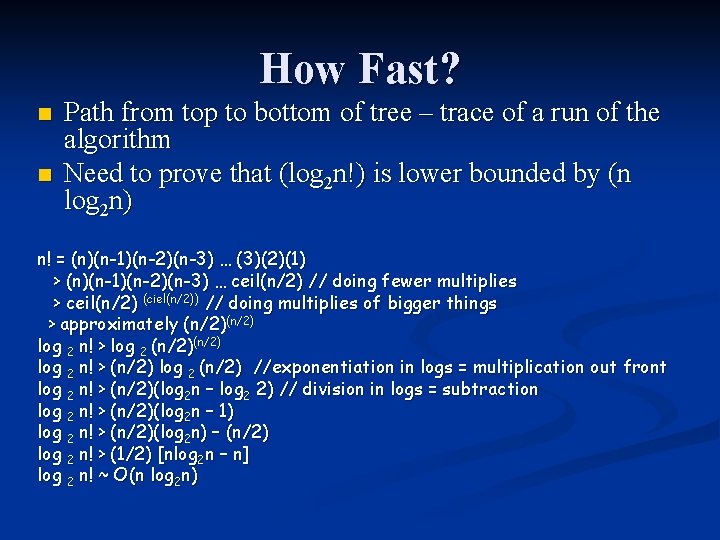

How Fast?

- Without specific details about what is being sorted, $O(n \log_2 n)$ is the fastest sort possible.
  - Only available operations: compare, swap.
- Proof: a decision tree, which describes how the sort operates.
  - Every vertex represents a comparison, every branch a result.
  - Moving down the tree traces a possible run through the algorithm.

![How Fast? Decision tree for [1, 2, 3], first comparison K1 <= K2](https://slidetodoc.com/presentation_image/355b37ab97cfc6c7d30c5eb8ac198339/image-24.jpg)

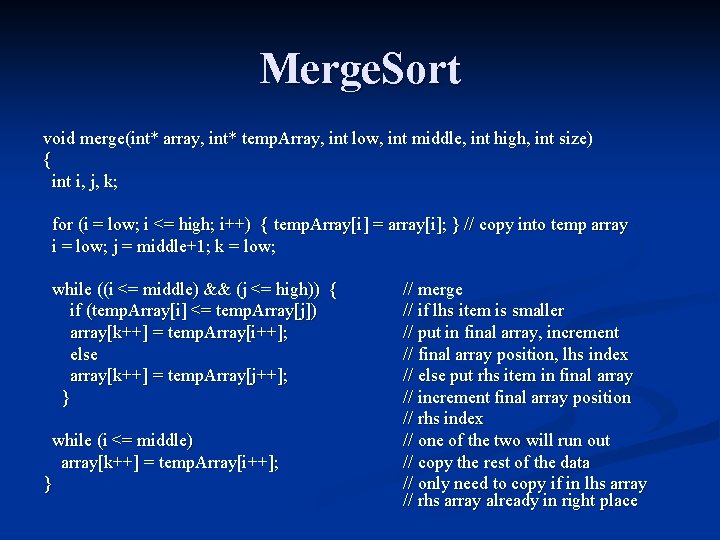

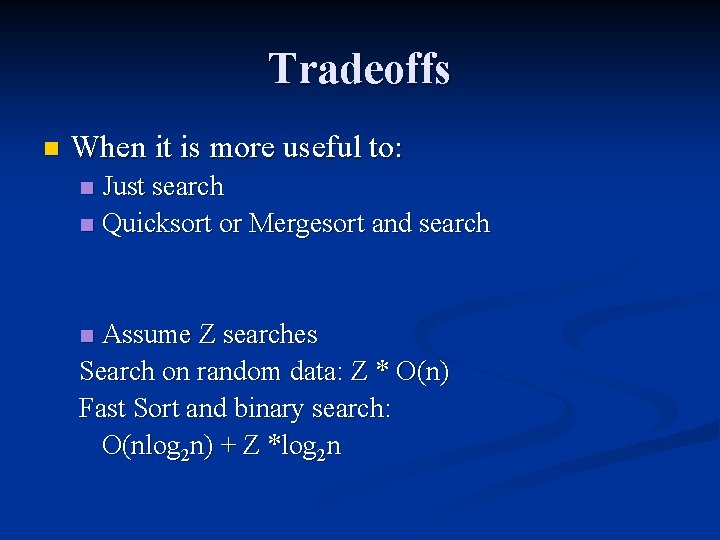

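How Fast?

Decision tree for sorting three keys K1, K2, K3, starting from [1, 2, 3] (each "stop" leaf lists the key indices in sorted order):

- K1 <= K2?
  - Yes: K2 <= K3?
    - Yes: stop [1, 2, 3]
    - No: K1 <= K3?
      - Yes: stop [1, 3, 2]
      - No: stop [3, 1, 2]
  - No: K1 <= K3?
    - Yes: stop [2, 1, 3]
    - No: K2 <= K3?
      - Yes: stop [2, 3, 1]
      - No: stop [3, 2, 1]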

How Fast?

- There are n! possible "stop" nodes: effectively all permutations of the n numbers in the array.
- Thus any decision tree representing a sorting algorithm must have n! leaves.
- The height of this type of tree (a binary tree) is tied to the number of leaves: a tree of height k has at most $2^{k-1}$ leaves.
- So the height must be at least $\log_2 n! + 1$.
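The leaf-counting argument gives a concrete minimum number of comparisons for small n. The sketch below uses exact integer arithmetic; `minComparisons` is a hypothetical name, and the restriction to n <= 20 is an assumption made so that n! fits in 64 bits.

```cpp
#include <cstdint>

// Smallest h such that 2^h >= n!, i.e. the fewest comparisons on the longest
// path of any decision tree with n! leaves. Valid for 0 <= n <= 20.
// In the slide's convention the tree height is h + 1.
int minComparisons(int n) {
    std::uint64_t factorial = 1;
    for (int i = 2; i <= n; ++i) factorial *= static_cast<std::uint64_t>(i);

    int h = 0;
    std::uint64_t leaves = 1;  // a tree with h comparison levels has at most 2^h leaves
    while (leaves < factorial) {
        leaves *= 2;
        ++h;
    }
    return h;
}
```

For three keys this gives 3 comparisons, which matches the depth of the decision tree drawn for [1, 2, 3]; for ten keys it gives 22.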

How Fast?

- A path from the top to the bottom of the tree is the trace of one run of the algorithm.
- Need to prove that $\log_2 n!$ is lower bounded by a constant multiple of $n \log_2 n$.

$$
\begin{aligned}
n! &= n(n-1)(n-2)(n-3)\cdots(3)(2)(1) \\
   &\ge n(n-1)(n-2)(n-3)\cdots\lceil n/2 \rceil && \text{(doing fewer multiplies)} \\
   &\ge \lceil n/2 \rceil^{\lceil n/2 \rceil} && \text{(replacing every factor by the smallest one)} \\
   &\ge (n/2)^{n/2} \\
\log_2 n! &\ge \log_2 (n/2)^{n/2} \\
\log_2 n! &\ge (n/2) \log_2 (n/2) && \text{(exponentiation in logs = multiplication out front)} \\
\log_2 n! &\ge (n/2)(\log_2 n - \log_2 2) && \text{(division in logs = subtraction)} \\
\log_2 n! &\ge (n/2)(\log_2 n - 1) \\
\log_2 n! &\ge (n/2)\log_2 n - (n/2) \\
\log_2 n! &\ge \tfrac{1}{2}\,[\,n \log_2 n - n\,] \\
\log_2 n! &= \Omega(n \log_2 n)
\end{aligned}
$$
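The bound can be sanity-checked numerically. This is only a spot check under floating-point arithmetic, not a proof, and the function names are hypothetical.

```cpp
#include <cmath>

// log2(n!) computed as log2(2) + log2(3) + ... + log2(n).
double log2Factorial(int n) {
    double sum = 0.0;
    for (int i = 2; i <= n; ++i) sum += std::log2(static_cast<double>(i));
    return sum;
}

// The lower bound from the derivation: (n/2)(log2 n - 1).
double slideLowerBound(int n) {
    return (n / 2.0) * (std::log2(static_cast<double>(n)) - 1.0);
}

// The trivial upper bound log2(n^n) = n log2 n, since n! <= n^n.
double upperBound(int n) {
    return n * std::log2(static_cast<double>(n));
}
```

For every n >= 1, `log2Factorial(n)` should fall between `slideLowerBound(n)` and `upperBound(n)`, which is what places $\log_2 n!$ within constant factors of $n \log_2 n$.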