SOP for Machine Learning, J.-S. Roger Jang

- Slides: 13

SOP for Machine Learning

J.-S. Roger Jang (張智星), jang@mirlab.org, http://mirlab.org/jang
MIR Lab, CSIE Dept., National Taiwan University

SOP for ML

- Does SOP for ML exist?
  - Yes!
  - More or less…

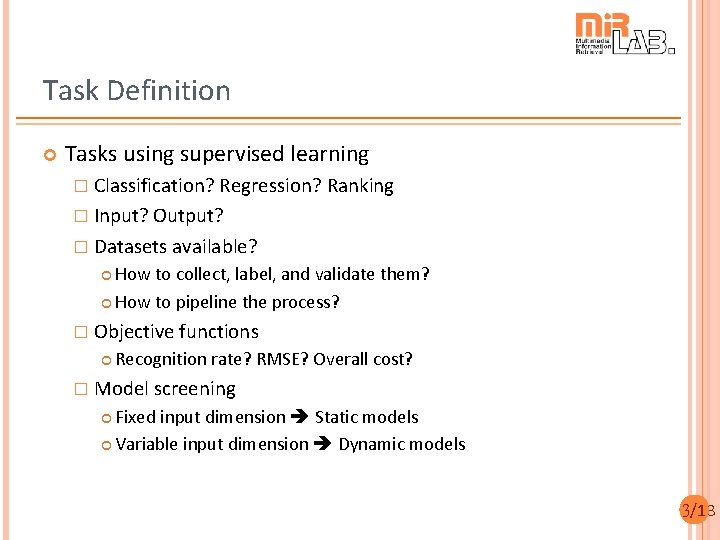

Task Definition

- Tasks using supervised learning
  - Classification? Regression? Ranking?
  - Input? Output?
  - Datasets available?
    - How to collect, label, and validate them?
    - How to pipeline the process?
  - Objective functions
    - Recognition rate? RMSE? Overall cost?
  - Model screening
    - Fixed input dimension: static models
    - Variable input dimension: dynamic models

Data Cleaning

- Handling of missing values
  - Data selection by maximum coverage
  - Data imputing
    - By median or average
    - By ML (again!)
- Data checkup (requires the aid of a visualization tool)
  - Same-value features within a class
  - Same-feature entries
  - Outlier removal via whisker plot
  - Correlation analysis/plot
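As a rough illustration of two of the steps above (imputing by median, then removing outliers with the whisker rule of a box plot), here is a minimal sketch using only the Python standard library. The function names, the sample column, and the conventional fence multiplier of 1.5 are assumptions made for this example, not something the slides prescribe.

```python
import statistics

def impute_median(column):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in column if v is not None]
    med = statistics.median(observed)
    return [med if v is None else v for v in column]

def whisker_bounds(values, k=1.5):
    """Return (low, high) fences placed k * IQR beyond the quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr

def remove_outliers(values, k=1.5):
    """Keep only the values that fall inside the whisker fences."""
    low, high = whisker_bounds(values, k)
    return [v for v in values if low <= v <= high]

# Hypothetical feature column with two missing entries and one extreme value.
raw = [2.0, None, 3.0, 4.0, None, 100.0, 3.5]
filled = impute_median(raw)
cleaned = remove_outliers(filled)
print(filled)
print(cleaned)
```

Imputing before outlier removal is a choice made here for brevity; with heavily contaminated data the reverse order may be preferable.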

Data Preprocessing

- Unstructured data to structured
  - Texts
    - One-hot encoding
    - Word hashing
    - Word to vector
    - TF-IDF & SVD
    - …
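To make two of the text encodings above concrete, the sketch below builds one-hot word vectors and TF-IDF document vectors for a tiny made-up corpus. It assumes whitespace tokenization and the plain `log(N / df)` form of IDF; real toolkits often use smoothed variants, and the SVD step is left out.

```python
import math
from collections import Counter

# Hypothetical three-document corpus, tokenized on whitespace.
docs = [
    "machine learning needs data",
    "data cleaning needs care",
    "learning to learn",
]
tokenized = [d.split() for d in docs]
vocab = sorted({w for doc in tokenized for w in doc})
index = {w: i for i, w in enumerate(vocab)}

def one_hot(word):
    """Vector with a single 1 at the word's vocabulary position."""
    vec = [0] * len(vocab)
    vec[index[word]] = 1
    return vec

def tf_idf(doc_tokens):
    """Term frequency times log(N / document frequency), per vocabulary word."""
    counts = Counter(doc_tokens)
    vec = [0.0] * len(vocab)
    for word, c in counts.items():
        tf = c / len(doc_tokens)
        df = sum(1 for d in tokenized if word in d)
        vec[index[word]] = tf * math.log(len(tokenized) / df)
    return vec

matrix = [tf_idf(d) for d in tokenized]
print(one_hot("data"))
print(matrix[0])
```

In the first document, "machine" receives a higher weight than "data" because it occurs in fewer documents.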

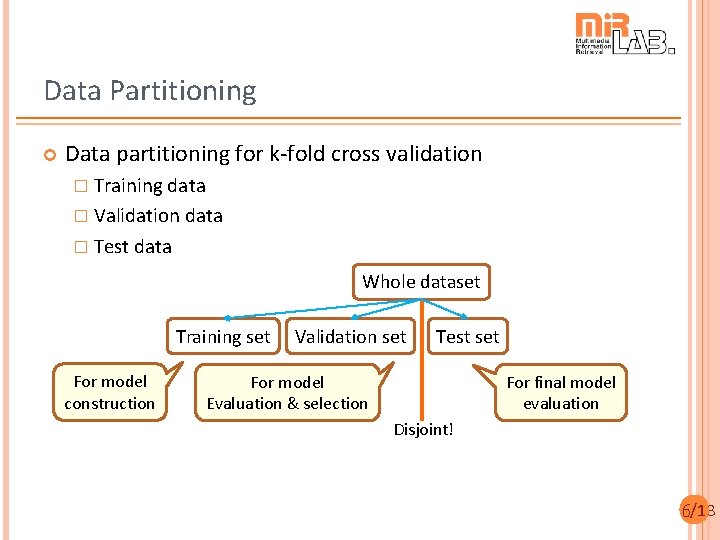

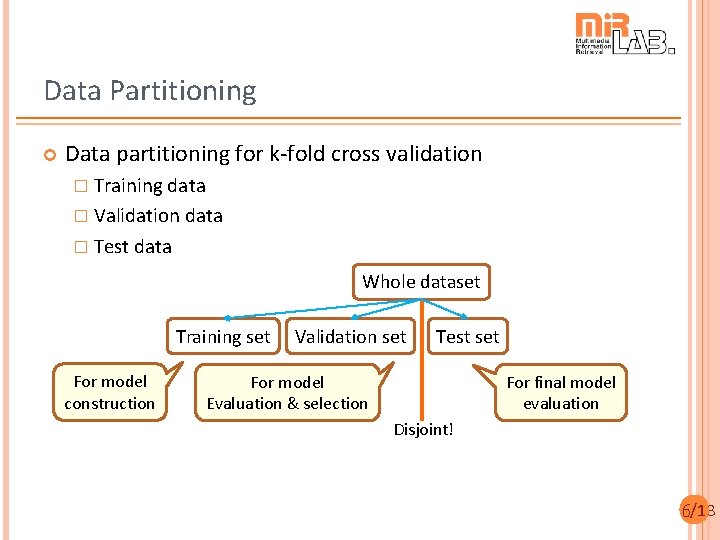

Data Partitioning

- Data partitioning for k-fold cross validation
  - Training data
  - Validation data
  - Test data
- Whole dataset, split into three disjoint sets:
  - Training set: for model construction
  - Validation set: for model evaluation & selection
  - Test set: for final model evaluation
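A three-way disjoint split of the whole dataset might look like the following sketch. The 60/20/20 proportions, the fixed seed, and the function name `three_way_split` are assumptions chosen for illustration.

```python
import random

def three_way_split(items, val_frac=0.2, test_frac=0.2, seed=0):
    """Shuffle once, then cut into disjoint training/validation/test lists."""
    rng = random.Random(seed)
    shuffled = list(items)
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test

# Sample indices 0..99 stand in for a real dataset.
train, val, test = three_way_split(range(100))
print(len(train), len(val), len(test))
```

Because every sample index lands in exactly one list, the test set stays untouched during model construction and selection.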

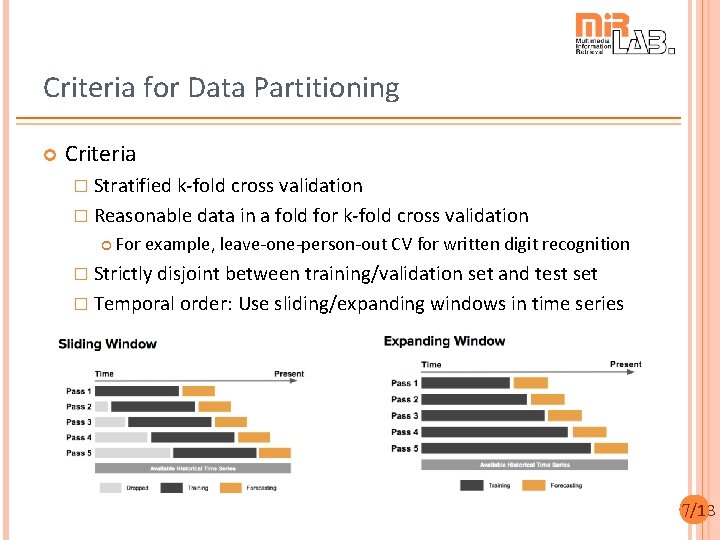

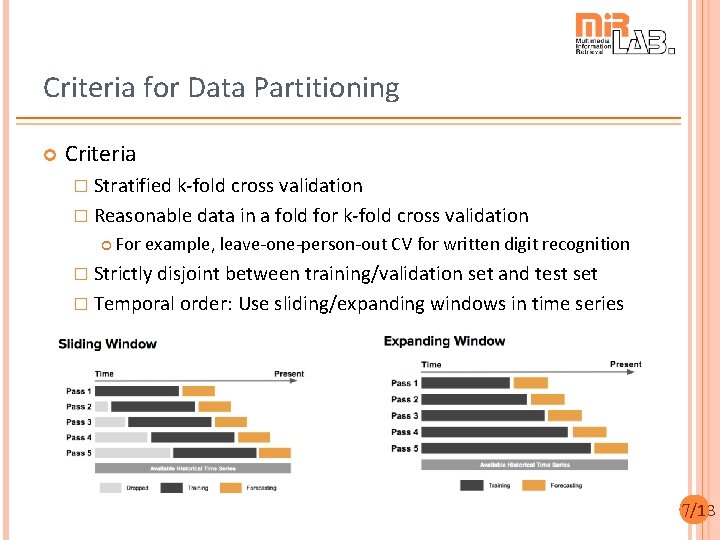

Criteria for Data Partitioning

- Criteria
  - Stratified k-fold cross validation
  - Reasonable data in a fold for k-fold cross validation
    - For example, leave-one-person-out CV for written digit recognition
  - Strictly disjoint between training/validation set and test set
  - Temporal order: use sliding/expanding windows in time series
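Two of these criteria can be sketched in a few lines: stratified fold assignment (each fold keeps roughly the class proportions of the whole set) and expanding-window splits for time series (training data always precedes test data). Both helper names are hypothetical, no shuffling is performed, and `expanding_windows` assumes `n_splits * test_size` is smaller than `n_samples`.

```python
from collections import defaultdict

def stratified_folds(labels, k):
    """Assign sample indices to k folds, dealing each class out round-robin."""
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        for pos, i in enumerate(indices):
            folds[pos % k].append(i)
    return folds

def expanding_windows(n_samples, n_splits, test_size):
    """Yield (train, test) index lists; the training window grows each split."""
    for s in range(n_splits):
        test_start = n_samples - (n_splits - s) * test_size
        train = list(range(test_start))
        test = list(range(test_start, test_start + test_size))
        yield train, test

labels = ["a"] * 6 + ["b"] * 3
folds = stratified_folds(labels, k=3)
splits = list(expanding_windows(n_samples=10, n_splits=3, test_size=2))
print(folds)
print(splits)
```

A leave-one-person-out scheme would follow the same pattern as `stratified_folds`, but grouping indices by writer identity instead of by class label.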

Classical ML Models

- Try out basic models
  - Static models
    - K-nearest-neighbor classifier
    - Naïve Bayes classifiers & quadratic classifiers
    - Support vector classifiers
    - Random forests & XGBoost
  - Dynamic models
    - HMM (hidden Markov models)
    - RNN (recurrent neural networks)
    - LSTM (long short-term memory)
- Check feature feasibility
  - Training vs. validation accuracy
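As a minimal sketch of trying a basic static model and then comparing training against validation accuracy, the code below implements a k-nearest-neighbor classifier with squared Euclidean distance. The two-cluster toy data and the choice `k=3` are assumptions for this example.

```python
from collections import Counter

def knn_predict(train_x, train_y, query, k=3):
    """Majority vote among the k training points closest to the query."""
    order = sorted(
        range(len(train_x)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(train_x[i], query)),
    )
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]

def accuracy(train_x, train_y, xs, ys, k):
    """Fraction of points in (xs, ys) that the KNN classifier labels correctly."""
    hits = sum(knn_predict(train_x, train_y, x, k) == y for x, y in zip(xs, ys))
    return hits / len(xs)

# Hypothetical, well-separated two-class data.
train_x = [(0.0, 0.0), (0.2, 0.1), (0.1, 0.3), (1.0, 1.0), (0.9, 1.2), (1.1, 0.8)]
train_y = [0, 0, 0, 1, 1, 1]
val_x = [(0.1, 0.1), (1.0, 0.9), (0.3, 0.2), (0.8, 1.0)]
val_y = [0, 1, 0, 1]

train_acc = accuracy(train_x, train_y, train_x, train_y, k=3)
val_acc = accuracy(train_x, train_y, val_x, val_y, k=3)
print(train_acc, val_acc)
```

When both accuracies are low, the features are probably not informative enough; a high training accuracy paired with a much lower validation accuracy points to overfitting instead.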

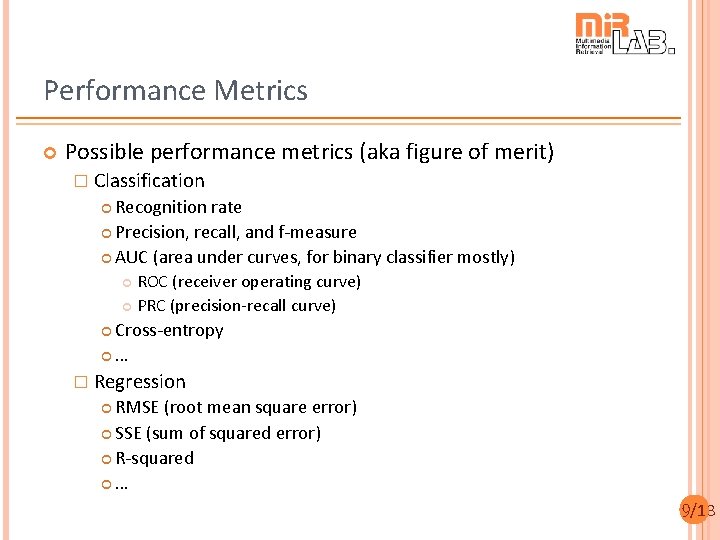

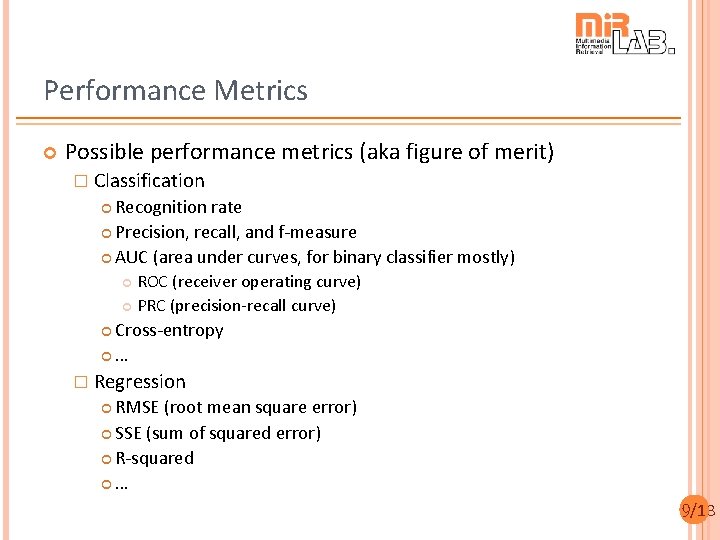

Performance Metrics

- Possible performance metrics (aka figures of merit)
  - Classification
    - Recognition rate
    - Precision, recall, and F-measure
    - AUC (area under the curve, mostly for binary classifiers)
      - ROC (receiver operating characteristic curve)
      - PRC (precision-recall curve)
    - Cross-entropy
    - …
  - Regression
    - RMSE (root mean square error)
    - SSE (sum of squared errors)
    - R-squared
    - …
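A few of these metrics are simple enough to compute by hand. The sketch below covers precision, recall, and F-measure (the balanced F1 form is assumed) for a binary classifier, plus RMSE for regression; the label vectors are made up for illustration.

```python
import math

def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall, and F1 for the class treated as positive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

def rmse(y_true, y_pred):
    """Root mean square error between targets and predictions."""
    sse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    return math.sqrt(sse / len(y_true))

y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
error = rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
print(precision, recall, f1, error)
```

Here there are three true positives, one false positive, and one false negative, so precision, recall, and F1 all come out to 0.75.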

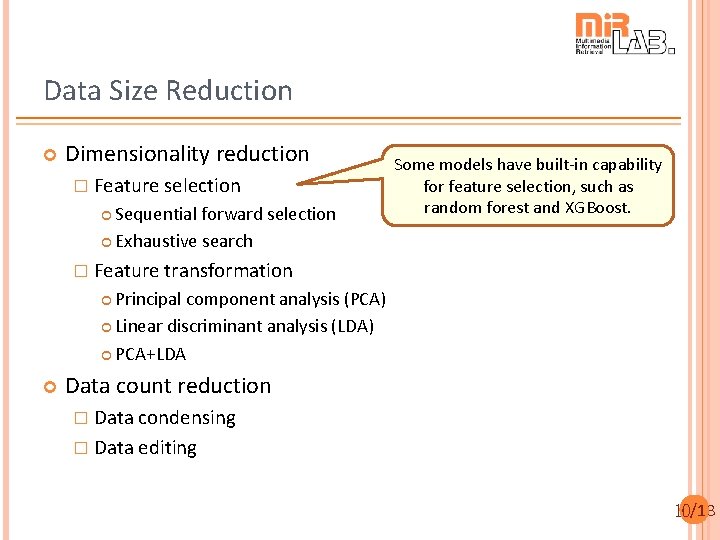

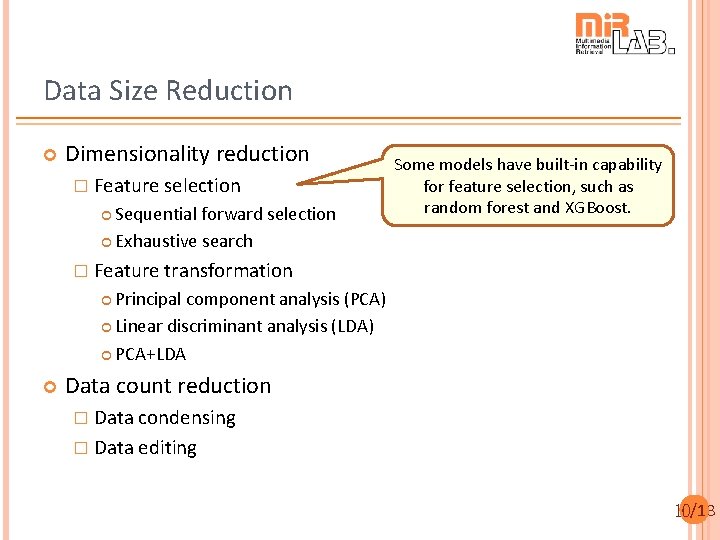

Data Size Reduction

- Dimensionality reduction
  - Feature selection
    - Sequential forward selection
    - Exhaustive search
    - Some models have built-in capability for feature selection, such as random forest and XGBoost.
  - Feature transformation
    - Principal component analysis (PCA)
    - Linear discriminant analysis (LDA)
    - PCA+LDA
- Data count reduction
  - Data condensing
  - Data editing
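Sequential forward selection can be sketched as a greedy loop: start from an empty set and repeatedly add the single feature that improves the score most, stopping when no addition helps. The `score` callback is an assumption of this example; in practice it would typically be a validation accuracy, and `toy_score` below is only a hypothetical stand-in.

```python
def sequential_forward_selection(n_features, score, max_features=None):
    """Greedily grow a feature subset while the score keeps improving."""
    selected = []
    best_score = float("-inf")
    remaining = set(range(n_features))
    limit = max_features or n_features
    while remaining and len(selected) < limit:
        # Evaluate every one-feature extension of the current subset.
        candidate, candidate_score = max(
            ((f, score(selected + [f])) for f in sorted(remaining)),
            key=lambda pair: pair[1],
        )
        if candidate_score <= best_score:
            break  # no extension improves the score
        selected.append(candidate)
        remaining.remove(candidate)
        best_score = candidate_score
    return selected, best_score

# Hypothetical score: features 0 and 2 are useful, every feature costs 0.01.
USEFUL = {0: 0.6, 2: 0.3}

def toy_score(subset):
    return sum(USEFUL.get(f, 0.0) for f in subset) - 0.01 * len(subset)

selected, best = sequential_forward_selection(4, toy_score)
print(selected, best)
```

Unlike exhaustive search, which would evaluate all 2^n subsets, this greedy procedure evaluates at most n(n+1)/2 of them, at the risk of missing feature combinations that only help jointly.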

Avoidance of Overfitting

- Model structure identification (aka model complexity estimation)
  - No. of clusters in k-means clustering for KNNC
  - No. of mixtures in GMM
  - No. of hidden layers in NN
- Regularization
  - Use a regularization term in the objective function
    - For instance, the L2 norm of weights in NN
  - Dropout in NN
- Figure labels: Regression, Classification
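To show how a regularization term in the objective function shrinks the weights, here is a deliberately small sketch: a one-parameter linear model `y ≈ w * x` fitted by minimizing `sum((y - w*x)**2) + lam * w**2`, which has the closed-form solution `w = sum(x*y) / (sum(x*x) + lam)`. The single-weight model, the data, and the value `lam=5.0` are assumptions; a neural network would apply the same penalty to all of its weights.

```python
def fit_ridge_1d(xs, ys, lam):
    """Closed-form minimizer of sum((y - w*x)^2) + lam * w^2 over w."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

def objective(w, xs, ys, lam):
    """Squared error plus the L2 penalty on the weight."""
    sse = sum((y - w * x) ** 2 for x, y in zip(xs, ys))
    return sse + lam * w ** 2

xs = [1.0, 2.0, 3.0]
ys = [2.1, 3.9, 6.2]
w_plain = fit_ridge_1d(xs, ys, lam=0.0)  # ordinary least squares
w_reg = fit_ridge_1d(xs, ys, lam=5.0)    # penalized fit, pulled toward zero
print(w_plain, w_reg)
```

The penalized weight is smaller in magnitude than the unpenalized one, which is the mechanism by which L2 regularization limits model complexity.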

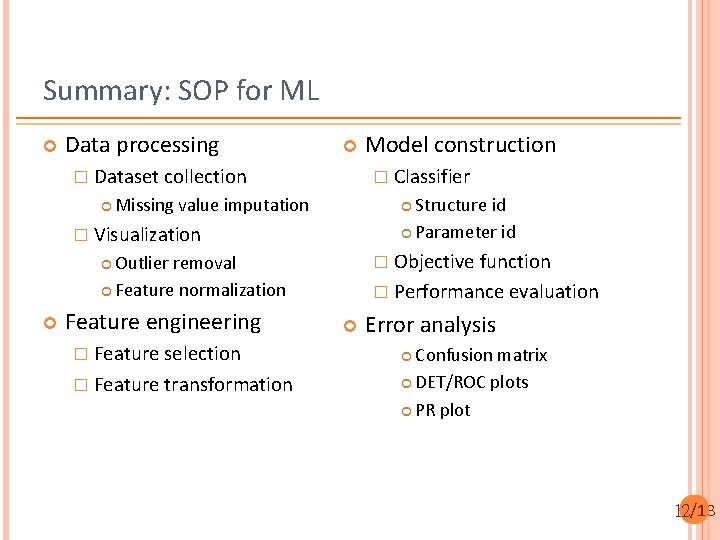

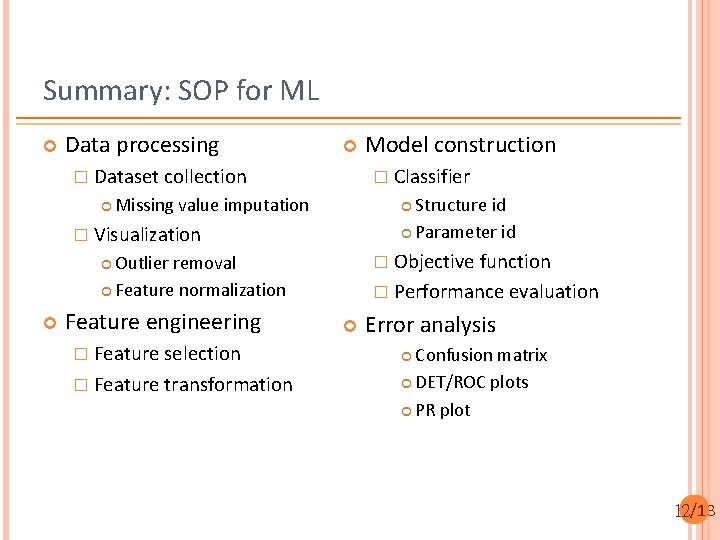

Summary: SOP for ML

- Data processing
  - Dataset collection
  - Missing value imputation
  - Visualization
  - Outlier removal
  - Feature normalization
- Feature engineering
  - Feature selection
  - Feature transformation
- Model construction
  - Classifier
    - Structure identification
    - Parameter identification
  - Objective function
  - Performance evaluation
- Error analysis
  - Confusion matrix
  - DET/ROC plots
  - PR plot
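For the error-analysis step, a confusion matrix is often the first thing to inspect. The sketch below counts (true, predicted) label pairs; rows are true classes and columns are predicted classes, a layout convention assumed here, and the labels are invented for illustration.

```python
from collections import Counter

def confusion_matrix(y_true, y_pred, classes):
    """Rows index the true class, columns the predicted class."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(t, p)] for p in classes] for t in classes]

classes = ["bird", "cat", "dog"]
y_true = ["cat", "cat", "dog", "dog", "dog", "bird"]
y_pred = ["cat", "dog", "dog", "dog", "cat", "bird"]
matrix = confusion_matrix(y_true, y_pred, classes)
for name, row in zip(classes, matrix):
    print(name, row)
```

Off-diagonal entries show which classes are confused with each other; in this toy case "cat" and "dog" are mistaken for one another once each.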

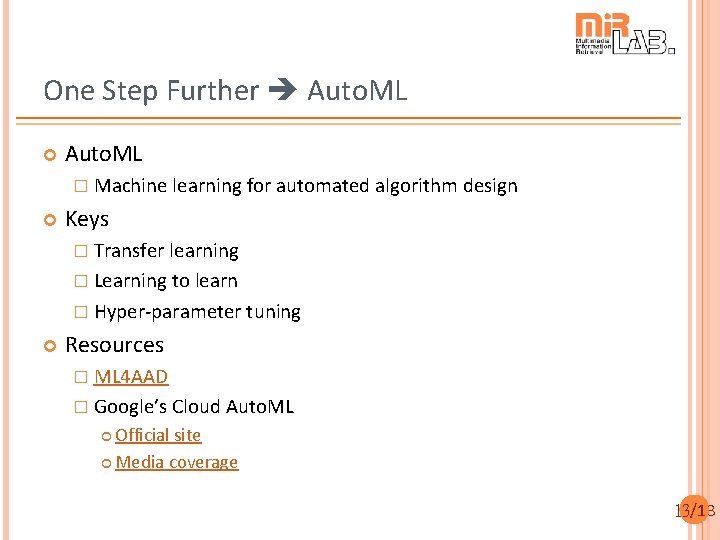

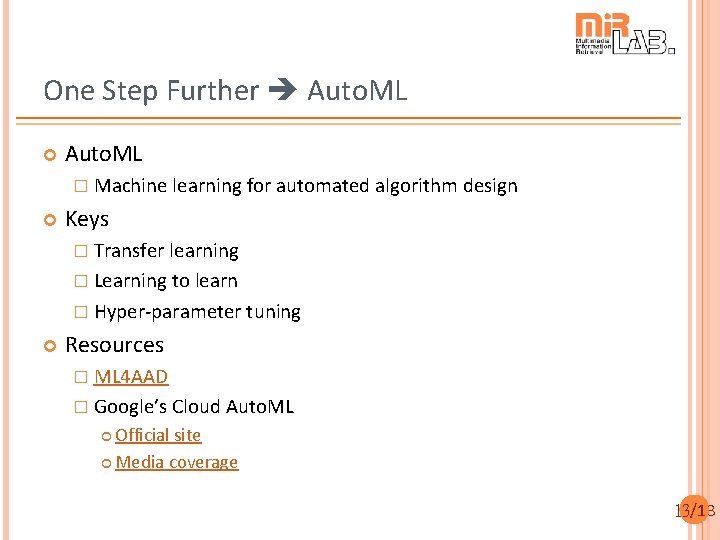

One Step Further

- AutoML
  - Machine learning for automated algorithm design
- Keys
  - Transfer learning
  - Learning to learn
  - Hyper-parameter tuning
- Resources
  - ML4AAD
  - Google's Cloud AutoML
    - Official site
    - Media coverage
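Hyper-parameter tuning, one of the keys listed above, can be illustrated in its simplest form as an exhaustive grid search over candidate settings. This is only a sketch: the `evaluate` callback would normally train a model and return a validation score, and `toy_validation_score` with the parameter names `k` and `lam` is a hypothetical stand-in.

```python
import itertools

def grid_search(param_grid, evaluate):
    """Try every combination in the grid and keep the best-scoring one."""
    names = sorted(param_grid)
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(param_grid[n] for n in names)):
        params = dict(zip(names, values))
        current = evaluate(params)
        if current > best_score:
            best_params, best_score = params, current
    return best_params, best_score

def toy_validation_score(params):
    # Hypothetical score surface that peaks at k=5, lam=0.1.
    return -abs(params["k"] - 5) - abs(params["lam"] - 0.1)

grid = {"k": [1, 3, 5, 7], "lam": [0.01, 0.1, 1.0]}
best_params, best_score = grid_search(grid, toy_validation_score)
print(best_params, best_score)
```

AutoML systems generally replace this brute-force loop with more sample-efficient strategies, but the interface (a search space plus a validation score to maximize) stays much the same.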