
Some Final Words

Ka-fu Wong, University of Hong Kong

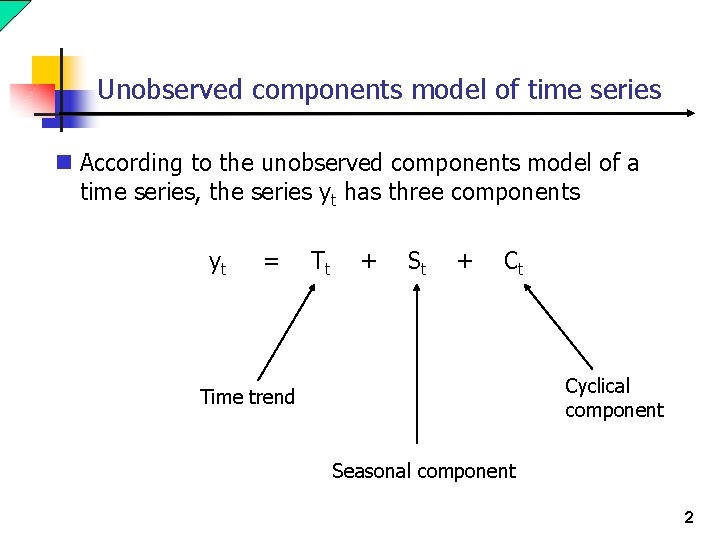

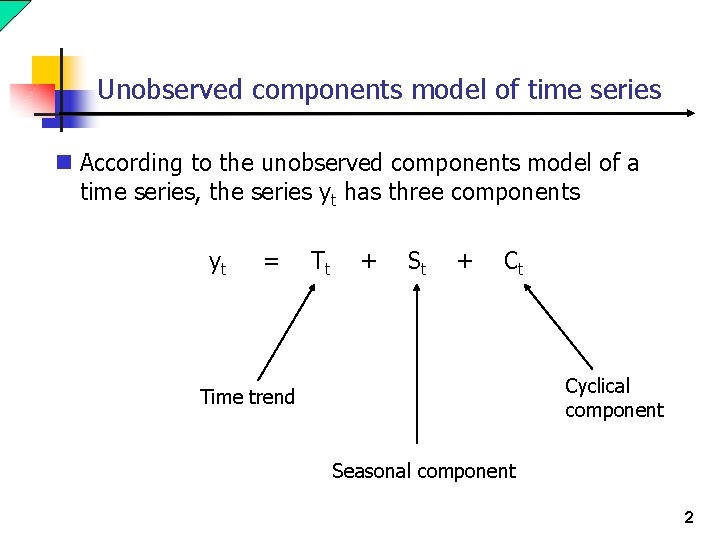

Unobserved components model of time series

- According to the unobserved components model of a time series, the series $y_t$ has three components, $y_t = T_t + S_t + C_t$, where $T_t$ is the time trend, $S_t$ is the seasonal component, and $C_t$ is the cyclical component.

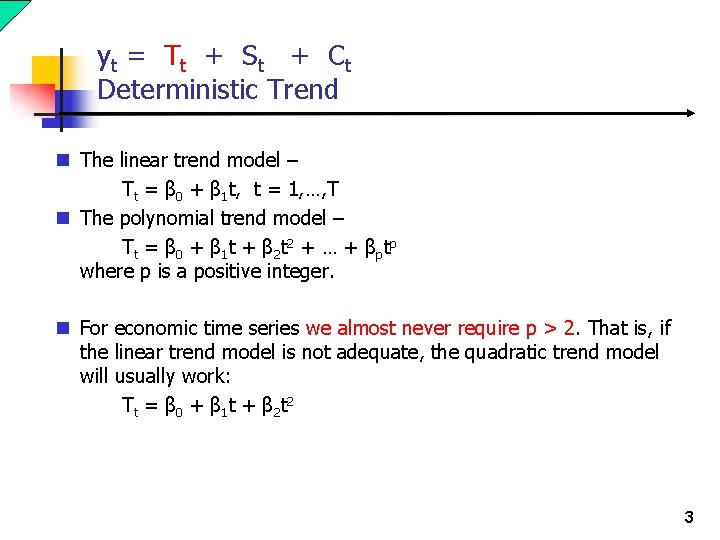

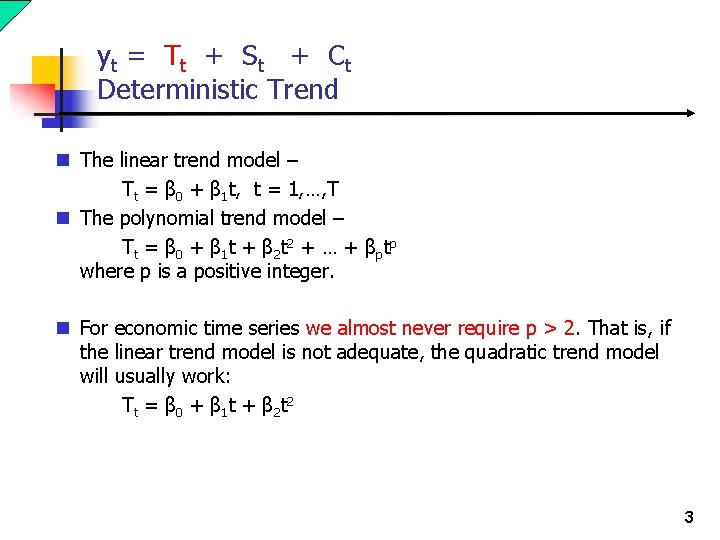

Deterministic trend ($y_t = T_t + S_t + C_t$)

- The linear trend model: $T_t = \beta_0 + \beta_1 t$, $t = 1, \dots, T$.
- The polynomial trend model: $T_t = \beta_0 + \beta_1 t + \beta_2 t^2 + \dots + \beta_p t^p$, where $p$ is a positive integer.
- For economic time series we almost never require $p > 2$. That is, if the linear trend model is not adequate, the quadratic trend model will usually work: $T_t = \beta_0 + \beta_1 t + \beta_2 t^2$.
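
As a rough illustration, the sketch below fits the linear and quadratic trend models by OLS with NumPy on a made-up series (the helper `fit_polynomial_trend` and all parameter values are hypothetical). Because the two models are nested, the quadratic fit can never have a larger sum of squared residuals than the linear one.

```python
import numpy as np

def fit_polynomial_trend(y, degree):
    """OLS fit of T_t = b_0 + b_1 t + ... + b_degree t**degree, with t = 1, ..., T."""
    t = np.arange(1, len(y) + 1, dtype=float)
    X = np.vander(t, degree + 1, increasing=True)   # columns: 1, t, t**2, ...
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef, X @ coef

# Made-up series: quadratic trend plus noise
rng = np.random.default_rng(0)
t = np.arange(1, 201, dtype=float)
y = 10.0 + 0.5 * t + 0.01 * t**2 + rng.normal(scale=2.0, size=t.size)

coef_lin, fit_lin = fit_polynomial_trend(y, degree=1)
coef_quad, fit_quad = fit_polynomial_trend(y, degree=2)
print("linear:   ", coef_lin.round(3))
print("quadratic:", coef_quad.round(3))
print("SSR linear vs quadratic:", np.sum((y - fit_lin) ** 2).round(1), np.sum((y - fit_quad) ** 2).round(1))
```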

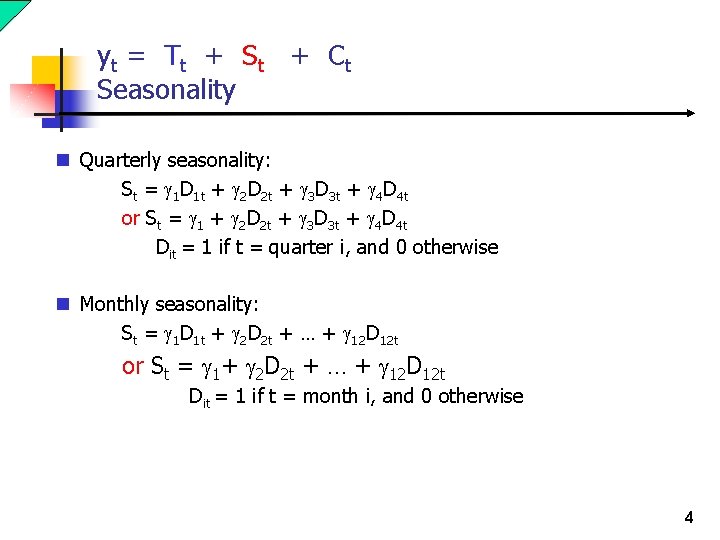

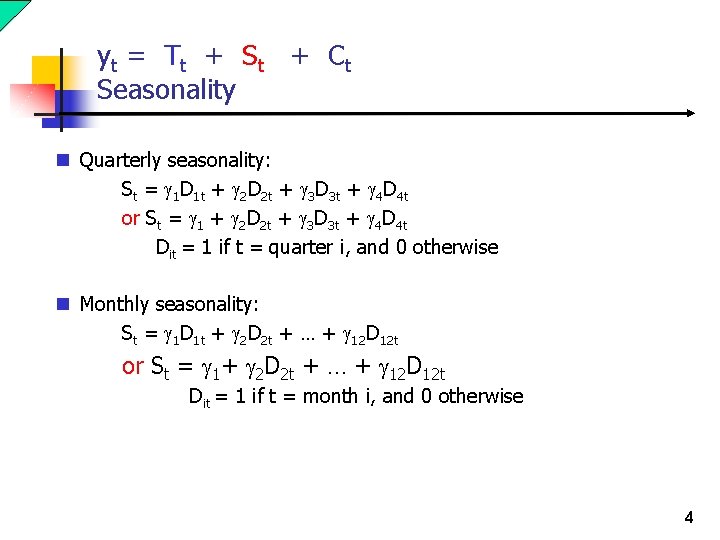

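Seasonality ($y_t = T_t + S_t + C_t$)

- Quarterly seasonality: $S_t = \gamma_1 D_{1t} + \gamma_2 D_{2t} + \gamma_3 D_{3t} + \gamma_4 D_{4t}$ or $S_t = \gamma_1 + \gamma_2 D_{2t} + \gamma_3 D_{3t} + \gamma_4 D_{4t}$, where $D_{it} = 1$ if $t$ falls in quarter $i$, and 0 otherwise.
- Monthly seasonality: $S_t = \gamma_1 D_{1t} + \gamma_2 D_{2t} + \dots + \gamma_{12} D_{12,t}$ or $S_t = \gamma_1 + \gamma_2 D_{2t} + \dots + \gamma_{12} D_{12,t}$, where $D_{it} = 1$ if $t$ falls in month $i$, and 0 otherwise.
- In the second form of each, $\gamma_1$ is the intercept (the level of the first season) and the remaining $\gamma_i$ measure deviations from it; an intercept together with all the dummies would be perfectly collinear.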
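
A minimal sketch, assuming NumPy, a made-up quarterly series, and a sample that starts in quarter 1 (the helper `quarterly_dummies` is hypothetical). It fits both quarterly forms and shows that they produce identical fitted values; only the meaning of the coefficients differs.

```python
import numpy as np

def quarterly_dummies(n_obs):
    """(n_obs x 4) matrix: column i is D_{i+1,t}, assuming the sample starts in quarter 1."""
    quarter_index = np.arange(n_obs) % 4           # 0, 1, 2, 3, 0, 1, ...
    return np.eye(4)[quarter_index]

rng = np.random.default_rng(1)
n = 40
D = quarterly_dummies(n)
y = D @ np.array([5.0, 7.0, 4.0, 6.0]) + rng.normal(scale=0.1, size=n)  # made-up seasonal levels

# Form 1: all four dummies, no intercept -> coefficients are the quarterly levels
g_all, *_ = np.linalg.lstsq(D, y, rcond=None)

# Form 2: intercept plus D_2, D_3, D_4 (D_1 dropped to avoid perfect collinearity)
X2 = np.column_stack([np.ones(n), D[:, 1:]])
g_int, *_ = np.linalg.lstsq(X2, y, rcond=None)

print(g_all.round(2))   # quarterly levels
print(g_int.round(2))   # quarter-1 level, then differences from quarter 1
```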

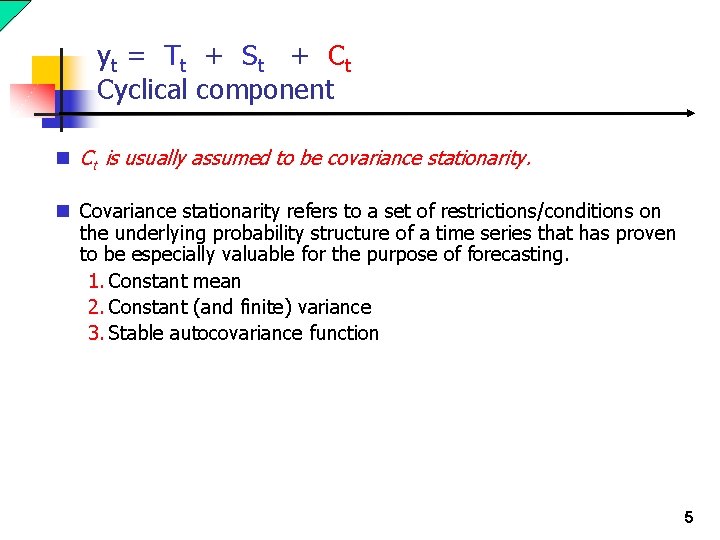

Cyclical component ($y_t = T_t + S_t + C_t$)

- $C_t$ is usually assumed to be covariance stationary.
- Covariance stationarity refers to a set of restrictions on the underlying probability structure of a time series that has proven especially valuable for forecasting:
  1. Constant mean
  2. Constant (and finite) variance
  3. Stable autocovariance function

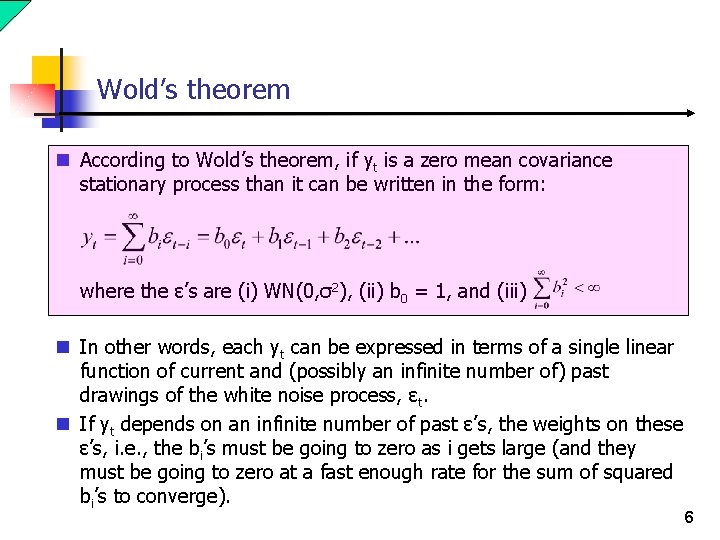

Wold's theorem

- According to Wold's theorem, if $y_t$ is a zero-mean covariance stationary process, then it can be written in the form $y_t = \sum_{i=0}^{\infty} b_i \varepsilon_{t-i}$, where (i) the $\varepsilon$'s are $WN(0, \sigma^2)$, (ii) $b_0 = 1$, and (iii) $\sum_{i=0}^{\infty} b_i^2 < \infty$.
- In other words, each $y_t$ can be expressed as a linear function of current and (possibly infinitely many) past drawings of the white noise process $\varepsilon_t$.
- If $y_t$ depends on an infinite number of past $\varepsilon$'s, the weights on these $\varepsilon$'s, i.e., the $b_i$'s, must go to zero as $i$ gets large, and fast enough for the sum of squared $b_i$'s to converge.

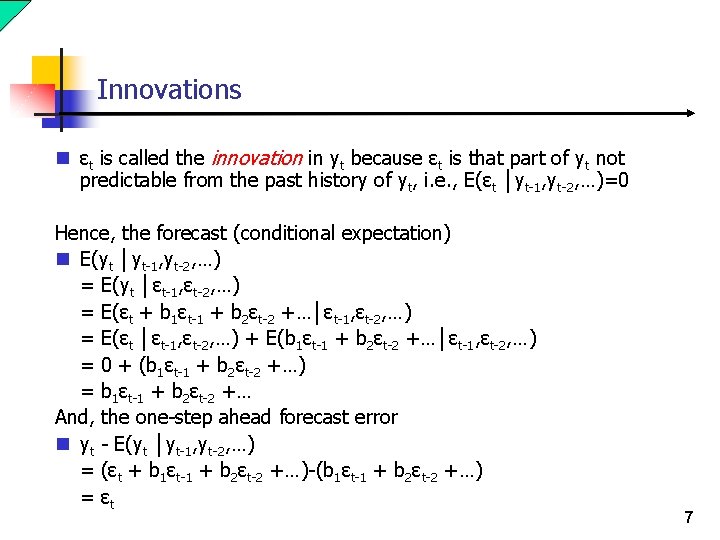

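Innovations

$\varepsilon_t$ is called the innovation in $y_t$ because it is the part of $y_t$ that is not predictable from the past history of $y_t$, i.e., $E(\varepsilon_t \mid y_{t-1}, y_{t-2}, \dots) = 0$. Hence, the forecast (conditional expectation) is

$$
\begin{aligned}
E(y_t \mid y_{t-1}, y_{t-2}, \dots) &= E(y_t \mid \varepsilon_{t-1}, \varepsilon_{t-2}, \dots) \\
&= E(\varepsilon_t + b_1 \varepsilon_{t-1} + b_2 \varepsilon_{t-2} + \dots \mid \varepsilon_{t-1}, \varepsilon_{t-2}, \dots) \\
&= E(\varepsilon_t \mid \varepsilon_{t-1}, \varepsilon_{t-2}, \dots) + E(b_1 \varepsilon_{t-1} + b_2 \varepsilon_{t-2} + \dots \mid \varepsilon_{t-1}, \varepsilon_{t-2}, \dots) \\
&= 0 + (b_1 \varepsilon_{t-1} + b_2 \varepsilon_{t-2} + \dots) \\
&= b_1 \varepsilon_{t-1} + b_2 \varepsilon_{t-2} + \dots
\end{aligned}
$$

and the one-step-ahead forecast error is

$$
y_t - E(y_t \mid y_{t-1}, y_{t-2}, \dots) = (\varepsilon_t + b_1 \varepsilon_{t-1} + b_2 \varepsilon_{t-2} + \dots) - (b_1 \varepsilon_{t-1} + b_2 \varepsilon_{t-2} + \dots) = \varepsilon_t.
$$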
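
The derivation can be checked numerically. The sketch below assumes NumPy, truncates the Wold sum after 30 terms, and uses hypothetical weights $b_i = 0.6^i$; it forms the forecast $\sum_{i \ge 1} b_i \varepsilon_{t-i}$ and confirms that the forecast error equals the innovation $\varepsilon_t$.

```python
import numpy as np

rng = np.random.default_rng(2)
q = 30
b = 0.6 ** np.arange(q)            # hypothetical Wold weights, b_0 = 1, truncated after 30 terms
eps = rng.normal(size=500)         # white-noise innovations, WN(0, 1)

# y_t = sum_{i=0}^{q-1} b_i * eps_{t-i}; "valid" keeps t = q-1, ..., 499
y = np.convolve(eps, b, mode="valid")

# E(y_t | eps_{t-1}, eps_{t-2}, ...) = sum_{i>=1} b_i * eps_{t-i}
# "valid" here covers t = q-1, ..., 500; the last entry is a genuine forecast of y_500
forecast = np.convolve(eps, b[1:], mode="valid")

error = y - forecast[:-1]          # one-step-ahead forecast errors for t = q-1, ..., 499
print(np.allclose(error, eps[q - 1:]))   # the errors are exactly the innovations
```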

Mapping Wold to a variety of models

- It turns out that the Wold representation can usually be well approximated by models that can be expressed in terms of a very small number of parameters:
  - the moving-average (MA) models,
  - the autoregressive (AR) models, and
  - the autoregressive moving-average (ARMA) models.

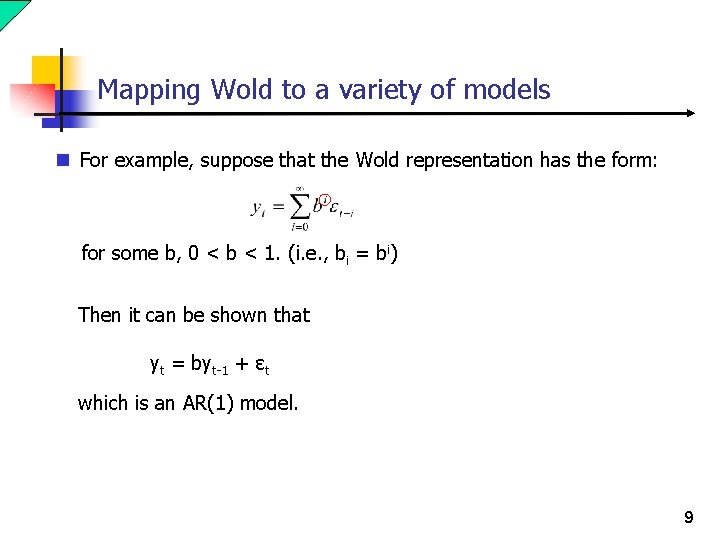

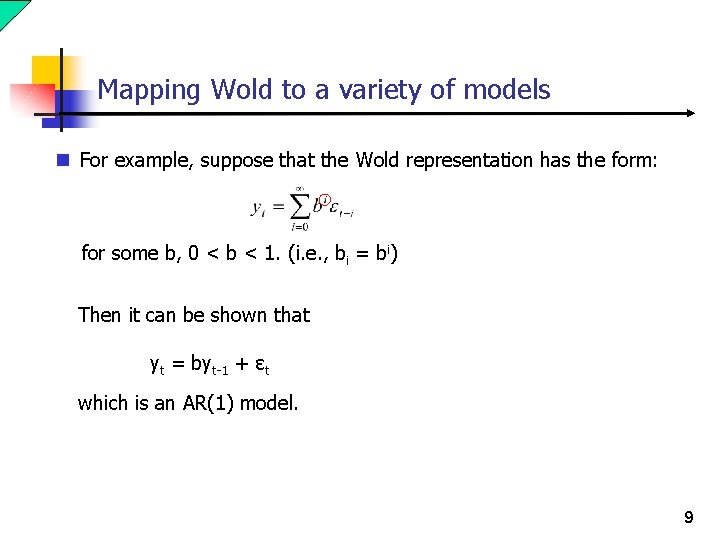

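Mapping Wold to a variety of models

- For example, suppose that the Wold representation has the form $y_t = \sum_{i=0}^{\infty} b^i \varepsilon_{t-i}$ for some $b$ with $0 < b < 1$ (i.e., $b_i = b^i$). Then it can be shown that $y_t = b y_{t-1} + \varepsilon_t$, which is an AR(1) model: since $b y_{t-1} = \sum_{i=1}^{\infty} b^i \varepsilon_{t-i}$, subtracting it from $y_t$ leaves only $\varepsilon_t$.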
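
A quick numerical check of this claim, assuming NumPy and truncating the infinite Wold sum at 200 lags (the value $b = 0.7$ and the sample size are made up): computing $y_t - b y_{t-1}$ recovers $\varepsilon_t$ up to a truncation term of order $b^{200}$.

```python
import numpy as np

rng = np.random.default_rng(3)
b, n_lags = 0.7, 200               # 0 < b < 1; the infinite sum is truncated at 200 lags
weights = b ** np.arange(n_lags)   # b_i = b**i
eps = rng.normal(size=1000)

# Wold form: y_t = sum_i b**i * eps_{t-i}, for t = n_lags-1, ..., 999
y = np.convolve(eps, weights, mode="valid")

# AR(1) check: y_t - b * y_{t-1} should reproduce eps_t
resid = y[1:] - b * y[:-1]
print(np.max(np.abs(resid - eps[n_lags:])))   # tiny: only the truncation term b**200 remains
```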

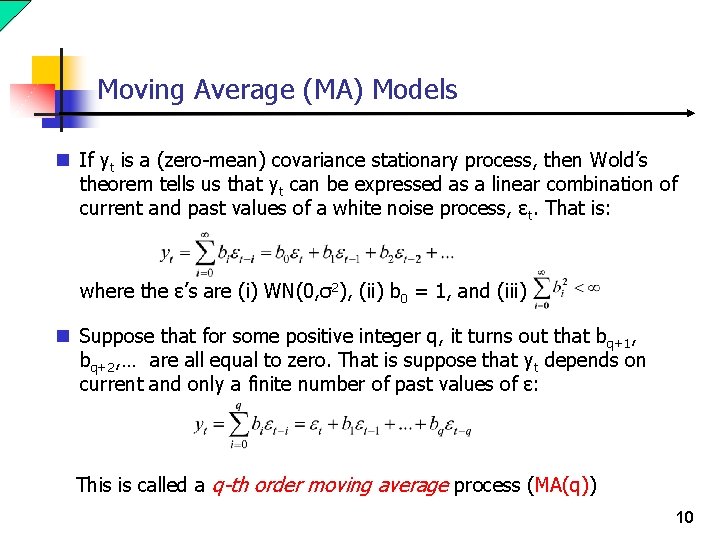

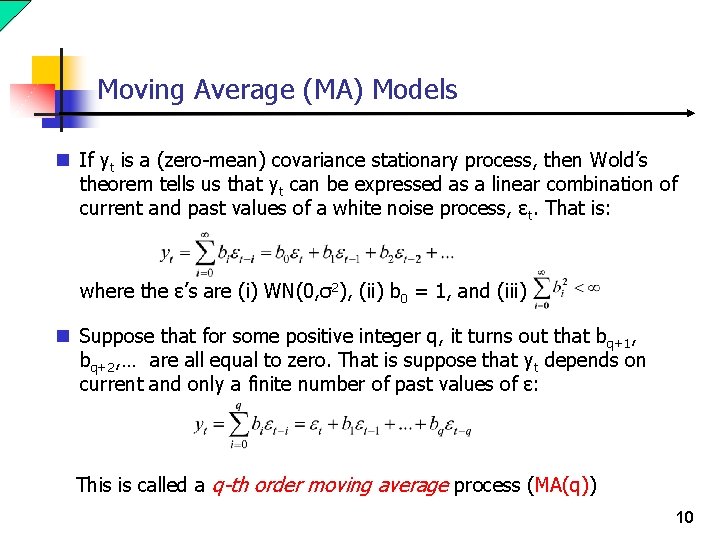

Moving Average (MA) Models

- If $y_t$ is a (zero-mean) covariance stationary process, then Wold's theorem tells us that $y_t$ can be expressed as a linear combination of current and past values of a white noise process $\varepsilon_t$: $y_t = \sum_{i=0}^{\infty} b_i \varepsilon_{t-i}$, where (i) the $\varepsilon$'s are $WN(0, \sigma^2)$, (ii) $b_0 = 1$, and (iii) $\sum_{i=0}^{\infty} b_i^2 < \infty$.
- Suppose that for some positive integer $q$, it turns out that $b_{q+1}, b_{q+2}, \dots$ are all equal to zero; that is, $y_t$ depends on current and only a finite number of past values of $\varepsilon$: $y_t = \varepsilon_t + b_1 \varepsilon_{t-1} + \dots + b_q \varepsilon_{t-q}$. This is called a $q$-th order moving average process, MA($q$).
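
To see what the finite order implies, the sketch below computes the theoretical autocorrelations of an MA($q$) from $\gamma(\tau) = \sigma^2 \sum_{i=0}^{q-\tau} b_i b_{i+\tau}$ for $\tau \le q$ and $\gamma(\tau) = 0$ for $\tau > q$, so the autocorrelation function cuts off after lag $q$. NumPy and the helper name `ma_acf` are assumptions.

```python
import numpy as np

def ma_acf(b, max_lag):
    """Theoretical autocorrelations of y_t = eps_t + b_1 eps_{t-1} + ... + b_q eps_{t-q}.

    b holds (b_1, ..., b_q); b_0 = 1 is prepended. sigma^2 cancels in the ratio.
    """
    coeffs = np.concatenate(([1.0], np.asarray(b, dtype=float)))
    q = len(coeffs) - 1
    gamma = np.array([np.dot(coeffs[: q + 1 - k], coeffs[k:]) if k <= q else 0.0
                      for k in range(max_lag + 1)])
    return gamma / gamma[0]

print(ma_acf([0.5], 3))        # MA(1): rho(1) = 0.5 / (1 + 0.25) = 0.4, zero afterwards
print(ma_acf([0.4, 0.3], 5))   # MA(2): zero beyond lag 2
```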

Autoregressive Models (AR(p))

- In certain circumstances, the Wold form for $y_t$ can be "inverted" into a finite-order autoregressive form, i.e., $y_t = \varphi_1 y_{t-1} + \varphi_2 y_{t-2} + \dots + \varphi_p y_{t-p} + \varepsilon_t$. This is called a $p$-th order autoregressive process, AR($p$).
- Note that it has $p$ unknown coefficients: $\varphi_1, \dots, \varphi_p$.
- Note too that the AR($p$) model looks like a standard linear regression model with zero-mean, homoskedastic, and serially uncorrelated errors.
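
Because the AR($p$) model looks like a linear regression, it can be estimated by OLS of $y_t$ on its own lags. A minimal sketch with NumPy, a made-up AR(2) with $\varphi_1 = 0.5$, $\varphi_2 = 0.3$, and a hypothetical helper `fit_ar_ols`; an intercept is included, which is common in practice but is an addition to the zero-mean model above.

```python
import numpy as np

def fit_ar_ols(y, p):
    """Regress y_t on a constant and y_{t-1}, ..., y_{t-p} by OLS, using t = p+1, ..., T."""
    y = np.asarray(y, dtype=float)
    X = np.column_stack([np.ones(len(y) - p)] +
                        [y[p - j: len(y) - j] for j in range(1, p + 1)])   # column j = lag j
    coef, *_ = np.linalg.lstsq(X, y[p:], rcond=None)
    resid = y[p:] - X @ coef
    return coef, resid

rng = np.random.default_rng(4)
T = 5000
y = np.zeros(T)
eps = rng.normal(size=T)
for t in range(2, T):
    y[t] = 0.5 * y[t - 1] + 0.3 * y[t - 2] + eps[t]   # made-up stationary AR(2)

coef, resid = fit_ar_ols(y, 2)
print(coef.round(3))   # [constant, phi_1, phi_2], near [0, 0.5, 0.3]
```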

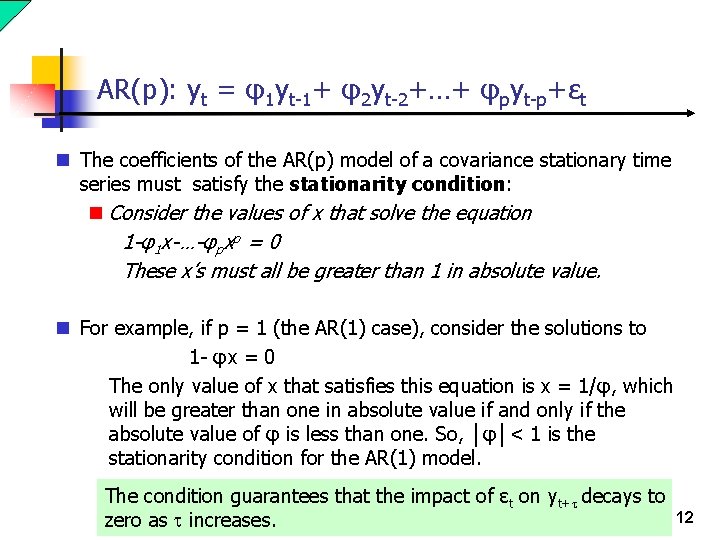

AR(p): $y_t = \varphi_1 y_{t-1} + \varphi_2 y_{t-2} + \dots + \varphi_p y_{t-p} + \varepsilon_t$

- The coefficients of the AR($p$) model of a covariance stationary time series must satisfy the stationarity condition:
  - Consider the values of $x$ that solve the equation $1 - \varphi_1 x - \dots - \varphi_p x^p = 0$. These $x$'s must all be greater than 1 in absolute value (in modulus, for complex roots).
- For example, if $p = 1$ (the AR(1) case), consider the solutions to $1 - \varphi x = 0$. The only value of $x$ that satisfies this equation is $x = 1/\varphi$, which is greater than one in absolute value if and only if $|\varphi| < 1$. So $|\varphi| < 1$ is the stationarity condition for the AR(1) model. The condition guarantees that the impact of $\varepsilon_t$ on $y_{t+\tau}$ decays to zero as $\tau$ increases.
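
The root condition can be checked numerically. The sketch below (NumPy; the helper name `ar_is_stationary` is hypothetical) passes the coefficients of $1 - \varphi_1 x - \dots - \varphi_p x^p$ to `np.roots`, which expects them ordered from the highest power down, and tests whether every root has modulus greater than one.

```python
import numpy as np

def ar_is_stationary(phi):
    """True if every root of 1 - phi_1 x - ... - phi_p x^p = 0 lies outside the unit circle."""
    phi = np.asarray(phi, dtype=float)
    # np.roots expects coefficients from the highest power down: -phi_p, ..., -phi_1, 1
    poly = np.concatenate((-phi[::-1], [1.0]))
    roots = np.roots(poly)
    return bool(np.all(np.abs(roots) > 1.0)), roots

for phi in ([0.9], [1.0], [0.5, 0.3], [0.5, 0.6]):
    ok, roots = ar_is_stationary(phi)
    print(phi, ok, np.abs(roots).round(3))
```

The unit-root case $\varphi = 1$ (a random walk) fails the check because its root lies exactly on the unit circle.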

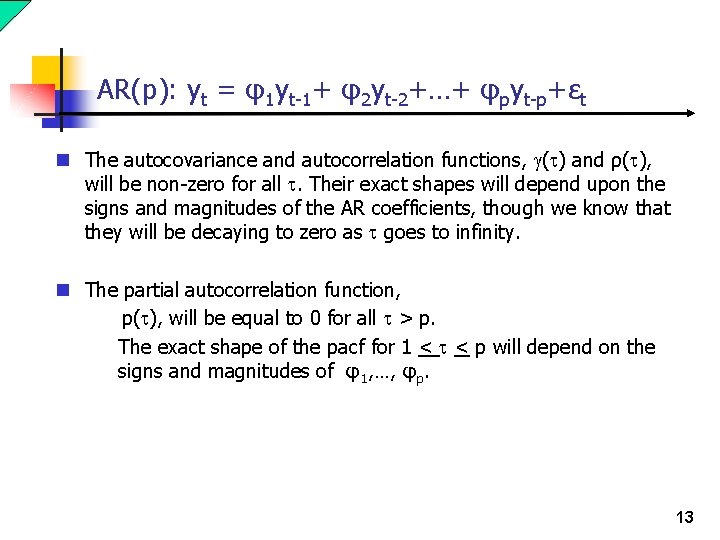

AR(p): $y_t = \varphi_1 y_{t-1} + \varphi_2 y_{t-2} + \dots + \varphi_p y_{t-p} + \varepsilon_t$

- The autocovariance and autocorrelation functions, $\gamma(\tau)$ and $\rho(\tau)$, will be non-zero for all $\tau$. Their exact shapes depend on the signs and magnitudes of the AR coefficients, but we know that they decay to zero as $\tau$ goes to infinity.
- The partial autocorrelation function, $p(\tau)$, will be equal to 0 for all $\tau > p$. The exact shape of the pacf for $1 \le \tau \le p$ depends on the signs and magnitudes of $\varphi_1, \dots, \varphi_p$.
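
These patterns show up in simulated data. A sketch assuming NumPy and a made-up AR(1) with $\varphi = 0.7$: the sample autocorrelations decay roughly like $0.7^\tau$, while the sample partial autocorrelation, computed here as the last coefficient in an OLS regression of $y_t$ on $y_{t-1}, \dots, y_{t-\tau}$ (one standard way to define it), is close to zero beyond lag 1. The helper names are hypothetical.

```python
import numpy as np

def sample_acf(y, max_lag):
    """Sample autocorrelations rho_hat(tau), tau = 0..max_lag."""
    y = np.asarray(y, dtype=float) - np.mean(y)
    denom = np.dot(y, y)
    return np.array([np.dot(y[tau:], y[: len(y) - tau]) / denom for tau in range(max_lag + 1)])

def sample_pacf(y, max_lag):
    """p_hat(tau): coefficient on y_{t-tau} when regressing y_t on a constant and y_{t-1..t-tau}."""
    y = np.asarray(y, dtype=float)
    out = [1.0]
    for k in range(1, max_lag + 1):
        X = np.column_stack([np.ones(len(y) - k)] + [y[k - j: len(y) - j] for j in range(1, k + 1)])
        coef, *_ = np.linalg.lstsq(X, y[k:], rcond=None)
        out.append(coef[-1])                    # coefficient on the longest lag
    return np.array(out)

rng = np.random.default_rng(5)
phi, n = 0.7, 20000
y = np.zeros(n)
eps = rng.normal(size=n)
for t in range(1, n):
    y[t] = phi * y[t - 1] + eps[t]

print(sample_acf(y, 5).round(2))    # decays geometrically
print(sample_pacf(y, 5).round(2))   # about 0.7 at lag 1, near zero afterwards
```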

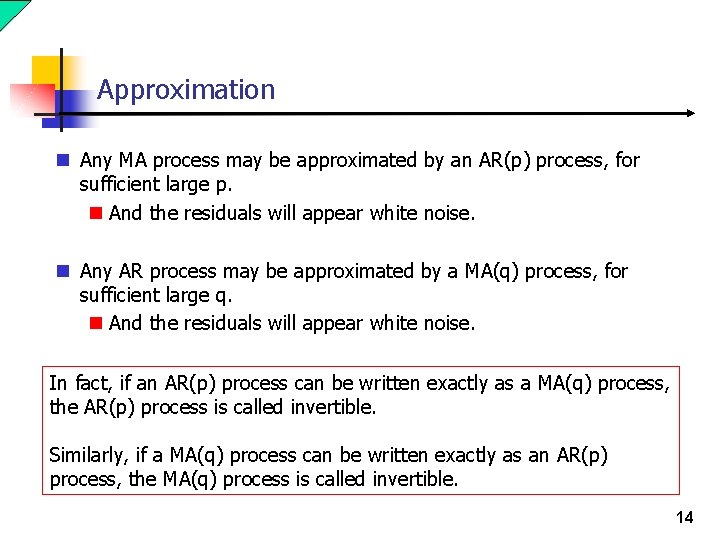

Approximation

- Any MA process may be approximated by an AR($p$) process, for sufficiently large $p$, and the residuals will appear to be white noise.
- Any AR process may be approximated by an MA($q$) process, for sufficiently large $q$, and the residuals will appear to be white noise.
- In fact, a covariance stationary AR($p$) process can be written exactly as an MA($\infty$) process. Similarly, if an MA($q$) process can be written exactly as an AR($\infty$) process, the MA($q$) process is called invertible.
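
For an invertible MA(1), $y_t = \varepsilon_t + \theta \varepsilon_{t-1}$ with $|\theta| < 1$, the AR($\infty$) form is $\varepsilon_t = \sum_{i=0}^{\infty} (-\theta)^i y_{t-i}$. The sketch below (NumPy; $\theta = 0.5$ and the truncation order $K = 40$ are made up) truncates this sum and recovers the shocks with an error of order $\theta^K$, which is why a long enough AR can approximate an invertible MA.

```python
import numpy as np

rng = np.random.default_rng(6)
theta, n, K = 0.5, 300, 40         # |theta| < 1, so the MA(1) is invertible; K = AR truncation order
eps = rng.normal(size=n + 1)
y = eps[1:] + theta * eps[:-1]     # MA(1): y_t = eps_t + theta * eps_{t-1}

# AR(infinity) form: eps_t = sum_{i>=0} (-theta)**i * y_{t-i}; keep the first K terms
weights = (-theta) ** np.arange(K)
eps_hat = np.convolve(y, weights, mode="valid")   # shocks for y indices K-1, ..., n-1
print(np.max(np.abs(eps_hat - eps[K:])))          # truncation error of order theta**K
```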

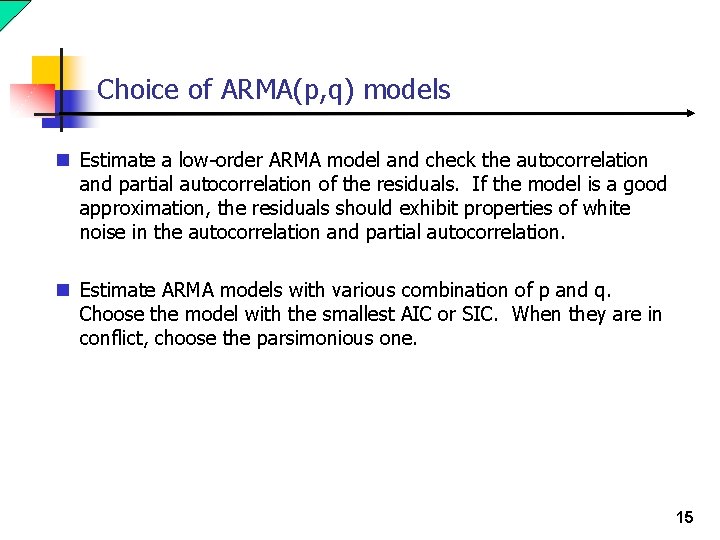

Choice of ARMA(p, q) models

- Estimate a low-order ARMA model and check the autocorrelation and partial autocorrelation functions of the residuals. If the model is a good approximation, the residual autocorrelations and partial autocorrelations should look like those of white noise.
- Estimate ARMA models with various combinations of $p$ and $q$, and choose the model with the smallest AIC or SIC. When the two criteria conflict, choose the more parsimonious model.
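
A minimal sketch of order selection for pure AR models, assuming NumPy and the log forms $\text{AIC} = \log(\text{SSR}/n) + 2k/n$ and $\text{SIC} = \log(\text{SSR}/n) + k \log(n)/n$, which are monotone transformations of $e^{2k/n}\,\text{SSR}/n$ and $n^{k/n}\,\text{SSR}/n$ and so rank models identically. All orders are fit on the same effective sample so the criteria are comparable; the helper names and the simulated AR(2) are hypothetical. Because the SIC penalty is heavier whenever $\log n > 2$, on a common sample it cannot pick a larger order than the AIC.

```python
import numpy as np

def ar_ssr(y, p, max_p):
    """SSR of an OLS AR(p) with intercept, fit on the common sample t = max_p+1, ..., T."""
    y = np.asarray(y, dtype=float)
    T = len(y)
    X = np.column_stack([np.ones(T - max_p)] + [y[max_p - j: T - j] for j in range(1, p + 1)])
    target = y[max_p:]
    coef, *_ = np.linalg.lstsq(X, target, rcond=None)
    return np.sum((target - X @ coef) ** 2)

def select_ar_order(y, max_p):
    """Return (order chosen by AIC, order chosen by SIC) among p = 0..max_p."""
    n = len(y) - max_p                      # common effective sample size
    aic, sic = [], []
    for p in range(max_p + 1):
        k = p + 1                           # intercept + p AR coefficients
        log_s2 = np.log(ar_ssr(y, p, max_p) / n)
        aic.append(log_s2 + 2 * k / n)
        sic.append(log_s2 + k * np.log(n) / n)
    return int(np.argmin(aic)), int(np.argmin(sic))

rng = np.random.default_rng(4)
T = 2000
y = np.zeros(T)
eps = rng.normal(size=T)
for t in range(2, T):
    y[t] = 0.5 * y[t - 1] + 0.3 * y[t - 2] + eps[t]     # made-up AR(2)

p_aic, p_sic = select_ar_order(y, max_p=6)
print("AIC picks p =", p_aic, "; SIC picks p =", p_sic)
```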

Has the probability structure remained the same throughout the sample?

- Check parameter constancy if we are suspicious.
- Allow for breaks if they are known.
- If we know breaks exist but do not know exactly where the break point is, try to identify the breaks.

Assessing Model Stability Using Recursive Estimation and Recursive Residuals

- Forecast: If the model's parameters are different during the forecast period than they were during the sample period, then the model we estimated will not be very useful, regardless of how well it was estimated.
- Model: If the model's parameters were unstable over the sample period, then the model was not even a good representation of how the series evolved over the sample period.

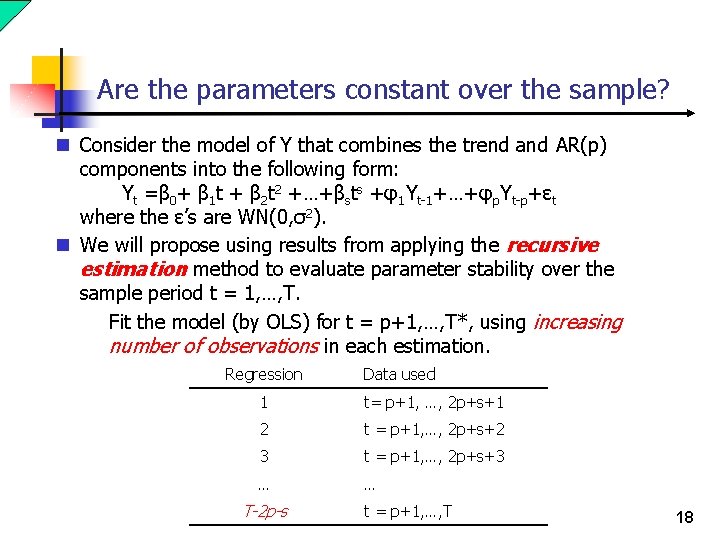

Are the parameters constant over the sample?

- Consider the model of $Y$ that combines the trend and AR($p$) components into the following form: $Y_t = \beta_0 + \beta_1 t + \beta_2 t^2 + \dots + \beta_s t^s + \varphi_1 Y_{t-1} + \dots + \varphi_p Y_{t-p} + \varepsilon_t$, where the $\varepsilon$'s are $WN(0, \sigma^2)$.
- We propose using the recursive estimation method to evaluate parameter stability over the sample period $t = 1, \dots, T$: fit the model by OLS for $t = p+1, \dots, T^*$, using an increasing number of observations in each estimation.

| Regression | Data used |
|---|---|
| 1 | $t = p+1, \dots, 2p+s+1$ |
| 2 | $t = p+1, \dots, 2p+s+2$ |
| 3 | $t = p+1, \dots, 2p+s+3$ |
| … | … |
| $T-2p-s$ | $t = p+1, \dots, T$ |

Recursive estimation

- The recursive estimation yields parameter estimates for each $T^*$: $\hat{\beta}_{i,T^*}$ and $\hat{\varphi}_{j,T^*}$ for $i = 0, \dots, s$, $j = 1, \dots, p$, and $T^* = 2p+s+1, \dots, T$.
- If the model is stable over time, then as $T^*$ increases the recursive parameter estimates should stabilize at some level.
- A model parameter is unstable if its estimates do not appear to stabilize as $T^*$ increases, or if there appears to be a sharp break in the behavior of the sequence before and after some $T^*$.
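
A sketch of recursive estimation for an AR(1) with an intercept ($p = 1$, no trend terms), assuming NumPy and simulated data; the helpers `recursive_estimates` and `simulate_ar1`, the break date, and the coefficient values are all hypothetical. In the stable series the recursive AR coefficient settles down; in the series whose coefficient jumps from 0.2 to 0.8 halfway through, the estimates drift after the break. Here the minimum sample is simply the number of regressors, so the first fit is exact.

```python
import numpy as np

def recursive_estimates(y, X, k_min):
    """Row r holds the OLS coefficients fit on the first k_min + r observations."""
    return np.array([np.linalg.lstsq(X[:m], y[:m], rcond=None)[0]
                     for m in range(k_min, len(y) + 1)])

def simulate_ar1(phis, rng):
    """AR(1) whose coefficient at time t is phis[t] (a break if phis changes)."""
    y = np.zeros(len(phis))
    eps = rng.normal(size=len(phis))
    for t in range(1, len(phis)):
        y[t] = phis[t] * y[t - 1] + eps[t]
    return y

rng = np.random.default_rng(7)
n = 1000
phi_stable = np.full(n, 0.5)
phi_break = np.where(np.arange(n) < n // 2, 0.2, 0.8)   # hypothetical break at t = n/2

results = {}
for name, phis in [("stable", phi_stable), ("break", phi_break)]:
    y = simulate_ar1(phis, rng)
    X = np.column_stack([np.ones(n - 1), y[:-1]])        # regressors: constant, y_{t-1}
    results[name] = recursive_estimates(y[1:], X, k_min=X.shape[1])

# Column 1 is the recursive AR coefficient; plotting it against T* reveals instability
print(results["stable"][[50, 400, -1], 1].round(2))
print(results["break"][[50, 400, -1], 1].round(2))
```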

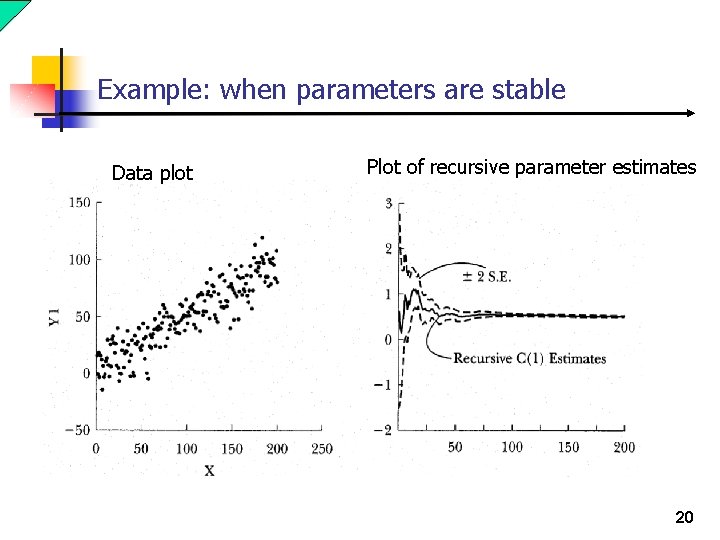

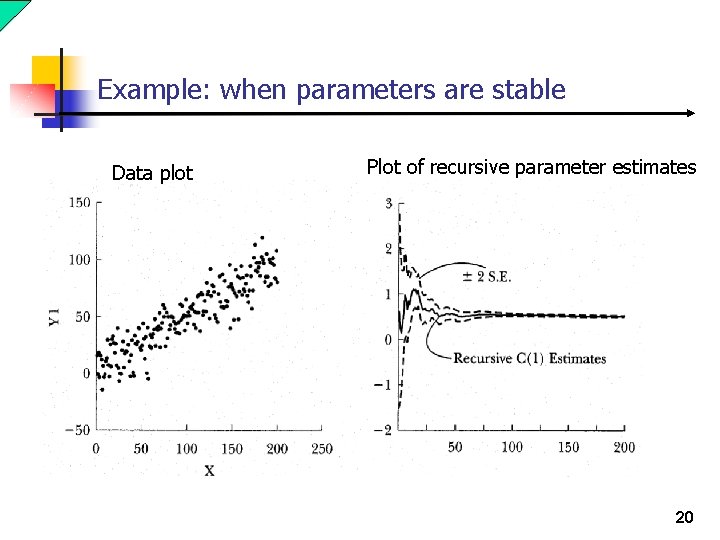

Example: when parameters are stable

(Figure panels: data plot; plot of recursive parameter estimates.)

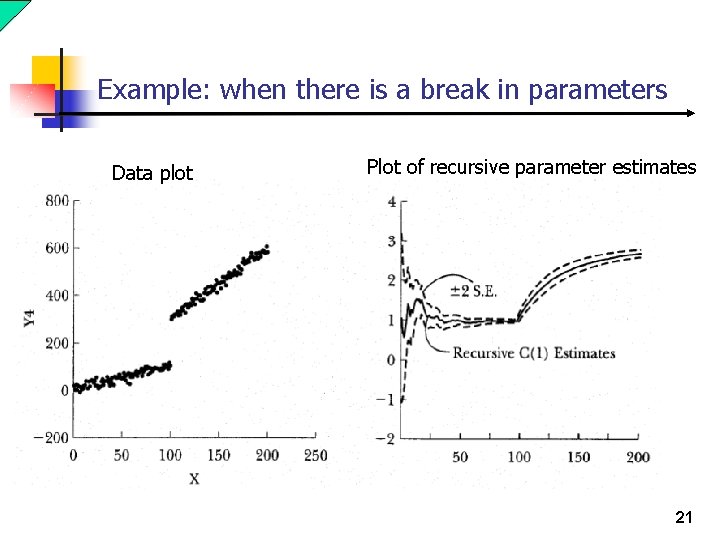

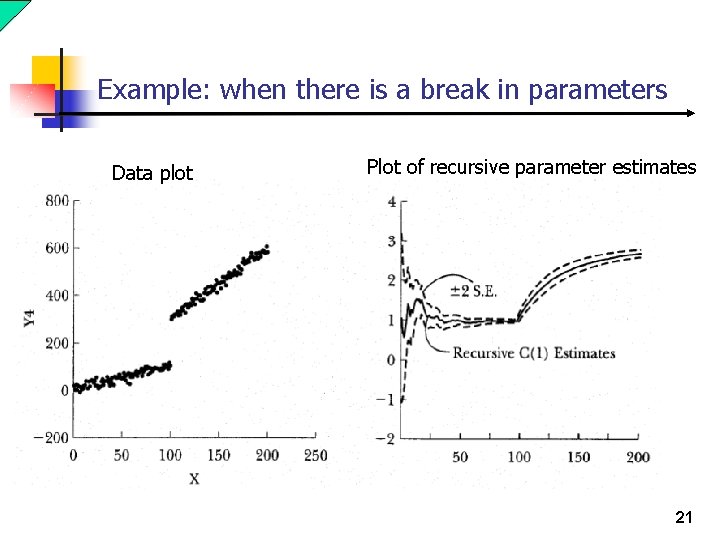

Example: when there is a break in parameters

(Figure panels: data plot; plot of recursive parameter estimates.)

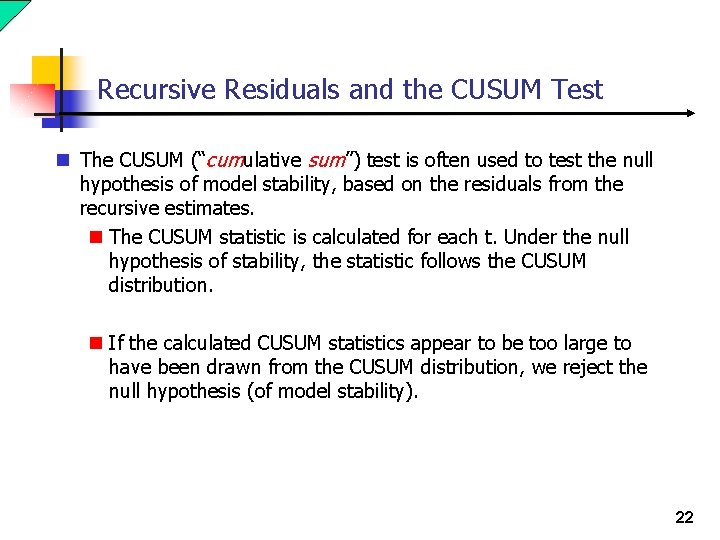

Recursive Residuals and the CUSUM Test

- The CUSUM ("cumulative sum") test is often used to test the null hypothesis of model stability, based on the residuals from the recursive estimates.
- The CUSUM statistic is calculated for each $t$. Under the null hypothesis of stability, the statistic follows the CUSUM distribution.
- If the calculated CUSUM statistics appear too large to have been drawn from the CUSUM distribution, we reject the null hypothesis of model stability.

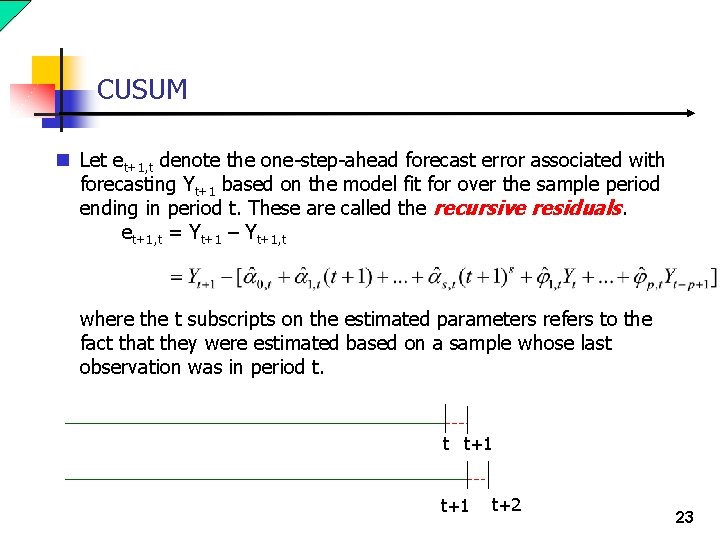

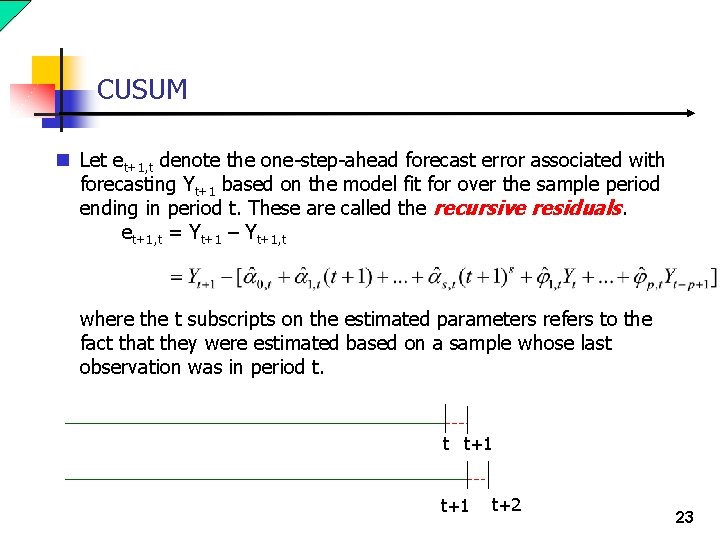

CUSUM

- Let $e_{t+1,t}$ denote the one-step-ahead forecast error associated with forecasting $Y_{t+1}$ based on the model fit over the sample period ending in period $t$. These are called the recursive residuals: $e_{t+1,t} = Y_{t+1} - \hat{Y}_{t+1,t}$, with $\hat{Y}_{t+1,t} = \hat{\beta}_{0,t} + \hat{\beta}_{1,t}(t+1) + \dots + \hat{\beta}_{s,t}(t+1)^s + \hat{\varphi}_{1,t} Y_t + \dots + \hat{\varphi}_{p,t} Y_{t+1-p}$, where the $t$ subscripts on the estimated parameters refer to the fact that they were estimated on a sample whose last observation was in period $t$.

CUSUM

- Let $\sigma_{1,t}$ denote the standard error of the one-step-ahead forecast of $Y$ formed at time $t$, i.e., $\sigma_{1,t} = \sqrt{\operatorname{var}(e_{t+1,t})}$.
- Define the standardized recursive residuals $w_{t+1,t} = e_{t+1,t} / \sigma_{1,t}$.
- Fact: under our maintained assumptions, including model homogeneity, $w_{t+1,t} \sim \text{i.i.d. } N(0, 1)$. Note that there will be one standardized recursive residual for each estimation sample.

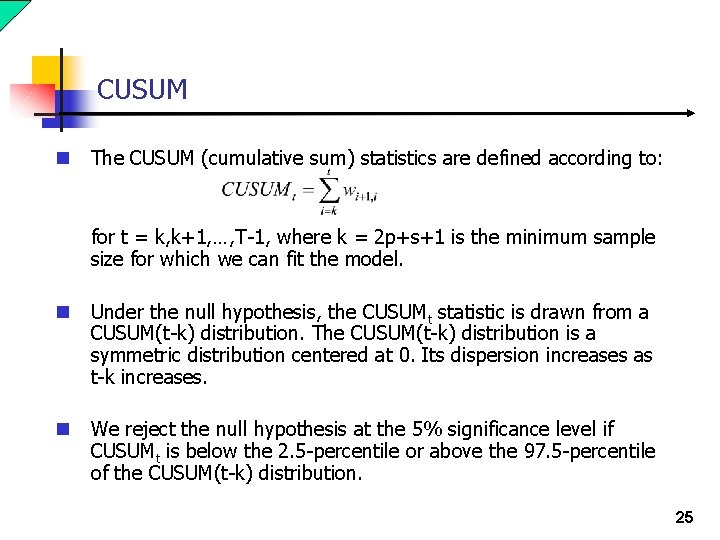

CUSUM

- The CUSUM (cumulative sum) statistics are defined as $\text{CUSUM}_t = \sum_{\tau=k}^{t} w_{\tau+1,\tau}$ for $t = k, k+1, \dots, T-1$, where $k = 2p+s+1$ is the minimum sample size for which we can fit the model.
- Under the null hypothesis, the $\text{CUSUM}_t$ statistic is drawn from a CUSUM($t-k$) distribution. The CUSUM($t-k$) distribution is symmetric and centered at 0, and its dispersion increases as $t-k$ increases.
- We reject the null hypothesis at the 5% significance level if $\text{CUSUM}_t$ is below the 2.5th percentile or above the 97.5th percentile of the CUSUM($t-k$) distribution.
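
A minimal sketch of the CUSUM computation for a linear-trend regression, assuming NumPy and simulated data (the helpers and the break at $t = 100$ are hypothetical). It uses the standard result that the recursive forecast error has variance $\sigma^2 (1 + x_{t+1}'(X_t'X_t)^{-1}x_{t+1})$, and it replaces $\sigma$ by the full-sample OLS estimate, which is an assumption of this sketch. Since a sum of $n$ i.i.d. $N(0, 1)$ terms is $N(0, n)$, the pointwise 2.5th and 97.5th percentiles are $\pm 1.96\sqrt{n}$; the classic Brown, Durbin, and Evans version of the test uses different, straight-line bounds.

```python
import numpy as np

def standardized_recursive_residuals(y, X):
    """w_{t+1,t} for y = X beta + eps: fit on rows 0..t-1, forecast row t, standardize.

    sigma is replaced by the full-sample OLS estimate (an assumption of this sketch).
    """
    n, k = X.shape
    beta_full, *_ = np.linalg.lstsq(X, y, rcond=None)
    sigma = np.sqrt(np.sum((y - X @ beta_full) ** 2) / (n - k))
    w = []
    for t in range(k, n):
        Xt, yt = X[:t], y[:t]
        beta_t, *_ = np.linalg.lstsq(Xt, yt, rcond=None)
        x_next = X[t]
        e = y[t] - x_next @ beta_t                        # recursive residual
        scale = sigma * np.sqrt(1.0 + x_next @ np.linalg.solve(Xt.T @ Xt, x_next))
        w.append(e / scale)
    return np.array(w)

def cusum_with_bands(w, z=1.96):
    """Running sum of w with pointwise +/- z * sqrt(number of terms) bands."""
    cusum = np.cumsum(w)
    band = z * np.sqrt(np.arange(1, len(w) + 1))
    return cusum, band

rng = np.random.default_rng(8)
n = 200
t = np.arange(n, dtype=float)
X = np.column_stack([np.ones(n), t])                     # linear trend model: regressors 1 and t
y_stable = 1.0 + 0.1 * t + rng.normal(size=n)
y_break = 1.0 + 0.1 * t + 0.2 * np.maximum(t - 100, 0) + rng.normal(size=n)  # slope rises at t = 100

for name, y in [("stable", y_stable), ("break", y_break)]:
    cusum, band = cusum_with_bands(standardized_recursive_residuals(y, X))
    print(name, "last CUSUM:", cusum[-1].round(2), "band:", band[-1].round(2))
```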

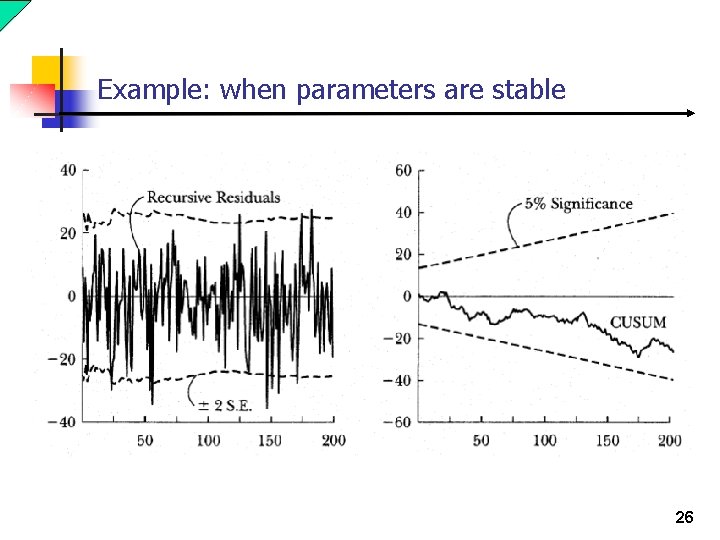

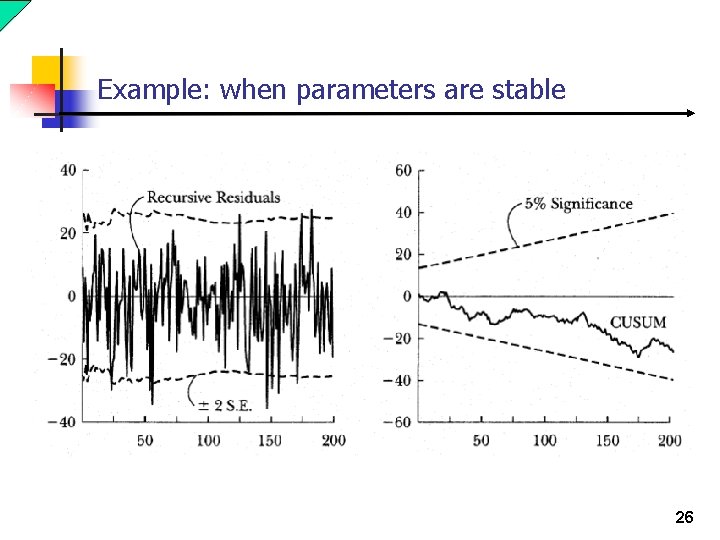

Example: when parameters are stable

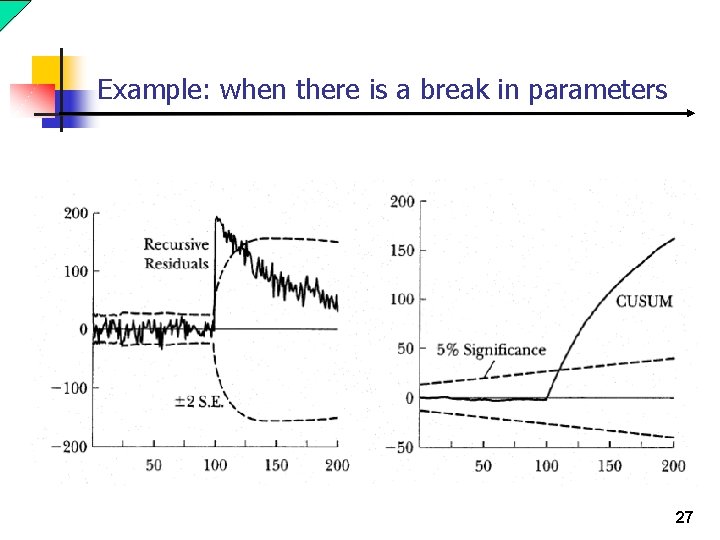

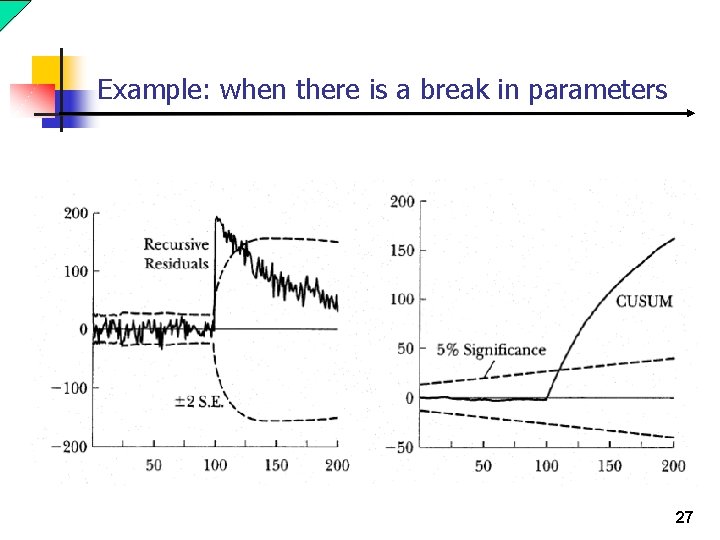

Example: when there is a break in parameters

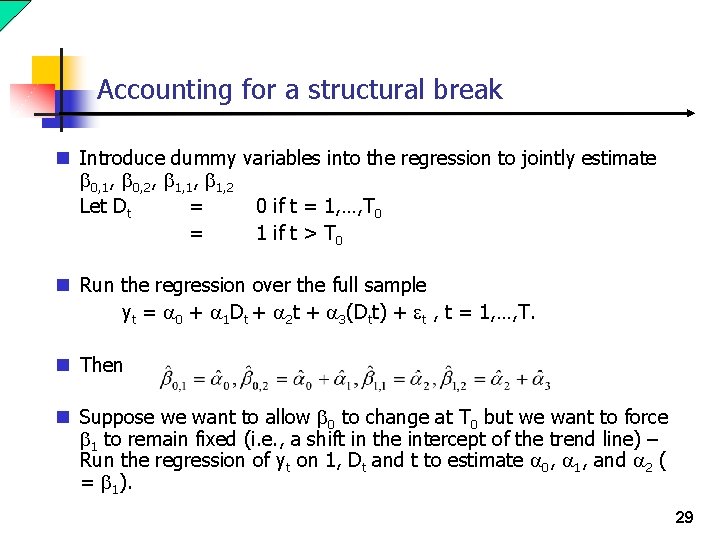

Accounting for a structural break

- Suppose it is known that there is a structural break in the trend of a series in 1998, due to the Asian Financial Crisis.

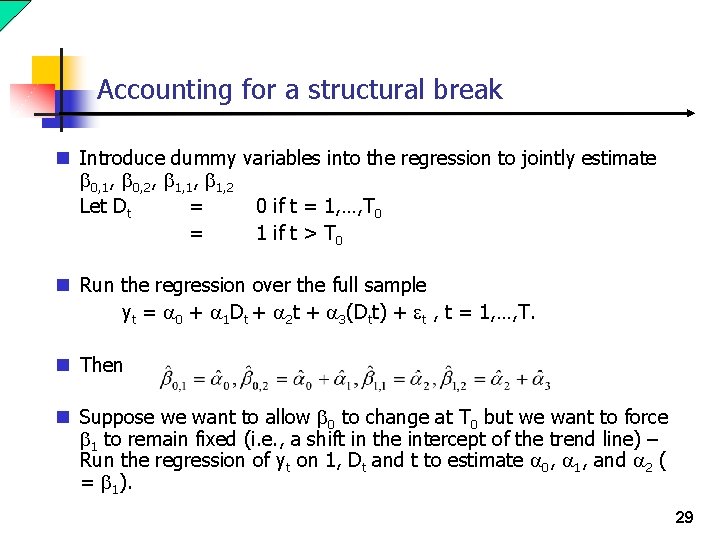

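Accounting for a structural break

- Suppose the trend is $T_t = \beta_{0,1} + \beta_{1,1} t$ for $t \le T_0$ and $T_t = \beta_{0,2} + \beta_{1,2} t$ for $t > T_0$. Introduce dummy variables into the regression to jointly estimate $\beta_{0,1}$, $\beta_{0,2}$, $\beta_{1,1}$, and $\beta_{1,2}$. Let $D_t = 0$ if $t = 1, \dots, T_0$, and $D_t = 1$ if $t > T_0$.
- Run the regression over the full sample: $y_t = \alpha_0 + \alpha_1 D_t + \alpha_2 t + \alpha_3 (D_t t) + \varepsilon_t$, $t = 1, \dots, T$.
- Then $\hat{\beta}_{0,1} = \hat{\alpha}_0$, $\hat{\beta}_{0,2} = \hat{\alpha}_0 + \hat{\alpha}_1$, $\hat{\beta}_{1,1} = \hat{\alpha}_2$, and $\hat{\beta}_{1,2} = \hat{\alpha}_2 + \hat{\alpha}_3$.
- Suppose we want to allow $\beta_0$ to change at $T_0$ but force $\beta_1$ to remain fixed (i.e., a shift in the intercept of the trend line). Then run the regression of $y_t$ on 1, $D_t$, and $t$ to estimate $\alpha_0$, $\alpha_1$, and $\alpha_2$ ($= \beta_1$).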
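
The dummy-variable regression can be sketched with NumPy on a noise-free, made-up broken trend, so the estimates are exact. Writing the full-sample regression as $y_t = \alpha_0 + \alpha_1 D_t + \alpha_2 t + \alpha_3 (D_t t) + \varepsilon_t$ (the coefficient names are an assumption of this sketch), the pre-break intercept and slope are $\alpha_0$ and $\alpha_2$, and the post-break ones are $\alpha_0 + \alpha_1$ and $\alpha_2 + \alpha_3$.

```python
import numpy as np

T, T0 = 60, 30
t = np.arange(1, T + 1, dtype=float)
D = (t > T0).astype(float)                 # D_t = 0 for t <= T0, 1 for t > T0

# Made-up trend with a break (no noise, so OLS recovers it exactly)
y = np.where(t <= T0, 2.0 + 0.5 * t, 8.0 + 0.2 * t)

X = np.column_stack([np.ones(T), D, t, D * t])
a0, a1, a2, a3 = np.linalg.lstsq(X, y, rcond=None)[0]
beta_01, beta_02 = a0, a0 + a1             # intercepts before / after the break
beta_11, beta_12 = a2, a2 + a3             # slopes before / after the break
print(round(beta_01, 6), round(beta_02, 6), round(beta_11, 6), round(beta_12, 6))
```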

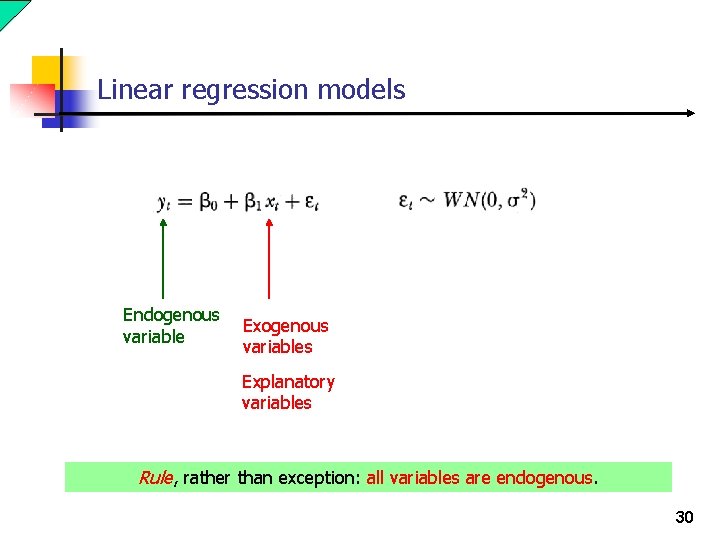

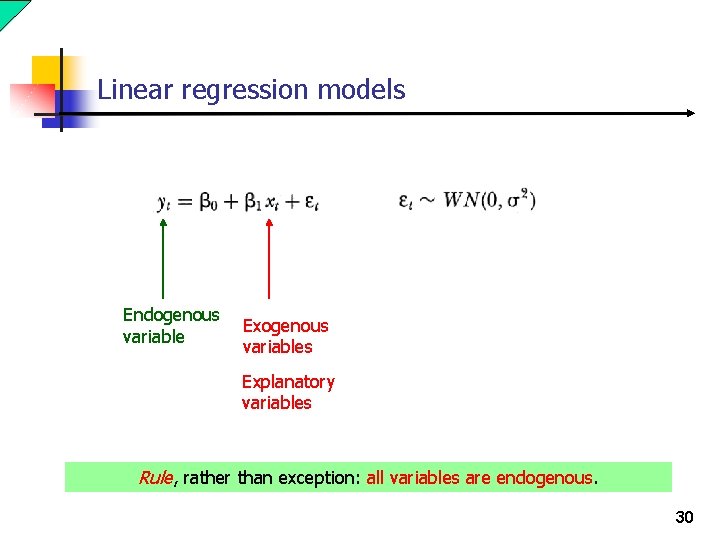

Linear regression models

(Figure labels: endogenous variable; exogenous variables, i.e., explanatory variables.)

The rule, rather than the exception: all variables are endogenous.

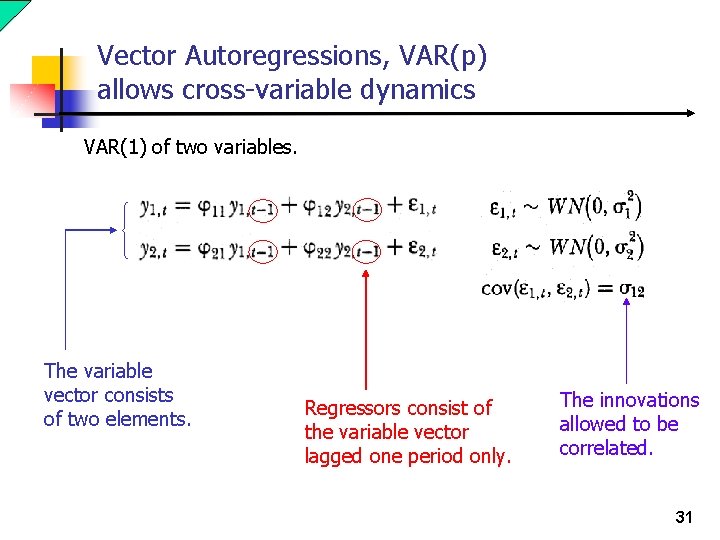

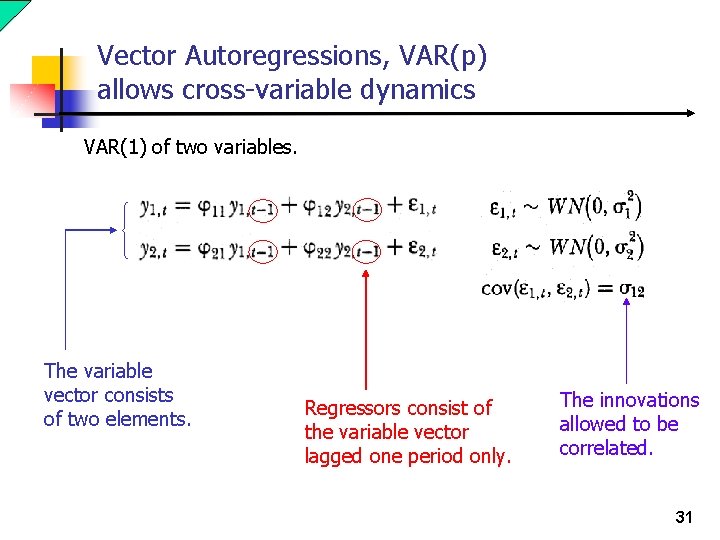

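Vector Autoregressions, VAR(p)

- A VAR($p$) allows cross-variable dynamics.
- VAR(1) of two variables:

$$
\begin{aligned}
y_{1,t} &= \varphi_{11} y_{1,t-1} + \varphi_{12} y_{2,t-1} + \varepsilon_{1,t} \\
y_{2,t} &= \varphi_{21} y_{1,t-1} + \varphi_{22} y_{2,t-1} + \varepsilon_{2,t}
\end{aligned}
$$

- The variable vector consists of two elements.
- The regressors consist of the variable vector lagged one period only.
- The innovations are allowed to be correlated.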

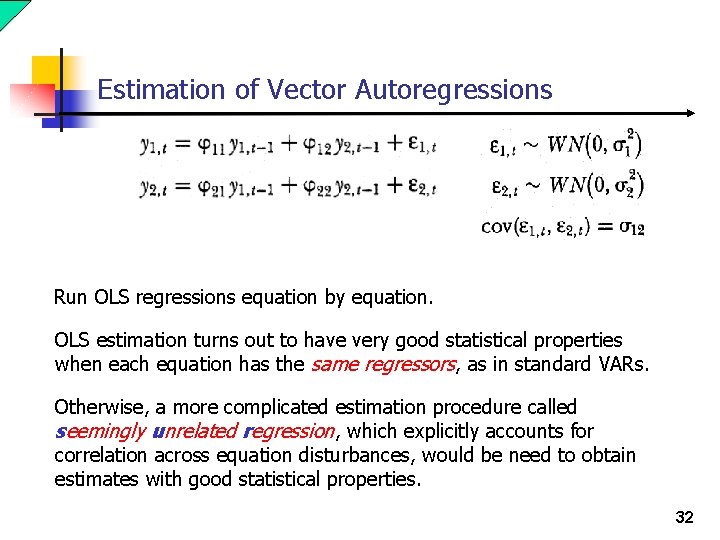

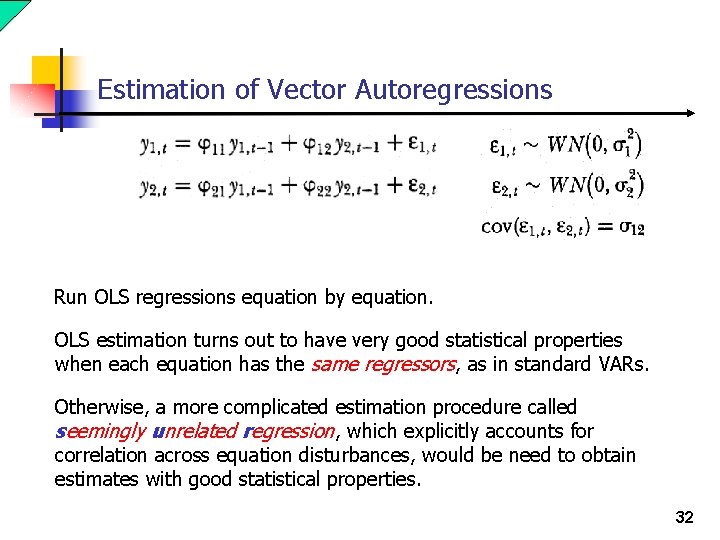

Estimation of Vector Autoregressions

- Run OLS regressions equation by equation.
- OLS estimation turns out to have very good statistical properties when each equation has the same regressors, as in standard VARs. Otherwise, a more complicated estimation procedure called seemingly unrelated regression, which explicitly accounts for correlation across equation disturbances, would be needed to obtain estimates with good statistical properties.
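
A sketch of equation-by-equation OLS for a bivariate VAR(1) with intercepts, assuming NumPy and a simulated system with made-up coefficients and correlated innovations. Since both equations share the same regressors, estimating them one at a time gives the same coefficients as a single multivariate least-squares call.

```python
import numpy as np

rng = np.random.default_rng(9)
Phi = np.array([[0.5, 0.1],
                [0.2, 0.3]])                     # hypothetical VAR(1) coefficients
Sigma = np.array([[1.0, 0.5],
                  [0.5, 1.0]])                   # correlated innovations
n = 5000
eps = rng.multivariate_normal(np.zeros(2), Sigma, size=n)
y = np.zeros((n, 2))
for t in range(1, n):
    y[t] = Phi @ y[t - 1] + eps[t]

# Common regressors in every equation: constant, y1_{t-1}, y2_{t-1}
X = np.column_stack([np.ones(n - 1), y[:-1]])
B = np.vstack([np.linalg.lstsq(X, y[1:, i], rcond=None)[0] for i in range(2)])  # one row per equation
B_joint = np.linalg.lstsq(X, y[1:], rcond=None)[0].T                             # all equations at once

Phi_hat = B[:, 1:]
resid = y[1:] - X @ B.T
Sigma_hat = resid.T @ resid / (len(resid) - X.shape[1])   # innovation covariance estimate
print(Phi_hat.round(3))
print(Sigma_hat.round(3))
```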

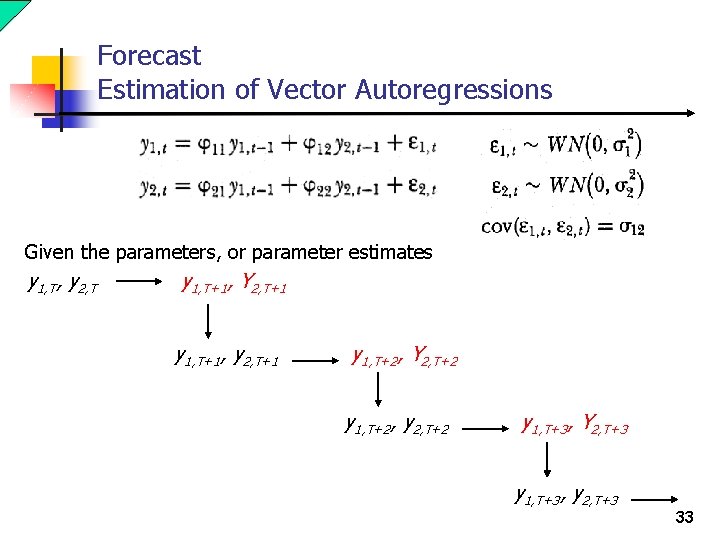

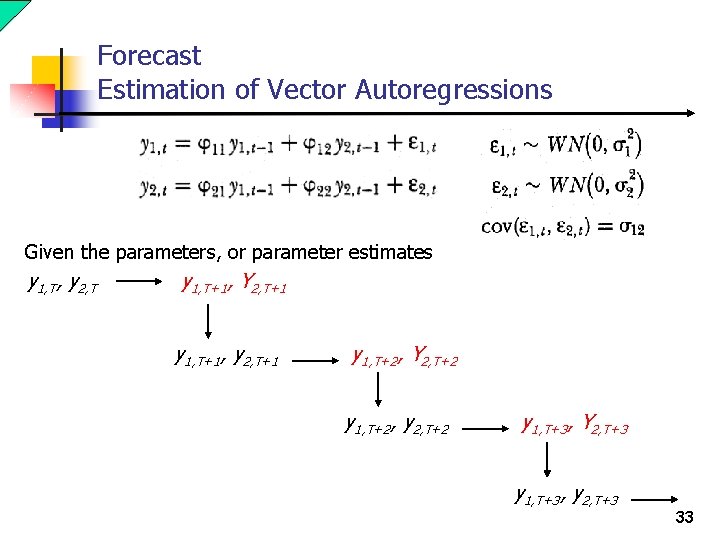

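Forecasting with Vector Autoregressions

Given the parameters (or parameter estimates), forecasts are built up one step at a time:

1. From the last observations $y_{1,T}, y_{2,T}$, compute $\hat{y}_{1,T+1}, \hat{y}_{2,T+1}$.
2. Use $\hat{y}_{1,T+1}, \hat{y}_{2,T+1}$ in place of the unknown $y_{1,T+1}, y_{2,T+1}$ to compute $\hat{y}_{1,T+2}, \hat{y}_{2,T+2}$.
3. Use $\hat{y}_{1,T+2}, \hat{y}_{2,T+2}$ to compute $\hat{y}_{1,T+3}, \hat{y}_{2,T+3}$, and so on.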
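
This chain of forecasts takes only a few lines. A minimal sketch for a VAR(1) with intercept vector `c` and coefficient matrix `Phi` (both hypothetical values, as is the helper `var1_forecast`), assuming NumPy: each step plugs the previous forecast in place of the unknown future value, and the path converges to the unconditional mean $(I - \Phi)^{-1} c$ when the VAR is stationary.

```python
import numpy as np

def var1_forecast(c, Phi, y_T, horizon):
    """Iterate y_hat_{T+h} = c + Phi @ y_hat_{T+h-1}, starting from the last observation y_T."""
    path = []
    y_prev = np.asarray(y_T, dtype=float)
    for _ in range(horizon):
        y_prev = c + Phi @ y_prev      # the forecast replaces the unknown future value
        path.append(y_prev)
    return np.array(path)

c = np.array([0.1, -0.2])               # hypothetical (estimated) intercepts
Phi = np.array([[0.5, 0.1],
                [0.2, 0.3]])           # hypothetical (estimated) VAR(1) coefficients
print(var1_forecast(c, Phi, [1.0, 2.0], horizon=3))
```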

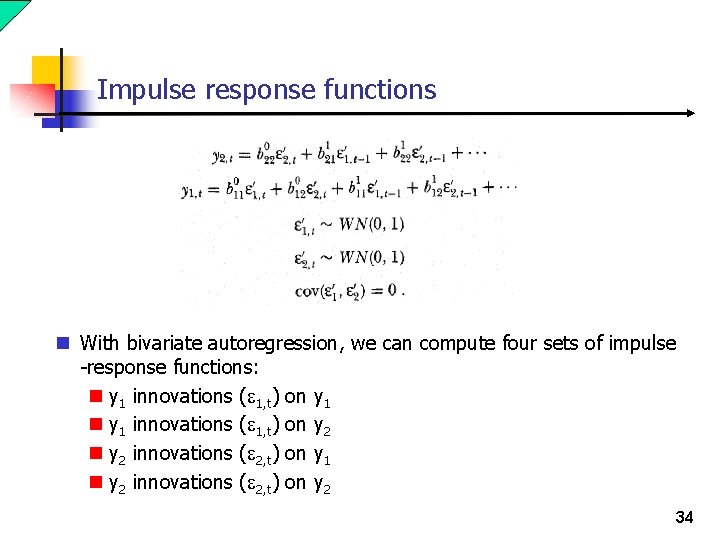

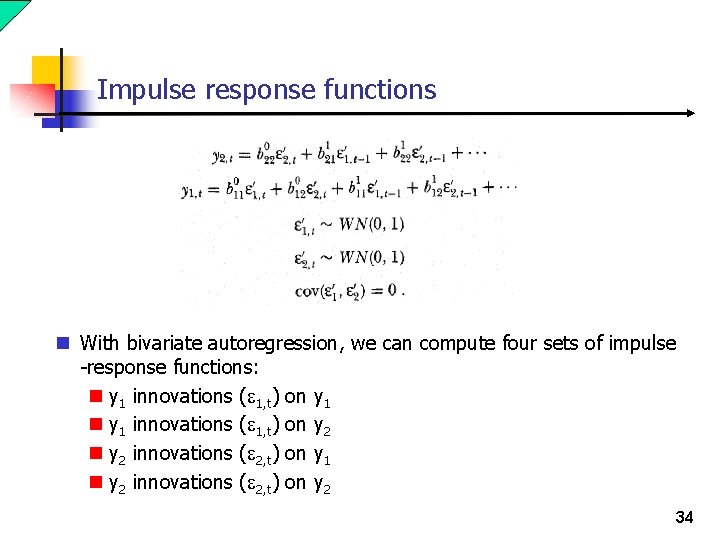

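Impulse response functions

- With a bivariate autoregression, we can compute four sets of impulse-response functions:
  - $y_1$ innovations ($\varepsilon_{1,t}$) on $y_1$
  - $y_1$ innovations ($\varepsilon_{1,t}$) on $y_2$
  - $y_2$ innovations ($\varepsilon_{2,t}$) on $y_1$
  - $y_2$ innovations ($\varepsilon_{2,t}$) on $y_2$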
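
Impulse responses need a way to separate correlated innovations. A common choice, and an assumption of this sketch, is a Cholesky factorization $\Sigma = PP'$ with $y_1$ ordered first, so $y_1$ does not respond on impact to the $y_2$ shock. For a VAR(1) the response after $h$ steps is $\Phi^h P$; the matrices and the helper `var1_irf` below are hypothetical (NumPy).

```python
import numpy as np

def var1_irf(Phi, Sigma, horizon):
    """irf[h, i, j]: response of y_i, h steps after a one-standard-deviation
    orthogonalized shock j (Cholesky ordering: y1 first, then y2)."""
    P = np.linalg.cholesky(Sigma)           # lower triangular, P @ P.T = Sigma
    k = Phi.shape[0]
    irf = np.empty((horizon + 1, k, k))
    Phi_h = np.eye(k)
    for h in range(horizon + 1):
        irf[h] = Phi_h @ P                  # MA weight Phi**h times the impact matrix
        Phi_h = Phi @ Phi_h
    return irf

Phi = np.array([[0.5, 0.1],
                [0.2, 0.3]])
Sigma = np.array([[1.0, 0.5],
                  [0.5, 1.0]])
irf = var1_irf(Phi, Sigma, horizon=10)
# The four sets: irf[:, 0, 0], irf[:, 1, 0], irf[:, 0, 1], irf[:, 1, 1]
print(irf[:4].round(3))
```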

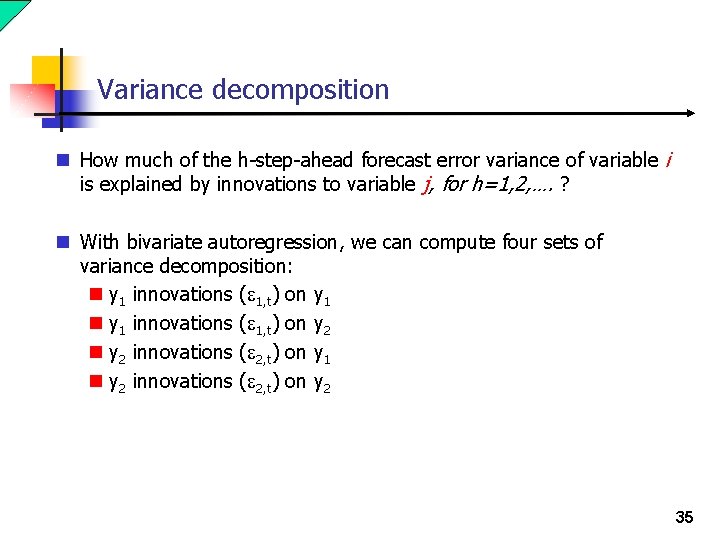

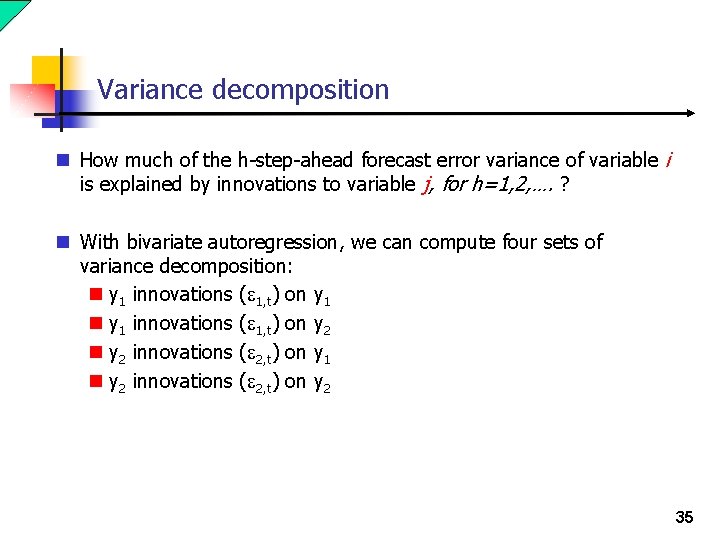

Variance decomposition

- How much of the $h$-step-ahead forecast error variance of variable $i$ is explained by innovations to variable $j$, for $h = 1, 2, \dots$?
- With a bivariate autoregression, we can compute four sets of variance decompositions:
  - $y_1$ innovations ($\varepsilon_{1,t}$) on $y_1$
  - $y_1$ innovations ($\varepsilon_{1,t}$) on $y_2$
  - $y_2$ innovations ($\varepsilon_{2,t}$) on $y_1$
  - $y_2$ innovations ($\varepsilon_{2,t}$) on $y_2$
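
Under a Cholesky identification (an assumption, with $y_1$ ordered first), the $h$-step-ahead forecast error of a VAR(1) is $\sum_{s=0}^{h-1} \Phi^s P u_{T+h-s}$ with orthonormal shocks $u$, so the share of variable $i$'s error variance due to shock $j$ is $\sum_{s<h} (\Phi^s P)_{ij}^2 / \sum_{s<h} \sum_m (\Phi^s P)_{im}^2$. A NumPy sketch with made-up matrices and a hypothetical helper `var1_fevd`:

```python
import numpy as np

def var1_fevd(Phi, Sigma, horizon):
    """share[h-1, i, j]: fraction of the h-step-ahead forecast error variance of y_i
    attributed to orthogonalized shock j (Cholesky ordering: y1 first)."""
    P = np.linalg.cholesky(Sigma)
    theta = np.array([np.linalg.matrix_power(Phi, s) @ P for s in range(horizon)])  # MA weights
    contrib = np.cumsum(theta ** 2, axis=0)          # sum over s = 0..h-1 for each h
    return contrib / contrib.sum(axis=2, keepdims=True)

Phi = np.array([[0.5, 0.1],
                [0.2, 0.3]])
Sigma = np.array([[1.0, 0.5],
                  [0.5, 1.0]])
share = var1_fevd(Phi, Sigma, horizon=12)
print(share[0].round(3))    # h = 1
print(share[-1].round(3))   # h = 12
```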

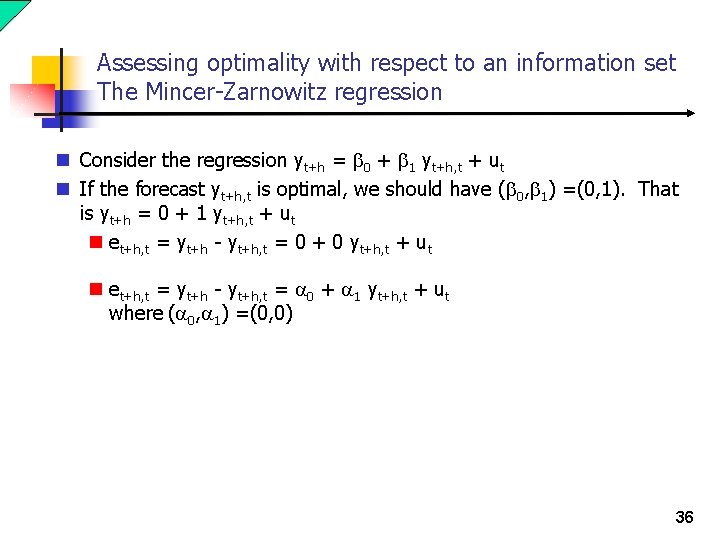

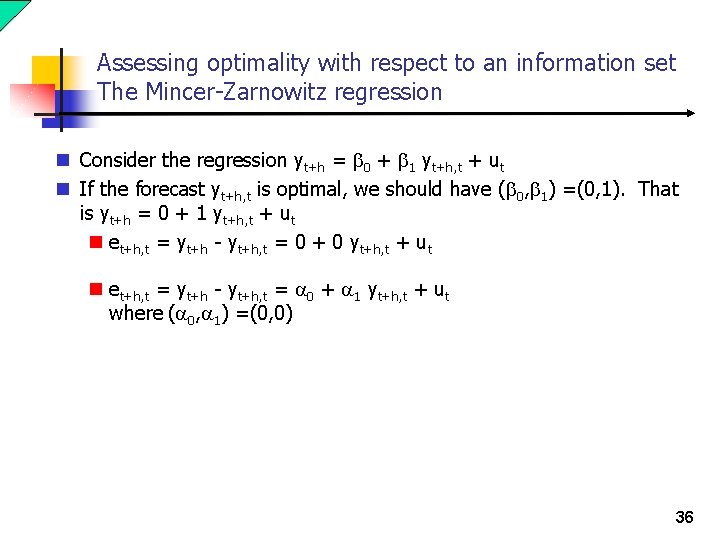

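Assessing optimality with respect to an information set: the Mincer-Zarnowitz regression

- Consider the regression $y_{t+h} = \beta_0 + \beta_1 \hat{y}_{t+h,t} + u_t$.
- If the forecast $\hat{y}_{t+h,t}$ is optimal, we should have $(\beta_0, \beta_1) = (0, 1)$. That is, $y_{t+h} = 0 + 1 \cdot \hat{y}_{t+h,t} + u_t$, so that $e_{t+h,t} = y_{t+h} - \hat{y}_{t+h,t} = 0 + 0 \cdot \hat{y}_{t+h,t} + u_t$.
- Equivalently, run $e_{t+h,t} = y_{t+h} - \hat{y}_{t+h,t} = \alpha_0 + \alpha_1 \hat{y}_{t+h,t} + u_t$ and test $(\alpha_0, \alpha_1) = (0, 0)$.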
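
A sketch of the Mincer-Zarnowitz regression on simulated data, assuming NumPy; the forecasts are constructed to be optimal, so the estimates should be near $(0, 1)$. Regressing the forecast error instead gives exactly $(\hat\beta_0, \hat\beta_1 - 1)$. The Wald statistic uses the usual homoskedastic OLS covariance, which is an assumption; with $h > 1$ the errors are typically serially correlated and a HAC covariance would be needed.

```python
import numpy as np

rng = np.random.default_rng(10)
n = 2000
forecast = rng.normal(loc=1.0, size=n)            # hypothetical forecasts of y_{t+h}
y = forecast + rng.normal(scale=0.5, size=n)      # realization = forecast + unforecastable error

X = np.column_stack([np.ones(n), forecast])
beta, *_ = np.linalg.lstsq(X, y, rcond=None)                 # (beta_0, beta_1), near (0, 1)
alpha, *_ = np.linalg.lstsq(X, y - forecast, rcond=None)     # error regression, near (0, 0)

# Wald statistic for H0: (beta_0, beta_1) = (0, 1), homoskedastic OLS covariance assumed
resid = y - X @ beta
s2 = resid @ resid / (n - 2)
diff = beta - np.array([0.0, 1.0])
wald = diff @ (X.T @ X) @ diff / s2     # compare with the chi-square(2) 5% critical value, 5.99
print(beta.round(3), alpha.round(3), round(float(wald), 2))
```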

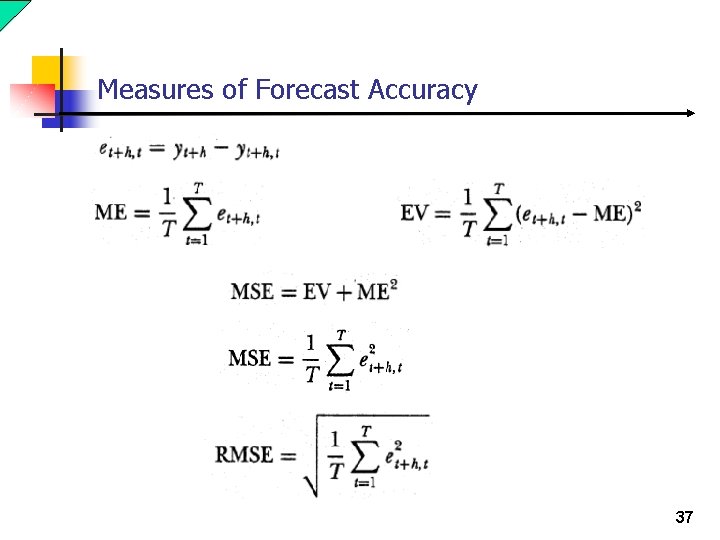

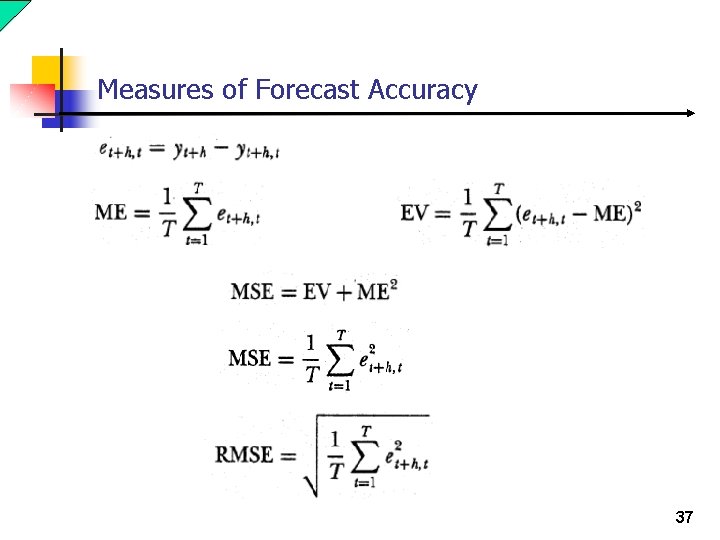

Measures of Forecast Accuracy

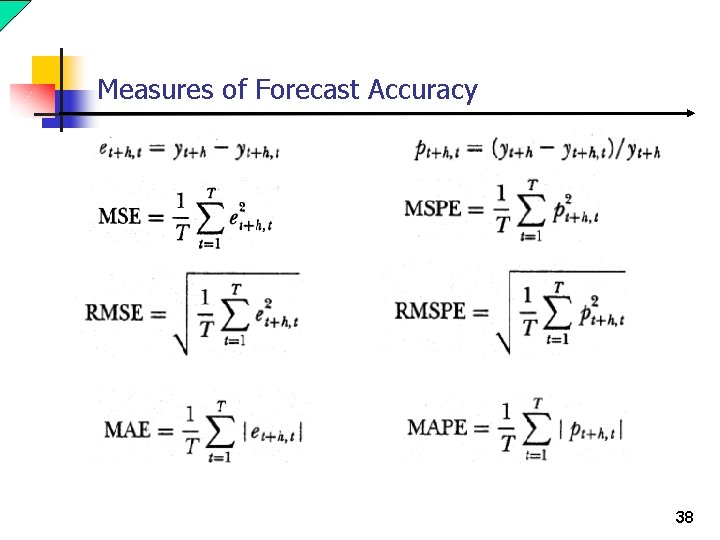

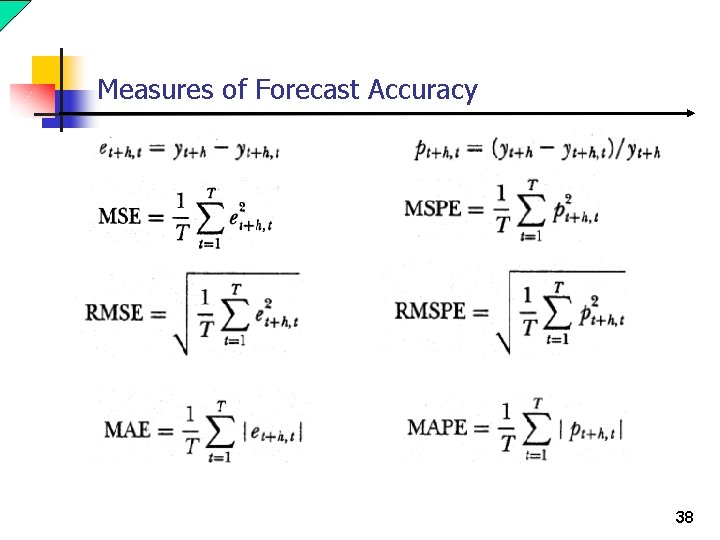

Measures of Forecast Accuracy

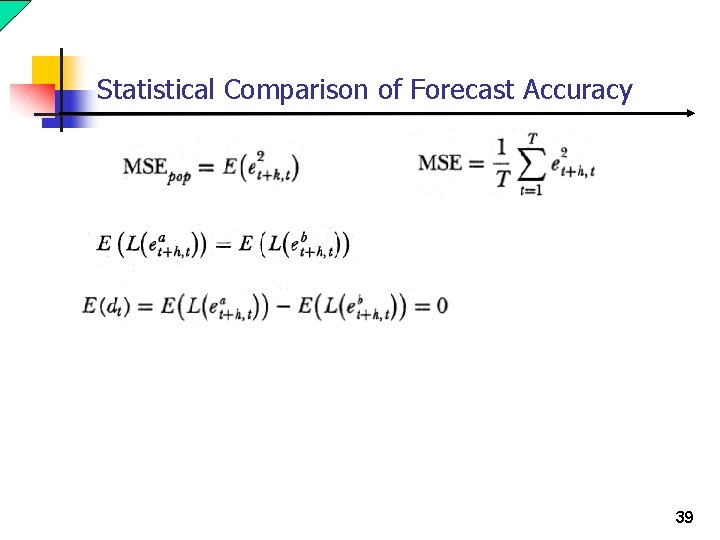

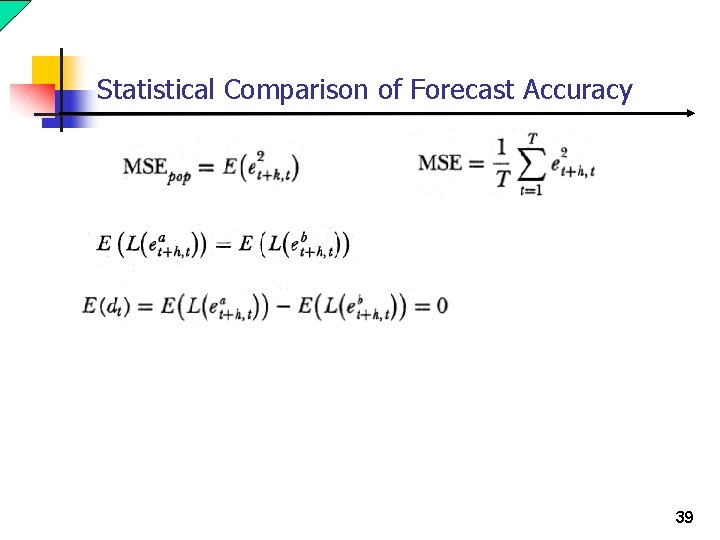

Statistical Comparison of Forecast Accuracy

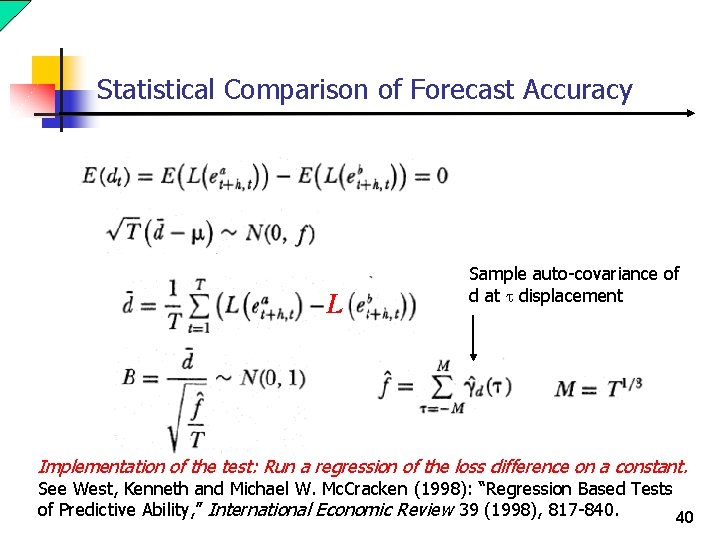

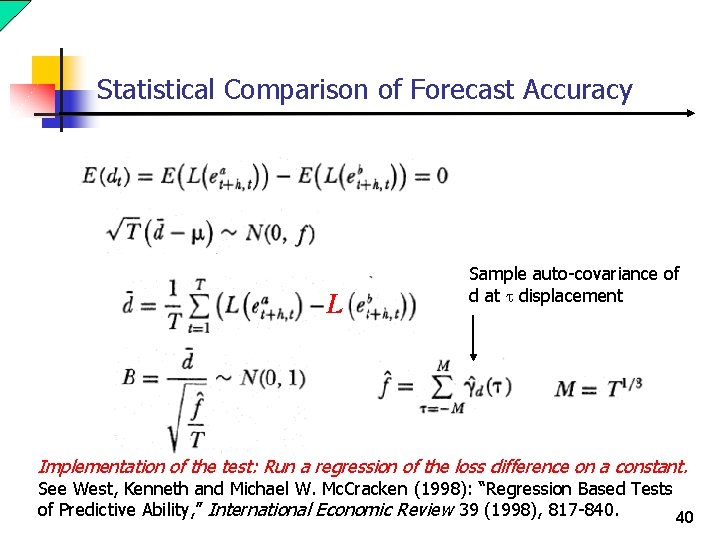

Statistical Comparison of Forecast Accuracy

- $\hat{\gamma}_d(\tau)$: sample autocovariance of $d$ at displacement $\tau$.
- Implementation of the test: run a regression of the loss difference on a constant.
- See West, Kenneth and Michael W. McCracken (1998): "Regression-Based Tests of Predictive Ability," International Economic Review 39, 817-840.
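
A minimal sketch of the statistic (usually called the Diebold-Mariano statistic), assuming NumPy, squared-error loss by default, and a truncation lag of $h - 1$ for the long-run variance (a common choice for $h$-step forecasts, but an assumption here). The mean loss differential $\bar d$ is the OLS estimate in the regression of $d_t$ on a constant, and the statistic is compared with $N(0, 1)$ critical values. With this unweighted truncated sum the variance estimate can turn negative in small samples; kernel weights such as Newey-West avoid that. The helper `diebold_mariano` and the simulated errors are hypothetical.

```python
import numpy as np

def diebold_mariano(e_a, e_b, h=1, loss=np.square):
    """Statistic for H0: equal expected loss of forecasts a and b (compare with N(0, 1)).

    d_t = loss(e_a,t) - loss(e_b,t); the variance of d-bar uses sample autocovariances
    of d at displacements 0..h-1 (truncation lag h-1 is an assumption of this sketch).
    """
    d = loss(np.asarray(e_a, dtype=float)) - loss(np.asarray(e_b, dtype=float))
    T = len(d)
    d_bar = d.mean()                     # = OLS coefficient from regressing d_t on a constant
    dc = d - d_bar
    gamma = [np.dot(dc[tau:], dc[: T - tau]) / T for tau in range(h)]
    long_run_var = gamma[0] + 2.0 * sum(gamma[1:])
    return d_bar / np.sqrt(long_run_var / T)

rng = np.random.default_rng(11)
e_a = rng.normal(scale=1.5, size=1000)   # hypothetical errors of forecast a (less accurate)
e_b = rng.normal(scale=1.0, size=1000)   # hypothetical errors of forecast b
print(diebold_mariano(e_a, e_b, h=1))    # large and positive: b has lower expected squared loss
```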

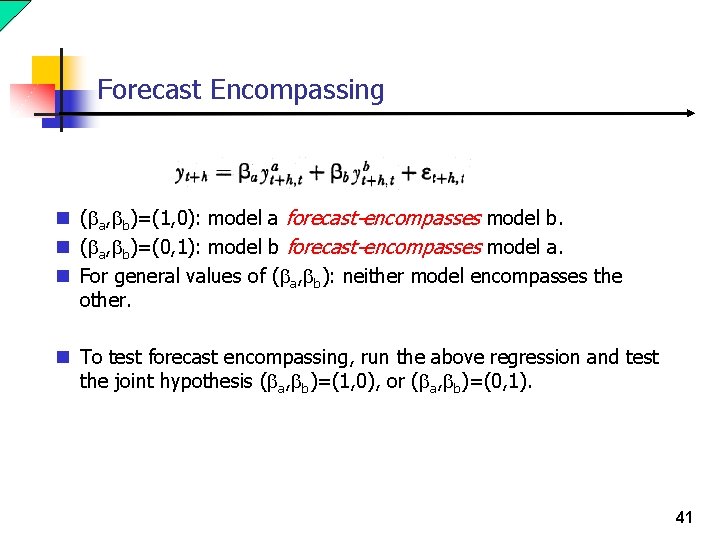

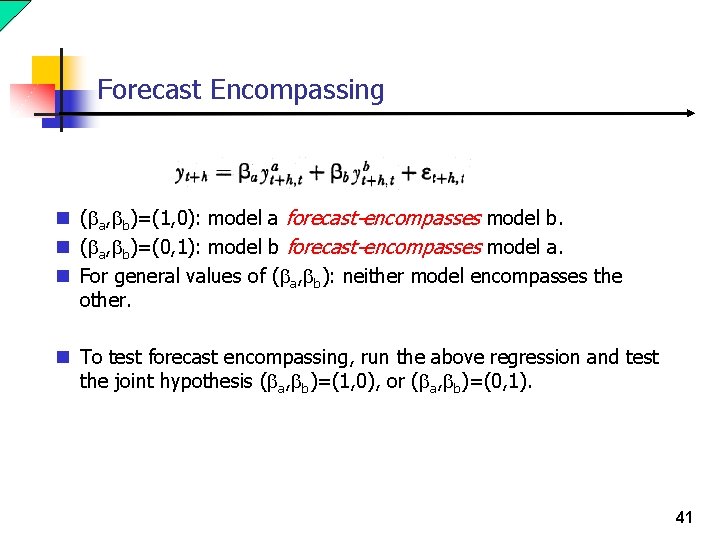

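Forecast Encompassing

- Consider the regression $y_{t+h} = \beta_a \hat{y}^a_{t+h,t} + \beta_b \hat{y}^b_{t+h,t} + \varepsilon_{t+h,t}$.
- $(\beta_a, \beta_b) = (1, 0)$: model a forecast-encompasses model b.
- $(\beta_a, \beta_b) = (0, 1)$: model b forecast-encompasses model a.
- For other values of $(\beta_a, \beta_b)$: neither model encompasses the other.
- To test forecast encompassing, run this regression and test the joint hypothesis $(\beta_a, \beta_b) = (1, 0)$ or $(\beta_a, \beta_b) = (0, 1)$.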
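
A sketch of the encompassing regression on simulated data, assuming NumPy; the helper name and the data-generating process are hypothetical. Forecast a uses the whole predictable signal and forecast b is a noisier version of it, so the estimates should be close to $(\beta_a, \beta_b) = (1, 0)$. A formal test would add a Wald test of the joint restriction, with a HAC covariance when $h > 1$.

```python
import numpy as np

def encompassing_regression(y, f_a, f_b):
    """OLS of y_{t+h} on the two forecasts (no intercept): returns (beta_a, beta_b)."""
    X = np.column_stack([f_a, f_b])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

rng = np.random.default_rng(16)
n = 1000
signal = rng.normal(size=n)
f_a = signal                                   # hypothetical forecast a: uses the full signal
f_b = 0.5 * signal + rng.normal(size=n)        # hypothetical forecast b: noisier
y = signal + rng.normal(scale=0.3, size=n)

coef = encompassing_regression(y, f_a, f_b)
print(coef.round(2))   # close to (1, 0): model a forecast-encompasses model b
```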

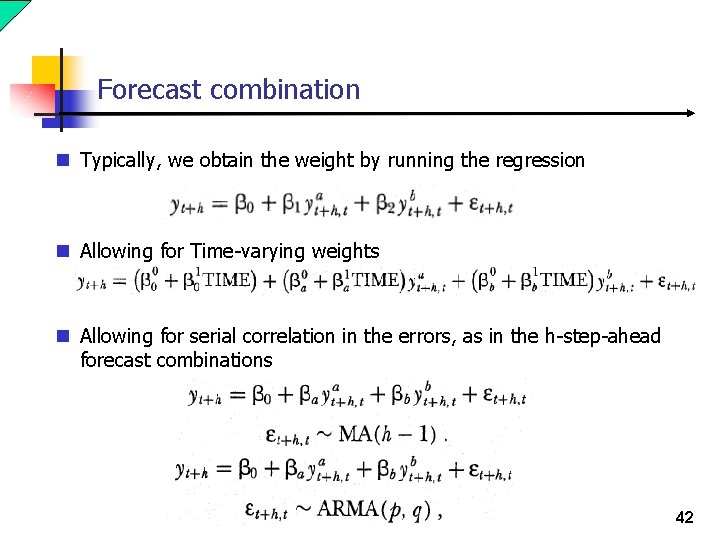

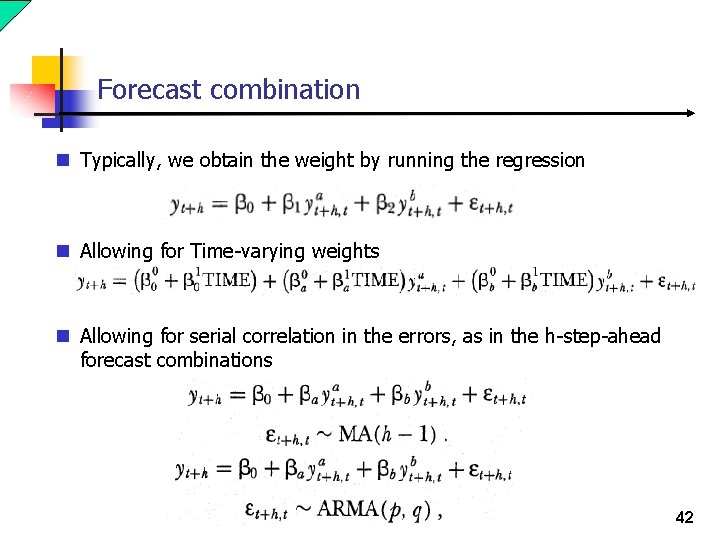

Forecast combination

- Typically, we obtain the weights by running a regression of the realized values on the competing forecasts.
- Allowing for time-varying weights.
- Allowing for serial correlation in the errors, as in $h$-step-ahead forecast combinations.

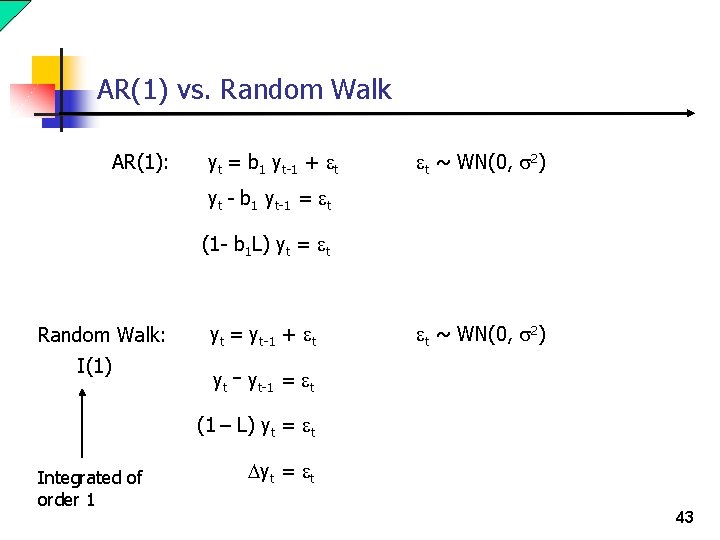

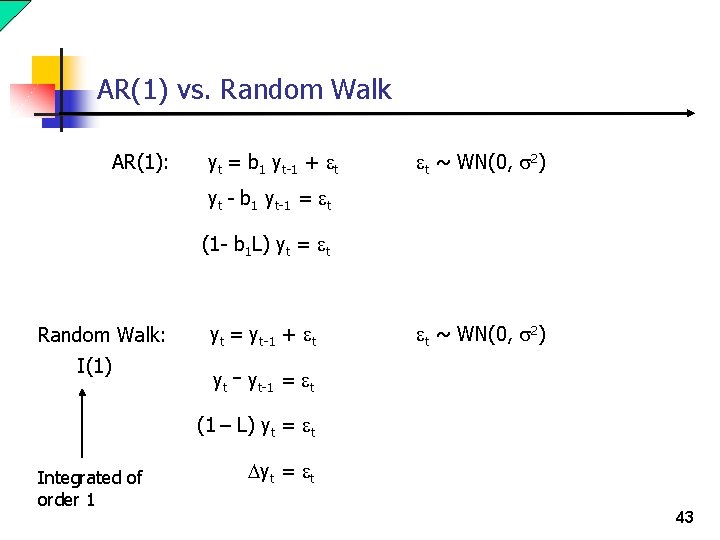

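AR(1) vs. Random Walk

AR(1): $y_t = b_1 y_{t-1} + \varepsilon_t$, $\varepsilon_t \sim WN(0, \sigma^2)$
- $y_t - b_1 y_{t-1} = \varepsilon_t$
- $(1 - b_1 L) y_t = \varepsilon_t$

Random walk, I(1) (integrated of order 1): $y_t = y_{t-1} + \varepsilon_t$, $\varepsilon_t \sim WN(0, \sigma^2)$
- $y_t - y_{t-1} = \varepsilon_t$
- $(1 - L) y_t = \varepsilon_t$
- $\Delta y_t = \varepsilon_t$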

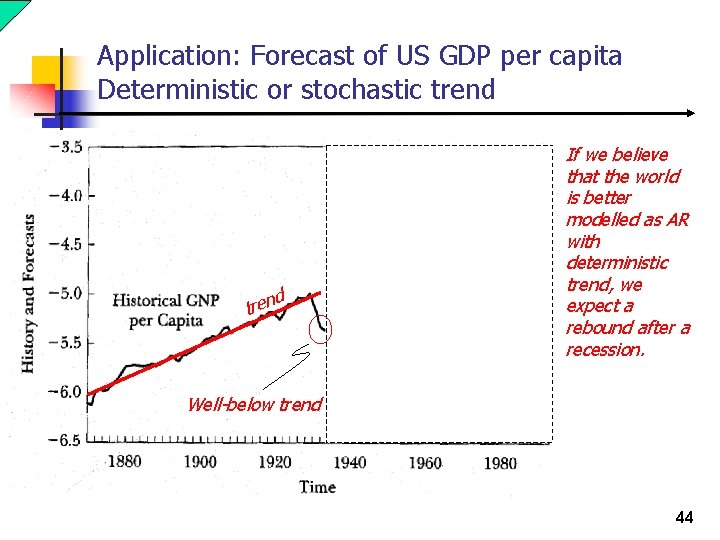

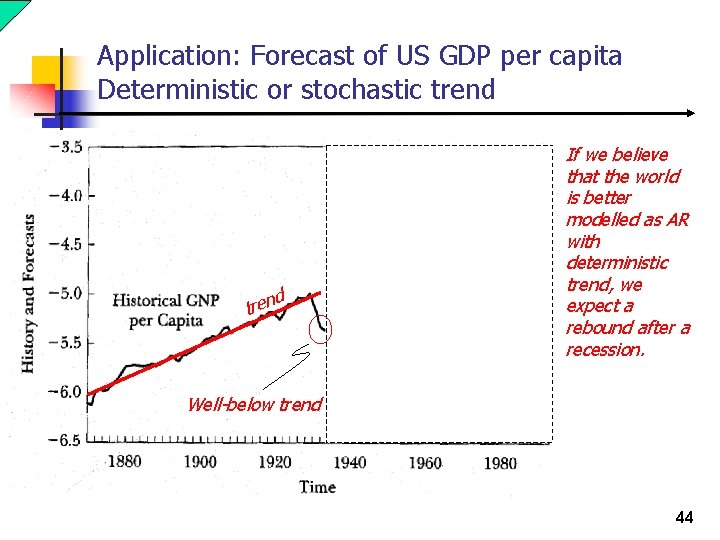

Application: Forecast of US GDP per capita

Deterministic or stochastic trend?

(Figure annotations: "well below trend"; "reverts back to trend".)

If we believe that the world is better modelled as an AR process with a deterministic trend, we expect a rebound after a recession.

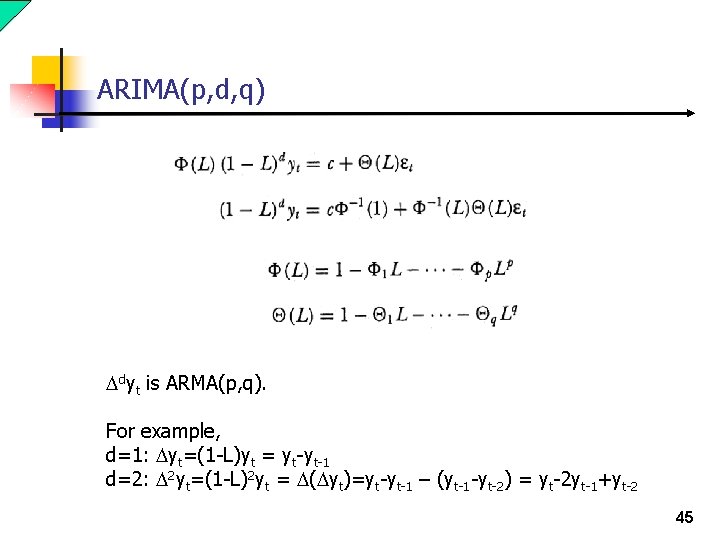

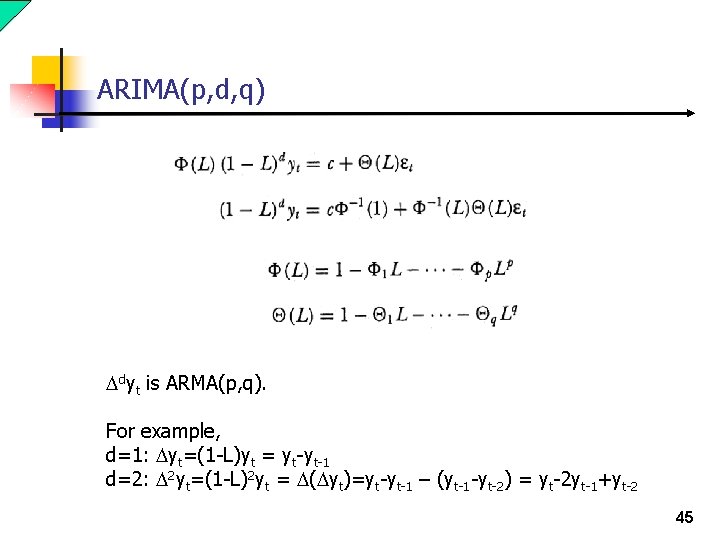

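ARIMA(p, d, q)

- $\Delta^d y_t$ is ARMA($p$, $q$).
- For example, with $d = 1$: $\Delta y_t = (1 - L) y_t = y_t - y_{t-1}$.
- With $d = 2$: $\Delta^2 y_t = (1 - L)^2 y_t = \Delta(\Delta y_t) = y_t - y_{t-1} - (y_{t-1} - y_{t-2}) = y_t - 2y_{t-1} + y_{t-2}$.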
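
A quick check with NumPy on a simulated random walk: `np.diff` applies $(1 - L)$, and `np.diff(..., n=2)` applies $(1 - L)^2$, matching the expansion $y_t - 2y_{t-1} + y_{t-2}$. Differencing the random walk once gives back the white-noise shocks. The simulated data are made up.

```python
import numpy as np

rng = np.random.default_rng(12)
eps = rng.normal(size=200)            # WN(0, 1) shocks
y = np.cumsum(eps)                    # random walk: y_t = y_{t-1} + eps_t

dy = np.diff(y)                       # Delta y_t = (1 - L) y_t = y_t - y_{t-1}
d2y = np.diff(y, n=2)                 # Delta^2 y_t = (1 - L)^2 y_t

print(np.allclose(dy, eps[1:]))                          # differencing recovers the shocks
print(np.allclose(d2y, y[2:] - 2 * y[1:-1] + y[:-2]))    # matches y_t - 2 y_{t-1} + y_{t-2}
```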

Similarity of ARIMA(p, 1, q) to random walk

- ARIMA($p$, 1, $q$) processes are appropriately made stationary by differencing.
- Shocks ($\varepsilon_t$) to ARIMA($p$, 1, $q$) processes have permanent effects.
- Hence, shock persistence means that optimal forecasts, even at very long horizons, don't completely revert to a mean or a trend.
- The variance of an ARIMA($p$, 1, $q$) process grows without bound as time progresses.
- The uncertainty associated with our forecasts grows with the forecast horizon.
- The width of our interval forecasts grows without bound with the forecast horizon.
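
The growth of forecast uncertainty can be made concrete for the simplest case, $y_t = \varphi y_{t-1} + \varepsilon_t$, whose $h$-step-ahead forecast error variance is $\sigma^2 \sum_{i=0}^{h-1} \varphi^{2i}$. With $|\varphi| < 1$ this converges to $\sigma^2 / (1 - \varphi^2)$; with $\varphi = 1$ (a random walk, i.e., ARIMA(0, 1, 0)) it equals $h\sigma^2$, so a 95% interval of half-width $1.96\sqrt{h}\,\sigma$ widens without bound. A NumPy sketch with a hypothetical helper:

```python
import numpy as np

def h_step_error_variance(phi, sigma2, horizons):
    """Var of the h-step-ahead forecast error for y_t = phi * y_{t-1} + eps_t.

    Var = sigma2 * sum_{i=0}^{h-1} phi**(2i); phi = 1 gives the random walk.
    """
    return np.array([sigma2 * np.sum(phi ** (2 * np.arange(h))) for h in horizons])

h = np.array([1, 4, 16, 64])
print(h_step_error_variance(0.8, 1.0, h))   # AR(1): approaches 1 / (1 - 0.64), about 2.78
print(h_step_error_variance(1.0, 1.0, h))   # random walk: equals h, grows without bound
print(1.96 * np.sqrt(h_step_error_variance(1.0, 1.0, h)))   # 95% interval half-widths
```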

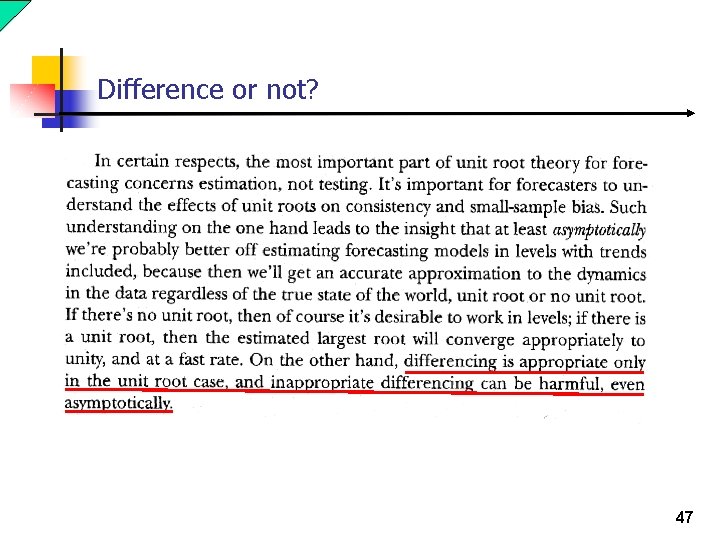

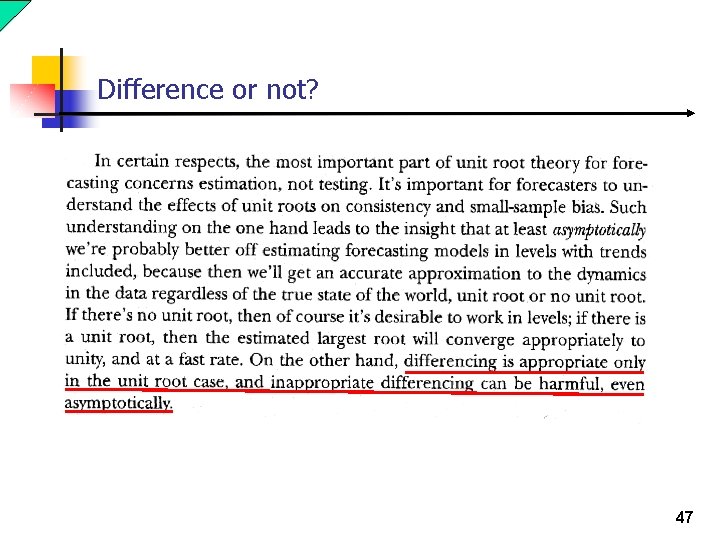

Difference or not?

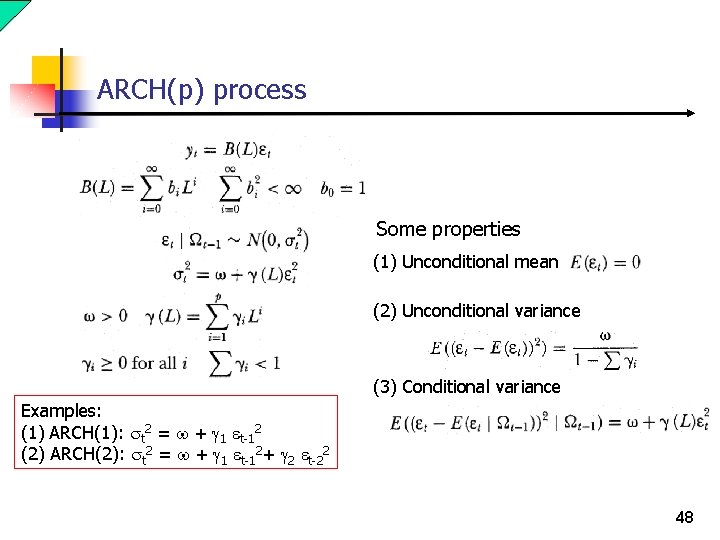

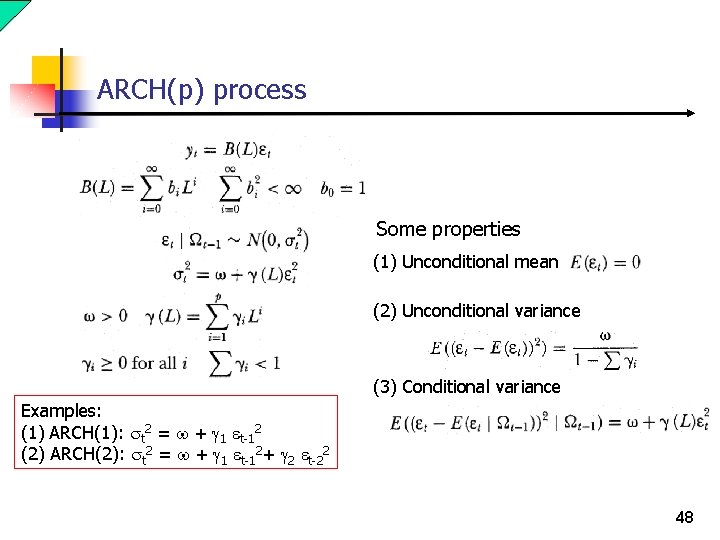

ARCH(p) process

Some properties: (1) unconditional mean; (2) unconditional variance; (3) conditional variance.

Examples:
1. ARCH(1): $\sigma_t^2 = \omega + \gamma_1 \varepsilon_{t-1}^2$
2. ARCH(2): $\sigma_t^2 = \omega + \gamma_1 \varepsilon_{t-1}^2 + \gamma_2 \varepsilon_{t-2}^2$

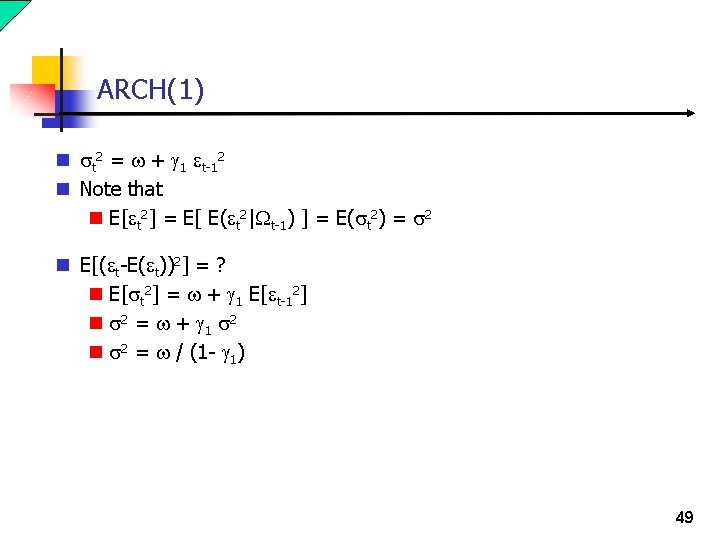

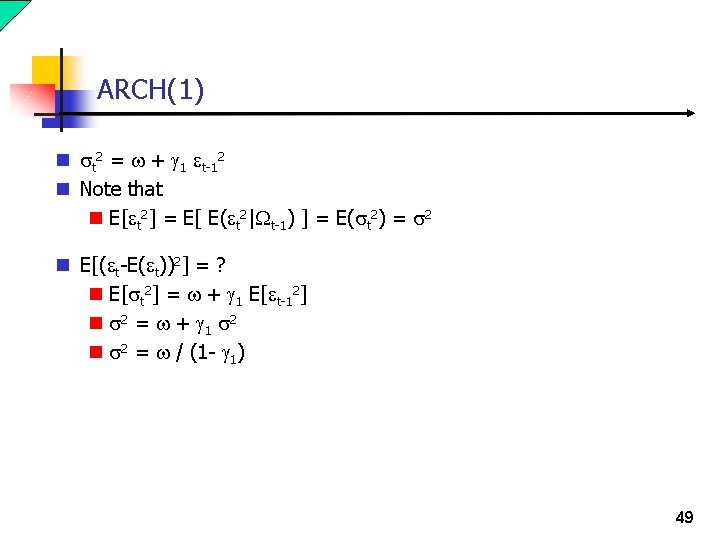

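ARCH(1)

- $\sigma_t^2 = \omega + \gamma_1 \varepsilon_{t-1}^2$
- Note that, with $\Omega_{t-1}$ denoting the information set at time $t-1$:
  - $E[\varepsilon_t^2] = E[E(\varepsilon_t^2 \mid \Omega_{t-1})] = E(\sigma_t^2) = \sigma^2$
  - $E[(\varepsilon_t - E(\varepsilon_t))^2] = E[\varepsilon_t^2] = \sigma^2$, since $E(\varepsilon_t) = 0$
  - $E[\sigma_t^2] = \omega + \gamma_1 E[\varepsilon_{t-1}^2]$
  - $\sigma^2 = \omega + \gamma_1 \sigma^2$
  - $\sigma^2 = \omega / (1 - \gamma_1)$, which requires $\gamma_1 < 1$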
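
A simulation check of $\sigma^2 = \omega / (1 - \gamma_1)$, assuming NumPy, Gaussian $z_t$ in $\varepsilon_t = \sigma_t z_t$, and made-up values $\omega = 0.2$, $\gamma_1 = 0.3$; the helper `simulate_arch1` is hypothetical.

```python
import numpy as np

def simulate_arch1(omega, gamma1, n, rng):
    """eps_t = sigma_t * z_t with sigma_t^2 = omega + gamma1 * eps_{t-1}^2 and z_t ~ N(0, 1)."""
    eps = np.zeros(n)
    sigma2 = np.full(n, omega / (1 - gamma1))     # start at the unconditional variance
    z = rng.normal(size=n)
    for t in range(1, n):
        sigma2[t] = omega + gamma1 * eps[t - 1] ** 2
        eps[t] = np.sqrt(sigma2[t]) * z[t]
    return eps, sigma2

rng = np.random.default_rng(13)
omega, gamma1 = 0.2, 0.3
eps, _ = simulate_arch1(omega, gamma1, 100_000, rng)
print(eps.var(), omega / (1 - gamma1))   # sample vs. theoretical unconditional variance
```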

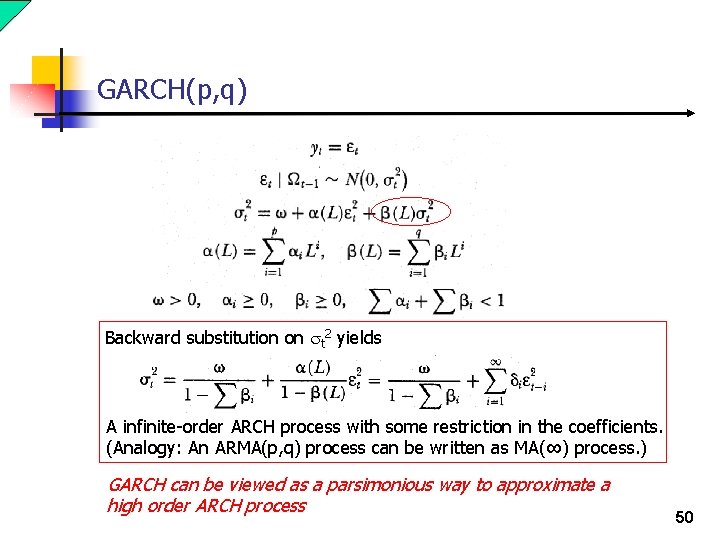

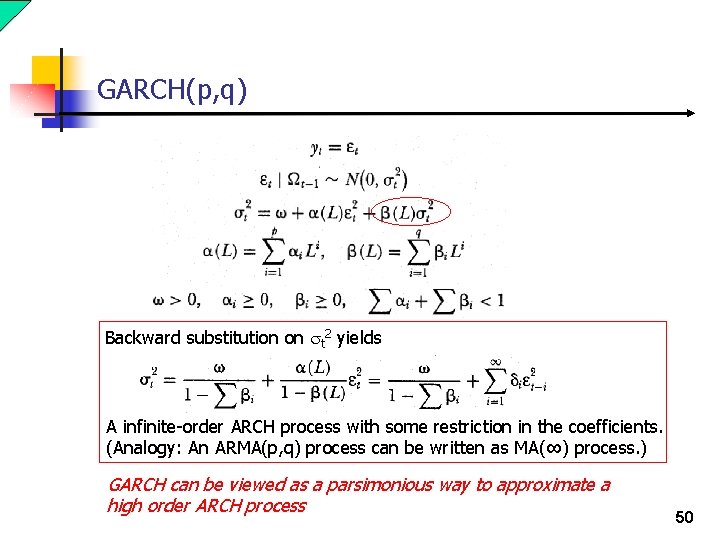

GARCH(p, q)

- Backward substitution on $\sigma_t^2$ yields an infinite-order ARCH process with some restrictions on the coefficients. (Analogy: an ARMA($p$, $q$) process can be written as an MA($\infty$) process.)
- GARCH can be viewed as a parsimonious way to approximate a high-order ARCH process.
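
The backward substitution can be verified numerically for GARCH(1,1), written here (an assumption about notation) as $\sigma_t^2 = \omega + \alpha \varepsilon_{t-1}^2 + \beta \sigma_{t-1}^2$. Substituting repeatedly from a starting value $\sigma_0^2$ gives $\sigma_t^2 = \omega \sum_{j=0}^{t-1} \beta^j + \alpha \sum_{i=1}^{t} \beta^{i-1} \varepsilon_{t-i}^2 + \beta^t \sigma_0^2$, an ARCH form whose weights decline geometrically. A NumPy sketch with made-up parameters:

```python
import numpy as np

rng = np.random.default_rng(14)
omega, alpha, beta = 0.1, 0.1, 0.8       # hypothetical GARCH(1,1) parameters
n = 50
eps = rng.normal(size=n)                 # any sequence of shocks works for this identity
sigma2_0 = 1.0

# Recursion: sigma2_t = omega + alpha * eps_{t-1}^2 + beta * sigma2_{t-1}
sigma2 = np.empty(n + 1)
sigma2[0] = sigma2_0
for t in range(1, n + 1):
    sigma2[t] = omega + alpha * eps[t - 1] ** 2 + beta * sigma2[t - 1]

# Backward substitution: ARCH form with weights alpha * beta**(i-1) on eps_{t-i}^2
t = n
i = np.arange(1, t + 1)
arch_form = (omega * np.sum(beta ** np.arange(t))
             + alpha * np.sum(beta ** (i - 1) * eps[t - i] ** 2)
             + beta ** t * sigma2_0)
print(np.isclose(sigma2[t], arch_form))
```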

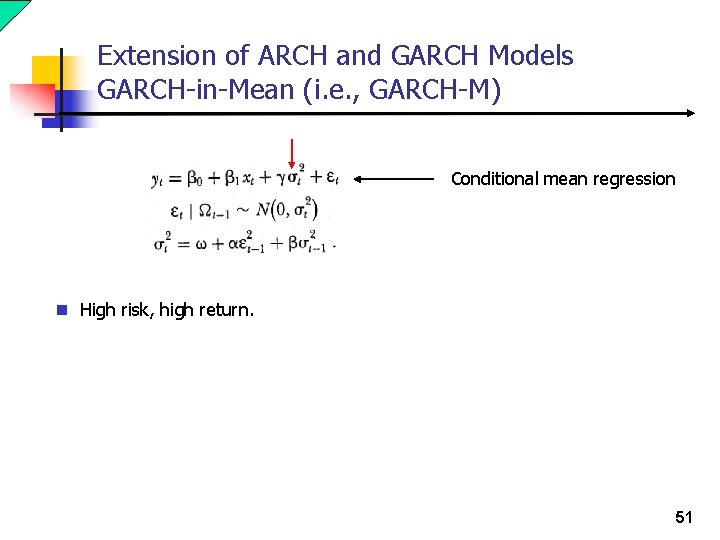

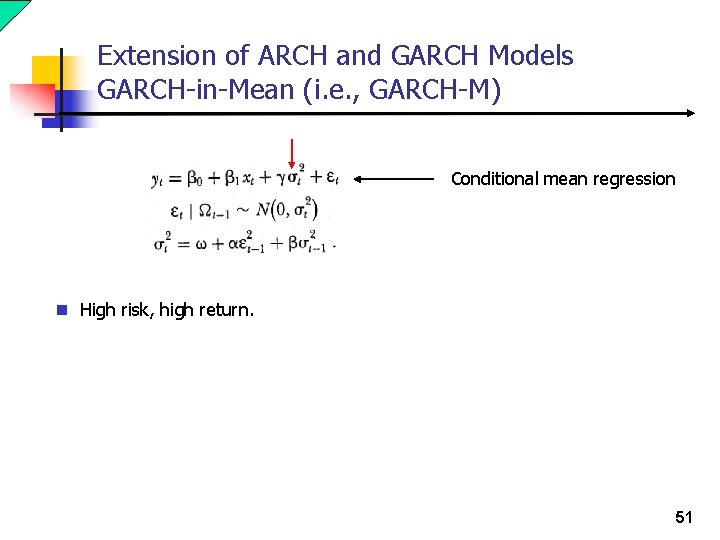

Extension of ARCH and GARCH Models

- GARCH-in-Mean (i.e., GARCH-M): the conditional variance enters the conditional mean regression.
- High risk, high return.

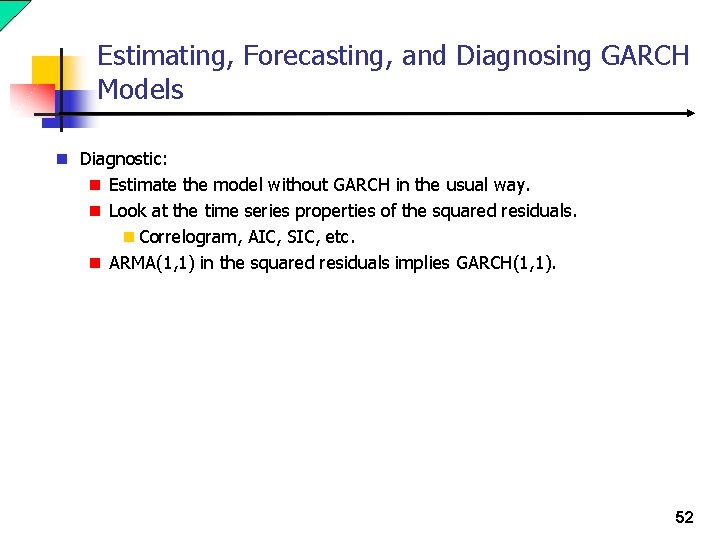

Estimating, Forecasting, and Diagnosing GARCH Models

- Diagnostics:
  - Estimate the model without GARCH in the usual way.
  - Look at the time series properties of the squared residuals: correlogram, AIC, SIC, etc.
  - ARMA(1, 1) in the squared residuals implies GARCH(1, 1).
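
A sketch of this diagnostic on simulated ARCH(1) residuals, assuming NumPy; the parameters and the `acf` helper are hypothetical. The residuals themselves look serially uncorrelated, but their squares are clearly autocorrelated, which is the pattern the correlogram of squared residuals is meant to reveal.

```python
import numpy as np

def acf(x, max_lag):
    """Sample autocorrelations at lags 1..max_lag."""
    x = np.asarray(x, dtype=float) - np.mean(x)
    return np.array([np.dot(x[k:], x[: len(x) - k]) / np.dot(x, x) for k in range(1, max_lag + 1)])

rng = np.random.default_rng(15)
n, omega, gamma1 = 50_000, 0.2, 0.3
z = rng.normal(size=n)
eps = np.zeros(n)
for t in range(1, n):                        # hypothetical ARCH(1) "residuals"
    eps[t] = np.sqrt(omega + gamma1 * eps[t - 1] ** 2) * z[t]

print(acf(eps, 3).round(3))        # levels: close to zero
print(acf(eps ** 2, 3).round(3))   # squares: clearly positive and decaying
print(acf(z ** 2, 3).round(3))     # i.i.d. benchmark: close to zero
```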

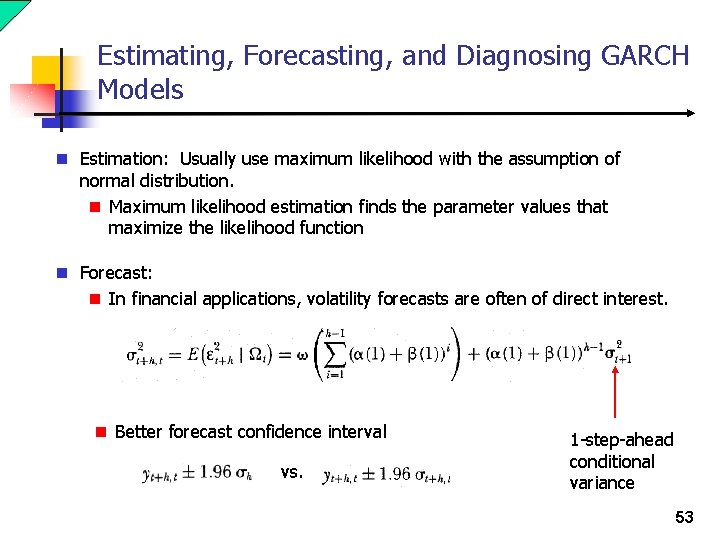

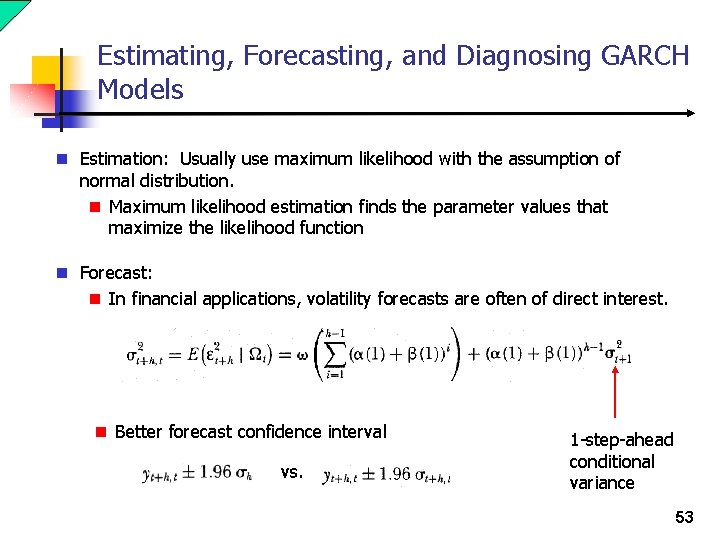

Estimating, Forecasting, and Diagnosing GARCH Models

- Estimation: usually by maximum likelihood, under the assumption of normally distributed errors.
  - Maximum likelihood estimation finds the parameter values that maximize the likelihood function.
- Forecasting:
  - In financial applications, volatility forecasts are often of direct interest.
  - Better forecast confidence intervals, compared with forecasts of only the 1-step-ahead conditional variance.
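
A sketch of the two pieces, assuming NumPy and the GARCH(1,1) notation $\sigma_t^2 = \omega + \alpha\varepsilon_{t-1}^2 + \beta\sigma_{t-1}^2$: the Gaussian log-likelihood (setting the first conditional variance to the sample variance, one of several common choices) and the $h$-step variance forecast $E_T[\sigma_{T+h}^2] = \bar\sigma^2 + (\alpha+\beta)^{h-1}(\sigma_{T+1}^2 - \bar\sigma^2)$ with $\bar\sigma^2 = \omega / (1 - \alpha - \beta)$. To estimate, one would pass the negative log-likelihood to a numerical optimizer such as `scipy.optimize.minimize`; the helpers and simulated data are hypothetical.

```python
import numpy as np

def garch11_loglik(params, eps):
    """Gaussian log-likelihood of GARCH(1,1) errors eps; the first variance is set to var(eps)."""
    omega, alpha, beta = params
    sigma2 = np.empty(len(eps))
    sigma2[0] = np.var(eps)
    for t in range(1, len(eps)):
        sigma2[t] = omega + alpha * eps[t - 1] ** 2 + beta * sigma2[t - 1]
    return -0.5 * np.sum(np.log(2 * np.pi) + np.log(sigma2) + eps ** 2 / sigma2)

def garch11_variance_forecast(params, sigma2_next, horizon):
    """E_T[sigma2_{T+h}] for h = 1..horizon; reverts to omega / (1 - alpha - beta)."""
    omega, alpha, beta = params
    long_run = omega / (1 - alpha - beta)
    steps = np.arange(horizon)                       # h - 1 = 0, 1, ..., horizon - 1
    return long_run + (alpha + beta) ** steps * (sigma2_next - long_run)

rng = np.random.default_rng(17)
params_true = (0.1, 0.1, 0.8)                        # unconditional variance 0.1 / 0.1 = 1
n = 5000
eps = np.zeros(n)
s2 = 1.0
for t in range(1, n):
    s2 = params_true[0] + params_true[1] * eps[t - 1] ** 2 + params_true[2] * s2
    eps[t] = np.sqrt(s2) * rng.normal()

print(garch11_loglik(params_true, eps), garch11_loglik((0.5, 0.0, 0.0), eps))  # truth scores higher
print(garch11_variance_forecast(params_true, sigma2_next=3.0, horizon=5).round(3))
```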

Does Anything Beat A GARCH(1, 1) out of sample?

- No. So use GARCH(1, 1) if no other information is available.

Additional interesting topics / references

- Forecasting turning points: Lahiri, Kajal and Geoffrey H. Moore (1991): Leading Economic Indicators: New Approaches and Forecasting Records, Cambridge University Press.
- Forecasting cycles: Niemira, Michael P. and Philip A. Klein (1994): Forecasting Financial and Economic Cycles, John Wiley and Sons.

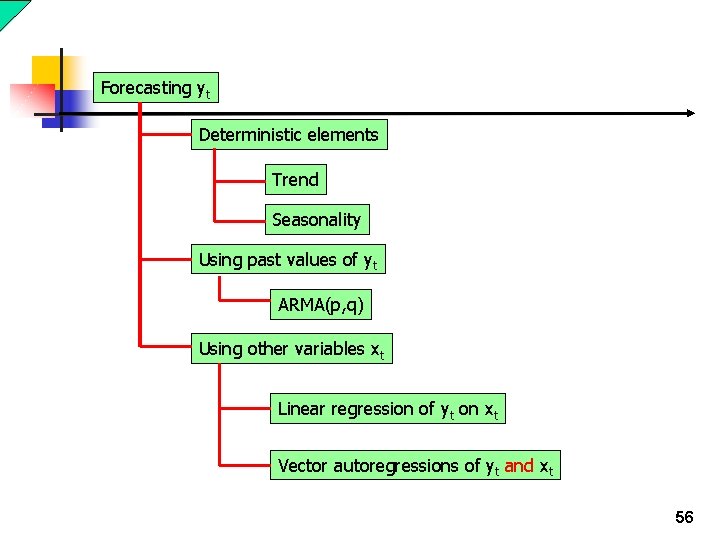

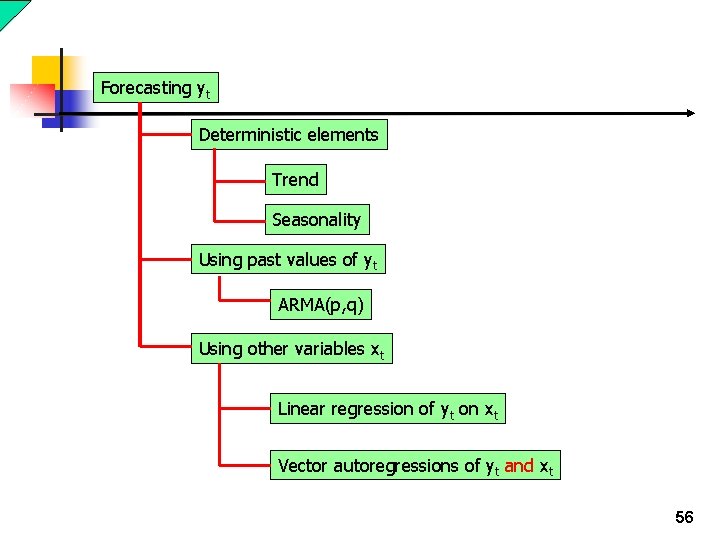

Forecasting $y_t$

- Deterministic elements: trend; seasonality
- Using past values of $y_t$: ARMA($p$, $q$)
- Using other variables $x_t$: linear regression of $y_t$ on $x_t$; vector autoregressions of $y_t$ and $x_t$

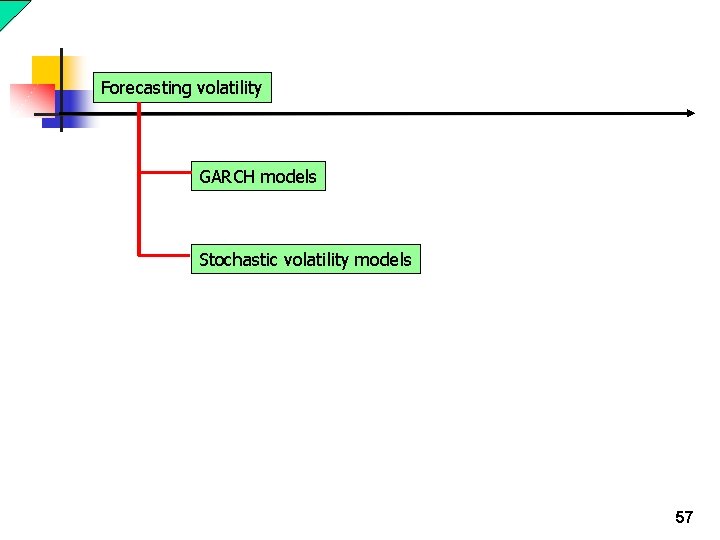

Forecasting volatility

- GARCH models
- Stochastic volatility models

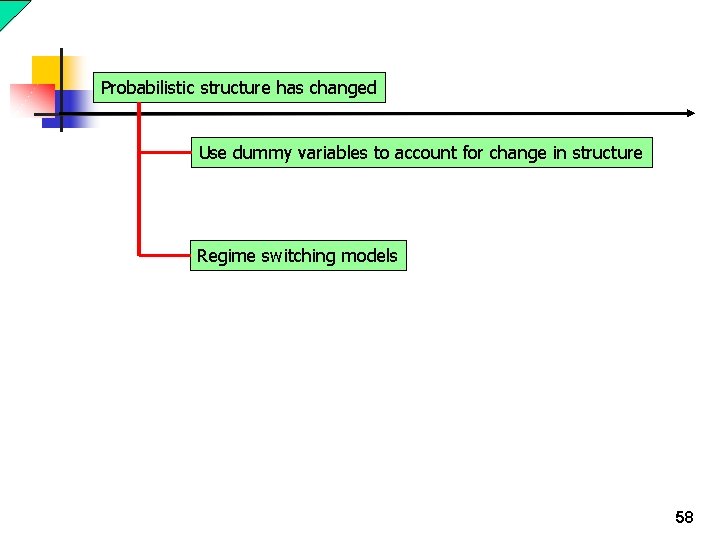

Probabilistic structure has changed

- Use dummy variables to account for the change in structure
- Regime switching models

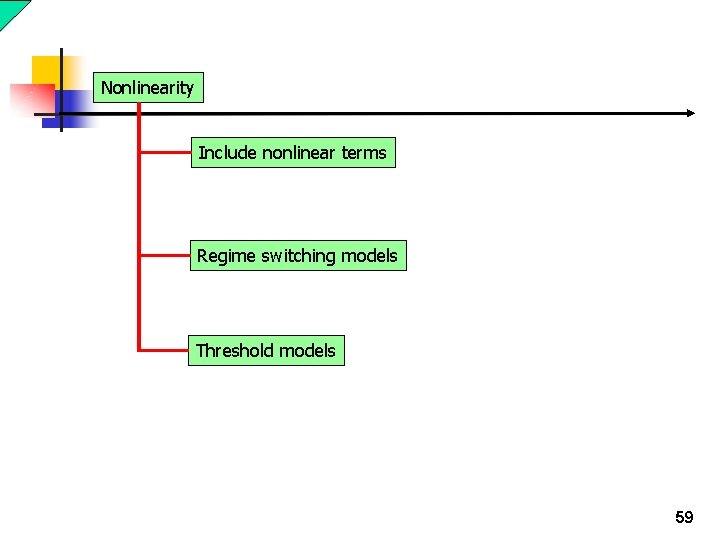

Nonlinearity

- Include nonlinear terms
- Regime switching models
- Threshold models

Using models as an approximation of the real world

- No one knows what the true model should be.
- Even if we knew the true model, we might need to include too many variables, which is not feasible.

End