
Solving Imperfect-Information Games with CFR

Noam Brown, Carnegie Mellon University, Computer Science Department

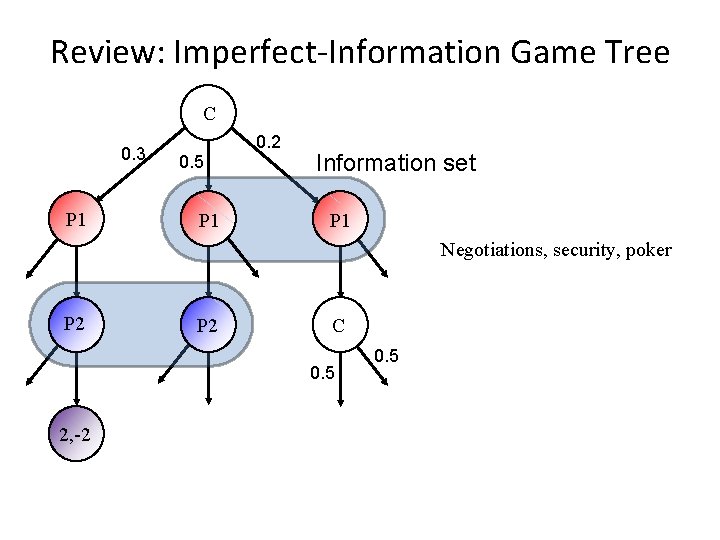

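Review: Imperfect-Information Game Tree

Figure: a game tree rooted at a chance node C whose outcomes (probabilities 0.3, 0.5, and 0.2) lead to P1 decision nodes, with a P1 information set marked; deeper levels contain P2 nodes and another chance node (0.5 / 0.5), and a leaf payoff of (2, -2). Example domains: negotiations, security, poker.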

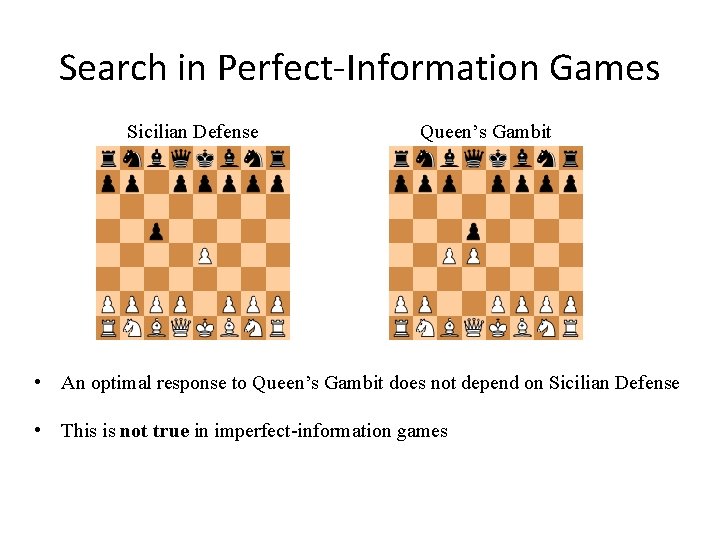

Search in Perfect-Information Games

Figure: two chess openings, the Sicilian Defense and the Queen's Gambit.

- An optimal response to the Queen's Gambit does not depend on the Sicilian Defense.
- This is not true in imperfect-information games.

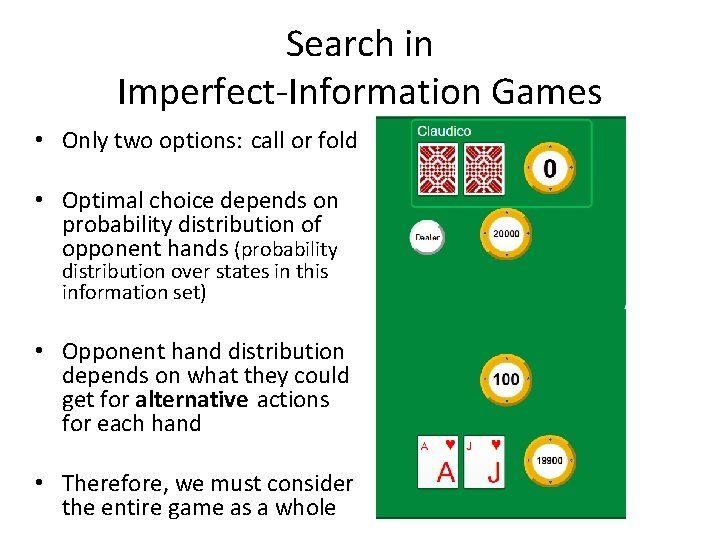

Search in Imperfect-Information Games

- There are only two options: call or fold.
- The optimal choice depends on the probability distribution over the opponent's hands (that is, the probability distribution over states in this information set).
- The opponent's hand distribution depends on the payoffs they could get from alternative actions with each hand.
- Therefore, we must consider the entire game as a whole.
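A small hypothetical example makes the last two bullets concrete. The hands, prior, betting frequencies, and chip amounts below are made-up assumptions, and `posterior` and `call_ev` are illustrative helpers, not from the slides. Our belief about the opponent's hand after a bet comes from Bayes' rule applied to their betting strategy, so changing how often they bluff flips our best response even though the cards they are dealt are unchanged.

```python
# Hypothetical call/fold decision: every number below is an illustrative assumption.

def posterior(prior, bet_prob):
    """Bayes' rule: P(hand | bet) is proportional to P(hand) * P(bet | hand)."""
    joint = {hand: prior[hand] * bet_prob[hand] for hand in prior}
    total = sum(joint.values())
    return {hand: p / total for hand, p in joint.items()}


def call_ev(belief, pot, bet, we_win):
    """EV of calling, measured relative to folding (which is worth 0 from here).

    pot: chips in the middle before the opponent's bet; bet: the amount to call.
    Calling wins pot + bet against hands we beat and loses bet otherwise.
    """
    return sum(p * (pot + bet if we_win[hand] else -bet) for hand, p in belief.items())


prior = {"strong": 0.5, "weak": 0.5}       # opponent's hands before any betting
we_win = {"strong": False, "weak": True}   # we only beat the weak hands

honest = {"strong": 1.0, "weak": 0.1}      # P(bet | hand): rarely bluffs
bluffy = {"strong": 1.0, "weak": 0.8}      # P(bet | hand): bluffs often

for name, strategy in [("honest", honest), ("bluffy", bluffy)]:
    ev = call_ev(posterior(prior, strategy), pot=10, bet=10, we_win=we_win)
    print(name, "call" if ev > 0 else "fold", round(ev, 2))
# honest fold -7.27
# bluffy call 3.33
```

The opponent's hand distribution before betting is identical in both cases; only their strategy for the weak hand changes, and that alone decides whether calling is correct.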

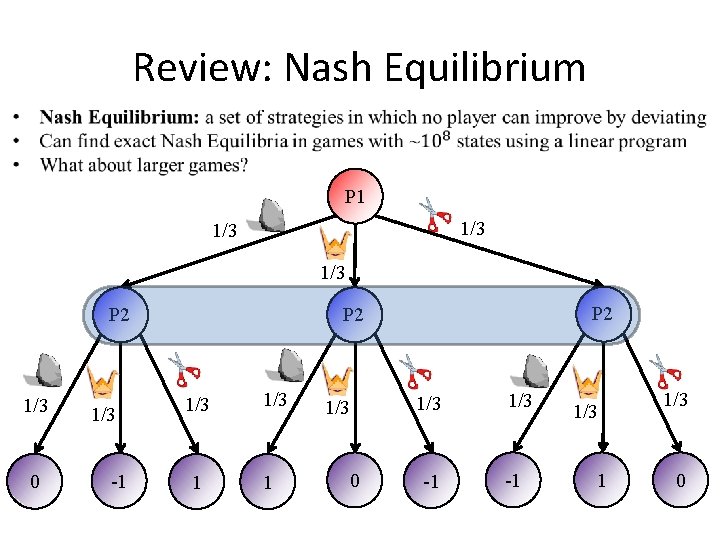

Review: Nash Equilibrium

Figure: a game tree in which P1 and P2 each choose one of three actions with probability 1/3; leaf payoffs are 0, 1, or -1.
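As a quick check of the equilibrium in the figure, here is a sketch that assumes the tree is rock-paper-scissors (P1's payoffs below are the standard ones; `best_response_value` is a hypothetical helper). Against the uniform 1/3 strategy no pure action earns P1 more than 0, and because the payoff matrix is antisymmetric the same holds for P2, so neither player can gain by deviating.

```python
from fractions import Fraction

# Assumption: the slide's game is rock-paper-scissors.
# PAYOFF[a][b] is P1's payoff when P1 plays a and P2 plays b; P2 gets the negative.
ACTIONS = ["rock", "paper", "scissors"]
PAYOFF = [
    [0, -1, 1],    # rock: ties rock, loses to paper, beats scissors
    [1, 0, -1],    # paper
    [-1, 1, 0],    # scissors
]


def best_response_value(opponent_mix):
    """P1's best payoff against a fixed P2 mixed strategy."""
    return max(sum(p * PAYOFF[a][b] for b, p in enumerate(opponent_mix))
               for a in range(len(ACTIONS)))


uniform = [Fraction(1, 3)] * 3
print(best_response_value(uniform))                               # 0
print(best_response_value([Fraction(1, 2), Fraction(1, 2), 0]))   # 1/2
```

The second call shows the contrast: a non-equilibrium strategy (never playing scissors) can be exploited, here by playing paper.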

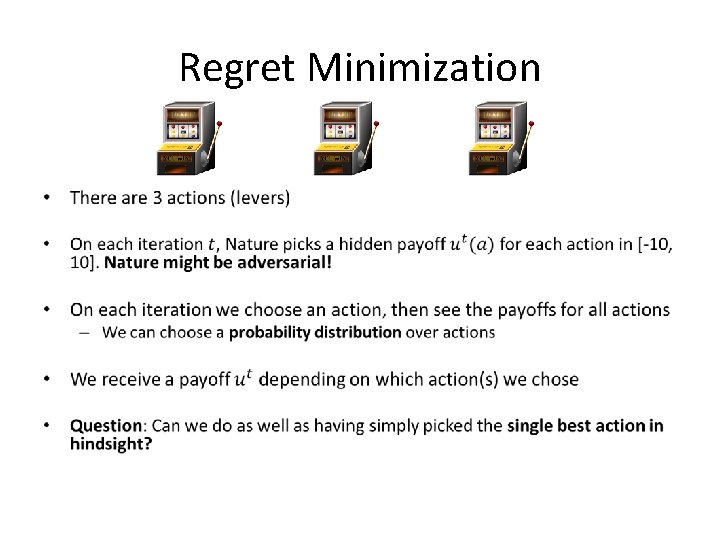

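Regret Minimization

Regret is the difference between the total payoff of the best single action in hindsight and the total payoff we actually received:

$$R^T = \max_{a} \sum_{t=1}^{T} u^t(a) - \sum_{t=1}^{T} u^t(\sigma^t)$$

where $u^t(a)$ is the payoff of action $a$ on iteration $t$ and $\sigma^t$ is the strategy we played on that iteration. A regret-minimizing algorithm drives the average regret $R^T/T$ to zero.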

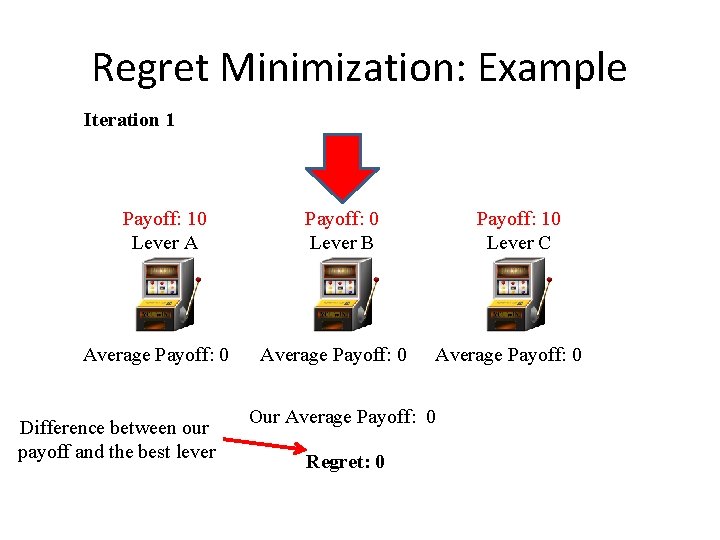

Regret Minimization: Example, Iteration 1. Payoffs this iteration: Lever A 10, Lever B 0, Lever C 10. Average payoffs so far: 0 for every lever, and our average payoff is 0. Regret (the difference between our average payoff and the best lever's average payoff): 0.

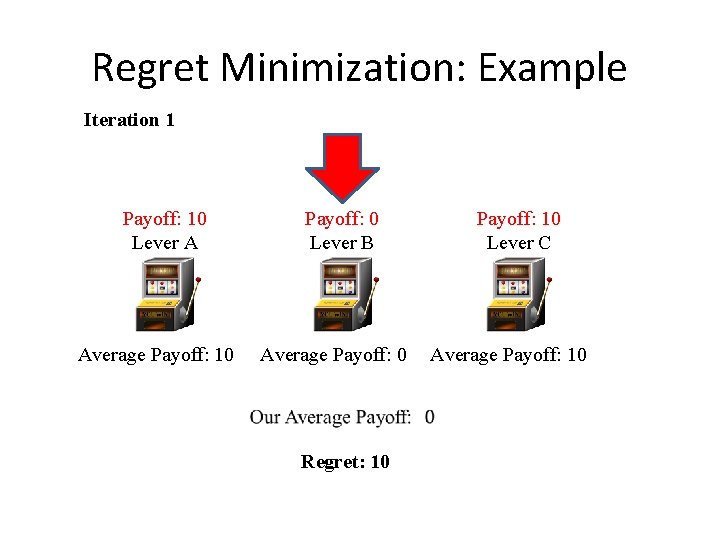

Regret Minimization: Example, after Iteration 1. Average payoffs: Lever A 10, Lever B 0, Lever C 10. Regret: 10.

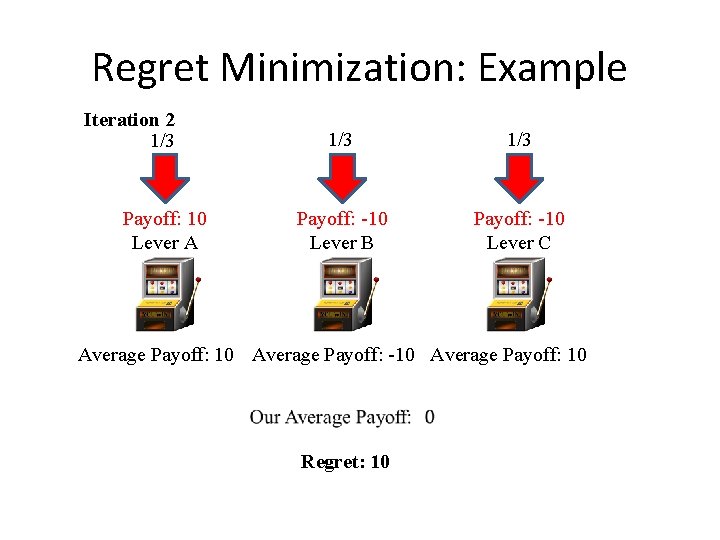

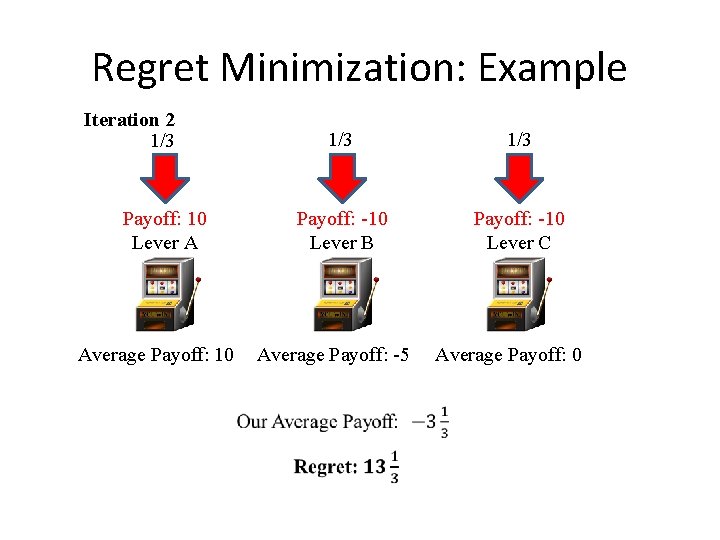

Regret Minimization: Example, Iteration 2 (probabilities of 1/3 are marked on the levers). Payoffs this iteration: Lever A 10, Lever B -10, Lever C -10. Regret so far: 10.

Regret Minimization: Example, after Iteration 2. Average payoffs: Lever A 10, Lever B -5, Lever C 0.
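The lever numbers can be reproduced in a few lines. This sketch assumes regret is measured with averages (best lever's average payoff minus our average payoff) and assumes our own payoff on iteration 1 was 0, which is what a regret of 10 implies; `regret` and `average` are illustrative helpers.

```python
def average(xs):
    return sum(xs) / len(xs)


def regret(lever_payoffs, our_payoffs):
    """Best lever's average payoff minus our average payoff.

    lever_payoffs: dict lever -> list of that lever's per-iteration payoffs.
    our_payoffs: list of the payoffs we actually received.
    """
    best = max(average(p) for p in lever_payoffs.values())
    return best - average(our_payoffs)


levers = {"A": [10], "B": [0], "C": [10]}    # iteration 1 payoffs from the slides
print(regret(levers, our_payoffs=[0]))       # 10.0 (assumes we received 0)

# Iteration 2 payoffs from the slides: A 10, B -10, C -10.
for lever, payoff in {"A": 10, "B": -10, "C": -10}.items():
    levers[lever].append(payoff)
print({k: average(v) for k, v in levers.items()})   # {'A': 10.0, 'B': -5.0, 'C': 0.0}
```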

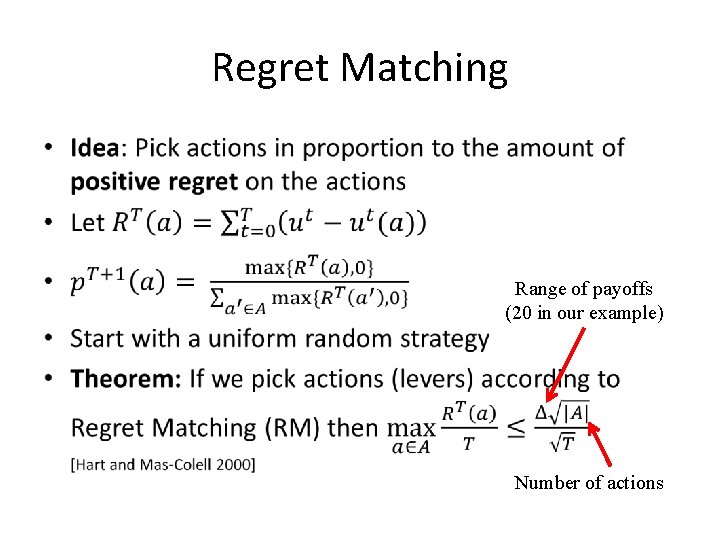

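Regret Matching: on each iteration, play each action with probability proportional to its positive cumulative regret, and uniformly at random if no action has positive regret. The average regret is then bounded by

$$\frac{R^T}{T} \le \frac{\Delta \sqrt{|A|}}{\sqrt{T}}$$

where $\Delta$ is the range of payoffs (20 in our example) and $|A|$ is the number of actions.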
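A minimal sketch of the regret-matching rule and the bound, with hypothetical function names. `regret_matching` maps cumulative regrets to a strategy; `average_regret_bound` evaluates the bound for the example's payoff range of 20, with an assumed 3 actions and 10,000 iterations.

```python
import math


def regret_matching(regret_sum):
    """Strategy proportional to positive cumulative regret; uniform if none is positive."""
    positive = [max(r, 0.0) for r in regret_sum]
    total = sum(positive)
    n = len(regret_sum)
    if total > 0:
        return [r / total for r in positive]
    return [1.0 / n] * n


def average_regret_bound(payoff_range, num_actions, iterations):
    """Bound on average regret: Delta * sqrt(|A|) / sqrt(T)."""
    return payoff_range * math.sqrt(num_actions) / math.sqrt(iterations)


print(regret_matching([10.0, 0.0, 10.0]))              # [0.5, 0.0, 0.5]
print(regret_matching([-1.0, -2.0]))                   # [0.5, 0.5]
print(round(average_regret_bound(20, 3, 10_000), 3))   # 0.346
```

The bound shrinks like $1/\sqrt{T}$: quadrupling the number of iterations halves it.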

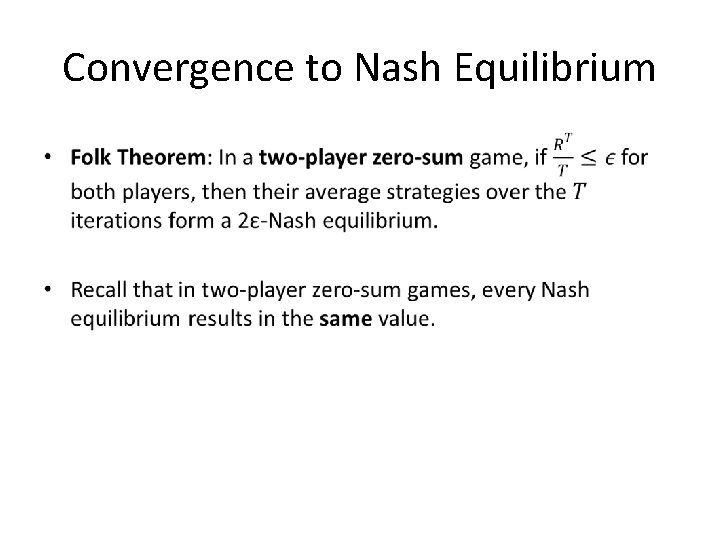

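Convergence to Nash Equilibrium: in a two-player zero-sum game, if each player's average regret is at most ε, then the players' average strategies form a 2ε-Nash equilibrium. Since regret matching drives average regret to zero, the average strategies of two regret-matching players converge to a Nash equilibrium.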

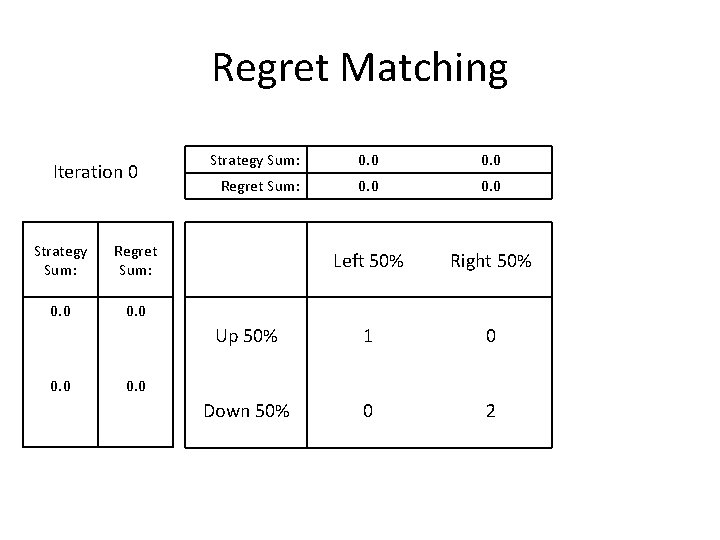

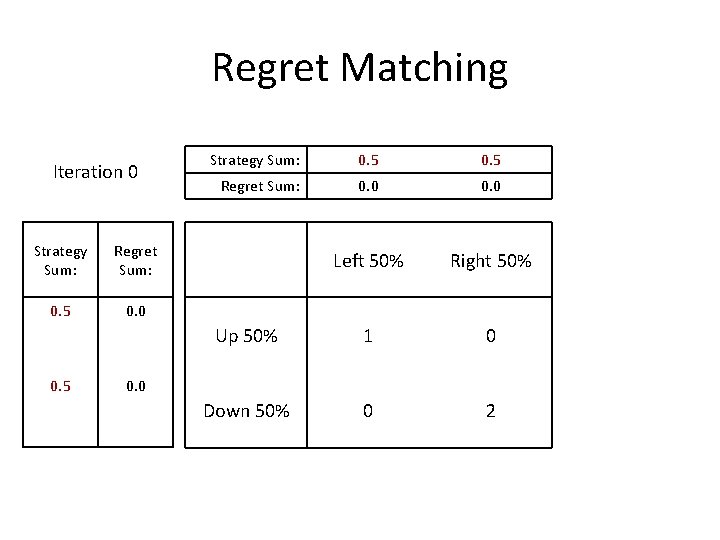

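Regret Matching, Iteration 0

P1 chooses Up or Down and P2 chooses Left or Right; entries are P1's payoffs. Both players start at 50% / 50%.

| | Left (50%) | Right (50%) |
|---|---|---|
| Up (50%) | 1 | 0 |
| Down (50%) | 0 | 2 |

Strategy sums and regret sums are 0.0 for every action.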

Regret Matching, Iteration 0: each player adds its current strategy to its strategy sum, giving (0.5, 0.5) for P1 (Up, Down) and (0.5, 0.5) for P2 (Left, Right). Regret sums remain 0.0.

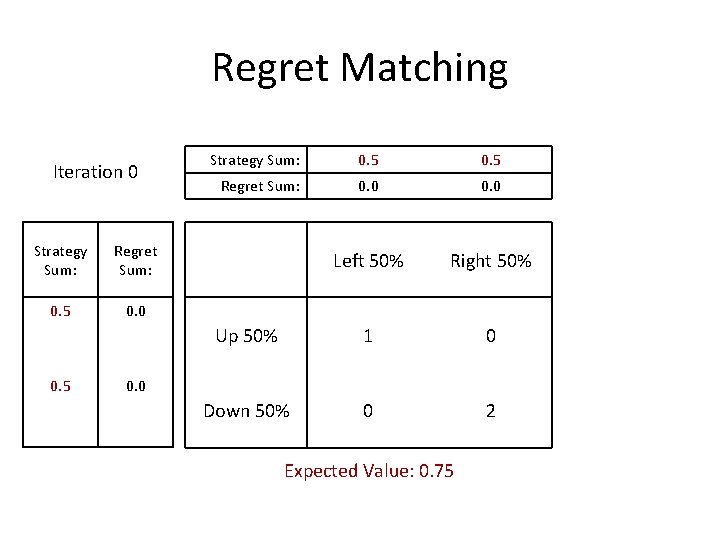

Regret Matching, Iteration 0: the expected value for P1 of the current strategies is 0.75 (= 0.25 × 1 + 0.25 × 2).

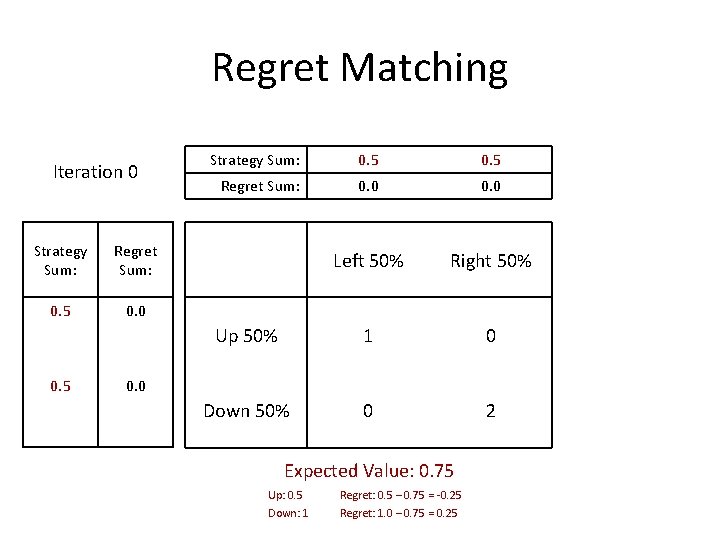

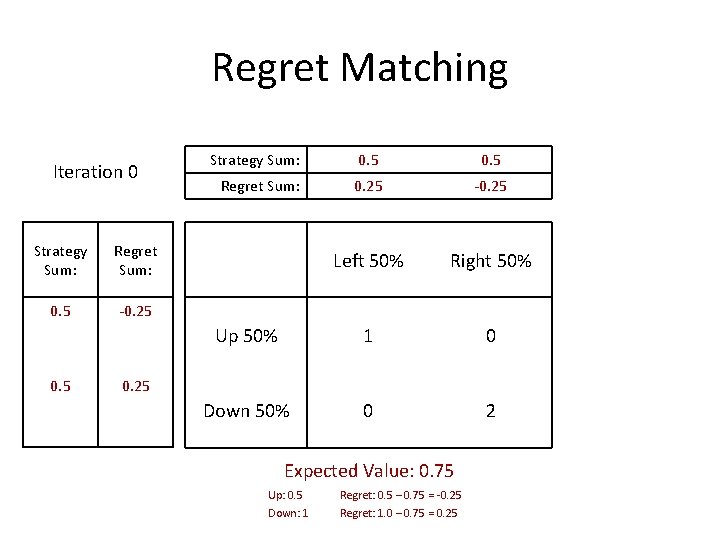

Regret Matching, Iteration 0: against P2's current strategy, Up is worth 0.5 and Down is worth 1.0 (expected value 0.75). P1's regrets are therefore Up: 0.5 - 0.75 = -0.25 and Down: 1.0 - 0.75 = 0.25.

Regret Matching, Iteration 0: the regrets are added to the regret sums, giving P1 (Up, Down) = (-0.25, 0.25). P2's regrets, computed the same way from P2's payoffs (the negatives of P1's), give P2 (Left, Right) = (0.25, -0.25). Strategy sums stay at (0.5, 0.5) for both players.

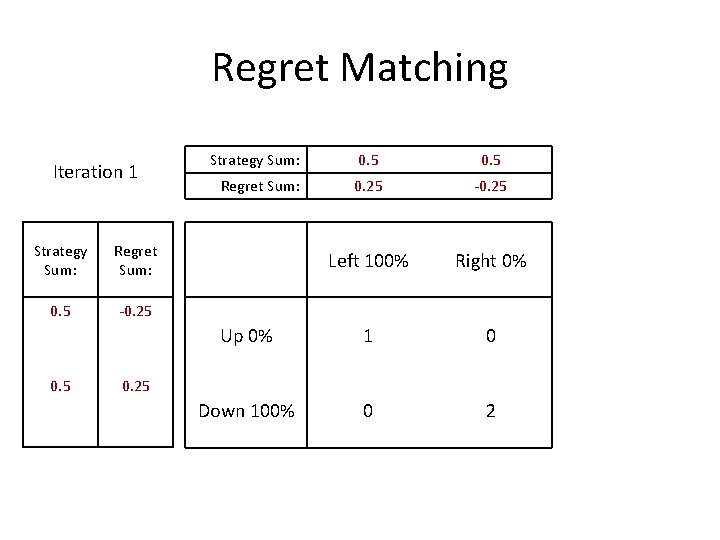

Regret Matching, Iteration 1: each player now plays in proportion to positive regret, so P1 plays Up 0%, Down 100% and P2 plays Left 100%, Right 0%. (Strategy sums: (0.5, 0.5) for both players; regret sums: P1 (-0.25, 0.25), P2 (0.25, -0.25).)
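The walkthrough can be reproduced with a short self-play loop. This sketch assumes the game is zero-sum (P2's payoff is the negative of each matrix entry, which matches the P2 regrets on the slides) and uses simultaneous updates; the function names are hypothetical. It prints the iteration-0 numbers from the slides, then runs 100,000 iterations, after which both average strategies should be within about 0.02 of the equilibrium (2/3, 1/3).

```python
# PAYOFF[i][j] is P1's payoff for P1 action i (0 = Up, 1 = Down) and P2 action j
# (0 = Left, 1 = Right). Assumption: zero-sum, so P2's payoff is -PAYOFF[i][j].
PAYOFF = [[1.0, 0.0],
          [0.0, 2.0]]


def regret_matching(regret_sum):
    """Play in proportion to positive regret; uniform if no regret is positive."""
    positive = [max(r, 0.0) for r in regret_sum]
    total = sum(positive)
    if total > 0:
        return [r / total for r in positive]
    return [1.0 / len(regret_sum)] * len(regret_sum)


def iterate(p1, p2, r1, r2, s1, s2):
    """One iteration with simultaneous updates; returns P1's expected value."""
    for a in range(2):                         # add current strategies to the sums
        s1[a] += p1[a]
        s2[a] += p2[a]
    # Value of each pure action against the opponent's current strategy.
    v1 = [PAYOFF[i][0] * p2[0] + PAYOFF[i][1] * p2[1] for i in range(2)]
    v2 = [-(PAYOFF[0][j] * p1[0] + PAYOFF[1][j] * p1[1]) for j in range(2)]
    ev = p1[0] * v1[0] + p1[1] * v1[1]         # P1's EV; P2's EV is -ev
    for a in range(2):
        r1[a] += v1[a] - ev
        r2[a] += v2[a] + ev
    return ev


# Iteration 0 from the slides: both players start at 50% / 50%.
r1, r2, s1, s2 = [0.0, 0.0], [0.0, 0.0], [0.0, 0.0], [0.0, 0.0]
ev0 = iterate([0.5, 0.5], [0.5, 0.5], r1, r2, s1, s2)
print(ev0, r1, r2)                                 # 0.75 [-0.25, 0.25] [0.25, -0.25]
print(regret_matching(r1), regret_matching(r2))    # [0.0, 1.0] [1.0, 0.0]


def average_strategies(iterations):
    r1, r2, s1, s2 = [0.0, 0.0], [0.0, 0.0], [0.0, 0.0], [0.0, 0.0]
    for _ in range(iterations):
        iterate(regret_matching(r1), regret_matching(r2), r1, r2, s1, s2)
    return [x / sum(s1) for x in s1], [x / sum(s2) for x in s2]


avg1, avg2 = average_strategies(100_000)
print(avg1, avg2)                                  # both approach [2/3, 1/3]
```

The current strategies keep oscillating between pure strategies; it is the running average that converges, which is why the strategy sums are tracked.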

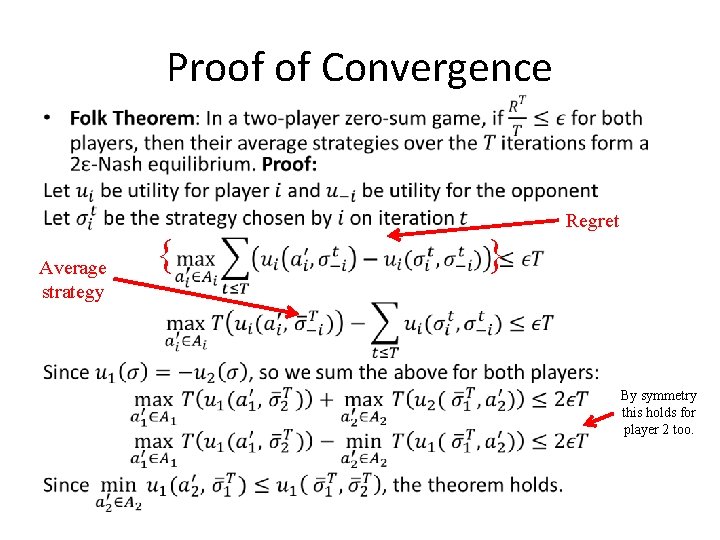

Proof of Convergence: the argument expresses player 1's best-response value against player 2's average strategy in terms of player 1's regret. By symmetry this holds for player 2 too.
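One way to fill in the algebra, reconstructed from the standard argument rather than copied from the slide. Work in the normal-form setting used for regret matching, let $\bar\sigma_i^T = \frac{1}{T}\sum_{t=1}^{T}\sigma_i^t$ be player $i$'s average strategy, let $R_i^T = \max_{\sigma_i'}\sum_{t=1}^{T} u_i(\sigma_i', \sigma_{-i}^t) - \sum_{t=1}^{T} u_i(\sigma^t)$ be player $i$'s regret, and assume a two-player zero-sum game ($u_2 = -u_1$):

$$
\max_{\sigma_1'} u_1(\sigma_1', \bar\sigma_2^T)
= \max_{\sigma_1'} \frac{1}{T}\sum_{t=1}^{T} u_1(\sigma_1', \sigma_2^t)
= \frac{1}{T}\sum_{t=1}^{T} u_1(\sigma^t) + \frac{R_1^T}{T}
$$

The first step uses the fact that $u_1$ is linear in player 2's mixed strategy. The same identity holds for player 2, and adding the two (the $\sum_t u_i(\sigma^t)$ terms cancel because $u_2 = -u_1$) gives

$$
\max_{\sigma_1'} u_1(\sigma_1', \bar\sigma_2^T) + \max_{\sigma_2'} u_2(\bar\sigma_1^T, \sigma_2') = \frac{R_1^T + R_2^T}{T}
$$

The left side is the total amount the two players could gain by best-responding to the average profile, which is zero exactly at a Nash equilibrium. So if both average regrets go to zero, the average strategies converge to a Nash equilibrium.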

![Warm Starting for Regret Minimization [Brown & Sandholm AAAI-16]: convergence to Nash](http://slidetodoc.com/presentation_image_h/a2bc5896540a3d89f51f3bd274998b7d/image-21.jpg)

Figure: Warm Starting for Regret Minimization [Brown & Sandholm AAAI-16], convergence to Nash. Distance from Nash equilibrium (y-axis, 0 to 0.12) versus iterations (x-axis, 0 to 2,000).

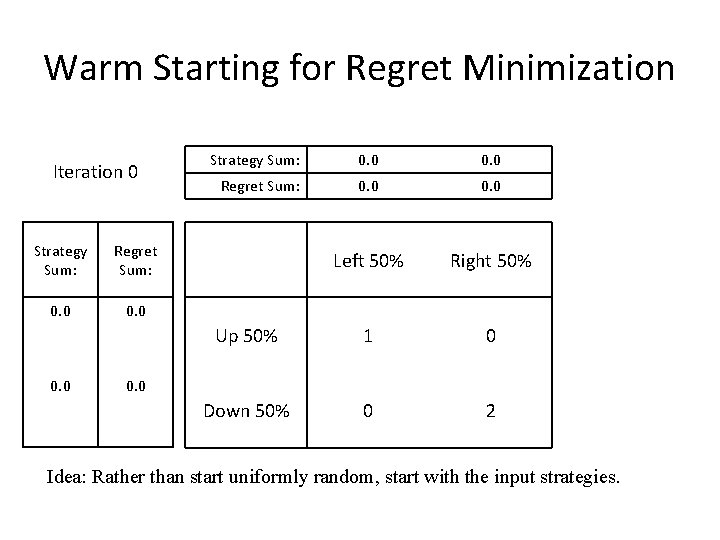

Warm Starting for Regret Minimization, Iteration 0: the same game, with both players at 50% / 50% and all strategy and regret sums at 0.0. Idea: rather than starting from uniformly random strategies, start from the input strategies.

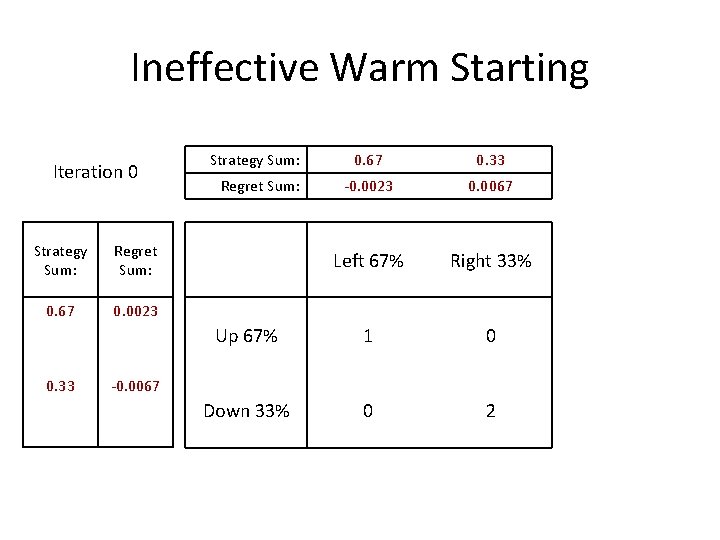

Ineffective Warm Starting, Iteration 0: both players start at the input strategies, P1 Up 67% / Down 33% and P2 Left 67% / Right 33% (close to this game's equilibrium of 2/3 and 1/3), with all strategy and regret sums at 0.0.

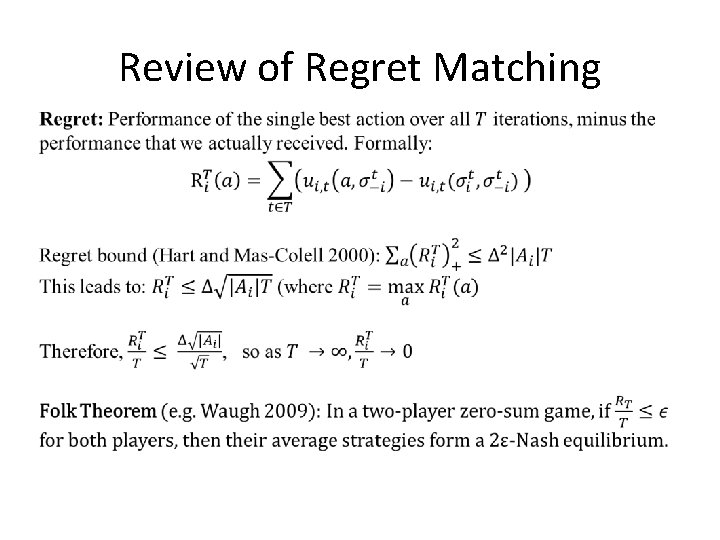

Ineffective Warm Starting, after Iteration 0: strategy sums are P1 (0.67, 0.33) and P2 (0.67, 0.33); regret sums are P1 (Up, Down) = (0.0023, -0.0067) and P2 (Left, Right) = (-0.0023, 0.0067). Because the input strategies are nearly optimal, all regrets are tiny.

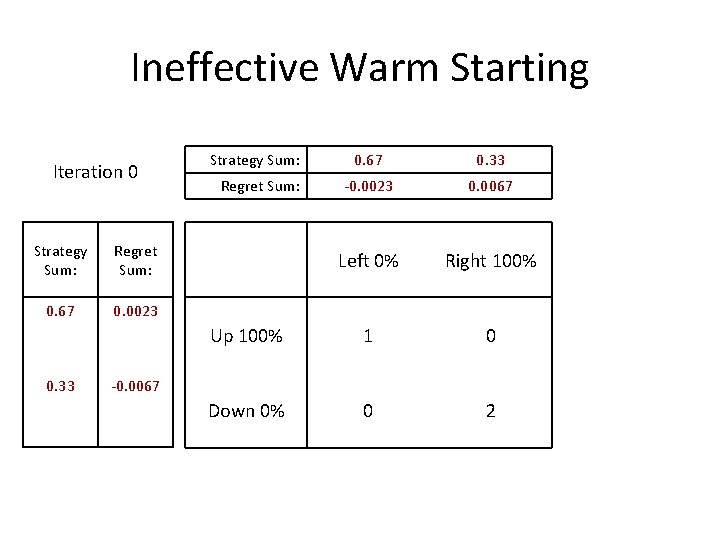

Ineffective Warm Starting, next iteration: regret matching puts all probability on the actions with positive regret, so P1 plays Up 100%, Down 0% and P2 plays Left 0%, Right 100%, far from the input strategies.

Ineffective Warm Starting: after that iteration the regret sums become P1 (Up, Down) = (0.0023, 1.9933) and P2 (Left, Right) = (-1.0023, 0.0067). Intuitively, not initializing the regrets is similar to not setting the initial step size in gradient descent.
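The failure is easy to reproduce. This sketch uses the same 2x2 game (assumed zero-sum), starts both players at exactly 0.67 / 0.33 with zero regrets, and applies two regret-matching updates; the helper names are hypothetical. The first regrets are tiny (their exact values depend on how the input strategies are rounded), yet regret matching immediately jumps to pure strategies, and one iteration later P1's regret on Down is about 2.

```python
# P1's payoffs (rows Up/Down, columns Left/Right); assumed zero-sum.
PAYOFF = [[1.0, 0.0], [0.0, 2.0]]


def regret_matching(regret_sum):
    """Proportional to positive regret; uniform (two actions) if none is positive."""
    positive = [max(r, 0.0) for r in regret_sum]
    total = sum(positive)
    return [r / total for r in positive] if total > 0 else [0.5, 0.5]


def update_regrets(p1, p2, r1, r2):
    """Add one iteration's instantaneous regrets for both players."""
    v1 = [PAYOFF[i][0] * p2[0] + PAYOFF[i][1] * p2[1] for i in range(2)]
    v2 = [-(PAYOFF[0][j] * p1[0] + PAYOFF[1][j] * p1[1]) for j in range(2)]
    ev = p1[0] * v1[0] + p1[1] * v1[1]
    for a in range(2):
        r1[a] += v1[a] - ev
        r2[a] += v2[a] + ev


p1, p2 = [0.67, 0.33], [0.67, 0.33]   # input strategies, close to the equilibrium
r1, r2 = [0.0, 0.0], [0.0, 0.0]       # regrets are NOT initialized
update_regrets(p1, p2, r1, r2)
print(r1, r2)                         # tiny regrets, on the order of 0.01 or less

p1, p2 = regret_matching(r1), regret_matching(r2)
print(p1, p2)                         # [1.0, 0.0] [0.0, 1.0]: pure strategies
update_regrets(p1, p2, r1, r2)
print(r1)                             # Down's regret jumps to about 2
```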

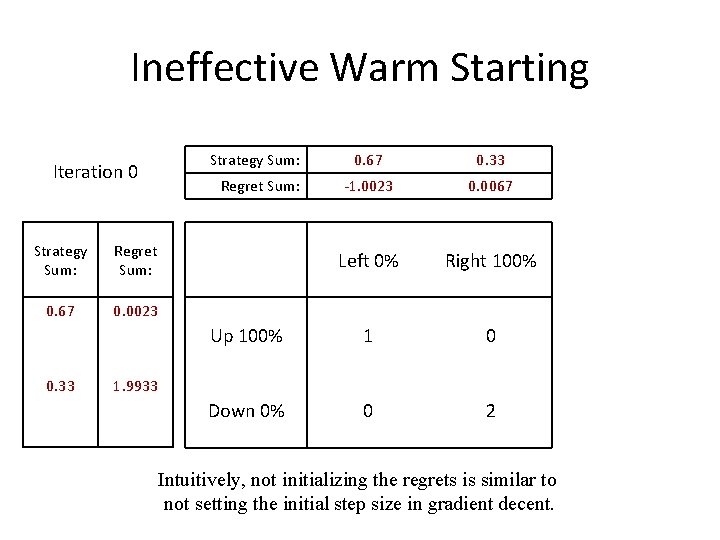

Review of Regret Matching

![Warm Starting for Regret Minimization [Brown & Sandholm AAAI-16]](http://slidetodoc.com/presentation_image_h/a2bc5896540a3d89f51f3bd274998b7d/image-28.jpg)

Warm Starting for Regret Minimization [Brown & Sandholm AAAI-16]

![Warm Starting for Regret Minimization [Brown & Sandholm AAAI-16]: cancels out](http://slidetodoc.com/presentation_image_h/a2bc5896540a3d89f51f3bd274998b7d/image-29.jpg)

Warm Starting for Regret Minimization [Brown & Sandholm AAAI-16] (slide annotation: "Cancels out")

![Warm Starting for Regret Minimization [Brown & Sandholm AAAI-16]](http://slidetodoc.com/presentation_image_h/a2bc5896540a3d89f51f3bd274998b7d/image-30.jpg)

Warm Starting for Regret Minimization [Brown & Sandholm AAAI-16]

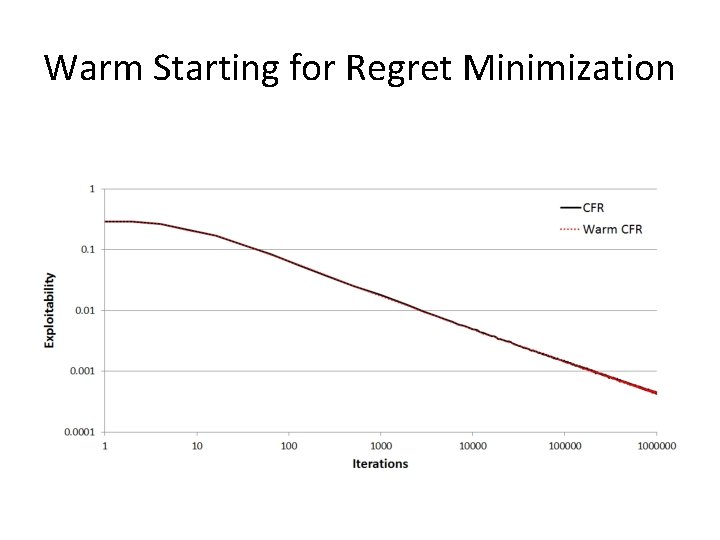

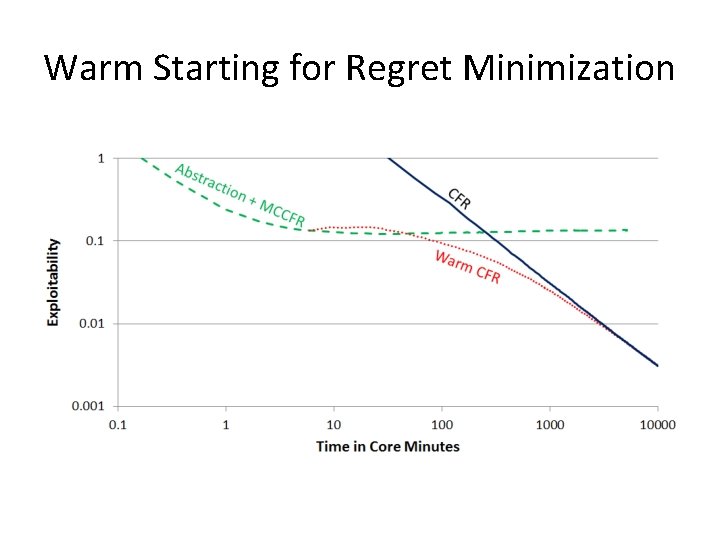

Warm Starting for Regret Minimization


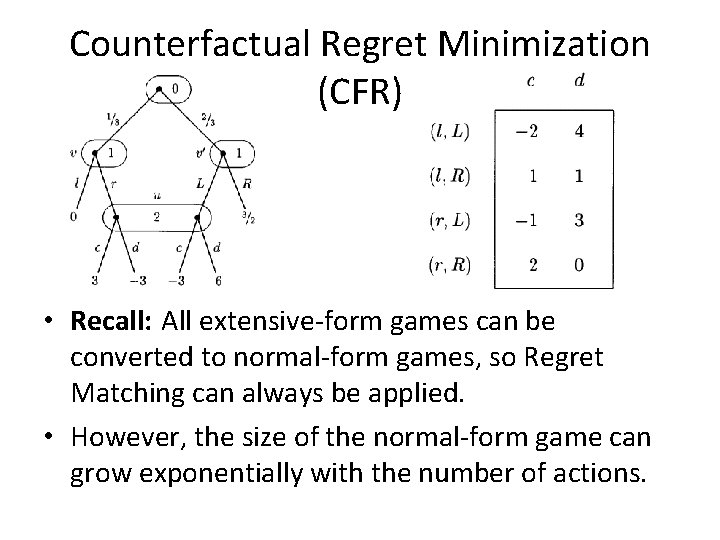

Counterfactual Regret Minimization (CFR)

- Recall: every extensive-form game can be converted to a normal-form game, so Regret Matching can always be applied.
- However, the normal-form game can be exponentially larger: a pure strategy picks an action at every information set, so the number of pure strategies grows exponentially with the number of information sets.
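To see the blow-up, count pure strategies directly. The sketch below (hypothetical helpers, made-up sizes) counts them for a player with only two-action decisions and compares that with the one-regret-per-action table that a tree-based method such as CFR keeps.

```python
import math


def num_pure_strategies(actions_per_infoset):
    """A normal-form pure strategy fixes one action at each of the player's
    information sets, so the count is the product of the action counts."""
    return math.prod(actions_per_infoset)


def num_regrets(actions_per_infoset):
    """A tree-based method keeps one regret per (information set, action) pair."""
    return sum(actions_per_infoset)


binary_infosets = [2] * 30                    # hypothetical: 30 two-action decisions
print(num_pure_strategies(binary_infosets))   # 1073741824
print(num_regrets(binary_infosets))           # 60
```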

![Counterfactual Regret Minimization (CFR) [Zinkevich et al. NIPS-2007]](http://slidetodoc.com/presentation_image_h/a2bc5896540a3d89f51f3bd274998b7d/image-34.jpg)

Counterfactual Regret Minimization (CFR) [Zinkevich et al. NIPS-2007]

![Counterfactual Regret Minimization (CFR) [Zinkevich et al. NIPS-2007]](http://slidetodoc.com/presentation_image_h/a2bc5896540a3d89f51f3bd274998b7d/image-35.jpg)

Figure: an example game tree with a P1 decision at the root, P2 decisions below it, and further P1 decisions; leaf payoffs are 1 or -1.

![Counterfactual Regret Minimization (CFR) [Zinkevich et al. NIPS-2007]](http://slidetodoc.com/presentation_image_h/a2bc5896540a3d89f51f3bd274998b7d/image-36.jpg)

Figure: the same tree annotated with the current strategy: P1 plays 0.5 at the root and P2 plays 0.1 / 0.9.

![Counterfactual Regret Minimization (CFR) [Zinkevich et al. NIPS-2007]](http://slidetodoc.com/presentation_image_h/a2bc5896540a3d89f51f3bd274998b7d/image-37.jpg)

Figure (repeated across the following animation steps): the lower P1 decision is also annotated at 0.5 / 0.5, with leaf payoffs of -1 shown.

![Counterfactual Regret Minimization (CFR) [Zinkevich et al. NIPS-2007]](http://slidetodoc.com/presentation_image_h/a2bc5896540a3d89f51f3bd274998b7d/image-38.jpg)

![Counterfactual Regret Minimization (CFR) [Zinkevich et al. NIPS-2007]](http://slidetodoc.com/presentation_image_h/a2bc5896540a3d89f51f3bd274998b7d/image-39.jpg)

![Counterfactual Regret Minimization (CFR) [Zinkevich et al. NIPS-2007]](http://slidetodoc.com/presentation_image_h/a2bc5896540a3d89f51f3bd274998b7d/image-40.jpg)

![Counterfactual Regret Minimization (CFR) [Zinkevich et al. NIPS-2007]](http://slidetodoc.com/presentation_image_h/a2bc5896540a3d89f51f3bd274998b7d/image-41.jpg)

![Counterfactual Regret Minimization (CFR) [Zinkevich et al. NIPS-2007]](http://slidetodoc.com/presentation_image_h/a2bc5896540a3d89f51f3bd274998b7d/image-42.jpg)

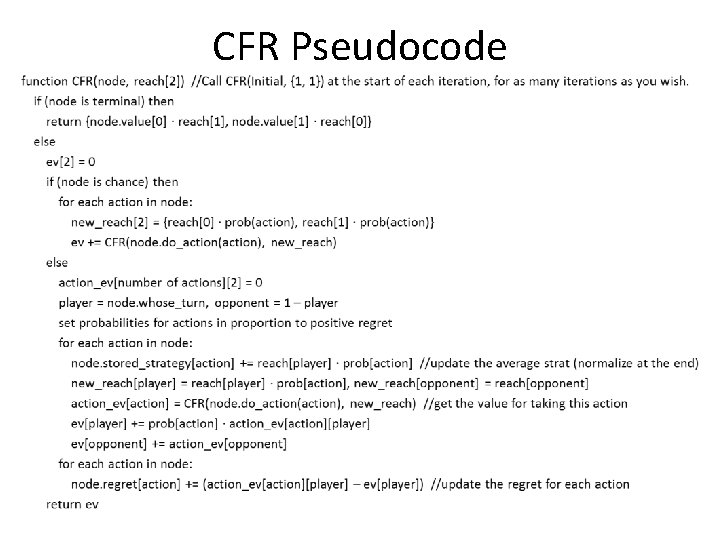

CFR Pseudocode

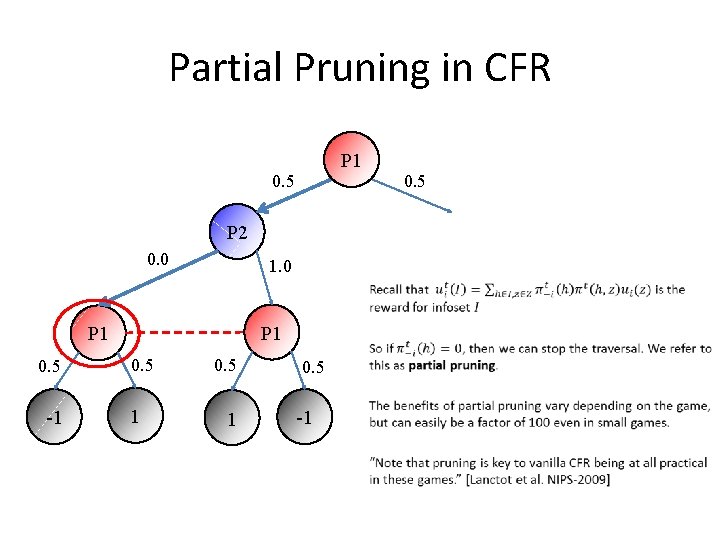
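The pseudocode is not reproduced in the extracted text. As a stand-in, here is a minimal sketch of vanilla CFR (simultaneous updates, every chance outcome traversed on each iteration, strategies frozen at the start of each iteration) on Kuhn poker, a three-card toy game chosen here as an assumption; it is not the slide's pseudocode, and all names are hypothetical. Regrets are weighted by the opponent's and chance's reach probability, and the average strategy by the acting player's own reach.

```python
import itertools

ACTIONS = "pb"                                      # p = pass/check/fold, b = bet/call
DEALS = list(itertools.permutations(range(3), 2))   # (P1 card, P2 card); J=0, Q=1, K=2
CHANCE = 1.0 / len(DEALS)                           # every deal is equally likely


class Node:
    """Cumulative regrets and strategy sums for one information set."""

    def __init__(self):
        self.regret_sum = [0.0, 0.0]
        self.strategy_sum = [0.0, 0.0]
        self.current = [0.5, 0.5]        # strategy played during the current iteration

    def regret_matching(self):
        positive = [max(r, 0.0) for r in self.regret_sum]
        total = sum(positive)
        return [p / total for p in positive] if total > 0 else [0.5, 0.5]

    def average_strategy(self):
        total = sum(self.strategy_sum)
        return [s / total for s in self.strategy_sum] if total > 0 else [0.5, 0.5]


nodes = {}                               # information-set key (card + history) -> Node


def payoff_p1(cards, h):
    """P1's payoff at terminal history h (ante 1, bet 1), or None if h is not terminal."""
    if h == "bp":
        return 1                         # P2 folded to P1's bet
    if h == "pbp":
        return -1                        # P1 folded to P2's bet
    if h in ("pp", "bb", "pbb"):         # showdown
        stake = 1 if h == "pp" else 2
        return stake if cards[0] > cards[1] else -stake
    return None


def cfr(cards, h, reach):
    """Return P1's expected payoff below h; reach = [pi_P1, pi_P2] (chance excluded)."""
    u = payoff_p1(cards, h)
    if u is not None:
        return u
    player = len(h) % 2                  # P1 acts at "" and "pb", P2 at "p" and "b"
    key = str(cards[player]) + h
    if key not in nodes:
        nodes[key] = Node()
    node = nodes[key]
    sigma = node.current
    values = []
    for a in range(2):
        child_reach = list(reach)
        child_reach[player] *= sigma[a]
        values.append(cfr(cards, h + ACTIONS[a], child_reach))
    node_value = sigma[0] * values[0] + sigma[1] * values[1]
    sign = 1 if player == 0 else -1      # P2's payoff is the negative of P1's
    cf_reach = reach[1 - player] * CHANCE    # opponent's and chance's reach
    for a in range(2):
        node.regret_sum[a] += cf_reach * sign * (values[a] - node_value)
        node.strategy_sum[a] += reach[player] * sigma[a]
    return node_value


def train(iterations):
    for _ in range(iterations):
        for node in nodes.values():      # freeze this iteration's strategies
            node.current = node.regret_matching()
        for cards in DEALS:
            cfr(cards, "", [1.0, 1.0])


def average_value(cards, h=""):
    """P1's expected payoff when both players follow their average strategies."""
    u = payoff_p1(cards, h)
    if u is not None:
        return u
    sigma = nodes[str(cards[len(h) % 2]) + h].average_strategy()
    return sum(sigma[a] * average_value(cards, h + ACTIONS[a]) for a in range(2))


train(5000)
game_value = sum(average_value(c) for c in DEALS) / len(DEALS)
print(game_value)                        # should be close to -1/18 (about -0.0556)
```

Kuhn poker's value for P1 is -1/18, so the value of the average-strategy profile is a quick sanity check. Freezing each node's strategy at the start of an iteration matters here because each information set is visited under two different deals per iteration.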

Partial Pruning in CFR

Figure: the CFR example tree, now with P2 playing 0.0 / 1.0 (P1 plays 0.5 at the root and 0.5 / 0.5 at the lower decision; leaf payoffs of -1).

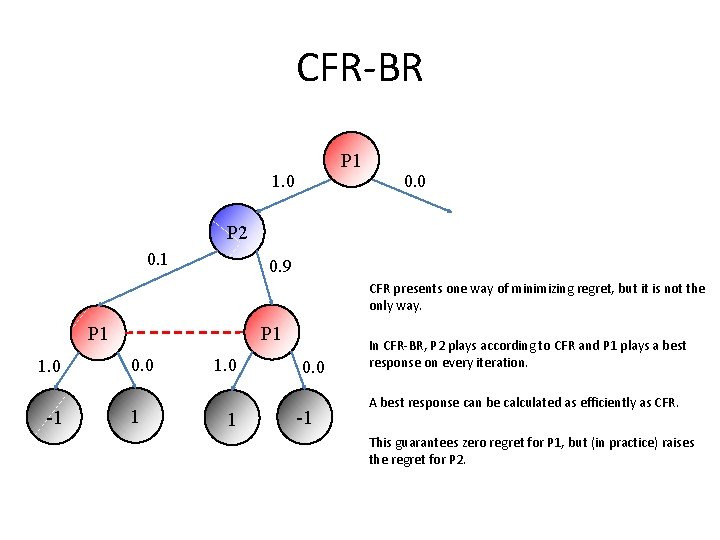

CFR-BR

CFR presents one way of minimizing regret, but it is not the only way. In CFR-BR, P2 plays according to CFR and P1 plays a best response on every iteration. A best response can be computed about as efficiently as a CFR iteration. This guarantees that P1 has no positive regret, but (in practice) raises the regret for P2.

Figure: the example tree with P1 playing 1.0 / 0.0 at the root and at its later decisions, against P2's 0.1 / 0.9; leaf payoffs are 1 and -1.
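A normal-form sketch of the CFR-BR idea, reusing the 2x2 game from the regret-matching slides (an assumption made for brevity): P2 runs regret matching, which is what CFR reduces to with a single information set, while P1 plays a pure best response to P2's current strategy on each iteration. Function names are hypothetical. Because P1's regret can never be positive, the convergence argument only needs P2's regret to shrink, and P2's average strategy approaches the equilibrium (2/3, 1/3).

```python
# P1's payoffs (rows Up/Down, columns Left/Right); assumed zero-sum.
PAYOFF = [[1.0, 0.0], [0.0, 2.0]]


def regret_matching(regret_sum):
    positive = [max(r, 0.0) for r in regret_sum]
    total = sum(positive)
    return [r / total for r in positive] if total > 0 else [0.5, 0.5]


def best_response(p2):
    """P1's pure best response to P2's mixed strategy (ties go to Up)."""
    values = [PAYOFF[i][0] * p2[0] + PAYOFF[i][1] * p2[1] for i in range(2)]
    best = 0 if values[0] >= values[1] else 1
    return [1.0 if i == best else 0.0 for i in range(2)]


def cfr_br(iterations):
    """Return P2's average strategy after the given number of iterations."""
    regrets2, sums2 = [0.0, 0.0], [0.0, 0.0]
    for _ in range(iterations):
        p2 = regret_matching(regrets2)
        p1 = best_response(p2)             # P1 can never accumulate positive regret
        v2 = [-(PAYOFF[0][j] * p1[0] + PAYOFF[1][j] * p1[1]) for j in range(2)]
        ev2 = p2[0] * v2[0] + p2[1] * v2[1]
        for j in range(2):
            regrets2[j] += v2[j] - ev2
            sums2[j] += p2[j]
    return [s / sum(sums2) for s in sums2]


avg2 = cfr_br(100_000)
print(avg2)                                # approaches [2/3, 1/3]
```

In an extensive-form game, P2's update would be a full CFR traversal and P1's best response would be computed by a separate tree traversal; the matrix version only shows the division of labor.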

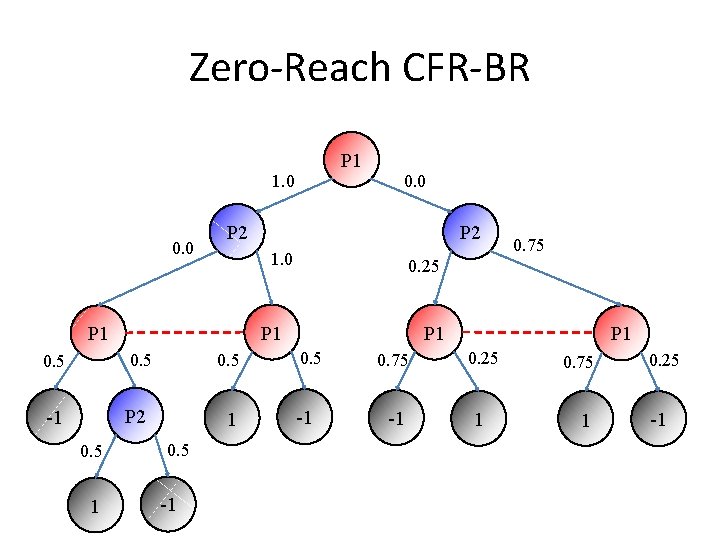

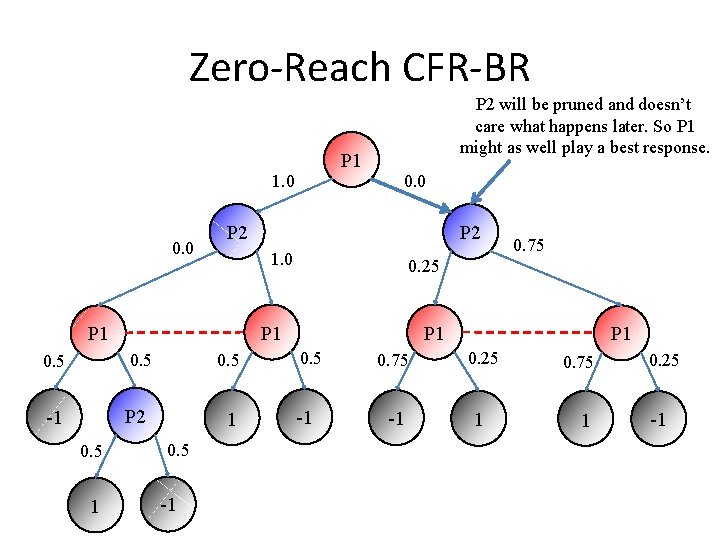

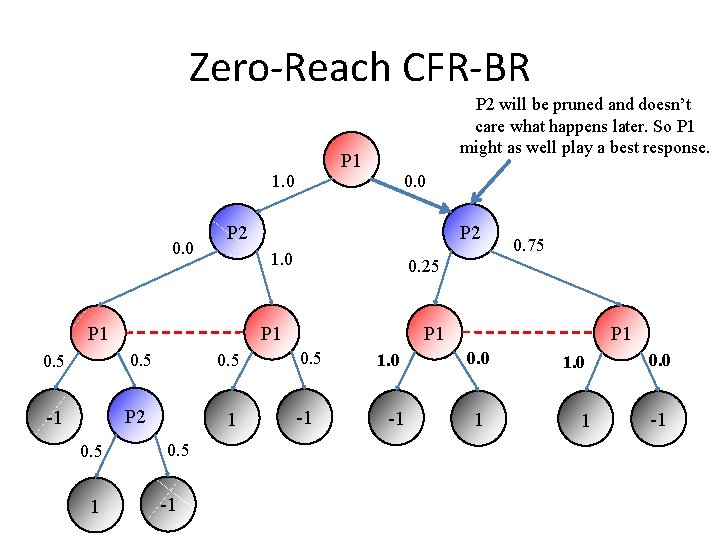

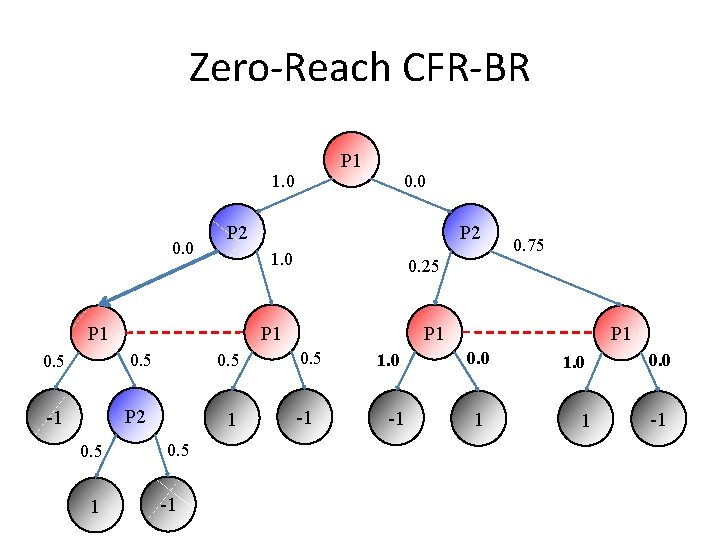

Zero-Reach CFR-BR

Figure: a larger example tree in which P1 plays 1.0 / 0.0 at the root; the remaining P1 and P2 decisions carry probabilities such as 0.5 / 0.5 and 0.75 / 0.25, and leaf payoffs are 1 and -1.

Zero-Reach CFR-BR

In the part of the tree that P1 reaches with probability 0.0, P2 will be pruned and doesn't care what happens later. So P1 might as well play a best response there.

Figure: in that subtree, P1's decisions now put probability 1.0 / 0.0 on a best response.


Zero-Reach CFR-BR improves on CFR by about a factor of 2. But we can do better!

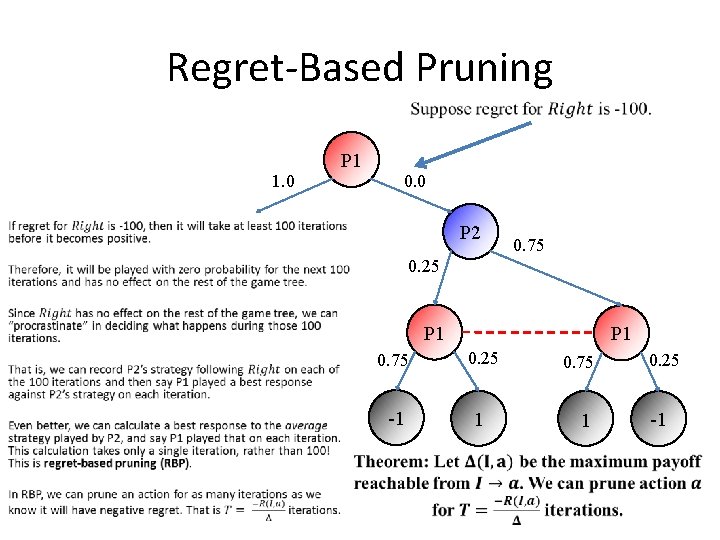

Regret-Based Pruning

Figure: the example tree with P1 playing 1.0 / 0.0 at the root and the remaining P2 and P1 decisions at 0.75 / 0.25; leaf payoffs are 1 and -1.

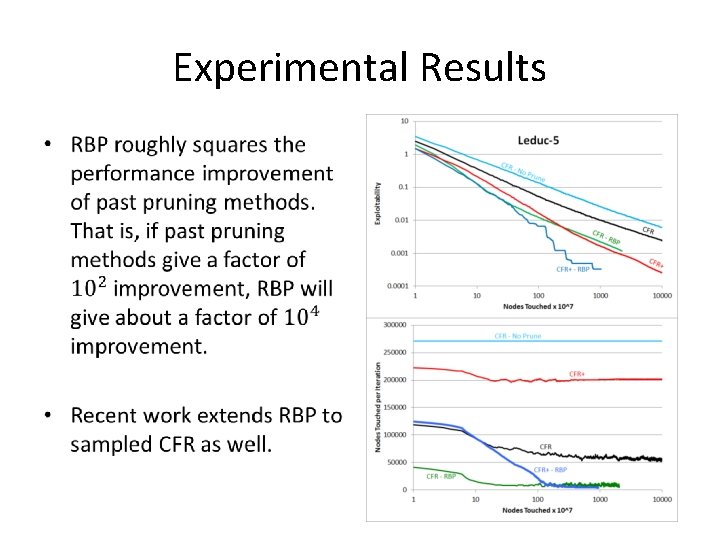

Experimental Results

Other CFR Variants

- CFR+: After each iteration, set all negative cumulative regrets to zero.
  - Same convergence bound, but faster in practice.
- Monte-Carlo CFR: Traverse the game tree separately for each player, sampling chance and opponent actions and treating the sampled actions as occurring with probability 1 (this is the external-sampling variant).
  - Iterations are much faster, which is particularly useful for large abstracted games.
  - The reach probability doesn't need to be passed down, since it is always 1.
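A sketch of the CFR+ regret rule (regret matching+) on the same 2x2 game, chosen here as an assumption: cumulative regrets are clamped at zero after every update, so an action that was bad for a long time can be picked up again as soon as it starts doing well. This sketch uses simultaneous updates and a plain average of strategies; other details of CFR+ are not covered, and the names are hypothetical.

```python
# P1's payoffs (rows Up/Down, columns Left/Right); assumed zero-sum.
PAYOFF = [[1.0, 0.0], [0.0, 2.0]]


def strategy(q):
    """Regrets are never negative here, so just normalize (uniform if all zero)."""
    total = sum(q)
    return [x / total for x in q] if total > 0 else [0.5, 0.5]


def rm_plus(iterations):
    q1, q2 = [0.0, 0.0], [0.0, 0.0]       # clamped cumulative regrets
    s1, s2 = [0.0, 0.0], [0.0, 0.0]       # strategy sums (plain average)
    for _ in range(iterations):
        p1, p2 = strategy(q1), strategy(q2)
        v1 = [PAYOFF[i][0] * p2[0] + PAYOFF[i][1] * p2[1] for i in range(2)]
        v2 = [-(PAYOFF[0][j] * p1[0] + PAYOFF[1][j] * p1[1]) for j in range(2)]
        ev = p1[0] * v1[0] + p1[1] * v1[1]
        for a in range(2):
            q1[a] = max(q1[a] + v1[a] - ev, 0.0)   # the "+": negative regret -> 0
            q2[a] = max(q2[a] + v2[a] + ev, 0.0)
            s1[a] += p1[a]
            s2[a] += p2[a]
    return [x / sum(s1) for x in s1], [x / sum(s2) for x in s2]


avg1, avg2 = rm_plus(100_000)
print(avg1, avg2)                         # both approach [2/3, 1/3]
```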

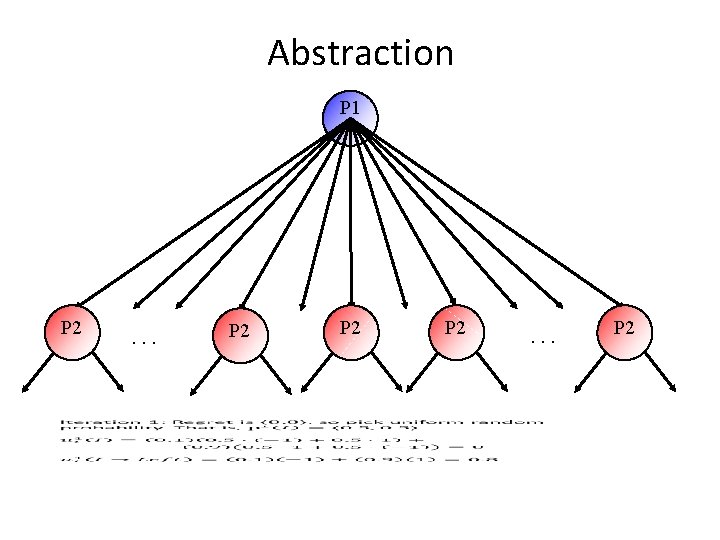

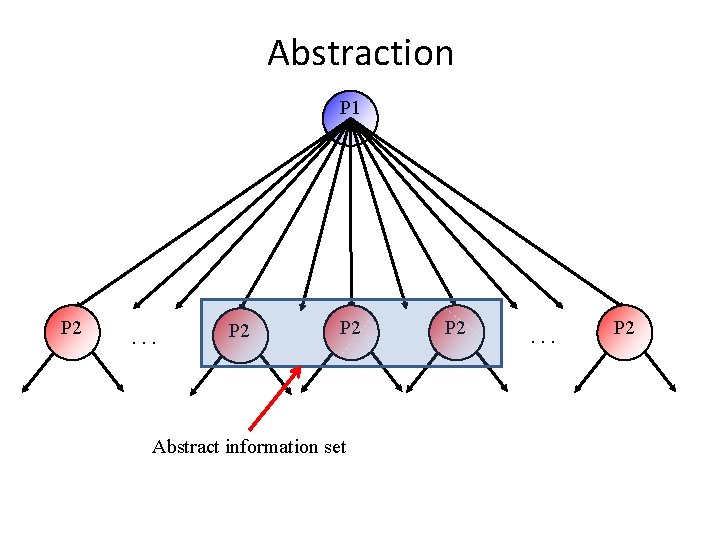

Abstraction

Figure: P1's decision leads to many P2 decision nodes (P2 ... P2); in the abstraction, groups of P2 nodes are merged into a single abstract information set.
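A toy illustration of the abstraction idea, under loudly stated assumptions: the hand names and equity values below are made up, and equal-width bucketing by equity is just one simple way to group information sets (the automated abstraction algorithms cited next are more sophisticated). Every hand in the same bucket shares one abstract information set, and therefore one strategy.

```python
def bucket(equity, num_buckets):
    """Map a hand's equity in [0, 1] to one of num_buckets equal-width buckets."""
    return min(int(equity * num_buckets), num_buckets - 1)


# Made-up equities for a few hands (illustrative values, not computed).
hands = {"AA": 0.85, "KQs": 0.63, "T9s": 0.54, "72o": 0.35, "32o": 0.31}

abstract_infosets = {}                    # bucket -> hands sharing one strategy
for hand, eq in hands.items():
    abstract_infosets.setdefault(bucket(eq, 4), []).append(hand)
print(abstract_infosets)   # {3: ['AA'], 2: ['KQs', 'T9s'], 1: ['72o', '32o']}
```

The equilibrium-finding algorithm then keeps one set of regrets per bucket instead of one per hand, which is where the memory savings (and the loss of precision) come from.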

![Standard Approach [Gilpin & Sandholm EC-06, J. of the ACM 2007…]](http://slidetodoc.com/presentation_image_h/a2bc5896540a3d89f51f3bd274998b7d/image-56.jpg)

Standard Approach [Gilpin & Sandholm EC-06, J. of the ACM 2007…]

Original game → (automated abstraction) → abstracted game → (custom equilibrium-finding algorithm) → abstract Nash equilibrium → (reverse model) → original game.

Foreshadowed by Shi & Littman 01, Billings et al. IJCAI-03.

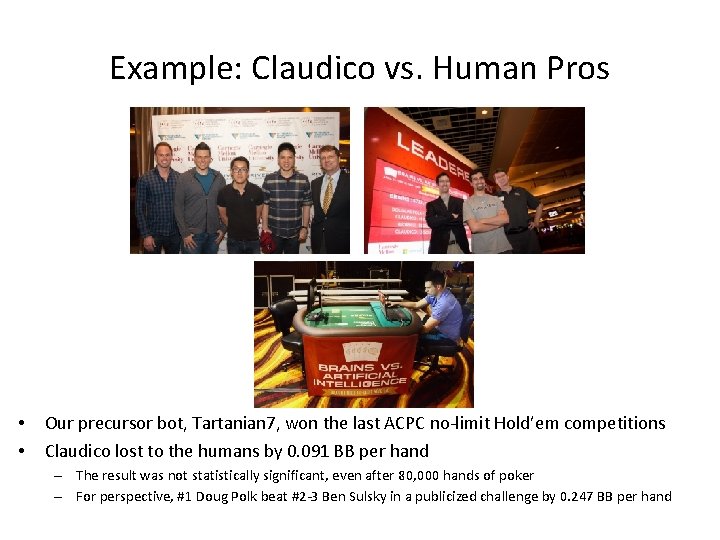

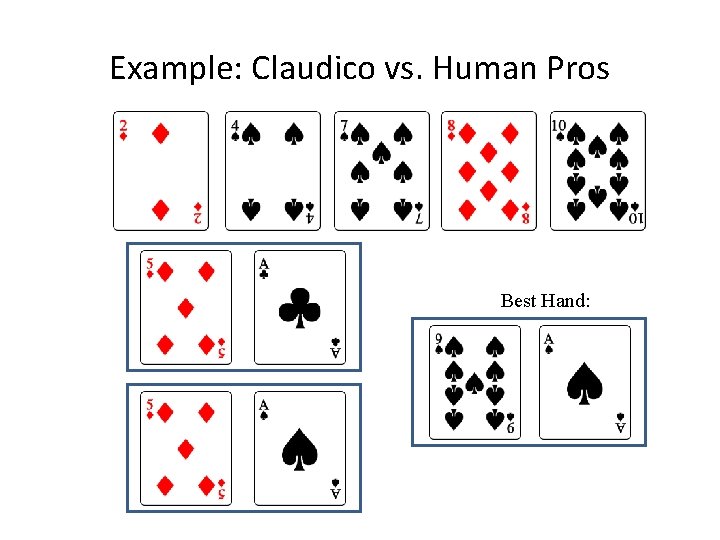

Example: Claudico vs. Human Pros

- Our precursor bot, Tartanian 7, won the last ACPC (Annual Computer Poker Competition) no-limit Hold'em competitions.
- Claudico lost to the humans by 0.091 big blinds (BB) per hand.
  - The result was not statistically significant, even after 80,000 hands of poker.
  - For perspective, #1 Doug Polk beat #2-3 Ben Sulsky in a publicized challenge by 0.247 BB per hand.

Example: Claudico vs. Human Pros

Best hand:

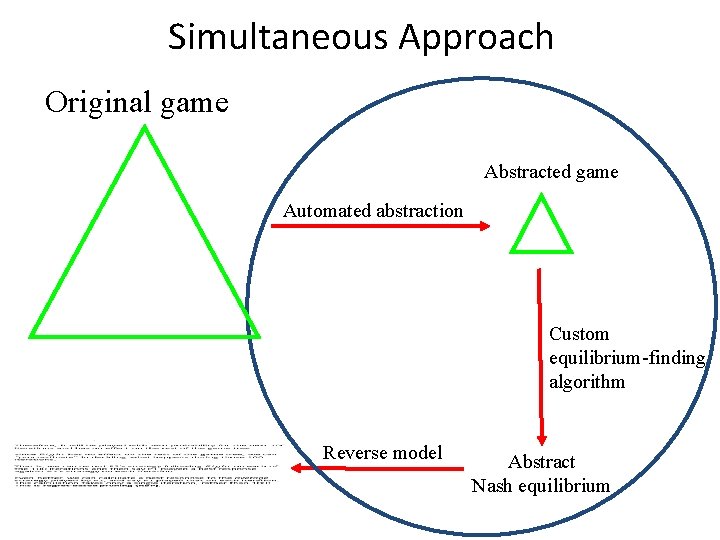

Simultaneous Approach

For comparison, the standard pipeline: original game → automated abstraction → abstracted game → custom equilibrium-finding algorithm → abstract Nash equilibrium → reverse model.

![Simultaneous Approach [Brown & Sandholm IJCAI-15]: simultaneous abstraction and equilibrium finding (SAEF)](http://slidetodoc.com/presentation_image_h/a2bc5896540a3d89f51f3bd274998b7d/image-60.jpg)

Simultaneous Approach [Brown & Sandholm IJCAI-15]

Original game → simultaneous abstraction and equilibrium finding (SAEF). The SAEF loop combines coarse abstraction, equilibrium finding, warm starting, abstraction refinement, and full-game exploitability calculation.

![Simultaneous Approach [Brown & Sandholm IJCAI-15]: simultaneous abstraction and equilibrium finding (SAEF)](http://slidetodoc.com/presentation_image_h/a2bc5896540a3d89f51f3bd274998b7d/image-61.jpg)

![Simultaneous Approach [Brown & Sandholm IJCAI-15]: simultaneous abstraction and equilibrium finding (SAEF)](http://slidetodoc.com/presentation_image_h/a2bc5896540a3d89f51f3bd274998b7d/image-62.jpg)

![Simultaneous Approach [Brown & Sandholm IJCAI-15]: simultaneous abstraction and equilibrium finding (SAEF)](http://slidetodoc.com/presentation_image_h/a2bc5896540a3d89f51f3bd274998b7d/image-63.jpg)

![Simultaneous Approach [Brown & Sandholm IJCAI-15]: simultaneous abstraction and equilibrium finding (SAEF)](http://slidetodoc.com/presentation_image_h/a2bc5896540a3d89f51f3bd274998b7d/image-64.jpg)

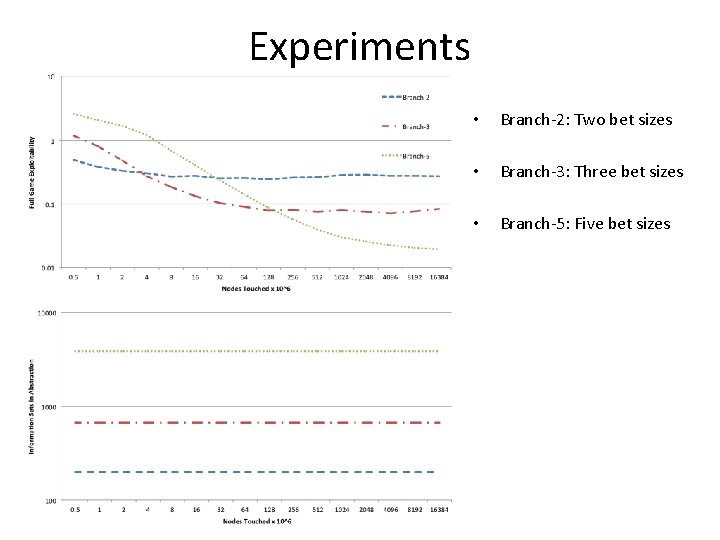

Experiments • Branch-2: Two bet sizes • Branch-3: Three bet sizes • Branch-5: Five bet sizes

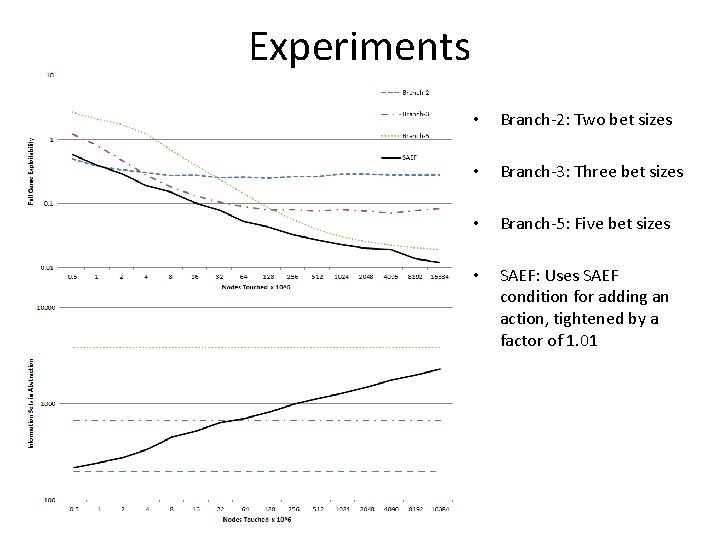

Experiments • Branch-2: Two bet sizes • Branch-3: Three bet sizes • Branch-5: Five bet sizes • SAEF: Uses SAEF condition for adding an action, tightened by a factor of 1. 01
