Solr Integration and Enhancements

Solr has a lot of extensive features

Todd Hatcher

What is Solr?

- Solr offers advanced, optimized, scalable searching capabilities
- Communicate with Solr using XML, JSON and HTTP
- Includes an HTML admin interface
- Solr is built on top of Lucene
- Rich features of Lucene can be leveraged when using Solr
- Solr is very configurable

Integration with ColdFusion

- Very little direct integration with ColdFusion
- ColdFusion communicates with Solr using HTTP
- Solr runs in its own JVM and does not share one with ColdFusion
- With the ColdFusion installation, Solr runs in a Jetty servlet container on port 8983 (http://localhost:8983/solr)
- Solr is exposed in production by default
- Important files are located in C:\ColdFusion9\solr\multicore
- Solr offers a lot more than what is available using cfindex, cfcollection and cfsearch

Solr

- What is a core? It's like a Verity collection (a searchable data group)
- Single core (one index) vs multicore (multiple isolated configurations/schemas/indexes using the same Solr instance)
- C:\ColdFusion9\solr\multicore\solr.xml is the central file that points to the locations of the Solr cores' configuration and data (this is what the CF Administrator reads and writes when creating and using Solr collections)
- You can put your Solr cores under your project directory and keep them in source control
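To make the role of solr.xml concrete, here is a minimal Python sketch that reads a multicore solr.xml and lists where each core lives. The sample XML follows the legacy multicore layout; the core names (products, articles) and directories are made up for illustration and are not taken from a real ColdFusion install.

```python
import xml.etree.ElementTree as ET

# Hypothetical solr.xml content in the legacy multicore layout.
# Core names and directories are invented for illustration.
SAMPLE_SOLR_XML = """
<solr persistent="true">
  <cores adminPath="/admin/cores">
    <core name="products" instanceDir="products"/>
    <core name="articles" instanceDir="articles"/>
  </cores>
</solr>
"""

def list_cores(solr_xml: str) -> dict:
    """Map each core name to the instanceDir that holds its conf/ and data."""
    root = ET.fromstring(solr_xml)
    return {
        core.get("name"): core.get("instanceDir")
        for core in root.findall("./cores/core")
    }

cores = list_cores(SAMPLE_SOLR_XML)
for name, instance_dir in cores.items():
    print(f"{name} -> {instance_dir}")
```

Because each core is only a name plus a directory, pointing instanceDir at a folder inside a project is what makes it practical to keep cores in source control.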

![[core]/conf/solrconfig.xml: main configuration for a Solr core](https://slidetodoc.com/presentation_image_h2/a997df390ff7a988d06356eedfbf1aca/image-5.jpg)

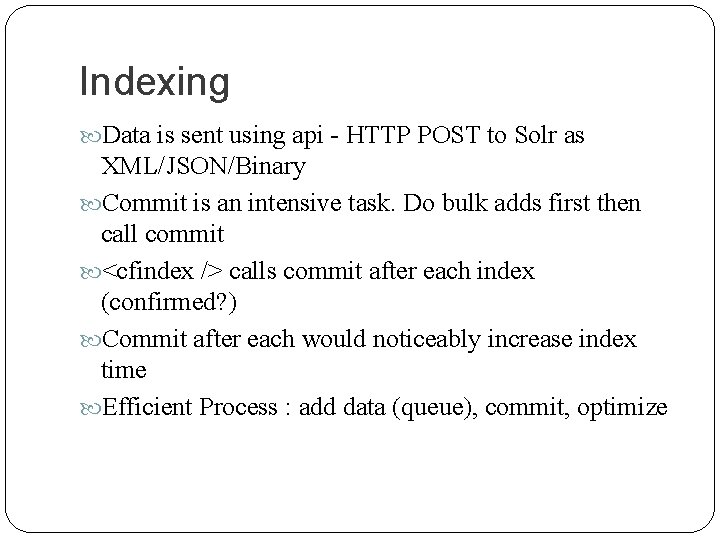

[core]/conf/solrconfig.xml

- Main configuration for a Solr core
- <queryResponseWriter name="json" /> determines the format of the results
- ColdFusion uses xslt by default
- You can return JSON, XML, Python, Ruby or PHP
- Multiple query response writers can be configured; one can be set as the default, and others can be selected by passing the parameter wt=[name] (e.g. wt=json)
- cfsearch-type methods will not work if the response writer is not the one ColdFusion is expecting
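As an illustration of why the response writer matters to the client, the sketch below parses a response in the shape Solr returns for wt=json. The sample payload is hand-written for this example (the documents and field names are invented), so treat it as an outline of the structure rather than real output.

```python
import json

# Hand-written sample shaped like a Solr JSON response (wt=json).
# The documents and field names are invented for illustration.
SAMPLE_RESPONSE = """
{
  "responseHeader": {"status": 0, "QTime": 1},
  "response": {
    "numFound": 2,
    "start": 0,
    "docs": [
      {"id": "1", "title": "Solr basics"},
      {"id": "2", "title": "Lucene internals"}
    ]
  }
}
"""

def extract_docs(raw: str) -> list:
    """Return the matched documents from a JSON-formatted Solr response."""
    payload = json.loads(raw)
    if payload["responseHeader"]["status"] != 0:
        raise ValueError("Solr reported an error status")
    return payload["response"]["docs"]

docs = extract_docs(SAMPLE_RESPONSE)
titles = [doc["title"] for doc in docs]
print(titles)
```

A client written this way breaks if the core answers with a different writer, which is the same reason cfsearch stops working when the default writer is changed.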

![[core]/conf/schema.xml: field types](https://slidetodoc.com/presentation_image_h2/a997df390ff7a988d06356eedfbf1aca/image-6.jpg)

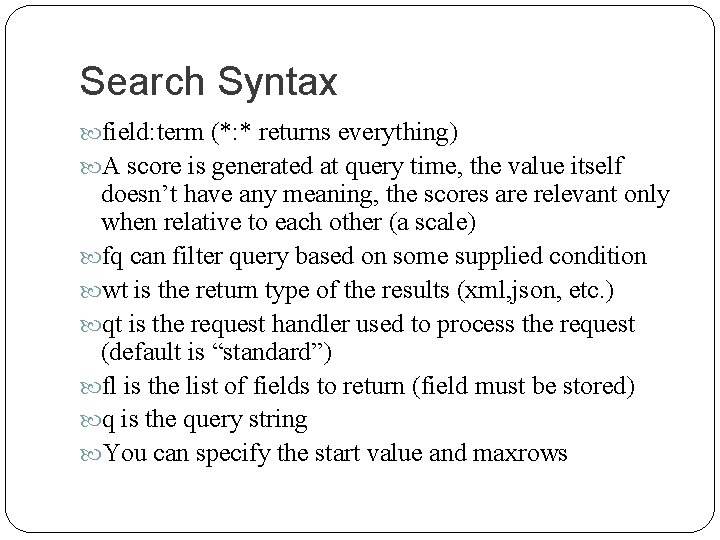

[core]/conf/schema.xml

- Field types map custom types to Solr/Lucene types
- solr.TextField allows for analyzers
- Analyzers can be run at index time or query time
- They allow for manipulation of the data (typically filtering)
- Filters are processed in the order in which they are declared
- StopFilterFactory removes common words that do not help the search results
- WordDelimiterFilterFactory can add words like WiFi, Wi, Fi by splitting the original into subwords
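The point about filter order can be shown with a toy analyzer chain in Python. These functions only imitate the purpose of the stop-word and word-delimiter filters; they are not Solr's implementations, and the stop-word list is an assumed sample.

```python
import re

STOP_WORDS = {"a", "an", "and", "the", "of", "to"}  # assumed sample list

def lowercase(tokens):
    """Lowercase every token."""
    return [t.lower() for t in tokens]

def stop_filter(tokens):
    """Drop common words, loosely mirroring what a stop filter is for."""
    return [t for t in tokens if t.lower() not in STOP_WORDS]

def word_delimiter(tokens):
    """Keep each token and add its case-change subwords (WiFi -> Wi, Fi)."""
    out = []
    for t in tokens:
        out.append(t)
        parts = re.findall(r"[A-Z][a-z]+|[a-z]+|[A-Z]+|\d+", t)
        if len(parts) > 1:
            out.extend(parts)
    return out

def analyze(text, filters):
    """Split on whitespace, then apply the filters in the order given."""
    tokens = text.split()
    for f in filters:
        tokens = f(tokens)
    return tokens

split_then_lower = analyze("The WiFi router", [stop_filter, word_delimiter, lowercase])
lower_then_split = analyze("The WiFi router", [stop_filter, lowercase, word_delimiter])
print(split_then_lower)
print(lower_then_split)
```

Lowercasing before the delimiter step erases the case change it relies on, so the second chain never produces the subwords. That is the practical meaning of the declared order being the processing order.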

![[core]/conf/schema.xml cont.: analysis filters](https://slidetodoc.com/presentation_image_h2/a997df390ff7a988d06356eedfbf1aca/image-7.jpg)

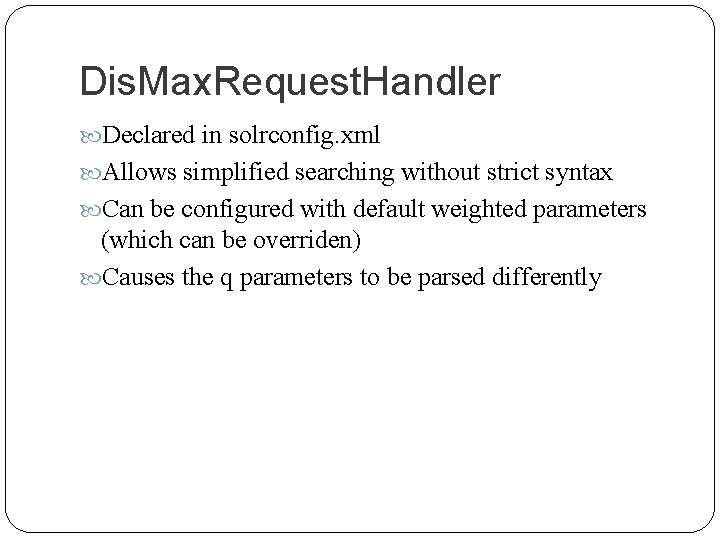

[core]/conf/schema.xml cont.

- EnglishPorterFilterFactory determines the root word from word variations like -ing and adds the root word to the index
- SynonymFilterFactory treats words as the same
- DoubleMetaphoneFilterFactory for phonetic logic (better than Soundex, which Verity uses)
- TextSpell/TextSpellPhrase: "did you mean" feedback
- <copyField source="fieldName" dest="d"/>: the dest field type can run different analyzers on the source field and store the result
- wiki.apache.org/solr/AnalyzersTokenizersTokenFilters
- Adobe adds quite a bit to the file to create field types that are compatible with what was in Verity

![[core]/conf/schema.xml cont.: fields](https://slidetodoc.com/presentation_image_h2/a997df390ff7a988d06356eedfbf1aca/image-8.jpg)

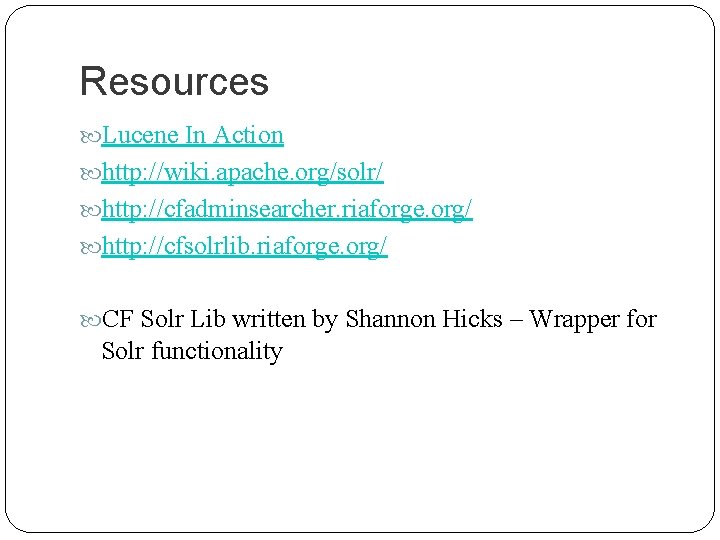

[core]/conf/schema.xml cont.

- Similar to creating a database table
- Maps field names to types using <field />
- Gives you the ability to store additional data
- A field can be indexed (searchable)
- A field can be stored (referenced and returned with results)
- A field can be required
- <uniqueKey>[field name]</uniqueKey>
- <solrQueryParser defaultOperator="OR" />
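A small Python sketch of what those <field /> declarations look like once the indexed, stored and required choices are made. The helper field_xml and the field names (id, body) and types (string, text) are hypothetical examples; check them against the field types your own schema.xml actually declares.

```python
import xml.etree.ElementTree as ET

def field_xml(name, type_, indexed=True, stored=True, required=False):
    """Render one <field /> declaration with the attributes described above."""
    attrs = {
        "name": name,
        "type": type_,
        "indexed": str(indexed).lower(),
        "stored": str(stored).lower(),
    }
    if required:
        attrs["required"] = "true"
    return ET.tostring(ET.Element("field", attrs), encoding="unicode")

# Hypothetical fields; the names and types are examples only.
id_field = field_xml("id", "string", required=True)
body_field = field_xml("body", "text", stored=False)
print(id_field)
print(body_field)
```

Here body is searchable but not returned with results, which is a common way to keep the index smaller when only an identifier needs to come back.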

Indexing

- Data is sent using the API: HTTP POST to Solr as XML/JSON/binary
- Commit is an intensive task. Do bulk adds first, then call commit
- <cfindex /> calls commit after each index (confirmed?)
- Committing after each add would noticeably increase index time
- Efficient process: add data (queue), commit, optimize
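The add, commit, optimize sequence can be sketched in Python by building the XML update messages in the order they would be sent. This only constructs the message bodies; posting them (typically to the core's /update URL) is left out, and the sample documents and batch size are assumptions for illustration.

```python
from xml.sax.saxutils import escape, quoteattr

def add_message(docs):
    """Build one <add> message containing every document in the batch."""
    parts = ["<add>"]
    for doc in docs:
        parts.append("<doc>")
        for name, value in doc.items():
            parts.append(f"<field name={quoteattr(name)}>{escape(str(value))}</field>")
        parts.append("</doc>")
    parts.append("</add>")
    return "".join(parts)

def index_messages(docs, batch_size=2):
    """Yield update messages in order: batched adds, one commit, one optimize."""
    for i in range(0, len(docs), batch_size):
        yield add_message(docs[i:i + batch_size])
    yield "<commit/>"
    yield "<optimize/>"

# Invented sample documents.
sample_docs = [
    {"id": 1, "title": "A & B"},
    {"id": 2, "title": "Two"},
    {"id": 3, "title": "Three"},
]
messages = list(index_messages(sample_docs))
for message in messages:
    print(message)
```

However many batches are queued, the commit is issued exactly once at the end, which is the saving over committing after every document.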

Search

- Syntax: field:term (*:* returns everything)
- A score is generated at query time; the value itself has no meaning, and scores are meaningful only relative to each other (a scale)
- fq filters the query based on a supplied condition
- wt is the return type of the results (xml, json, etc.)
- qt is the request handler used to process the request (default is "standard")
- fl is the list of fields to return (each field must be stored)
- q is the query string
- You can specify the start value and the maximum number of rows
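Putting the parameters together, a request URL can be assembled as in the Python sketch below. The helper select_url, the core name products and the field names are hypothetical; the base URL is the default port mentioned earlier in the deck.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

def select_url(base, core, q, fq=None, fl=None, wt="json", start=0, rows=10):
    """Build a /select URL from the common query parameters."""
    params = [("q", q), ("wt", wt), ("start", start), ("rows", rows)]
    if fq:
        params.append(("fq", fq))  # filter applied on top of the main query
    if fl:
        params.append(("fl", ",".join(fl)))  # stored fields to return
    return f"{base}/{core}/select?{urlencode(params)}"

url = select_url(
    "http://localhost:8983/solr",
    "products",  # hypothetical core name
    "title:solr",
    fq="category:books",
    fl=["id", "title", "score"],
)
decoded = parse_qs(urlsplit(url).query)
print(url)
print(decoded["q"])
```

urlencode takes care of escaping the colon and other reserved characters, so the field:term syntax can be passed through unchanged.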

DisMaxRequestHandler

- Declared in solrconfig.xml
- Allows simplified searching without strict syntax
- Can be configured with default weighted parameters (which can be overridden)
- Causes the q parameter to be parsed differently
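A rough Python sketch of overriding the weighted defaults at request time. It assumes the handler is registered in solrconfig.xml under the name dismax and that the index has title and body fields; both are assumptions, as are the boost values.

```python
from urllib.parse import urlencode

def dismax_params(user_text, weights, handler="dismax"):
    """Build parameters for a dismax-style handler.

    handler is the assumed name the handler is registered under;
    weights maps field names to boosts and becomes the qf parameter.
    """
    qf = " ".join(f"{field}^{boost}" for field, boost in weights.items())
    return {"qt": handler, "q": user_text, "qf": qf}

params = dismax_params("wifi router", {"title": 2.0, "body": 1.0})
query_string = urlencode(params)
print(params["qf"])
print(query_string)
```

Note that q is plain user text here with no field:term syntax; the handler spreads it across the weighted fields listed in qf.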

Resources

- Lucene in Action
- http://wiki.apache.org/solr/
- http://cfadminsearcher.riaforge.org/
- http://cfsolrlib.riaforge.org/ (CFSolrLib, written by Shannon Hicks: a wrapper for Solr functionality)