Software Verification and Validation Dolores R Wallace NASA

- Slides: 27

Software Verification and Validation
Dolores R. Wallace*
NASA Goddard Space Flight Center, Greenbelt, Maryland 20771
dwallac@pop300.gsfc.nasa.gov
for the American Society for Quality Special Interest Group for Software, June 2000
* This work was performed when Ms. Wallace was employed by the Information Technology Laboratory, National Institute of Standards and Technology
SATC June 2000

Tonight’s Discussion
• Definition and Role of V&V in Building Software Quality
• Standards and Guidance for V&V
• V&V Tasks
• V&V Examples, via Case Studies
• NIST Fault and Failure Repository

Elements of Software Quality
• Process (examples: CMM; ISO 9000 (ASQ))
  - Standards to define what must be performed to build software systems
  - Assessment of organizations for conformance
• People (example: ASQ’s CSQE)
  - Skilled people to get processes right
  - People with knowledge beyond computer science/software engineering
  - Licensing “software engineers” by state examinations
• Product (example: Software V&V*)
  - Evaluation, measurement of the product
  - Uncertainty reduced by reference, measurement methods
* Quality is built in via excellent development practices, but assurance is still needed

Definition of V&V
• Verification – Confirmation by examination and provision of objective evidence that specified requirements have been fulfilled. Ensures that the product(s) of each development phase meet the requirements levied by the previous phase and are internally complete, consistent, and correct enough to support the next development phase.
• Validation – Confirmation by examination and provision of objective evidence that the particular requirements for a specific intended use are fulfilled. Through the process of execution, ensures that the product conforms to the functional and performance specifications stipulated in the requirements.

V&V Objectives
• Assess software products and processes during the life cycle
• Facilitate early detection and correction of software errors
• Reduce effort to remove faults, via early detection
• Demonstrate that software and system requirements are correct, complete, accurate, consistent, testable
• Enhance management insight into process and product risk
• Support the software life cycle processes to ensure compliance with program performance, schedule, and cost requirements, and
• Enhance operational correctness and product

Organization of V&V
• Independent V&V
• Part of “development” process
• Combination
• All use standards, guidelines to determine best fit

New: IEEE 1012-1998 Software V&V
• More comprehensive than IEEE 1012-1986
• Product and process examination
• Integrity levels, metrics, independence
• Compliance with a higher level standard
• Separation of operation and maintenance
• New tasks: criticality analysis; hazard analysis; risk assessment; configuration management assessment
• Retention of tasks in original 1012 standard:
  – V&V management
  – Evaluation of all artifacts, all stages
  – Testing, beginning with requirements activities

Guidance: NIST SP 500-234
http://hissa.nist.gov/VV234
• Provides guidance for performing V&V
  – independence types
  – step-by-step activities
• Describes verification, test techniques
  – brief overview identifies issues for each technique
  – questions, issues for V&V of reused software
• Explains some metrics for V&V
  – general metrics; metrics for design, code, test
  – reliability models

Selected V&V Tasks
• Traceability Analysis
• Evaluation of Requirements, Design, Code
  – Inspection, walkthrough, review
  – Analysis (e.g., control flow, database, algorithm, performance)
  – Formal Verification
  – Simulation, modeling
• Change Impact Assessment
• Configuration Management Assessment
• Test
  – Requirements based
  – Evaluation of test documentation
  – Simulation
  – Regression testing
• Measurement

Traceability Analysis
Trace system, software requirements through design, code and test materials.
Benefits:
• Identification of most important or riskiest paths
• Sets stage for special analyses (e.g., timing, interface)
• Locations of interactions
• Completeness / omission
• Identification of re-test areas
• Impact of change
• Discovery of root cause of faults, failures
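The trace from requirements through design, code, and test materials can be sketched as a small lookup structure. This is a minimal illustration, not a method from the slides: the identifiers (`REQ-1`, `DES-1`, `pump.c`, `T-01`) and the helper `find_omissions` are hypothetical. It shows only the completeness/omission benefit, by listing requirements that have no linked artifact at a given stage.

```python
from typing import Dict, List

# Hypothetical trace links: each requirement maps to the design elements,
# code units, and tests that claim to implement or exercise it.
TRACE: Dict[str, Dict[str, List[str]]] = {
    "REQ-1": {"design": ["DES-1"], "code": ["pump.c"], "test": ["T-01", "T-02"]},
    "REQ-2": {"design": ["DES-2"], "code": ["alarm.c"], "test": []},
    "REQ-3": {"design": [], "code": [], "test": []},
}

STAGES = ("design", "code", "test")


def find_omissions(trace):
    """Return {stage: [requirement ids with no artifact at that stage]}."""
    gaps = {stage: [] for stage in STAGES}
    for req_id in sorted(trace):
        for stage in STAGES:
            if not trace[req_id].get(stage):
                gaps[stage].append(req_id)
    return gaps


if __name__ == "__main__":
    for stage, reqs in find_omissions(TRACE).items():
        print(f"{stage}: untraced requirements = {reqs}")
```

In this toy data, `REQ-2` is designed and coded but never tested, and `REQ-3` is not traced anywhere. These are the kinds of gaps a trace is meant to expose.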

Change Impact Analysis
Use traceability analysis to identify every place affected by proposed change
• Identification and evaluation of all interactions affected by changes
• Evaluation of how changes affect assumptions about COTS, other components
• Identification of regression tests
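One way to picture change impact analysis is as a walk over trace links, starting from the changed item. The sketch below is an assumption-laden illustration: the link table `LINKS`, the item names, and the convention that test identifiers start with `T-` are all hypothetical. It follows only downstream links, so it would not by itself find interactions that are missing from the trace.

```python
from collections import deque

# Hypothetical "is traced to" links: requirement -> design -> code -> test.
LINKS = {
    "REQ-1": ["DES-1"],
    "REQ-2": ["DES-1", "DES-2"],
    "DES-1": ["dose_calc.c"],
    "DES-2": ["display.c"],
    "dose_calc.c": ["T-01", "T-02"],
    "display.c": ["T-03"],
}


def impacted_items(changed, links):
    """Breadth-first walk of the trace links starting at the changed item."""
    seen = set()
    queue = deque([changed])
    while queue:
        item = queue.popleft()
        for downstream in links.get(item, []):
            if downstream not in seen:
                seen.add(downstream)
                queue.append(downstream)
    return seen


def regression_tests(changed, links):
    """Tests (ids starting with 'T-' in this sketch) reachable from the change."""
    return sorted(i for i in impacted_items(changed, links) if i.startswith("T-"))


if __name__ == "__main__":
    print(regression_tests("REQ-2", LINKS))
```

A change to `REQ-2` reaches both design elements and therefore all three tests, while a change to `DES-2` reaches only `display.c` and `T-03`.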

EVALUATION
• Verify and validate that the software item satisfies the requirements of its predecessor (e.g., design to requirements; code to design).
• Verify that the software item complies with standards, references, regulations, policies, physical laws, and business rules.
• Validate the design sequences of states and state changes using logic and data flows coupled with domain expertise, prototyping results, engineering principles, or other basis.
• Validate that the flow of data and control satisfies functionality and performance requirements.
• Validate data usage and format.
• Assess the appropriateness of methods and standards for that item.
• Verify specified configuration management procedures.

EVALUATION (cont’d)
• Verify internal consistency between the elements of the item and external consistency with its predecessor.
• Verify that all terms and concepts are documented consistently.
• Validate that the logic, computational, and interface precision (e.g., truncation and rounding) satisfy the requirements in the system environment.
• Validate that the modeled physical phenomena conform to system accuracy requirements and physical laws.
• Verify and validate the software source code interfaces with hardware, user, operator, software, and other systems for correctness, consistency, completeness, accuracy, testability.
• Verify functionality: algorithms, states, performance & scheduling, controls, configuration data, exception handling.
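The precision item (truncation and rounding) can be made concrete with a small check. This is a sketch under stated assumptions: the computed value `2.679`, the two-decimal storage format, and the `0.005` tolerance are hypothetical numbers chosen for illustration. It uses Python's standard `decimal` module to compare a truncated and a rounded result against an accuracy requirement.

```python
from decimal import Decimal, ROUND_DOWN, ROUND_HALF_UP


def quantize(value: str, places: str, mode) -> Decimal:
    """Reduce a decimal string to the given precision with the given mode."""
    return Decimal(value).quantize(Decimal(places), rounding=mode)


def within_tolerance(exact: str, stored: Decimal, tolerance: str) -> bool:
    """True if the stored value is within the allowed error of the exact one."""
    return abs(Decimal(exact) - stored) <= Decimal(tolerance)


EXACT = "2.679"      # hypothetical computed value
TOLERANCE = "0.005"  # hypothetical accuracy requirement

truncated = quantize(EXACT, "0.01", ROUND_DOWN)    # drops the third decimal
rounded = quantize(EXACT, "0.01", ROUND_HALF_UP)   # rounds to nearest

if __name__ == "__main__":
    print(truncated, within_tolerance(EXACT, truncated, TOLERANCE))
    print(rounded, within_tolerance(EXACT, rounded, TOLERANCE))
```

With these numbers the truncated value misses the tolerance and the rounded value meets it. A precision evaluation looks for exactly this kind of difference.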

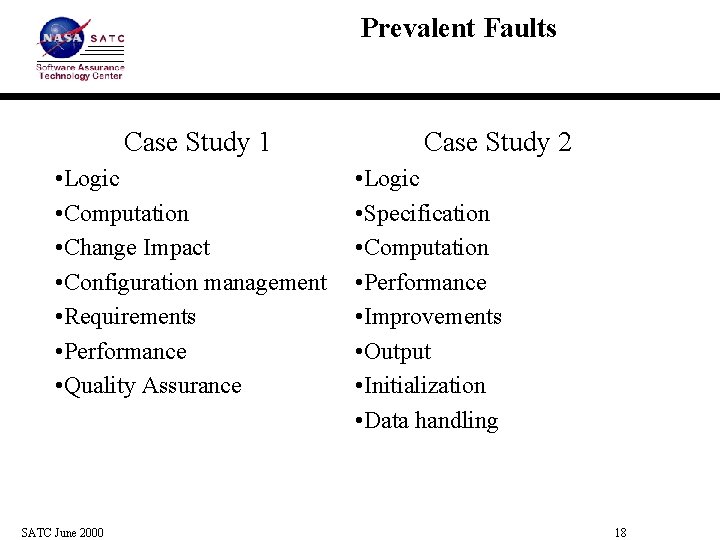

Two Case Studies Affirm Need for V&V
• Case Study 1
  - 342 failures of real systems, in service
  - Logic, calculation, and CM problems prevalent
  - QA not implemented in maintenance
  - Logic faults: many likely in requirements
• Case Study 2
  - 1 system under development
  - Strong focus on requirements analysis found 54% of faults
  - Logic, calculation faults prevalent
• Further information on Case Study 1:
  http://hissa.nist.gov/effProject/handbook/failure
  http://hissa.nist.gov/project/lessonsfailures.pdf

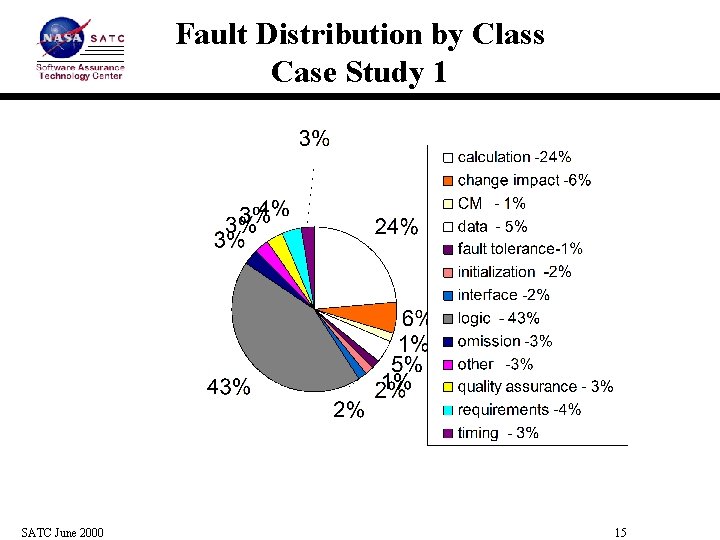

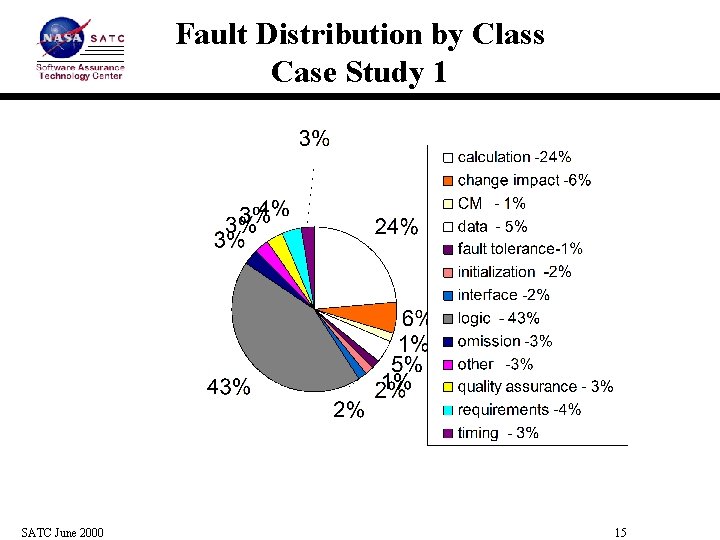

Fault Distribution by Class: Case Study 1

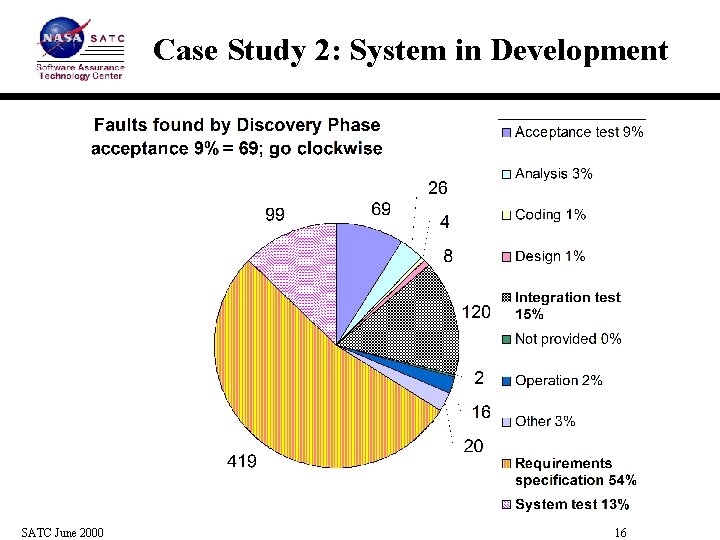

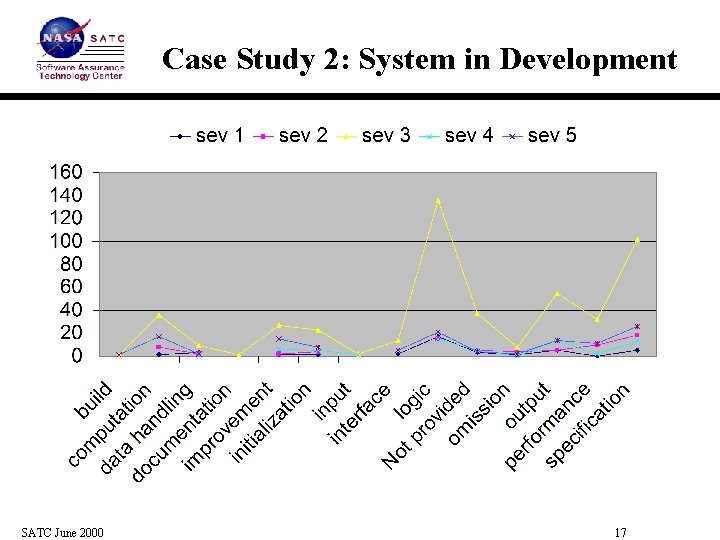

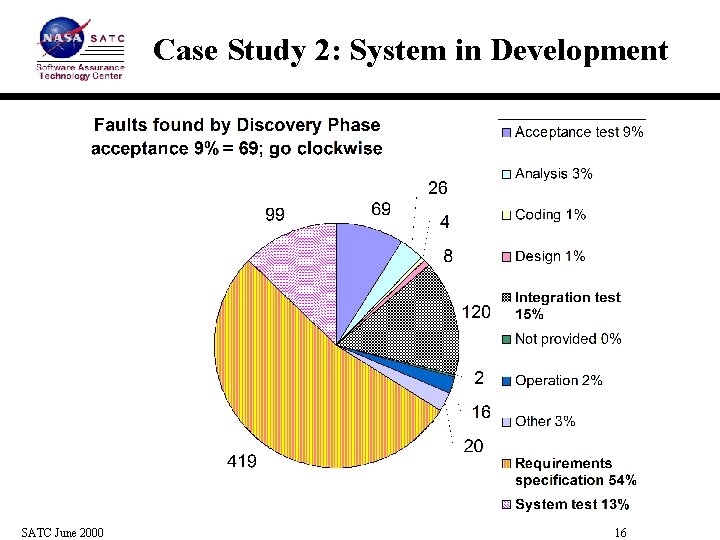

Case Study 2: System in Development

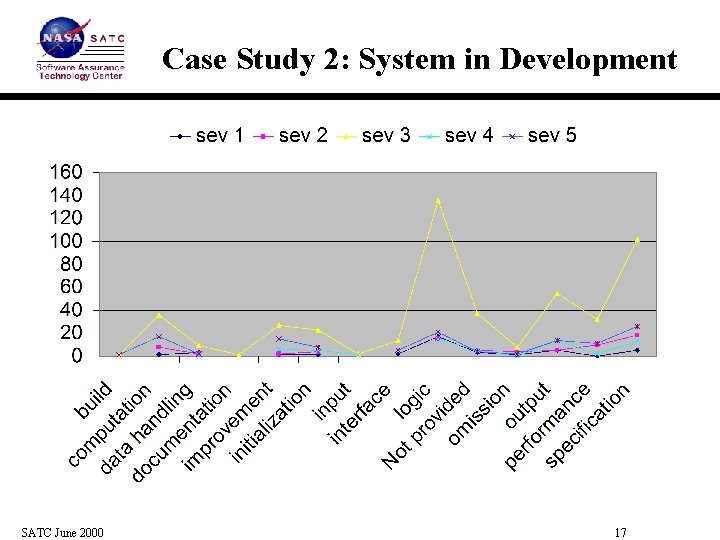

Case Study 2: System in Development

Prevalent Faults
Case Study 1:
• Logic
• Computation
• Change Impact
• Configuration management
• Requirements
• Performance
• Quality Assurance
Case Study 2:
• Logic
• Specification
• Computation
• Performance
• Improvements
• Output
• Initialization
• Data handling

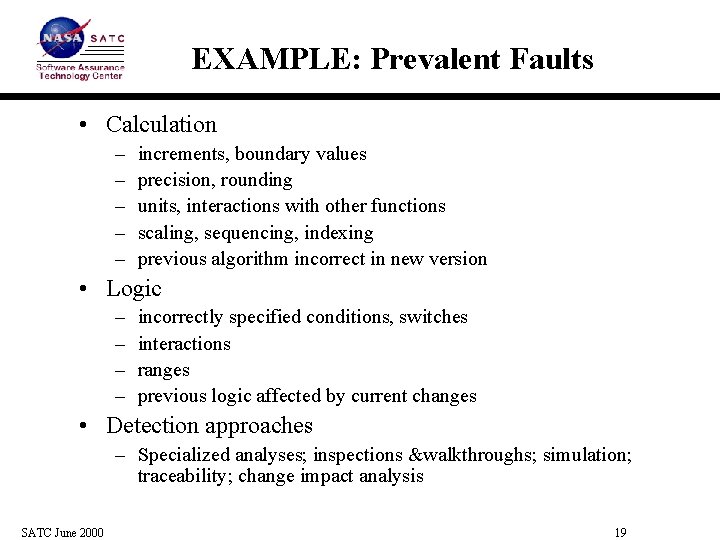

EXAMPLE: Prevalent Faults
• Calculation
  – increments, boundary values
  – precision, rounding
  – units, interactions with other functions
  – scaling, sequencing, indexing
  – previous algorithm incorrect in new version
• Logic
  – incorrectly specified conditions, switches
  – interactions
  – ranges
  – previous logic affected by current changes
• Detection approaches
  – Specialized analyses; inspections & walkthroughs; simulation; traceability; change impact analysis
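Boundary-value and range faults of the kind listed above are often found by testing just outside, on, and just inside each limit. The sketch below assumes a hypothetical inclusive integer range check, with a seeded off-by-one fault standing in for an "incorrectly specified condition". None of the function names come from the slides.

```python
def boundary_values(low: int, high: int):
    """Inputs just outside, on, and just inside each end of [low, high]."""
    return sorted({low - 1, low, low + 1, high - 1, high, high + 1})


def in_range_spec(x: int, low: int, high: int) -> bool:
    """Intended behaviour: the range is inclusive at both ends."""
    return low <= x <= high


def in_range_faulty(x: int, low: int, high: int) -> bool:
    """Seeded logic fault: upper bound coded with '<' instead of '<='."""
    return low <= x < high


def failing_inputs(low: int, high: int):
    """Boundary inputs on which the faulty version disagrees with the spec."""
    return [x for x in boundary_values(low, high)
            if in_range_spec(x, low, high) != in_range_faulty(x, low, high)]


if __name__ == "__main__":
    print(boundary_values(0, 100))
    print(failing_inputs(0, 100))
```

Only the input exactly at the upper limit exposes the seeded fault. Mid-range test values would miss it, which is why boundary cases are usually given focused attention.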

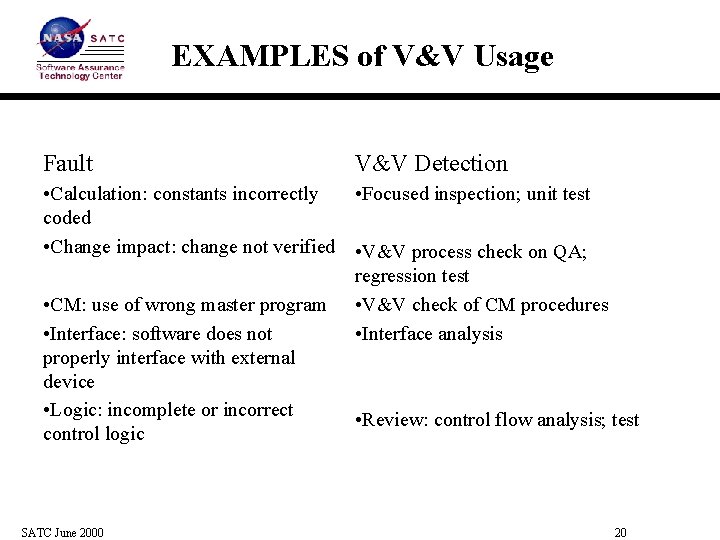

EXAMPLES of V&V Usage

| Fault | V&V Detection |
| --- | --- |
| Calculation: constants incorrectly coded | Focused inspection; unit test |
| Change impact: change not verified | V&V process check on QA; regression test |
| CM: use of wrong master program | V&V check of CM procedures |
| Interface: software does not properly interface with external device | Interface analysis |
| Logic: incomplete or incorrect control logic | Review: control flow analysis; test |

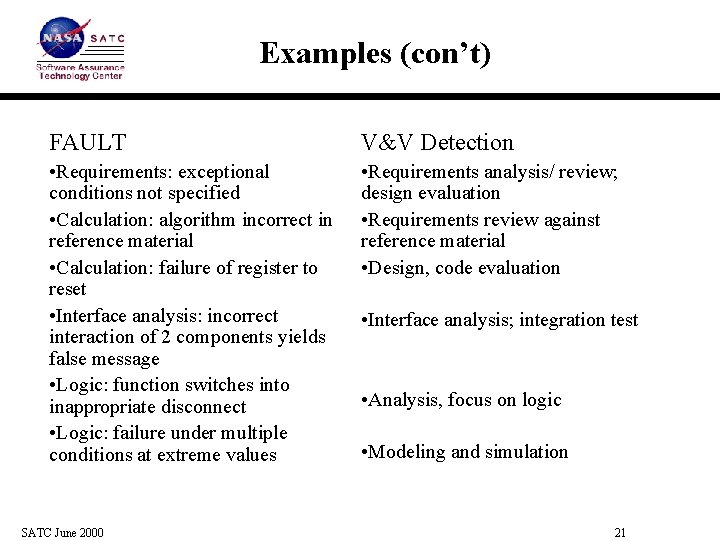

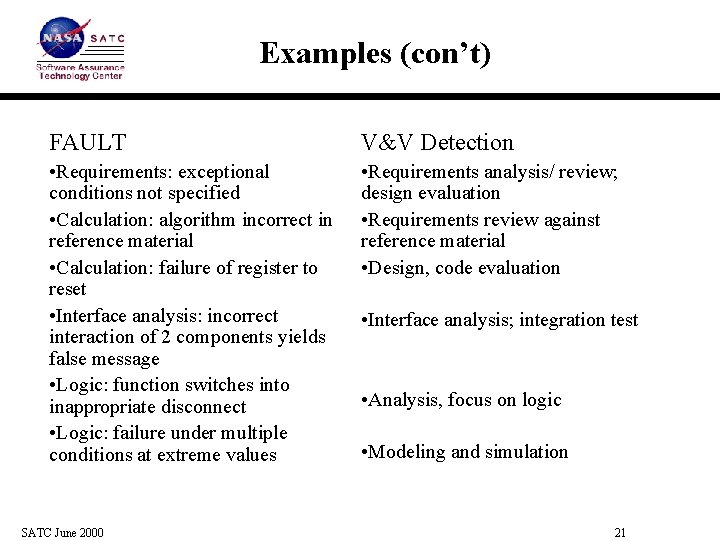

Examples (cont’d)

| Fault | V&V Detection |
| --- | --- |
| Requirements: exceptional conditions not specified | Requirements analysis/review; design evaluation |
| Calculation: algorithm incorrect in reference material | Requirements review against reference material |
| Calculation: failure of register to reset | Design, code evaluation |
| Interface: incorrect interaction of 2 components yields false message | Interface analysis; integration test |
| Logic: function switches into inappropriate disconnect | Analysis, focus on logic |
| Logic: failure under multiple conditions at extreme values | Modeling and simulation |

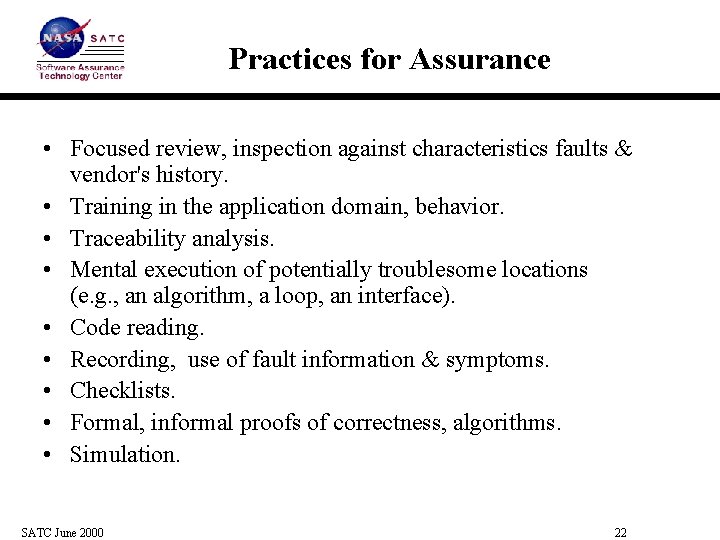

Practices for Assurance
• Focused review, inspection against characteristic faults & vendor's history.
• Training in the application domain, behavior.
• Traceability analysis.
• Mental execution of potentially troublesome locations (e.g., an algorithm, a loop, an interface).
• Code reading.
• Recording, use of fault information & symptoms.
• Checklists.
• Formal, informal proofs of correctness, algorithms.
• Simulation.

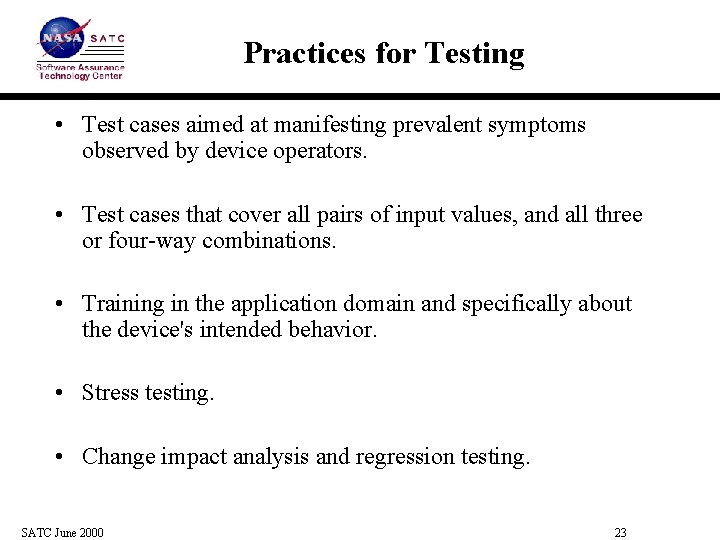

Practices for Testing
• Test cases aimed at manifesting prevalent symptoms observed by device operators.
• Test cases that cover all pairs of input values, and all three or four-way combinations.
• Training in the application domain and specifically about the device's intended behavior.
• Stress testing.
• Change impact analysis and regression testing.
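Covering all pairs of input values does not require running every combination. The following is a minimal greedy sketch, not an optimal or standard generator. The parameter names and values in `PARAMS` are hypothetical, and it handles only pairs, not the three-way or four-way combinations also mentioned above.

```python
from itertools import combinations, product

# Hypothetical device parameters and values, for illustration only.
PARAMS = {
    "mode": ["manual", "auto"],
    "rate": ["low", "high"],
    "power": ["battery", "mains"],
}


def pairs_of(test, names):
    """All (param, value) pairs exercised together by one test case."""
    return {((a, test[a]), (b, test[b])) for a, b in combinations(names, 2)}


def all_pairs(params):
    """Every pair of values, across every pair of parameters, to be covered."""
    names = sorted(params)
    required = set()
    for a, b in combinations(names, 2):
        for va, vb in product(params[a], params[b]):
            required.add(((a, va), (b, vb)))
    return required


def greedy_pairwise(params):
    """Repeatedly pick the full-product test that covers most uncovered pairs."""
    names = sorted(params)
    candidates = [dict(zip(names, values))
                  for values in product(*(params[n] for n in names))]
    uncovered = all_pairs(params)
    suite = []
    while uncovered:
        best = max(candidates, key=lambda t: len(pairs_of(t, names) & uncovered))
        suite.append(best)
        uncovered -= pairs_of(best, names)
    return suite


if __name__ == "__main__":
    for case in greedy_pairwise(PARAMS):
        print(case)
```

Because the sketch enumerates the full product to choose from, it suits only small parameter sets. Larger problems would call for a dedicated combinatorial testing tool.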

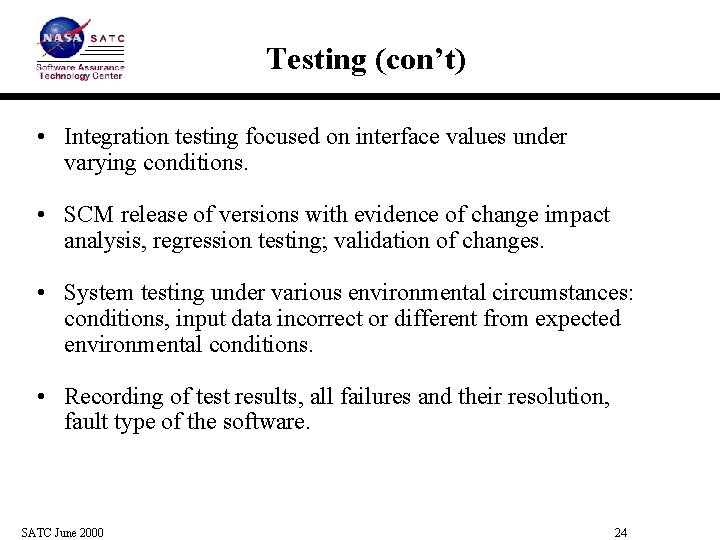

Testing (cont’d)
• Integration testing focused on interface values under varying conditions.
• SCM release of versions with evidence of change impact analysis, regression testing; validation of changes.
• System testing under various environmental circumstances: conditions, input data incorrect or different from expected environmental conditions.
• Recording of test results, all failures and their resolution, fault type of the software.

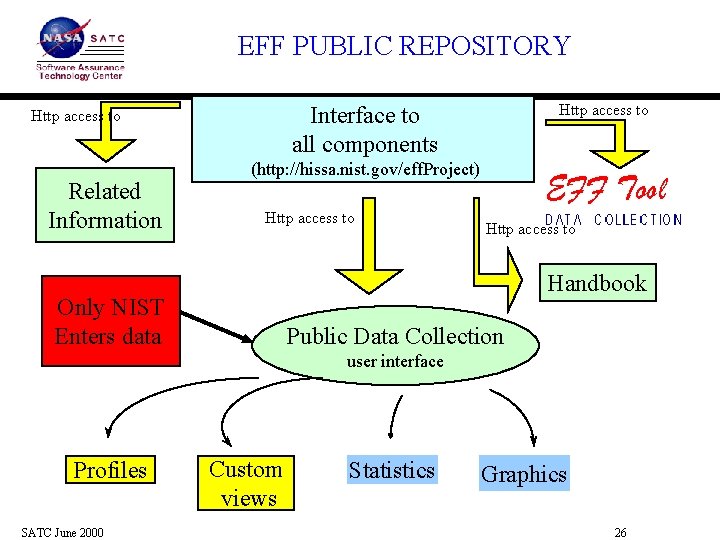

NIST Project to Build Fault Profiles
• Industry data (under non-disclosure agreements)
• Profiles generated by language, other characteristics
• Custom profiles generated by users
• Public domain tools for use by developers to collect, analyze their project fault and failure data
• NIST public repository housing tools, handbook, current data at http://hissa.nist.gov/effProject
CONTACT: Michael.Koo@nist.gov
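A fault profile grouped by language can be pictured as a simple tally of fault classes. The sketch below is not the NIST tooling. The records, field names, and the `fault_profile` helper are hypothetical, and the data is invented purely to show the calculation.

```python
from collections import Counter, defaultdict

# Hypothetical fault records; real data would come from a project's own logs.
RECORDS = [
    {"language": "C", "fault_class": "logic"},
    {"language": "C", "fault_class": "calculation"},
    {"language": "C", "fault_class": "logic"},
    {"language": "Ada", "fault_class": "interface"},
    {"language": "Ada", "fault_class": "logic"},
]


def fault_profile(records, key="language"):
    """Group records by `key` and compute each fault class's share in percent."""
    counts = defaultdict(Counter)
    for rec in records:
        counts[rec[key]][rec["fault_class"]] += 1
    profile = {}
    for group, counter in counts.items():
        total = sum(counter.values())
        profile[group] = {cls: round(100 * n / total, 1)
                          for cls, n in counter.most_common()}
    return profile


if __name__ == "__main__":
    for group, shares in fault_profile(RECORDS).items():
        print(group, shares)
```

Passing a different `key` (any field present in every record) would give a custom profile over some other characteristic.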

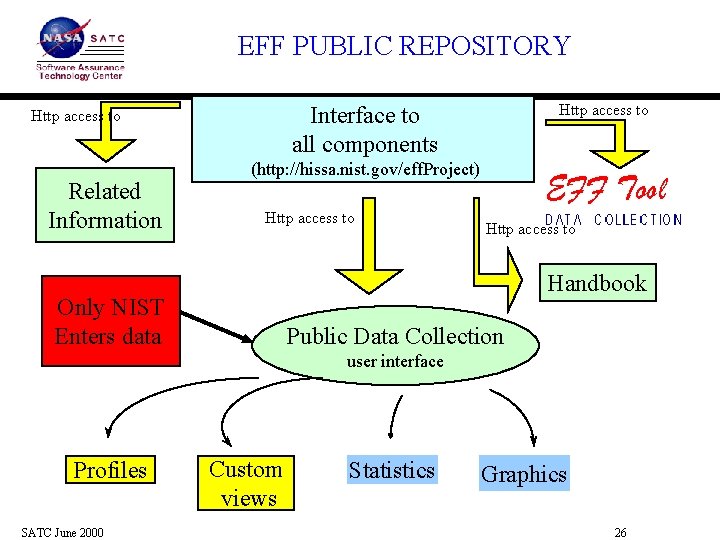

EFF PUBLIC REPOSITORY (http://hissa.nist.gov/effProject)
[Diagram; recoverable labels: HTTP access to related information, to the handbook, and to an interface to all components; only NIST enters data; public data collection user interface; profiles; custom views; statistics; graphics]

SUMMARY
• V&V is a rigorous engineering discipline
• V&V encompasses the entire life cycle
• V&V tasks repeat, with specifics tailored to the stage of development
• V&V tasks and their intensity vary with integrity level
• Other technical methods support the tasks
• V&V testing at all levels requires early plans