Software Testing, Sudipto Ghosh, CS 406, Fall 99

- Slides: 40

Software Testing
Sudipto Ghosh
CS 406, Fall 99 (also used later)
November 2, 1999
11/02/99 CS 406 Testing

Learning Objectives
- Testing
  - What is testing?
  - Why test?
  - How does testing increase our confidence in program correctness?
  - When to test?
  - What to test?
  - How to test?
  - Different types of testing
  - How to assess test adequacy?

Testing
- The act of checking whether a part or product performs as expected.
- Why do we test?
  - To check whether there are any errors
  - To increase confidence in its “correctness”

Testing
- When should we test?
  - In a separate phase for testing? OR
  - At the end of every phase? OR
  - During each phase, simultaneously with all development and maintenance activities?

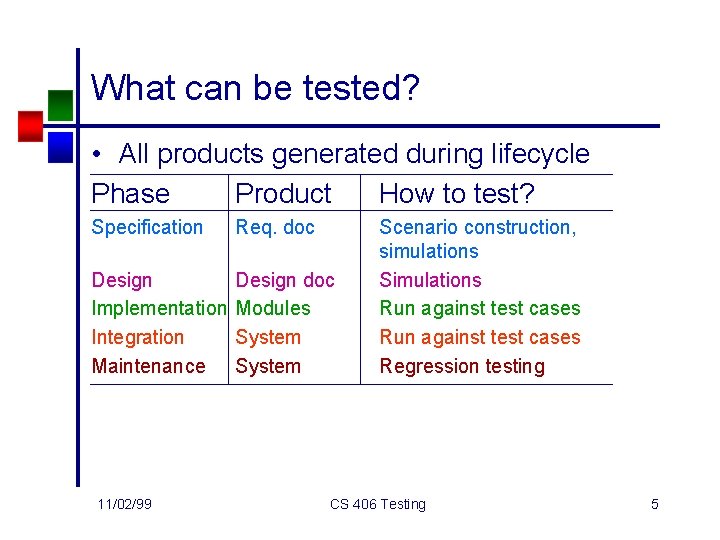

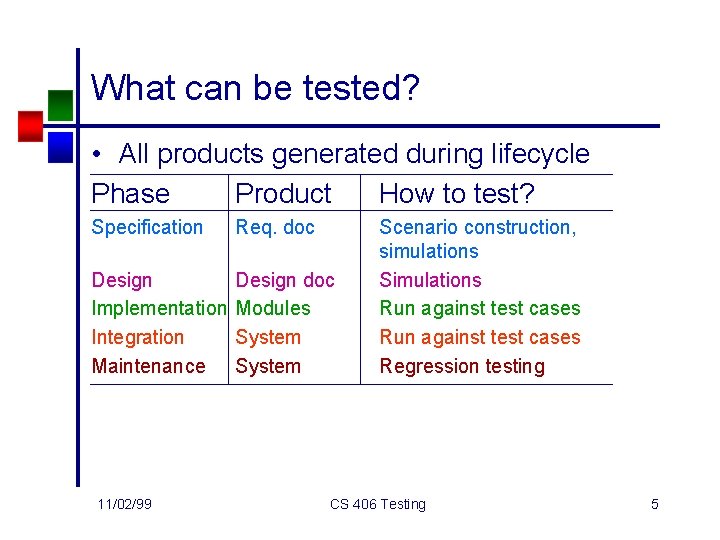

What can be tested?
- All products generated during the lifecycle

| Phase | Product | How to test? |
| --- | --- | --- |
| Specification | Req. doc | Scenario construction, simulations |
| Design | Design doc | Simulations |
| Implementation | Modules | Run against test cases |
| Integration | System | |
| Maintenance | | Regression testing |

Our focus
- Focus: testing programs
  - Programs may be subsystems or complete systems
  - Programs are written in a programming language
- Explore techniques and tools for testing programs

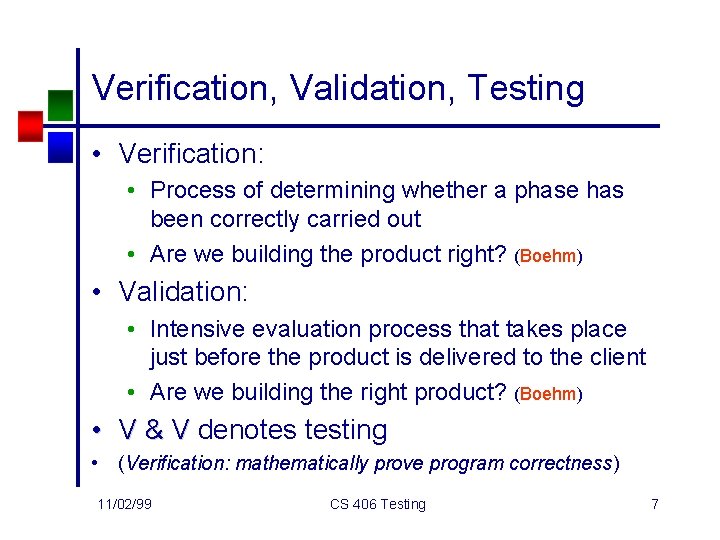

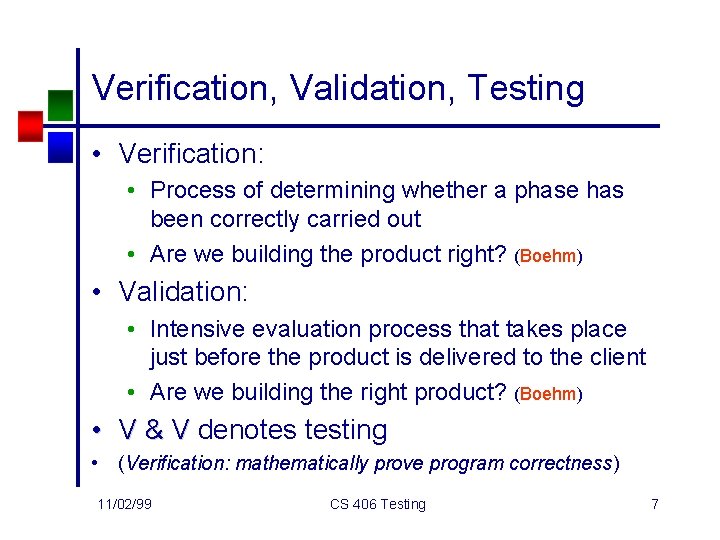

Verification, Validation, Testing
- Verification:
  - Process of determining whether a phase has been correctly carried out
  - Are we building the product right? (Boehm)
- Validation:
  - Intensive evaluation process that takes place just before the product is delivered to the client
  - Are we building the right product? (Boehm)
- V & V denotes testing
  - (In another usage, verification means mathematically proving program correctness)

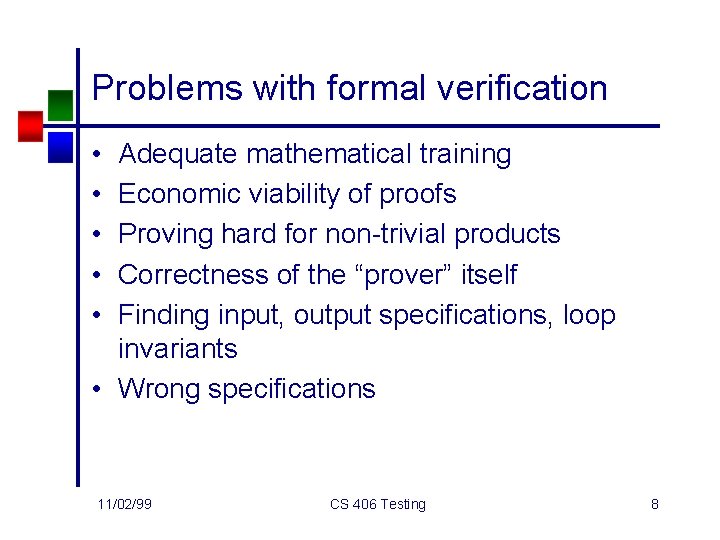

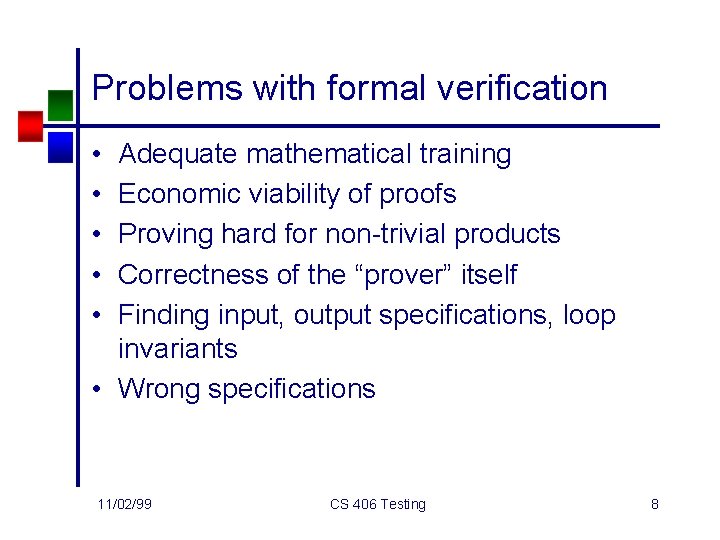

Problems with formal verification
- Requires adequate mathematical training
- Economic viability of proofs
- Proving is hard for non-trivial products
- Correctness of the “prover” itself
- Finding input and output specifications and loop invariants
- Wrong specifications

Terminology
- Program, e.g. Sort
  - Collection of functions (C) or classes (Java)
- Specification
  - Description of the requirements of a program
  - Input: p, an array of n integers, n > 0
  - Output: q, an array of n integers such that
    - q[0] ≤ q[1] ≤ … ≤ q[n-1]
    - Elements in q are a permutation of the elements in p, which are unchanged
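
The Sort specification above can be read as a predicate over an input/output pair. The following is a minimal sketch of that reading in Python; the function name `meets_sort_spec` is hypothetical, and lists stand in for the arrays p and q.

```python
from collections import Counter

def meets_sort_spec(p, q):
    """Return True if q is an acceptable Sort output for input p."""
    # The specification requires n > 0 and that q has the same n elements.
    if len(p) == 0 or len(p) != len(q):
        return False
    # q[0] <= q[1] <= ... <= q[n-1]
    in_order = all(q[i] <= q[i + 1] for i in range(len(q) - 1))
    # q is a permutation of p (same values with the same multiplicities).
    same_elements = Counter(p) == Counter(q)
    return in_order and same_elements

print(meets_sort_spec([2, 3, 6, 5, 4], [2, 3, 4, 5, 6]))  # ordered permutation
print(meets_sort_spec([2, 3, 6, 5, 4], [2, 3, 4, 5, 5]))  # ordered, but not a permutation
```

The predicate does not check that p itself is left unchanged; a caller would need to keep a copy of p from before the call to verify that part of the specification.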

Tests
- Test input (test case)
  - A set of values given as input to a program
  - Includes environment variables
  - Example: {2, 3, 6, 5, 4}
- Test set
  - A set of test cases
  - Example: { {0}, {9, 8, 7, 6, 5}, {1, 3, 4, 5}, {2, 1, 2, 3} }

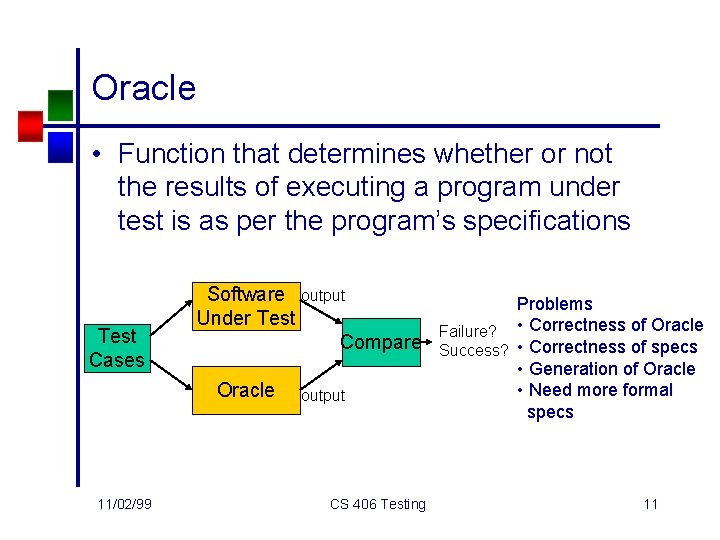

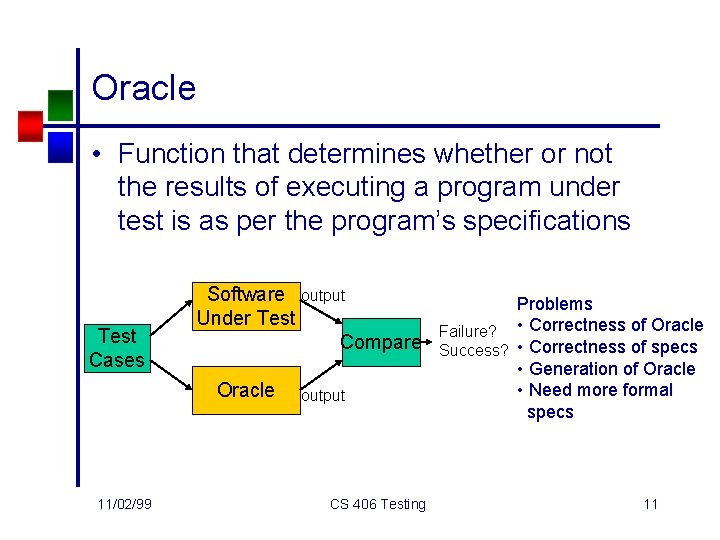

Oracle
- Function that determines whether or not the results of executing a program under test are as per the program’s specifications
- Diagram: the test cases are fed to both the software under test and the oracle; the two outputs are compared to decide between failure and success
- Problems
  - Correctness of the oracle
  - Correctness of the specs
  - Generation of the oracle
  - Need for more formal specs
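
One way to picture the compare step is a small harness that feeds each test case to both the program and the oracle. The sketch below is illustrative only: `sort_under_test` is a hypothetical program under test, and Python's built-in `sorted()` is assumed to be a trustworthy oracle, which is exactly the "correctness of the oracle" problem listed above. The test set is the one from the Tests slide.

```python
def sort_under_test(values):
    """Hypothetical program under test: a simple insertion sort."""
    result = list(values)
    for i in range(1, len(result)):
        item = result[i]
        j = i - 1
        # Shift larger elements one slot to the right.
        while j >= 0 and result[j] > item:
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = item
    return result

def oracle(values):
    """Assumed-correct oracle: Python's built-in sorted()."""
    return sorted(values)

def run_test_set(program, test_set):
    """Feed each test case to the program and the oracle, then compare outputs."""
    verdicts = []
    for case in test_set:
        actual = program(list(case))
        expected = oracle(list(case))
        verdicts.append((case, "success" if actual == expected else "failure"))
    return verdicts

test_set = [[0], [9, 8, 7, 6, 5], [1, 3, 4, 5], [2, 1, 2, 3]]
for case, verdict in run_test_set(sort_under_test, test_set):
    print(case, verdict)
```

A reference implementation is only one kind of oracle; a predicate derived from the specification, or a human checking the output, plays the same role.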

Correctness
- Program correctness
  - A program P is considered correct with respect to a specification S if and only if:
    - For each valid input, the output of P is in accordance with the specification S
- What if the specifications are themselves incorrect?

Errors, defects, faults
- Often used interchangeably.
- Error:
  - Mistake made by the programmer.
  - Human action that results in the software containing a fault/defect.
- Defect / Fault:
  - Manifestation of the error in a program.
  - Condition that causes the system to fail.
  - Synonymous with bug

Failure
- Incorrect program behavior due to a fault in the program.
- Failure can be determined only with respect to a set of requirement specs.
- For a failure to occur, the testing of the program should force the erroneous portion of the program to be executed.
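
To illustrate the last point, here is a small sketch with a deliberately faulty, hypothetical function `absolute_value`. The fault sits in the branch for negative inputs, so only test inputs that reach that branch produce a failure; the expected behaviour |x| is taken from Python's built-in `abs()`.

```python
def absolute_value(x):
    """Hypothetical faulty program: the branch for negative x contains the fault."""
    if x >= 0:
        return x
    return x  # fault: should be -x

def fails(x):
    """Detect a failure by comparing against the specified behaviour |x|."""
    return absolute_value(x) != abs(x)

for x in (5, 0, -5):
    print(x, "failure" if fails(x) else "no failure")
```

A test set made up only of non-negative inputs would never execute the faulty statement, so the fault would stay hidden however many such cases were run.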

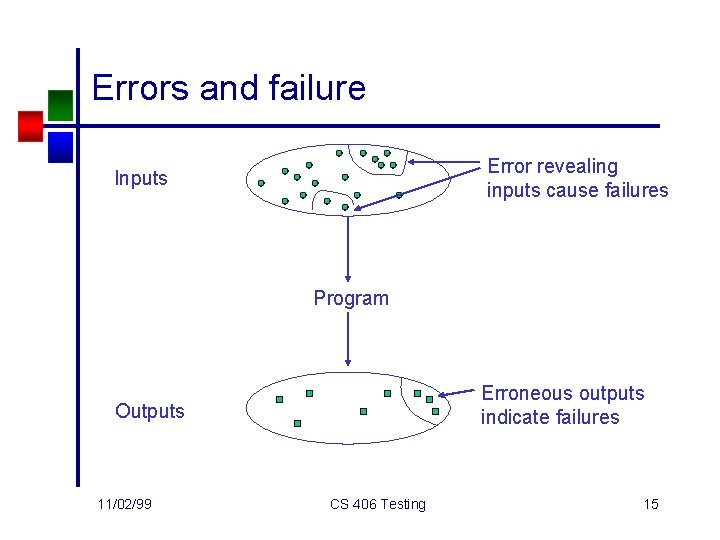

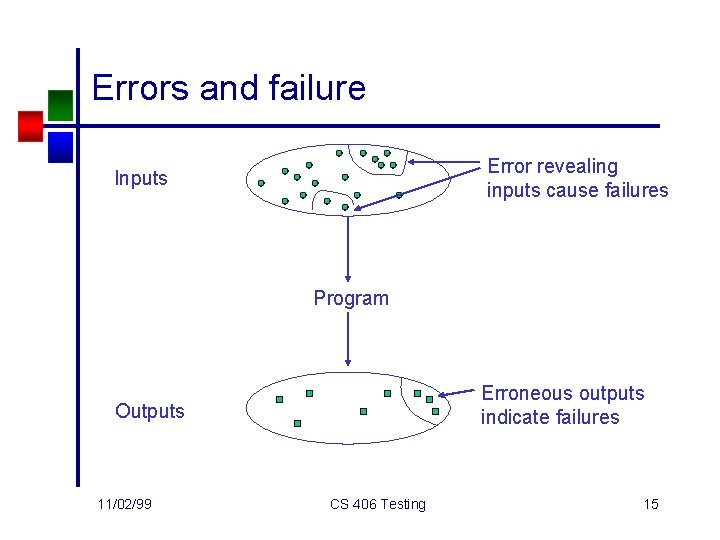

Errors and failure
- Inputs → Program → Outputs
- Error-revealing inputs cause failures
- Erroneous outputs indicate failures

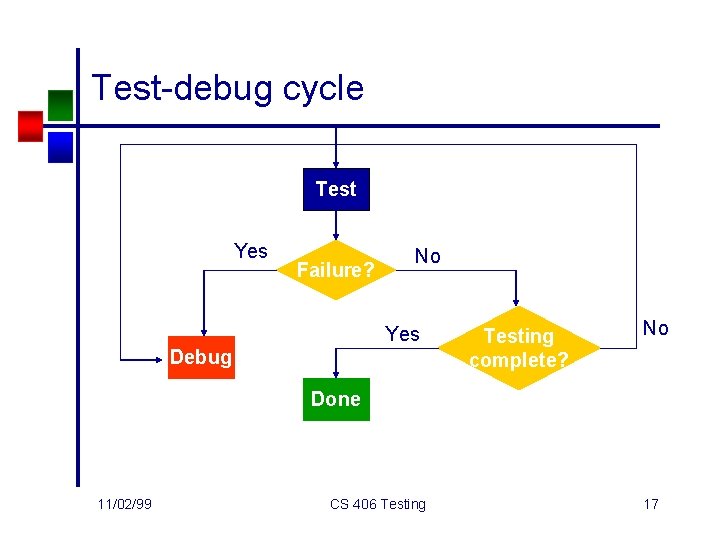

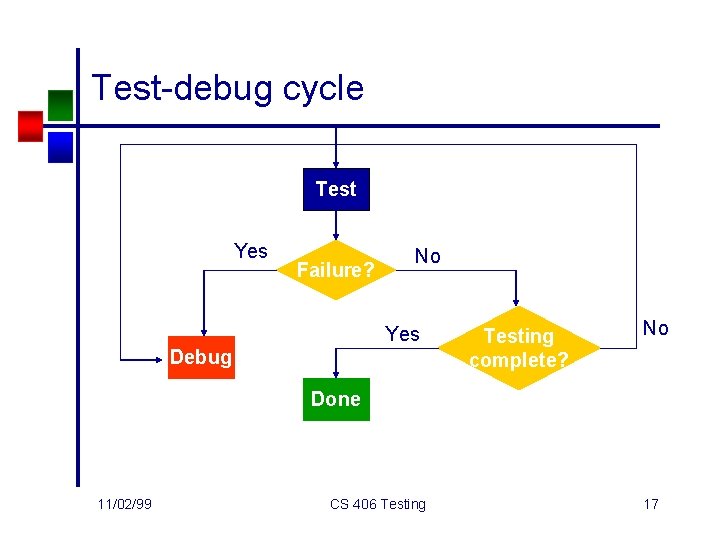

Debugging
- Suppose that a failure is detected during the testing of P.
- The process of finding and removing the cause of this failure is known as debugging.
- Testing usually leads to debugging.
- Testing and debugging usually happen in a cycle.

Test-debug cycle
- Test
- Failure?
  - Yes: debug, then test again
  - No: testing complete?
    - Yes: done
    - No: test again

Software quality
- Extent to which the product satisfies its specifications.
- Get the software to function correctly.
- SQA group is in charge of ensuring correctness.
  - Testing
  - Develop various standards to which the software must conform
  - Establish monitoring procedures for assuring compliance with those standards

Nonexecution-Based Testing
- The person creating a product should not be the only one responsible for reviewing it.
- A document is checked by a team of software professionals with a range of skills.
- Increases the chances of finding a fault.
- Types of reviews
  - Walkthroughs
  - Inspections

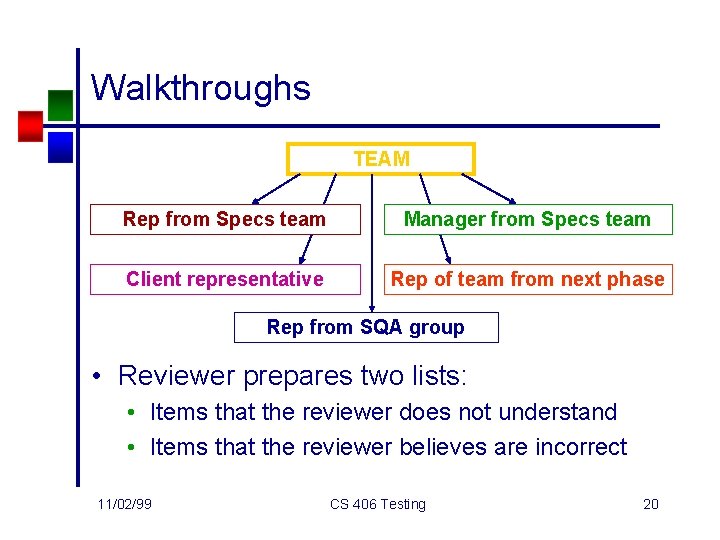

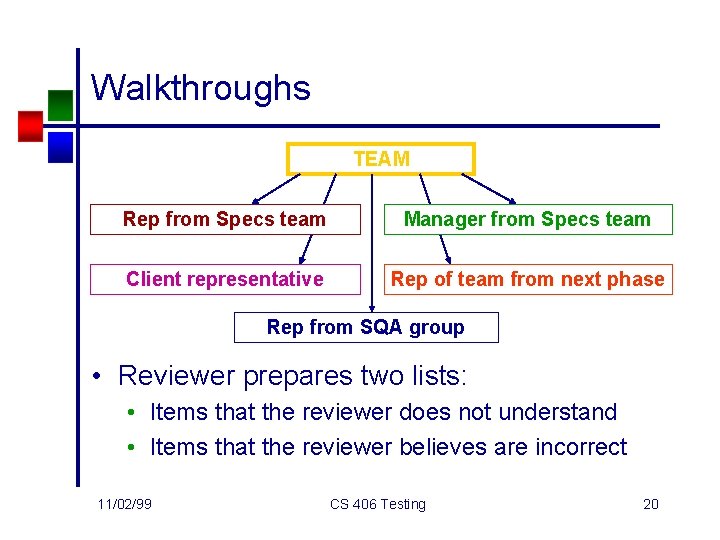

Walkthroughs
- Team
  - Rep from specs team
  - Manager from specs team
  - Client representative
  - Rep of team from next phase
  - Rep from SQA group
- Reviewer prepares two lists:
  - Items that the reviewer does not understand
  - Items that the reviewer believes are incorrect

Managing walkthroughs
- Distribute material for the walkthrough in advance.
- Includes senior technical staff.
- Chaired by the SQA representative.
- Task is to record faults for later correction
  - Not much time to fix them during the walkthrough
  - Other individuals are trained to fix them better
  - Cost of one team vs. cost of one person
  - Not every flagged item needs to be fixed, as not all “faults” flagged are incorrect

Managing walkthroughs (contd.)
- Two ways of doing walkthroughs
  - Participant driven
    - Present lists of unclear items and incorrect items
    - Rep from specs team responds to each query
  - Document driven
    - Person responsible for the document walks the participants through the document
    - Reviewers interrupt with prepared comments or comments triggered by the presentation
- Interactive process
- Not to be used for the evaluation of participants

Inspections
- Proposed by Fagan for testing
  - designs
  - code
- An inspection goes beyond a walkthrough
- Five formal stages
- Stage 1 - Overview
  - Overview document (specs/design/code/plan) to be prepared by the person responsible for producing the product.
  - Document is distributed to participants.

Inspections (contd.)
- Stage 2 - Preparation
  - Understand the document in detail.
  - A list of fault types found in inspections, ranked by frequency, is used to concentrate efforts.
- Stage 3 - Inspection
  - Walk through the document and ensure that
    - Each item is covered
    - Every branch is taken at least once
  - Find faults and document them (don’t correct)
  - Leader (moderator) produces a written report

Inspections (contd.)
- Stage 4 - Rework
  - Resolve all faults and problems
- Stage 5 - Follow-up
  - Moderator must ensure that every issue has been resolved in some way
- Team
  - Moderator - manager, leader of inspection team
  - Designer - team responsible for current phase
  - Implementer - team responsible for next phase
  - Tester - preferably from SQA team

What to look for in inspections
- Is each item in a specs doc adequately and correctly addressed?
- Do actual and formal parameters match?
- Are error handling mechanisms identified?
- Is the design compatible with hardware resources?
- What about with software resources?

What to record in inspections
- Record fault statistics
  - Categorize by severity and fault type
  - Compare the number of faults with the average number of faults in the same stage of development
  - If a disproportionate number is found in some modules, begin checking other modules
  - Too many faults => redesign the module
  - Information on fault types will help in code inspection of the same module
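
The comparison against the average can be sketched in a few lines. Everything specific here is an assumption made for illustration: the module names, the fault counts, and the rule that "disproportionate" means more than twice the mean.

```python
from statistics import mean

def flag_fault_prone(fault_counts, factor=2.0):
    """Return the modules whose fault count exceeds factor times the mean count."""
    average = mean(fault_counts.values())
    return sorted(m for m, n in fault_counts.items() if n > factor * average)

# Hypothetical fault counts recorded during one inspection stage.
counts = {"parser": 3, "scheduler": 2, "billing": 14, "report": 1}
print(flag_fault_prone(counts))
```

In practice the threshold would come from the organization's own historical averages for that stage of development rather than a fixed factor.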

Pros and cons of inspection
- High number of faults found even before testing (design and code inspections)
- Higher programmer productivity, less time spent on module testing
- Fewer faults found in a product that was inspected before
- If faults are detected early in the process, the savings are huge
- What if walkthroughs are used for performance appraisal?

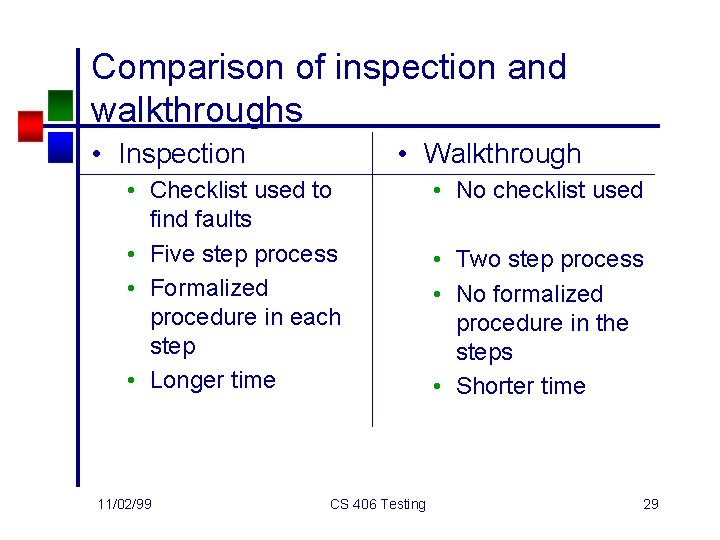

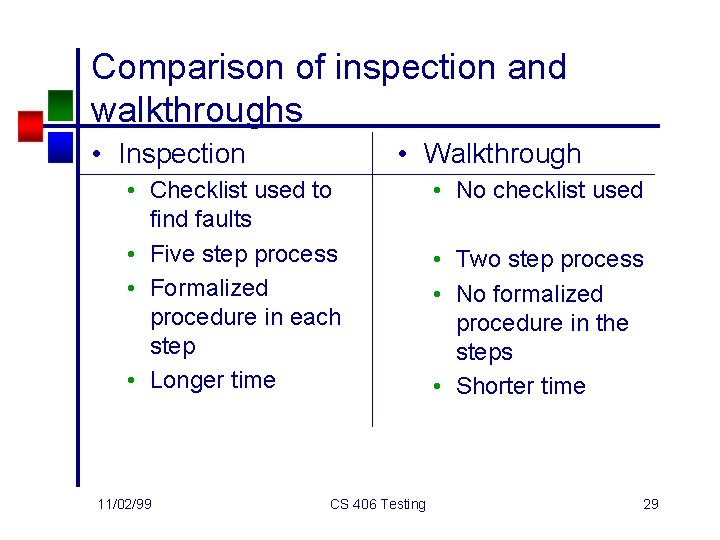

Comparison of inspection and walkthroughs

| Inspection | Walkthrough |
| --- | --- |
| Checklist used to find faults | No checklist used |
| Five step process | Two step process |
| Formalized procedure in each step | No formalized procedure in the steps |
| Longer time | Shorter time |

Strengths and weaknesses of reviews
- Effective way of detecting faults
- Faults are detected early in development
- Effectiveness is reduced if the software process is inadequate
- Large scale software is hard to review unless it consists of smaller, largely independent units (OO)
- Need access to complete, updated documents from the previous phase

Testing and code inspection
- Code inspection is generally considered complementary to testing
- Neither is more important than the other
- One is not likely to replace testing by code inspection or by formal verification

Program testing
- It has been claimed that testing is a demonstration that faults are not present.
- “Program testing can be an effective way to show the presence of bugs, but it is hopelessly inadequate for showing their absence.” (Dijkstra)

Execution-based testing
- Execution-based testing is a process of inferring certain behavioral properties of a product, based, in part, on the results of executing the product in a known environment with selected inputs.
- Depends on environment and inputs
  - How well do we know the environment?
  - How much control do we have over test inputs?
  - Real time systems

Utility
- Extent to which a user’s needs are met when a correct product is used under conditions permitted by its specs.
  - How easy is the product to use?
  - Does it perform useful functions?
  - Is it cost-effective?

Reliability
- Probability of failure-free operation of a product for a given time duration
- How often does the product fail?
  - Mean time between failures
- How bad are the effects?
- How long does it take to repair it?
  - Mean time to repair
- Failure behavior is controlled by
  - Number of faults
  - Operational profile of execution
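
The two means named above can be computed from an outage log. The sketch below makes several assumptions that are not in the slides: the log format (failure time and repair-completed time, in hours), the sample data, and the convention that mean time between failures counts only operating time between the end of one repair and the next failure. Some texts include repair time in that figure.

```python
def reliability_metrics(outages, start=0.0):
    """Compute (MTBF, MTTR) from time-ordered (failed_at, repaired_at) pairs in hours."""
    uptimes, repairs = [], []
    last_up = start  # time at which the product last (re)entered service
    for failed_at, repaired_at in outages:
        uptimes.append(failed_at - last_up)      # operating time before this failure
        repairs.append(repaired_at - failed_at)  # time spent repairing it
        last_up = repaired_at
    mtbf = sum(uptimes) / len(uptimes)
    mttr = sum(repairs) / len(repairs)
    return mtbf, mttr

# Hypothetical outage log: three failures, each followed by a repair.
log = [(100.0, 102.0), (202.0, 203.0), (303.0, 306.0)]
mtbf, mttr = reliability_metrics(log)
print("MTBF:", mtbf, "hours; MTTR:", mttr, "hours")
```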

Robustness
- How well does the product behave, considering
  - the range of operating conditions?
  - the possibility of unacceptable results with valid input?
  - the possibility of unacceptable results with invalid input?
- Should not crash even when not used under permissible conditions
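
A robustness test deliberately supplies input outside the permitted conditions and checks that the product responds in a controlled way. The sketch below uses a hypothetical routine `parse_percentage`, with the assumed contract that invalid input yields `None` instead of an unhandled exception.

```python
def parse_percentage(text):
    """Hypothetical routine: return an int in 0..100, or None for invalid input."""
    try:
        value = int(text)
    except (TypeError, ValueError):
        return None  # not an integer at all: reject instead of crashing
    return value if 0 <= value <= 100 else None

# Invalid inputs: empty, non-numeric, non-integer, out of range, wrong type.
invalid_inputs = ["", "abc", "12.5", "-1", "101", None]
for raw in invalid_inputs:
    print(repr(raw), "->", parse_percentage(raw))
print(repr("42"), "->", parse_percentage("42"))
```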

Performance
- To what extent does the product meet its requirements with regard to
  - response time
  - space requirements
- What if there are too many clients, processes, etc.?

Levels of testing
- Unit testing
- Integration testing
- System testing
- Acceptance testing
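
The first two levels can be contrasted with a toy example. The sketch below assumes two hypothetical units, `parse_scores` and `median`, and a function `median_of_line` that integrates them; unit tests exercise each unit alone, while the integration test exercises them together. System and acceptance testing need a complete product and a client, so they are not shown.

```python
def parse_scores(line):
    """Unit 1 (hypothetical): turn '3, 1, 2' into [3, 1, 2]."""
    return [int(part) for part in line.split(",")]

def median(values):
    """Unit 2 (hypothetical): median of a non-empty list of numbers."""
    ordered = sorted(values)
    mid = len(ordered) // 2
    if len(ordered) % 2:
        return ordered[mid]
    return (ordered[mid - 1] + ordered[mid]) / 2

def median_of_line(line):
    """Integration of the two units."""
    return median(parse_scores(line))

def test_unit_parse_scores():
    assert parse_scores("3, 1, 2") == [3, 1, 2]

def test_unit_median():
    assert median([3, 1, 2]) == 2
    assert median([4, 1, 3, 2]) == 2.5

def test_integration_median_of_line():
    assert median_of_line("3, 1, 2") == 2

for test in (test_unit_parse_scores, test_unit_median, test_integration_median_of_line):
    test()
print("unit and integration tests passed")
```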

Test plan
- Test unit specification
- Features to be tested
- Approach for testing
- Test deliverables
- Schedule
- Personnel allocation

References
- Textbook
  - Schach - Classical and Object-Oriented Software Engineering
- Other books
  - Beizer - Software Testing Techniques
  - Beizer - Black-Box Testing: Techniques for Functional Testing of Software and Systems
  - Marick - The Craft of Software Testing
  - Myers - The Art of Software Testing