
Software Testing Seminar, Mooly Sagiv, http://www.math.tau.ac.il/~sagiv/courses/testing.html, Tel Aviv University, 640-6706, Sunday 16-18, Monday 10-12, Schrieber 317

Bibliography • Michal Young, University of Oregon, UW/MSR 1999 • Glenford Myers, “The Art of Software Testing”, 1979!

Outline • Testing Questions • Goals of Testing • The Psychology of Testing • Program Inspections and Reviews • Test Case Design • Achieving Reliability • Other Techniques • A Success Story

Standard Testing Questions • Did this test execution succeed or fail? – Oracles • How shall we select test cases? – Selection; generation • How do we know when we’ve tested enough? – Adequacy • What do we know when we’re done? – Assessment

Possible Goals of Testing • Find faults – Glenford Myers, The Art of Software Testing • Provide confidence – of reliability – of (probable) correctness – of detection (therefore absence) of particular faults

Testing Theory (such as it is) • Plenty of negative results – Nothing guarantees correctness – Statistical confidence is prohibitively expensive – Being systematic may not improve fault detection • as compared to simple random testing • So what did you expect, decision procedures for undecidable problems?

What Information Can We Exploit? • Specifications (formal or informal) – as oracles – for selection, generation, adequacy • Designs –… • Code – for selection, generation, adequacy • Usage (historical or models) • Organizational experience

The Psychology of Testing • “Testing is the process of demonstrating that errors are not present” • “Testing is the process of establishing confidence that the program does what it is intended to do” • “Testing is the process of executing programs with the intent of finding errors” – Successful (positive) test: exposes an error

Black Box Testing • View the program as a black box • Exhaustive testing is infeasible even for tiny programs – Can never guarantee correctness – The fundamental question is economic: maximize the yield on the testing investment – Example: partition testing
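To make "infeasible even for tiny programs" concrete, here is a back-of-the-envelope sketch. The function under test, its two 32-bit integer inputs, and the rate of one billion tests per second are hypothetical assumptions chosen for illustration, not figures from the slides.

```python
# Back-of-the-envelope cost of exhaustively testing a function f(a, b).
# Assumptions (hypothetical): a and b are independent 32-bit integers,
# and the harness runs one billion tests per second.
INPUT_BITS = 2 * 32
TESTS_PER_SECOND = 1e9
SECONDS_PER_YEAR = 365 * 24 * 3600

num_inputs = 2 ** INPUT_BITS                      # every (a, b) combination
years = num_inputs / TESTS_PER_SECOND / SECONDS_PER_YEAR
print(f"{num_inputs:.3e} inputs, about {years:.0f} years")
# prints: 1.845e+19 inputs, about 585 years
```

Even under these optimistic assumptions a two-argument function is out of reach, which is why the question becomes one of choosing a small, high-yield subset of inputs.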

White Box Testing • Investigate the internal structure of the program • Exhaustive path testing is infeasible • Even exhaustive path testing does not guarantee correctness – A specification is still needed – Missing paths are not detected – Data-dependent errors, e.g., if (a-b < epsilon) • Becomes an economic question
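The data-dependent example `if (a-b < epsilon)` can be sketched as follows, assuming (as in Myers' classic version of this example) that the intended test was on the absolute difference |a - b|. The function names and the epsilon value are illustrative.

```python
EPSILON = 1e-6

def converged_buggy(a, b):
    # As written on the slide: a - b < epsilon (no absolute value)
    return a - b < EPSILON

def converged(a, b):
    # Assumed intent: |a - b| < epsilon
    return abs(a - b) < EPSILON

# Two tests exercise both outcomes (true and false) of the buggy condition...
for a, b in [(1.0, 1.0), (2.0, 1.0)]:
    assert converged_buggy(a, b) == converged(a, b)

# ...yet the fault only shows up for particular data, here a < b.
print(converged_buggy(0.0, 5.0), converged(0.0, 5.0))  # True False
```

Both paths through the buggy condition are covered and agree with the intended behavior, so path coverage alone does not reveal the fault.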

Testing Principles • A test case must include the definition of the expected results • A programmer should avoid testing her/his own code • A programming organization should not test its own programs • Thoroughly inspect the results of each test • Test cases must also be written for invalid inputs • Check that programs do not do unexpected things • Test cases should not be thrown away • Do not plan testing under the assumption that no errors will be found • The probability of more errors in a piece of code is proportional to the number of errors already found in that part of the code
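A minimal sketch of three of these principles: every case records its expected result before running, invalid inputs get cases of their own, and the cases are kept in a reusable table instead of being thrown away. The unit under test, `parse_percentage`, is hypothetical.

```python
def parse_percentage(text):
    """Hypothetical unit under test: accept "0".."100" and return an int."""
    value = int(text)  # raises ValueError for non-numeric text
    if not 0 <= value <= 100:
        raise ValueError(f"out of range: {value}")
    return value

# Each case states its expected result up front; invalid inputs expect ValueError.
CASES = [
    ("0", 0), ("42", 42), ("100", 100),        # valid inputs
    ("-1", ValueError), ("101", ValueError),   # out of range
    ("abc", ValueError), ("", ValueError),     # malformed
]

def run_cases(func, cases):
    """Return (input, expected, actual) for every case whose result differs."""
    failures = []
    for text, expected in cases:
        try:
            actual = func(text)
        except ValueError:
            actual = ValueError
        if actual != expected:
            failures.append((text, expected, actual))
    return failures

print(run_cases(parse_percentage, CASES))  # []
```

Because `CASES` is plain data, the same table can be rerun after every change as a regression suite.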

Program Inspections and Reviews • Conducted in groups/sessions • A well-established process • Modern programming languages eliminate many programming errors: – Type errors – Memory violations – Uninitialized variables • But many errors are still not caught automatically – Division by zero – Overflow – Wrong precedence of logical operators
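The following sketch shows two of the listed error classes in a language that rules out type errors and memory violations: the code is accepted and runs, yet it is wrong. Both functions are hypothetical; this is the kind of defect an inspection checklist item targets.

```python
def can_edit_buggy(is_admin, is_owner, locked):
    # Intended: (is_admin or is_owner) and not locked.
    # 'and' binds tighter than 'or', so this means: is_admin or (is_owner and not locked)
    return is_admin or is_owner and not locked

def can_edit(is_admin, is_owner, locked):
    return (is_admin or is_owner) and not locked

def average(values):
    # Accepted by the language, but raises ZeroDivisionError for an empty list
    return sum(values) / len(values)

print(can_edit_buggy(True, False, True), can_edit(True, False, True))  # True False
```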

Test Case Design • What subset of inputs has the highest probability of detecting the most errors? • Combine black and white box testing

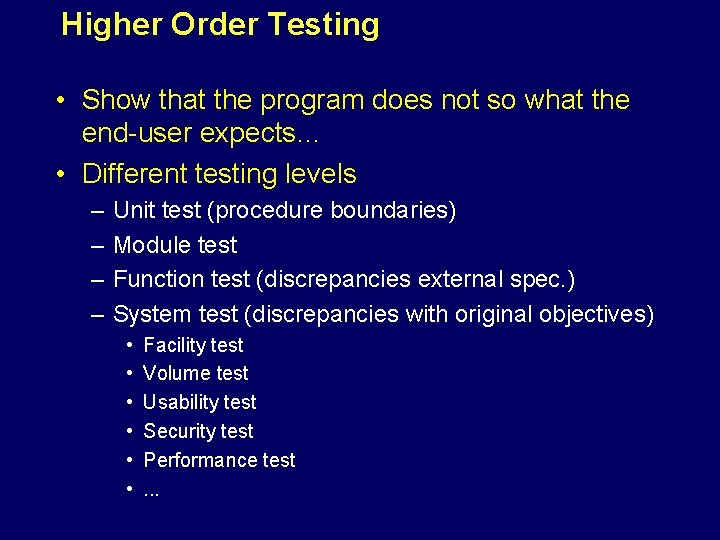

Higher Order Testing • Show that the program does not do what the end user expects… • Different testing levels – Unit test (procedure boundaries) – Module test – Function test (discrepancies with the external specification) – System test (discrepancies with the original objectives) • Facility test • Volume test • Usability test • Security test • Performance test • …

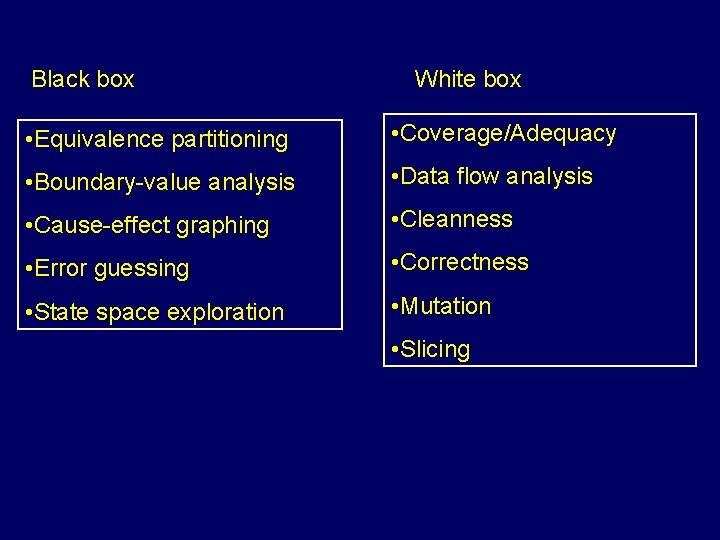

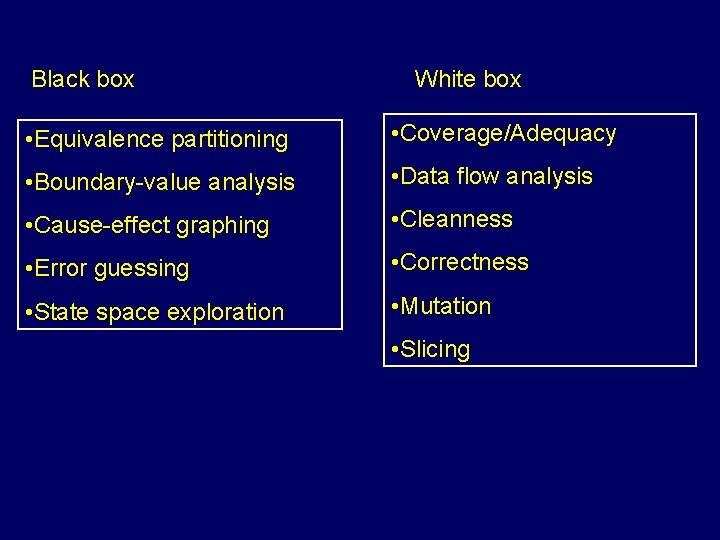

Black box • Equivalence partitioning • Boundary-value analysis • Cause-effect graphing • Error guessing
White box • Coverage/Adequacy • Data flow analysis • Cleanness • Correctness • State space exploration • Mutation • Slicing

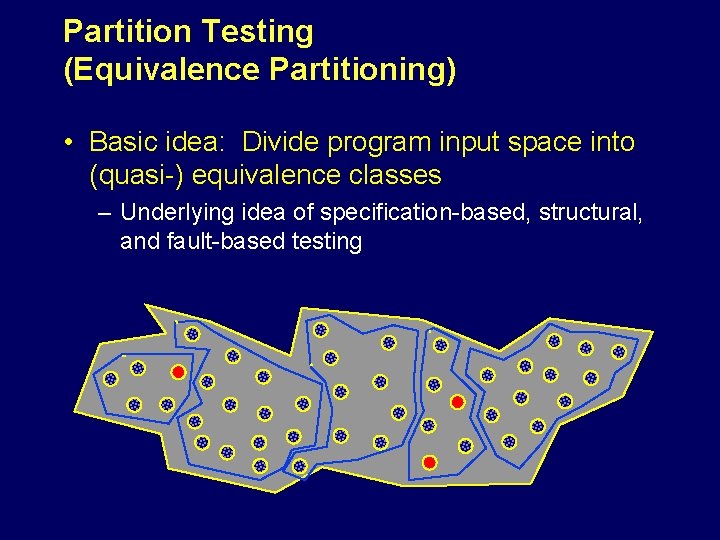

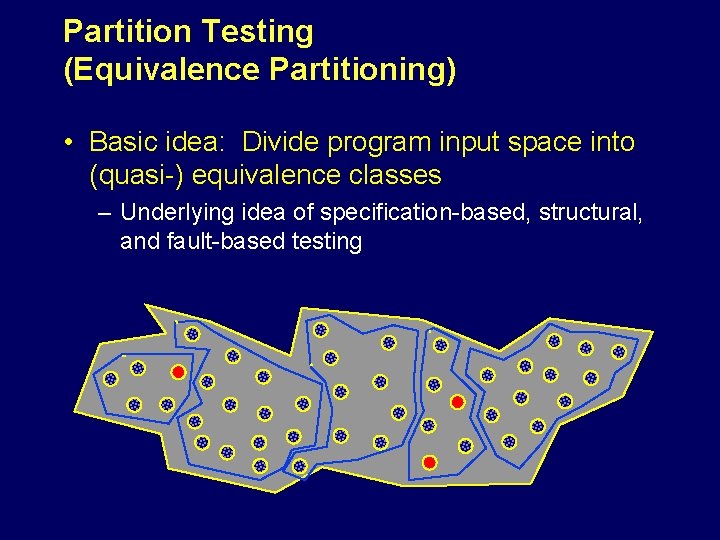

Partition Testing (Equivalence Partitioning) • Basic idea: Divide program input space into (quasi-) equivalence classes – Underlying idea of specification-based, structural, and fault-based testing
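A minimal sketch of equivalence partitioning, assuming a hypothetical shipping-fee specification (weight ≤ 0 is invalid; up to 1 kg costs 5; up to 10 kg costs 10; heavier costs 20). Each class is represented by a single input, on the assumption that all inputs in a class behave alike.

```python
def shipping_fee(weight_kg):
    """Hypothetical unit under test (specification invented for illustration)."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    if weight_kg <= 1:
        return 5
    if weight_kg <= 10:
        return 10
    return 20

# Equivalence classes read off the hypothetical specification,
# each with one representative input and its expected result.
PARTITIONS = {
    "invalid (<= 0)": (-3.0, ValueError),
    "light (0, 1]": (0.5, 5),
    "medium (1, 10]": (4.0, 10),
    "heavy (> 10)": (25.0, 20),
}

def check_partitions(func, partitions):
    """Run one representative per class; return the names of classes that fail."""
    failed = []
    for name, (arg, expected) in partitions.items():
        try:
            actual = func(arg)
        except ValueError:
            actual = ValueError
        if actual != expected:
            failed.append(name)
    return failed

print(check_partitions(shipping_fee, PARTITIONS))  # []
```

Four tests stand in for an infinite input space. The "quasi-" matters: if the partition is wrong, a class may hide inputs that behave differently, which is why boundary-value analysis adds inputs such as 1.0 and 10.0 at the class edges.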

Specification-Based Partition Testing • Divide the program input space according to identifiable cases in the specification – May emphasize boundary cases – May include combinations of features or values • If all combinations are considered, the space is usually too large • Systematically “cover” the categories – May be driven by scripting tools or input generators – Example: Category-Partition testing [Ostrand]
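A simplified sketch in the spirit of category-partition testing: list categories and choices from the specification, form all combinations, then prune infeasible ones with constraints. The "find PATTERN FILE" command, its categories, and the constraint are hypothetical, and the method is reduced to its core here.

```python
from itertools import product
from math import prod

# Categories and choices for a hypothetical "find PATTERN FILE" command.
CATEGORIES = {
    "pattern": ["empty", "one char", "many chars", "quoted"],
    "file": ["missing", "empty", "non-empty"],
    "occurrences": ["none", "one", "many"],
}

def feasible(frame):
    """Constraint: a missing or empty file cannot contain any occurrence."""
    if frame["file"] in ("missing", "empty"):
        return frame["occurrences"] == "none"
    return True

def generate_frames(categories, constraint):
    """Yield every combination of choices that satisfies the constraint."""
    names = list(categories)
    for choices in product(*(categories[name] for name in names)):
        frame = dict(zip(names, choices))
        if constraint(frame):
            yield frame

frames = list(generate_frames(CATEGORIES, feasible))
print(prod(len(c) for c in CATEGORIES.values()), len(frames))  # 36 20
```

Even three small categories give 36 raw combinations; realistic specifications grow multiplicatively, which is why constraints (and often single-choice markings for error cases) are needed to keep the test frames manageable.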

“Adequate” testing • Ideally: adequate testing ensures some property (proof by cases) – Origins in [Goodenough & Gerhart], [Weyuker and Ostrand] – In reality: as impractical as other program proofs • Practical “adequacy” criteria are really “inadequacy” criteria – If no case from class XX has been chosen, surely more testing is needed…

Structural Coverage Testing • (In)adequacy criteria – If significant parts of the program structure are not tested, testing is surely inadequate • Control flow coverage criteria – Statement (node, basic block) coverage – Branch (edge) and condition coverage – Data flow (syntactic dependency) coverage – Various control-flow criteria • An attempted compromise between the impossible and the inadequate

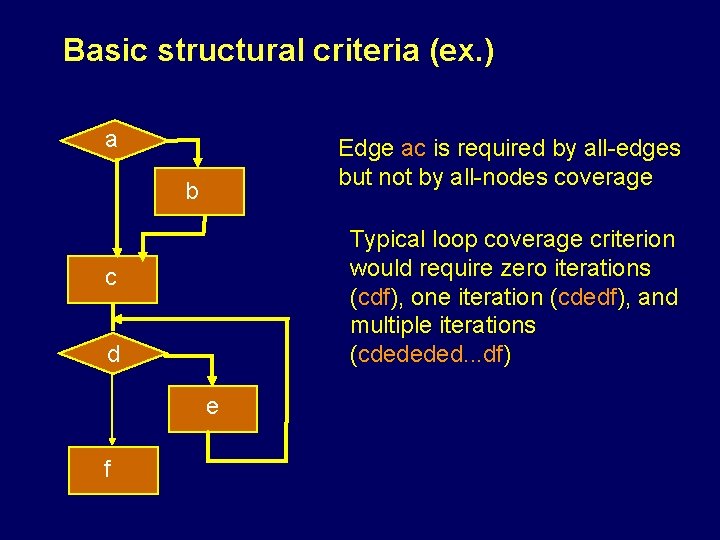

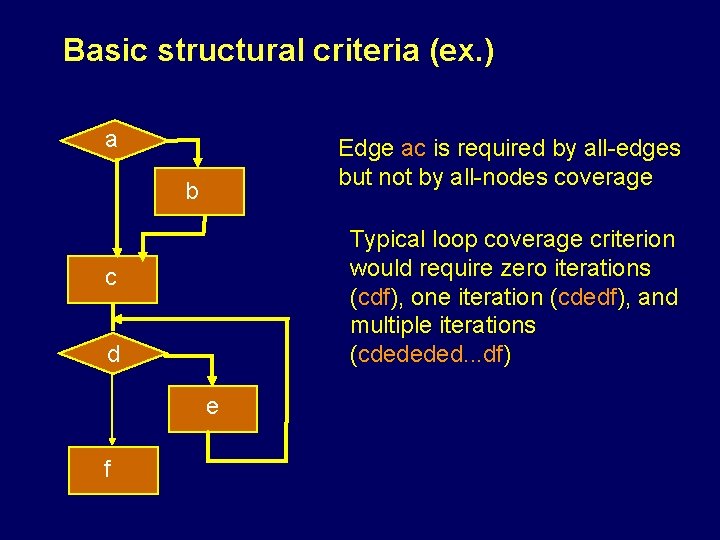

Basic structural criteria (example: control flow graph with nodes a, b, c, d, e, f) • Edge ac is required by all-edges but not by all-nodes coverage • A typical loop coverage criterion would require zero iterations (cdf), one iteration (cdedf), and multiple iterations (cdededed…df)
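The difference between all-nodes and all-edges coverage can be checked mechanically. The graph below is an assumption reconstructed from the example's paths (a→b→c with a bypass edge a→c, then a loop d→e→d between c and f); the figure itself is not reproduced here.

```python
# Control flow graph assumed from the example: a -> b -> c, a -> c (edge ac),
# c -> d, a loop d -> e -> d, and the exit d -> f.
EDGES = {("a", "b"), ("b", "c"), ("a", "c"),
         ("c", "d"), ("d", "e"), ("e", "d"), ("d", "f")}
NODES = {node for edge in EDGES for node in edge}

def uncovered(paths):
    """Return (nodes not visited, edges not traversed) by a set of test paths."""
    seen_nodes, seen_edges = set(), set()
    for path in paths:
        steps = set(zip(path, path[1:]))
        if not steps <= EDGES:
            raise ValueError(f"{path} is not a path in the graph")
        seen_nodes.update(path)
        seen_edges.update(steps)
    return NODES - seen_nodes, EDGES - seen_edges

print(uncovered(["abcdedf"]))          # (set(), {('a', 'c')})
print(uncovered(["abcdedf", "acdf"]))  # (set(), set())
```

The single path abcdedf already satisfies all-nodes, but all-edges needs a second test through ac. A loop criterion would further ask for paths containing cdf, cdedf, and cdededf.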

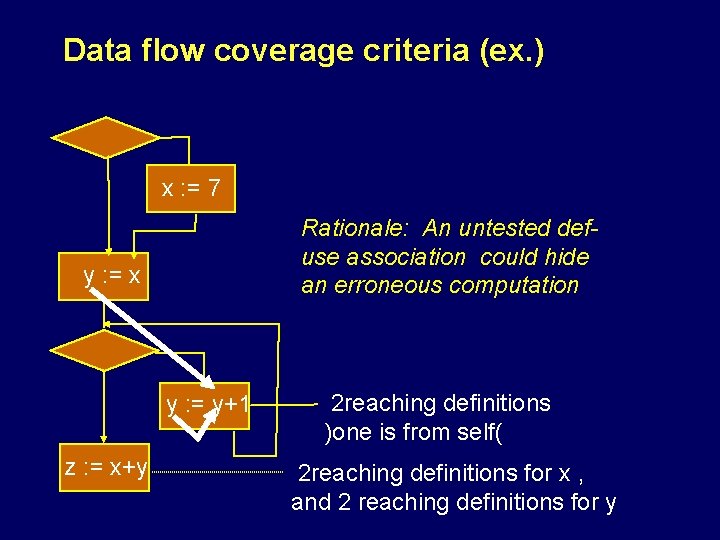

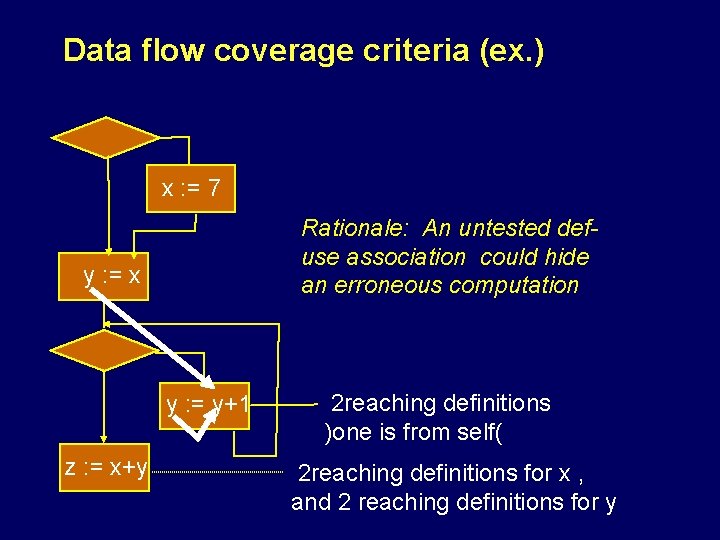

Data flow coverage criteria (example) • Rationale: An untested def-use association could hide an erroneous computation • Statements in the example: x := 7; y := x; y := y+1; z := x+y – At y := y+1: 2 reaching definitions of y (one is from itself) – At z := x+y: 2 reaching definitions for x, and 2 reaching definitions for y

The Infeasibility Problem • Syntactically indicated behaviors (paths, data flows, etc.) are often impossible – Infeasible control flow, data flow, and data states • Adequacy criteria are typically impossible to satisfy • Unsatisfactory approaches: – Manual justification for omitting each impossible test case (esp. for more demanding criteria) – Adequacy “scores” based on coverage • example: 95% statement coverage, 80% def-use coverage
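A small sketch of how infeasible paths cap coverage scores: the hypothetical function below has two branches on the same condition, so two of its four syntactic paths can never execute, and no test set reaches full path coverage.

```python
def classify(x):
    """Hypothetical unit with two correlated branches; returns the path taken."""
    trace = []
    if x > 0:
        trace.append("A-then")
    else:
        trace.append("A-else")
    if x > 0:  # same condition as above, so the branch outcomes are correlated
        trace.append("B-then")
    else:
        trace.append("B-else")
    return tuple(trace)

SYNTACTIC_PATHS = {(a, b) for a in ("A-then", "A-else") for b in ("B-then", "B-else")}
observed = {classify(x) for x in range(-1000, 1001)}
score = len(observed) / len(SYNTACTIC_PATHS)
print(f"path coverage: {score:.0%}")  # path coverage: 50%
```

No additional input raises the score; someone has to recognize (manually, in general) that the remaining two paths are infeasible.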

Challenges in Structural Coverage • Interprocedural and gross-level coverage – e.g., interprocedural data flow, call-graph coverage • Regression testing • Late binding (OO programming languages) – coverage of actual and apparent polymorphism • Fundamental challenge: Infeasible behaviors – underlies problems in inter-procedural and polymorphic coverage, as well as obstacles to adoption of more sophisticated coverage criteria and dependence analysis

Structural Coverage in Practice • Statement and sometimes edge or condition coverage is used in practice – Simple lower bounds on adequate testing; may even be harmful if inappropriately used for test selection • Additional control flow heuristics sometimes used – Loops (never, once, many), combinations of conditions

Testing for Reliability • Reliability is statistical, and requires a statistically valid sampling scheme • Programs are complex human artifacts with few useful statistical properties • In some cases the environment (usage) of the program has useful statistical properties – Usage profiles can be obtained for relatively stable, pre-existing systems (telephones), or systems with thoroughly modeled environments (avionics)
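A minimal sketch of statistical testing against a usage profile: operations are sampled in proportion to their assumed frequency in the field, and the observed failure fraction estimates the failure rate a user would see. The profile, the operations, and the simulated failure behavior are all hypothetical.

```python
import random

# Hypothetical usage profile for a telephone switch: operation -> probability
PROFILE = {"local call": 0.70, "long distance": 0.25, "conference": 0.05}

def system_under_test(operation, rng):
    """Stand-in system that fails on 2% of conference calls (invented for illustration)."""
    return not (operation == "conference" and rng.random() < 0.02)

def estimate_failure_rate(n_runs, seed=0):
    """Sample operations according to PROFILE and return the fraction that fail."""
    rng = random.Random(seed)
    ops, weights = zip(*PROFILE.items())
    failures = 0
    for _ in range(n_runs):
        op = rng.choices(ops, weights=weights)[0]
        if not system_under_test(op, rng):
            failures += 1
    return failures / n_runs

rate = estimate_failure_rate(100_000)
print(rate)  # an estimate of the true rate 0.05 * 0.02 = 0.001
```

The estimate is only as valid as the profile: if real usage shifts toward conference calls, the measured reliability no longer applies.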

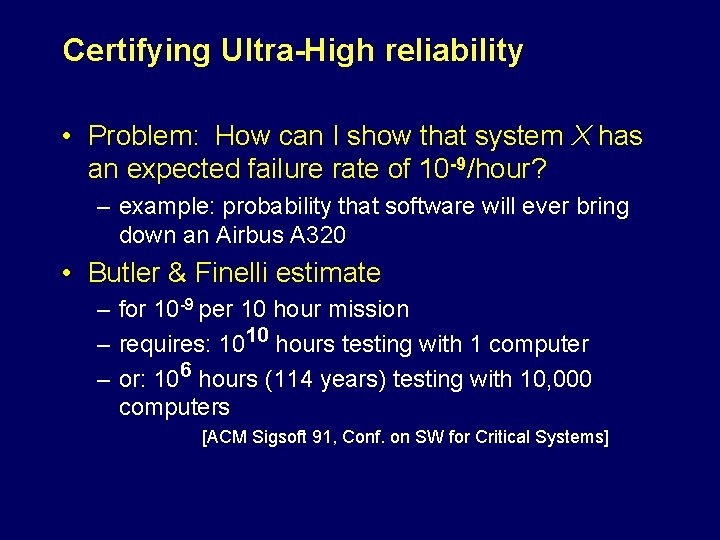

Certifying Ultra-High Reliability • Problem: How can I show that system X has an expected failure rate of 10^-9 per hour? – example: probability that software will ever bring down an Airbus A320 • Butler & Finelli estimate – for 10^-9 per 10-hour mission – requires: 10^10 hours of testing with 1 computer – or: 10^6 hours (114 years) of testing with 10,000 computers [ACM SIGSOFT ’91 Conference on Software for Critical Systems]
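The slide's arithmetic can be checked directly. The two inputs (10^10 total test hours, 10,000 computers) come from the slide; the conversion assumes an average year of 365.25 days.

```python
# Reproduce the slide's arithmetic for the Butler & Finelli estimate.
REQUIRED_TEST_HOURS = 10 ** 10   # total hours of testing (from the slide)
COMPUTERS = 10_000               # machines testing in parallel (from the slide)
HOURS_PER_YEAR = 24 * 365.25     # assumption: average year length

hours_each = REQUIRED_TEST_HOURS / COMPUTERS
years_each = hours_each / HOURS_PER_YEAR
print(f"{hours_each:.0e} hours per computer, about {years_each:.0f} years")
# prints: 1e+06 hours per computer, about 114 years
```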

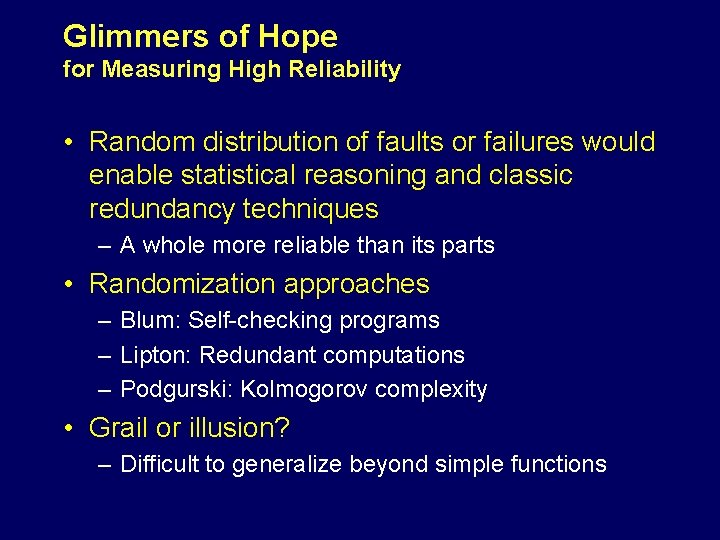

Glimmers of Hope for Measuring High Reliability • Random distribution of faults or failures would enable statistical reasoning and classic redundancy techniques – A whole more reliable than its parts • Randomization approaches – Blum: Self-checking programs – Lipton: Redundant computations – Podgurski: Kolmogorov complexity • Grail or illusion? – Difficult to generalize beyond simple functions
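A minimal sketch in the spirit of self-checking programs: an untrusted sort is wrapped by a result checker that is simpler than the computation it checks, so a wrong answer is detected instead of returned. This is a plain result checker chosen for illustration, not Blum's construction itself; `buggy_sort` is hypothetical.

```python
from collections import Counter

def check_sort(inputs, outputs):
    """Result checker: accept only if outputs is an ordered permutation of inputs."""
    ordered = all(x <= y for x, y in zip(outputs, outputs[1:]))
    same_elements = Counter(inputs) == Counter(outputs)
    return ordered and same_elements

def checked_sort(sort_impl, data):
    """Run an untrusted sort implementation, but never return an unchecked answer."""
    result = sort_impl(list(data))
    if not check_sort(data, result):
        raise RuntimeError("sort implementation produced a wrong answer")
    return result

def buggy_sort(xs):
    """Hypothetical faulty implementation: silently drops duplicates."""
    return sorted(set(xs))

print(checked_sort(sorted, [3, 1, 2, 1]))  # [1, 1, 2, 3]
```

`checked_sort(buggy_sort, [3, 1, 2, 1])` raises `RuntimeError`. The checker runs in linear time, but as the slide notes, such checkers are easiest to build for simple, well-specified functions.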

Process-Based Reliability Testing • Rather than relying only on properties of the program, we may use historical characteristics of the development process • Reliability growth models (Musa, Littlewood, et al.) project reliability based on experience with the current system and with previous similar systems

Fault-based testing • Given a fault model – hypothesized set of deviations from correct program – typically, simple syntactic mutations; relies on coupling of simple faults with complex faults • Coverage criterion: Test set should be adequate to reveal (all, or x%) faults generated by the model – similar to hardware test coverage
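A minimal mutation-testing sketch: the fault model is a hypothetical set of single syntactic changes to a small function, and a test set's score is the fraction of mutants it "kills" (makes disagree with the original on some test input).

```python
def original(a, b):
    """Unit under test: the larger of a and b."""
    return a if a > b else b

# Hypothetical fault model: single syntactic changes to `original`.
MUTANTS = {
    "> replaced by <": lambda a, b: a if a < b else b,
    "> replaced by >=": lambda a, b: a if a >= b else b,  # equivalent mutant
    "always return a": lambda a, b: a,
    "always return b": lambda a, b: b,
}

def mutation_score(tests):
    """Return (killed mutant names, fraction of mutants killed) for a test set."""
    killed = {name for name, mutant in MUTANTS.items()
              if any(mutant(a, b) != original(a, b) for a, b in tests)}
    return killed, len(killed) / len(MUTANTS)

print(mutation_score([(1, 2)])[1])          # 0.5
print(mutation_score([(1, 2), (3, 1)])[1])  # 0.75
```

The `>=` mutant returns the same value as the original for every input, so no test can kill it. Equivalent mutants are the fault-based counterpart of infeasible paths: they keep the score below 100% until someone argues them away by hand.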

Fault Models • Fault models are key to semiconductor testing – Test vectors graded by coverage of an accepted model of faults (e.g., “stuck-at” faults) • What are fault models for software? – What would a fault model look like? – How general would it be? • Across application domains? • Across organizations? • Across time? • Defect tracking is a start

The Budget Coverage Criterion • A common answer to “when is testing done” – When the money is used up – When the deadline is reached • This is sometimes a rational approach! – Implication 1: Test selection is more important than stopping criteria per se. – Implication 2: Practical comparison of approaches must consider the cost of test case selection

Test Selection: Standard Advice • Specification coverage is good for selection as well as adequacy – applicable to informal as well as formal specs • + Fault-based tests – usually ad hoc, sometimes from check-lists • Program coverage last – to suggest uncovered cases, not just to achieve a coverage criterion

The Importance of Oracles • Much testing research has concentrated on adequacy and ignored oracles • Much testing practice has relied on the “eyeball oracle” – Expensive, especially for regression testing • makes large numbers of tests infeasible – Not dependable • Automated oracles are essential to cost-effective testing

Sources of Oracles • Specifications – sufficiently formal (e.g., Z spec) – but possibly incomplete (e.g., assertions in Anna, ADL, APP, Nana) • Design models – treated as specifications, as in protocol conformance testing • Prior runs (capture/replay) – especially important for regression testing and GUIs; hard problem is parameterization
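A minimal sketch of two automated oracle sources from the list: an assertion derived from a partial specification, and capture/replay of a trusted earlier run. The unit under test `mean` is hypothetical, and the recorded outputs are kept in memory as a JSON string, standing in for a file saved between runs.

```python
import json

def mean(values):
    """Hypothetical unit under test."""
    return sum(values) / len(values)

def spec_oracle(values, result):
    """Assertion from a partial specification: a mean lies between min and max.
    Incomplete: a median would pass this check as well."""
    return min(values) <= result <= max(values)

def capture(func, inputs):
    """Record the outputs of a trusted run (e.g., saved to a file for later replay)."""
    return json.dumps([func(x) for x in inputs])

def replay(func, inputs, recorded):
    """Regression oracle: current outputs must match the recorded ones exactly."""
    return json.dumps([func(x) for x in inputs]) == recorded

INPUTS = [[1, 2, 3], [5], [2.5, 3.5]]
golden = capture(mean, INPUTS)
print(all(spec_oracle(x, mean(x)) for x in INPUTS))  # True
print(replay(mean, INPUTS, golden))                  # True
```

Exact replay is brittle once outputs contain timestamps, random values, or screen coordinates; deciding which parts of a recording may vary is the parameterization problem the slide mentions.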

What can be automated? • Oracles – assertions; replay; from some specifications • Selection (Generation) – scripting; specification-driven; replay variations – selective regression test • Coverage – statement, branch, dependence • Management

Design for Test: Three Principles (adapted from circuit and chip design) • Observability – Providing the right interfaces to observe the behavior of an individual unit or subsystem • Controllability – Providing interfaces to force behaviors of interest • Partitioning – Separating control and observation of one component from details of others
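A minimal sketch of the three principles on a hypothetical session timer. Controllability comes from an injectable clock, so a test can force "30 seconds later" without waiting. Observability comes from exposing the remaining time. Partitioning comes from separating the timer from the real system clock. The class and parameter names are illustrative.

```python
import time

class SessionTimer:
    """Hypothetical component: a session expires after `timeout` seconds."""

    def __init__(self, timeout, clock=time.monotonic):
        self._timeout = timeout
        self._clock = clock          # controllability: tests can inject a fake clock
        self._started = clock()

    def remaining(self):
        """Observability: expose internal state instead of hiding it."""
        return max(0.0, self._timeout - (self._clock() - self._started))

    def expired(self):
        return self.remaining() == 0.0

class FakeClock:
    """Test double whose time advances only when the test says so."""
    def __init__(self):
        self.now = 0.0

    def __call__(self):
        return self.now

clock = FakeClock()
timer = SessionTimer(timeout=30.0, clock=clock)
clock.now = 29.0
print(timer.expired(), timer.remaining())  # False 1.0
clock.now = 31.0
print(timer.expired())                     # True
```

In production the default `time.monotonic` is used; only tests substitute the fake clock.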

Problems & Opportunities • Compositionality – for components; for regression • Specifications – low entry barrier, incremental payoff • Synergy with Analysis – conformance test w/ verified models – “backstop” for unsafe assumptions • … (your idea here)

A recent success story • The PREfix program analysis tool • Analyzes C/C++ sources • Scans for cleanness bugs, e.g., dereferences of NULL pointers • Symbolically executes the program on some paths • May miss some errors and generate false alarms • Tried on Windows 2000 • Located 65,000 potential bugs, 28,000 of which are real bugs