Software Testing

- Slides: 36

Reference: Software Engineering, Ian Sommerville, 6th edition, Chapter 20

CMSC 345, Version 4/04

Topics Covered

- The testing process
- Defect testing
  - Black box testing
    - Equivalence partitions
  - White box testing
    - Equivalence partitions
    - Path testing
- Integration testing
  - Top-down, bottom-up, sandwich, thread
  - Interface testing
  - Stress testing
- Object-oriented testing

Testing Goal

- The goal of testing is to discover defects in programs.
- A successful test is one that causes a program to behave in an anomalous way.
- Tests show the presence, not the absence, of defects.
- Test planning should be continuous throughout the software development process.
- Testing is the only validation technique for non-functional requirements, because the software must be executed to observe how it behaves.

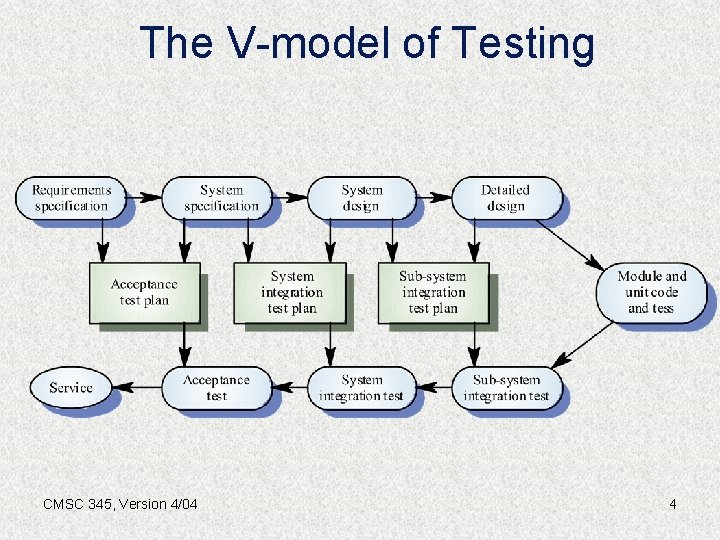

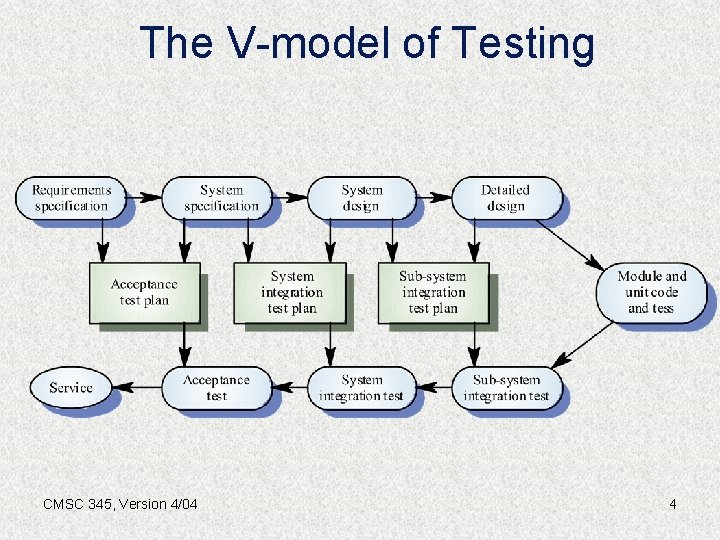

The V-model of Testing

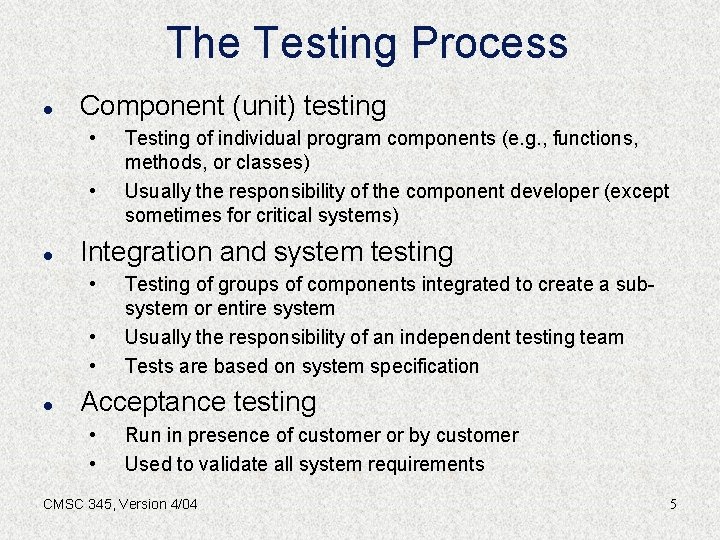

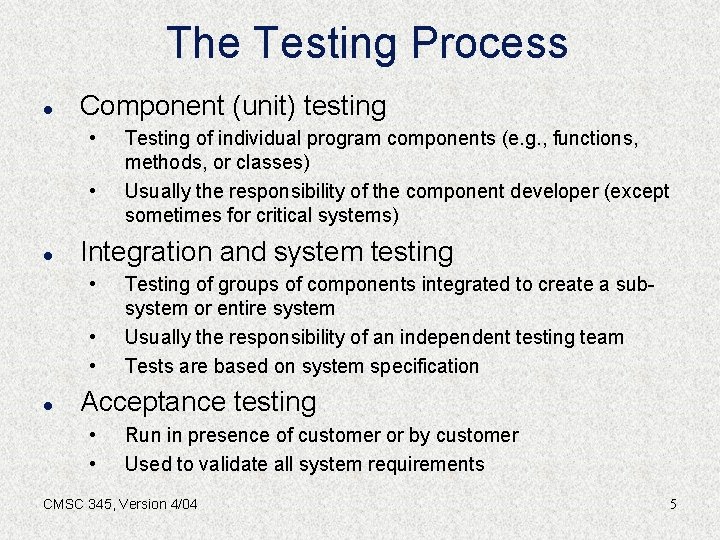

The Testing Process

- Component (unit) testing
  - Testing of individual program components (e.g., functions, methods, or classes)
  - Usually the responsibility of the component developer (except sometimes for critical systems)
- Integration and system testing
  - Testing of groups of components integrated to create a subsystem or the entire system
  - Usually the responsibility of an independent testing team
  - Tests are based on the system specification
- Acceptance testing
  - Run in the presence of the customer or by the customer
  - Used to validate all system requirements

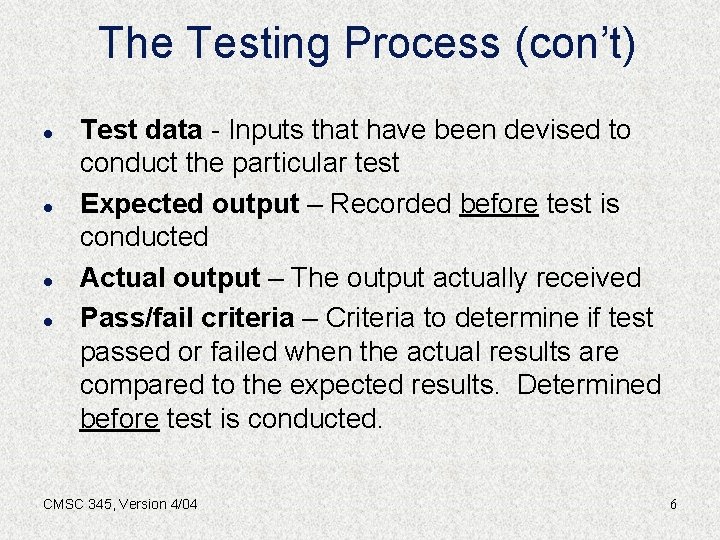

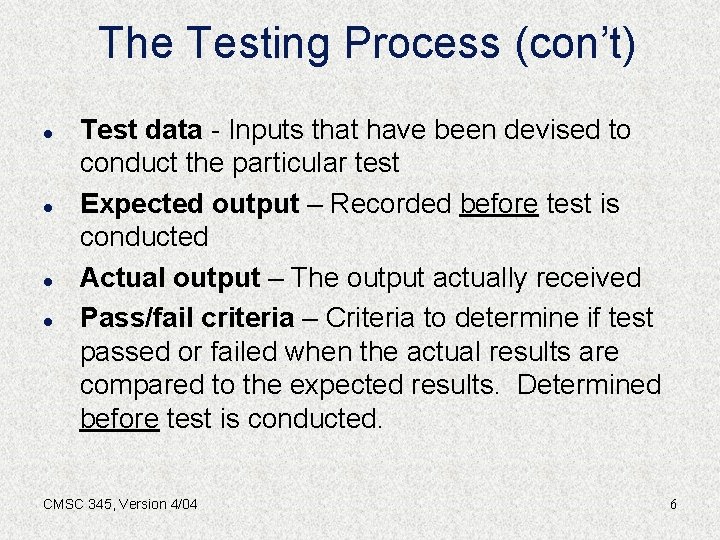

The Testing Process (cont’d)

- Test data: inputs that have been devised to conduct the particular test
- Expected output: recorded before the test is conducted
- Actual output: the output actually received
- Pass/fail criteria: criteria for deciding whether the test passed or failed when the actual results are compared with the expected results; determined before the test is conducted
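The four elements above can be sketched as a small Python record and runner. This is an illustration under assumptions, not part of the course material: `PlannedTest`, `run_test`, `exact_match`, `within_tolerance`, and the unit under test `absolute` are hypothetical names, and exact equality is assumed as the default pass/fail criterion.

```python
import math
from dataclasses import dataclass
from typing import Any, Callable


# Hypothetical record of one planned test (names are illustrative only).
@dataclass(frozen=True)
class PlannedTest:
    name: str
    test_data: tuple        # inputs devised for this particular test
    expected_output: Any    # recorded BEFORE the test is run


def exact_match(actual: Any, expected: Any) -> bool:
    """Default pass/fail criterion: actual output equals expected output."""
    return actual == expected


def run_test(test: PlannedTest, unit: Callable[..., Any],
             passes: Callable[[Any, Any], bool] = exact_match) -> bool:
    """Run the unit on the test data and apply the pass/fail criterion,
    which is chosen before execution, not after seeing the result."""
    actual_output = unit(*test.test_data)
    return passes(actual_output, test.expected_output)


# Hypothetical unit under test.
def absolute(x: int) -> int:
    return x if x >= 0 else -x


unit_tests = [
    PlannedTest("positive input", (5,), 5),
    PlannedTest("negative input", (-5,), 5),
    PlannedTest("zero", (0,), 0),
]
results = {t.name: run_test(t, absolute) for t in unit_tests}

# For floating-point output, the criterion must allow for rounding error.
sqrt_test = PlannedTest("square root of 2", (2.0,), 1.41421356)


def within_tolerance(actual: float, expected: float) -> bool:
    return math.isclose(actual, expected, rel_tol=1e-6)
```

With `exact_match`, `run_test(sqrt_test, math.sqrt)` fails even though `math.sqrt` is correct, while `within_tolerance` passes it; this is why the pass/fail criterion has to be fixed, along with the expected output, before the test is run.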

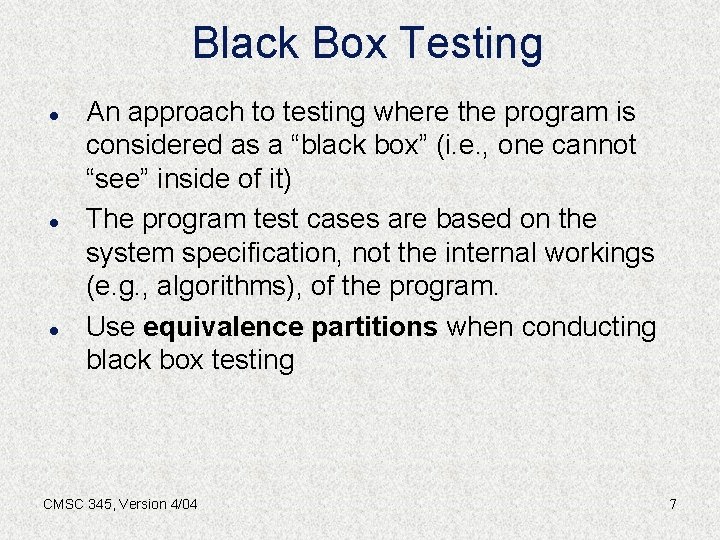

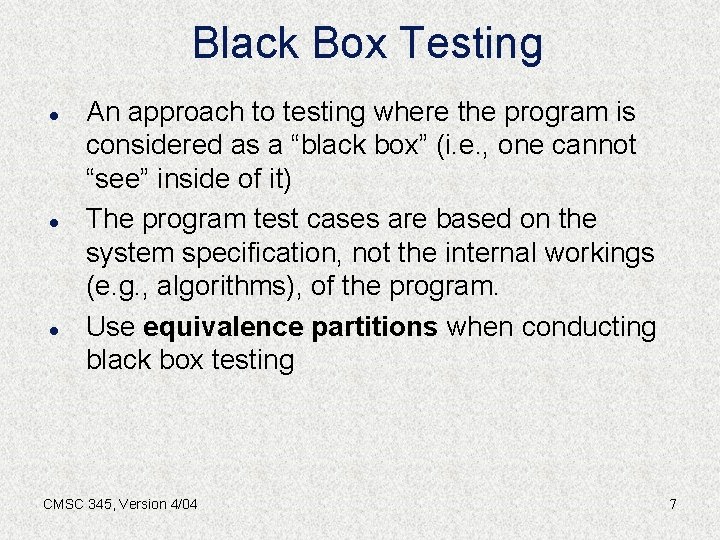

Black Box Testing

- An approach to testing where the program is considered as a “black box” (i.e., one cannot “see” inside of it)
- The program test cases are based on the system specification, not on the internal workings (e.g., algorithms) of the program.
- Use equivalence partitions when conducting black box testing.

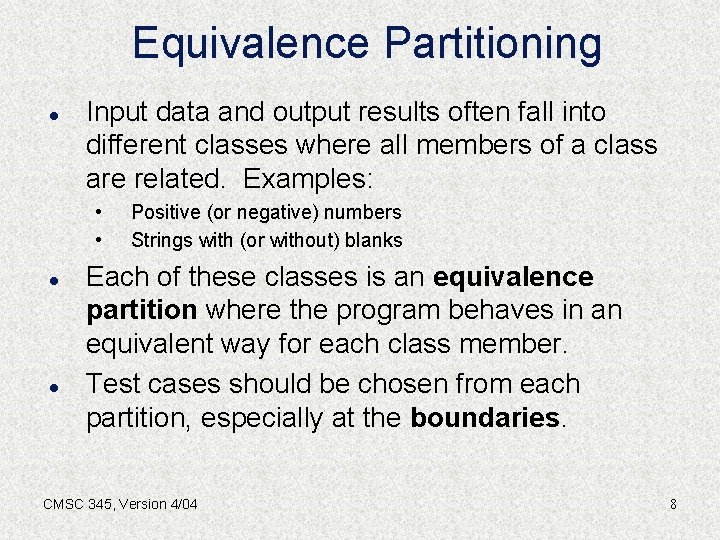

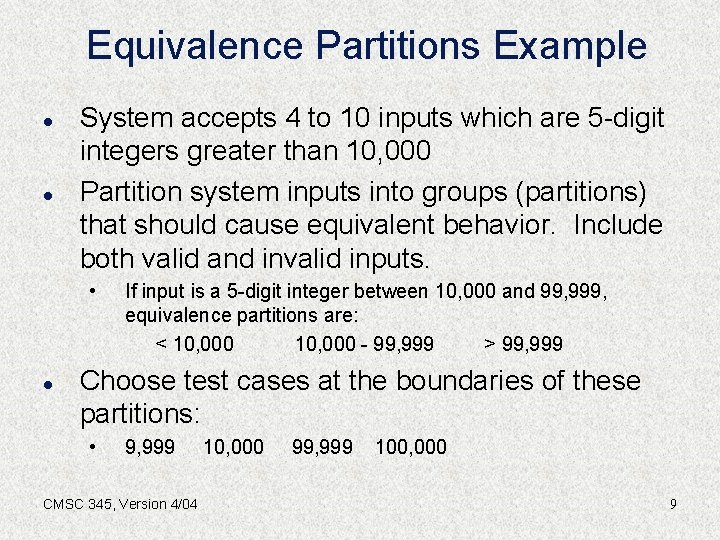

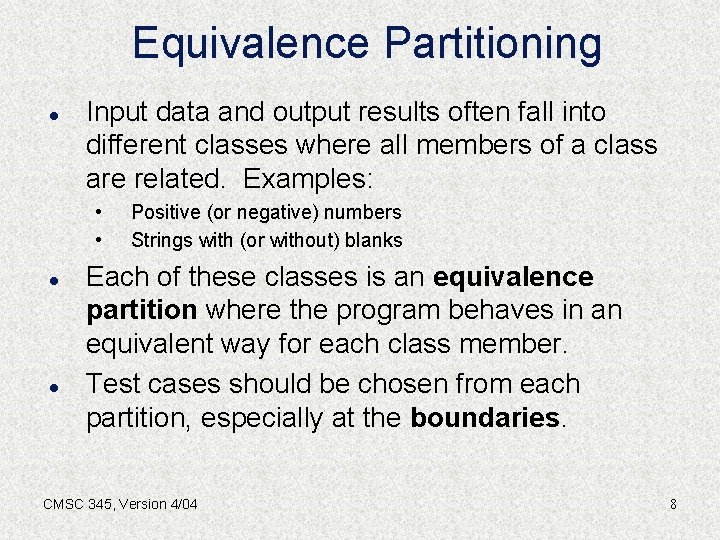

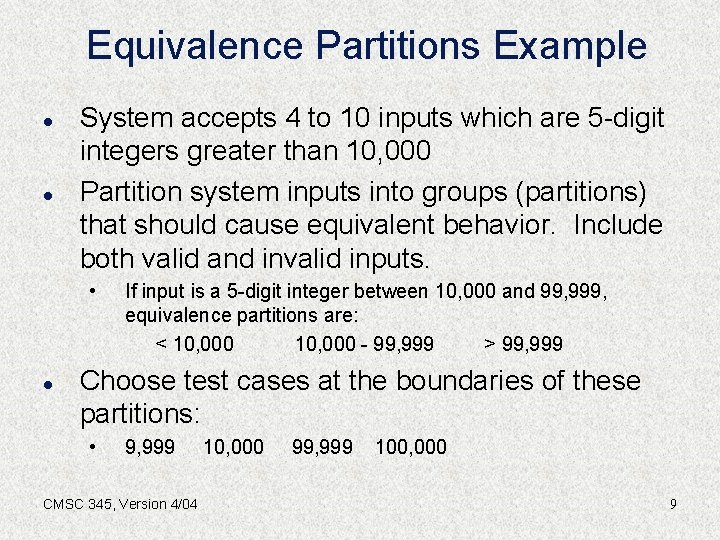

Equivalence Partitioning

- Input data and output results often fall into different classes where all members of a class are related. Examples:
  - Positive (or negative) numbers
  - Strings with (or without) blanks
- Each of these classes is an equivalence partition where the program behaves in an equivalent way for each class member.
- Test cases should be chosen from each partition, especially at the boundaries.

Equivalence Partitions Example

- The system accepts 4 to 10 inputs, each of which is a 5-digit integer (10,000 to 99,999).
- Partition the system inputs into groups (partitions) that should cause equivalent behavior. Include both valid and invalid inputs.
  - If the input is a 5-digit integer between 10,000 and 99,999, the equivalence partitions are: < 10,000; 10,000 to 99,999; > 99,999
- Choose test cases at the boundaries of these partitions:
  - 9,999; 10,000; 99,999; 100,000
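A minimal Python sketch of the partitioning above. It assumes the valid value range is 10,000 to 99,999 and applies the same reasoning to the "4 to 10 inputs" constraint, which the specification implies but the slide does not spell out; it also adds one mid-partition value per range, since test cases should come from each partition. `partition` and `pick_values` are hypothetical helper names.

```python
# Hypothetical encoding of the example specification: 4 to 10 inputs,
# each a 5-digit integer (10,000..99,999).
VALUE_LOW, VALUE_HIGH = 10_000, 99_999
COUNT_LOW, COUNT_HIGH = 4, 10


def partition(x: int, low: int, high: int) -> str:
    """Name the equivalence partition that x falls into for the range [low, high]."""
    if x < low:
        return "below range (invalid)"
    if x > high:
        return "above range (invalid)"
    return "in range (valid)"


def pick_values(low: int, high: int) -> list[int]:
    """Values just outside and exactly on each boundary, plus one mid-partition value."""
    return [low - 1, low, (low + high) // 2, high, high + 1]


value_cases = pick_values(VALUE_LOW, VALUE_HIGH)  # [9999, 10000, 54999, 99999, 100000]
count_cases = pick_values(COUNT_LOW, COUNT_HIGH)  # [3, 4, 7, 10, 11]
```

A full test set would combine the two dimensions, for example 3 valid values (too few inputs) and 4 values that include 9,999 (right count, one invalid value), so that each invalid partition is exercised on its own.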

Equivalence Partitions Example

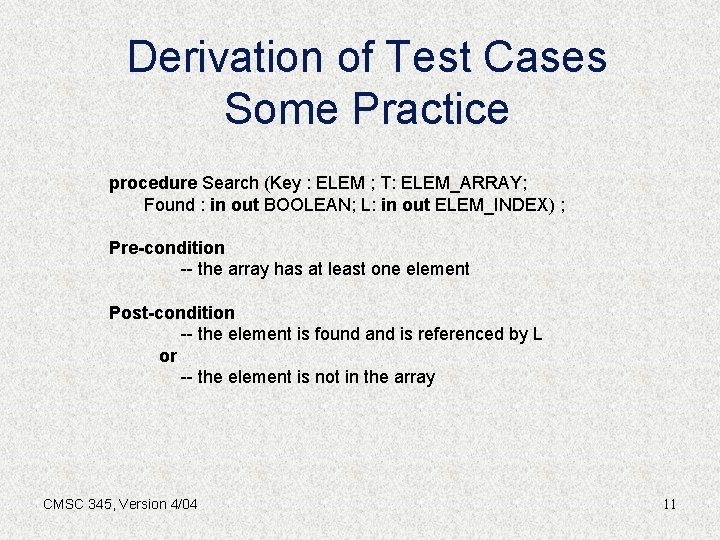

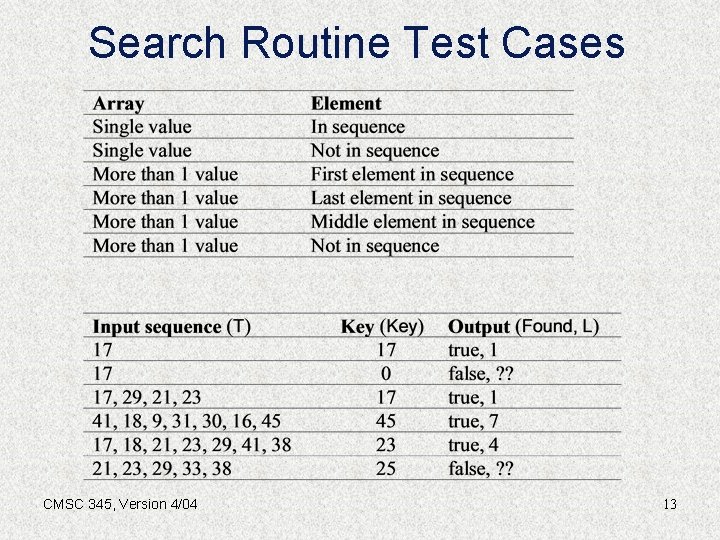

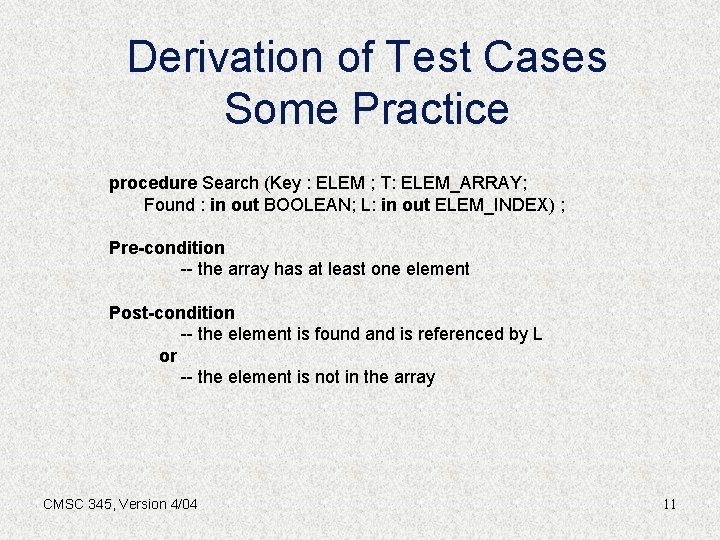

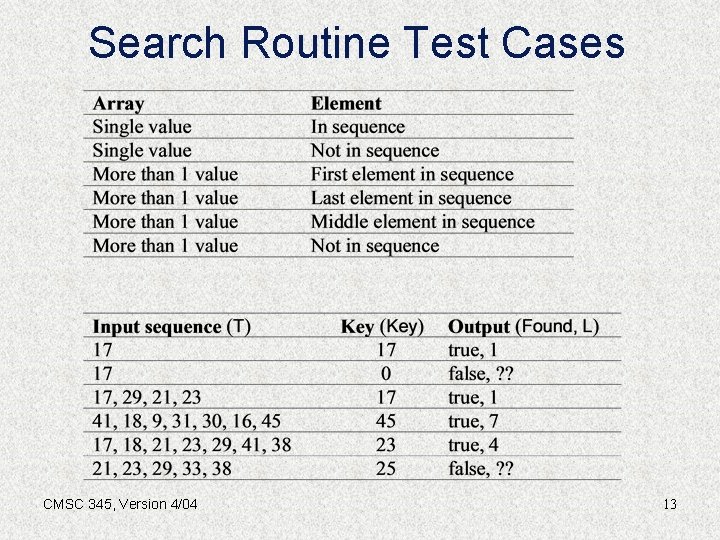

Derivation of Test Cases: Some Practice

    procedure Search (Key   : ELEM;
                      T     : ELEM_ARRAY;
                      Found : in out BOOLEAN;
                      L     : in out ELEM_INDEX);
    -- Pre-condition:
    --   the array has at least one element
    -- Post-condition:
    --   the element is found and is referenced by L
    --   or the element is not in the array

What Tests Should We Use?

- Equivalence partitions

Search Routine Test Cases
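The original test-case table for this slide did not survive extraction, so the Python sketch below shows one plausible set of black box cases, not the slide's table. It assumes a hypothetical `search` function that returns `(found, location)` in place of the Ada `in out` parameters, and a test oracle, `satisfies_postcondition`, taken directly from the post-condition. Partitions: a single-element array versus a longer one, and the key being first, in the middle, last, or absent.

```python
from typing import Optional, Sequence, Tuple


def search(key: int, t: Sequence[int]) -> Tuple[bool, Optional[int]]:
    """Hypothetical Python stand-in for the Ada Search procedure.

    Returns (Found, L) instead of using in out parameters. A linear scan
    is assumed; as black box tests, the cases below do not depend on it.
    """
    if len(t) < 1:
        raise ValueError("pre-condition violated: the array is empty")
    for i, element in enumerate(t):
        if element == key:
            return True, i
    return False, None


def satisfies_postcondition(key: int, t: Sequence[int],
                            found: bool, location: Optional[int]) -> bool:
    """Oracle from the post-condition; `location` plays the role of L."""
    if found:
        return location is not None and t[location] == key
    return key not in t


# One test per partition: (description, array, key).
PARTITION_CASES = [
    ("single element, key present", [17], 17),
    ("single element, key absent", [17], 0),
    ("several elements, key first", [17, 29, 21, 23, 31], 17),
    ("several elements, key in middle", [17, 29, 21, 23, 31], 21),
    ("several elements, key last", [17, 29, 21, 23, 31], 31),
    ("several elements, key absent", [17, 29, 21, 23, 31], 25),
]

outcomes = {name: satisfies_postcondition(key, t, *search(key, t))
            for name, t, key in PARTITION_CASES}
```

Checking results against the post-condition, rather than against hard-coded indices, keeps the tests independent of how the routine is implemented, which is the point of black box testing.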

White Box Testing

- Sometimes called structural testing
- Test cases are derived from the program structure (the tester can “see” inside)
- The objective is to exercise all program statements at least once
- Usually applied to relatively small program units such as functions or class methods

Binary Search Routine

- Assume that we now know that the search routine is a binary search.
- Any new tests?

Binary Search Test Cases

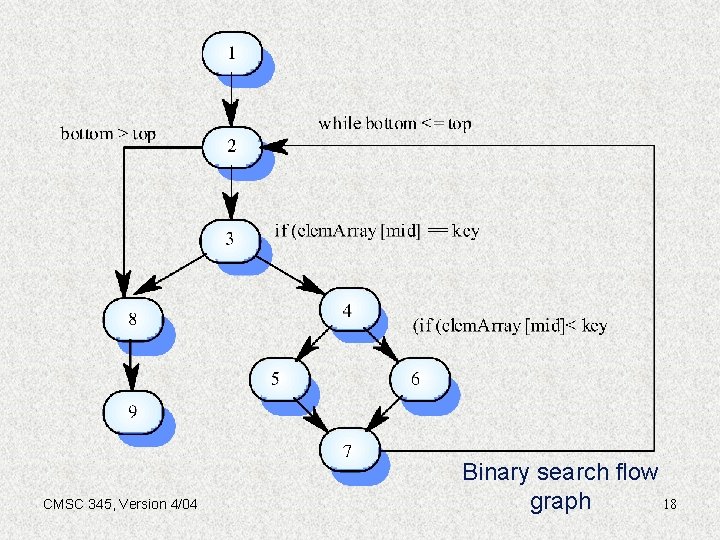

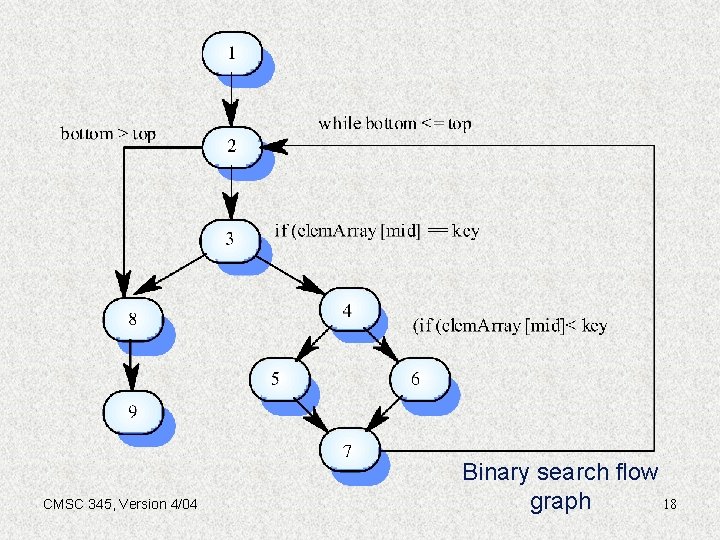
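Knowing that the routine is a binary search suggests new partitions based on its structure: the key at the midpoint probed first, and keys immediately on either side of it, in addition to the black box cases. The Python sketch below assumes a conventional iterative binary search over a sorted list; `binary_search`, `ARRAY`, and `WHITE_BOX_CASES` are hypothetical and are not the slide's own table.

```python
from typing import Optional, Sequence, Tuple


def binary_search(key: int, t: Sequence[int]) -> Tuple[bool, Optional[int]]:
    """Iterative binary search over a sorted, non-empty sequence.

    Returns (found, location), mirroring Search's Found and L.
    The 'sorted' pre-condition is assumed, not checked.
    """
    if len(t) < 1:
        raise ValueError("pre-condition violated: the array is empty")
    bottom, top = 0, len(t) - 1
    while bottom <= top:
        mid = (bottom + top) // 2
        if t[mid] == key:
            return True, mid
        if t[mid] < key:
            bottom = mid + 1   # key can only be in the upper half
        else:
            top = mid - 1      # key can only be in the lower half
    return False, None


ARRAY = [3, 8, 12, 15, 21, 27, 40]   # sorted; the first probe is index 3 (value 15)

# (description, key, expected (found, location))
WHITE_BOX_CASES = [
    ("key at first midpoint", 15, (True, 3)),
    ("key just below midpoint", 12, (True, 2)),
    ("key just above midpoint", 21, (True, 4)),
    ("key is first element", 3, (True, 0)),
    ("key is last element", 40, (True, 6)),
    ("key absent, in lower half", 10, (False, None)),
    ("key absent, in upper half", 30, (False, None)),
    ("key below every element", 1, (False, None)),
    ("key above every element", 99, (False, None)),
]

failures = [name for name, key, expected in WHITE_BOX_CASES
            if binary_search(key, ARRAY) != expected]
```

An empty `failures` list means every case matched. Arrays of both odd and even length are also worth trying, since the midpoint calculation rounds down.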

Path Testing

- The objective of path testing is to ensure that the set of test cases is such that each path through the program is executed at least once.
- The starting point for path testing is a program flow graph.

Binary search flow graph

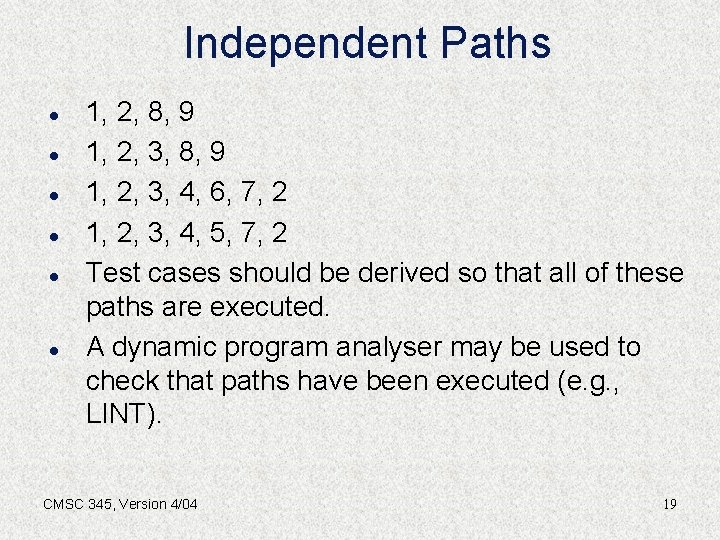

Independent Paths

- 1, 2, 8, 9
- 1, 2, 3, 4, 6, 7, 2
- 1, 2, 3, 4, 5, 7, 2
- Test cases should be derived so that all of these paths are executed.
- A dynamic program analyser (e.g., a coverage tool such as gcov) may be used to check that paths have been executed.
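A dynamic analyser records what actually executes while the tests run. The minimal Python sketch below imitates that by hand-instrumenting a binary search so that each run logs the decision outcomes it takes. The outcome labels and the names `traced_binary_search` and `outcomes_exercised` are hypothetical; they do not correspond to the node numbers in the flow graph figure, and a real project would use a coverage tool rather than manual logging.

```python
from typing import List, Optional, Sequence, Set, Tuple

# Hypothetical labels for the outcomes of the search's decisions.
EXIT_NOT_FOUND = "loop exits: not found"
FOUND = "key found at midpoint"
GO_UPPER = "continue in upper half"
GO_LOWER = "continue in lower half"
ALL_OUTCOMES = {EXIT_NOT_FOUND, FOUND, GO_UPPER, GO_LOWER}


def traced_binary_search(key: int, t: Sequence[int],
                         trace: List[str]) -> Tuple[bool, Optional[int]]:
    """Binary search that appends each decision outcome it takes to `trace`."""
    bottom, top = 0, len(t) - 1
    while True:
        if bottom > top:
            trace.append(EXIT_NOT_FOUND)
            return False, None
        mid = (bottom + top) // 2
        if t[mid] == key:
            trace.append(FOUND)
            return True, mid
        if t[mid] < key:
            trace.append(GO_UPPER)
            bottom = mid + 1
        else:
            trace.append(GO_LOWER)
            top = mid - 1


def outcomes_exercised(keys: Sequence[int], t: Sequence[int]) -> Set[str]:
    """Run one test per key and collect every decision outcome observed."""
    seen: Set[str] = set()
    for key in keys:
        trace: List[str] = []
        traced_binary_search(key, t, trace)
        seen.update(trace)
    return seen


ARRAY = [3, 8, 12, 15, 21, 27, 40]
missing = ALL_OUTCOMES - outcomes_exercised([15], ARRAY)
```

Here the single test key 15 hits the midpoint on the first probe, so `missing` still holds three outcomes; adding a test for an absent key such as 10 exercises the remaining ones.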

Cyclomatic Complexity

- The cyclomatic complexity (CC) of a program’s flow graph equals the number of independent paths through it; designing a test case for each independent path ensures that every statement and every branch is executed at least once.
- CC = number_edges - number_nodes + 2
- In the case of no gotos, CC = number_decisions + 1
- Although every independent path is executed, not every combination of paths is executed.
- Some paths may be impossible to test.
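Both formulas can be checked on a small graph. The Python sketch below assumes a single connected flow graph given as a list of (from, to) edges with only binary decisions; the graph is a hypothetical loop containing an if statement, numbered by us, not the binary search graph in the figure. `cyclomatic_complexity` and `decision_count` are illustrative names.

```python
from collections import Counter

Edge = tuple[int, int]


def cyclomatic_complexity(edges: list[Edge]) -> int:
    """CC = number_edges - number_nodes + 2, for one connected flow graph."""
    nodes = {node for edge in edges for node in edge}
    return len(edges) - len(nodes) + 2


def decision_count(edges: list[Edge]) -> int:
    """Count decision nodes, i.e. nodes with two outgoing edges."""
    out_degree = Counter(source for source, _ in edges)
    return sum(1 for degree in out_degree.values() if degree == 2)


# Hypothetical flow graph (our own numbering):
#   1: initialise; 2: while-test; 3: if-test; 4: then-branch;
#   5: else-branch; 6: exit. Nodes 4 and 5 loop back to 2.
LOOP_WITH_IF = [(1, 2), (2, 3), (2, 6), (3, 4), (3, 5), (4, 2), (5, 2)]

cc = cyclomatic_complexity(LOOP_WITH_IF)               # 7 - 6 + 2 = 3
decisions_plus_one = decision_count(LOOP_WITH_IF) + 1  # 2 + 1 = 3
```

Both routes give 3 for this graph, so three test cases (loop skipped, loop with the then-branch, loop with the else-branch) cover its independent paths.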

Integration Testing

- Tests the complete system, or subsystems composed of integrated components
- Integration testing is black box testing, with tests derived from the requirements and design specifications.
- The main difficulty is localizing errors; incremental integration testing reduces this problem.

Incremental Integration Testing

Approaches to Integration Testing

- Top-down testing
  - Start with the high-level system and integrate from the top down, replacing individual components by stubs where appropriate
- Bottom-up testing
  - Integrate individual components in levels until the complete system is created
- In practice, most integration testing involves a combination of both of these strategies:
  - Sandwich testing (outside-in)
  - Thread testing
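A minimal Python sketch of a stub in top-down testing, assuming the lower-level component is passed in so that it can be swapped out. `format_statement`, `BalanceStub`, and the canned balances are hypothetical. The stub returns fixed answers and records how it was called, so the high-level component can be tested before the real lower-level component is integrated.

```python
from typing import Callable


def format_statement(customer_id: str,
                     fetch_balance: Callable[[str], float]) -> str:
    """Hypothetical high-level component under test.

    It depends on a lower-level component (fetch_balance) that is passed in,
    so a stub can replace it during top-down integration.
    """
    balance = fetch_balance(customer_id)
    status = "OVERDRAWN" if balance < 0 else "OK"
    return f"{customer_id}: {balance:.2f} ({status})"


class BalanceStub:
    """Stub for the lower-level component that is not yet integrated.

    Returns canned answers and records every call it receives.
    """

    def __init__(self, canned: dict[str, float]):
        self.canned = canned
        self.calls: list[str] = []

    def __call__(self, customer_id: str) -> float:
        self.calls.append(customer_id)
        return self.canned[customer_id]


stub = BalanceStub({"C1": 120.5, "C2": -3.0})
```

In bottom-up testing the roles are reversed: a test driver calls a real low-level component directly, standing in for the higher levels that do not exist yet.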

Top-down Testing

Bottom-up Testing

Method “Pros”

- Top-down
- Bottom-up
- Sandwich
- Thread

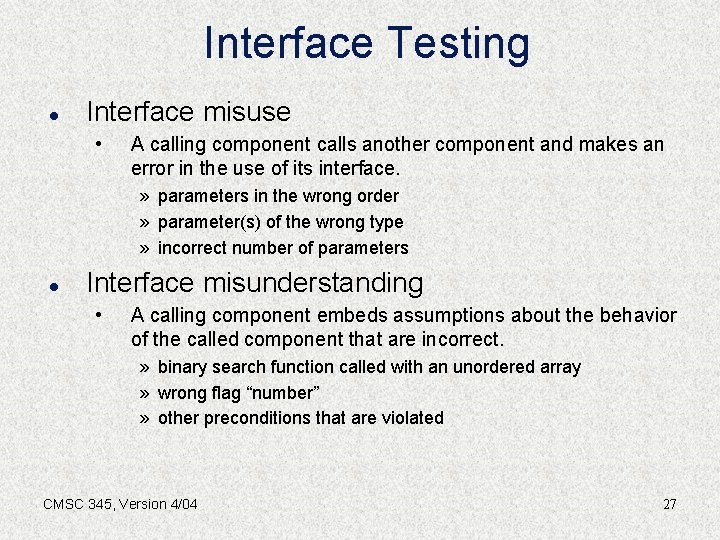

Interface Testing

- Interface misuse
  - A calling component calls another component and makes an error in the use of its interface:
    - parameters in the wrong order
    - parameter(s) of the wrong type
    - incorrect number of parameters
- Interface misunderstanding
  - A calling component embeds incorrect assumptions about the behavior of the called component:
    - binary search function called with an unordered array
    - wrong flag “number”
    - other preconditions that are violated
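The binary search example of interface misunderstanding can be reproduced in a few lines of Python. The called component `contains_sorted` is hypothetical and wraps the standard library's `bisect.bisect_left`, which assumes its input list is sorted; passing an unordered list breaks that precondition without raising any error.

```python
import bisect


def contains_sorted(values: list[int], key: int) -> bool:
    """Hypothetical called component: binary search for key.

    Pre-condition (part of the interface, easy to miss): values is sorted.
    """
    i = bisect.bisect_left(values, key)
    return i < len(values) and values[i] == key


unordered = [9, 1, 7, 3]

# Interface misunderstanding: the caller assumes any list will do.
silently_wrong = contains_sorted(unordered, 9)    # False, although 9 is present
# Correct use: establish the pre-condition first.
correct = contains_sorted(sorted(unordered), 9)   # True
```

By contrast, the interface misuse of passing the parameters in the wrong order, `contains_sorted(9, [1, 3, 7, 9])`, fails immediately with a `TypeError`; the unordered-array call gives no such signal and is exposed only by a test that checks the result.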

Interface Types

- Parameter interfaces
  - Data passed from one procedure to another
    - functions
- Shared memory interfaces
  - Block of memory is shared between procedures
    - global data
- Message passing interfaces
  - Sub-systems request services from other sub-systems
    - OO systems
    - client-server systems

Some Interface Testing Guidelines

- Design tests so that the parameters passed to a called procedure are at the extreme ends of their ranges (boundaries).
- Always test pointer parameters with null pointers.
- Design tests that cause the component to fail.
  - violate preconditions
- In shared memory systems, vary the order in which components are activated.
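A minimal Python sketch applying these guidelines to a hypothetical called component, `take_prefix`. Python has no pointers, so `None` plays the role of the null pointer here; rejecting violated preconditions with `ValueError` is an assumption of the sketch, as is the helper `rejects`.

```python
from typing import Callable, Optional


def take_prefix(text: Optional[str], n: int) -> str:
    """Hypothetical called component: the first n characters of text.

    Pre-conditions: text is not None and 0 <= n <= len(text).
    Violations are rejected with ValueError (an assumption of this sketch).
    """
    if text is None:
        raise ValueError("text must not be None")
    if not 0 <= n <= len(text):
        raise ValueError(f"n={n} is outside 0..{len(text)}")
    return text[:n]


def rejects(call: Callable[[], object]) -> bool:
    """True if the call fails cleanly with ValueError."""
    try:
        call()
    except ValueError:
        return True
    return False


TEXT = "interface"

# Parameters at the extreme ends of their valid range.
boundary_results = [take_prefix(TEXT, 0), take_prefix(TEXT, len(TEXT))]

# Tests designed to make the component fail: null argument, out-of-range values.
violations_rejected = [
    rejects(lambda: take_prefix(None, 0)),
    rejects(lambda: take_prefix(TEXT, -1)),
    rejects(lambda: take_prefix(TEXT, len(TEXT) + 1)),
]
```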

Stress Testing

- Exercises the system beyond its maximum design load:
  - Exceed string lengths
  - Store/manipulate more data than in the specification
  - Load the system with more users than in the specification
- Stressing the system often causes defects to come to light.
- Systems should not fail catastrophically. Stress testing checks for unacceptable loss of service or data.
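A minimal Python sketch of a stress test, assuming a hypothetical component, `BoundedQueue`, whose design load is a fixed number of queued items. The hypothetical `stress` function offers three times that load and checks the two properties the slide asks for: the component keeps running, and no stored data is lost.

```python
from collections import deque


class BoundedQueue:
    """Hypothetical component whose design load is `capacity` queued items.

    Beyond that load it refuses new items rather than crashing or
    discarding items it has already accepted.
    """

    def __init__(self, capacity: int):
        self.capacity = capacity
        self._items: deque = deque()

    def offer(self, item: int) -> bool:
        if len(self._items) >= self.capacity:
            return False          # reject the extra item; keep stored data
        self._items.append(item)
        return True

    def snapshot(self) -> list:
        return list(self._items)


def stress(queue: BoundedQueue, load_factor: int = 3) -> dict:
    """Offer load_factor times the design load and report what happened."""
    offered = queue.capacity * load_factor
    accepted = sum(queue.offer(i) for i in range(offered))
    return {
        "offered": offered,
        "accepted": accepted,
        "data_intact": queue.snapshot() == list(range(queue.capacity)),
    }


report = stress(BoundedQueue(capacity=100))
```

Refusing work is only one acceptable response to overload; the essential checks are that the test completes and that `data_intact` holds.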

Object-oriented Testing

- The components to be tested are classes that are instantiated as objects.
- There is no obvious “top” or “bottom” to the system for top-down or bottom-up integration and testing.
- Levels:
  - Testing class methods
  - Testing the class as a whole
  - Testing clusters of cooperating classes
  - Testing the complete OO system

Class Testing

- Complete test coverage of a class involves:
  - Testing all operations associated with an object
  - Setting and interrogating all object attributes
  - Exercising the object in all possible states; i.e., all events that cause a state (attribute) change in the object should be tested
- Inheritance makes it more difficult to design class tests, as the information to be tested is not localized.
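A minimal Python sketch of state-based class testing for a hypothetical `BoundedStack` with three states (EMPTY, PARTIAL, FULL). The hypothetical helpers drive one object through every valid state transition and also send events that are invalid in a given state, checking that those are rejected without changing the state.

```python
class BoundedStack:
    """Hypothetical class under test with states EMPTY, PARTIAL and FULL."""

    def __init__(self, capacity: int):
        if capacity < 2:
            raise ValueError("capacity must be at least 2 so PARTIAL exists")
        self.capacity = capacity
        self._items: list = []

    @property
    def state(self) -> str:
        """Interrogate the object's state, derived from its attributes."""
        if not self._items:
            return "EMPTY"
        return "FULL" if len(self._items) == self.capacity else "PARTIAL"

    def push(self, item) -> None:
        if self.state == "FULL":
            raise OverflowError("push on a full stack")
        self._items.append(item)

    def pop(self):
        if self.state == "EMPTY":
            raise IndexError("pop on an empty stack")
        return self._items.pop()


def transition_trace() -> list[str]:
    """Drive one stack through every valid transition, recording each state."""
    stack = BoundedStack(capacity=2)
    states = [stack.state]
    for event in (lambda: stack.push("a"), lambda: stack.push("b"),
                  stack.pop, stack.pop):
        event()
        states.append(stack.state)
    return states


def invalid_events_rejected() -> bool:
    """Events invalid in the current state must fail and leave the state as is."""
    empty = BoundedStack(capacity=2)
    full = BoundedStack(capacity=2)
    full.push(1)
    full.push(2)
    try:
        empty.pop()
        return False
    except IndexError:
        pass
    try:
        full.push(3)
        return False
    except OverflowError:
        pass
    return empty.state == "EMPTY" and full.state == "FULL"
```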

Object Integration

- Levels of integration are less distinct in object-oriented systems.
- Cluster testing is concerned with integrating and testing clusters of cooperating objects.
- Identify clusters using knowledge of the operation of objects and the system features that are implemented by these clusters.

Approaches to Cluster Testing

- Use case or scenario testing
  - Testing is based on user (actor) interactions with the system
  - Has the advantage that it tests system features as experienced by users
- Thread testing
  - Tests the system’s response to events as processing threads through the system
    - e.g., a button click

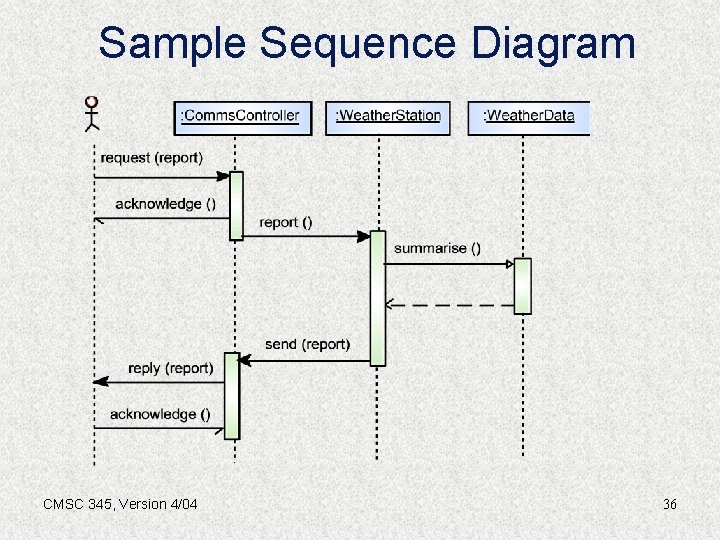

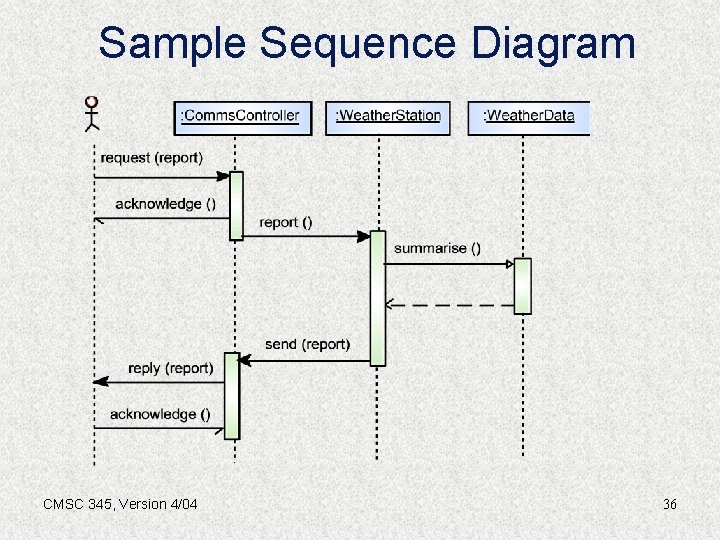

Scenario-based Testing

- Identify scenarios from use cases and supplement these with sequence diagrams that show the objects involved in the scenario.
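A minimal Python sketch of a scenario-based test for a cluster of three cooperating objects, assuming a hypothetical "withdraw cash" use case with `ATM`, `Bank`, and `Dispenser` classes. Each run of the hypothetical `run_withdraw_scenario` covers one scenario and returns both the outcome and the sequence of messages between objects, which is what a sequence diagram for that scenario would show.

```python
class Bank:
    def __init__(self, balances: dict[str, int]):
        self.balances = dict(balances)

    def debit(self, account: str, amount: int) -> bool:
        if self.balances.get(account, 0) < amount:
            return False
        self.balances[account] -= amount
        return True


class Dispenser:
    def __init__(self):
        self.dispensed: list[int] = []

    def dispense(self, amount: int) -> None:
        self.dispensed.append(amount)


class ATM:
    """Coordinates the scenario; logs each message as (sender, receiver, message)."""

    def __init__(self, bank: Bank, dispenser: Dispenser):
        self.bank = bank
        self.dispenser = dispenser
        self.messages: list[tuple[str, str, str]] = []

    def withdraw(self, account: str, amount: int) -> str:
        self.messages.append(("ATM", "Bank", "debit"))
        if not self.bank.debit(account, amount):
            return "insufficient funds"
        self.messages.append(("ATM", "Dispenser", "dispense"))
        self.dispenser.dispense(amount)
        return "cash dispensed"


def run_withdraw_scenario(balance: int, amount: int):
    """Set up the cluster, run one scenario, return what a tester would check."""
    bank = Bank({"acc-1": balance})
    dispenser = Dispenser()
    atm = ATM(bank, dispenser)
    outcome = atm.withdraw("acc-1", amount)
    return outcome, atm.messages, bank.balances["acc-1"], dispenser.dispensed
```

The main flow (enough funds) and the alternative flow (insufficient funds) come from the same use case, so each gets its own scenario run with its own expected message sequence.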

Sample Sequence Diagram