
Software Testing Methods and Techniques
Philippe CHARMAN
charman@fr.ibm.com
http://users.polytech.unice.fr/~charman/
Last update: 21-05-2014

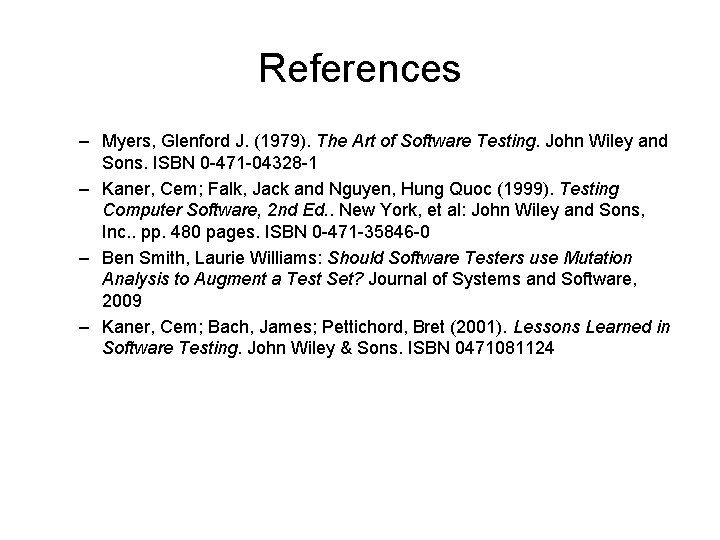

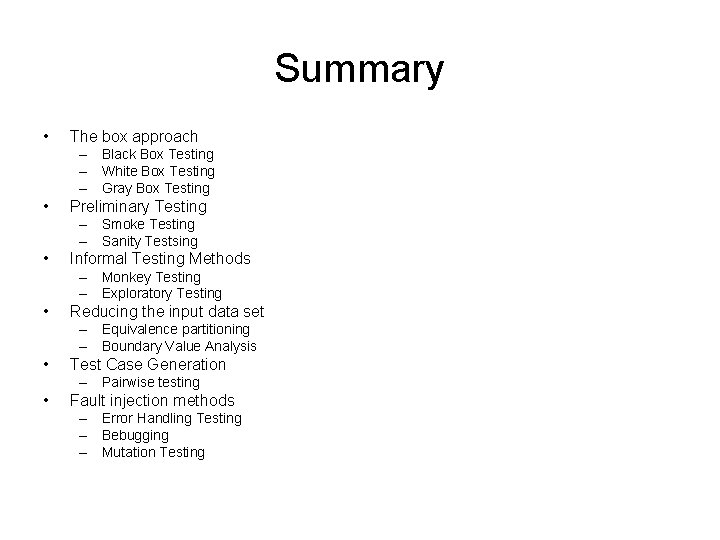

Summary
• The box approach
  – Black Box Testing
  – White Box Testing
  – Gray Box Testing
• Preliminary Testing
  – Smoke Testing
  – Sanity Testing
• Informal Testing Methods
  – Monkey Testing
  – Exploratory Testing
• Reducing the input data set
  – Equivalence Partitioning
  – Boundary Value Analysis
• Test Case Generation
  – Pairwise Testing
• Fault injection methods
  – Error Handling Testing
  – Bebugging
  – Mutation Testing

About the Testing Terminology
• Software testing has developed many redundant or overlapping terms over the last decade
• For instance, black box testing is equivalent to specification-based testing or functional testing
• The reference is the ISTQB glossary

Black Box Testing
• Testing software based on output requirements and without any knowledge of the internal structure or coding of the program
  Input -> Program -> Output
• Black box testing is focused on results
• Synonyms: specification-based testing, functional testing

Black Box Testing
• Pros
  – Quick understanding of the system behaviour
  – Simplicity: design the input and get the output
  – Unaffiliated (unbiased) opinion
• Cons
  – Too many redundant test cases may be created
  – Some test cases may be missed

Black Box Test Design Techniques
• boundary value analysis
• cause-effect graphing
• classification tree method
• decision table testing
• domain analysis
• elementary comparison testing
• equivalence partitioning
• n-wise testing
• pairwise testing
• process cycle test
• random testing
• state transition testing
• syntax testing
• use case testing
• user story testing

White Box Testing
• White box testing is when the tester has access to the internal data structures and algorithms, including the code that implements them
• Some types:
  – Code review
  – Code coverage
  – Performance
  – etc.
• Usually performed with dedicated QA tools (static or dynamic tools)
• Synonyms: clear-box testing, glass box testing, code-based testing, logic-coverage testing, logic-driven testing, structural testing

White Box Testing
• Pros
  – May detect missing test cases with code coverage
  – May detect bugs with dedicated tools (memory debuggers, static code analysis tools, etc.)
  – Unit tests can be easily automated
• Cons
  – Programming skills required
  – Need to understand the implementation, which may be complicated
  – Time-consuming activity

White Box Test Design Techniques
• branch testing
• condition testing
• data flow testing
• decision condition testing
• decision testing
• LCSAJ testing
• modified condition decision testing
• multiple condition testing
• path testing
• statement testing

Gray Box Testing
• Combination of black box testing and white box testing
• Idea: if one knows something about how the product works on the inside, one can test it better, even from the outside

Gray Box Testing
• Pros
  – Better test suite with fewer redundant and more relevant test cases
• Cons
  – The tester may lose his/her unaffiliated opinion

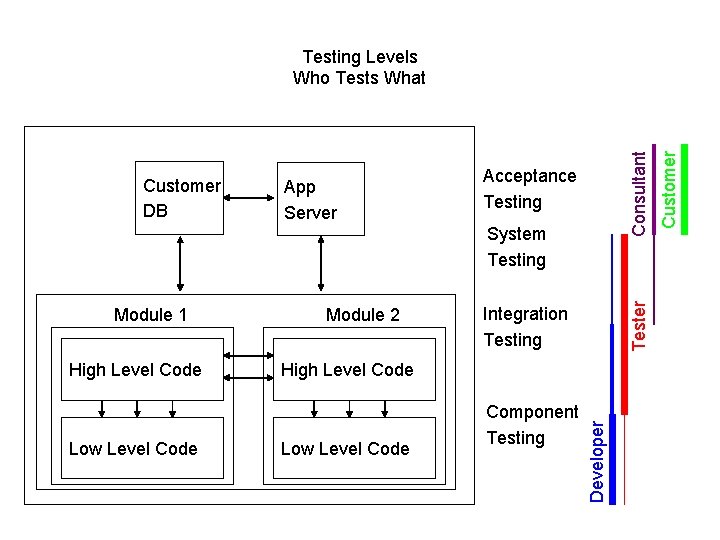

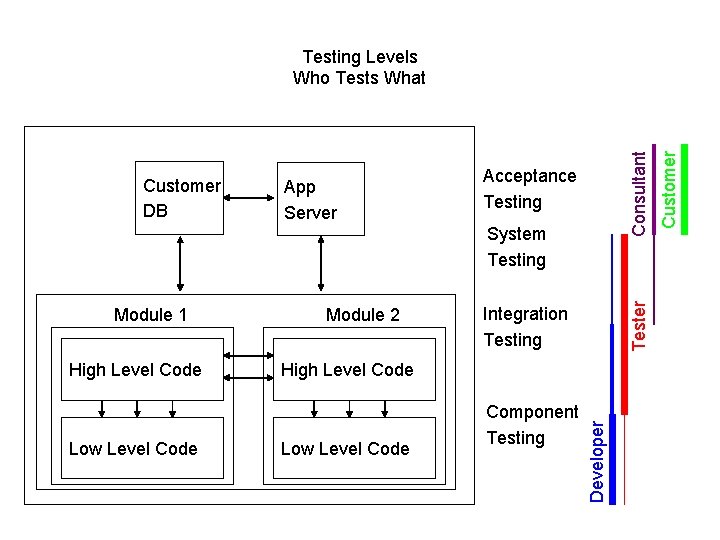

Testing Levels: Who Tests What
[Diagram: the testing levels (Component Testing, Integration Testing, System Testing, Acceptance Testing) mapped to who performs them (Developer, Tester, Consultant, Customer), over Module 1 and Module 2 (each with high-level and low-level code), the App Server, and the DB]

Smoke Testing
• In electronics jargon: when a new electrical system is to be tested, plug it into the power
  – If there's smoke, stop the tests
  – If there's no smoke, more tests can be executed
• In software engineering:
  – Preliminary tests before advanced tests, for instance when assembling the different modules
  – A smoke test is a quick test done at integration time
• Good practice: perform smoke tests after every build in a continuous integration system
• A smoke test should not be confused with a stress test!

Sanity Testing
• Quick, broad, and shallow testing
• Two possible goals:
  – Determine whether it is reasonable to proceed with further testing
  – Determine whether the very last release candidate can be shipped (provided more advanced and detailed tests have been performed on previous release candidates)
• Example when testing a GUI: checking that every action in the menus works for at least one test case

Smoke Testing vs. Sanity Testing
• According to a widespread but unfortunate opinion:
  – A smoke test determines whether it is possible to continue testing
    • e.g. “File > Open” doesn't work at all, whatever the data format -> useless to perform more tests
  – A sanity test determines whether it is reasonable to continue testing
    • e.g. “File > Open” works only for a subset of the data formats -> we should be able to perform more tests
• According to ISTQB:
  – smoke testing = sanity testing

Monkey Testing
• Monkey testing is random testing performed by
  – a person testing an application on the fly with no particular test in mind
  – or an automated test tool that generates random input
• Depending on the characteristics of the random input, we can distinguish:
  – Dumb Monkey Testing: uniform probability distribution
  – Smart Monkey Testing: sophisticated probability distribution
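The two kinds of automated monkey can be sketched as below. This is only an illustration: `parse_month`, the alphabets, and the run count are assumptions made up for the example, not part of the original slides.

```python
import random
import string

def dumb_monkey_input(rng, max_len=20):
    """Dumb monkey: every printable character is equally likely."""
    length = rng.randint(0, max_len)
    return "".join(rng.choice(string.printable) for _ in range(length))

def smart_monkey_input(rng, max_len=20):
    """Smart monkey: the distribution is biased towards digits and signs,
    which are more likely to get past the first parsing step."""
    alphabet = string.digits * 5 + "+- " + string.ascii_letters
    length = rng.randint(0, max_len)
    return "".join(rng.choice(alphabet) for _ in range(length))

def parse_month(text):
    """Hypothetical function under test: returns 1..12 or raises ValueError."""
    value = int(text)  # int() raises ValueError on malformed text
    if not 1 <= value <= 12:
        raise ValueError(f"month out of range: {value}")
    return value

def run_monkey(generate, runs=1000, seed=0):
    """Feed random inputs and collect every failure other than a ValueError."""
    rng = random.Random(seed)  # fixed seed so a failing run can be replayed
    crashes = []
    for _ in range(runs):
        data = generate(rng)
        try:
            result = parse_month(data)
            assert 1 <= result <= 12
        except ValueError:
            pass  # documented rejection, not a bug
        except Exception as exc:  # anything else counts as a crash
            crashes.append((data, exc))
    return crashes
```

Calling `run_monkey(dumb_monkey_input)` or `run_monkey(smart_monkey_input)` returns the list of inputs that crashed the function; seeding the generator keeps random runs reproducible.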

Monkey Testing
• Pro:
  – May find severe bugs, missed by developers, that make the application crash
• Con:
  – Less efficient than directed testing

Exploratory Testing
• A little-known technique that consists of doing the following in parallel:
  – Learning the product
  – Designing the test plan
  – Executing the tests
  – Interpreting the results
• Exploratory testing is particularly suitable if requirements and specifications are incomplete, or if there is a lack of time

Exploratory Testing
• Pros:
  – Less preparation is needed
  – Important bugs are found quickly
  – At execution time, the approach tends to be more intellectually stimulating than the execution of scripted tests
• Cons:
  – Skilled testers are needed
  – Tests can't be reviewed in advance
  – It is difficult to trace which tests have been run

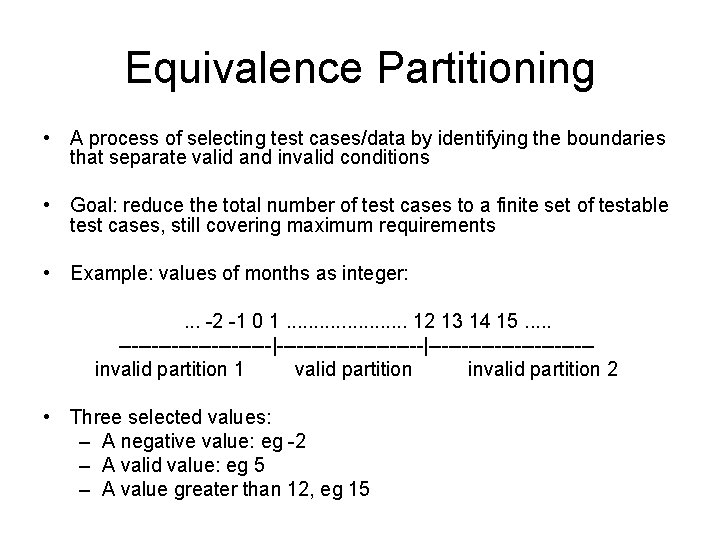

Equivalence Partitioning
• A process of selecting test cases/data by dividing the input domain into partitions whose values are expected to be handled in the same way, and picking one representative value per partition
• Goal: reduce the total number of test cases to a finite set of testable test cases, still covering maximum requirements
• Example: values of months as integers:
  ... -2 -1 0 | 1 ... 12 | 13 14 15 ...
  invalid partition 1 | valid partition | invalid partition 2
• Three selected values:
  – A value less than 1, e.g. -2
  – A valid value, e.g. 5
  – A value greater than 12, e.g. 15
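The month example can be written down as a small sketch with one representative per partition. `is_valid_month` is a hypothetical function under test, introduced here only for illustration.

```python
def month_partition(value):
    """Name of the equivalence class an integer month value falls into."""
    if value < 1:
        return "invalid partition 1"
    if value <= 12:
        return "valid partition"
    return "invalid partition 2"

def is_valid_month(value):
    """Hypothetical function under test."""
    return 1 <= value <= 12

# One representative per partition, with the expected verdict.
representatives = {-2: False, 5: True, 15: False}

for value, expected in representatives.items():
    assert is_valid_month(value) == expected, value

# The three representatives fall into three distinct partitions.
covered = {month_partition(v) for v in representatives}
```

Any other value of the same partition (for instance 7 instead of 5) is assumed to exercise the same behaviour, which is why one value per class is considered enough.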

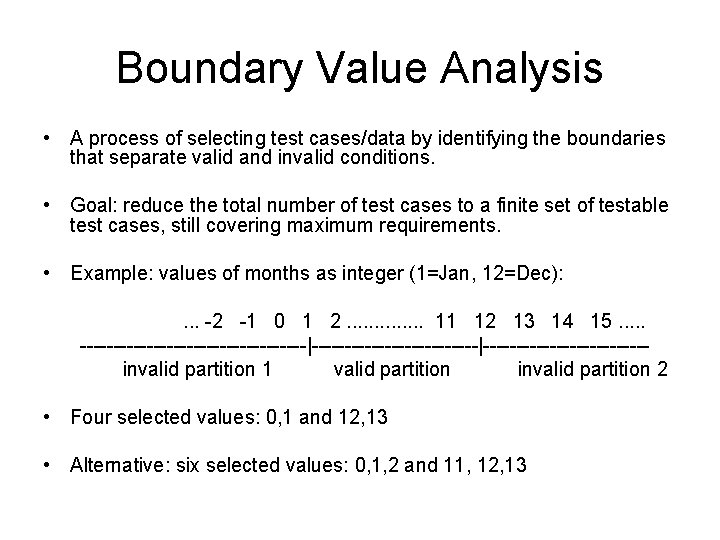

Boundary Value Analysis
• A process of selecting test cases/data by identifying the boundaries that separate valid and invalid conditions
• Goal: reduce the total number of test cases to a finite set of testable test cases, still covering maximum requirements
• Example: values of months as integers (1=Jan, 12=Dec):
  ... -2 -1 0 | 1 2 ... 11 12 | 13 14 15 ...
  invalid partition 1 | valid partition | invalid partition 2
• Four selected values: 0, 1 and 12, 13
• Alternative: six selected values: 0, 1, 2 and 11, 12, 13
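A minimal sketch of how the four-value and six-value selections can be derived from the valid range. The helper name `boundary_values` and its `width` parameter are assumptions made for this example.

```python
def boundary_values(low, high, width=1):
    """Values around the two boundaries of the valid range [low, high].

    width=1 gives the four-value variant (low-1, low, high, high+1);
    width=2 gives the six-value variant, adding low+1 and high-1.
    """
    lower = list(range(low - 1, low + width))
    upper = list(range(high - width + 1, high + 2))
    return lower + upper

four_values = boundary_values(1, 12)           # months, four-value variant
six_values = boundary_values(1, 12, width=2)   # months, six-value variant
```

The sketch assumes the valid range is wide enough that the lower and upper groups do not overlap.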

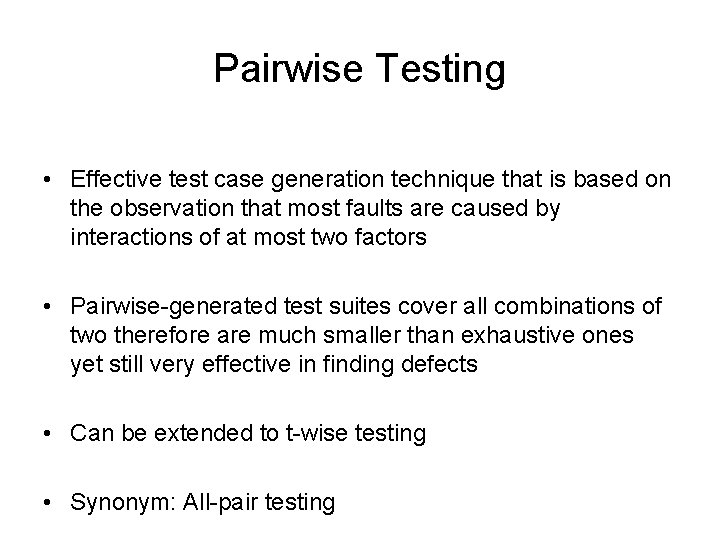

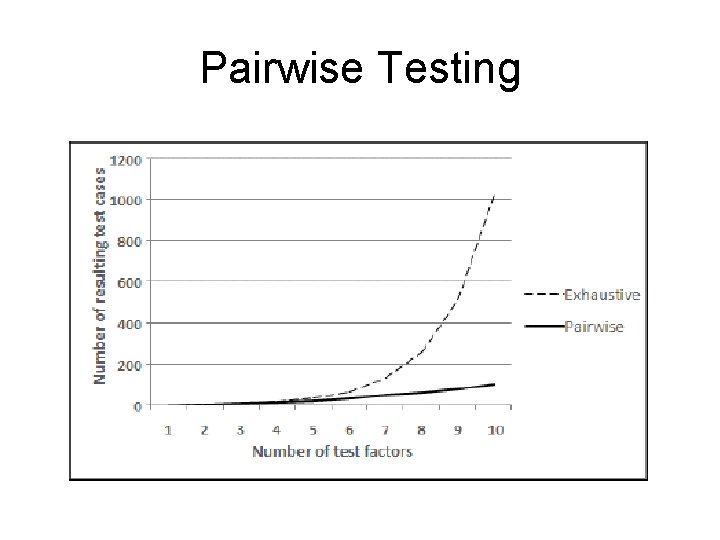

Pairwise Testing
• Effective test case generation technique based on the observation that most faults are caused by interactions of at most two factors
• Pairwise-generated test suites cover every combination of values for each pair of factors; they are therefore much smaller than exhaustive ones, yet still very effective in finding defects
• Can be extended to t-wise testing
• Synonym: all-pairs testing

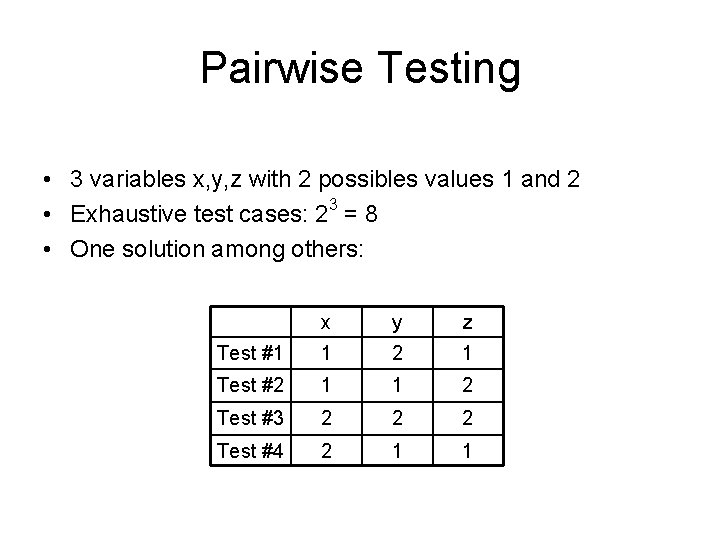

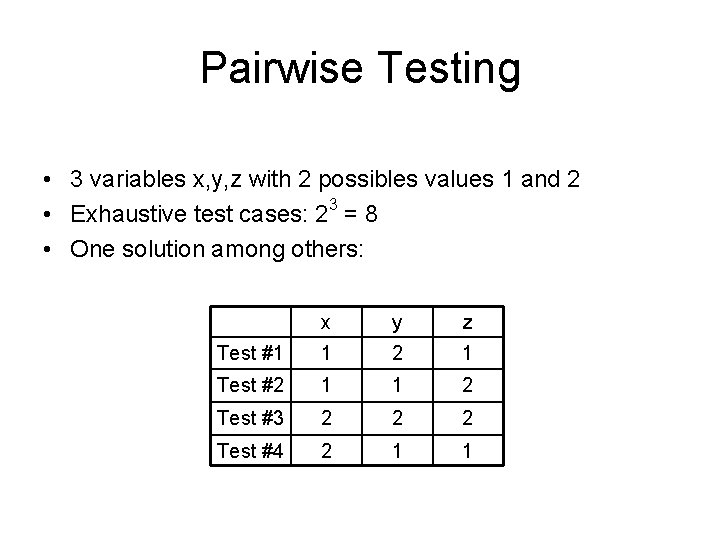

Pairwise Testing
• 3 variables x, y, z with 2 possible values: 1 and 2
• Exhaustive test cases: 2^3 = 8
• One solution among others:

| | x | y | z |
|---|---|---|---|
| Test #1 | 1 | 2 | 1 |
| Test #2 | 1 | 1 | 2 |
| Test #3 | 2 | 2 | 2 |
| Test #4 | 2 | 1 | 1 |
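The four-test solution can be checked mechanically. The sketch below is a verifier, not a generator: `uncovered_pairs` is a hypothetical helper that lists the value pairs a suite fails to cover.

```python
from itertools import combinations, product

def uncovered_pairs(tests, domains):
    """Return the value pairs that no test case covers.

    tests:   list of dicts mapping variable name -> value
    domains: dict mapping variable name -> list of possible values
    """
    missing = set()
    for p, q in combinations(sorted(domains), 2):
        required = set(product(domains[p], domains[q]))
        seen = {(t[p], t[q]) for t in tests}
        missing |= {((p, a), (q, b)) for a, b in required - seen}
    return missing

domains = {"x": [1, 2], "y": [1, 2], "z": [1, 2]}
suite = [
    {"x": 1, "y": 2, "z": 1},  # Test #1
    {"x": 1, "y": 1, "z": 2},  # Test #2
    {"x": 2, "y": 2, "z": 2},  # Test #3
    {"x": 2, "y": 1, "z": 1},  # Test #4
]

# Exhaustive testing needs one test per element of the Cartesian product.
exhaustive = list(product(*domains.values()))
```

`uncovered_pairs(suite, domains)` is empty for the four tests, while dropping any one of them leaves some pair untested.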

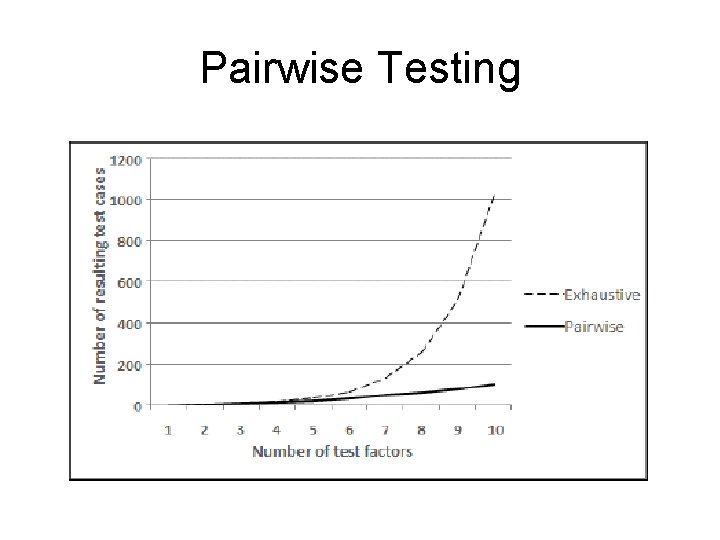

Pairwise Testing

Basis Path Testing
• One testing strategy, called basis path testing by McCabe, who first proposed it, is to test each linearly independent path through the program; in this case, the number of test cases will equal the cyclomatic complexity of the program. [1]
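The cyclomatic complexity can be computed from a control-flow graph with McCabe's formula V(G) = E - N + 2P (edges, nodes, connected components). The two graphs below are hand-built illustrations, not taken from the slides.

```python
def cyclomatic_complexity(edges, components=1):
    """McCabe's V(G) = E - N + 2P for a control-flow graph given as edge pairs."""
    nodes = {node for edge in edges for node in edge}
    return len(edges) - len(nodes) + 2 * components

# if (cond) ... else ...   (the condition is treated as a single decision)
if_else = [
    ("cond", "then"), ("cond", "else"),
    ("then", "exit"), ("else", "exit"),
]

# A while loop whose body contains an if/else.
loop_with_if = [
    ("entry", "loop"), ("loop", "if"), ("loop", "exit"),
    ("if", "then"), ("if", "else"),
    ("then", "loop"), ("else", "loop"),
]
```

Under this strategy the if/else fragment needs two test cases (one per branch) and the loop example needs three.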

Error Handling Testing
• Objective: to ensure that an application is capable of handling incorrect input data
• Usually neglected by developers in unit tests
• Benefit: improving the code coverage by introducing invalid input data to test error handling code paths
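A minimal sketch of error handling tests: invalid inputs are fed on purpose so that every error path is executed. `parse_age` and its messages are hypothetical and serve only as the function under test.

```python
def parse_age(text):
    """Hypothetical function under test: parse a non-negative age from user input."""
    if text is None:
        raise ValueError("age is required")
    try:
        age = int(text.strip())
    except ValueError:
        raise ValueError(f"age must be a whole number, got {text!r}") from None
    if age < 0:
        raise ValueError("age must not be negative")
    return age

def check_rejects(func, bad_input):
    """Return the error message if func rejects bad_input cleanly, else None."""
    try:
        func(bad_input)
    except ValueError as exc:
        return str(exc)
    return None

# Each invalid input targets a different error handling path.
invalid_inputs = [None, "", "abc", "12.5", "-1"]
messages = [check_rejects(parse_age, bad) for bad in invalid_inputs]
```

Checking the returned messages as well as the rejection helps catch the minor defects of this category, such as cryptic error messages.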

Error Handling Testing
• What kind of bugs can be found?
• Severe:
  – Application freeze or crash
• Major:
  – Undo doesn't work
• Minor:
  – Missing Cancel button in a dialog box
  – Cryptic error messages
  – Non-localized error messages

Bebugging
• The process of intentionally adding known faults to those already in a computer program for the purpose of monitoring the rate of detection and removal, and estimating the number of faults remaining in the program
• Bebugging is a type of fault injection
• Synonym: fault seeding
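The slide gives no formula for the estimate. A commonly used proportional estimate, assumed here, takes the detection rate of seeded faults as the detection rate of real faults. The function name and parameters below are illustrative.

```python
def estimate_remaining_faults(seeded, seeded_found, real_found):
    """Estimate the real faults still in the program after a test campaign.

    Assumption: seeded and real faults are equally likely to be detected,
    so seeded_found / seeded approximates the detection rate of real faults.
    """
    if seeded_found == 0:
        raise ValueError("no seeded fault found: detection rate cannot be estimated")
    if seeded_found > seeded:
        raise ValueError("cannot find more seeded faults than were seeded")
    estimated_total = real_found * seeded / seeded_found
    return estimated_total - real_found
```

For instance, if 10 of 20 seeded faults and 30 real faults have been found, the estimate is 60 real faults in total, hence 30 still undetected. The result is only as good as the assumption that seeded faults resemble real ones.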

Mutation Testing
• Suppose a test suite has been written to check a feature or a function
• Some questions that may arise:
  – What is the quality of this test suite?
  – Are the relevant input values well tested?
  – How can we be sure the test suite covers all possible test cases?
• Code coverage and pairwise test set generation can help
• Another, lesser-known technique is mutation testing

Mutation Testing
• Idea: bugs are intentionally introduced into the source code
• Each new version of the source code is called a mutant and contains a single bug
• The mutants are executed with the test suite
• If a mutant successfully passes the test suite:
  – either the test suite needs to be completed
  – or the mutant code is equivalent to the original code
• A test suite which does not reject a non-equivalent mutant is considered defective

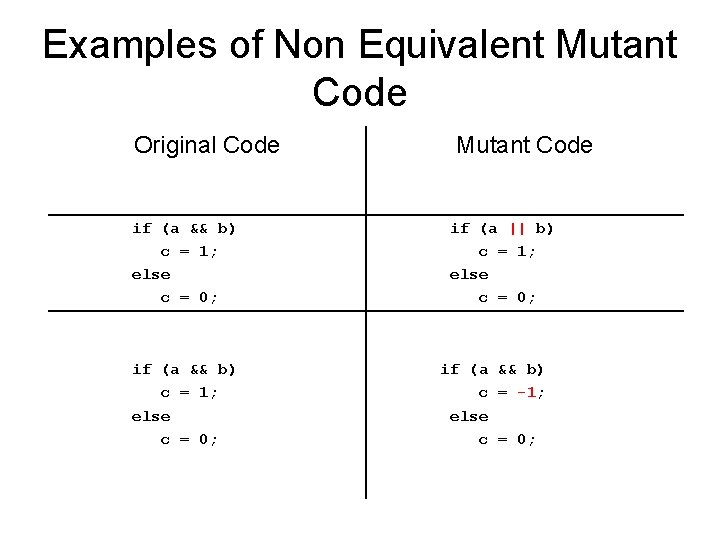

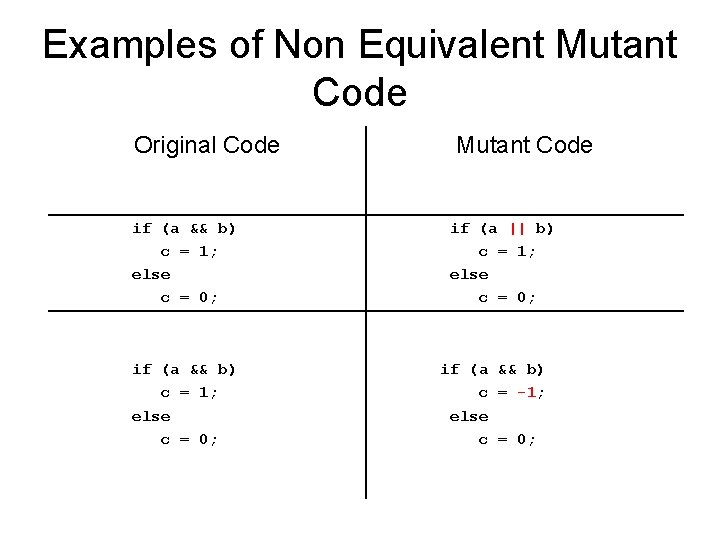

Examples of Non-Equivalent Mutant Code
• Original code:
  if (a && b) c = 1; else c = 0;
• Mutant code 1 (&& replaced by ||):
  if (a || b) c = 1; else c = 0;
• Mutant code 2 (1 replaced by -1):
  if (a && b) c = -1; else c = 0;
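The two mutants can be run against a test suite as in the sketch below, which transcribes the fragments into Python functions. The suites and helper names are assumptions chosen to show one suite that lets mutants survive and one that rejects them.

```python
def original(a, b):
    return 1 if (a and b) else 0

def mutant_or(a, b):         # && replaced by ||
    return 1 if (a or b) else 0

def mutant_minus_one(a, b):  # constant 1 replaced by -1
    return -1 if (a and b) else 0

def passes(func, suite):
    """True if func returns the expected value for every (inputs, expected) case."""
    return all(func(*inputs) == expected for inputs, expected in suite)

def surviving_mutants(mutants, suite):
    """Names of the mutants that the suite fails to reject."""
    return [m.__name__ for m in mutants if passes(m, suite)]

mutants = [mutant_or, mutant_minus_one]

# A weak suite: a single case that never reaches the mutated behaviour.
weak_suite = [((False, False), 0)]

# A completed suite: (True, True) rejects the -1 mutant, (True, False) the || mutant.
strong_suite = weak_suite + [((True, True), 1), ((True, False), 0)]
```

Both mutants survive `weak_suite`, which signals that the suite needs to be completed; none survives `strong_suite`.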

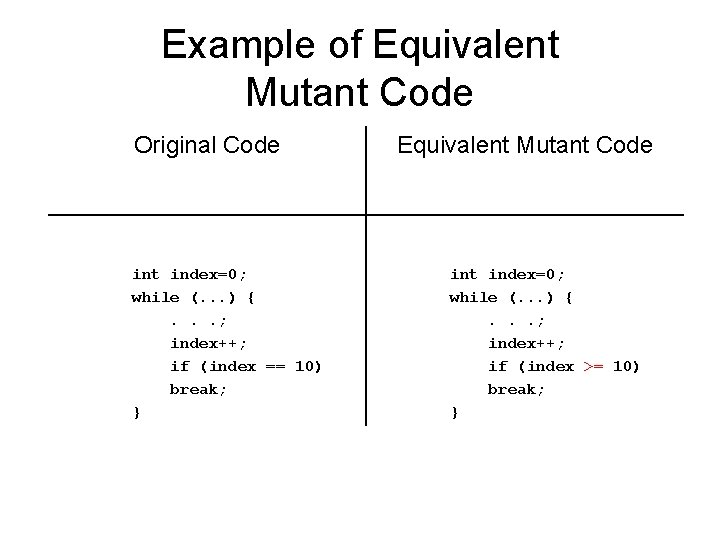

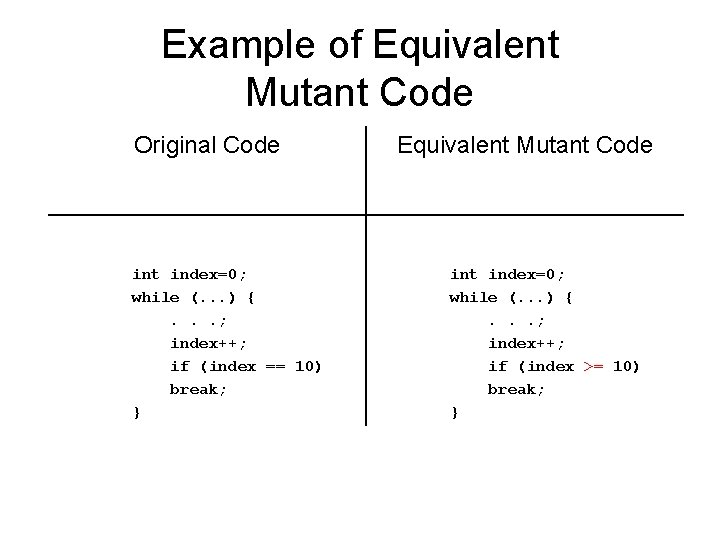

Example of Equivalent Mutant Code
• Original code:
  int index = 0;
  while (...) { ...; index++; if (index == 10) break; }
• Equivalent mutant code:
  int index = 0;
  while (...) { ...; index++; if (index >= 10) break; }
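The equivalence can be observed with a small sketch. The elided loop condition is replaced here by a hypothetical `max_steps` bound, and the elided body is assumed not to modify `index`; both are assumptions made for illustration.

```python
def final_index_original(max_steps, limit=10):
    index = 0
    for _ in range(max_steps):  # stands in for the elided while (...) condition
        index += 1
        if index == limit:
            break
    return index

def final_index_mutant(max_steps, limit=10):
    index = 0
    for _ in range(max_steps):
        index += 1
        if index >= limit:      # mutated comparison
            break
    return index

# index starts at 0 and grows by exactly 1, so it cannot skip over 10:
# "== 10" and ">= 10" become true at the same iteration.
same_behaviour = all(
    final_index_original(n) == final_index_mutant(n) for n in range(50)
)
```

Since no input distinguishes the two versions, no test case can reject this mutant; it has to be identified as equivalent and set aside.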

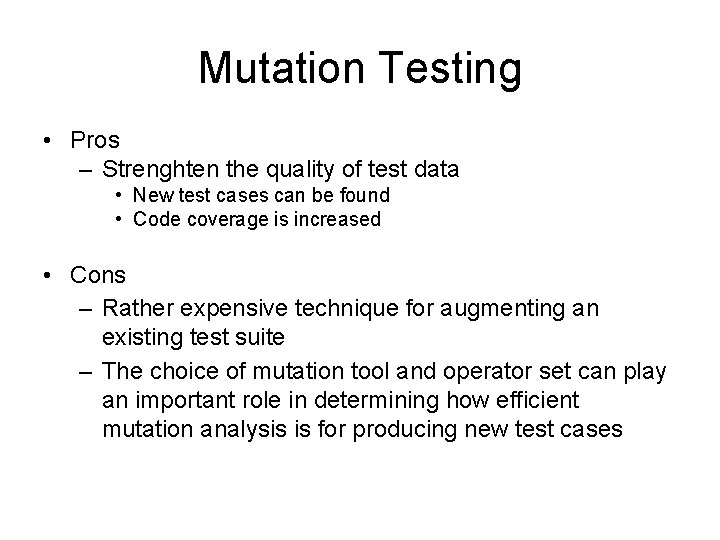

Mutation Testing
• Pros
  – Strengthens the quality of test data
  – New test cases can be found
  – Code coverage is increased
• Cons
  – Rather expensive technique for augmenting an existing test suite
  – The choice of mutation tool and operator set can play an important role in determining how efficient mutation analysis is for producing new test cases

References
– Myers, Glenford J. (1979). The Art of Software Testing. John Wiley and Sons. ISBN 0-471-04328-1
– Kaner, Cem; Falk, Jack; Nguyen, Hung Quoc (1999). Testing Computer Software, 2nd ed. New York, et al.: John Wiley and Sons, Inc. 480 pp. ISBN 0-471-35846-0
– Smith, Ben; Williams, Laurie (2009). Should Software Testers Use Mutation Analysis to Augment a Test Set? Journal of Systems and Software
– Kaner, Cem; Bach, James; Pettichord, Bret (2001). Lessons Learned in Software Testing. John Wiley & Sons. ISBN 0471081124

Further Reading
• http://www.pairwise.org/
• http://msdn.microsoft.com/en-us/library/cc150619.aspx