Software Testing IS 301 Software Engineering Lecture 31

- Slides: 49

Software Testing

IS 301 – Software Engineering
Lecture #31 – 2004-11-12
M. E. Kabay, Ph.D., CISSP
Assoc. Prof. Information Assurance
Division of Business & Management, Norwich University
mailto:mkabay@norwich.edu
V: 802.479.7937

Note content copyright © 2004 Ian Sommerville. NU-specific content copyright © 2004 M. E. Kabay. All rights reserved.

Topics

- Defect Testing
- Integration Testing
- Object-Oriented Testing
- Testing Workbenches

Defect Testing

- Testing programs to establish presence of system defects

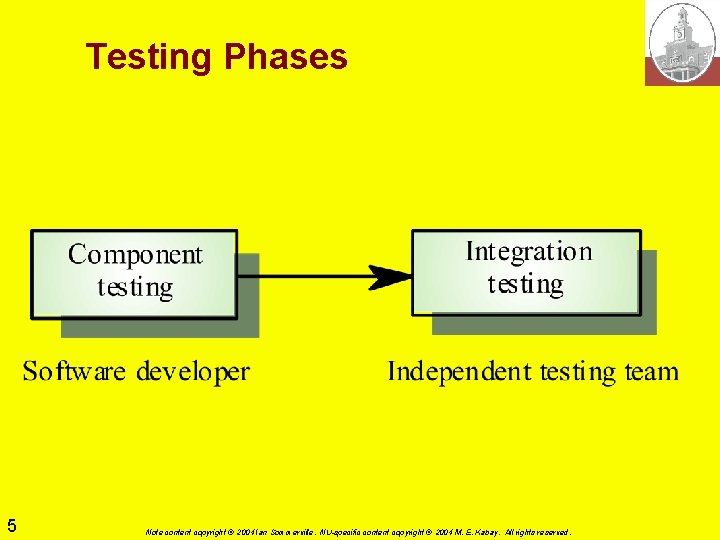

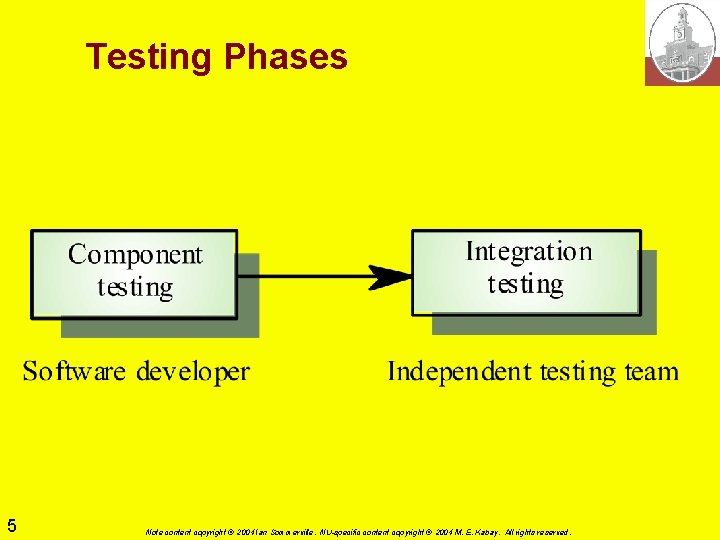

Testing Process

- Component testing
  - Testing of individual program components
  - Usually responsibility of component developer (except sometimes for critical systems)
  - Tests derived from developer's experience
- Integration testing
  - Testing of groups of components integrated to create system or sub-system
  - Responsibility of independent testing team
  - Tests based on system specification

Testing Phases

Defect Testing

- Goal of defect testing: discover defects in programs
- Successful defect test: one that causes program to behave in anomalous way
- Tests show presence, not absence, of defects

Testing Priorities

- Only exhaustive testing can show program free from defects; however, exhaustive testing impossible
- Tests should exercise system's capabilities rather than its components
- Testing old capabilities more important than testing new capabilities
- Testing typical situations more important than boundary value cases

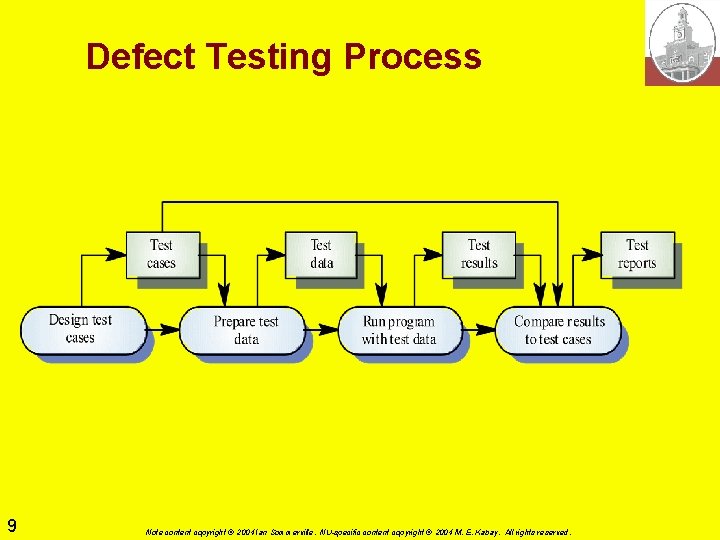

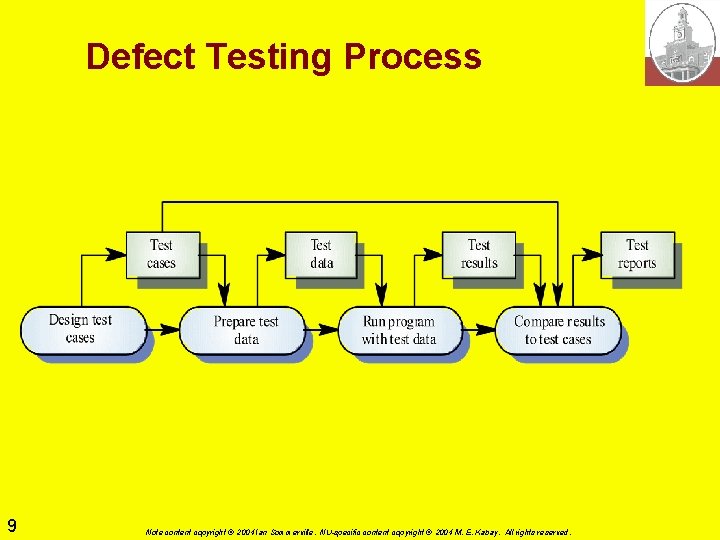

Test Data and Test Cases

- Test data
  - Inputs which have been devised to test system
- Test cases
  - Inputs to test system, plus
  - Predicted outputs from these inputs
    - If system operates according to its specification
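To make the distinction concrete, here is a minimal Python sketch: test data are bare inputs, while a test case pairs each input with the output the specification predicts. The component `absolute_value` and the `TestCase` record are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class TestCase:
    """A test case = test data (the input) plus the output predicted by the specification."""
    test_input: int
    expected_output: int

def absolute_value(x: int) -> int:
    """Hypothetical component under test."""
    return x if x >= 0 else -x

def run_test_cases(func: Callable[[int], int], cases: List[TestCase]) -> List[Tuple[int, int, int]]:
    """Return (input, expected, actual) for every case whose actual output differs from the prediction."""
    failures = []
    for case in cases:
        actual = func(case.test_input)
        if actual != case.expected_output:
            failures.append((case.test_input, case.expected_output, actual))
    return failures

# Test data alone: inputs devised to exercise the system.
test_data = [-5, 0, 7]
# Test cases: the same inputs plus the outputs the specification predicts.
test_cases = [TestCase(-5, 5), TestCase(0, 0), TestCase(7, 7)]

print(run_test_cases(absolute_value, test_cases))  # → []
```

Only the test cases let a harness decide pass or fail automatically; test data alone can drive the program but cannot judge its output.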

Defect Testing Process

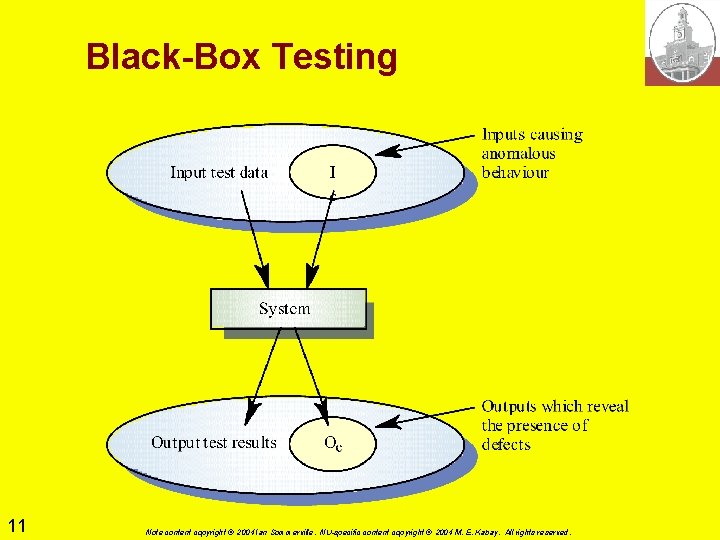

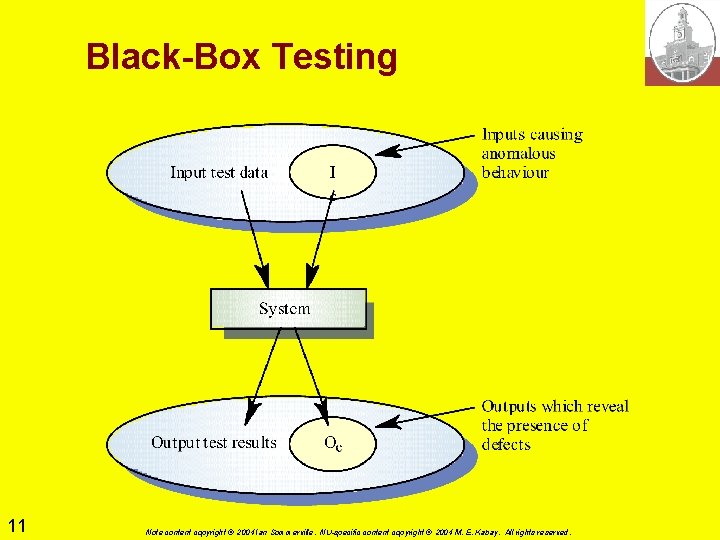

Black-Box Testing

- Program considered as ‘black box’
- Test cases based on system specification
- Test planning can begin early in software process

Black-Box Testing

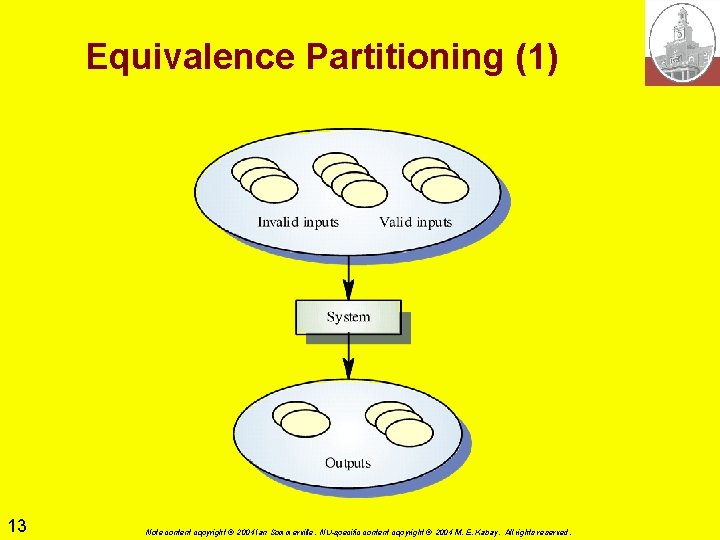

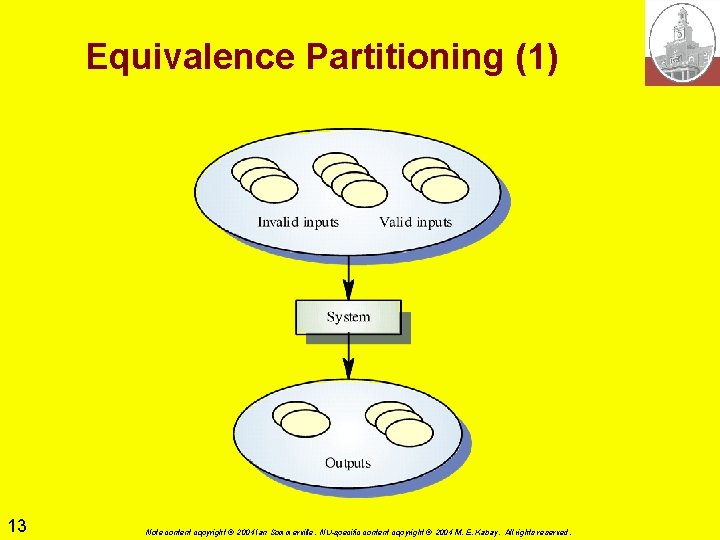

Equivalence Partitioning

- Input data and output results often fall into different classes where all members of a class are related
- Each of these classes = equivalence partition, where program behaves in equivalent way for each class member
- Test cases should be chosen from each partition

Equivalence Partitioning (1)

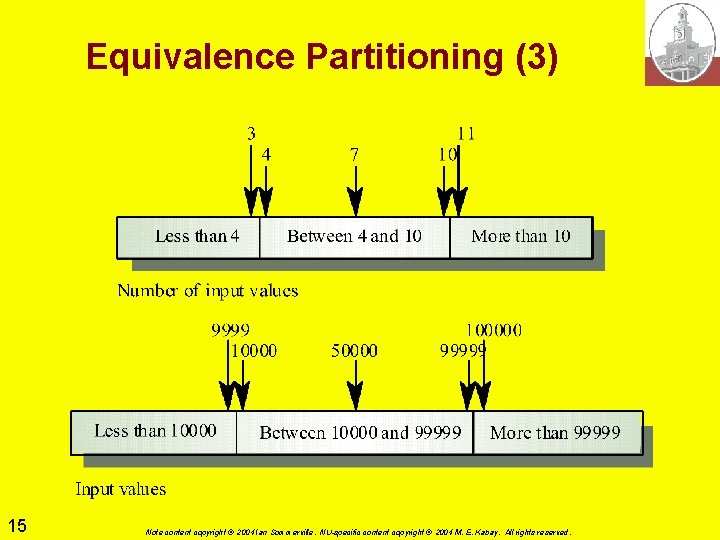

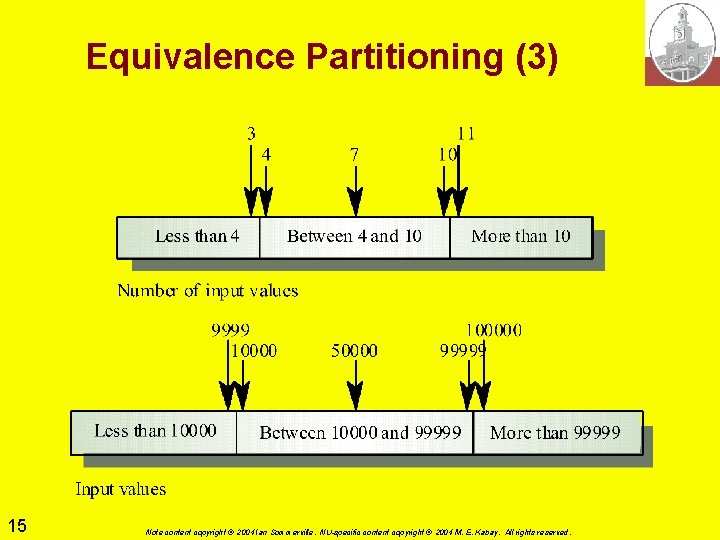

Equivalence Partitioning (2)

- Partition system inputs and outputs into ‘equivalence sets’; e.g.,
  - If input is a 5-digit integer between 10,000 and 99,999,
  - then equivalence partitions are < 10,000, 10,000 to 99,999, and > 99,999
- Choose test cases at boundaries of these sets:
  - 00000, 09999, 10000, 10001, 99998, 99999, and 100000
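The partitions and boundary values above can be generated mechanically. A minimal Python sketch, assuming the valid range is the inclusive interval 10,000 to 99,999 from the example; the helper names `partition` and `boundary_values` are hypothetical.

```python
from typing import List, Tuple

def partition(value: int, low: int = 10_000, high: int = 99_999) -> str:
    """Classify an input into one of the three equivalence partitions."""
    if value < low:
        return "below range"
    if value > high:
        return "above range"
    return "valid"

def boundary_values(low: int, high: int) -> List[int]:
    """Values just outside, on, and just inside each boundary of [low, high]."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]

# 0 is added as a representative of the lower invalid partition.
cases: List[Tuple[int, str]] = [(v, partition(v)) for v in [0] + boundary_values(10_000, 99_999)]
for value, expected_partition in cases:
    print(f"{value:05d} -> {expected_partition}")
```

This prints the seven test inputs listed on the slide, from `00000 -> below range` through `100000 -> above range`, each tagged with the partition it should fall into.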

Equivalence Partitioning (3)

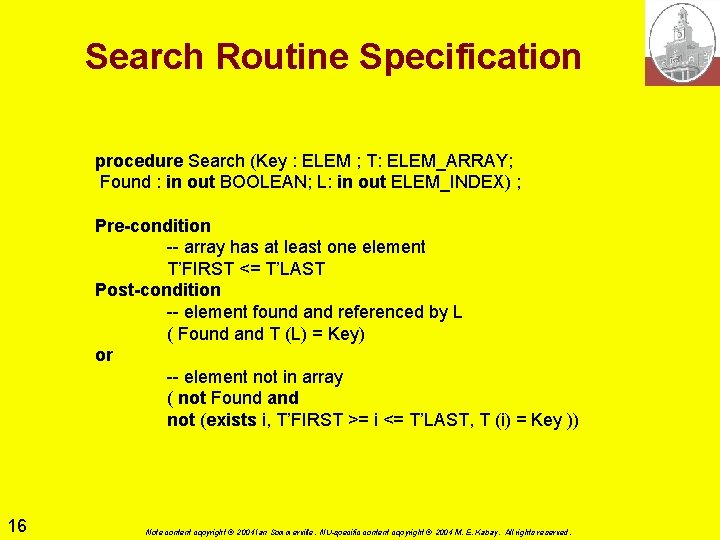

Search Routine Specification

    procedure Search (Key : ELEM; T : ELEM_ARRAY;
        Found : in out BOOLEAN; L : in out ELEM_INDEX);

    Pre-condition
        -- the array has at least one element
        T'FIRST <= T'LAST

    Post-condition
        -- the element is found and is referenced by L
        (Found and T (L) = Key)
    or
        -- the element is not in the array
        (not Found and
            not (exists i, T'FIRST <= i <= T'LAST, T (i) = Key))
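The specification translates directly into executable checks. This Python sketch is an illustration, not Sommerville's code: it assumes a simple linear search, returns `(found, index)` instead of using `in out` parameters, and encodes the pre- and post-conditions as `assert` statements.

```python
from typing import Sequence, Tuple

def search(key: int, t: Sequence[int]) -> Tuple[bool, int]:
    """Linear search returning (found, index); index is -1 when key is not found."""
    # Pre-condition: the array has at least one element (T'FIRST <= T'LAST).
    assert len(t) >= 1, "pre-condition violated: empty array"
    for i, elem in enumerate(t):
        if elem == key:
            found, index = True, i
            break
    else:
        found, index = False, -1
    # Post-condition: either found and t[index] == key,
    # or not found and no element of t equals key.
    assert (found and t[index] == key) or (not found and all(e != key for e in t))
    return found, index

print(search(3, [1, 2, 3]))  # → (True, 2)
print(search(9, [1, 2, 3]))  # → (False, -1)
```

Because the checks use `assert`, running Python with `-O` disables them; a production version would raise explicit exceptions instead.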

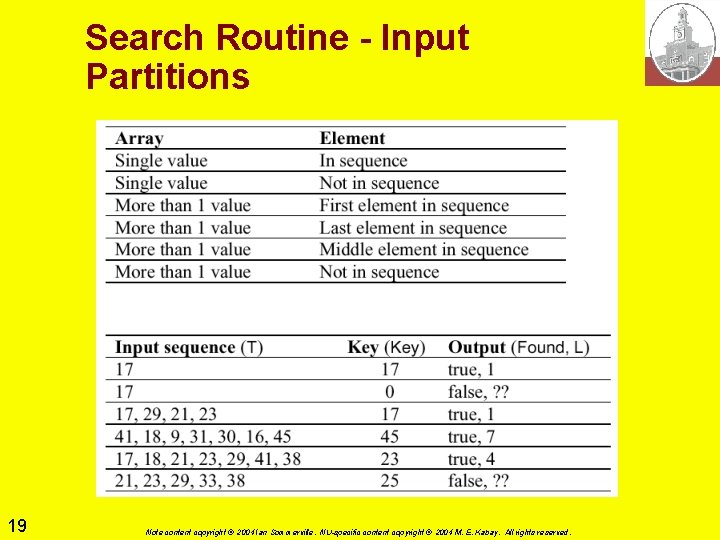

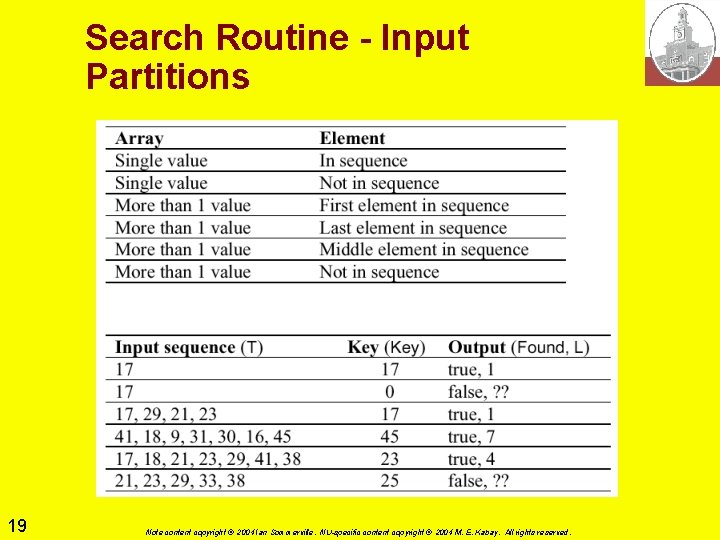

Search Routine - Input Partitions

- Inputs which conform to pre-conditions
- Inputs where pre-condition does not hold
- Inputs where key element is member of array
- Inputs where key element not member of array

Testing Guidelines (Sequences)

- Test software with sequences which have only single value
- Use sequences of different sizes in different tests
- Derive tests so that first, middle and last elements of sequence accessed
- Test with sequences of zero length
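The sequence guidelines can be turned into a small generator of test cases. A Python sketch, assuming a hypothetical routine `find` that returns the key's index or `None`; the sizes 2, 5 and 10 are arbitrary choices. The zero-length case deliberately violates the search pre-condition, so it checks how the routine behaves when that pre-condition does not hold.

```python
from typing import List, Optional, Sequence, Tuple

def find(key: int, seq: Sequence[int]) -> Optional[int]:
    """Hypothetical routine under test: index of key in seq, or None if absent."""
    for i, elem in enumerate(seq):
        if elem == key:
            return i
    return None

def sequence_test_cases() -> List[Tuple[int, List[int], Optional[int]]]:
    """(key, sequence, expected index) triples following the sequence-testing guidelines."""
    cases: List[Tuple[int, List[int], Optional[int]]] = [
        (7, [7], 0),        # single-value sequence, key present
        (3, [7], None),     # single-value sequence, key absent
        (1, [], None),      # zero-length sequence
    ]
    for size in (2, 5, 10):                          # sequences of different sizes
        seq = list(range(10, 10 + size))
        cases.append((seq[0], seq, 0))               # first element
        cases.append((seq[size // 2], seq, size // 2))  # middle element
        cases.append((seq[-1], seq, size - 1))       # last element
    return cases

failures = [(k, s, e) for k, s, e in sequence_test_cases() if find(k, s) != e]
print(len(sequence_test_cases()), "cases,", len(failures), "failures")  # → 12 cases, 0 failures
```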

Search Routine - Input Partitions

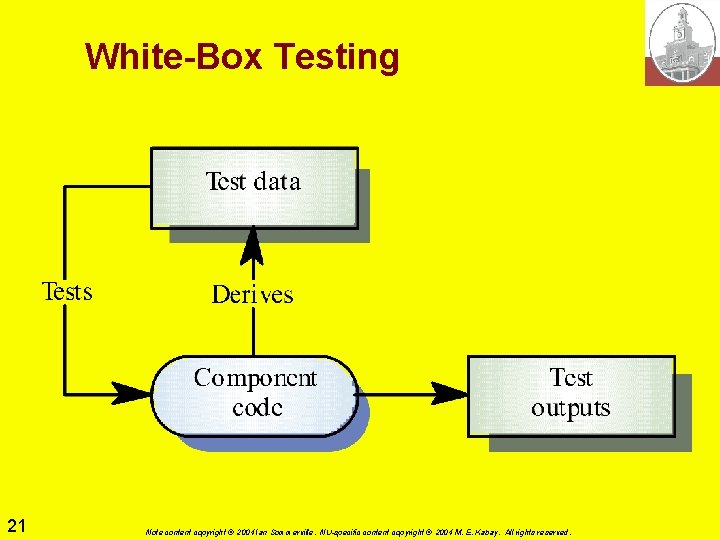

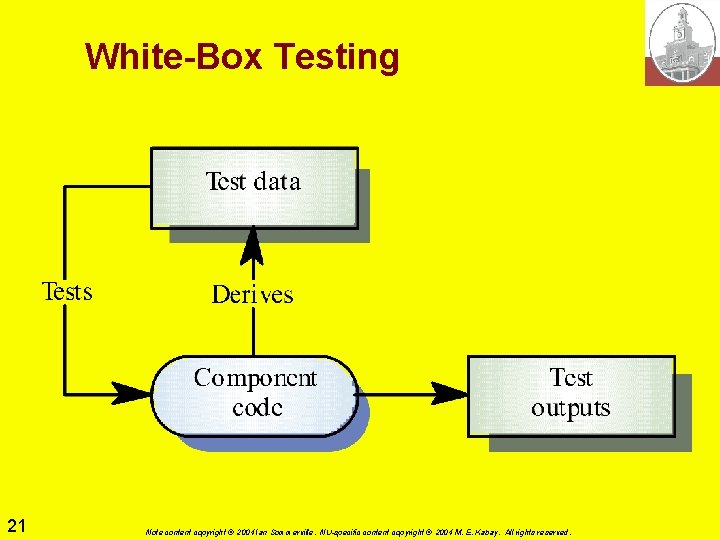

Structural Testing

- Sometimes called white-box testing
- Derivation of test cases according to program structure
- Knowledge of program used to identify additional test cases
- Objective: exercise
  - all program statements
  - not all path combinations

White-Box Testing

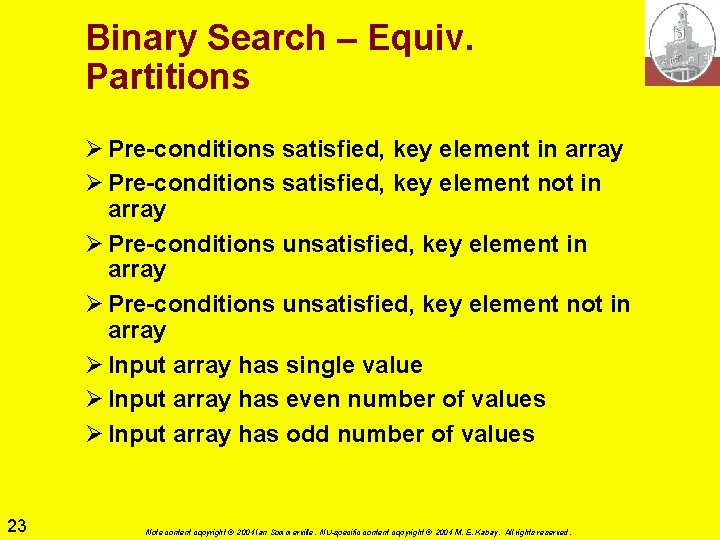

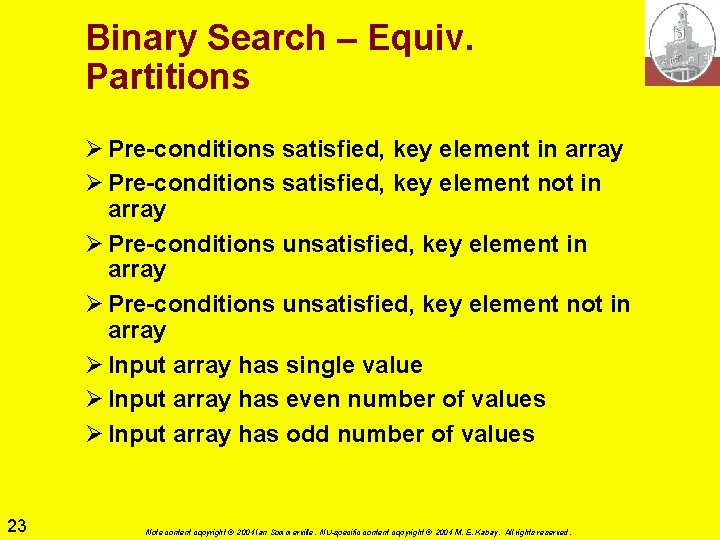

Binary Search – Equiv. Partitions

- Pre-conditions satisfied, key element in array
- Pre-conditions satisfied, key element not in array
- Pre-conditions unsatisfied, key element not in array
- Input array has single value
- Input array has even number of values
- Input array has odd number of values
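A Python sketch of a binary search with one representative test case per partition listed above. The pre-condition for binary search is that the array is sorted. The array values are arbitrary, and the implementation is a conventional iterative binary search, not necessarily the one in the lecture's figures.

```python
from typing import Dict, List, Tuple

def binary_search(key: int, arr: List[int]) -> Tuple[bool, int]:
    """Return (found, index) for key in a sorted list; index is -1 when key is absent."""
    bottom, top = 0, len(arr) - 1
    while bottom <= top:
        mid = (bottom + top) // 2
        if arr[mid] == key:
            return True, mid
        if arr[mid] < key:
            bottom = mid + 1
        else:
            top = mid - 1
    return False, -1

# One representative (key, array, expected result) per equivalence partition.
partition_cases: Dict[str, Tuple[int, List[int], Tuple[bool, int]]] = {
    "pre-condition satisfied, key in array": (17, [9, 16, 17, 18, 45], (True, 2)),
    "pre-condition satisfied, key not in array": (25, [9, 16, 17, 18, 45], (False, -1)),
    "pre-condition unsatisfied (unsorted), key not in array": (10, [45, 9, 18], (False, -1)),
    "single value": (17, [17], (True, 0)),
    "even number of values": (45, [9, 16, 18, 45], (True, 3)),
    "odd number of values": (9, [9, 16, 18], (True, 0)),
}

for name, (key, arr, expected) in partition_cases.items():
    result = binary_search(key, arr)
    print(f"{'PASS' if result == expected else 'FAIL'}: {name}")
```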

Binary Search – Equiv. Partitions

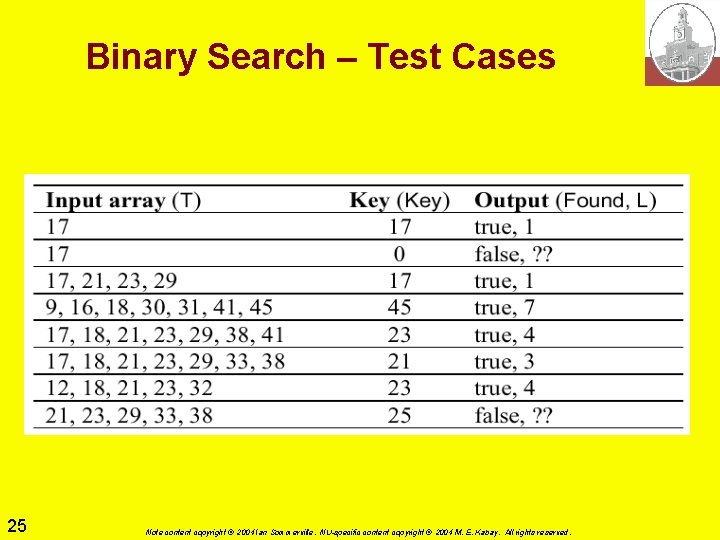

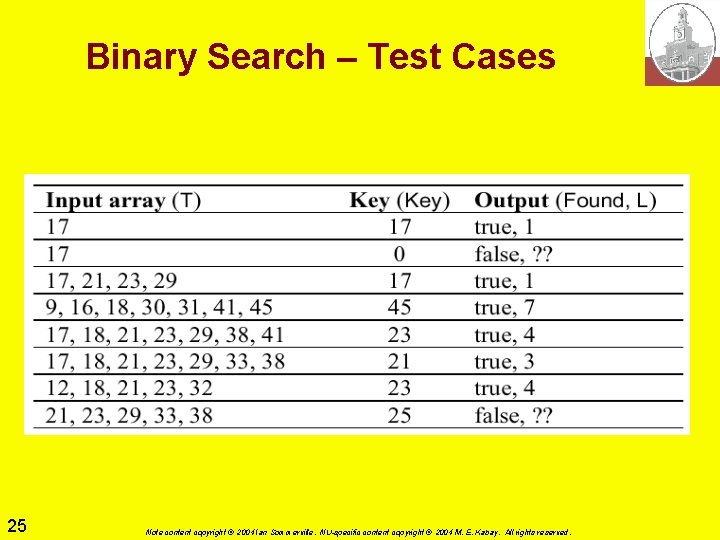

Binary Search – Test Cases

Path Testing

- Objective: ensure each path through program executed at least once
- Starting point: flow graph
  - Nodes represent program decisions
  - Arcs represent flow of control
- Statements with conditions are therefore nodes in flow graph

Program Flow Graphs

- Each branch shown as separate path
- Loops shown by arrows looping back to loop condition node
- Basis for computing cyclomatic complexity
- Cyclomatic complexity $V(G) = E - N + 2$, where $E$ = number of edges and $N$ = number of nodes

Cyclomatic Complexity

- Number of tests needed to execute all independent paths (and hence all control statements) equals cyclomatic complexity
- For a program with a single entry and exit, cyclomatic complexity equals number of binary decisions (conditions) in program plus one
- Useful if used with care
  - Does not imply adequacy of testing
- Although all paths executed, all combinations of paths not necessarily executed
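Both ways of computing cyclomatic complexity can be checked on the binary search flow graph. In this Python sketch the edge list is reconstructed from the four independent paths given on the Independent Paths slide (the figure itself is not reproduced in this text), so the node numbering is an assumption. It computes $V(G)$ as $E - N + 2$ and as decisions plus one.

```python
from collections import Counter
from typing import List, Tuple

Edge = Tuple[int, int]

def cyclomatic_complexity(edges: List[Edge]) -> int:
    """V(G) = E - N + 2 for a single connected flow graph given as (from, to) edges."""
    nodes = {n for edge in edges for n in edge}
    return len(edges) - len(nodes) + 2

def decisions_plus_one(edges: List[Edge]) -> int:
    """Alternative count for single-entry, single-exit graphs:
    a node with k outgoing edges contributes k - 1 decisions."""
    out_degree = Counter(src for src, _ in edges)
    return sum(k - 1 for k in out_degree.values()) + 1

# Edges read off the paths 1,2,3,8,9 / 1,2,3,4,6,7,2 / 1,2,3,4,5,7,2 / 1,2,3,4,6,7,2,8,9
binary_search_edges: List[Edge] = [
    (1, 2), (2, 3), (2, 8), (3, 4), (3, 8),
    (4, 5), (4, 6), (5, 7), (6, 7), (7, 2), (8, 9),
]
print(cyclomatic_complexity(binary_search_edges), decisions_plus_one(binary_search_edges))  # → 4 4
```

With 11 edges and 9 nodes, $V(G) = 11 - 9 + 2 = 4$, which matches the three decision nodes (2, 3 and 4) plus one, and the four independent paths.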

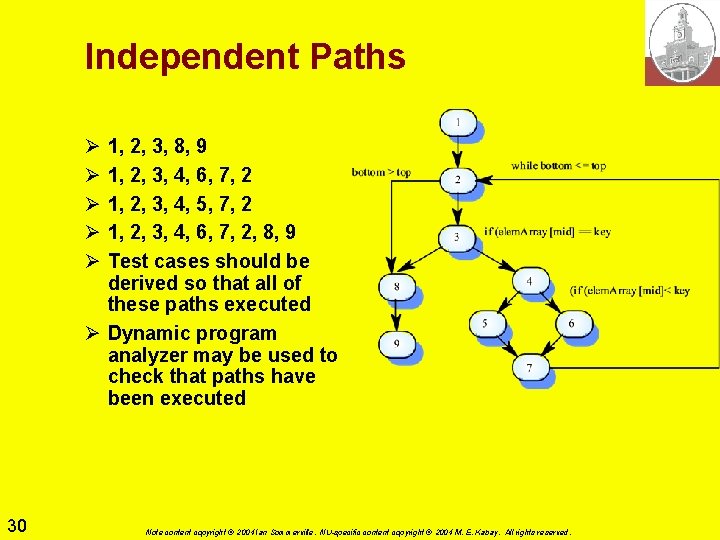

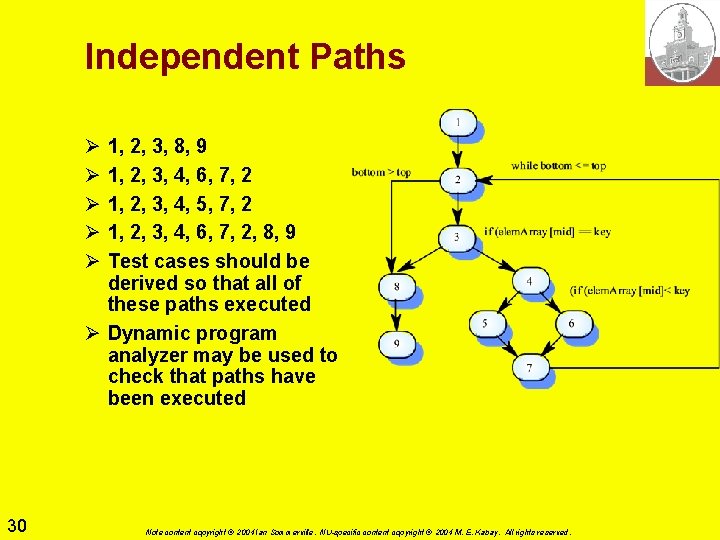

Binary Search Flow Graph

Independent Paths

- 1, 2, 3, 8, 9
- 1, 2, 3, 4, 6, 7, 2
- 1, 2, 3, 4, 5, 7, 2
- 1, 2, 3, 4, 6, 7, 2, 8, 9
- Test cases should be derived so that all of these paths executed
- Dynamic program analyzer may be used to check that paths have been executed
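A dynamic program analyzer essentially records which flow-graph nodes execute. This Python sketch does the same by hand-instrumenting a binary search. The mapping from code to node numbers 1 to 9 is an assumption (the lecture's flow graph is not reproduced here), chosen so that the four listed paths are feasible.

```python
from typing import List, Tuple

def traced_binary_search(key: int, arr: List[int], trace: List[int]) -> Tuple[bool, int]:
    """Binary search that appends (hypothetical) flow-graph node numbers to trace."""
    trace.append(1)                  # node 1: initialise bounds
    bottom, top = 0, len(arr) - 1
    result = (False, -1)
    while True:
        trace.append(2)              # node 2: loop condition bottom <= top
        if bottom > top:
            break
        mid = (bottom + top) // 2
        trace.append(3)              # node 3: is arr[mid] the key?
        if arr[mid] == key:
            result = (True, mid)
            break
        trace.append(4)              # node 4: is arr[mid] < key?
        if arr[mid] < key:
            trace.append(5)          # node 5: search upper half
            bottom = mid + 1
        else:
            trace.append(6)          # node 6: search lower half
            top = mid - 1
        trace.append(7)              # node 7: end of loop body
    trace.extend([8, 9])             # nodes 8 and 9: leave loop and return
    return result

def contains_path(trace: List[int], path: List[int]) -> bool:
    """True if path occurs as a contiguous run of nodes in trace."""
    n = len(path)
    return any(trace[i:i + n] == path for i in range(len(trace) - n + 1))

independent_paths = [
    [1, 2, 3, 8, 9],
    [1, 2, 3, 4, 6, 7, 2],
    [1, 2, 3, 4, 5, 7, 2],
    [1, 2, 3, 4, 6, 7, 2, 8, 9],
]

traces = []
for key, arr in [(5, [5]), (3, [5]), (7, [5])]:
    trace: List[int] = []
    traced_binary_search(key, arr, trace)
    traces.append(trace)

uncovered = [p for p in independent_paths if not any(contains_path(t, p) for t in traces)]
print(uncovered)  # → []
```

Under this node mapping, three one-element test cases (key equal to, below, and above the single value) are enough to execute all four independent paths.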

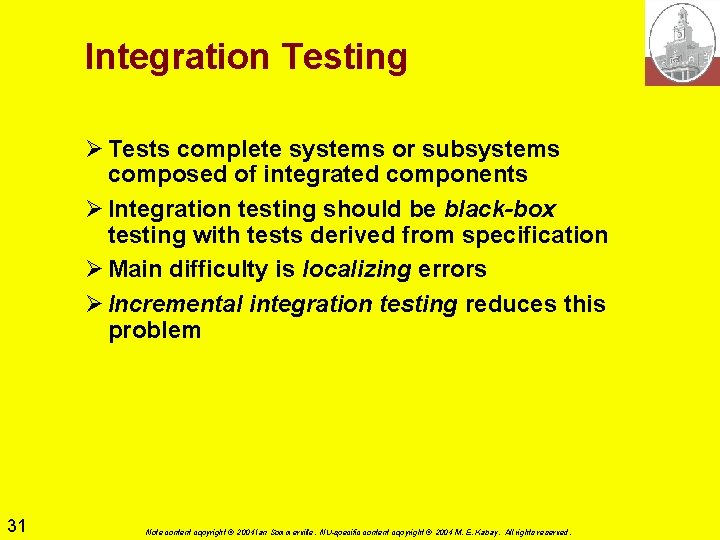

Integration Testing

- Tests complete systems or subsystems composed of integrated components
- Integration testing should be black-box testing with tests derived from specification
- Main difficulty is localizing errors
- Incremental integration testing reduces this problem

Incremental Integration Testing

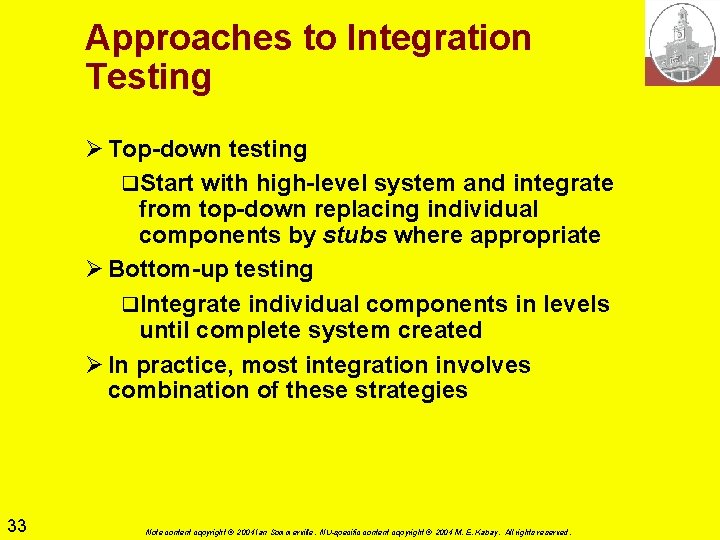

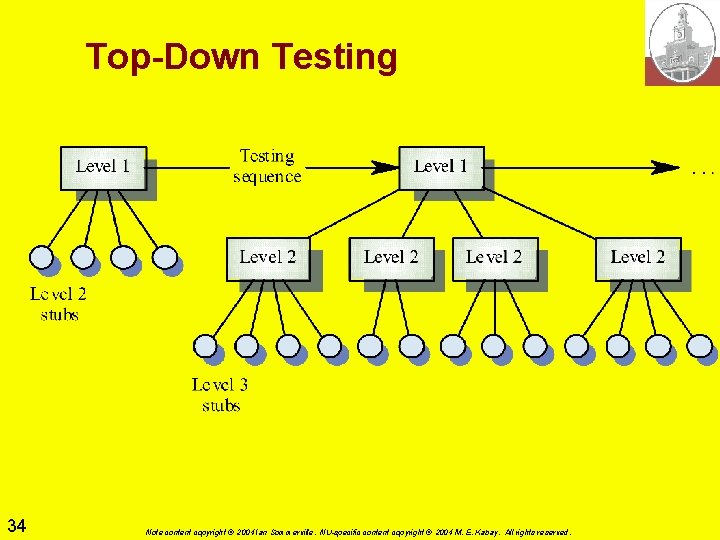

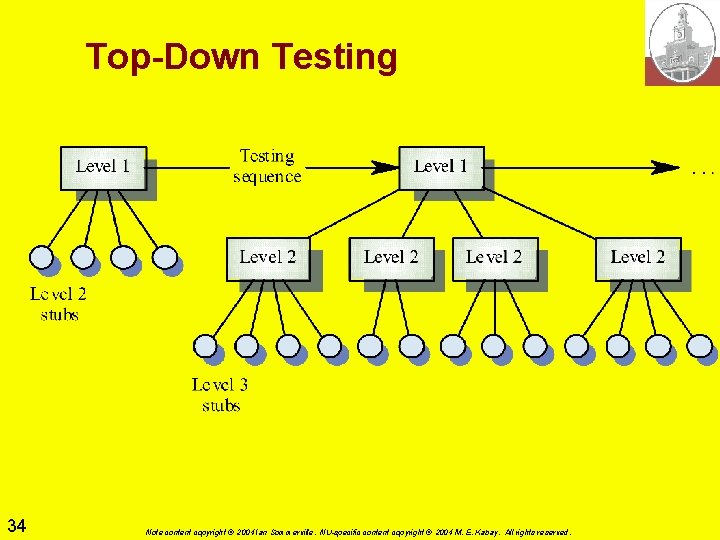

Approaches to Integration Testing

- Top-down testing
  - Start with high-level system and integrate from top down, replacing individual components by stubs where appropriate
- Bottom-up testing
  - Integrate individual components in levels until complete system created
- In practice, most integration involves combination of these strategies
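In top-down testing, a stub stands in for a lower-level component that is not yet integrated. A minimal Python sketch, assuming hypothetical weather-station-style names: the high-level `ReportGenerator` is tested against a stub that returns fixed readings instead of reading real sensors.

```python
from typing import Dict, List

class SensorReaderStub:
    """Stub replacing a lower-level component that is not yet integrated.
    It returns fixed, predictable readings instead of talking to hardware."""
    def read_temperatures(self) -> List[float]:
        return [10.0, 12.0, 14.0]

class ReportGenerator:
    """High-level component under test; works with any object providing read_temperatures()."""
    def __init__(self, reader) -> None:
        self.reader = reader

    def summary(self) -> Dict[str, float]:
        readings = self.reader.read_temperatures()
        return {"min": min(readings), "max": max(readings),
                "mean": sum(readings) / len(readings)}

# Top-down step: test the high-level component before the real sensor code exists.
report = ReportGenerator(SensorReaderStub()).summary()
print(report)  # → {'min': 10.0, 'max': 14.0, 'mean': 12.0}
```

Bottom-up testing reverses the roles: the real low-level component exists first, and a small test driver calls it in place of the missing higher levels.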

Top-Down Testing

Bottom-Up Testing

Testing Approaches

- Architectural validation
  - Top-down integration testing better at discovering errors in system architecture
- System demonstration
  - Top-down integration testing allows limited demonstration at early stage in development
- Test implementation
  - Often easier with bottom-up integration testing
- Test observation: what's happening during test?
  - Problems with both approaches
  - Extra code may be required to observe tests
    - Instrumenting the code
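Instrumenting the code means adding extra code whose only job is to make behavior during a test observable. A minimal Python sketch, assuming a decorator-based approach; `instrumented`, `call_log` and the two conversion functions are hypothetical names.

```python
import functools
from typing import Any, Callable, List, Tuple

call_log: List[Tuple[str, tuple, Any]] = []

def instrumented(func: Callable) -> Callable:
    """Decorator that records each call's name, positional arguments and return value."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        result = func(*args, **kwargs)
        call_log.append((func.__name__, args, result))
        return result
    return wrapper

@instrumented
def convert_to_fahrenheit(celsius: float) -> float:
    """Hypothetical lower-level component being observed."""
    return celsius * 9 / 5 + 32

@instrumented
def format_reading(celsius: float) -> str:
    """Hypothetical higher-level component that calls the lower one."""
    return f"{convert_to_fahrenheit(celsius):.1f} F"

print(format_reading(100.0))  # → 212.0 F
print(call_log)
```

The log lists the inner call first, because a call is recorded only when it returns; keyword arguments are not recorded in this sketch.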

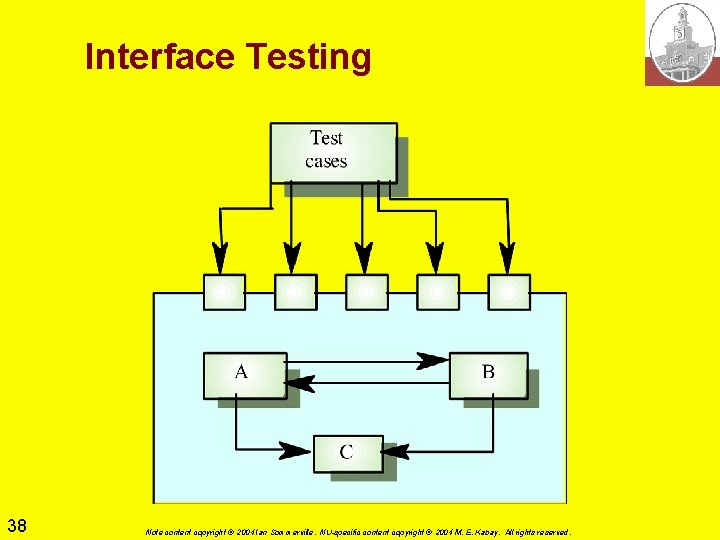

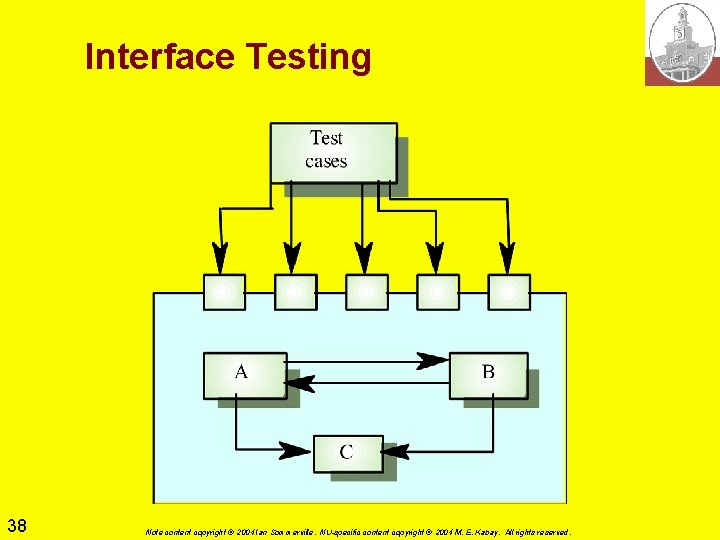

Interface Testing

- Takes place when modules or sub-systems integrated to create larger systems
- Objective: detect faults due to
  - Interface errors or
  - Invalid assumptions about interfaces
- Particularly important for object-oriented development
  - Objects defined by their interfaces

Interface Testing

Interface Types

- Parameter interfaces
  - Data passed from one procedure to another
- Shared-memory interfaces
  - Block of memory shared between/among procedures
- Procedural interfaces
  - Sub-system encapsulates set of procedures to be called by other sub-systems
- Message-passing interfaces
  - Sub-systems request services from other sub-systems

Interface Errors

- Interface misuse
  - Calling component makes an error in its use of the interface; e.g., parameters in wrong order
- Interface misunderstanding
  - Calling component embeds incorrect assumptions about behavior of called component
- Timing errors
  - Called and calling component operate at different speeds
  - Out-of-date information accessed
  - “Race conditions”

Interface Testing Guidelines

- Parameters to called procedure at extreme ends of their ranges
- Always test pointer parameters with null pointers
- Design tests which cause component to fail
- Use stress testing in message passing systems (see next slide)
- In shared-memory systems, vary order in which components activated
  - Why?
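Several of these guidelines fit in a few lines of test code. A Python sketch, assuming a hypothetical called component `scale_readings` with a parameter interface whose valid `percent` range is 0 to 100; `None` plays the role of a null pointer, and swapped arguments stand in for the "parameters in wrong order" misuse.

```python
from typing import List, Optional, Sequence

def scale_readings(readings: Optional[Sequence[int]], percent: int) -> List[int]:
    """Hypothetical called component: scale each reading by percent (valid range 0..100)."""
    if readings is None:
        raise ValueError("readings must not be None")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be in 0..100")
    return [r * percent // 100 for r in readings]

def rejects(readings, percent) -> bool:
    """True if the component refuses the call with ValueError (fails safely)."""
    try:
        scale_readings(readings, percent)
    except ValueError:
        return True
    return False

# Parameters at the extreme ends of the valid range are accepted ...
assert scale_readings([200], 0) == [0]
assert scale_readings([200], 100) == [200]
# ... and tests designed to make the component fail use values just outside it.
assert rejects([200], -1) and rejects([200], 101)
# Null-pointer test: None passed instead of a sequence.
assert rejects(None, 50)

# Interface misuse: arguments in the wrong order should not pass silently.
try:
    scale_readings(50, [200])
    swapped_detected = False
except TypeError:
    swapped_detected = True
print("swapped arguments detected:", swapped_detected)  # → swapped arguments detected: True
```

Here the swapped call happens to fail loudly because an integer cannot be compared with a list; if both parameters had the same type, only a test with a known expected result would catch the mistake.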

Stress Testing

- Exercises system beyond its maximum design load
  - Stressing system often causes defects to come to light
- Stressing system tests failure behavior
  - Should not fail catastrophically
  - Check for unacceptable loss of service or data
- Distributed systems
  - Non-linear performance degradation
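A stress test drives a component past its design load and then checks how it fails. A minimal Python sketch, assuming a hypothetical bounded message buffer whose intended failure behavior is to reject and count excess messages; the checks mirror the slide's criteria of no catastrophic failure and no unaccounted loss of data. Non-linear degradation in distributed systems is not modeled here.

```python
from collections import deque
from typing import Deque

class MessageBuffer:
    """Hypothetical buffer designed for at most `capacity` queued messages.
    When overloaded it rejects new messages and counts them, rather than
    crashing or silently discarding data."""
    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.queue: Deque[str] = deque()
        self.rejected = 0

    def offer(self, msg: str) -> bool:
        if len(self.queue) >= self.capacity:
            self.rejected += 1
            return False
        self.queue.append(msg)
        return True

def stress_test(capacity: int, load_factor: int) -> MessageBuffer:
    """Offer load_factor times the design load and return the buffer for inspection."""
    buf = MessageBuffer(capacity)
    for i in range(capacity * load_factor):
        buf.offer(f"msg-{i}")
    return buf

buf = stress_test(capacity=100, load_factor=3)
# Failure-behavior checks: no crash, every message accounted for, earliest data preserved.
accepted = len(buf.queue)
print(accepted, buf.rejected, accepted + buf.rejected == 300, buf.queue[0])  # → 100 200 True msg-0
```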

Approaches to Cluster Testing

- Use-case or scenario testing
  - Testing based on user interactions with system
  - Tests system features as experienced by users
- Thread testing
  - Tests system's response to events as processing threads through system
- Object interaction testing
  - Tests sequences of object interactions
  - Stop when object operation does not call on services from another object

Scenario-Based Testing

- Identify scenarios from use-cases
  - Supplement with interaction diagrams
  - Show objects involved in scenario
- Consider scenario in weather station system where report generated (next slide)
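A scenario test can check both the visible result and the sequence of object interactions the scenario implies. This Python sketch models report generation with three hypothetical classes loosely named after the weather station example; the real objects and messages are those in the interaction diagram, which is not reproduced in this text.

```python
from typing import Dict, List

class WeatherData:
    """Hypothetical store that summarises collected readings."""
    def __init__(self, log: List[str], readings: List[float]) -> None:
        self.log, self.readings = log, readings

    def summarise(self) -> Dict[str, float]:
        self.log.append("WeatherData.summarise")
        return {"max": max(self.readings), "min": min(self.readings)}

class WeatherStation:
    """Hypothetical station object that builds a report from its WeatherData."""
    def __init__(self, log: List[str], data: WeatherData) -> None:
        self.log, self.data = log, data

    def report(self) -> str:
        self.log.append("WeatherStation.report")
        s = self.data.summarise()
        return f"max={s['max']} min={s['min']}"

class CommsController:
    """Hypothetical entry point that receives a report request from outside."""
    def __init__(self, log: List[str], station: WeatherStation) -> None:
        self.log, self.station = log, station

    def request_report(self) -> str:
        self.log.append("CommsController.request_report")
        return self.station.report()

# Scenario: an external request causes a weather report to be generated.
log: List[str] = []
controller = CommsController(log, WeatherStation(log, WeatherData(log, [3.5, 9.0, 6.25])))
print(controller.request_report())  # → max=9.0 min=3.5
print(log)
```

The shared log makes the interaction sequence observable, so the test can assert that the request flows controller → station → data in that order.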

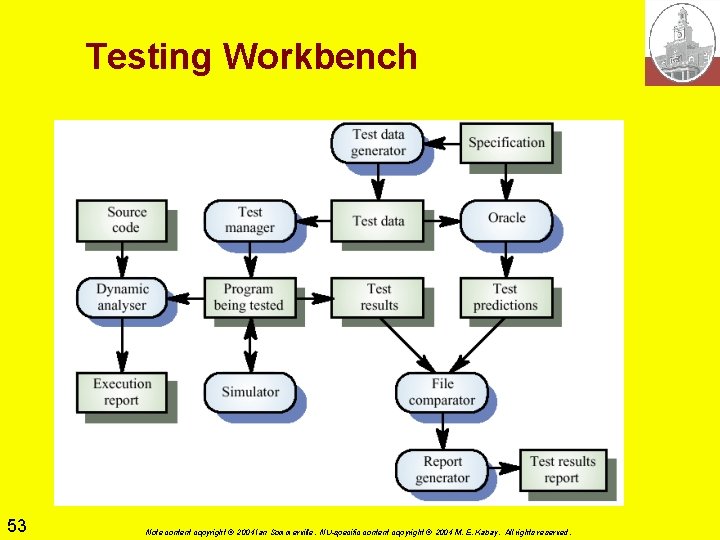

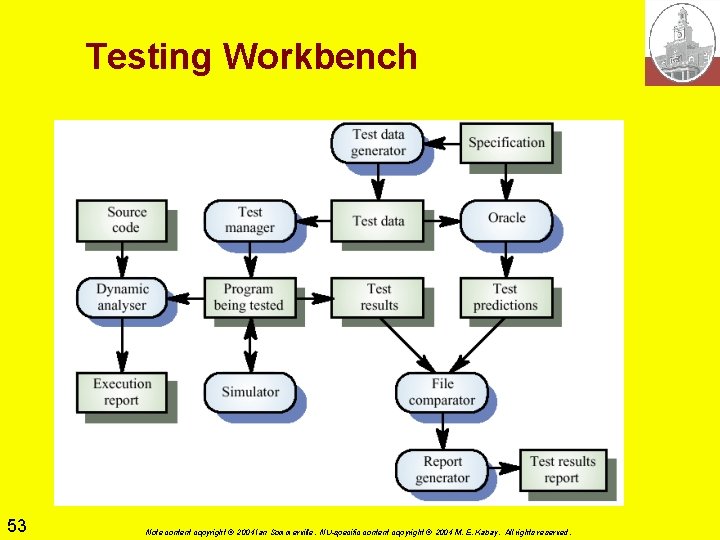

Testing Workbenches

- Testing expensive
- Workbenches
  - Range of tools
  - Reduce
    - Time required and
    - Total testing costs
- Most testing workbenches are open systems
  - Testing needs are organization-specific
  - Difficult to integrate with closed design and analysis workbenches

Testing Workbench

Testing Workbench Adaptation

- Scripts
  - User interface simulators and
  - Patterns for test data generators
- Test outputs
  - May have to be prepared manually for comparison
  - Special-purpose file comparators may be developed
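When expected outputs are prepared by hand, a comparator often has to ignore fields that legitimately differ between runs. A minimal Python sketch of such a special-purpose comparator built on the standard `difflib` module; the convention that lines starting with `#` are ignorable is an assumption for illustration.

```python
import difflib
from typing import List

def compare_outputs(expected: List[str], actual: List[str], ignore_prefix: str = "#") -> List[str]:
    """Drop lines starting with ignore_prefix (e.g. run timestamps), then return a
    unified diff of the remaining lines. An empty result means the outputs match."""
    def significant(lines: List[str]) -> List[str]:
        return [line for line in lines if not line.startswith(ignore_prefix)]
    return list(difflib.unified_diff(significant(expected), significant(actual),
                                     fromfile="expected", tofile="actual", lineterm=""))

expected = ["# run 2004-11-12 09:00", "total=42", "status=OK"]
actual = ["# run 2004-11-19 14:30", "total=42", "status=OK"]
print(compare_outputs(expected, actual))  # → []
```

A generic line-by-line comparison would flag the differing timestamp line; filtering it first keeps the comparison focused on the results the test actually cares about.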

Required Homework

- Read-recite-review Chapter 23 of Sommerville's text
- Survey-Question Chapter 24 for Monday
- Quiz on WEDNESDAY 17th Nov: Chapters 17-21
- Required
  - For Fri 19 Nov 2004, for 35 points:
    - 23.1 & 23.3 (5 points each)
    - 23.4 & 23.5 (10 points each)
    - 23.7 (5 points)

Optional Homework

- By Mon 29 Nov 2004, for up to 14 extra points, complete any or all of
  - 23.2, 23.6, 23.8 (2 points each)
  - 23.9 (5 points)
  - 23.10 (3 points)

DISCUSSION