Software Testing: Building Test Cases

Dr. Pedro Mejia Alvarez, Software Testing

Topics covered

- Software testing process
- Software testability
- Test cases
- Black-box testing
- Search and sort cases
- Debugging the application

Dynamics of Faults

- Fault detection: waiting for, provoking, or finding the error or failure as it occurs.
- Fault location: finding where the fault(s) occurred, their causes, and their consequences.
- Fault recovery: fixing the fault without causing new ones.
- Regression testing: testing the software again with the same data that caused the original fault.
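The fault cycle can be made concrete with a small C sketch. It assumes a hypothetical `average` function whose original version divided by zero when given an empty array. The fix guards the empty case. The regression table keeps the input that caused the original fault next to inputs that already passed, so rerunning it checks two things: that the fault is gone, and that the fix did not introduce new ones.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical function under test. Its original (faulty) version computed
 * sum / n unconditionally and divided by zero when n == 0.
 * Fault recovery: the empty case is now reported through *ok. */
int average(const int *a, size_t n, int *ok)
{
    assert(ok != NULL);            /* precondition: caller supplies *ok */
    if (n == 0) {
        *ok = 0;                   /* an empty array has no average */
        return 0;
    }
    long long sum = 0;
    for (size_t i = 0; i < n; i++)
        sum += a[i];
    *ok = 1;
    return (int)(sum / (long long)n);   /* integer division truncates toward zero */
}

/* A recorded regression case: the input and the expected outcome. */
struct regression_case {
    int values[4];
    size_t n;
    int expect_ok;
    int expect_avg;
};

/* Re-runs every recorded case and returns the number that fail. The first
 * entry is the input that caused the original fault; the others passed
 * before the fix and must still pass after it (no new faults). */
int run_regression(void)
{
    static const struct regression_case cases[] = {
        { {0},           0, 0, 0 },    /* original failing input: empty array */
        { {4},           1, 1, 4 },
        { {1, 2, 3, 4},  4, 1, 2 },    /* 10 / 4 truncates to 2 */
        { {-3, -3, -3},  3, 1, -3 },
    };
    int failures = 0;
    for (size_t i = 0; i < sizeof cases / sizeof cases[0]; i++) {
        int ok;
        int avg = average(cases[i].values, cases[i].n, &ok);
        if (ok != cases[i].expect_ok || (ok && avg != cases[i].expect_avg))
            failures++;
    }
    return failures;
}
```

Each time a new fault is fixed, its triggering input is appended to `cases`, so the suite grows with the fault history.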

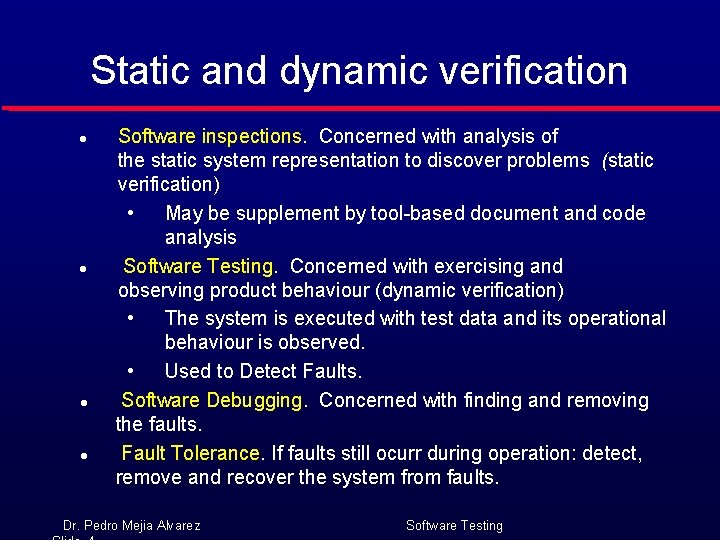

Static and dynamic verification

- Software inspections: concerned with analysis of the static system representation to discover problems (static verification).
  - May be supplemented by tool-based document and code analysis.
- Software testing: concerned with exercising and observing product behaviour (dynamic verification).
  - The system is executed with test data and its operational behaviour is observed.
  - Used to detect faults.
- Software debugging: concerned with finding and removing the faults.
- Fault tolerance: if faults still occur during operation, detect them, remove them, and recover the system from them.

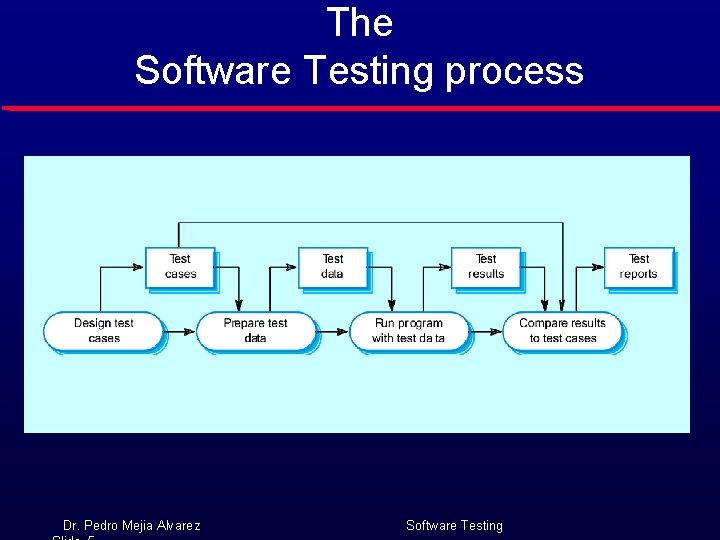

The Software Testing process

Software Testability

- The degree to which a system or component facilitates the establishment of test criteria and the performance of tests to determine whether those criteria have been met.
  - Plainly speaking: how hard it is to find faults in the software.
- Testability is determined by two practical problems:
  - How to provide the test values to the software.
  - How to observe the results of test execution.

Observability and Controllability

- Observability: how easy it is to observe the behavior of a program in terms of its outputs, its effects on the environment, and its effects on other hardware and software components.
  - Software that affects hardware devices, databases, or remote files has low observability.
- Controllability: how easy it is to provide a program with the needed inputs, in terms of values, operations, and behaviors.
  - Software with inputs from keyboards is easy to control.
  - Inputs from hardware sensors or distributed software are harder to control.
- Data abstraction reduces controllability and observability.
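One common design choice raises both properties at once, shown here as a C sketch with hypothetical function names. Keep the computation in a function that takes its inputs as parameters and returns its result. Confine keyboard and screen I/O to a thin wrapper. A test can then control the core directly and observe its return value, without typing input or parsing printed output.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* Testable core: inputs arrive as parameters (controllable) and the
 * result is the return value (observable). */
long sum_positive(const int *a, size_t n)
{
    assert(a != NULL || n == 0);   /* precondition */
    long sum = 0;
    for (size_t i = 0; i < n; i++)
        if (a[i] > 0)
            sum += a[i];
    return sum;
}

/* Thin I/O wrapper: reads up to 100 integers from the keyboard (stdin)
 * and prints the result. It is hard to test directly, so it holds no
 * logic of its own beyond reading and printing. */
void print_sum_positive_from_stdin(void)
{
    int buf[100];
    size_t n = 0;
    while (n < 100 && scanf("%d", &buf[n]) == 1)
        n++;
    printf("%ld\n", sum_positive(buf, n));
}
```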

Components of a Test Case

A test case is a multipart artifact with a definite structure:

- Test case values: the values that directly satisfy one test requirement.
- Expected results: the result that will be produced when executing the test if the program satisfies its intended behavior.

Affecting Controllability and Observability

- Preconditions: any conditions necessary for the correct execution of the software.
- Postconditions: any conditions that must be observed after executing the software.
  1. Verification values: values needed to see the results of the test case values.
  2. Exit commands: values needed to terminate the program or otherwise return it to a stable state.
- Executable test script: a test case that is prepared in a form to be executed automatically on the software under test and to produce a report.
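These components map naturally onto a record. The C sketch below shows one hypothetical way to write an executable test script:

- Each case carries its test case values and its expected result.
- The precondition is checked before the call.
- The postcondition is checked after the call.
- The script prints one report line per case.

The function under test, `index_of_max`, is an illustrative stand-in. It is not one of the algorithms from these slides.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* Function under test (illustrative): index of the largest element.
 * Precondition: n > 0. Ties resolve to the first occurrence. */
size_t index_of_max(const int *a, size_t n)
{
    assert(n > 0);
    size_t best = 0;
    for (size_t i = 1; i < n; i++)
        if (a[i] > a[best])
            best = i;
    return best;
}

struct test_case {
    const char *name;
    int values[5];        /* test case values */
    size_t n;
    size_t expected;      /* expected result */
};

/* Precondition: the input is non-empty and fits the values array. */
static int precondition(const struct test_case *t)
{
    return t->n > 0 && t->n <= 5;
}

/* Postcondition: the index is in range and no element is larger. */
static int postcondition(const struct test_case *t, size_t r)
{
    if (r >= t->n)
        return 0;
    for (size_t i = 0; i < t->n; i++)
        if (t->values[i] > t->values[r])
            return 0;
    return 1;
}

/* Executable test script: runs every case, prints a report, and returns
 * the number of failed cases. */
int run_test_script(void)
{
    static const struct test_case cases[] = {
        { "single element", {7},          1, 0 },
        { "max at end",     {1, 2, 9},    3, 2 },
        { "max at start",   {9, 2, 1},    3, 0 },
        { "ties",           {4, 8, 8, 1}, 4, 1 },
    };
    int failed = 0;
    for (size_t i = 0; i < sizeof cases / sizeof cases[0]; i++) {
        const struct test_case *t = &cases[i];
        if (!precondition(t)) {
            printf("SKIP %s: precondition not met\n", t->name);
            continue;
        }
        size_t r = index_of_max(t->values, t->n);
        int pass = r == t->expected && postcondition(t, r);
        printf("%s %s\n", pass ? "PASS" : "FAIL", t->name);
        failed += !pass;
    }
    return failed;
}
```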

Algorithms to Test

- Search
  - Binary search
  - Interpolation search
- Sort
  - Heap sort
  - Merge sort
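Interpolation search is probably the least familiar of the four algorithms, so here is a C sketch of it. The sketch assumes a sorted `int` array, which is its precondition, and returns -1 when the key is absent. Binary search always probes the middle of the range. Interpolation search instead estimates the key's position from its value. This takes about log log n probes on average for uniformly distributed keys, but can degrade to linear time on skewed data.

```c
#include <assert.h>
#include <stddef.h>

/* Interpolation search (sketch).
 * Precondition:  a[0] <= a[1] <= ... <= a[n-1].
 * Postcondition: returns i with a[i] == key, or -1 if key is absent. */
long interpolation_search(const int *a, size_t n, int key)
{
    assert(a != NULL || n == 0);
    if (n == 0)
        return -1;
    size_t lo = 0, hi = n - 1;
    while (lo <= hi && key >= a[lo] && key <= a[hi]) {
        if (a[hi] == a[lo])              /* flat range: avoid dividing by 0 */
            return a[lo] == key ? (long)lo : -1;
        /* Estimate the position from the key's value. 64-bit arithmetic
         * keeps the product from overflowing for arrays below 2^31 items. */
        long long offset = ((long long)key - a[lo]) * (long long)(hi - lo)
                           / ((long long)a[hi] - a[lo]);
        size_t pos = lo + (size_t)offset;    /* lo <= pos <= hi */
        if (a[pos] == key)
            return (long)pos;
        if (a[pos] < key)
            lo = pos + 1;                    /* pos < hi here */
        else
            hi = pos - 1;                    /* pos > lo here, so no underflow */
    }
    return -1;
}
```

For example, searching `{1, 3, 5, 7, 9}` for 7 estimates position (7 - 1) * 4 / (9 - 1) = 3 and finds the key on the first probe.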

Testing the algorithms

Black-box testing:

- Study the algorithms.
- Program them.
- Define the preconditions and postconditions of each algorithm.
- Develop test cases.
- Develop an oracle.
- Run the tests. Record inputs and outputs. Record invalid outputs.
- If there are invalid outputs or execution errors, debug the program to find the fault.
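The procedure above (run, compare against an oracle, record invalid outputs) can be sketched in C for heap sort. Several choices here are our own assumptions, not the slides':

- The oracle compares the output with the result of the C library's `qsort`. This checks both that the output is sorted and that no element was lost or duplicated.
- Inputs come from `rand()` with a fixed seed, so the seed and trial number are enough to reproduce any recorded failure.

```c
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Restores the max-heap property below `start` within a[0..end). */
static void sift_down(int *a, size_t start, size_t end)
{
    size_t root = start;
    while (2 * root + 1 < end) {             /* while root has a child */
        size_t child = 2 * root + 1;
        if (child + 1 < end && a[child] < a[child + 1])
            child++;                          /* pick the larger child */
        if (a[root] >= a[child])
            return;
        int tmp = a[root]; a[root] = a[child]; a[child] = tmp;
        root = child;
    }
}

/* Heap sort, ascending. */
void heap_sort(int *a, size_t n)
{
    for (size_t i = n / 2; i-- > 0;)          /* build a max-heap */
        sift_down(a, i, n);
    for (size_t end = n; end > 1; end--) {    /* move the max to the end */
        int tmp = a[0]; a[0] = a[end - 1]; a[end - 1] = tmp;
        sift_down(a, 0, end - 1);
    }
}

static int cmp_int(const void *x, const void *y)
{
    int a = *(const int *)x, b = *(const int *)y;
    return (a > b) - (a < b);
}

/* Runs `trials` random black-box tests of heap_sort and returns how many
 * produced an invalid output. Each failure is recorded on stderr. */
int black_box_heap_sort(unsigned seed, int trials)
{
    enum { MAX_N = 64 };
    int input[MAX_N], output[MAX_N], expected[MAX_N];
    assert(trials >= 0);
    srand(seed);
    int invalid = 0;
    for (int t = 0; t < trials; t++) {
        size_t n = (size_t)(rand() % (MAX_N + 1));    /* includes n == 0 */
        for (size_t i = 0; i < n; i++)
            input[i] = rand() % 100 - 50;             /* duplicates are likely */
        memcpy(output, input, n * sizeof *input);
        memcpy(expected, input, n * sizeof *input);
        heap_sort(output, n);                         /* run the test */
        qsort(expected, n, sizeof *expected, cmp_int); /* oracle */
        if (memcmp(output, expected, n * sizeof *output) != 0) {
            invalid++;
            fprintf(stderr, "invalid output: seed=%u trial=%d n=%zu\n",
                    seed, t, n);
        }
    }
    return invalid;
}
```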

Test case design

- Involves designing the test cases (inputs and outputs) used to test the system.
- The goal of test case design is to create a set of tests that are effective in validation and defect testing.
- Design approaches:
  - Black-box testing: partition testing.
  - White-box testing:
    - Structural testing.
    - Criteria based on structures.

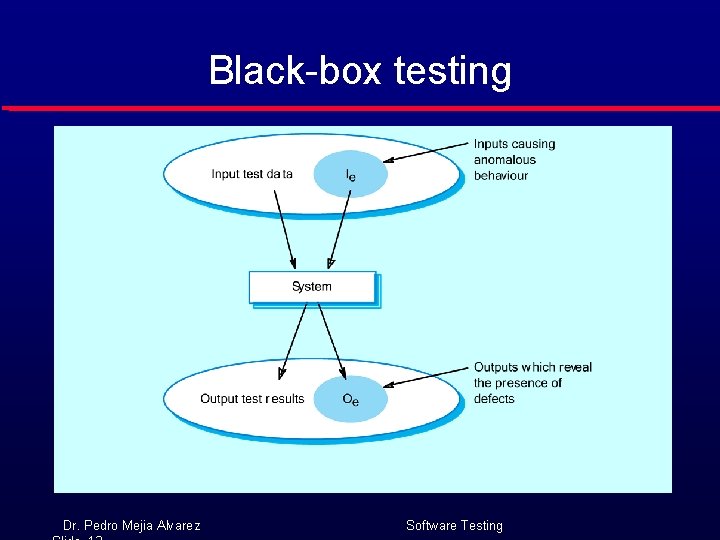

Black-box testing

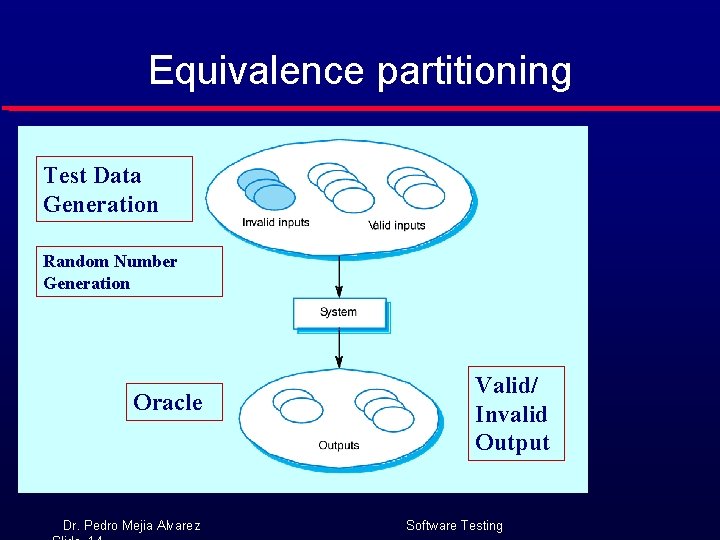

Diagram components: equivalence partitioning, test data generation, random number generation, oracle, valid/invalid output.

Testing guidelines

- Testing guidelines are hints for the testing team to help them choose tests that will reveal defects in the system:
  - Choose inputs that force the system to generate valid outputs.
  - Choose inputs that force the system to generate faults (invalid outputs).
  - Check whether valid inputs generate invalid outputs.
  - Check whether invalid inputs generate valid outputs.
  - Design inputs that cause buffers to overflow.
  - Repeat the same input or input series several times.
  - Force computation results to be too large or too small.
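The last guideline, forcing computation results to be too large or too small, is easy to apply to integer arithmetic. In the hedged C sketch below, `checked_add` is a hypothetical helper that reports overflow instead of performing it, because signed overflow is undefined behaviour in C. The test inputs sit at the extremes `INT_MAX` and `INT_MIN`, which is where this guideline expects failures to surface.

```c
#include <assert.h>
#include <limits.h>
#include <stdbool.h>
#include <stddef.h>

/* Stores a + b in *out and returns true, or returns false if the exact
 * result does not fit in an int. The range check runs before the addition,
 * so the addition itself can never overflow. */
bool checked_add(int a, int b, int *out)
{
    assert(out != NULL);
    if ((b > 0 && a > INT_MAX - b) || (b < 0 && a < INT_MIN - b))
        return false;
    *out = a + b;
    return true;
}
```

Inputs such as `(INT_MAX, 1)` push the result too large and `(INT_MIN, -1)` push it too small. Both should be rejected, while `(INT_MAX, INT_MIN)` stays in range.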

Partition testing

- Input data and output results often fall into different classes where all members of a class are related.
- Each of these classes is an equivalence partition or domain where the program behaves in an equivalent way for each class member.
- Test cases should be chosen from each partition.

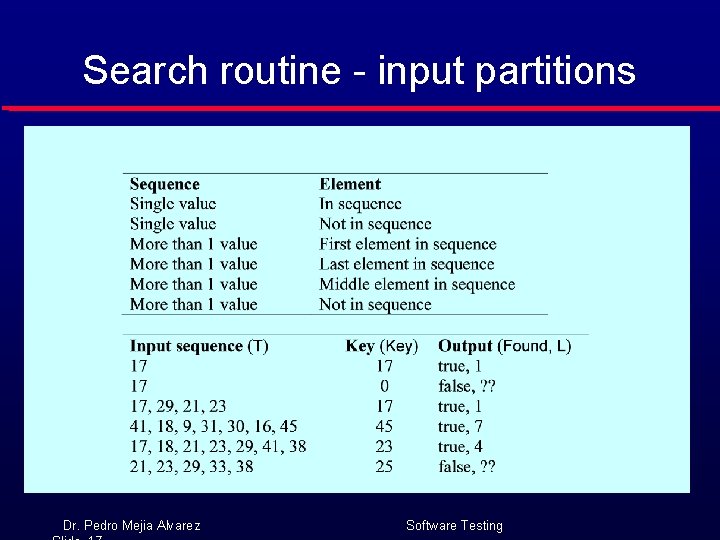

Search routine - input partitions
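Choosing one test case per partition can be sketched in C for binary search. The partition table itself lives in the figure, so the partitions used here are our assumption:

- an empty array;
- a single element, with the key present or absent;
- many elements, with the key at the first, middle, or last position, or absent.

```c
#include <assert.h>
#include <stddef.h>

/* Binary search over a sorted int array.
 * Precondition: a is sorted in ascending order.
 * Returns an index i with a[i] == key, or -1 if key is absent. */
long binary_search(const int *a, size_t n, int key)
{
    assert(a != NULL || n == 0);
    size_t lo = 0, hi = n;                 /* half-open range [lo, hi) */
    while (lo < hi) {
        size_t mid = lo + (hi - lo) / 2;   /* avoids overflow of lo + hi */
        if (a[mid] == key)
            return (long)mid;
        if (a[mid] < key)
            lo = mid + 1;
        else
            hi = mid;
    }
    return -1;
}

/* One test case per input partition; returns the number of failures. */
int run_partition_tests(void)
{
    static const int one[] = { 17 };
    static const int many[] = { 2, 4, 6, 8, 10, 12, 14 };
    const struct {
        const char *partition;
        const int *a;
        size_t n;
        int key;
        long expected;
    } cases[] = {
        { "empty array",                 NULL, 0, 1, -1 },
        { "single element, key present", one,  1, 17, 0 },
        { "single element, key absent",  one,  1, 5, -1 },
        { "many elements, key first",    many, 7, 2,  0 },
        { "many elements, key middle",   many, 7, 8,  3 },
        { "many elements, key last",     many, 7, 14, 6 },
        { "many elements, key absent",   many, 7, 9, -1 },
    };
    int failures = 0;
    for (size_t i = 0; i < sizeof cases / sizeof cases[0]; i++)
        if (binary_search(cases[i].a, cases[i].n, cases[i].key) != cases[i].expected)
            failures++;
    return failures;
}
```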

Sort Routine: Partitions

Sequences (arrays of 1,000 elements):

- Sort an array whose elements are not sorted.
- Sort an array whose elements are already sorted.
- Sort an array with many equal elements.

Oracle:

- Simple: check that each element in the array is less than or equal to the next one.
- Check how many elements are not correctly sorted.
- Check which input makes the output incorrect.
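The three sequences and the oracle could be sketched in C as follows. The choices below are our assumptions:

- The routine under test is a top-down merge sort.
- The "not sorted" sequence is drawn from `rand()` with a fixed seed.
- The "many equal elements" sequence uses only the values 0 to 4.
- The oracle counts adjacent pairs that are out of order, so a result of 0 means every element is less than or equal to the next.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

#define SEQ_LEN 1000   /* sequence length from the slide */

/* Merges the sorted halves a[lo..mid) and a[mid..hi) through tmp. */
static void merge(int *a, int *tmp, size_t lo, size_t mid, size_t hi)
{
    size_t i = lo, j = mid, k = lo;
    while (i < mid && j < hi)
        tmp[k++] = (a[i] <= a[j]) ? a[i++] : a[j++];   /* <= keeps it stable */
    while (i < mid) tmp[k++] = a[i++];
    while (j < hi)  tmp[k++] = a[j++];
    memcpy(a + lo, tmp + lo, (hi - lo) * sizeof *a);
}

static void merge_sort_rec(int *a, int *tmp, size_t lo, size_t hi)
{
    if (hi - lo < 2)
        return;
    size_t mid = lo + (hi - lo) / 2;
    merge_sort_rec(a, tmp, lo, mid);
    merge_sort_rec(a, tmp, mid, hi);
    merge(a, tmp, lo, mid, hi);
}

void merge_sort(int *a, size_t n)
{
    assert(n <= SEQ_LEN);             /* the scratch buffer is fixed-size */
    int tmp[SEQ_LEN];
    merge_sort_rec(a, tmp, 0, n);
}

/* Oracle: number of adjacent pairs with a[i] > a[i + 1]. */
size_t count_out_of_order(const int *a, size_t n)
{
    size_t bad = 0;
    for (size_t i = 0; i + 1 < n; i++)
        if (a[i] > a[i + 1])
            bad++;
    return bad;
}

/* Fills a with one of the three partitions from the slide. */
void fill_partition(int *a, int which)
{
    for (size_t i = 0; i < SEQ_LEN; i++) {
        switch (which) {
        case 0:  a[i] = rand();     break;   /* not sorted (random order) */
        case 1:  a[i] = (int)i;     break;   /* already sorted */
        default: a[i] = rand() % 5; break;   /* many equal elements */
        }
    }
}

/* Sorts each partition and returns the total out-of-order count. */
size_t test_sort_partitions(unsigned seed)
{
    int a[SEQ_LEN];
    size_t total = 0;
    srand(seed);
    for (int which = 0; which < 3; which++) {
        fill_partition(a, which);
        merge_sort(a, SEQ_LEN);
        total += count_out_of_order(a, SEQ_LEN);
    }
    return total;
}
```

This oracle checks order only. An output that dropped or duplicated elements could still pass, so a stronger oracle would also compare the output against the input as a multiset.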

Testing and debugging

- Defect testing and debugging are distinct processes.
- Verification and validation is concerned with establishing the existence of defects in a program.
- Debugging is concerned with locating and repairing these errors.
- Debugging involves formulating hypotheses about program behaviour and then testing these hypotheses to find the system error.

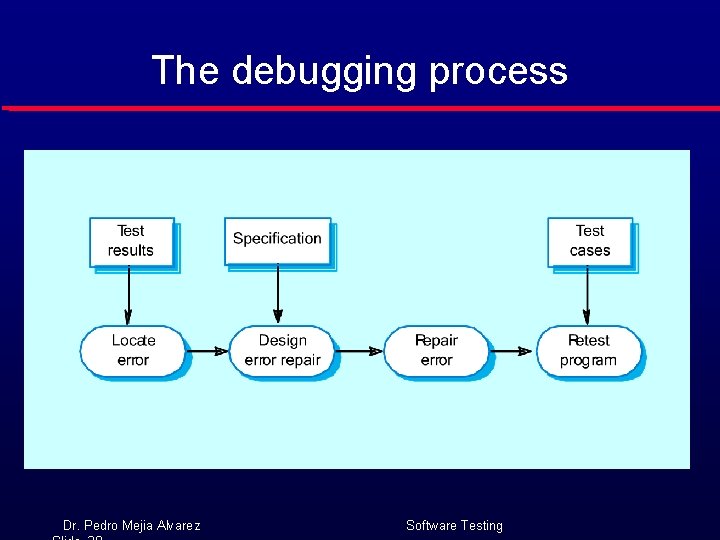

The debugging process

Debugging

What can debuggers do?

- Run programs.
- Make the program stop at specified places or when specified conditions hold.
- Give information about the current values of variables, the memory, and the stack.
- Let you examine the program execution step by step (stepping).
- Let you examine how the values of program variables change (tracing).

To be able to debug your program, you must compile it with the -g option, which generates the debugging information (including the symbol table) that the debugger needs:

    CC -g my_prog

GDB: Running Programs

- Running a program: run (or r) creates an inferior process that runs your program.
  - If there are no execution errors, the program will finish and the results will be displayed.
  - In case of an error, GDB will show:
    - the line the program has stopped on, and
    - a short description of what it believes has caused the error.
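A small C file with a deliberate fault is handy for practising this workflow. The sketch below is hypothetical:

- `count_char_faulty` dereferences a null pointer.
- `count_char` is the fixed version, which guards against a null pointer.
- The `main` for the debugging session is compiled only when `GDB_DEMO` is defined, a macro name of our own choosing.

On typical systems, running the demo under GDB stops with a segmentation fault (SIGSEGV) and shows the faulting line inside `count_char_faulty`.

```c
#include <assert.h>
#include <stddef.h>

/* Faulty version: dereferences s without checking it, so a NULL argument
 * crashes the program (a segmentation fault on typical systems). */
size_t count_char_faulty(const char *s, char c)
{
    size_t count = 0;
    for (; *s != '\0'; s++)      /* fault: reads *s when s == NULL */
        if (*s == c)
            count++;
    return count;
}

/* Fixed version: a NULL string is treated as containing no characters. */
size_t count_char(const char *s, char c)
{
    if (s == NULL)
        return 0;
    return count_char_faulty(s, c);   /* safe: s is known to be non-NULL */
}

#ifdef GDB_DEMO
/* Build: cc -g -DGDB_DEMO -o demo demo.c   (-g adds debugging information)
 * Debug: gdb ./demo, then
 *        run         -> stops at the faulting line and names the signal
 *        backtrace   -> shows the chain of calls that led there
 *        print s     -> shows the null pointer                        */
int main(void)
{
    const char *text = NULL;
    return (int)count_char_faulty(text, 'a');
}
#endif
```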

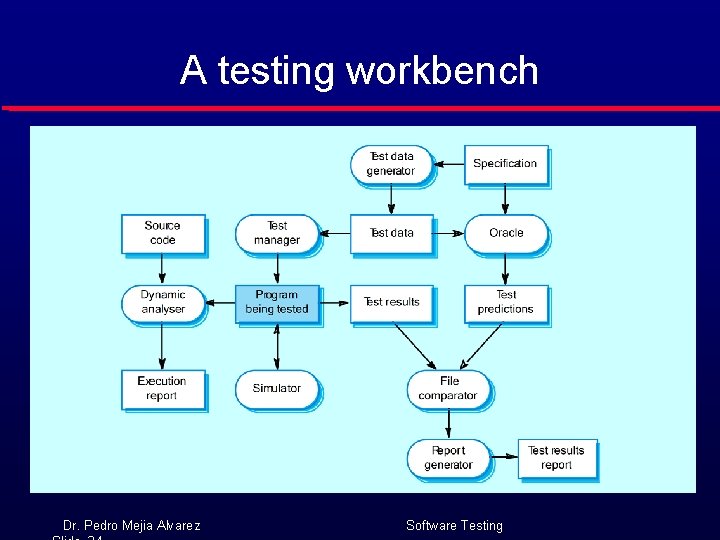

Test automation

- Testing is an expensive process phase. Testing workbenches provide a range of tools to reduce the time required and the total testing costs.
- Systems such as JUnit support the automatic execution of tests.
- Most testing workbenches are open systems because testing needs are organisation-specific.
- They are sometimes difficult to integrate with closed design and analysis workbenches.
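JUnit is a Java tool, but the core of what it automates can be sketched in a few lines of C: registering tests, running them all, and reporting failures. This mini-runner is hypothetical and not part of any library. The `CHECK` macro and the two example tests are our own.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Records a failure instead of aborting, so the remaining tests still run. */
static int current_failed;
#define CHECK(cond)                                                    \
    do {                                                               \
        if (!(cond)) {                                                 \
            current_failed = 1;                                        \
            fprintf(stderr, "  check failed: %s (line %d)\n",          \
                    #cond, __LINE__);                                  \
        }                                                              \
    } while (0)

typedef void (*test_fn)(void);
struct test { const char *name; test_fn fn; };

/* Example tests: each is an ordinary function containing checks. */
static void test_strlen(void) { CHECK(strlen("abc") == 3); CHECK(strlen("") == 0); }
static void test_strcmp(void) { CHECK(strcmp("a", "b") < 0); }

/* Test registry: adding a test means adding one line here. */
static const struct test all_tests[] = {
    { "strlen", test_strlen },
    { "strcmp", test_strcmp },
};

/* Runs every registered test, prints a report, and returns the number of
 * failing tests (0 means the whole suite passed). */
int run_all_tests(void)
{
    int failures = 0;
    size_t count = sizeof all_tests / sizeof all_tests[0];
    for (size_t i = 0; i < count; i++) {
        current_failed = 0;
        all_tests[i].fn();
        printf("%-10s %s\n", all_tests[i].name, current_failed ? "FAIL" : "ok");
        failures += current_failed;
    }
    printf("%zu tests, %d failed\n", count, failures);
    return failures;
}
```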

A testing workbench

What do we need to improve testing?

- Run programs
