Software Excellence via Program Verification at Microsoft – Manuvir Das


Software Excellence via Program Verification at Microsoft

Manuvir Das, Center for Software Excellence, Microsoft Corporation


Software Excellence via Program Analysis at Microsoft

Talking the talk …

- Program analysis technology can make a huge impact on how software is engineered
- The trick is to properly balance research on new techniques with a focus on deployment
- The Center for Software Excellence (CSE) at Microsoft is doing this (well?) today

Arizona, 6 Oct ‘05, Manuvir Das, Microsoft Corporation

… walking the walk

- Program Analysis group in June 2005 (one future version of one product):
  - Filed 7000+ bugs
  - Automatically added 10,000+ specifications
  - Answered hundreds of emails
- We are program analysis researchers
  - but we live and breathe deployment & adoption
  - and we feel the pain of the customer

Context

- The Nail (Windows)
  - Manual processes do not scale to “real” software
- The Hammer (Program Analysis)
  - Automated methods for “searching” programs
- The Carpenter (CSE)
  - A systematic, heavily automated approach to improving the “quality” of software

What is program analysis?

- grep == program analysis == grep
- syntax trees, CFGs, instrumentation, alias analysis, dataflow analysis, dependency analysis, binary analysis, automated debugging, fault isolation, testing, symbolic evaluation, model checking, specifications, …

Roadmap

- (part of) The engineering process today
- (some of) The tools that enable the process
- (a few) Program analyses behind the tools
- (too many) Lessons learned along the way
- (too few) Suggestions for future research

Engineering process

Methodology

(Diagram: Root Cause Analysis, Measurement, Engineering Process, Analysis Technology, Resource Constraints.)

Root cause analysis

- Understand important failures in a deep way
  - Every MSRC bulletin
  - Beta release feedback
  - Watson crash reports
  - Self host
  - Bug databases
- Design and adjust the engineering process to ensure that these failures are prevented

Measurement

- Measure everything about the process
  - Code quality
  - Code velocity
  - Tools effectiveness
  - Developer productivity
- Tweak the process accordingly

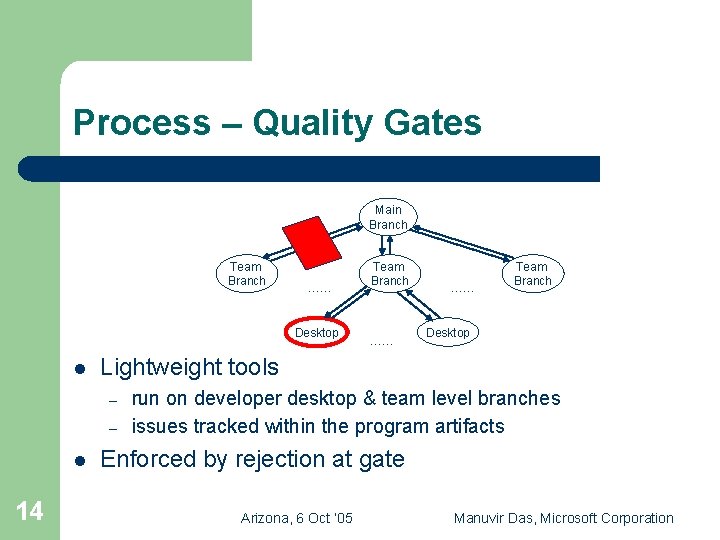

Process – Build Architecture

(Diagram: a Main Branch fed by several Team Branches, each of which is fed by developer Desktops.)

Process – Quality Gates

(Diagram: Main Branch, Team Branches, Desktops.)

- Lightweight tools
  - run on developer desktop & team level branches
  - issues tracked within the program artifacts
- Enforced by rejection at gate

Process – Automated Bug Filing

(Diagram: Main Branch, Team Branches, Desktops.)

- Heavyweight tools
  - run on main branch
  - issues tracked through a central bug database
- Enforced by bug cap

Tools

QG – Code Coverage via Testing

- Reject code that is not adequately tested
  - Maintain a minimum bar for code coverage
- Code coverage tool – Magellan
- Based on binary analysis – Vulcan

Magellan

- BBCover
  - low overhead instrumentation & collection
  - down to basic block level
- Sleuth
  - coverage visualization, reporting & analysis
- Blender
  - coverage migration
- Scout
  - test prioritization
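BBCover works on binaries through Vulcan, and its internals are not described here. The idea behind basic-block coverage can still be sketched at source level, assuming hand-placed probes: each basic block bumps its own counter, and coverage is the fraction of blocks whose counter is nonzero. `PROBE`, `bb_hits`, `classify` and `block_coverage` are hypothetical names for illustration only.

```c
#include <stddef.h>

/* Hypothetical sketch: one hit counter per basic block, incremented by a
   probe that an instrumenter would insert at the start of each block. */
#define NUM_BLOCKS 4
static unsigned long bb_hits[NUM_BLOCKS];
#define PROBE(id) (bb_hits[(id)]++)

/* Example function, split by hand into four basic blocks. */
int classify(int x)
{
    PROBE(0);                  /* entry block */
    if (x < 0) {
        PROBE(1);              /* negative branch */
        return -1;
    }
    if (x == 0) {
        PROBE(2);              /* zero branch */
        return 0;
    }
    PROBE(3);                  /* positive branch */
    return 1;
}

/* Percentage of basic blocks executed at least once. */
double block_coverage(void)
{
    size_t covered = 0;
    for (size_t i = 0; i < NUM_BLOCKS; i++)
        if (bb_hits[i] > 0)
            covered++;
    return 100.0 * (double)covered / NUM_BLOCKS;
}
```

After `classify(5)` and `classify(-3)`, three of the four blocks have run, so `block_coverage()` reports 75%; a quality gate would compare such a figure against its minimum bar.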

QG – Component Integrity

- Reject code that breaks the componentized architecture of the product
  - Control all dependencies across components
- Dependency analysis tool – MaX
- Based on binary analysis – Vulcan

MaX

- Constructs a graph of dependencies between binaries (DLLs) in the system
  - Obvious: call graph
  - Subtle: registry, RPC, …
- Compare policy graph and actual graph
- Some discrepancies are treated as errors
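The comparison step can be pictured as a set difference between two graphs. A minimal sketch, assuming both graphs are already available as adjacency matrices over a hypothetical set of components (MaX itself derives the actual graph from binaries, including registry and RPC edges, which this sketch does not attempt): every edge present in the actual graph but absent from the policy graph is reported. The slide says only some discrepancies are treated as errors; for simplicity this sketch counts all of them.

```c
#include <stdbool.h>
#include <stdio.h>

/* Hypothetical component indices. */
enum { KERNEL, NET, SHELL, UI, NUM_COMPONENTS };

/* g[a][b] is true when component a depends on component b. */
typedef bool DepGraph[NUM_COMPONENTS][NUM_COMPONENTS];

/* Reports each dependency in the actual graph that the policy graph does
   not allow, and returns the number of such violations. */
int check_dependencies(DepGraph policy, DepGraph actual)
{
    int violations = 0;
    for (int from = 0; from < NUM_COMPONENTS; from++)
        for (int to = 0; to < NUM_COMPONENTS; to++)
            if (actual[from][to] && !policy[from][to]) {
                printf("violation: component %d -> component %d\n", from, to);
                violations++;
            }
    return violations;
}
```

Edges allowed by the policy but absent from the actual graph are ignored, since an unused permission does not break the componentized architecture.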

Vulcan

- Input – binary code
- Output – program abstractions
- Adapts to level of debug information
- Makes code instrumentation easy
  - think ATOM
- Makes code modification easy
  - link time, post link time, install time, run time

QG – Formal Specifications

- Reject code with poorly designed and/or insufficiently specified interfaces
- Lightweight specification language – SAL
  - initial focus on memory usage
- All functions must be SAL annotated
- Fully supported in Visual Studio (see MSDN)

SAL

- A language of contracts between functions
- preconditions
  - Statements that hold at entry to the callee
  - What does a callee expect from its callers?
- postconditions
  - Statements that hold at exit from the callee
  - What does a callee promise its callers?
- Usage example: `a0 RT func(a1 … an T par)`, with annotation a0 on the return type and a1 … an on the parameter
- Buffer sizes, null pointers, memory usage, …

SAL Example

- wcsncpy
  - precondition: destination must have enough allocated space

`wchar_t *wcsncpy(wchar_t *dest, const wchar_t *src, size_t num);`

`wchar_t *wcsncpy(__pre __writableTo(elementCount(num)) wchar_t *dest, const wchar_t *src, size_t num);`
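SAL annotations are checked statically, but the contract they state can be made concrete with a runtime analogue. A sketch, assuming a hypothetical `PRE_WRITABLE_TO_ELEMENTS` macro that expands to nothing (so it compiles anywhere; it is not the real SAL spelling) and an extra, hypothetical `dest_count` parameter carrying the buffer capacity that a static checker would track on its own:

```c
#include <assert.h>
#include <stddef.h>
#include <wchar.h>

/* Hypothetical placeholder for the SAL precondition; it expands to nothing,
   so here the annotation serves as documentation only. */
#define PRE_WRITABLE_TO_ELEMENTS(n)

/* dest_count is the capacity of dest in elements; the assert checks the
   precondition "dest is writable to num elements" at run time. */
wchar_t *checked_wcsncpy(PRE_WRITABLE_TO_ELEMENTS(num) wchar_t *dest,
                         size_t dest_count, const wchar_t *src, size_t num)
{
    assert(num <= dest_count && "precondition: dest must hold num elements");
    return wcsncpy(dest, src, num);
}
```

The static version is strictly more useful: a checker that understands the annotation flags the bad call site at compile time, with no extra parameter and no run-time cost.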

SAL Principle

- Control the power of the specifications:
  - Impractical solution: Rewrite code in a different language that is amenable to automated analysis
  - Practical solution: Formalize invariants that are implicit in the code in intuitive notations
- These invariants often already appear in comments

Defect Detection Process – 1

QG – Integer Overflow

- Reject code with potential security holes due to unchecked integer arithmetic
- Range specifications + range checker – IO
- Local (intra-procedural) analysis
- Runs on developer desktop as part of regular compilation process

IO

- Enforces correct arithmetic for allocations: `size1 = …; size2 = …; data = MyAlloc(size1 + size2); for (i = 0; i < size1; i++) data[i] = …;`
- Construct an expression tree for every interesting expression in the code
- Ensure that every node in the tree is checked
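For `size1 + size2`, the expression tree has a single addition node, so checking every node means checking that one sum before it reaches the allocator. A minimal sketch, with standard `malloc` standing in for the slide's `MyAlloc` and `alloc_sum` as a hypothetical helper name:

```c
#include <stdint.h>
#include <stdlib.h>

/* Returns a buffer of size1 + size2 bytes, or NULL if the sum would wrap
   around.  The addition is checked before its result is used. */
unsigned char *alloc_sum(size_t size1, size_t size2)
{
    if (size1 > SIZE_MAX - size2)   /* size1 + size2 would overflow */
        return NULL;
    return malloc(size1 + size2);
}
```

When the check passes, `size1 <= size1 + size2`, so the slide's loop writing `data[i]` for `i < size1` stays inside the allocation. Without the check, a wrapped sum yields a small buffer and the same loop overruns it.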

QG – Buffer Overruns

- Reject code with potential security holes due to out-of-bounds buffer accesses
- Buffer size specifications + buffer overrun checker – espX
- Local (intra-procedural) analysis
- Runs on developer desktop as part of regular compilation process

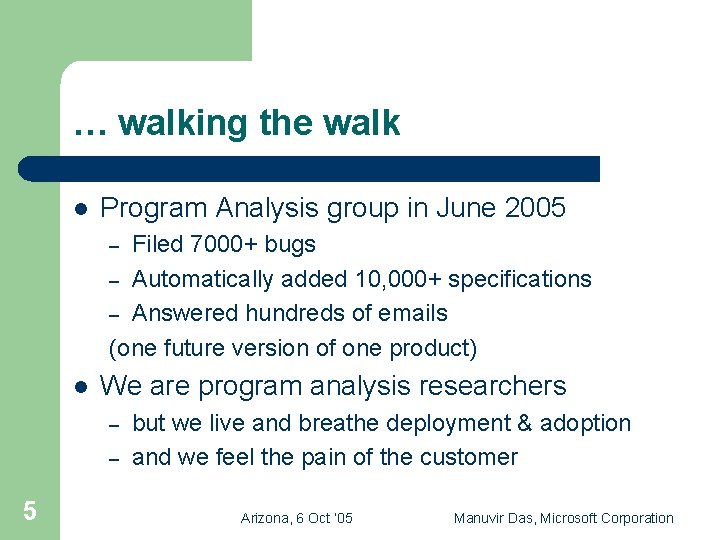

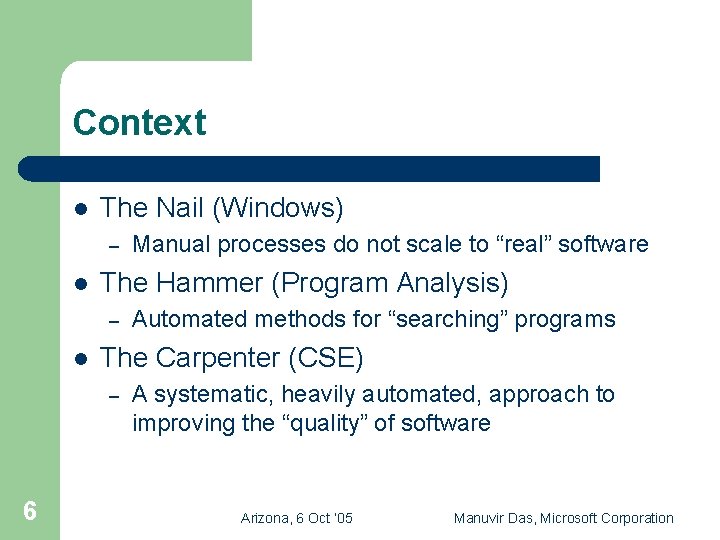

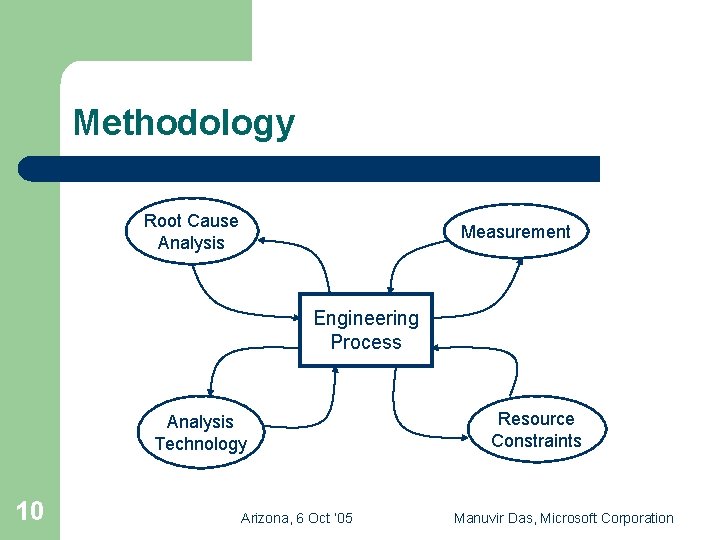

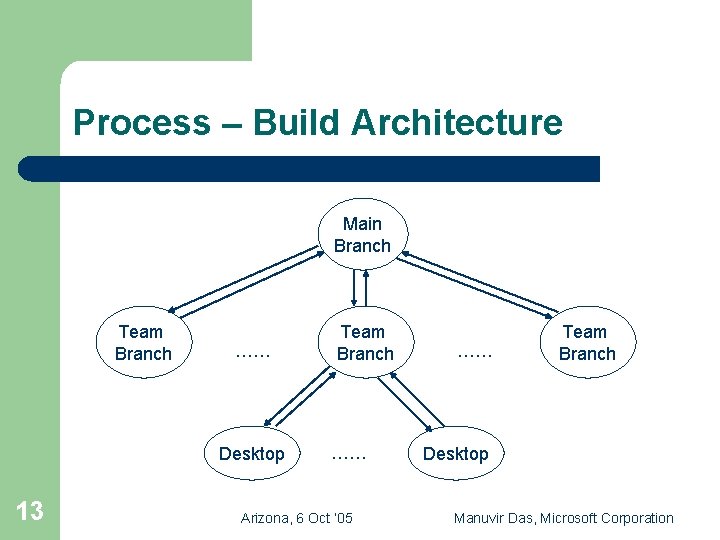

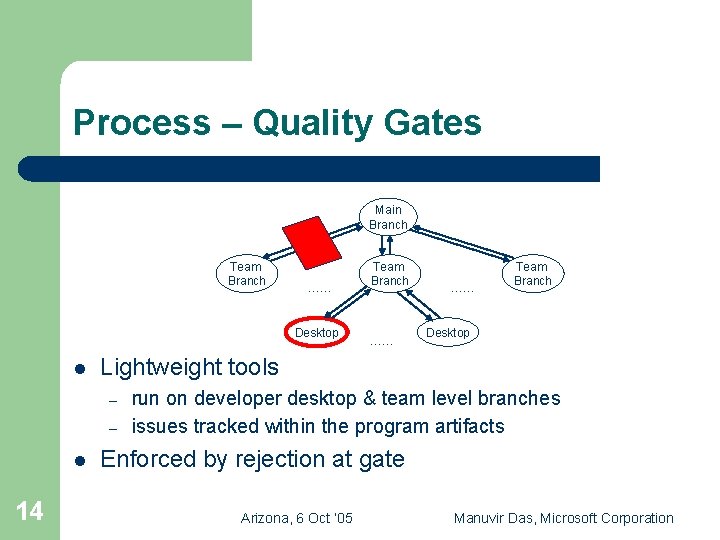

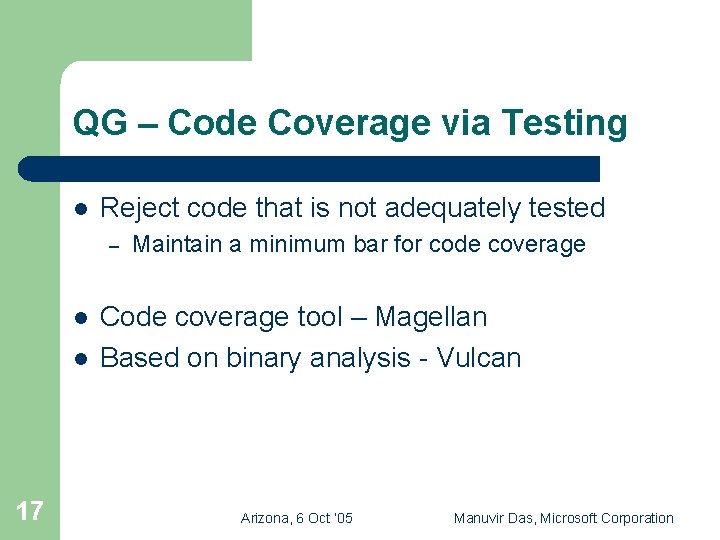

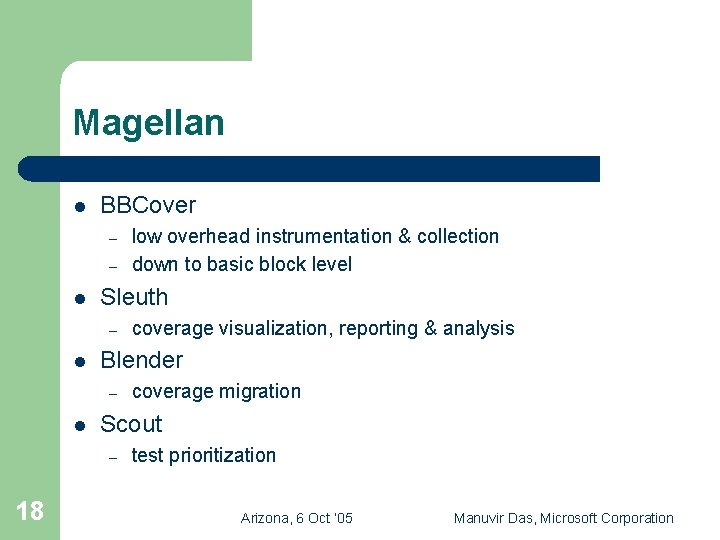

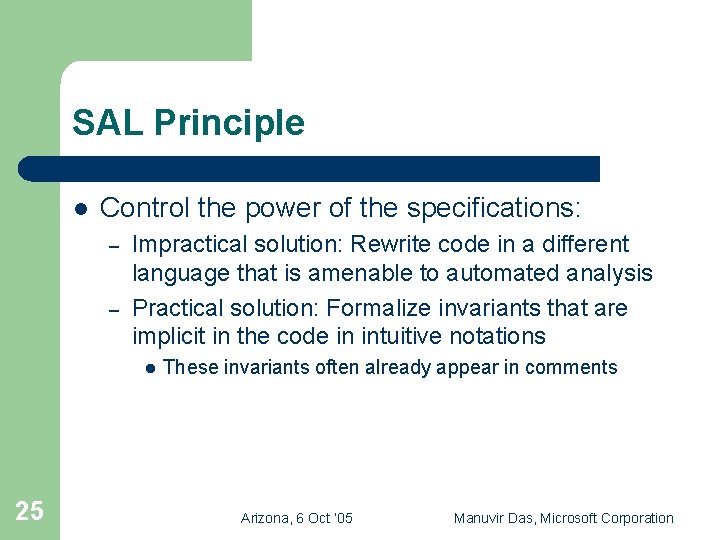

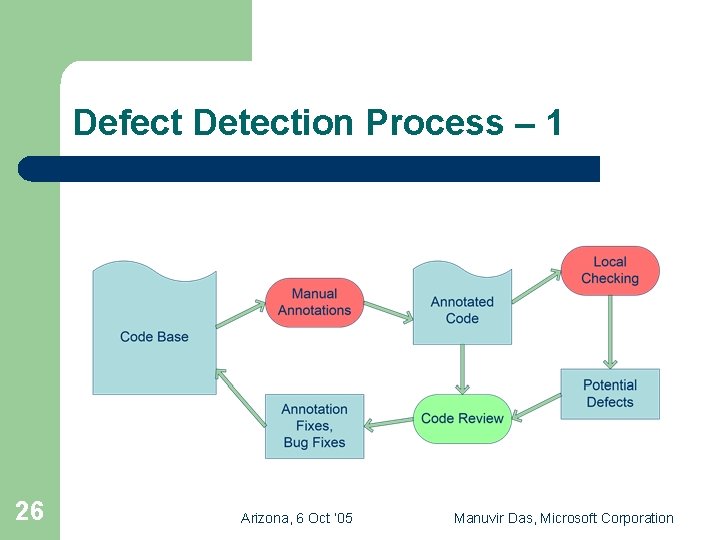

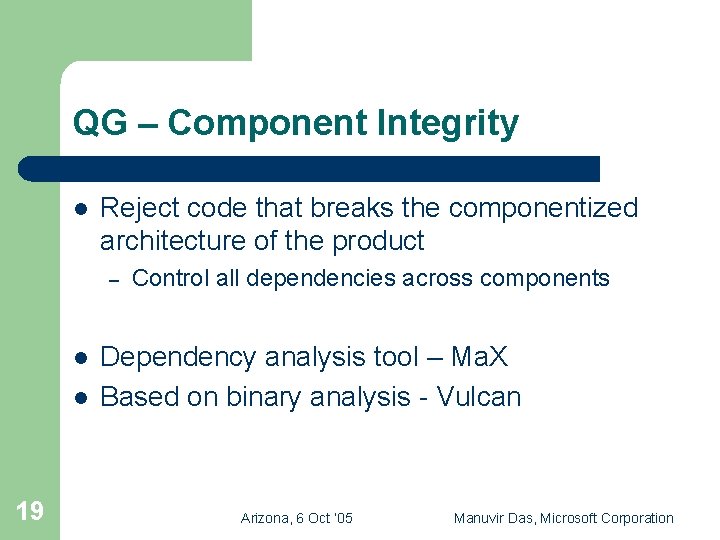

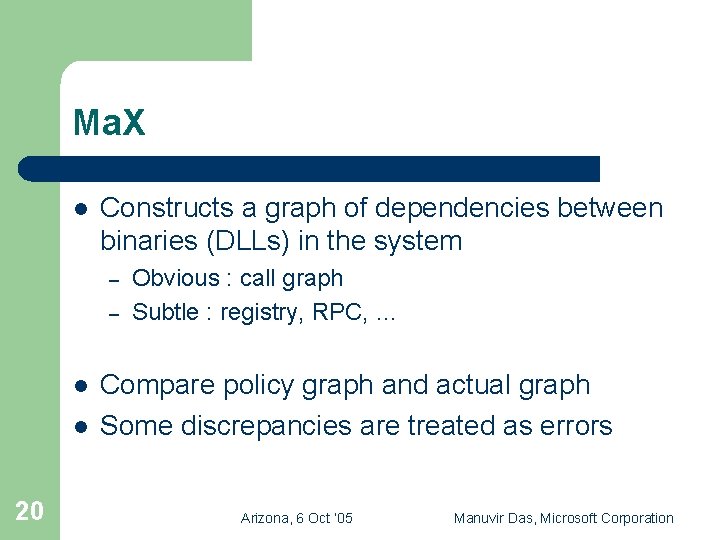

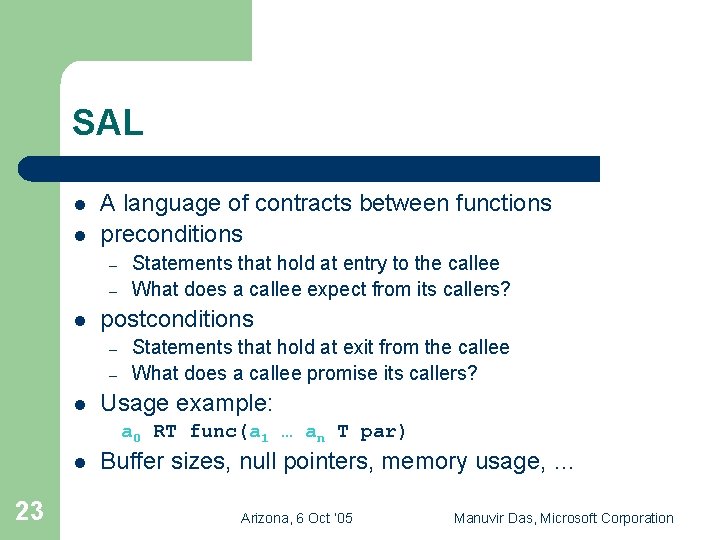

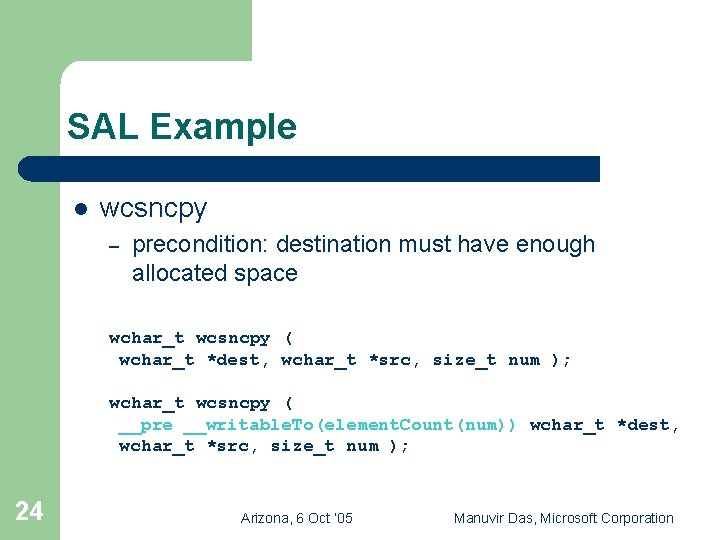

Bootstrap the process

- Combine global and local analysis:
  - Weak global analysis to infer (potentially inaccurate) interface annotations – SALinfer
  - Strong local analysis to identify incorrect code and/or annotations – espX

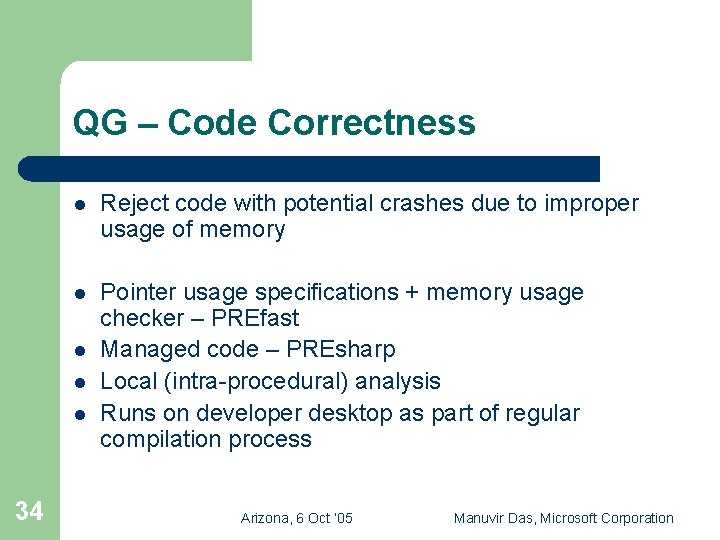

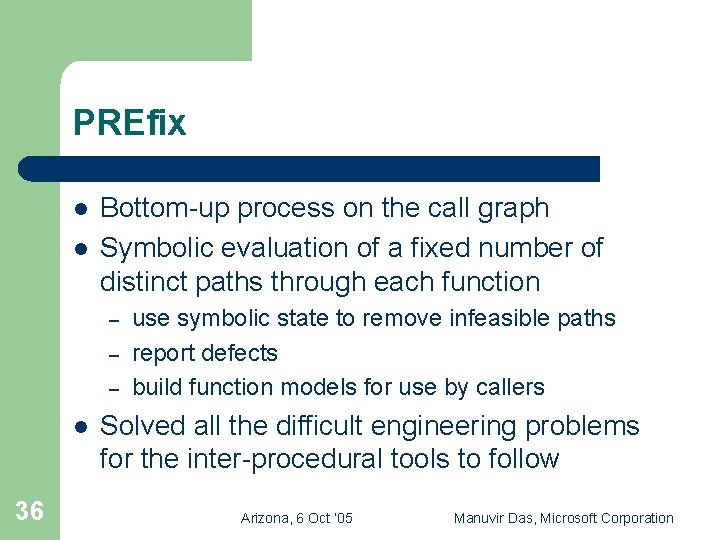

Defect Detection Process – 2

![SALinfer example: annotation inference on work/wrap/zero](https://slidetodoc.com/presentation_image_h2/43d3f43c54d7d5baf0ff8f8cc81c5d36/image-32.jpg)

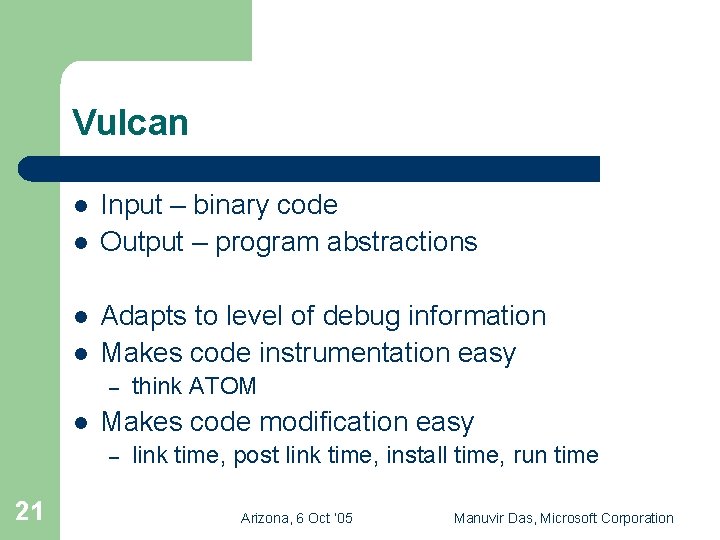

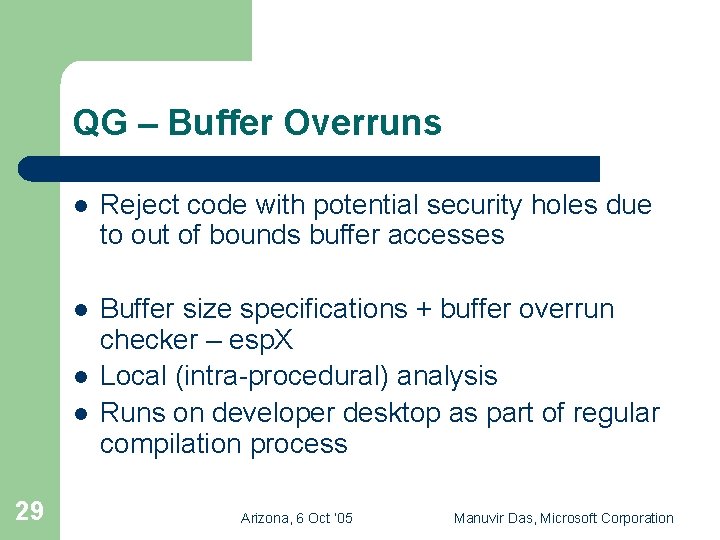

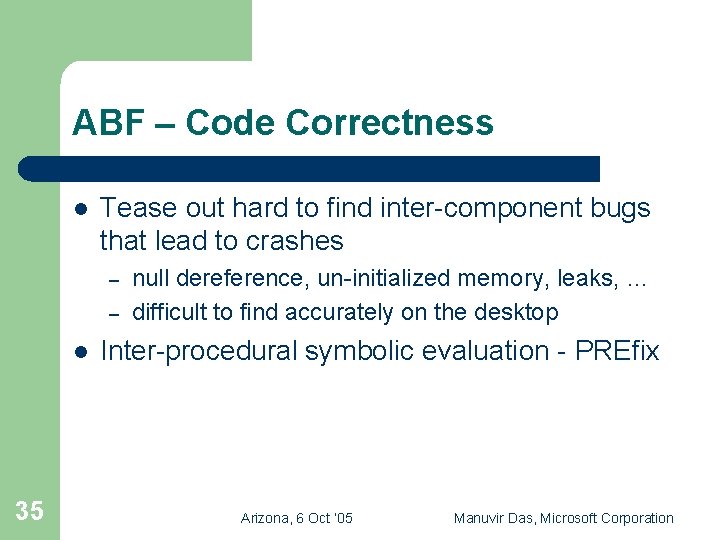

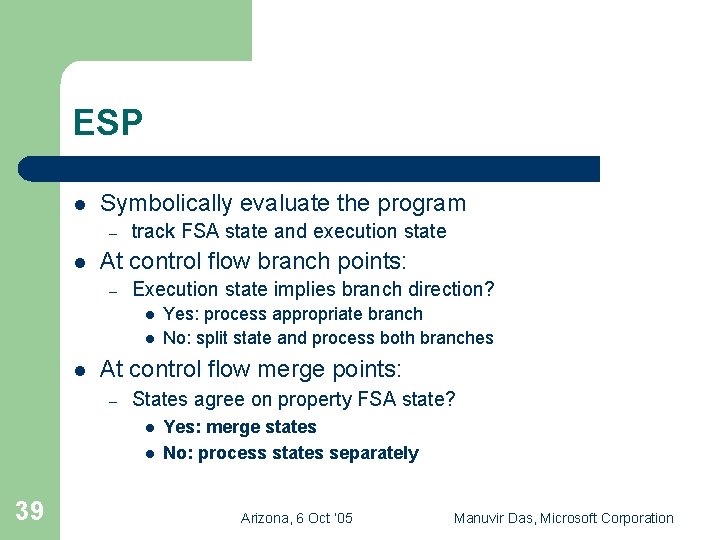

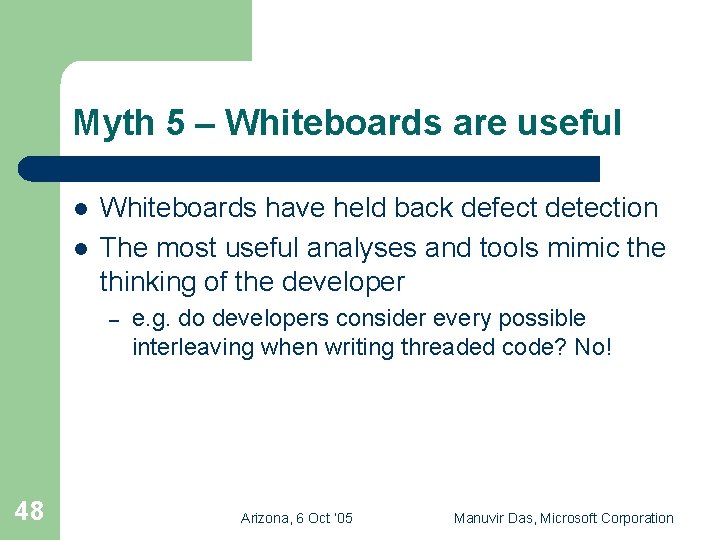

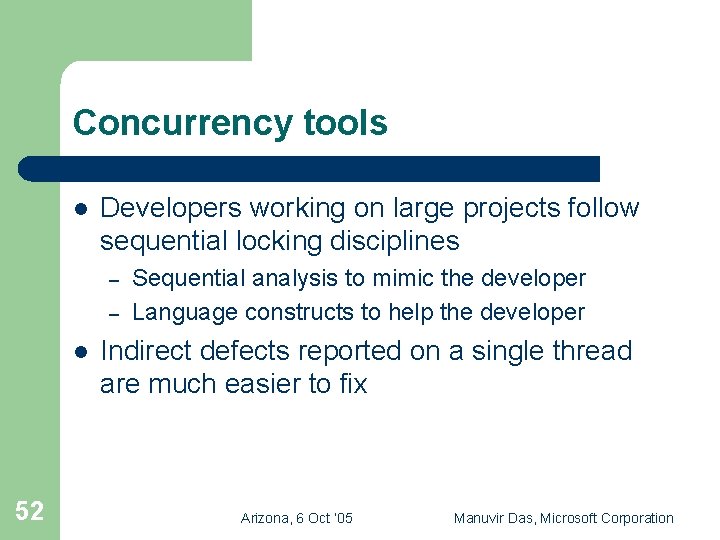

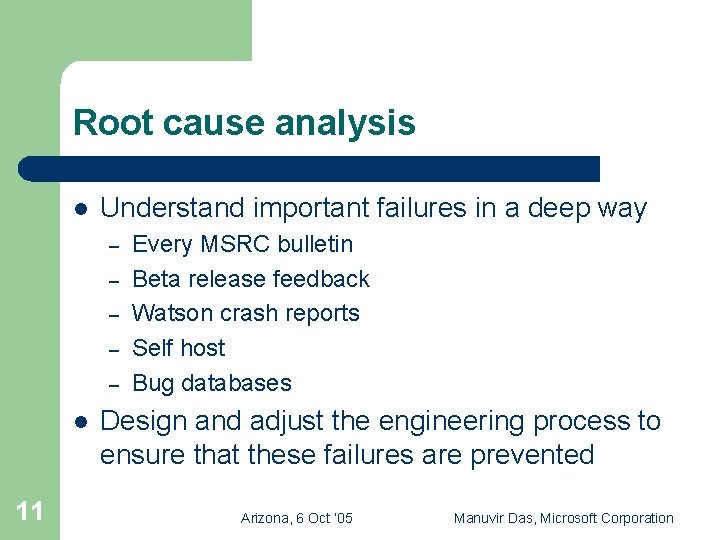

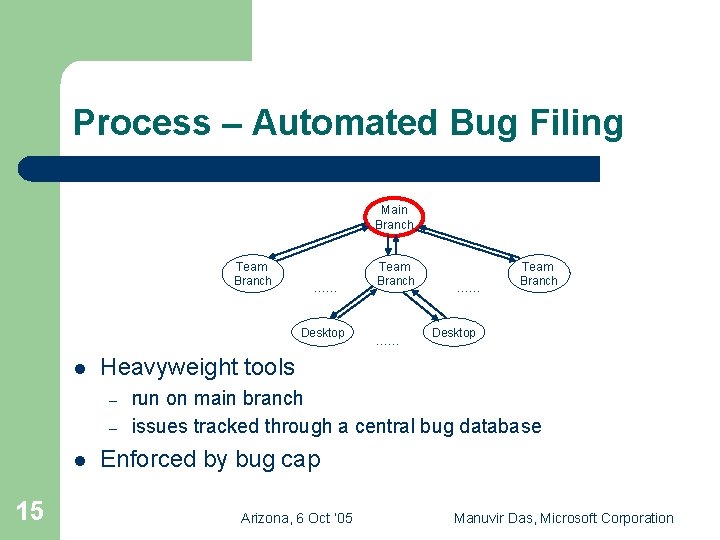

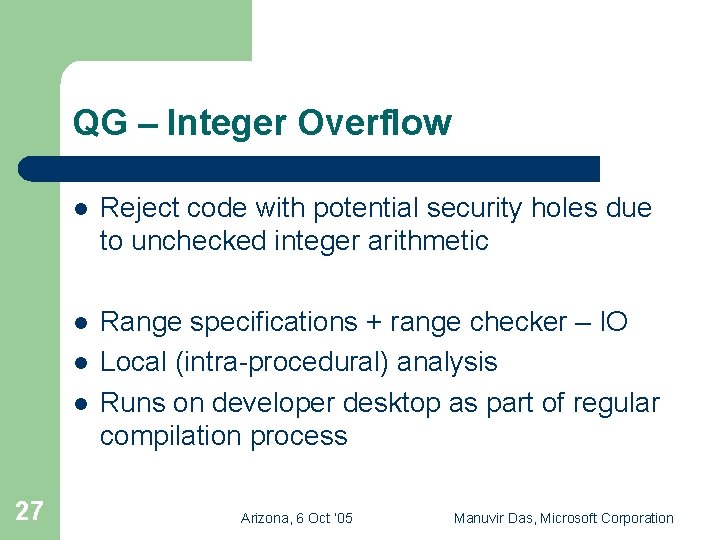

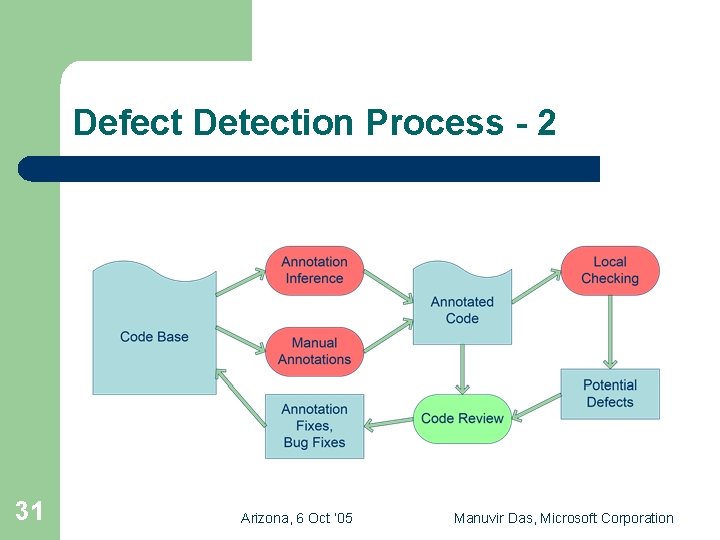

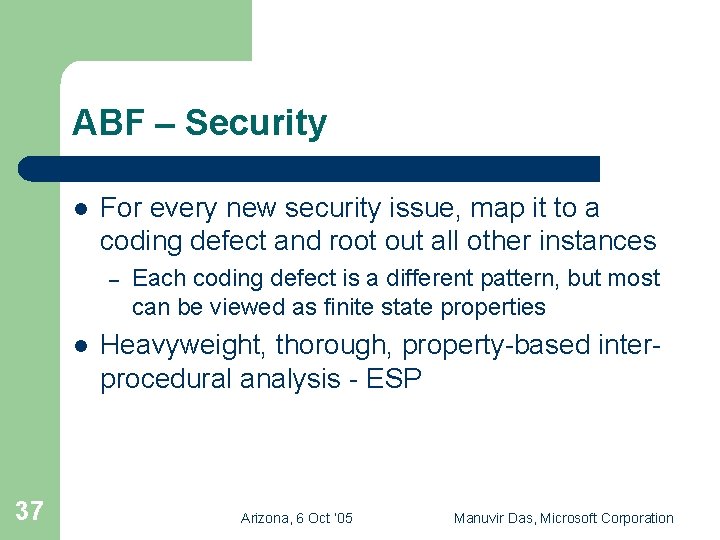

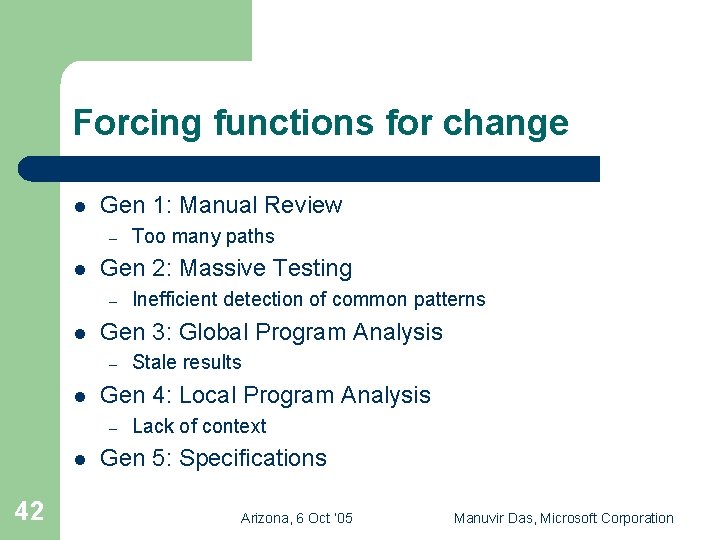

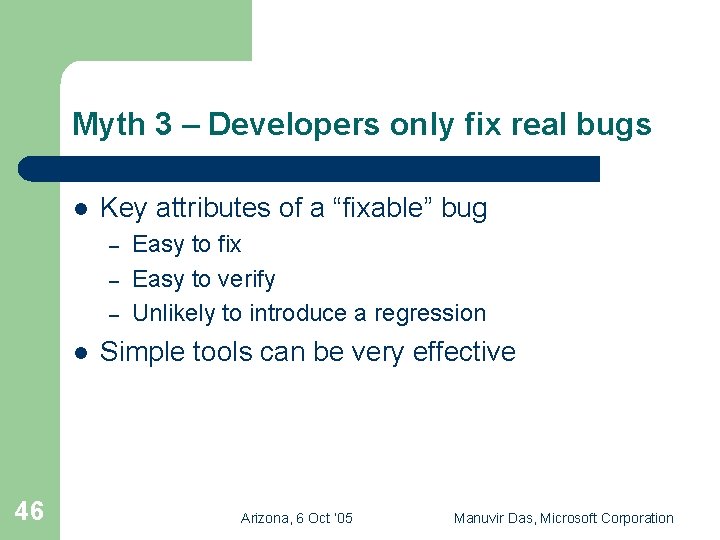

SALinfer

- `void work() { int elements[200]; wrap(elements, 200); }`
- `void wrap(pre elementCount(len) int *buf, int len) { int *buf2 = buf; int len2 = len; zero(buf2, len2); }`
- `void zero(pre elementCount(len) int *buf, int len) { int i; for (i = 0; i <= len; i++) buf[i] = 0; }`

Track flow of values through the code:

1. Finds stack buffer
2. Adds annotation
3. Finds assignments
4. Adds annotation

![espX example: building and solving constraints on work/wrap/zero](https://slidetodoc.com/presentation_image_h2/43d3f43c54d7d5baf0ff8f8cc81c5d36/image-33.jpg)

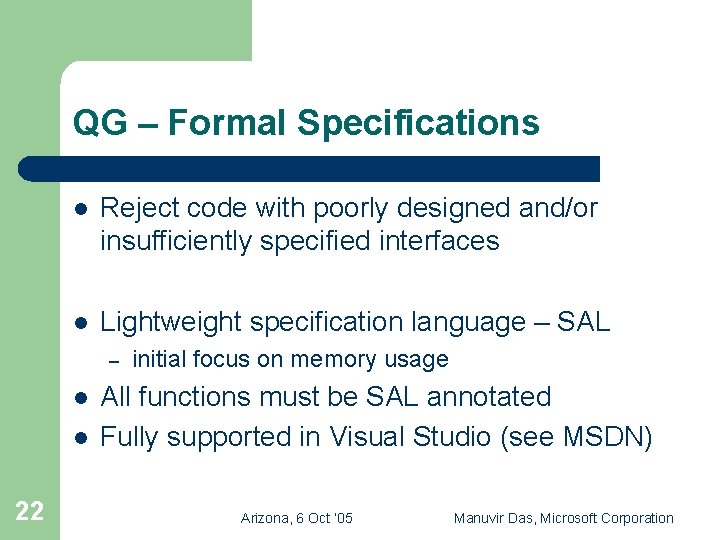

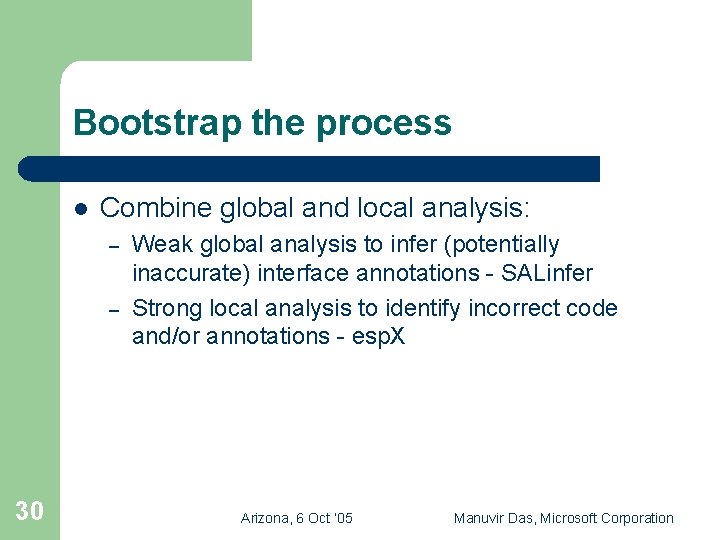

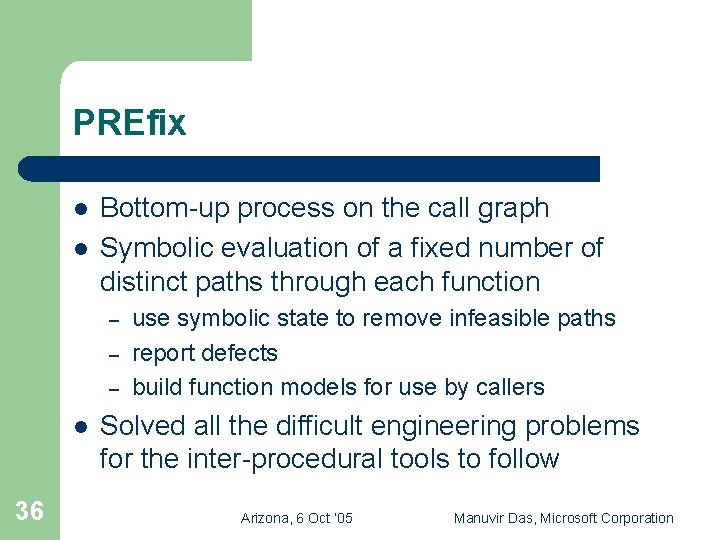

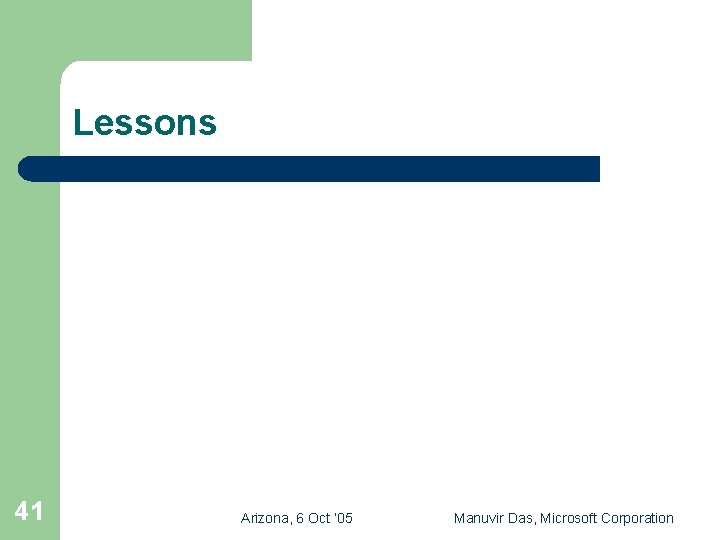

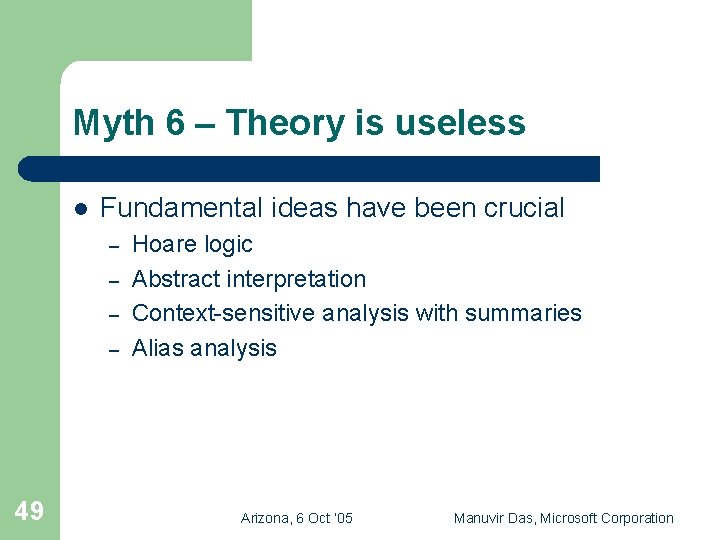

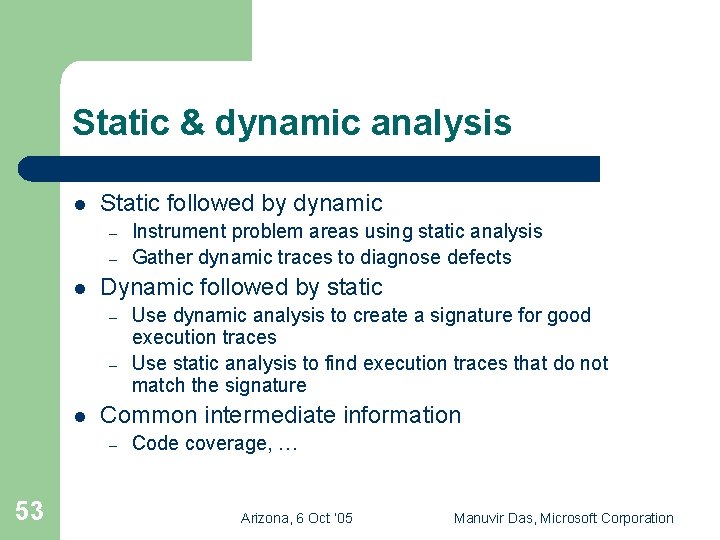

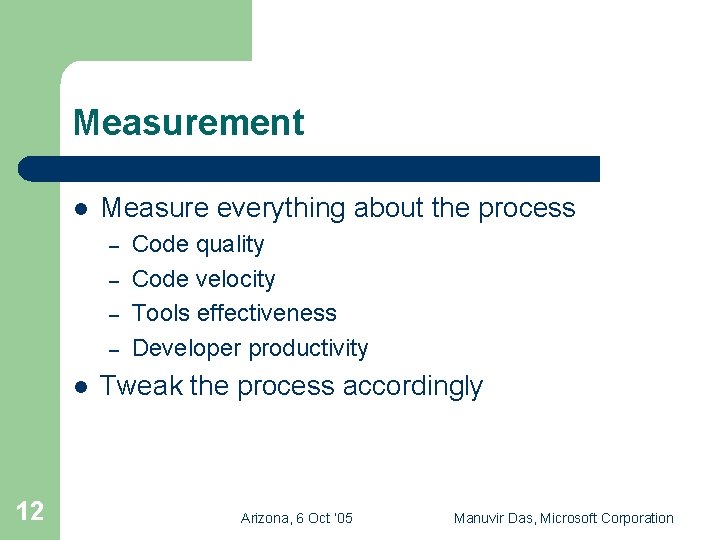

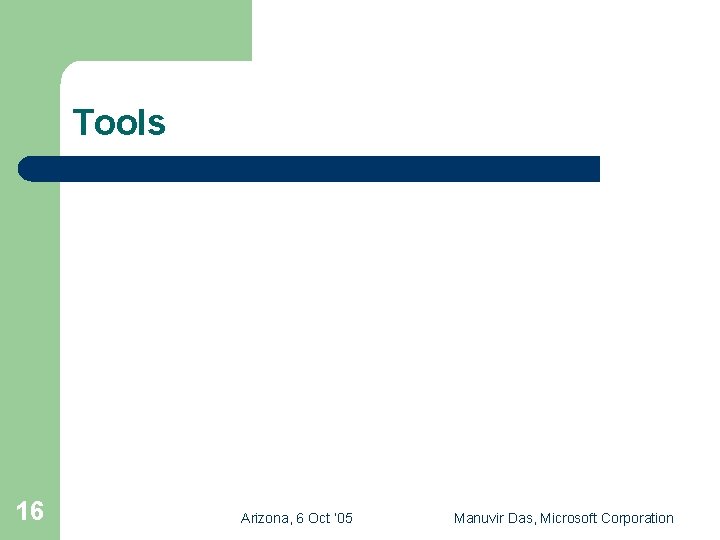

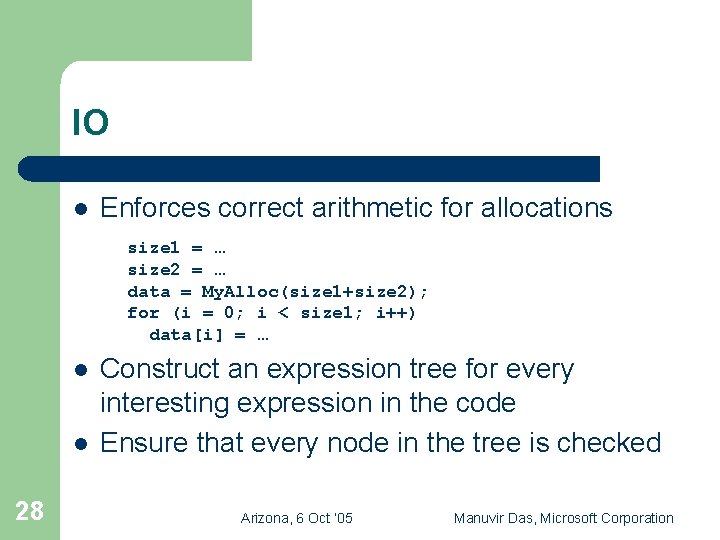

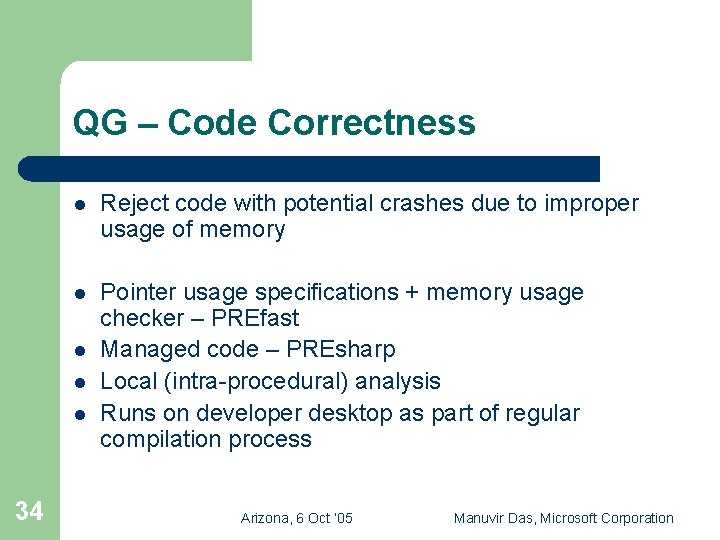

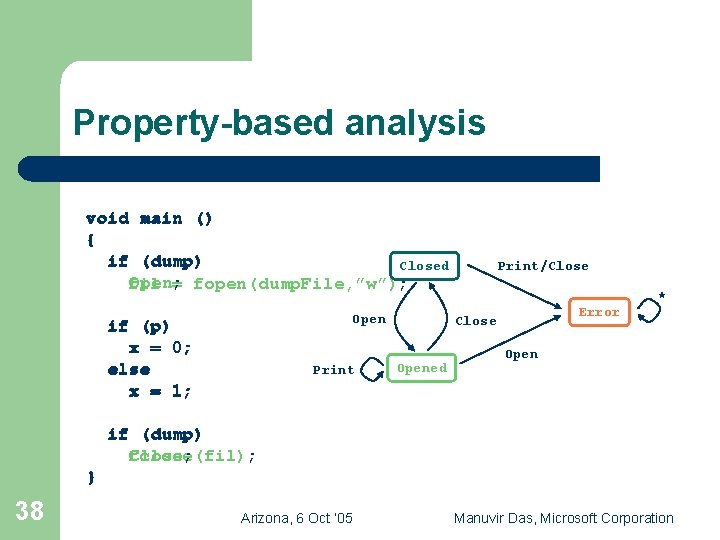

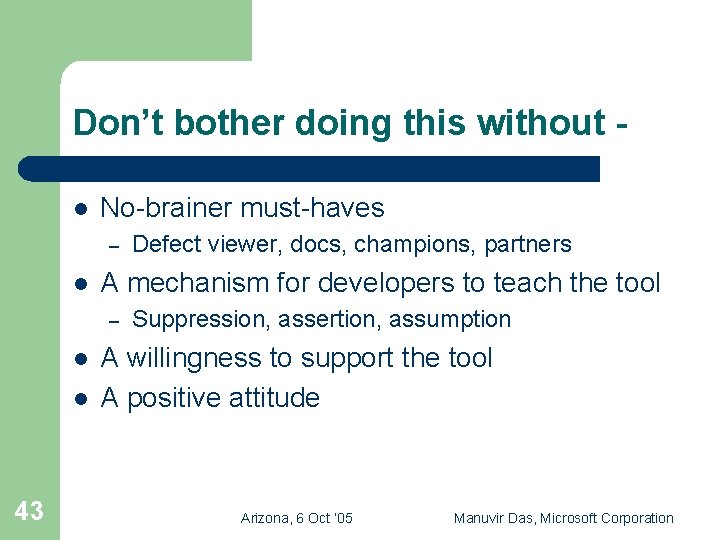

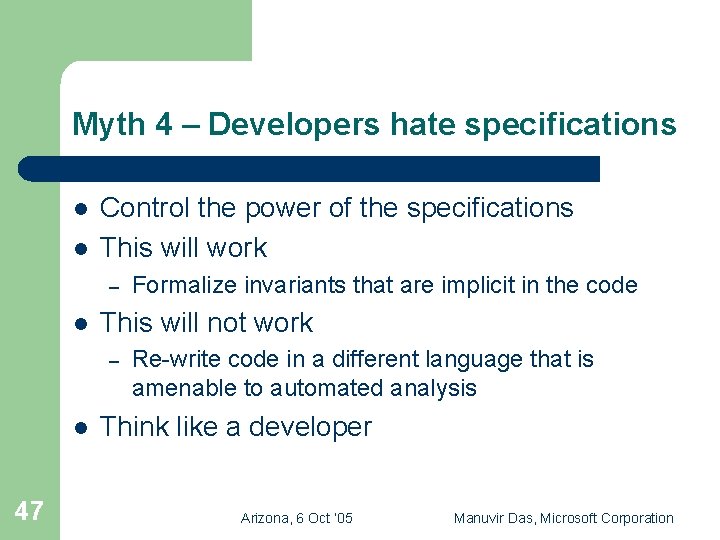

espX

- `void work() { int elements[200]; wrap(elements, 200); }`
- `void wrap(pre elementCount(len) int *buf, int len) { int *buf2 = buf; int len2 = len; zero(buf2, len2); }`
- `void zero(pre elementCount(len) int *buf, int len) { int i; for (i = 0; i <= len; i++) buf[i] = 0; }`

Building and solving constraints:

1. Builds constraints
2. Verifies contract
3. Builds constraints: len = length(buf); i ≤ len
4. Finds overrun: i < length(buf)? NO!
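The contradiction espX reports (the loop allows `i ≤ len`, but the access needs `i < length(buf)`) disappears once the loop bound matches the contract. A sketch of the corrected functions, with the `pre elementCount(len)` annotations omitted because they need the SAL headers:

```c
/* Corrected zero(): a buffer of len elements has valid indices 0 .. len-1,
   so the loop must stop at i < len (the slide's version used i <= len). */
void zero(int *buf, int len)
{
    int i;
    for (i = 0; i < len; i++)
        buf[i] = 0;
}

void wrap(int *buf, int len)
{
    int *buf2 = buf;   /* the assignments SALinfer follows */
    int len2 = len;
    zero(buf2, len2);
}

void work(void)
{
    int elements[200];
    wrap(elements, 200);
}
```

With this bound, the constraint built in step 3 becomes `i < len`, which together with `len = length(buf)` implies the required `i < length(buf)`.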

QG – Code Correctness

- Reject code with potential crashes due to improper usage of memory
- Pointer usage specifications + memory usage checker – PREfast
- Managed code – PREsharp
- Local (intra-procedural) analysis
- Runs on developer desktop as part of regular compilation process

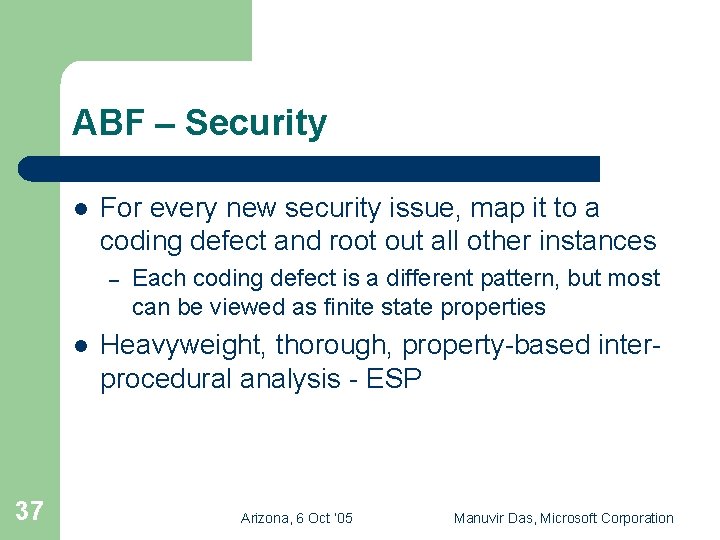

ABF – Code Correctness

- Tease out hard-to-find inter-component bugs that lead to crashes
  - null dereference, uninitialized memory, leaks, …
  - difficult to find accurately on the desktop
- Inter-procedural symbolic evaluation – PREfix

PREfix

- Bottom-up process on the call graph
- Symbolic evaluation of a fixed number of distinct paths through each function
  - use symbolic state to remove infeasible paths
  - report defects
  - build function models for use by callers
- Solved all the difficult engineering problems for the inter-procedural tools to follow
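Processing the call graph bottom-up means each function is analyzed after its callees, so their models are ready when the caller's paths are simulated. One common way to obtain that order is a post-order depth-first traversal. The sketch below assumes a hypothetical three-function graph borrowed from the `work`/`wrap`/`zero` example and leaves the symbolic evaluation itself as a comment. With recursion (cycles in the call graph) no order can put every callee first; how PREfix handles cycles is not covered on these slides.

```c
#include <stdbool.h>

/* Hypothetical call graph: calls[f][g] is true when function f calls g. */
enum { WORK, WRAP, ZERO, NUM_FUNCS };
static const bool calls[NUM_FUNCS][NUM_FUNCS] = {
    [WORK] = { [WRAP] = true },
    [WRAP] = { [ZERO] = true },
};

static bool visited[NUM_FUNCS];

/* Post-order DFS: f is appended only after all of its callees, so every
   callee's model would exist before f is analyzed. */
static void visit(int f, int order[], int *count)
{
    visited[f] = true;
    for (int g = 0; g < NUM_FUNCS; g++)
        if (calls[f][g] && !visited[g])
            visit(g, order, count);
    /* A bottom-up analyzer would simulate f here and record its model. */
    order[(*count)++] = f;
}

/* Fills order[] with a bottom-up order and returns the number of entries. */
int bottom_up_order(int order[NUM_FUNCS])
{
    int count = 0;
    for (int f = 0; f < NUM_FUNCS; f++)
        visited[f] = false;
    for (int f = 0; f < NUM_FUNCS; f++)
        if (!visited[f])
            visit(f, order, &count);
    return count;
}
```

For this graph the order is `zero`, `wrap`, `work`: by the time `work` is evaluated, the model of `wrap` (and through it `zero`) is available at the call site.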

ABF – Security

- For every new security issue, map it to a coding defect and root out all other instances
  - Each coding defect is a different pattern, but most can be viewed as finite state properties
- Heavyweight, thorough, property-based inter-procedural analysis – ESP

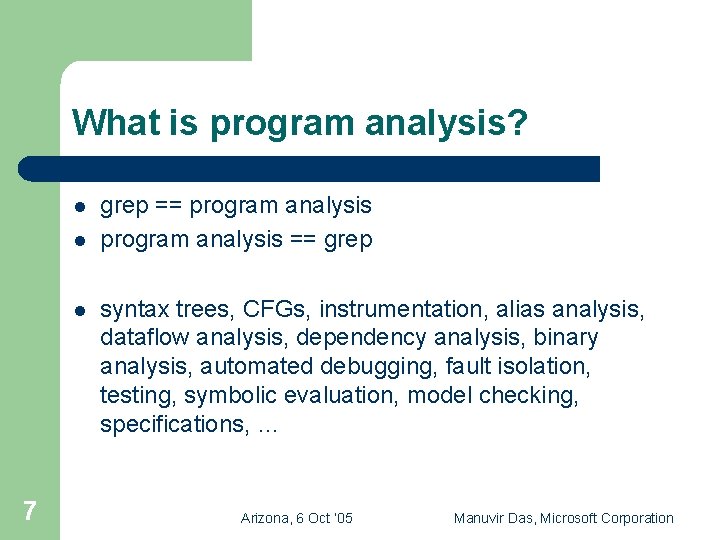

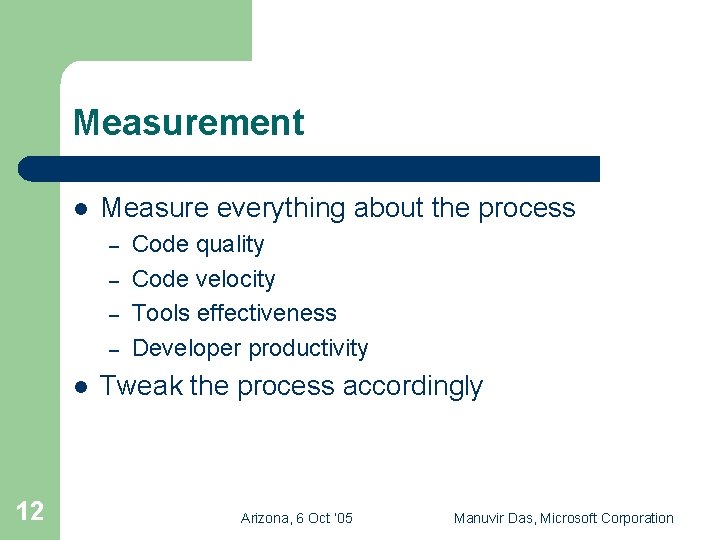

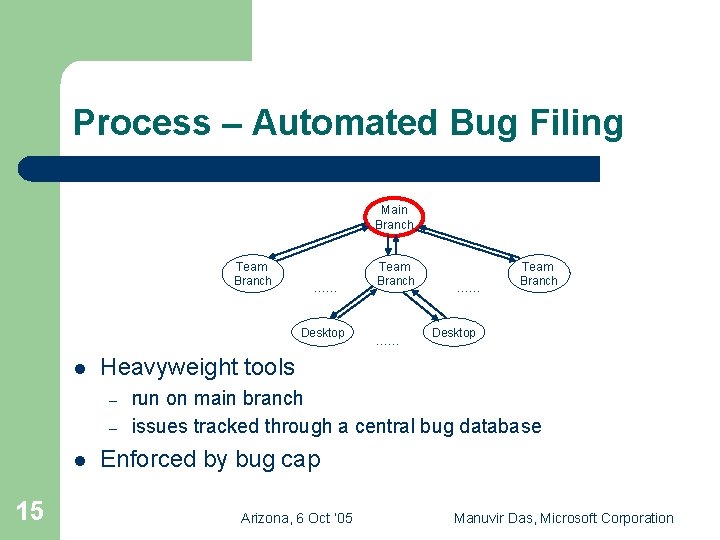

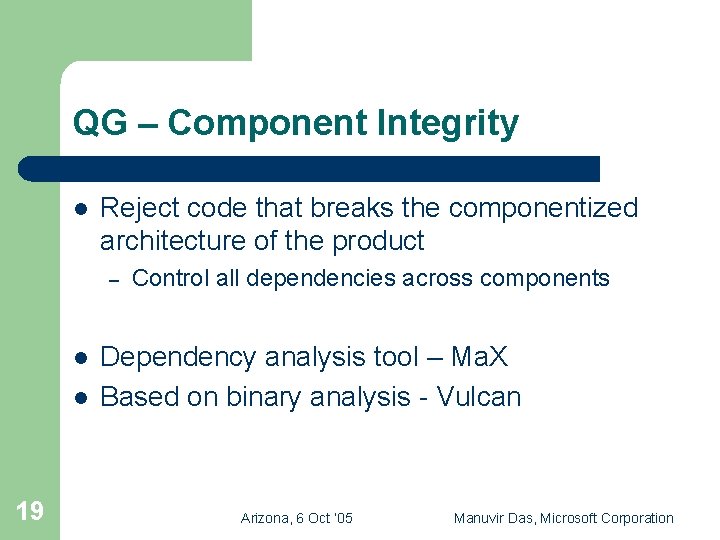

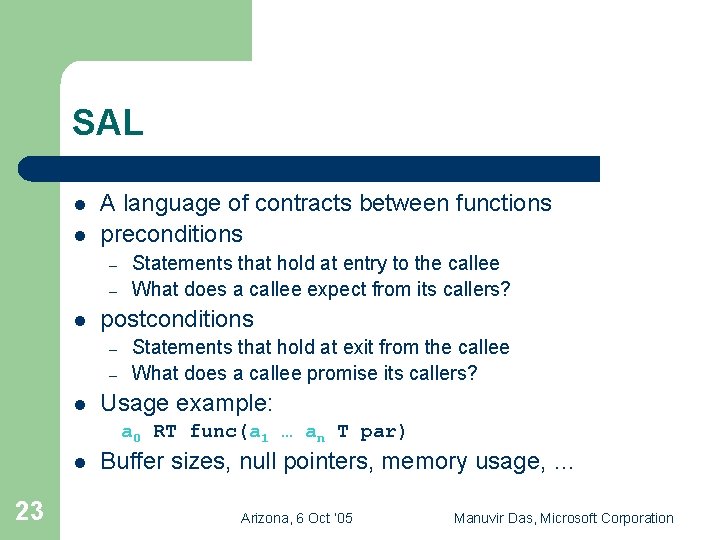

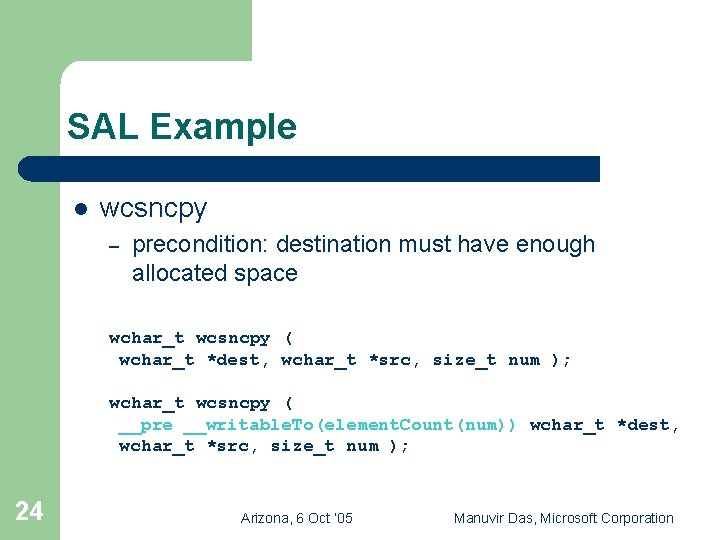

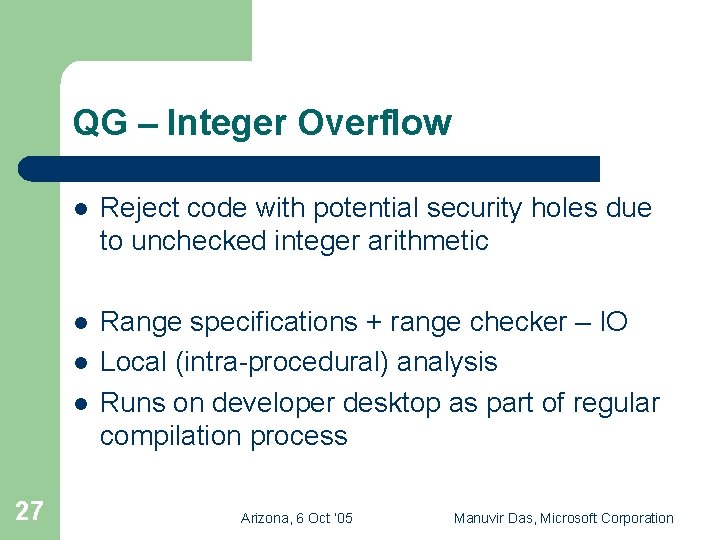

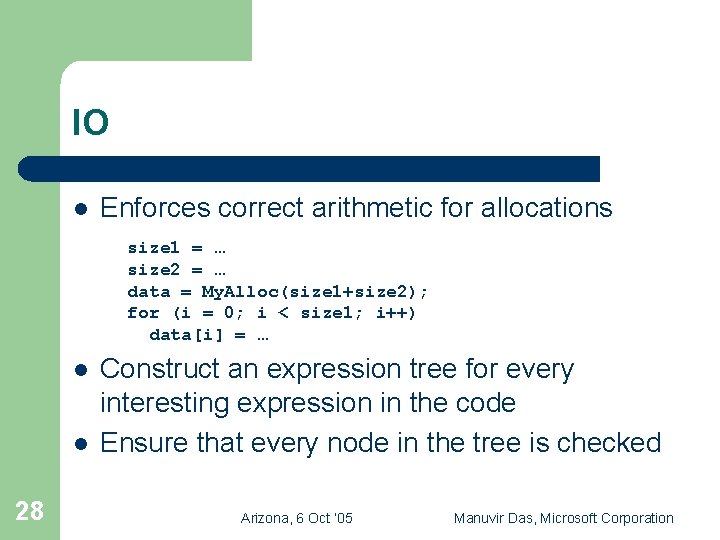

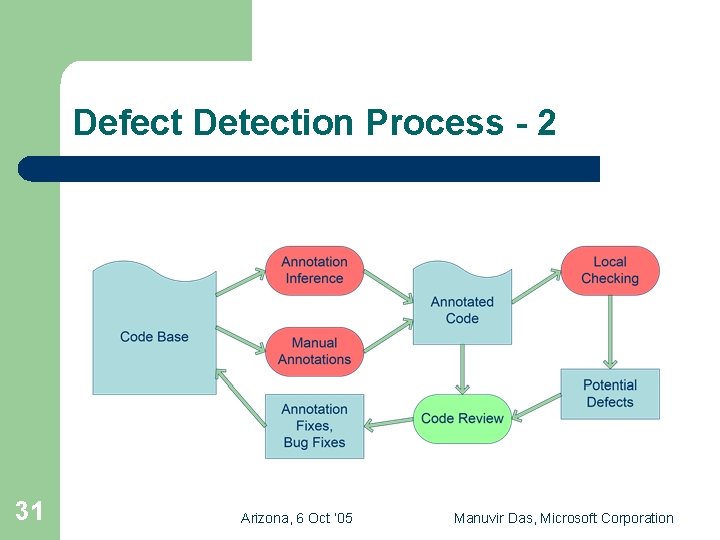

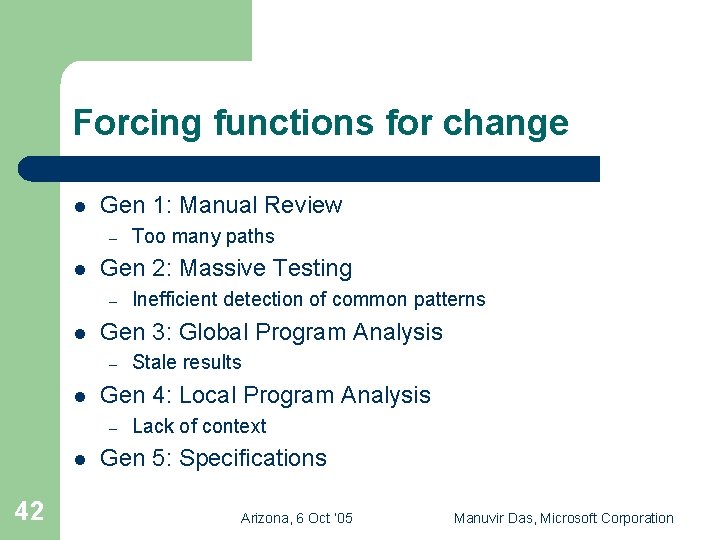

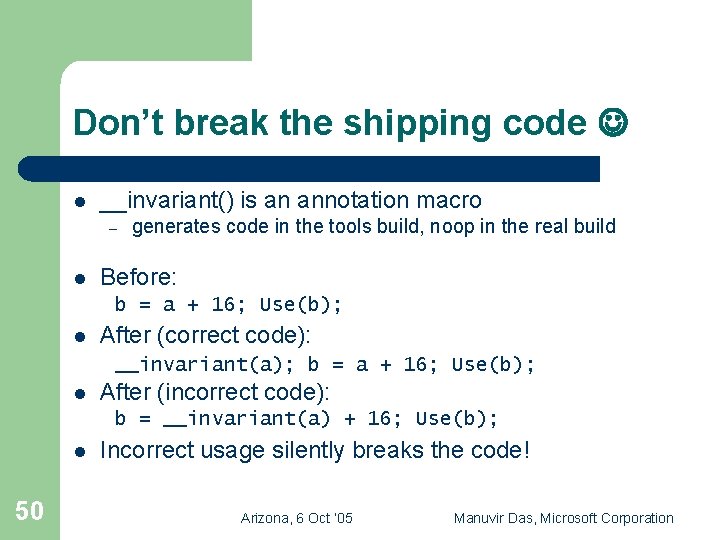

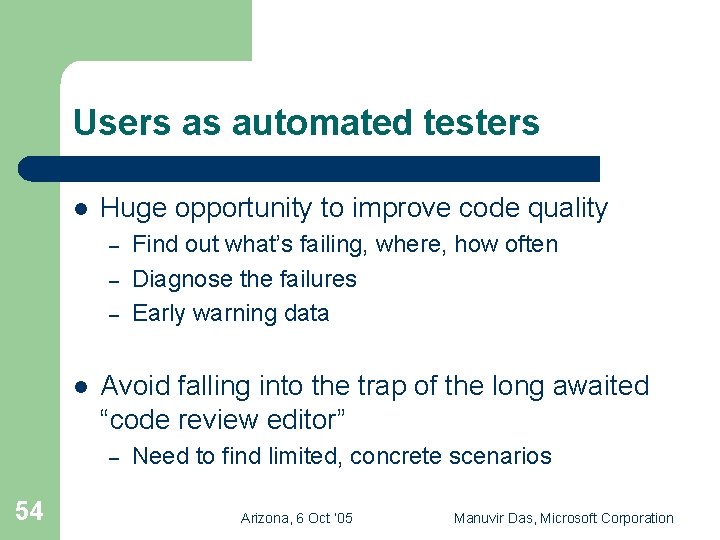

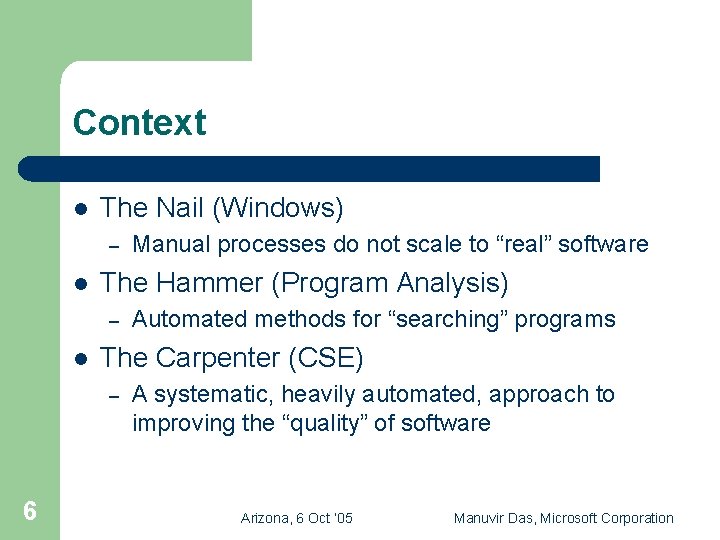

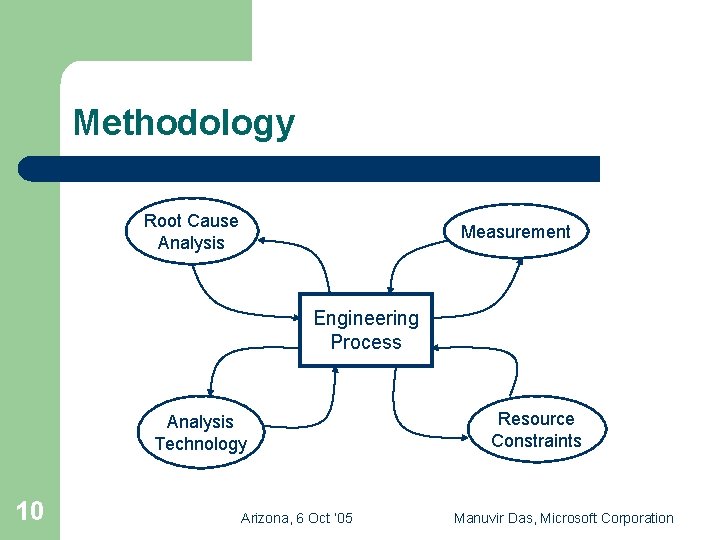

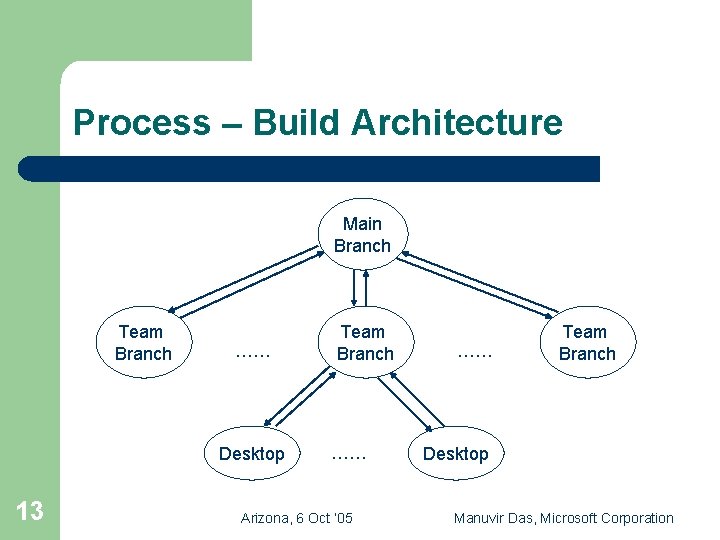

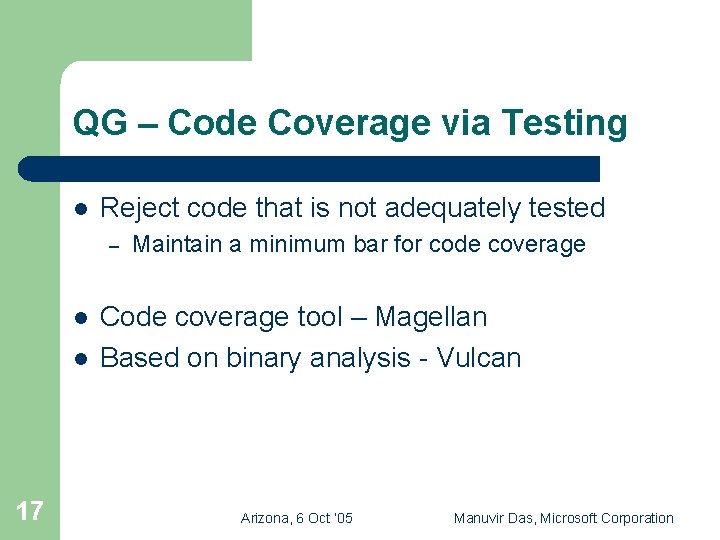

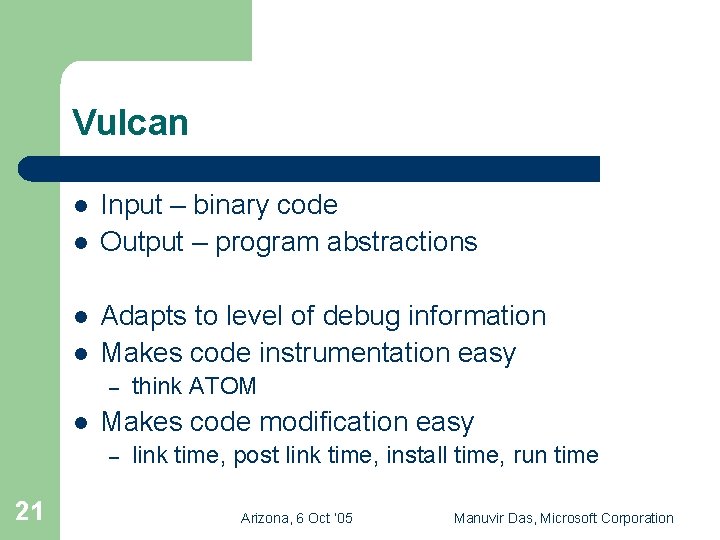

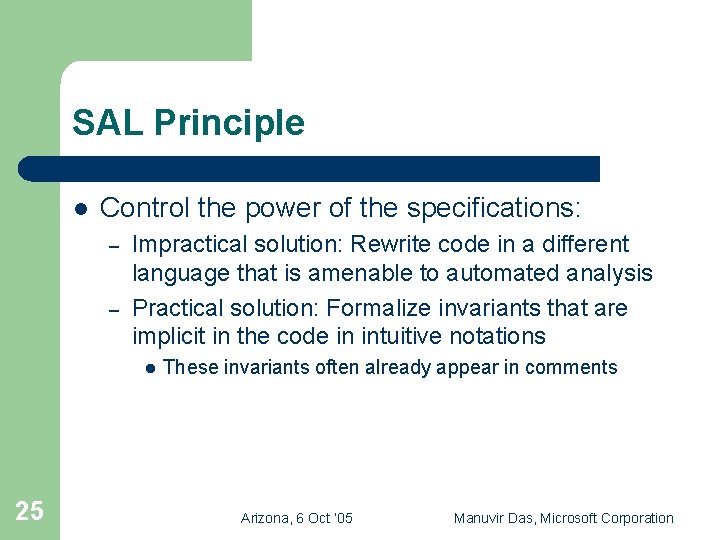

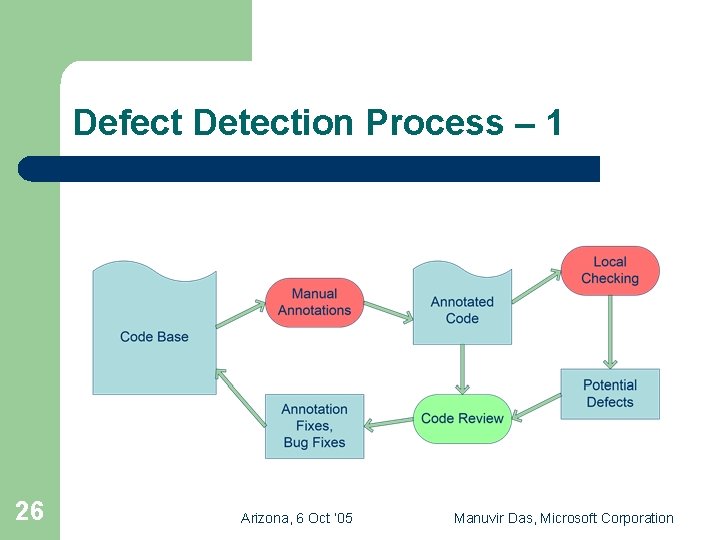

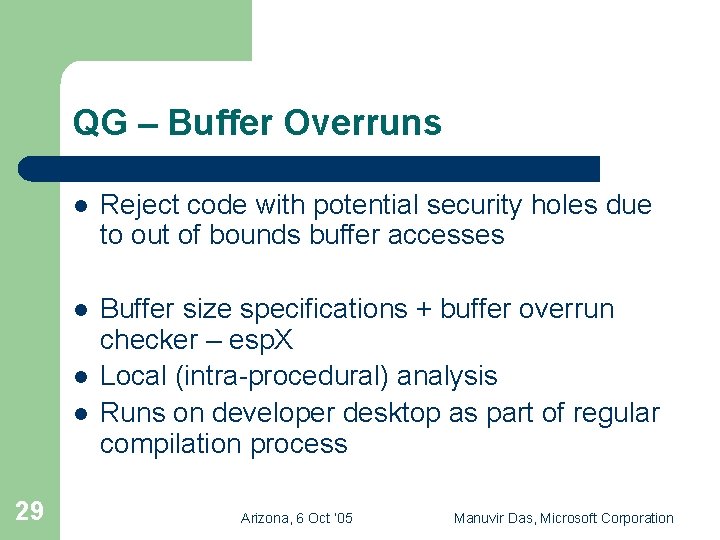

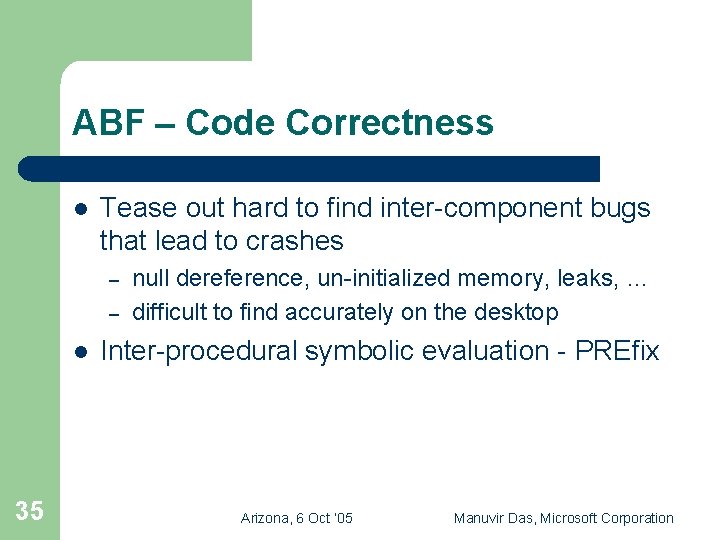

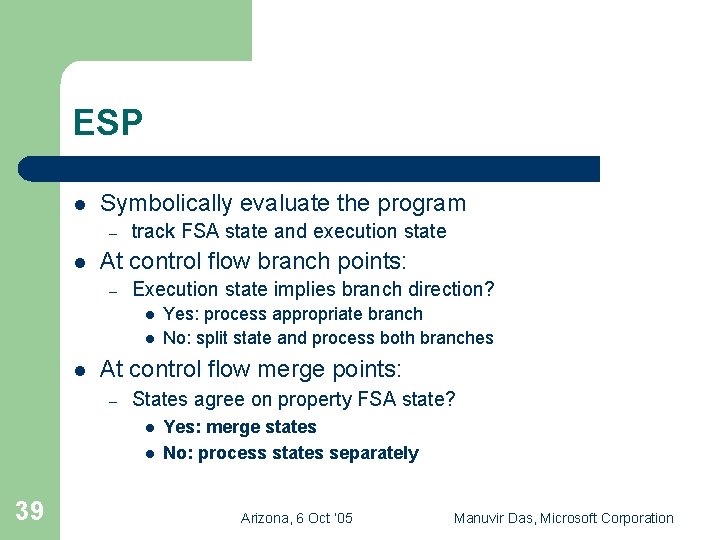

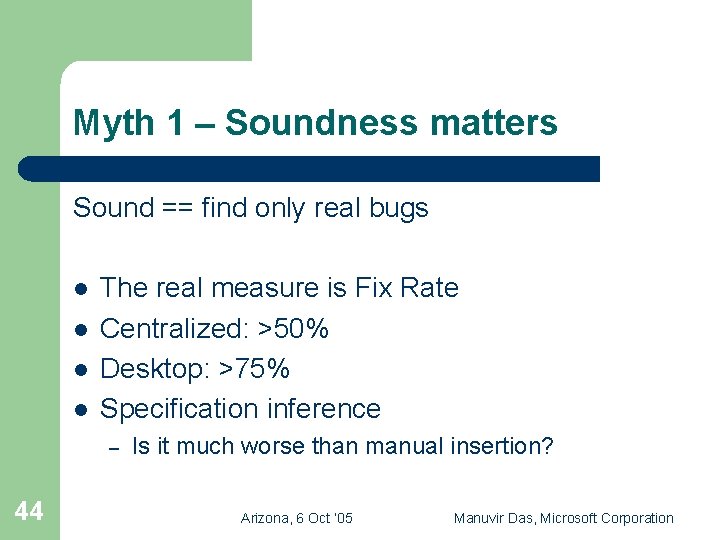

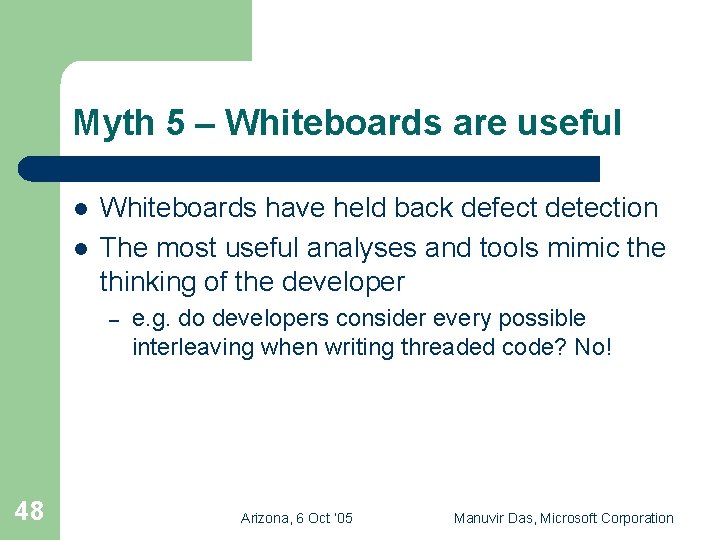

Property-based analysis

`void main () { if (dump) fil = fopen(dumpFile, "w"); if (p) x = 0; else x = 1; if (dump) fclose(fil); }`

(Diagram: the file-usage property as an FSA with states Closed, Opened and Error and transitions labelled Open, Close, Print/Close and *; the `fopen` call is marked Open and the `fclose` call is marked Close.)
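The FSA on this slide can be written as a transition function. The edge set below is one reading of the diagram: Open takes Closed to Opened, Close takes Opened back to Closed, Print or Close on a closed file and a second Open go to Error, and Error absorbs every event (*). The Print self-loop on Opened is an assumption, and `step`, `run` and `FileEvent` are hypothetical names.

```c
/* Property states and events (one reading of the slide's diagram). */
typedef enum { CLOSED, OPENED, ERROR } FileState;
typedef enum { EV_OPEN, EV_PRINT, EV_CLOSE } FileEvent;

FileState step(FileState s, FileEvent e)
{
    switch (s) {
    case CLOSED:
        /* Only Open is legal on a closed file. */
        return e == EV_OPEN ? OPENED : ERROR;
    case OPENED:
        if (e == EV_CLOSE) return CLOSED;
        if (e == EV_PRINT) return OPENED;   /* assumed self-loop */
        return ERROR;                       /* a second Open */
    default:
        return ERROR;                       /* Error absorbs every event */
    }
}

/* Runs a sequence of events starting from Closed; returns the final state. */
FileState run(const FileEvent *events, int n)
{
    FileState s = CLOSED;
    for (int i = 0; i < n; i++)
        s = step(s, events[i]);
    return s;
}
```

A defect is any path on which the program's events drive this machine into Error; the property checker's job is to decide whether such a path is feasible.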

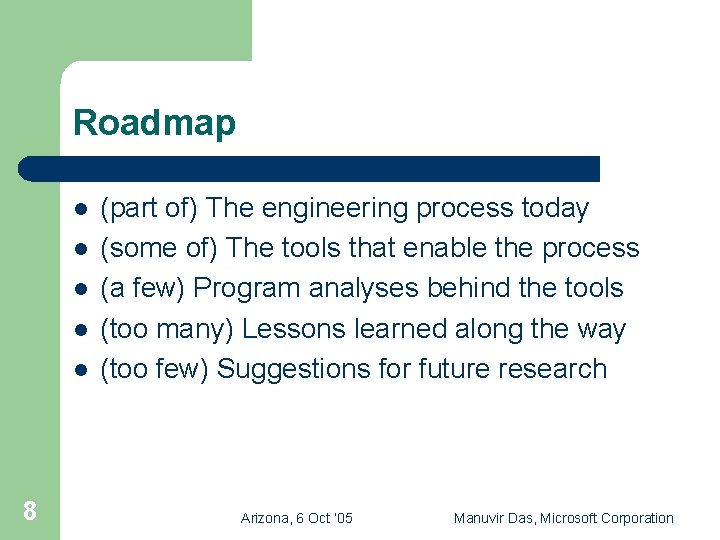

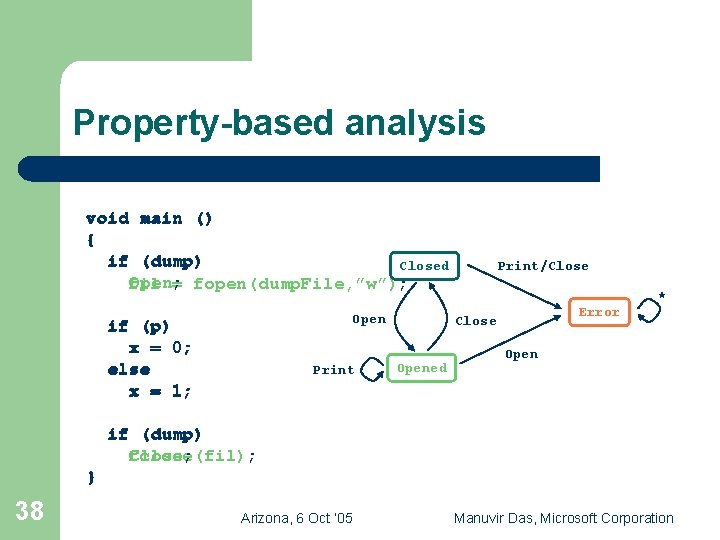

ESP

- Symbolically evaluate the program
  - track FSA state and execution state
- At control flow branch points:
  - Execution state implies branch direction?
    - Yes: process appropriate branch
    - No: split state and process both branches
- At control flow merge points:
  - States agree on property FSA state?
    - Yes: merge states
    - No: process states separately
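The branch and merge rules can be exercised on the program from the property-based analysis slide. A minimal sketch under simplifying assumptions: only the property state and the two branch conditions (`dump`, `p`) are tracked, each as unknown (-1), false (0) or true (1); the slide's trace records `x` where this sketch records `p`; each `if` is immediately followed by its join, so merging happens right after splitting; and the FSA is reduced to Open/Close events. All names are hypothetical.

```c
/* Property states and the events the sketch needs. */
typedef enum { CLOSED, OPENED, ERROR } Fsa;
enum { EV_NONE, EV_OPEN, EV_CLOSE };
enum { FACT_DUMP, FACT_P };

/* Symbolic state: property state plus branch facts (-1 unknown, 0, 1). */
typedef struct { Fsa fsa; int dump; int p; } SymState;

#define MAX_STATES 8

static int *fact(SymState *st, int which)
{
    return which == FACT_DUMP ? &st->dump : &st->p;
}

static Fsa apply(Fsa s, int ev)
{
    if (ev == EV_NONE) return s;
    if (s == CLOSED && ev == EV_OPEN) return OPENED;
    if (s == OPENED && ev == EV_CLOSE) return CLOSED;
    return ERROR;
}

/* Merge point: states that agree on the FSA state are joined, keeping only
   the facts they agree on; states with different FSA states stay apart. */
static int merge(SymState s[], int n)
{
    int out = 0;
    for (int i = 0; i < n; i++) {
        int j = 0;
        while (j < out && s[j].fsa != s[i].fsa)
            j++;
        if (j == out) {
            s[out++] = s[i];
        } else {
            if (s[j].dump != s[i].dump) s[j].dump = -1;
            if (s[j].p != s[i].p) s[j].p = -1;
        }
    }
    return out;
}

/* Branch point: if a state's facts decide the condition, follow only that
   arm; otherwise split the state.  ev is applied on the true arm. */
static int branch(SymState s[], int n, int which, int ev)
{
    SymState next[MAX_STATES];
    int m = 0;
    for (int i = 0; i < n; i++)
        for (int v = 0; v <= 1; v++) {
            int known = *fact(&s[i], which);
            if (known != -1 && known != v)
                continue;                   /* arm ruled out by execution state */
            SymState t = s[i];
            *fact(&t, which) = v;
            if (v == 1)
                t.fsa = apply(t.fsa, ev);
            next[m++] = t;
        }
    for (int i = 0; i < m; i++)
        s[i] = next[i];
    return merge(s, m);                     /* join that follows each if */
}

/* Analyzes the slide's main(); returns the number of states at exit. */
int analyze_example(SymState s[MAX_STATES])
{
    s[0] = (SymState){ CLOSED, -1, -1 };   /* entry: [Closed] */
    int n = 1;
    n = branch(s, n, FACT_DUMP, EV_OPEN);   /* if (dump) fil = fopen(...); */
    n = branch(s, n, FACT_P, EV_NONE);      /* if (p) x = 0; else x = 1;   */
    n = branch(s, n, FACT_DUMP, EV_CLOSE);  /* if (dump) fclose(fil);      */
    return n;
}
```

The run ends in a single Closed state. After the `p` branch the two Opened states merge (they agree on the FSA state), but Opened and Closed stay apart, so the second `if (dump)` is decided by the fact recorded at the first. An analysis that kept only the set of FSA states, without the `dump` fact, would apply Close to the Closed state and report a spurious error.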

![ESP example trace](https://slidetodoc.com/presentation_image_h2/43d3f43c54d7d5baf0ff8f8cc81c5d36/image-40.jpg)

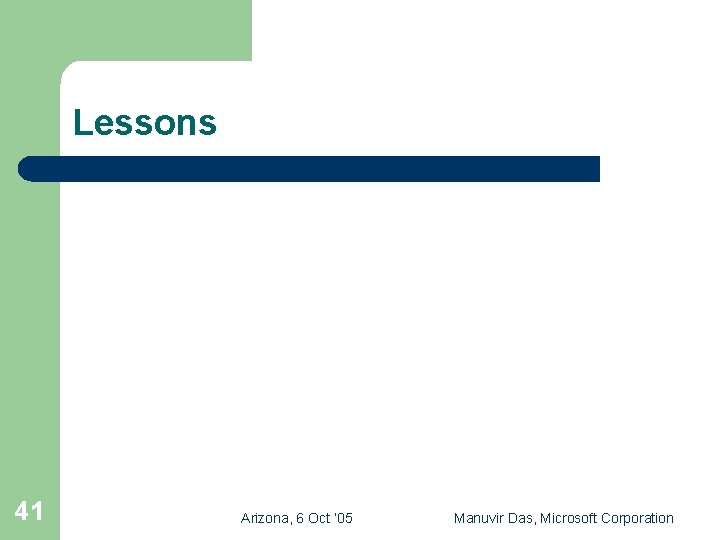

Example

(Diagram: ESP trace of the program above.)

- entry: [Closed]
- branch on dump: T → Open → [Opened|dump=T]; F → [Closed|dump=F]
- branch on p: T → x = 0 → [Opened|dump=T, x=0]; F → x = 1 → [Opened|dump=T, p=F, x=1]; merged → [Opened|dump=T]
- branch on dump: T → Close → [Closed|dump=T]; F → [Closed|dump=F]
- exit

Lessons

Forcing functions for change

- Gen 1: Manual Review
  - Too many paths
- Gen 2: Massive Testing
  - Inefficient detection of common patterns
- Gen 3: Global Program Analysis
  - Stale results
- Gen 4: Local Program Analysis
  - Lack of context
- Gen 5: Specifications

Don’t bother doing this without

- No-brainer must-haves
  - Defect viewer, docs, champions, partners
- A mechanism for developers to teach the tool
  - Suppression, assertion, assumption
- A willingness to support the tool
- A positive attitude

Myth 1 – Soundness matters

Sound == find only real bugs

- The real measure is Fix Rate
  - Centralized: >50%
  - Desktop: >75%
- Specification inference
  - Is it much worse than manual insertion?

Myth 2 – Completeness matters

Complete == find all the bugs

- There will never be a complete analysis
  - Partial specifications
  - Missing code
- Developers want consistent analysis
  - Tools should be stable w.r.t. minor code changes
  - Systematic, thorough, tunable program analysis

Myth 3 – Developers only fix real bugs

- Key attributes of a “fixable” bug
  - Easy to fix
  - Easy to verify
  - Unlikely to introduce a regression
- Simple tools can be very effective

Myth 4 – Developers hate specifications

- Control the power of the specifications
- This will work
  - Formalize invariants that are implicit in the code
- This will not work
  - Re-write code in a different language that is amenable to automated analysis
- Think like a developer

Myth 5 – Whiteboards are useful

- Whiteboards have held back defect detection
- The most useful analyses and tools mimic the thinking of the developer
  - e.g. do developers consider every possible interleaving when writing threaded code? No!

Myth 6 – Theory is useless

- Fundamental ideas have been crucial
  - Hoare logic
  - Abstract interpretation
  - Context-sensitive analysis with summaries
  - Alias analysis

Don’t break the shipping code

- `__invariant()` is an annotation macro
  - generates code in the tools build, no-op in the real build
- Before: `b = a + 16; Use(b);`
- After (correct code): `__invariant(a); b = a + 16; Use(b);`
- After (incorrect code): `b = __invariant(a) + 16; Use(b);`
- Incorrect usage silently breaks the code!
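The failure mode is easy to reproduce with any macro that expands to nothing. A sketch, assuming a hypothetical `INVARIANT` stand-in for `__invariant` (identifiers beginning with a double underscore are reserved for the implementation), defined the way the real build would see it, as a no-op:

```c
/* Hypothetical stand-in for __invariant in the shipping build: a no-op. */
#define INVARIANT(e)

int correct_usage(int a)
{
    int b;
    INVARIANT(a);            /* expands to an empty statement */
    b = a + 16;
    return b;                /* stands in for Use(b) */
}

int incorrect_usage(int a)
{
    int b;
    b = INVARIANT(a) + 16;   /* expands to b = + 16; and a is silently dropped */
    (void)a;                 /* silence the unused-parameter warning */
    return b;
}
```

`incorrect_usage(1)` returns 16 rather than 17: after expansion, `b = + 16;` is a valid unary plus, so the compiler accepts it without complaint.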

Research directions

Concurrency tools

- Developers working on large projects follow sequential locking disciplines
  - Sequential analysis to mimic the developer
  - Language constructs to help the developer
- Indirect defects reported on a single thread are much easier to fix

Static & dynamic analysis

- Static followed by dynamic
  - Instrument problem areas using static analysis
  - Gather dynamic traces to diagnose defects
- Dynamic followed by static
  - Use dynamic analysis to create a signature for good execution traces
  - Use static analysis to find execution traces that do not match the signature
- Common intermediate information
  - Code coverage, …

Users as automated testers

- Huge opportunity to improve code quality
  - Find out what’s failing, where, how often
  - Diagnose the failures
  - Early warning data
- Avoid falling into the trap of the long awaited “code review editor”
  - Need to find limited, concrete scenarios

Evolutionary tools

- Specification-based tools evolve a language
  - Introduce a programming discipline
  - Increase the portability of legacy code
- We have tackled memory usage
  - Rinse and repeat

Summary

- Program analysis technology can make a huge impact on how software is developed
- The trick is to properly balance research on new techniques with a focus on deployment
- The Center for Software Excellence (CSE) at Microsoft is doing this (well?) today

http://www.microsoft.com/cse

http://research.microsoft.com/manuvir

© 2005 Microsoft Corporation. All rights reserved. This presentation is for informational purposes only. MICROSOFT MAKES NO WARRANTIES, EXPRESS OR IMPLIED, IN THIS SUMMARY.