Software Engineering Challenges of the H2020 DEEP-EST

- Slides: 25

Software Engineering Challenges of the H2020 DEEP-EST Modular Supercomputing Architecture

Helmut Neukirchen, University of Iceland, helmut@hi.is

The research leading to these results has received funding from the European Union Horizon 2020 – the Framework Programme for Research and Innovation (2014-2020) under Grant Agreement n° 754304.

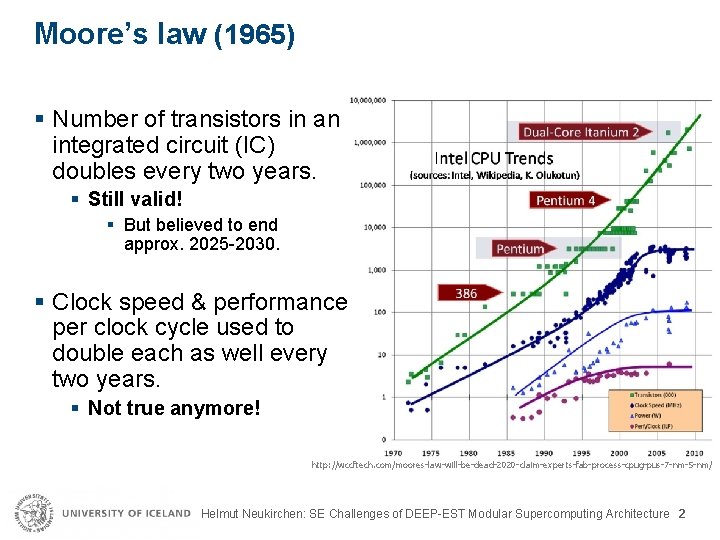

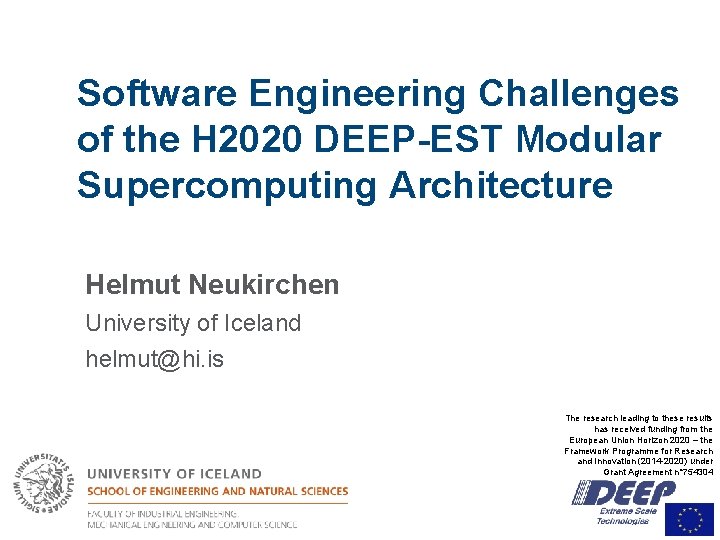

Moore’s law (1965)

- The number of transistors in an integrated circuit (IC) doubles every two years.
  - Still valid!
  - But believed to end approx. 2025-2030.
- Clock speed and performance per clock cycle used to double every two years as well.
  - Not true anymore!

http://wccftech.com/moores-law-will-be-dead-2020-claim-experts-fab-process-cpug-pus-7-nm-5-nm/

Helmut Neukirchen: SE Challenges of DEEP-EST Modular Supercomputing Architecture

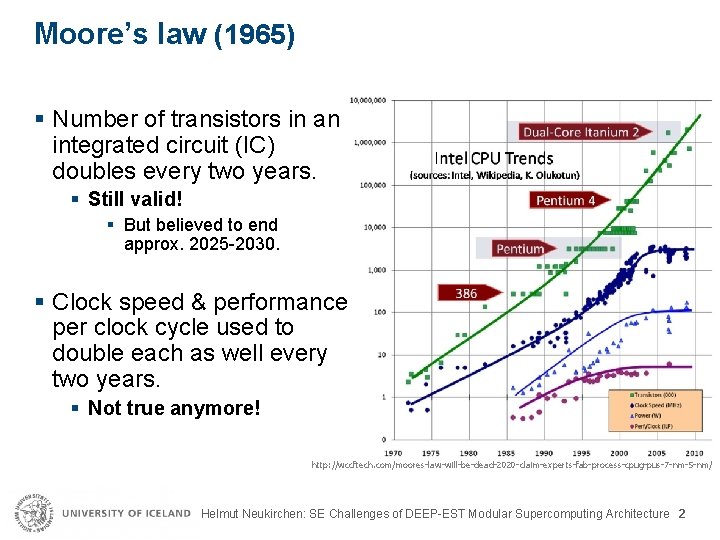

What is the doubling of the number of transistors used for?

- More cores, bigger caches. https://hpc.postech.ac.kr/~jangwoo/research.html
- Today’s main way to achieve speed: parallel processing:
  - Many cores per CPU,
  - Many CPU nodes.
- Needs to be supported by software: Software Engineering (SE) challenges!

Parallel processing programming models (1/2)

- Big data processing (Apache Hadoop, Apache Spark):
  - Write serial high-level code, frameworks take care of parallelization.
  - Nice from SE point of view.
  - Performance not on par with HPC.
    - Partly due to Java/Scala, Python: 10x slower than C/C++.

F. Glaser, H. Neukirchen, T. Rings, J. Grabowski: Using MapReduce for High Energy Physics Data Analysis. Proc. Int. Symp. on MapReduce and Big Data Infrastructure (MR.BDI), 2013.

H. Neukirchen: Performance of Big Data versus High-Performance Computing: Some Observations. Extended Abstract. Clausthal-Göttingen Int. Workshop on Simulation Science (SimScience), 2017.

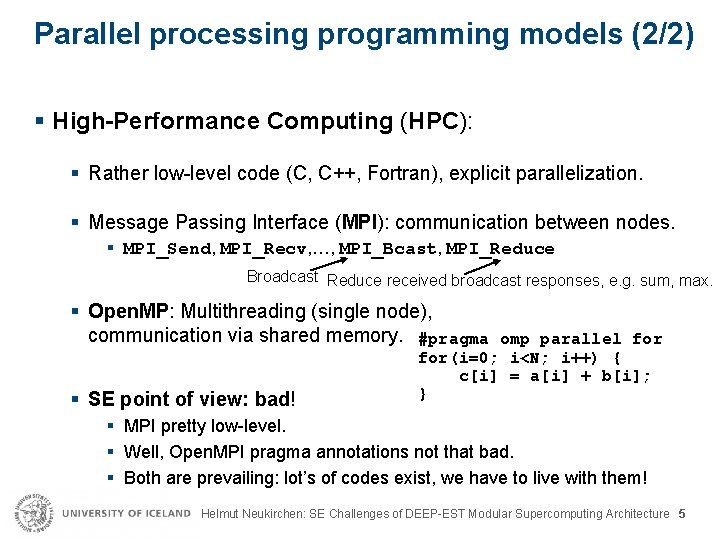

Parallel processing programming models (2/2)

- High-Performance Computing (HPC):
  - Rather low-level code (C, C++, Fortran), explicit parallelization.
  - Message Passing Interface (MPI): communication between nodes.
    - MPI_Send, MPI_Recv, …, MPI_Bcast (broadcast), MPI_Reduce (reduce received broadcast responses, e.g. sum, max).
  - OpenMP: multithreading (single node), communication via shared memory.
    - `#pragma omp parallel for` placed in front of `for (i = 0; i < N; i++) { c[i] = a[i] + b[i]; }`
- SE point of view: bad!
  - MPI pretty low-level.
  - Well, OpenMP pragma annotations not that bad.
  - Both are prevailing: lots of codes exist, we have to live with them!
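The OpenMP fragment on this slide can be completed into a small function. This is a minimal sketch: the function name `vector_add` and the explicit length parameter `n` are assumptions added for illustration; only the pragma and the loop body come from the slide.

```c
#include <assert.h>
#include <stddef.h>

/* Element-wise vector addition c = a + b.
 * With OpenMP enabled (e.g. -fopenmp for GCC), the loop iterations are
 * distributed over the threads of one node. Without OpenMP, the pragma
 * is ignored and the loop runs serially with the same result. */
void vector_add(const double *a, const double *b, double *c, int n)
{
    int i;

    assert(a != NULL && b != NULL && c != NULL && n >= 0);

    #pragma omp parallel for
    for (i = 0; i < n; i++) {
        c[i] = a[i] + b[i];
    }
}
```

The iterations are independent of each other, which is why a single annotation is enough here; loops with cross-iteration dependencies would need more care.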

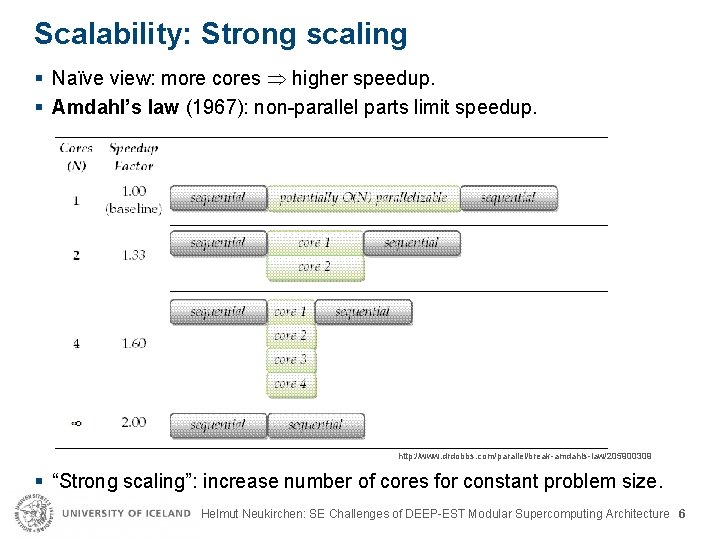

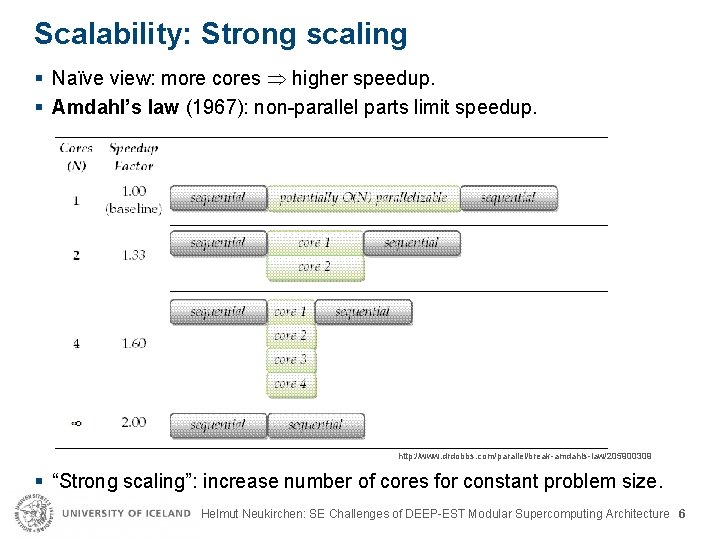

Scalability: Strong scaling

- Naïve view: more cores, higher speedup.
- Amdahl’s law (1967): non-parallel parts limit speedup.
- “Strong scaling”: increase number of cores for constant problem size.

http://www.drdobbs.com/parallel/break-amdahls-law/205900309
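Amdahl’s law can be written as S(n) = 1 / ((1 - p) + p / n), where p is the parallelizable fraction of the runtime and n the number of cores. The following sketch evaluates it; the function name `amdahl_speedup` is an assumption chosen for illustration.

```c
#include <assert.h>

/* Amdahl's law: speedup on n cores for a fixed problem size when a
 * fraction p (between 0 and 1) of the serial runtime is parallelizable. */
double amdahl_speedup(double p, int n)
{
    assert(p >= 0.0 && p <= 1.0 && n >= 1);
    return 1.0 / ((1.0 - p) + p / (double)n);
}
```

For p = 0.95, the speedup stays below 1 / (1 - 0.95) = 20 no matter how many cores are added, which is the limit the slide refers to.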

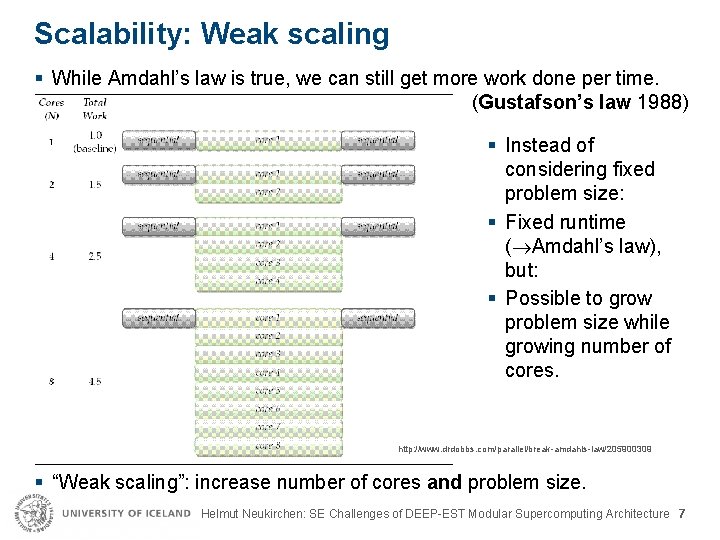

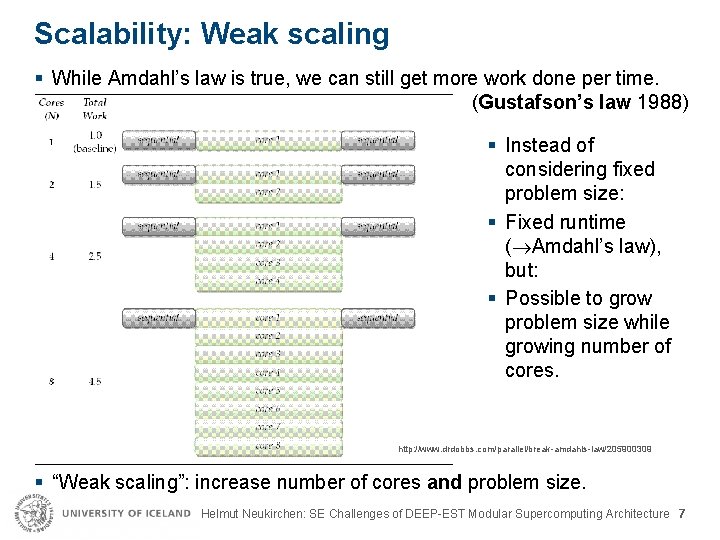

Scalability: Weak scaling

- While Amdahl’s law is true, we can still get more work done per time (Gustafson’s law, 1988).
- Instead of considering fixed problem size:
  - Fixed runtime (cf. Amdahl’s law), but:
  - Possible to grow problem size while growing number of cores.
- “Weak scaling”: increase number of cores and problem size.

http://www.drdobbs.com/parallel/break-amdahls-law/205900309
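Gustafson’s law gives the scaled speedup S(n) = (1 - p) + p * n, where p is the parallel fraction of the runtime measured on the n-core system. A minimal sketch follows; the function name `gustafson_speedup` is an assumption for illustration.

```c
#include <assert.h>

/* Gustafson's law: scaled speedup on n cores when the problem size grows
 * with n and a fraction p (between 0 and 1) of the parallel runtime is
 * spent in parallelizable code. */
double gustafson_speedup(double p, int n)
{
    assert(p >= 0.0 && p <= 1.0 && n >= 1);
    return (1.0 - p) + p * (double)n;
}
```

With p = 0.95 and n = 100 this yields about 95, whereas Amdahl’s law caps the fixed-size speedup for the same p at 20.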

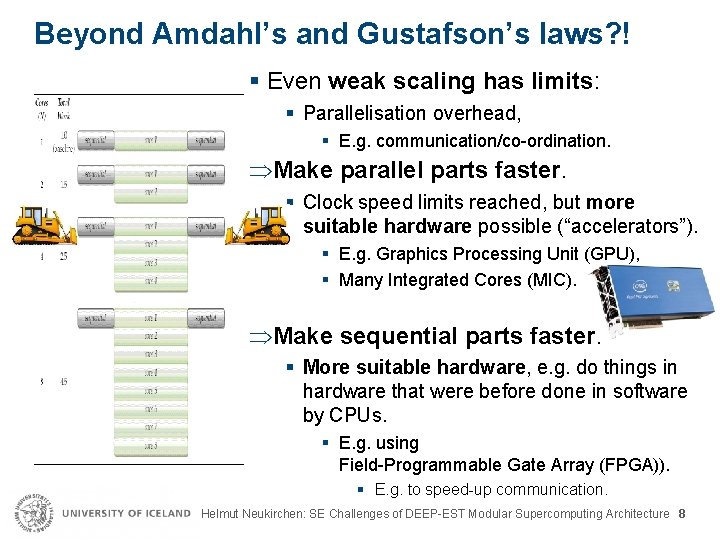

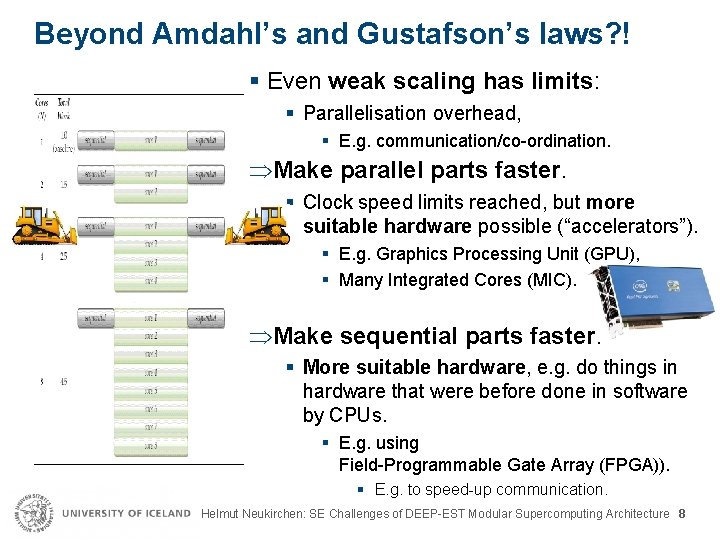

Beyond Amdahl’s and Gustafson’s laws?!

- Even weak scaling has limits:
  - Parallelisation overhead,
  - E.g. communication/co-ordination.
- Make parallel parts faster.
  - Clock speed limits reached, but more suitable hardware possible (“accelerators”).
  - E.g. Graphics Processing Unit (GPU),
  - Many Integrated Core (MIC).
- Make sequential parts faster.
  - More suitable hardware, e.g. do things in hardware that were previously done in software by CPUs.
  - E.g. using Field-Programmable Gate Arrays (FPGA).
  - E.g. to speed up communication.

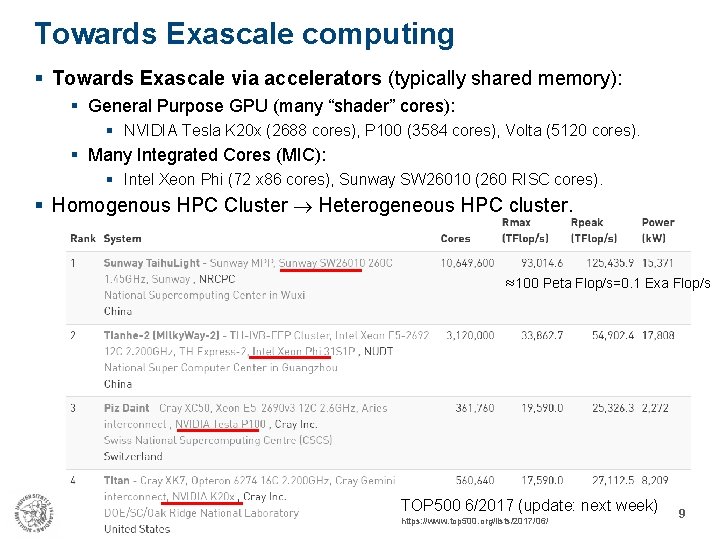

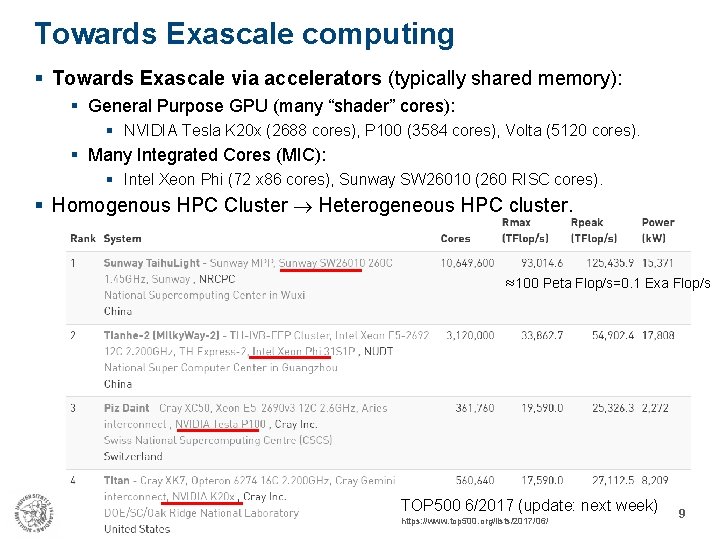

Towards Exascale computing

- Towards Exascale via accelerators (typically shared memory):
  - General Purpose GPU (many “shader” cores):
    - NVIDIA Tesla K20x (2688 cores), P100 (3584 cores), Volta (5120 cores).
  - Many Integrated Core (MIC):
    - Intel Xeon Phi (72 x86 cores), Sunway SW26010 (260 RISC cores).
- From homogeneous HPC cluster to heterogeneous HPC cluster.
- ≈100 PetaFlop/s = 0.1 ExaFlop/s (TOP500 6/2017; update: next week). https://www.top500.org/lists/2017/06/

EU Exascale projects: DEEP, DEEP-ER

- Flexible integration of accelerators and special hardware.
- “Blueprints” of future Exascale technology: small prototype platforms.
- Co-design: HPC applications drive hardware requirements.
- 2011-2015: DEEP (Dynamical Exascale Entry Platform):
  - Standard cluster + flexible attachment of MIC “booster”.
- 2013-2017: DEEP-ER (Dynamical Exascale Entry Platform – Extended Reach):
  - E.g. I/O and storage for fast checkpointing (resiliency/fault tolerance).

EU Exascale projects: DEEP-EST

- 7/2017-6/2020: DEEP-EST (Dynamical Exascale Entry Platform – Extreme Scale Technologies):
  - MSA (Modular Supercomputing Architecture): more accelerators, e.g.:
    - Network Attached Memory (NAM), FPGA-supported communication.
  - EC funding: 14.998.342 €
  - http://www.deep-est.eu
- University of Iceland provides machine learning applications.
  - Port HPC machine learning implementations to DEEP-EST hardware.
  - Clustering (DBSCAN), classification (SVM), deep learning (neural networks).
  - Software Engineering more a voluntary side aspect (no deliverables).

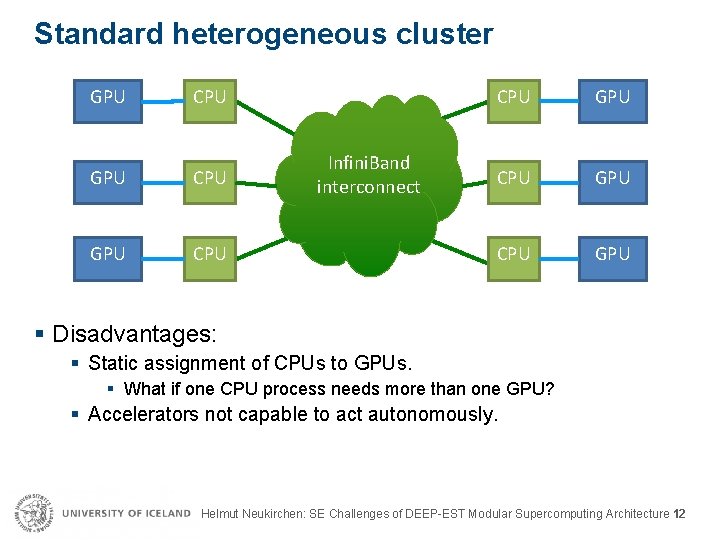

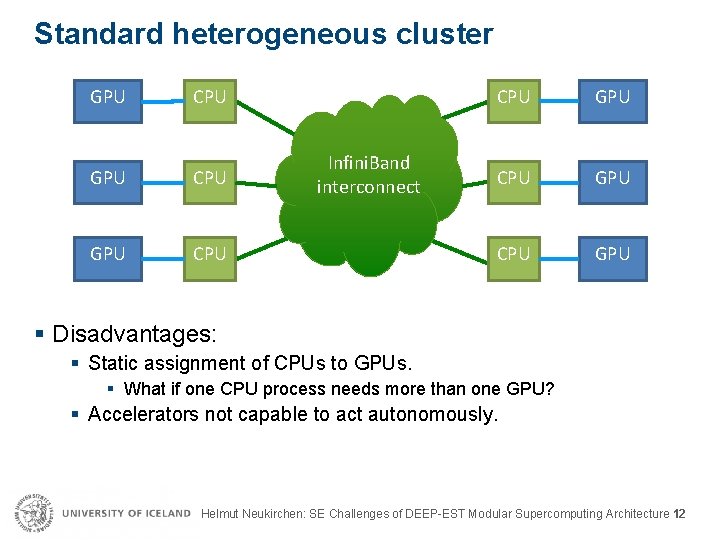

Standard heterogeneous cluster

Diagram labels: GPU, CPU, InfiniBand interconnect.

- Disadvantages:
  - Static assignment of CPUs to GPUs.
    - What if one CPU process needs more than one GPU?
  - Accelerators not capable of acting autonomously.

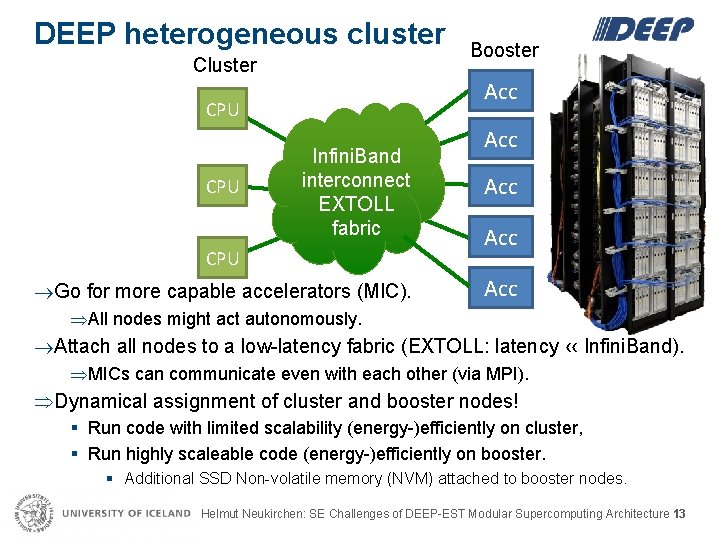

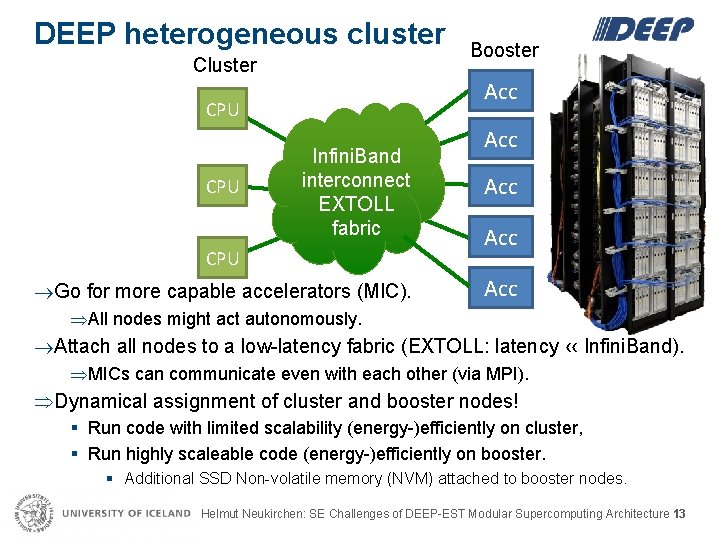

DEEP heterogeneous cluster

Diagram labels: Cluster (CPU), Booster (Acc), InfiniBand interconnect, EXTOLL fabric.

- Go for more capable accelerators (MIC).
- All nodes might act autonomously.
- Attach all nodes to a low-latency fabric (EXTOLL: latency ≪ InfiniBand).
- MICs can even communicate with each other (via MPI).
- Dynamical assignment of cluster and booster nodes!
  - Run code with limited scalability (energy-)efficiently on cluster,
  - Run highly scalable code (energy-)efficiently on booster.
- Additional SSD non-volatile memory (NVM) attached to booster nodes.

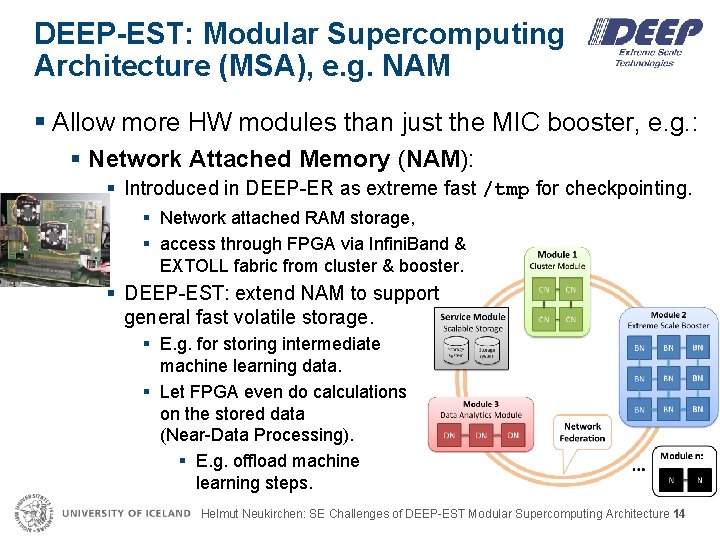

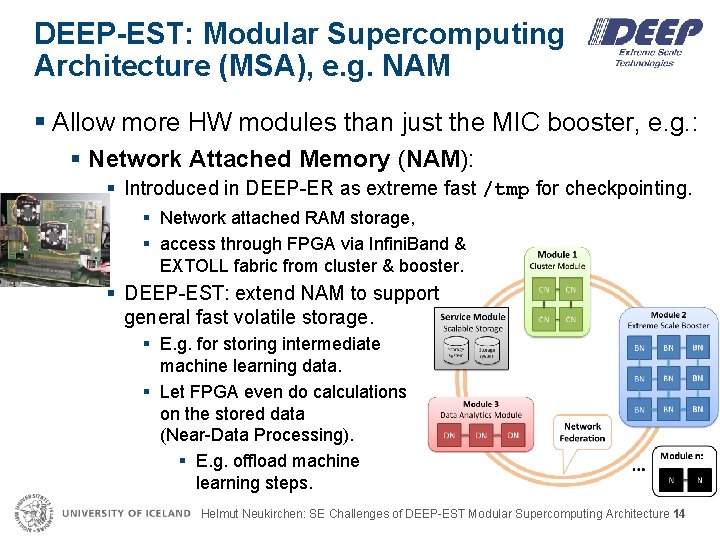

DEEP-EST: Modular Supercomputing Architecture (MSA), e.g. NAM

- Allow more HW modules than just the MIC booster, e.g.:
- Network Attached Memory (NAM):
  - Introduced in DEEP-ER as extremely fast /tmp for checkpointing.
    - Network-attached RAM storage,
    - Access through FPGA via InfiniBand & EXTOLL fabric from cluster & booster.
  - DEEP-EST: extend NAM to support general fast volatile storage.
    - E.g. for storing intermediate machine learning data.
  - Let FPGA even do calculations on the stored data (Near-Data Processing).
    - E.g. offload machine learning steps.

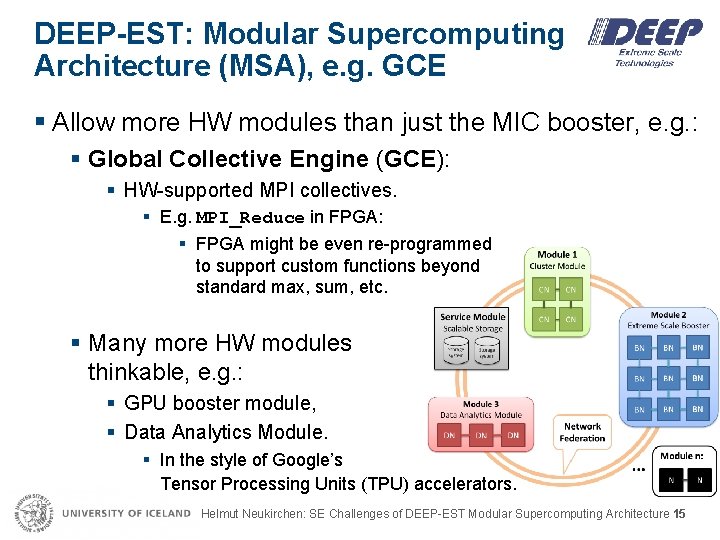

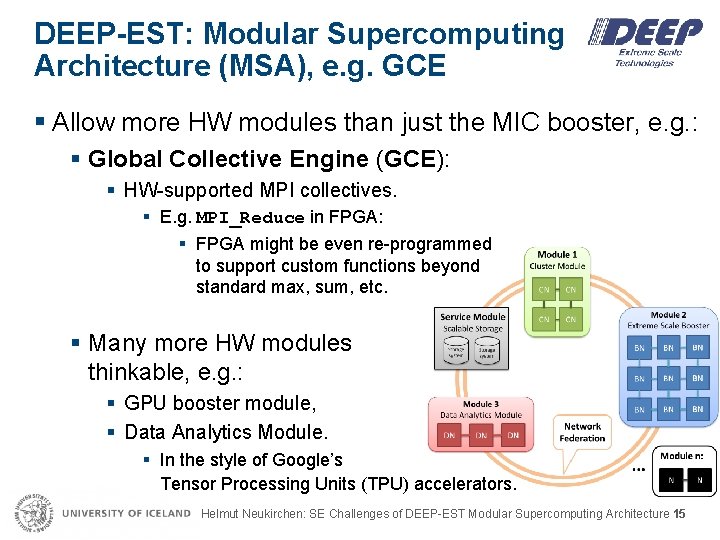

DEEP-EST: Modular Supercomputing Architecture (MSA), e.g. GCE

- Allow more HW modules than just the MIC booster, e.g.:
- Global Collective Engine (GCE):
  - HW-supported MPI collectives.
  - E.g. MPI_Reduce in FPGA:
    - FPGA might even be re-programmed to support custom functions beyond standard max, sum, etc.
- Many more HW modules conceivable, e.g.:
  - GPU booster module,
  - Data Analytics Module.
    - In the style of Google’s Tensor Processing Unit (TPU) accelerators.
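To illustrate what “custom functions beyond standard max, sum” means for a reduction, here is a serial model in plain C. It is only a sketch of the semantics, not MPI code and not the GCE interface: `reduce_op`, `model_reduce`, `op_sum`, `op_max` and `op_absmax` are hypothetical names. In real MPI, user-defined operations are registered with `MPI_Op_create` and passed to `MPI_Reduce`.

```c
#include <assert.h>
#include <stddef.h>

/* Binary combining operation applied pairwise during a reduction. */
typedef double (*reduce_op)(double, double);

/* Standard operations. */
double op_sum(double x, double y) { return x + y; }
double op_max(double x, double y) { return x > y ? x : y; }

/* Custom operation: keep the value with the larger magnitude. */
double op_absmax(double x, double y)
{
    double ax = x < 0.0 ? -x : x;
    double ay = y < 0.0 ? -y : y;
    return ay > ax ? y : x;
}

/* Serial model of a reduce: combine one contribution per rank into the
 * single value that the root rank would receive. */
double model_reduce(const double *contrib, size_t nranks, reduce_op op)
{
    double acc;
    size_t r;

    assert(contrib != NULL && nranks > 0 && op != NULL);

    acc = contrib[0];
    for (r = 1; r < nranks; r++) {
        acc = op(acc, contrib[r]);
    }
    return acc;
}
```

The point of the slide is that the combining step (here the call through `op`) would be executed by the FPGA in the network instead of by the CPUs.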

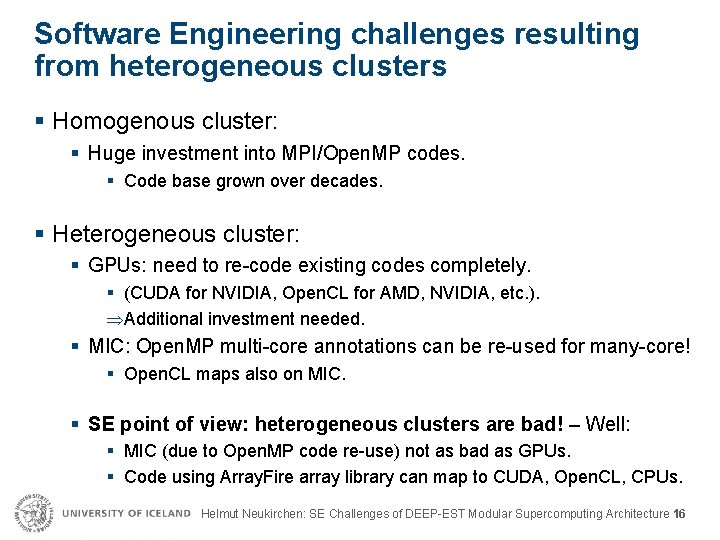

Software Engineering challenges resulting from heterogeneous clusters

- Homogeneous cluster:
  - Huge investment into MPI/OpenMP codes.
  - Code base grown over decades.
- Heterogeneous cluster:
  - GPUs: need to re-code existing codes completely (CUDA for NVIDIA; OpenCL for AMD, NVIDIA, etc.). Additional investment needed.
  - MIC: OpenMP multi-core annotations can be re-used for many-core!
    - OpenCL also maps to MIC.
- SE point of view: heterogeneous clusters are bad! Well:
  - MIC (due to OpenMP code re-use) not as bad as GPUs.
  - Code using the ArrayFire array library can map to CUDA, OpenCL, CPUs.

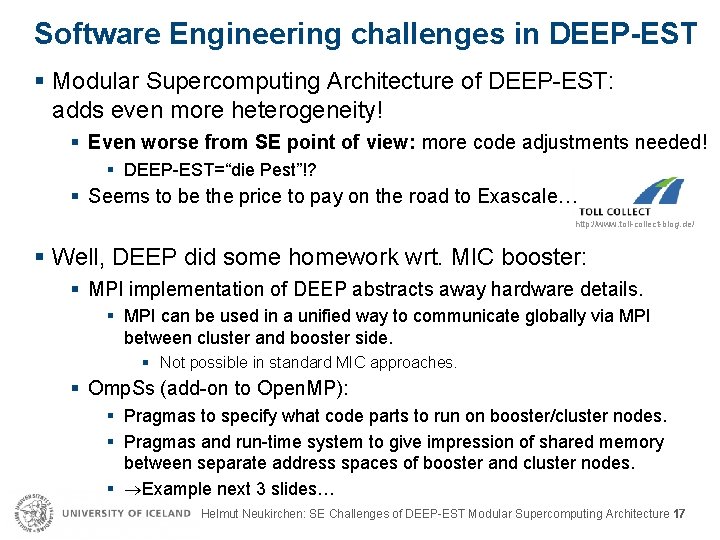

Software Engineering challenges in DEEP-EST

- Modular Supercomputing Architecture of DEEP-EST adds even more heterogeneity!
  - Even worse from SE point of view: more code adjustments needed!
  - DEEP-EST = “die Pest” (German for “the plague”)!?
  - Seems to be the price to pay on the road to Exascale… http://www.toll-collect-blog.de/
- Well, DEEP did some homework wrt. MIC booster:
  - MPI implementation of DEEP abstracts away hardware details.
    - MPI can be used in a unified way to communicate globally between cluster and booster side.
    - Not possible in standard MIC approaches.
  - OmpSs (add-on to OpenMP):
    - Pragmas to specify what code parts to run on booster/cluster nodes.
    - Pragmas and run-time system to give impression of shared memory between separate address spaces of booster and cluster nodes.
    - Example on the next 3 slides…

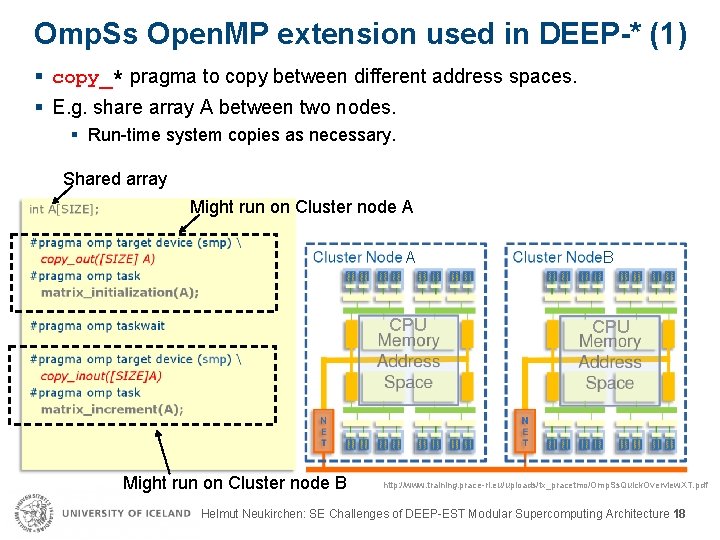

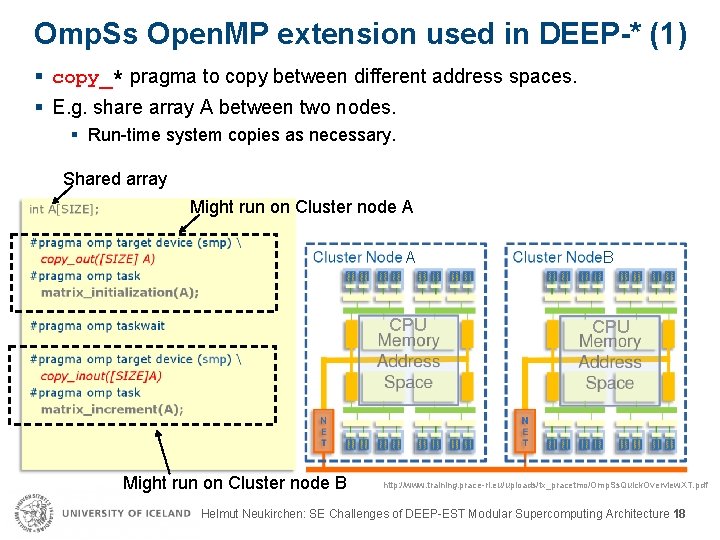

OmpSs OpenMP extension used in DEEP-* (1)

- copy_* clauses to copy between different address spaces.
  - E.g. share array A between two nodes.
  - Run-time system copies as necessary.

Figure annotations: shared array; might run on cluster node A; might run on cluster node B.

http://www.training.prace-ri.eu/uploads/tx_pracetmo/OmpSsQuickOverviewXT.pdf

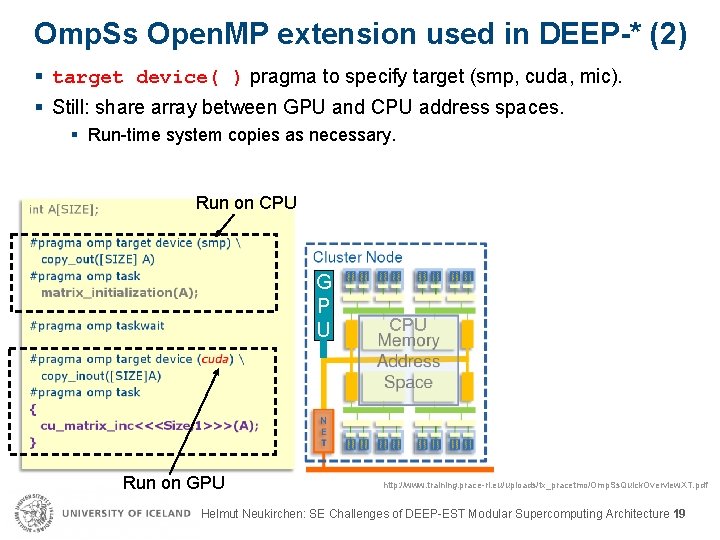

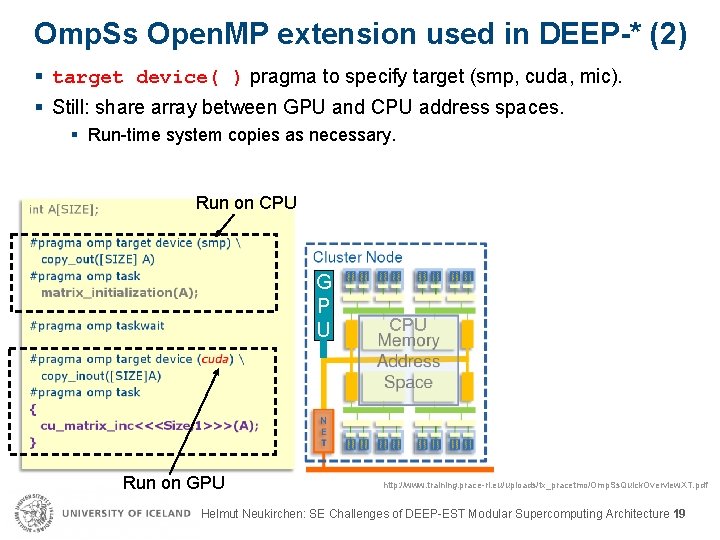

OmpSs OpenMP extension used in DEEP-* (2)

- target device() pragma to specify target (smp, cuda, mic).
- Still: share array between GPU and CPU address spaces.
  - Run-time system copies as necessary.

Figure annotations: run on CPU; run on GPU.

http://www.training.prace-ri.eu/uploads/tx_pracetmo/OmpSsQuickOverviewXT.pdf
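The original code from the slide image is not available here, so the following is only a sketch of how a `target device()` annotation might look. The pragma spelling (`copy_deps`, the `[start;length]` array sections) follows the OmpSs documentation as far as recalled and should be checked against the OmpSs version in use; the function `scaled_add` is a hypothetical example.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed OmpSs annotations: the task targets an SMP (CPU) device, and
 * copy_deps asks the run-time system to derive the required copies
 * between address spaces from the in/inout clauses.
 * A plain C compiler without OpenMP/OmpSs support ignores both pragmas,
 * so the function then behaves like ordinary serial C. */
#pragma omp target device(smp) copy_deps
#pragma omp task in(x[0;n]) inout(y[0;n])
void scaled_add(const double *x, double *y, int n, double alpha)
{
    int i;

    assert(x != NULL && y != NULL && n >= 0);

    for (i = 0; i < n; i++) {
        y[i] += alpha * x[i];
    }
}
```

Under an OmpSs compiler, a call to such a function creates an asynchronous task, so the caller would need a `#pragma omp taskwait` before reading `y`.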

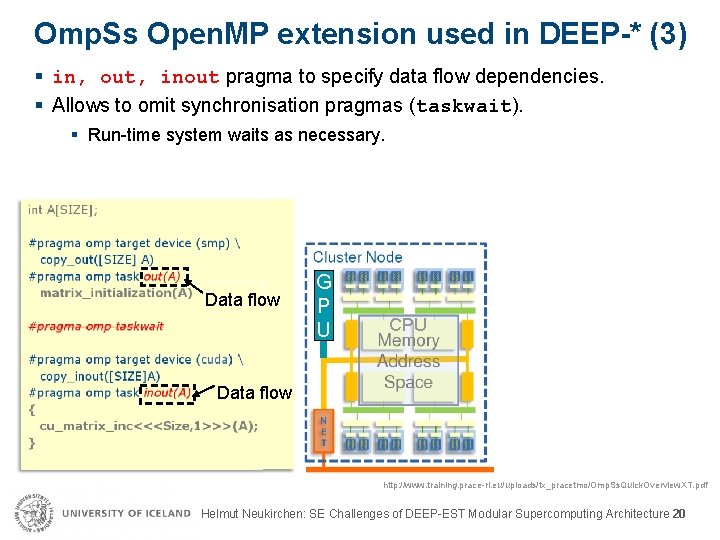

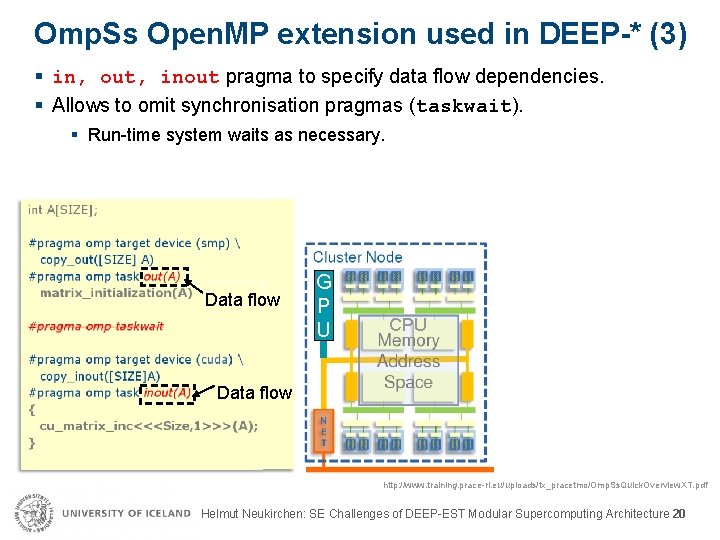

OmpSs OpenMP extension used in DEEP-* (3)

- in, out, inout clauses to specify data flow dependencies.
  - Allows omitting synchronisation pragmas (taskwait).
  - Run-time system waits as necessary.

Figure annotations: data flow between CPU and GPU.

http://www.training.prace-ri.eu/uploads/tx_pracetmo/OmpSsQuickOverviewXT.pdf
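A minimal sketch of data-flow dependencies between two tasks, assuming the OmpSs `in`/`out` clause syntax with `[start;length]` array sections; the function `produce_then_double` is hypothetical and not taken from the slides. No `taskwait` is needed between the two tasks, because the run-time system orders them through the dependency on `a`.

```c
#include <assert.h>
#include <stddef.h>

void produce_then_double(double *a, double *b, int n)
{
    assert(a != NULL && b != NULL && n >= 0);

    /* Task 1 writes a. */
    #pragma omp task out(a[0;n])
    {
        for (int i = 0; i < n; i++) {
            a[i] = (double)i;
        }
    }

    /* Task 2 reads a and writes b. Under OmpSs it is started only after
     * task 1 has finished, without an explicit taskwait in between. */
    #pragma omp task in(a[0;n]) out(b[0;n])
    {
        for (int i = 0; i < n; i++) {
            b[i] = 2.0 * a[i];
        }
    }

    /* Wait once at the end, before the caller reads b. */
    #pragma omp taskwait
}
```

A plain C compiler without OpenMP/OmpSs support ignores the pragmas and runs both blocks in program order, which gives the same result.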

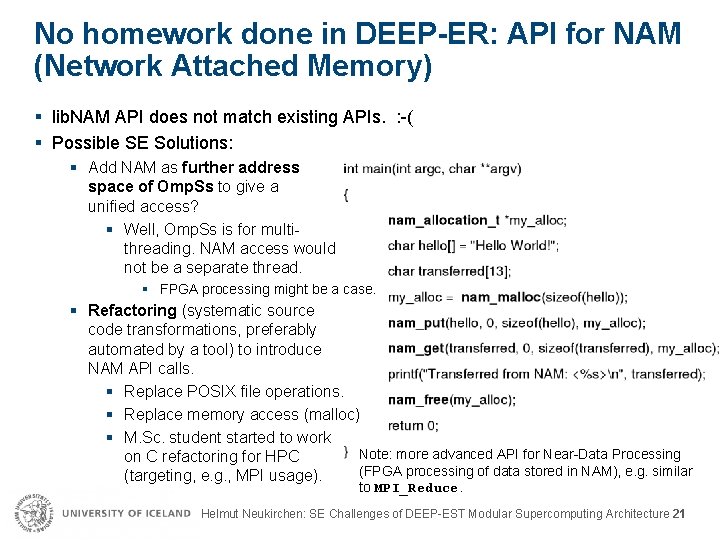

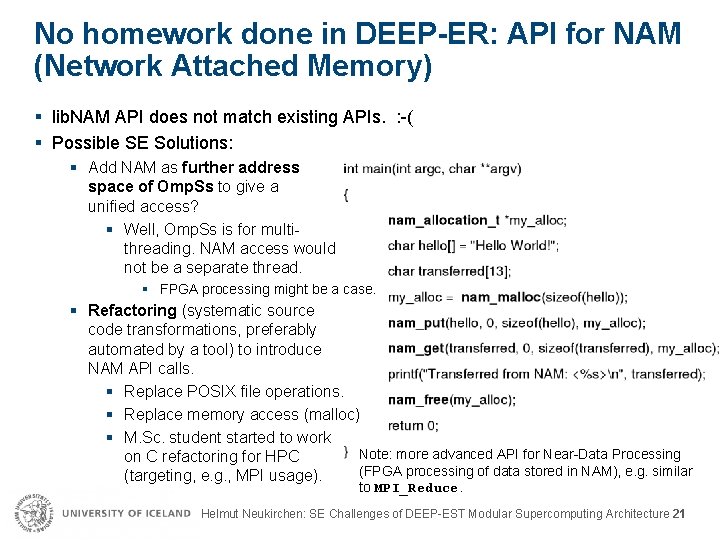

No homework done in DEEP-ER: API for NAM (Network Attached Memory)

- libNAM API does not match existing APIs. :-(
- Possible SE solutions:
  - Add NAM as further address space of OmpSs to give a unified access?
    - Well, OmpSs is for multithreading. NAM access would not be a separate thread.
    - FPGA processing might be a case.
  - Refactoring (systematic source code transformations, preferably automated by a tool) to introduce NAM API calls.
    - Replace POSIX file operations.
    - Replace memory access (malloc).
    - M.Sc. student started to work on C refactoring for HPC (targeting, e.g., MPI usage).
- Note: more advanced API for Near-Data Processing (FPGA processing of data stored in NAM), e.g. similar to MPI_Reduce.
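One way the “replace memory access (malloc)” refactoring could be prepared is to route allocations through a small indirection layer first. The sketch below is an assumption for illustration only: `buffer_backend`, `HEAP_BACKEND` and `store_intermediate` are hypothetical names, and no libNAM call is shown because its API is not described in these slides.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical storage interface introduced by the refactoring:
 * application code calls these function pointers instead of calling
 * malloc/free directly, so another backend can be plugged in later. */
typedef struct {
    void *(*acquire)(size_t nbytes);
    void (*release)(void *buf);
} buffer_backend;

static void *heap_acquire(size_t nbytes) { return malloc(nbytes); }
static void heap_release(void *buf) { free(buf); }

/* Default backend: ordinary node-local heap memory. */
const buffer_backend HEAP_BACKEND = { heap_acquire, heap_release };

/* Refactored application routine: stores a copy of intermediate data
 * through whichever backend is passed in. Returns NULL on failure. */
double *store_intermediate(const buffer_backend *be,
                           const double *data, size_t n)
{
    double *copy;

    assert(be != NULL && data != NULL && n > 0);

    copy = be->acquire(n * sizeof *copy);
    if (copy != NULL) {
        memcpy(copy, data, n * sizeof *copy);
    }
    return copy;
}
```

A NAM-backed variant would provide its own `acquire`/`release` pair; whether remote memory can be exposed through plain pointers like this depends on the NAM API and is left open here.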

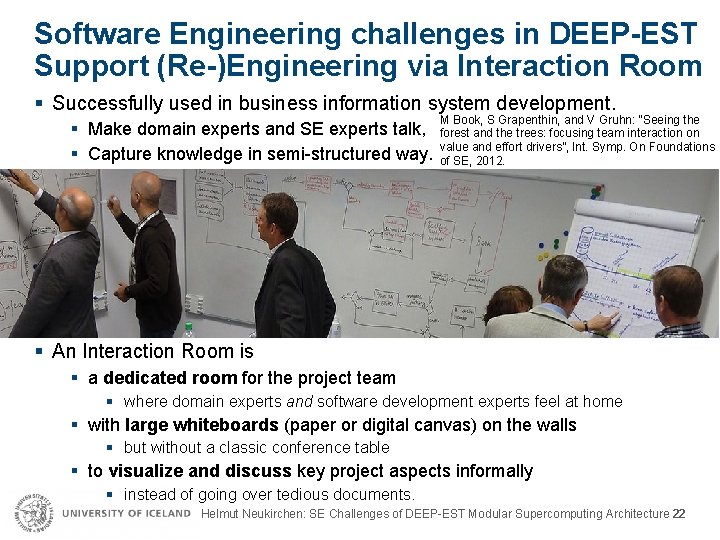

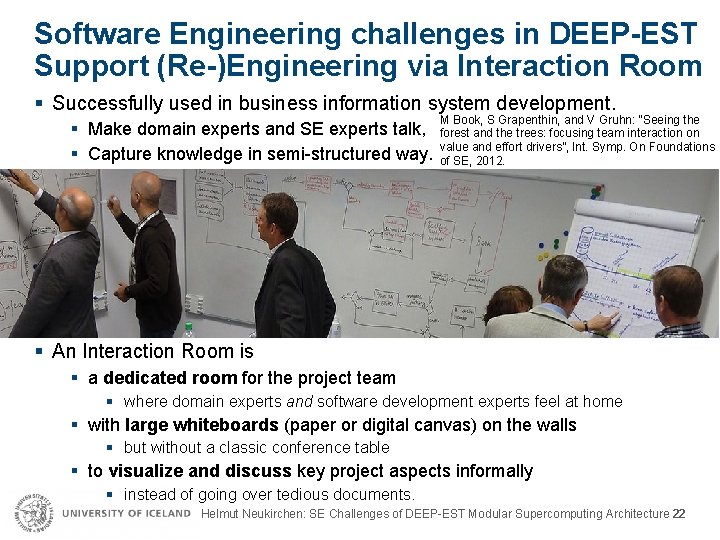

Software Engineering challenges in DEEP-EST: Support (Re-)Engineering via Interaction Room

- Successfully used in business information system development.
- Make domain experts and SE experts talk,
- Capture knowledge in a semi-structured way.

M. Book, S. Grapenthin, V. Gruhn: “Seeing the forest and the trees: focusing team interaction on value and effort drivers”, Int. Symp. on Foundations of SE, 2012.

- An Interaction Room is
  - a dedicated room for the project team
  - where domain experts and software development experts feel at home
  - with large whiteboards (paper or digital canvas) on the walls
  - but without a classic conference table
  - to visualize and discuss key project aspects informally
  - instead of going over tedious documents.

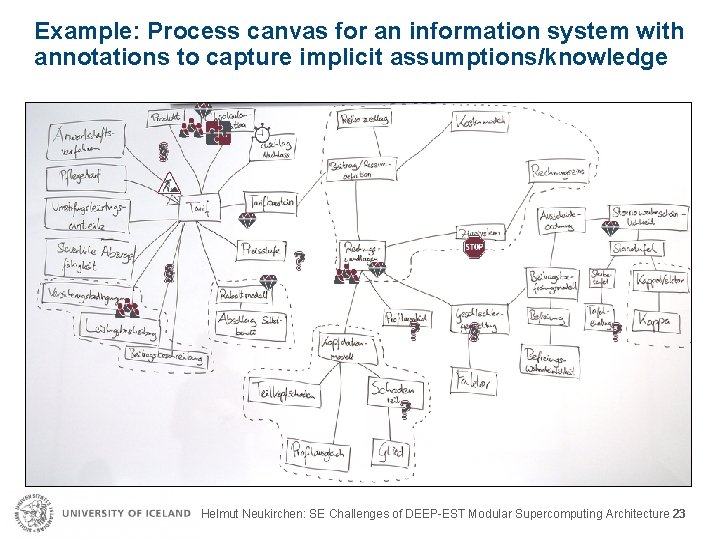

Example: Process canvas for an information system with annotations to capture implicit assumptions/knowledge

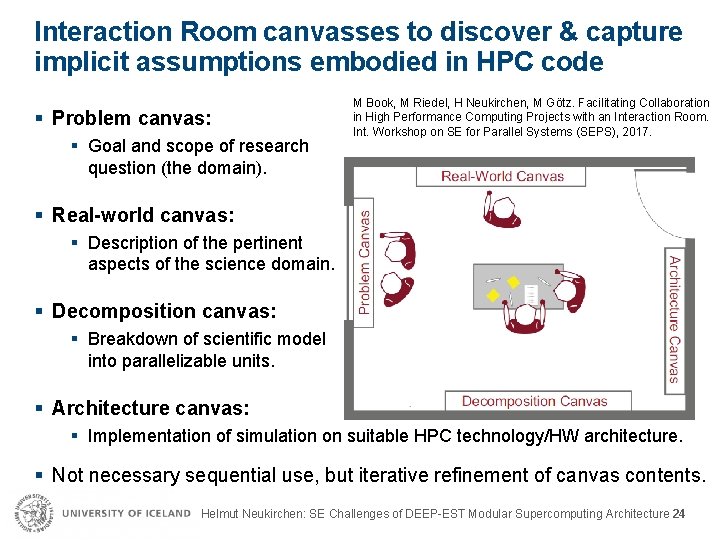

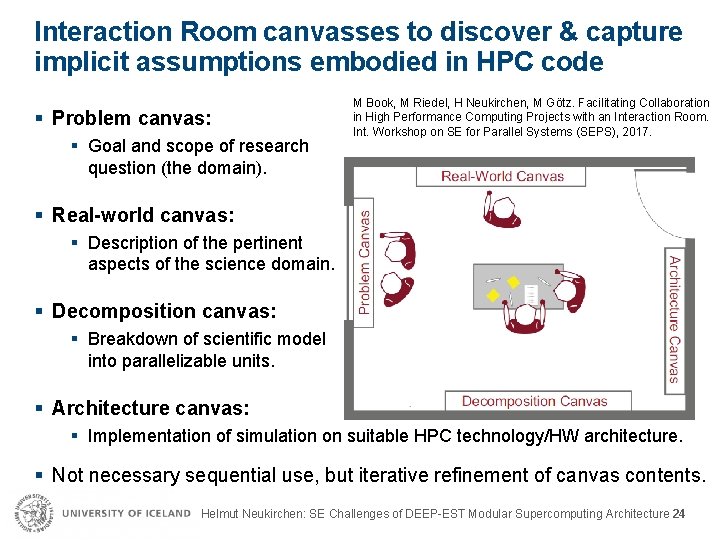

Interaction Room canvases to discover & capture implicit assumptions embodied in HPC code

- Problem canvas:
  - Goal and scope of research question (the domain).
- Real-world canvas:
  - Description of the pertinent aspects of the science domain.
- Decomposition canvas:
  - Breakdown of scientific model into parallelizable units.
- Architecture canvas:
  - Implementation of simulation on suitable HPC technology/HW architecture.
- Not necessarily sequential use, but iterative refinement of canvas contents.

M. Book, M. Riedel, H. Neukirchen, M. Götz: Facilitating Collaboration in High Performance Computing Projects with an Interaction Room. Int. Workshop on SE for Parallel Systems (SEPS), 2017.

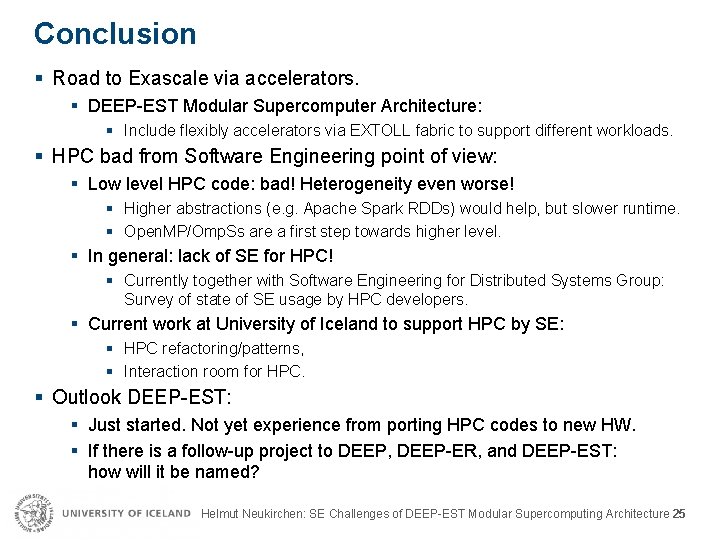

Conclusion

- Road to Exascale via accelerators.
- DEEP-EST Modular Supercomputing Architecture:
  - Flexibly include accelerators via EXTOLL fabric to support different workloads.
- HPC bad from Software Engineering point of view:
  - Low-level HPC code: bad! Heterogeneity even worse!
  - Higher abstractions (e.g. Apache Spark RDDs) would help, but slower runtime.
  - OpenMP/OmpSs are a first step towards higher level.
- In general: lack of SE for HPC!
  - Currently together with Software Engineering for Distributed Systems Group: survey of state of SE usage by HPC developers.
- Current work at University of Iceland to support HPC by SE:
  - HPC refactoring/patterns,
  - Interaction Room for HPC.
- Outlook DEEP-EST:
  - Just started. No experience yet from porting HPC codes to new HW.
  - If there is a follow-up project to DEEP, DEEP-ER, and DEEP-EST: how will it be named?