Software Defect Analysis

- Slides: 73

Software Defect Analysis

Monitoring Quality Over Time

- You need to be concerned not just with whether deliverables have appeared but also with whether they are of appropriate quality.
- Standard phase-based project monitoring:
  - Defect counts, e.g., defects per module during inspections
  - Rework levels, e.g., percentage of design effort dedicated to rework (corrections)
  - Change requests, e.g., amount and nature of late changes
- Acceptable quality may be determined based on past experience, aiming at continuous improvement.

© G. Antoniol 2012 LOG 6305

Faults Reported per Week

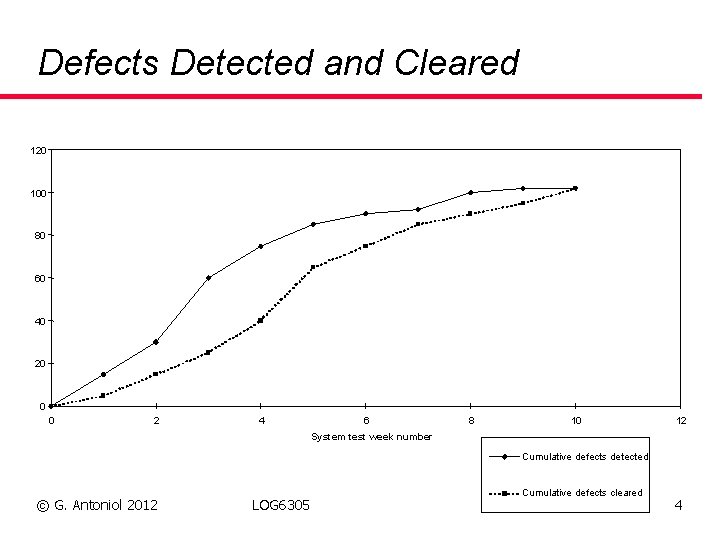

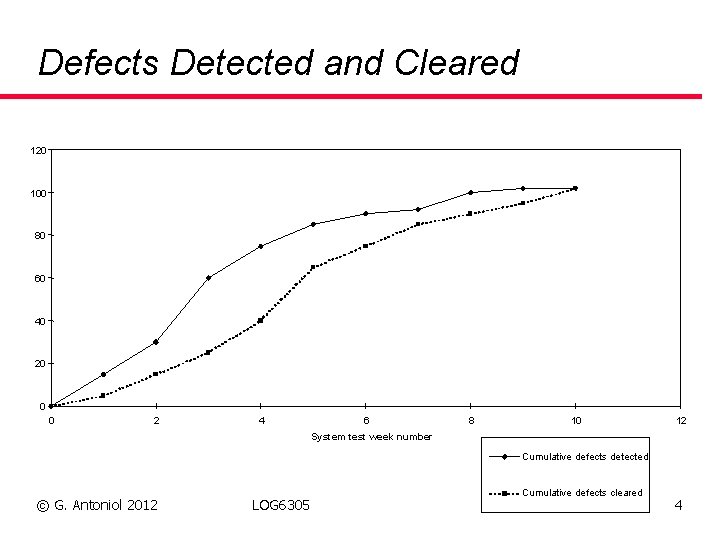

Defects Detected and Cleared

(Figure: cumulative defects detected and cumulative defects cleared, plotted against system test week number.)

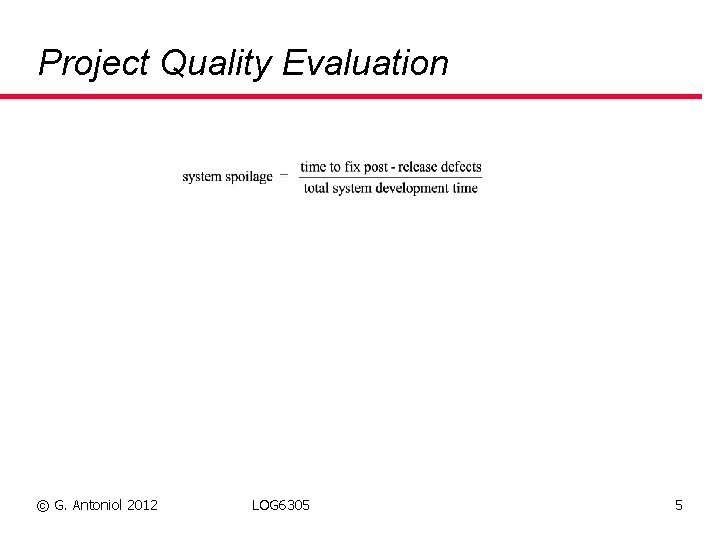

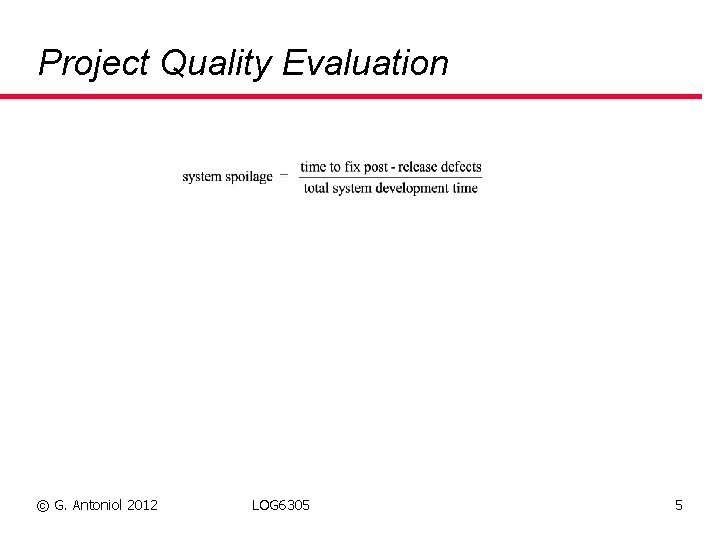

Project Quality Evaluation

AT&T Bell Labs Defect Measures

- Cumulative fault density: faults found internally
- Cumulative fault density: faults found by customers
- Total serious faults found
- Mean time to close serious faults
- Total field fixes
- High-level design review errors per KNCSL
- Low-level design errors per KNCSL
- Code inspection errors per inspected KNCSL
- Development test and integration errors found per KNCSL
- System test problems found per developed KNCSL
- First application test site errors found per developed KNCSL
- Customer found problems per developed KNCSL

Phase-based Quality Control

Principles

- Monitor inputs and outputs of every phase of development
- Identify abnormal, unusual situations that may indicate future quality problems
- Determine whether these are actual problems
- Post-mortem analysis: problem occurrences, root causes

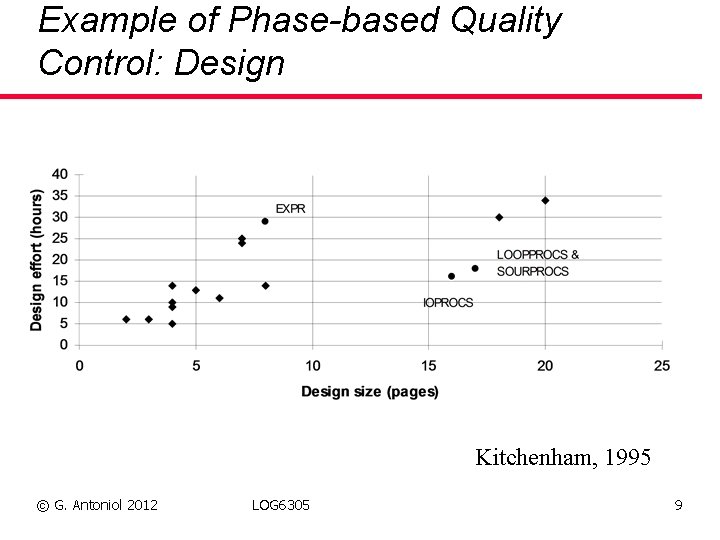

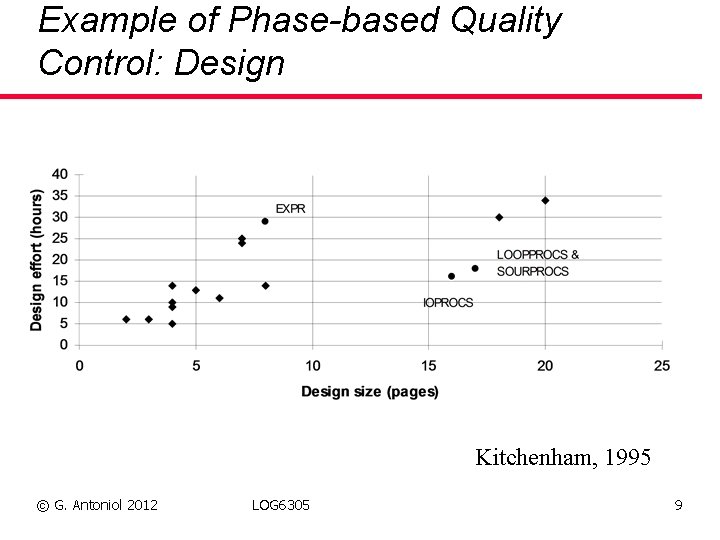

Example of Phase-based Quality Control: Design (Kitchenham, 1995)

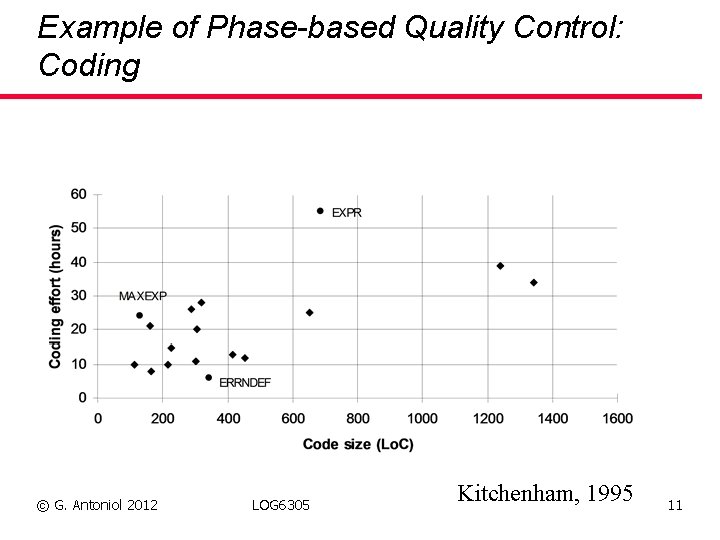

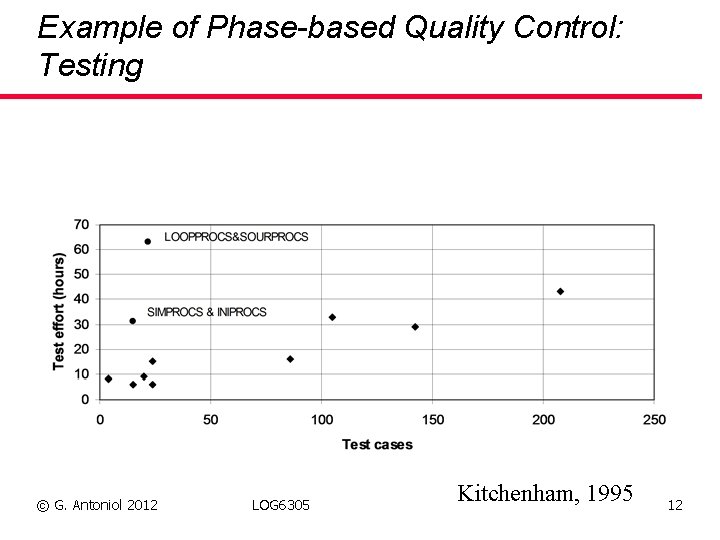

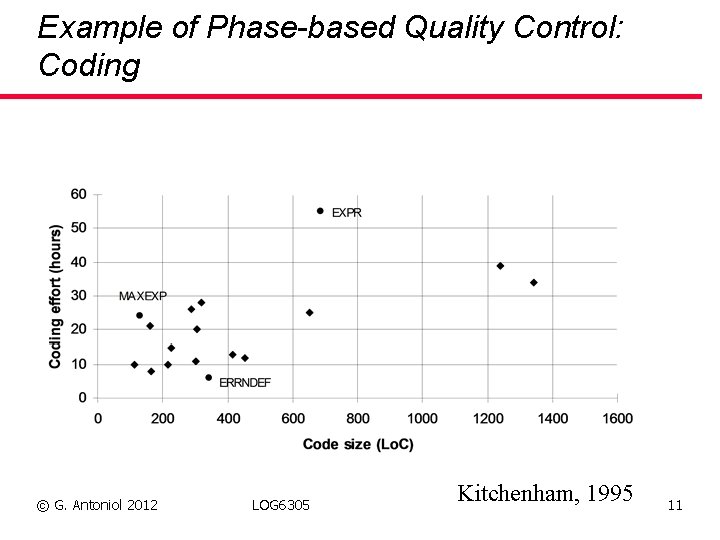

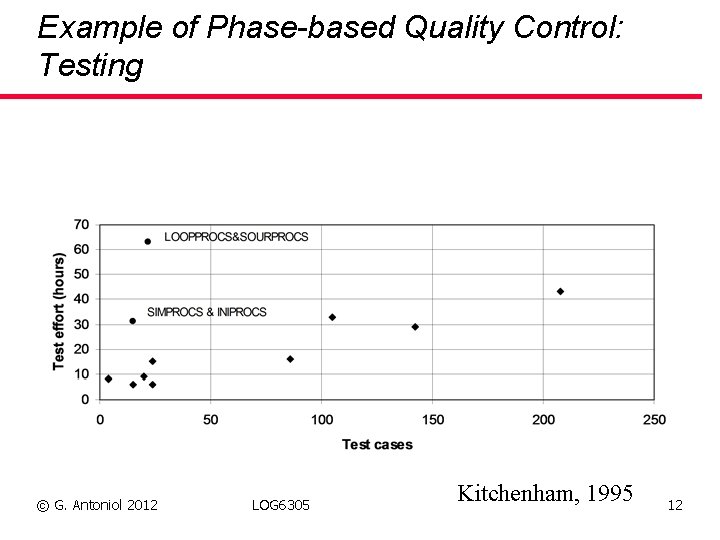

Investigation

- EXPR was a critical module, and the member of staff assigned to its design was relatively inexperienced.
- IOPROCS and LOOPPROCS&SOURPROCS were poorly designed. This problem showed up later in a large number of post-release changes for IOPROCS and a large number of design problems when testing LOOPPROCS&SOURPROCS.

Example of Phase-based Quality Control: Coding (Kitchenham, 1995)

Example of Phase-based Quality Control: Testing (Kitchenham, 1995)

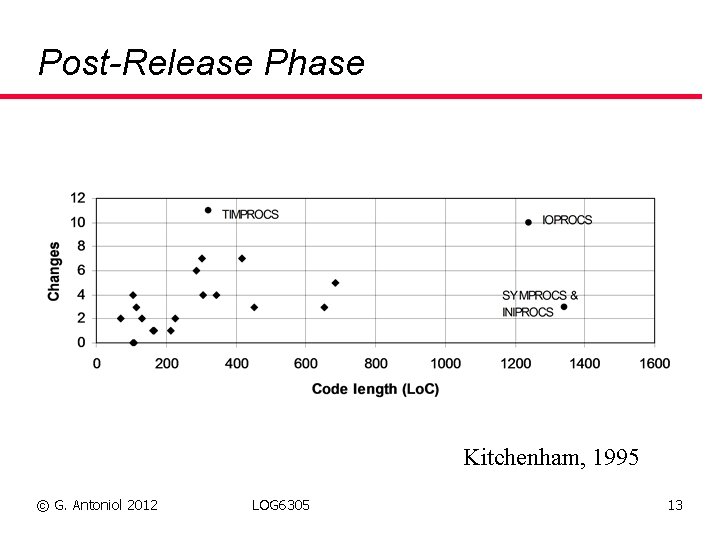

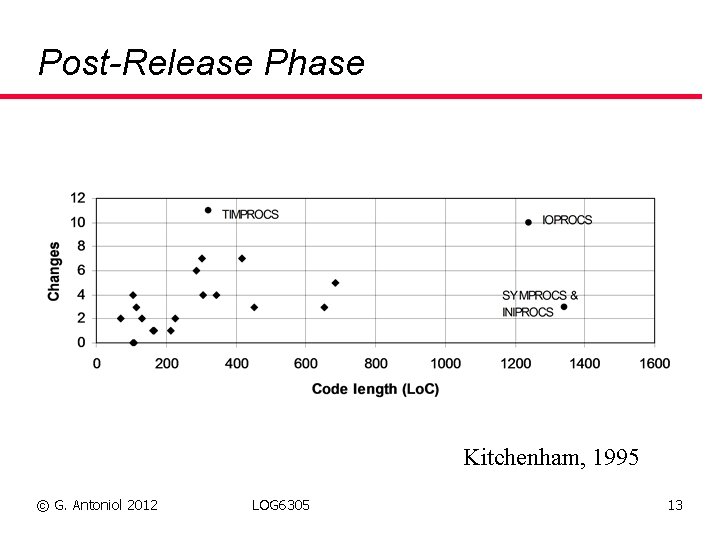

Post-Release Phase (Kitchenham, 1995)

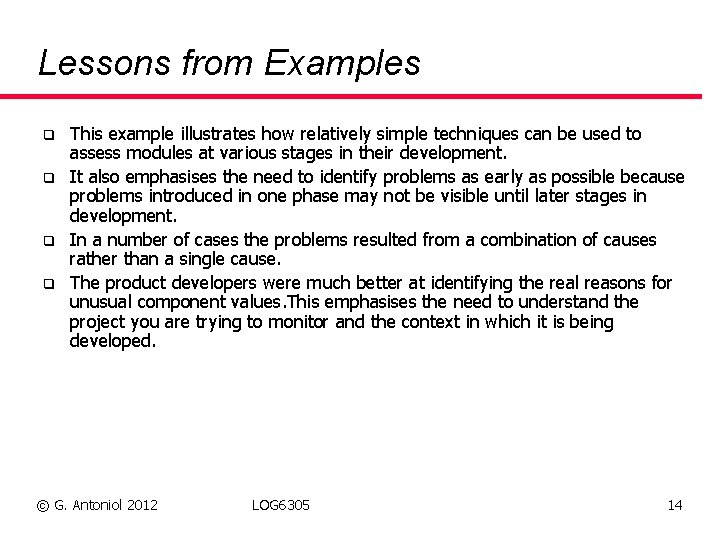

Lessons from Examples

- This example illustrates how relatively simple techniques can be used to assess modules at various stages in their development.
- It also emphasises the need to identify problems as early as possible, because problems introduced in one phase may not be visible until later stages in development.
- In a number of cases the problems resulted from a combination of causes rather than a single cause.
- The product developers were much better at identifying the real reasons for unusual component values. This emphasises the need to understand the project you are trying to monitor and the context in which it is being developed.

Component Quality Control

Quality Control Principles

- Quality control of manufactured products
- Common in other industries
- For software components:
  1. Identify attributes that are early indicators of quality problems, e.g., a highly complex module.
  2. Define acceptable statistical ranges for values of the attributes for each type of component.
  3. Identify "components" with attribute values outside the ranges.
  4. Rework/repair the components, if necessary.

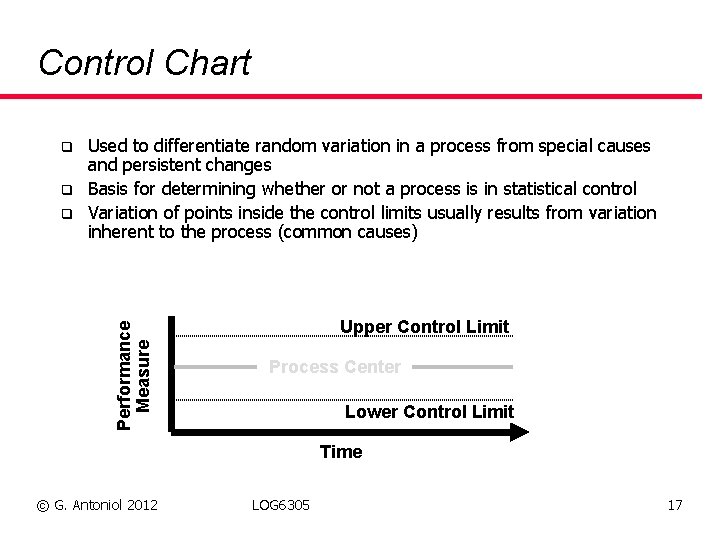

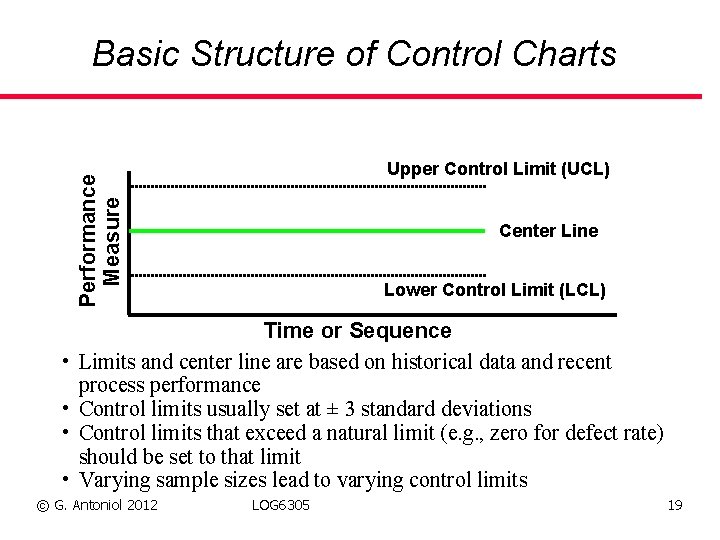

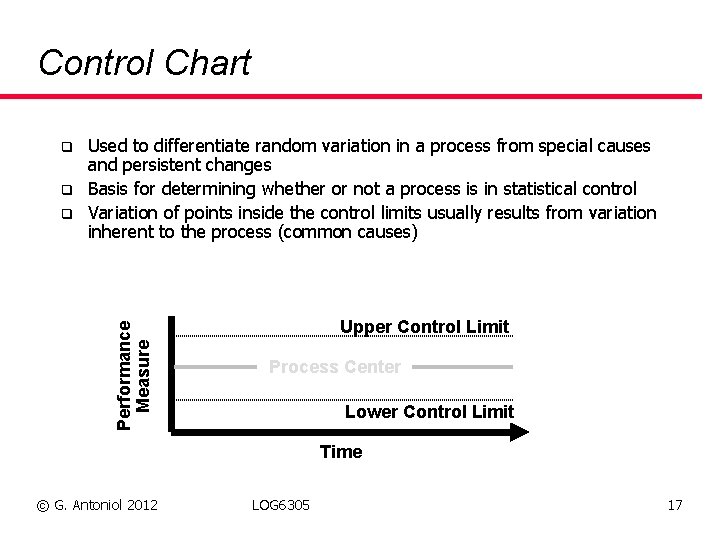

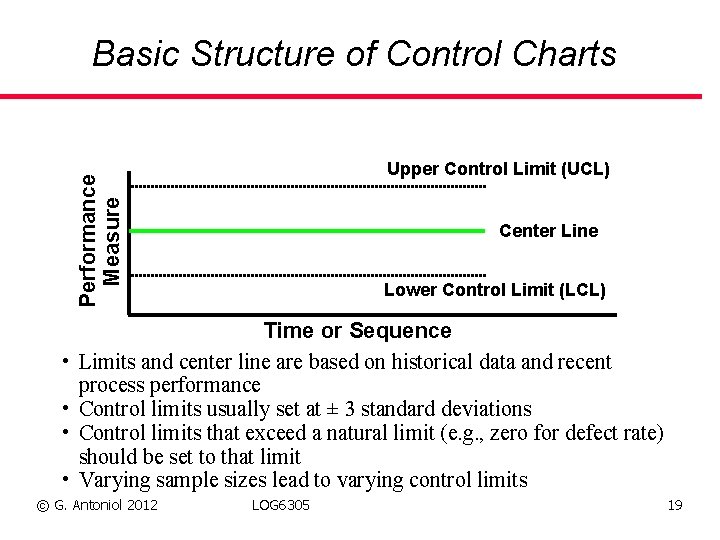

Control Chart

- Used to differentiate random variation in a process from special causes and persistent changes
- Basis for determining whether or not a process is in statistical control
- Variation of points inside the control limits usually results from variation inherent to the process (common causes)

(Figure: performance measure plotted over time, with upper control limit, process center and lower control limit.)

Control Chart (cont.)

- Points outside the control limits result from events or situations that are not a part of the normal process (special causes), often nonconformances
- Random variation within the limits is due to "common causes", which are addressed through improvement, not reaction
- "In control" means that the process performance is consistent with its baseline (not necessarily the specifications)
- Control charts only show that the process has changed (additional analysis usually needs to be performed to determine the underlying cause of the change)

Basic Structure of Control Charts

(Figure: performance measure plotted against time or sequence, with upper control limit (UCL), center line and lower control limit (LCL).)

- Limits and center line are based on historical data and recent process performance
- Control limits are usually set at ± 3 standard deviations
- Control limits that exceed a natural limit (e.g., zero for defect rate) should be set to that limit
- Varying sample sizes lead to varying control limits
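The structure above can be sketched in a few lines of Python. This is a minimal illustration, not a full statistical process control implementation: it assumes the limits are taken as the baseline mean ± 3 population standard deviations, that the natural lower limit is zero, and that all samples have the same size. The function names and the defect-rate values are hypothetical.

```python
from statistics import mean, pstdev


def control_limits(baseline, natural_lower=0.0, sigmas=3.0):
    """Return (lcl, center, ucl) computed from baseline observations."""
    center = mean(baseline)
    spread = pstdev(baseline)
    ucl = center + sigmas * spread
    # A lower limit that would fall below the natural limit is set to that limit.
    lcl = max(natural_lower, center - sigmas * spread)
    return lcl, center, ucl


def out_of_control(observations, lcl, ucl):
    """Indices of points outside the limits (candidate special causes)."""
    return [i for i, x in enumerate(observations) if x < lcl or x > ucl]


# Hypothetical defects-per-KLOC values from past inspections (the baseline).
baseline = [4.0, 5.0, 6.0, 5.0, 4.0, 6.0, 5.0, 5.0]
lcl, center, ucl = control_limits(baseline)

# Hypothetical recent measurements checked against the baseline limits.
recent = [5.5, 4.5, 9.0, 5.0]
flagged = out_of_control(recent, lcl, ucl)
print(f"LCL={lcl:.2f} center={center:.2f} UCL={ucl:.2f} flagged={flagged}")
```

A flagged point only says that the process has changed; finding the underlying cause still requires separate analysis.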

Software Industry

There are specific problems when dealing with software products and projects:

- Lack of objective standards for component measures, e.g., size, complexity?
- Compound anomalies
- Multiple causes of anomalies

Lack of Objective Standards

- Acceptable ranges for attribute values?
  - Is a procedure which is 200 lines long acceptable or not?
- Depends on the context
  - Population of subroutines in system(s)
  - Performance requirements
  - Control flow complexity of subroutines

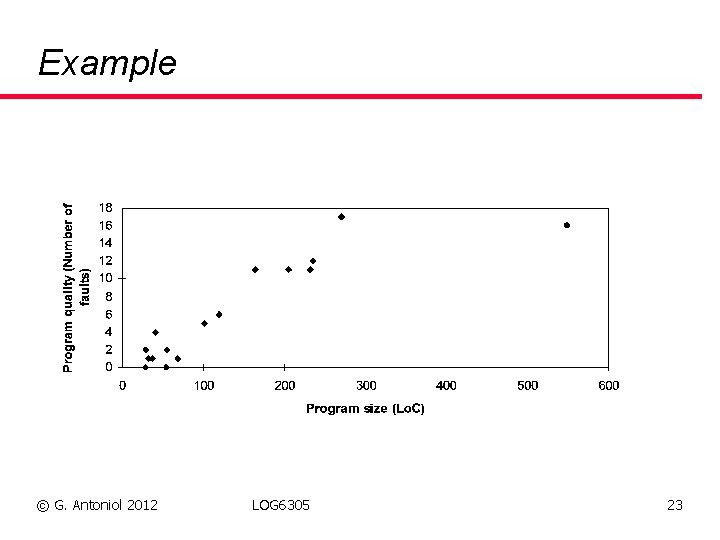

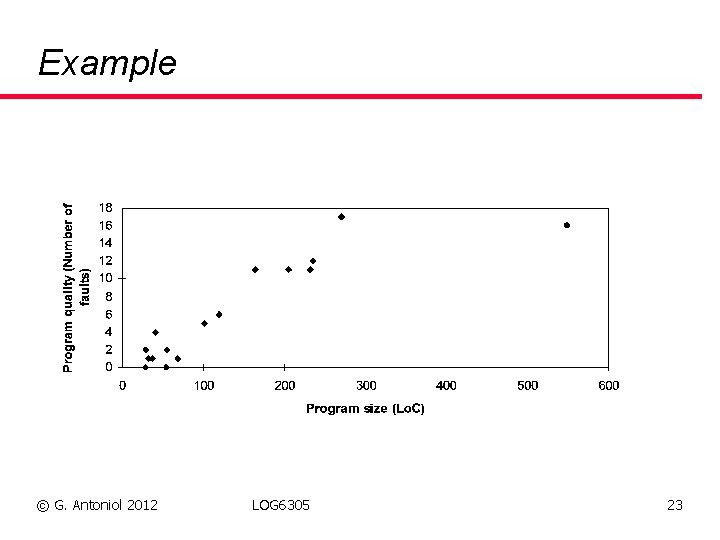

Compound Anomalies

- Some unusual components (i.e., anomalies) may be detected only if two (or more) different measurements are considered in combination
- E.g., a component with a small number of faults would only be considered unusual if it were relatively large
- Multi-dimensional methods, e.g., determination of multivariate outliers, or cluster analysis, which is a statistical technique that groups items according to their values in many dimensions
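The fault-count example can be made concrete with a small sketch. It is not one of the multi-dimensional methods named above; it only combines two measurements (size and fault count) into a fault density and applies a simple ratio rule. The records, the `ratio` threshold and the function names are all hypothetical.

```python
from statistics import median

# Hypothetical (component, size in LoC, faults found) records.
components = [
    ("A", 200, 4),
    ("B", 250, 5),
    ("C", 300, 6),
    ("D", 2500, 5),  # fault count looks ordinary, but the component is large
    ("E", 220, 5),
]


def density(size, faults):
    """Faults per 1000 lines of code."""
    return 1000.0 * faults / size


def compound_anomalies(records, ratio=0.25):
    """Flag components whose fault density is far below the median density."""
    densities = {name: density(size, faults) for name, size, faults in records}
    typical = median(densities.values())
    return [name for name, d in densities.items() if d < ratio * typical]


print(compound_anomalies(components))
```

Component D would not stand out on fault count alone (5 faults, like B and E); it is only flagged once its size is taken into account.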

Example

Multiple Causes for Anomalies

- Common characteristic of SW quality control
- If a module shows a low defect density, several causes are plausible:
  - Incomplete data
  - Module re-used from another product or component library
  - Extremely simple module
  - Module developed by a high-calibre member of staff
  - Module not properly tested

Measurement for Quality Control

- Defect counts, to identify fault-prone components
- Size and structure measures: many alternatives depending on the nature, form, and level of abstraction of the component
- Testing measures
- Expansion measures, e.g., from specifications to design, from design to code

Size and Structure Measures

Many Possible Measures

- Many possible size measures, from components to entire systems
- They depend on the level of abstraction and content of the product being measured
- Some structure measures describe the links between components and the extent to which they depend on or interact with each other, e.g., coupling between classes
- Structure and size measures are usually regarded as indicators of various quality characteristics

Module Information Flow

- We say a local direct flow exists if either
  - i) a module invokes a second module and passes information to it, or
  - ii) the invoked module returns a result to the caller.
- Similarly, we say that a local indirect flow exists if the invoked module returns information that is subsequently passed to a second invoked module.
- A global flow exists if information flows from one module to another via a global data structure.

Information Flow Measurement

- The fan-in of a module M is the number of local flows that terminate at M, plus the number of data structures from which information is retrieved by M.
- Similarly, the fan-out of a module M is the number of local flows that emanate from M, plus the number of data structures that are updated by M.

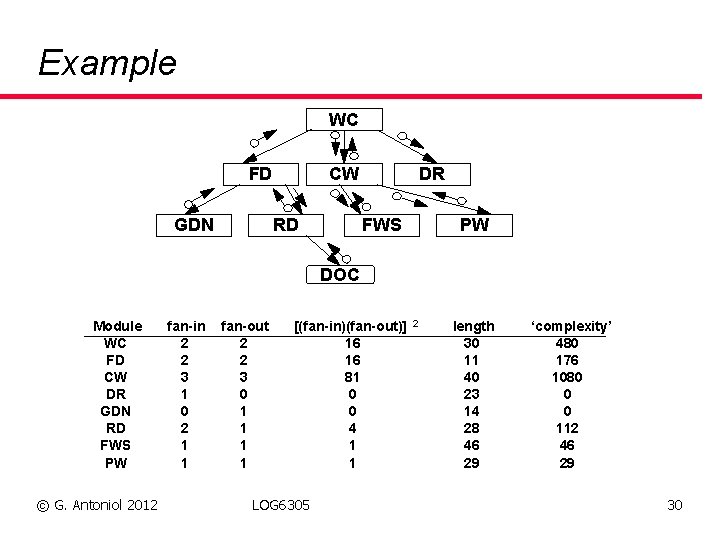

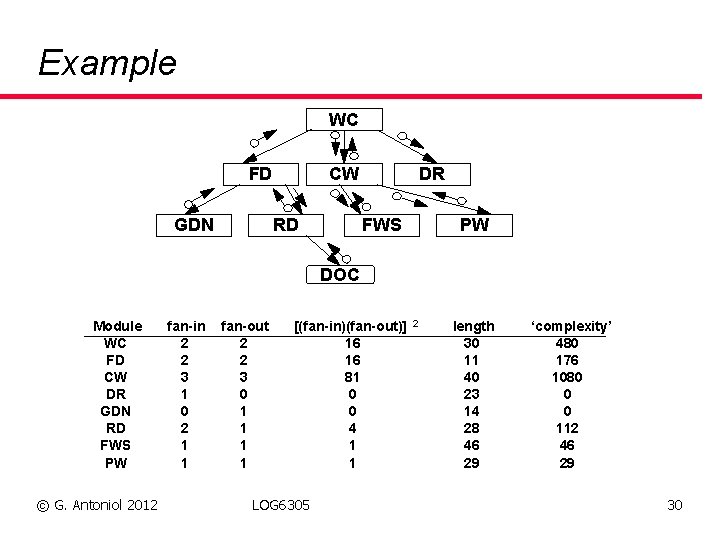

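Example

(Figure: information flows among WC, FD, GDN, CW, RD, DR, FWS, PW and DOC.)

| Module | fan-in | fan-out | [(fan-in)(fan-out)]² | length | 'complexity' |
|--------|--------|---------|----------------------|--------|--------------|
| WC     | 2      | 2       | 16                   | 30     | 480          |
| FD     | 2      | 2       | 16                   | 11     | 176          |
| CW     | 3      | 3       | 81                   | 40     | 3240         |
| DR     | 1      | 0       | 0                    | 23     | 0            |
| GDN    | 0      | 1       | 0                    | 14     | 0            |
| RD     | 2      | 1       | 4                    | 28     | 112          |
| FWS    | 1      | 1       | 1                    | 46     | 46           |
| PW     | 1      | 1       | 1                    | 29     | 29           |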
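The 'complexity' column in this example is consistent with multiplying the module length by the squared product of fan-in and fan-out (a measure usually attributed to Henry and Kafura). A minimal sketch, using four of the modules from the example; the function name is an assumption made for illustration:

```python
def information_flow_complexity(length, fan_in, fan_out):
    """Module length times the squared product of fan-in and fan-out."""
    return length * (fan_in * fan_out) ** 2


# (length, fan-in, fan-out) taken from the example for four modules.
modules = {
    "WC": (30, 2, 2),
    "FD": (11, 2, 2),
    "RD": (28, 2, 1),
    "DR": (23, 1, 0),
}

for name, (length, fan_in, fan_out) in modules.items():
    print(name, information_flow_complexity(length, fan_in, fan_out))
```

Note that a module with a fan-in or fan-out of zero, such as DR, always scores zero regardless of its length.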

Card and Glass Complexity Model

$$C = S + D$$

where

- $C$ = system complexity
- $S$ = structural (intermodule) complexity
- $D$ = data (intramodule) complexity

Structural Complexity

- Complexity increases as the square of connections between modules

$$S = \frac{\sum_{i} f^{2}(i)}{n}$$

where

- $f(i)$ = fan-out of module $i$
- $n$ = number of modules in the system

Data Complexity

- The more I/O variables, the more functionality
- The more fan-out, the more functionality is deferred to other modules

$$D(i) = \frac{V(i)}{f(i) + 1}$$

$$D = \frac{\sum_{i} D(i)}{n} \quad \text{(average data complexity)}$$

where

- $V(i)$ = I/O variables in module $i$
- $f(i)$ = fan-out of module $i$
- $n$ = number of modules in the system
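A short sketch of the Card and Glass model as read here: S is taken as the mean of the squared fan-outs, D as the mean of V(i) / (f(i) + 1), and C as their sum. The module data and the function name are hypothetical.

```python
def card_glass(modules):
    """modules: list of (fan_out, io_variables) pairs. Returns (S, D, C)."""
    n = len(modules)
    # Structural complexity: mean of squared fan-outs.
    s = sum(f * f for f, _ in modules) / n
    # Data complexity: mean of V(i) / (f(i) + 1).
    d = sum(v / (f + 1) for f, v in modules) / n
    return s, d, s + d


# Hypothetical system of four modules: (fan-out, number of I/O variables).
modules = [(2, 6), (0, 4), (1, 4), (3, 8)]
s, d, c = card_glass(modules)
print(f"S={s} D={d} C={c}")
```

The `+ 1` in the denominator keeps the data complexity defined for leaf modules whose fan-out is zero.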

Case Study

- 8 projects
- Correlation between system complexity and development defect rates = 0.83
- Defect rate = -5.2 + 0.4 × Complexity
- Quality prediction models can be useful
  - To assess whether testing has been effective and sufficient
  - To plan testing and defect correction resources

Unusual Components

- Unusual size and/or structure values
  - May be difficult to implement and therefore error-prone
  - Difficult to test and likely to contain residual errors at the end of testing
  - Difficult to understand and therefore to maintain
- All these hypotheses need to be empirically validated

Testing Measures

Types of Testing Measures

- Measures related to software test items, testing activities, and test results
- Goal: control SW quality through controlling SW testing
- 3 types of measures:
  - Testability, used as "ease of testing" here
  - Test control
  - Test results

Testability Measures

- Integration testing: testability may be based on module linkage metrics such as fan-in, fan-out, and counts of reads and writes to common data items.
- Unit testing: testability metrics include metrics based on module control flow, such as McCabe's cyclomatic number, which identifies the number of independent control flow paths.
- Thus, testability metrics are usually size or structure measures used for the purpose of assessing ease of testing.

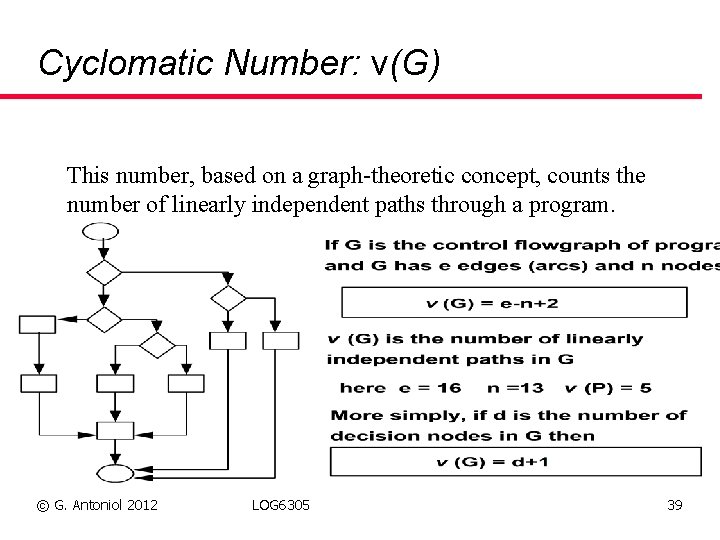

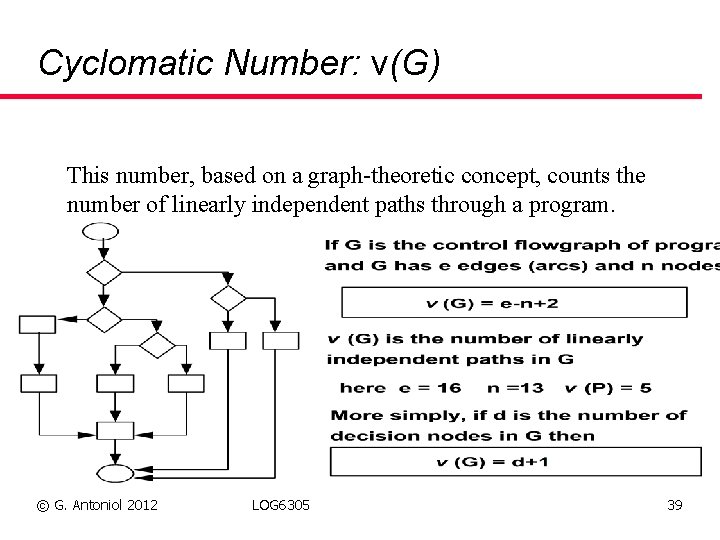

Cyclomatic Number: v(G)

This number, based on a graph-theoretic concept, counts the number of linearly independent paths through a program.
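For a control flow graph with E edges, N nodes and P connected components, the cyclomatic number is commonly computed as v(G) = E - N + 2P. The sketch below applies that formula to a hypothetical control flow graph (an if/else followed by a loop); the graph and the function name are illustrative assumptions.

```python
def cyclomatic_number(edges, components=1):
    """v(G) = E - N + 2P for a control flow graph given as (src, dst) edges."""
    nodes = {node for edge in edges for node in edge}
    return len(edges) - len(nodes) + 2 * components


# Hypothetical control flow graph: an if/else followed by a while loop.
edges = [
    ("entry", "if"),
    ("if", "then"),
    ("if", "else"),
    ("then", "loop"),
    ("else", "loop"),
    ("loop", "body"),
    ("body", "loop"),
    ("loop", "exit"),
]

print(cyclomatic_number(edges))
```

With 8 edges and 7 nodes the result is 3, which matches the two decision points (the `if` and the loop condition) plus one.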

Applications for Testability

- To identify potentially untestable (or difficult to test) components at an early stage in the development process. This provides the opportunity to redesign components and/or provide additional testing effort.
- To assess the feasibility of test specifications in terms of the provision of adequate test cases and appropriate test completion criteria.
- To estimate the effort and schedule of test activities. This assumes that predictive models are available to relate testability metrics to testing effort and duration.

Test Control Measures

1. Test resource metrics: measures of effort, timescales, and staffing levels, and other cost items such as tool purchase, special test equipment, training, etc.
2. Test coverage metrics: they measure the amount of the product that has been tested at different levels of granularity.
3. Test plan metrics: they indicate the amount of testing to be performed.

Test Result Measures

- Test result metrics are usually based on fault counts
- Such counts can be related to the test item and the test activity in terms of fault rates (e.g., faults per 100 LoC and faults found per 100 testing hours, respectively).
- Fault counts can also be made in terms of various fault categories (see Orthogonal Defect Classification later).
- Fault rates are often used to identify pass and fail criteria for test items

Summary

- The production of software products needs the control mechanisms that exist in other industries.
- The major problem is that although our measures can identify deviations from plans and anomalous components, they cannot usually identify the root cause(s).
- You can make constructive use of software measures only if you understand the context in which your products are developed, including the capabilities of your development staff.

Orthogonal Defect Classification (ODC)

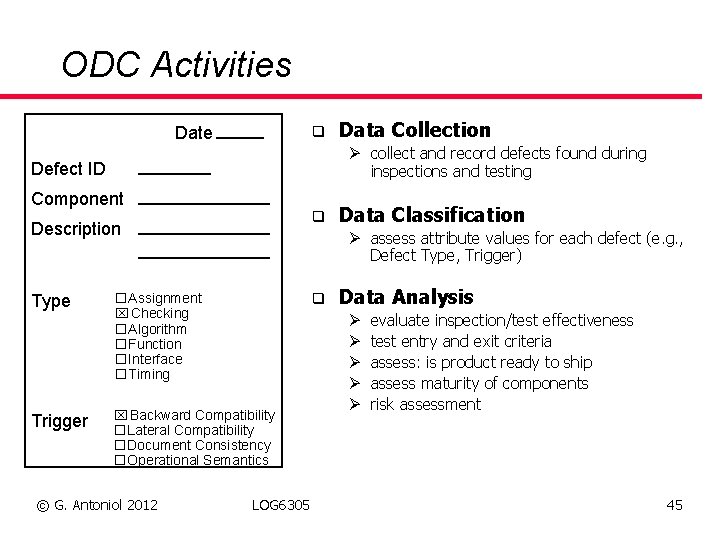

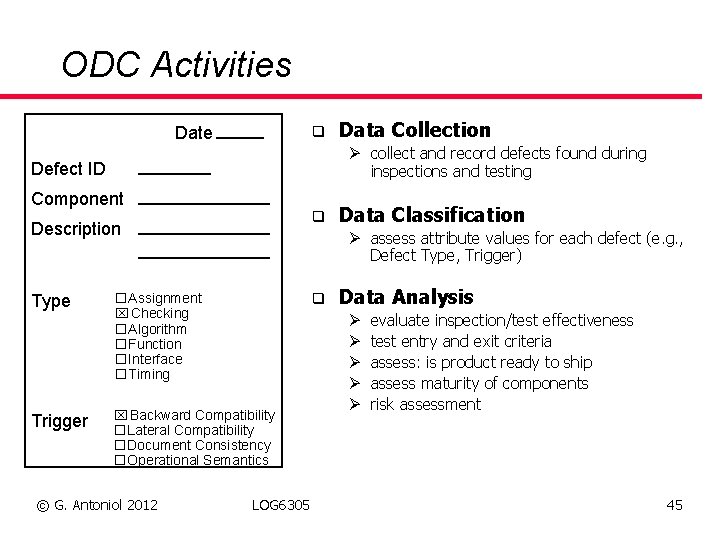

ODC Activities

- Data Collection
  - collect and record defects found during inspections and testing
- Data Classification
  - assess attribute values for each defect (e.g., Defect Type, Trigger)
- Data Analysis
  - evaluate inspection/test effectiveness
  - test entry and exit criteria
  - assess: is the product ready to ship?
  - assess maturity of components
  - risk assessment

(Figure: sample defect record with the fields Date, Defect ID, Component, Description, Type and Trigger. Type options: Assignment, Checking (selected), Algorithm, Function, Interface, Timing. Trigger options: Backward Compatibility (selected), Lateral Compatibility, Document Consistency, Operational Semantics.)

Overview

- Data Classification: the ODC classification scheme
- Data Analysis: how to evaluate one- and two-dimensional distributions
- ODC attribute Defect Type
  - Theory
  - Example
- ODC attribute Trigger
  - Theory
  - Example
- Technology transfer issues

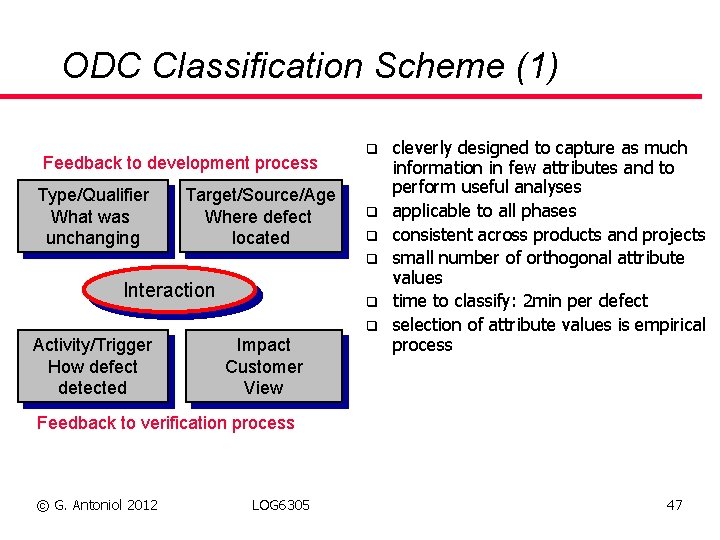

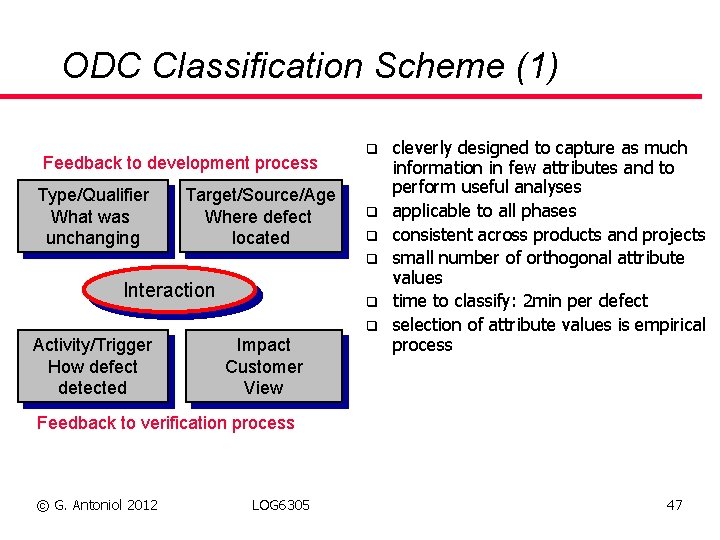

ODC Classification Scheme (1)

(Figure: interacting attribute groups. Feedback to development process: Type/Qualifier (what was unchanging) and Target/Source/Age (where the defect is located). Feedback to verification process: Activity/Trigger (how the defect was detected) and Impact (customer view).)

- cleverly designed to capture as much information as possible in few attributes and to perform useful analyses
- applicable to all phases
- consistent across products and projects
- small number of orthogonal attribute values
- time to classify: 2 min per defect
- selection of attribute values is an empirical process

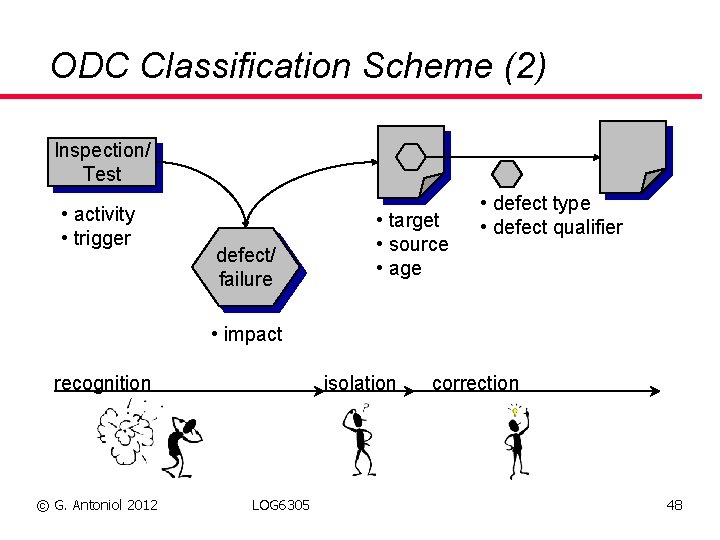

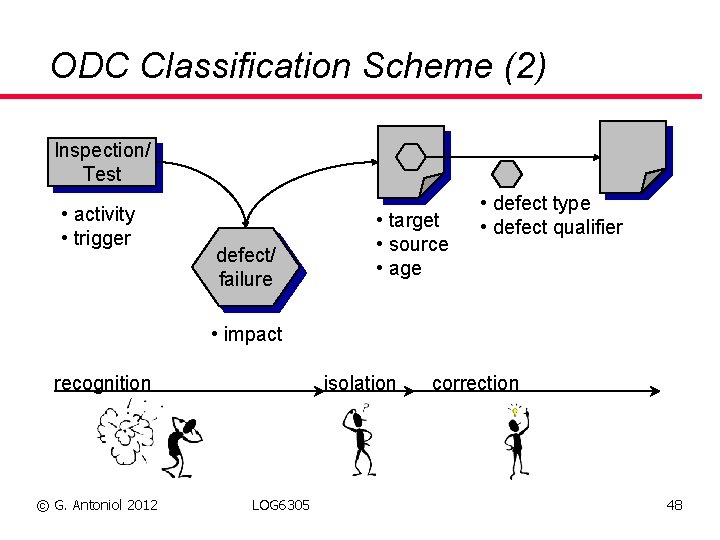

ODC Classification Scheme (2)

(Figure: a defect or failure found by inspection/test passes through recognition, isolation and correction; the attributes shown along the way are activity, trigger, target, source, age, defect type, defect qualifier and impact.)

ODC Classification Scheme (3)

- Activity: When did you detect the defect?
  - design inspection, code inspection, unit test, integration test, system test
- Trigger: How did you detect the defect? What condition had to exist for the defect to surface? How to reproduce the defect?
  - For each activity there exists a different set of categories
  - Inspection trigger: design conformance, logic/flow, lateral compatibility, backward compatibility, language dependency, concurrency, side effects, rare situation
- Impact: What would the customer have noticed if the defect had escaped into the field?
  - installability, serviceability, standard, integrity/security, migration, reliability, performance

ODC Classification Scheme (4)

- Target: What high-level entity was fixed?
  - requirements, design, code
- Source: Who developed the target?
  - in-house, library, out-sourced, ported
- Age: What is the history of the target?
  - base, new, rewritten, re-fixed
- Defect Type: What had to be fixed?
  - assignment, checking, algorithm, function, timing, interface, relationship
- Defect Qualifier
  - missing, incorrect, extraneous

ODC Classification Scheme (5)

- There exists a semantic classification of defects, such that its distribution, as a function of process activities, is expected to change as the product advances through the process
  - classify according to the "meaning" of what is done => classification independent of product, process, activity
- The set of all values of defect attributes must form a spanning set over the process subspace
  - all interesting topics can be investigated
  - requires experience and many pilot projects to determine the appropriate values for an attribute
  - small, orthogonal => easy classification

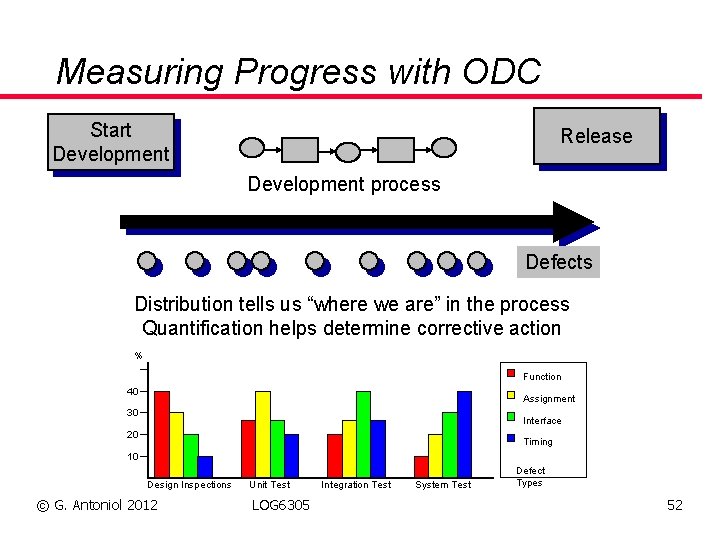

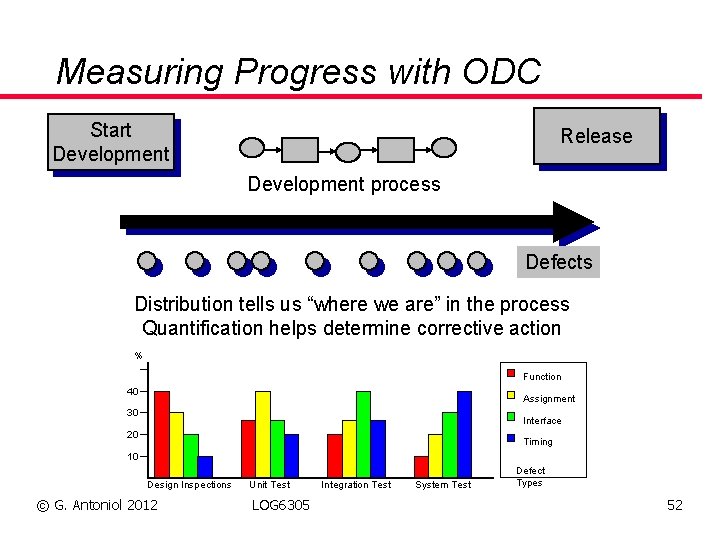

Measuring Progress with ODC

- Distribution tells us "where we are" in the process
- Quantification helps determine corrective action

(Figure: development process from start of development to release, with the percentage of defects per defect type (Function, Assignment, Interface, Timing) shown for design inspections, unit test, integration test and system test.)

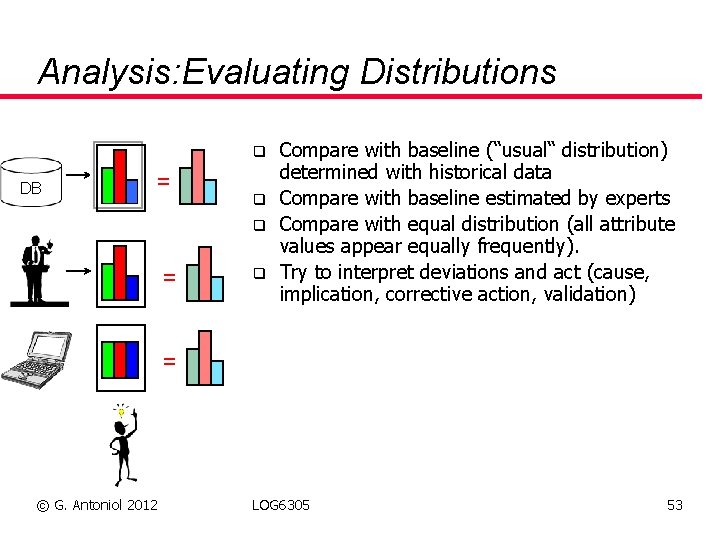

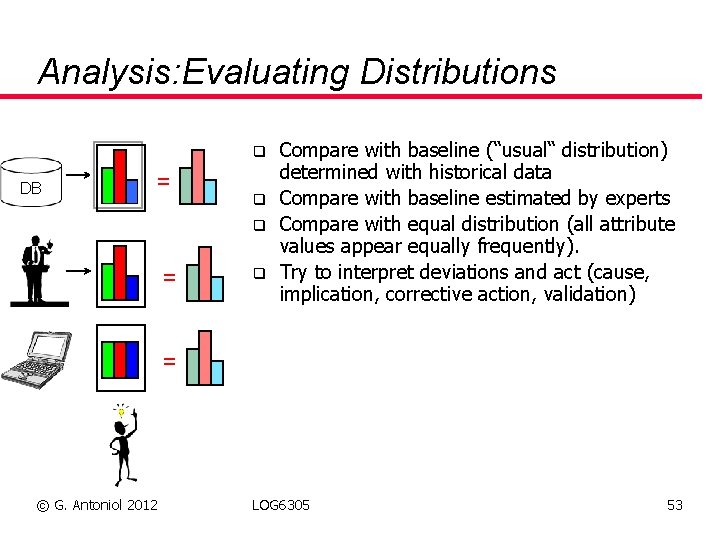

Analysis: Evaluating Distributions

- Compare with a baseline ("usual" distribution) determined from historical data
- Compare with a baseline estimated by experts
- Compare with an equal distribution (all attribute values appear equally frequently)
- Try to interpret deviations and act (cause, implication, corrective action, validation)

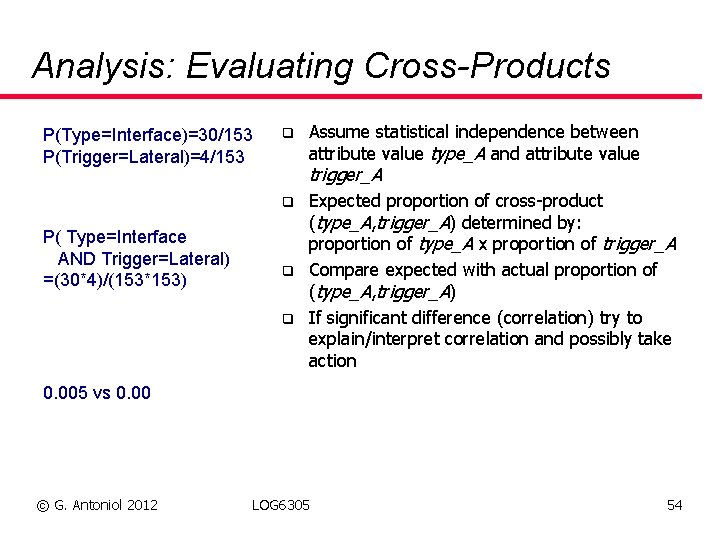

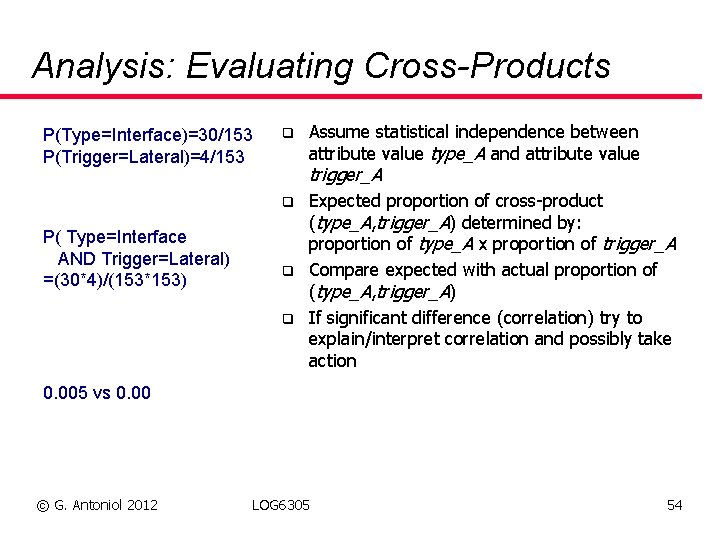

Analysis: Evaluating Cross-Products

- Assume statistical independence between attribute value type_A and attribute value trigger_A
- The expected proportion of the cross-product (type_A, trigger_A) is determined by: proportion of type_A × proportion of trigger_A
- Compare the expected with the actual proportion of (type_A, trigger_A)
- If there is a significant difference (correlation), try to explain/interpret the correlation and possibly take action

Example:

$$P(\text{Type}=\text{Interface}) = \frac{30}{153}, \qquad P(\text{Trigger}=\text{Lateral}) = \frac{4}{153}$$

$$P(\text{Type}=\text{Interface} \wedge \text{Trigger}=\text{Lateral}) = \frac{30 \times 4}{153 \times 153} \approx 0.005$$

Expected 0.005 vs. actual 0.00.
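The expected-versus-actual comparison can be sketched as follows, using the counts from the slide (30 Interface defects and 4 Lateral Compatibility triggers among 153 defects). The actual joint count of 0 is an assumption inferred from the reported actual proportion of 0.00; the function name is hypothetical.

```python
def expected_cross_proportion(count_a, count_b, total):
    """Expected joint proportion if the two attribute values are independent."""
    return (count_a / total) * (count_b / total)


total = 153
interface = 30   # defects with Type = Interface
lateral = 4      # defects with Trigger = Lateral Compatibility
actual_both = 0  # assumed: the slide reports an actual proportion of 0.00

expected = expected_cross_proportion(interface, lateral, total)
actual = actual_both / total
print(f"expected={expected:.3f} actual={actual:.2f}")
print(f"expected number of defects: {expected * total:.2f}")
```

With so few defects expected in the cell (fewer than one), a deviation of this size is weak evidence on its own; whether a difference is significant would need a proper statistical test on the full cross-table.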

ODC-Attribute Defect Type

- The developer fixing the defect determines the defect type according to the nature of the change
- Each defect type can be associated with a detection activity in which it should be detected => a unique 'signature' for each detection activity
- Attribute values
  - Function: requires formal design change
  - Assignment: few LoC changed (e.g., initialization)
  - Interface: interaction with other components, modules
  - Algorithm: errors with respect to efficiency, correctness problems
  - Checking: no proper validation of data, loop conditions
  - Timing/Serialization: problem with shared resources
  - Relationship: associations among procedures, data structures

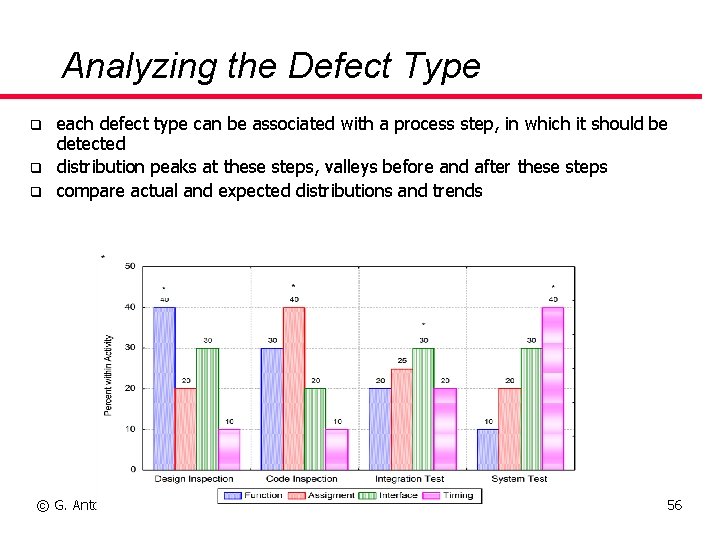

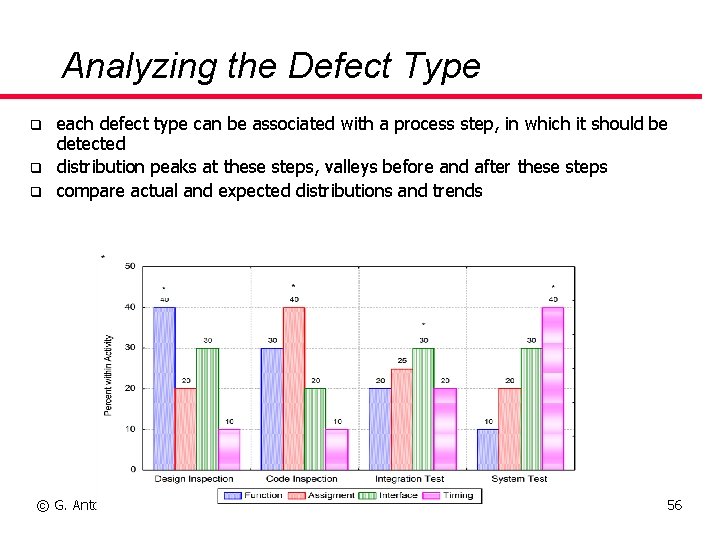

Analyzing the Defect Type

- each defect type can be associated with a process step in which it should be detected
- the distribution peaks at these steps, with valleys before and after these steps
- compare actual and expected distributions and trends

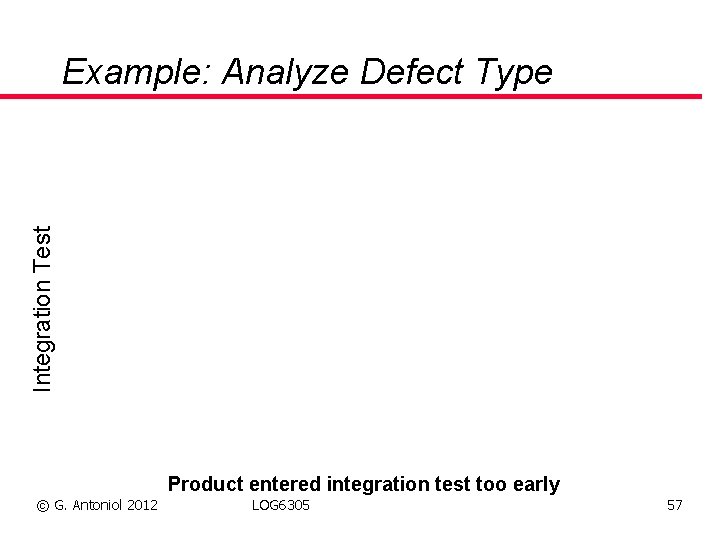

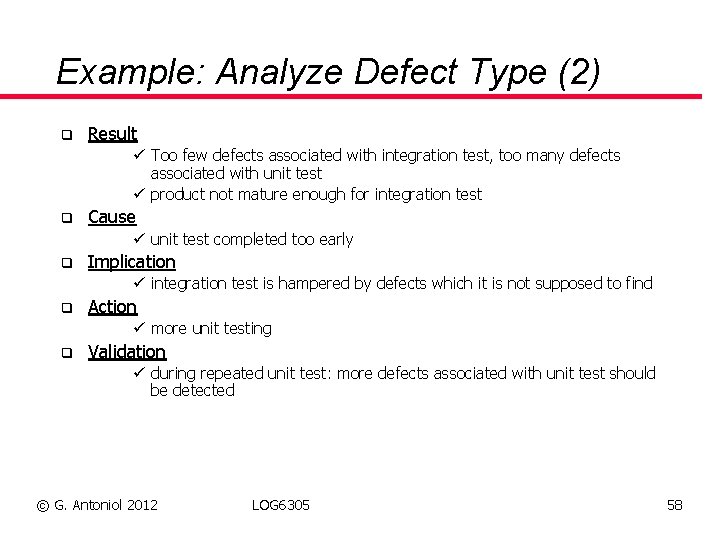

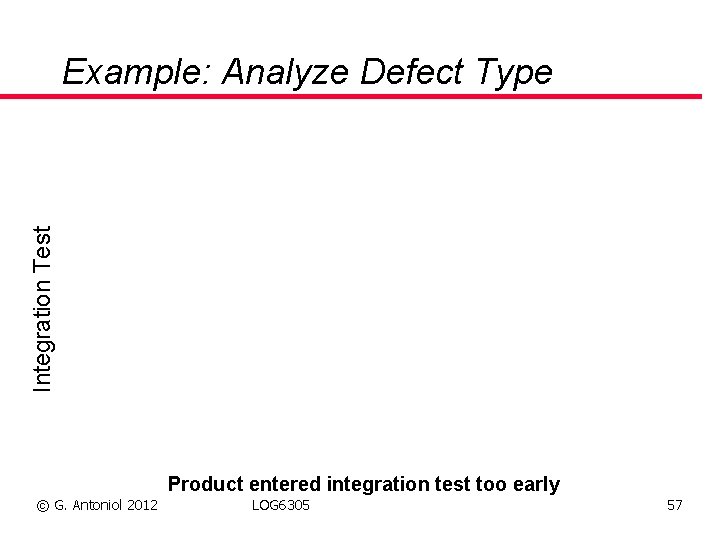

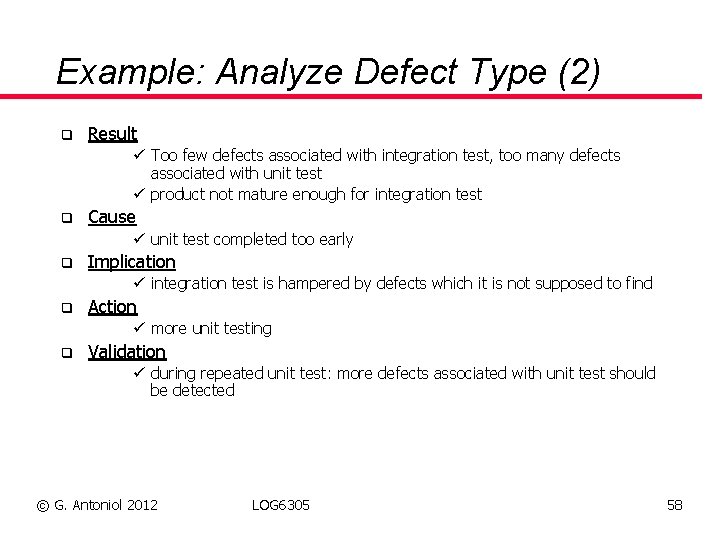

Example: Analyze Defect Type (Integration Test)

Product entered integration test too early.

Example: Analyze Defect Type (2)

- Result
  - Too few defects associated with integration test, too many defects associated with unit test
  - product not mature enough for integration test
- Cause
  - unit test completed too early
- Implication
  - integration test is hampered by defects which it is not supposed to find
- Action
  - more unit testing
- Validation
  - during repeated unit test: more defects associated with unit test should be detected

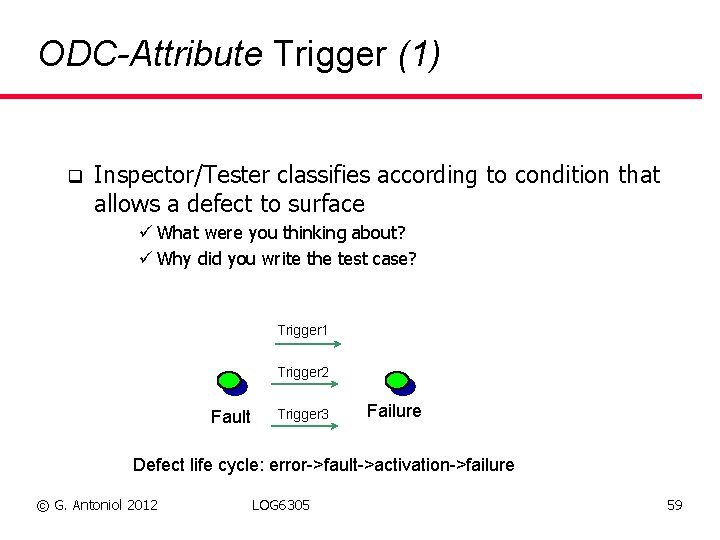

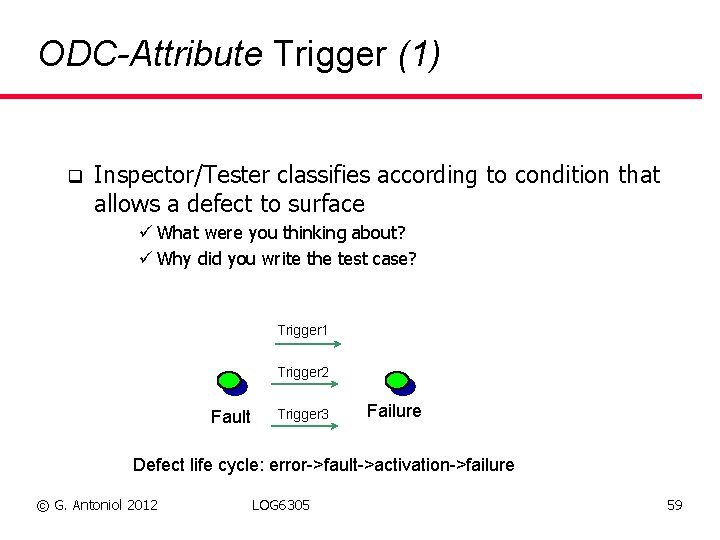

ODC-Attribute Trigger (1)

- Inspector/tester classifies according to the condition that allows a defect to surface
  - What were you thinking about?
  - Why did you write the test case?

(Figure: triggers 1, 2 and 3 acting on a fault and leading to a failure.)

Defect life cycle: error -> fault -> activation -> failure

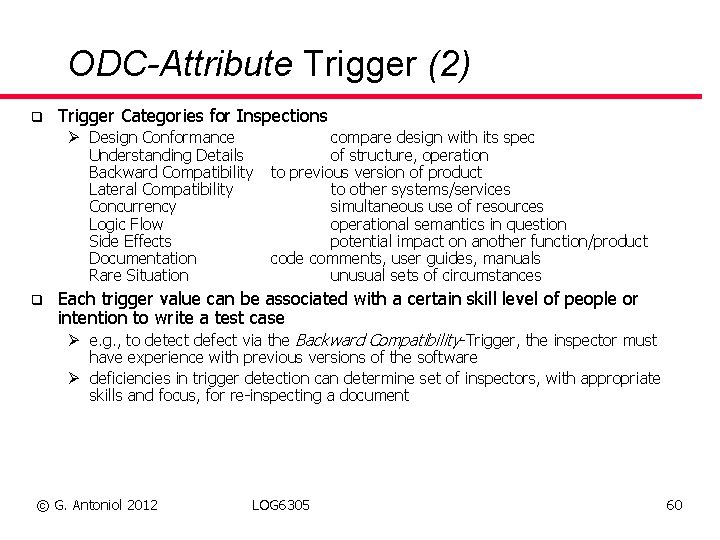

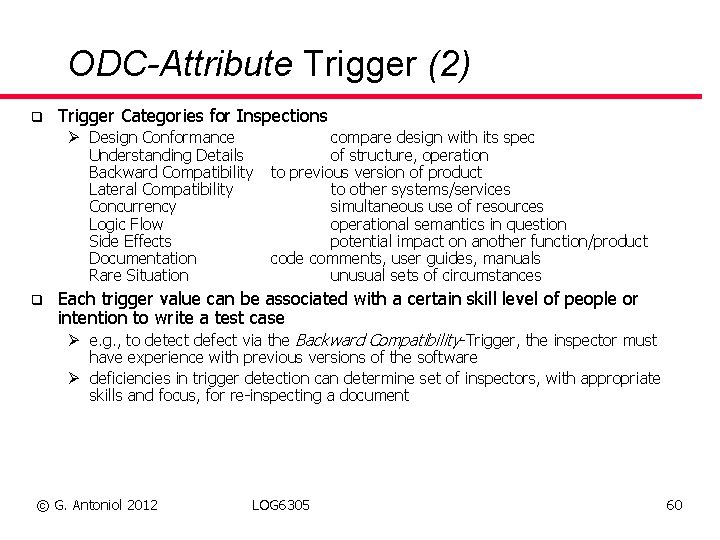

ODC-Attribute Trigger (2)

- Trigger categories for inspections
  - Design Conformance: compare design with its spec
  - Understanding Details: of structure, operation
  - Backward Compatibility: to previous version of product
  - Lateral Compatibility: to other systems/services
  - Concurrency: simultaneous use of resources
  - Logic Flow: operational semantics in question
  - Side Effects: potential impact on another function/product
  - Documentation: code comments, user guides, manuals
  - Rare Situation: unusual sets of circumstances
- Each trigger value can be associated with a certain skill level of people or intention to write a test case
  - e.g., to detect a defect via the Backward Compatibility trigger, the inspector must have experience with previous versions of the software
  - deficiencies in trigger detection can determine the set of inspectors, with appropriate skills and focus, for re-inspecting a document

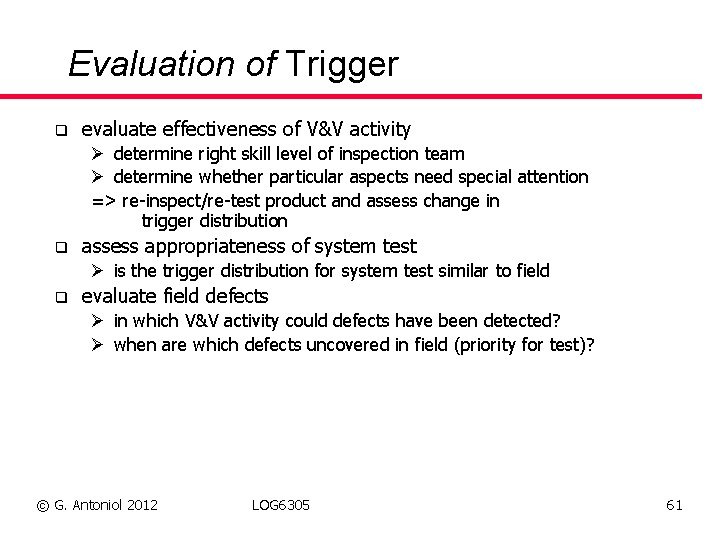

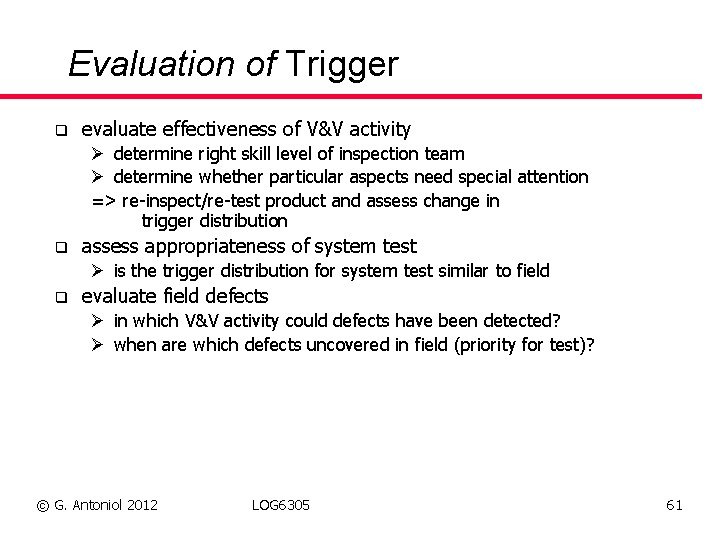

Evaluation of Trigger

- evaluate effectiveness of V&V activity
  - determine the right skill level of the inspection team
  - determine whether particular aspects need special attention => re-inspect/re-test the product and assess the change in trigger distribution
- assess appropriateness of system test
  - is the trigger distribution for system test similar to that of the field?
- evaluate field defects
  - in which V&V activity could defects have been detected?
  - when are which defects uncovered in the field (priority for test)?

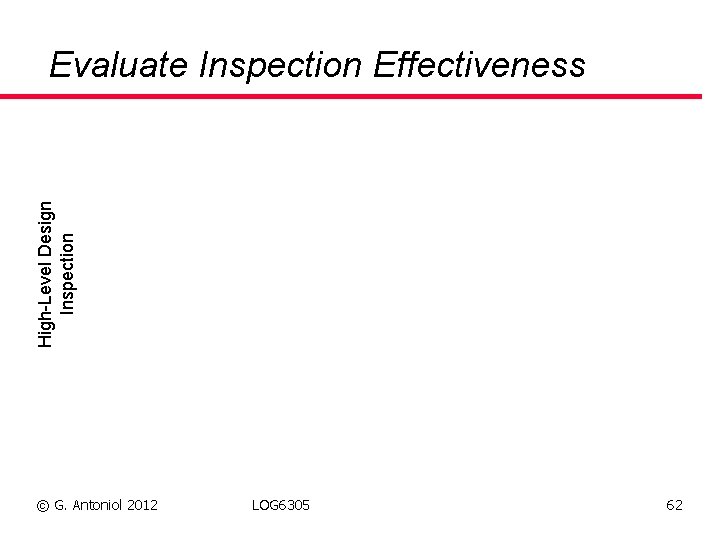

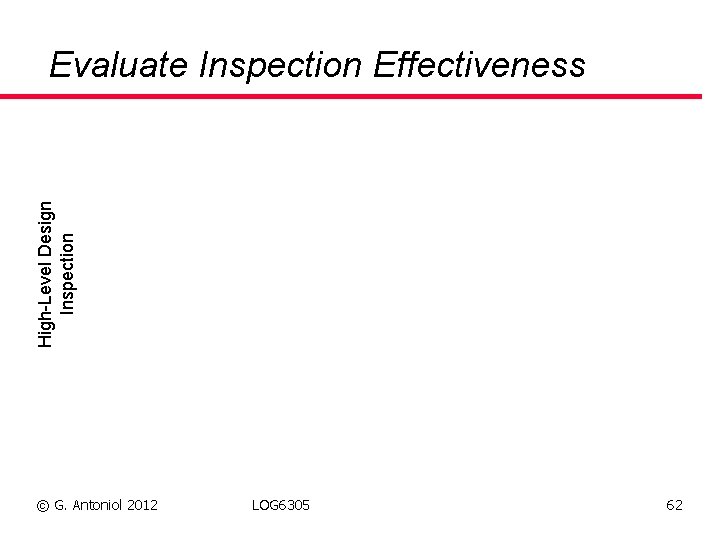

Evaluate Inspection Effectiveness: High-Level Design Inspection

Evaluate Inspection Effectiveness (2)

- Result
  - Too few defects associated with lateral compatibility and interface
- Cause
  - inspection team consisted mainly of inexperienced inspectors, thus compatibility issues were not considered adequately
- Implication
  - many crucial defects still remain, causing existing customer applications to fail
- Action
  - re-inspect with more experienced inspectors concentrating on deficiencies
- Validation
  - see next slide

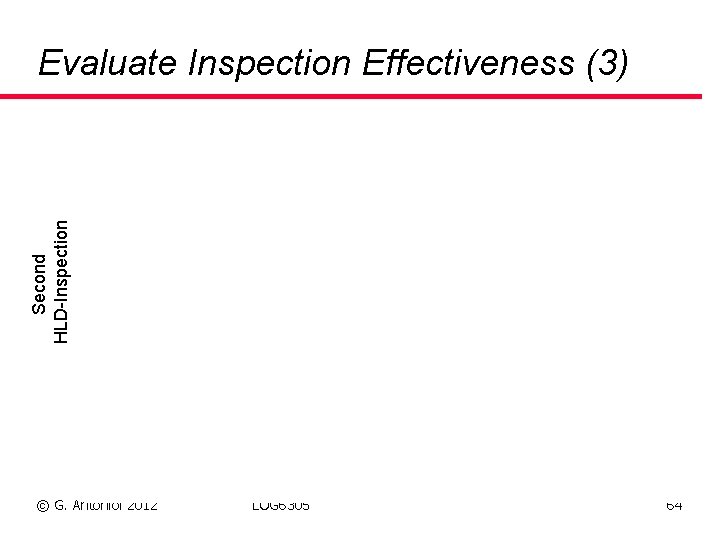

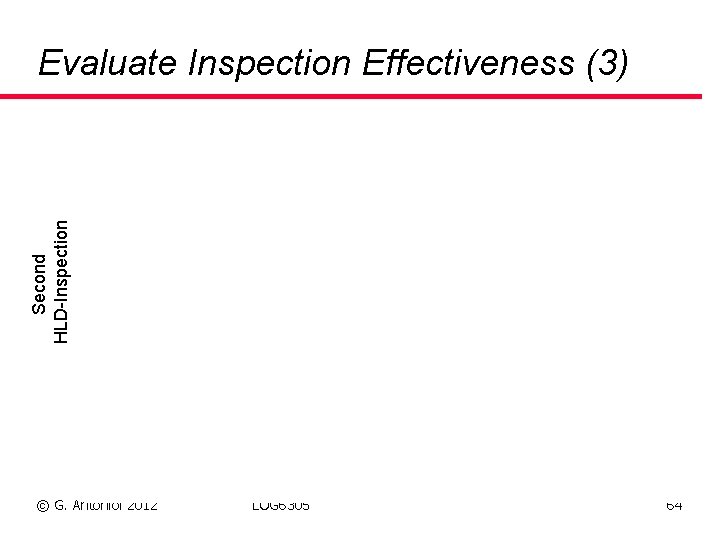

Evaluate Inspection Effectiveness (3): Second HLD Inspection

Unit Test Triggers

- Simple Path: the test case that found the defect was executing a simple code path related to a single function.
- Complex Path: the test case that found the defect was executing some contrived combinations of code paths related to multiple functions.

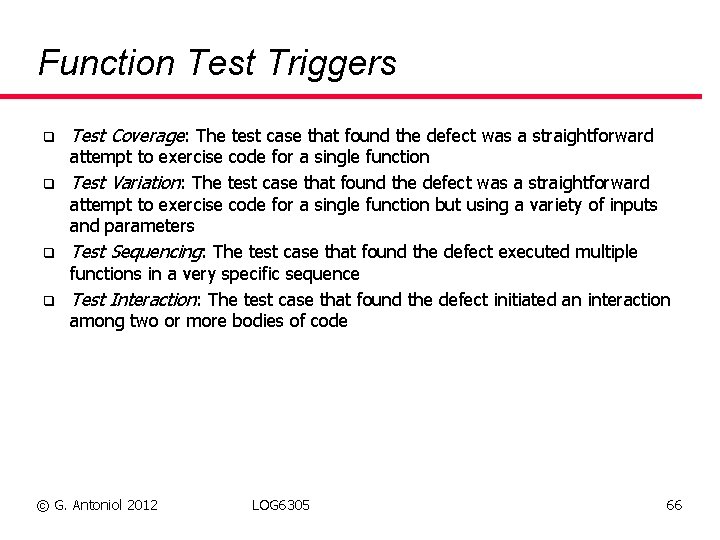

Function Test Triggers

- Test Coverage: the test case that found the defect was a straightforward attempt to exercise code for a single function.
- Test Variation: the test case that found the defect was a straightforward attempt to exercise code for a single function, but using a variety of inputs and parameters.
- Test Sequencing: the test case that found the defect executed multiple functions in a very specific sequence.
- Test Interaction: the test case that found the defect initiated an interaction among two or more bodies of code.

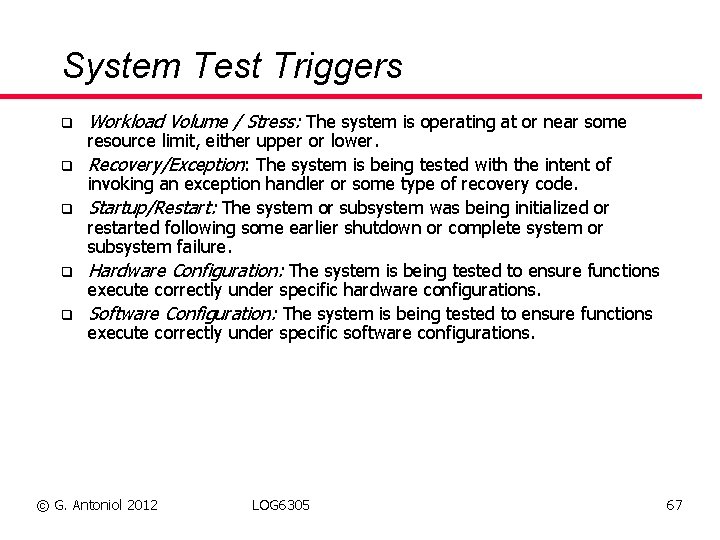

System Test Triggers

- Workload Volume/Stress: the system is operating at or near some resource limit, either upper or lower.
- Recovery/Exception: the system is being tested with the intent of invoking an exception handler or some type of recovery code.
- Startup/Restart: the system or subsystem was being initialized or restarted following some earlier shutdown or complete system or subsystem failure.
- Hardware Configuration: the system is being tested to ensure functions execute correctly under specific hardware configurations.
- Software Configuration: the system is being tested to ensure functions execute correctly under specific software configurations.

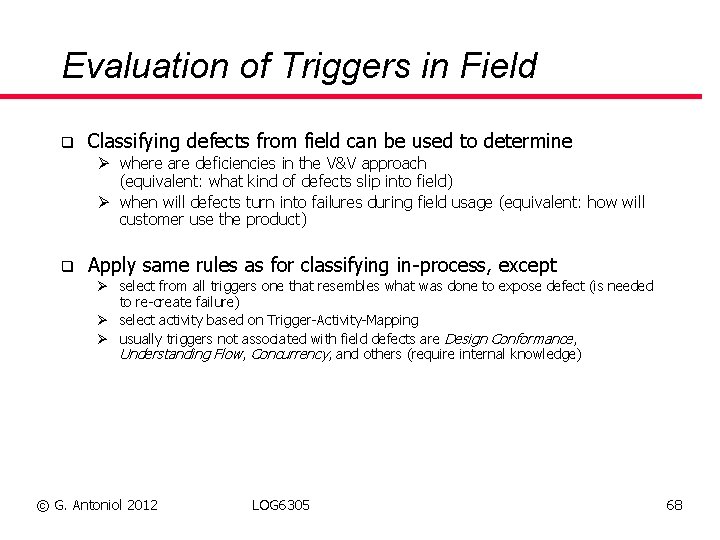

Evaluation of Triggers in Field

- Classifying defects from the field can be used to determine
  - where the deficiencies in the V&V approach are (equivalent: what kind of defects slip into the field)
  - when defects will turn into failures during field usage (equivalent: how the customer will use the product)
- Apply the same rules as for classifying in-process, except
  - select from all triggers the one that resembles what was done to expose the defect (it is needed to re-create the failure)
  - select the activity based on the trigger-activity mapping
  - triggers usually not associated with field defects are Design Conformance, Understanding Flow, Concurrency, and others (they require internal knowledge)

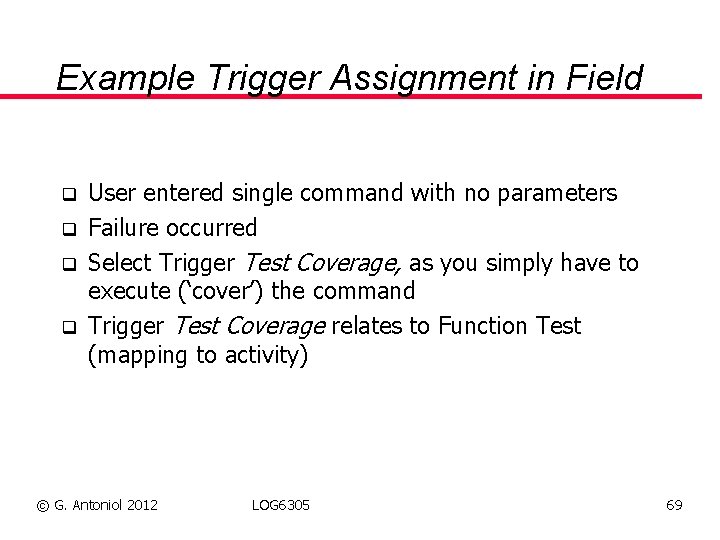

Example Trigger Assignment in Field

- User entered a single command with no parameters
- Failure occurred
- Select trigger Test Coverage, as you simply have to execute ('cover') the command
- Trigger Test Coverage relates to Function Test (mapping to activity)

Field Triggers & Process Escapes

- By analyzing the distribution of activities that let defects slip (created from the trigger-activity mapping), priorities for process improvement can be determined
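A sketch of how the escape distribution could be derived. The mapping below only covers the unit, function and system test triggers listed in the earlier slides, and the field defect data is hypothetical; a real mapping would come from the project's own trigger-activity table.

```python
from collections import Counter

# Trigger-to-activity mapping, following the trigger lists given per test activity.
TRIGGER_TO_ACTIVITY = {
    "Simple Path": "Unit Test",
    "Complex Path": "Unit Test",
    "Test Coverage": "Function Test",
    "Test Variation": "Function Test",
    "Test Sequencing": "Function Test",
    "Test Interaction": "Function Test",
    "Workload Volume/Stress": "System Test",
    "Recovery/Exception": "System Test",
    "Startup/Restart": "System Test",
    "Hardware Configuration": "System Test",
    "Software Configuration": "System Test",
}


def escapes_by_activity(field_triggers):
    """Count field defects per activity that should have caught them."""
    return Counter(TRIGGER_TO_ACTIVITY[t] for t in field_triggers)


# Hypothetical triggers assigned to defects reported from the field.
field_triggers = [
    "Test Coverage",
    "Test Variation",
    "Workload Volume/Stress",
    "Test Coverage",
    "Simple Path",
]

ranking = escapes_by_activity(field_triggers).most_common()
print(ranking)
```

In this made-up data set most escapes map to Function Test, so that activity would be the first candidate for process improvement.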

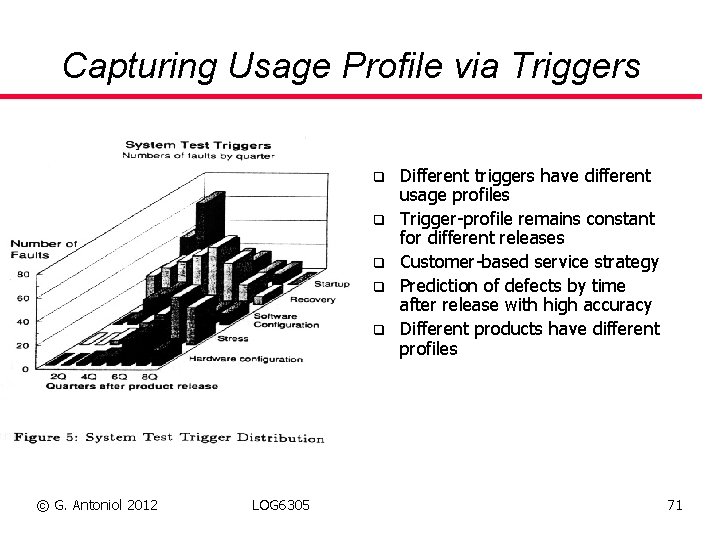

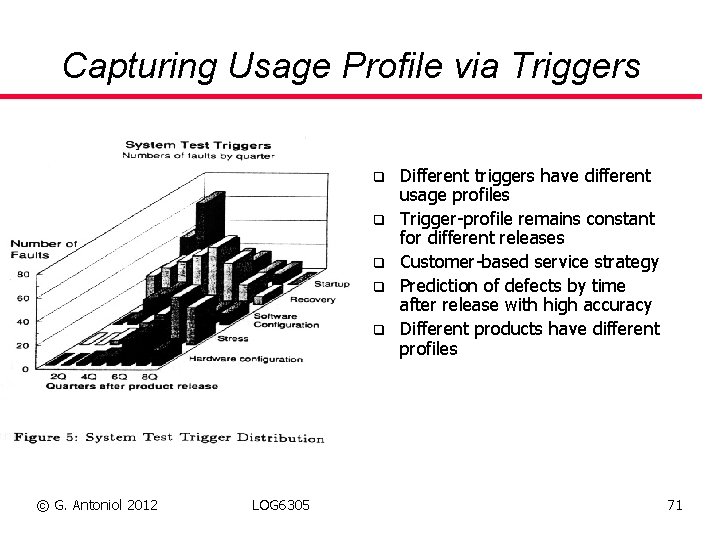

Capturing Usage Profile via Triggers

- Different triggers have different usage profiles
- Trigger profile remains constant for different releases
  - Customer-based service strategy
  - Prediction of defects by time after release with high accuracy
- Different products have different profiles

Technology-Transfer Issues

- Presenting technology
  - Presenting to developers AND managers is important
- Tool support
  - classification itself is necessary but tedious, thus provide easy-to-use, quick, simple tools
  - it might be possible to integrate it into existing defect tracking tools
  - highly motivated people do not necessarily need tools: they help themselves with paper forms
- Technology transfer is two-way transfer
  - Initially: look at trends ("function decreases") (goal oriented)
  - Then: use data exploration techniques to find interesting patterns

Summary

- In-process quality control mechanisms
- Post-mortem assessment of V&V activities
- Complementary to reliability models

But

- Human intensive and dependent
- Specific classification schemes and heuristics have to be devised