Software Assurance Metrics and Tool Evaluation

- Slides: 22

Software Assurance Metrics and Tool Evaluation

Paul E. Black
National Institute of Standards and Technology
http://www.nist.gov/
paul.black@nist.gov

What is NIST?

- National Institute of Standards and Technology
- A non-regulatory agency in Dept. of Commerce
- 3,000 employees + adjuncts
- Gaithersburg, Maryland; Boulder, Colorado
- Primarily research, not funding
- Over a century of experience in standards and measurement: from dental ceramics to microspheres, from quantum computers to building codes

9/25/2020 Paul E. Black 2

What is Software Assurance?

- "… the planned and systematic set of activities that ensures that software processes and products conform to requirements, standards, and procedures." (from the NASA Software Assurance Guidebook and Standard)

to help achieve

- Trustworthiness: No vulnerabilities exist, either of malicious or unintentional origin
- Predictable Execution: Justifiable confidence that the software functions as intended

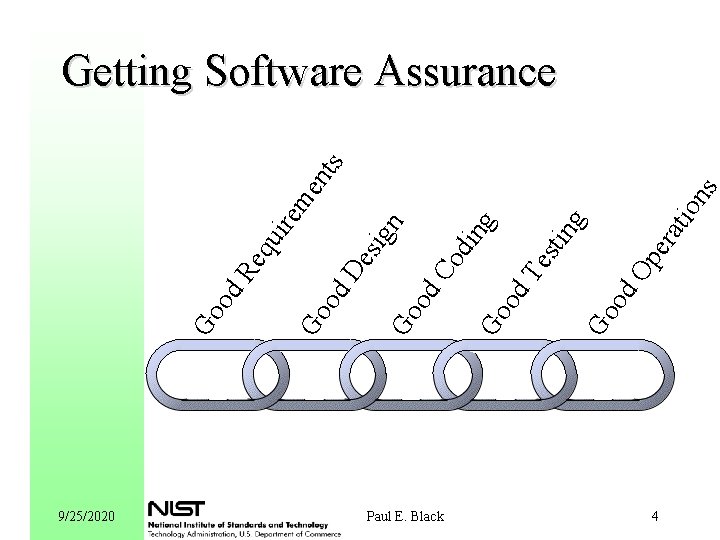

Getting Software Assurance

- Good Requirements
- Good Design
- Good Coding
- Good Testing
- Good Operations

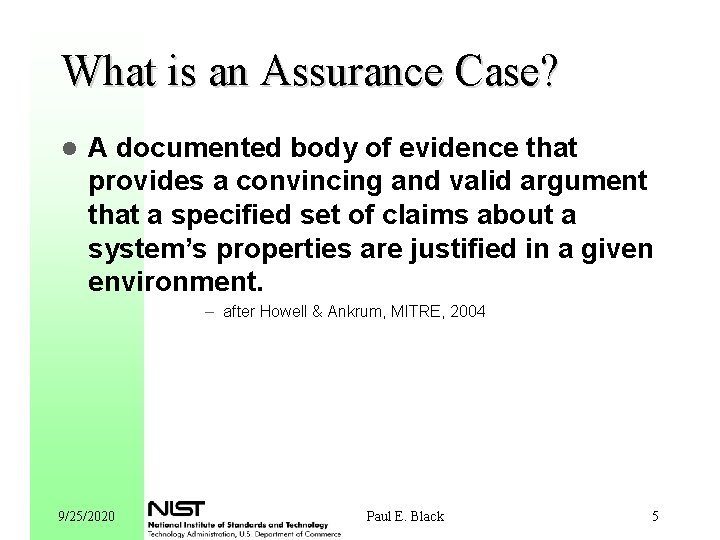

What is an Assurance Case?

- A documented body of evidence that provides a convincing and valid argument that a specified set of claims about a system’s properties are justified in a given environment. (after Howell & Ankrum, MITRE, 2004)

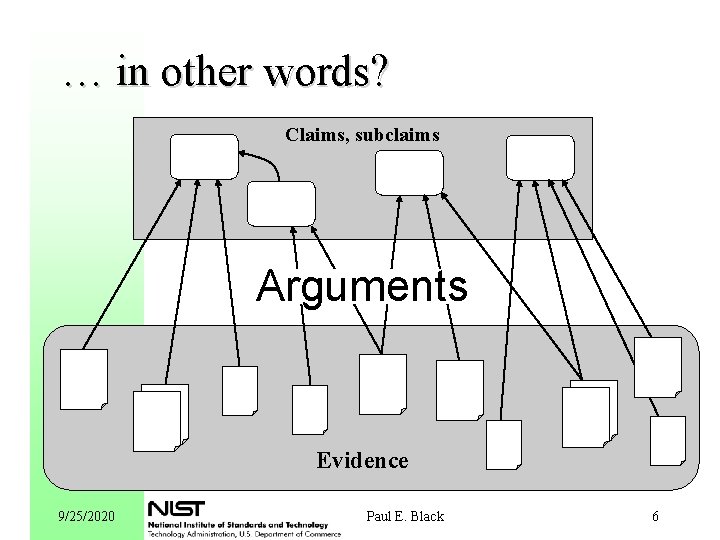

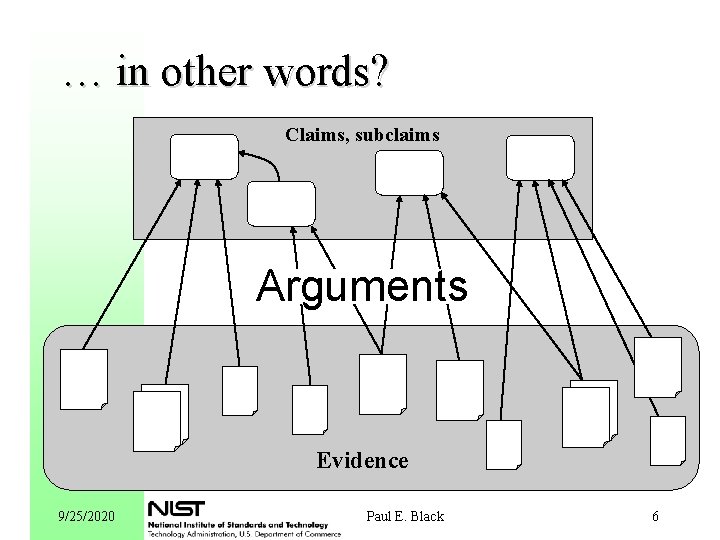

… in other words?

- Claims, subclaims
- Arguments
- Evidence
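The claims/arguments/evidence structure can be pictured as a tree. The following is a minimal sketch, not a standard assurance-case notation: the class names `Claim` and `Evidence`, the rule that a leaf claim needs at least one piece of evidence, and the example parser claims are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Evidence:
    source: str        # hypothetical label for the tool or activity that produced it
    description: str


@dataclass
class Claim:
    statement: str
    argument: str = ""  # why the subclaims or evidence justify this claim
    subclaims: List["Claim"] = field(default_factory=list)
    evidence: List[Evidence] = field(default_factory=list)

    def is_supported(self) -> bool:
        """Assumed rule: a claim with subclaims holds if all of them hold;
        a leaf claim holds if it has at least one piece of evidence."""
        if self.subclaims:
            return all(c.is_supported() for c in self.subclaims)
        return bool(self.evidence)

    def unsupported(self) -> List[str]:
        """Statements of leaf claims that have no evidence yet."""
        if not self.subclaims:
            return [] if self.evidence else [self.statement]
        gaps: List[str] = []
        for c in self.subclaims:
            gaps.extend(c.unsupported())
        return gaps


# Illustrative (made-up) assurance case with one gap.
case = Claim(
    "The parser has no buffer overflows",
    argument="Static analysis and fuzzing together cover the input-handling code",
    subclaims=[
        Claim("Static analyzer reports no overflow warnings",
              evidence=[Evidence("source code analyzer", "scan report")]),
        Claim("Fuzzing finds no memory errors"),  # no evidence attached yet
    ],
)

print(case.is_supported())  # False
print(case.unsupported())   # ['Fuzzing finds no memory errors']
```

Listing the unsupported leaves shows where more evidence is needed before the top-level claim can be argued.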

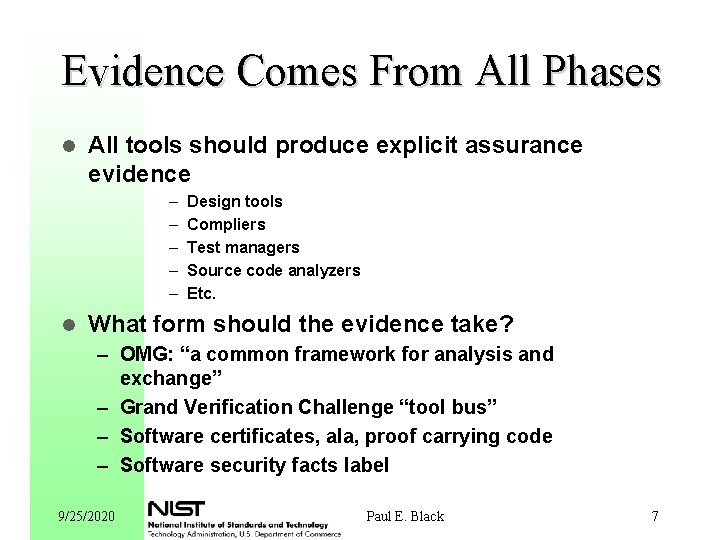

Evidence Comes From All Phases

- All tools should produce explicit assurance evidence
  - Design tools
  - Compilers
  - Test managers
  - Source code analyzers
  - Etc.
- What form should the evidence take?
  - OMG: “a common framework for analysis and exchange”
  - Grand Verification Challenge “tool bus”
  - Software certificates, à la proof-carrying code
  - Software security facts label

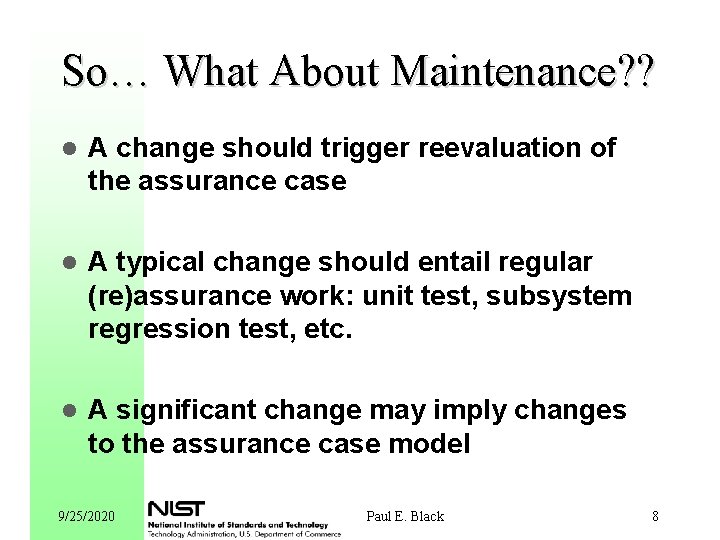

So… What About Maintenance?

- A change should trigger reevaluation of the assurance case
- A typical change should entail regular (re)assurance work: unit test, subsystem regression test, etc.
- A significant change may imply changes to the assurance case model

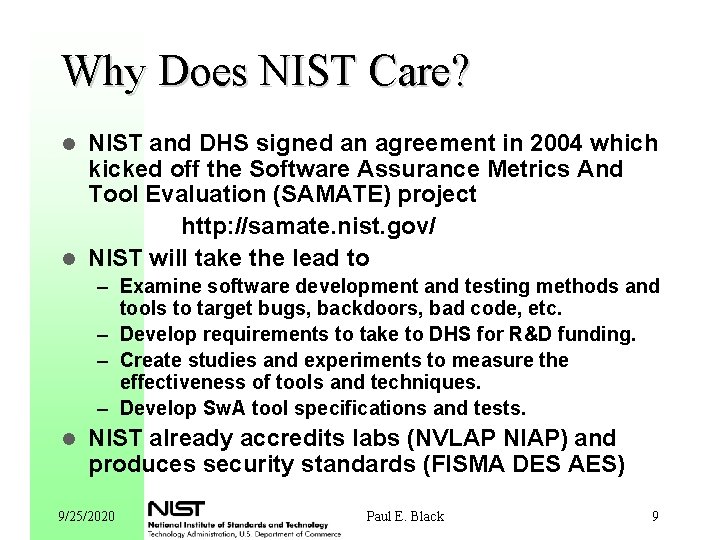

Why Does NIST Care?

- NIST and DHS signed an agreement in 2004 which kicked off the Software Assurance Metrics And Tool Evaluation (SAMATE) project http://samate.nist.gov/
- NIST will take the lead to
  - Examine software development and testing methods and tools to target bugs, backdoors, bad code, etc.
  - Develop requirements to take to DHS for R&D funding.
  - Create studies and experiments to measure the effectiveness of tools and techniques.
  - Develop SwA tool specifications and tests.
- NIST already accredits labs (NVLAP, NIAP) and produces security standards (FISMA, DES, AES)

Details of SwA Tool Evaluations

- Develop clear (testable) requirements
  - Focus group develops tool function specification
  - Spec posted to web for public comment
  - Comments incorporated
  - Testable requirements developed
- Develop a measurement methodology:
  - Write test procedures
  - Develop reference datasets or implementations
  - Write scripts and auxiliary programs
  - Document interpretation criteria
  - Come up with test cases

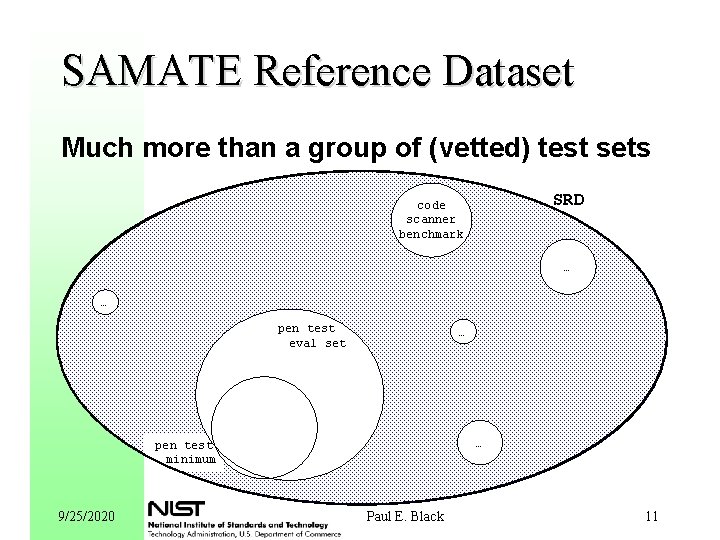

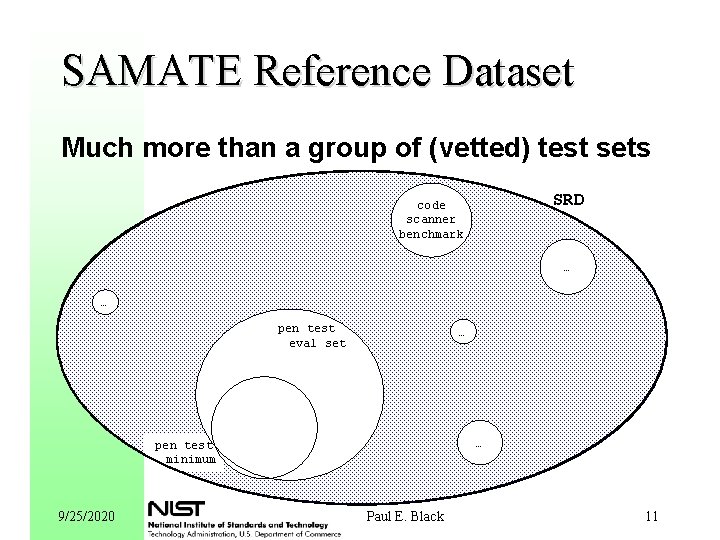

SAMATE Reference Dataset

Much more than a group of (vetted) test sets

[Figure: the SRD with test sets labeled code scanner benchmark, …, pen test eval set, …, pen test minimum]

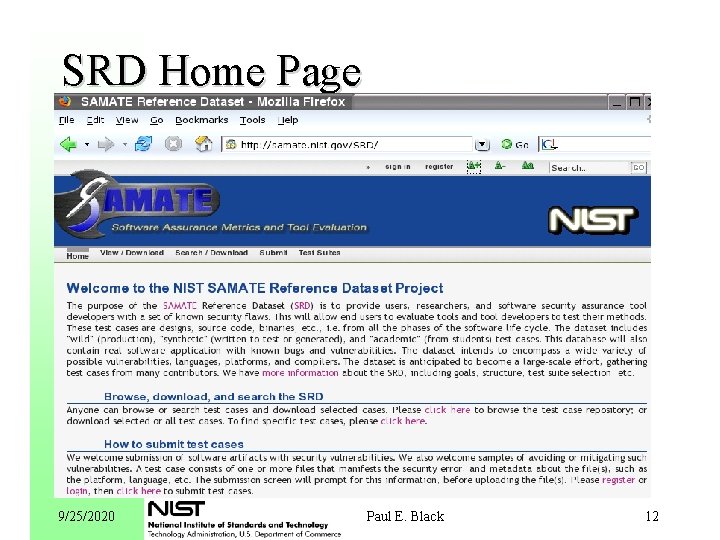

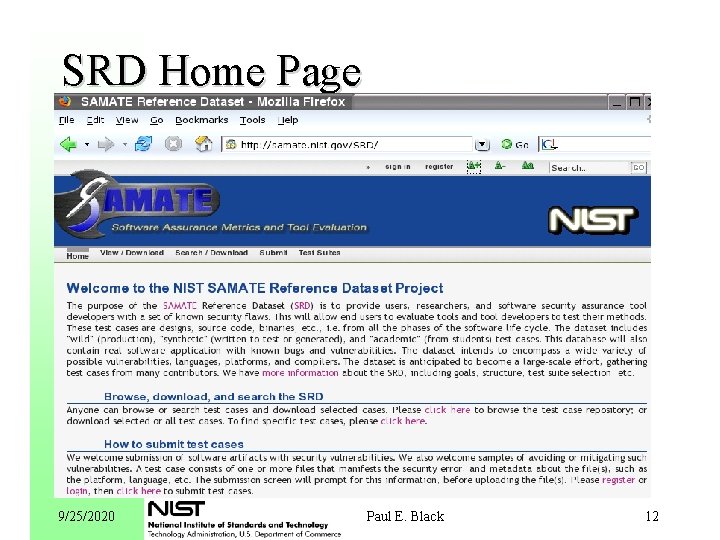

SRD Home Page

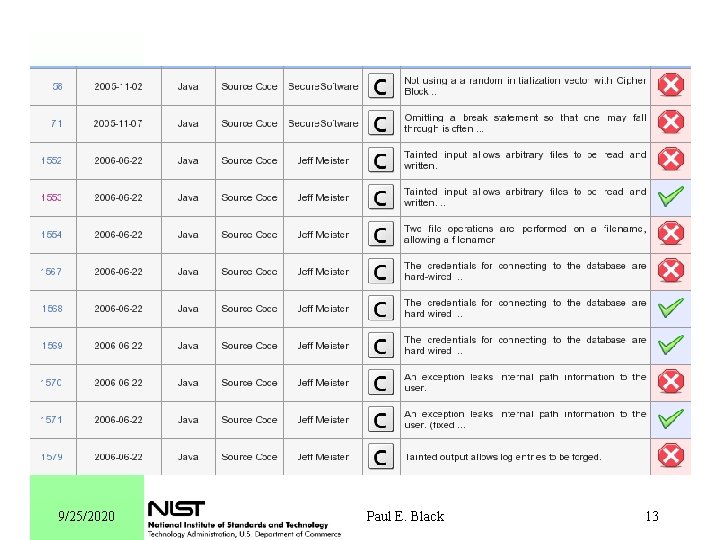

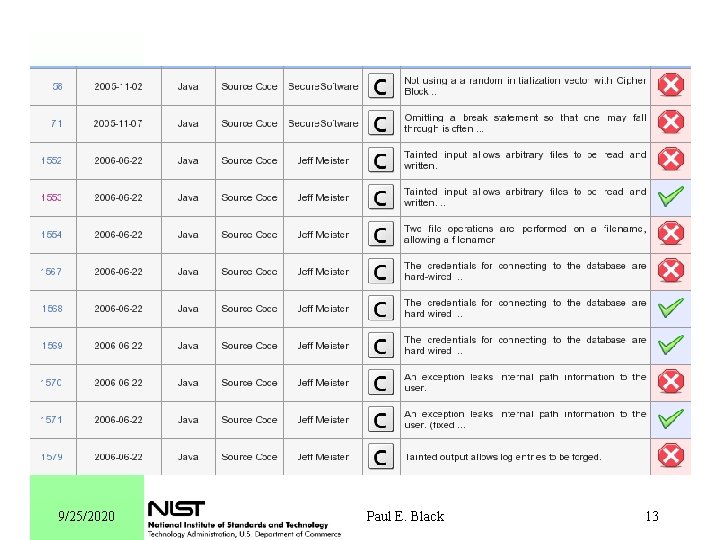


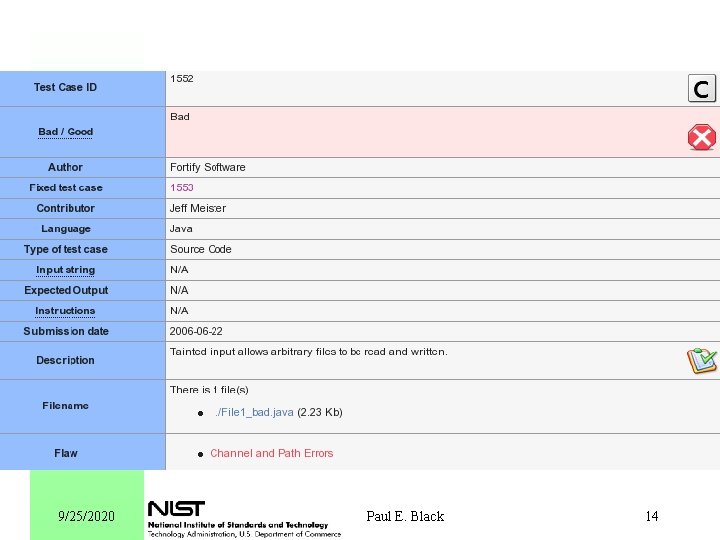

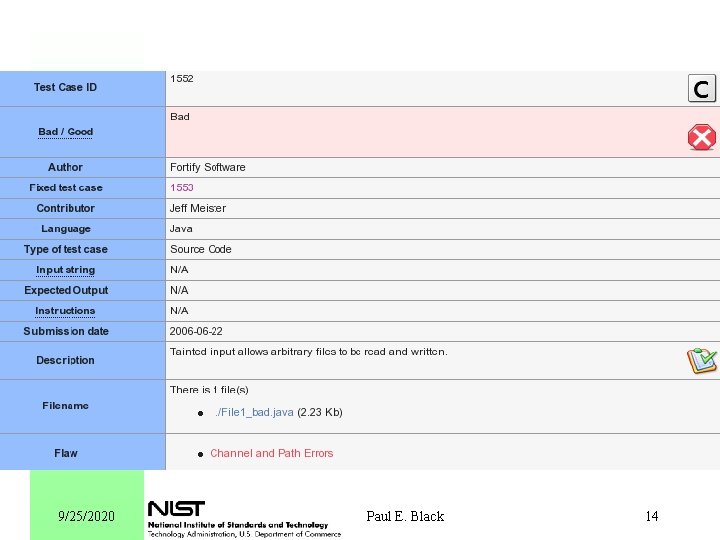


But, are the Tools Effective?

Do they really find vulnerabilities? In other words, how much assurance does running a tool or using a technique provide?

Studies and Experiments to Measure Tool Effectiveness

- Do tools find real vulnerabilities?
- Is a program secure (enough)?
- How secure does tool X make a program?
- How much more secure does technique X make a program after doing Y and Z?
- Dollar for dollar, can I get more reliability from methodology P or S?

Contact for Participation

- Paul E. Black
  SAMATE Project Leader
  Information Technology Laboratory (ITL)
  U.S. National Institute of Standards and Technology (NIST)
  paul.black@nist.gov
  http://samate.nist.gov/

Possible Study: Do Tools Catch Real Vulnerabilities?

- Step 1: Choose programs which are widely used, have source available, and have long histories.
- Step 2: Retrospective
  - 2a: Run tools on older versions of the programs.
  - 2b: Compare alarms to reported vulnerabilities and patches.
- Step 3: Prospective
  - 3a: Run tools on current versions of the programs.
  - 3b: Wait (6 months to 1 year or more).
  - 3c: Compare alarms to reported vulnerabilities and patches.
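The "compare alarms to reported vulnerabilities" step could be sketched as a set comparison. This is only an illustration under strong assumptions: alarms and vulnerabilities are matched by an exact (file, function) pair, and the `Location` type, the `compare` helper, and the sample data are all hypothetical. A real study would need a more careful matching rule.

```python
from typing import NamedTuple, Optional, Set


class Location(NamedTuple):
    file: str
    function: str


def compare(alarms: Set[Location], vulns: Set[Location]) -> dict:
    """Match tool alarms against reported vulnerabilities by exact location."""
    caught = alarms & vulns
    recall: Optional[float] = len(caught) / len(vulns) if vulns else None
    return {
        "caught": len(caught),                     # vulnerabilities the tool flagged
        "missed": len(vulns - alarms),             # vulnerabilities with no alarm
        "unmatched_alarms": len(alarms - vulns),   # alarms with no reported vulnerability
        "recall": recall,
    }


# Made-up data: alarms from an older version, vulnerabilities reported later.
alarms = {
    Location("parse.c", "read_header"),
    Location("net.c", "recv_packet"),
    Location("util.c", "copy_name"),
}
vulns = {
    Location("parse.c", "read_header"),
    Location("auth.c", "check_token"),
}

result = compare(alarms, vulns)
print(result)
# {'caught': 1, 'missed': 1, 'unmatched_alarms': 2, 'recall': 0.5}
```

An unmatched alarm is not necessarily a false positive; it may point at a weakness that nobody has reported yet, which is one reason the prospective step waits before comparing.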

Possible Study: Transformational Sensitivity

1. Choose a program measure
2. Transform a program into a semantically equivalent one (loop unrolling, in-lining, break into procedures, etc.)
3. Measure the transformed program

- Repeat steps 2 and 3
- If the measurement is consistent, it measures the algorithm. If it differs, it measures the program.
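As a small illustration of the idea, the sketch below applies one candidate measure to a function and to a hand-unrolled version of the same function. The measure (a count of `if`/`for`/`while` nodes, computed with Python's standard `ast` module) and both code samples are assumptions chosen for the example, not measures proposed in the slides.

```python
import ast


def decision_points(source: str) -> int:
    """Hypothetical program measure: number of if/for/while nodes."""
    tree = ast.parse(source)
    return sum(isinstance(n, (ast.If, ast.For, ast.While)) for n in ast.walk(tree))


def run_total(source: str, xs):
    """Execute the source and call the `total` function it defines."""
    namespace = {}
    exec(source, namespace)
    return namespace["total"](xs)


original = """
def total(xs):
    s = 0
    for i in range(4):
        s += xs[i]
    return s
"""

# Same behavior for 4-element inputs, with the loop unrolled by hand.
unrolled = """
def total(xs):
    s = 0
    s += xs[0]
    s += xs[1]
    s += xs[2]
    s += xs[3]
    return s
"""

data = [1, 2, 3, 4]
print(run_total(original, data), run_total(unrolled, data))   # 10 10
print(decision_points(original), decision_points(unrolled))   # 1 0
```

Both versions return the same result, yet the measure changes from 1 to 0, so by the criterion above this measure describes the program text rather than the algorithm.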

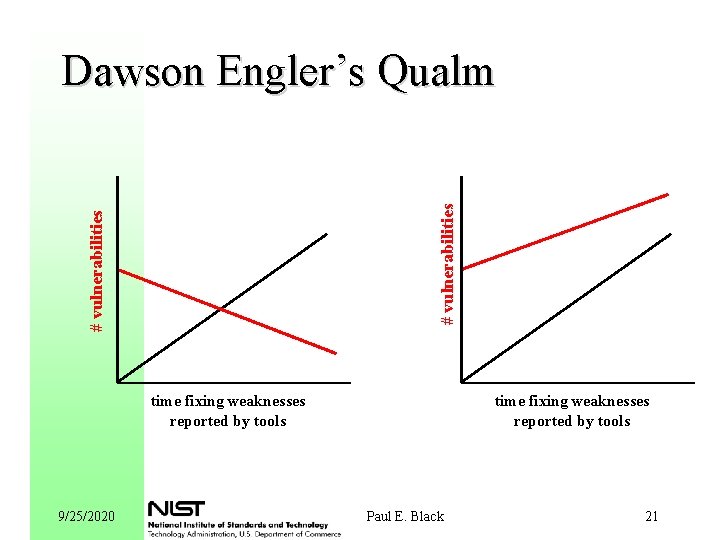

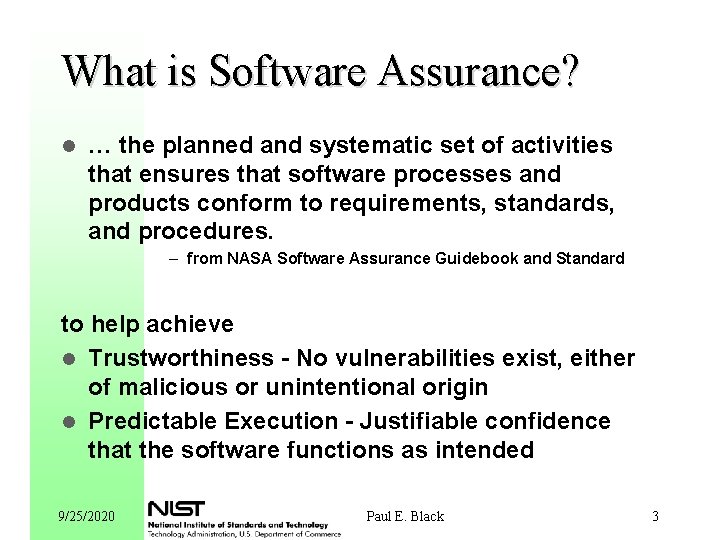

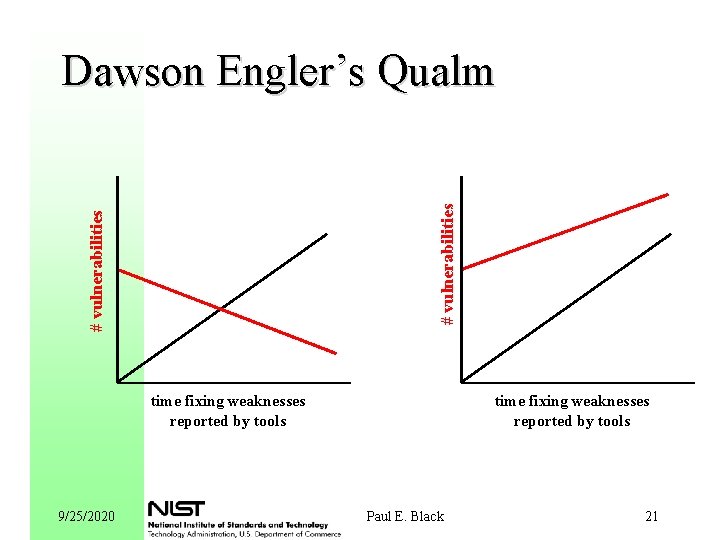

Dawson Engler’s Qualm

[Figure: plot with the labels "# vulnerabilities", "time", and "fixing weaknesses reported by tools"]

Possible Study: Engler’s Qualm

- Step 1: Choose programs which are widely used, have long histories, and have adopted tools. Use the number of vulnerabilities reported as a surrogate for (in)security. DHS funded Coverity to check many programs.
- Step 2: Count vulnerabilities before and after.
- Step 3: Compare for statistical significance.
- Confounding factors
  - Change in size or popularity of a package
  - When was tool feedback incorporated?
  - Reported vulnerabilities may come from the tool.
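One way the before/after comparison might be carried out is an exact conditional test for two Poisson counts: if the vulnerability rate did not change, then given the total count, the "before" count follows a binomial distribution. The slides do not name a test, so this choice, the function name, and the counts below are assumptions for illustration. The test also ignores the confounding factors listed above.

```python
from math import comb


def rate_change_p_value(before: int, after: int,
                        t_before: float = 1.0, t_after: float = 1.0) -> float:
    """Two-sided exact p-value for equal vulnerability rates.

    Under the null hypothesis, `before` ~ Binomial(before + after, p), where
    p is the share of observation time in the "before" period.
    """
    n = before + after
    p = t_before / (t_before + t_after)
    pmf = [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]
    observed = pmf[before]
    # Sum the probability of every outcome no more likely than the observed one.
    total = sum(q for q in pmf if q <= observed * (1 + 1e-9))
    return min(1.0, total)


# Made-up counts over equal-length periods before and after tool adoption.
p_drop = rate_change_p_value(before=30, after=12)
p_flat = rate_change_p_value(before=10, after=10)
print(f"30 -> 12 reported vulnerabilities: p = {p_drop:.4f}")
print(f"10 -> 10 reported vulnerabilities: p = {p_flat:.4f}")
```

The `t_before` and `t_after` parameters allow unequal observation periods. A small p-value only says the counts differ; it does not show that the tool caused the difference.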