
Software Agent - Overview

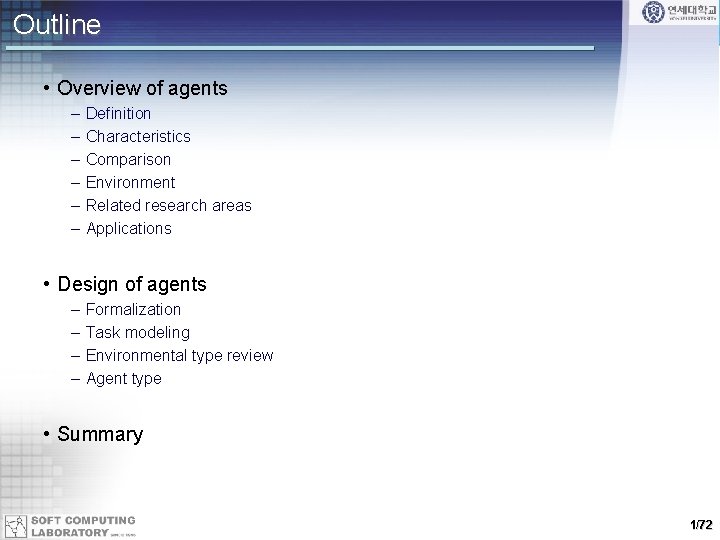

Outline

- Overview of agents
  - Definition
  - Characteristics
  - Comparison
  - Environment
  - Related research areas
  - Applications
- Design of agents
  - Formalization
  - Task modeling
  - Environmental type review
  - Agent type
- Summary

[Figure: Face to Face, Telephone, Internet, and Agents placed along axes labeled Information and Knowledge]

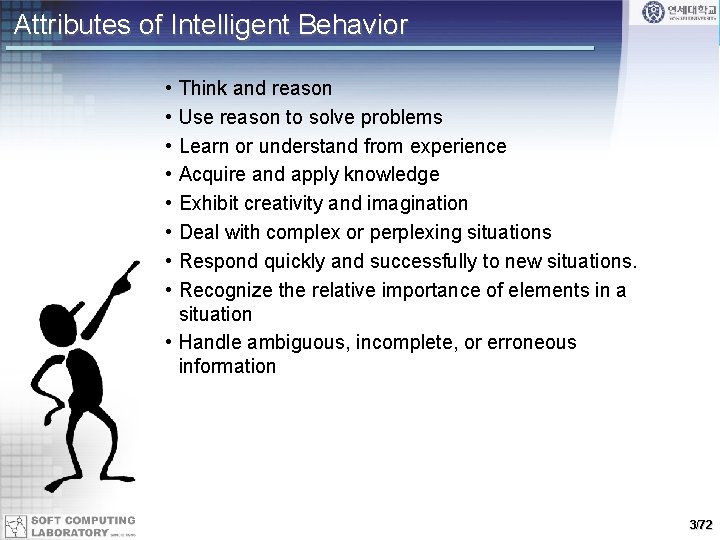

Attributes of Intelligent Behavior

- Think and reason
- Use reason to solve problems
- Learn or understand from experience
- Acquire and apply knowledge
- Exhibit creativity and imagination
- Deal with complex or perplexing situations
- Respond quickly and successfully to new situations
- Recognize the relative importance of elements in a situation
- Handle ambiguous, incomplete, or erroneous information

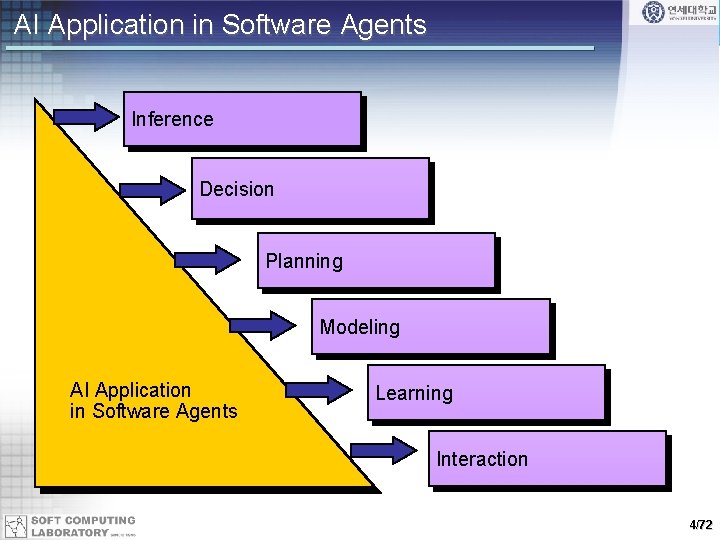

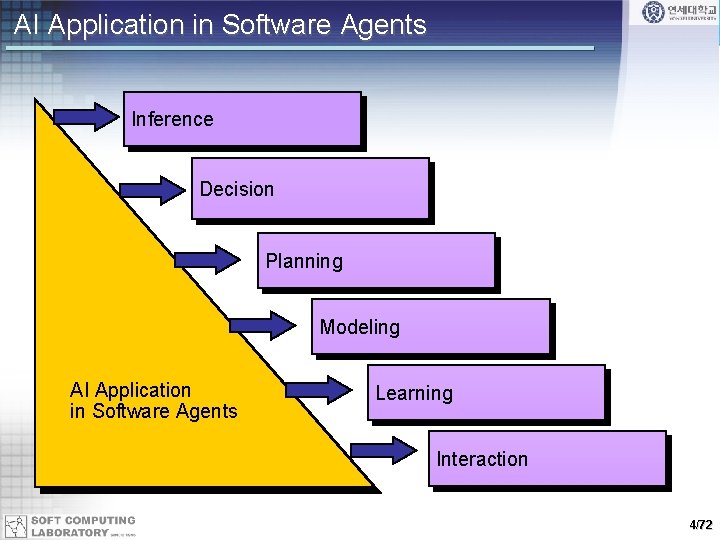

AI Application in Software Agents

- Inference
- Decision
- Planning
- Modeling
- Learning
- Interaction

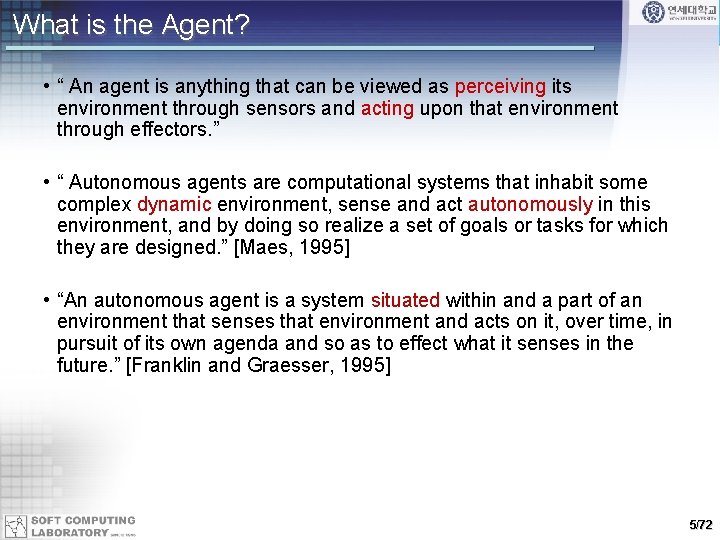

What Is an Agent?

- “An agent is anything that can be viewed as perceiving its environment through sensors and acting upon that environment through effectors.”
- “Autonomous agents are computational systems that inhabit some complex dynamic environment, sense and act autonomously in this environment, and by doing so realize a set of goals or tasks for which they are designed.” [Maes, 1995]
- “An autonomous agent is a system situated within and a part of an environment that senses that environment and acts on it, over time, in pursuit of its own agenda and so as to effect what it senses in the future.” [Franklin and Graesser, 1995]

What Is an Agent?

- “A hardware or (more usually) software-based computer system that enjoys the following properties: autonomy, social ability, reactivity, pro-activeness.” [Wooldridge and Jennings, 1995]
- “Intelligent agents continuously perform three functions: perception of dynamic conditions in the environment; action to affect conditions in the environment; and reasoning to interpret perceptions, solve problems, draw inferences, and determine actions.” [Hayes-Roth, 1995]
- “Intelligent agents are software entities that carry out some set of operations on behalf of a user or another program with some degree of independence or autonomy, and in so doing, employ some knowledge or representation of the user's goals or desires.” [IBM]

![Software Agent: a formal definition of “Agent” (Wooldridge, 2002)](https://slidetodoc.com/presentation_image/b9e2c0f3e0745298366a4eae7f198a54/image-8.jpg)

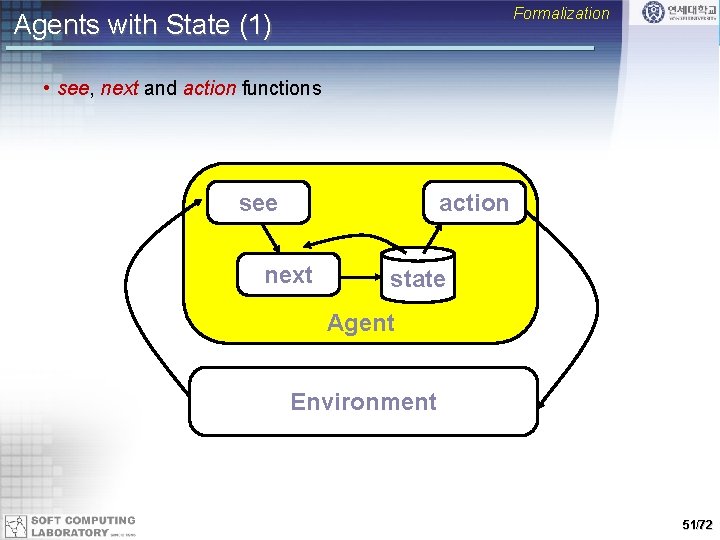

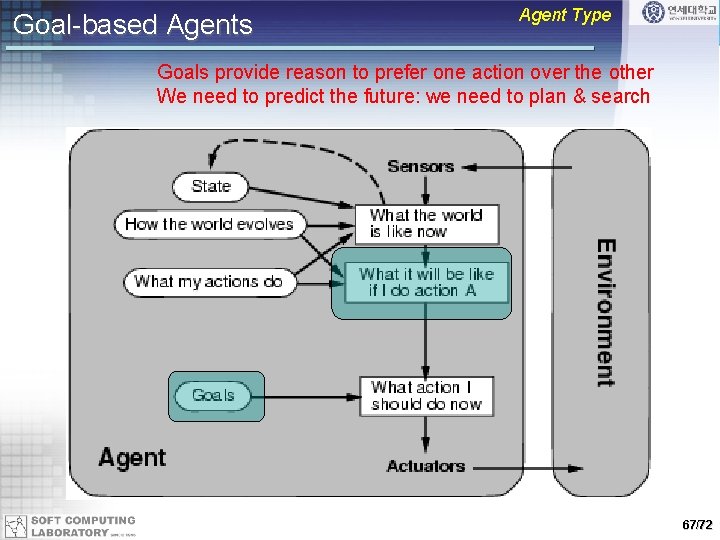

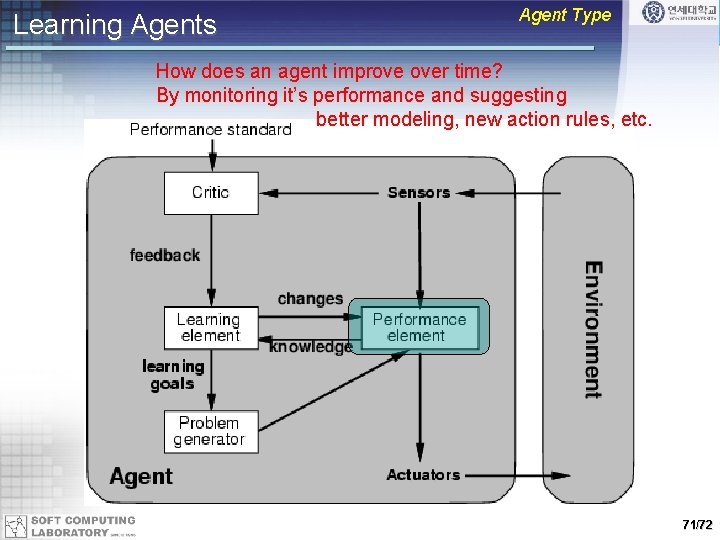

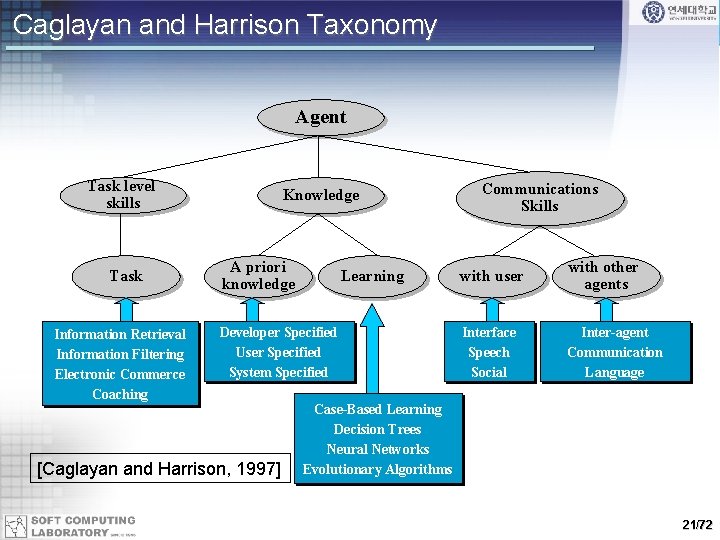

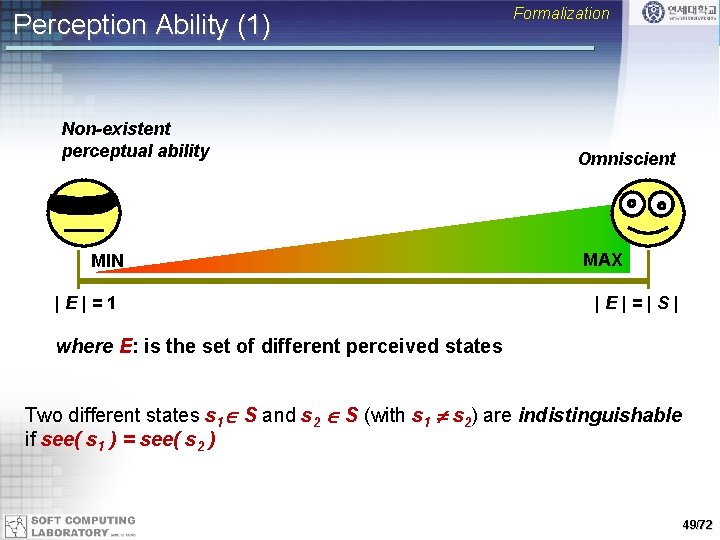

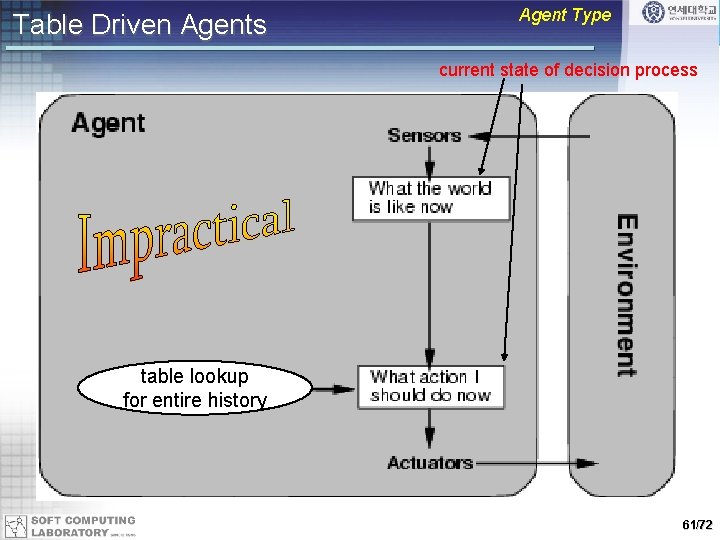

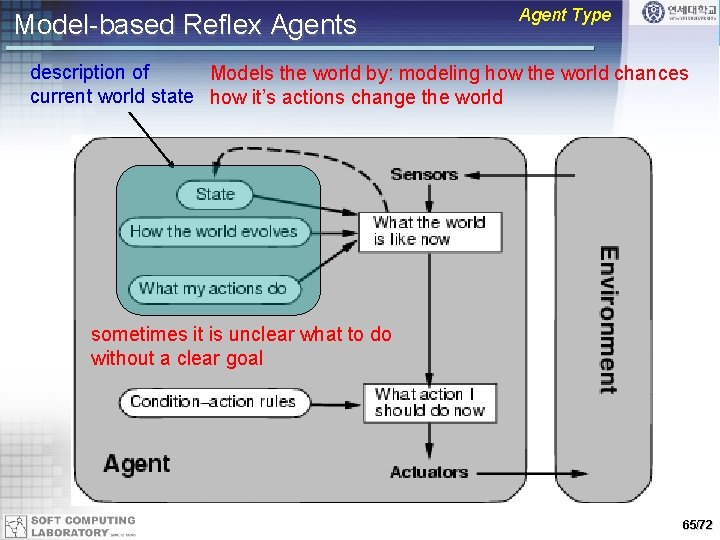

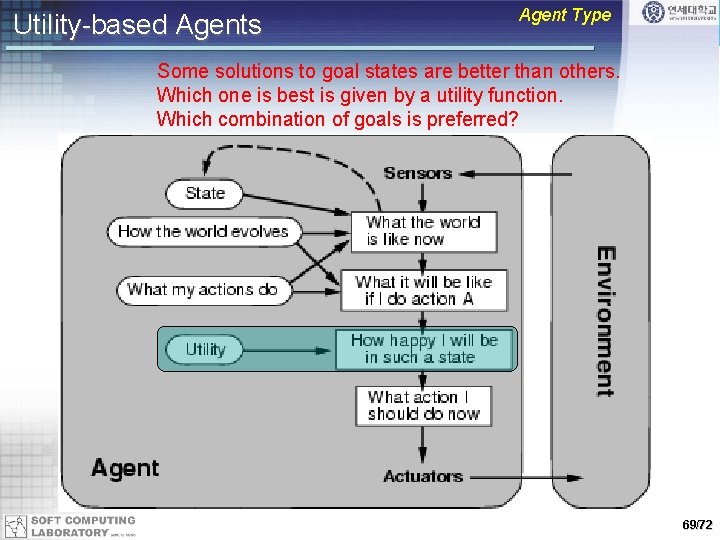

Software Agent

- A formal definition of “Agent” [Wooldridge, 2002]
  - An agent is a computer system that is situated in some environment, and that is capable of autonomous action in this environment in order to meet its design objectives.
  - [Figure: the AGENT receives input from the ENVIRONMENT and sends output back to it]
- Key attributes
  - Autonomy: capable of acting independently, exhibiting control over its internal state and behavior
  - Situatedness
    - Place to live: it is situated in some environment
    - Perception capability: its ability to perceive the environment
    - Effector capability: its ability to modify the environment
  - Persistence: it functions continuously as long as it is alive

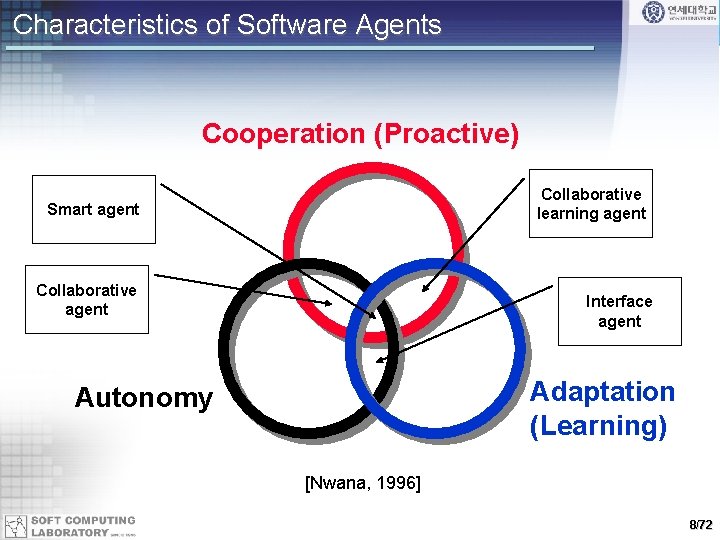

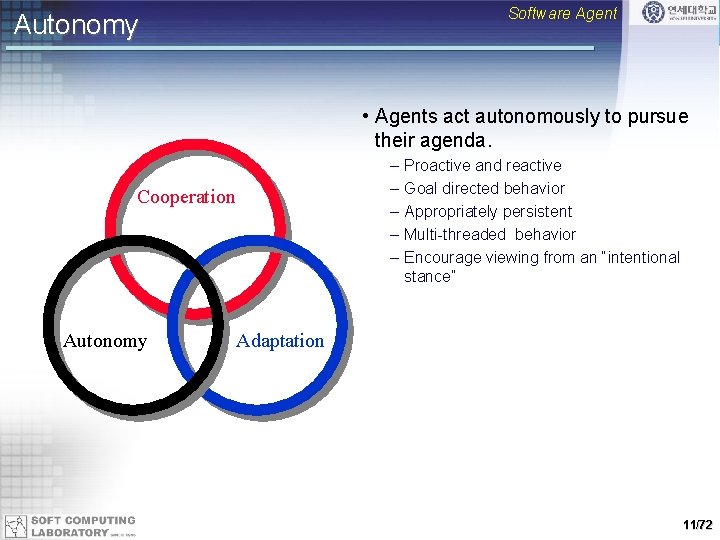

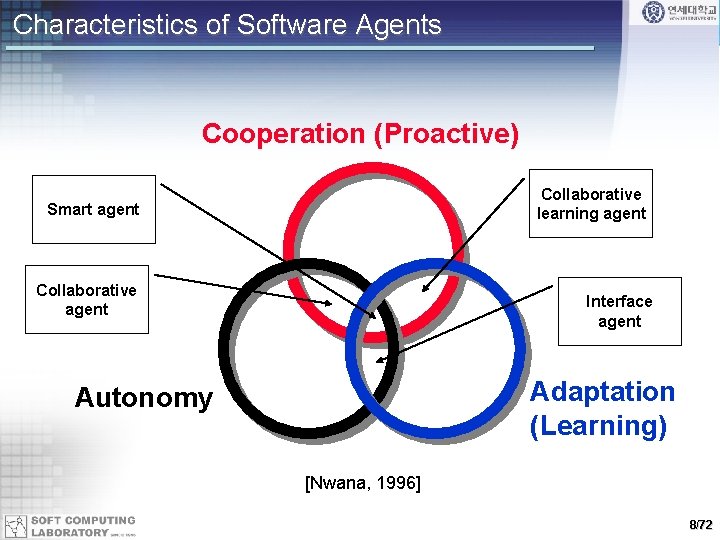

Characteristics of Software Agents

[Figure: Nwana's typology [Nwana, 1996]; three overlapping characteristics, Cooperation (proactive), Adaptation (learning), and Autonomy, whose overlaps define collaborative agents, interface agents, collaborative learning agents, and smart agents]

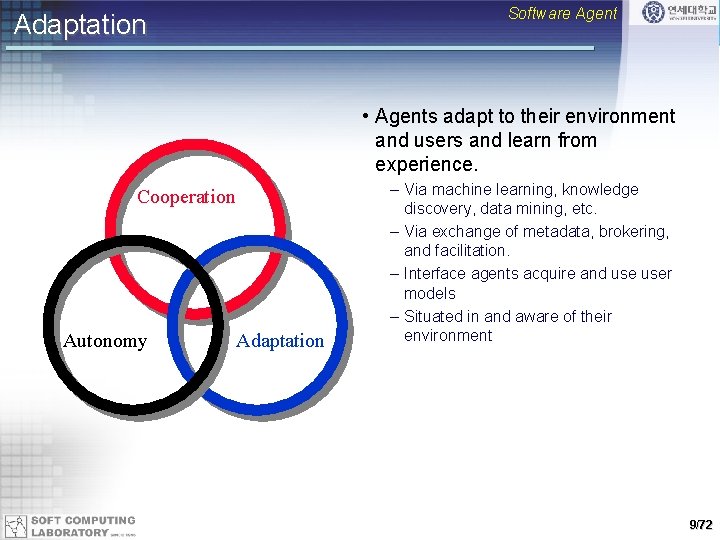

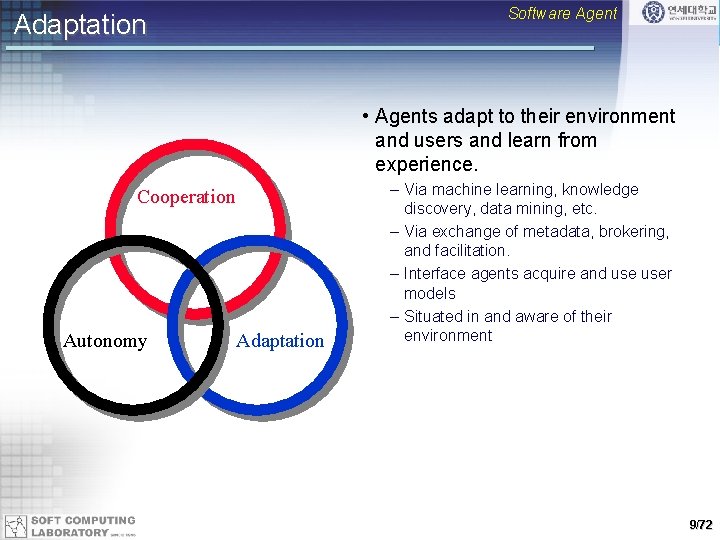

Software Agent: Adaptation

- Agents adapt to their environment and users and learn from experience.
  - Via machine learning, knowledge discovery, data mining, etc.
  - Via exchange of metadata, brokering, and facilitation.
  - Interface agents acquire and use user models.
  - Situated in and aware of their environment.

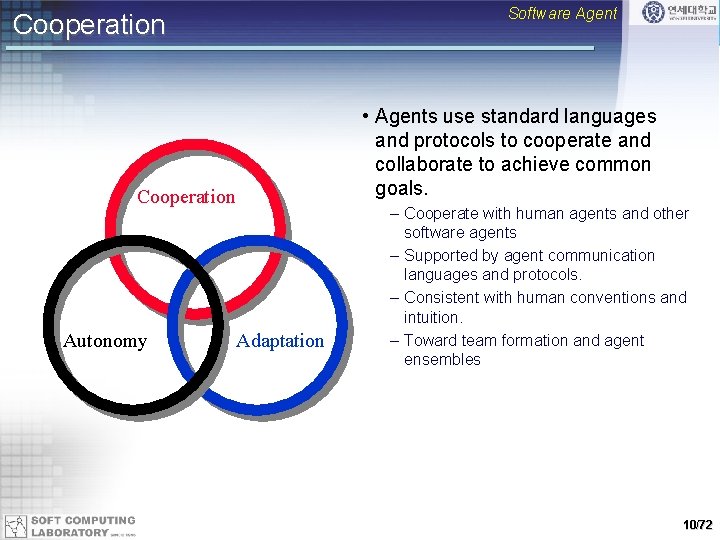

Software Agent: Cooperation

- Agents use standard languages and protocols to cooperate and collaborate to achieve common goals.
  - Cooperate with human agents and other software agents.
  - Supported by agent communication languages and protocols.
  - Consistent with human conventions and intuition.
  - Toward team formation and agent ensembles.

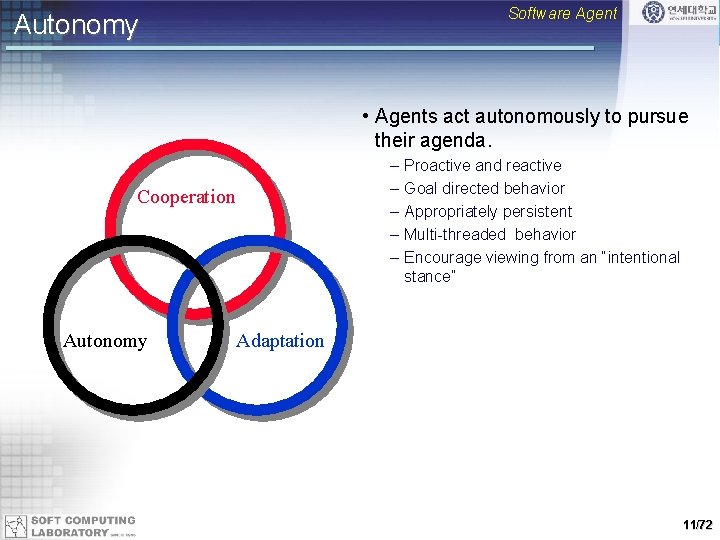

Software Agent: Autonomy

- Agents act autonomously to pursue their agenda.
  - Proactive and reactive
  - Goal-directed behavior
  - Appropriately persistent
  - Multi-threaded behavior
  - Encourage viewing from an “intentional stance”

Intelligent Agents

- An intelligent agent is one that is capable of flexible autonomous action in order to meet its design objectives.
  - Agent + flexibility
- Properties of flexibility
  - Reactivity: intelligent agents are able to perceive their environment and respond in a timely fashion to changes that occur in it in order to satisfy their design objectives.
  - Pro-activeness: intelligent agents are able to exhibit goal-directed behavior by taking the initiative in order to satisfy their design objectives.
  - Social ability: intelligent agents are capable of interacting with other agents (and possibly humans) in order to satisfy their design objectives.
- Open research issue
  - Building a purely reactive agent is easy.
  - Building a purely proactive agent is not hard.
  - But designing an agent that balances the two remains open, because
    - most environments are dynamic rather than fixed, and
    - the agent must take into account the possibility of failure rather than blindly executing its program.

Reactivity (Intelligent Agents)

- If a program's environment is guaranteed to be fixed, the program need never worry about its own success or failure: it just executes blindly.
  - Example of a fixed environment: a compiler
- The real world is not like that: things change, and information is incomplete. Many (most?) interesting environments are dynamic.
- Software is hard to build for dynamic domains: the program must take into account the possibility of failure and ask itself whether it is worth executing!
- A reactive system is one that maintains an ongoing interaction with its environment and responds to changes that occur in it (in time for the response to be useful).

Proactiveness (Intelligent Agents)

- Reacting to an environment is easy (e.g., stimulus-response rules).
- But we generally want agents to do things for us.
- Hence goal-directed behavior.
- Pro-activeness = generating and attempting to achieve goals; not driven solely by events; taking the initiative.
- Recognizing opportunities.

Balancing Reactive and Goal-Oriented Behavior (Intelligent Agents)

- We want our agents to be reactive, responding to changing conditions in an appropriate (timely) fashion.
- We want our agents to work systematically towards long-term goals.
- These two considerations can be at odds with one another.
- Designing an agent that can balance the two remains an open research problem.
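One simple way to see where the tension arises is a fixed-priority arbitration scheme: reactive rules are checked first, and only if none fires does the agent take the next step of its plan. This is a sketch under assumptions, not a solution to the open problem: the rule set `REACTIVE_RULES`, the function `choose_action`, and the percept keys are all hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

Percept = Dict[str, bool]

# Hypothetical reactive layer: (condition, action) pairs, checked in priority order.
REACTIVE_RULES: List[Tuple[Callable[[Percept], bool], str]] = [
    (lambda p: p.get("obstacle_ahead", False), "stop"),
    (lambda p: p.get("low_battery", False), "recharge"),
]


def choose_action(percept: Percept, plan: List[str]) -> Optional[str]:
    """Fixed-priority arbitration between reactive rules and a goal-directed plan."""
    for condition, action in REACTIVE_RULES:
        if condition(percept):
            return action  # react immediately; the plan is left untouched
    if plan:
        return plan.pop(0)  # otherwise take the next step towards the goal
    return None  # plan exhausted: nothing left to do


plan = ["forward", "forward", "turn_left"]
print(choose_action({"obstacle_ahead": True}, plan))  # stop
print(choose_action({}, plan))                        # forward
print(plan)                                           # ['forward', 'turn_left']
```

The weakness is visible in the design: reactions always win, so an agent in a busy environment may never make progress on its goal, and the plan is never revised after a reaction.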

Social Ability (Intelligent Agents)

- The real world is a multi-agent environment: we cannot go around attempting to achieve goals without taking others into account.
- Some goals can only be achieved with the cooperation of others.
- The same holds for many computer environments: witness the Internet.
- Social ability in agents is the ability to interact with other agents (and possibly humans) via some kind of agent-communication language, and perhaps cooperate with others.

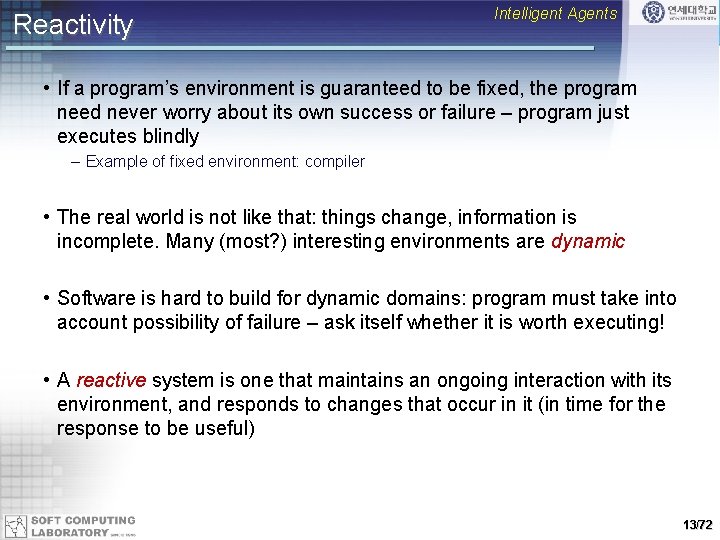

Other Properties (Intelligent Agents)

- Mobility: the ability of an agent to move around an electronic network.
- Veracity (honesty): an agent will not knowingly communicate false information.
- Benevolence (kindness): agents do not have conflicting goals, and every agent will therefore always try to do what is asked of it.
- Rationality: an agent will act in order to achieve its goals, and will not act in such a way as to prevent its goals being achieved, at least insofar as its beliefs permit.
- Learning/adaptation: agents improve their performance over time.

![Summary of Agents’ Features (Brenner et al., 1998)](https://slidetodoc.com/presentation_image/b9e2c0f3e0745298366a4eae7f198a54/image-19.jpg)

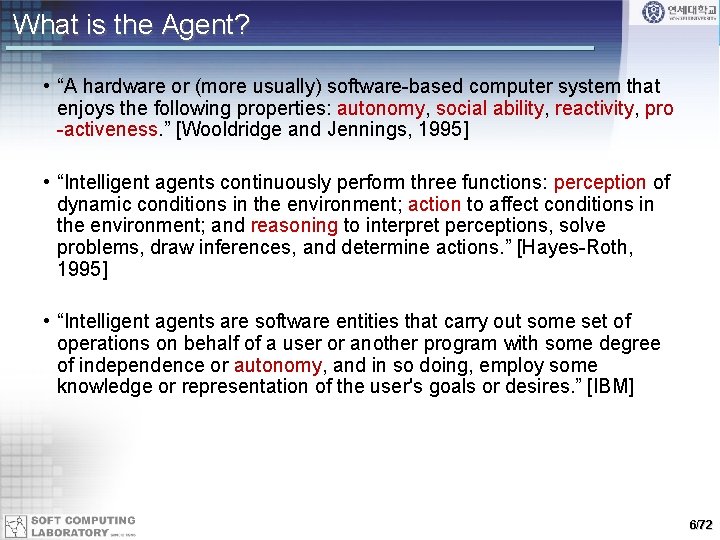

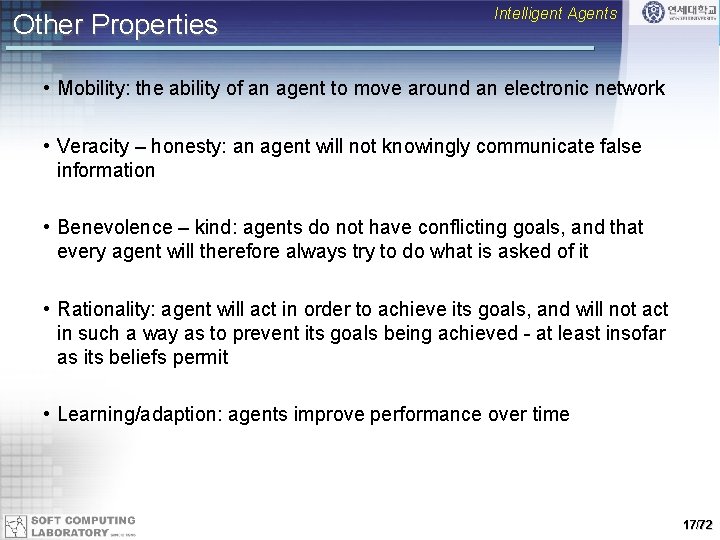

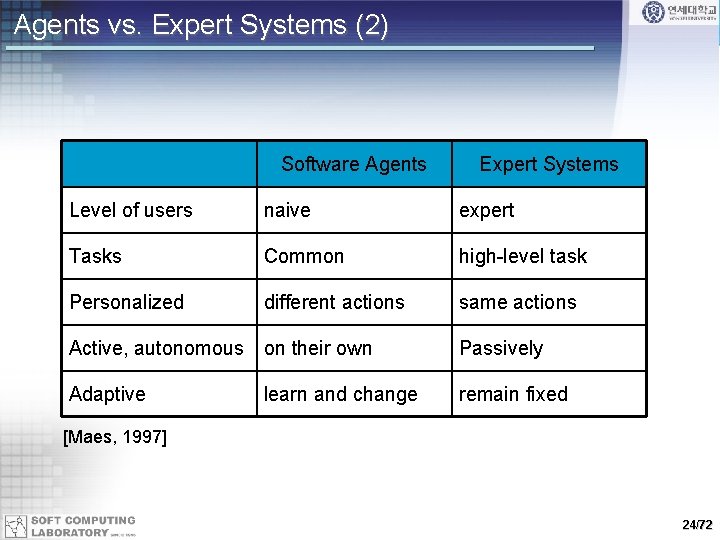

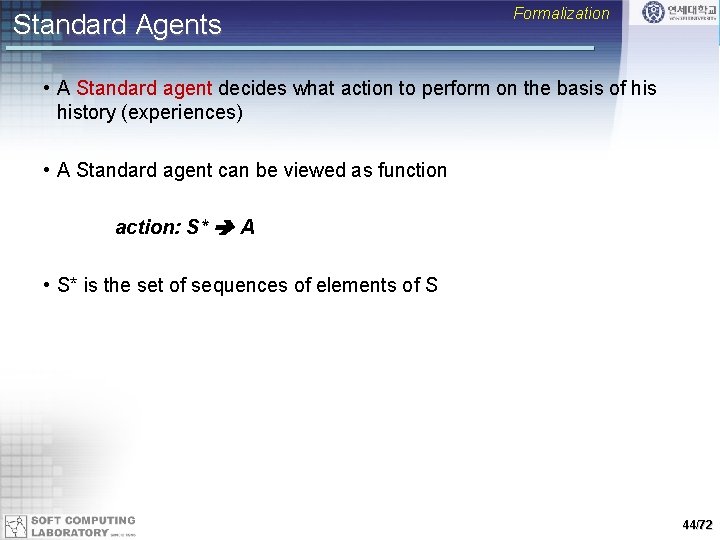

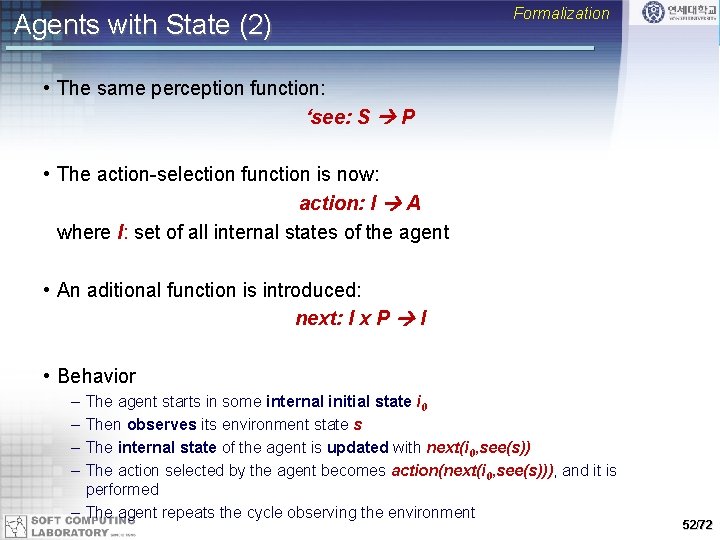

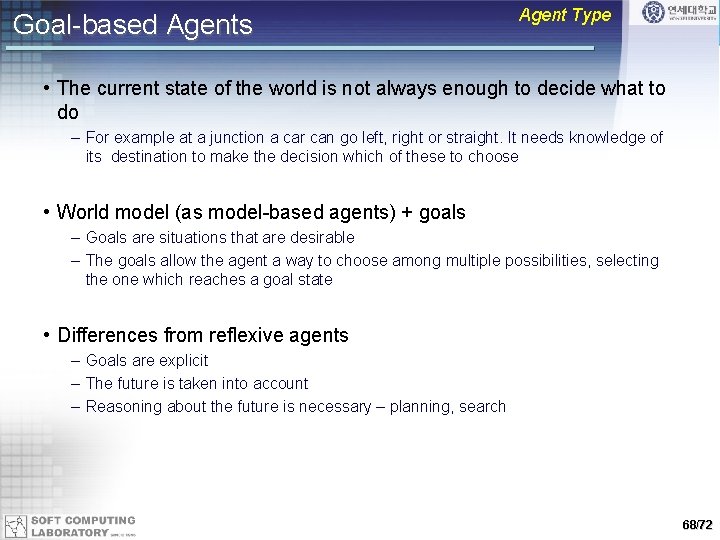

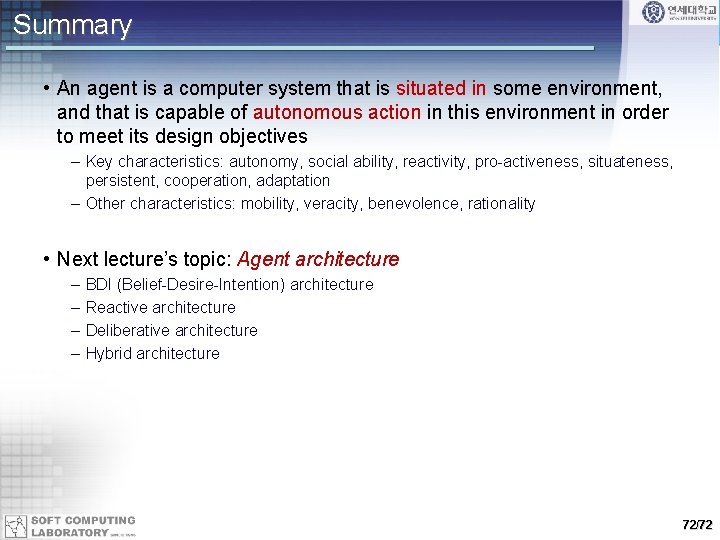

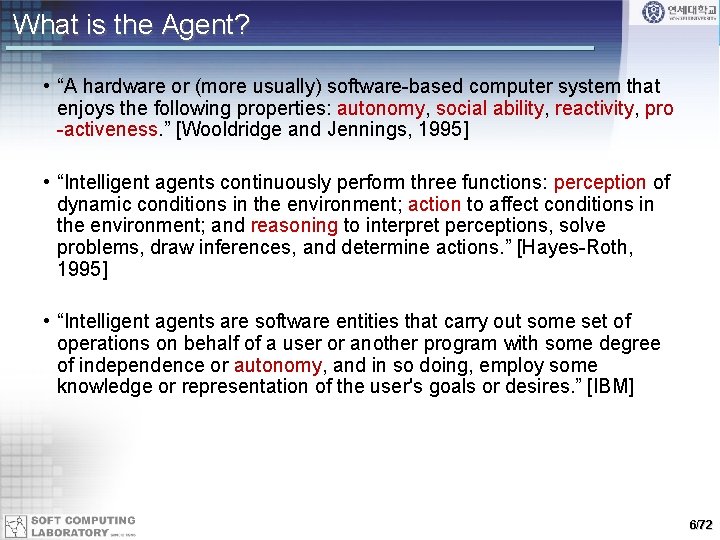

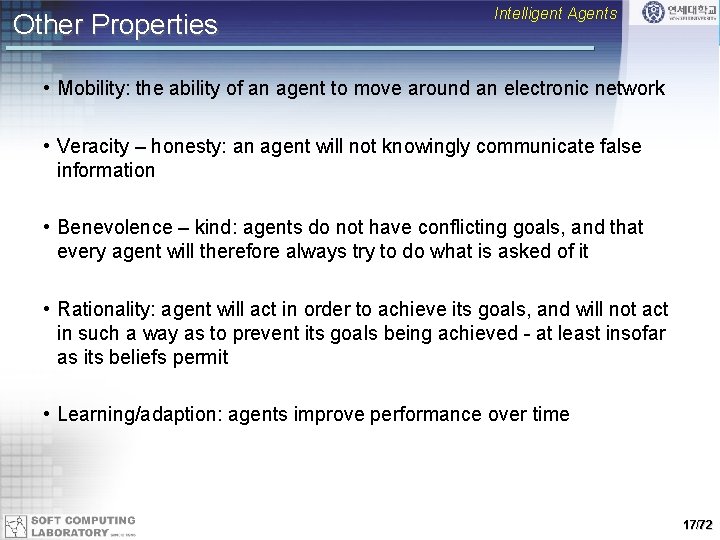

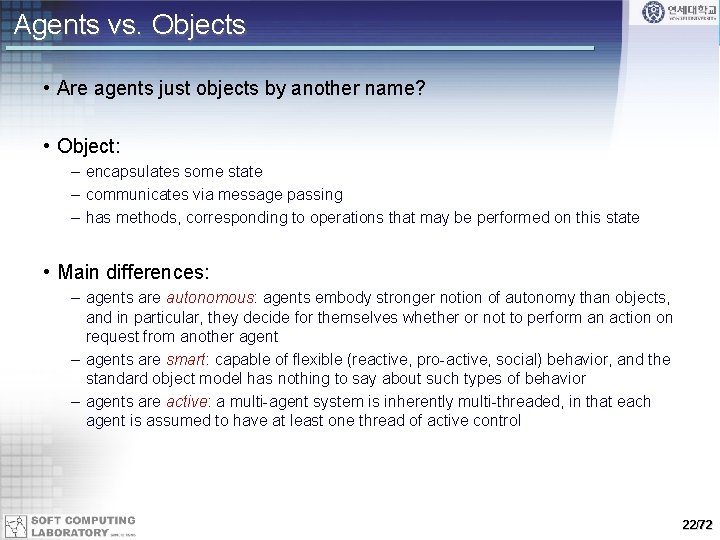

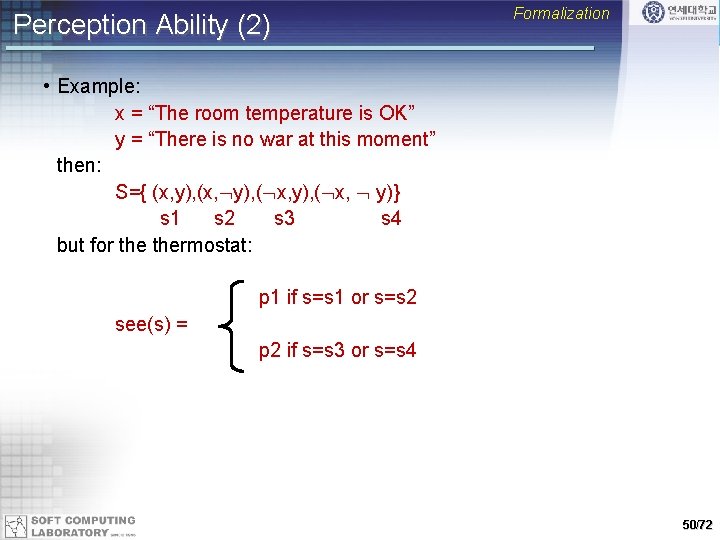

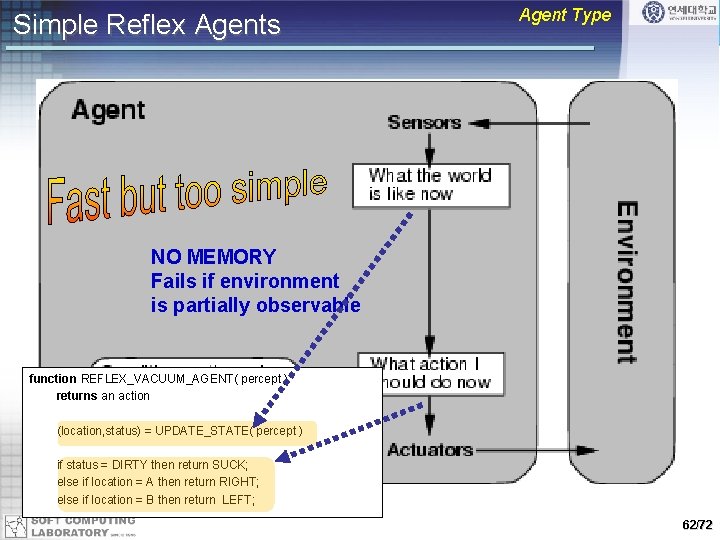

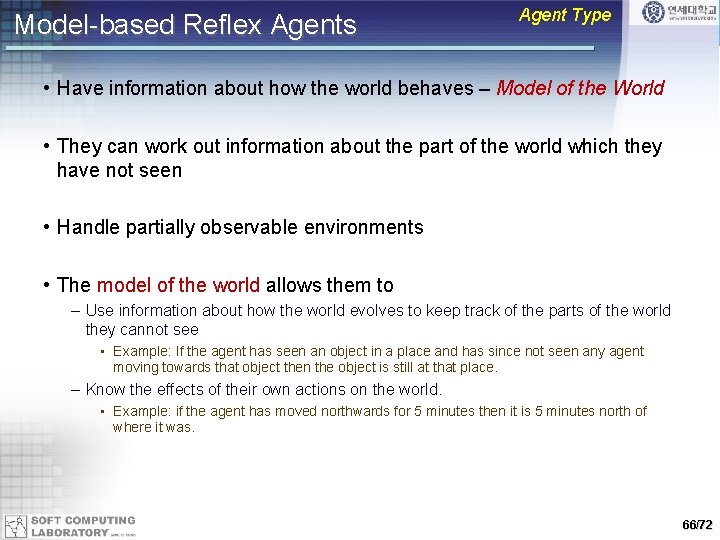

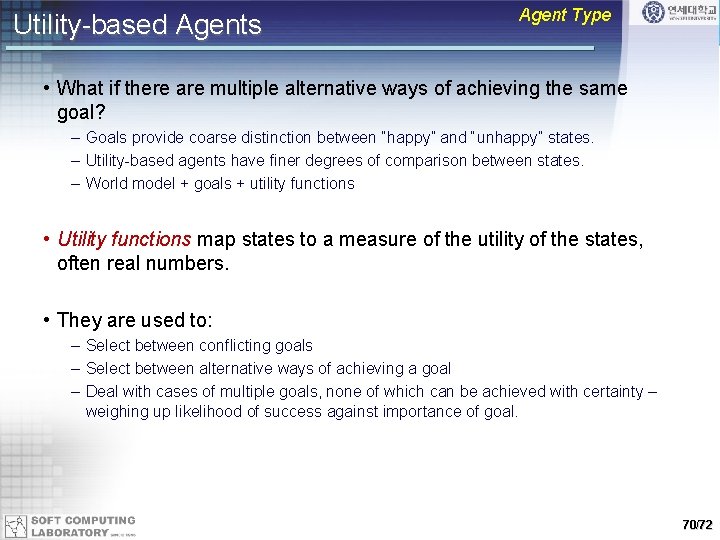

Summary of Agents’ Features [Brenner et al., 1998]

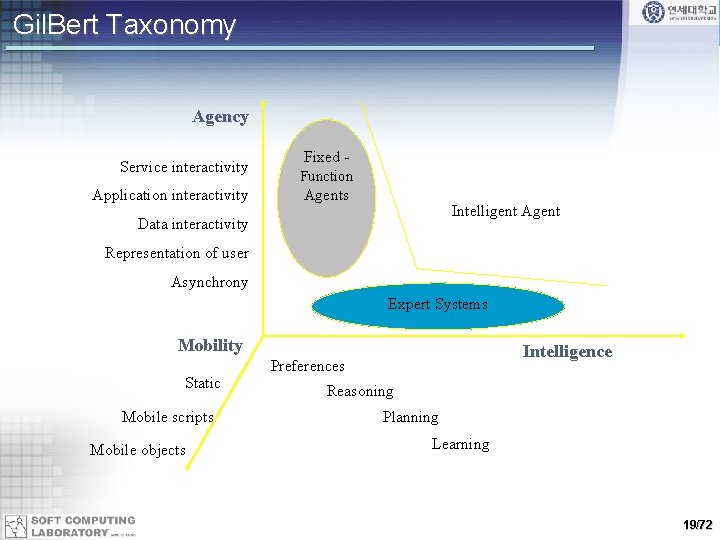

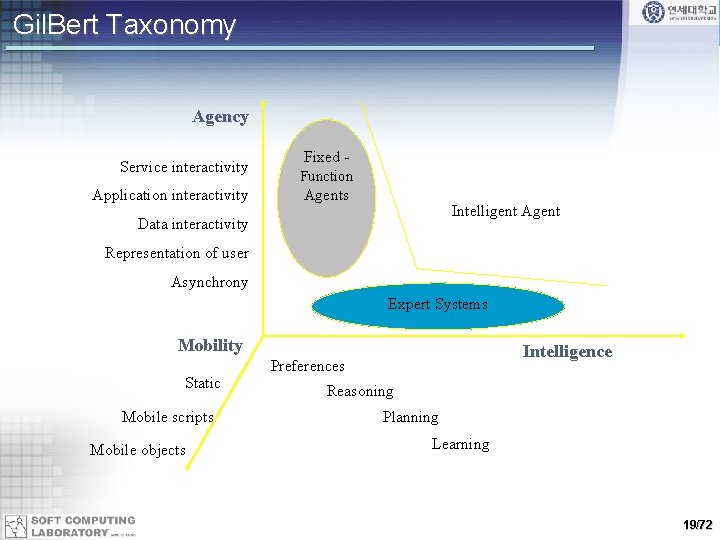

Gilbert Taxonomy

[Figure: three axes. Agency: asynchrony, representation of user, data interactivity, application interactivity, service interactivity. Intelligence: preferences, reasoning, planning, learning. Mobility: static, mobile scripts, mobile objects. Regions labeled fixed function agents, expert systems, and intelligent agents]

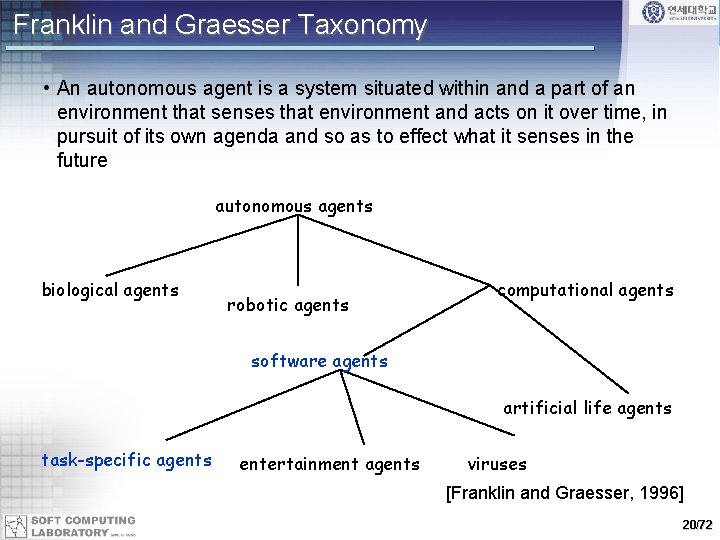

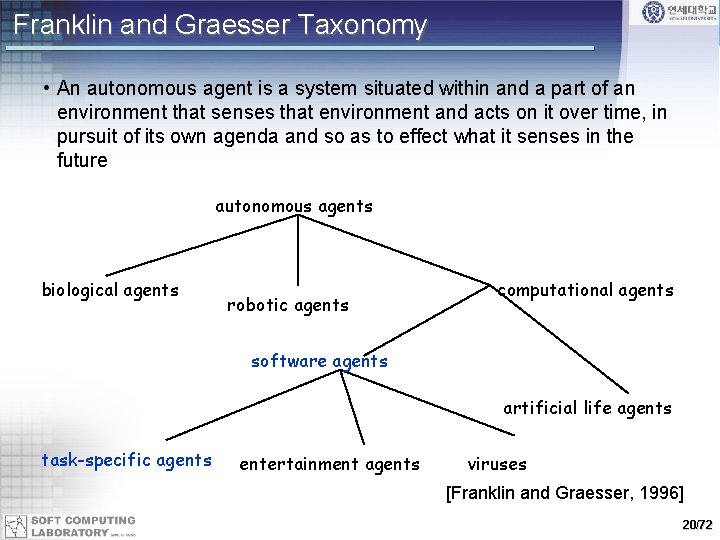

Franklin and Graesser Taxonomy

- An autonomous agent is a system situated within and a part of an environment that senses that environment and acts on it, over time, in pursuit of its own agenda and so as to effect what it senses in the future.
- Taxonomy [Franklin and Graesser, 1996]:
  - Autonomous agents
    - Biological agents
    - Robotic agents
    - Computational agents
      - Software agents
        - Task-specific agents
        - Entertainment agents
        - Viruses
      - Artificial life agents

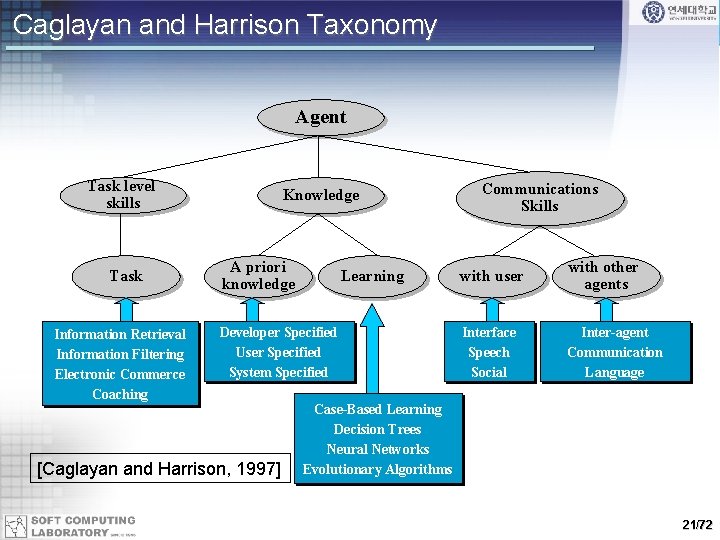

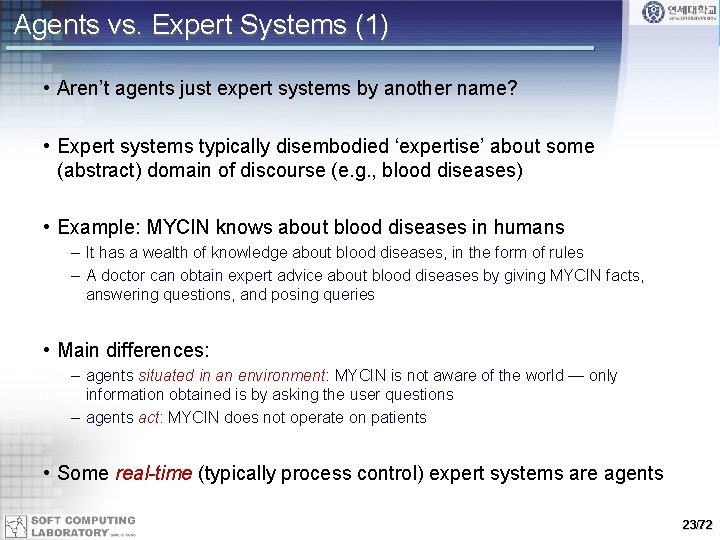

Caglayan and Harrison Taxonomy [Caglayan and Harrison, 1997]

- Agent
  - Task-level skills
    - Information retrieval
    - Information filtering
    - Electronic commerce
    - Coaching
  - Knowledge
    - A priori knowledge: developer specified, user specified, system specified
    - Learning: case-based learning, decision trees, neural networks, evolutionary algorithms
  - Communication skills
    - With the user: interface, speech, social
    - With other agents: inter-agent communication language

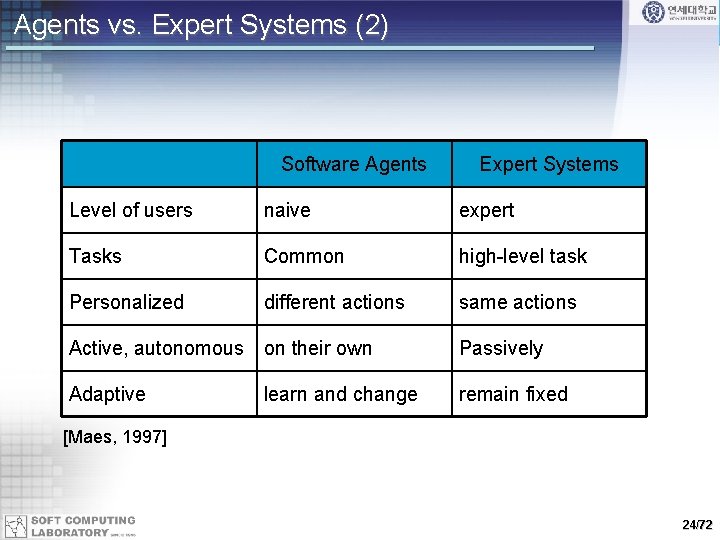

Agents vs. Objects

- Are agents just objects by another name?
- An object:
  - encapsulates some state
  - communicates via message passing
  - has methods, corresponding to operations that may be performed on this state
- Main differences:
  - Agents are autonomous: agents embody a stronger notion of autonomy than objects; in particular, they decide for themselves whether or not to perform an action on request from another agent.
  - Agents are smart: they are capable of flexible (reactive, pro-active, social) behavior, and the standard object model has nothing to say about such types of behavior.
  - Agents are active: a multi-agent system is inherently multi-threaded, in that each agent is assumed to have at least one thread of active control.
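The autonomy difference can be sketched in a few lines. Both classes below are hypothetical: calling a method on the ordinary object `Account` always runs it, whereas `CautiousAgent` treats a request as a message and decides for itself, based on its own goal (keeping a minimum reserve), whether to act. Only the first difference from the list is illustrated; threads of control are not modeled.

```python
from dataclasses import dataclass


@dataclass
class Account:
    """Ordinary object: the caller invokes withdraw() and it simply executes."""
    balance: int

    def withdraw(self, amount: int) -> None:
        self.balance -= amount


@dataclass
class CautiousAgent:
    """Hypothetical agent: a request is only a message; the agent decides whether to act."""
    balance: int
    reserve: int = 100  # the agent's own goal: never drop below this balance

    def request_withdraw(self, amount: int) -> bool:
        if self.balance - amount < self.reserve:
            return False  # refuses: acting would conflict with its own goal
        self.balance -= amount
        return True


obj = Account(150)
obj.withdraw(120)                        # always performed
agent = CautiousAgent(150)
accepted = agent.request_withdraw(120)   # refused, because 150 - 120 < 100
print(obj.balance, agent.balance, accepted)  # 30 150 False
```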

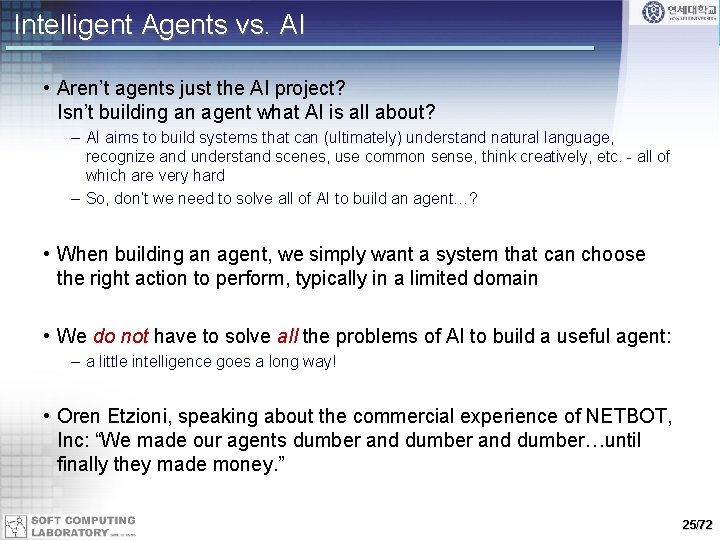

Agents vs. Expert Systems (1)

- Aren't agents just expert systems by another name?
- Expert systems are typically disembodied ‘expertise’ about some (abstract) domain of discourse (e.g., blood diseases).
- Example: MYCIN knows about blood diseases in humans.
  - It has a wealth of knowledge about blood diseases, in the form of rules.
  - A doctor can obtain expert advice about blood diseases by giving MYCIN facts, answering questions, and posing queries.
- Main differences:
  - Agents are situated in an environment: MYCIN is not aware of the world; the only information it obtains is by asking the user questions.
  - Agents act: MYCIN does not operate on patients.
- Some real-time (typically process control) expert systems are agents.

Agents vs. Expert Systems (2) [Maes, 1997]

| Aspect | Software agents | Expert systems |
|---|---|---|
| Level of users | Naive | Expert |
| Tasks | Common | High-level task |
| Personalized | Different actions | Same actions |
| Active, autonomous | On their own | Passively |
| Adaptive | Learn and change | Remain fixed |

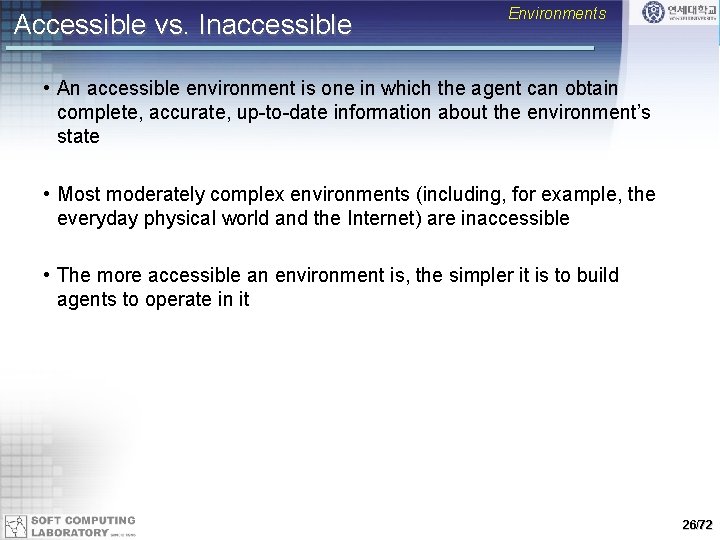

Intelligent Agents vs. AI

- Aren't agents just the AI project? Isn't building an agent what AI is all about?
  - AI aims to build systems that can (ultimately) understand natural language, recognize and understand scenes, use common sense, think creatively, etc., all of which are very hard.
  - So, don't we need to solve all of AI to build an agent?
- When building an agent, we simply want a system that can choose the right action to perform, typically in a limited domain.
- We do not have to solve all the problems of AI to build a useful agent:
  - a little intelligence goes a long way!
- Oren Etzioni, speaking about the commercial experience of NETBOT, Inc.: “We made our agents dumber and dumber… until finally they made money.”

Accessible vs. Inaccessible Environments

- An accessible environment is one in which the agent can obtain complete, accurate, up-to-date information about the environment's state.
- Most moderately complex environments (including, for example, the everyday physical world and the Internet) are inaccessible.
- The more accessible an environment is, the simpler it is to build agents to operate in it.

Deterministic vs. Non-deterministic Environments

- A deterministic environment is one in which any action has a single guaranteed effect: there is no uncertainty about the state that will result from performing an action.
- The physical world can, to all intents and purposes, be regarded as non-deterministic.
- Non-deterministic environments present greater problems for the agent designer.

Episodic vs. Non-episodic Environments

- In an episodic environment, the performance of an agent is dependent on a number of discrete episodes, with no link between the performance of the agent in different episodes.
- Episodic environments are simpler from the agent developer's perspective because the agent can decide what action to perform based only on the current episode; it need not reason about the interactions between this and future episodes.

Static vs. Dynamic Environments

- A static environment is one that can be assumed to remain unchanged except by the performance of actions by the agent.
- A dynamic environment is one that has other processes operating on it, and which hence changes in ways beyond the agent's control.
- Other processes can interfere with the agent's actions (as in concurrent systems theory).
- The physical world is a highly dynamic environment.

Discrete vs. Continuous Environments

- An environment is discrete if there are a fixed, finite number of actions and percepts in it.
- Russell and Norvig give a chess game as an example of a discrete environment, and taxi driving as an example of a continuous one.
- Continuous environments have a certain level of mismatch with computer systems.
- Discrete environments could in principle be handled by a kind of “lookup table”.

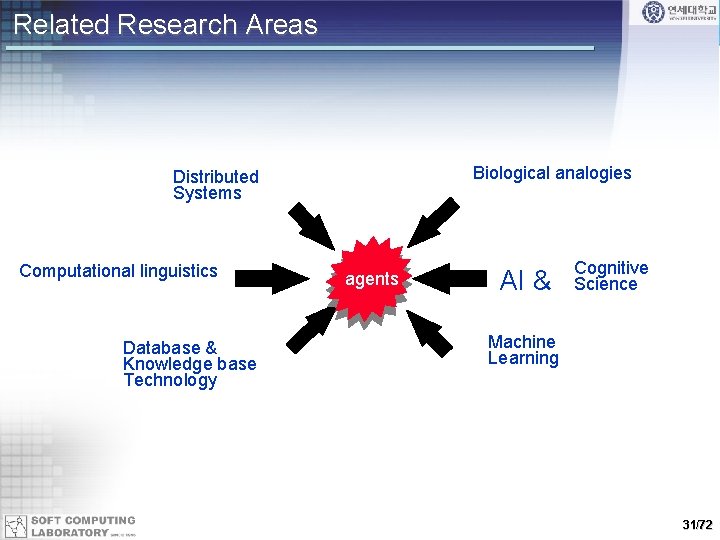

Related Research Areas

[Figure: agents at the center, drawing on AI & cognitive science, machine learning, database & knowledge-base technology, distributed systems, computational linguistics, and biological analogies]

Database and Knowledge-base Related Area

- Intelligent agents need to be able to represent and reason about a number of things, including:
  - metadata about documents and collections of documents
  - linguistic knowledge (e.g., thesauri, proper name recognition, etc.)
  - domain-specific data, information, and knowledge
  - models of other agents (human or artificial): their capabilities, performance, beliefs, desires, intentions, plans, etc.
  - tasks, task structures, plans, etc.

Distributed Computing Related Area

- Concurrency
  - analyzing and specifying protocols, e.g., deadlock and livelock prevention, fairness
  - achieving and preserving consistency
- Performance evaluation
  - visualization
  - debugging
- Exploiting the advantages of parallelism
  - multi-threaded implementation

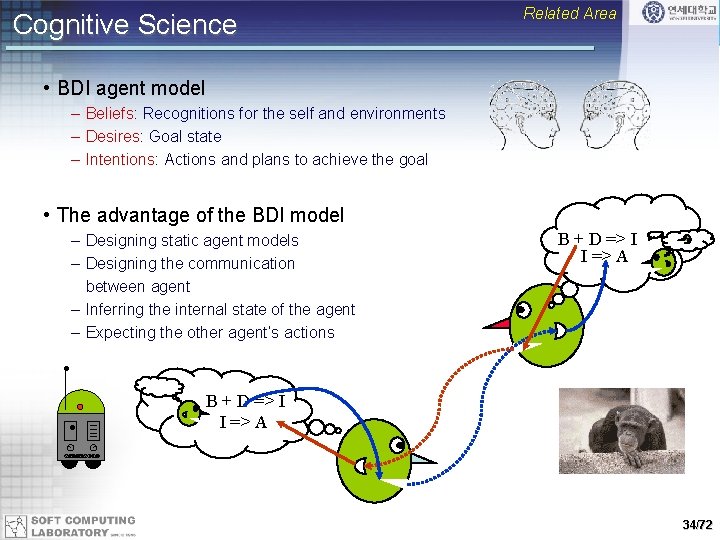

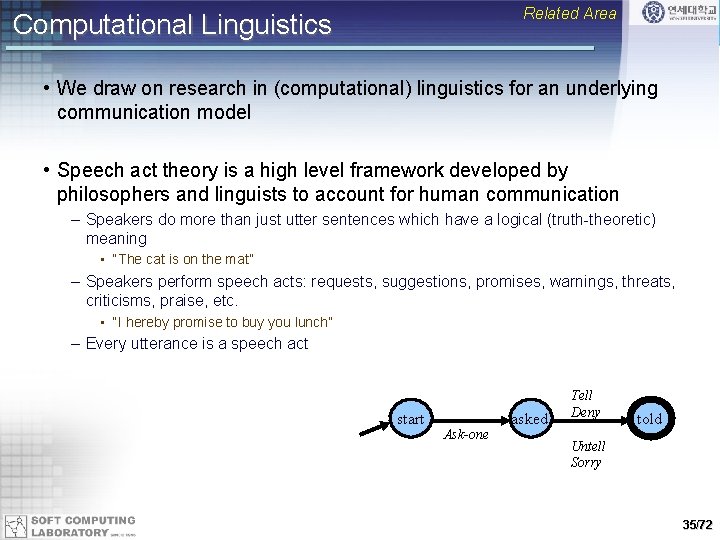

Cognitive Science Related Area

- BDI agent model
  - Beliefs: the agent's knowledge about itself and its environment
  - Desires: goal states
  - Intentions: actions and plans to achieve the goal
- Advantages of the BDI model
  - Designing static agent models
  - Designing the communication between agents
  - Inferring the internal state of an agent
  - Anticipating the other agents' actions
- Beliefs + Desires ⇒ Intentions; Intentions ⇒ Actions (B + D ⇒ I, I ⇒ A)
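The B + D ⇒ I, I ⇒ A relation can be read as a two-step deliberation cycle. The sketch below is a deliberately simplified interpretation under stated assumptions: beliefs are a set of facts, each `Desire` carries a hypothetical precondition `requires` and a ready-made plan, and intentions are simply the desires the current beliefs make achievable. Real BDI architectures are considerably richer (plan libraries, commitment strategies, reconsideration).

```python
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass(frozen=True)
class Desire:
    goal: str
    requires: str            # hypothetical: a belief that must hold for the goal to be achievable
    plan: Tuple[str, ...]    # actions that achieve the goal


def deliberate(beliefs: Set[str], desires: List[Desire]) -> List[Desire]:
    """B + D => I: commit to the desires that the current beliefs say are achievable."""
    return [d for d in desires if d.requires in beliefs]


def act(intentions: List[Desire]) -> List[str]:
    """I => A: turn intentions into a sequence of actions (here, concatenated plans)."""
    return [step for intention in intentions for step in intention.plan]


beliefs = {"door_open", "have_key"}
desires = [
    Desire("enter_room", requires="door_open", plan=("walk_in",)),
    Desire("open_safe", requires="know_code", plan=("enter_code", "open")),
]
intentions = deliberate(beliefs, desires)
print([i.goal for i in intentions])  # ['enter_room']
print(act(intentions))               # ['walk_in']
```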

Computational Linguistics Related Area

- We draw on research in (computational) linguistics for an underlying communication model.
- Speech act theory is a high-level framework developed by philosophers and linguists to account for human communication.
  - Speakers do more than just utter sentences which have a logical (truth-theoretic) meaning.
    - “The cat is on the mat”
  - Speakers perform speech acts: requests, suggestions, promises, warnings, threats, criticisms, praise, etc.
    - “I hereby promise to buy you lunch”
  - Every utterance is a speech act.
- [Figure: conversation states start, asked, and told, with transitions labeled by the performatives ask-one, tell, deny, untell, and sorry]

Econometric Models Related Area

- Economics studies how to model, predict, and control the aggregate behavior of large collections of independent agents.
- Conceptual tools include:
  - game theory
  - general equilibrium market mechanisms
  - protocols for voting and auctions
- An objective is to design good artificial markets and protocols that result in the desired behavior of our agents.
- Michigan's Digital Library project uses a market-based approach to control its agents.

Biological Analogies Related Area

- One way that agents could adapt is via an evolutionary process.
- Individual agents use their own strategies for behavior, with “death” as the punishment for poor performance and “reproduction” as the reward for good performance.
- Artificial life techniques include:
  - genetic programming
  - natural selection
  - sexual reproduction
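As a toy illustration of “death” and “reproduction”, the sketch below evolves a population of strategies, each a single number in [0, 1] (hypothetically, a probability of cooperating), against an assumed fitness function that peaks at 0.7. Each generation the worst half dies and the best half reproduces with small mutations. The fitness function, population size, and mutation scale are illustrative assumptions, and reproduction here is asexual (no crossover), so the “sexual reproduction” listed above is not modeled.

```python
import random
from typing import List


def fitness(strategy: float) -> float:
    # Assumed performance measure: best possible score at strategy = 0.7.
    return -abs(strategy - 0.7)


def evolve(pop: List[float], generations: int, rng: random.Random) -> List[float]:
    """Evolve pop in place; return the best fitness after each generation."""
    history = []
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        survivors = pop[: len(pop) // 2]  # poor performers "die"
        children = [  # good performers "reproduce" with a small Gaussian mutation
            min(1.0, max(0.0, parent + rng.gauss(0.0, 0.05))) for parent in survivors
        ]
        pop[:] = survivors + children
        history.append(max(fitness(s) for s in pop))
    return history


rng = random.Random(42)
population = [rng.random() for _ in range(20)]
best = evolve(population, generations=30, rng=rng)
print(round(best[0], 3), round(best[-1], 3))
```

Because survivors are kept unchanged, the best fitness can never decrease from one generation to the next.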

Machine Learning Related Area

- Techniques developed for machine learning are being applied to software agents to allow them to adapt to users and other agents.
- Popular techniques:
  - reasoning with uncertainty
  - decision tree induction
  - neural networks
  - reinforcement learning
  - memory-based reasoning
  - genetic algorithms
- [Figure: Rev. Thomas Bayes and his theorem]
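Reasoning with uncertainty usually rests on Bayes' theorem, which the figure on this slide refers to. For a hypothesis $H$ and evidence $E$ with $P(E) > 0$:

```latex
P(H \mid E) = \frac{P(E \mid H)\, P(H)}{P(E)},
\qquad
P(E) = P(E \mid H)\, P(H) + P(E \mid \neg H)\, P(\neg H)
```

For example, with illustrative (assumed) values $P(H) = 0.2$, $P(E \mid H) = 0.5$ and $P(E \mid \neg H) = 0.05$, we get $P(E) = 0.5 \cdot 0.2 + 0.05 \cdot 0.8 = 0.14$ and $P(H \mid E) = 0.10 / 0.14 \approx 0.71$.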

Applications of Intelligent Agents (1)

- E-mail agents: Beyond Mail, Lotus Notes, Maxims
- Scheduling agents: ContactFinder
- Desktop agents: Office 2000 Help, Open Sesame
- Web-browsing assistants: WebWatcher, Letizia
- Information filtering agents: Amalthaea, Jester, InfoFinders, Remembrance agent, PHOAKS, SiteSeer

Applications of Intelligent Agents (2)

- News-service agents: NewsHound, GroupLens, FireFly, Fab, ReferralWeb, NewT
- Comparison shopping agents: Mysimon, BargainFinder, Bazzar, Shopbor, Fido
- Brokering agents: PersonalLogic, Barnes, Kasbah, Jango, Yenta
- Auction agents: AuctionBot, AuctionWeb
- Negotiation agents: DataDetector, T@T

(10-minute break)

Formalization of Agents

- Agents
  - Standard agents
  - Purely reactive agents
  - Agents with state
- Environments
- History
- Perception

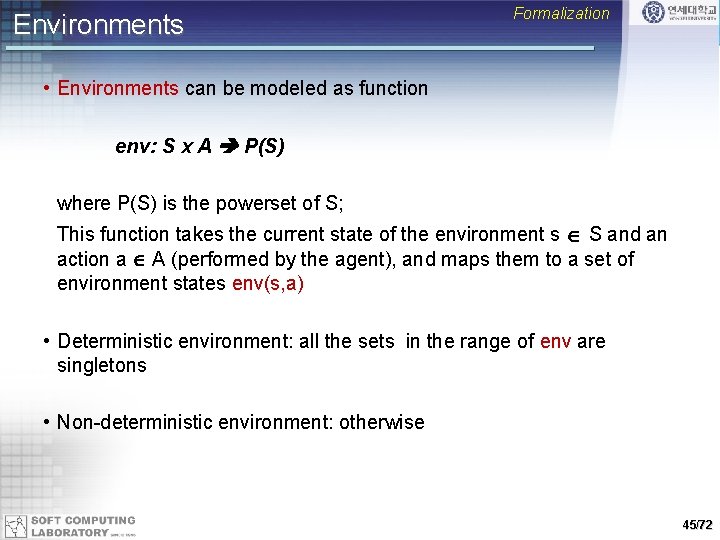

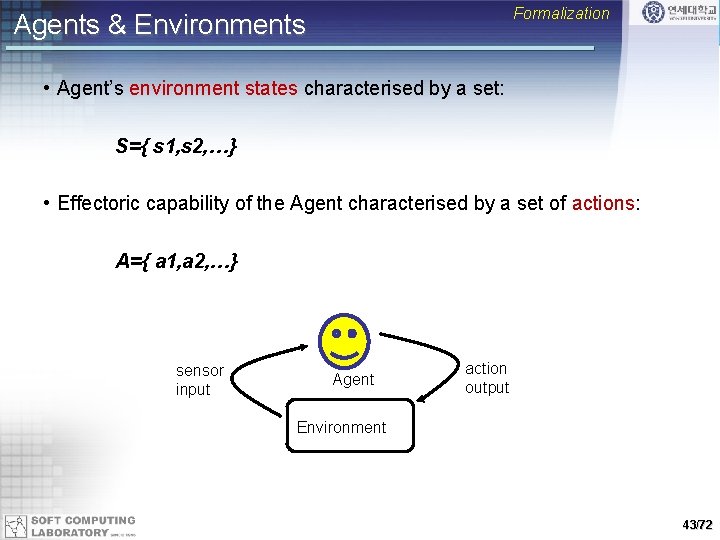

Agents & Environments (Formalization)

- The agent's environment states are characterized by a set $S = \{s_1, s_2, \ldots\}$.
- The effectoric capability of the agent is characterized by a set of actions $A = \{a_1, a_2, \ldots\}$.
- [Figure: the agent receives sensor input from the environment and sends action output to it]

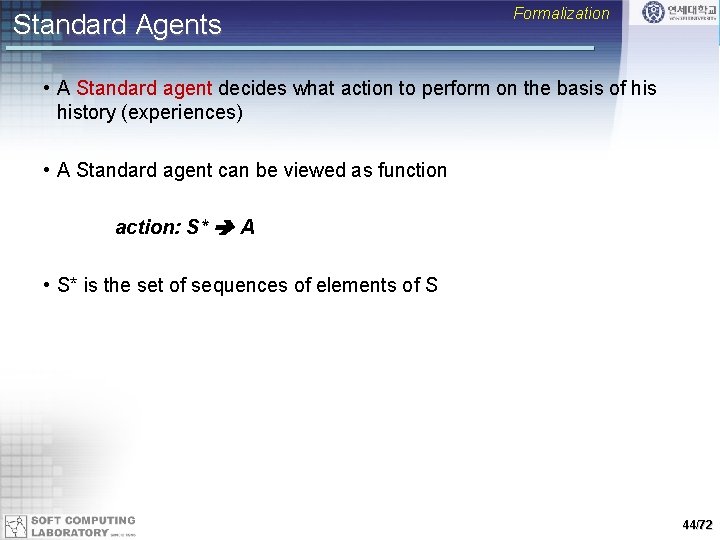

Standard Agents (Formalization)

- A standard agent decides what action to perform on the basis of its history (experiences).
- A standard agent can be viewed as a function $action: S^* \to A$.
- $S^*$ is the set of sequences of elements of $S$.
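To make the signature $action: S^* \to A$ concrete, here is a sketch assuming states and actions are plain strings (the values are hypothetical). A standard agent is any function from a sequence of states to an action; the example agent, `cautious_agent`, is made up and only shows that the decision may depend on the whole history rather than on the last state alone.

```python
from typing import Callable, Sequence

State = str   # an element of S
Action = str  # an element of A

# A standard agent: action : S* -> A
StandardAgent = Callable[[Sequence[State]], Action]


def cautious_agent(history: Sequence[State]) -> Action:
    """Hypothetical agent: raises an alarm only if 'too_cold' was seen twice in a row."""
    if len(history) >= 2 and history[-1] == history[-2] == "too_cold":
        return "alarm"
    return "wait"


agent: StandardAgent = cautious_agent
print(agent(["ok", "too_cold"]))              # wait
print(agent(["ok", "too_cold", "too_cold"]))  # alarm
```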

Environments (Formalization)

- Environments can be modeled as a function $env: S \times A \to \mathcal{P}(S)$, where $\mathcal{P}(S)$ is the powerset of $S$. This function takes the current state of the environment $s \in S$ and an action $a \in A$ (performed by the agent), and maps them to a set of environment states $env(s, a)$.
- Deterministic environment: all the sets in the range of $env$ are singletons.
- Non-deterministic environment: otherwise.
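A sketch of $env: S \times A \to \mathcal{P}(S)$ for a finite environment, stored as a table from (state, action) pairs to frozensets of possible successor states, together with the determinism test from the slide (every set in the range is a singleton). The light-switch world is a hypothetical example.

```python
from typing import Dict, FrozenSet, Tuple

State = str
Action = str
# env : S x A -> P(S), stored as a finite table
Env = Dict[Tuple[State, Action], FrozenSet[State]]


def is_deterministic(env: Env) -> bool:
    """Deterministic iff every set in the range of env is a singleton."""
    return all(len(successors) == 1 for successors in env.values())


env_det: Env = {
    ("off", "press"): frozenset({"on"}),
    ("on", "press"): frozenset({"off"}),
}
env_nondet: Env = {
    ("off", "press"): frozenset({"on", "off"}),  # the switch may fail
    ("on", "press"): frozenset({"off"}),
}
print(is_deterministic(env_det), is_deterministic(env_nondet))  # True False
```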

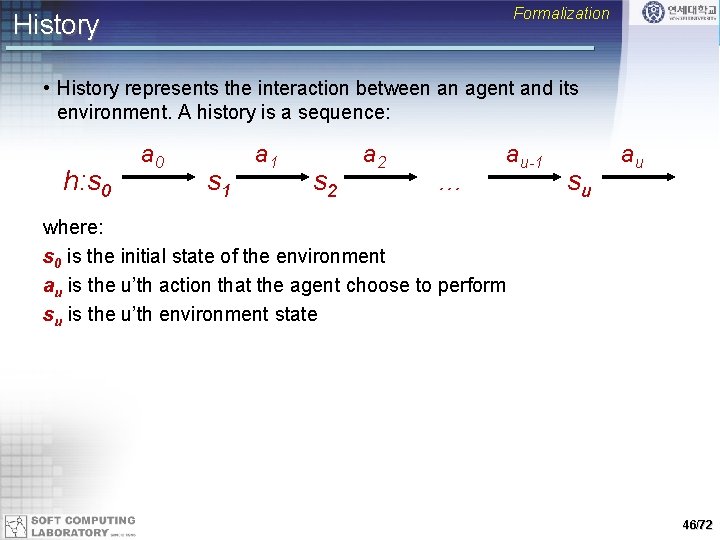

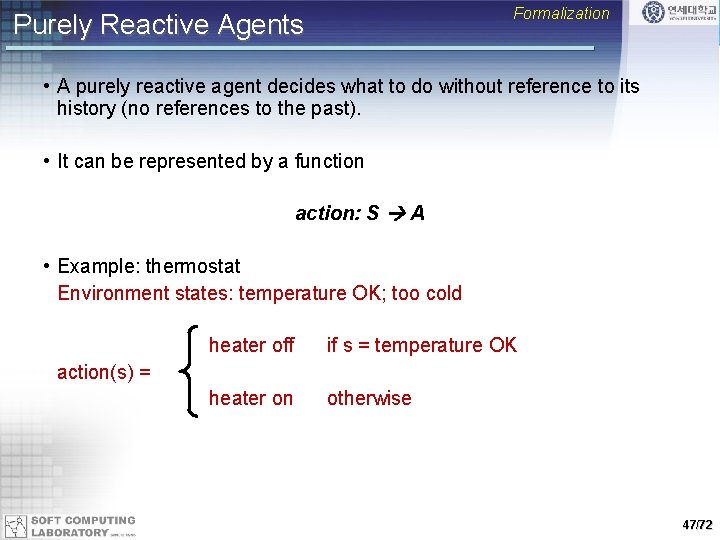

History (Formalization)

- A history represents the interaction between an agent and its environment. A history is a sequence

  $$h: s_0 \xrightarrow{a_0} s_1 \xrightarrow{a_1} s_2 \xrightarrow{a_2} \cdots \xrightarrow{a_{u-1}} s_u \xrightarrow{a_u} \cdots$$

  where:
  - $s_0$ is the initial state of the environment
  - $a_u$ is the $u$'th action that the agent chose to perform
  - $s_u$ is the $u$'th environment state
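The following sketch generates a history $s_0 \xrightarrow{a_0} s_1 \xrightarrow{a_1} \cdots$ by running a standard agent against a non-deterministic environment. Resolving the non-determinism with a seeded random choice is an assumption made only so the example runs; the formalism says nothing about how the actual successor is selected. The function name `run` and the light-switch world are hypothetical.

```python
import random
from typing import Callable, Dict, FrozenSet, List, Sequence, Tuple

State = str
Action = str
Env = Dict[Tuple[State, Action], FrozenSet[State]]


def run(env: Env, agent: Callable[[Sequence[State]], Action],
        s0: State, steps: int, rng: random.Random) -> List[str]:
    """Return the history s0, a0, s1, a1, ..., s_steps as a flat list."""
    states: List[State] = [s0]
    history: List[str] = [s0]
    for _ in range(steps):
        a = agent(states)                          # a standard agent sees all past states
        successors = sorted(env[(states[-1], a)])  # env(s, a): the set of possible next states
        s_next = rng.choice(successors)            # assumption: pick one outcome at random
        states.append(s_next)
        history += [a, s_next]
    return history


env: Env = {
    ("off", "press"): frozenset({"on", "off"}),  # pressing may fail to switch on
    ("on", "press"): frozenset({"off"}),
}
h = run(env, lambda states: "press", "off", steps=4, rng=random.Random(0))
print(" -> ".join(h))
```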

Purely Reactive Agents (Formalization)

- A purely reactive agent decides what to do without reference to its history (no references to the past).
- It can be represented by a function $action: S \to A$.
- Example: thermostat
  - Environment states: temperature OK; too cold
  - Action function:

    $$action(s) = \begin{cases} \text{heater off} & \text{if } s = \text{temperature OK} \\ \text{heater on} & \text{otherwise} \end{cases}$$
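The thermostat as a purely reactive agent $action: S \to A$, written in Python; the state and action strings are chosen for illustration.

```python
def thermostat(s: str) -> str:
    """Purely reactive: the decision depends only on the current state s."""
    if s == "temperature OK":
        return "heater off"
    return "heater on"  # every other state (here: "too cold")


for state in ["temperature OK", "too cold"]:
    print(state, "->", thermostat(state))
```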

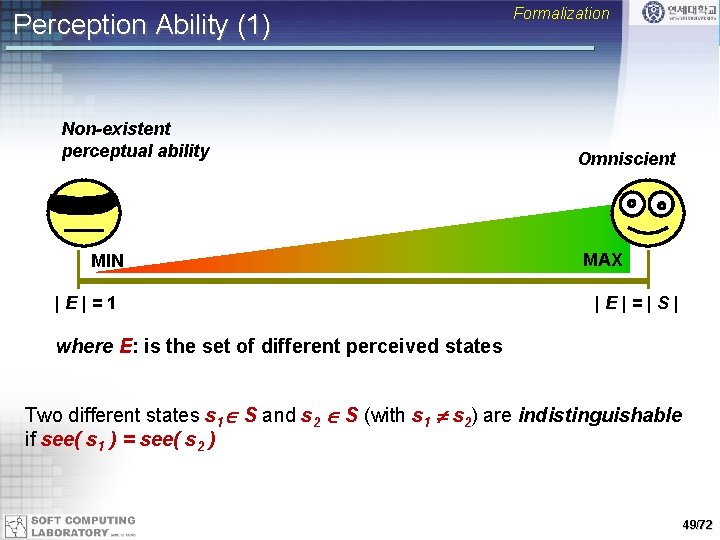

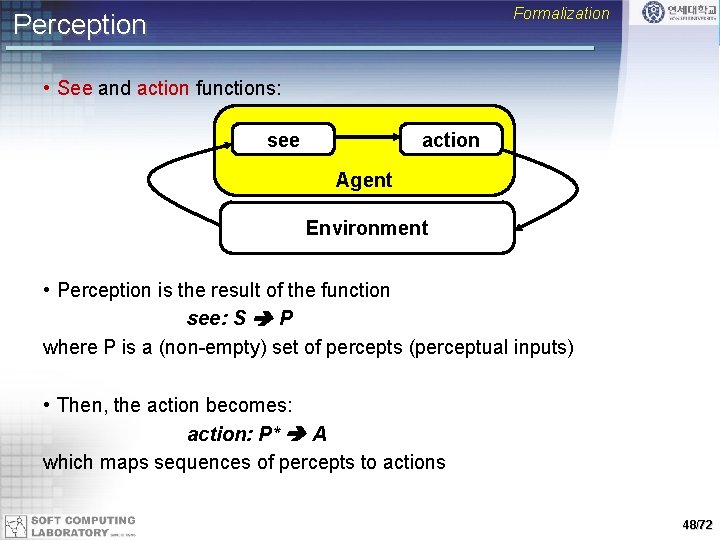

Perception (Formalization)

- The agent is split into see and action functions.
- [Figure: see maps environment states to percepts; action maps percepts to actions on the environment]
- Perception is the result of the function $see: S \to P$, where $P$ is a (non-empty) set of percepts (perceptual inputs).
- Then the action function becomes $action: P^* \to A$, which maps sequences of percepts to actions.
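A sketch of the split into $see: S \to P$ and $action: P^* \to A$. It assumes, hypothetically, that an environment state records a temperature and a fact the agent cannot sense (`war`), and that 18 degrees is the "cold" threshold; only the temperature reaches the percept.

```python
from dataclasses import dataclass
from typing import Sequence

Percept = str


@dataclass(frozen=True)
class State:
    temperature: float
    war: bool  # part of the environment state, but not perceivable by this agent


def see(s: State) -> Percept:
    """see : S -> P. Only the temperature is observed (18 degrees is an assumed threshold)."""
    return "cold" if s.temperature < 18 else "ok"


def action(percepts: Sequence[Percept]) -> str:
    """action : P* -> A. Decides from the sequence of percepts received so far."""
    return "heater on" if percepts and percepts[-1] == "cold" else "heater off"


states = [State(21, False), State(15, True)]
percepts = [see(s) for s in states]
print(percepts, action(percepts))  # ['ok', 'cold'] heater on
```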

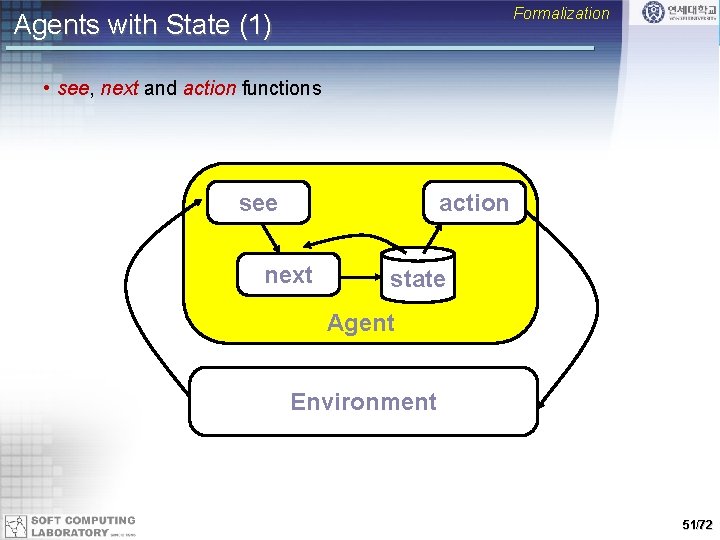

Perception Ability (1)

- Let $E$ be the set of different perceived states.
- Non-existent perceptual ability: $|E| = 1$ (minimum)
- Omniscient: $|E| = |S|$ (maximum)
- Two different states $s_1 \in S$ and $s_2 \in S$ (with $s_1 \neq s_2$) are indistinguishable if $see(s_1) = see(s_2)$.

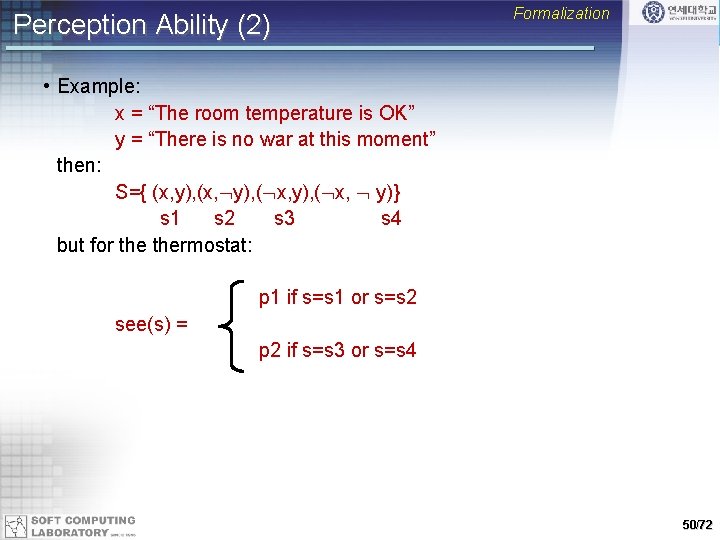

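Perception Ability (2)

- Example:
  - $x$ = “The room temperature is OK”
  - $y$ = “There is no war at this moment”
- Then $S = \{(x, y), (x, \neg y), (\neg x, y), (\neg x, \neg y)\}$, whose elements are $s_1, s_2, s_3, s_4$ respectively.
- But for the thermostat:

  $$see(s) = \begin{cases} p_1 & \text{if } s = s_1 \text{ or } s = s_2 \\ p_2 & \text{if } s = s_3 \text{ or } s = s_4 \end{cases}$$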
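The example can be checked mechanically: enumerate the four states, apply the thermostat's see function, and group states with the same percept into indistinguishable classes. The number of classes is $|E|$, which lies between 1 and $|S|$. States are encoded as `(x, y)` boolean pairs, an encoding assumed for this sketch.

```python
from itertools import product
from typing import Dict, List, Tuple

# A state is a pair (x, y): x = "room temperature is OK", y = "there is no war".
State = Tuple[bool, bool]
# Order: (x, y), (x, not y), (not x, y), (not x, not y), i.e. s1..s4.
S: List[State] = list(product([True, False], repeat=2))


def see(s: State) -> str:
    x, _y = s
    return "p1" if x else "p2"  # the thermostat only senses x


def perceived_classes(states: List[State]) -> Dict[str, List[State]]:
    """Group states that are indistinguishable, i.e. map to the same percept."""
    classes: Dict[str, List[State]] = {}
    for s in states:
        classes.setdefault(see(s), []).append(s)
    return classes


E = perceived_classes(S)
print(len(E), len(S))  # 2 4
print(E["p1"])         # [(True, True), (True, False)]
```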

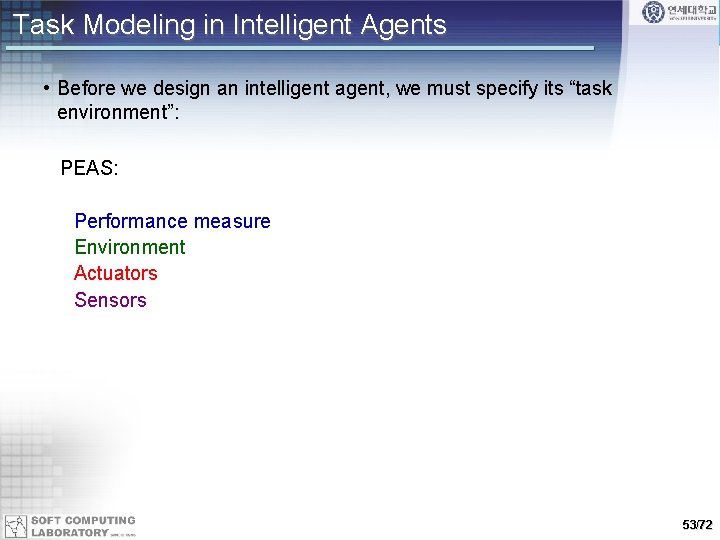

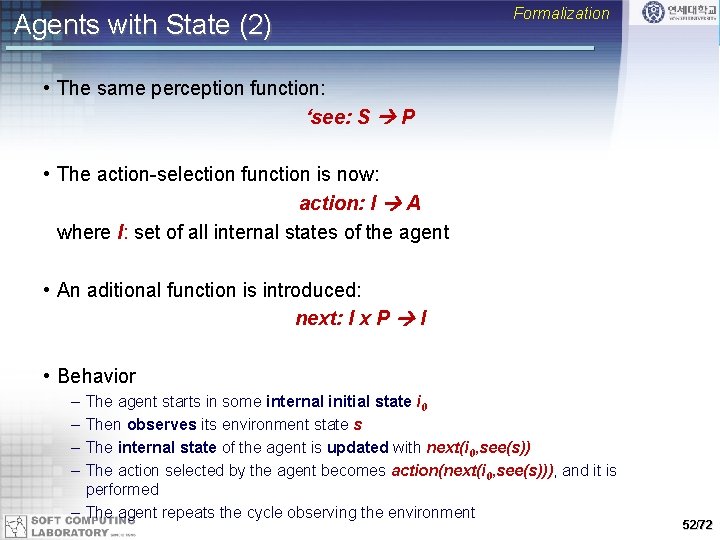

Agents with State (1)

- see, next, and action functions
- [Figure: inside the agent, see produces percepts from the environment, next updates the internal state, and action selects an action on the environment]

Agents with State (2)

- The same perception function: $see: S \to P$
- The action-selection function is now $action: I \to A$, where $I$ is the set of all internal states of the agent.
- An additional function is introduced: $next: I \times P \to I$
- Behavior:
  - The agent starts in some initial internal state $i_0$.
  - It then observes its environment state $s$.
  - The internal state of the agent is updated with $next(i_0, see(s))$.
  - The action selected by the agent is $action(next(i_0, see(s)))$, and it is performed.
  - The agent repeats the cycle, observing the environment again.
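The see/next/action cycle can be written as a loop. In this minimal sketch the environment is stubbed as a fixed list of temperatures, and the internal state is a hypothetical counter of consecutive "cold" percepts, so the agent switches the heater on only after two cold readings in a row. The names `run_agent`, `next_state` and `choose` are illustrative (`next_` avoids shadowing Python's built-in `next`).

```python
from typing import Callable, List, TypeVar

S = TypeVar("S")  # environment states
P = TypeVar("P")  # percepts
I = TypeVar("I")  # internal states
A = TypeVar("A")  # actions


def run_agent(see: Callable[[S], P], next_: Callable[[I, P], I],
              action: Callable[[I], A], i0: I, states: List[S]) -> List[A]:
    """Observe, update the internal state with next, then select an action."""
    i = i0
    actions: List[A] = []
    for s in states:                # the environment is stubbed as a sequence of states
        i = next_(i, see(s))        # i := next(i, see(s))
        actions.append(action(i))   # perform action(i)
    return actions


def see(temp: float) -> str:
    return "cold" if temp < 18 else "ok"


def next_state(cold_count: int, percept: str) -> int:
    return cold_count + 1 if percept == "cold" else 0


def choose(cold_count: int) -> str:
    return "heater on" if cold_count >= 2 else "heater off"


print(run_agent(see, next_state, choose, 0, [20, 17, 16, 19]))
# ['heater off', 'heater off', 'heater on', 'heater off']
```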

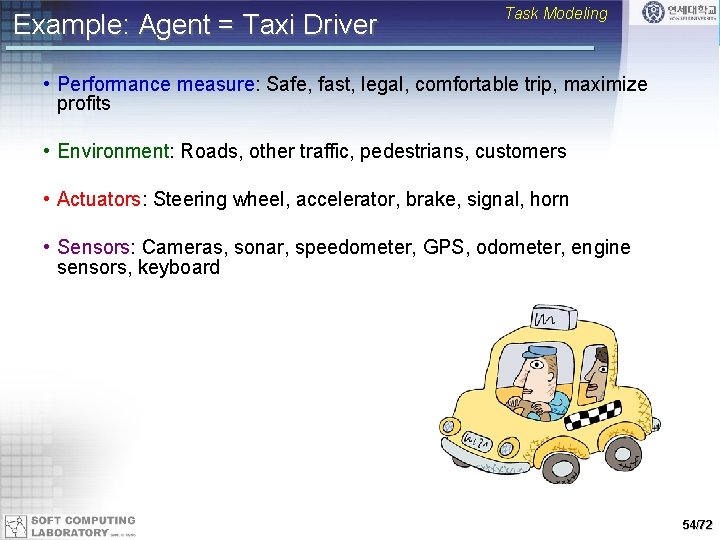

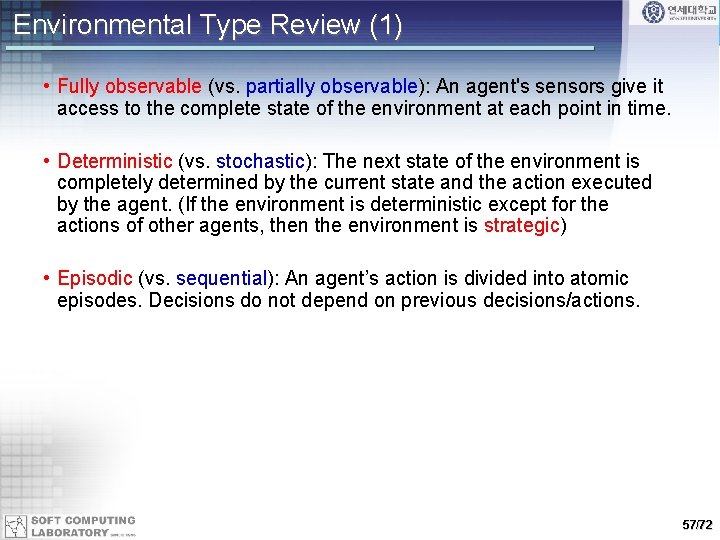

Task Modeling in Intelligent Agents

- Before we design an intelligent agent, we must specify its “task environment”, PEAS:
  - Performance measure
  - Environment
  - Actuators
  - Sensors

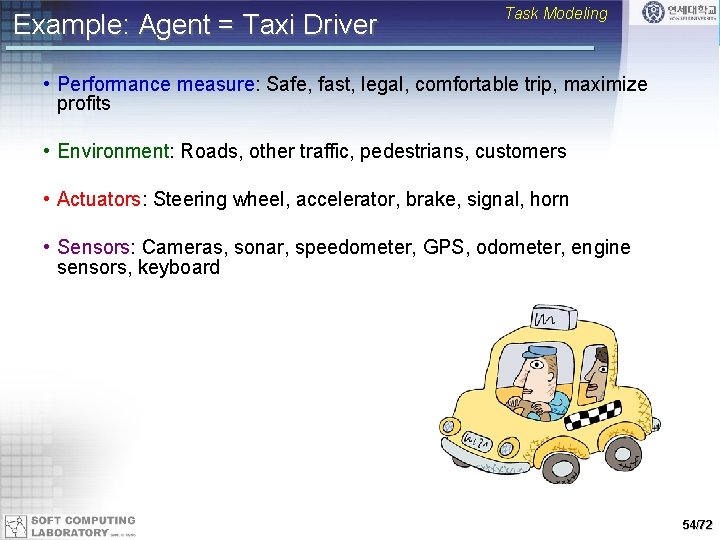

Example: Agent = Taxi Driver (Task Modeling)

- Performance measure: safe, fast, legal, comfortable trip; maximize profits
- Environment: roads, other traffic, pedestrians, customers
- Actuators: steering wheel, accelerator, brake, signal, horn
- Sensors: cameras, sonar, speedometer, GPS, odometer, engine sensors, keyboard

Example: Agent = Medical Diagnosis System (Task Modeling)

- Performance measure: healthy patient; minimize costs and lawsuits
- Environment: patient, hospital, staff
- Actuators: screen display (questions, tests, diagnoses, treatments, referrals)
- Sensors: keyboard (entry of symptoms, findings, patient's answers)

Example: Agent = Part-picking Robot (Task Modeling)

- Performance measure: percentage of parts in correct bins
- Environment: conveyor belt with parts, bins
- Actuators: jointed arm and hand
- Sensors: camera, joint angle sensors

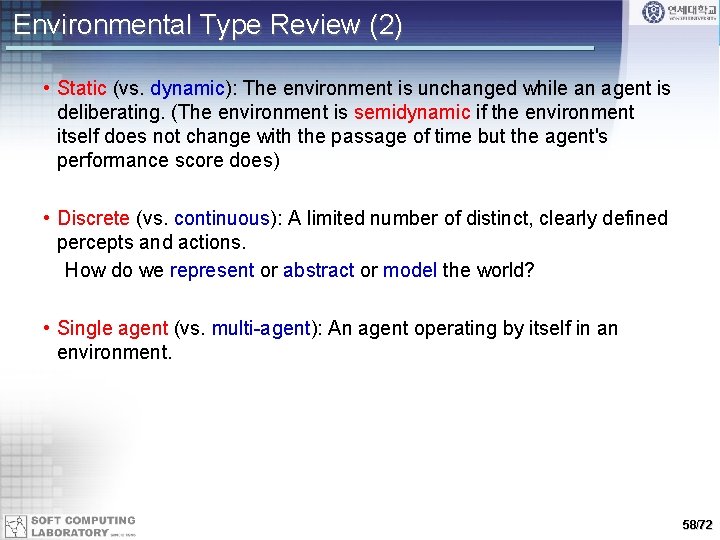

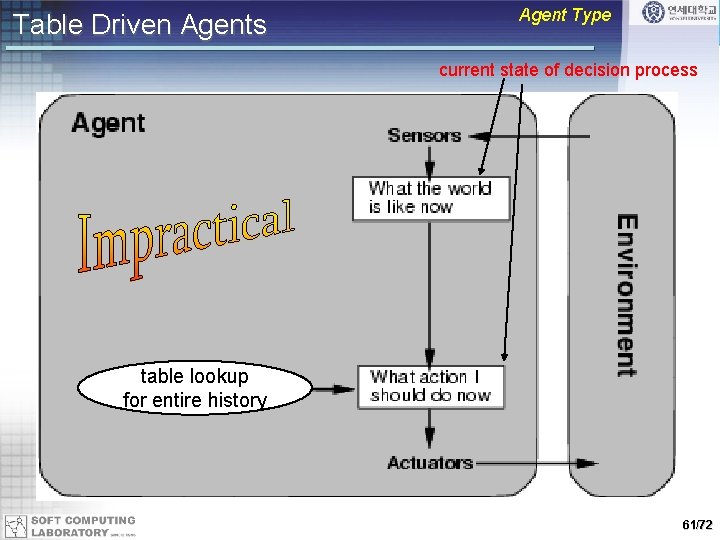

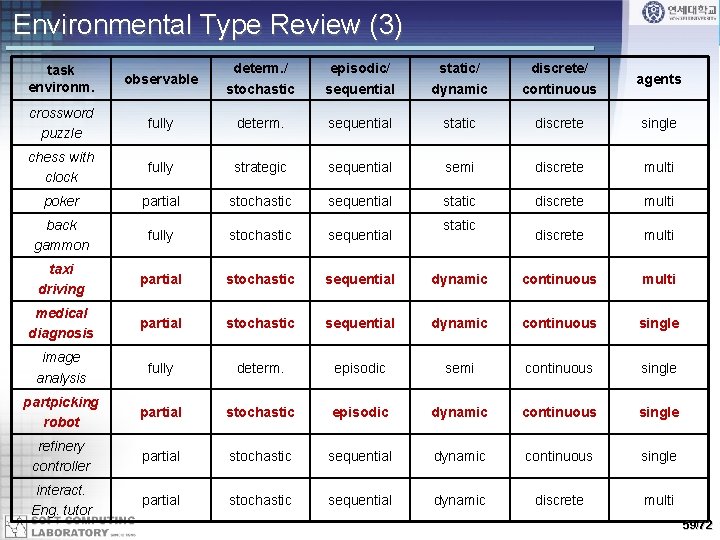

Environmental Type Review (1)

- Fully observable (vs. partially observable): the agent's sensors give it access to the complete state of the environment at each point in time.
- Deterministic (vs. stochastic): the next state of the environment is completely determined by the current state and the action executed by the agent. (If the environment is deterministic except for the actions of other agents, then the environment is strategic.)
- Episodic (vs. sequential): the agent's experience is divided into atomic episodes, and decisions do not depend on previous decisions/actions.

Environmental Type Review (2)

- Static (vs. dynamic): the environment is unchanged while the agent is deliberating. (The environment is semidynamic if the environment itself does not change with the passage of time but the agent's performance score does.)
- Discrete (vs. continuous): a limited number of distinct, clearly defined percepts and actions. How do we represent, abstract, or model the world?
- Single agent (vs. multi-agent): an agent operating by itself in an environment.

Environmental Type Review (3)

| Task environment | Observable | Deterministic / stochastic | Episodic / sequential | Static / dynamic | Discrete / continuous | Agents |
|---|---|---|---|---|---|---|
| Crossword puzzle | Fully | Deterministic | Sequential | Static | Discrete | Single |
| Chess with clock | Fully | Strategic | Sequential | Semi | Discrete | Multi |
| Poker | Partial | Stochastic | Sequential | Static | Discrete | Multi |
| Backgammon | Fully | Stochastic | Sequential | Static | Discrete | Multi |
| Taxi driving | Partial | Stochastic | Sequential | Dynamic | Continuous | Multi |
| Medical diagnosis | Partial | Stochastic | Sequential | Dynamic | Continuous | Single |
| Image analysis | Fully | Deterministic | Episodic | Semi | Continuous | Single |
| Part-picking robot | Partial | Stochastic | Episodic | Dynamic | Continuous | Single |
| Refinery controller | Partial | Stochastic | Sequential | Dynamic | Continuous | Single |
| Interactive English tutor | Partial | Stochastic | Sequential | Dynamic | Discrete | Multi |

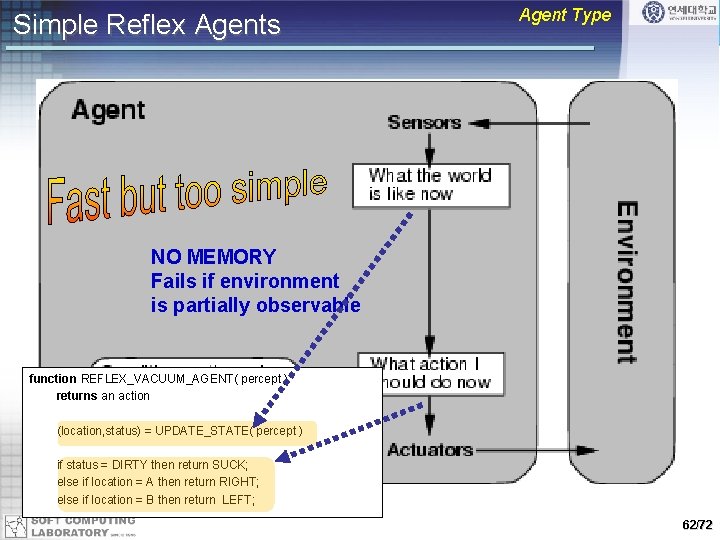

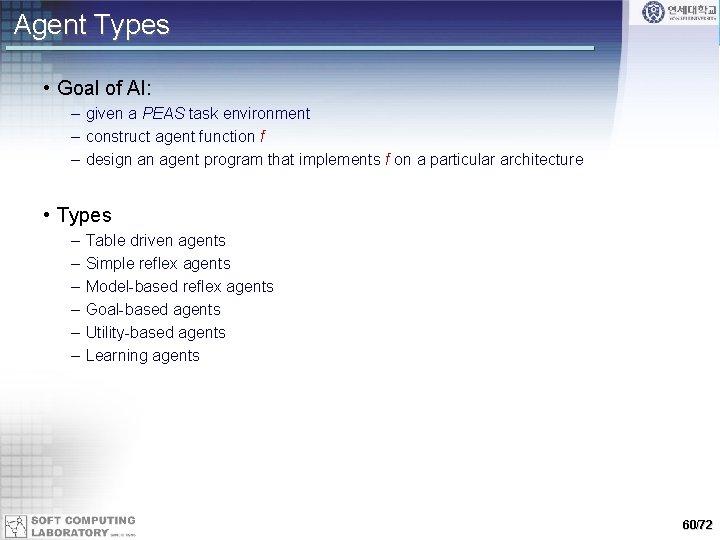

Agent Types

- Goal of AI:
  - given a PEAS task environment,
  - construct an agent function f, and
  - design an agent program that implements f on a particular architecture.
- Types:
  - Table-driven agents
  - Simple reflex agents
  - Model-based reflex agents
  - Goal-based agents
  - Utility-based agents
  - Learning agents

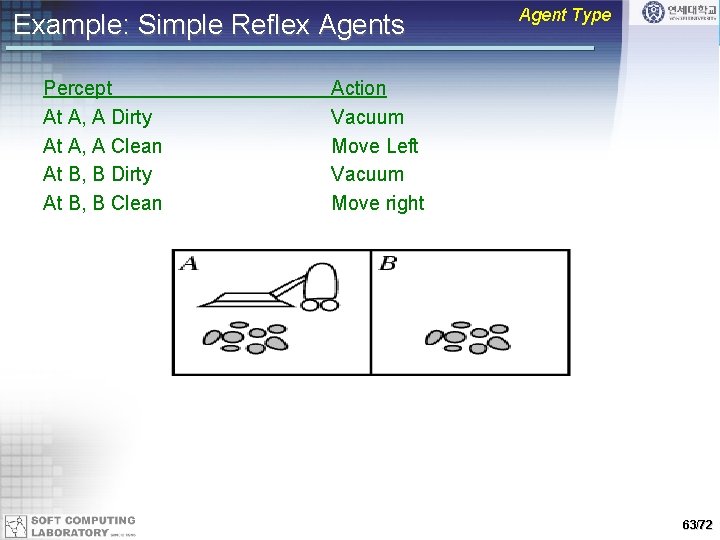

Table-Driven Agents (Agent Type)

[Figure: the current state of the decision process is obtained by a table lookup for the entire history]
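A table-driven agent looks up its entire percept history in a table. The sketch assumes a tiny hand-written table for the vacuum world (entries are illustrative) and a hypothetical `"NoOp"` action for missing entries. The last lines show why the approach does not scale: with $|P|$ distinct percepts, histories up to length $T$ need $\sum_{t=1}^{T} |P|^t$ entries.

```python
from typing import Dict, List, Tuple

Percept = Tuple[str, str]  # (location, status)


class TableDrivenAgent:
    def __init__(self, table: Dict[Tuple[Percept, ...], str]) -> None:
        self.table = table
        self.percepts: List[Percept] = []  # the entire percept history

    def __call__(self, percept: Percept) -> str:
        self.percepts.append(percept)
        return self.table.get(tuple(self.percepts), "NoOp")  # lookup on the whole history


table = {
    (("A", "Dirty"),): "Suck",
    (("A", "Clean"),): "Right",
    (("A", "Dirty"), ("A", "Clean")): "Right",
    (("A", "Clean"), ("B", "Dirty")): "Suck",
}
agent = TableDrivenAgent(table)
print(agent(("A", "Dirty")), agent(("A", "Clean")))  # Suck Right

# Table size for histories of length 1..T with |P| = 4 possible percepts:
n_percepts, T = 4, 10
print(sum(n_percepts ** t for t in range(1, T + 1)))  # 1398100
```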

Simple Reflex Agents (Agent Type)

No memory: fails if the environment is partially observable.

Example, vacuum-cleaner world:

    function REFLEX_VACUUM_AGENT(percept) returns an action
        (location, status) = UPDATE_STATE(percept)
        if status = DIRTY then return SUCK
        else if location = A then return RIGHT
        else if location = B then return LEFT
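The REFLEX_VACUUM_AGENT pseudocode translated into runnable Python. It assumes the percept is already a `(location, status)` pair, so UPDATE_STATE reduces to unpacking it; raising an error for an unknown location is a design choice the pseudocode leaves open.

```python
from typing import Tuple


def reflex_vacuum_agent(percept: Tuple[str, str]) -> str:
    """Simple reflex agent: condition-action rules on the current percept only."""
    location, status = percept  # UPDATE_STATE(percept), assuming a (location, status) pair
    if status == "DIRTY":
        return "SUCK"
    if location == "A":
        return "RIGHT"
    if location == "B":
        return "LEFT"
    raise ValueError(f"unknown location: {location!r}")


for p in [("A", "DIRTY"), ("A", "CLEAN"), ("B", "DIRTY"), ("B", "CLEAN")]:
    print(p, "->", reflex_vacuum_agent(p))
```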

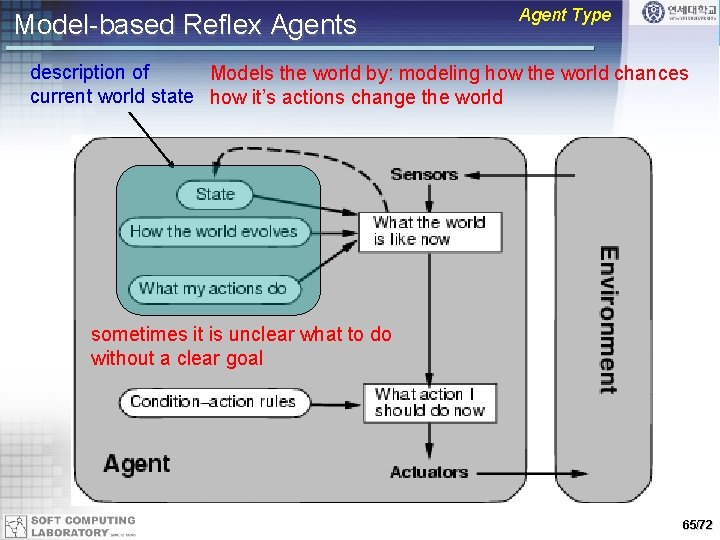

Example: Simple Reflex Agents (Agent Type)

| Percept | Action |
|---|---|
| At A, A dirty | Vacuum |
| At A, A clean | Move right |
| At B, B dirty | Vacuum |
| At B, B clean | Move left |

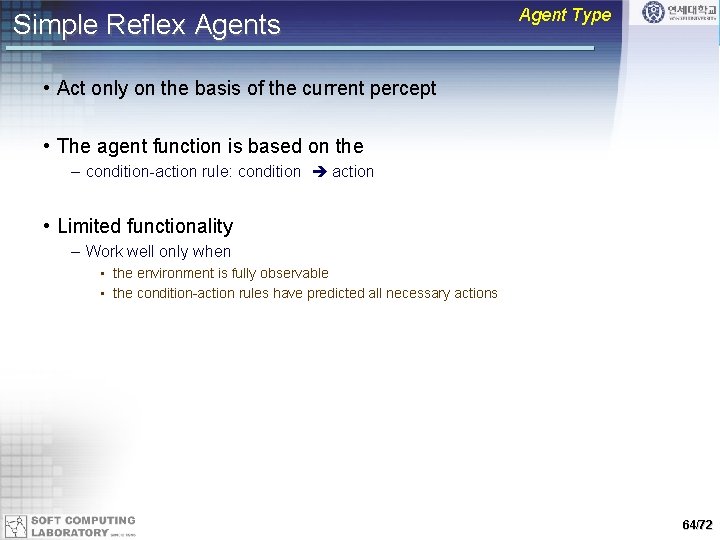

Simple Reflex Agents (Agent Type)

- Act only on the basis of the current percept.
- The agent function is based on the condition-action rule: if condition then action.
- Limited functionality
  - Works well only when
    - the environment is fully observable, and
    - the condition-action rules have anticipated all necessary actions.

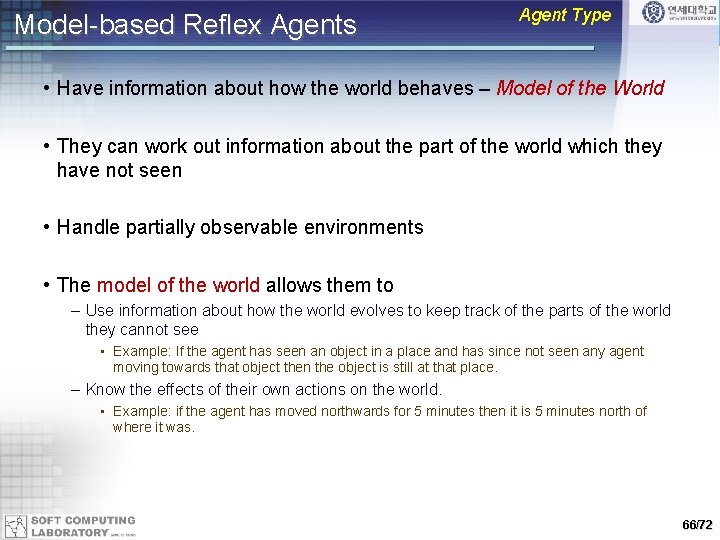

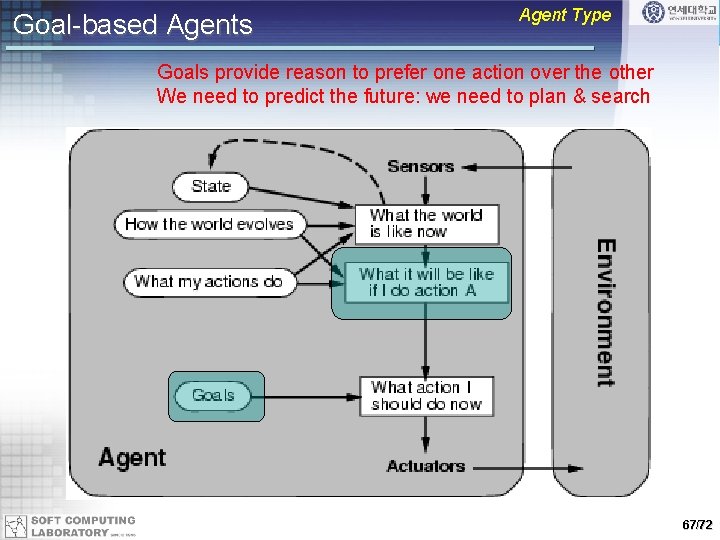

Model-based Reflex Agents (Agent Type)

- Models the world by modeling:
  - how the world changes
  - how its actions change the world
- Keeps a description of the current world state.
- Sometimes it is unclear what to do without a clear goal.

Model-based Reflex Agents (Agent Type)

- Have information about how the world behaves: a model of the world.
- They can work out information about the parts of the world they have not seen.
- They handle partially observable environments.
- The model of the world allows them to
  - use information about how the world evolves to keep track of the parts of the world they cannot see.
    - Example: if the agent has seen an object in a place and has since not seen any agent moving towards that object, then the object is still at that place.
  - know the effects of their own actions on the world.
    - Example: if the agent has moved northwards for 5 minutes, then it is 5 minutes north of where it was.
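A sketch of the state-tracking part of a model-based reflex agent, covering both examples above: the agent remembers where it last saw each object (assuming, as in the first example, that unseen objects stay put), and it updates its own position from the known effect of its moves. The percept format, grid coordinates, and names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

Pos = Tuple[int, int]


@dataclass
class ModelBasedAgent:
    position: Pos = (0, 0)
    last_seen: Dict[str, Pos] = field(default_factory=dict)  # model of unseen parts of the world

    def update(self, visible: Dict[str, Pos], moved: Optional[Pos] = None) -> None:
        # Effect of the agent's own action: moving by (dx, dy) changes its position.
        if moved is not None:
            self.position = (self.position[0] + moved[0], self.position[1] + moved[1])
        # How the world evolves (assumption): unseen objects stay where they were last seen.
        self.last_seen.update(visible)

    def where_is(self, obj: str) -> Optional[Pos]:
        return self.last_seen.get(obj)


agent = ModelBasedAgent()
agent.update({"key": (2, 3)})    # sees the key
agent.update({}, moved=(0, 5))   # moves north 5 units; the key is now out of sight
print(agent.position, agent.where_is("key"))  # (0, 5) (2, 3)
```

The condition-action rules of the agent would then be evaluated against this internal model rather than against the raw percept.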

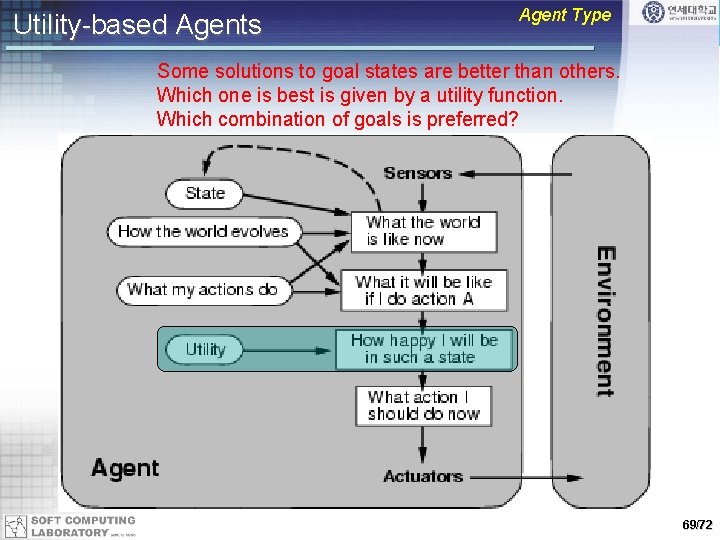

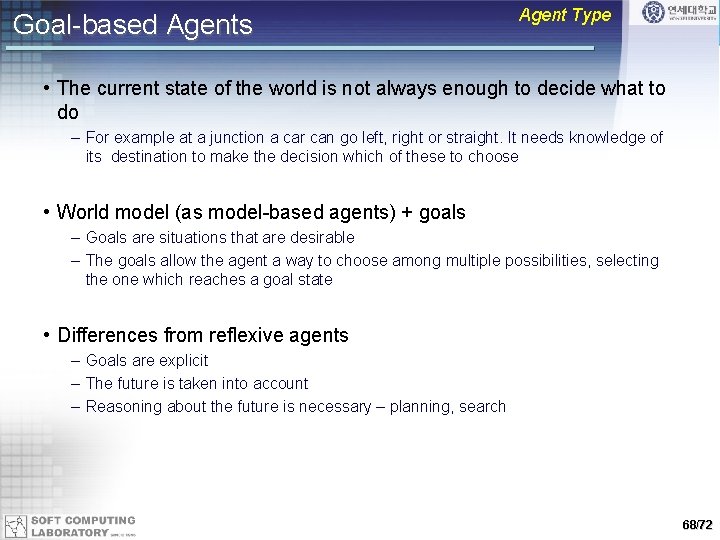

Goal-based Agents (Agent Type)

- Goals provide a reason to prefer one action over another.
- We need to predict the future: we need to plan and search.

Goal-based Agents (Agent Type)

- The current state of the world is not always enough to decide what to do.
  - For example, at a junction a car can go left, right, or straight. It needs knowledge of its destination to decide which of these to choose.
- World model (as in model-based agents) + goals
  - Goals are situations that are desirable.
  - Goals give the agent a way to choose among multiple possibilities, selecting the one that reaches a goal state.
- Differences from reflex agents
  - Goals are explicit.
  - The future is taken into account.
  - Reasoning about the future is necessary: planning, search.
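The junction example can be made concrete with search. The sketch below uses breadth-first search over a hypothetical road map (junction and destination names are made up) to find a shortest action sequence to the goal; the agent would then execute the first action of that plan. BFS is just one possible search method.

```python
from collections import deque
from typing import Deque, Dict, List, Optional, Tuple

# Hypothetical road map: junction -> {action: next place}
ROADS: Dict[str, Dict[str, str]] = {
    "J1": {"left": "J2", "straight": "J3", "right": "J4"},
    "J2": {"straight": "Mall"},
    "J3": {"left": "Station"},
    "J4": {},
}


def plan(start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search for a shortest action sequence from start to goal."""
    frontier: Deque[Tuple[str, List[str]]] = deque([(start, [])])
    visited = {start}
    while frontier:
        node, actions = frontier.popleft()
        if node == goal:
            return actions
        for action, nxt in ROADS.get(node, {}).items():
            if nxt not in visited:
                visited.add(nxt)
                frontier.append((nxt, actions + [action]))
    return None  # goal unreachable


print(plan("J1", "Station"))  # ['straight', 'left']
print(plan("J1", "Mall"))     # ['left', 'straight']
```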

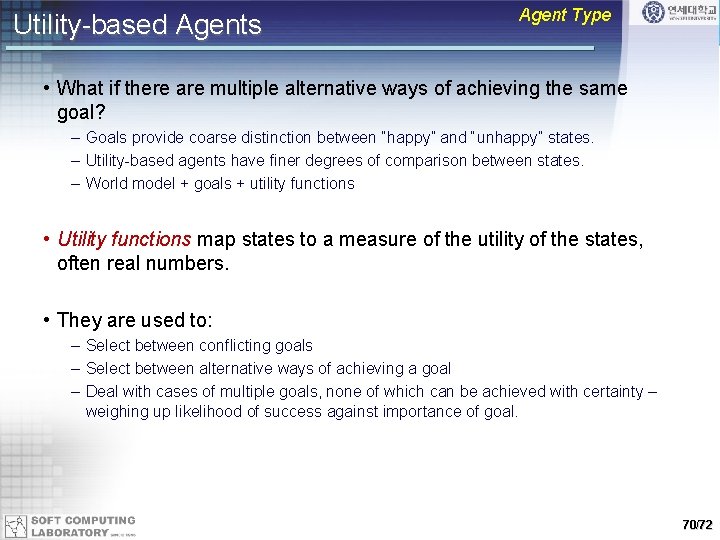

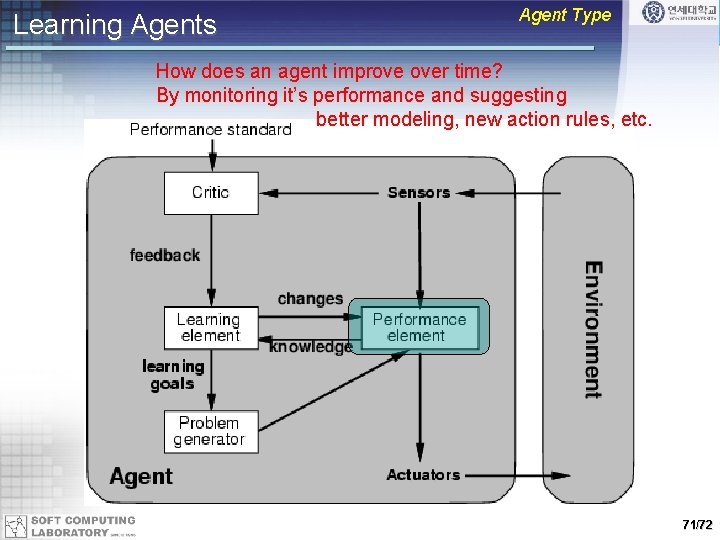

Utility-based Agents (Agent Type)

- Some solutions to goal states are better than others; which one is best is given by a utility function.
- Which combination of goals is preferred?

Utility-based Agents (Agent Type)

- What if there are multiple alternative ways of achieving the same goal?
  - Goals provide a coarse distinction between “happy” and “unhappy” states.
  - Utility-based agents have finer degrees of comparison between states.
  - World model + goals + utility functions
- Utility functions map states to a measure of the utility of the states, often real numbers.
- They are used to:
  - select between conflicting goals;
  - select between alternative ways of achieving a goal;
  - deal with cases of multiple goals, none of which can be achieved with certainty, weighing up the likelihood of success against the importance of each goal.
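A sketch of choosing between alternative ways of reaching the same goal by maximizing expected utility. The routes, outcome probabilities, and utility values are invented for illustration; the point is that two actions that both "reach the goal" can still be ranked once likelihood of success is weighed against how good each outcome is.

```python
from typing import Dict, List, Tuple

# action -> list of (probability, resulting state); all values are hypothetical
OUTCOMES: Dict[str, List[Tuple[float, str]]] = {
    "highway": [(0.8, "arrive_fast"), (0.2, "stuck_in_jam")],
    "back_roads": [(1.0, "arrive_slow")],
}
# Utility function: maps states to real numbers
UTILITY: Dict[str, float] = {"arrive_fast": 10.0, "arrive_slow": 6.0, "stuck_in_jam": 1.0}


def expected_utility(action: str) -> float:
    return sum(p * UTILITY[state] for p, state in OUTCOMES[action])


def choose(actions: List[str]) -> str:
    return max(actions, key=expected_utility)


for a in OUTCOMES:
    print(a, expected_utility(a))
print("choose:", choose(list(OUTCOMES)))
```

Here the highway scores 0.8 · 10 + 0.2 · 1 = 8.2 against 6.0 for the back roads, so the agent accepts a small risk of a jam in exchange for the better likely outcome.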

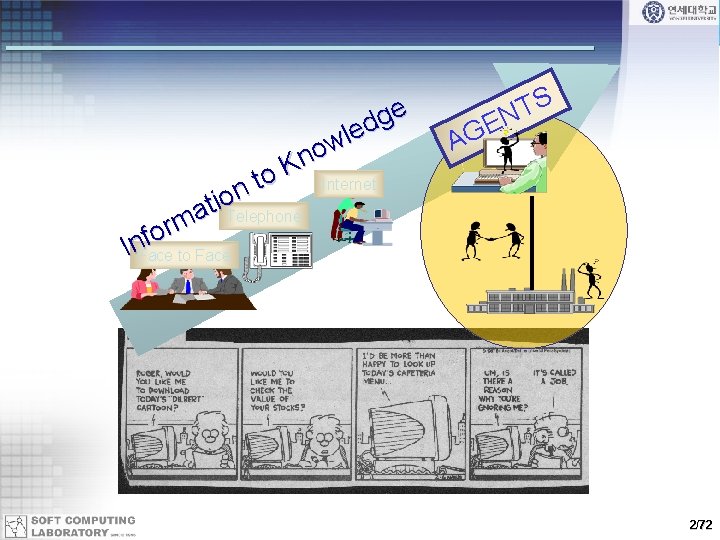

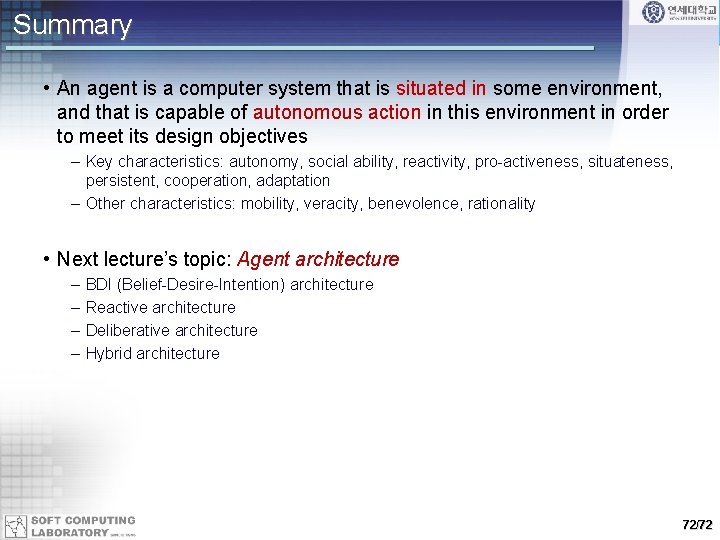

Learning Agents (Agent Type)

- How does an agent improve over time? By monitoring its performance and suggesting better modeling, new action rules, etc.
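A minimal sketch of monitoring performance and improving the action rules, under stated assumptions: a critic reports a numeric reward after each action, the learning element keeps a running-average estimate of each rule's value, and occasional random exploration plays the role of suggesting new things to try. This is a simple bandit-style scheme, one of many possible learning methods, not a general learning-agent architecture; the reward means and exploration rate are hypothetical.

```python
import random
from typing import Dict, List


class LearningAgent:
    def __init__(self, actions: List[str], epsilon: float, rng: random.Random) -> None:
        self.values: Dict[str, float] = {a: 0.0 for a in actions}  # learned estimates
        self.counts: Dict[str, int] = {a: 0 for a in actions}
        self.epsilon = epsilon  # probability of trying a random action (exploration)
        self.rng = rng

    def act(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.values))  # try something new
        return max(self.values, key=self.values.get)   # exploit what was learned

    def learn(self, action: str, reward: float) -> None:
        """Learning element: incremental average of the rewards reported by the critic."""
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]


# Hypothetical environment: mean reward of each rule, unknown to the agent.
MEAN_REWARD = {"rule_a": 0.2, "rule_b": 0.8}
rng = random.Random(1)
agent = LearningAgent(list(MEAN_REWARD), epsilon=0.1, rng=rng)
for _ in range(500):
    a = agent.act()
    agent.learn(a, MEAN_REWARD[a] + rng.gauss(0.0, 0.1))  # critic: noisy reward
print({a: round(v, 2) for a, v in agent.values.items()})
```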

Summary

- An agent is a computer system that is situated in some environment, and that is capable of autonomous action in this environment in order to meet its design objectives.
  - Key characteristics: autonomy, social ability, reactivity, pro-activeness, situatedness, persistence, cooperation, adaptation
  - Other characteristics: mobility, veracity, benevolence, rationality
- Next lecture's topic: agent architecture
  - BDI (Belief-Desire-Intention) architecture
  - Reactive architecture
  - Deliberative architecture
  - Hybrid architecture