Social Media Analytics prediction and anomaly detection for

- Slides: 24

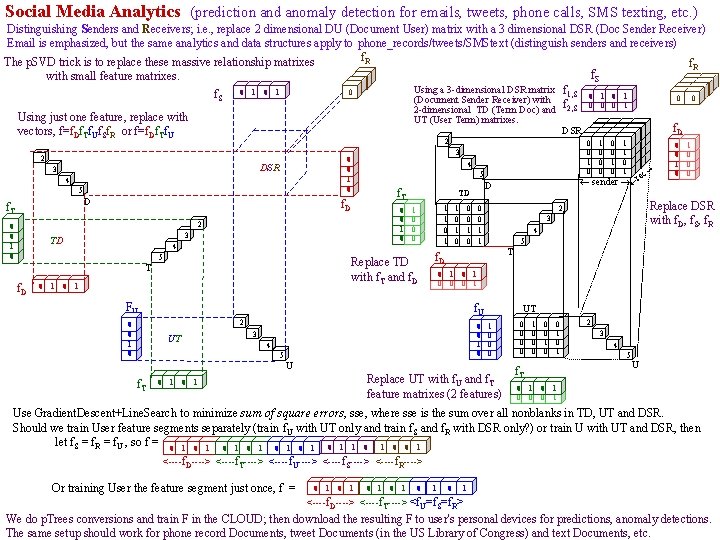

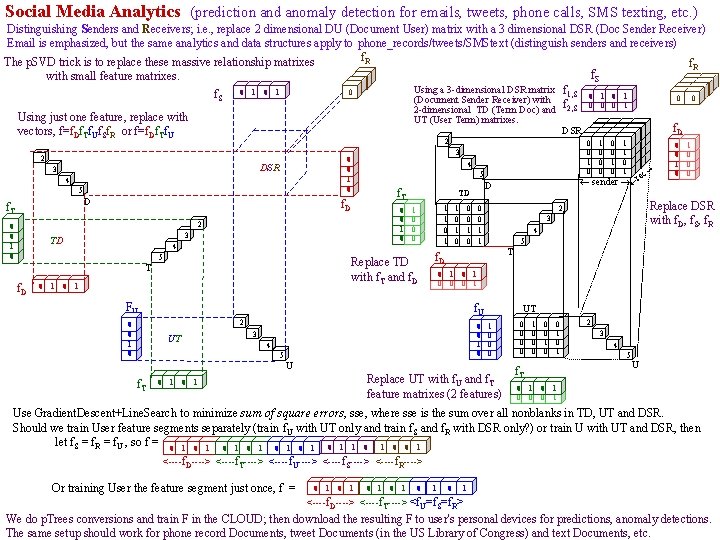

Social Media Analytics (prediction and anomaly detection for emails, tweets, phone calls, SMS texting, etc. ) Distinguishing Senders and Receivers; i. e. , replace 2 dimensional DU (Document User) matrix with a 3 dimensional DSR (Doc Sender Receiver) Email is emphasized, but the same analytics and data structures apply to phone_records/tweets/SMStext (distinguish senders and receivers) f. R The p. SVD trick is to replace these massive relationship matrixes f. R f with small feature matrixes. 0 S 0 0 0 Using a 3 -dimensional DSR matrix f 0 0 1, S f. S 0 1 0 0 (Document Sender Receiver) with 0 0 0 1 f 2 -dimensional TD (Term Doc) and 2, S Using just one feature, replace with UT (User Term) matrixes. f. D DSR 0 0 1 1 vectors, f=f. Df. Tf. Uf. Sf. R or f=f. Df. Tf. U 0 01 00 01 1 0 10 00 11 0 1 00 01 00 1 0 0 0 1 c 2 2 0 0 1 0 DSR 3 4 5 D f. T 3 4 5 f. T f. D 0 0 1 0 2 0 0 1 0 3 TD 4 5 0 1 1 0 1 Replace TD with f. T and f. D T f. D TD 1 0 0 0 1 0 4 5 T 0 1 0 0 0 1 2 0 1 0 3 4 5 f. T 3 f. U UT 0 UT 1 0 0 0 U Replace UT with f. U and f. T feature matrixes (2 features) 1 1 0 0 0 Replace DSR with f. D, f. S, f. R 2 0 0 1 1 f. D FU 0 0 1 0 e sender r D 0 0 1 0 0 0 0 0 1 0 1 f. T 2 3 4 5 U 0 1 0 0 0 1 Use Gradient. Descent+Line. Search to minimize sum of square errors, sse, where sse is the sum over all nonblanks in TD, UT and DSR. Should we train User feature segments separately (train f. U with UT only and train f. S and f. R with DSR only? ) or train U with UT and DSR, then let f. S = f. R = f. U , so f = 0 1 0 1 1 0 0 1 <----f. D----> <----f. T----> <----f. U----> <----f. S----> <----f. R----> Or training User the feature segment just once, f = 0 1 0 1 0 1 <----f. D----> <----f. T----> <f. U=f. S=f. R> We do p. Trees conversions and train F in the CLOUD; then download the resulting F to user's personal devices for predictions, anomaly detections. The same setup should work for phone record Documents, tweet Documents (in the US Library of Congress) and text Documents, etc.

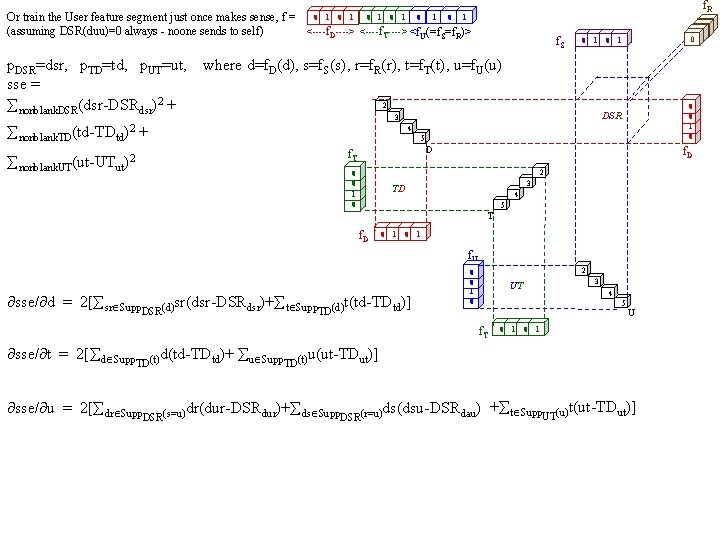

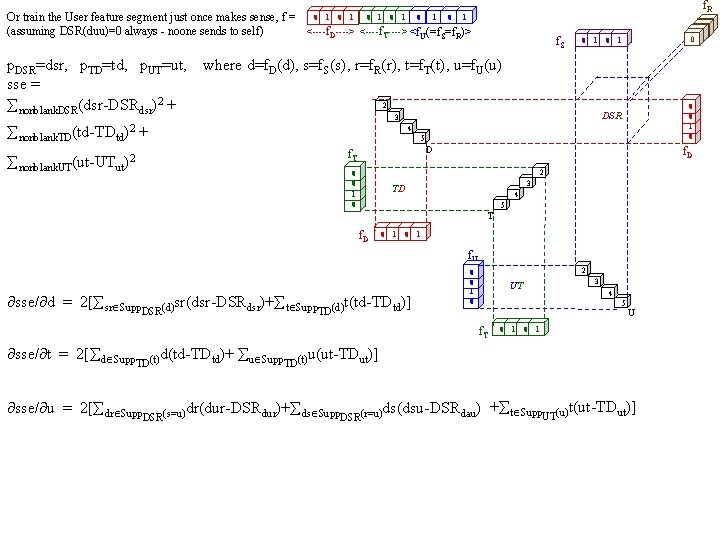

f. R 0 1 0 1 0 1 Or train the User feature segment just once makes sense, f = <----f. D----> <----f. T----> <f. U(=f. S=f. R)> (assuming DSR(duu)=0 always - noone sends to self) f. S 0 1 p. DSR=dsr, p. TD=td, p. UT=ut, where d=f. D(d), s=f. S(s), r=f. R(r), t=f. T(t), u=f. U(u) sse = 2 nonblank. DSR(dsr-DSRdsr)2 + 0 1 0 DSR 3 nonblank. TD(td-TDtd)2 + 0 4 5 D f. T nonblank. UT(ut-UTut)2 f. D 2 0 0 1 0 3 TD 4 5 T f. D 0 1 f. U sse/ d = 2[ sr Supp. DSR(d)sr(dsr-DSRdsr)+ t Supp. TD(d)t(td-TDtd)] 2 0 0 1 0 4 5 f. T sse/ t = 2[ d Supp. TD(t)d(td-TDtd)+ u Supp 3 UT 0 1 0 U 1 u(ut-TDut)] TD(t) sse/ u = 2[ dr Supp. DSR(s=u)dr(dur-DSRdur)+ ds Supp. DSR(r=u)ds(dsu-DSRdau) + t Supp. UT(u)t(ut-TDut)] 0 0 0

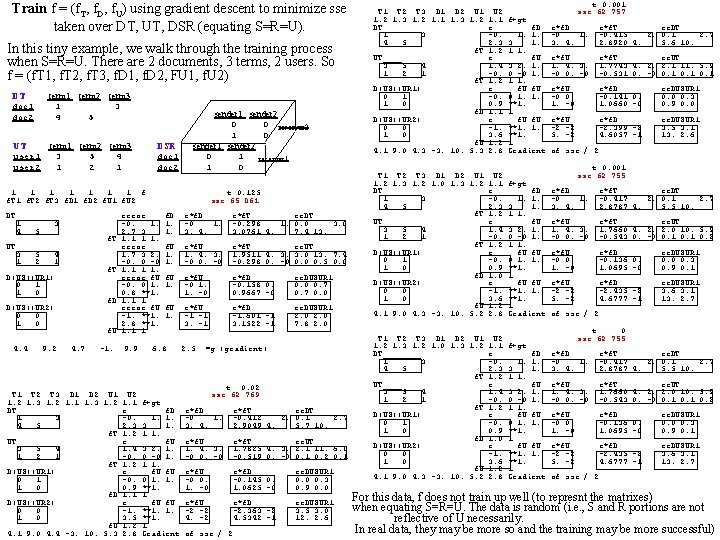

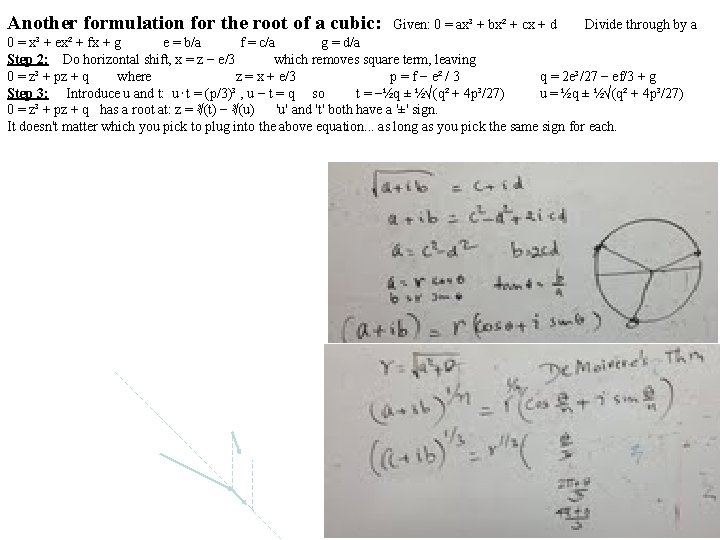

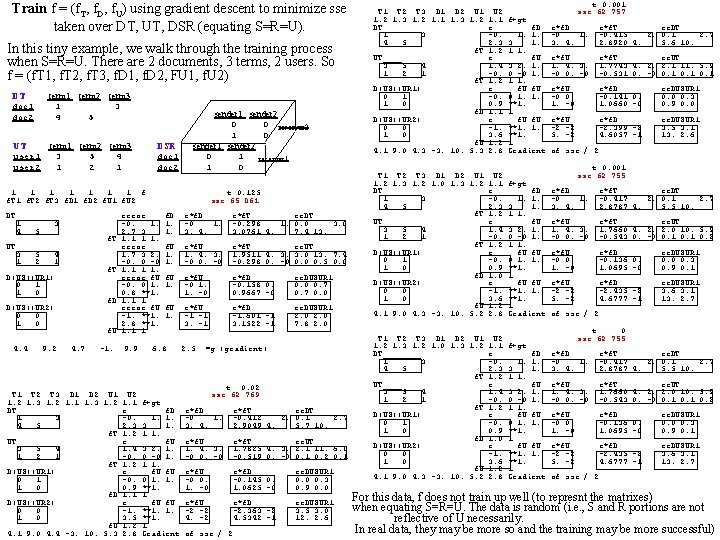

Train f = (f. T, f. D, f. U) using gradient descent to minimize sse taken over DT, UT, DSR (equating S=R=U). In this tiny example, we walk through the training process when S=R=U. There are 2 documents, 3 terms, 2 users. So f = (f. T 1, f. T 2, f. T 3, f. D 1, f. D 2, FU 1, f. U 2) DT term 1 term 2 term 3 doc 1 1 3 doc 2 4 5 UT term 1 term 2 term 3 user 1 3 5 4 user 2 1 sender 1 sender 2 0 0 receiver 2 1 0 DSR sender 1 sender 2 doc 1 0 1 receiver 1 doc 2 1 0 1 1 1 1 f f. T 1 f. T 2 f. T 3 f. D 1 f. D 2 f. U 1 f. U 2 DT____ 1 3 4 5 UT____ 3 5 1 2 f. T 4 1 f. T D(US)(UR 1) 0 1 1 0 f. D D(US)(UR 2) 0 0 1 0 4. 4 9. 2 f. U 4. 7 -1. t 0. 125 sse 65. 061 error -0. 1. 2. 7 3 1. 1 1 1. error 1. 7 3 2. -0. 0 -0 1. 1 1 1. error f. U -0. 0 1. 0. 8 **1. 1. 1 1 error f. U -1. **1. 2. 8 **1. 1. 1 1 9. 9 f. D 1. 1. e*f. D -0 1. 3. 4. e*f. T ee. DT -0. 298 1. 0. 0 3. 0761 4. 7. 4 13. f. U 1. 1. e*f. U 1. 4. 3. -0 0. -0 e*f. T ee. UT 1. 9511 4. 3. 3. 0 13. 7. 4 -0. 298 0. -0 0. 5 0. 0 f. U 1. e*f. U -0 1. 1. -0 e*f. D -0. 158 0. 0. 9667 -0 ee. DUSUR 1 0. 0 0. 7 0. 0 f. U 1. e*f. U -1 -1 3. -1 e*f. D -1. 601 -1 3. 1522 -1 ee. DUSUR 1 2. 0 7. 8 2. 0 6. 8 4. 4 9. 2 4. 7 -1. 9. 9 6. 8 2. 5 grad T 1 T 2 T 3 D 1 D 2 U 1 U 2 1. 3 1. 2 1. 1 f+gt DT____ e f. D 1 3 -0. 1. 1. 4 5 2. 3 3 1. f. T 1. 2 1 1. UT____ e f. U 3 5 4 1. 4 3 2. 1. 1 2 1 -0. 0 -0 1. f. T 1. 2 1 1. D(US)(UR 1) e f. U 0 1 -0. 0 1. 1. 1 0 0. 9 **1. f. D 1. 1 1 D(US)(UR 2) e f. U 0 0 -1. **1. 1. 1 0 3. 5 **1. f. U 1. 2 1 4. 1 9. 0 4. 4 -3. 10. 5. 3 2. 8 Gradient 2. 5 =g (gradient) t 0. 02 sse 62. 769 e*f. D -0 1. 3. 4. e*f. T ee. DT -0. 412 2. 0. 1 2. 7 2. 9049 4. 5. 7 10. e*f. U 1. 4. 3. -0 0. -0 e*f. T ee. UT 1. 7825 4. 3. 2. 1 11. 6. 0 -0. 519 0. -0 0. 1 0. 2 0. 1 e*f. U -0 0. 1. -0 e*f. D -0. 145 0. 1. 0625 -0 ee. DUSUR 1 0. 0 0. 3 0. 9 0. 0 e*f. U -2 -2 4. -2 e*f. D -2. 363 -2 4. 5342 -1 ee. DUSUR 1 3. 5 3. 0 12. 2. 6 of sse / 2 4. 1 9. 0 4. 4 -3. 10. 5. 3 2. 8 grad T 1 T 2 T 3 D 1 D 2 U 1 U 2 1. 3 1. 2 1. 1 f+gt DT____ e f. D 1 3 -0. 1. 1. 4 5 2. 3 3 1. f. T 1. 2 1 1. UT____ e f. U 3 5 4 1. 4 3 2. 1. 1 2 1 -0. 0 -0 1. f. T 1. 2 1 1. D(US)(UR 1) e f. U 0 1 -0. 0 1. 1. 1 0 0. 9 **1. f. D 1. 1 1 D(US)(UR 2) e f. U 0 0 -1. **1. 1. 1 0 3. 6 **1. f. U 1. 2 1 4. 1 9. 0 4. 3 -3. 10. 5. 3 2. 8 Gradient 4. 1 9. 0 4. 3 -3. 10. 5. 3 2. 8 grad T 1 T 2 T 3 D 1 D 2 U 1 U 2 1. 3 1. 2 1. 0 1. 3 1. 2 1. 1 f+gt DT____ e f. D 1 3 -0. 1. 1. 4 5 2. 3 3 1. f. T 1. 2 1 1. UT____ e f. U 3 5 4 1. 4 3 2. 1. 1 2 1 -0. 0 -0 1. f. T 1. 2 1 1. D(US)(UR 1) e f. U 0 1 -0. 0 1. 1. 1 0 0. 9 **1. f. D 1. 0 1 D(US)(UR 2) e f. U 0 0 -1. **1. 1. 1 0 3. 6 **1. f. U 1. 2 1 4. 1 9. 0 4. 3 -3. 10. 5. 2 2. 8 Gradient 4. 1 9. 0 4. 3 -3. 10. 5. 2 2. 8 grad T 1 T 2 T 3 D 1 D 2 U 1 U 2 1. 3 1. 2 1. 0 1. 3 1. 2 1. 1 f+gt DT____ e f. D 1 3 -0. 1. 1. 4 5 2. 3 3 1. f. T 1. 2 1 1. UT____ e f. U 3 5 4 1. 4 3 2. 1. 1 2 1 -0. 0 -0 1. f. T 1. 2 1 1. D(US)(UR 1) e f. U 0 1 -0. 0 1. 1. 1 0 0. 9 **1. f. D 1. 0 1 D(US)(UR 2) e f. U 0 0 -1. **1. 1. 1 0 3. 6 **1. f. U 1. 2 1 4. 1 9. 0 4. 3 -3. 10. 5. 2 2. 8 Gradient t 0. 001 sse 62. 757 e*f. D -0 1. 3. 4. e*f. T ee. DT -0. 415 2. 0. 1 2. 7 2. 8920 4. 5. 6 10. e*f. U 1. 4. 3. -0 0. -0 e*f. T ee. UT 1. 7743 4. 2. 2. 1 11. 5. 9 -0. 531 0. -0 0. 1 e*f. U -0 0. 1. -0 e*f. D -0. 141 0. 1. 0660 -0 ee. DUSUR 1 0. 0 0. 3 0. 9 0. 0 e*f. U -2 -2 5. -2 e*f. D -2. 399 -2 4. 6057 -1 ee. DUSUR 1 3. 5 3. 1 13. 2. 6 of sse / 2 t 0. 001 sse 62. 755 e*f. D -0 1. 3. 4. e*f. T ee. DT -0. 417 2. 0. 1 2. 7 2. 8787 4. 5. 5 10. e*f. U 1. 4. 3. -0 0. -0 e*f. T ee. UT 1. 7660 4. 2. 2. 0 10. 5. 9 -0. 543 0. -0 0. 1 0. 2 e*f. U -0 0. 1. -0 e*f. D -0. 136 0. 1. 0695 -0 ee. DUSUR 1 0. 0 0. 3 0. 9 0. 1 e*f. U -2 -2 5. -2 e*f. D -2. 435 -2 4. 6777 -1 ee. DUSUR 1 3. 6 3. 1 13. 2. 7 of sse / 2 t 0 sse 62. 755 e*f. D -0 1. 3. 4. e*f. T ee. DT -0. 417 2. 0. 1 2. 7 2. 8787 4. 5. 5 10. e*f. U 1. 4. 3. -0 0. -0 e*f. T ee. UT 1. 7660 4. 2. 2. 0 10. 5. 9 -0. 543 0. -0 0. 1 0. 2 e*f. U -0 0. 1. -0 e*f. D -0. 136 0. 1. 0695 -0 ee. DUSUR 1 0. 0 0. 3 0. 9 0. 1 e*f. U -2 -2 5. -2 e*f. D -2. 435 -2 4. 6777 -1 ee. DUSUR 1 3. 6 3. 1 13. 2. 7 of sse / 2 For this data, f does not train up well (to represnt the matrixes) when equating S=R=U. The data is random (i. e. , S and R portions are not reflective of U necessarily. In real data, they may be more so and the training may be more successful)

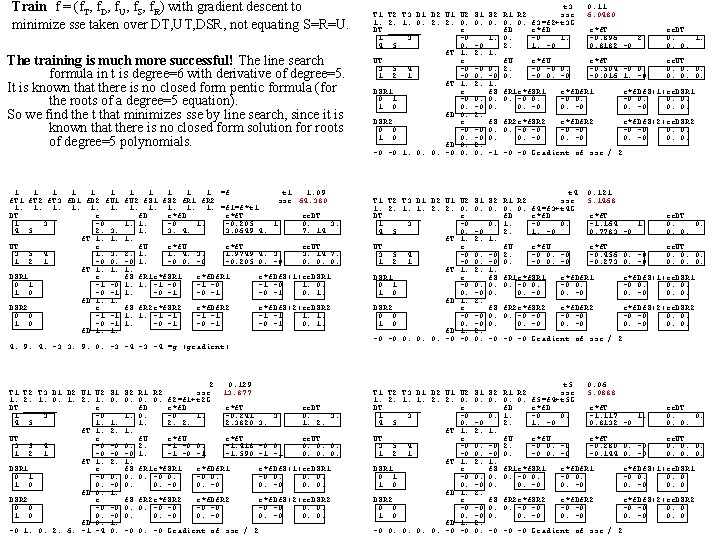

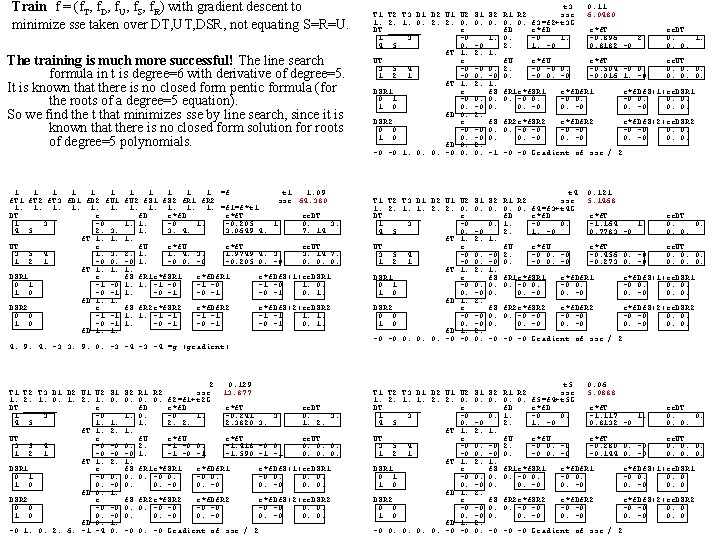

Train f = (f. T, f. D, f. U, f. S, f. R) with gradient descent to minimize sse taken over DT, UT, DSR, not equating S=R=U. The training is much more successful! The line search formula in t is degree=6 with derivative of degree=5. It is known that there is no closed form pentic formula (for the roots of a degree=5 equation). So we find the t that minimizes sse by line search, since it is known that there is no closed form solution for roots of degree=5 polynomials. 1 1 1 f. T 2 f. T 3 f. D 1 f. D 2 f. U 1 1. 1. 1. DT _______ e 1 3 -0 4 5 2. 3. f. T 1. 1. UT e 3 5 4 1. 3. 1 2 1 -0 0. f. T 1. 1. DSR 1 e 0 1 -1 -0 1 0 -0 -1 f. D 1. 1. DSR 2 e 0 0 -1 -1 1 0 -0 -1 f. D 1. 1. 1 1 1 =f t 1 1. 09 f. U 2 f. S 1 f. S 2 f. R 1 f. R 2 sse 64. 380 1. 1. 1. =f 1=f*t 1 f. D e*f. T ee. DT 1. 1. -0. 205 1. 0. 3. 1. 3. 4. 3. 0649 4. 7. 14 1. f. U e*f. T ee. UT 2. 1. 1. 4. 3. 1. 9749 4. 3. 3. 14 7. -0 1. -0 0. -0 -0. 205 0. -0 0. 0. 0. 1. f. S f. R 1 e*f. SR 1 e*f. Df. S(1)ee. DSR 1 1. 1. -1 -0 1. 0. 1. -0 -1 0. 1. f. S f. R 2 e*f. SR 2 1. 1. -1 -1 1. -0 -1 e*f. Df. R 2 -1 -1 -0 -1 e*f. Df. S(2)ee. DSR 2 -1 -1 1. 1. -0 -1 0. 1. 4. 9. 4. -3 3. 9. 0. -3 -4 =g (gradient) 4. T 1 1. DT 1 4 9. 4. -3 3. 9. 0. -3 T 2 T 3 D 1 D 2 U 1 U 2 S 1 2. 1. 0. 1. 2. 1. 0. _______ e 3 -0 5 1. 1. f. T 1. 2. UT e 3 5 4 -0 -0 1 2 1 -0 -0 f. T 1. 2. DSR 1 e 0 1 -0 0. 1 0 0. -0 f. D 0. 1. DSR 2 e 0 0 -0 -0 1 0 0. -0 f. D 0. 1. -0 1. 0. 2. 6. -1 -4 0. -4 -3 -4 f 2 0. 129 S 2 R 1 R 2 sse 13. 877 0. 0. 0. f 2=f 1+t 2 G f. D e*f. T 1. 0. -0 1. -0. 241 1. 2. 2. 2. 3820 1. f. U e*f. T 0. 2. -1 -0 0. -1. 418 -0 1. -1 -0 -1 -1. 590 1. f. S f. R 1 e*f. SR 1 e*f. Df. R 1 0. 0. -0 0. 0. 0. -0 f. S f. R 2 e*f. SR 2 0. 0. -0 -0 0. 0. -0 3. ee. DT 0. 3. 1. 2. -0 0. -1 -1 ee. UT 0. 0. 0. 3. e*f. Df. R 2 -0 -0 0. -0 e*f. Df. S(1)ee. DSR 1 -0 0. 0. -0 0. -0 Gradient of sse / 2 e*f. Df. S(2)ee. DSR 2 -0 -0 0. 0. 0. -0 0. 0. -0 T 1 1. DT 1 4 1. 0. 2. 6. -1 -4 0. T 2 T 3 D 1 D 2 U 1 U 2 S 1 2. 1. 0. 2. 2. 0. 0. _______ e 3 -0 5 0. -0 f. T 1. 2. UT e 3 5 4 -0 -0 1 2 1 -0 0. f. T 1. 2. DSR 1 e 0 1 -0 0. 1 0 0. -0 f. D 0. 2. DSR 2 e 0 0 -0 -0 1 0 0. -0 f. D 0. 2. -0 -0 1. 0. 0. -0 0. 0. -0 0. -0 f t 3 0. 11 S 2 R 1 R 2 sse 6. 0480 0. 0. 0. f 3=f 2+t 3 G f. D e*f. T 1. 0. -0 1. -0. 896 2. 1. -0 0. 8182 1. f. U e*f. T 0. 2. -0 -0 0. -0. 504 -0 0. -0 -0. 016 1. f. S f. R 1 e*f. SR 1 e*f. Df. R 1 0. 0. -0 0. 0. 0. -0 -0 T 1 1. DT 1 4 -0 1. 0. 0. -0 0. 0. T 2 T 3 D 1 D 2 U 1 U 2 S 1 2. 1. 1. 2. 2. 0. 0. _______ e 3 -0 5 0. -0 f. T 1. 2. UT e 3 5 4 -0 0. 1 2 1 -0 0. f. T 1. 2. DSR 1 e 0 1 -0 0. 1 0 0. -0 f. D 1. 2. DSR 2 e 0 0 -0 -0 1 0 0. -0 f. D 1. 2. -0 -0 0. 0. 0. -0 -0 0. -1 -0 -0 f t 4 0. 121 S 2 R 1 R 2 sse 5. 1468 0. 0. 0. f 4=f 3+t 4 G f. D e*f. T 0. 1. -0 0. -1. 164 2. 1. -0 0. 7783 1. f. U e*f. T -0 2. -0 0. -0 -0. 456 -0 0. -0 -0. 273 1. f. S f. R 1 e*f. SR 1 e*f. Df. R 1 0. 0. -0 0. 0. 0. -0 -0 T 1 1. DT 1 4 -0 -0 -0 f t 5 0. 06 S 2 R 1 R 2 sse 5. 0888 0. 0. 0. f 5=f 4+t 5 G f. D e*f. T 0. 1. -0 0. -1. 117 2. 1. -0 0. 8132 1. f. U e*f. T -0 2. -0 0. -0 -0. 280 -0 0. -0 -0. 144 1. f. S f. R 1 e*f. SR 1 e*f. Df. R 1 0. 0. -0 0. 0. 0. -0 -0 0. T 2 T 3 D 1 D 2 U 1 U 2 S 1 2. 1. 1. 2. 2. 0. 0. _______ e 3 -0 5 0. -0 f. T 1. 2. UT e 3 5 4 -0 0. 1 2 1 -0 0. f. T 1. 2. DSR 1 e 0 1 -0 0. 1 0 0. -0 f. D 1. 2. DSR 2 e 0 0 -0 -0 1 0 0. -0 f. D 1. 2. -0 0. 0. -0 -0 0. f. S f. R 2 e*f. SR 2 0. 0. -0 -0 0. 0. -0 2. ee. DT 0. 1. 0. 0. -0 0. 1. -0 ee. UT 0. 0. 0. -0 e*f. Df. R 2 -0 -0 0. -0 e*f. Df. S(1)ee. DSR 1 -0 0. 0. e*f. Df. S(2)ee. DSR 2 -0 -0 0. 0. 0. -0 0. 0. -1 -0 -0 Gradient of sse / 2 f. S f. R 2 e*f. SR 2 0. 0. -0 -0 0. 0. -0 1. ee. DT 0. 0. 0. -0 ee. UT 0. 0. 0. -0 e*f. Df. R 2 -0 -0 0. -0 e*f. Df. S(1)ee. DSR 1 -0 0. 0. e*f. Df. S(2)ee. DSR 2 -0 -0 0. 0. 0. -0 0. 0. -0 -0 -0 Gradient of sse / 2 f. S f. R 2 e*f. SR 2 0. 0. -0 -0 0. 0. -0 1. ee. DT 0. 0. 0. -0 ee. UT 0. 0. 0. -0 e*f. Df. R 2 -0 -0 0. -0 e*f. Df. S(1)ee. DSR 1 -0 0. 0. -0 -0 -0 Gradient of sse / 2 e*f. Df. S(2)ee. DSR 2 -0 -0 0. 0. 0. -0 0. 0.

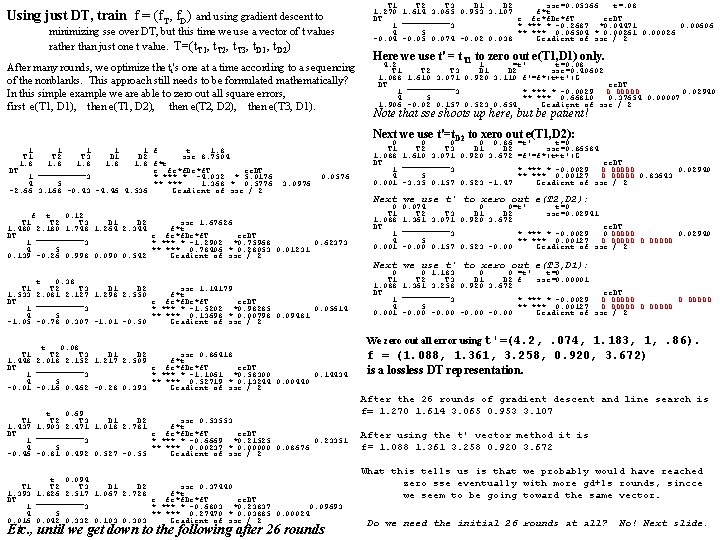

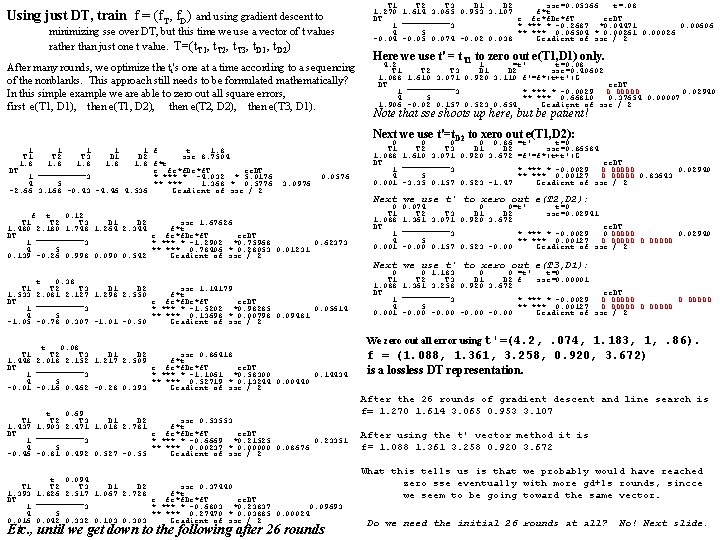

Using just DT, train f = (f. T, f. D) and using gradient descent to minimizing sse over DT, but this time we use a vector of t values rather than just one t value. T=(t. T 1, t. T 2, t. T 3, t. D 1, t. D 2) After many rounds, we optimize the ti's one at a time according to a sequencing of the nonblanks. This approach still needs to be formulated mathematically? In this simple example we are able to zero out all square errors, first e(T 1, D 1), then e(T 1, D 2), then e(T 2, D 2), then e(T 3, D 1). T 1 T 2 T 3 D 1 D 2 sse=0. 05366 t=. 08 1. 270 1. 614 3. 065 0. 953 3. 107 f*t DT _____ e fe*f. De*f. T ee. DT 1 3 * *** * -0. 2687 *0. 04471 0. 00606 4 5 ** *** 0. 06504 * 0. 00261 0. 00026 -0. 04 -0. 05 0. 074 -0. 02 0. 038 Gradient of sse / 2 Here we use t' = t. T 1 to zero out e(T 1, D 1) only. 4. 2 1 =t' t=0. 08 T 1 T 2 T 3 D 1 D 2 sse=0. 40602 1. 088 1. 610 3. 071 0. 920 3. 110 f'=f*(t+t')G DT _____ ee. DT 1 3 * *** * -0. 0029 0. 00000 0. 02940 4 5 ** *** 0. 66810 0. 37654 0. 00007 1. 906 -0. 02 0. 157 0. 523 0. 654 Gradient of sse / 2 Note that sse shoots up here, but be patient! Next we use t'=t. D 2 to xero out e(T 1, D 2): 1 T 1 1. 8 1 1 T 2 T 3 D 1 D 2 1. 8 DT _____ 1 3 4 5 -2. 66 3. 168 -0. 43 -4. 46 4. 536 f T 1 1. 480 DT 1 4 0. 139 T 1 1. 533 DT 1 4 -1. 05 f t 1. 8 sse 8. 7504 f*t e fe*f. De*f. T ee. DT * *** * -4. 032 * 5. 0176 ** *** 1. 368 * 0. 5776 Gradient of sse / 2 3. 0976 0. 0576 t 0. 12 T 3 D 1 D 2 sse 1. 67626 2. 180 1. 748 1. 264 2. 344 f*t _____ e fe*f. De*f. T ee. DT 3 * *** * -1. 2902 *0. 75968 0. 62373 5 ** *** 0. 78406 * 0. 28053 0. 01231 -0. 26 0. 998 0. 090 0. 542 Gradient of sse / 2 t 0. 38 T 2 T 3 D 1 D 2 sse 1. 14179 2. 081 2. 127 1. 298 2. 550 f*t _____ e fe*f. De*f. T ee. DT 3 * *** * -1. 5202 *0. 98285 0. 05614 5 ** *** 0. 13698 * 0. 00798 0. 09481 -0. 78 0. 307 -1. 01 -0. 50 Gradient of sse / 2 t 0. 08 T 1 T 2 T 3 D 1 D 2 sse 0. 86418 1. 448 2. 018 2. 152 1. 217 2. 509 f*t DT _____ e fe*f. De*f. T ee. DT 1 3 * *** * -1. 1061 *0. 58300 0. 14434 4 5 ** *** 0. 52719 * 0. 13244 0. 00440 -0. 01 -0. 16 0. 462 -0. 28 0. 393 Gradient of sse / 2 t 0. 69 T 1 T 2 T 3 D 1 D 2 sse 0. 53553 1. 437 1. 903 2. 471 1. 018 2. 781 f*t DT _____ e fe*f. De*f. T ee. DT 1 3 * *** * -0. 6669 *0. 21525 0. 23351 4 5 ** *** 0. 00237 * 0. 00000 0. 08676 -0. 46 -0. 81 0. 492 0. 527 -0. 55 Gradient of sse / 2 T 1 1. 393 DT 1 4 0. 016 t 0. 094 T 2 T 3 D 1 D 2 sse 0. 37440 1. 826 2. 517 1. 067 2. 728 f*t _____ e fe*f. De*f. T ee. DT 3 * *** * -0. 6803 *0. 23837 0. 09693 5 ** *** 0. 27470 * 0. 03885 0. 00024 0. 042 0. 332 0. 103 0. 303 Gradient of sse / 2 : Etc. , until we get down to the following after 26 rounds 0 0 0. 86 T 1 T 2 T 3 D 1 D 2 1. 088 1. 610 3. 071 0. 920 3. 672 DT _____ 1 3 4 5 0. 001 -3. 35 0. 157 0. 523 -1. 47 =t' t=0 sse=0. 86584 =f'=f*(t+t')G ee. DT * *** * -0. 0029 0. 00000 0. 02940 ** *** 0. 00127 0. 00000 0. 83643 Gradient of sse / 2 Next we use t' to xero out e(T 2, D 2): 0 T 1 1. 088 DT 1 4 0. 001 0. 074 0 0=t' t=0 T 2 T 3 D 1 D 2 sse=0. 02941 1. 361 3. 071 0. 920 3. 672 _____ ee. DT 3 * *** * -0. 0029 0. 00000 0. 02940 5 ** *** 0. 00127 0. 00000 -0. 00 0. 157 0. 523 -0. 00 Gradient of sse / 2 Next we use t' to xero out e(T 3, D 1): 0 0 1. 183 0 0 T 1 T 2 T 3 D 1 D 2 1. 088 1. 361 3. 258 0. 920 3. 672 DT _____ 1 3 4 5 0. 001 -0. 00 =t' t=0 f sse=0. 00001 ee. DT * *** * -0. 0029 0. 00000 ** *** 0. 00127 0. 00000 Gradient of sse / 2 We zero out all error using t'=(4. 2, . 074, 1. 183, 1, . 86). f = (1. 088, 1. 361, 3. 258, 0. 920, 3. 672) is a lossless DT representation. After the 26 rounds of gradient descent and line search is f= 1. 270 1. 614 3. 065 0. 953 3. 107 After using the t' vector method it is f= 1. 088 1. 361 3. 258 0. 920 3. 672 What this tells us is that we probably would have reached zero sse eventually with more gd+ls rounds, sincce we seem to be going toward the same vector. Do we need the initial 26 rounds at all? No! Next slide.

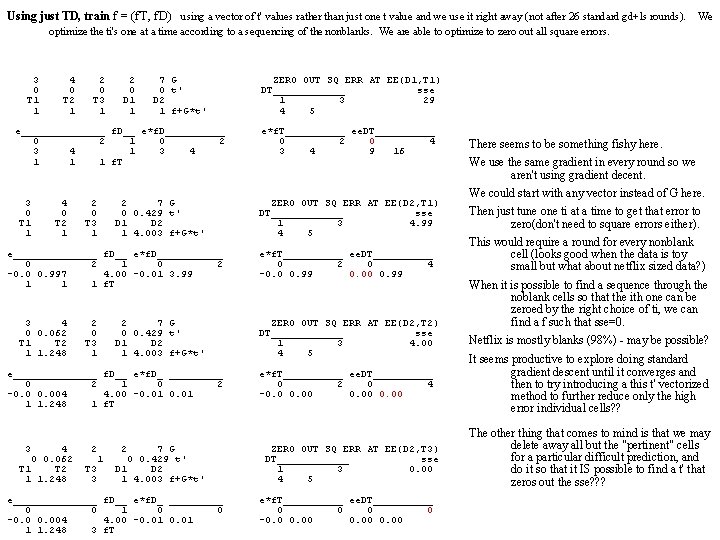

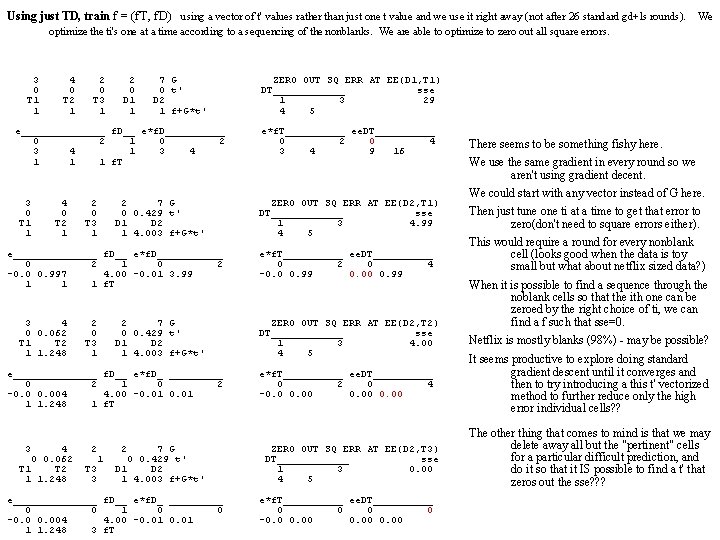

Using just TD, train f = (f. T, f. D) using a vector of t' values rather than just one t value and we use it right away (not after 26 standard gd+ls rounds). We optimize the ti's one at a time according to a sequencing of the nonblanks. We are able to optimize to zero out all square errors. 3 0 T 1 1 4 0 T 2 1 2 0 T 3 1 2 0 D 1 1 7 G 0 t' D 2 1 f+G*t' e_______ f. D__ e*f. D_____ 0 2 1 0 2 3 4 1 1 1 f. T 3 0 T 1 1 4 0 T 2 1 2 0 T 3 1 2 7 G 0 0. 429 t' D 1 D 2 1 4. 003 f+G*t' e_______ f. D__ e*f. D______ 0 2 1 0 2 -0. 0 0. 997 4. 00 -0. 01 3. 99 1 1 1 f. T 3 4 0 0. 062 T 1 T 2 1 1. 248 2 0 T 3 1 2 7 G 0 0. 429 t' D 1 D 2 1 4. 003 f+G*t' e_______ f. D__ e*f. D_ _____ 0 2 1 0 2 -0. 004 4. 00 -0. 01 1 1. 248 1 f. T 3 4 0 0. 062 T 1 T 2 1 1. 248 2 1 T 3 3 2 7 G 0 0. 429 t' D 1 D 2 1 4. 003 f+G*t' e_______ f. D__ e*f. D_ _____ 0 0 1 0 0 -0. 004 4. 00 -0. 01 1 1. 248 3 f. T ZERO OUT SQ ERR AT EE(D 1, T 1) DT______ sse 1 3 29 4 5 e*f. T_____ ee. DT_____ 0 2 0 4 3 4 9 16 ZERO OUT SQ ERR AT EE(D 2, T 1) DT______ sse 1 3 4. 99 4 5 e*f. T_____ ee. DT_____ 0 2 0 4 -0. 0 0. 99 0. 00 0. 99 ZERO OUT SQ ERR AT EE(D 2, T 2) DT______ sse 1 3 4. 00 4 5 e*f. T_____ ee. DT_____ 0 2 0 4 -0. 00 0. 00 ZERO OUT SQ ERR AT EE(D 2, T 3) DT______ sse 1 3 0. 00 4 5 e*f. T_____ ee. DT_____ 0 0 -0. 00 0. 00 There seems to be something fishy here. We use the same gradient in every round so we aren't using gradient decent. We could start with any vector instead of G here. Then just tune one ti at a time to get that error to zero(don't need to square errors either). This would require a round for every nonblank cell (looks good when the data is toy small but what about netflix sized data? ) When it is possible to find a sequence through the noblank cells so that the ith one can be zeroed by the right choice of ti, we can find a f such that sse=0. Netflix is mostly blanks (98%) - may be possible? It seems productive to explore doing standard gradient descent until it converges and then to try introducing a this t' vectorized method to further reduce only the high error individual cells? ? The other thing that comes to mind is that we may delete away all but the "pertinent" cells for a particular difficult prediction, and do it so that it IS possible to find a t' that zeros out the sse? ? ?

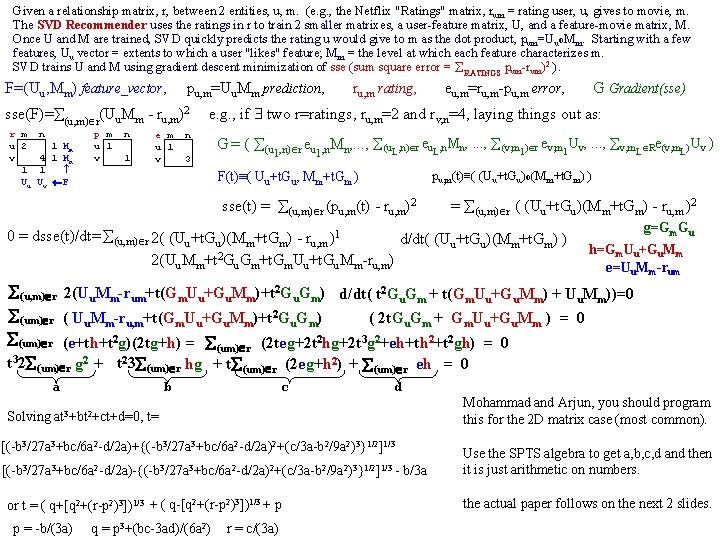

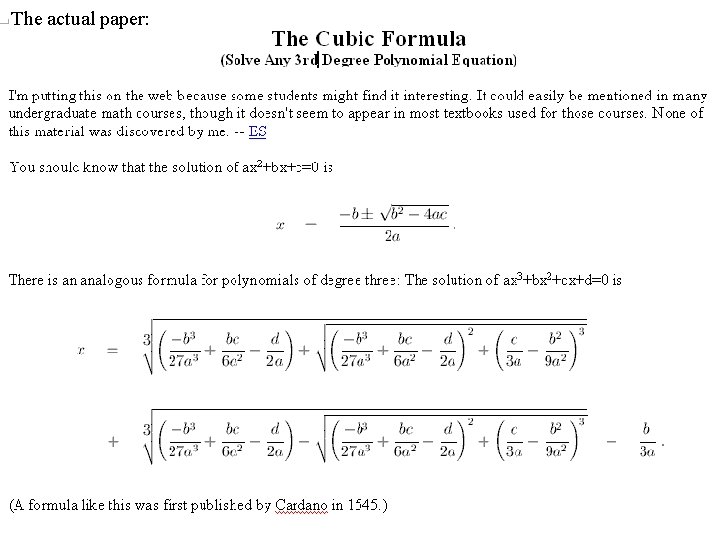

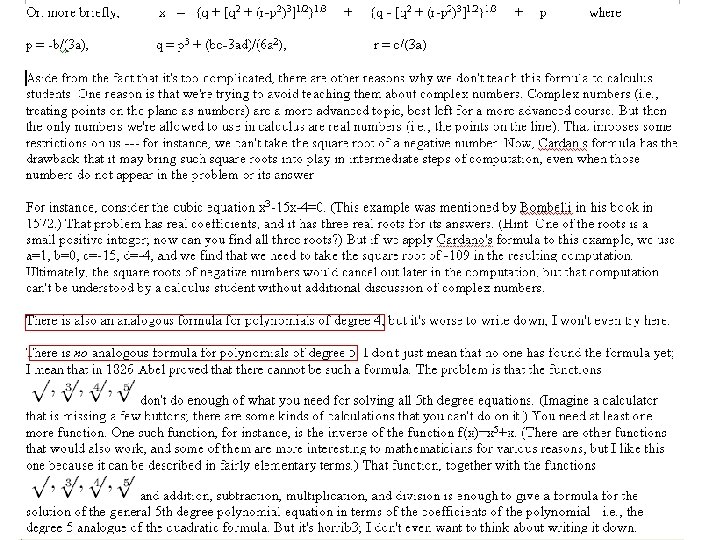

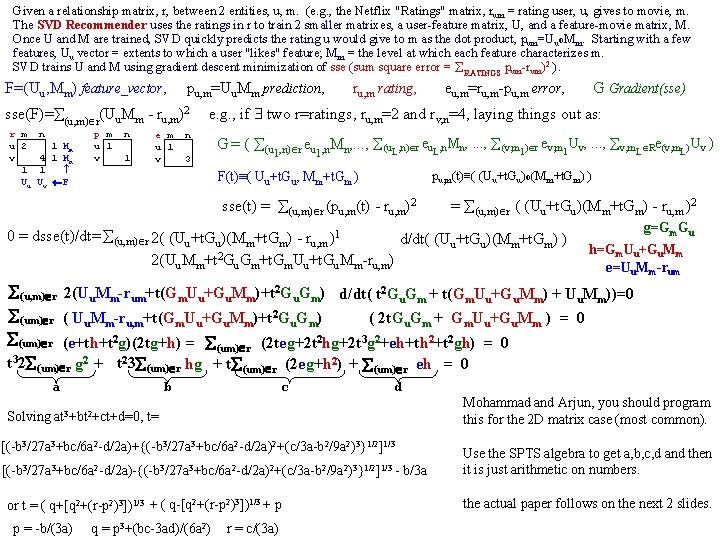

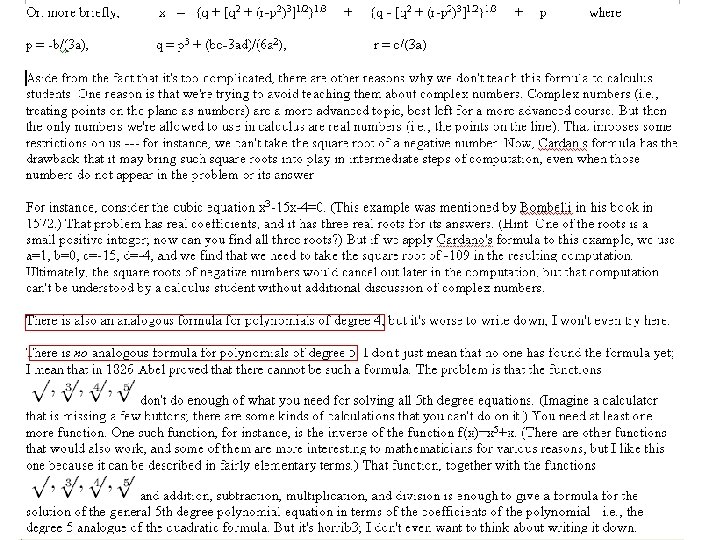

Given a relationship matrix, r, between 2 entities, u, m. (e. g. , the Netflix "Ratings" matrix, rum = rating user, u, gives to movie, m. The SVD Recommender uses the ratings in r to train 2 smaller matrixes, a user-feature matrix, U, and a feature-movie matrix, M. Once U and M are trained, SVD quickly predicts the rating u would give to m as the dot product, pum=Uuo. Mm. Starting with a few features, Uu vector = extents to which a user "likes" feature; Mm = the level at which each feature characterizes m. SVD trains U and M using gradient descent minimization of sse (sum square error = RATINGS pum-rum)2 ). F=(Uu, Mm) feature_vector, pu, m=Uu. Mm prediction, ru, m rating, eu, m=ru, m-pu, m error, G Gradient(sse) sse(F)= (u, m) r(Uu. Mm - ru, m)2 e. g. , if two r=ratings, ru, m=2 and rv, n=4, laying things out as: r m u 2 v 1 Uu n p m u 1 v 1 Mm 4 1 Mn 1 Uv F n 1 e m u 1 v n 3 G = ( (u 1, n) r eu 1, n. Mn, . . . , (u. L, n) r eu. L, n. Mn, . . . , (v, m 1) r ev, m 1 Uv, . . . , v, m. L Re(v, m. L)Uv ) pu, m(t)≡( (Uu+t. Gu)o(Mm+t. Gm) ) F(t)≡( Uu+t. Gu, Mm+t. Gm ) sse(t) = (u, m) r (pu, m(t) - ru, m)2 = (u, m) r ( (Uu+t. Gu)(Mm+t. Gm) - ru, m )2 0 = dsse(t)/dt= (u, m) r 2( (Uu+t. Gu)(Mm+t. Gm) - ru, m )1 d/dt( (Uu+t. Gu)(Mm+t. Gm) ) 2(Uu. Mm+t 2 Gu. Gm+t. Gm. Uu+t. Gu. Mm-ru, m) g=Gm. Gu h=Gm. Uu+Gu. Mm e=Uu. Mm-rum (u, m) r 2(Uu. Mm-rum+t(Gm. Uu+Gu. Mm)+t 2 Gu. Gm) d/dt( t 2 Gu. Gm + t(Gm. Uu+Gu. Mm) + Uu. Mm))=0 (um) r ( Uu. Mm-ru, m+t(Gm. Uu+Gu. Mm)+t 2 Gu. Gm) ( 2 t. Gu. Gm + Gm. Uu+Gu. Mm ) = 0 (um) r (e+th+t 2 g)(2 tg+h) = (2 teg+2 t 2 hg+2 t 3 g 2+eh+th 2+t 2 gh) = 0 t 32 (um) r g 2 + t 23 (um) r a hg + t (um) r (2 eg+h 2) + (um) r eh = 0 b c d Solving at 3+bt 2+ct+d=0, t= [(-b 3/27 a 3+bc/6 a 2 -d/2 a)+{(-b 3/27 a 3+bc/6 a 2 -d/2 a)2+(c/3 a-b 2/9 a 2)3}1/2]1/3 [(-b 3/27 a 3+bc/6 a 2 -d/2 a)-{(-b 3/27 a 3+bc/6 a 2 -d/2 a)2+(c/3 a-b 2/9 a 2)3}1/2]1/3 - b/3 a or t = ( q+[q 2+(r-p 2)3])1/3 + ( q-[q 2+(r-p 2)3])1/3 + p p = -b/(3 a) q = p 3+(bc-3 ad)/(6 a 2) r = c/(3 a) Mohammad and Arjun, you should program this for the 2 D matrix case (most common). Use the SPTS algebra to get a, b, c, d and then it is just arithmetic on numbers. the actual paper follows on the next 2 slides.

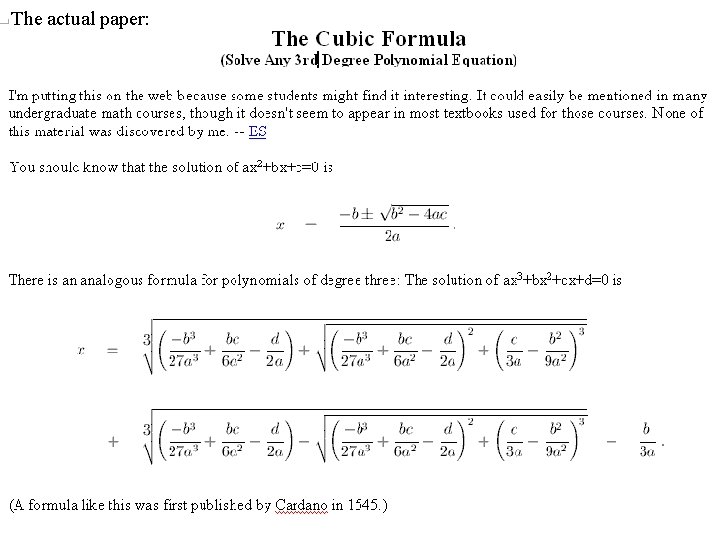

The actual paper:

Another formulation for the root of a cubic: Given: 0 = ax³ + bx² + cx + d Divide through by a 0 = x³ + ex² + fx + g e = b/a f = c/a g = d/a Step 2: Do horizontal shift, x = z − e/3 which removes square term, leaving 0 = z³ + pz + q where z = x + e/3 p = f − e² / 3 q = 2 e³/27 − ef/3 + g Step 3: Introduce u and t: u⋅t = (p/3)³ , u − t = q so t = −½q ± ½√(q² + 4 p³/27) u = ½q ± ½√(q² + 4 p³/27) 0 = z³ + pz + q has a root at: z = ∛(t) − ∛(u) 'u' and 't' both have a '±' sign. It doesn't matter which you pick to plug into the above equation. . . as long as you pick the same sign for each.

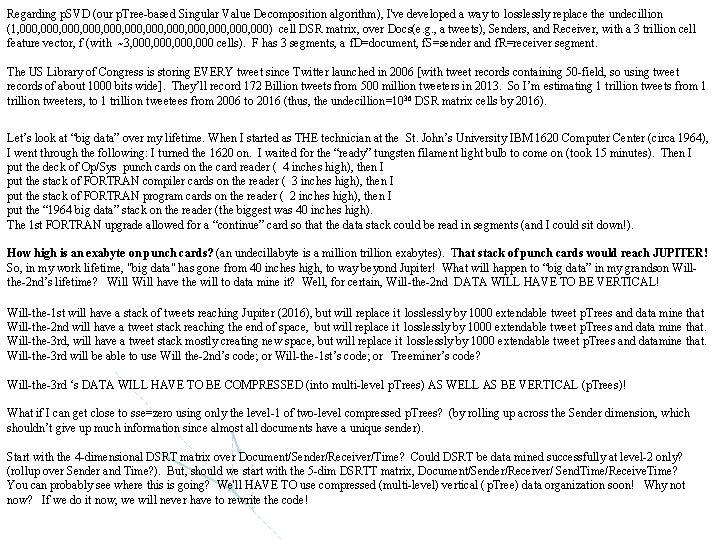

Regarding p. SVD (our p. Tree-based Singular Value Decomposition algorithm), I've developed a way to losslessly replace the undecillion (1, 000, 000, 000, 000) cell DSR matrix, over Docs(e. g. , a tweets), Senders, and Receiver, with a 3 trillion cell feature vector, f (with ~3, 000, 000 cells). F has 3 segments, a f. D=document, f. S=sender and f. R=receiver segment. The US Library of Congress is storing EVERY tweet since Twitter launched in 2006 [with tweet records containing 50 -field, so using tweet records of about 1000 bits wide]. They’ll record 172 Billion tweets from 500 million tweeters in 2013. So I’m estimating 1 trillion tweets from 1 trillion tweeters, to 1 trillion tweetees from 2006 to 2016 (thus, the undecillion=1036 DSR matrix cells by 2016). Let’s look at “big data” over my lifetime. When I started as THE technician at the St. John’s University IBM 1620 Computer Center (circa 1964), I went through the following: I turned the 1620 on. I waited for the “ready” tungsten filament light bulb to come on (took 15 minutes). Then I put the deck of Op/Sys punch cards on the card reader ( 4 inches high), then I put the stack of FORTRAN compiler cards on the reader ( 3 inches high), then I put the stack of FORTRAN program cards on the reader ( 2 inches high), then I put the “ 1964 big data” stack on the reader (the biggest was 40 inches high). The 1 st FORTRAN upgrade allowed for a “continue” card so that the data stack could be read in segments (and I could sit down!). How high is an exabyte on punch cards? (an undecillabyte is a million trillion exabytes). That stack of punch cards would reach JUPITER! So, in my work lifetime, "big data" has gone from 40 inches high, to way beyond Jupiter! What will happen to “big data” in my grandson Willthe-2 nd’s lifetime? Will have the will to data mine it? Well, for certain, Will-the-2 nd DATA WILL HAVE TO BE VERTICAL! Will-the-1 st will have a stack of tweets reaching Jupiter (2016), but will replace it losslessly by 1000 extendable tweet p. Trees and data mine that Will-the-2 nd will have a tweet stack reaching the end of space, but will replace it losslessly by 1000 extendable tweet p. Trees and data mine that. Will-the-3 rd, will have a tweet stack mostly creating new space, but will replace it losslessly by 1000 extendable tweet p. Trees and datamine that. Will-the-3 rd will be able to use Will the-2 nd’s code; or Will-the-1 st’s code; or Treeminer’s code? Will-the-3 rd ‘s DATA WILL HAVE TO BE COMPRESSED (into multi-level p. Trees) AS WELL AS BE VERTICAL (p. Trees)! What if I can get close to sse=zero using only the level-1 of two-level compressed p. Trees? (by rolling up across the Sender dimension, which shouldn’t give up much information since almost all documents have a unique sender). Start with the 4 -dimensional DSRT matrix over Document/Sender/Receiver/Time? Could DSRT be data mined successfully at level-2 only? (rollup over Sender and Time? ). But, should we start with the 5 -dim DSRTT matrix, Document/Sender/Receiver/ Send. Time/Receive. Time? You can probably see where this is going? We'll HAVE TO use compressed (multi-level) vertical ( p. Tree) data organization soon! Why not now? If we do it now, we will never have to rewrite the code!

Ancillary Background stuff: (on the Twitter Archive at US Library of Congress, NSA's PRISM, . . . (My omments in are in red)) Certainly the record of what millions of Americans say, think, and feel each day (their tweets) is a treasure-trove for historians [and for NSA? ]. But is the technology feasible, and important for a federal agency> Is it cost-effective to handle three V's that form fingerprint of a Big Data project – volume, velocity, and variety? U. S. Library of Congress said yes and agreed to archive all tweets sent since 2006 for posterity. But the task is daunting. Volume? US Lo. C will archive 172 billion tweets in 2013 alone (~300 each from 500 million tweeters), so many trillions, 2006 -2016? Velocity? currently absorbing > 20 million tweets/hour, 24 hours/day, seven days/week, each stored in a way that can last. Variety? tweets from a woman who may run for president in 2016 – and Lady Gaga. And they're different in other ways. "Sure, a tweet is 140 characters" says Jim Gallagher, US Library of Congress Director of Strategic Initiatives, "There are 50 fields. We need to record who wrote it. Where. When. " Because many tweets seem banal, the project has inspired ridicule. When the library posted its announcement of the project, one reader wrote in the comments box: "I'm guessing a good chunk. . . came from the Kardashians. " But isn't banality the point? Historians want to know not just what happened in the past but how people lived. It is why they rejoice in finding a semiliterate diary kept by a Confederate soldier, or pottery fragments in a colonial town. It's as if a historian today writing about Lincoln could listen in on what millions of Americans were saying on the day he was shot. [Or NSA could classify Tweet. Senders. Receivers ove 10 years to profile the class of "likely terrorists". Single feature "ill-intent", use p. SVD to predict it (trained on tweets from known or convicted ill-intenders. ] Youkel and Mandelbaum might seem like an odd couple to carry out a Big Data project: One is a career Library of Congress researcher with an undergraduate degree in history, the other a geologist who worked for years with oil companies. But they demonstrate something Babson's Mr. Davenport has written about the emerging field of analytics: "hybrid specialization. " [How about Data Mining and Mathematics? !? ] For organizations to use the new technology well, traditional skills, like computer science, aren't enough. Davenport points out that just as Big Data combines many innovations, finding meaning in the world's welter of statistics means combining many different disciplines. Mandelbaum and Youkel pool their knowledge to figure out how to archive the tweets, how researchers can find what they want, and how to train librarians to guide them [but not how to data mine them!]. Even before opening tweets to the public, the library has gotten more than 400 requests from doctoral candidates, professors, and journalists. "This is a pioneering project, " Mr. Dizard says. "It's helping us begin to handle large digital data. " For "America's library, " at this moment, that means housing a Gutenberg Bible and Lady Gaga tweets. What will it mean in 50 years? I ask Dizard. He laughs. "I wouldn't look that far ahead. " [sea-change: vertically storing all big data!] NSA programs (PRISM (email/twitter/facebook analytics? ) and ? (phone record analytics) Two US surveillance programs – one scooping up records of Americans' phone calls and the other collecting information on Internet-based activities (PRISM? ) – came to public attention. The aim: data-mining to help NSA thwart terrorism. But not everyone is cool with it. In the name of fighting terrorism, the US gov has been mining data collected from phone companies such as Verizon for the past seven years and from Google, Facebook, and other social media firms for at least 4 yrs, according to gov docs leaked this week to news orgs. The two surveillance programs, one that collects detailed records of telephone calls, the other that collects data on Internet-based activities such as e-mail, instant messaging, and video conferencing [facetime, skype? ], were publicly revealed in "top secret" docs leaked to the British newspaper the Guardian and the Washington Post. Both are run by the National Security Agency (NSA), the papers reported. The existence of the telephone data-mining program was previously known, and civil libertarians have for years complained that it represents a dangerous and overbroad incursion into the privacy of all Americans. What became clear this week were certain details about its operation – such as that the government sweeps up data daily and that a special court has been renewing the program every 90 days since about 2007. But the reports about the Internet-based data-mining program, called PRISM, represent a new revelation, to the public. Data-mining can involve the use of automated algorithms to sift through a database for clues as to the existence of a terrorist plot. One member of Congress claimed this week that the telephone data-mining program helped to thwart a significant terrorism incident in the United States "within the last few years, " but could not offer more specifics because the whole program is classified. Others in Congress, as well as President Obama and the director of national intelligence, sought to allay concerns of critics that the surveillance programs represent Big Government run amok. But it would be wrong to suggest that every member of Congress is on board with the sweep of such data mining programs or with the amount of oversight such national-security endeavors get from other branches of government. Some have hinted for years that they find such programs disturbing and an infringement of people's privacy. Here's an overview of these two data-mining programs, and how much oversight they are known to have. Phone-record data mining On Thursday, the Guardian displayed on its website a top-secret court order authorizing the telephone data-collection prog. The order, signed by a federal judge on the mysterious Foreign Intelligence Surveillance Court, requires a subsidiary of Verizon to send to the NSA “on an ongoing daily basis” through July its “telephony metadata, ” or communications logs, “between the United States and abroad” or “wholly within the United States, including local telephone calls. ” Such metadata include the phone number calling and the number called, telephone calling card numbers, and time and duration of calls. What's not included is permission for the NSA to record or listen to a phone conversation. That would require a separate court order, federal officials said after the program's details were made public. After the Guardian published the court's order, it became clear that the document merely renewed a data-collection that has been under way since 2007 – and one that does not target Americans, federal officials said. “The judicial order that was disclosed in the press is used to support a sensitive intelligence collection op, on which members of Congress have been fully and repeatedly briefed, ” said James Clapper, director of national intelligence, in a statement about the phone surveillance program. “The classified program has been authorized by all three branches of the Government. ” That does not do much to assuage civil libertarians, who complain that the government can use the program to piece together moment-by-moment movements of individuals throughout their day and to identify to whom they speak most often. Such intelligence operations are permitted by law under Section 215 of the Patriot Act, so-called “business records” provision. It compels businesses to provide information about their subscribers to the government. Some companies responded, but obliquely, given that by law they cannot comment on the surveillance programs or even confirm their existence. Randy Milch, general counsel for Verizon, said in an e-mail to employees that he had no comment on the accuracy of the Guardian article, the Washington Post reported. The “alleged order, ” he said, contains language “compels Verizon to respond” to gov requests and “forbids Verizon from revealing [the order's] existence. ”

Short Message Service (SMS) is a text messaging service component of phone, web, or mobile communication systems, uses standardized communications protocols that allow the exchange of short text messages between fixed line or mobile phone devices. SMS is the most widely used data application in the world, with 3. 5 billion active users, or 78% of all mobile phone subscribers. The term "SMS" is used for all types of short text messaging and the user activity itself in many parts of the world. SMS as used on modern handsets originated from radio telegraphy in radio memo pagers using standardized phone protocols. These were defined in 1985, as part of the Global System for Mobile Communications (GSM) series of standards as a means of sending messages of up to 160 characters to and from GSM mobile handsets. Though most SMS messages are mobile-to-mobile text messages, support for the service has expanded to include other mobile technologies, such as ANSI CDMA networks and Digital AMPS, as well as satellite and landline networks. Message size: Transmission of short messages between the SMSC and the handset is done whenever using the Mobile Application Part (MAP) of the SS 7 protocol. Messages are sent with the MAP MO- and MT-Forward. SM operations, whose payload length is limited by the constraints of the signaling protocol to precisely 140 octets (140 octets = 140 * 8 bits = 1120 bits). Short messages can be encoded using a variety of alphabets: the default GSM 7 -bit alphabet, the 8 -bit data alphabet, and the 16 -bit UCS-2 alphabet. Larger content (concatenated SMS, multipart or segmented SMS, or "long SMS") can be sent using multiple messages, in which case each message will start with a User Data Header (UDH) containing segmentation info. Text messaging, or texting, is the act of typing and sending a brief, electronic message between two or more mobile phones or fixed or portable devices over a phone network. The term originally referred to messages sent using the Short Message Service (SMS) only; it has grown to include messages containing image, video, and sound content (known as MMS messages). The sender of a text message is known as a texter, while the service itself has different colloquialisms depending on the region. It may simply be referred to as a text in North America, the United Kingdom, Australia and the Philippines, an SMS in most of mainland Europe, and a TMS or SMS in the Middle East, Africa and Asia. Text messages can be used to interact with automated systems to, for example, order products or services, or participate in contests. Advertisers and service providers use direct text marketing to message mobile phone users about promotions, payment due dates, etcetera instead of using mail, e-mail or voicemail. In a straight and concise definition for the purposes of this English Language article, text messaging by phones or mobile phones should include all 26 letters of the alphabet and 10 numerals, i. e. , alpha-numeric messages, or text, to be sent by texter or received by the textee. Security concerns: Consumer SMS should not be used for confidential communication. The contents of common SMS messages are known to the network operator's systems and personnel. Therefore, consumer SMS is not an appropriate technology for secure communications. To address this issue, many companies use an SMS gateway provider based on SS 7 connectivity to route the messages. The advantage of this international termination model is the ability to route data directly through SS 7, which gives the provider visibility of the complete path of the SMS. This means SMS messages can be sent directly to and from recipients without having to go through the SMS-C of other mobile operators. This approach reduces the number of mobile operators that handle the message; however, it should not be considered as an end-to-end secure communication, as the content of the message is exposed to the SMS gateway provider. Failure rates without backward notification can be hi between carriers (T-Mobile to Verizon notorious in US). Intnl texting can be extremely unreliable depending on the country of origin, dest and respective carriers.

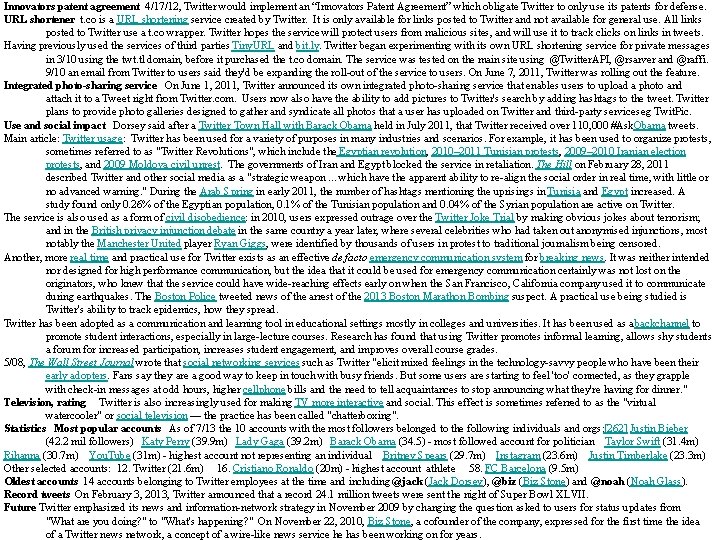

Twitter is an online social networking and microblogging service enabling its users to send and read text-based messages of up to 140 chars known as tweets. Twitter was created in March 2006 by Jack Dorsey. The service rapidly gained worldwide popularity, with over 500 million registered users as of 2012, generating over 340 million tweets daily and handling over 1. 6 billion search queries per day. Since its launch, Twitter has become one of the ten most visited websites on the Internet, and has been described as "the SMS of the Internet. Unregistered users can read tweets, while registered users can post tweets thru the website, SMS, or a range of apps for mobiles. Twitter Inc. is in San Francisco, with servers and offices in NYC, Boston, San Antonio. Tweets are publicly visible by default, but senders can restrict message delivery to just their followers. Users can tweet via the Twitter website, compatible external apps (such as for smartphones), or by Short Message Service (SMS) available in certain countries. While the service is free, accessing it thru SMS has phone fees. Users may subscribe to other users' tweets – this is known as following and subscribers are known as followers or tweeps, a portmanteau of Twitter and peeps. Users can also check people who are un-subscribing them on Twitter (unfollowing). Also, users can block those who have followed them. Twitter allows users to update their profile via their mobile phone either by text messaging or by apps released for certain smartphones and tablets. As a social network, Twitter revolves around the principle of followers. When you choose to follow another user, that user's tweets appear in reverse chronological order on your main Twitter pg. If you follow 20 people, you'll see a mix of tweets scrolling down the page: breakfast-cereal updates, interesting new links, music recommendations, even musings on the future of edu. Pear Analytic analyzed 2, 000 tweets (sent from US in English) over 2 -weeks in 8/09 from 11: 00 am to 5: 00 pm (CST) and separated them into 6 categories: Pointless babble – 40% Conversational – 38% Pass-along value – 9% Self-promotion – 6% Spam – 4% News – 4% Researcher Danah Boyd argues what Pear researchers labeled "pointless babble" is better characterized as "social grooming" and/or "peripheral awareness" (persons "want[ing] to know what the people around them are thinking and doing and feeling, even when co-presence isn’t viable"). Format: Users can group posts by topic/type with hashtags ( hashtags ) – words or phrases prefixed with a "#" sign. Similarly, "@" sign followed by a username is used for mention/reply to other users. To repost a message from another Twitter user, and share it with one's own followers, retweet function, symbolized by "RT" in the message. Twitter Lists feature was added 2009. Users could follow (as well as mention and reply to) ad hoc lists of authors instead of individual authors Through SMS, users can communicate with Twitter thru 5 gateway numbers: short codes for US, Canada, India, New Zealand, Isle of Man-based number for international use. In India, since Twitter only supports tweets from Bharti Airtel an alternative platform called sms. Tweet was set up by a user to work on all networks - Gladly. Cast exists for mobile phone users in Singapore, Malaysia, Philippines. Tweets were set to a 140 -character limit for compatibility with SMS messaging, introducing shorthand notation and slang commonly used in SMS messages. The 140 -character limit has also increased the usage of URL shortening services such as bit. ly, goo. gl, and tr. im, and content-hosting services, such as Twitpic, memozu. com and Note. Pub to accommodate multimedia content and text longer than 140 characters. Since June 2011, Twitter has used its own t. co domain for automatic shortening of all URLs posted on its website.

Trending topics: A word, phrase or topic that is tagged at a greater rate than other tags is said to be a trending topic. Trending topics become popular either thru an effort by users, or by an event prompts people to talk about one specific topic Topics help understand what's happening in the world. Trending topics sometimes result from efforts by fans of certain celebs or cultural phenom. Twitter's March 30, 2010 blog post announced that the hottest Twitter trending topics would scroll across the Twitter homepage. Controversies abound on Twitter trending topics: Twitter has censored hashtags other users found offensive. Twitter censored the #Thatsafrican and the #thingsdarkiessay hashtags after users complained they found the hashtags offensive. There allegations that twitter removed #Na. MOin. Hyd from trending list and added Indian National Congress sponsored hashtag. Adding and following content There are numerous tools for adding content, monitoring content and conversations including Telly (video sharing, old name is Twitvid), Tweet. Deck, Salesforce. com, Hoot. Suite, and Twitterfeed. As of 2009, fewer than half of tweets were posted using the web user interface with most users using third-party applications (based on analysis of 500 million tweets by Sysomos). Verified accounts In June 2008, Twitter launched a verification program, allowing celebrities to get their accounts verified. [97] Originally intended to help users verify which celebrity accounts were created by the celebrities themselves (and therefore are not fake), they have since been used to verify accounts of businesses and accounts for public figures who may not actually tweet but still wish to maintain control over the account that bears their name. Mobile Twitter has mobile apps for i. Phone, i. Pad, Android, Windows Phone, Black. Berry, and Nokia There is also version of the website for mobile devices, SMS and MMS service. Twitter limits the use of third party applications utilizing the service by implementing a 100, 000 user limit. Authentication As of August 31, 2010, third-party Twitter applications are required to use OAuth, an authentication method that does not require users to enter their password into the authenticating application. Previously, the OAuth authentication method was optional, it is now compulsory and the username/password authentication method has been made redundant and is no longer functional. Twitter stated that the move to OAuth will mean "increased security and a better experience". Related Headlines On August 19, 2013, Twitter announced Twitter Related Headlines. Usage Rankings Twitter is ranked as one of the ten-most-visited websites worldwide by Alexa's web traffic analysis. Daily user estimates vary as the company does not publish statistics on active accounts. A February 2009 Compete. com blog entry ranked Twitter as the third most used social network based on their count of 6 million unique monthly visitors and 55 million monthly visits. In March 2009, a Nielsen. com blog ranked Twitter as the fastest-growing website in the Member Communities category for February 2009. Twitter had annual growth of 1, 382 percent, increasing from 475, 000 unique visitors in Feb 08 to 7 million in Feb 09. In 2009, Twitter had a monthly user retention rate. Demographics Indonesia Brazil Venezuela Netherlands Japan Twitter. com Top 5 Global Markets by Reach (%) Country. Percent Jun 2010 20. 8%, Dec 2010 19. 0% Jun 2010 20. 5%, Dec 2010 21. 8% Jun 2010 19. 0%, Dec 2010 21. 1% Jun 2010 17. 7%, Dec 2010 22. 3% Jun 2010 16. 8%, Dec 2010 20. 0%

Technology Implementation Great reliance is placed on open-source software. The Twitter Web interface uses the Ruby on Rails framework, deployed on a performance enhanced Ruby Enterprise Edition implementation of Ruby. As of April 6, 2011, Twitter engineers confirmed they had switched away from their Ruby on Rails search-stack, to a Java server they call Blender. From spring 2007 to 2008 the messages were handled by a Ruby persistent queue server called Starling, but since 2009 implementation has been gradually replaced with software written in Scala. The service's application programming interface (API) allows other web services and applications to integrate with Twitter. Individual tweets are registered under unique IDs using software called snowflake and geolocation data is added using 'Rockdove'. The URL shortner t. co then checks for a spam link and shortens the URL. The tweets are stored in a My. SQL database using Gizzard and acknowledged to users as having been sent. They are then sent to search engines via the Firehose API. The process itself is managed by Flock. DB and takes an average of 350 ms. Biz Stone explains that all messages are instantly indexed and that "with this newly launched feature, Twitter has become something unexpectedly important – a discovery engine for finding out what is happening right now. " Privacy and security Twitter messages are public but users can also send private messages. Twitter collects personally identifiable information about its users and shares it with third parties. The service reserves the right to sell this information as an asset if the company changes hands. While Twitter displays no advertising, advertisers can target users based on their history of tweets and may quote tweets in ads directed specifically to the user. A security vulnerability was reported on April 7, 2007, by Nitesh Dhanjani and Rujith. Since Twitter used the phone number of the sender of an SMS message as authentication, malicious users could update someone else's status page by using SMS spoofing. The vulnerability could be used if the spoofer knew the phone number registered to their victim's account. Within a few weeks of this discovery Twitter introduced an optional personal identification number (PIN) that its users could use to authenticate their SMS-originating messages. On January 5, 2009, 33 high-profile Twitter accounts were compromised after a Twitter administrator's password was guessed by a dictionary attack. Falsified tweets — including sexually explicit and drug-related messages — were sent from these accounts. Twitter launched the beta version of their "Verified Accounts" service on June 11, 2009, allowing famous or notable people to announce their Twitter account name. The home pages of these accounts display a badge indicating their status. In May 2010, a bug was discovered by İnci Sözlük, involving users that allowed Twitter users to force others to follow them without the other users' consent or knowledge. For example, comedian Conan O'Brien's account, which had been set to follow only one person, was changed to receive nearly 200 malicious subscriptions. In response to Twitter's security breaches, the US Federal Trade Commission brought charges against the service which were settled on June 24, 2010. This was the first time the FTC had taken action against a social network for security lapses. The settlement requires Twitter to take a number of steps to secure users' private information, including maintenance of a "comprehensive information security program" to be independently audited biannually. On 12/14/10, USDo. J issued a subpoena directing Twitter to provide information for accounts registered to or associated with Wiki. Leaks. Twitter decided to notify its users and said ". . . it's our policy to notify users about law enforcement and governmental requests for their information, unless we are prevented by law from doing so". . Open source Twitter has a history of both using and releasing open source software while overcoming technical challenges of their service. A page in their developer documentation thanks dozens of open source projects which they have used, from revision control software like Git to programming languages such as Ruby and Scala. Software released as open source by the company includes the Gizzard Scala framework for creating distributed datastores, the distributed graph database Flock. DB, the Finagle library for building asynchronous RPC servers and clients, the Tw. UI user interface framework for i. OS, and the Bower client-side package manager. The popular Twitter Bootstrap web design library was also started at Twitter and is the most popular repository on Git. Hub. .

Innovators patent agreement 4/17/12, Twitter would implement an “Innovators Patent Agreement” which obligate Twitter to only use its patents for defense. URL shortener t. co is a URL shortening service created by Twitter. It is only available for links posted to Twitter and not available for general use. All links posted to Twitter use a t. co wrapper. Twitter hopes the service will protect users from malicious sites, and will use it to track clicks on links in tweets. Having previously used the services of third parties Tiny. URL and bit. ly. Twitter began experimenting with its own URL shortening service for private messages in 3/10 using the twt. tl domain, before it purchased the t. co domain. The service was tested on the main site using @Twitter. API, @rsarver and @raffi. 9/10 an email from Twitter to users said they'd be expanding the roll-out of the service to users. On June 7, 2011, Twitter was rolling out the feature. Integrated photo-sharing service On June 1, 2011, Twitter announced its own integrated photo-sharing service that enables users to upload a photo and attach it to a Tweet right from Twitter. com. Users now also have the ability to add pictures to Twitter's search by adding hashtags to the tweet. Twitter plans to provide photo galleries designed to gather and syndicate all photos that a user has uploaded on Twitter and third-party services eg Twit. Pic. Use and social impact Dorsey said after a Twitter Town Hall with Barack Obama held in July 2011, that Twitter received over 110, 000 #Ask. Obama tweets. Main article: Twitter usage: Twitter has been used for a variety of purposes in many industries and scenarios. For example, it has been used to organize protests, sometimes referred to as "Twitter Revolutions", which include the Egyptian revolution, 2010– 2011 Tunisian protests, 2009– 2010 Iranian election protests, and 2009 Moldova civil unrest. The governments of Iran and Egypt blocked the service in retaliation. The Hill on February 28, 2011 described Twitter and other social media as a "strategic weapon. . . which have the apparent ability to re-align the social order in real time, with little or no advanced warning. " During the Arab Spring in early 2011, the number of hashtags mentioning the uprisings in Tunisia and Egypt increased. A study found only 0. 26% of the Egyptian population, 0. 1% of the Tunisian population and 0. 04% of the Syrian population are active on Twitter. The service is also used as a form of civil disobedience: in 2010, users expressed outrage over the Twitter Joke Trial by making obvious jokes about terrorism; and in the British privacy injunction debate in the same country a year later, where several celebrities who had taken out anonymised injunctions, most notably the Manchester United player Ryan Giggs, were identified by thousands of users in protest to traditional journalism being censored. Another, more real time and practical use for Twitter exists as an effective de facto emergency communication system for breaking news. It was neither intended nor designed for high performance communication, but the idea that it could be used for emergency communication certainly was not lost on the originators, who knew that the service could have wide-reaching effects early on when the San Francisco, California company used it to communicate during earthquakes. The Boston Police tweeted news of the arrest of the 2013 Boston Marathon Bombing suspect. A practical use being studied is Twitter's ability to track epidemics, how they spread. Twitter has been adopted as a communication and learning tool in educational settings mostly in colleges and universities. It has been used as a backchannel to promote student interactions, especially in large-lecture courses. Research has found that using Twitter promotes informal learning, allows shy students a forum for increased participation, increases student engagement, and improves overall course grades. 5/08, The Wall Street Journal wrote that social networking services such as Twitter "elicit mixed feelings in the technology-savvy people who have been their early adopters. Fans say they are a good way to keep in touch with busy friends. But some users are starting to feel 'too' connected, as they grapple with check-in messages at odd hours, higher cellphone bills and the need to tell acquaintances to stop announcing what they're having for dinner. " Television, rating Twitter is also increasingly used for making TV more interactive and social. This effect is sometimes referred to as the "virtual watercooler" or social television — the practice has been called "chatterboxing". Statistics Most popular accounts As of 7/13 the 10 accounts with the most followers belonged to the following individuals and orgs: [262] Justin Bieber (42. 2 mil followers) Katy Perry (39. 9 m) Lady Gaga (39. 2 m) Barack Obama (34. 5) - most followed account for politician Taylor Swift (31. 4 m) Rihanna (30. 7 m) You. Tube (31 m) - highest account not representing an individual Britney Spears (29. 7 m) Instagram (23. 6 m) Justin Timberlake (23. 3 m) Other selected accounts: 12. Twitter (21. 6 m) 16. Cristiano Ronaldo (20 m) - highest account athlete 58. FC Barcelona (9. 5 m) Oldest accounts 14 accounts belonging to Twitter employees at the time and including @jack (Jack Dorsey), @biz (Biz Stone) and @noah (Noah Glass). Record tweets On February 3, 2013, Twitter announced that a record 24. 1 million tweets were sent the night of Super Bowl XLVII. Future Twitter emphasized its news and information-network strategy in November 2009 by changing the question asked to users for status updates from "What are you doing? " to "What's happening? " On November 22, 2010, Biz Stone, a cofounder of the company, expressed for the first time the idea of a Twitter news network, a concept of a wire-like news service he has been working on for years.

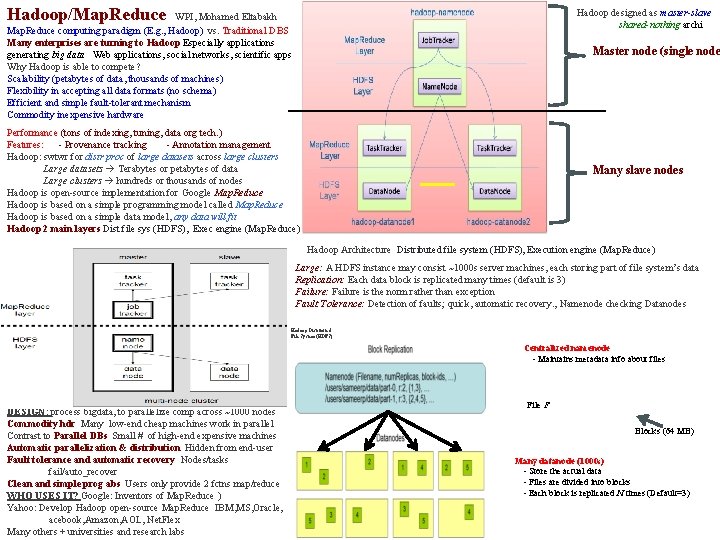

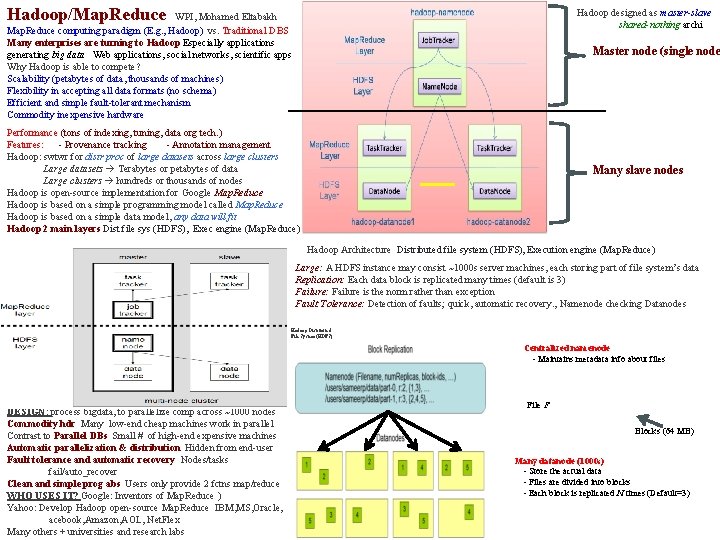

Hadoop/Map. Reduce Hadoop designed as master-slave shared-nothing archi WPI, Mohamed Eltabakh Map. Reduce computing paradigm (E. g. , Hadoop) vs. Traditional DBS Many enterprises are turning to Hadoop Especially applications generating big data Web applications, social networks, scientific apps Why Hadoop is able to compete? Scalability (petabytes of data, thousands of machines) Flexibility in accepting all data formats (no schema) Efficient and simple fault-tolerant mechanism Commodity inexpensive hardware Master node (single node Performance (tons of indexing, tuning, data org tech. ) Features: - Provenance tracking - Annotation management Hadoop: swtwr for distr proc of large datasets across large clusters Large datasets Terabytes or petabytes of data Large clusters hundreds or thousands of nodes Hadoop is open-source implementation for Google Map. Reduce Hadoop is based on a simple programming model called Map. Reduce Hadoop is based on a simple data model, any data will fit Hadoop 2 main layers Dist file sys (HDFS), Exec engine (Map. Reduce) Many slave nodes Hadoop Architecture Distributed file system (HDFS), Execution engine (Map. Reduce) Large: A HDFS instance may consist ~1000 s server machines, each storing part of file system’s data Replication: Each data block is replicated many times (default is 3) Failure: Failure is the norm rather than exception Fault Tolerance: Detection of faults; quick, automatic recovery. , Namenode checking Datanodes Hadoop Distributed File System (HDFS) Centralized namenode - Maintains metadata info about files DESIGN: process bigdata, to parallelize comp across ~1000 nodes Commodity hdr Many low-end cheap machines work in parallel Contrast to Parallel DBs Small # of high-end expensive machines Automatic parallelization & distribution Hidden from end-user Fault tolerance and automatic recovery Nodes/tasks fail/auto_recover Clean and simple prog abs Users only provide 2 fctns map/reduce WHO USES IT? Google: Inventors of Map. Reduce ) Yahoo: Develop Hadoop open-source Map. Reduce IBM, MS, Oracle, acebook, Amazon, AOL, Net. Flex Many others + universities and research labs File F Blocks (64 MB) Many datanode (1000 s) - Store the actual data - Files are divided into blocks - Each block is replicated N times (Default=3)

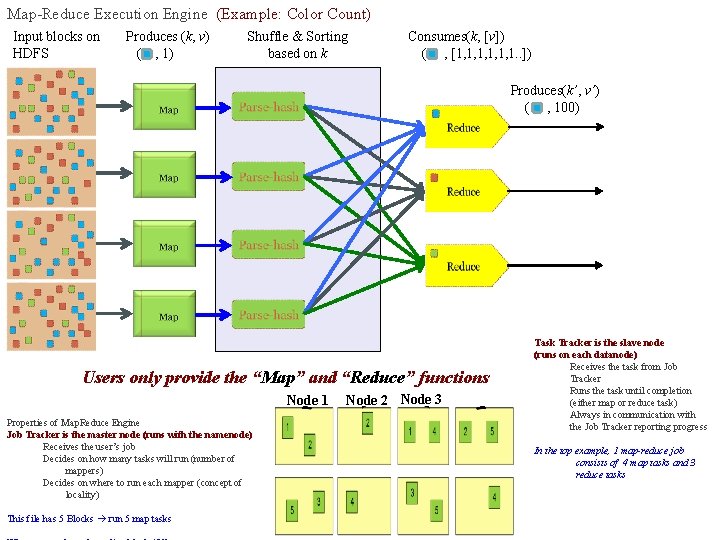

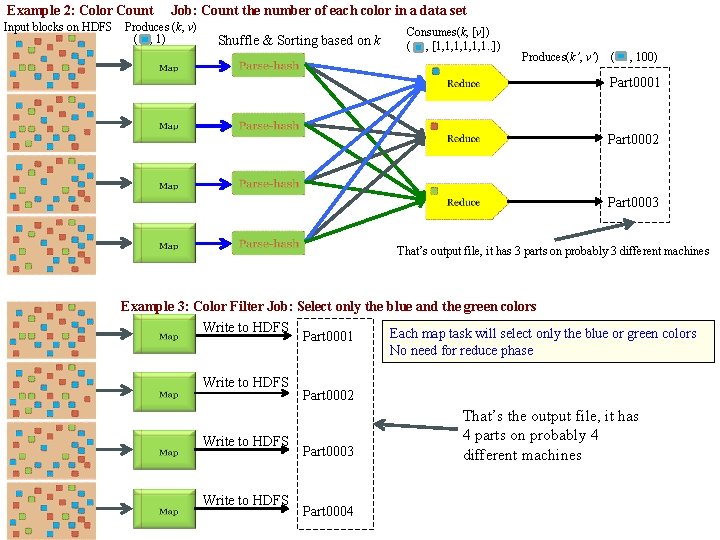

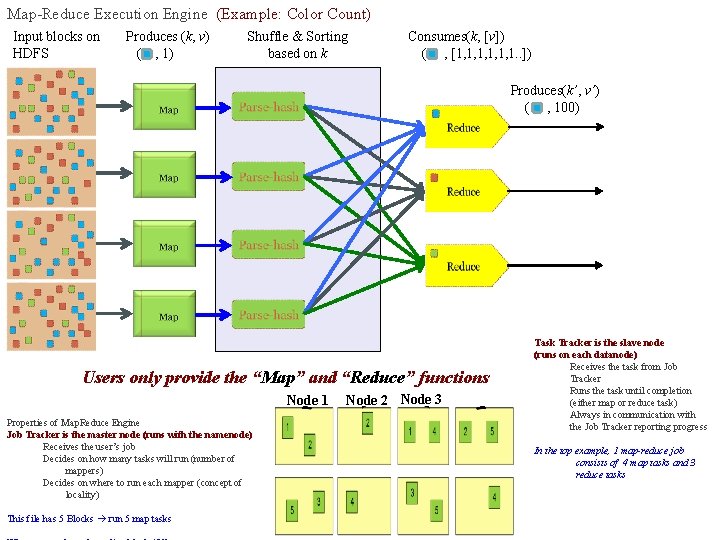

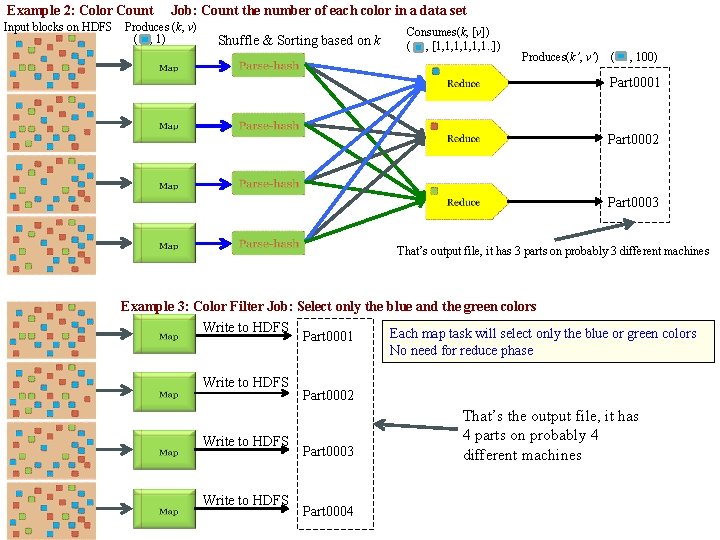

Map-Reduce Execution Engine (Example: Color Count) Input blocks on HDFS Produces (k, v) ( , 1) Shuffle & Sorting based on k Consumes(k, [v]) ( , [1, 1, 1, 1. . ]) Produces(k’, v’) ( , 100) Users only provide the “Map” and “Reduce” functions Node 1 Properties of Map. Reduce Engine Job Tracker is the master node (runs with the namenode) Receives the user’s job Decides on how many tasks will run (number of mappers) Decides on where to run each mapper (concept of locality) This file has 5 Blocks run 5 map tasks Node 2 Node 3 Task Tracker is the slave node (runs on each datanode) Receives the task from Job Tracker Runs the task until completion (either map or reduce task) Always in communication with the Job Tracker reporting progress In the top example, 1 map-reduce job consists of 4 map tasks and 3 reduce tasks

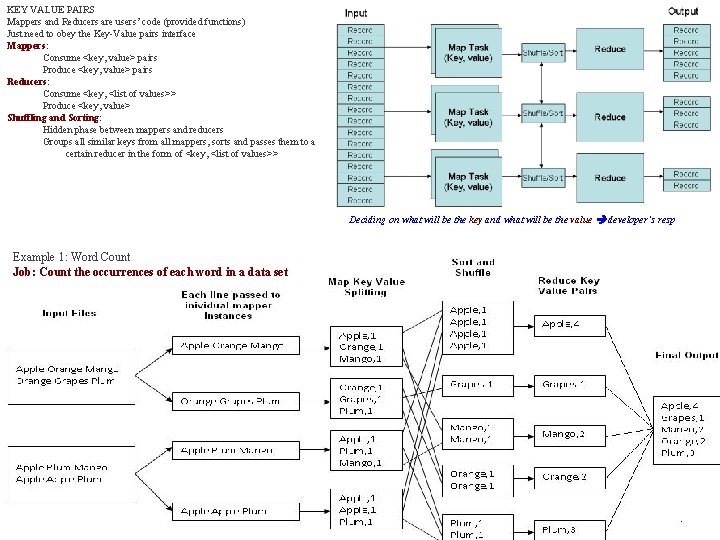

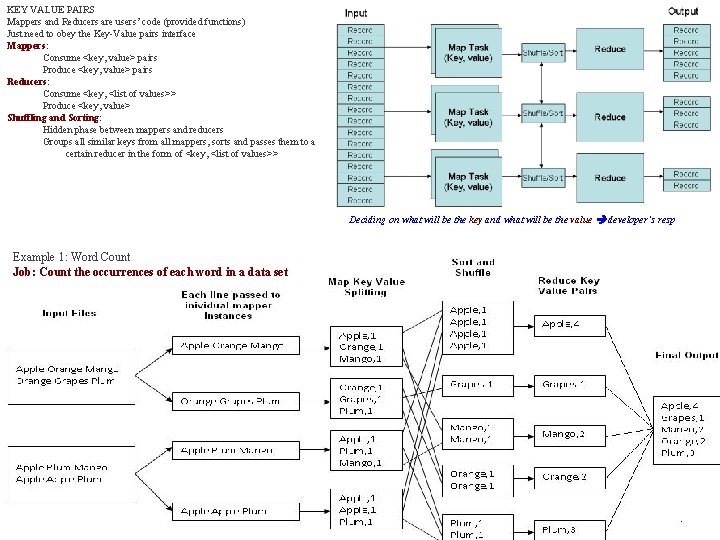

KEY VALUE PAIRS Mappers and Reducers are users’ code (provided functions) Just need to obey the Key-Value pairs interface Mappers: Consume <key, value> pairs Produce <key, value> pairs Reducers: Consume <key, <list of values>> Produce <key, value> Shuffling and Sorting: Hidden phase between mappers and reducers Groups all similar keys from all mappers, sorts and passes them to a certain reducer in the form of <key, <list of values>> Deciding on what will be the key and what will be the value developer’s resp Example 1: Word Count Job: Count the occurrences of each word in a data set

Example 2: Color Count Input blocks on HDFS Job: Count the number of each color in a data set Produces (k, v) ( , 1) Shuffle & Sorting based on k Consumes(k, [v]) ( , [1, 1, 1, 1. . ]) Produces(k’, v’) ( , 100) Part 0001 Part 0002 Part 0003 That’s output file, it has 3 parts on probably 3 different machines Example 3: Color Filter Job: Select only the blue and the green colors Write to HDFS Part 0001 Each map task will select only the blue or green colors No need for reduce phase Part 0002 Part 0003 Part 0004 That’s the output file, it has 4 parts on probably 4 different machines

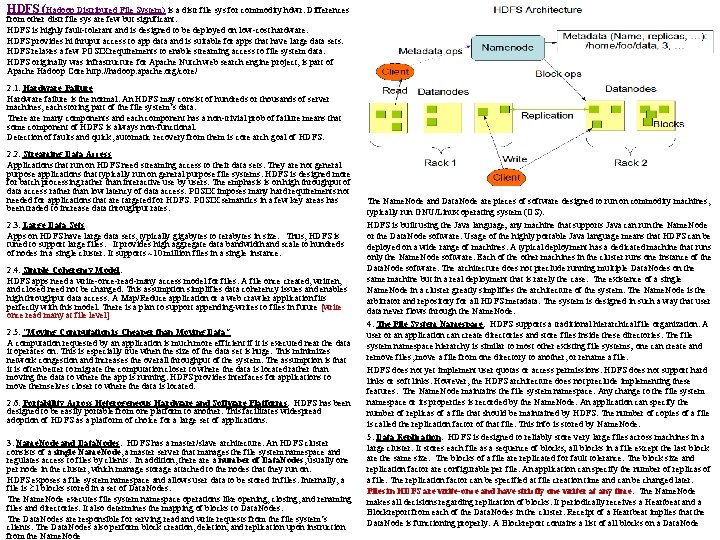

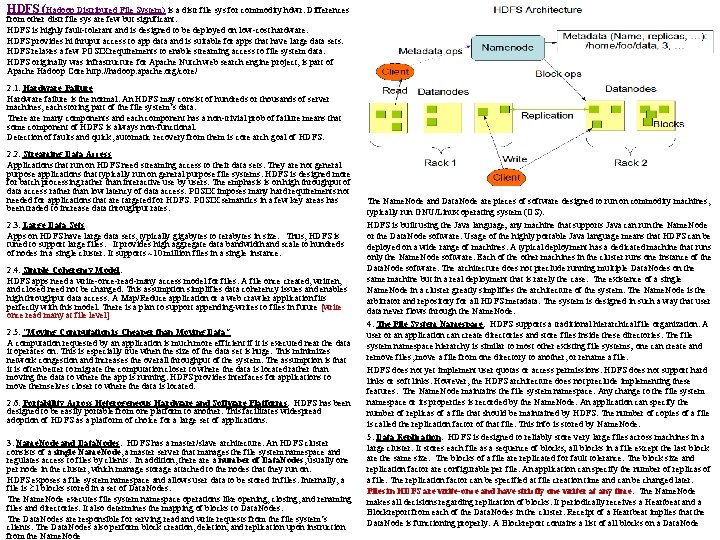

HDFS (Hadoop Distributed File System) is a distr file sys for commodity hdwr. Differences from other distr file sys are few but significant. HDFS is highly fault-tolerant and is designed to be deployed on low-cost hardware. HDFS provides hi thruput access to app data and is suitable for apps that have large data sets. HDFS relaxes a few POSIX requirements to enable streaming access to file system data. HDFS originally was infrastructure for Apache Nutch web search engine project, is part of Apache Hadoop Core http: //hadoop. apache. org/core/ 2. 1. Hardware Failure Hardware failure is the normal. An HDFS may consist of hundreds or thousands of server machines, each storing part of the file system’s data. There are many components and each component has a non-trivial prob of failure means that some component of HDFS is always non-functional. Detection of faults and quick, automatic recovery from them is core arch goal of HDFS. 2. 2. Streaming Data Access Applications that run on HDFS need streaming access to their data sets. They are not general purpose applications that typically run on general purpose file systems. HDFS is designed more for batch processing rather than interactive use by users. The emphasis is on high throughput of data access rather than low latency of data access. POSIX imposes many hard requirements not needed for applications that are targeted for HDFS. POSIX semantics in a few key areas has been traded to increase data throughput rates. 2. 3. Large Data Sets Apps on HDFS have large data sets, typically gigabytes to terabytes in size. Thus, HDFS is tuned to support large files. It provides high aggregate data bandwidth and scale to hundreds of nodes in a single cluster. It supports ~10 million files in a single instance. 2. 4. Simple Coherency Model: HDFS apps need a write-once-read-many access model for files. A file once created, written, and closed need not be changed. This assumption simplifies data coherency issues and enables high throughput data access. A Map/Reduce application or a web crawler application fits perfectly with this model. There is a plan to support appending-writes to files in future [write once read many at file level] 2. 5. “Moving Computation is Cheaper than Moving Data” A computation requested by an application is much more efficient if it is executed near the data it operates on. This is especially true when the size of the data set is huge. This minimizes network congestion and increases the overall throughput of the system. The assumption is that it is often better to migrate the computation closer to where the data is located rather than moving the data to where the app is running. HDFS provides interfaces for applications to move themselves closer to where the data is located. 2. 6. Portability Across Heterogeneous Hardware and Software Platforms: HDFS has been designed to be easily portable from one platform to another. This facilitates widespread adoption of HDFS as a platform of choice for a large set of applications. 3. Name. Node and Data. Nodes: HDFS has a master/slave architecture. An HDFS cluster consists of a single Name. Node, a master server that manages the file system namespace and regulates access to files by clients. In addition, there a number of Data. Nodes, usually one per node in the cluster, which manage storage attached to the nodes that they run on. HDFS exposes a file system namespace and allows user data to be stored in files. Internally, a file is 1 blocks stored in a set of Data. Nodes. The Name. Node executes file system namespace operations like opening, closing, and renaming files and directories. It also determines the mapping of blocks to Data. Nodes. The Data. Nodes are responsible for serving read and write requests from the file system’s clients. The Data. Nodes also perform block creation, deletion, and replication upon instruction from the Name. Node The Name. Node and Data. Node are pieces of software designed to run on commodity machines, typically run GNU/Linux operating system (OS). HDFS is built using the Java language; any machine that supports Java can run the Name. Node or the Data. Node software. Usage of the highly portable Java language means that HDFS can be deployed on a wide range of machines. A typical deployment has a dedicated machine that runs only the Name. Node software. Each of the other machines in the cluster runs one instance of the Data. Node software. The architecture does not preclude running multiple Data. Nodes on the same machine but in a real deployment that is rarely the case. The existence of a single Name. Node in a cluster greatly simplifies the architecture of the system. The Name. Node is the arbitrator and repository for all HDFS metadata. The system is designed in such a way that user data never flows through the Name. Node. 4. The File System Namespace: HDFS supports a traditional hierarchical file organization. A user or an application can create directories and store files inside these directories. The file system namespace hierarchy is similar to most other existing file systems; one can create and remove files, move a file from one directory to another, or rename a file. HDFS does not yet implement user quotas or access permissions. HDFS does not support hard links or soft links. However, the HDFS architecture does not preclude implementing these features. The Name. Node maintains the file system namespace. Any change to the file system namespace or its properties is recorded by the Name. Node. An application can specify the number of replicas of a file that should be maintained by HDFS. The number of copies of a file is called the replication factor of that file. This info is stored by Name. Node. 5. Data Replication: HDFS is designed to reliably store very large files across machines in a large cluster. It stores each file as a sequence of blocks; all blocks in a file except the last block are the same size. The blocks of a file are replicated for fault tolerance. The block size and replication factor are configurable per file. An application can specify the number of replicas of a file. The replication factor can be specified at file creation time and can be changed later. Files in HDFS are write-once and have strictly one writer at any time. The Name. Node makes all decisions regarding replication of blocks. It periodically receives a Heartbeat and a Blockreport from each of the Data. Nodes in the cluster. Receipt of a Heartbeat implies that the Data. Node is functioning properly. A Blockreport contains a list of all blocks on a Data. Node

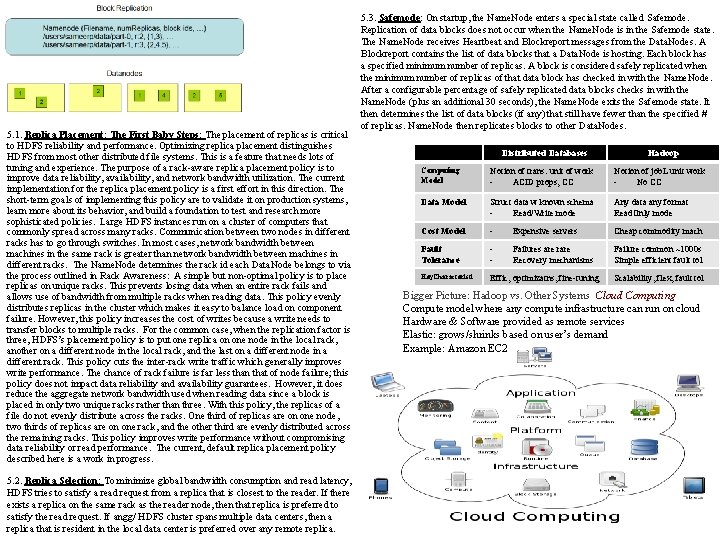

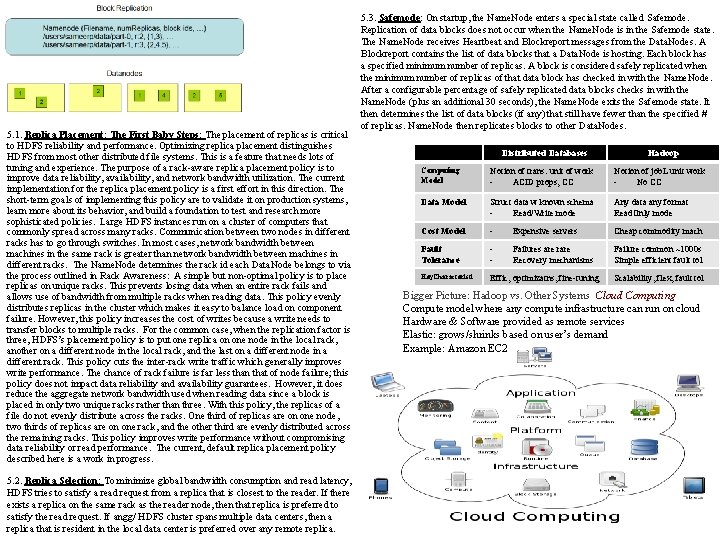

5. 1. Replica Placement: The First Baby Steps: The placement of replicas is critical to HDFS reliability and performance. Optimizing replica placement distinguishes HDFS from most other distributed file systems. This is a feature that needs lots of tuning and experience. The purpose of a rack-aware replica placement policy is to improve data reliability, availability, and network bandwidth utilization. The current implementation for the replica placement policy is a first effort in this direction. The short-term goals of implementing this policy are to validate it on production systems, learn more about its behavior, and build a foundation to test and research more sophisticated policies. Large HDFS instances run on a cluster of computers that commonly spread across many racks. Communication between two nodes in different racks has to go through switches. In most cases, network bandwidth between machines in the same rack is greater than network bandwidth between machines in different racks. The Name. Node determines the rack id each Data. Node belongs to via the process outlined in Rack Awareness: A simple but non-optimal policy is to place replicas on unique racks. This prevents losing data when an entire rack fails and allows use of bandwidth from multiple racks when reading data. This policy evenly distributes replicas in the cluster which makes it easy to balance load on component failure. However, this policy increases the cost of writes because a write needs to transfer blocks to multiple racks. For the common case, when the replication factor is three, HDFS’s placement policy is to put one replica on one node in the local rack, another on a different node in the local rack, and the last on a different node in a different rack. This policy cuts the inter-rack write traffic which generally improves write performance. The chance of rack failure is far less than that of node failure; this policy does not impact data reliability and availability guarantees. However, it does reduce the aggregate network bandwidth used when reading data since a block is placed in only two unique racks rather than three. With this policy, the replicas of a file do not evenly distribute across the racks. One third of replicas are on one node, two thirds of replicas are on one rack, and the other third are evenly distributed across the remaining racks. This policy improves write performance without compromising data reliability or read performance. The current, default replica placement policy described here is a work in progress. 5. 2. Replica Selection: To minimize global bandwidth consumption and read latency, HDFS tries to satisfy a read request from a replica that is closest to the reader. If there exists a replica on the same rack as the reader node, then that replica is preferred to satisfy the read request. If angg/ HDFS cluster spans multiple data centers, then a replica that is resident in the local data center is preferred over any remote replica. 5. 3. Safemode: On startup, the Name. Node enters a special state called Safemode. Replication of data blocks does not occur when the Name. Node is in the Safemode state. The Name. Node receives Heartbeat and Blockreport messages from the Data. Nodes. A Blockreport contains the list of data blocks that a Data. Node is hosting. Each block has a specified minimum number of replicas. A block is considered safely replicated when the minimum number of replicas of that data block has checked in with the Name. Node. After a configurable percentage of safely replicated data blocks checks in with the Name. Node (plus an additional 30 seconds), the Name. Node exits the Safemode state. It then determines the list of data blocks (if any) that still have fewer than the specified # of replicas. Name. Node then replicates blocks to other Data. Nodes. Distributed Databases Hadoop Computing Model Notion of trans: unit of work ACID props, CC Notion of job. L unit work No CC Data Model Struct data w known schema Read/Write mode Any data any format Read. Only mode Cost Model - Expensive servers Cheap commodity mach Fault Tolerance - Failures are rare Recovery mechanisms Failure common ~1000 s Simple efficient fault tol Key. Characteristi Effic, optimizatns, fine-tuning Scalability, flex, fault tol Bigger Picture: Hadoop vs. Other Systems Cloud Computing Compute model where any compute infrastructure can run on cloud Hardware & Software provided as remote services Elastic: grows/shrinks based on user’s demand Example: Amazon EC 2

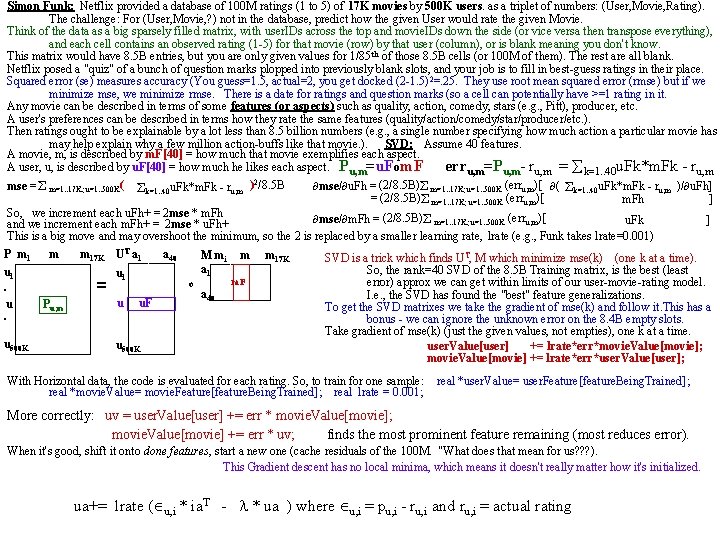

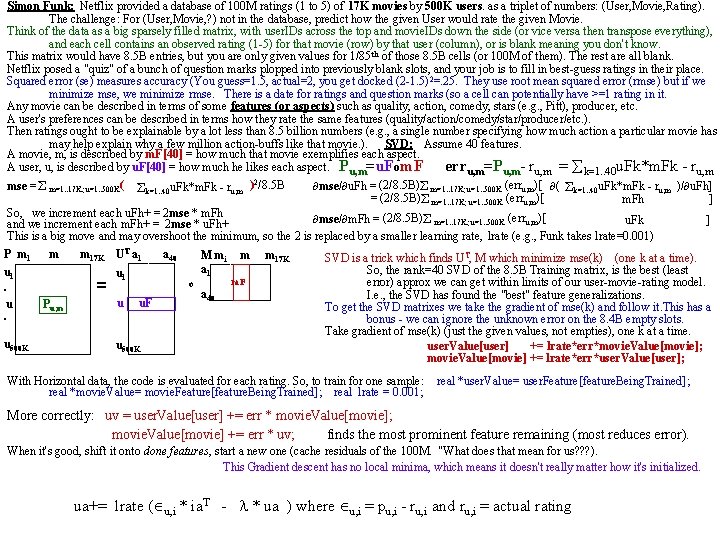

Simon Funk: Netflix provided a database of 100 M ratings (1 to 5) of 17 K movies by 500 K users. as a triplet of numbers: (User, Movie, Rating). The challenge: For (User, Movie, ? ) not in the database, predict how the given User would rate the given Movie. Think of the data as a big sparsely filled matrix, with user. IDs across the top and movie. IDs down the side (or vice versa then transpose everything), and each cell contains an observed rating (1 -5) for that movie (row) by that user (column), or is blank meaning you don't know. This matrix would have 8. 5 B entries, but you are only given values for 1/85 th of those 8. 5 B cells (or 100 M of them). The rest are all blank. Netflix posed a "quiz" of a bunch of question marks plopped into previously blank slots, and your job is to fill in best-guess ratings in their place. Squared error (se) measures accuracy (You guess=1. 5, actual=2, you get docked (2 -1. 5) 2=. 25. They use root mean squared error (rmse) but if we minimize mse, we minimize rmse. There is a date for ratings and question marks (so a cell can potentially have >=1 rating in it. Any movie can be described in terms of some features (or aspects) such as quality, action, comedy, stars (e. g. , Pitt), producer, etc. A user's preferences can be described in terms how they rate the same features (quality/action/comedy/star/producer/etc. ). Then ratings ought to be explainable by a lot less than 8. 5 billion numbers (e. g. , a single number specifying how much action a particular movie has may help explain why a few million action-buffs like that movie. ). SVD: Assume 40 features. A movie, m, is described by m. F[40] = how much that movie exemplifies each aspect. = k=1. . 40 u. Fk*m. Fk - ru, m A user, u, is described by u. F[40] = how much he likes each aspect. Pu, m=u. Fom. F erru, m=Pu, m- ru, m mse/ u. Fh = (2/8. 5 B) m=1. . 17 K; u=1. . 500 K (erru, m)[ ( )/ u. Fh] k=1. . 40 u. Fk*m. Fk - ru, m mse = m=1. . 17 K; u=1. . 500 K ( ) k=1. . 40 u. Fk*m. Fk - ru, m 2/8. 5 B = (2/8. 5 B) m=1. . 17 K; u=1. . 500 K (erru, m)[ m. Fh ] So, we increment each u. Fh+ = 2 mse * m. Fh mse/ m. Fh = (2/8. 5 B) m=1. . 17 K; u=1. . 500 K (erru, m)[ u. Fk ] and we increment each m. Fh+ = 2 mse * u. Fh+ This is a big move and may overshoot the minimum, so the 2 is replaced by a smaller learning rate, lrate (e. g. , Funk takes lrate=0. 001) P m 1 m m 17 K UT a 1 a 40 M m 1 m m 17 K SVD is a trick which finds UT, M which minimize mse(k) (one k at a time). a 1 So, the rank=40 SVD of the 8. 5 B Training matrix, is the best (least u 1 m. F error) approx we can get within limits of our user-movie-rating model. o . = a 40 I. e. , the SVD has found the "best" feature generalizations. u u. F u Pu, m To get the SVD matrixes we take the gradient of mse(k) and follow it. This has a . bonus - we can ignore the unknown error on the 8. 4 B empty slots. Take gradient of mse(k) (just the given values, not empties), one k at a time. u 500 K user. Value[user] += lrate*err*movie. Value[movie]; movie. Value[movie] += lrate*err*user. Value[user]; With Horizontal data, the code is evaluated for each rating. So, to train for one sample: real *user. Value= user. Feature[feature. Being. Trained]; real *movie. Value= movie. Feature[feature. Being. Trained]; real lrate = 0. 001; More correctly: uv = user. Value[user] += err * movie. Value[movie]; movie. Value[movie] += err * uv; finds the most prominent feature remaining (most reduces error). When it's good, shift it onto done features, start a new one (cache residuals of the 100 M. "What does that mean for us? ? ? ). This Gradient descent has no local minima, which means it doesn't really matter how it's initialized. ua+= lrate ( u, i * ia. T - * ua ) where u, i = pu, i - ru, i and ru, i = actual rating