SkewReduce: Skew-Resistant Parallel Processing of Feature-Extracting Scientific User-Defined Functions


SkewReduce: Skew-Resistant Parallel Processing of Feature-Extracting Scientific User-Defined Functions

YongChul Kwon, Magdalena Balazinska, Bill Howe, Jerome Rolia*

University of Washington, *HP Labs

Published in SoCC 2010

Motivation

- Science is becoming a data management problem
- MapReduce is an attractive solution
  - Simple API, declarative layer, seamless scalability, …
- But it is hard to
  - express complex algorithms, and
  - get good performance (14 hours vs. 70 minutes)
- SkewReduce:
  - Goal: scalable analysis with minimal effort
  - Toward scalable feature extraction analysis

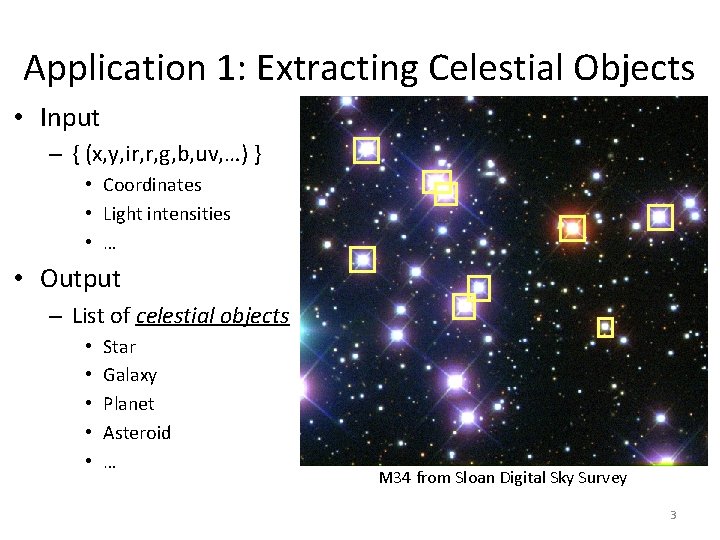

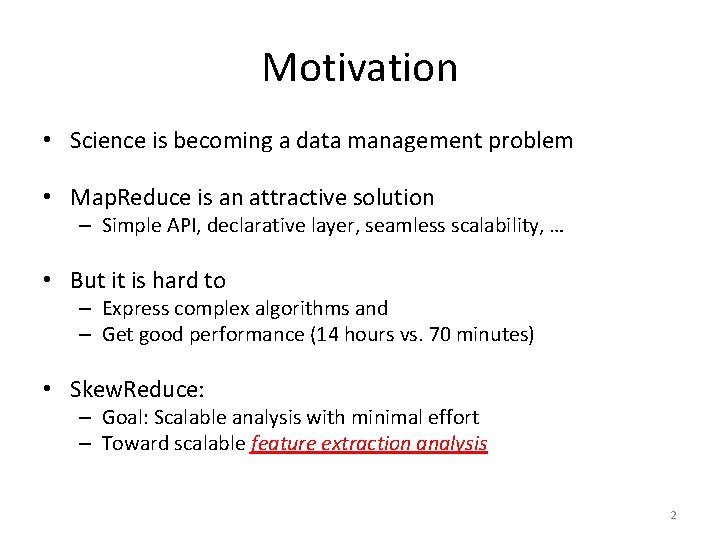

Application 1: Extracting Celestial Objects

- Input: { (x, y, ir, r, g, b, uv, …) }
  - Coordinates
  - Light intensities
  - …
- Output: list of celestial objects
  - Star
  - Galaxy
  - Planet
  - Asteroid
  - …

Figure: M34 from the Sloan Digital Sky Survey

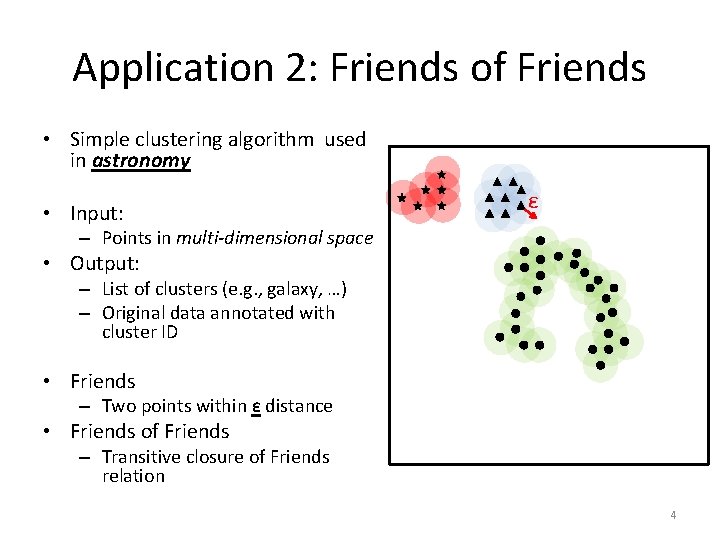

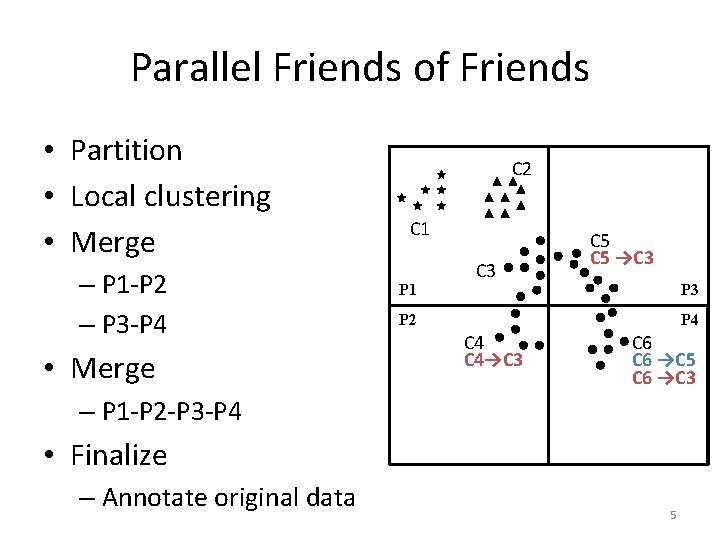

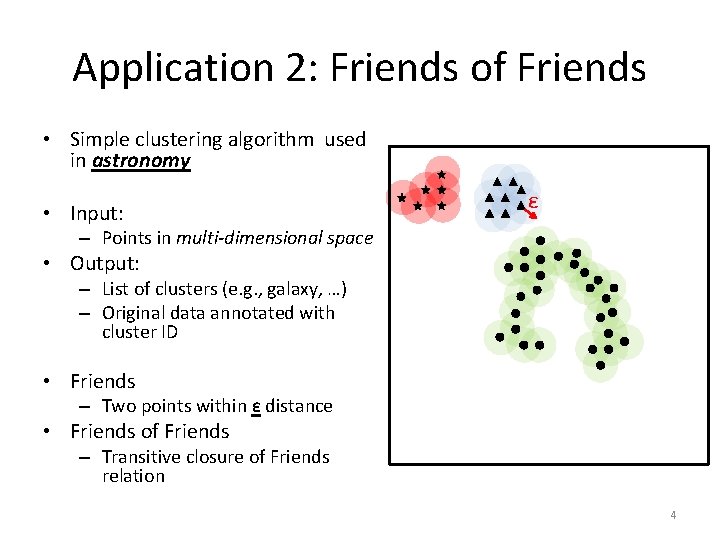

Application 2: Friends of Friends

- Simple clustering algorithm used in astronomy
- Input:
  - Points in multi-dimensional space
  - Distance threshold ε
- Output:
  - List of clusters (e.g., galaxy, …)
  - Original data annotated with cluster ID
- Friends: two points within ε distance of each other
- Friends of Friends: transitive closure of the Friends relation

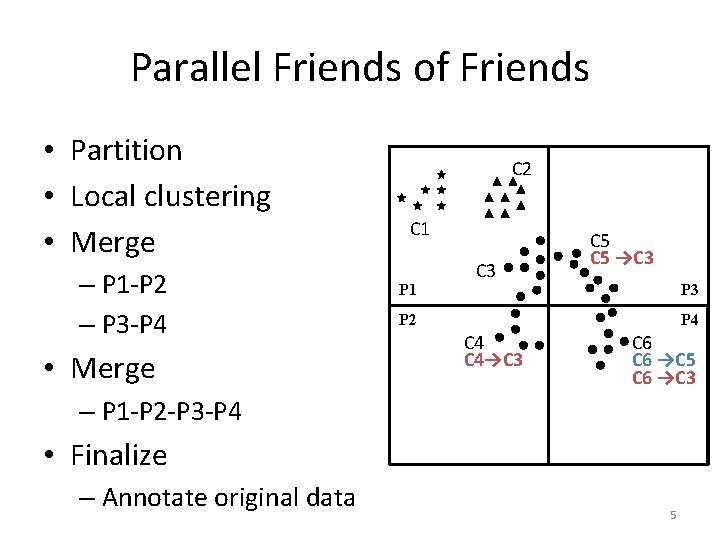
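The Friends-of-Friends definition maps directly onto a union-find (disjoint-set) structure: every friend pair becomes a union, and the transitive closure falls out of the set representatives. The following is a minimal serial sketch, not the implementation used in the talk; the function name `friends_of_friends` is hypothetical, and the all-pairs loop is O(N²) purely for clarity.

```python
import math
from itertools import combinations


def friends_of_friends(points, eps):
    """Label each point with a cluster ID: points within eps are friends,
    and clusters are the transitive closure of the friends relation."""
    parent = list(range(len(points)))

    def find(i):
        # Follow parent pointers to the root, halving the path as we go.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    def union(i, j):
        ri, rj = find(i), find(j)
        if ri != rj:
            parent[max(ri, rj)] = min(ri, rj)  # keep the smaller index as root

    # Naive all-pairs friend test; a real implementation would use a
    # spatial index (e.g. a k-d tree or a grid) instead.
    for i, j in combinations(range(len(points)), 2):
        if math.dist(points[i], points[j]) <= eps:
            union(i, j)

    return [find(i) for i in range(len(points))]


labels = friends_of_friends([(0, 0), (0.5, 0), (1.0, 0), (5, 5), (5.4, 5)], eps=0.6)
print(labels)  # [0, 0, 0, 3, 3]
```

Points 0 and 2 are 1.0 apart, farther than ε = 0.6, yet they share a cluster because both are friends of point 1.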

Parallel Friends of Friends

- Partition
- Local clustering
- Merge
  - P1-P2
  - P3-P4
- Merge
  - P1-P2-P3-P4
- Finalize
  - Annotate original data

Figure: partitions P1 to P4 with local clusters C1 to C6; relabelings during merge: C5→C3, C4→C3, C6→C5, then C6→C3.

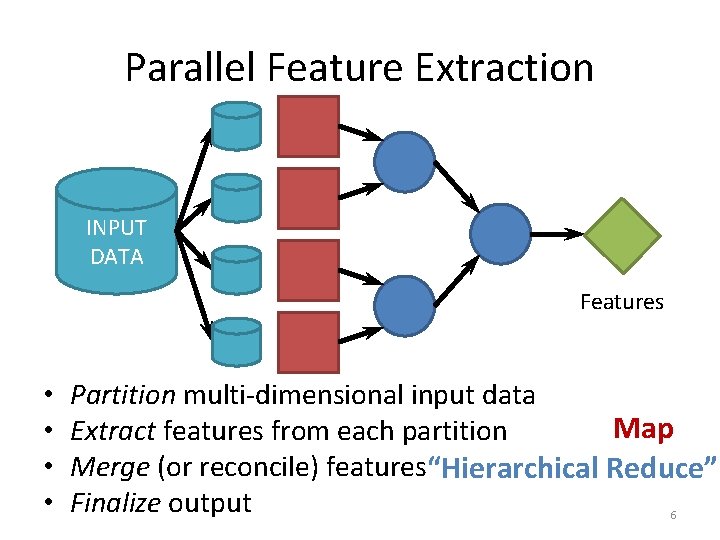

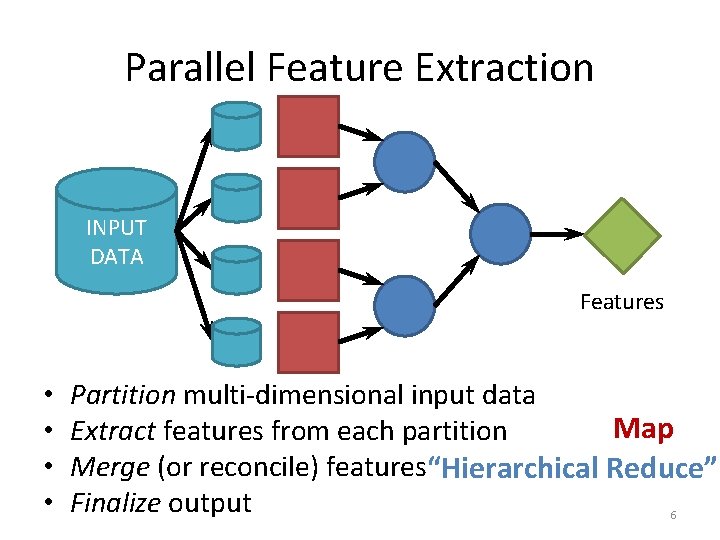
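The relabelings in the merge figure (C5→C3, C4→C3, C6→C5, and finally C6→C3) show that successive merges can produce chains of cluster-ID rewrites, which must be collapsed before the Finalize step annotates the original data. A minimal sketch, assuming cluster IDs are integers and that the smallest ID in a merged group becomes the canonical one; `resolve_relabels` and `finalize` are hypothetical names.

```python
def resolve_relabels(pairs):
    """Collapse merge-time relabelings (e.g. 6->5 and 5->3) so that every
    cluster ID maps to one canonical ID, the smallest in its group."""
    parent = {}

    def find(c):
        parent.setdefault(c, c)
        while parent[c] != c:
            parent[c] = parent[parent[c]]  # path halving
            c = parent[c]
        return c

    for a, b in pairs:
        ra, rb = find(a), find(b)
        if ra != rb:
            low, high = sorted((ra, rb))
            parent[high] = low  # the smaller ID represents the merged cluster
    return {c: find(c) for c in list(parent)}


def finalize(labels, mapping):
    """Annotate the original data: rewrite each point's local cluster ID to
    its canonical ID; IDs untouched by any merge keep their value."""
    return [mapping.get(c, c) for c in labels]


# Relabelings from the figure, with cluster Ck written as the integer k.
mapping = resolve_relabels([(5, 3), (4, 3), (6, 5)])
print(mapping[6])                             # 3, i.e. C6 -> C3
print(finalize([1, 2, 3, 4, 5, 6], mapping))  # [1, 2, 3, 3, 3, 3]
```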

Parallel Feature Extraction

- Partition multi-dimensional input data
- Map: extract features from each partition
- Merge (or reconcile) features: "Hierarchical Reduce"
- Finalize output

Figure: INPUT DATA → Features

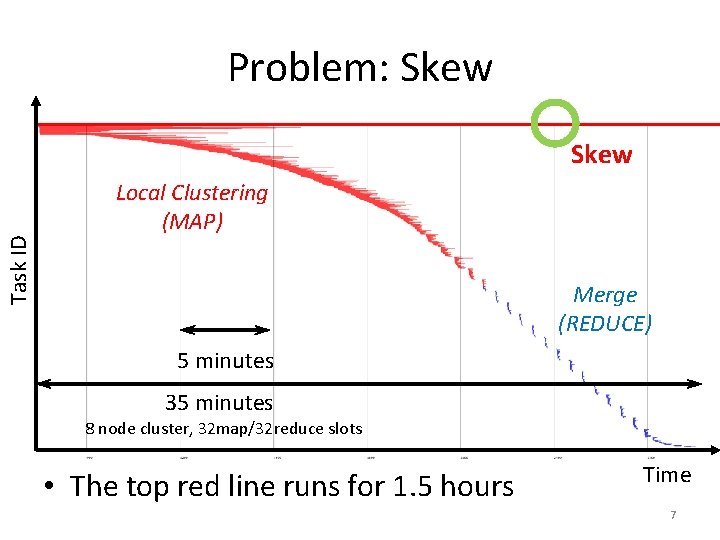

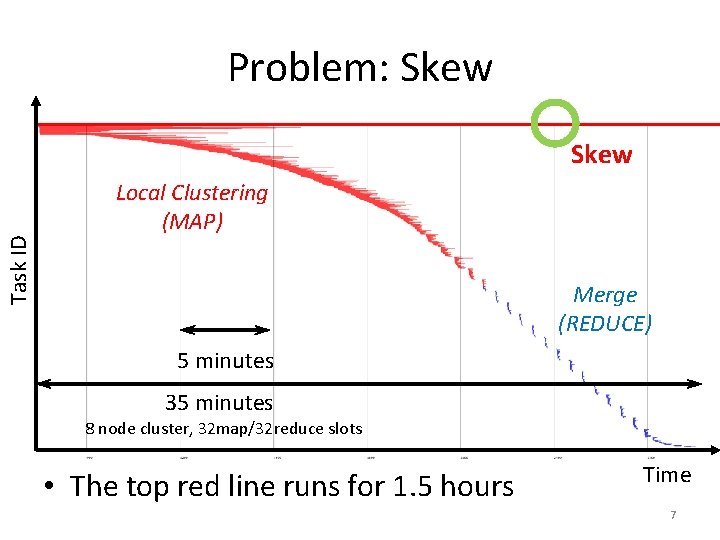
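The partition, map, hierarchical merge, and finalize steps can be sketched as a small driver with user-supplied functions, using 1-D Friends of Friends as the feature extractor. This is a simplified illustration, not SkewReduce's actual API (which the talk defers to the paper): all function names are hypothetical, partitions are assumed to be contiguous, non-overlapping value ranges listed in order, and merging is done pairwise as a binary tree.

```python
import bisect


def process(points, eps):
    """Map (hypothetical UDF): find local 1-D clusters in one partition.

    A cluster is a (min, max) interval in which consecutive sorted points
    are at most eps apart."""
    clusters = []
    for p in sorted(points):
        if clusters and p - clusters[-1][1] <= eps:
            clusters[-1] = (clusters[-1][0], p)  # extend the current cluster
        else:
            clusters.append((p, p))  # start a new cluster
    return clusters


def merge(left, right, eps):
    """Merge (hypothetical UDF): reconcile two adjacent partitions' results.

    Because partitions are ordered value ranges, only the last cluster of
    `left` and the first cluster of `right` can be friends."""
    if left and right and right[0][0] - left[-1][1] <= eps:
        joined = (left[-1][0], right[0][1])
        return left[:-1] + [joined] + right[1:]
    return left + right


def finalize(points, clusters):
    """Finalize (hypothetical UDF): annotate each point with its cluster index."""
    starts = [lo for lo, _ in clusters]
    return [bisect.bisect_right(starts, p) - 1 for p in points]


def run(partitions, eps):
    """Map every partition, then merge neighbors pairwise, level by level."""
    level = [process(part, eps) for part in partitions]
    while len(level) > 1:
        merged = [merge(level[i], level[i + 1], eps)
                  for i in range(0, len(level) - 1, 2)]
        if len(level) % 2 == 1:
            merged.append(level[-1])  # odd partition result moves up unchanged
        level = merged
    return level[0] if level else []


parts = [[0.0, 0.5, 2.0], [2.4, 5.0], [5.8, 9.0]]
clusters = run(parts, eps=1.0)
print(clusters)  # [(0.0, 0.5), (2.0, 2.4), (5.0, 5.8), (9.0, 9.0)]
print(finalize([p for part in parts for p in part], clusters))
# [0, 0, 1, 1, 2, 2, 3]
```

The parallel result matches running `process` on all points at once, which is the correctness condition a merge function has to preserve.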

Problem: Skew

Figure: per-task timeline (Task ID vs. Time) for the Local Clustering (MAP) and Merge (REDUCE) phases, annotated "5 minutes" and "35 minutes"; 8 node cluster, 32 map/32 reduce slots.

- The top red line runs for 1.5 hours

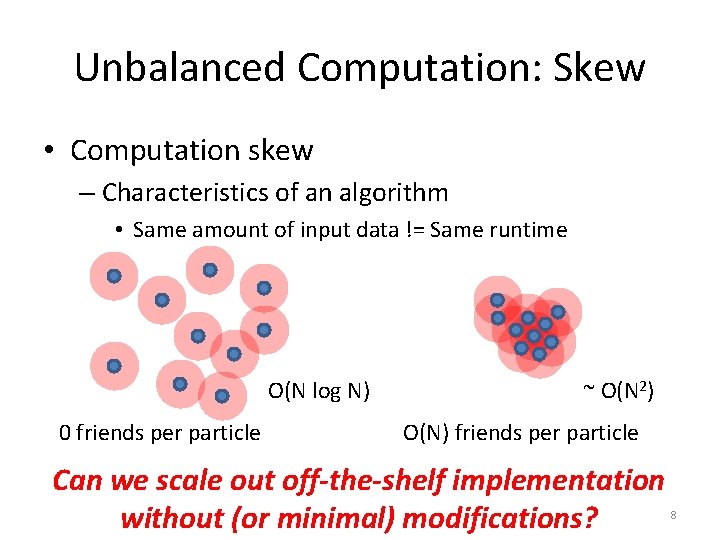

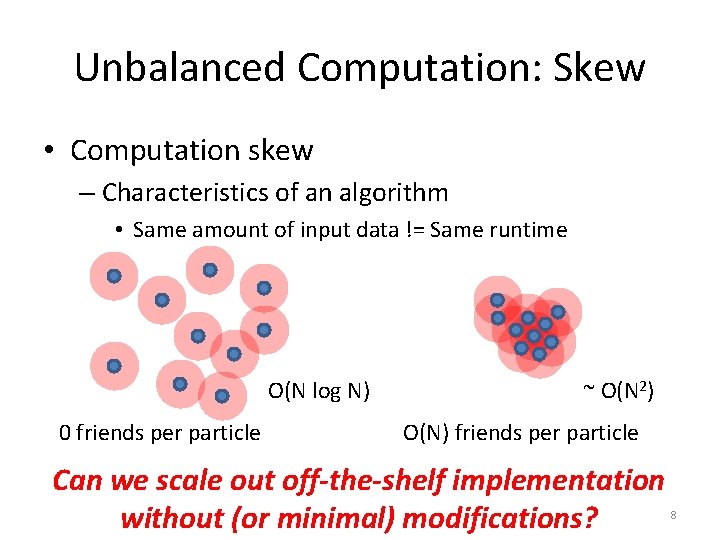

Unbalanced Computation: Skew

- Computation skew
  - Characteristics of an algorithm
  - Same amount of input data != same runtime
  - 0 friends per particle: O(N log N)
  - O(N) friends per particle: ~O(N²)
- Can we scale out an off-the-shelf implementation with no (or minimal) modifications?

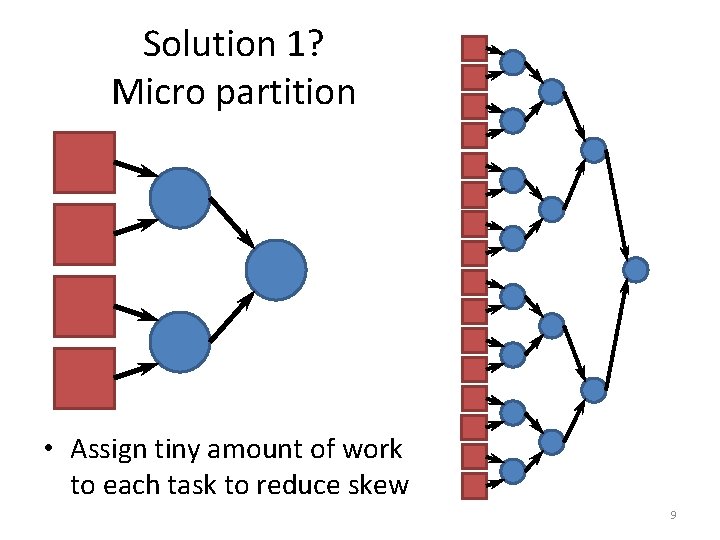

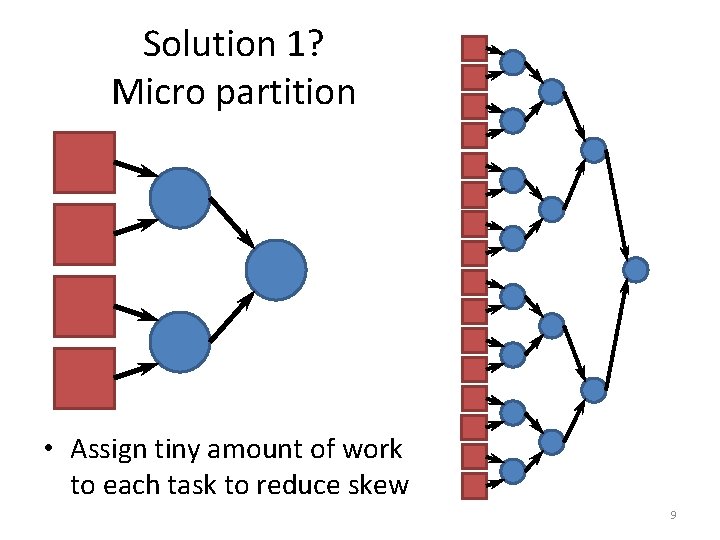
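To see why the same amount of input data can cost very different amounts of work, the sketch below counts the candidate pairs an ε-neighbor search must test. It assumes a uniform grid with cell side ε purely for illustration (the slide's O(N log N) figure suggests a tree-based search instead), and `count_distance_checks` is a hypothetical name. With 1000 points spread far apart no pairs are tested; with the same 1000 points packed into one cell, every pair is.

```python
from collections import defaultdict
from itertools import product


def count_distance_checks(points, eps):
    """Count the candidate pairs a grid-based eps-neighbor search must test.

    Points are hashed into square cells of side eps, so a friend can only be
    in the same cell or one of the 8 adjacent cells."""
    cells = defaultdict(list)
    for idx, (x, y) in enumerate(points):
        cells[(int(x // eps), int(y // eps))].append(idx)

    checks = 0
    for (cx, cy), members in cells.items():
        for dx, dy in product((-1, 0, 1), repeat=2):
            neighbors = cells.get((cx + dx, cy + dy), [])
            # Count each unordered pair once by requiring i < j.
            checks += sum(1 for i in members for j in neighbors if i < j)
    return checks


n = 1000
spread = [(10.0 * k, 0.0) for k in range(n)]   # 0 friends per particle
clumped = [(0.5, 0.5)] * n                      # every particle is a friend
print(count_distance_checks(spread, eps=1.0))   # 0
print(count_distance_checks(clumped, eps=1.0))  # 499500
```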

Solution 1? Micro partition

- Assign a tiny amount of work to each task to reduce skew

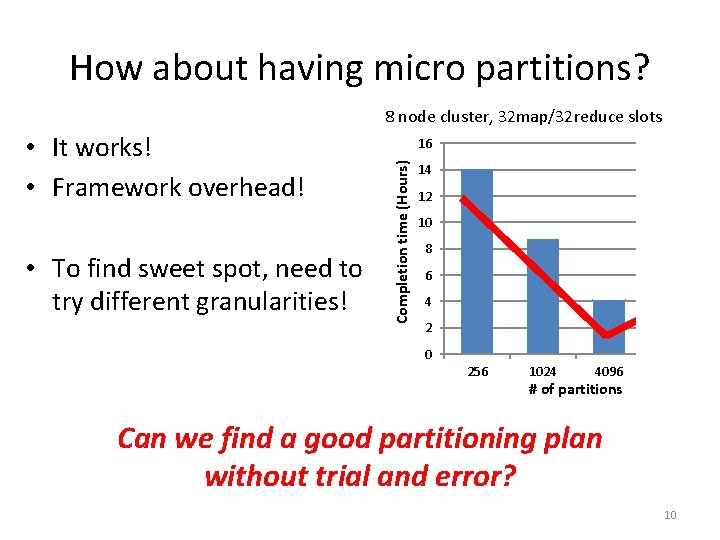

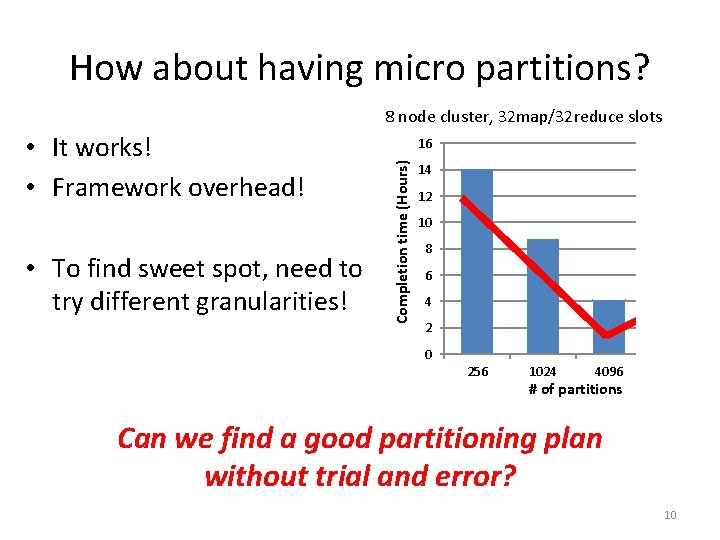

How about having micro partitions?

Figure: completion time (hours) vs. # of partitions (256, 1024, 4096, 8192); 8 node cluster, 32 map/32 reduce slots.

- It works!
- Framework overhead!
- To find the sweet spot, we need to try different granularities!
- Can we find a good partitioning plan without trial and error?
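The trade-off on this slide can be reproduced with a toy model: greedy list scheduling of partitions onto slots, where each task also pays a fixed framework overhead. Everything here is hypothetical: the per-cell work values, the 2 slots, and the overhead are made up for illustration and are not measurements from the talk. With zero overhead, finer partitions keep helping; with an overhead of 1.0 per task, completion time bottoms out at an intermediate granularity.

```python
import heapq


def split_work(cell_work, k):
    """Cut a 1-D grid of per-cell work into k equal-width partitions."""
    if len(cell_work) % k:
        raise ValueError("k must divide the number of cells")
    width = len(cell_work) // k
    return [sum(cell_work[i:i + width]) for i in range(0, len(cell_work), width)]


def makespan(task_costs, slots, overhead):
    """Greedy list scheduling: each task runs on the slot that frees up first,
    and every task also pays a fixed framework overhead."""
    finish = [0.0] * slots  # min-heap of slot finish times
    for cost in task_costs:
        earliest = heapq.heappop(finish)
        heapq.heappush(finish, earliest + cost + overhead)
    return max(finish)


# Hypothetical skewed workload: two expensive cells followed by 14 cheap ones.
cells = [8, 8] + [1] * 14
for k in (2, 4, 8, 16):
    tasks = split_work(cells, k)
    print(k, makespan(tasks, slots=2, overhead=0.0),
          makespan(tasks, slots=2, overhead=1.0))
# 2 22.0 23.0
# 4 18.0 19.0
# 8 16.0 20.0
# 16 15.0 23.0
```

Finding the best k here still requires sweeping every granularity, which is exactly the trial and error the slide asks to avoid.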

Outline

- Motivation
- SkewReduce
  - API (in the paper)
  - Partition Optimization
- Evaluation
- Summary

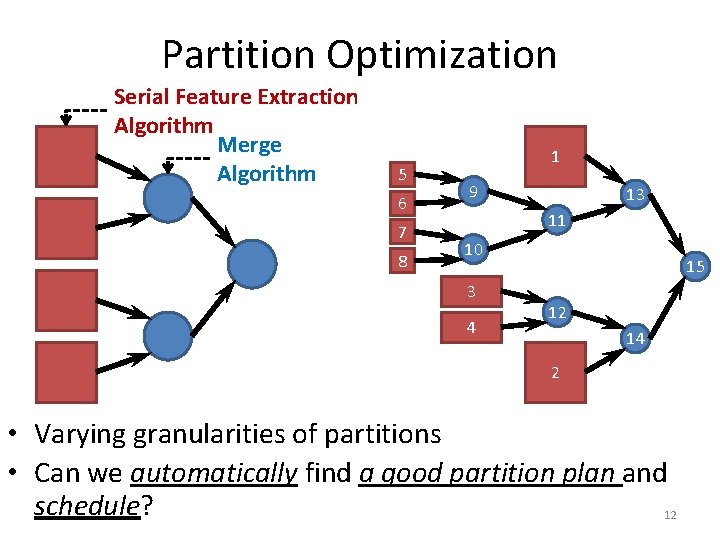

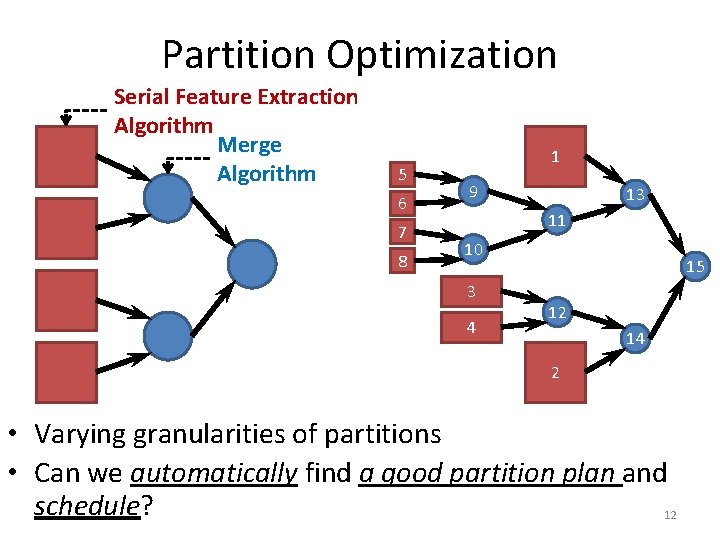

Partition Optimization

Figure: a serial feature extraction algorithm and a merge algorithm applied to partitions numbered 1 to 15, of varying size.

- Varying granularities of partitions
- Can we automatically find a good partition plan and schedule?

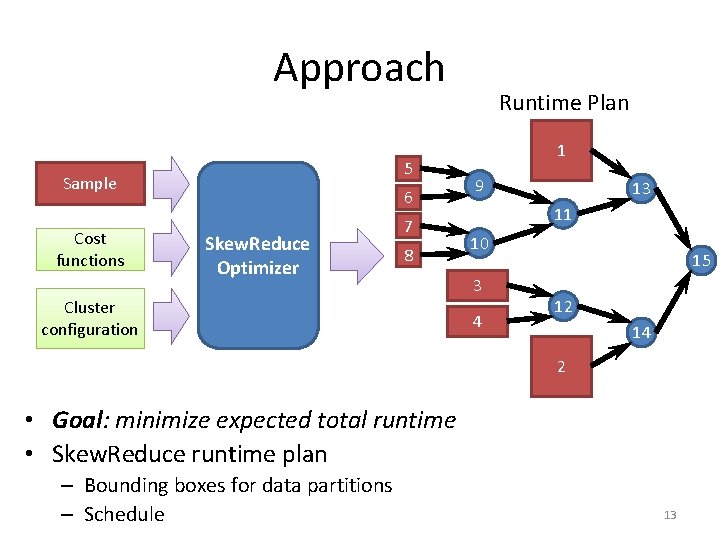

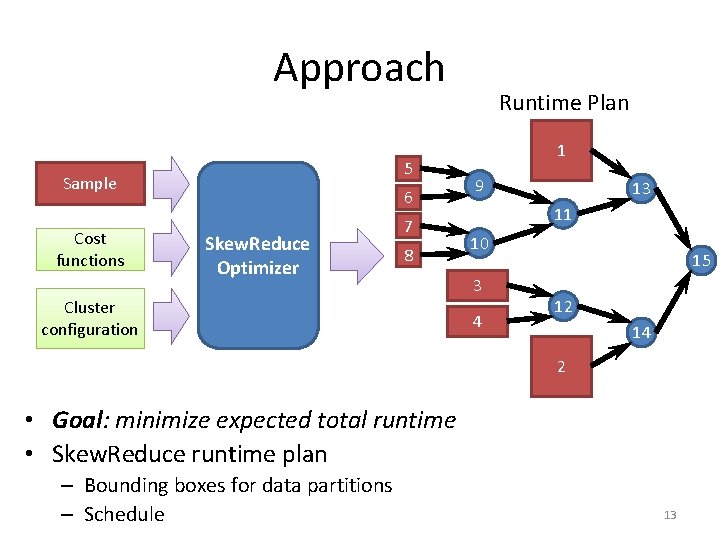

Approach

Figure: a sample, cost functions, and the cluster configuration feed the SkewReduce Optimizer, which produces a runtime plan (partitions numbered 1 to 15).

- Goal: minimize expected total runtime
- SkewReduce runtime plan
  - Bounding boxes for data partitions
  - Schedule

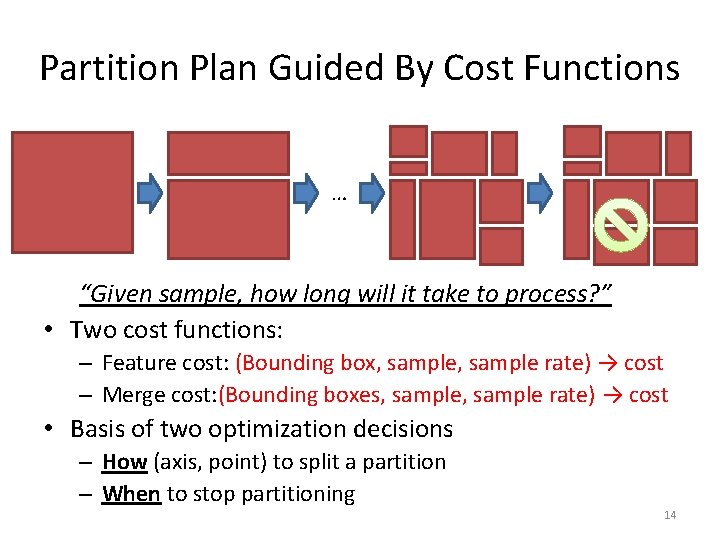

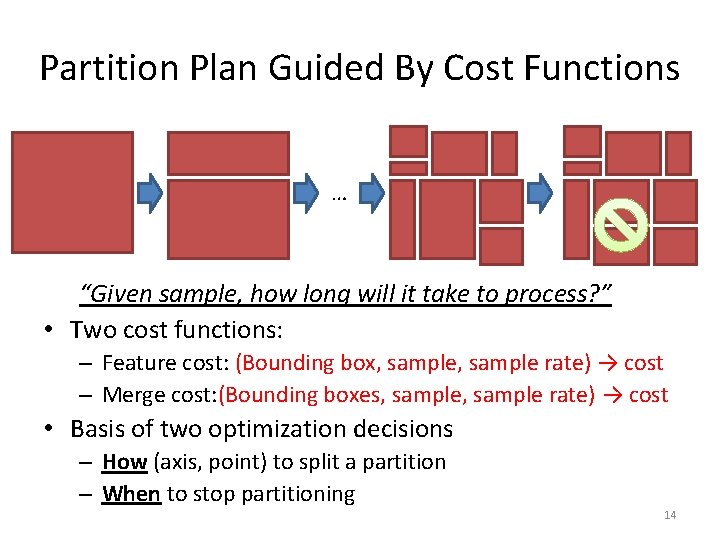

Partition Plan Guided By Cost Functions

- "Given a sample, how long will it take to process?"
- Two cost functions:
  - Feature cost: (bounding box, sample rate) → cost
  - Merge cost: (bounding boxes, sample rate) → cost
- Basis of two optimization decisions
  - How (axis, point) to split a partition
  - When to stop partitioning

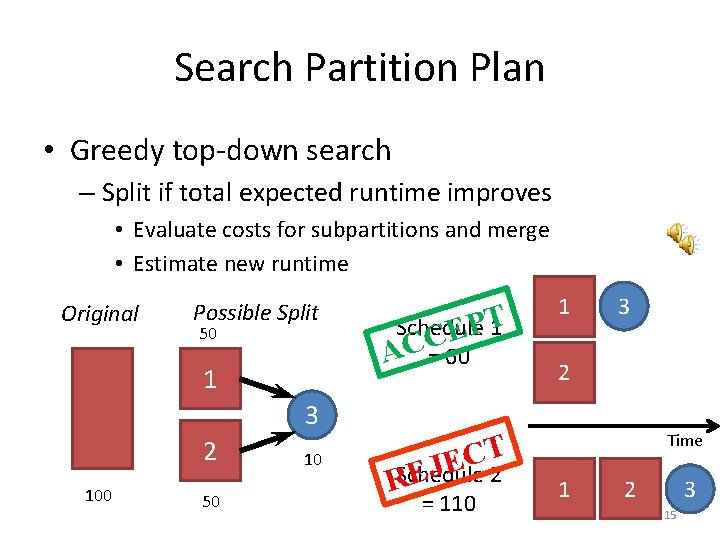

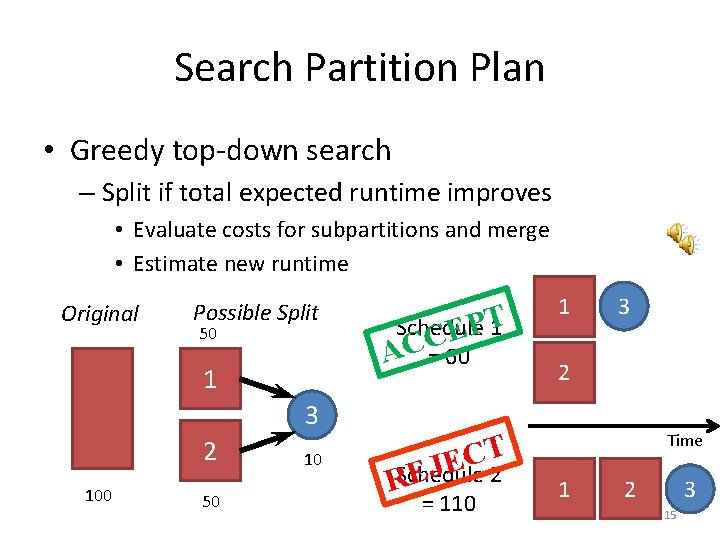
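One way to realize the two cost-function signatures is to count the sample points inside each bounding box and scale by 1/sample rate. The sketch below assumes axis-aligned boxes given as (lows, highs), an O(n log n) local feature extractor, and a merge whose work is proportional to the points within a halo (for Friends of Friends, ε) of the shared face. These cost models and all names are hypothetical, not the ones from the paper.

```python
import math


def points_in_box(sample, box):
    """Return the sample points inside an axis-aligned box (lows, highs)."""
    lows, highs = box
    return [p for p in sample
            if all(lo <= x < hi for x, lo, hi in zip(p, lows, highs))]


def feature_cost(sample, box, sample_rate):
    """Hypothetical feature cost: scale the sample count up to an estimated
    partition size n, then assume O(n log n) local feature extraction."""
    n = len(points_in_box(sample, box)) / sample_rate
    return n * math.log2(n) if n > 1 else n


def merge_cost(sample, left, right, sample_rate, halo):
    """Hypothetical merge cost for two boxes that share a face: estimate the
    points within `halo` of that face, since only they can link features
    across the boundary."""
    # The shared face lies on the axis where left's high equals right's low.
    axis = next(a for a, (hi, lo) in enumerate(zip(left[1], right[0])) if hi == lo)
    plane = left[1][axis]
    candidates = points_in_box(sample, left) + points_in_box(sample, right)
    near = [p for p in candidates if abs(p[axis] - plane) < halo]
    return len(near) / sample_rate


sample = [(0.1, 0.5), (0.45, 0.5), (0.55, 0.5), (0.9, 0.5)]  # 50% sample
whole = ((0.0, 0.0), (1.0, 1.0))
left, right = ((0.0, 0.0), (0.5, 1.0)), ((0.5, 0.0), (1.0, 1.0))
print(feature_cost(sample, whole, 0.5))                # 24.0
print(merge_cost(sample, left, right, 0.5, halo=0.1))  # 4.0
```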

Search Partition Plan

- Greedy top-down search
  - Split if total expected runtime improves
- Evaluate costs for subpartitions and merge
- Estimate new runtime

Figure: original partition vs. a possible split into subpartitions 2 and 3 (costs 100, 50, 50, and 10 shown); expected runtime T_ACCEPT = 60 vs. T_REJECT = 110 under the two schedules.

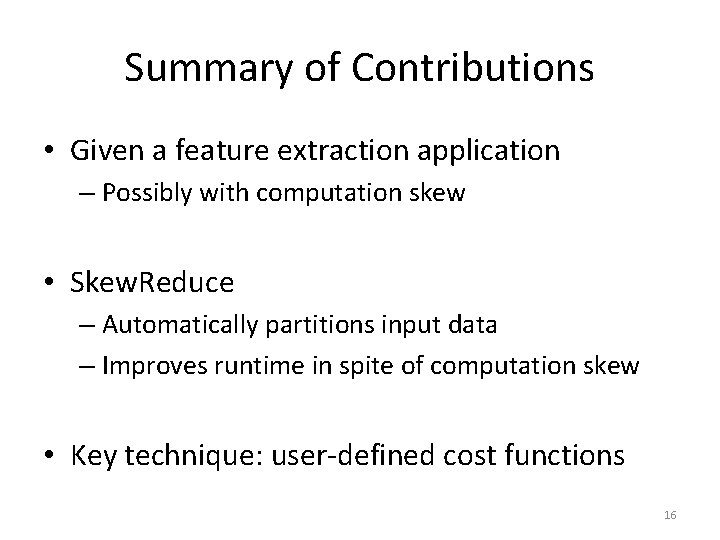
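The greedy top-down search can be sketched in one dimension: split at the median of the sample (one possible choice of split point), accept the split only if the larger half plus the merge is expected to finish sooner than the unsplit partition, and recurse into accepted halves. This is a simplified sketch under stated assumptions: the O(n²) feature cost, the fixed merge overhead `MERGE_OVERHEAD`, and the assumption that both halves run in parallel on free slots are all hypothetical, whereas the actual optimizer estimates runtime against a schedule for the given cluster configuration.

```python
MERGE_OVERHEAD = 1.0  # hypothetical fixed cost of one merge task


def estimate(sample, lo, hi, rate):
    """Estimated number of input points in [lo, hi), scaled up from the sample."""
    return sum(lo <= x < hi for x in sample) / rate


def feature_cost(sample, lo, hi, rate):
    n = estimate(sample, lo, hi, rate)
    return n * n  # hypothetical O(n^2) worst case, e.g. dense friends-of-friends


def merge_cost(sample, split, rate, halo):
    # Only points within `halo` of the split point take part in the merge.
    return MERGE_OVERHEAD + estimate(sample, split - halo, split + halo, rate)


def greedy_plan(sample, lo, hi, rate, halo):
    """Greedy top-down search: split [lo, hi) at the sample median if running
    both halves in parallel and then merging is expected to beat processing
    the partition as one task; otherwise stop partitioning."""
    pts = sorted(x for x in sample if lo <= x < hi)
    if len(pts) < 2:
        return [(lo, hi)]
    split = pts[len(pts) // 2]
    whole = feature_cost(sample, lo, hi, rate)
    parallel = (max(feature_cost(sample, lo, split, rate),
                    feature_cost(sample, split, hi, rate))
                + merge_cost(sample, split, rate, halo))
    if parallel >= whole:
        return [(lo, hi)]
    return (greedy_plan(sample, lo, split, rate, halo)
            + greedy_plan(sample, split, hi, rate, halo))


sample = [10, 20, 30, 40, 50, 60, 70, 80, 600, 900]  # dense below 100
print(greedy_plan(sample, 0, 1000, rate=1.0, halo=10))
# [(0, 30), (30, 40), (40, 60), (60, 80), (80, 600), (600, 900), (900, 1000)]
```

The dense region ends up in narrow partitions and the sparse region in wide ones. Because the merge cost is strictly positive, an accepted split always shrinks both halves, so the recursion terminates.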

Summary of Contributions

- Given a feature extraction application
  - Possibly with computation skew
- SkewReduce
  - Automatically partitions input data
  - Improves runtime in spite of computation skew
- Key technique: user-defined cost functions

Evaluation

- 8 node cluster
  - Dual quad-core CPU, 16 GB RAM
  - Hadoop 0.20.1 + custom patch in MapReduce API
- Distributed Friends of Friends
  - Astro: gravitational simulation snapshot
    - 900 M particles
  - Seaflow: flow cytometry survey
    - 59 M observations

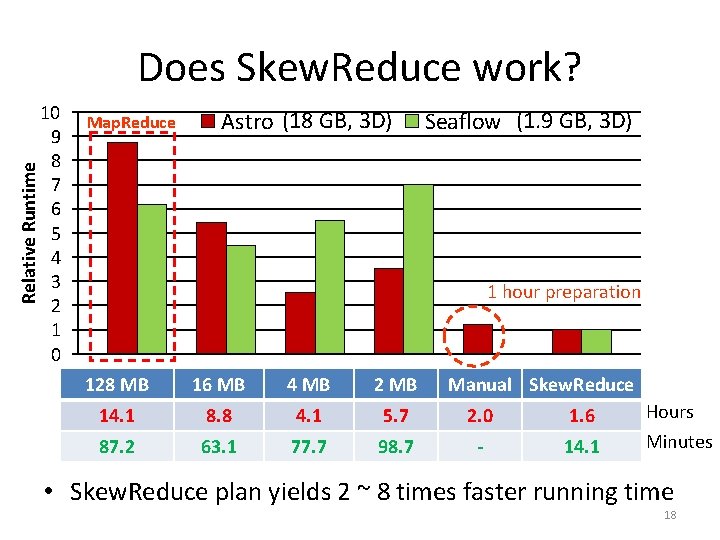

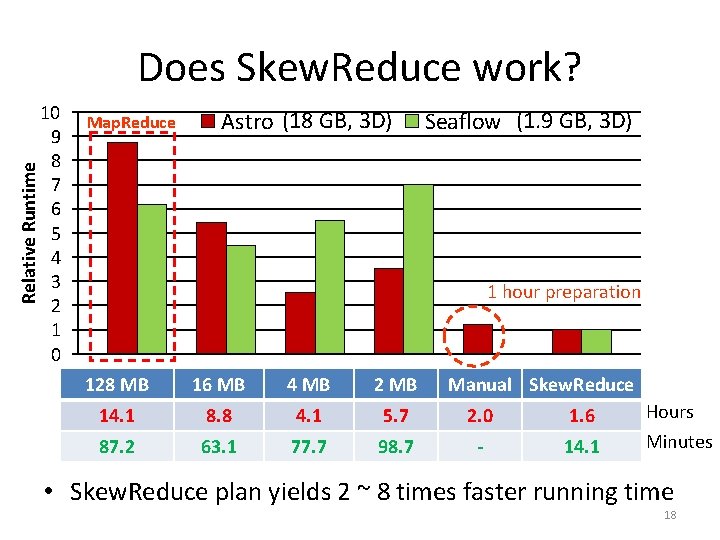

Does SkewReduce work?

Figure: relative runtime of each plan. Absolute runtimes:

| Dataset | MapReduce 128 MB | MapReduce 16 MB | MapReduce 4 MB | MapReduce 2 MB | Manual | SkewReduce |
|---|---|---|---|---|---|---|
| Astro (18 GB, 3D), hours | 14.1 | 8.8 | 4.1 | 5.7 | 2.0 (1 hour preparation) | 1.6 |
| Seaflow (1.9 GB, 3D), minutes | 87.2 | 63.1 | 77.7 | 98.7 | - | 14.1 |

- The SkewReduce plan yields 2 to 8 times faster running time

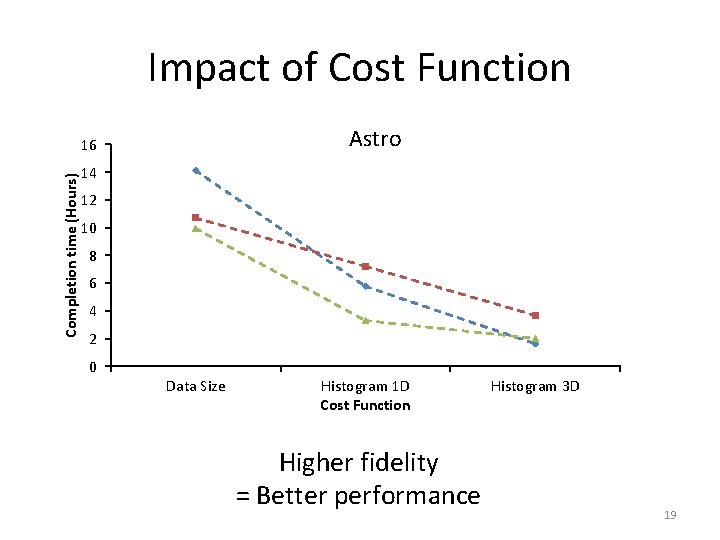

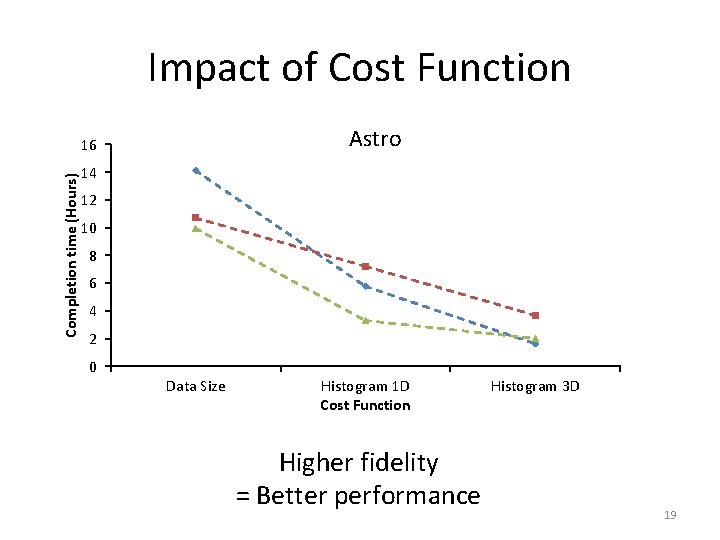
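The "2 to 8 times" claim can be checked from the runtimes on this slide, reading the six values per dataset as MapReduce with 128 MB, 16 MB, 4 MB, and 2 MB partitions, then the manual plan, then SkewReduce. This column order is an interpretation of the flattened chart, and `speedup_range` is a hypothetical helper.

```python
# Runtimes as read from the slide: Astro in hours, Seaflow in minutes.
# Column order is an interpretation of the flattened chart.
runtimes = {
    "Astro": {"128 MB": 14.1, "16 MB": 8.8, "4 MB": 4.1, "2 MB": 5.7,
              "Manual": 2.0, "SkewReduce": 1.6},
    "Seaflow": {"128 MB": 87.2, "16 MB": 63.1, "4 MB": 77.7, "2 MB": 98.7,
                "SkewReduce": 14.1},
}


def speedup_range(times):
    """Speedup of SkewReduce over the best and worst MapReduce partition size."""
    base = times["SkewReduce"]
    mapreduce = [t for name, t in times.items() if name.endswith("MB")]
    return min(mapreduce) / base, max(mapreduce) / base


for dataset, times in runtimes.items():
    low, high = speedup_range(times)
    print(f"{dataset}: {low:.1f}x to {high:.1f}x")
# Astro: 2.6x to 8.8x
# Seaflow: 4.5x to 7.0x
```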

Impact of Cost Function

Figure: Astro completion time (hours) with the Data Size, Histogram 1D, and Histogram 3D cost functions.

- Higher fidelity = better performance
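Why fidelity matters can be illustrated with two 8-point partitions that a size-only cost or a 1-D histogram cost cannot tell apart. The sketch assumes points in the unit cube and uses the sum of squared per-cell counts as a stand-in for pairwise work among nearby points; this proxy and the function names are hypothetical, not the cost functions used in the evaluation.

```python
from collections import Counter


def size_cost(points):
    """Lowest fidelity: the cost depends only on how many points there are."""
    return len(points)


def histogram_cost(points, dims, bins):
    """Bin the first `dims` coordinates (assumed to lie in [0, 1)) into a grid
    with `bins` cells per axis and sum count**2 over the cells, a hypothetical
    proxy for pairwise work among nearby points."""
    cells = Counter(
        tuple(min(int(p[d] * bins), bins - 1) for d in range(dims))
        for p in points)
    return sum(c * c for c in cells.values())


coords = (0.25, 0.75)
spread = [(x, y, z) for x in coords for y in coords for z in coords]
clumped = [(x, 0.25, 0.25) for x in coords for _ in range(4)]

for name, pts in (("spread", spread), ("clumped", clumped)):
    print(name, size_cost(pts), histogram_cost(pts, 1, 2),
          histogram_cost(pts, 3, 2))
# spread 8 32 8
# clumped 8 32 32
```

Only the 3-D histogram sees that the clumped partition packs its points into two cells, so only it would steer the optimizer toward splitting that partition further.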

Highlights of Evaluation

- Sample size
  - Representativeness of the sample is important
- Runtime of SkewReduce optimization
  - Less than 15% of the real runtime of the SkewReduce plan
- Data volume in Merge phase
  - Total volume during Merge = 1% of input data
- Details in the paper

Conclusion

- Scientific analysis should be easy to write, scalable, and have predictable performance
- SkewReduce
  - API for feature-extracting functions
  - Scalable execution
  - Good performance in spite of skew
- Cost-based partition optimization using a data sample
- Published in SoCC 2010
  - A more general version is coming out soon!