Skeleton-based Continuous Gesture Recognition

CHI TSUNG CHANG

Outline
- Introduction
- Background knowledge
  - LSTM/GRU
  - Multilayer RNN
  - Related work
- Model and result
- Advanced method: CTC
- Conclusion

Introduction: hand gesture recognition
Understand the meaning of a motion of the hand.
- Static hand gesture
- Dynamic hand gesture, of two types:
  - Presegmented
  - Continuous (weakly segmented)

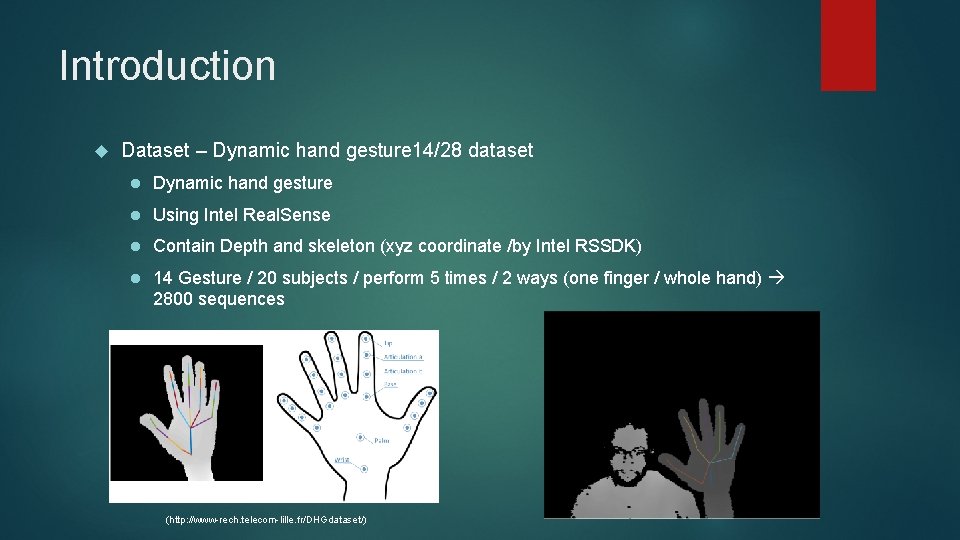

Introduction: dataset
Dynamic Hand Gesture (DHG) 14/28 dataset (http://www-rech.telecom-lille.fr/DHGdataset/)
- Dynamic hand gestures
- Captured with Intel RealSense
- Contains depth and skeleton data (xyz coordinates, from the Intel RSSDK)
- 14 gestures × 20 subjects × 5 repetitions × 2 ways of performing (one finger / whole hand) = 2800 sequences

Introduction: target
Use the DHG 14/28 dataset to implement a skeleton-based continuous hand gesture recognition system.
Why skeleton:
- The skeleton features can fully represent hand motion.
- In interactive environments such as AR and VR, the hand pose (skeleton) is usually already known, so this information should be used for gesture recognition.

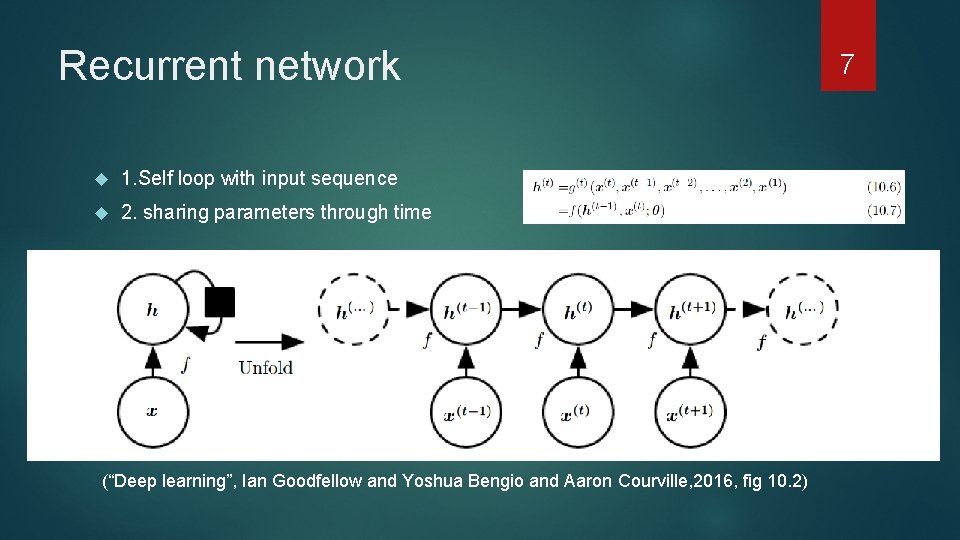

Recurrent network
1. Self-loop over the input sequence
2. Parameters are shared through time
(“Deep Learning”, Ian Goodfellow, Yoshua Bengio, and Aaron Courville, 2016, fig. 10.2)
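The two points above can be written as one recurrence. The symbols are borrowed from the cited textbook's notation rather than from the slide itself: $h^{(t)}$ is the hidden state, $x^{(t)}$ the input at step $t$, and $\theta$ the shared parameters.

```latex
h^{(t)} = f\left(h^{(t-1)},\, x^{(t)};\, \theta\right)
```

The same $f$ and $\theta$ are applied at every time step, which is what sharing parameters through time refers to.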

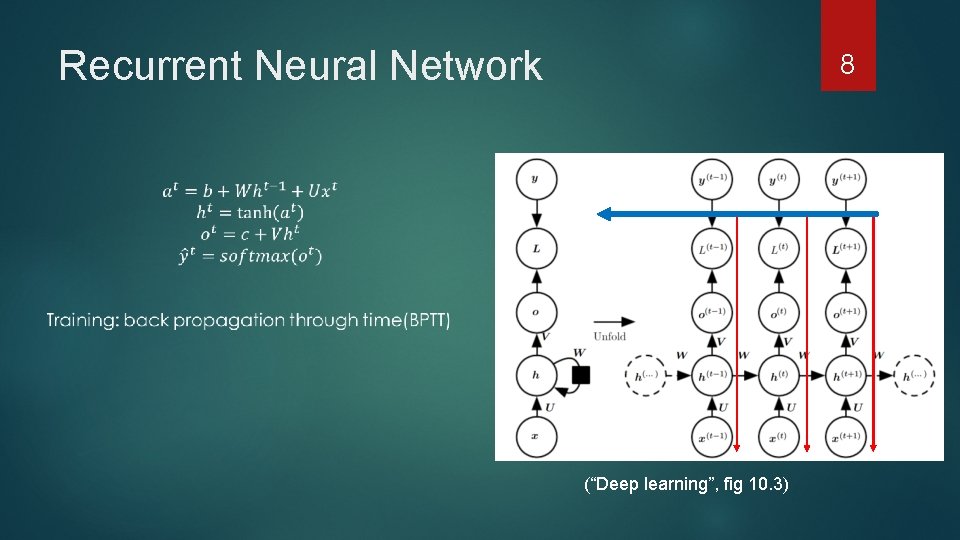

Recurrent Neural Network (“Deep Learning”, fig. 10.3)

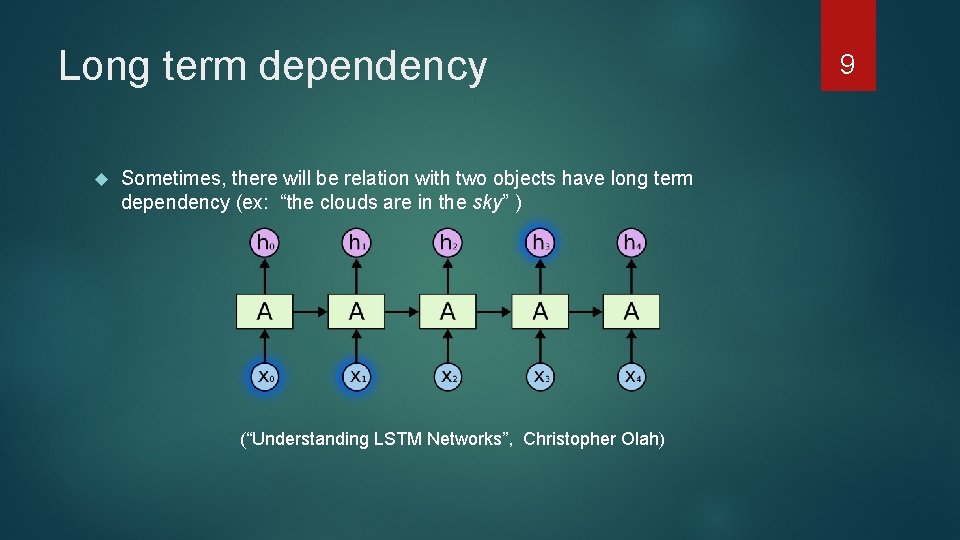

Long-term dependency
Sometimes two elements of a sequence depend on each other across a gap (e.g. predicting “sky” in “the clouds are in the sky”).
(“Understanding LSTM Networks”, Christopher Olah)

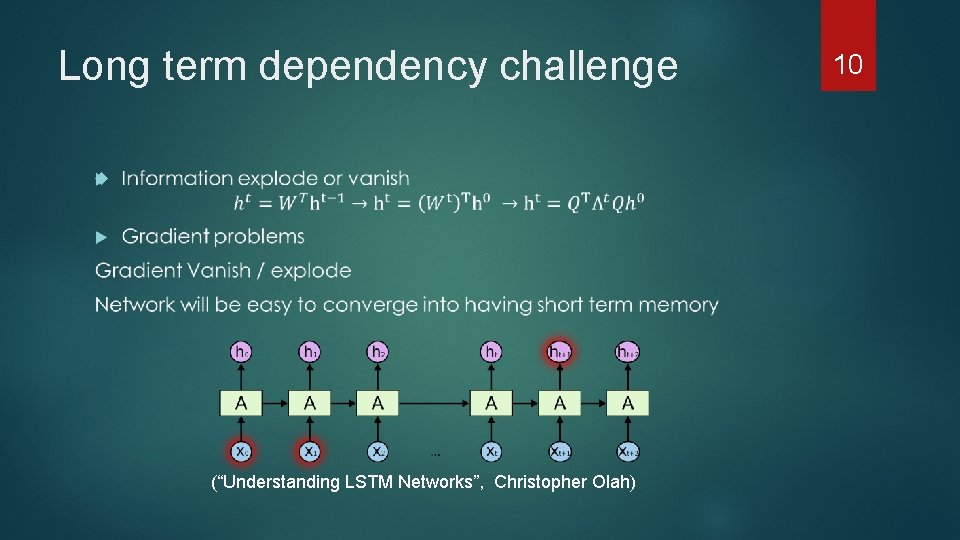

Long-term dependency challenge (“Understanding LSTM Networks”, Christopher Olah)

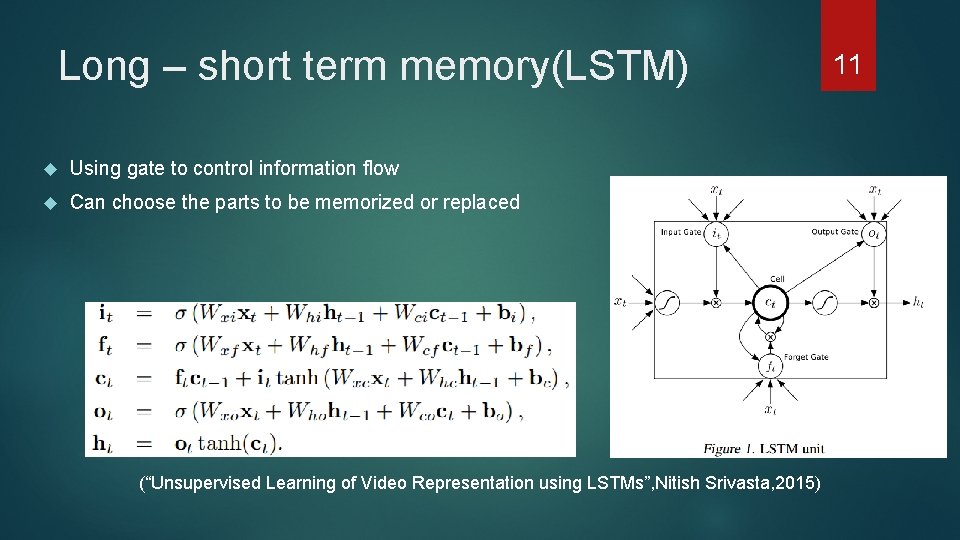

Long short-term memory (LSTM)
- Uses gates to control the information flow
- Can choose which parts to memorize or replace
(“Unsupervised Learning of Video Representations using LSTMs”, Nitish Srivastava, 2015)

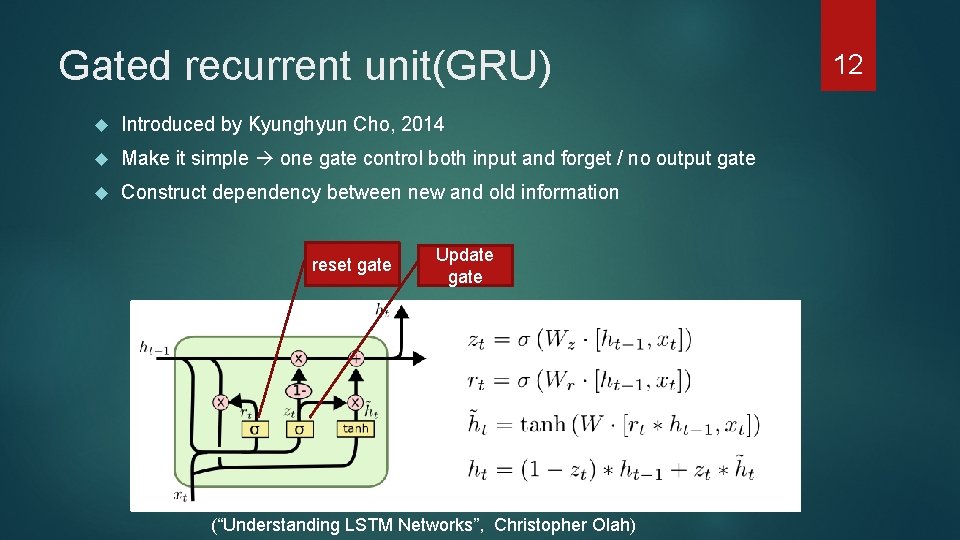

Gated recurrent unit (GRU)
- Introduced by Kyunghyun Cho, 2014
- Simpler than LSTM: one gate controls both input and forget; there is no output gate
- Constructs a dependency between new and old information
- Gates: reset gate and update gate
(“Understanding LSTM Networks”, Christopher Olah)
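For reference, the GRU update can be sketched in the notation of the cited blog post. The weight names $W_z$, $W_r$, $W$ and the omission of bias terms follow that post and are not taken from the slide; other sources swap the roles of $z_t$ and $1 - z_t$.

```latex
\begin{aligned}
z_t &= \sigma\left(W_z \cdot [h_{t-1}, x_t]\right) && \text{(update gate)} \\
r_t &= \sigma\left(W_r \cdot [h_{t-1}, x_t]\right) && \text{(reset gate)} \\
\tilde{h}_t &= \tanh\left(W \cdot [r_t \odot h_{t-1}, x_t]\right) \\
h_t &= (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t
\end{aligned}
```

The single gate $z_t$ decides both how much old state to keep and how much new candidate state to write, and $h_t$ is exposed directly because there is no output gate.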

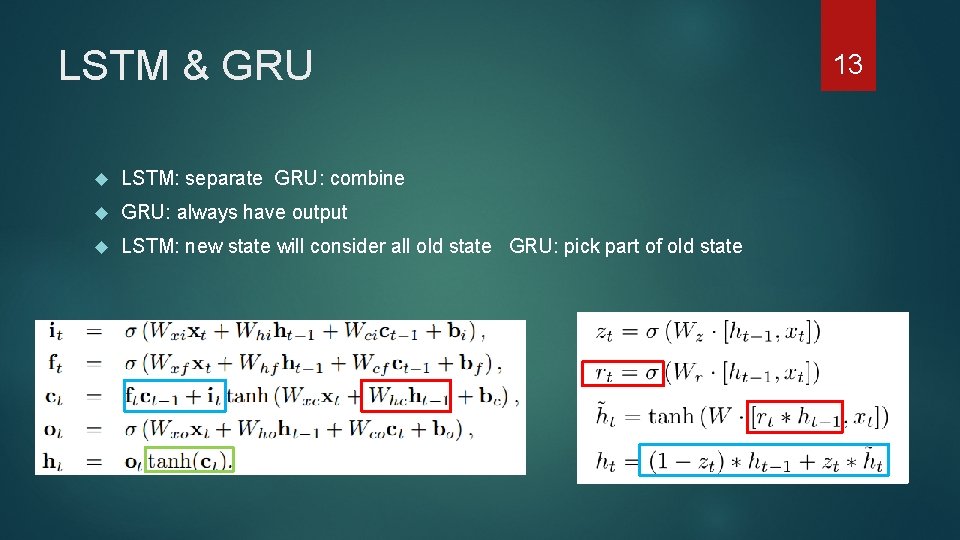

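LSTM & GRU
- Input and forget control: separate gates in LSTM, combined into one gate in GRU
- Output: GRU always exposes its state as output (no output gate)
- New state: LSTM considers all of the old state; GRU picks part of the old state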

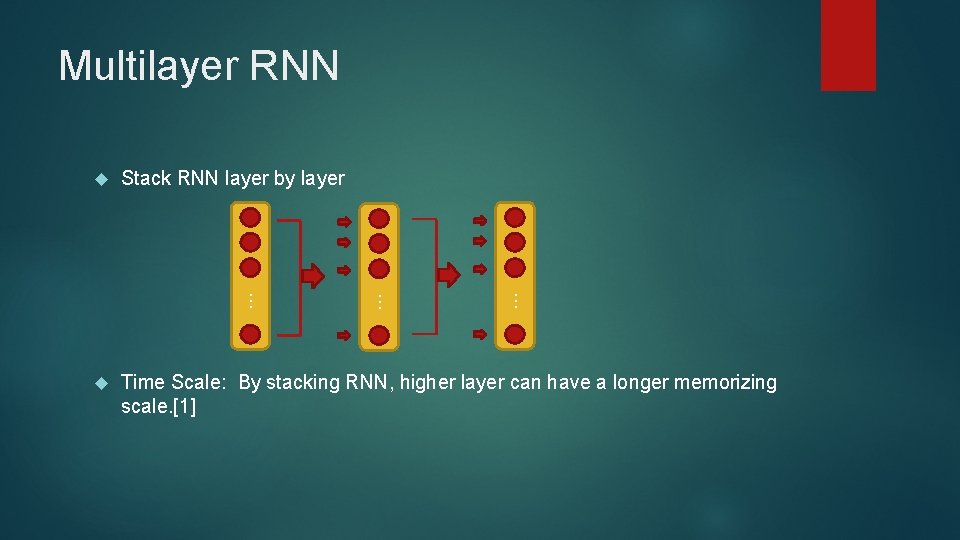

Multilayer RNN
- Stack RNNs layer by layer
- Time scale: with stacking, higher layers can have a longer memory time scale [1]

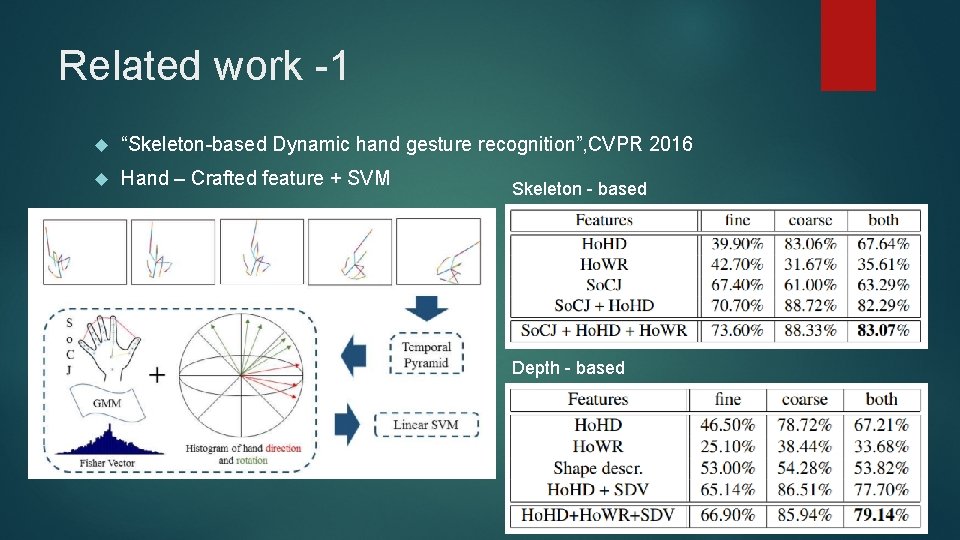

Related work 1
“Skeleton-based Dynamic hand gesture recognition”, CVPR 2016
- Hand-crafted features + SVM
- Skeleton-based / depth-based

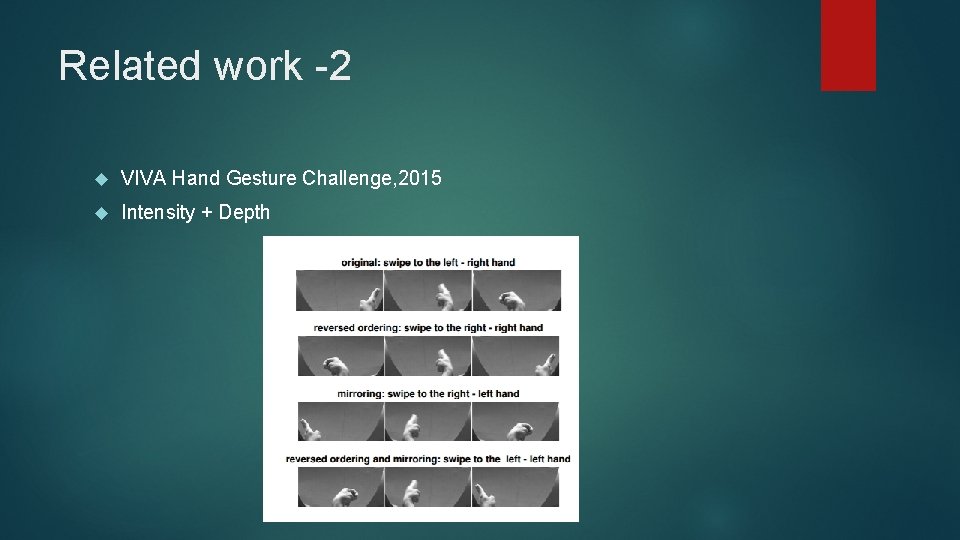

Related work 2
VIVA Hand Gesture Challenge, 2015
- Intensity + depth

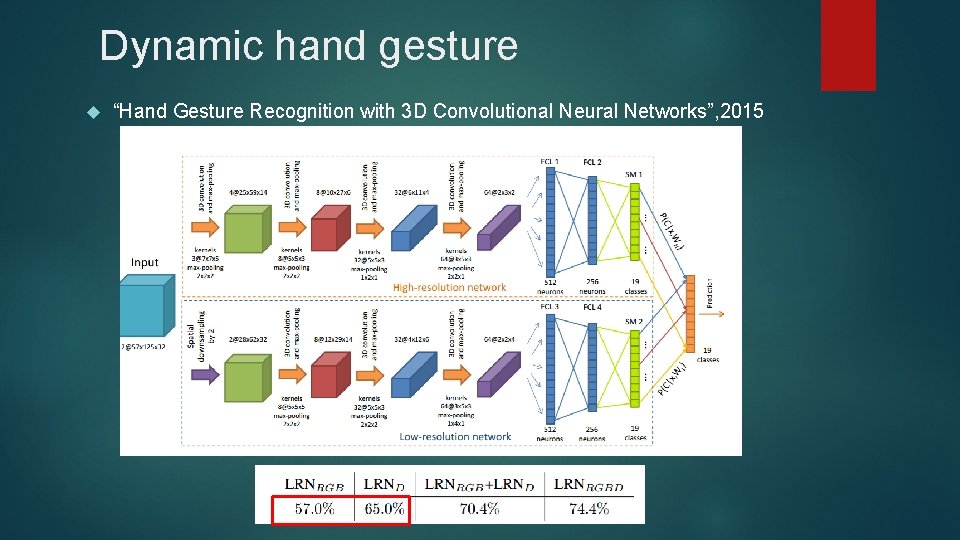

Dynamic hand gesture: “Hand Gesture Recognition with 3D Convolutional Neural Networks”, 2015

Related work 3
Dynamic hand gesture, continuous/weakly segmented: NVIDIA Dynamic Hand Gesture Dataset, 2016

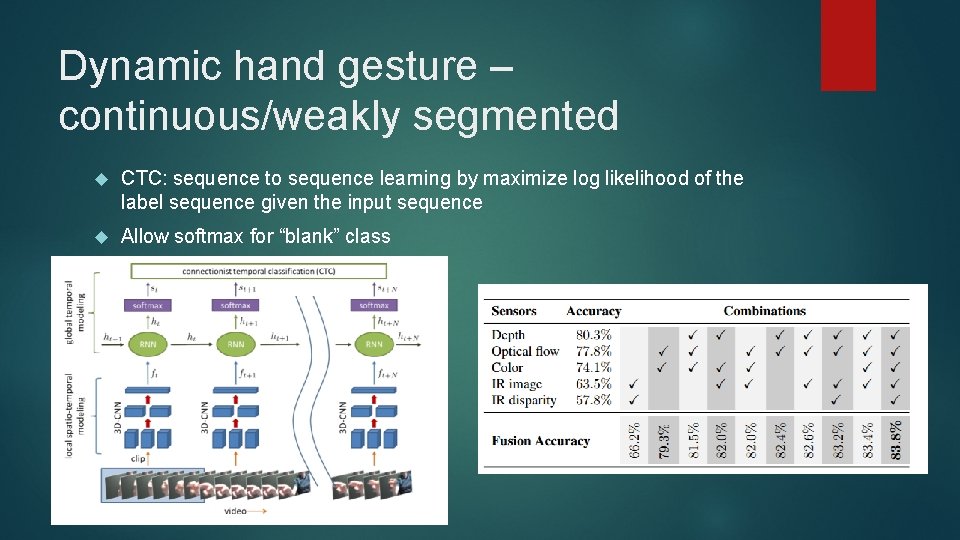

Dynamic hand gesture, continuous/weakly segmented
- CTC: sequence-to-sequence learning that maximizes the log-likelihood of the label sequence given the input sequence
- The softmax output includes an additional “blank” class

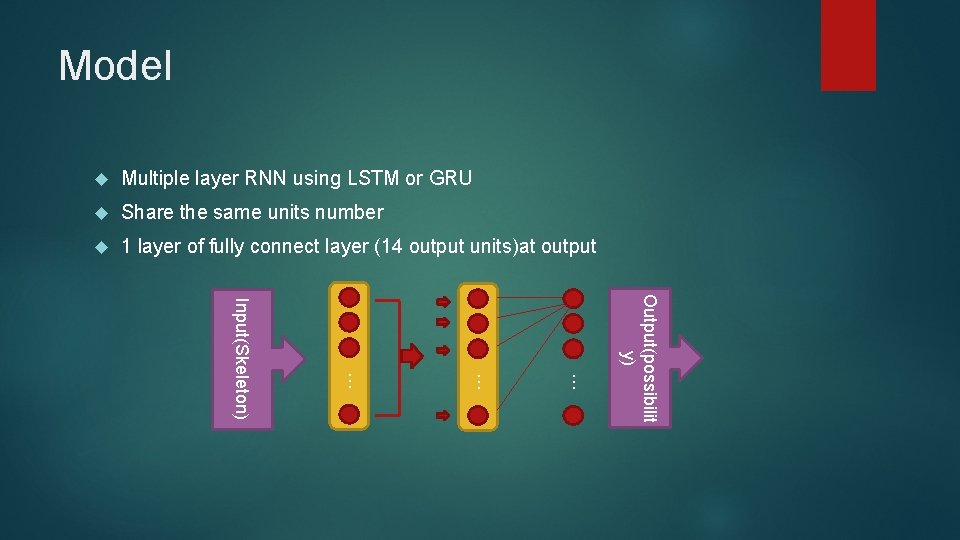

Model
- Multilayer RNN using LSTM or GRU
- All recurrent layers share the same number of units
- One fully connected layer (14 output units) at the output
- Input: skeleton sequence; output: class probabilities

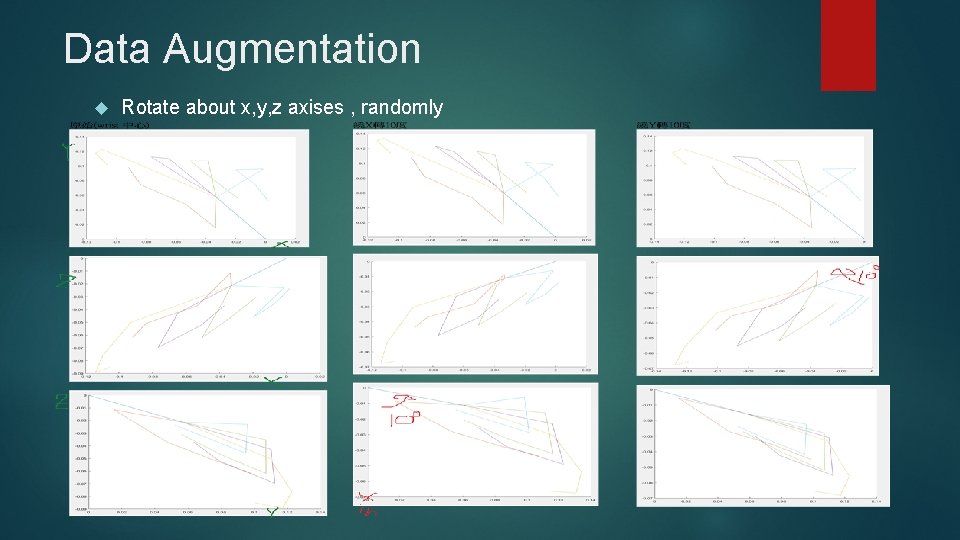

Data augmentation
- Rotate randomly about the x, y, and z axes
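A minimal sketch of such a rotation augmentation, assuming a sequence is stored as a list of frames and each frame as a list of `(x, y, z)` joints. The function names, the composition order of the three rotations, and the `max_angle` range are illustrative assumptions; the slides do not state them.

```python
import math
import random

def rotation_matrix(ax, ay, az):
    """Return the 3x3 matrix Rz(az) @ Ry(ay) @ Rx(ax), angles in radians."""
    cx, sx = math.cos(ax), math.sin(ax)
    cy, sy = math.cos(ay), math.sin(ay)
    cz, sz = math.cos(az), math.sin(az)
    rx = [[1, 0, 0], [0, cx, -sx], [0, sx, cx]]
    ry = [[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]]
    rz = [[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]]

    def matmul(a, b):
        return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
                for i in range(3)]

    return matmul(rz, matmul(ry, rx))

def rotate_sequence(seq, max_angle=math.pi / 12, rng=random):
    """Apply one random rotation to every joint of every frame.

    max_angle is an assumed range, not a value from the slides.
    """
    angles = [rng.uniform(-max_angle, max_angle) for _ in range(3)]
    r = rotation_matrix(*angles)
    return [[tuple(sum(r[i][k] * joint[k] for k in range(3)) for i in range(3))
             for joint in frame]
            for frame in seq]
```

Using one rotation per sequence (rather than per frame) keeps the motion itself unchanged and only varies the viewpoint.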

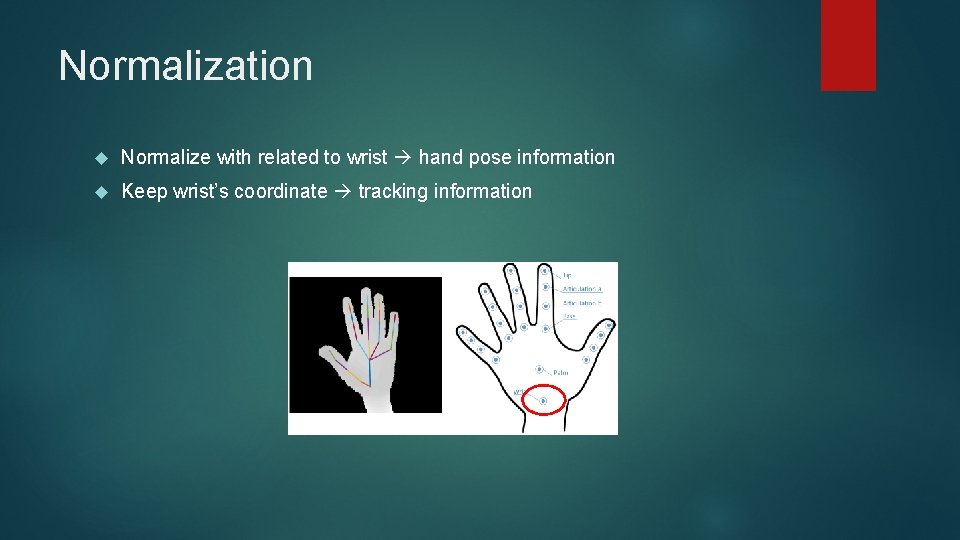

Normalization
- Normalize the hand pose information relative to the wrist
- Keep the wrist's coordinates as tracking information
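One way to read this step is sketched below: each frame is split into the wrist position (kept for tracking) and the joints expressed relative to the wrist (the pose). The function name and `wrist_index=0` are assumptions about the data layout, not details given in the slides.

```python
def normalize_to_wrist(frame, wrist_index=0):
    """Split one frame into (wrist position, joints relative to the wrist).

    frame: list of (x, y, z) joints.
    wrist_index=0 is an assumed joint ordering.
    """
    wx, wy, wz = frame[wrist_index]
    relative = [(x - wx, y - wy, z - wz) for (x, y, z) in frame]
    return (wx, wy, wz), relative
```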

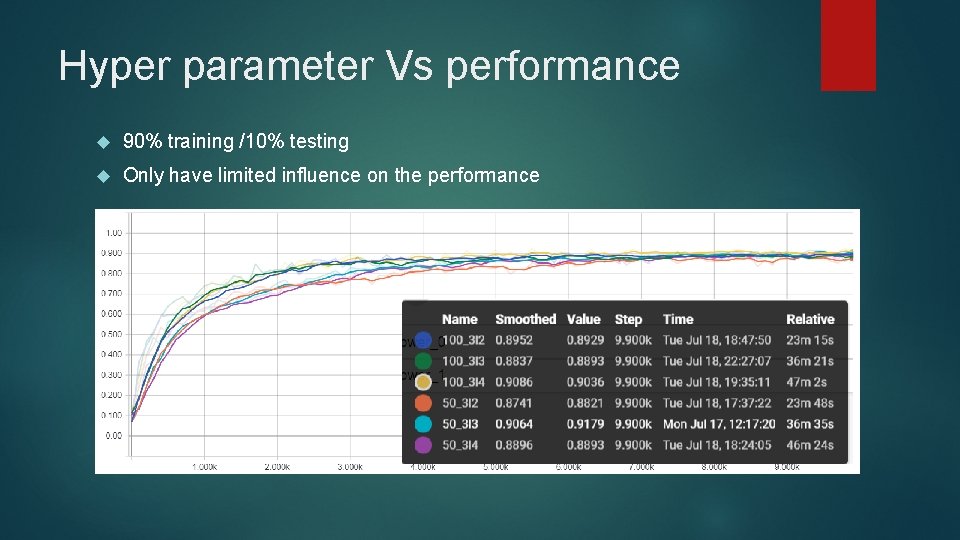

Hyperparameters vs. performance
- 90% training / 10% testing
- Hyperparameters have only a limited influence on performance

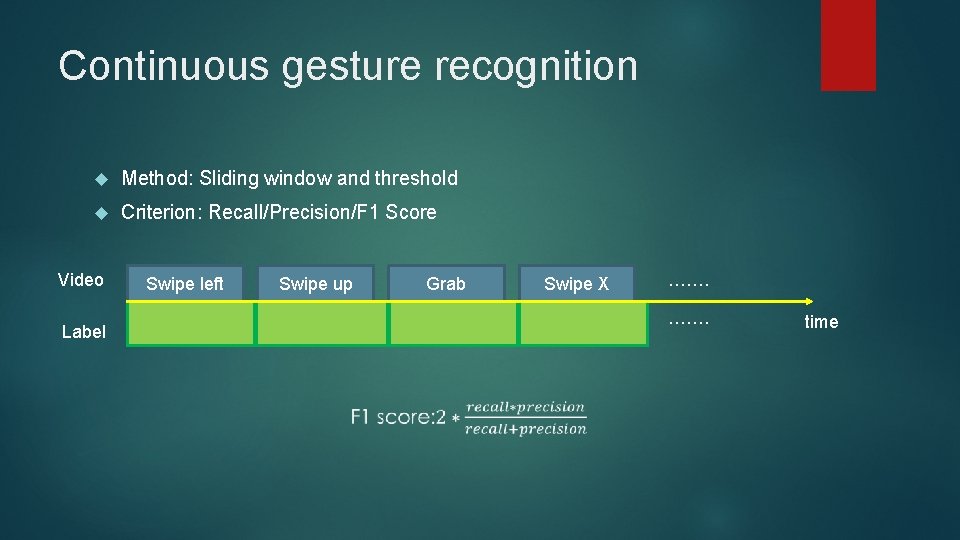

Continuous gesture recognition
- Method: sliding window and threshold
- Criteria: recall / precision / F1 score
- Example label timeline of a video: swipe left, swipe up, grab, swipe X, ...
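The sliding-window-and-threshold method can be sketched as follows. `classify` stands in for the trained RNN and is a hypothetical callable that maps a list of frames to per-class probabilities; `stride` and the return format are likewise illustrative choices.

```python
def sliding_window_detect(frames, classify, window, threshold, stride=1):
    """Return (start, end, label) for each window whose top score passes threshold.

    classify: hypothetical callable, list of frames -> list of class probabilities.
    """
    detections = []
    for start in range(0, len(frames) - window + 1, stride):
        probs = classify(frames[start:start + window])
        label = max(range(len(probs)), key=probs.__getitem__)
        if probs[label] >= threshold:
            detections.append((start, start + window, label))
    return detections
```

Because the window length is fixed while gestures vary in length, a window can cover only part of a gesture, which is one way a sub-gesture can trigger a false alarm.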

Continuous gesture recognition
- 12 sequences, each containing 4 to 8 gestures
- Total possible combinations: 14 × 14 = 196; only about 50 cases are covered so far
- Skeleton input only

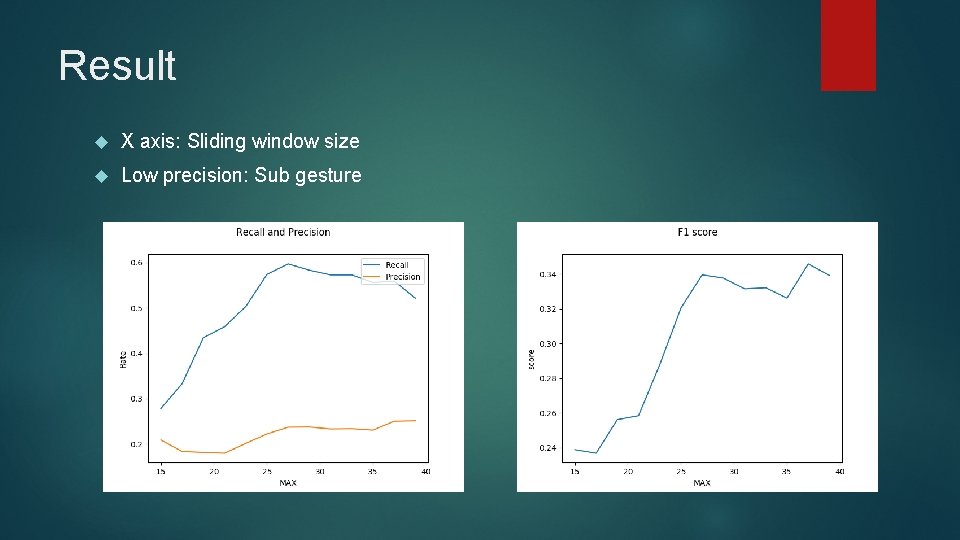

Result
- X axis: sliding window size
- Low precision: caused by sub-gestures

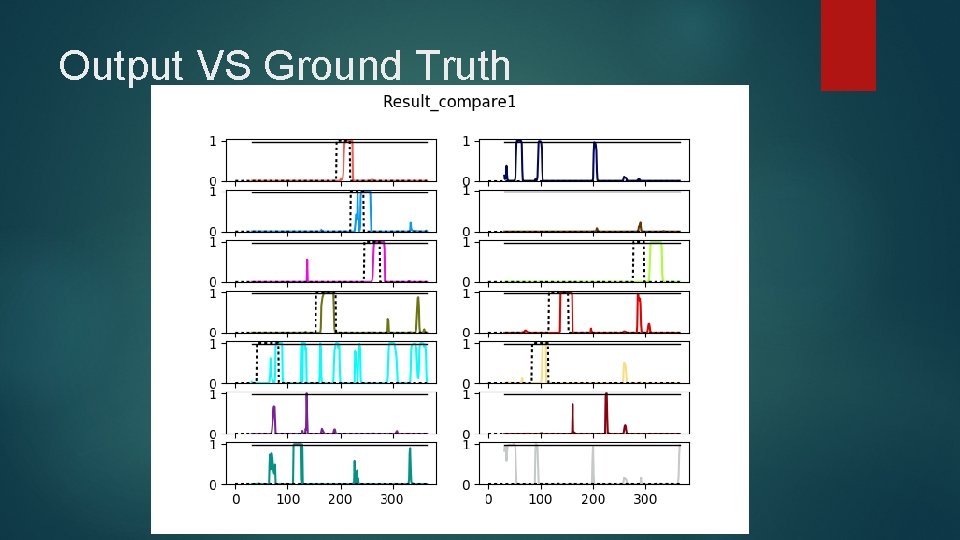

Output VS Ground Truth

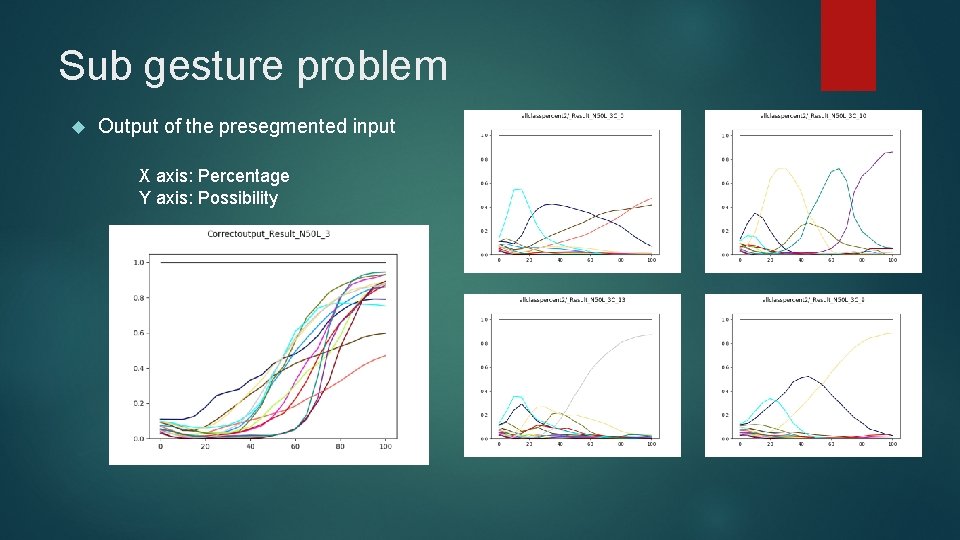

Sub-gesture problem
- Plot: output for a presegmented input
- X axis: percentage
- Y axis: probability

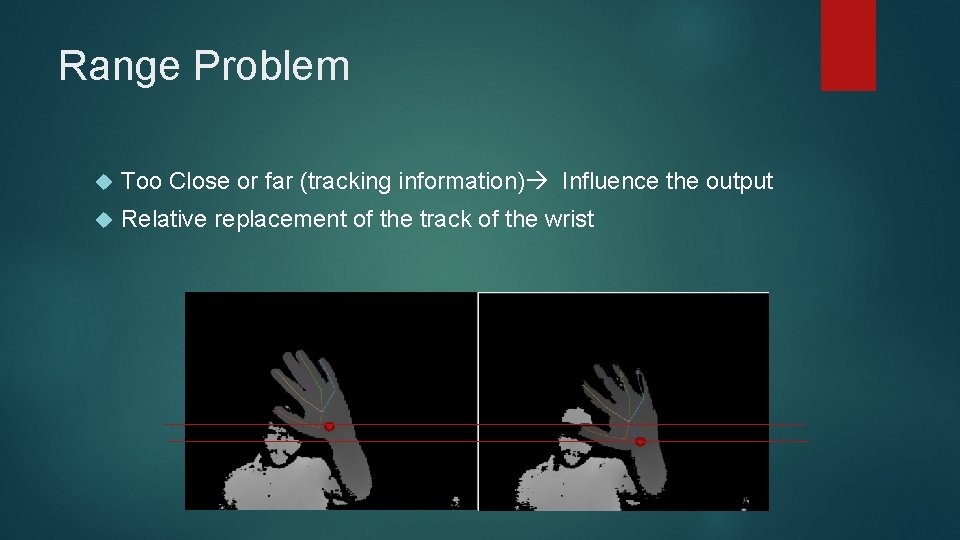

Range problem
- A hand that is too close or too far changes the tracking information and influences the output
- Remedy: use the relative displacement of the wrist track
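A minimal sketch of one possible reading of "relative displacement": the frame-to-frame difference of the wrist position. The slides do not define it precisely, so this interpretation, the function name, and the zero displacement for the first frame are assumptions.

```python
def relative_displacement(wrist_track):
    """Convert absolute wrist positions into frame-to-frame displacements.

    wrist_track: list of (x, y, z), one per frame.
    The first frame has no predecessor, so it gets (0.0, 0.0, 0.0).
    """
    if not wrist_track:
        return []
    out = [(0.0, 0.0, 0.0)]
    for prev, cur in zip(wrist_track, wrist_track[1:]):
        out.append(tuple(c - p for c, p in zip(cur, prev)))
    return out
```

Under this reading, shifting the whole track by a constant offset leaves the features unchanged, so the absolute distance of the hand from the camera no longer enters the input.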

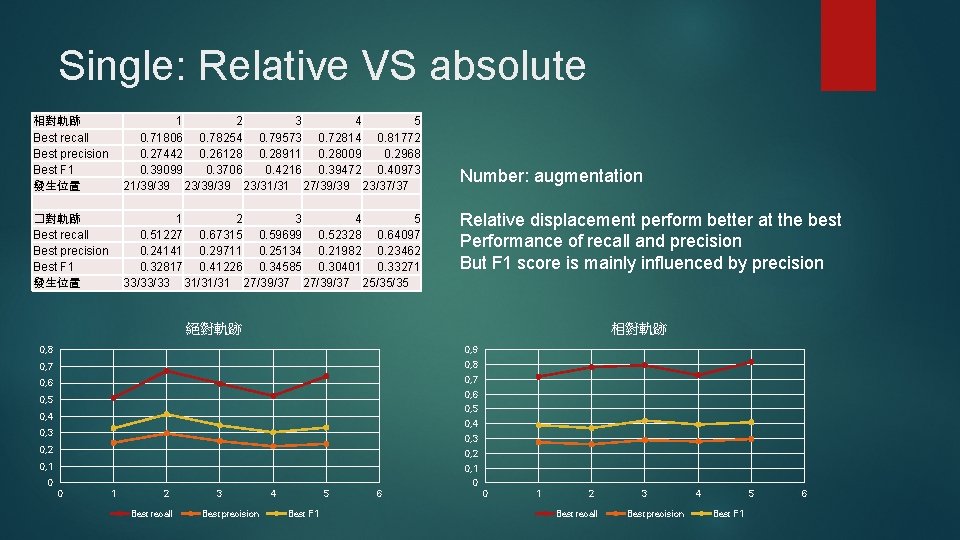

Single: relative vs. absolute

Relative trajectory

| | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Best recall | 0.71806 | 0.78254 | 0.79573 | 0.72814 | 0.81772 |
| Best precision | 0.27442 | 0.26128 | 0.28911 | 0.28009 | 0.2968 |
| Best F1 | 0.39099 | 0.3706 | 0.4216 | 0.39472 | 0.40973 |

Position of occurrence (as listed): 21/39/39, 23/31/31, 27/39/39, 23/37/37

Absolute trajectory

| | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Best recall | 0.51227 | 0.67315 | 0.59699 | 0.52328 | 0.64097 |
| Best precision | 0.24141 | 0.29711 | 0.25134 | 0.21982 | 0.23462 |
| Best F1 | 0.32817 | 0.41226 | 0.34585 | 0.30401 | 0.33271 |

Position of occurrence (as listed): 33/33/33, 31/31/31, 27/39/37, 25/35/35

Column number: augmentation

- Relative displacement performs better in its best recall and precision
- But the F1 score is mainly influenced by precision

[Chart: best recall, best precision, and best F1 for the absolute and relative trajectories]

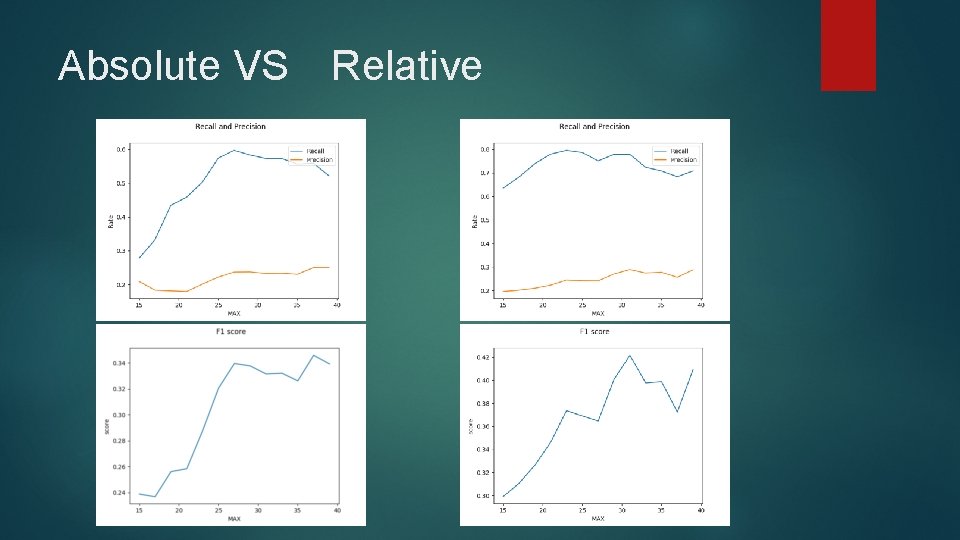

Absolute VS Relative

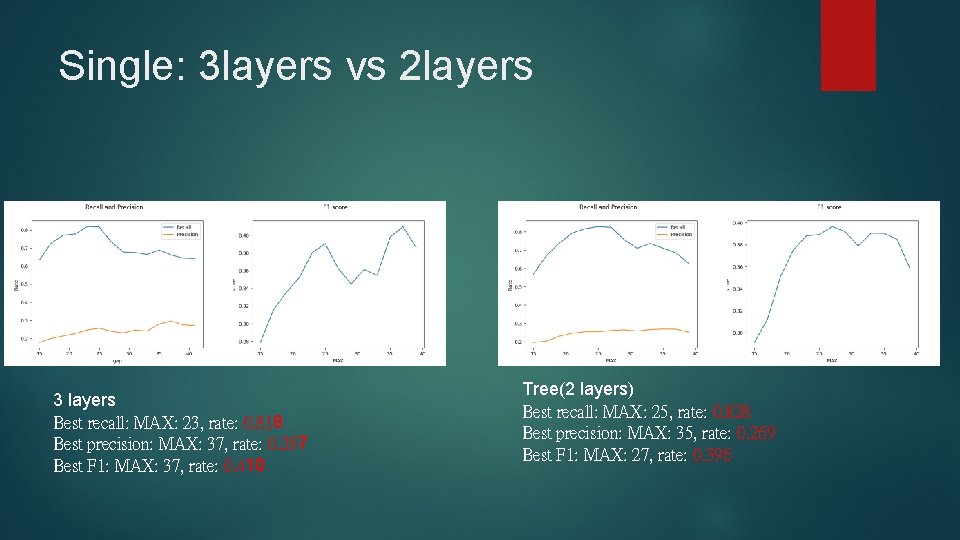

Single: 3 layers vs. 2 layers
- 3 layers
  - Best recall: MAX: 23, rate: 0.818
  - Best precision: MAX: 37, rate: 0.297
  - Best F1: MAX: 37, rate: 0.410
- Tree (2 layers)
  - Best recall: MAX: 25, rate: 0.828
  - Best precision: MAX: 35, rate: 0.269
  - Best F1: MAX: 27, rate: 0.396

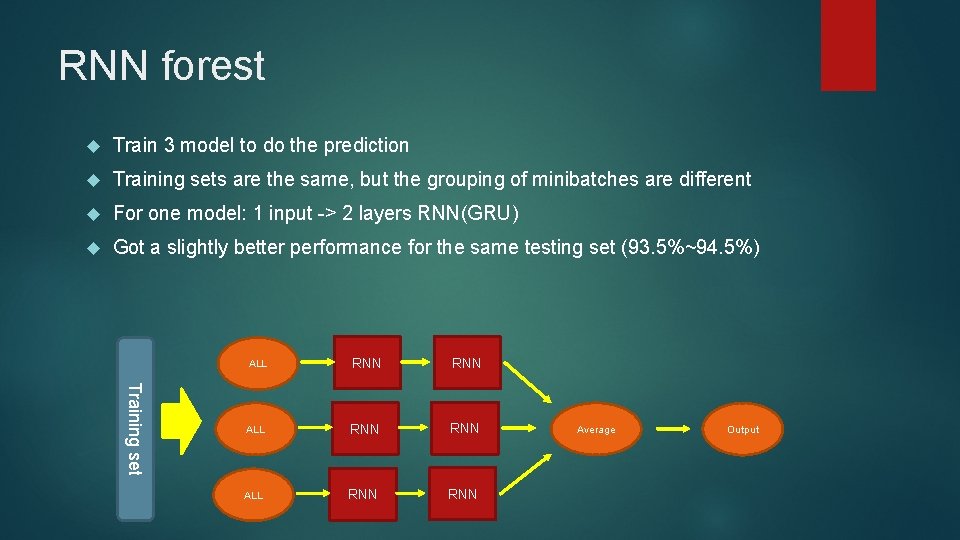

RNN forest
- Train 3 models to do the prediction
- The training set is the same for all models, but the grouping into minibatches differs
- Each model: 1 input -> 2-layer RNN (GRU)
- The outputs of the models are averaged
- Slightly better performance on the same testing set (93.5% ~ 94.5%)
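The averaging step of such an ensemble can be sketched as below, assuming each model returns one probability per class. The function name is illustrative.

```python
def average_probabilities(model_outputs):
    """Average per-class probabilities from several models.

    model_outputs: one equal-length probability list per model.
    """
    n_models = len(model_outputs)
    return [sum(column) / n_models for column in zip(*model_outputs)]
```

For example, `average_probabilities([[0.5, 0.5], [1.0, 0.0], [0.0, 1.0]])` gives `[0.5, 0.5]`.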

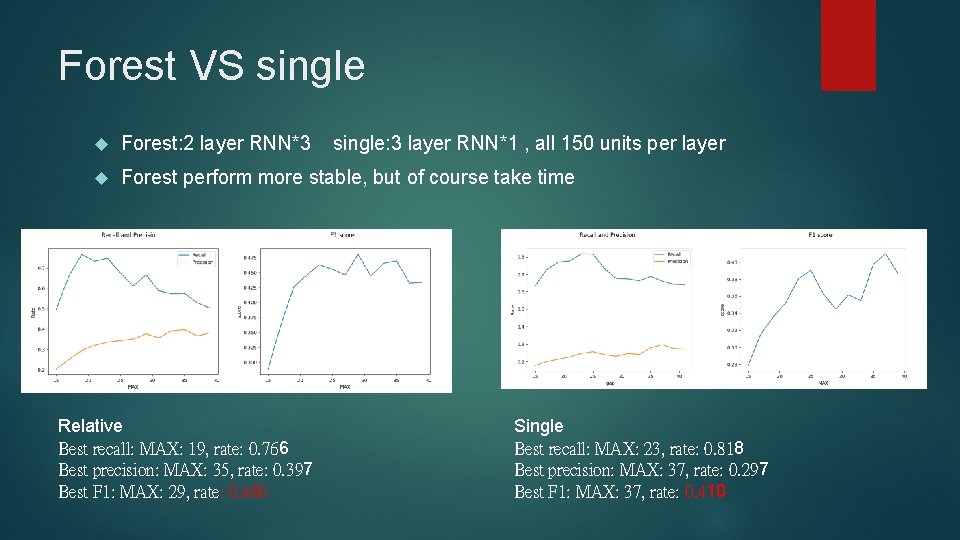

Forest vs. single
- Forest: 3 × 2-layer RNN. The forest performs more stably, but takes more time.
- Single: 1 × 3-layer RNN, 150 units in every layer
- Forest (relative)
  - Best recall: MAX: 19, rate: 0.766
  - Best precision: MAX: 35, rate: 0.397
  - Best F1: MAX: 29, rate: 0.480
- Single
  - Best recall: MAX: 23, rate: 0.818
  - Best precision: MAX: 37, rate: 0.297
  - Best F1: MAX: 37, rate: 0.410

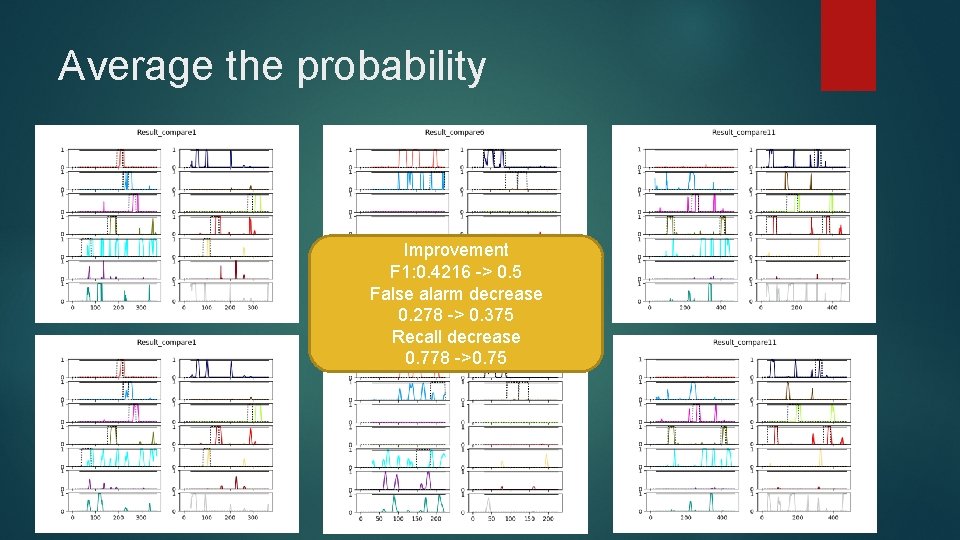

Average the probability
- Improvement in F1: 0.4216 -> 0.5
- False alarms decrease (precision 0.278 -> 0.375)
- Recall decreases: 0.778 -> 0.75
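As a quick check of how these figures relate, F1 is the harmonic mean of precision and recall. Reading the 0.278 -> 0.375 figures as precision is an assumption; under that reading, precision 0.375 with recall 0.75 reproduces the reported F1 of 0.5.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

print(f1_score(0.375, 0.75))  # prints 0.5
```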

Problems to solve
- Precision is still too low (sub-gesture problem)
- The model has to output a probability for every class at every time step
- It should output a definite result only at specific times

Sequence to Sequence labelling

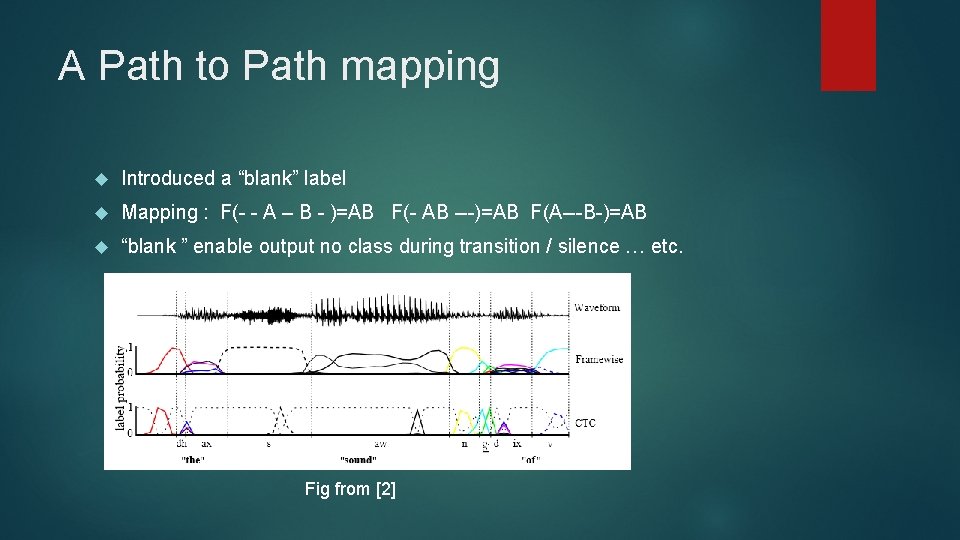

A path-to-path mapping
- Introduces a “blank” label
- Mapping F:
  - F(--A-B-) = AB
  - F(-AB---) = AB
  - F(A---B-) = AB
- “Blank” enables outputting no class during transitions, silence, etc.
- Fig. from [2]
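The mapping F can be sketched in a few lines. Following the definition in [2], repeated symbols are merged first and blanks are removed afterwards; the `-` character for blank and the function name are illustrative choices.

```python
from itertools import groupby

BLANK = "-"

def collapse(path, blank=BLANK):
    """Map a frame-level path to a label sequence: merge repeats, then drop blanks."""
    merged = [symbol for symbol, _ in groupby(path)]
    return "".join(s for s in merged if s != blank)
```

With this order of operations, a blank between two identical labels keeps them separate: `collapse("AA-A")` gives `"AA"`, while `collapse("AAA")` gives `"A"`.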

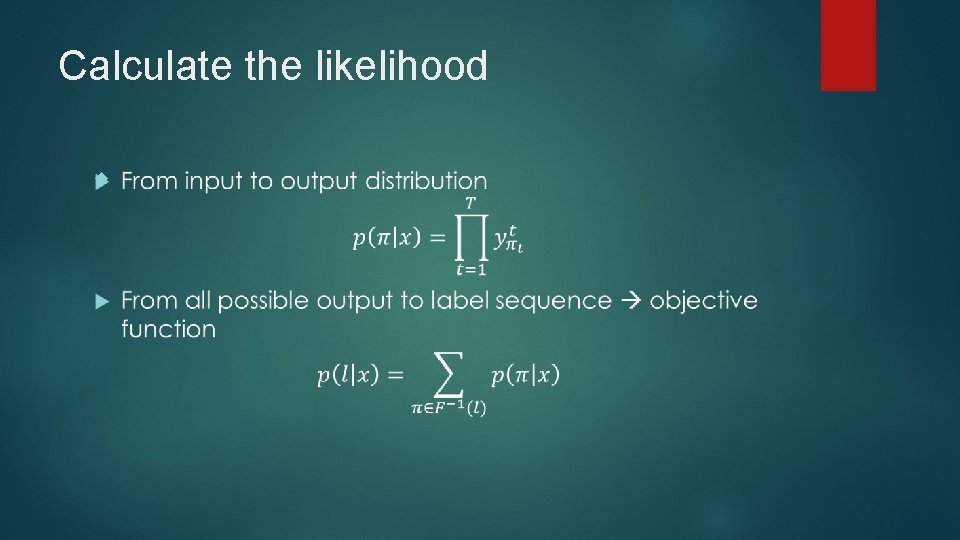

Calculate the likelihood

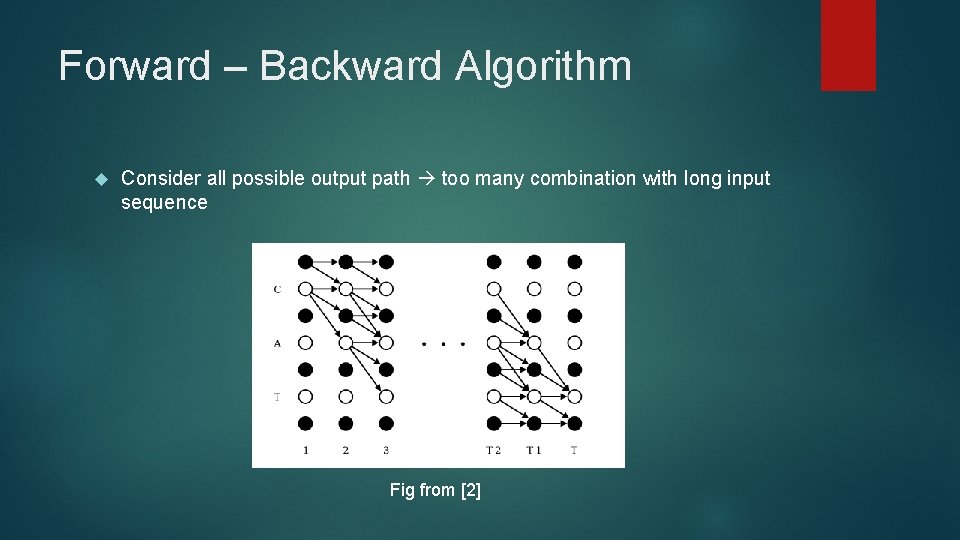
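In the formulation of [2], the likelihood of a label sequence is the sum over all frame-level paths that the mapping F collapses to it. The symbols below are that reference's notation and are assumed here: $y^t_k$ is the softmax output for class $k$ at time $t$, $\pi$ is a path of length $T$, and $l$ is the label sequence.

```latex
p(\pi \mid x) = \prod_{t=1}^{T} y^{t}_{\pi_t},
\qquad
p(l \mid x) = \sum_{\pi \,:\, F(\pi) = l} p(\pi \mid x),
\qquad
\mathcal{L} = -\ln p(l \mid x)
```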

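Forward-backward algorithm
- The likelihood has to consider all possible output paths
- Enumerating them directly yields too many combinations for a long input sequence, so the sum is computed with dynamic programming
- Fig. from [2]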
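A minimal sketch of the forward half of the algorithm, following the recursion in [2], next to a brute-force sum over all paths for comparison. The function names and the convention that class 0 is blank are assumptions; a practical implementation would work in log space to avoid underflow.

```python
from itertools import product

def ctc_forward(probs, labels, blank=0):
    """Likelihood p(labels | input) via the CTC forward recursion.

    probs[t][k]: softmax output for class k at time step t.
    labels: target class indices, without blanks.
    """
    ext = [blank]
    for label in labels:
        ext += [label, blank]          # blank-extended target, e.g. -a-b-
    T, S = len(probs), len(ext)
    alpha = [0.0] * S
    alpha[0] = probs[0][blank]
    if S > 1:
        alpha[1] = probs[0][ext[1]]
    for t in range(1, T):
        new = [0.0] * S
        for s in range(S):
            total = alpha[s]
            if s >= 1:
                total += alpha[s - 1]
            # A blank may be skipped only between two different labels.
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                total += alpha[s - 2]
            new[s] = total * probs[t][ext[s]]
        alpha = new
    return alpha[-1] + (alpha[-2] if S > 1 else 0.0)

def ctc_brute_force(probs, labels, blank=0):
    """Sum the probability of every path that collapses to labels (exponential in T)."""
    T, K = len(probs), len(probs[0])
    total = 0.0
    for path in product(range(K), repeat=T):
        merged = [p for i, p in enumerate(path) if i == 0 or p != path[i - 1]]
        if [p for p in merged if p != blank] == list(labels):
            p_path = 1.0
            for t, k in enumerate(path):
                p_path *= probs[t][k]
            total += p_path
    return total
```

The brute-force version visits K^T paths, while the recursion needs on the order of T × (2·len(labels) + 1) updates, which is why the dynamic-programming form is used for training.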

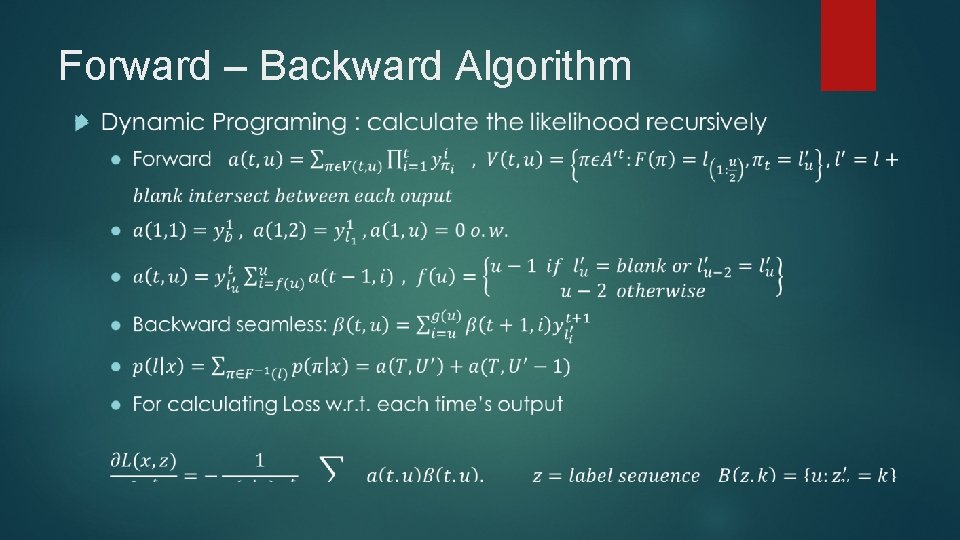

Forward – Backward Algorithm

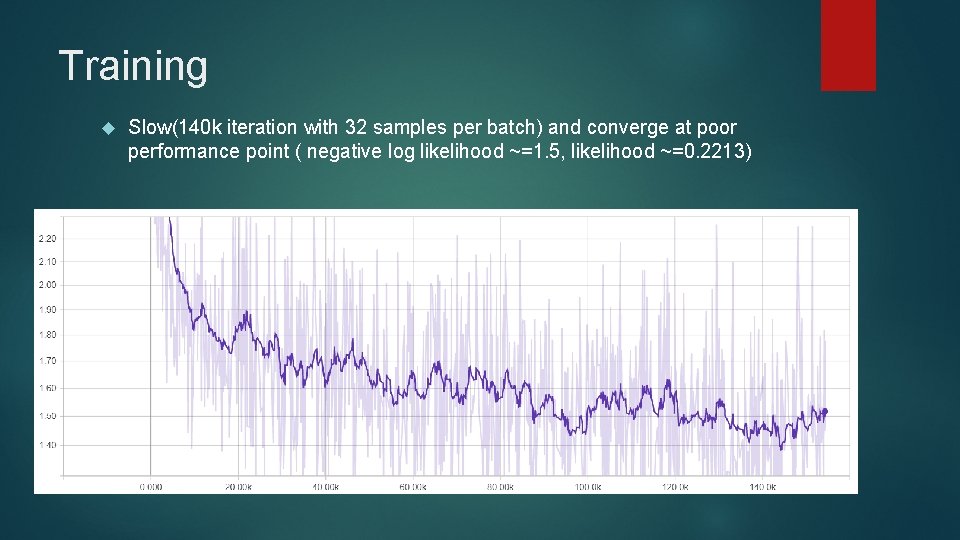

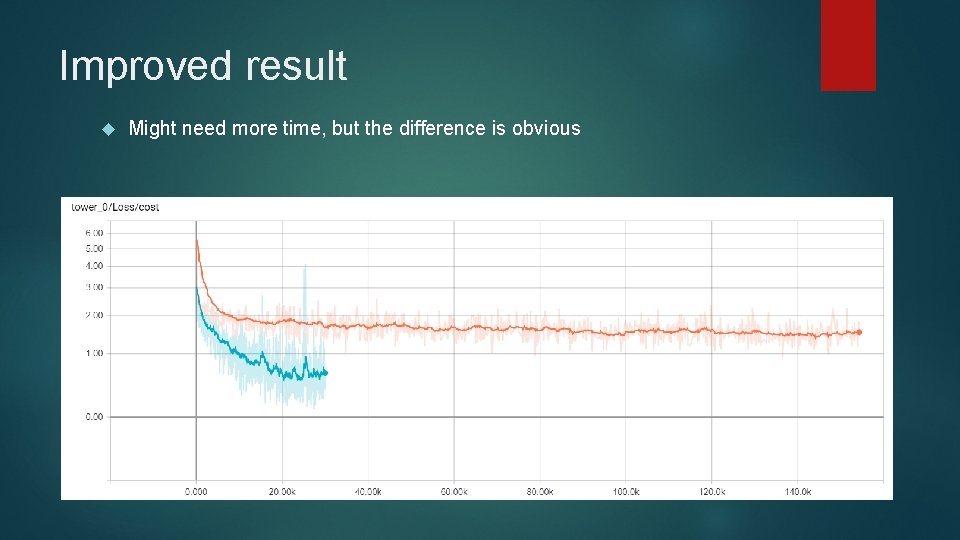

Training
- Slow (140k iterations with 32 samples per batch)
- Converges to a point with poor performance (negative log-likelihood ≈ 1.5, likelihood ≈ 0.2213)

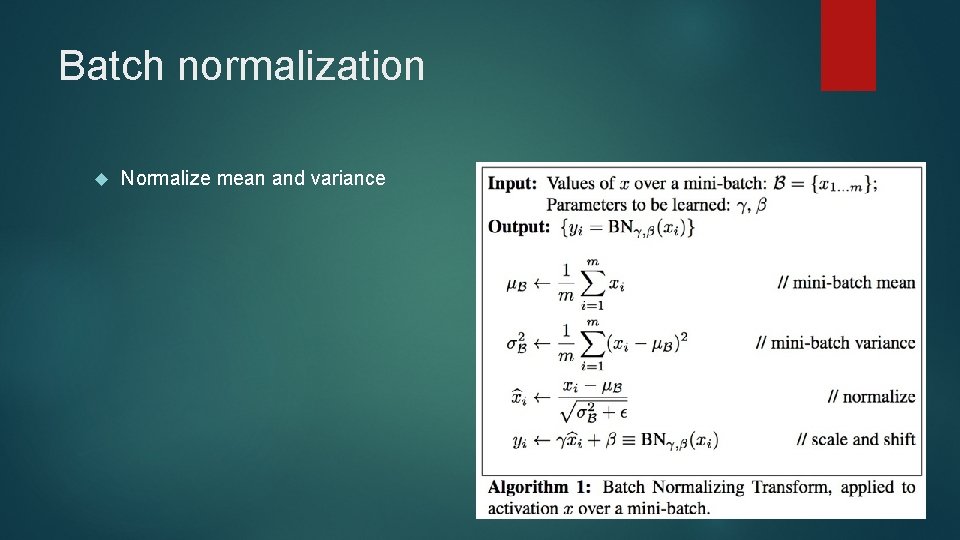

Batch normalization
- Normalize the mean and variance [5]
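A minimal training-mode sketch of the normalization in [5], written for a mini-batch stored as a list of feature lists. The slides do not say where in the network it was applied; `gamma` and `beta` are learnable per-feature parameters in the paper and are fixed scalars here for brevity.

```python
import math

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize each feature over the mini-batch, then scale and shift.

    batch: list of samples, each a list of feature values.
    """
    n = len(batch)
    out_columns = []
    for column in zip(*batch):
        mean = sum(column) / n
        var = sum((v - mean) ** 2 for v in column) / n
        out_columns.append([gamma * (v - mean) / math.sqrt(var + eps) + beta
                            for v in column])
    return [list(row) for row in zip(*out_columns)]
```

At inference time the paper replaces the batch statistics with running averages collected during training; that part is omitted from this sketch.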

Improved result
- Might need more training time, but the difference is obvious

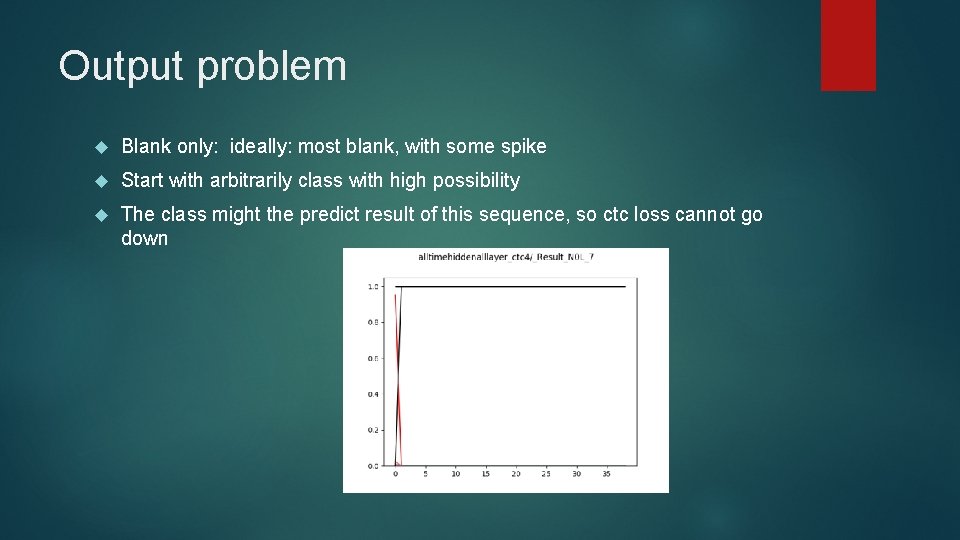

Output problem
- Blank only. Ideally the output is mostly blank, with some spikes.
- The output starts with an arbitrary class at high probability
- That class might be the predicted result for this sequence, so the CTC loss cannot go down

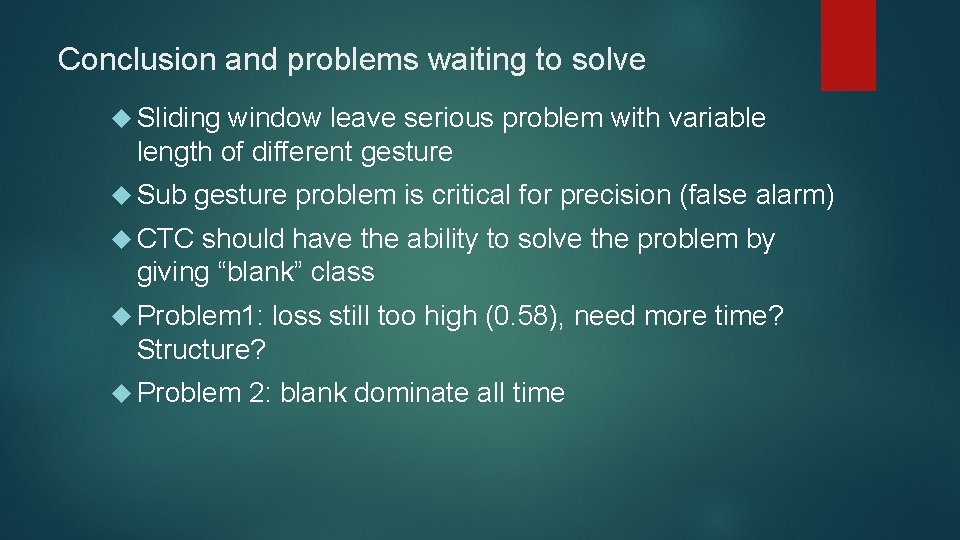

Conclusion and open problems
- The sliding window leaves a serious problem with the variable length of different gestures
- The sub-gesture problem is critical for precision (false alarms)
- CTC should be able to solve this problem through the “blank” class
- Problem 1: the loss is still too high (0.58). More training time? A different structure?
- Problem 2: blank dominates at all time steps

References
- [1] “Training and analyzing deep recurrent neural networks”
- [2] “Supervised Sequence Labelling with Recurrent Neural Networks”, Alex Graves
- [3] “Deep Learning”, Ian Goodfellow, Yoshua Bengio, and Aaron Courville, 2016
- [4] “Understanding LSTM Networks”, Christopher Olah
- [5] “Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift”, Sergey Ioffe, Christian Szegedy, 2015
