Single Path One-Shot NAS (SPOS)

- Slides: 35

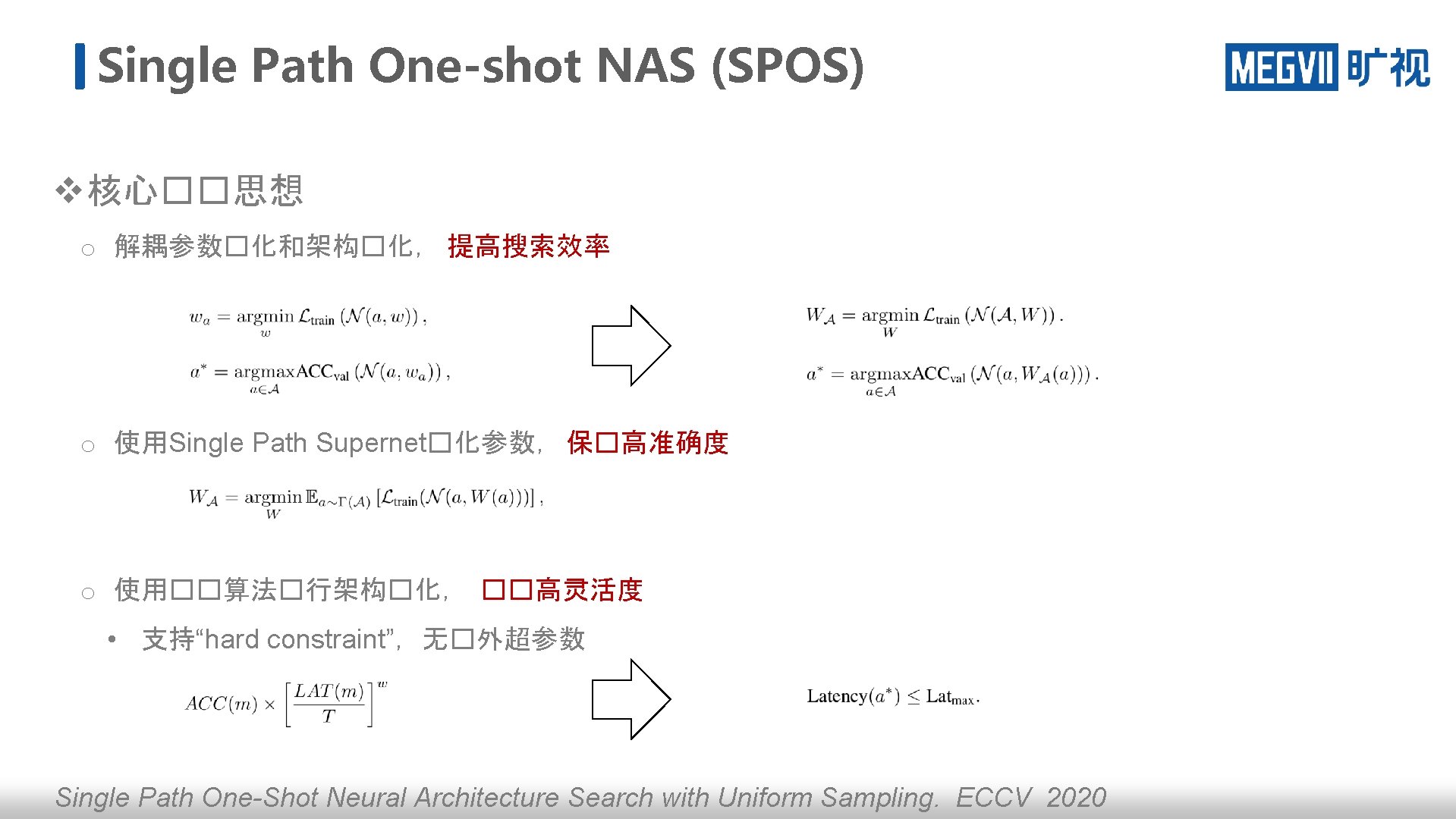

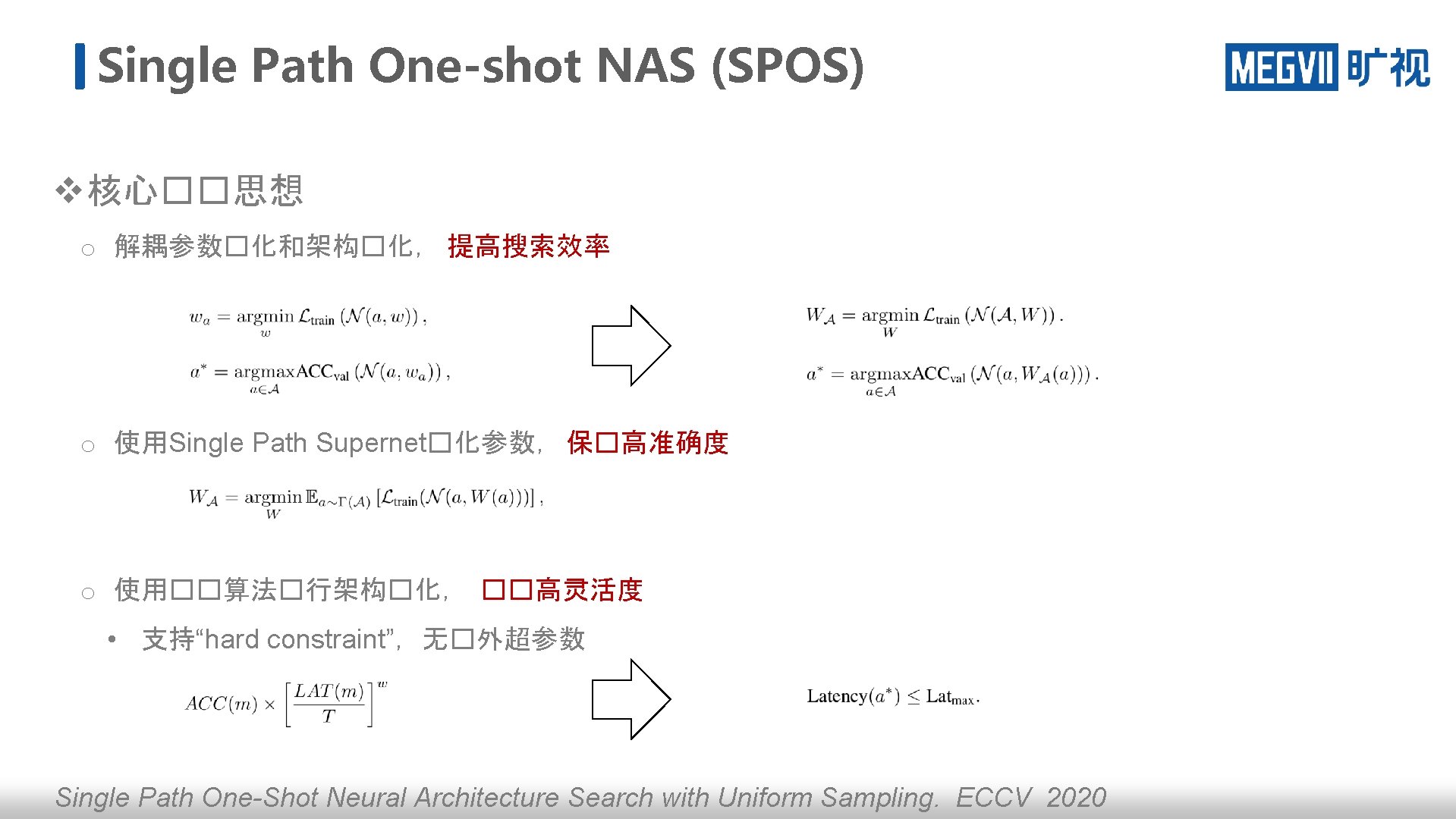

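Single Path One-Shot NAS (SPOS)

- Core idea
  - Decouple weight optimization from architecture optimization to improve search efficiency
  - Optimize the weights with a single-path supernet, which preserves high accuracy
  - Optimize the architecture with an evolutionary algorithm, which offers high flexibility
    - Supports hard constraints with no extra hyperparameters

Single Path One-Shot Neural Architecture Search with Uniform Sampling. ECCV 2020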
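The two decoupled stages can be sketched in a few lines of Python. This is a toy illustration, not the authors' implementation: the layer count, the per-choice costs, and `proxy_score` (a stand-in for validation accuracy measured with weights inherited from the supernet) are all assumptions made for the example. During supernet training, each iteration would call `sample_path` and update only the weights on that path; the search stage then evolves paths and simply rejects any candidate that violates the hard budget.

```python
import random

NUM_LAYERS = 6                    # hypothetical supernet depth
NUM_CHOICES = 4                   # hypothetical candidate blocks per layer
CHOICE_COST = [10, 20, 30, 40]    # hypothetical cost per choice (e.g. MFLOPs)


def sample_path(rng):
    """Uniformly sample one candidate block per layer (a single path)."""
    return tuple(rng.randrange(NUM_CHOICES) for _ in range(NUM_LAYERS))


def cost(path):
    """Total cost of a path, checked against the hard constraint."""
    return sum(CHOICE_COST[c] for c in path)


def proxy_score(path):
    """Toy stand-in for accuracy evaluated with inherited supernet weights."""
    return sum(path)


def evolutionary_search(budget, rng, population=20, generations=10, top_k=5):
    """Evolve paths; candidates over budget are rejected, never penalized."""
    def valid(path):
        return cost(path) <= budget

    def sample_valid():
        while True:
            path = sample_path(rng)
            if valid(path):
                return path

    pop = [sample_valid() for _ in range(population)]
    for _ in range(generations):
        parents = sorted(pop, key=proxy_score, reverse=True)[:top_k]
        children = []
        while len(children) < population - top_k:
            if rng.random() < 0.5:
                # Mutation: re-draw the choice at one random layer.
                child = list(rng.choice(parents))
                child[rng.randrange(NUM_LAYERS)] = rng.randrange(NUM_CHOICES)
                child = tuple(child)
            else:
                # Crossover: pick each layer's choice from one of two parents.
                a, b = rng.sample(parents, 2)
                child = tuple(rng.choice(pair) for pair in zip(a, b))
            if valid(child):
                children.append(child)
        pop = parents + children
    return max(pop, key=proxy_score)


if __name__ == "__main__":
    best = evolutionary_search(budget=150, rng=random.Random(0))
    print(best, cost(best))
```

Because the constraint is enforced by rejection, no trade-off weight between accuracy and cost has to be tuned, which is one way to read the "no extra hyperparameters" point on the slide.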

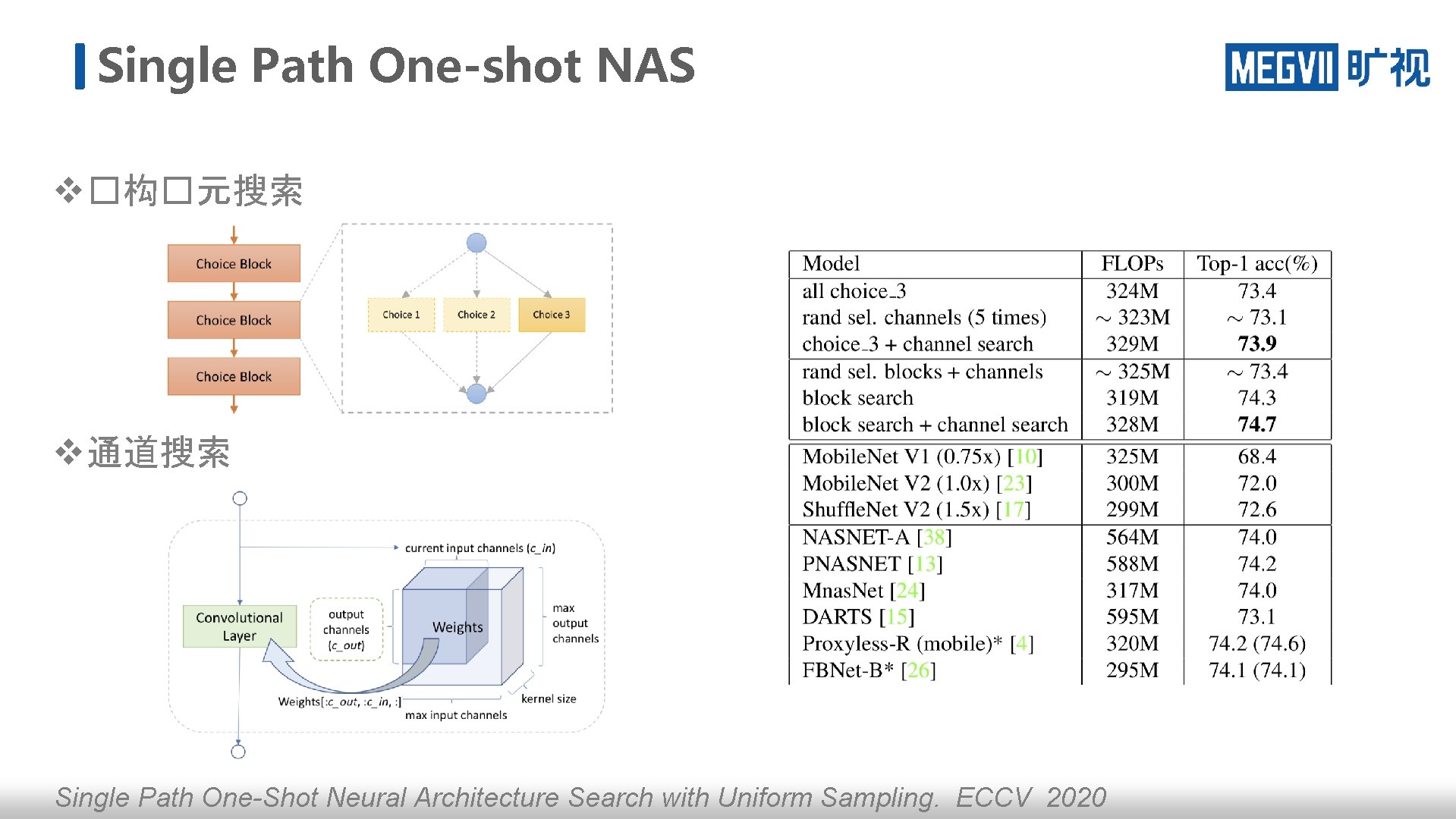

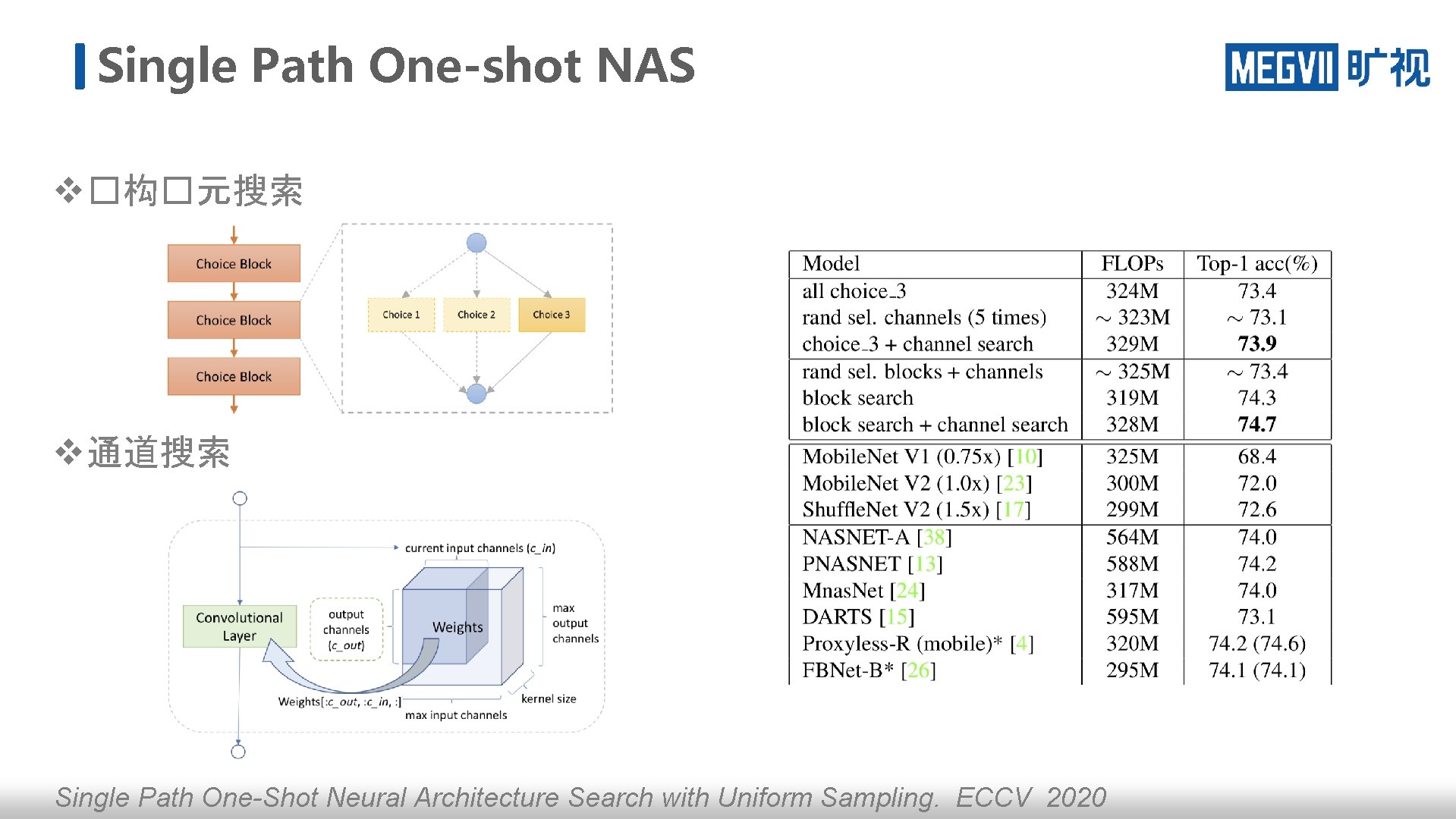

Single Path One-Shot NAS

- Building block search
- Channel search

Single Path One-Shot Neural Architecture Search with Uniform Sampling. ECCV 2020

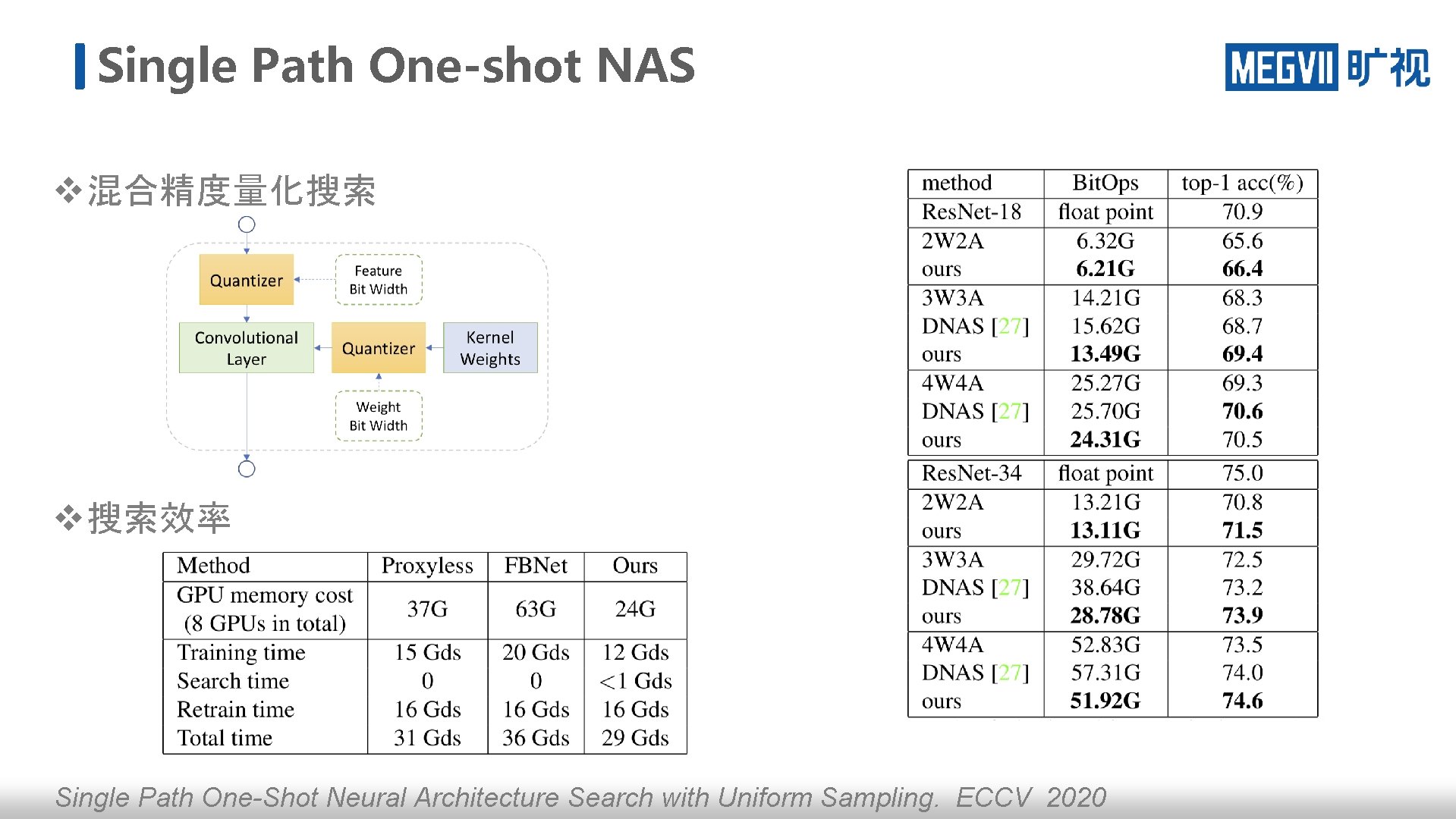

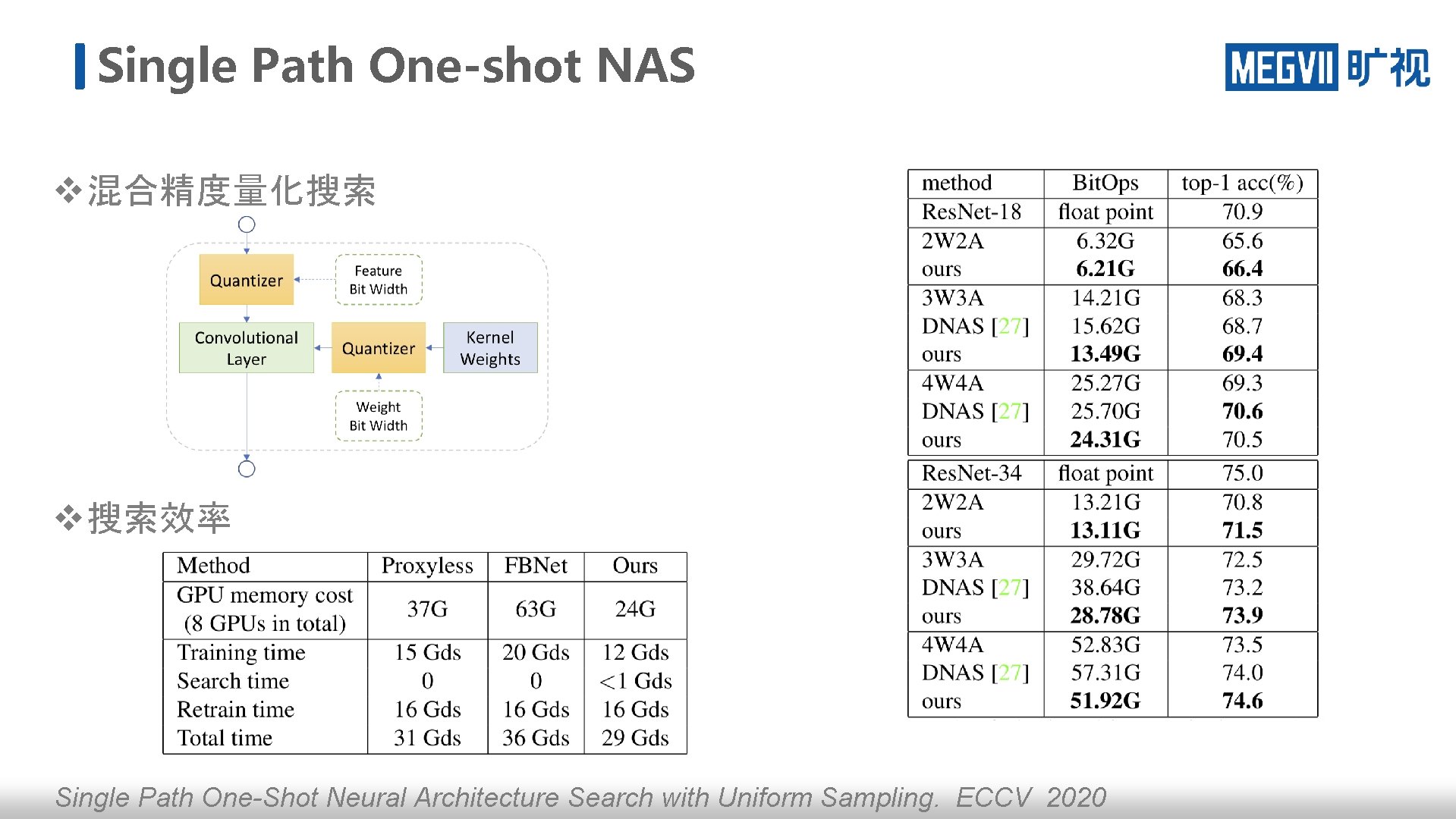

Single Path One-Shot NAS

- Mixed-precision quantization search
- Search efficiency

Single Path One-Shot Neural Architecture Search with Uniform Sampling. ECCV 2020

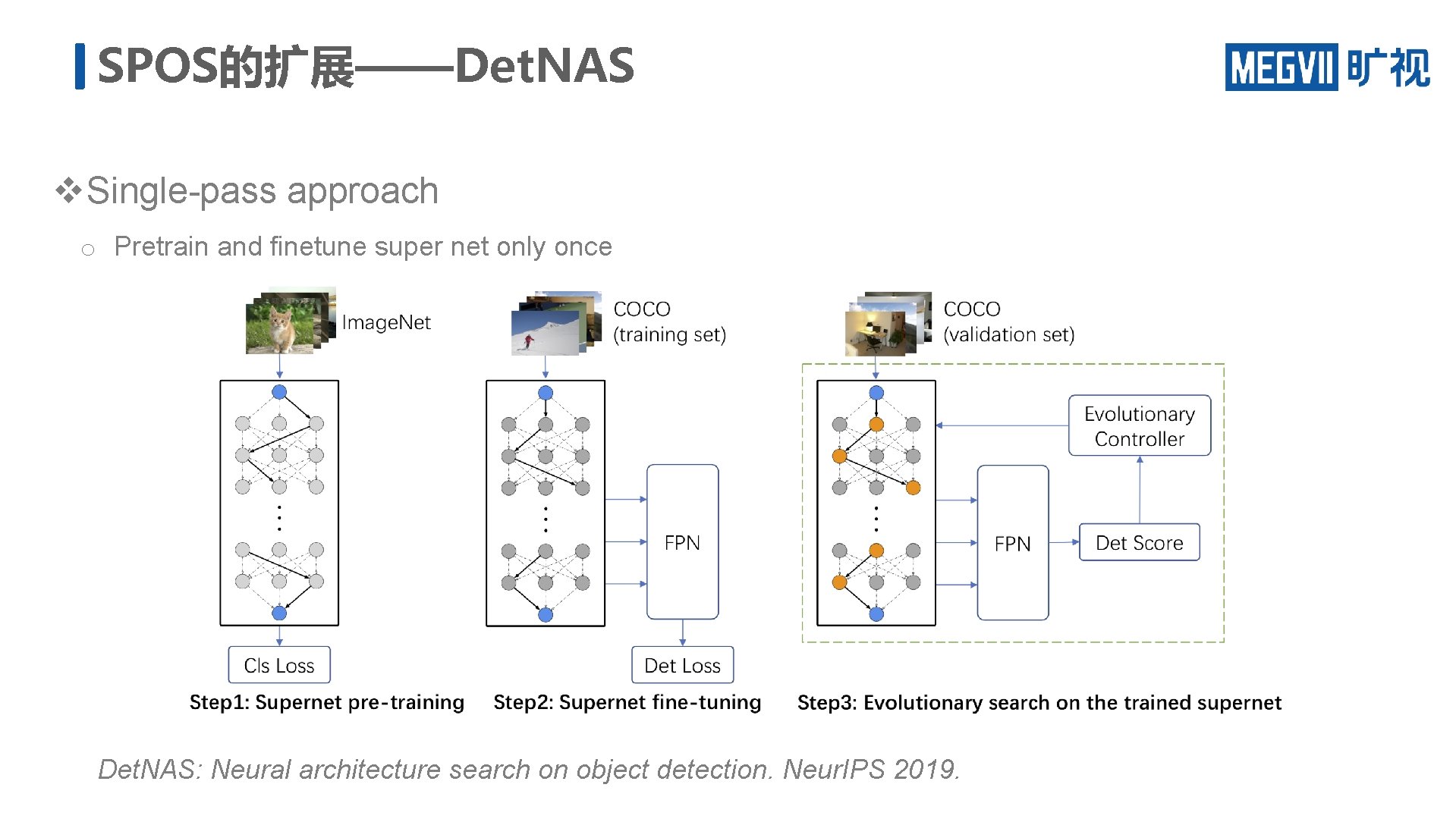

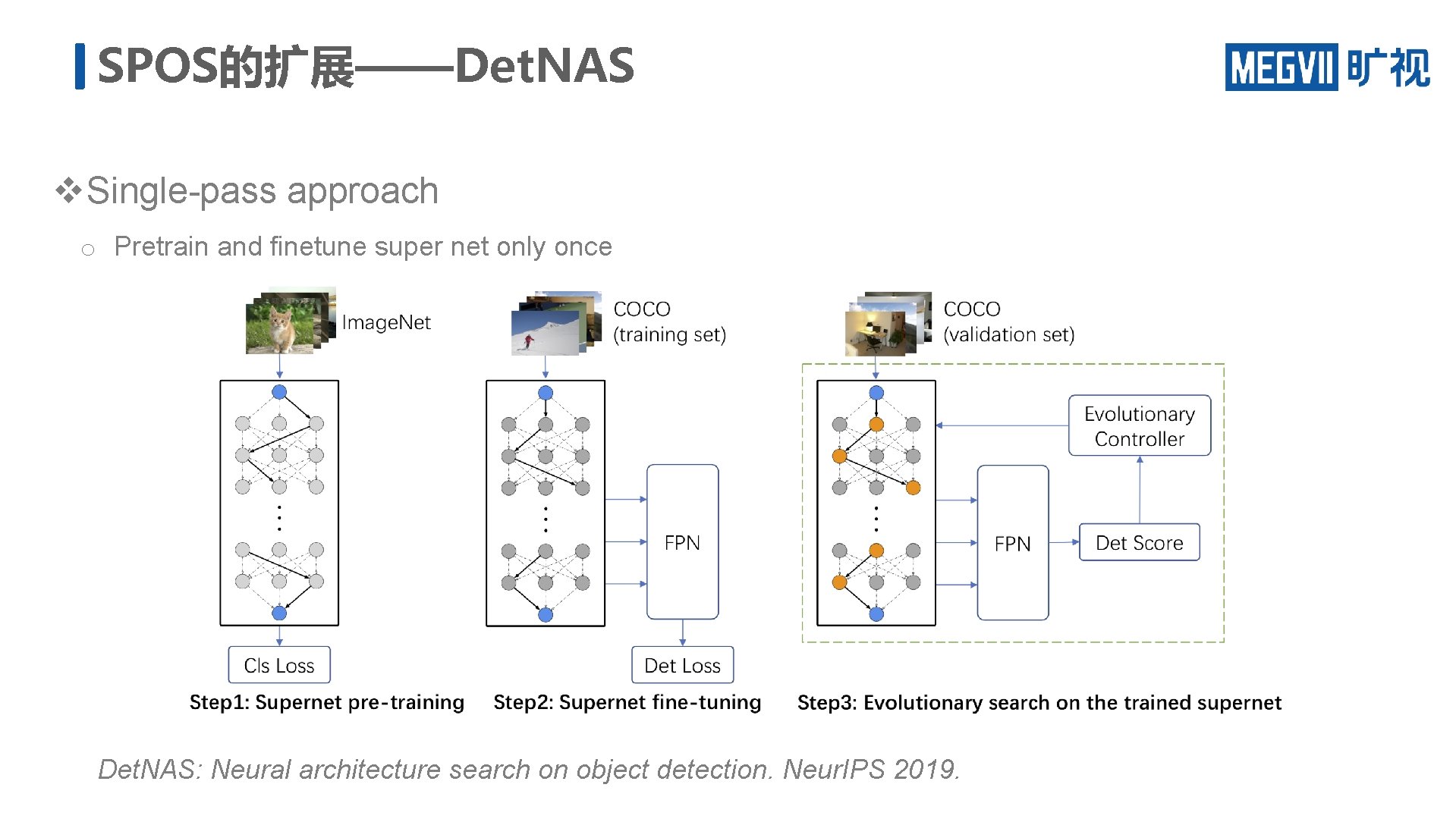

Extension of SPOS: DetNAS

- Single-pass approach
  - Pretrain and fine-tune the supernet only once

DetNAS: Neural Architecture Search on Object Detection. NeurIPS 2019.

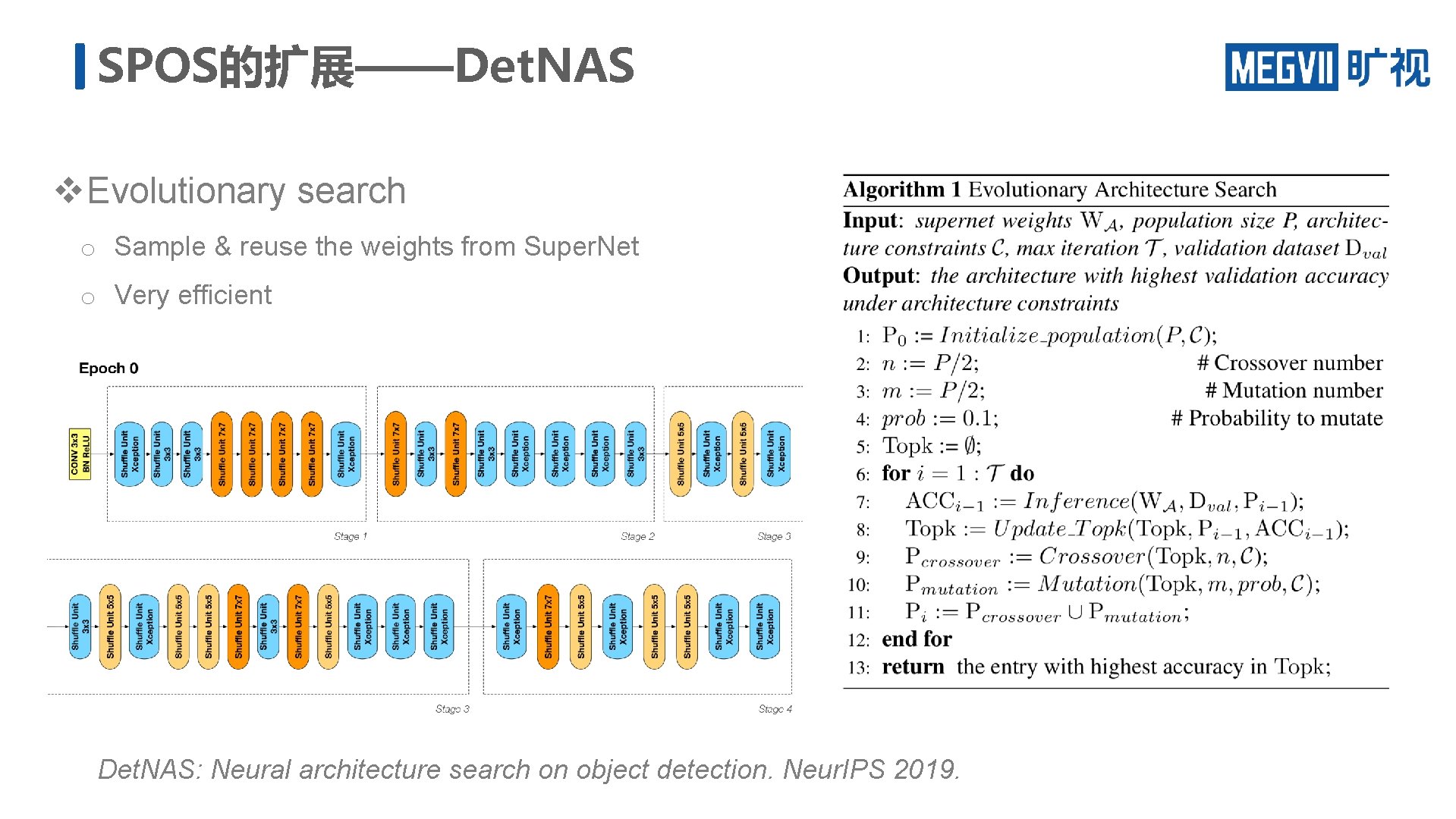

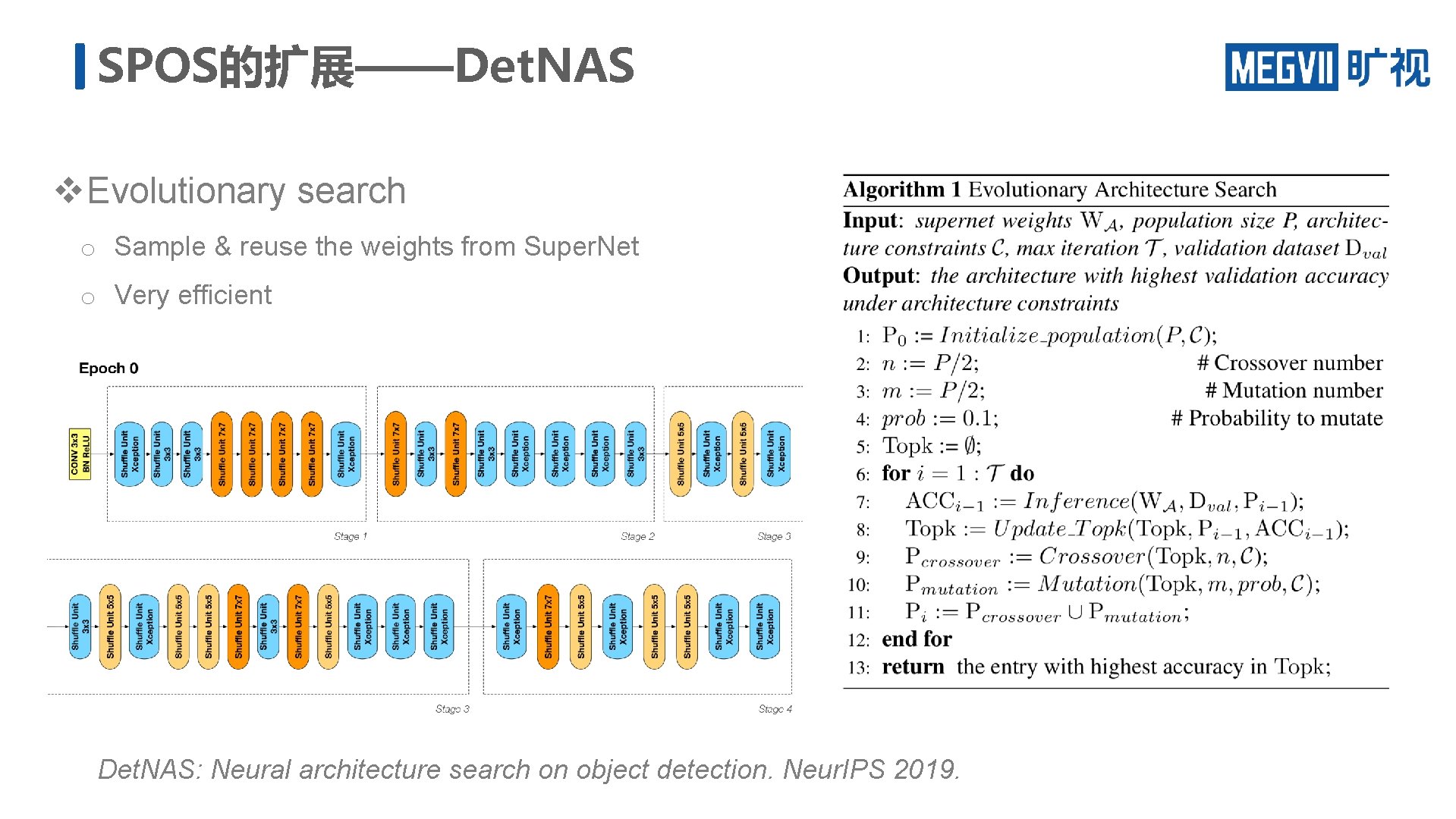

Extension of SPOS: DetNAS

- Evolutionary search
  - Sample architectures and reuse the weights from the supernet
  - Very efficient

DetNAS: Neural Architecture Search on Object Detection. NeurIPS 2019.

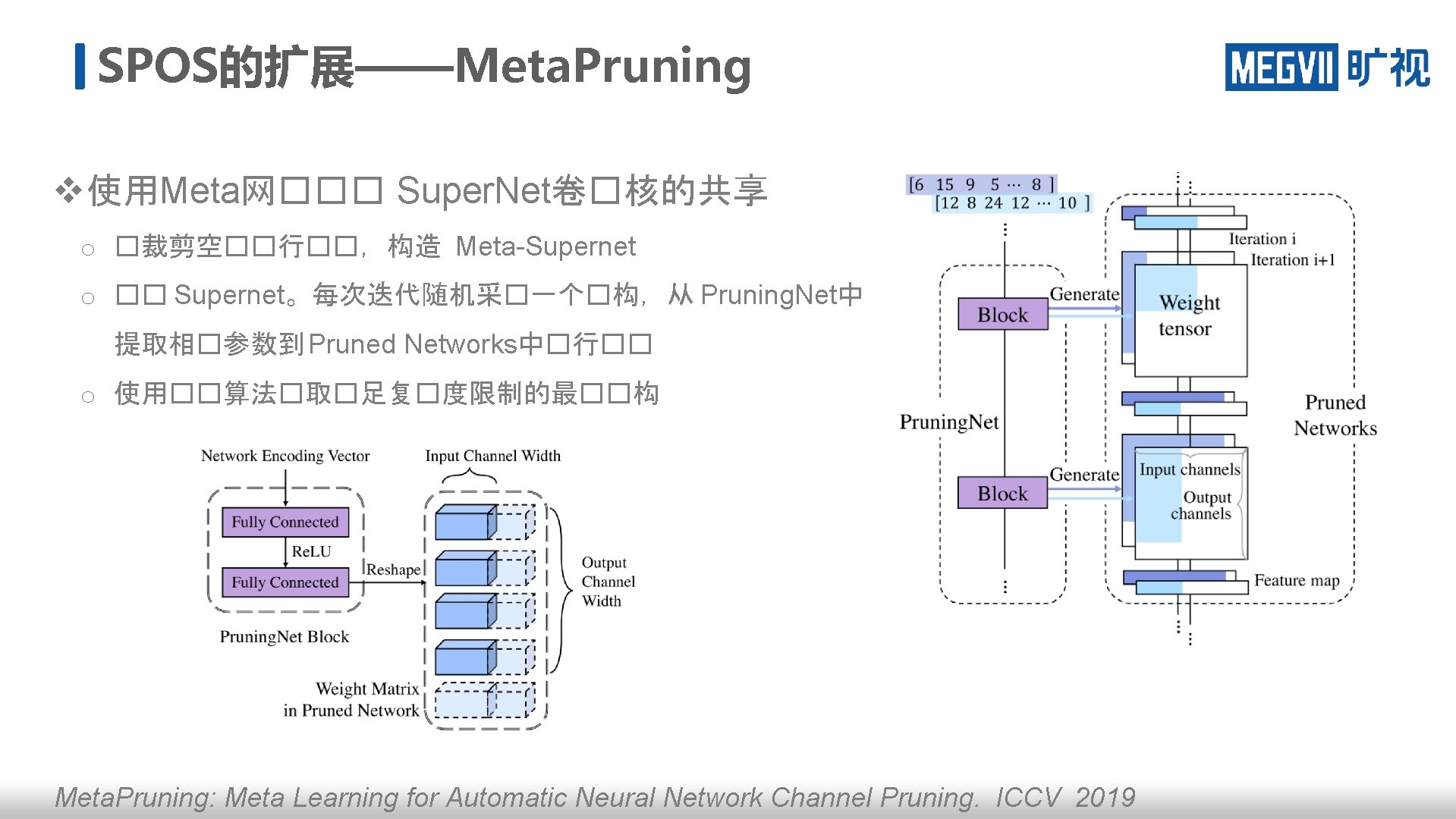

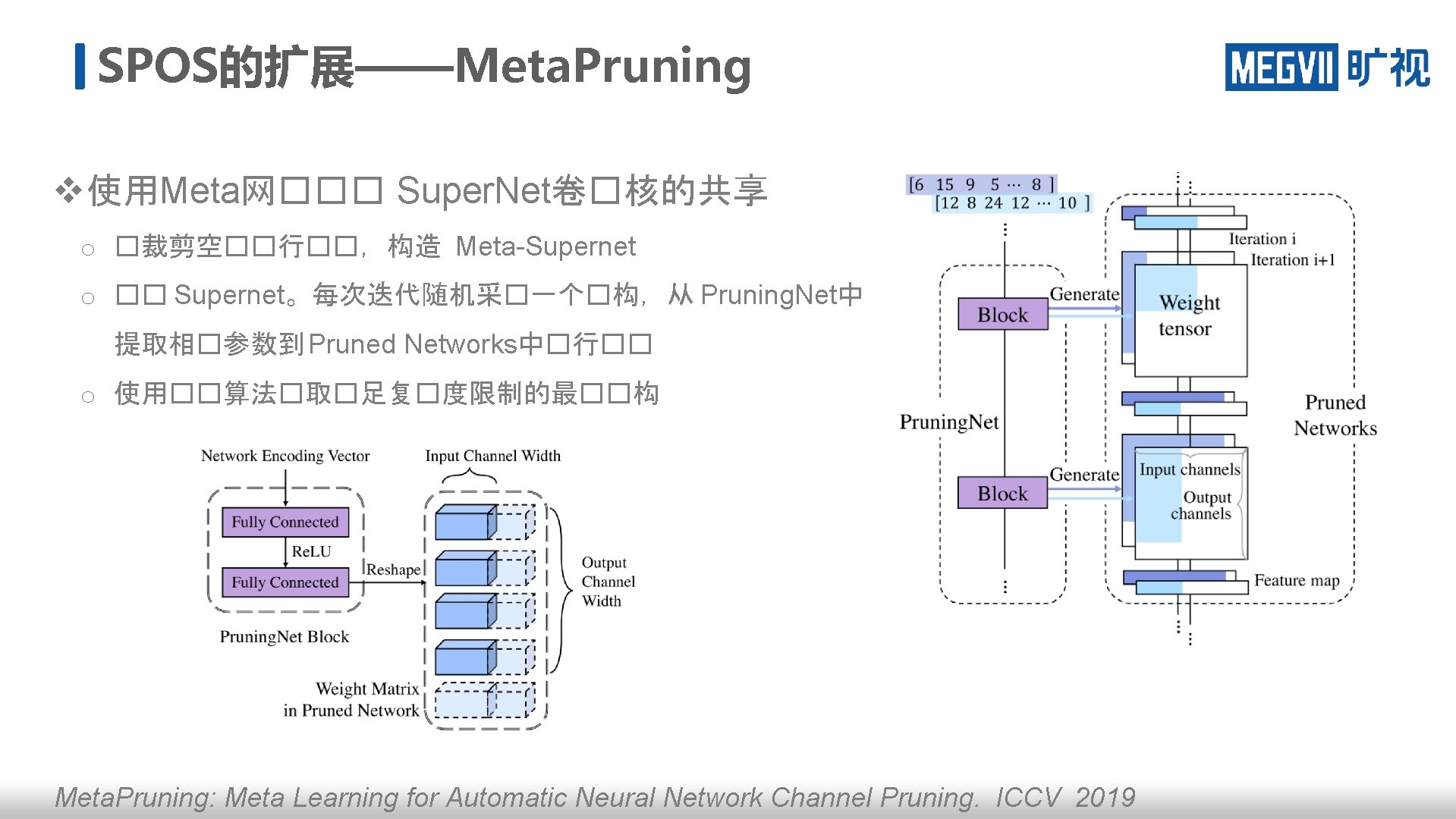

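Extension of SPOS: MetaPruning

- Use a meta network to share the supernet's convolution kernels
  - Encode the pruning space and construct a Meta-Supernet
  - Train the supernet: at each iteration, randomly sample a structure, extract the corresponding parameters from the PruningNet into the pruned network, and train it
  - Use an evolutionary algorithm to find the best structure that satisfies the complexity constraint

MetaPruning: Meta Learning for Automatic Neural Network Channel Pruning. ICCV 2019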

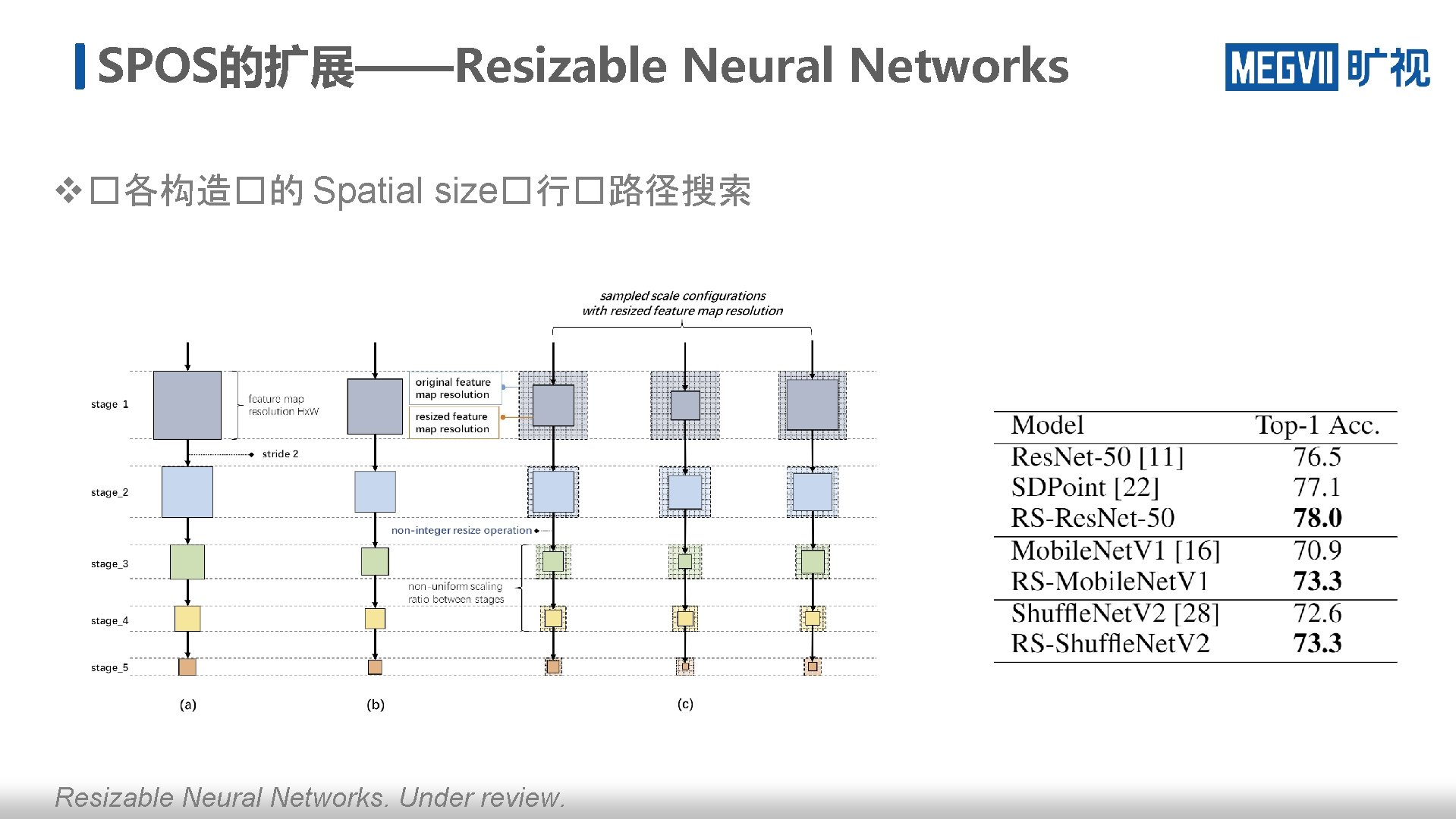

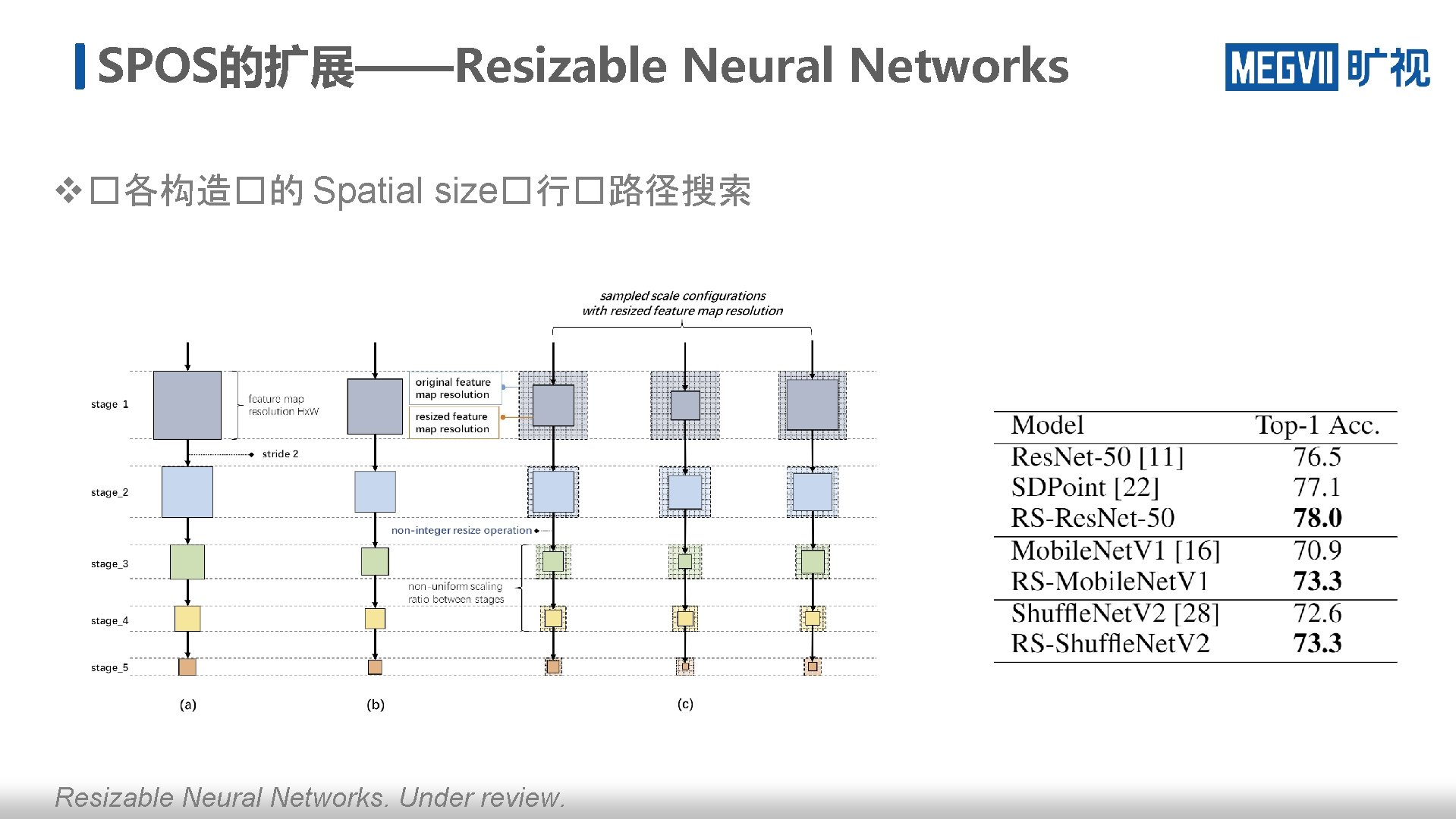

Extension of SPOS: Resizable Neural Networks

- Single-path search over the spatial size of each building block

Resizable Neural Networks. Under review.

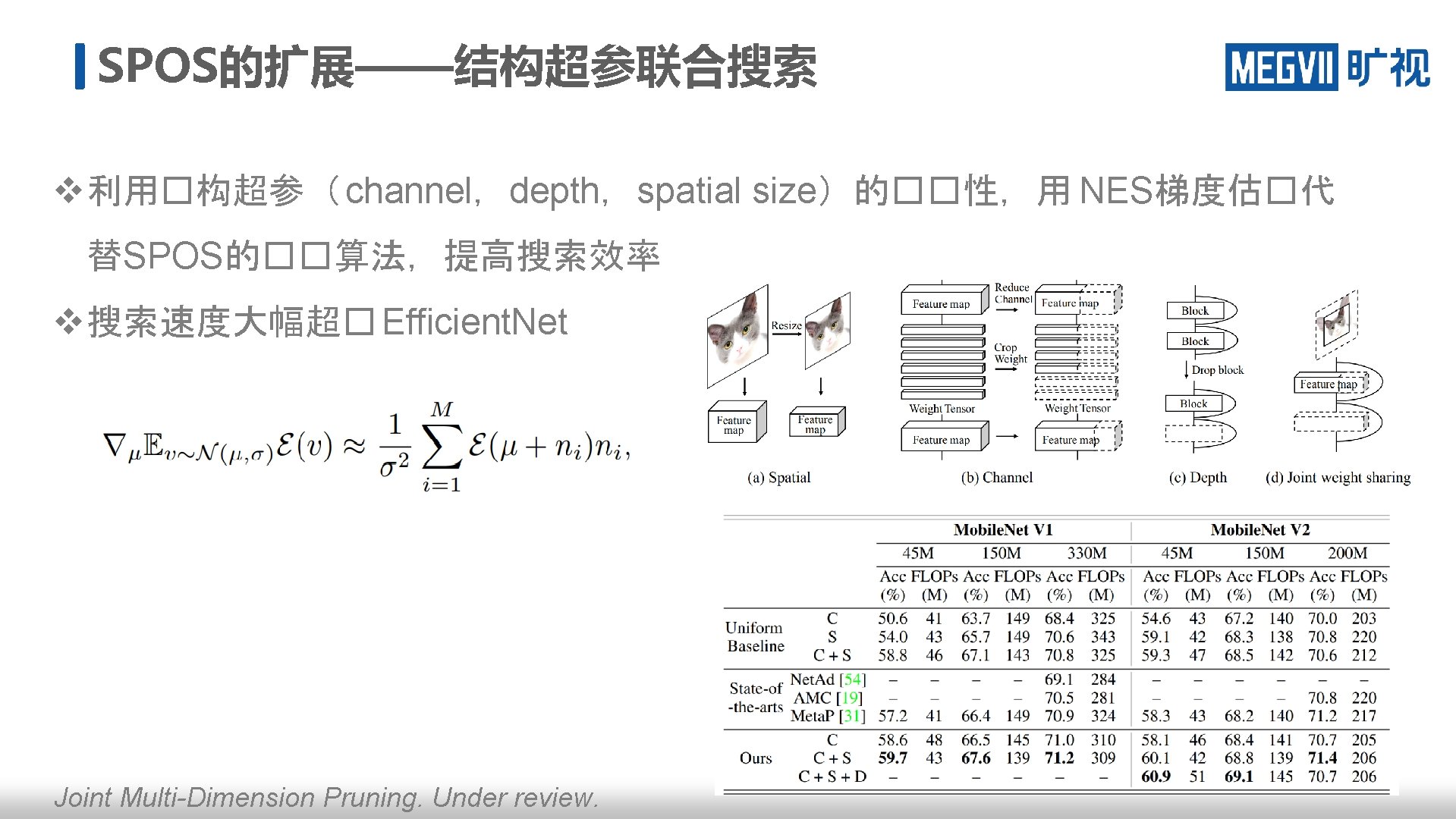

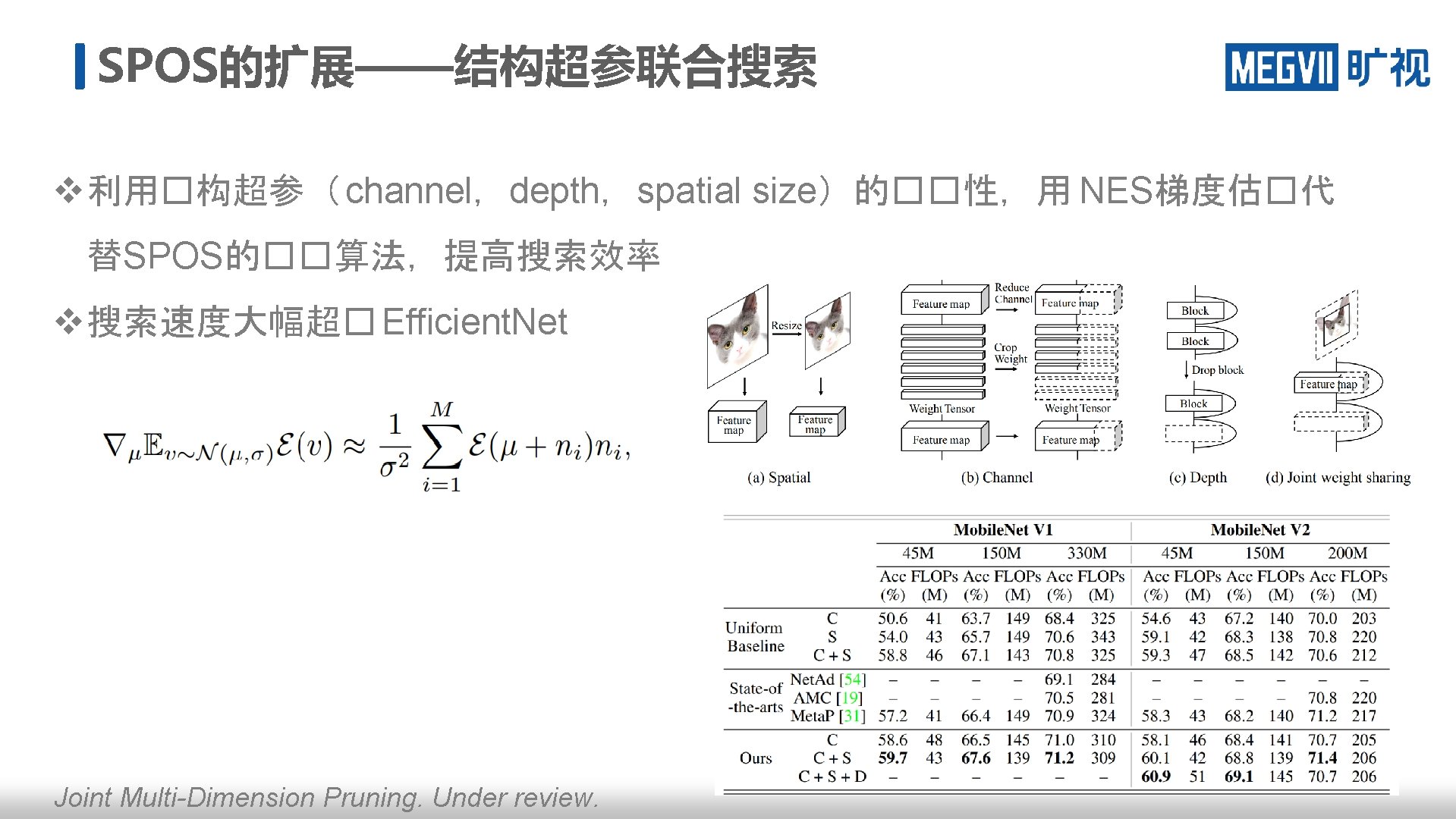

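Extension of SPOS: joint search over architecture hyperparameters

- Exploit the continuity of the architecture hyperparameters (channel, depth, spatial size) and replace the evolutionary algorithm in SPOS with an NES gradient estimate to improve search efficiency
- Search speed far exceeds that of EfficientNet

Joint Multi-Dimension Pruning. Under review.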
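To make the NES step concrete, here is a minimal sketch of a Natural Evolution Strategies gradient estimate with antithetic sampling, applied to a three-dimensional continuous vector standing in for (channel, depth, spatial size) multipliers. The objective `toy_reward`, its target values, the step size, and the sample counts are assumptions made for the example and are not taken from the paper.

```python
import random


def nes_gradient(f, mu, sigma, num_pairs, rng):
    """Estimate d/dmu E[f(mu + sigma * eps)] with antithetic Gaussian samples."""
    dim = len(mu)
    grad = [0.0] * dim
    for _ in range(num_pairs):
        eps = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        plus = f([m + sigma * e for m, e in zip(mu, eps)])
        minus = f([m - sigma * e for m, e in zip(mu, eps)])
        for i in range(dim):
            grad[i] += (plus - minus) * eps[i]
    scale = 1.0 / (2.0 * sigma * num_pairs)
    return [g * scale for g in grad]


# Hypothetical optimum for the (channel, depth, spatial size) multipliers.
TARGET = [0.5, 0.7, 0.6]


def toy_reward(x):
    """Toy black-box reward; a real search would evaluate a pruned network."""
    return -sum((xi - ti) ** 2 for xi, ti in zip(x, TARGET))


def search(steps=200, lr=0.05, sigma=0.1, num_pairs=10, seed=0):
    """Gradient ascent on the toy reward using only function evaluations."""
    rng = random.Random(seed)
    mu = [0.0, 0.0, 0.0]
    for _ in range(steps):
        g = nes_gradient(toy_reward, mu, sigma, num_pairs, rng)
        mu = [m + lr * gi for m, gi in zip(mu, g)]
    return mu


if __name__ == "__main__":
    print(search())
```

The estimator needs only reward evaluations, so it applies when the reward (for example, accuracy of a pruned network) is not differentiable with respect to the hyperparameters.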

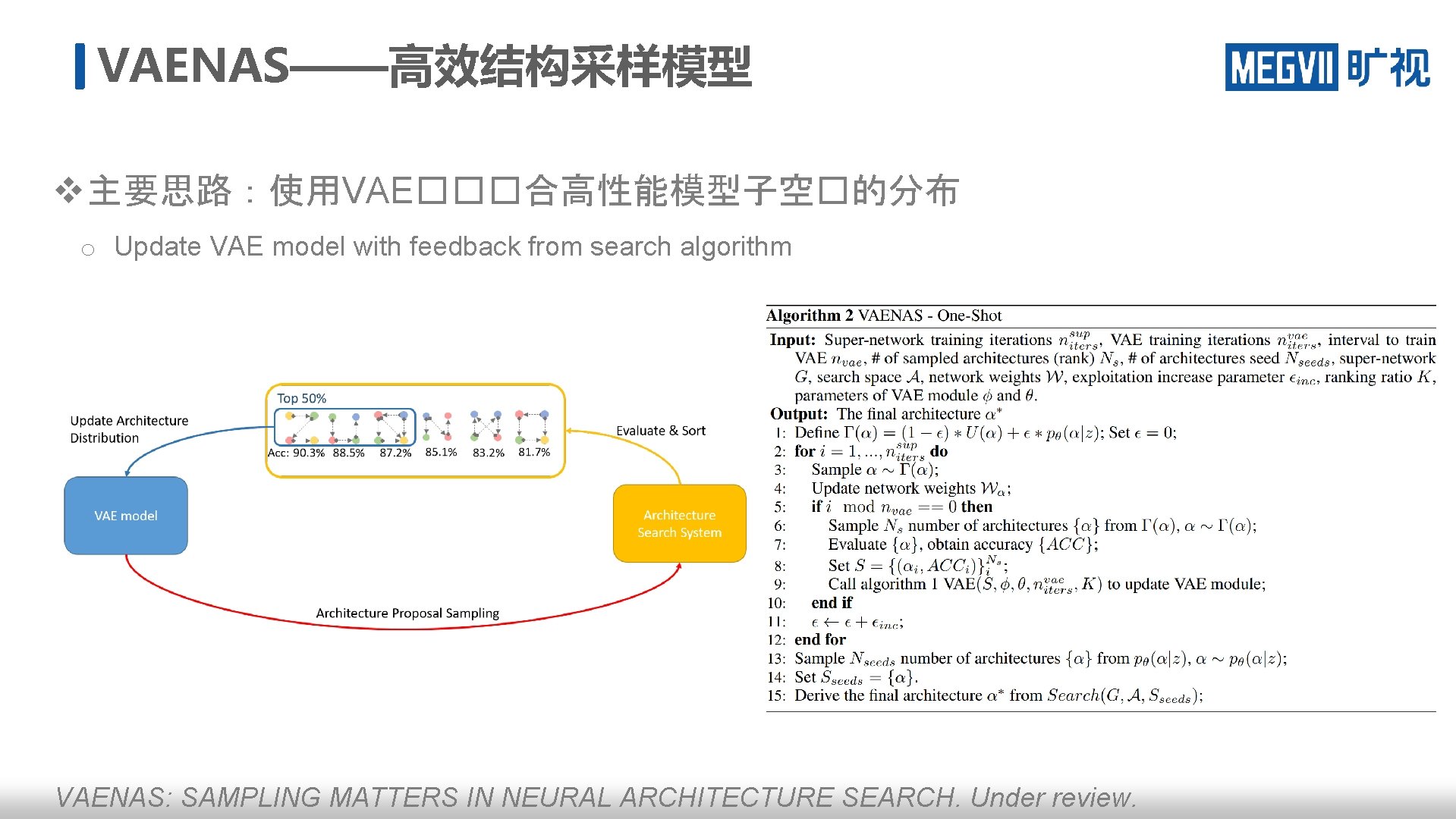

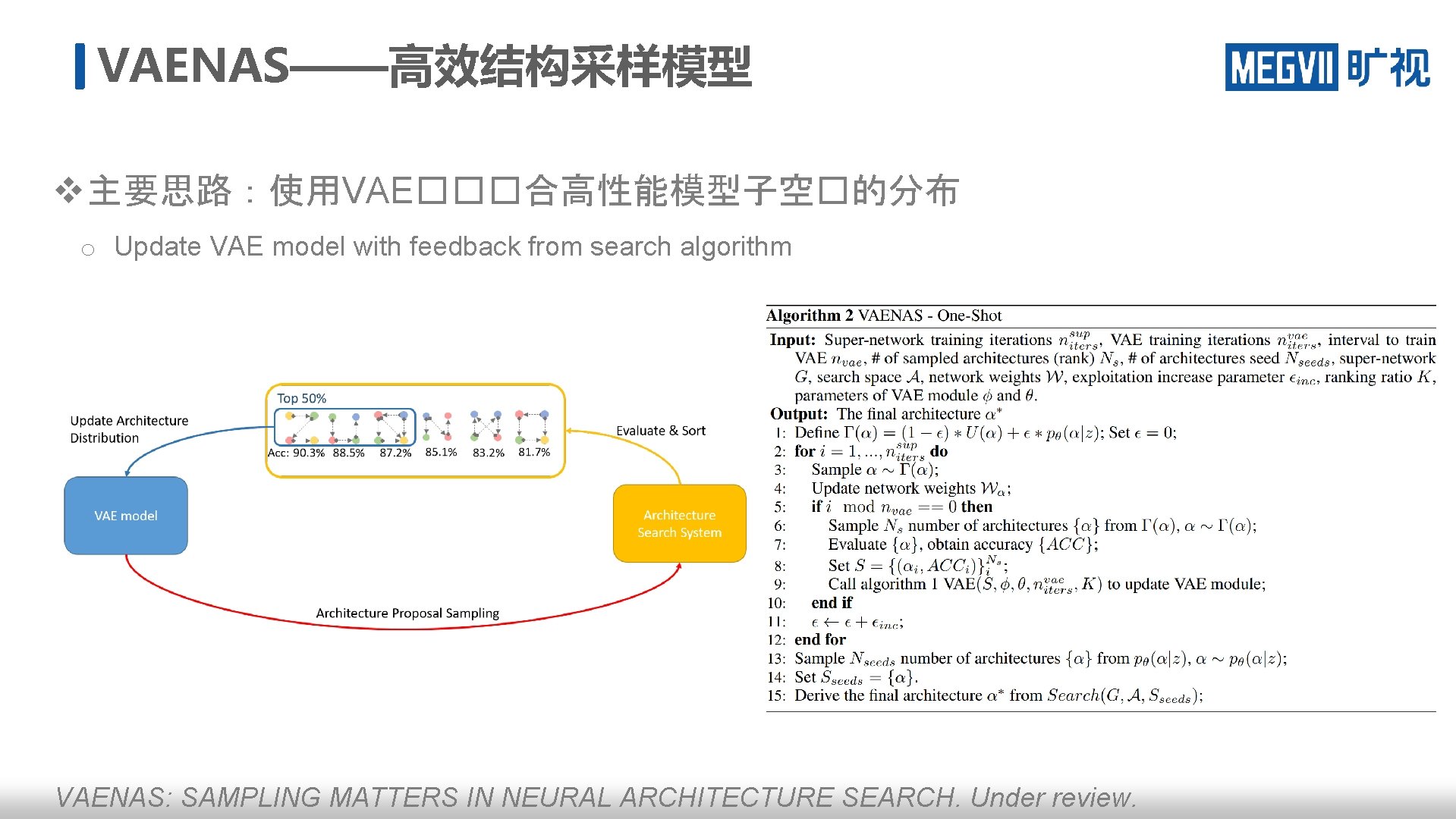

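VAENAS: an efficient architecture sampling model

- Main idea: use a VAE to fit the distribution of the subspace of high-performing models
  - Update the VAE model with feedback from the search algorithm

VAENAS: Sampling Matters in Neural Architecture Search. Under review.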

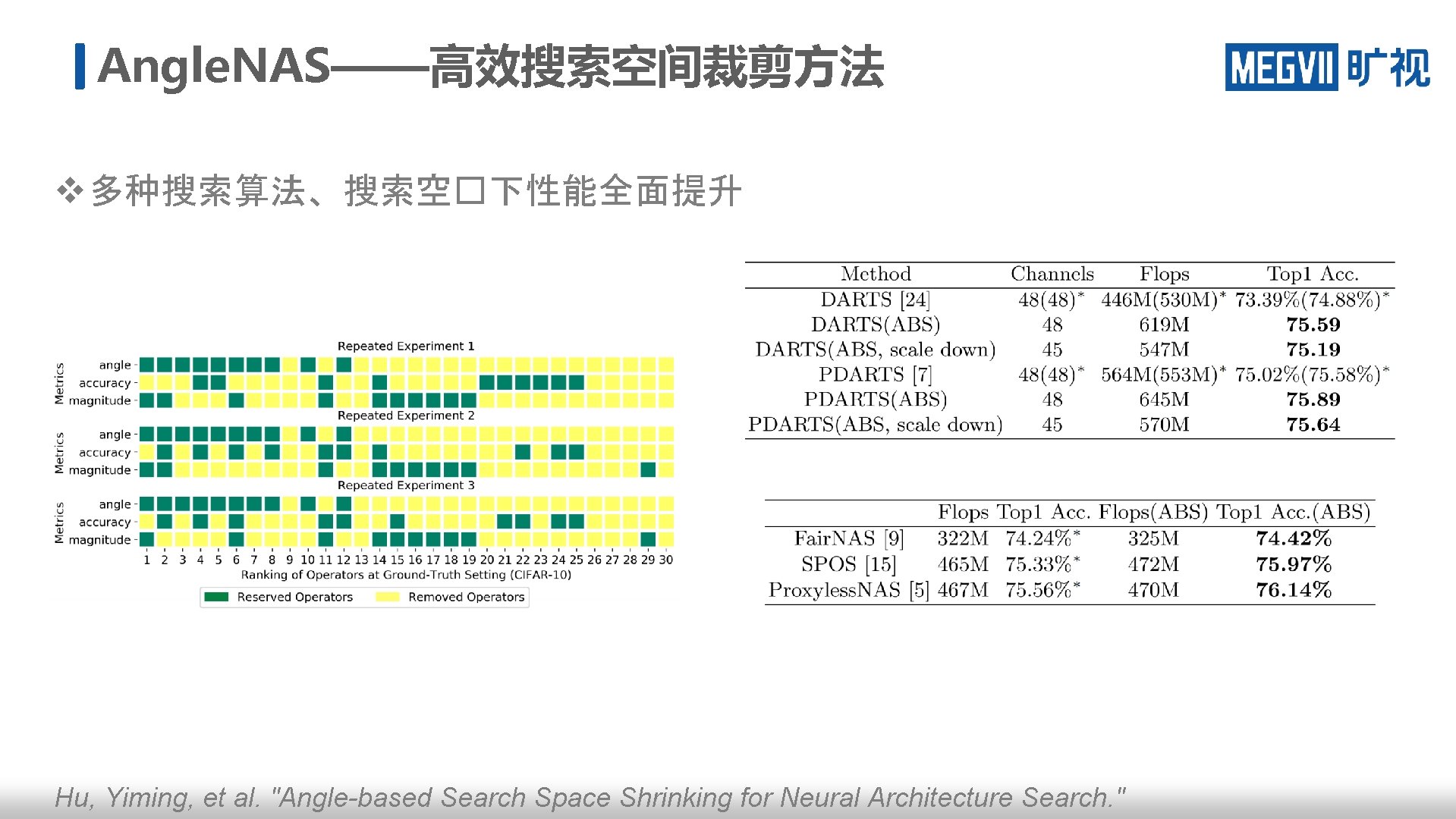

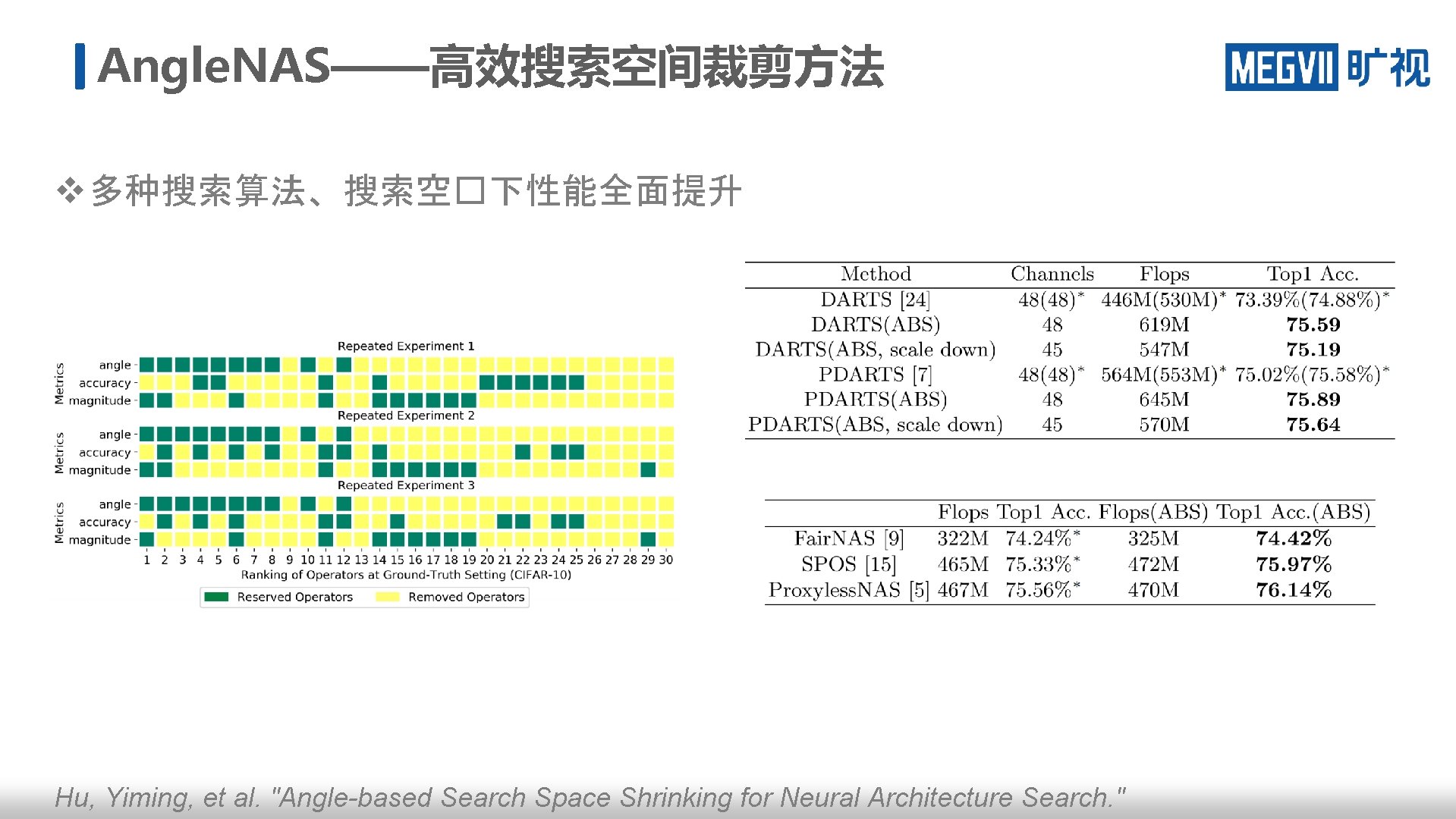

AngleNAS: an efficient search space shrinking method

- Consistent performance gains across multiple search algorithms and search spaces

Hu, Yiming, et al. "Angle-based Search Space Shrinking for Neural Architecture Search."

Dynamic models

- "Instance-level NAS"
  - Dynamic routing
  - Dynamic weight/kernel
  - Dynamic architecture hyper-parameters
- Practical significance:
  - Over-parameterization
  - Parameters in relation to performance and space […]

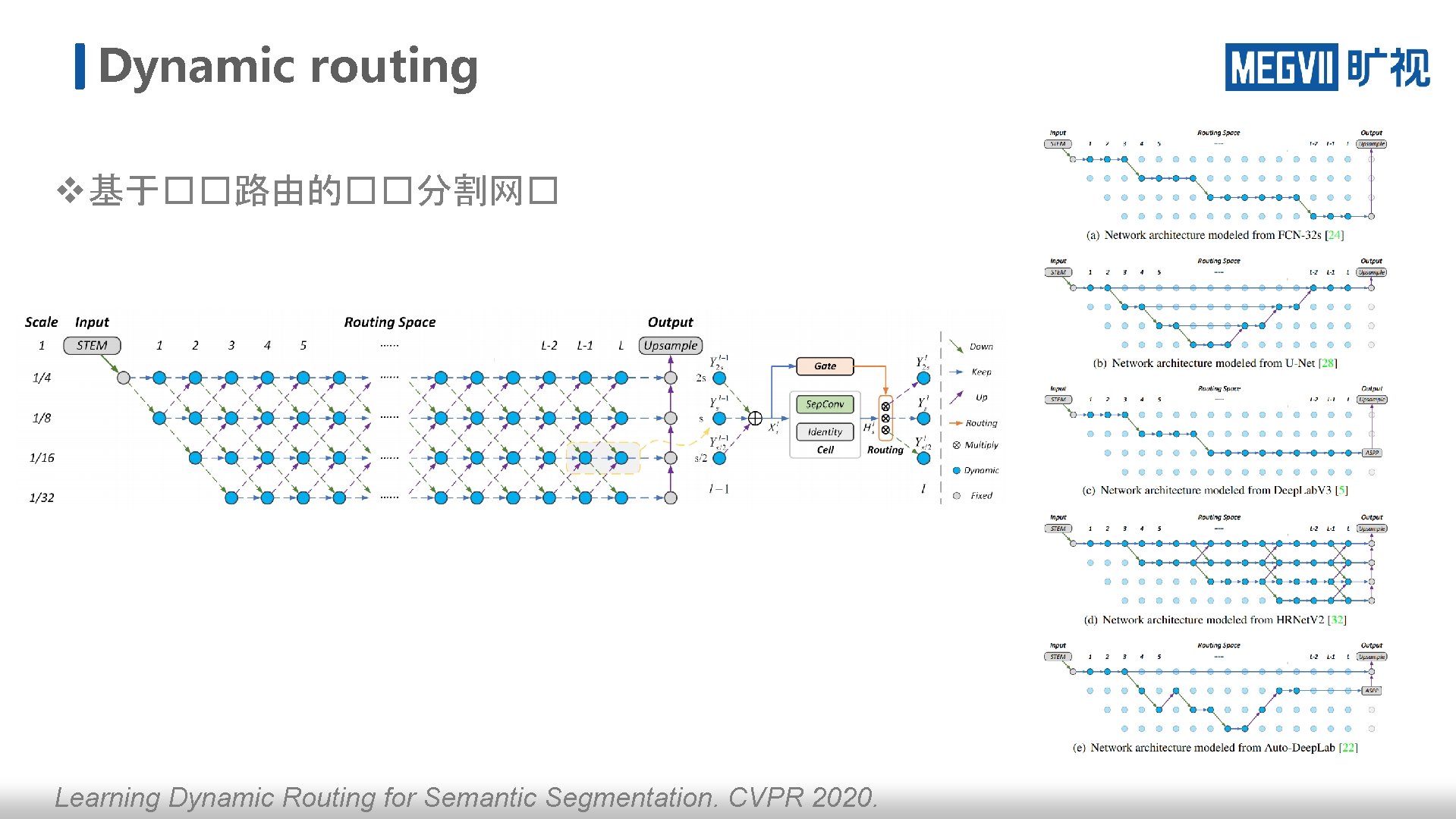

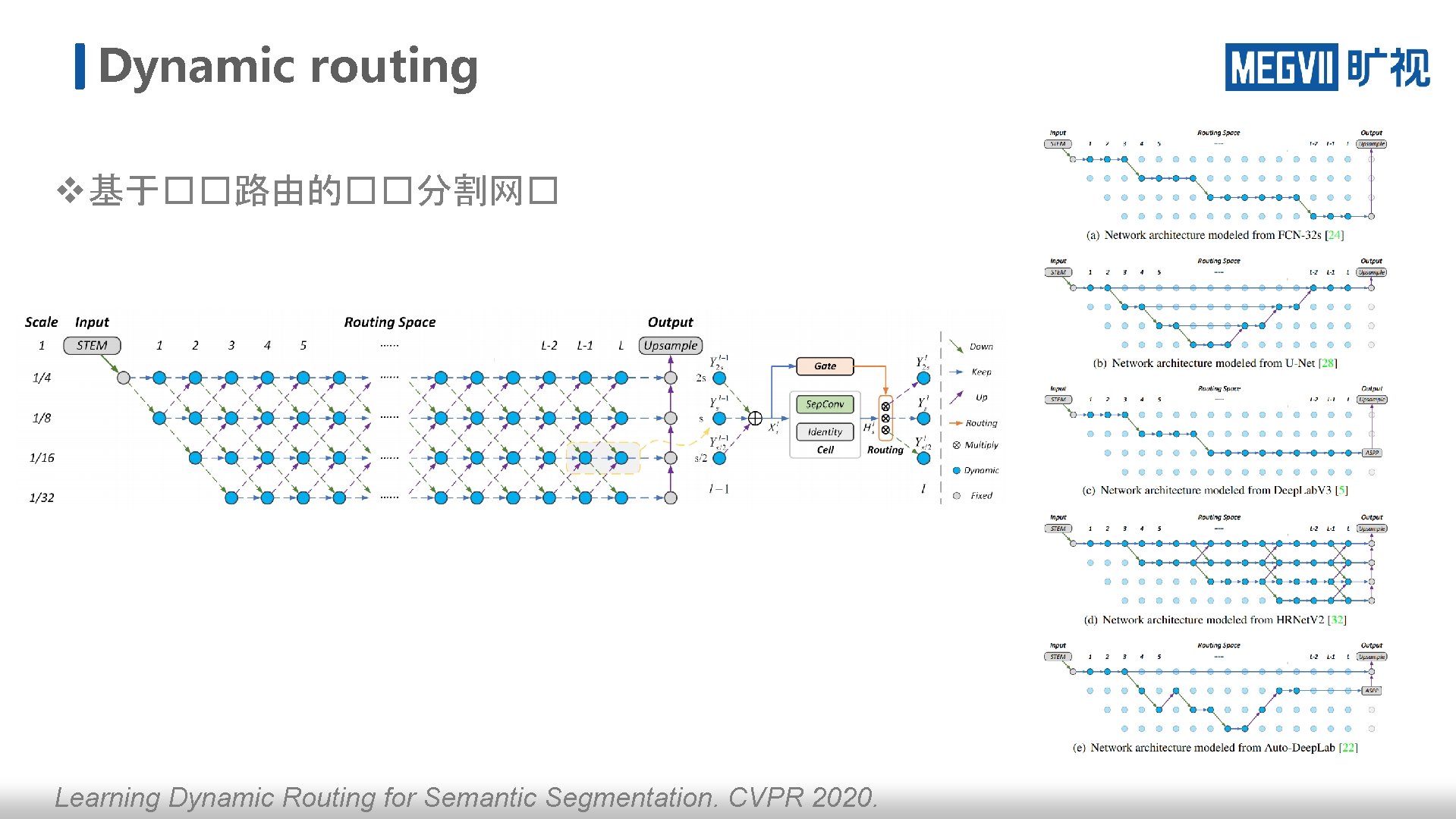

Dynamic routing

- A semantic segmentation network based on dynamic routing

Learning Dynamic Routing for Semantic Segmentation. CVPR 2020.

Dynamic routing

- Stronger performance at the same FLOPs (with more parameters)
- Drawback: batched inference is difficult, which hurts actual speed

Learning Dynamic Routing for Semantic Segmentation. CVPR 2020.

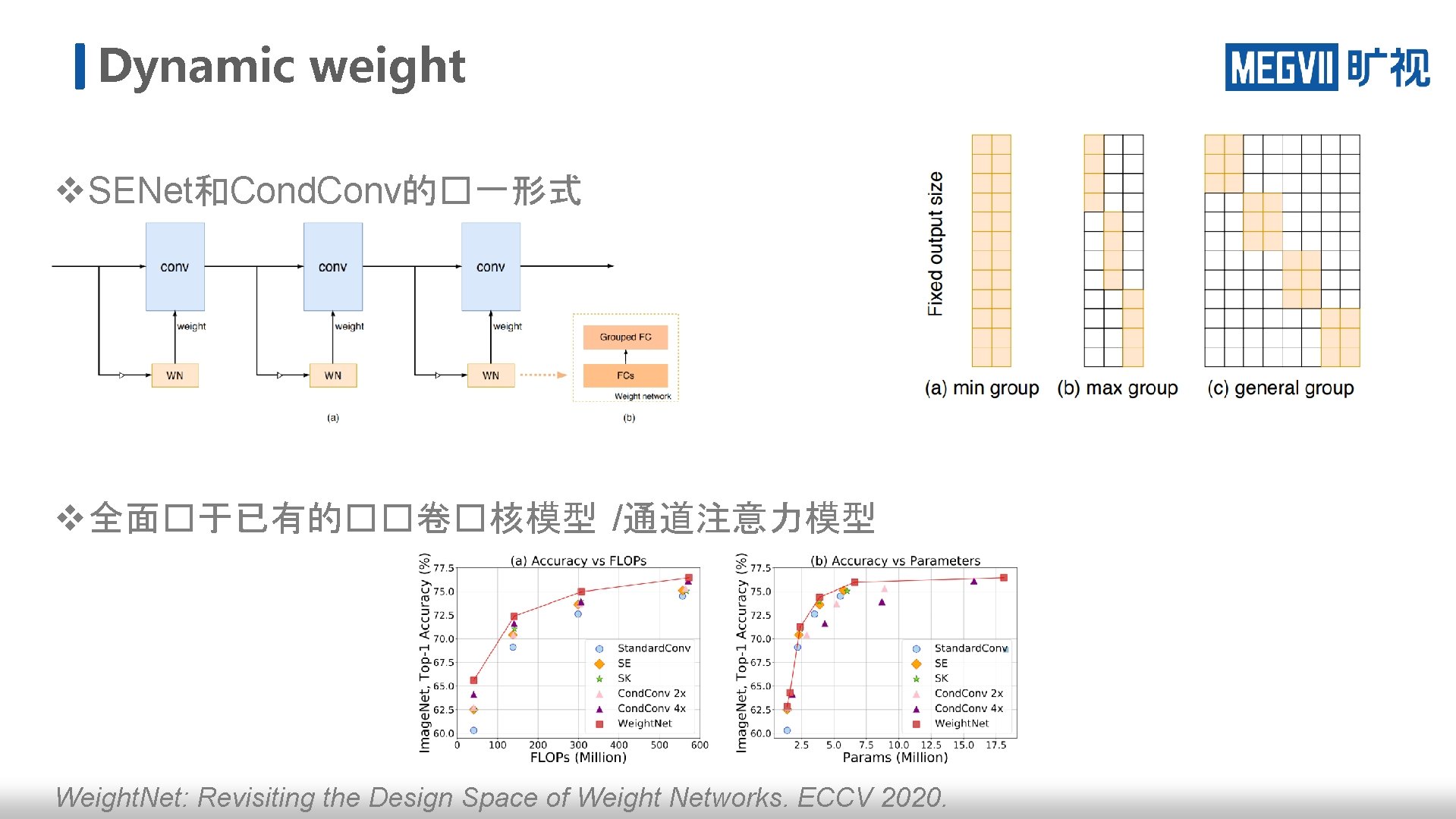

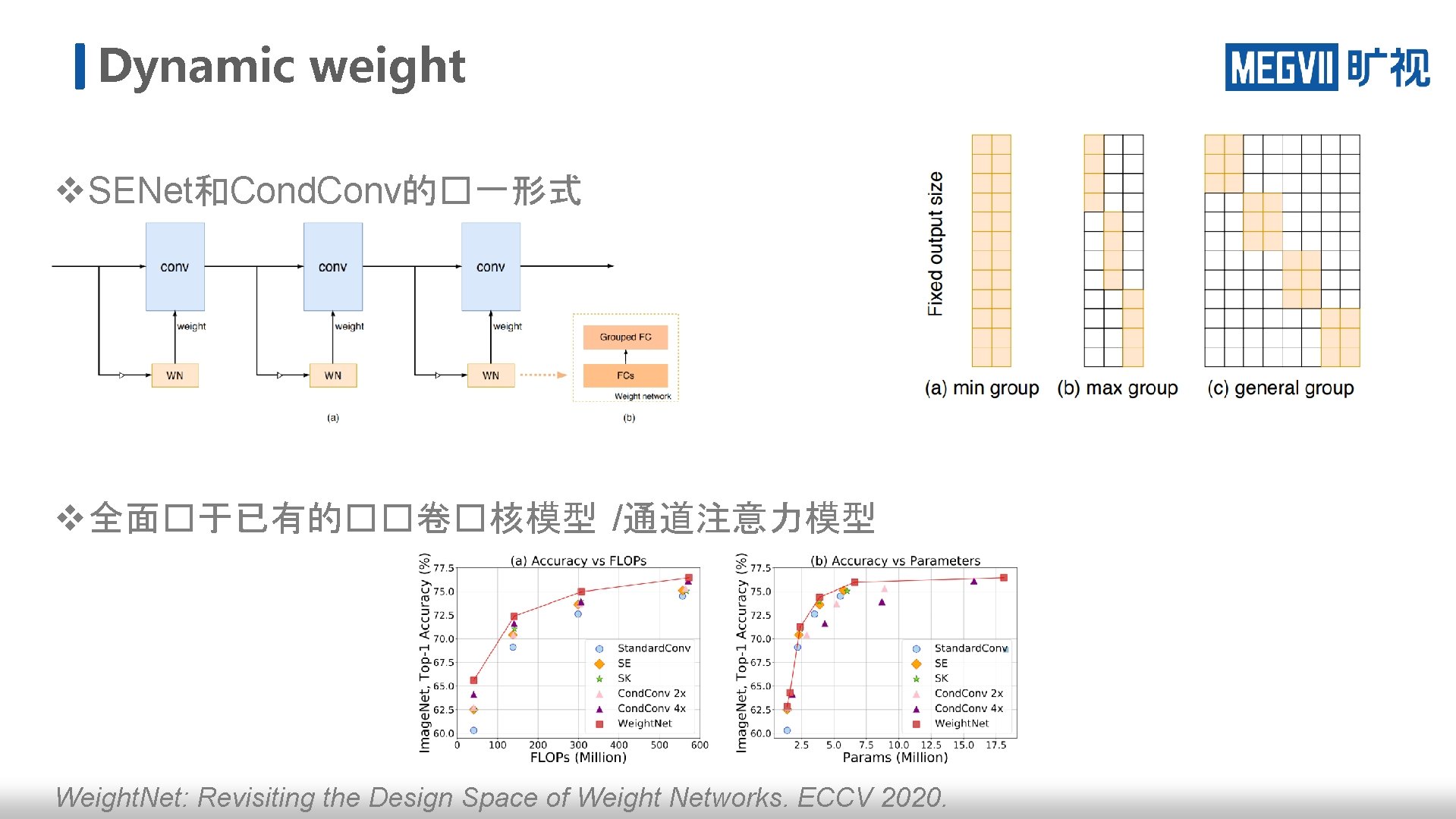

Dynamic weight

- A unified form of SENet and CondConv
- Outperforms existing dynamic-kernel models and channel-attention models across the board

WeightNet: Revisiting the Design Space of Weight Networks. ECCV 2020.
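One way to see why SENet and CondConv admit a common form is that both can be written as "generate a weight from an input-dependent vector, then apply it". The sketch below checks this for a 1x1 convolution treated as a matrix-vector product. It is an illustration only, not the WeightNet layer itself: the `scale` and `alpha` vectors are hard-coded here, whereas in the real models they are produced from the input by small learned networks.

```python
def matvec(w, x):
    """Apply a weight matrix (list of rows) to a vector."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def se_weight(w, scale):
    """SE-style: fold a per-output-channel scale into the weight, diag(scale) @ w."""
    return [[s * v for v in row] for row, s in zip(w, scale)]


def condconv_weight(experts, alpha):
    """CondConv-style: mix expert weights, sum_i alpha[i] * experts[i]."""
    rows, cols = len(experts[0]), len(experts[0][0])
    return [
        [sum(a * e[r][c] for a, e in zip(alpha, experts)) for c in range(cols)]
        for r in range(rows)
    ]


if __name__ == "__main__":
    w = [[1.0, 2.0], [3.0, 4.0]]
    x = [1.0, -1.0]

    # Channel attention on the output equals a dynamically scaled weight.
    scale = [0.5, 2.0]  # assumed attention values for this input
    attended = [s * y for s, y in zip(scale, matvec(w, x))]
    print(attended, matvec(se_weight(w, scale), x))

    # A weighted sum of expert outputs equals one conv with a mixed weight.
    experts = [w, [[0.0, 1.0], [1.0, 0.0]]]
    alpha = [0.25, 0.75]  # assumed routing weights for this input
    outs = [matvec(e, x) for e in experts]
    mixed_out = [sum(a * o[i] for a, o in zip(alpha, outs)) for i in range(2)]
    print(mixed_out, matvec(condconv_weight(experts, alpha), x))
```

In both cases the input-dependent vector acts on the weight rather than on the features, which is the view that lets the two designs be placed in one weight-generating design space.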

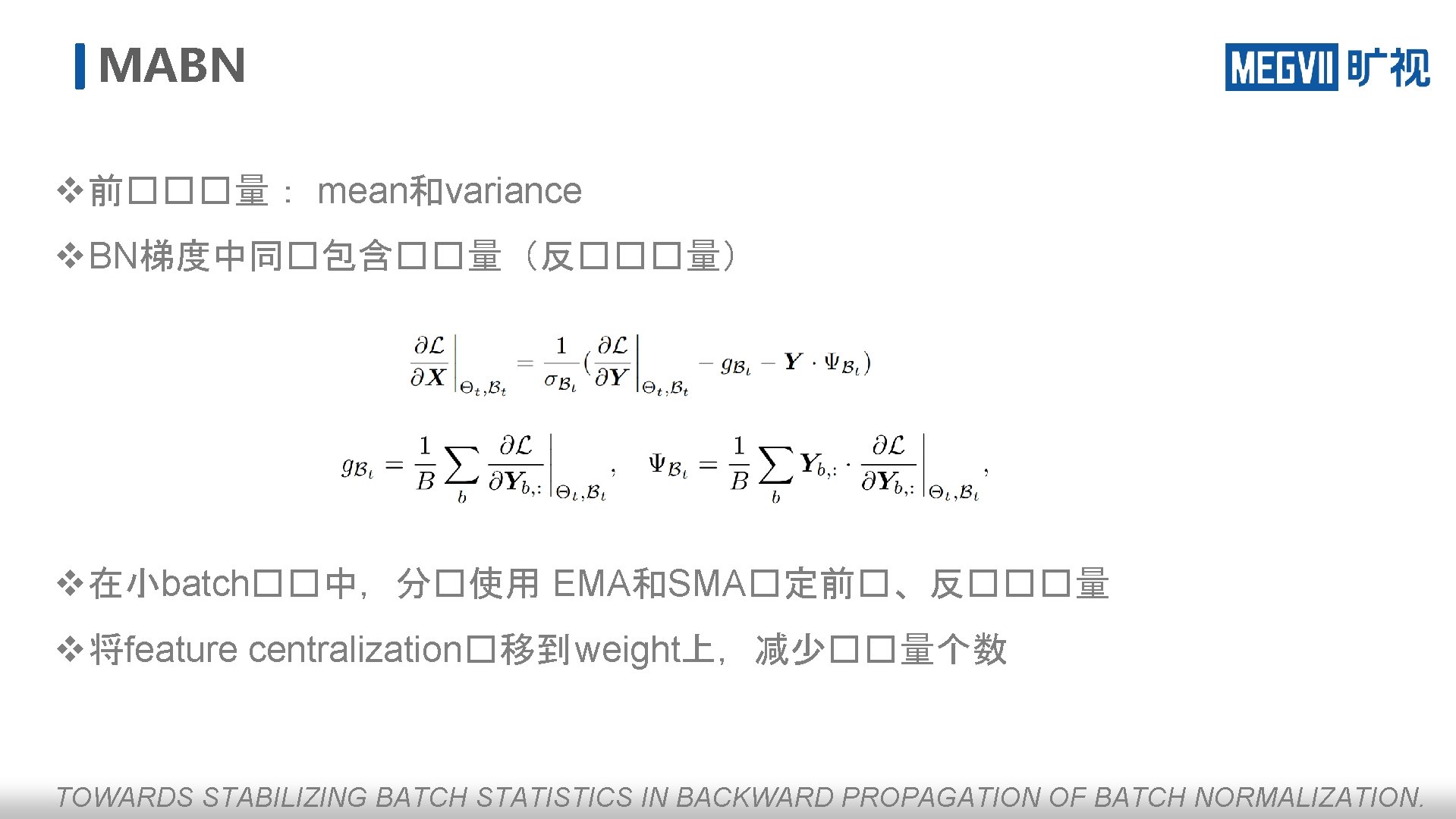

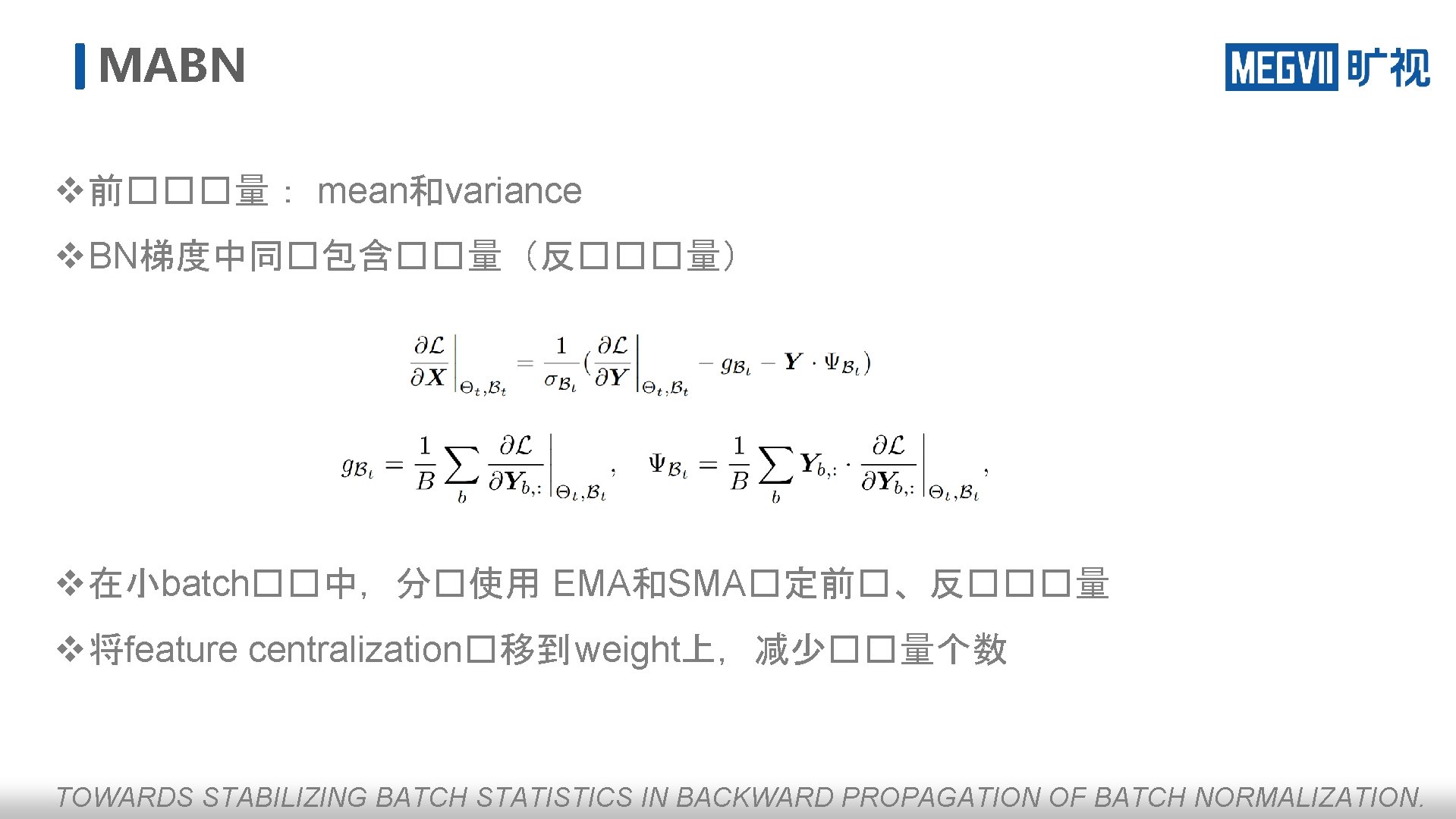

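MABN

- Forward statistics: mean and variance
- The BN gradient also contains statistics (backward statistics)
- In small-batch training, use EMA and SMA respectively to stabilize the forward and backward statistics
- Move feature centralization onto the weights to reduce the number of statistics

Towards Stabilizing Batch Statistics in Backward Propagation of Batch Normalization.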
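The two averaging rules named above can be sketched as follows. This shows only the exponential moving average (EMA) and simple moving average (SMA) updates on a scalar statistic; it is not the MABN layer, and the momentum, window size, and sample values are assumptions chosen for the example.

```python
from collections import deque


class EMA:
    """Exponential moving average: new = m * old + (1 - m) * x."""

    def __init__(self, momentum=0.9):
        self.momentum = momentum
        self.value = None

    def update(self, x):
        if self.value is None:
            self.value = x  # initialize with the first observation
        else:
            self.value = self.momentum * self.value + (1 - self.momentum) * x
        return self.value


class SMA:
    """Simple moving average over the last `window` observations."""

    def __init__(self, window=4):
        self.buf = deque(maxlen=window)

    def update(self, x):
        self.buf.append(x)  # the oldest value drops out once the window is full
        return sum(self.buf) / len(self.buf)


if __name__ == "__main__":
    # Hypothetical per-iteration batch means from very small batches.
    noisy_means = [0.9, 1.4, 0.6, 1.2, 0.8, 1.1]
    ema, sma = EMA(momentum=0.9), SMA(window=4)
    for m in noisy_means:
        print(m, ema.update(m), sma.update(m))
```

Either rule replaces a single noisy batch statistic with an average over recent iterations, which is the stabilizing effect the slide refers to for small batches.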

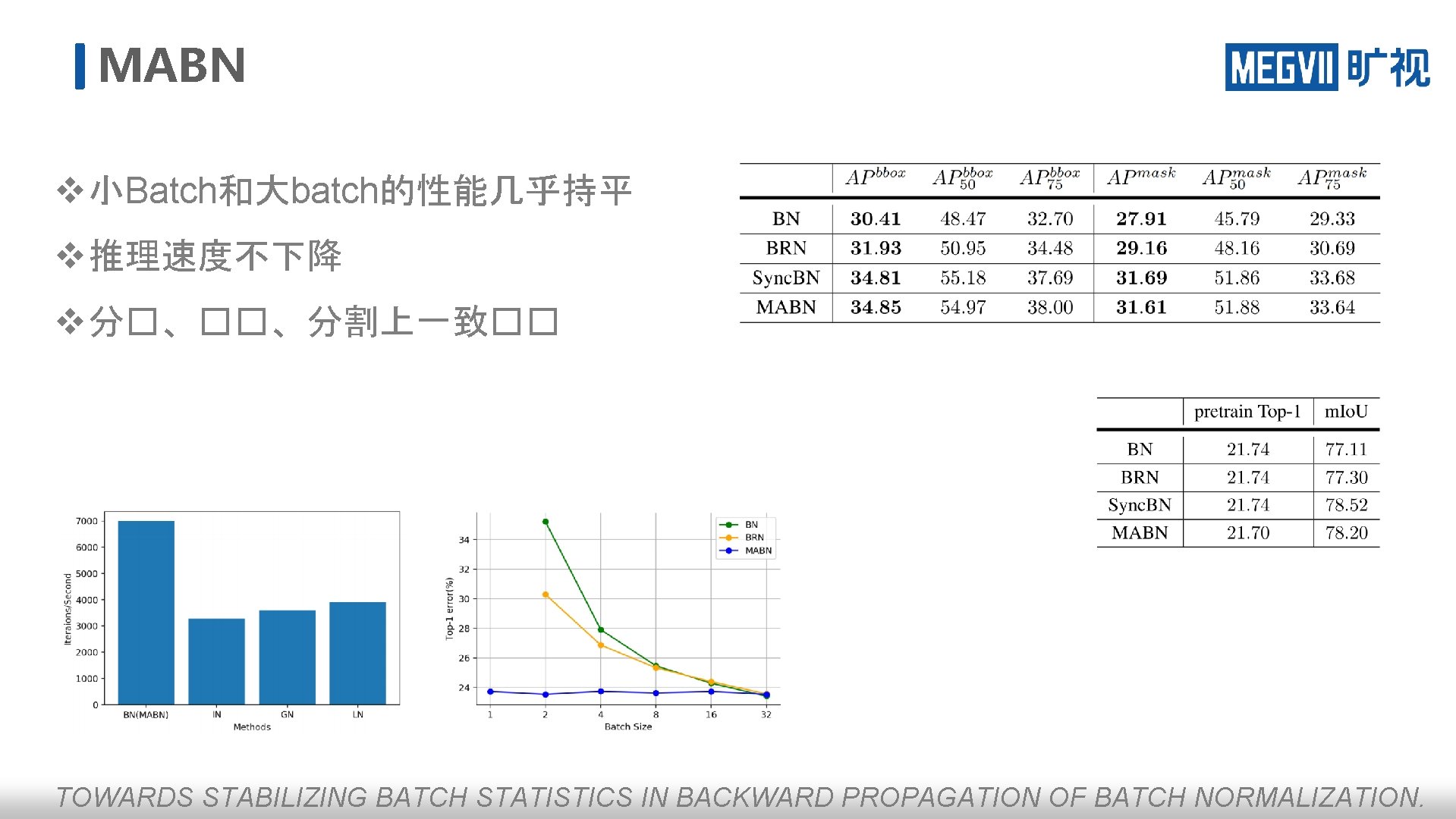

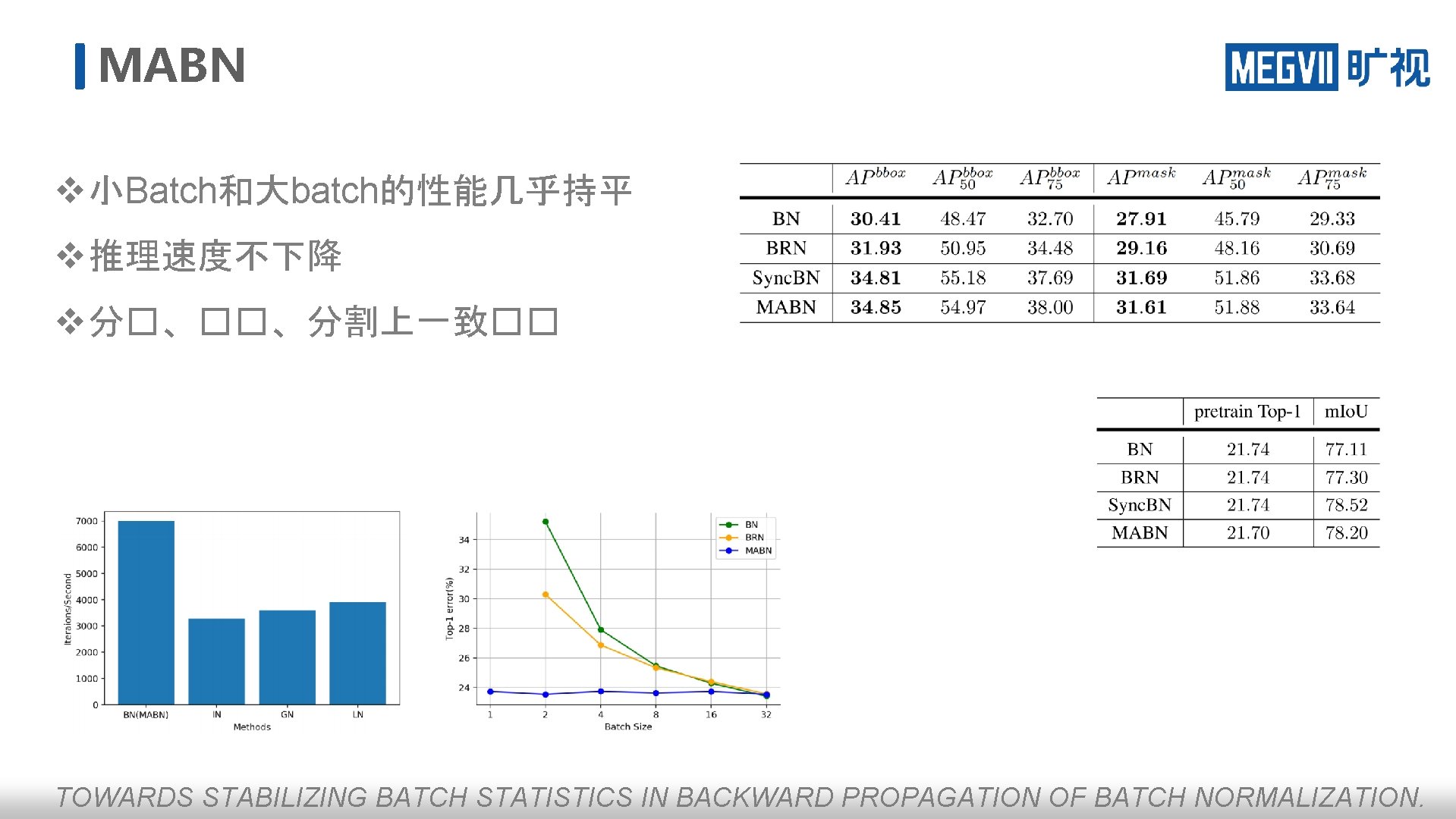

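MABN

- Small-batch performance is nearly on par with large-batch performance
- No slowdown at inference time
- Consistent results on classification, detection, and segmentation

Towards Stabilizing Batch Statistics in Backward Propagation of Batch Normalization.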

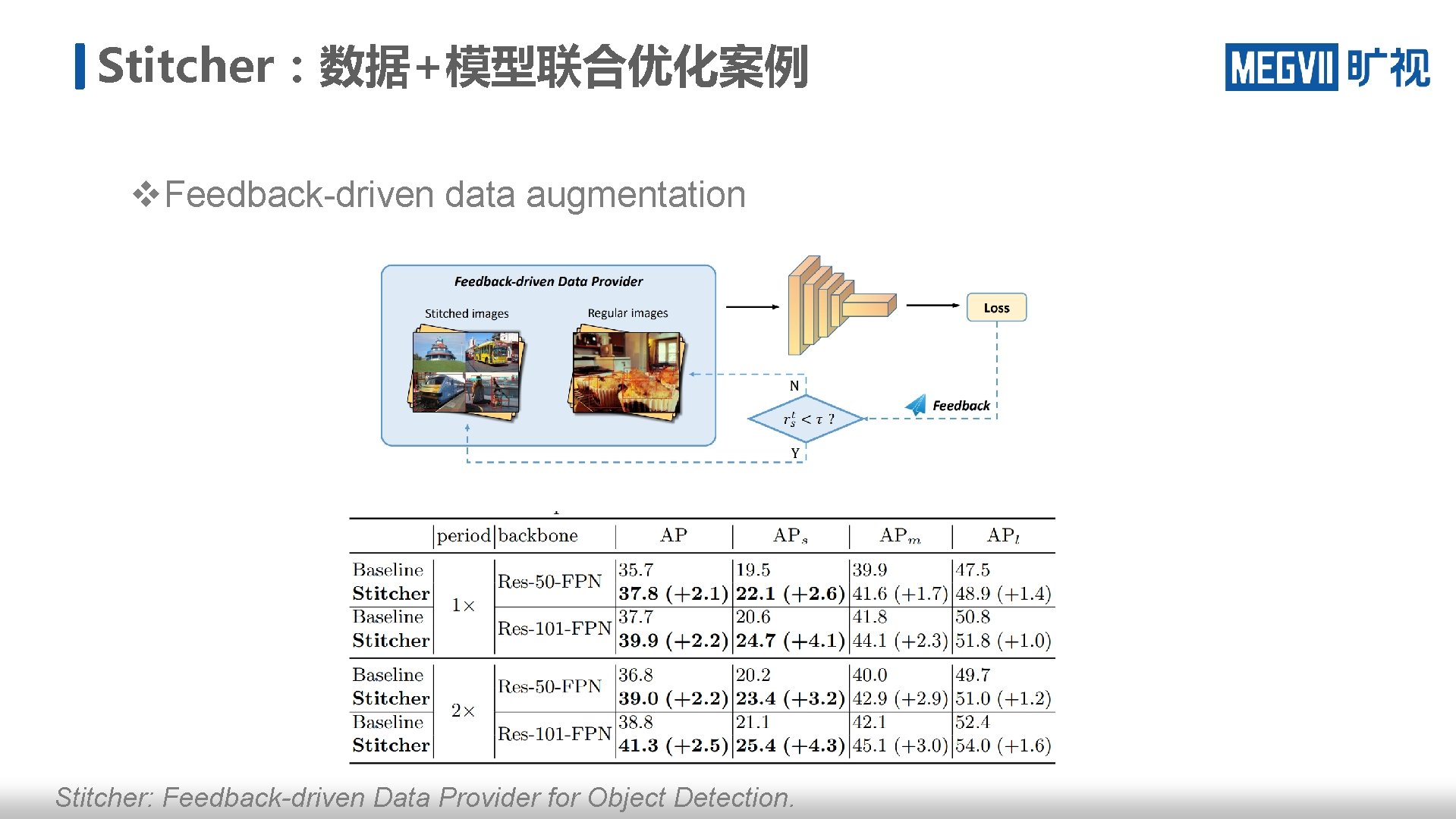

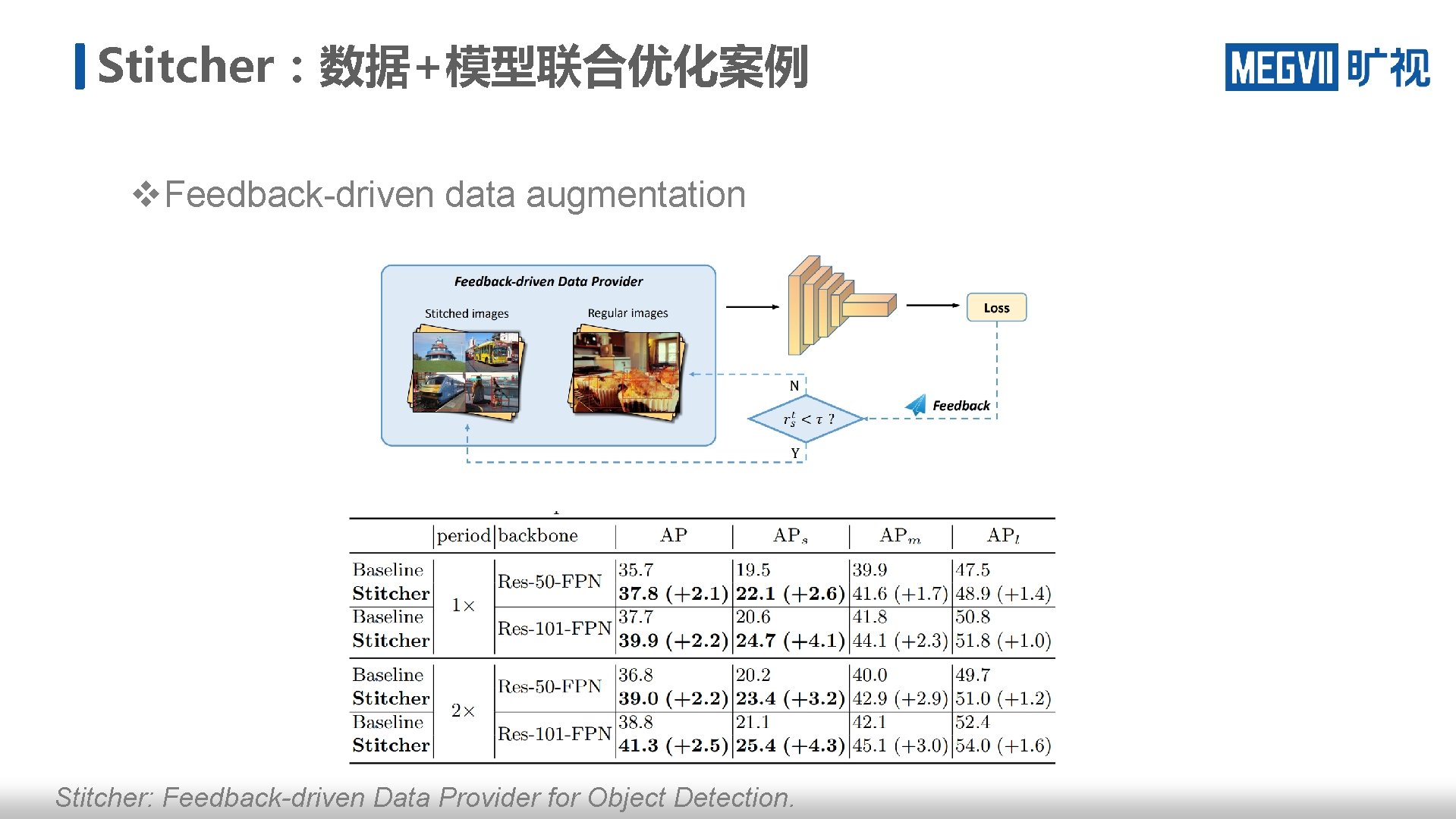

Stitcher: a case study of joint data and model optimization

- Feedback-driven data augmentation

Stitcher: Feedback-driven Data Provider for Object Detection.

Thanks