Single Machine Scheduling Problem Lesson 5 Maximum Lateness


Maximum Lateness and Related Criteria • Problem 1|rj|Lmax is NP-hard.

1|rj|Lmax Polynomially solvable cases:
• $r_j = r$ for all $j = 1, \dots, n$. Jackson’s rule: Schedule jobs in order of nondecreasing due dates.
• $d_j = d$ for all $j = 1, \dots, n$. Schedule jobs in order of nondecreasing release dates.
• $p_j = 1$ for all $j = 1, \dots, n$, and all release dates are integer. Horn’s rule: At any time schedule an available job with the smallest due date.
It is easy to prove the correctness of all these rules by using interchange arguments.
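A minimal Python sketch of the first and third rules, assuming jobs are indexed 0, ..., n−1 and given as plain lists; the function names `jackson_order` and `horn_unit` are hypothetical. Horn’s rule is simulated at integer time steps with a heap keyed by due date, jumping over idle periods to the next release date.

```python
import heapq

def jackson_order(d):
    """Case r_j = r: sequence the jobs by nondecreasing due date (Jackson's rule)."""
    return sorted(range(len(d)), key=lambda j: d[j])

def horn_unit(r, d):
    """Case p_j = 1 with integer r_j (Horn's rule).

    At every integer time t, start an available job (r_j <= t) with the
    smallest due date. Returns (start times, L_max)."""
    n = len(r)
    by_release = sorted(range(n), key=lambda j: r[j])
    heap, start = [], [None] * n   # heap holds (d_j, j) for released, unstarted jobs
    k, t = 0, 0
    while k < n or heap:
        if not heap:                       # machine idle: jump to the next release
            t = max(t, r[by_release[k]])
        while k < n and r[by_release[k]] <= t:
            j = by_release[k]
            heapq.heappush(heap, (d[j], j))
            k += 1
        _, j = heapq.heappop(heap)         # smallest due date among available jobs
        start[j] = t
        t += 1                             # unit processing time
    lmax = max(start[j] + 1 - d[j] for j in range(n))
    return start, lmax
```

For example, `horn_unit([0, 0, 1], [3, 1, 2])` processes jobs 1, 2, 0 in the time slots starting at 0, 1, 2 and returns `([2, 0, 1], 0)`.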

Precedence relations • The previous results may be extended to the corresponding problems with precedence relations between jobs. • In case dj = d we have to modify the release dates before applying the corresponding rule. • Other cases require a similar modification to the due dates.

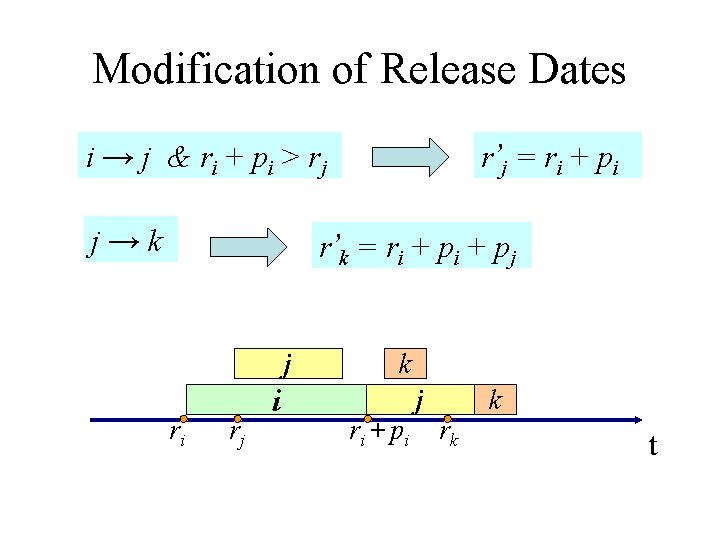

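Modification of Release Dates If $i \to j$ and $r_i + p_i > r_j$, job j cannot start before $r_i + p_i$, so we set $r'_j := r_i + p_i$. The modification propagates along the precedence relation: if also $j \to k$, then $r'_k := \max(r_k,\ r'_j + p_j)$. The set of feasible schedules does not change.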

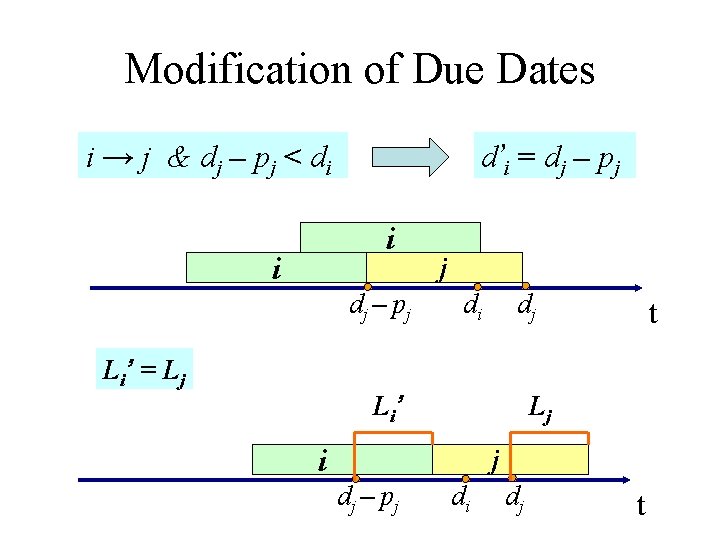

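Modification of Due Dates If $i \to j$ and $d_j - p_j < d_i$, we set $d'_i := d_j - p_j$. In every feasible schedule $C_i \le C_j - p_j$, so the lateness of i with respect to the new due date satisfies $L'_i = C_i - d'_i \le C_j - d_j = L_j$. Since also $L_i \le L'_i$, the modification does not change the $L_{\max}$-value of any feasible schedule.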
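Both modifications can be applied in one forward and one backward pass over a topological order. The sketch below is an illustration, not the lecture’s code: jobs are 0, ..., n−1, each precedence pair (i, j) means i → j, and `topological_order` and `modify_dates` are hypothetical names. Processing jobs in topological order guarantees that $r'_i$ is final before it is propagated to the successors of i, and the reverse order does the same for due dates.

```python
from collections import deque

def topological_order(n, edges):
    """Kahn's algorithm; each edge (i, j) means i -> j."""
    succ = [[] for _ in range(n)]
    indeg = [0] * n
    for i, j in edges:
        succ[i].append(j)
        indeg[j] += 1
    queue = deque(v for v in range(n) if indeg[v] == 0)
    order = []
    while queue:
        v = queue.popleft()
        order.append(v)
        for u in succ[v]:
            indeg[u] -= 1
            if indeg[u] == 0:
                queue.append(u)
    if len(order) != n:
        raise ValueError("precedence relation contains a cycle")
    return order, succ

def modify_dates(r, d, p, edges):
    """Return (r', d') with r'_j >= r'_i + p_i and d'_i <= d'_j - p_j for all i -> j."""
    order, succ = topological_order(len(p), edges)
    r2, d2 = list(r), list(d)
    for i in order:                        # forward pass over release dates
        for j in succ[i]:
            r2[j] = max(r2[j], r2[i] + p[i])
    for i in reversed(order):              # backward pass over due dates
        for j in succ[i]:
            d2[i] = min(d2[i], d2[j] - p[j])
    return r2, d2
```

For the chain 0 → 1 → 2 with $p = (2, 1, 3)$, $r = (0, 0, 0)$ and $d = (10, 4, 6)$, the call returns $r' = (0, 2, 3)$ and $d' = (2, 3, 6)$.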

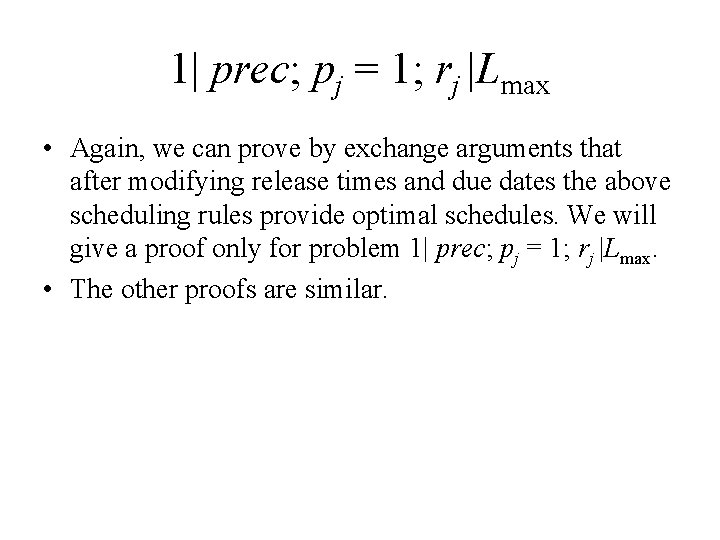

1| prec; pj = 1; rj |Lmax • Again, we can prove by exchange arguments that after modifying release times and due dates the above scheduling rules provide optimal schedules. We will give a proof only for problem 1| prec; pj = 1; rj |Lmax. • The other proofs are similar.

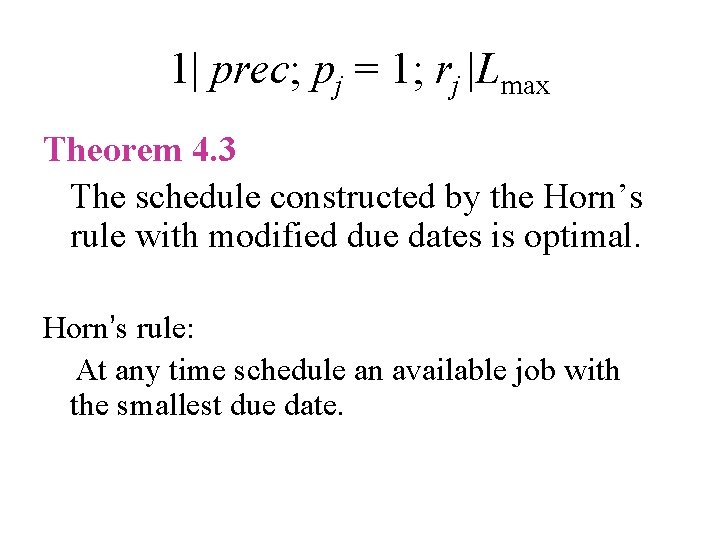

1| prec; pj = 1; rj |Lmax Theorem 4.3 The schedule constructed by Horn’s rule with modified due dates is optimal. Horn’s rule: At any time schedule an available job (released, with all predecessors finished) with the smallest modified due date.
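A possible Python sketch of Theorem 4.3’s algorithm, assuming integer release dates, an acyclic relation given as pairs (i, j) for i → j, and jobs 0, ..., n−1; `horn_prec_unit` is a hypothetical name. With $p_j = 1$ the due-date modification reads $d'_i := \min(d'_i, d'_j - 1)$. Release dates are not modified explicitly here: a job only becomes a candidate once all its predecessors are finished, which has the same effect.

```python
import heapq

def horn_prec_unit(r, d, edges):
    """1|prec; p_j = 1; r_j|L_max for integer r_j and an acyclic relation.

    Each edge (i, j) means i -> j. Returns (start times, L_max)."""
    n = len(r)
    succ = [[] for _ in range(n)]
    npred = [0] * n
    for i, j in edges:
        succ[i].append(j)
        npred[j] += 1
    # Topological order (Kahn's algorithm with a stack instead of a queue).
    left, stack, order = list(npred), [v for v in range(n) if npred[v] == 0], []
    while stack:
        v = stack.pop()
        order.append(v)
        for u in succ[v]:
            left[u] -= 1
            if left[u] == 0:
                stack.append(u)
    # Modified due dates: d'_i = min(d'_i, d'_j - 1) for every i -> j.
    dm = list(d)
    for i in reversed(order):
        for j in succ[i]:
            dm[i] = min(dm[i], dm[j] - 1)
    # pending: jobs whose predecessors are finished, keyed by release date;
    # avail: released pending jobs, keyed by modified due date.
    pending = [(r[j], j) for j in range(n) if npred[j] == 0]
    heapq.heapify(pending)
    avail, start, waiting, t = [], [None] * n, list(npred), 0
    for _ in range(n):
        if not avail and pending[0][0] > t:
            t = pending[0][0]              # idle until the next release
        while pending and pending[0][0] <= t:
            _, j = heapq.heappop(pending)
            heapq.heappush(avail, (dm[j], j))
        _, j = heapq.heappop(avail)        # Horn's rule on modified due dates
        start[j] = t
        t += 1                             # j completes at t; successors may start now
        for u in succ[j]:
            waiting[u] -= 1
            if waiting[u] == 0:
                heapq.heappush(pending, (r[u], u))
    lmax = max(start[j] + 1 - d[j] for j in range(n))
    return start, lmax
```

With $r = (0, 0, 0)$, $d = (5, 1, 2)$ and $0 \to 1$, the modified due date $d'_0 = 0$ makes job 0 go first, giving `([0, 1, 2], 1)`; plain Horn’s rule on the original due dates would start job 2 first and reach $L_{\max} = 2$.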

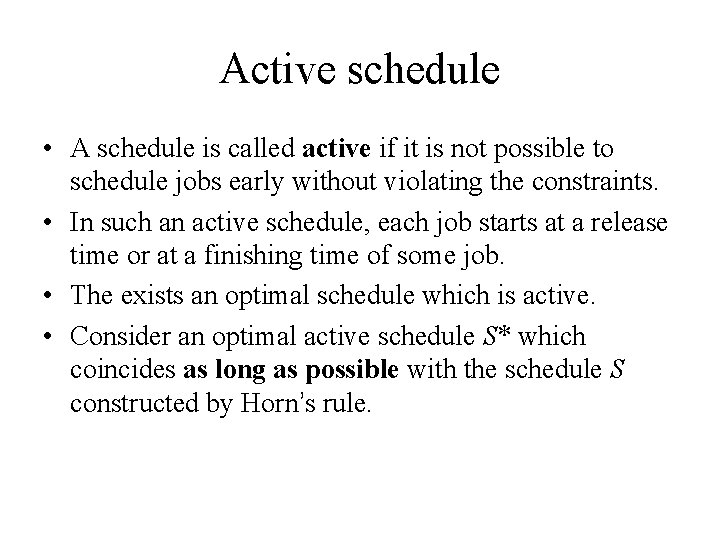

Active schedule
• A schedule is called active if it is not possible to schedule jobs earlier without violating the constraints.
• In such an active schedule, each job starts at a release time or at a finishing time of some job.
• There exists an optimal schedule which is active.
• Consider an optimal active schedule S* which coincides as long as possible with the schedule S constructed by Horn’s rule.

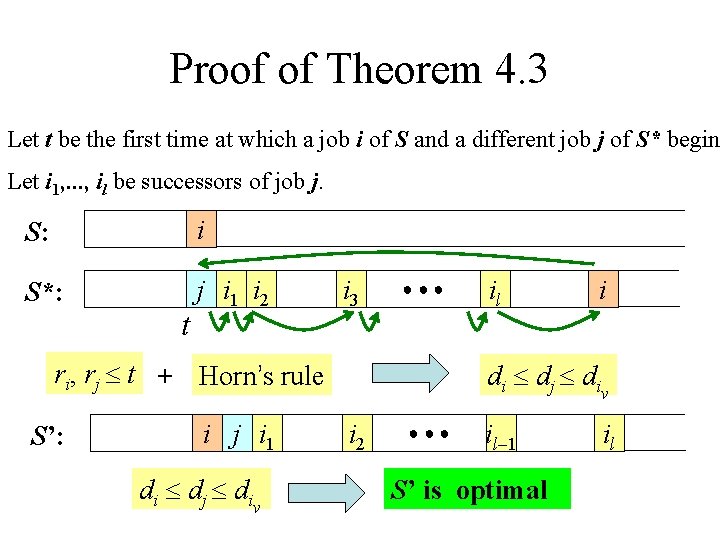

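Proof of Theorem 4.3 Let t be the first time at which a job i of S and a different job j of S* begin. Since S and S* coincide before t, both jobs are available at time t ($r_i, r_j \le t$ and all their predecessors are finished), so Horn’s rule gives $d_i \le d_j$. Let $i_1, \dots, i_l$ be the successors of job j that S* schedules between j and i, in this order:
S*: $j$ (starting at t), $i_1, i_2, \dots, i_l, i$
S': $i$ (starting at t), $j, i_1, \dots, i_{l-1}, i_l$
S' is obtained from S* by starting i at t and moving each job of the list $j, i_1, \dots, i_l$ into the time slot of the next job in the list $j, i_1, \dots, i_l, i$; all other jobs stay where they are. S' is feasible, and every moved job ν satisfies $d_\nu \ge d_j \ge d_i$ and finishes no later than i did in S*, so $L_\nu(S') \le L_i(S^*)$. Hence S' is optimal, and it coincides with S longer than S* does, a contradiction.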

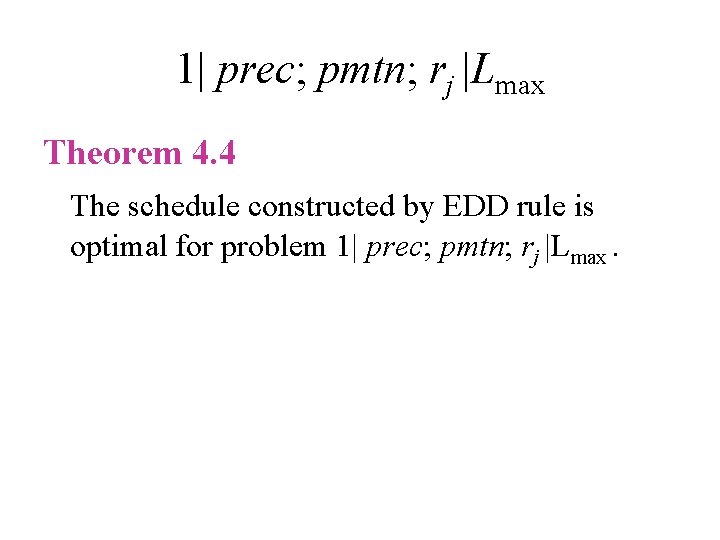

1| prec; pmtn; rj |Lmax Earliest Due Date Rule (EDD rule): Schedule the jobs starting at the smallest $r_j$-value. At each decision point t given by a release time or a finishing time of some job, schedule a job j with the following properties: $r_j \le t$, all its predecessors have been completely processed, and it has the smallest modified due date. (4.7)
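The EDD rule (4.7) can be simulated event by event. The following is a sketch under stated assumptions, not the lecture’s implementation: jobs are 0, ..., n−1 with positive processing times, the relation is acyclic and given as pairs (i, j) for i → j, and `edd_preemptive` is a hypothetical name. The running job is interrupted at the next release date so that the rule can be re-evaluated there.

```python
import heapq

def edd_preemptive(r, p, d, edges):
    """1|prec; pmtn; r_j|L_max by the EDD rule on modified due dates.

    Each edge (i, j) means i -> j (acyclic). Returns (completion times, L_max)."""
    n = len(p)
    succ = [[] for _ in range(n)]
    npred = [0] * n
    for i, j in edges:
        succ[i].append(j)
        npred[j] += 1
    left, stack, order = list(npred), [v for v in range(n) if npred[v] == 0], []
    while stack:                           # topological order
        v = stack.pop()
        order.append(v)
        for u in succ[v]:
            left[u] -= 1
            if left[u] == 0:
                stack.append(u)
    dm = list(d)                           # d'_i = min(d'_i, d'_j - p_j) for i -> j
    for i in reversed(order):
        for j in succ[i]:
            dm[i] = min(dm[i], dm[j] - p[j])
    pending = [(r[j], j) for j in range(n) if npred[j] == 0]
    heapq.heapify(pending)                 # predecessors finished, maybe not released
    avail, rem, C, waiting = [], list(p), [None] * n, list(npred)
    t, finished = 0, 0
    while finished < n:
        while pending and pending[0][0] <= t:
            _, j = heapq.heappop(pending)
            heapq.heappush(avail, (dm[j], j))
        if not avail:
            t = pending[0][0]              # idle until the next release
            continue
        j = avail[0][1]                    # smallest modified due date
        next_release = pending[0][0] if pending else float("inf")
        run = min(rem[j], next_release - t)  # until completion or next decision point
        t += run
        rem[j] -= run
        if rem[j] == 0:
            heapq.heappop(avail)
            C[j] = t
            finished += 1
            for u in succ[j]:
                waiting[u] -= 1
                if waiting[u] == 0:
                    heapq.heappush(pending, (r[u], u))
    return C, max(C[j] - d[j] for j in range(n))
```

For $r = (0, 1)$, $p = (4, 1)$, $d = (10, 2)$ without precedences, job 0 is preempted at time 1 in favour of job 1, and the call returns `([5, 2], 0)`.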

1| prec; pmtn; rj |Lmax Theorem 4.4 The schedule constructed by the EDD rule is optimal for problem 1| prec; pmtn; rj |Lmax.

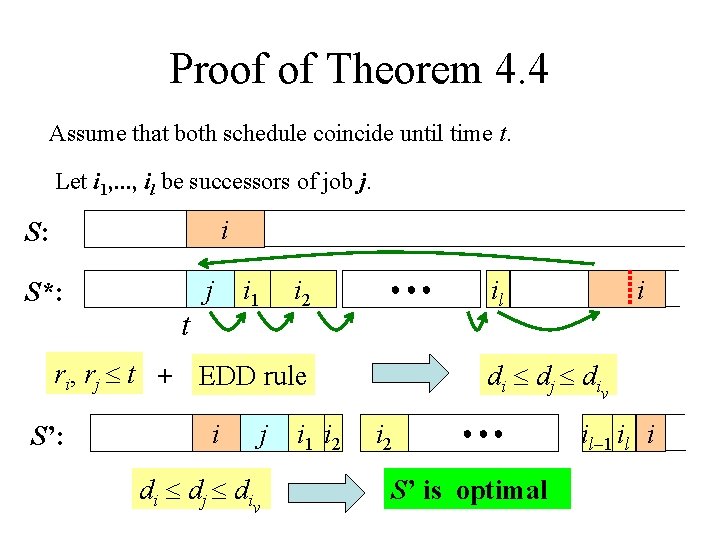

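Proof of Theorem 4.4 Assume that both schedules coincide until time t, and that at time t job i is processed in S while a different job j is processed in S*. Since $r_i, r_j \le t$, the EDD rule gives $d_i \le d_j$. Let $i_1, \dots, i_l$ be the successors of job j processed in S* between t and the completion time of i. S' is obtained from S* by using the time that S* assigns after t to $i, j, i_1, \dots, i_l$ (up to the completion of i) first for the remaining part of i and then for the remaining parts of $j, i_1, \dots, i_l$ in their original order:
S*: $j$ (at t), $i_1, i_2, \dots, i_l, i$
S': $i$ (from t on), $j, i_1, \dots, i_{l-1}, i_l$
No job other than i is moved earlier, so S' is feasible. Every job ν whose completion time changes satisfies $d_\nu \ge d_j \ge d_i$ and $C_\nu(S') \le C_i(S^*)$, so $L_\nu(S') \le L_i(S^*)$. Hence S' is optimal, and it coincides with S longer than S* does.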

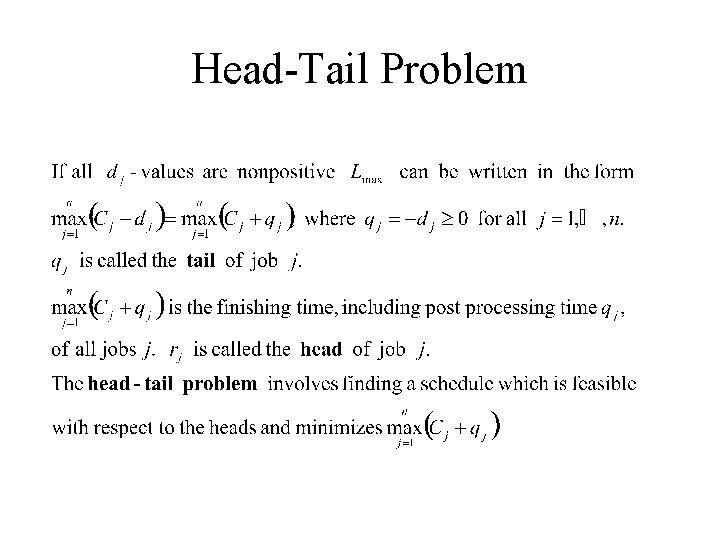

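Head-Tail Problem
In the head-tail problem each job j has a head $r_j$, a processing time $p_j$ and a tail $q_j$, and the goal is to minimize $\max_j (C_j + q_j)$. Setting $q_j := K - d_j$ for a constant K turns $L_{\max}$-minimization into this problem, since $C_j + q_j = L_j + K$.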

Largest Tail Rule Corollary 4.5 An optimal preemptive schedule for the one-machine head-tail problem with precedence constraints can be constructed in $O(n^2)$ time using the following rule: At each time t given by a head or by a finishing time of some job, schedule a precedence-feasible job j with $r_j \le t$ which has a largest tail.

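1| prec; pj = 1|Lmax
• The first step is to modify the due dates in such a way that they are compatible with the precedence relations.
• Additionally, we assume that all modified due dates are nonnegative and that the smallest one equals 0 (subtracting the same constant from all due dates does not change the set of optimal schedules). So we have $L_{\max} \ge 0$, because a job with due date 0 finishes at time 1 or later.
• Using the modified due dates $d_j$, an optimal schedule can be calculated in $O(n)$ time.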


Two ideas.
• The jobs are processed in $[0, n]$. This implies that no job j with $d_j \ge n$ is late, even if it is processed as the last job. Because $L_{\max} \ge 0$, these jobs have no influence on the $L_{\max}$-value.
• To sort the jobs we may use a bucket sorting method, i.e. we construct the sets $B_k = \{ j \mid d_j = k \}$ for $k = 0, \dots, n-1$ and $B_n = \{ j \mid d_j \ge n \}$.
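The two ideas fit into a short Python sketch, assuming integer due dates, jobs 0, ..., n−1 and precedence pairs (i, j) for i → j; `unit_prec_lmax` is a hypothetical name. It shifts the modified due dates so that the smallest one is 0, then fills the buckets in topological order. Jobs in different buckets are ordered correctly because $d'_i < d'_j$ whenever $i \to j$, and the topological insertion keeps jobs that share bucket $B_n$ precedence-feasible. Including the modification step, the running time is $O(n + |\text{edges}|)$.

```python
from collections import deque

def unit_prec_lmax(d, edges):
    """1|prec; p_j = 1|L_max for integer due dates, in O(n + |edges|) time.

    Each edge (i, j) means i -> j (acyclic). Returns (sequence, L_max)."""
    n = len(d)
    succ = [[] for _ in range(n)]
    indeg = [0] * n
    for i, j in edges:
        succ[i].append(j)
        indeg[j] += 1
    order, queue = [], deque(v for v in range(n) if indeg[v] == 0)
    while queue:                           # Kahn's algorithm
        v = queue.popleft()
        order.append(v)
        for u in succ[v]:
            indeg[u] -= 1
            if indeg[u] == 0:
                queue.append(u)
    dm = list(d)                           # modified due dates: d'_i <= d'_j - 1
    for i in reversed(order):
        for j in succ[i]:
            dm[i] = min(dm[i], dm[j] - 1)
    shift = min(dm)                        # smallest modified due date becomes 0
    buckets = [[] for _ in range(n + 1)]   # B_0, ..., B_{n-1}, and B_n for d'_j >= n
    for j in order:                        # topological insertion keeps B_n feasible
        buckets[min(dm[j] - shift, n)].append(j)
    seq = [j for bucket in buckets for j in bucket]
    lmax = max(pos + 1 - d[j] for pos, j in enumerate(seq))  # original due dates
    return seq, lmax
```

For $d = (10, 9, 0)$ with $0 \to 1$, jobs 0 and 1 both land in $B_3$ and keep their precedence order, giving `([2, 0, 1], 1)`.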

1|tree| Σwj Cj
• We have to schedule jobs with arbitrary processing times on a single machine so that a weighted sum of completion times is minimized. The processing times are assumed to be positive. Precedence constraints are given by a tree.
• We first assume that the tree is an outtree (i.e. each node in the tree has at most one predecessor).
• Before presenting an algorithm for outtrees, we will prove some basic properties of optimal schedules.

Notation For each job $i = 1, \dots, n$, define $q_i = w_i/p_i$ and let S(i) be the set of (not necessarily immediate) successors of i including i. For a set of jobs $I \subseteq \{1, \dots, n\}$ define $w(I) = \sum_{i \in I} w_i$, $p(I) = \sum_{i \in I} p_i$ and $q(I) = w(I)/p(I)$. Two subsets $I, J \subseteq \{1, \dots, n\}$ are parallel ($I \sim J$) if, for all $i \in I$, $j \in J$, neither i is a successor of j nor vice versa. Parallel sets must be disjoint. In the case $\{i\} \sim \{j\}$ we simply write $i \sim j$.

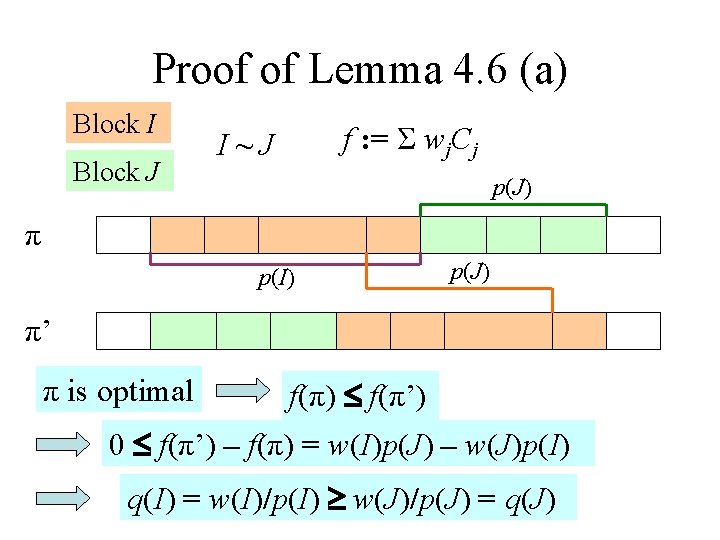

Property of Optimal Schedule (1) Lemma 4.6 Let π be an optimal sequence and let I, J represent two blocks (sets of jobs processed consecutively) of π such that I is scheduled immediately before J. Let π’ be the sequence we get from π by swapping I and J. Then
(a) $I \sim J$ implies $q(I) \ge q(J)$;
(b) if $I \sim J$ and $q(I) = q(J)$, then π’ is also optimal.

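Proof of Lemma 4.6 (a) Let $f := \sum w_j C_j$. Because $I \sim J$, the sequence π’ is feasible. Compared with π, every job of I finishes p(J) time units later in π’, every job of J finishes p(I) time units earlier, and all other jobs are unaffected. Since π is optimal, $f(\pi) \le f(\pi')$, so
$$0 \le f(\pi') - f(\pi) = w(I)\,p(J) - w(J)\,p(I),$$
which gives $q(I) = w(I)/p(I) \ge w(J)/p(J) = q(J)$.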

Proof of Lemma 4.6 (b) If, in addition, $q(I) = q(J)$, then $w(I)\,p(J) = w(J)\,p(I)$, hence $f(\pi') = f(\pi)$ and π’ is also optimal.
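A small numeric check of the exchange formula, as an illustration only: the four jobs below are assumed to have no precedence constraints, so any two blocks are parallel, and `weighted_completion` and `swap_difference` are hypothetical helper names.

```python
def weighted_completion(seq, p, w):
    """f(pi) = sum of w_j * C_j when the jobs run in the order seq without idle time."""
    t, total = 0, 0
    for j in seq:
        t += p[j]
        total += w[j] * t
    return total

def swap_difference(I, J, p, w):
    """f(pi') - f(pi) = w(I) p(J) - w(J) p(I) for adjacent blocks, I before J."""
    w_I, p_I = sum(w[j] for j in I), sum(p[j] for j in I)
    w_J, p_J = sum(w[j] for j in J), sum(p[j] for j in J)
    return w_I * p_J - w_J * p_I

p, w = [3, 1, 2, 4], [2, 5, 1, 3]
pi, pi_swapped = [0, 1, 2, 3], [0, 2, 3, 1]   # block I = [1], block J = [2, 3]
print(weighted_completion(pi, p, w), weighted_completion(pi_swapped, p, w))  # 62 88
print(swap_difference([1], [2, 3], p, w))  # 26
```

Here $q(I) = 5 > q(J) = 4/6$, and swapping the blocks increases f by exactly $w(I)p(J) - w(J)p(I) = 30 - 4 = 26$, as part (a) predicts.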

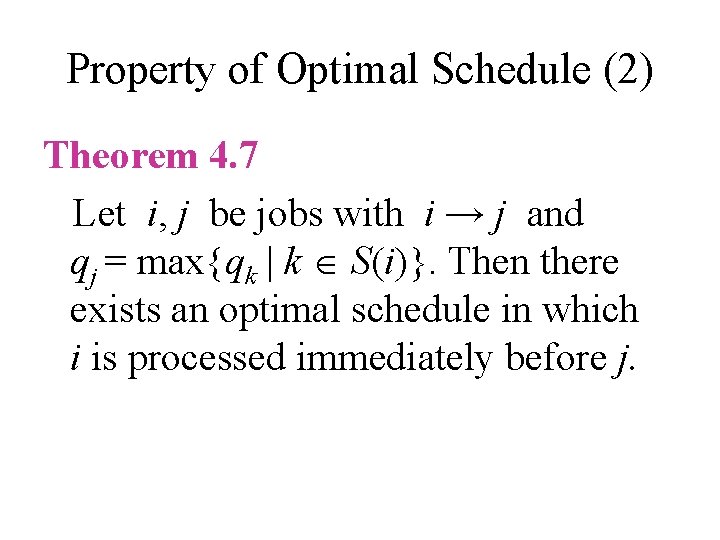

Property of Optimal Schedule (2) Theorem 4.7 Let i, j be jobs with $i \to j$ and $q_j = \max\{q_k \mid k \in S(i)\}$. Then there exists an optimal schedule in which i is processed immediately before j.

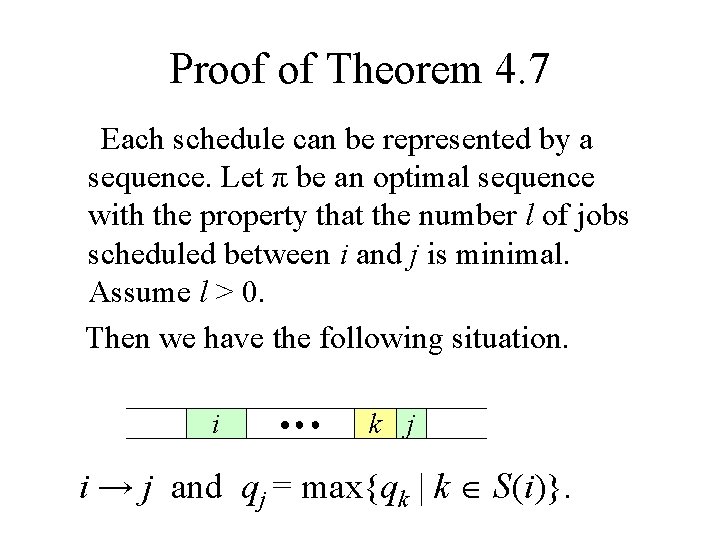

Proof of Theorem 4.7 Each schedule can be represented by a sequence. Let π be an optimal sequence with the property that the number l of jobs scheduled between i and j is minimal, and assume l > 0. Let k be the job scheduled immediately before j:
π: ..., i, ..., k, j, ...   (recall $i \to j$ and $q_j = \max\{q_m \mid m \in S(i)\}$)

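Case 1: $k \in S(i)$. Since $q_j = \max\{q_m \mid m \in S(i)\}$, we have $q(k) \le q(j)$. In an outtree the predecessors of j are i and the predecessors of i, so $k \in S(i)$, $k \ne i$, is not a predecessor of j; and k is not a successor of j because it precedes j in π. Thus $k \sim j$, and Lemma 4.6 (a) gives $q(k) \ge q(j)$, i.e. $q(k) = q(j)$. By Lemma 4.6 (b), the sequence π’: ..., i, ..., j, k, ... obtained by swapping k and j is also optimal, and it has fewer jobs between i and j, a contradiction.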

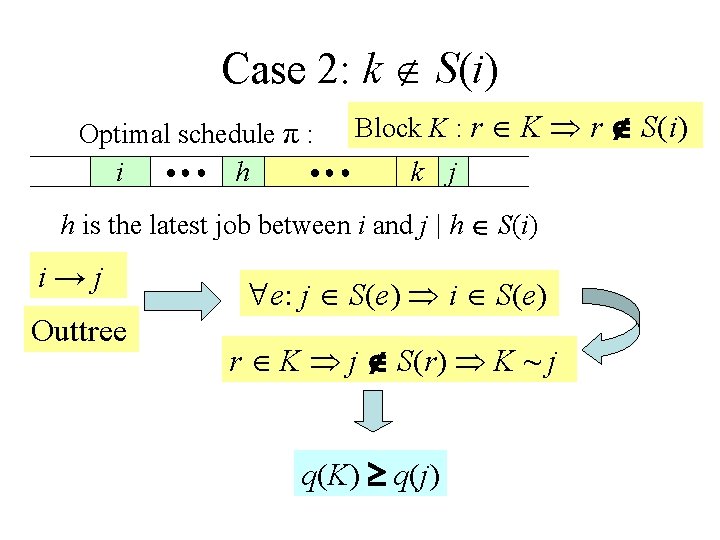

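Case 2: $k \notin S(i)$. Let h be the last job of S(i) that π schedules at or after i and before j (possibly h = i), and let K be the block of jobs between h and j. Then $k \in K$ and $r \notin S(i)$ for all $r \in K$:
π: ..., i, ..., h, K, j, ...
• $K \sim j$: in an outtree the predecessors of j are i and the predecessors of i. A job $r \in K$ is not in S(i) and is scheduled after i, so it is neither i nor a predecessor of i; it precedes j, so it is not a successor of j. Lemma 4.6 (a) gives $q(K) \ge q(j)$.
• $h \sim K$: $S(h) \subseteq S(i)$, so no $r \in K$ is a successor of h, and h precedes K. Lemma 4.6 (a) gives $q(h) \ge q(K)$.
• $h \in S(i)$, so $q(h) \le q(j)$ by the choice of j.
Hence $q(j) \ge q(h) \ge q(K) \ge q(j)$, i.e. $q(K) = q(j)$, and by Lemma 4.6 (b) the sequence π’: ..., i, ..., h, j, K, ... is also optimal. It has fewer jobs between i and j, a contradiction.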

Idea of Algorithm The conditions of Theorem 4.7 are satisfied if we choose a job j different from the root with maximal $q_j$-value, along with its unique father i. Since there exists an optimal schedule in which i is processed immediately before j, we merge nodes i and j and make all sons of j additional sons of i. The new node i, which represents the subsequence $\pi_i$: i, j, will have the label $q(i) := q(J_i)$, with $J_i = \{i, j\}$. Note that for a son of j, its new father i (represented by $J_i$) can be identified by looking for the set $J_i$ which contains j.

Merging Procedure The merging process is applied recursively.
• In the general step, each node i represents a set of jobs $J_i$ and a corresponding sequence $\pi_i$ of the jobs in $J_i$, where i is the first job in this sequence. We select a vertex j different from the root with maximal $q(j)$-value. Let f be the unique father of j in the original outtree. Then we have to find a node i of the current tree with $f \in J_i$. We merge j and i, replacing $J_i$ and $\pi_i$ by $J_i \cup J_j$ and $\pi_i \circ \pi_j$, where $\pi_i \circ \pi_j$ is the concatenation of the sequences $\pi_i$ and $\pi_j$.
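A straightforward $O(n^2)$ Python sketch of the Merging Procedure, assuming jobs 0, ..., n−1, positive processing times, and the outtree given by a list `parent` with `None` for the root; `outtree_sequence` and the `owner` array (which finds the node i with $f \in J_i$) are illustrative choices, not taken from the lecture. Each of the at most n−1 merges scans the current nodes once and relabels at most n jobs.

```python
def outtree_sequence(parent, p, w):
    """Merging procedure for 1|outtree|sum w_j C_j.

    parent[j] is the father of job j in the outtree (None for the root);
    processing times must be positive. Returns the sequence pi_root."""
    n = len(p)
    root = parent.index(None)
    seq = {v: [v] for v in range(n)}      # pi_v of every current node v
    W, P = list(w), list(p)               # w(J_v) and p(J_v)
    owner = list(range(n))                # owner[x] = current node v with x in J_v
    active = set(range(n)) - {root}       # current nodes other than the root
    while active:
        j = max(active, key=lambda v: W[v] / P[v])   # maximal q(J_v)
        i = owner[parent[j]]              # node i with f in J_i, f = original father of j
        for x in seq[j]:
            owner[x] = i
        seq[i].extend(seq.pop(j))         # pi_i := pi_i o pi_j, J_i := J_i u J_j
        W[i] += W[j]
        P[i] += P[j]
        active.discard(j)
    return seq[root]
```

For example, with root 0, sons 1 and 2 of the root, son 3 of job 1, unit processing times and $w = (1, 1, 4, 3)$, `outtree_sequence([None, 0, 0, 1], [1, 1, 1, 1], [1, 1, 4, 3])` merges 2 into the root, then 3 into 1, then {1, 3} into the root, and returns `[0, 2, 1, 3]`.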

Optimality Theorem 4.8 The Merging Procedure can be implemented in polynomial time and calculates an optimal sequence for the 1|outtree| Σwj Cj problem.

Proof of Theorem 4.8 We prove optimality by induction on the number of jobs. Clearly the procedure is correct if we have only one job. Let P be a problem with n jobs. Assume that i, j are the first jobs merged by the algorithm. Let P’ be the resulting problem with $n - 1$ jobs, where i and j are replaced by a single job $I := \{i, j\}$ with $w(I) = w(i) + w(j)$ and $p(I) = p(i) + p(j)$.

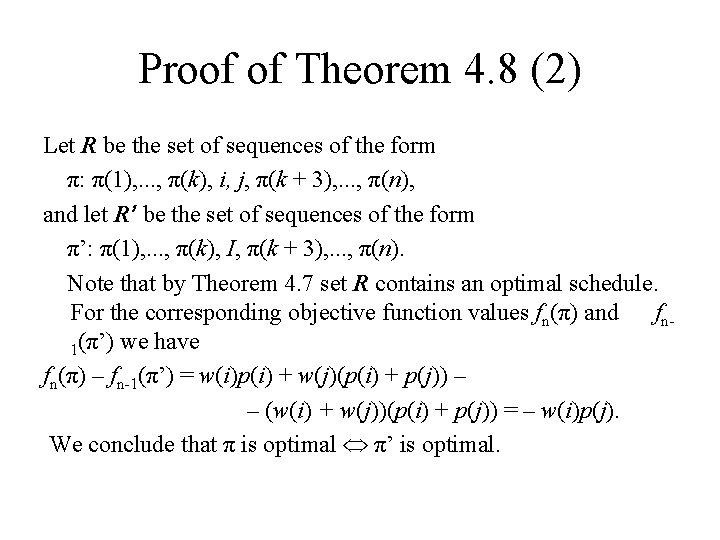

Proof of Theorem 4.8 (2) Let R be the set of sequences of the form π: π(1), ..., π(k), i, j, π(k+3), ..., π(n), and let R’ be the set of sequences of the form π’: π(1), ..., π(k), I, π(k+3), ..., π(n). Note that by Theorem 4.7 the set R contains an optimal schedule. For the corresponding objective function values $f_n(\pi)$ and $f_{n-1}(\pi')$, all jobs other than i, j and I contribute equally, and the common start time of i and I cancels, so
$$f_n(\pi) - f_{n-1}(\pi') = w(i)p(i) + w(j)\bigl(p(i) + p(j)\bigr) - \bigl(w(i) + w(j)\bigr)\bigl(p(i) + p(j)\bigr) = -w(i)p(j).$$
Because this difference is a constant, we conclude that π is optimal if and only if π’ is optimal.

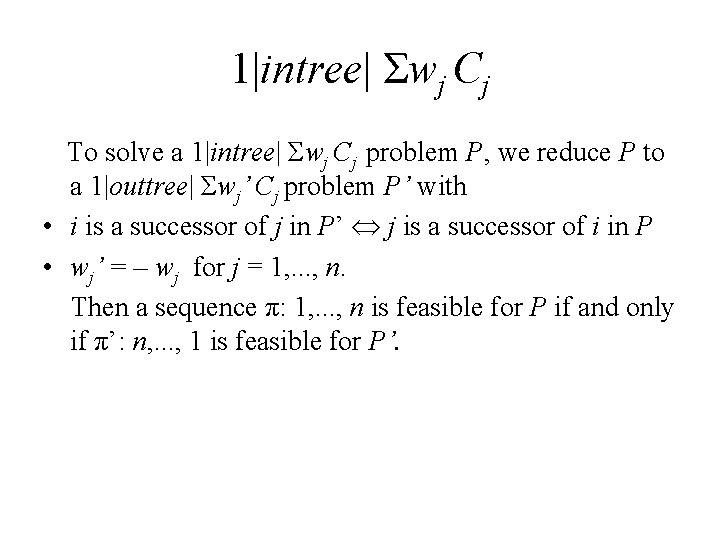

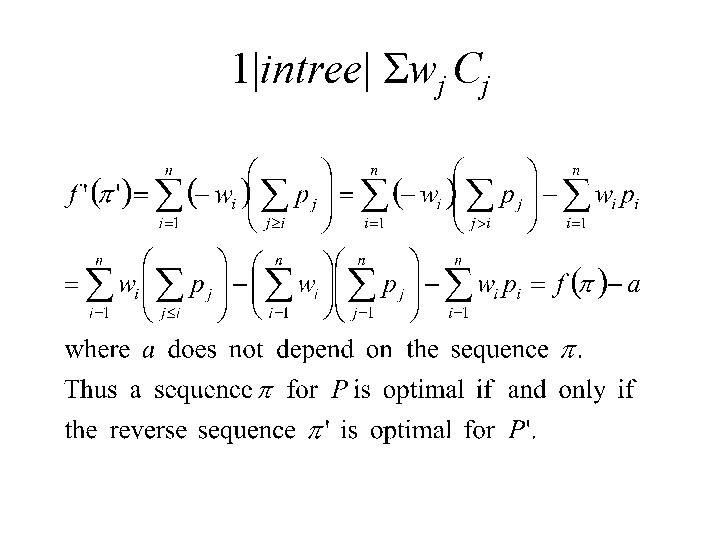

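1|intree| Σwj Cj To solve a 1|intree| Σwj Cj problem P, we reduce P to a 1|outtree| Σwj’ Cj problem P’ with
• i is a successor of j in P’ ⇔ j is a successor of i in P,
• $w'_j = -w_j$ for $j = 1, \dots, n$.
Then a sequence π: 1, ..., n is feasible for P if and only if π’: n, ..., 1 is feasible for P’. Moreover, the completion time of j in π’ is $\sum_k p_k - C_j(\pi) + p_j$, so $\sum_j w'_j C_j(\pi') = \sum_j w_j C_j(\pi) - \sum_j w_j \bigl(\sum_k p_k + p_j\bigr)$. The two objective values differ by a constant, hence π is optimal for P if and only if π’ is optimal for P’.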
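The reduction can be sketched in Python as follows, assuming the intree is given by a list `succ` (the unique successor of each job, `None` for the last job) and that the Merging Procedure remains valid for negative weights, as the reduction requires; `merge_outtree` and `intree_sequence` are hypothetical names. The merging procedure is repeated compactly so that the sketch is self-contained. Reversing all arcs makes the successor of j in P its father in P’, so `succ` can be used directly as the parent list.

```python
def merge_outtree(parent, p, w):
    """Merging procedure for 1|outtree|sum w_j C_j (weights may be negative).

    parent[j] is the father of j (None for the root). Returns a job sequence."""
    root = parent.index(None)
    seq = {v: [v] for v in range(len(p))}
    W, P, owner = list(w), list(p), list(range(len(p)))
    active = set(seq) - {root}
    while active:
        j = max(active, key=lambda v: W[v] / P[v])   # maximal q(J_v)
        i = owner[parent[j]]
        seq[i].extend(seq.pop(j))                    # pi_i := pi_i o pi_j
        for x in seq[i]:
            owner[x] = i
        W[i] += W[j]
        P[i] += P[j]
        active.discard(j)
    return seq[root]

def intree_sequence(succ, p, w):
    """1|intree|sum w_j C_j: negate the weights, solve the reversed (outtree)
    problem, and reverse the resulting sequence.
    succ[j] is the unique successor of j (None for the last job)."""
    return merge_outtree(succ, p, [-x for x in w])[::-1]
```

For two jobs 0 and 1 that both precede job 2, with $p = (1, 2, 1)$ and $w = (1, 4, 1)$, `intree_sequence([2, 2, None], [1, 2, 1], [1, 4, 1])` returns `[1, 0, 2]`, which has objective value 15 against 17 for `[0, 1, 2]`.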

1|intree| Σwj Cj

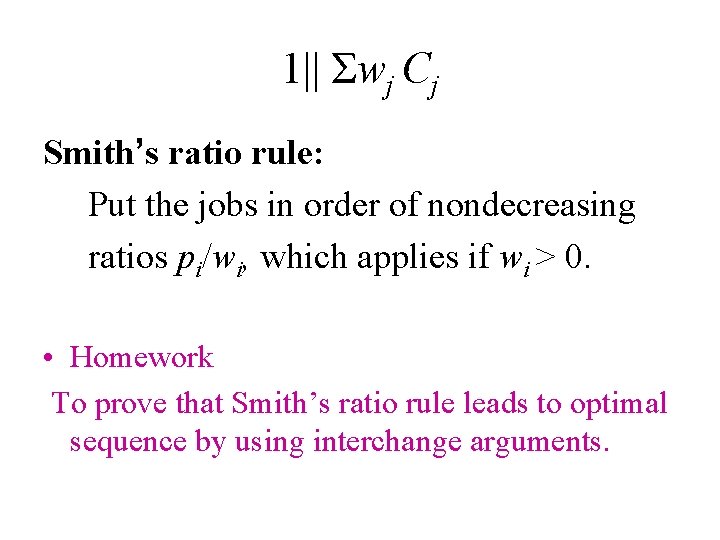

1|| Σwj Cj Smith’s ratio rule: Put the jobs in order of nondecreasing ratios $p_i/w_i$, which applies if $w_i > 0$.
• Homework: Prove by using interchange arguments that Smith’s ratio rule leads to an optimal sequence.

1|| ΣCj Smith’s rule (SPT-rule): Put the jobs in order of nondecreasing processing times.
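Both rules are plain sorts. A minimal sketch, assuming integer processing times and positive integer weights so that the ratios can be compared exactly with `fractions.Fraction`; the function names are hypothetical.

```python
from fractions import Fraction

def smith_ratio_order(p, w):
    """1||sum w_j C_j with all w_j > 0: nondecreasing ratios p_j / w_j."""
    return sorted(range(len(p)), key=lambda j: Fraction(p[j], w[j]))

def spt_order(p):
    """1||sum C_j: nondecreasing processing times (the case w_j = 1)."""
    return sorted(range(len(p)), key=lambda j: p[j])
```

For $p = (3, 1, 2)$ and $w = (1, 1, 4)$ the ratios are $3, 1, 1/2$, so `smith_ratio_order` returns `[2, 1, 0]`, while `spt_order([3, 1, 2])` returns `[1, 2, 0]`.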

1| pmtn; rj | ΣCj Modified Smith’s rule: At each release time or finishing time of a job, schedule an unfinished job which is available and has the smallest remaining processing time.
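A possible event-driven sketch of the modified Smith’s rule (shortest remaining processing time), assuming jobs 0, ..., n−1 with positive processing times; `srpt_total_completion` is a hypothetical name. Decisions are re-evaluated at every release date and every completion, as the rule prescribes.

```python
import heapq

def srpt_total_completion(r, p):
    """1|pmtn; r_j|sum C_j via the modified Smith's rule.

    Returns (completion times, sum of completion times)."""
    n = len(p)
    jobs = sorted(range(n), key=lambda j: r[j])
    C = [None] * n
    heap, k, t = [], 0, 0          # heap of (remaining processing time, job)
    while k < n or heap:
        if not heap:               # machine idle: jump to the next release
            t = max(t, r[jobs[k]])
        while k < n and r[jobs[k]] <= t:
            heapq.heappush(heap, (p[jobs[k]], jobs[k]))
            k += 1
        rem, j = heapq.heappop(heap)        # smallest remaining processing time
        next_release = r[jobs[k]] if k < n else float("inf")
        run = min(rem, next_release - t)    # until completion or next release
        t += run
        if run == rem:
            C[j] = t
        else:
            heapq.heappush(heap, (rem - run, j))
    return C, sum(C)
```

For $r = (0, 1)$ and $p = (4, 1)$, job 0 is preempted at time 1, job 1 finishes at 2 and job 0 at 5, so the call returns `([5, 2], 7)`.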

Optimality Theorem 4.9 A schedule constructed by the modified Smith’s rule is optimal for problem 1| pmtn; rj | ΣCj.

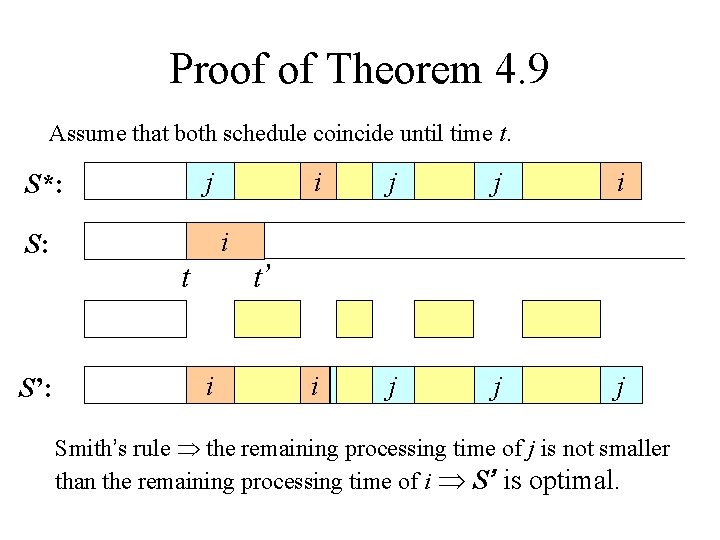

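Proof of Theorem 4.9 Assume that the schedule S constructed by the modified Smith’s rule and an optimal schedule S* coincide until time t, and that at time t job i is processed in S while a different job j is processed in S*. By Smith’s rule, the remaining processing time of j is not smaller than the remaining processing time of i. S’ is obtained from S* by using the time slots that S* assigns to i and j after t first for the remaining part of i and then for the remaining part of j. The later of the two completion times does not change, and i now finishes no later than $\min\{C_i(S^*), C_j(S^*)\}$, so $C_i(S') + C_j(S') \le C_i(S^*) + C_j(S^*)$. Hence S’ is optimal, and it coincides with S longer than S* does.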

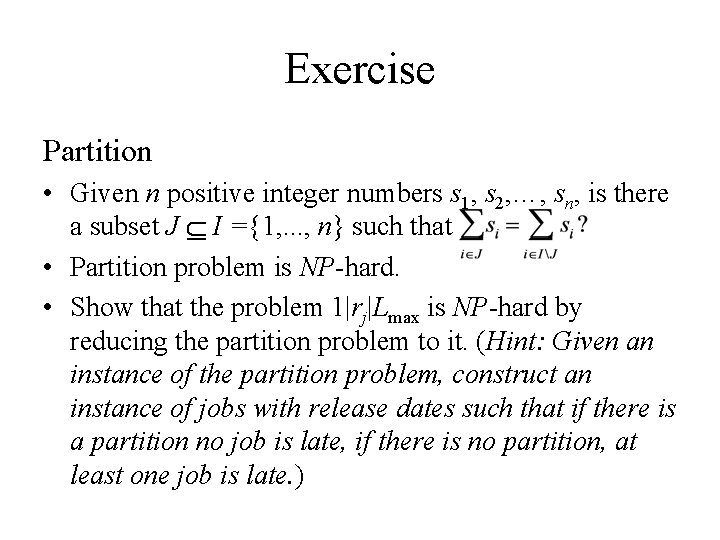

Exercise Partition
• Given n positive integer numbers $s_1, s_2, \dots, s_n$, is there a subset $J \subseteq I = \{1, \dots, n\}$ such that $\sum_{j \in J} s_j = \sum_{j \in I \setminus J} s_j$?
• The partition problem is NP-hard.
• Show that the problem 1|rj|Lmax is NP-hard by reducing the partition problem to it. (Hint: Given an instance of the partition problem, construct an instance of jobs with release dates such that if there is a partition, no job is late, and if there is no partition, at least one job is late.)

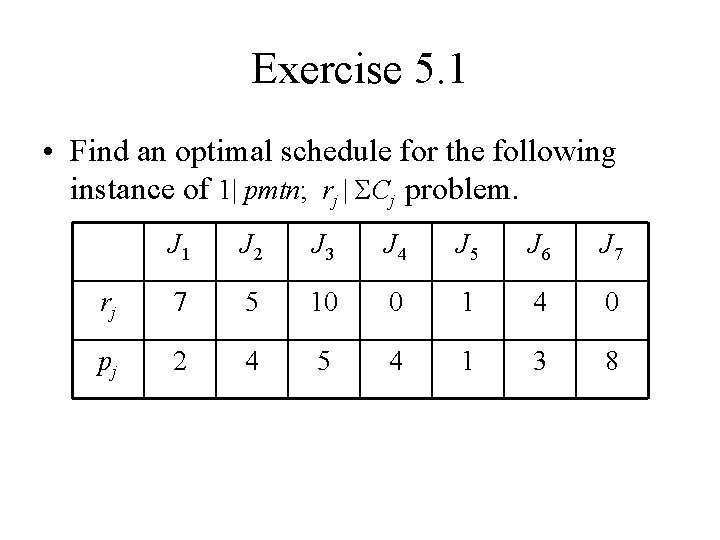

Exercise 5.1 • Find an optimal schedule for the following instance of the 1| pmtn; rj | ΣCj problem.

|    | J1 | J2 | J3 | J4 | J5 | J6 | J7 |
|----|----|----|----|----|----|----|----|
| rj | 7  | 5  | 10 | 0  | 1  | 4  | 0  |
| pj | 2  | 4  | 5  | 4  | 1  | 3  | 8  |

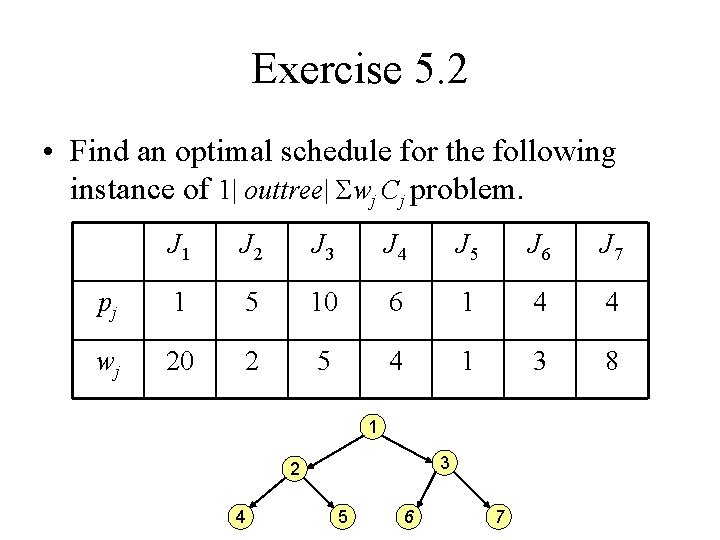

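Exercise 5.2 • Find an optimal schedule for the following instance of the 1| outtree| Σwj Cj problem.

|    | J1 | J2 | J3 | J4 | J5 | J6 | J7 |
|----|----|----|----|----|----|----|----|
| pj | 1  | 5  | 10 | 6  | 1  | 4  | 4  |
| wj | 20 | 2  | 5  | 4  | 1  | 3  | 8  |

(The outtree on the jobs 1, ..., 7 was given in a figure.)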

Running time • The complexity of the algorithm is $O(n^2)$. This can be seen as follows. If we exclude the recursive calls in Step 7, the number of steps for the Procedure Decompose is $O(|S|)$. Thus, for the number $f(n)$ of computational steps we have the recursion $f(n) = cn + \sum f(n_i)$, where $n_i$ is the number of jobs in the i-th block and $\sum n_i \le n$.
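One way to finish the estimate, assuming (this is not stated on the slide) that every block is strictly smaller than the whole set, i.e. $n_i \le n - 1$, and using induction on n with the hypothesis $f(m) \le c\,m^2$ for all $m < n$ (without recursive calls, $f(n) = cn \le cn^2$):

$$
f(n) = cn + \sum_i f(n_i) \le cn + c\sum_i n_i^2 \le cn + c\,(n-1)\sum_i n_i \le cn + c\,(n-1)\,n = c\,n^2 .
$$

The second inequality uses $n_i \le n - 1$, and the third uses $\sum_i n_i \le n$.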
