SIMS 247 Information Visualization and Presentation Marti Hearst

- Slides: 32

Marti Hearst, Nov 30, 2005

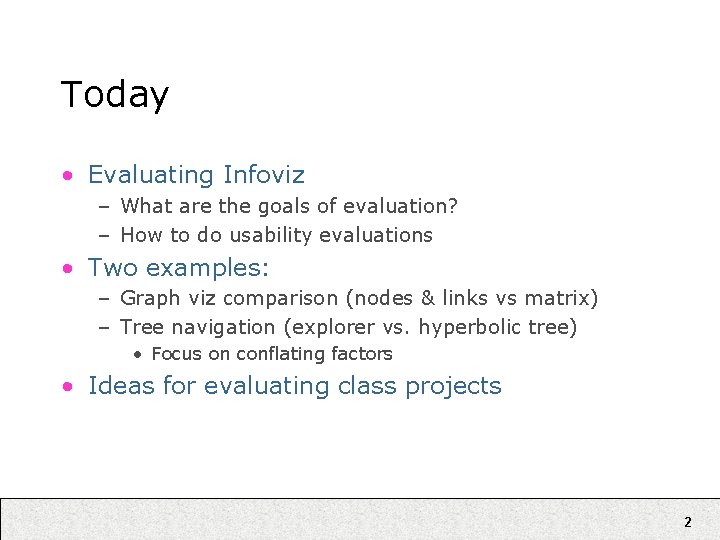

Today

- Evaluating infoviz
  - What are the goals of evaluation?
  - How to do usability evaluations
- Two examples:
  - Graph viz comparison (nodes & links vs. matrix)
  - Tree navigation (Explorer vs. hyperbolic tree)
- Focus on confounding factors
- Ideas for evaluating class projects

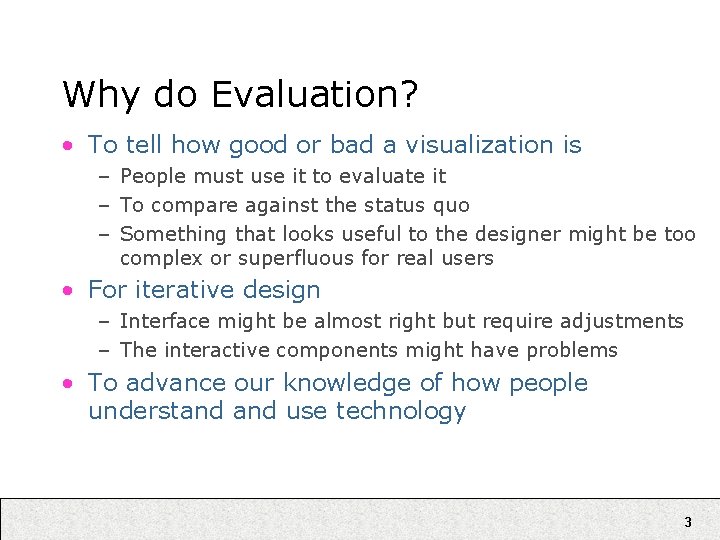

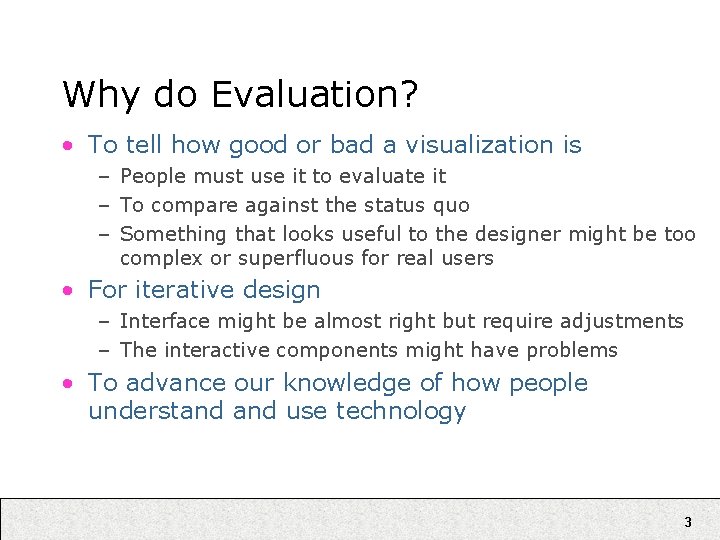

Why do Evaluation?

- To tell how good or bad a visualization is
  - People must use it to evaluate it
  - To compare against the status quo
  - Something that looks useful to the designer might be too complex or superfluous for real users
- For iterative design
  - Interface might be almost right but require adjustments
  - The interactive components might have problems
- To advance our knowledge of how people understand and use technology

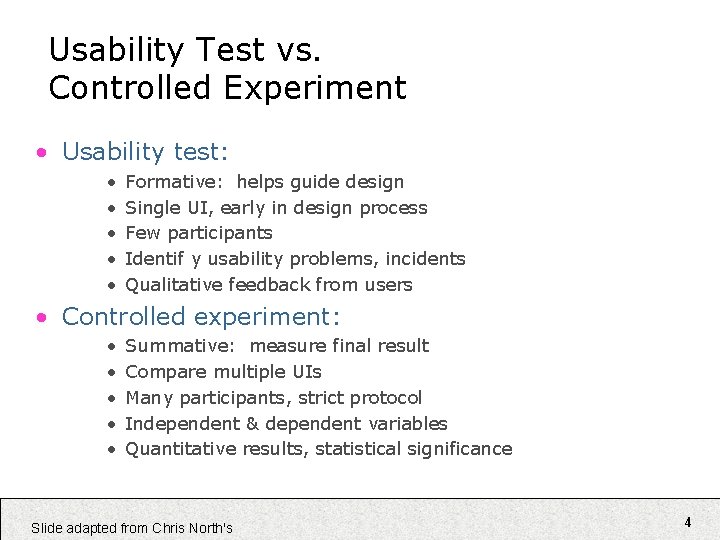

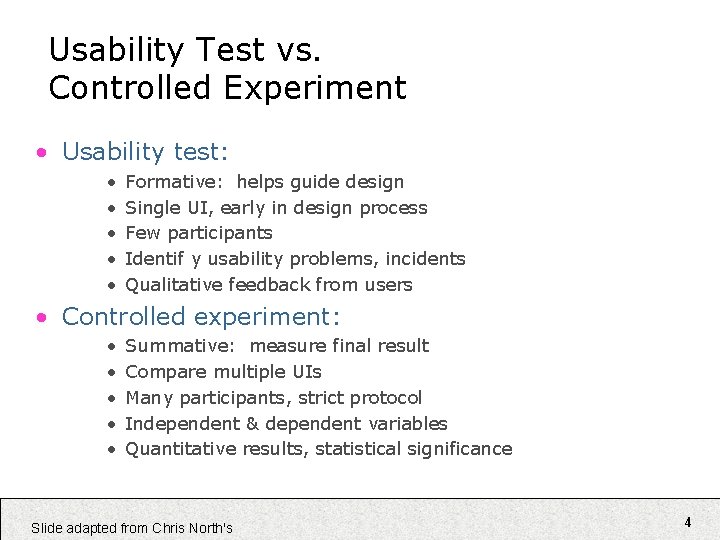

Usability Test vs. Controlled Experiment

- Usability test:
  - Formative: helps guide design
  - Single UI, early in design process
  - Few participants
  - Identify usability problems, incidents
  - Qualitative feedback from users
- Controlled experiment:
  - Summative: measure final result
  - Compare multiple UIs
  - Many participants, strict protocol
  - Independent & dependent variables
  - Quantitative results, statistical significance

Slide adapted from Chris North.

Controlled Experiments

Slide adapted from Chris North.

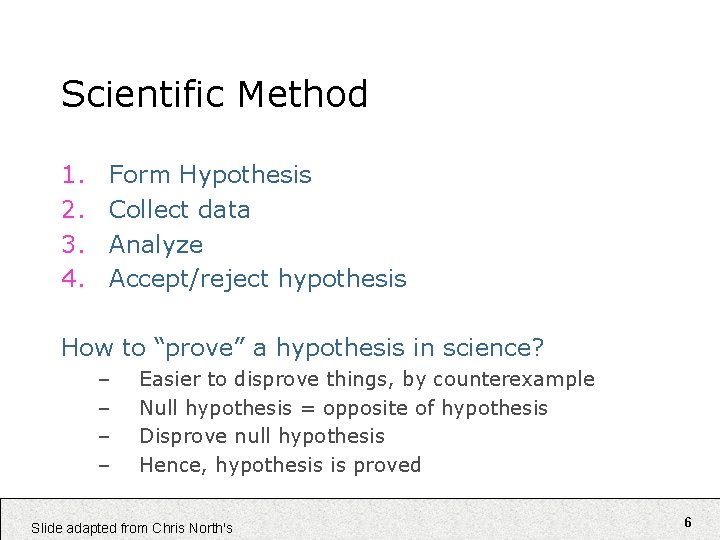

Scientific Method

1. Form hypothesis
2. Collect data
3. Analyze
4. Accept/reject hypothesis

How to “prove” a hypothesis in science?

- Easier to disprove things, by counterexample
- Null hypothesis = opposite of hypothesis (e.g., “the tools do not differ”)
- Disprove (reject) the null hypothesis
- Hence, the hypothesis is supported: “proved” in this statistical sense, not with certainty

Slide adapted from Chris North.
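In symbols, for a comparison of two tools A and B on mean task time, the logic reads as below. The notation (μ_A, μ_B for the mean times, α for the significance level) is ours, not the slide's.

$$
\begin{aligned}
H_0 &: \mu_A = \mu_B \quad \text{(no difference between tools)} \\
H_1 &: \mu_A \neq \mu_B \\
\text{reject } H_0 &\iff p < \alpha \quad (\text{commonly } \alpha = 0.05)
\end{aligned}
$$

Rejecting H_0 supports H_1 but does not prove it; failing to reject H_0 does not show that the tools are equal.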

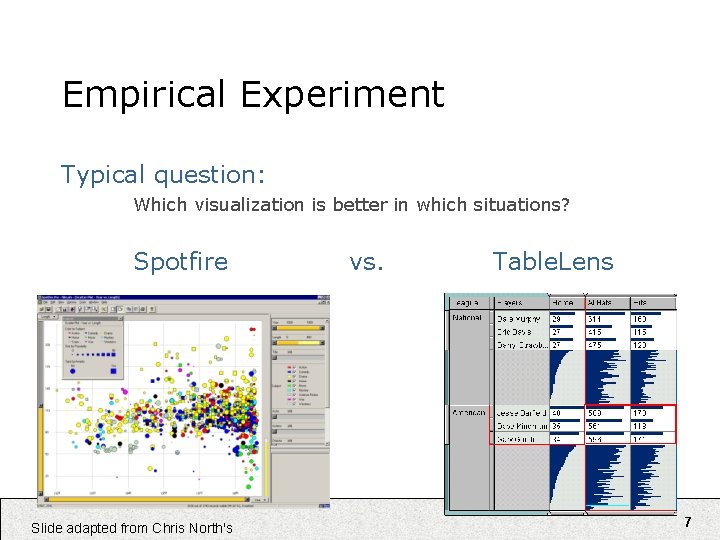

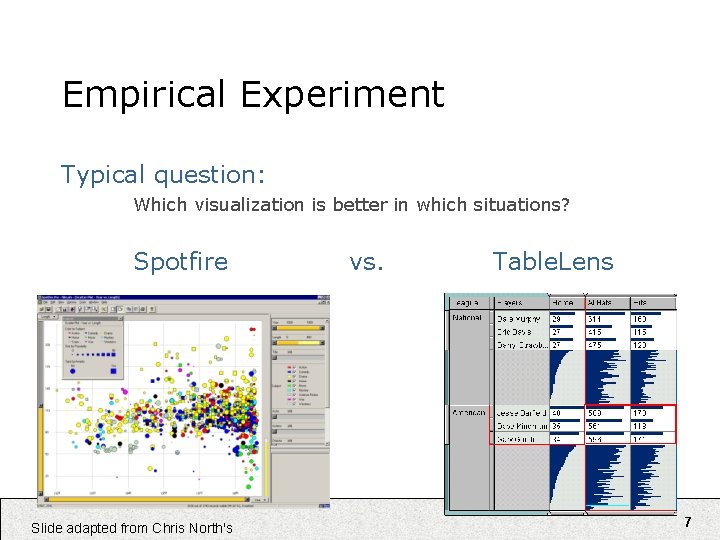

Empirical Experiment

Typical question: Which visualization is better in which situations? Spotfire vs. TableLens

Slide adapted from Chris North.

Cause and Effect

- Goal: determine “cause and effect”
- Cause = visualization tool (Spotfire vs. TableLens)
- Effect = user performance time on task T
- Procedure:
  - Vary cause
  - Measure effect
- Problem: random variation
  - Cause = vis tool OR random variation?

Slide adapted from Chris North.
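One way to separate the tool's effect from random variation is a permutation test: if the tool made no difference, shuffling the tool labels should often produce a difference in mean time as large as the observed one. The sketch below uses only the Python standard library; the task times are invented placeholders, and choosing a permutation test (rather than, say, a t-test) is our illustration, not the slide's method.

```python
import random
from statistics import mean

def permutation_test(times_a, times_b, n_resamples=10_000, seed=0):
    """Two-sided permutation test on the difference in mean task time.

    Under the null hypothesis the tool label does not matter, so we
    shuffle the labels and count how often a random split yields a
    difference at least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = abs(mean(times_a) - mean(times_b))
    pooled = list(times_a) + list(times_b)
    n_a = len(times_a)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimated p-value strictly positive
    return observed, (extreme + 1) / (n_resamples + 1)

# Hypothetical task times in seconds (made-up numbers, not study data)
spotfire = [41, 38, 45, 50, 36, 43, 39, 47]
tablelens = [55, 60, 52, 49, 58, 63, 51, 57]
diff, p = permutation_test(spotfire, tablelens)
print(f"observed difference = {diff:.2f} s, p = {p:.4f}")
```

A small p means random relabeling rarely produces a gap this large, so random variation alone is an unlikely explanation.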

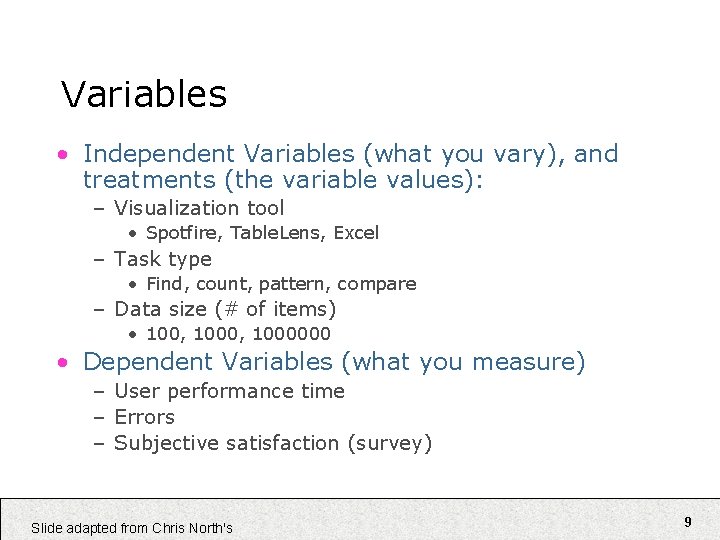

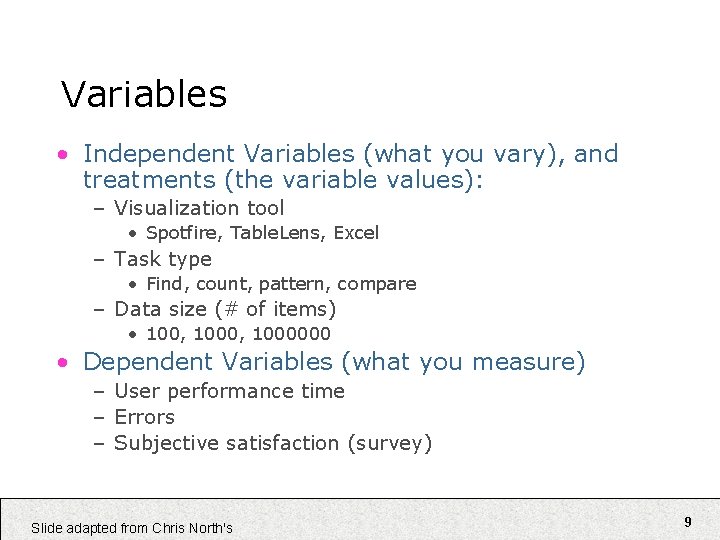

Variables

- Independent variables (what you vary), and treatments (the variable values):
  - Visualization tool
    - Spotfire, TableLens, Excel
  - Task type
    - Find, count, pattern, compare
  - Data size (# of items)
    - 100, 1,000,000
- Dependent variables (what you measure):
  - User performance time
  - Errors
  - Subjective satisfaction (survey)

Slide adapted from Chris North.
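Crossing the independent variables yields the full set of experimental conditions (cells). A minimal Python sketch using the treatments listed on the slide; the dictionary layout is just one assumed way to encode them:

```python
from itertools import product

# Independent variables and their treatments, as listed on the slide
independent_vars = {
    "tool": ["Spotfire", "TableLens", "Excel"],
    "task": ["find", "count", "pattern", "compare"],
    "data_size": [100, 1_000_000],
}

# Every combination of treatments is one experimental condition (cell)
conditions = [dict(zip(independent_vars, combo))
              for combo in product(*independent_vars.values())]

print(len(conditions))  # 24 (3 tools x 4 tasks x 2 sizes)
print(conditions[0])    # {'tool': 'Spotfire', 'task': 'find', 'data_size': 100}
```

The count grows multiplicatively with each added variable, which is one reason to keep the number of independent variables small.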

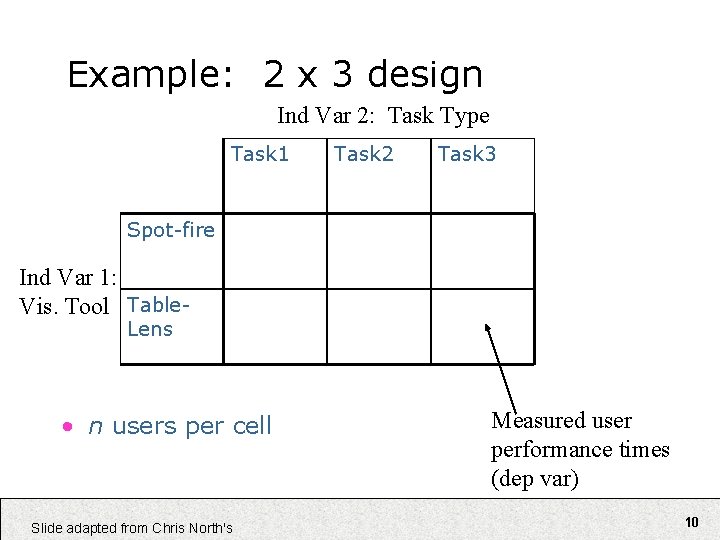

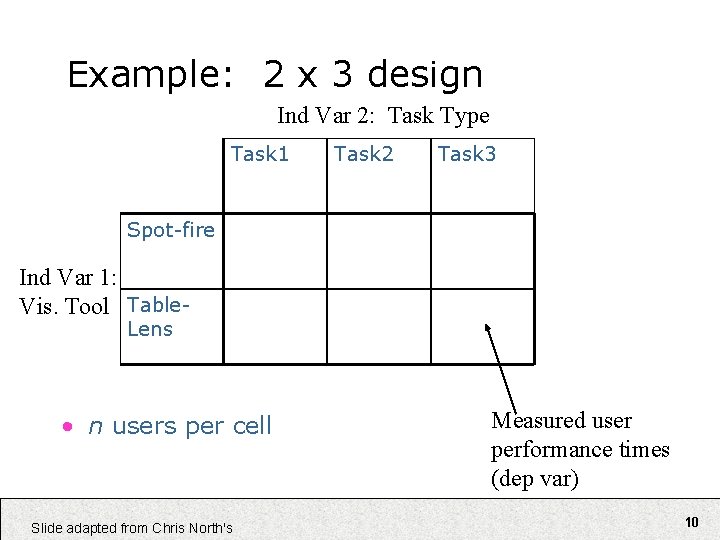

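Example: 2 x 3 design

Ind. var 1 (rows): visualization tool. Ind. var 2 (columns): task type. Each cell holds the measured user performance times (dep. var) for n users.

| Ind. var 1: Vis. tool | Task 1 | Task 2 | Task 3 |
|---|---|---|---|
| Spotfire | | | |
| TableLens | | | |

Slide adapted from Chris North.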
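Filling in the 2 x 3 table amounts to grouping the measured times by (tool, task) cell and summarizing each cell. A short Python sketch; the trial records are hypothetical placeholders with n = 2 users per cell:

```python
from collections import defaultdict
from statistics import mean

# Hypothetical trial records: (tool, task, completion time in seconds).
# Numbers are placeholders for illustration only.
trials = [
    ("Spotfire", "Task 1", 12.0), ("Spotfire", "Task 1", 14.0),
    ("Spotfire", "Task 2", 20.0), ("Spotfire", "Task 2", 22.0),
    ("Spotfire", "Task 3", 31.0), ("Spotfire", "Task 3", 29.0),
    ("TableLens", "Task 1", 10.0), ("TableLens", "Task 1", 11.0),
    ("TableLens", "Task 2", 25.0), ("TableLens", "Task 2", 27.0),
    ("TableLens", "Task 3", 30.0), ("TableLens", "Task 3", 34.0),
]

def cell_means(records):
    """Group times by (tool, task) cell and return the mean of each cell."""
    cells = defaultdict(list)
    for tool, task, seconds in records:
        cells[(tool, task)].append(seconds)
    return {cell: mean(times) for cell, times in cells.items()}

means = cell_means(trials)
for (tool, task), m in sorted(means.items()):
    print(f"{tool:9} {task}: {m:.1f} s")
```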

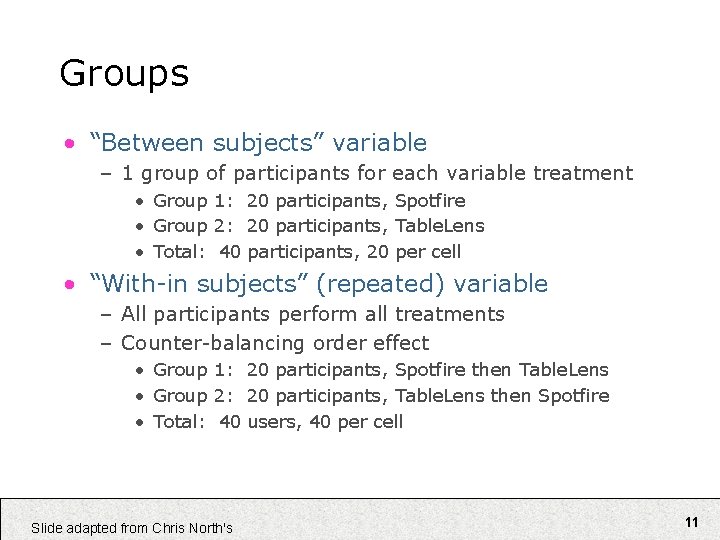

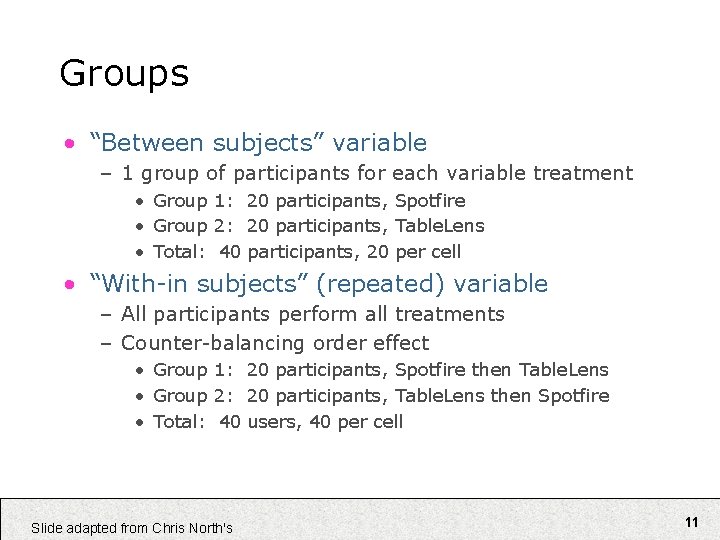

Groups

- “Between-subjects” variable
  - 1 group of participants for each variable treatment
    - Group 1: 20 participants, Spotfire
    - Group 2: 20 participants, TableLens
    - Total: 40 participants, 20 per cell
- “Within-subjects” (repeated) variable
  - All participants perform all treatments
  - Counterbalance the order to control for order effects
    - Group 1: 20 participants, Spotfire then TableLens
    - Group 2: 20 participants, TableLens then Spotfire
    - Total: 40 participants, 40 per cell

Slide adapted from Chris North.
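The within-subjects counterbalancing above can be sketched as alternating participants between the two presentation orders. This is a simplified illustration: assigning by alternating IDs is our assumption (in practice you might shuffle participants first), and simple reversal gives full counterbalancing only for two conditions; with three or more, a Latin square is the usual choice, which this sketch does not implement.

```python
def assign_orders(participant_ids, conditions=("Spotfire", "TableLens")):
    """Alternate participants between the original and reversed order,
    so each order is used by half of the participants (for an even count)."""
    orders = [tuple(conditions), tuple(reversed(conditions))]
    return {pid: orders[i % 2] for i, pid in enumerate(participant_ids)}

assignment = assign_orders(range(1, 41))
group1 = [p for p, o in assignment.items() if o == ("Spotfire", "TableLens")]
group2 = [p for p, o in assignment.items() if o == ("TableLens", "Spotfire")]
print(len(group1), len(group2))  # 20 20
```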

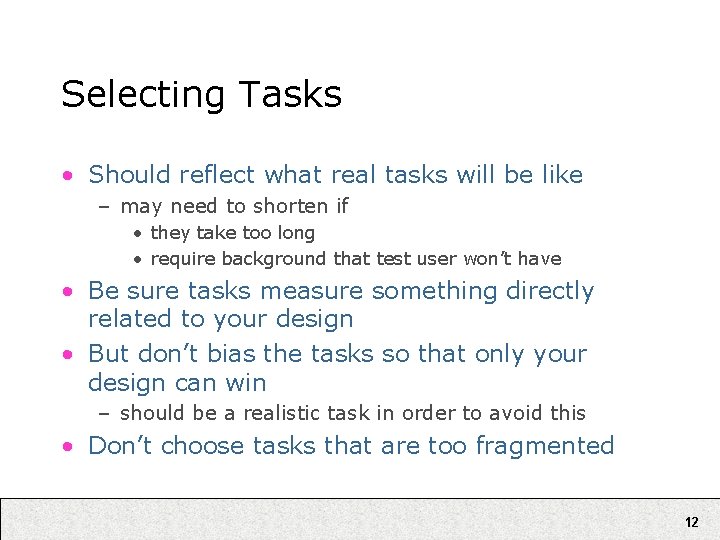

Selecting Tasks

- Should reflect what real tasks will be like
  - may need to shorten if
    - they take too long
    - they require background that the test user won’t have
- Be sure tasks measure something directly related to your design
- But don’t bias the tasks so that only your design can win
  - use realistic tasks to avoid this
- Don’t choose tasks that are too fragmented

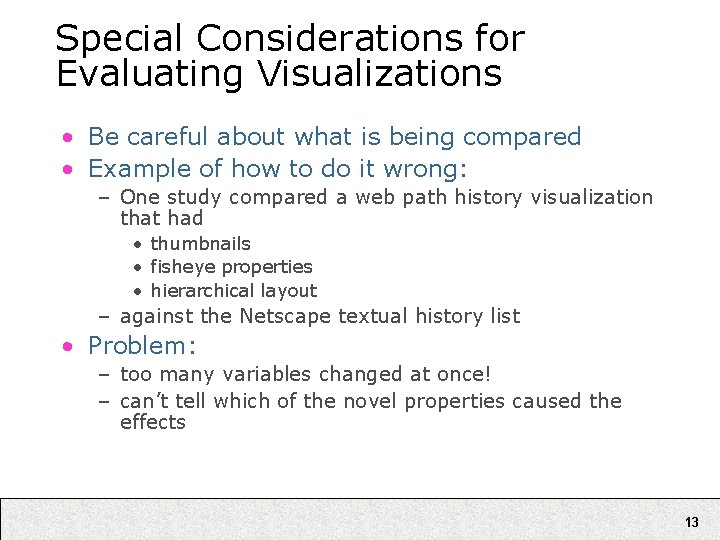

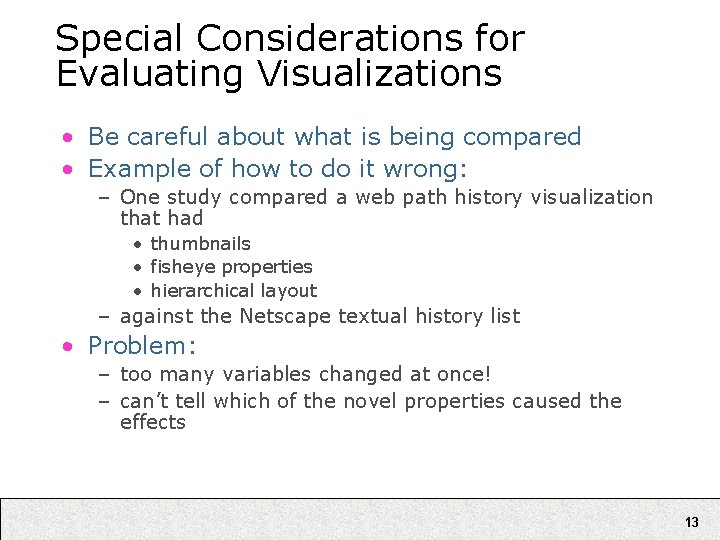

Special Considerations for Evaluating Visualizations

- Be careful about what is being compared
- Example of how to do it wrong:
  - One study compared a web path history visualization that had
    - thumbnails
    - fisheye properties
    - hierarchical layout
  - against the Netscape textual history list
- Problem:
  - too many variables changed at once!
  - can’t tell which of the novel properties caused the effects

Important Factors

- Perceptual abilities
  - spatial abilities tests
  - colorblindness
  - handedness (left-handed vs. right-handed)

Procedure

- For each participant:
  - Sign legal forms
  - Pre-survey: demographics
  - Instructions
  - Training runs
  - Actual runs
    - Give task, measure performance
  - Post-survey: subjective measures

Slide adapted from Chris North.

Usability Testing

Slide adapted from Chris North.

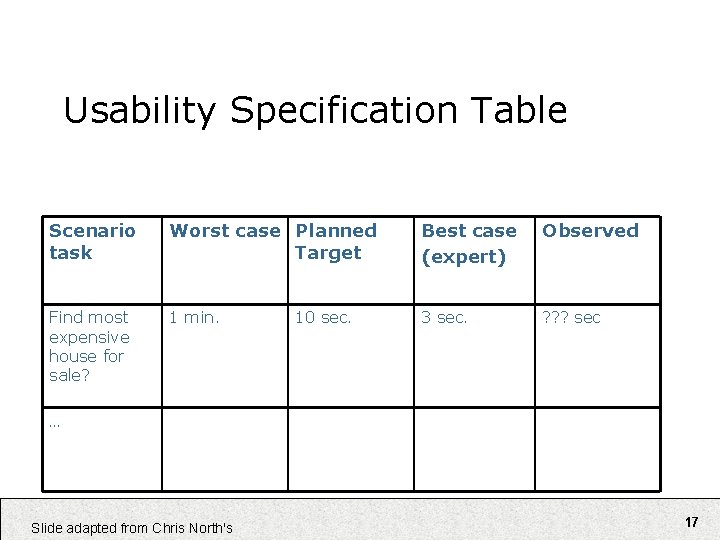

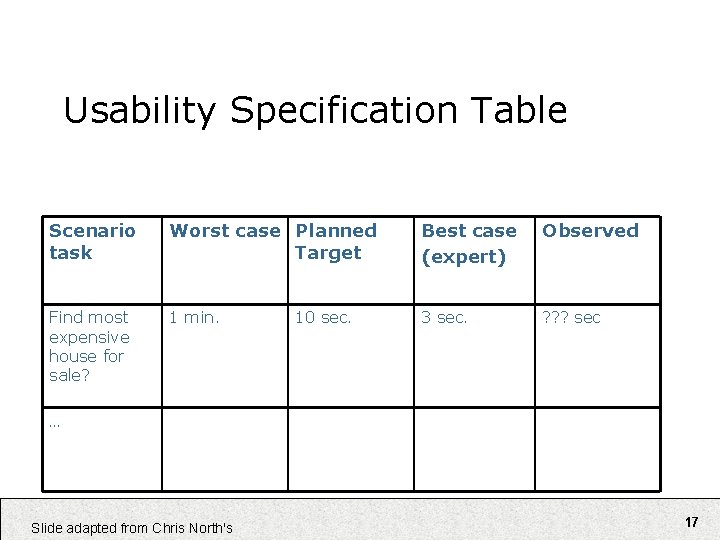

Usability Specification Table

| Scenario task | Worst case | Planned target | Best case (expert) | Observed |
|---|---|---|---|---|
| Find most expensive house for sale? | 1 min. | 10 sec. | 3 sec. | ??? sec. |
| … | | | | |

Slide adapted from Chris North.
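A specification row can be checked mechanically against an observed time. The Python sketch below is a hypothetical helper: the class, its verdict labels, and the thresholds (worst case 1 min, planned target 10 s, expert best case 3 s, our reading of the garbled example row) are assumptions for illustration.

```python
from dataclasses import dataclass

@dataclass
class UsabilitySpec:
    """One row of a usability specification table (times in seconds)."""
    task: str
    worst_case: float
    planned_target: float
    best_case: float

    def assess(self, observed: float) -> str:
        """Place an observed time relative to the spec levels (hypothetical labels)."""
        if observed <= self.best_case:
            return "at or better than expert best case"
        if observed <= self.planned_target:
            return "meets planned target"
        if observed <= self.worst_case:
            return "acceptable, below target"
        return "fails: worse than worst case"

spec = UsabilitySpec("Find most expensive house for sale", 60, 10, 3)
print(spec.assess(8))   # meets planned target
print(spec.assess(45))  # acceptable, below target
```

A check like this gives the “until it meets the usability specification” stopping rule for iterative refinement a concrete test.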

Usability Test Setup

- Set of benchmark tasks
  - Easy to hard, specific to open-ended
  - Coverage of different UI features
  - E.g., “find the 5 most expensive houses for sale”
- Experimenters:
  - Facilitator: instructs user
  - Observers: take notes, collect data, videotape screen
  - Executor: runs the prototype if low-fi
- Participants
  - 3-5; quality not quantity

Slide adapted from Chris North.

“Think Aloud” Method

- This is for usability testing, not formal experiments
- Need to know what users are thinking, not just what they are doing
- Ask participants to talk while performing tasks
  - tell us what they are thinking
  - tell us what they are trying to do
  - tell us questions that arise as they work
  - tell us things they read
- Make a recording or take good notes
  - make sure you can tell what they were doing

Thinking Aloud (cont.)

- Prompt the user to keep talking
  - “tell me what you are thinking”
- Try to help only on things you have decided in advance to help with
  - keep track of anything you do give help on
  - if the participant is stuck or frustrated, end the task (gracefully) or help them

Pilot Study

- Goal:
  - help fix problems with the study
  - make sure you are measuring what you mean to measure
- Procedure:
  - do it twice,
    - first with colleagues
    - then with real users
  - usually end up making changes both times

Usability Test Procedure

- Goal: mimic real life
  - Do not cheat by showing them how to use the UI!
- Initial instructions
  - “We are evaluating the system, not you.”
- Repeat:
  - Give participant a task
  - Ask participant to “think aloud”
  - Observe, note mistakes and problems
  - Avoid interfering, hint only if completely stuck
- Interview
  - Verbal feedback
  - Questionnaire
- ~1 hour / participant (max)

Slide adapted from Chris North.

Data

- Note taking
  - E.g., “&%$#@ user keeps clicking on the wrong button…”
- Verbal protocol: think aloud
  - E.g., user expects that button to do something else…
- Rough quantitative measures
  - E.g., task completion time, …
- Interview feedback and surveys
- Videotape screen & mouse

Slide adapted from Chris North.

Analyze

- Initial reaction:
  - “stupid user!”, “that’s developer X’s fault!”
- Mature reaction:
  - “how can we redesign the UI to solve that usability problem?”
  - the user is always right
- Identify usability problems
  - Learning issues: e.g., can’t figure out or didn’t notice feature
  - Performance issues: e.g., arduous, tiring to solve tasks
  - Subjective issues: e.g., annoying, ugly
- Problem severity: critical vs. minor

Slide adapted from Chris North.

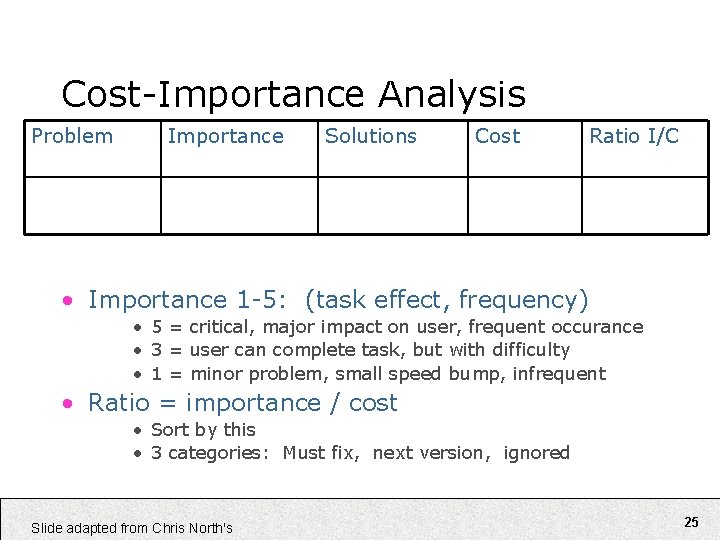

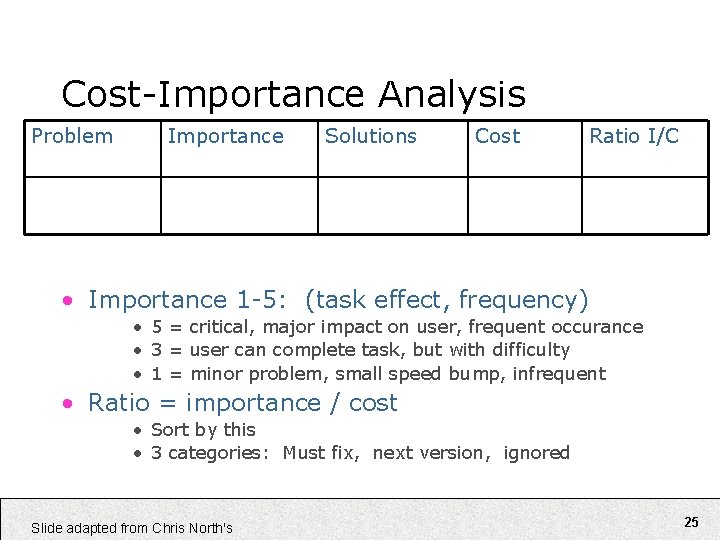

Cost-Importance Analysis

| Problem | Importance | Solutions | Cost | Ratio I/C |
|---|---|---|---|---|

- Importance 1-5 (based on task effect and frequency):
  - 5 = critical, major impact on user, frequent occurrence
  - 3 = user can complete task, but with difficulty
  - 1 = minor problem, small speed bump, infrequent
- Ratio = importance / cost
  - Sort by this
- 3 categories: must fix, next version, ignored

Slide adapted from Chris North.
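The ratio-and-sort step can be sketched in a few lines of Python. The slide names the three categories but not where to draw the lines between them, so the two cutoffs below (and the example problems and costs) are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Problem:
    description: str
    importance: int   # 1 (minor) .. 5 (critical)
    cost: float       # estimated effort to fix, in any consistent unit

    @property
    def ratio(self) -> float:
        return self.importance / self.cost

def triage(problems, must_fix_at=1.0, next_version_at=0.5):
    """Sort problems by importance/cost (highest first) and bucket them.

    The two thresholds are hypothetical placeholders, not from the slide.
    """
    buckets = {"must fix": [], "next version": [], "ignored": []}
    for p in sorted(problems, key=lambda p: p.ratio, reverse=True):
        if p.ratio >= must_fix_at:
            buckets["must fix"].append(p.description)
        elif p.ratio >= next_version_at:
            buckets["next version"].append(p.description)
        else:
            buckets["ignored"].append(p.description)
    return buckets

issues = [
    Problem("user doesn't notice zoom feature", importance=5, cost=2),
    Problem("icon looks ugly", importance=1, cost=4),
    Problem("export dialog confusing", importance=3, cost=5),
]
result = triage(issues)
print(result)
```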

Refine UI

- Simple solutions vs. major redesigns
- Solve problems in order of importance/cost
- Example:
  - Problem: user didn’t know he could zoom in to see more…
  - Potential solutions:
    - Better zoom button icon, tooltip
    - Add a zoom bar slider (like moosburg)
    - Icons for different zoom levels: boundaries, roads, buildings
    - NOT: more “help” documentation!!! You can do better.
- Iterate
  - Test, refine, test, refine, …
  - Until? Meets usability specification

Slide adapted from Chris North.

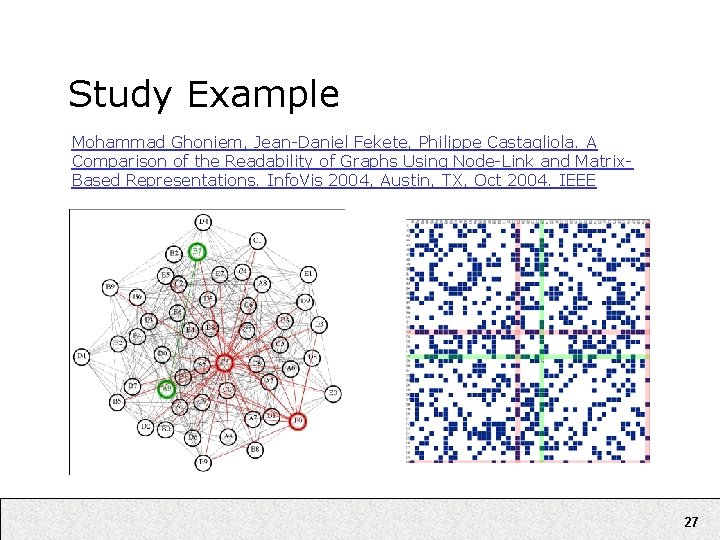

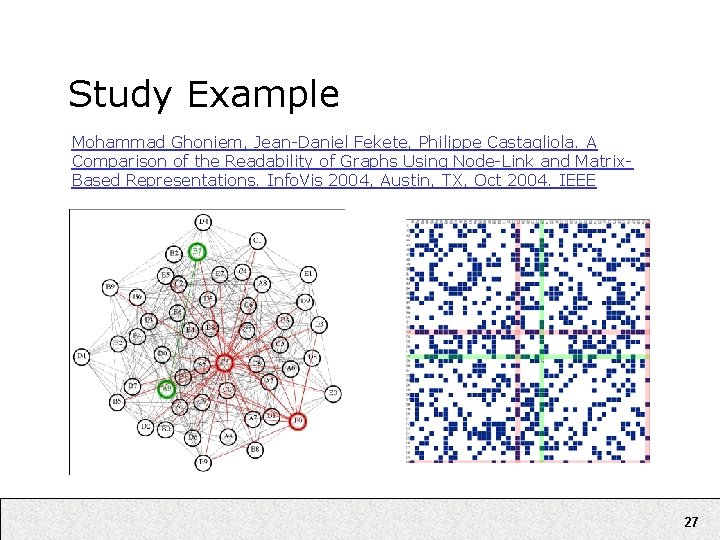

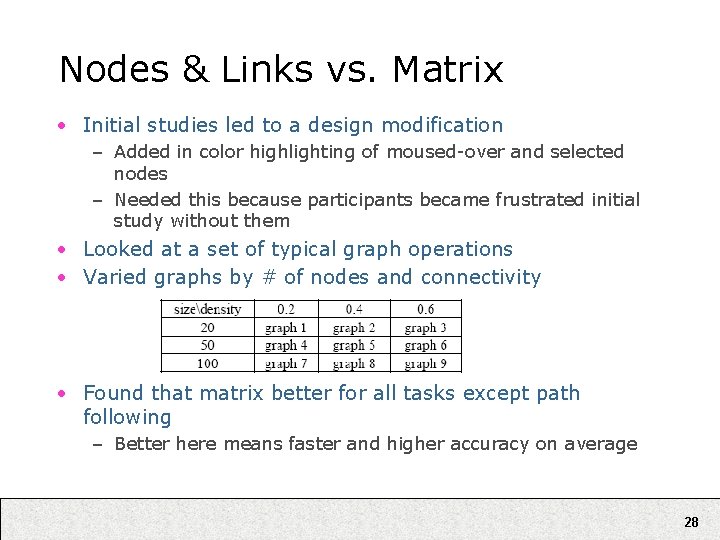

Study Example

Mohammad Ghoniem, Jean-Daniel Fekete, Philippe Castagliola. A Comparison of the Readability of Graphs Using Node-Link and Matrix-Based Representations. InfoVis 2004, Austin, TX, Oct 2004. IEEE.

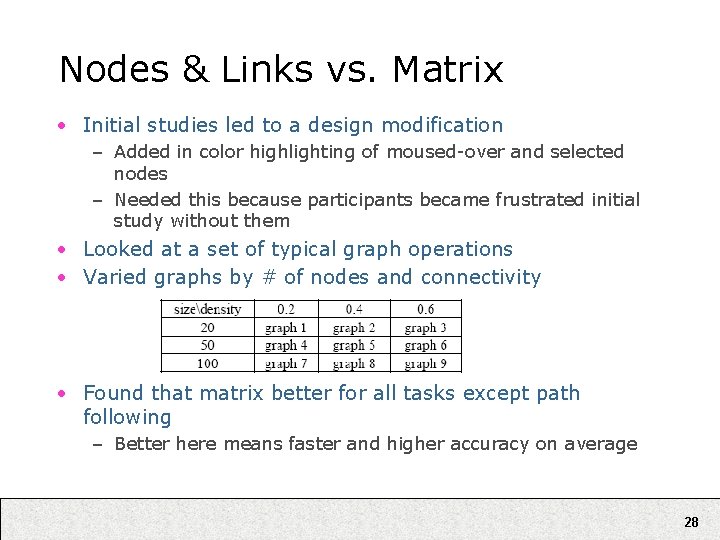

Nodes & Links vs. Matrix

- Initial studies led to a design modification
  - Added color highlighting of moused-over and selected nodes
  - Needed because participants in the initial study became frustrated without it
- Looked at a set of typical graph operations
- Varied graphs by # of nodes and connectivity
- Found that the matrix was better for all tasks except path following
  - “Better” here means faster and more accurate on average
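Both representations encode the same graph: a node-link diagram draws the edge list, while the matrix view shows the adjacency matrix. A small Python sketch of the matrix encoding; using edge density as the measure of “connectivity” is our assumption, since the slide does not say which measure the study used, and the edge list assumes no duplicate edges.

```python
def adjacency_matrix(n_nodes, edges):
    """Matrix-based representation of an undirected graph:
    cell [i][j] is 1 if nodes i and j are linked, else 0."""
    m = [[0] * n_nodes for _ in range(n_nodes)]
    for i, j in edges:
        m[i][j] = m[j][i] = 1
    return m

def density(n_nodes, edges):
    """Fraction of possible undirected links that are present."""
    return len(edges) / (n_nodes * (n_nodes - 1) / 2)

edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
for row in adjacency_matrix(4, edges):
    print(row)
print(density(4, edges))
```

The matrix has one cell per node pair regardless of how many links exist, whereas a node-link drawing adds a line per edge, so dense graphs clutter the latter far more than the former.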

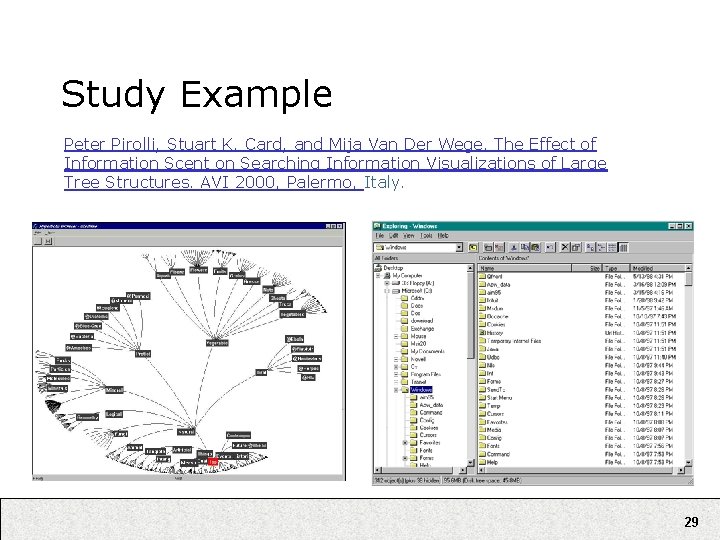

Study Example

Peter Pirolli, Stuart K. Card, and Mija Van Der Wege. The Effect of Information Scent on Searching Information Visualizations of Large Tree Structures. AVI 2000, Palermo, Italy.

Main Conclusions

- Results of the Browse-Off were a function of the types of tasks, the structure of the information, and the skills of the participants
  - NOT the difference in the browsers
- The Hyperbolic Browser can be faster when
  - The clues about where to go next are clear
  - The tasks are relatively simple

Lessons for Study Design

- This paper contains a wealth of good ideas about how to
  - Isolate what’s really going on
  - Assess and understand the data

Assessing Infoviz Projects

- How does all this apply to you?