SIMS 213: User Interface Design & Development, Marti Hearst

- Slides: 51

SIMS 213: User Interface Design & Development Marti Hearst March 9 and 16, 2006

Formal Usability Studies

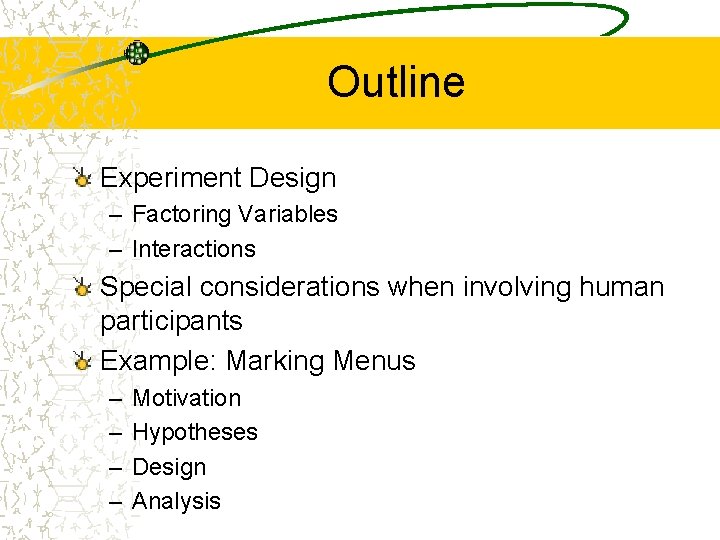

Outline
Experiment Design
  – Factoring Variables
  – Interactions
Special considerations when involving human participants
Example: Marking Menus
  – Motivation
  – Hypotheses
  – Design
  – Analysis

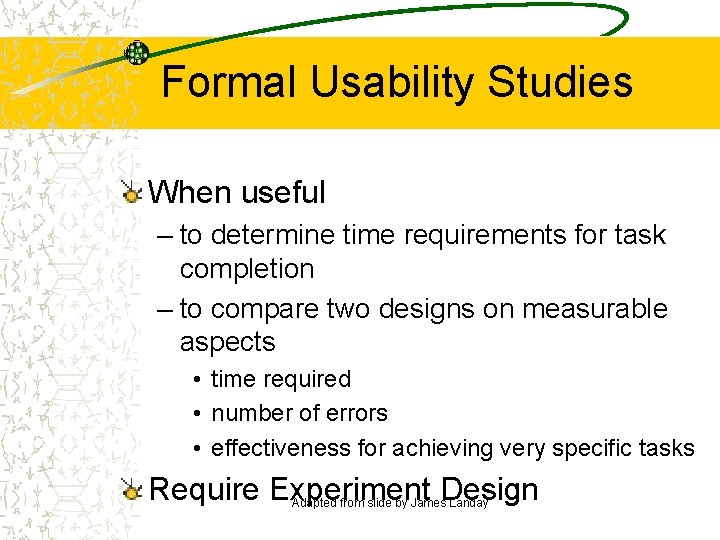

Formal Usability Studies
When useful:
  – to determine time requirements for task completion
  – to compare two designs on measurable aspects
    • time required
    • number of errors
    • effectiveness for achieving very specific tasks
Require experiment design
Adapted from slide by James Landay

Experiment Design
Experiment design involves determining how many experiments to run and which attributes to vary in each experiment.
Goal: isolate which aspects of the interface really make a difference.

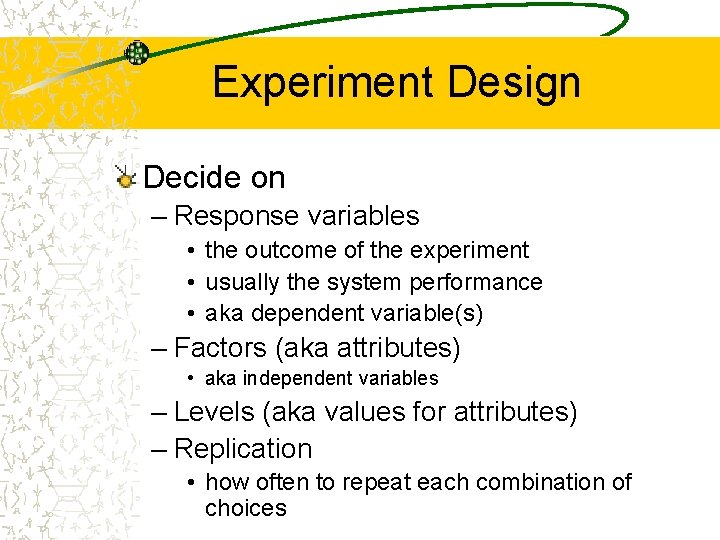

Experiment Design
Decide on:
  – Response variables
    • the outcome of the experiment
    • usually the system performance
    • aka dependent variable(s)
  – Factors (aka attributes)
    • aka independent variables
  – Levels (aka values for attributes)
  – Replication
    • how often to repeat each combination of choices

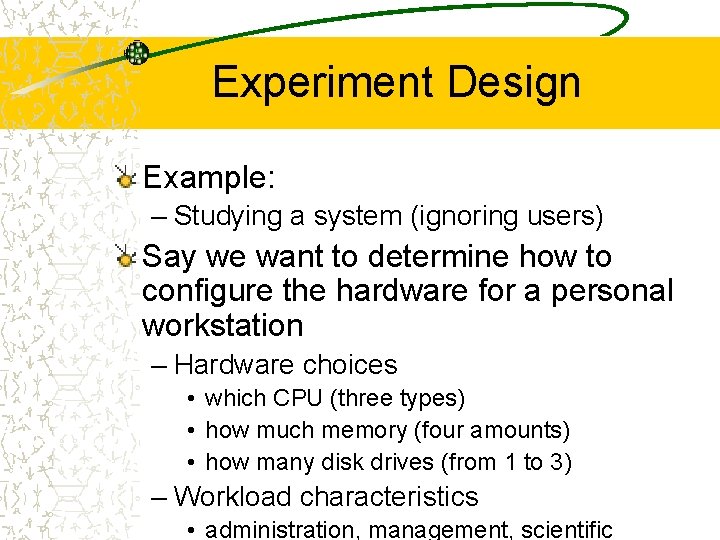

Experiment Design Example: Studying a System (ignoring users)
Say we want to determine how to configure the hardware for a personal workstation.
  – Hardware choices
    • which CPU (three types)
    • how much memory (four amounts)
    • how many disk drives (from 1 to 3)
  – Workload characteristics
    • administration, management, scientific

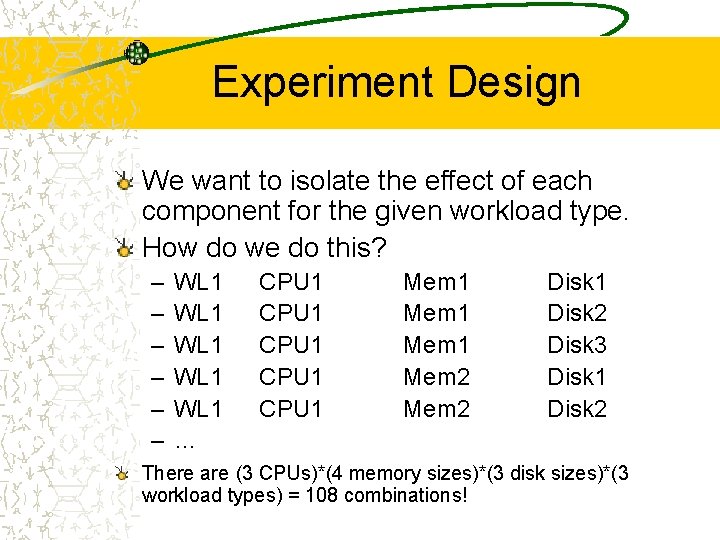

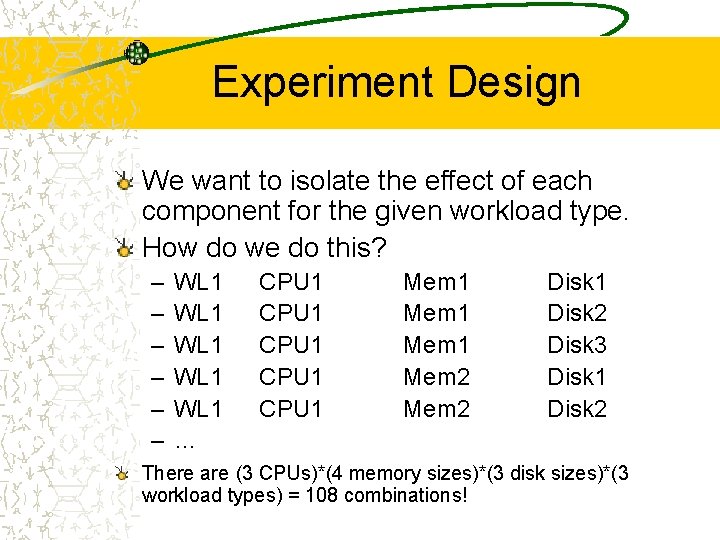

Experiment Design
We want to isolate the effect of each component for the given workload type. How do we do this?
One option is to test every combination: WL 1 / CPU 1 / Mem 2 / Disk 1, WL 1 / CPU 1 / Mem 2 / Disk 2, WL 1 / CPU 1 / Mem 2 / Disk 3, …
There are (3 CPUs) × (4 memory sizes) × (3 disk configurations) × (3 workload types) = 108 combinations!
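A minimal Python sketch of the full factorial design: `itertools.product` enumerates every combination of levels. The level labels (`CPU 1`, `Mem 1`, and so on) are hypothetical placeholders; only the counts (3, 4, 3, 3) come from the example.

```python
from itertools import product

# Hypothetical level labels; only the number of levels per factor comes from the example.
cpus = ["CPU 1", "CPU 2", "CPU 3"]
memory_sizes = ["Mem 1", "Mem 2", "Mem 3", "Mem 4"]
disk_counts = [1, 2, 3]
workloads = ["administration", "management", "scientific"]

# A full factorial design runs every combination of every level.
full_factorial = list(product(workloads, cpus, memory_sizes, disk_counts))

print(len(full_factorial))  # 108
print(full_factorial[0])    # ('administration', 'CPU 1', 'Mem 1', 1)
```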

Experiment Design
One strategy to reduce the number of comparisons needed:
  – pick just one attribute
  – vary it
  – hold the rest constant
Problems:
  – inefficient
  – might miss effects of interactions
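To see how much cheaper varying one attribute at a time is, here is a sketch that assumes every factor is varied away from a single fixed baseline. That design needs one baseline run plus (n_i - 1) runs per factor, the count given for "simple designs" in performance-analysis texts such as Jain (1991). The function names are hypothetical.

```python
from math import prod

def one_factor_at_a_time_runs(levels):
    """Runs needed when each factor is varied alone from a fixed baseline:
    one baseline run plus (n_i - 1) extra runs for each factor."""
    return 1 + sum(n - 1 for n in levels)

def full_factorial_runs(levels):
    """Runs needed to try every combination of levels."""
    return prod(levels)

levels = [3, 4, 3, 3]  # CPUs, memory sizes, disk configurations, workload types
print(one_factor_at_a_time_runs(levels))  # 10
print(full_factorial_runs(levels))        # 108
```

The simple design needs 10 runs instead of 108. However, each factor is only changed while the others sit at their baseline values, so the design cannot reveal whether the effect of one factor depends on the level of another.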

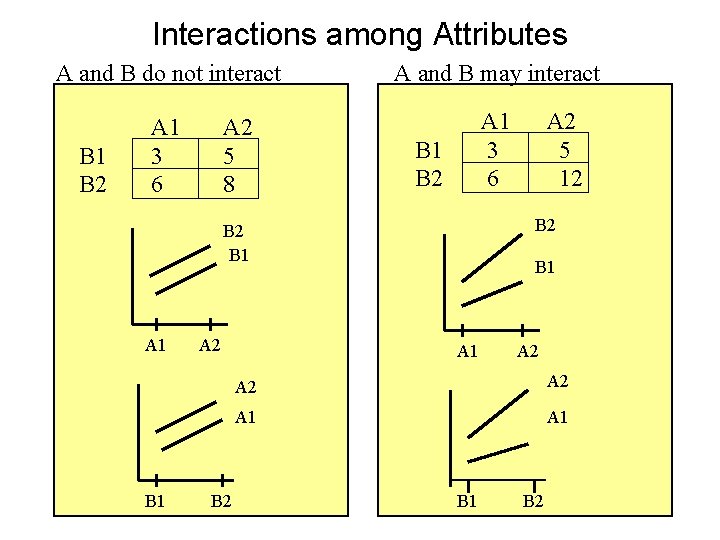

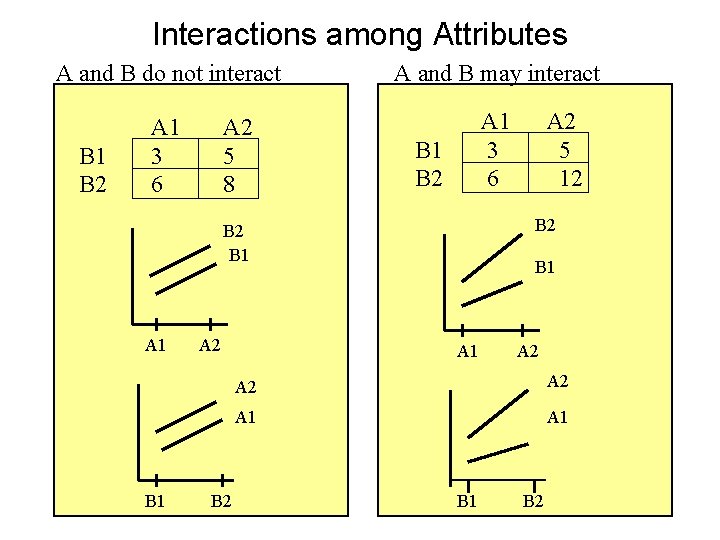

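Interactions among Attributes
A and B do not interact:
         B 1   B 2
  A 1     3     6
  A 2     5     8
A and B may interact:
         B 1   B 2
  A 1     3     6
  A 2     5    12
In the first table, changing B from B 1 to B 2 adds 3 at both levels of A. In the second table, it adds 3 at A 1 but 7 at A 2, so the effect of B depends on A.
[Plots: response across A 1 and A 2, one line each for B 1 and B 2]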
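One way to quantify an interaction is to check whether the effect of B is the same at each level of A. This minimal sketch reads the slide's cells as A 1 = (3, 6) and A 2 = (5, 8) for the first table. It assumes the A 1 row stays unchanged in the second table, where A 2 becomes (5, 12). The function name is hypothetical. The contrast is symmetric, so it gives the same value whichever factor labels the rows.

```python
def interaction_contrast(a1b1, a1b2, a2b1, a2b2):
    """Effect of B at level A2 minus effect of B at level A1.
    Zero means the effect of B does not depend on A (no interaction)."""
    return (a2b2 - a2b1) - (a1b2 - a1b1)

print(interaction_contrast(3, 6, 5, 8))   # 0 -> no interaction
print(interaction_contrast(3, 6, 5, 12))  # 4 -> interaction
```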

Experiment Design
Another strategy: figure out which attributes are important first.
Do this by just comparing a few major attributes at a time:
  – if an attribute has a strong effect, include it in future studies
  – otherwise assume it is safe to drop it
This strategy also allows you to find interactions between attributes.

Experiment Design
Common practice:
  – Fractional Factorial Design
    • just compare important subsets
    • use experiment design to partially vary the combinations of attributes
  – Blocking
    • group factors or levels together
    • use a Latin Square design to arrange the blocks
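To illustrate a fractional factorial design, here is a sketch of the standard 2^(3-1) half fraction (a textbook example, not one from this study). It uses three two-level factors coded -1/+1 and runs only 4 of the 8 combinations. The third factor's level is set by the generator C = A × B. The function name is hypothetical.

```python
from itertools import product

def half_fraction_2_3():
    """2^(3-1) fractional factorial: full factorial in A and B (coded -1/+1),
    with the third factor's level set by the generator C = A * B."""
    return [(a, b, a * b) for a, b in product((-1, 1), repeat=2)]

design = half_fraction_2_3()
for run in design:
    print(run)
# (-1, -1, 1)
# (-1, 1, -1)
# (1, -1, -1)
# (1, 1, 1)
```

Each factor still appears equally often at each level, and the columns are mutually orthogonal, so main effects remain estimable. The cost is that the effect of C cannot be separated from the A × B interaction, because the two are confounded.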

Between-Groups Design Wilma and Betty use one interface Dino and Fred use the other

Within-Groups Design Everyone uses both interfaces

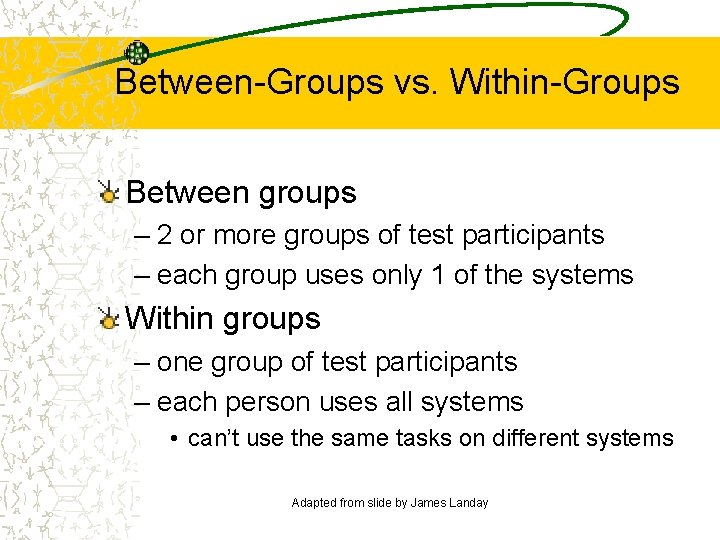

Between-Groups vs. Within-Groups
Between groups
  – 2 or more groups of test participants
  – each group uses only 1 of the systems
Within groups
  – one group of test participants
  – each person uses all systems
    • can't use the same tasks on different systems
Adapted from slide by James Landay

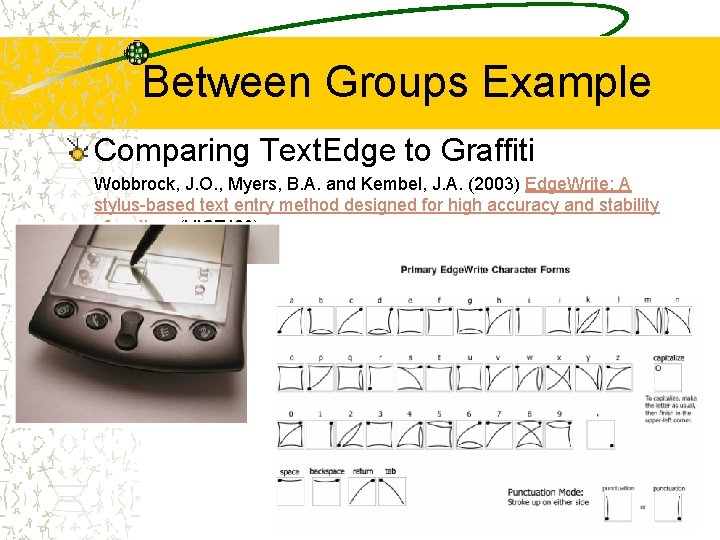

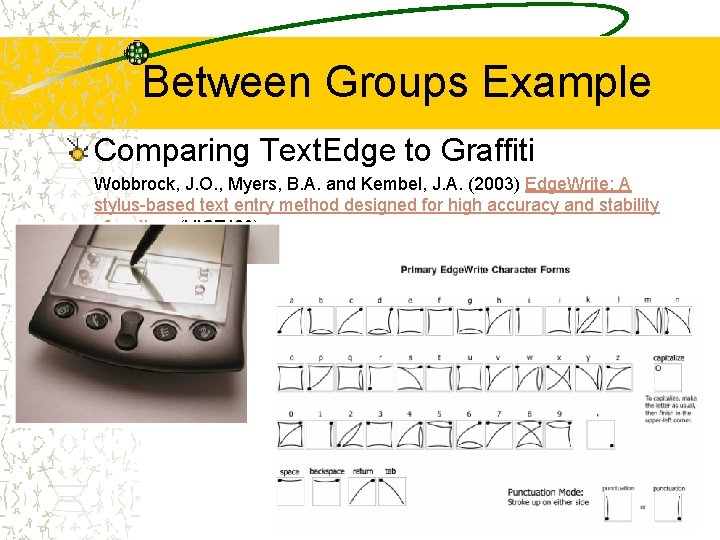

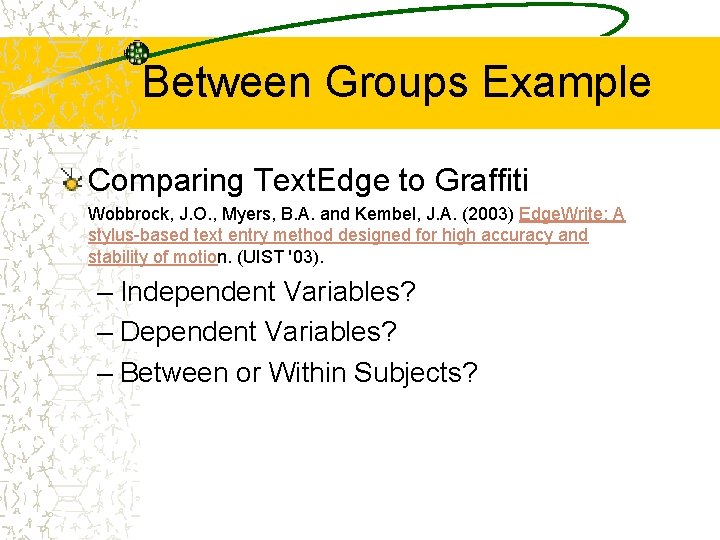

Between Groups Example: Comparing EdgeWrite to Graffiti
Wobbrock, J. O., Myers, B. A. and Kembel, J. A. (2003). EdgeWrite: A stylus-based text entry method designed for high accuracy and stability of motion. UIST '03.

Between Groups Example: Comparing EdgeWrite to Graffiti
  – Independent variables?
  – Dependent variables?
  – Between or within subjects?

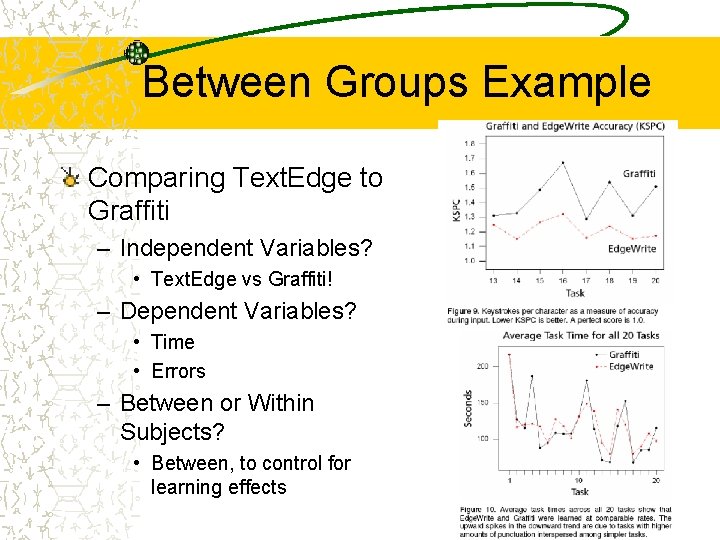

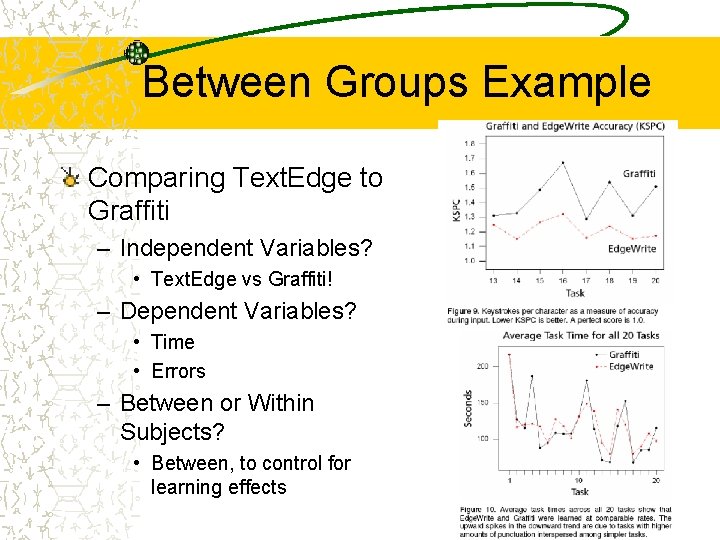

Between Groups Example: Comparing EdgeWrite to Graffiti
  – Independent variables?
    • EdgeWrite vs. Graffiti
  – Dependent variables?
    • Time
    • Errors
  – Between or within subjects?
    • Between, to control for learning effects

Between-Groups vs. Within-Groups
Within-groups design
  – Pros:
    • is more powerful statistically (the same person is compared across different conditions, which isolates the effects of individual differences)
    • requires fewer participants than between-groups
  – Cons:
    • learning effects
    • fatigue effects

Special Considerations for Formal Studies with Human Participants
Studies involving human participants vs. measuring automated systems:
  – people get tired
  – people get bored
  – people (may) get upset by some tasks
  – learning effects
    • people will learn how to do the tasks (or the answers to questions) if they are repeated
    • people will (usually) learn how to use the system over time

More Special Considerations
High variability among people
  – especially when involved in reading/comprehension tasks
  – especially when following hyperlinks (people can go all over the place)

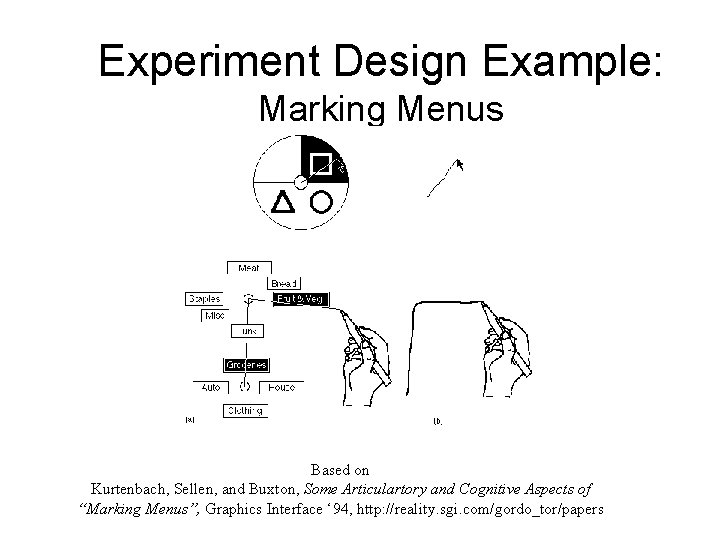

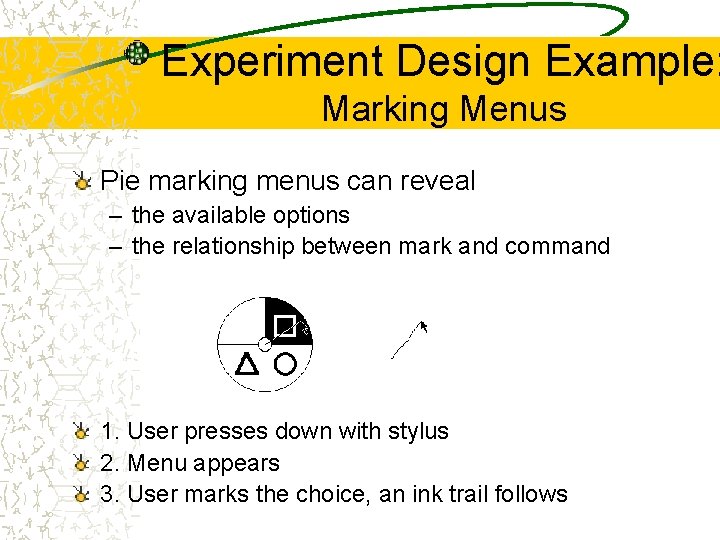

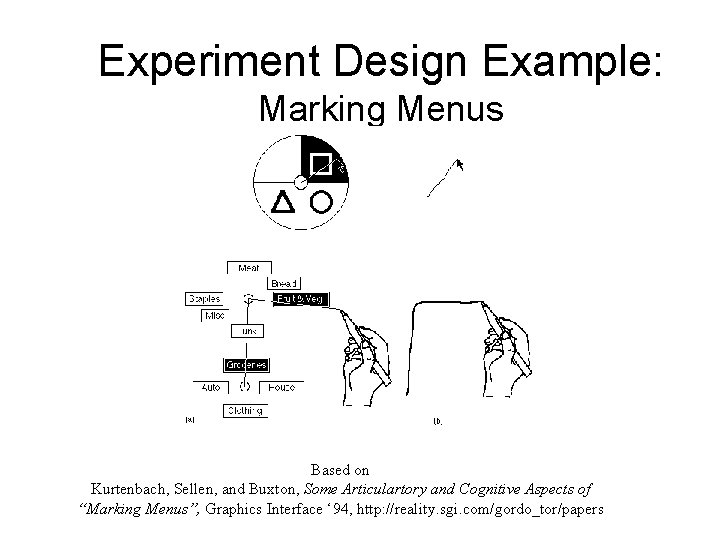

Experiment Design Example: Marking Menus
Based on Kurtenbach, Sellen, and Buxton, Some Articulatory and Cognitive Aspects of “Marking Menus”, Graphics Interface '94, http://reality.sgi.com/gordo_tor/papers

Experiment Design Example: Marking Menus
Pie marking menus can reveal
  – the available options
  – the relationship between mark and command
1. User presses down with stylus
2. Menu appears
3. User marks the choice; an ink trail follows

Why Marking Menus?
  – Same movement for selecting a command as for executing it
  – Supporting markings with pie menus should help the transition between novice and expert
  – Useful for keyboardless devices
  – Useful for large screens
  – Pie menus have been shown to be faster than linear menus in certain situations

What do we want to know?
Are marking menus better than pie menus?
  – Do users have to see the menu?
  – Does leaving an “ink trail” make a difference?
  – Do people improve on these new menus as they practice?
Related questions:
  – What, if any, are the effects of different input devices?

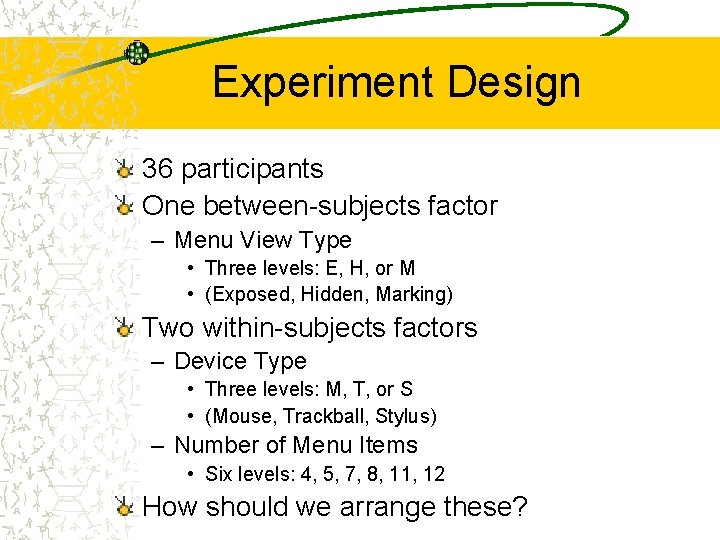

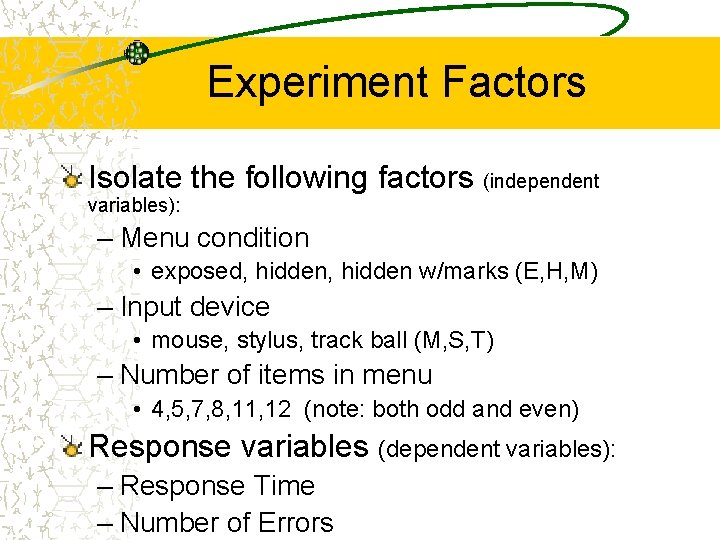

Experiment Factors
Isolate the following factors (independent variables):
  – Menu condition
    • exposed, hidden, hidden with marks (E, H, M)
  – Input device
    • mouse, stylus, trackball (M, S, T)
  – Number of items in menu
    • 4, 5, 7, 8, 11, 12 (note: both odd and even)
Response variables (dependent variables):
  – Response time
  – Number of errors

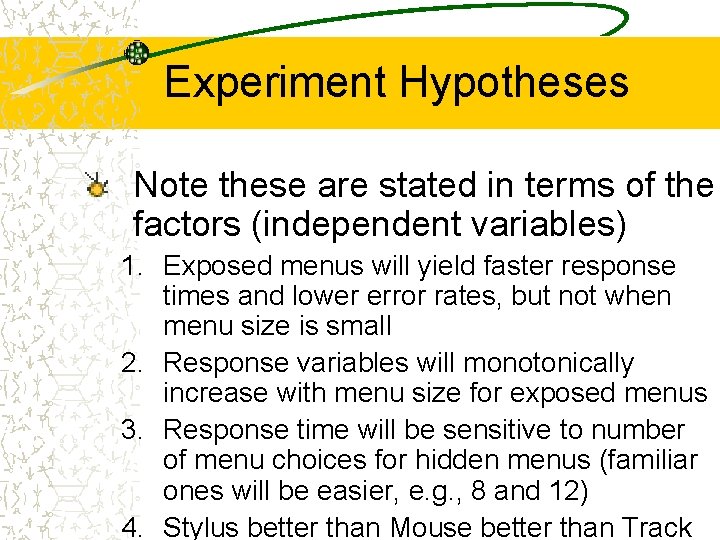

Experiment Hypotheses
Note that these are stated in terms of the factors (independent variables).
1. Exposed menus will yield faster response times and lower error rates, but not when menu size is small.
2. Response variables will monotonically increase with menu size for exposed menus.
3. Response time will be sensitive to the number of menu choices for hidden menus (familiar ones will be easier, e.g., 8 and 12).
4. Stylus will be better than mouse, which will be better than trackball.

Experiment Hypotheses (cont.)
5. Device performance is independent of menu type.
6. Performance on hidden menus (both marking and hidden) will improve steadily across trials. Performance on exposed menus will remain constant.

Experiment Design
Participants
  – 36 right-handed people
    • usually the gender distribution is stated
  – considerable mouse experience
  – (almost) no trackball or stylus experience

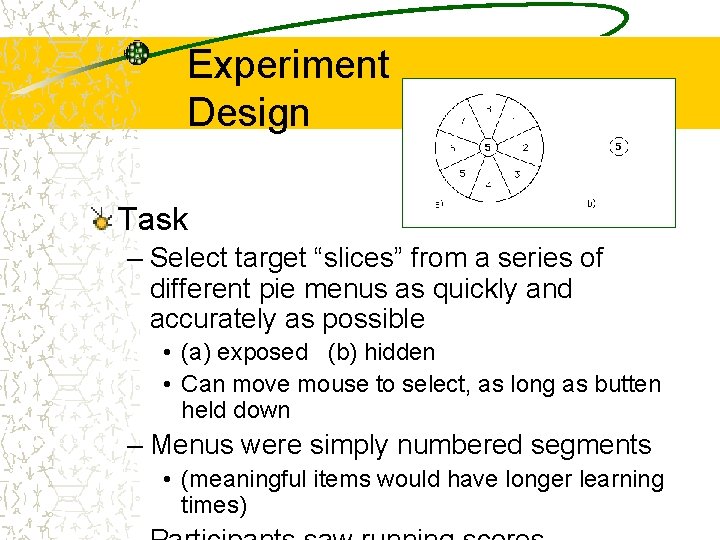

Experiment Design
Task
  – Select target “slices” from a series of different pie menus as quickly and accurately as possible
    • (a) exposed, (b) hidden
    • can move the mouse to select, as long as the button is held down
  – Menus were simply numbered segments
    • (meaningful items would have longer learning times)

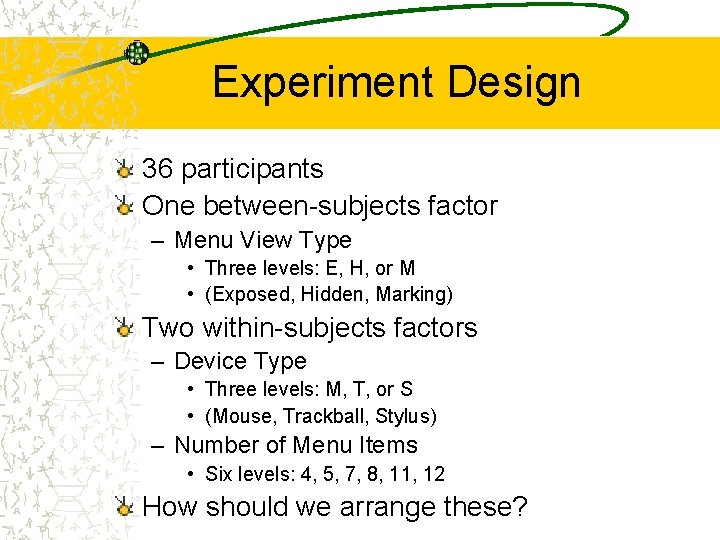

Experiment Design
36 participants
One between-subjects factor
  – Menu view type
    • three levels: E, H, or M (Exposed, Hidden, Marking)
Two within-subjects factors
  – Device type
    • three levels: M, T, or S (Mouse, Trackball, Stylus)
  – Number of menu items
    • six levels: 4, 5, 7, 8, 11, 12
How should we arrange these?

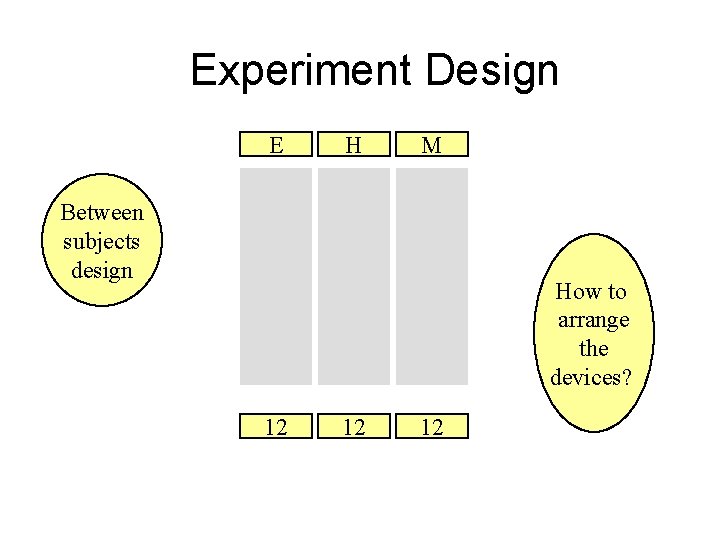

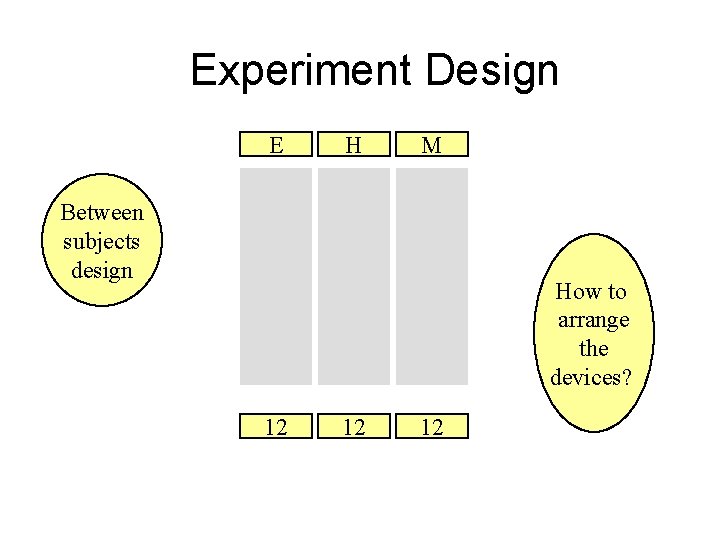

Experiment Design
Between-subjects design: 12 participants in each menu group (E, H, M)
How should we arrange the devices?

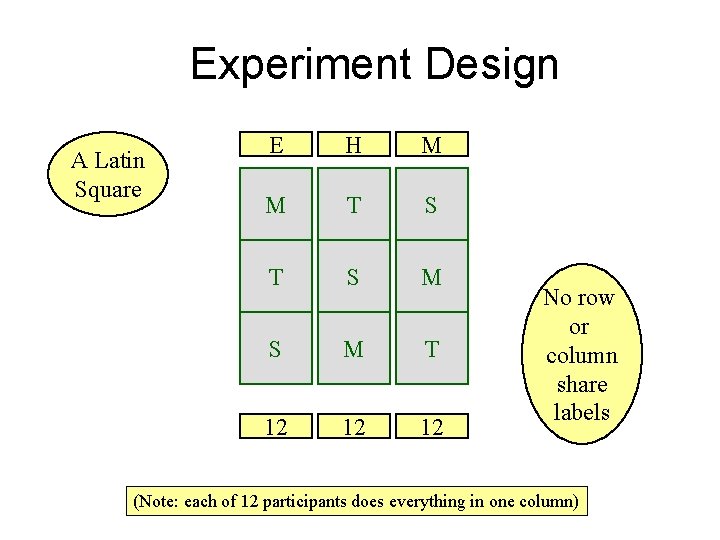

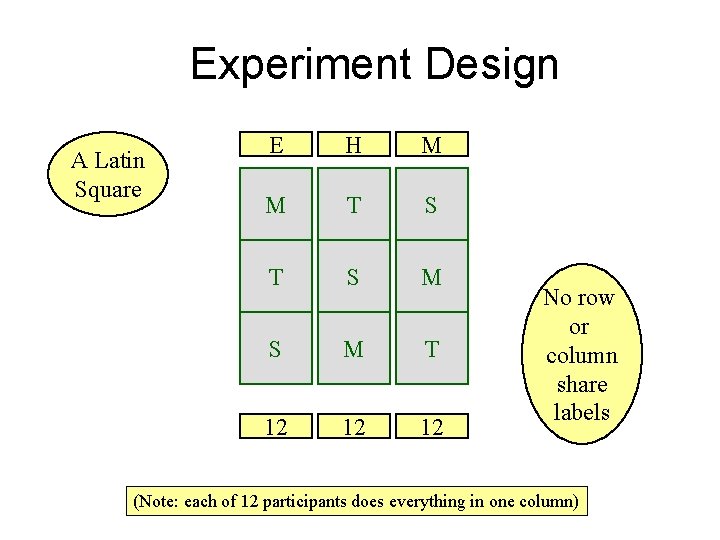

Experiment Design
A Latin Square: the columns are the menu groups (E, H, M), and the cells are the devices (M, T, S).
No label appears twice in the same row or column.
(Note: each of the 12 participants in a group does everything in one column.)
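The slide's exact square did not survive extraction, so here is a hypothetical sketch of one standard construction, a cyclic Latin square, applied to the three devices. The assignment of columns to menu groups and the function name are assumptions for illustration.

```python
def cyclic_latin_square(symbols):
    """Each row is the previous row shifted left by one position, so every
    symbol appears exactly once in each row and each column."""
    n = len(symbols)
    return [[symbols[(row + col) % n] for col in range(n)] for row in range(n)]

devices = ["Mouse", "Trackball", "Stylus"]
groups = ["Exposed", "Hidden", "Marking"]

square = cyclic_latin_square(devices)
# Hypothetical assignment: column j is the device order (top to bottom) for group j.
for j, group in enumerate(groups):
    print(group, [square[i][j] for i in range(len(devices))])
# Exposed ['Mouse', 'Trackball', 'Stylus']
# Hidden ['Trackball', 'Stylus', 'Mouse']
# Marking ['Stylus', 'Mouse', 'Trackball']
```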

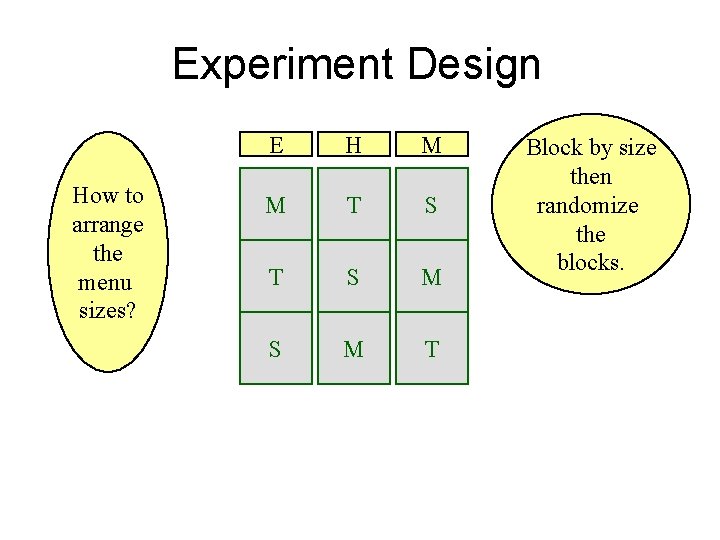

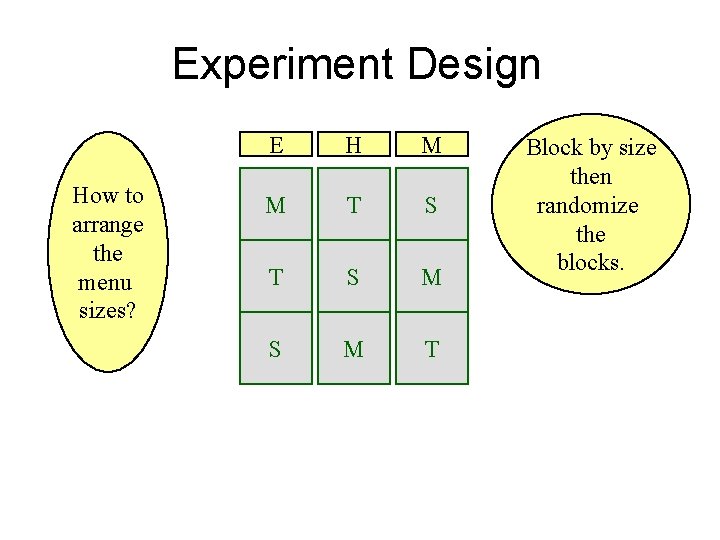

Experiment Design
How should we arrange the menu sizes?
Block by size, then randomize the blocks.

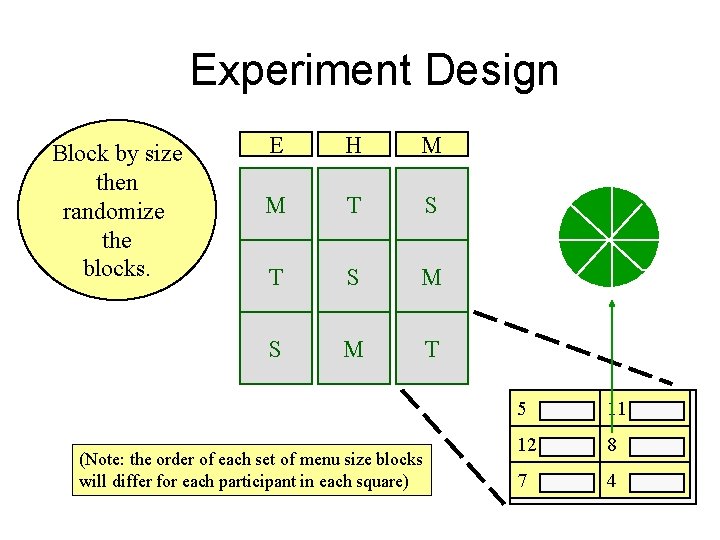

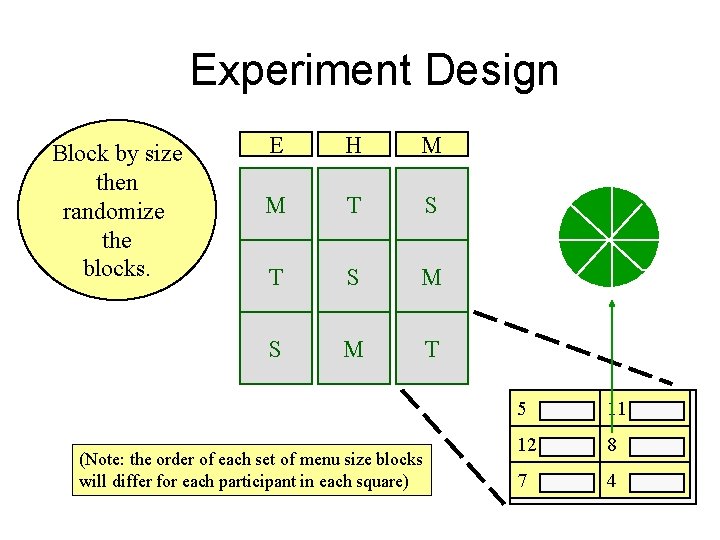

Experiment Design
Block by size, then randomize the blocks.
Example order of menu-size blocks: 5, 11, 12, 8, 7, 4
(Note: the order of the menu-size blocks will differ for each participant in each square.)

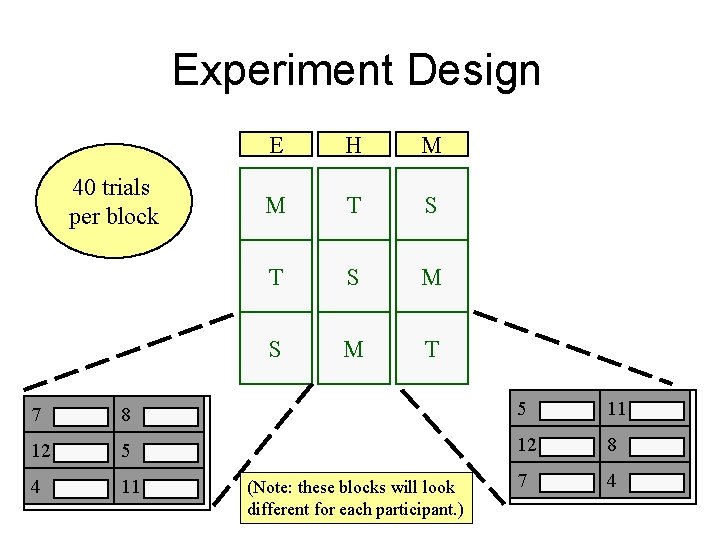

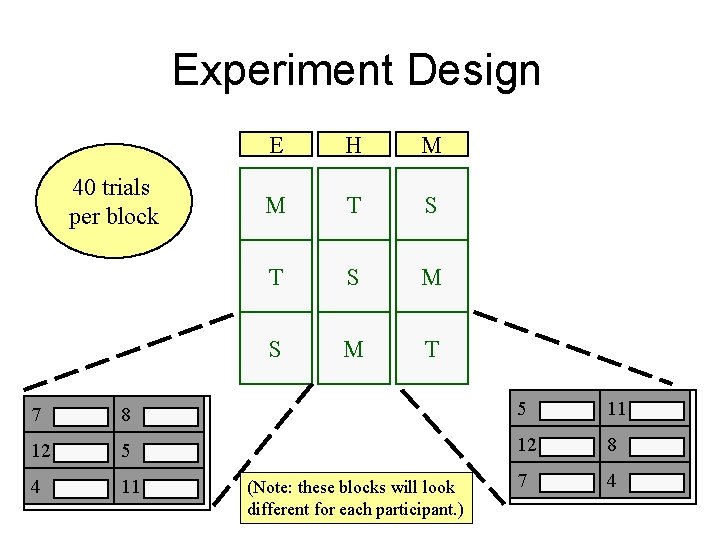

Experiment Design
40 trials per block
[Figure: the device Latin square, with a randomized sequence of menu-size blocks under each device]
(Note: these blocks will look different for each participant.)
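Here is a sketch of how one participant's session could be assembled under these rules. It assumes every device gets one 40-trial block for each of the six menu sizes and that the size order is reshuffled for each device. The function name, the seed, and the example device order are hypothetical.

```python
import random

DEVICES = ["Mouse", "Trackball", "Stylus"]
MENU_SIZES = [4, 5, 7, 8, 11, 12]
TRIALS_PER_BLOCK = 40

def participant_schedule(device_order, rng):
    """Build one participant's blocks: for each device (in the order from the
    participant's Latin-square column), run one block per menu size, with the
    size blocks shuffled independently for each device."""
    blocks = []
    for device in device_order:
        sizes = MENU_SIZES[:]  # copy so the shared list is not reordered
        rng.shuffle(sizes)
        for size in sizes:
            blocks.append((device, size, TRIALS_PER_BLOCK))
    return blocks

rng = random.Random(213)  # fixed seed only so the example is reproducible
schedule = participant_schedule(["Trackball", "Stylus", "Mouse"], rng)
print(len(schedule))                             # 18 blocks
print(sum(trials for _, _, trials in schedule))  # 720 trials
```

Under these assumptions, each participant completes 3 × 6 = 18 blocks, or 720 trials. Passing in a seeded `random.Random` keeps a given participant's order reproducible while still varying across seeds.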

Experiment Overall Results
So exposing menus is faster … or is it? Let's factor things out more.

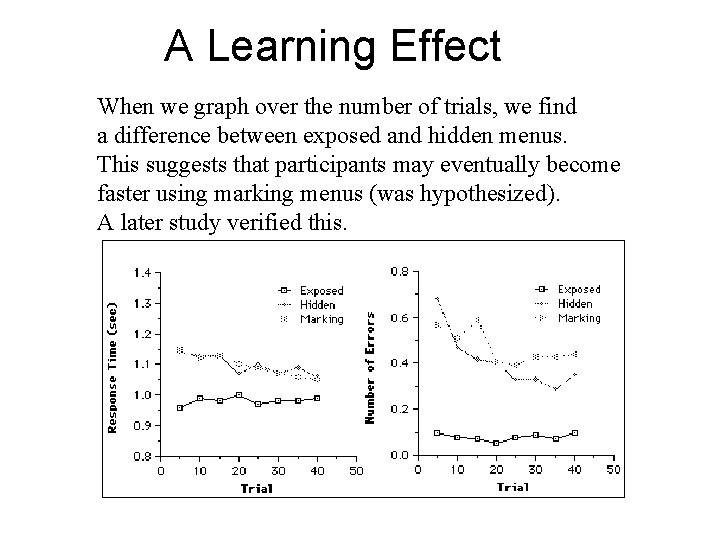

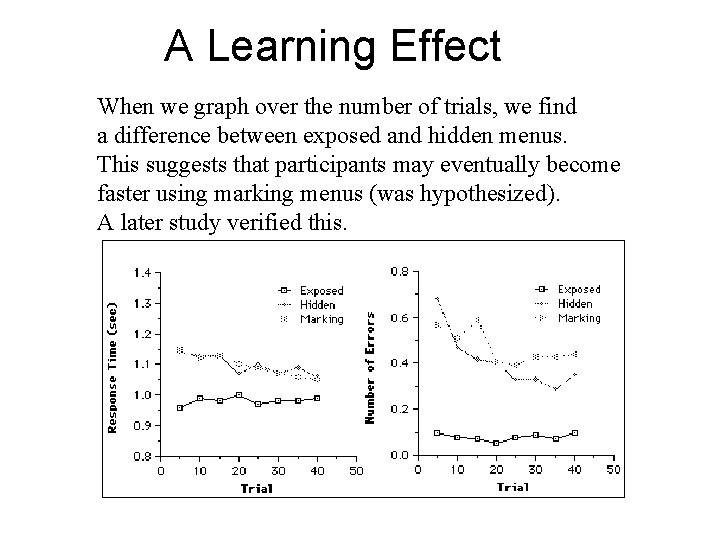

A Learning Effect
When we graph performance over the number of trials, we find a difference between exposed and hidden menus. This suggests that participants may eventually become faster using marking menus (as hypothesized). A later study verified this.

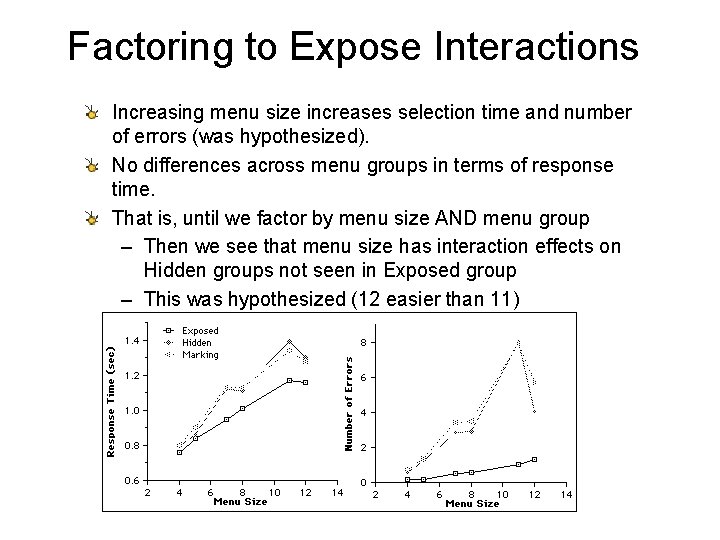

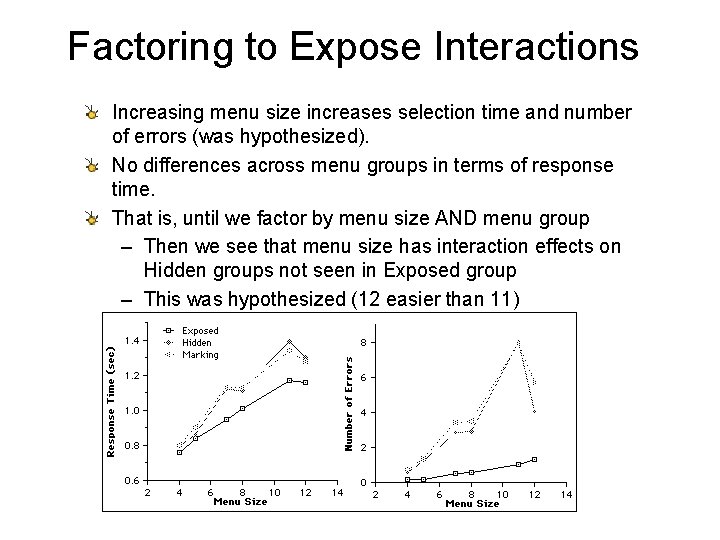

Factoring to Expose Interactions
Increasing menu size increases selection time and number of errors (as hypothesized).
No differences across menu groups in terms of response time.
That is, until we factor by menu size AND menu group:
  – then we see that menu size has interaction effects on the Hidden groups that are not seen in the Exposed group
  – this was hypothesized (12 easier than 11)

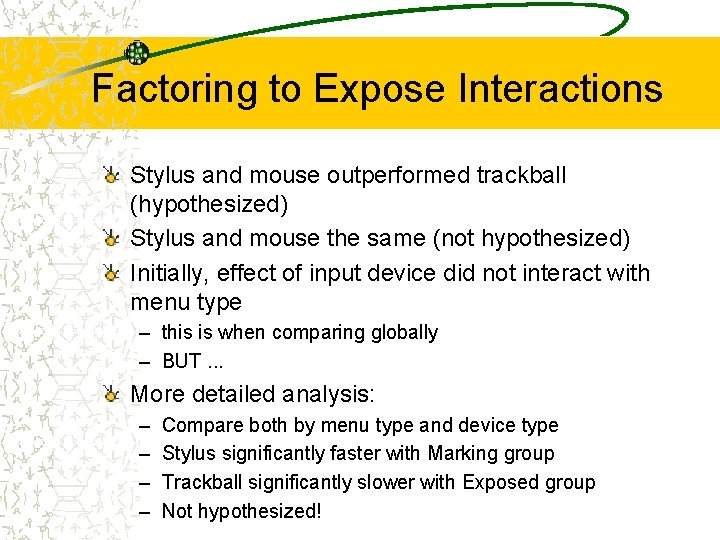

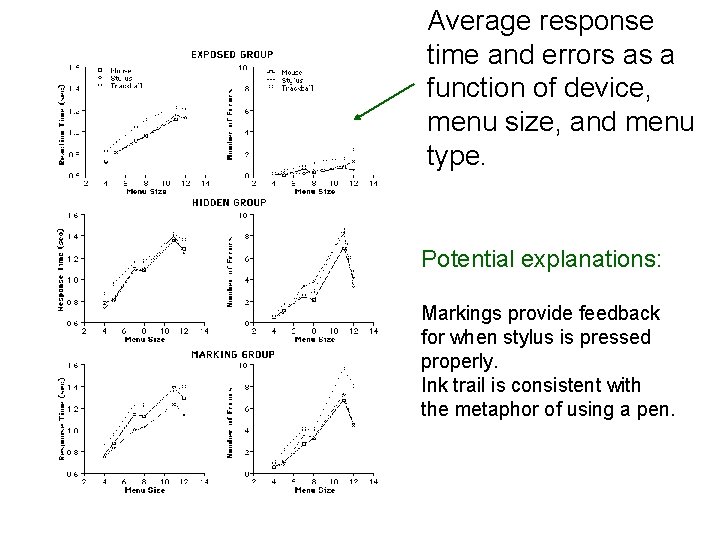

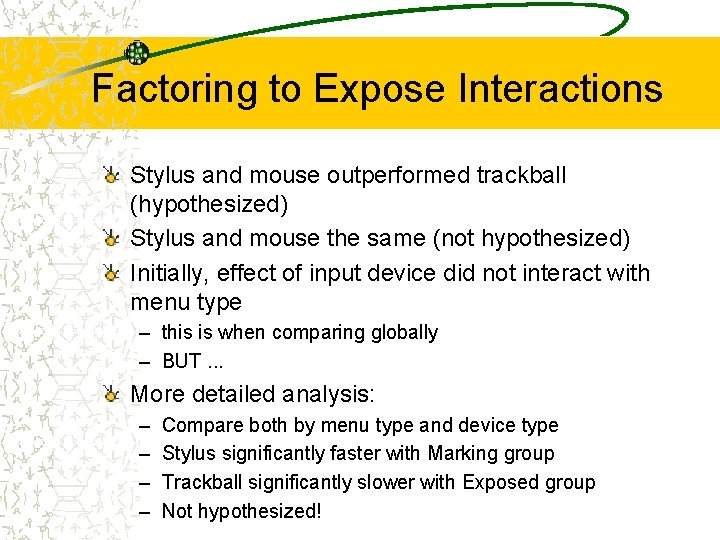

Factoring to Expose Interactions
Stylus and mouse outperformed trackball (hypothesized)
Stylus and mouse the same (not hypothesized)
Initially, the effect of input device did not interact with menu type
  – this is when comparing globally
  – BUT...
More detailed analysis:
  – compare both by menu type and device type
  – stylus significantly faster with Marking group
  – trackball significantly slower with Exposed group
  – not hypothesized!

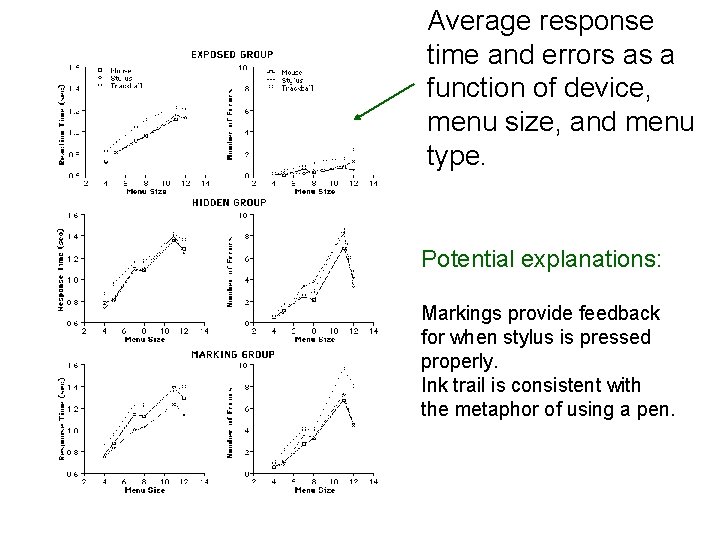

Average response time and errors as a function of device, menu size, and menu type.
Potential explanations:
  – Markings provide feedback for when the stylus is pressed properly.
  – The ink trail is consistent with the metaphor of using a pen.

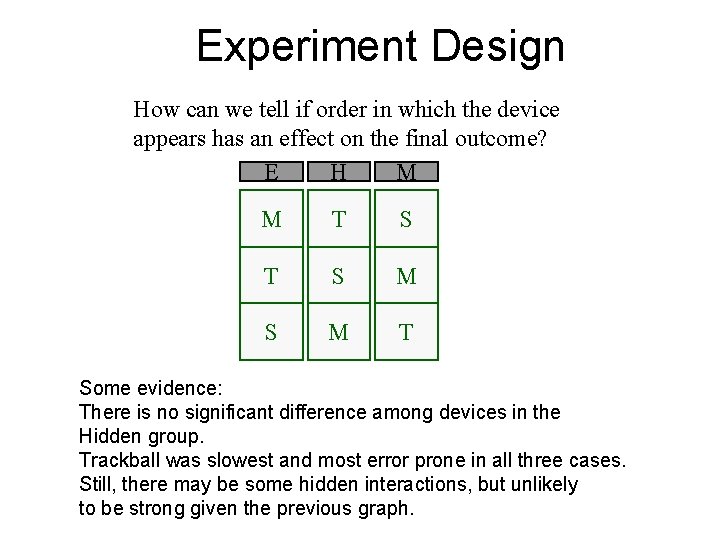

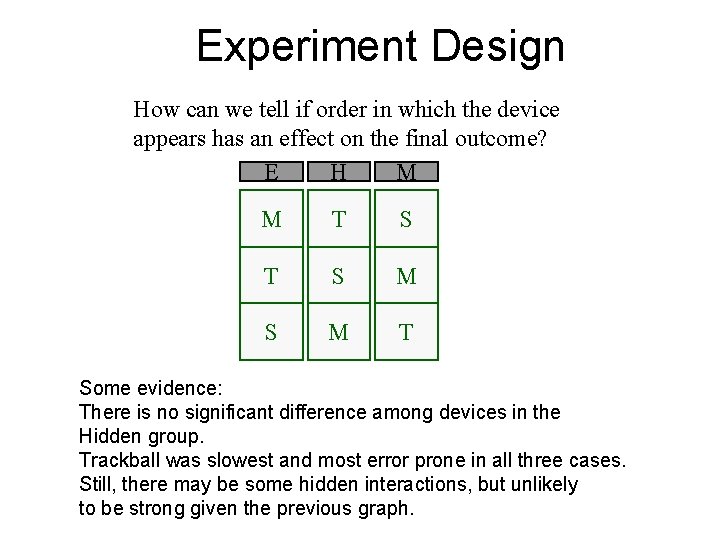

Experiment Design
How can we tell if the order in which the devices appear has an effect on the final outcome?
Some evidence:
  – There is no significant difference among devices in the Hidden group.
  – Trackball was slowest and most error prone in all three cases.
Still, there may be some hidden interactions, but they are unlikely to be strong given the previous graph.

Statistical Tests
Need to test for statistical significance
  – This is a big area
  – Assuming a normal distribution:
    • Student's t-test to compare the means of two conditions
    • ANOVA to compare the means of more than two conditions
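Here is a minimal sketch of the two test statistics using only Python's `statistics` module. It computes the pooled-variance Student's t and the one-way ANOVA F, but not p-values. For those, compare against t/F distribution tables or use a library such as `scipy.stats.ttest_ind` and `scipy.stats.f_oneway`. The data are hypothetical values chosen for easy arithmetic, not results from the study, and the function names are hypothetical.

```python
from math import sqrt
from statistics import mean, variance

def students_t(x, y):
    """Two-sample Student's t statistic with pooled variance
    (assumes normal populations with equal variance)."""
    nx, ny = len(x), len(y)
    pooled = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2)
    return (mean(x) - mean(y)) / sqrt(pooled * (1 / nx + 1 / ny))

def one_way_anova_f(*groups):
    """One-way ANOVA F statistic: between-group mean square divided by
    within-group mean square."""
    all_values = [v for g in groups for v in g]
    grand_mean = mean(all_values)
    k, n = len(groups), len(all_values)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))

# Hypothetical response times for three conditions, for illustration only.
a, b, c = [1, 2, 3], [4, 5, 6], [7, 8, 9]
print(round(students_t(a, b), 2))  # -3.67
print(one_way_anova_f(a, b, c))    # 27.0
```

For two groups the tests agree: the ANOVA F equals the square of the pooled t statistic (here 13.5 = 3.67²).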

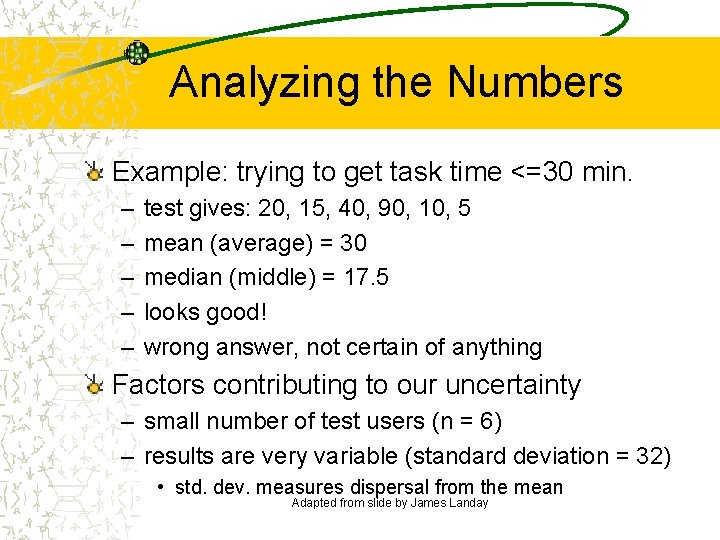

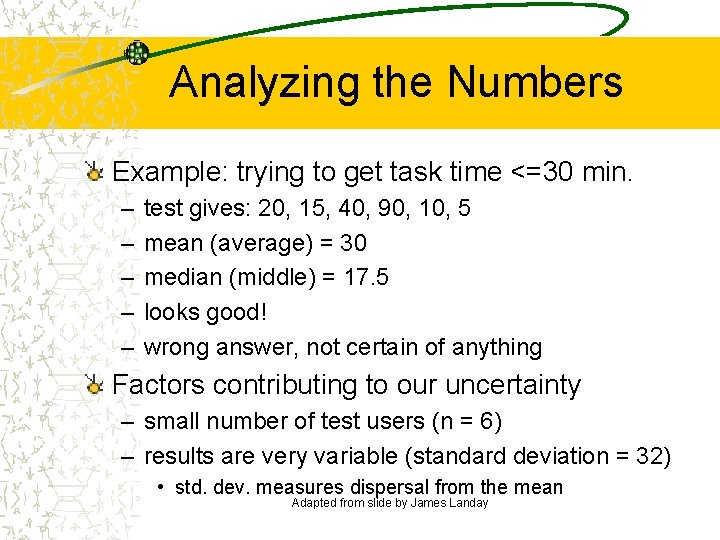

Analyzing the Numbers
Example: trying to get task time <= 30 min.
  – test gives: 20, 15, 40, 90, 10, 5
  – mean (average) = 30
  – median (middle) = 17.5
  – looks good!
  – wrong answer: we are not certain of anything
Factors contributing to our uncertainty
  – small number of test users (n = 6)
  – results are very variable (standard deviation = 32)
    • std. dev. measures dispersal from the mean
Adapted from slide by James Landay
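The summary numbers on this slide can be reproduced with Python's `statistics` module. The reported standard deviation of 32 matches the sample standard deviation (dividing by n - 1), rounded.

```python
from statistics import mean, median, stdev

task_times = [20, 15, 40, 90, 10, 5]  # minutes, from the slide

print(mean(task_times))             # 30
print(median(task_times))           # 17.5
print(round(stdev(task_times), 1))  # 31.8 (sample standard deviation)
```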

Analyzing the Numbers (cont.)
This is what statistics are for.
Crank through the procedures and you find
  – 95% certain that the typical value is between 5 & 55
Usability test data is quite variable
  – need lots of data to get good estimates of typical values
  – 4 times as many tests will only narrow the range by 2x
Adapted from slide by James Landay
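The slide does not say which procedure produced the 5-to-55 range. This sketch shows that a normal (z ≈ 1.96) approximation, mean ± z · s / √n, reproduces it. That choice is an assumption: with only six users, a t-based interval (t ≈ 2.571 for 5 degrees of freedom) would be noticeably wider. The function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev

def normal_ci(values, confidence=0.95):
    """Confidence interval for the mean using a normal (z) approximation:
    mean +/- z * s / sqrt(n)."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    m, s, n = mean(values), stdev(values), len(values)
    half = z * s / sqrt(n)
    return m - half, m + half

def half_width(s, n, z=1.96):
    """Half-width of the interval for a given standard deviation and sample size."""
    return z * s / sqrt(n)

task_times = [20, 15, 40, 90, 10, 5]
low, high = normal_ci(task_times)
print(round(low), round(high))                           # 5 55
# Quadrupling n with the same spread only halves the width:
print(round(half_width(32, 6) / half_width(32, 24), 6))  # 2.0
```

Because the width shrinks with √n, quadrupling the number of tests only halves it, which is the slide's point about needing 4 times as many tests.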

Followup Work
Hierarchical Marking Menu study

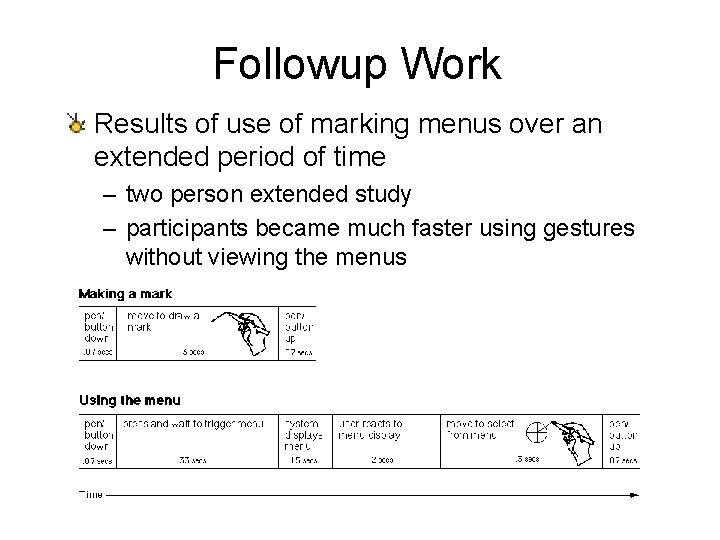

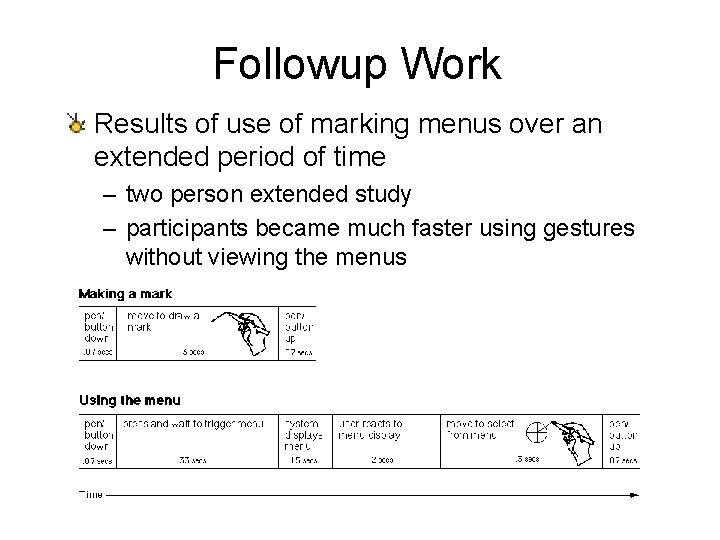

Followup Work
Results of use of marking menus over an extended period of time
  – two-person extended study
  – participants became much faster using gestures without viewing the menus

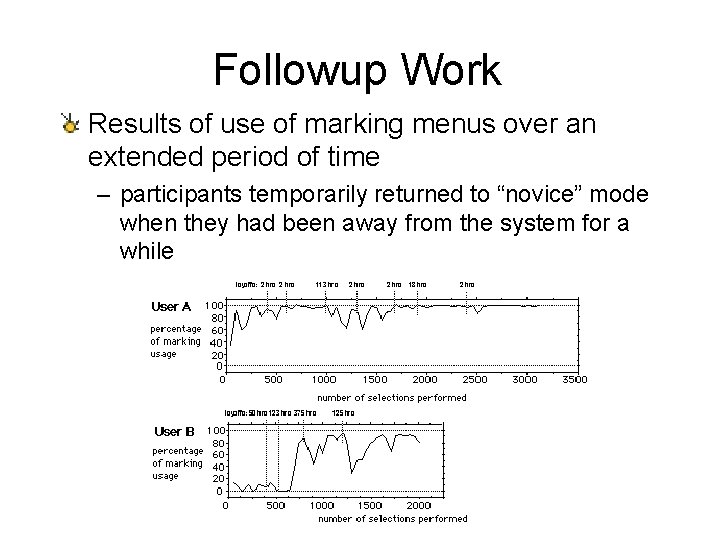

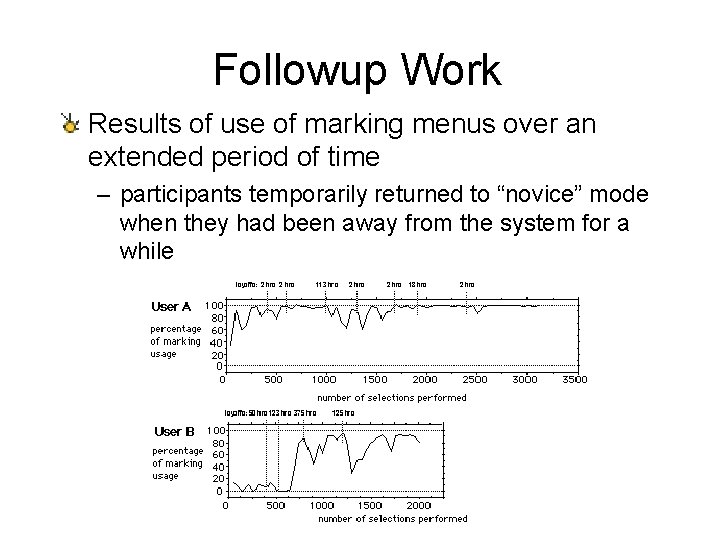

Followup Work
Results of use of marking menus over an extended period of time
  – participants temporarily returned to “novice” mode when they had been away from the system for a while

Summary
Formal studies can reveal detailed information but take extensive time/effort
Human participants entail special requirements
Experiment design involves
  – factors, levels, participants, tasks, hypotheses
  – important to consider which factors are likely to have real effects on the results, and isolate these
Analysis
  – often need to involve a statistician to do it right
  – need to determine statistical significance
  – important to make plots and explore the data

References
Kurtenbach, Sellen, and Buxton, Some Articulatory and Cognitive Aspects of “Marking Menus”, Graphics Interface '94, http://reality.sgi.com/gordo_tor/papers
Kurtenbach and Buxton, User Learning and Performance with Marking Menus, Graphics Interface '94, http://reality.sgi.com/gordo_tor/papers
Jain, The Art of Computer Systems Performance Analysis, Wiley, 1991
http://www.statsoft.com/textbook/stanman.html
Gonick and Smith, The Cartoon Guide to Statistics, HarperPerennial, 1993
Dix et al. textbook

Discuss
Jeffries et al. compared 4 evaluation techniques:
  – Heuristic Evaluation
  – Software Guidelines
  – Cognitive Walkthroughs
  – Usability Testing
Findings?