Signal Enhancement Using Multivariate Classification Techniques and Physical

- Slides: 18

Signal Enhancement Using Multivariate Classification Techniques and Physical Constraints

Ricardo Vilalta, Puneet Sarda, Gordon Mutchler, Paul Padley

Outline

- Introduction
- The Goal
- Kinematic Fitting
- Experiments

Introduction

- Multivariate Classification Techniques
  - Bayesian Functions
  - Neural Networks
  - Decision Trees
  - Rule based
- Experiments using CLAS
  - Detecting charged particles, inferring uncharged
  - Measure momentum, polar angle and azimuthal angle, time of flight
  - Infer mass
- Using G1C dataset

Introduction

- Reactions we will look for
- Background reactions

The K*+ measurement is not the real interest. We use it as a convenient test case to develop the multivariate techniques that will be used on new data.

The Goal

- Empirical comparison of several multivariate classification techniques for signal enhancement
- Use of Kinematic Fitting to enhance the original feature representation
- Effect of cost matrices on generalization performance

Kinematic Fitting

- Mathematical procedure
- Takes advantage of constraints such as energy/momentum conservation to:
  - improve measured quantities
  - provide a means to cut background
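To illustrate the idea of a constrained fit, here is a minimal sketch, not the fitting code used in the experiment. It assumes a single linear constraint and uncorrelated measurement errors; real kinematic fits apply several nonlinear constraints (energy and momentum conservation) and are usually iterated. The function name and the numbers are hypothetical.

```python
import math

def fit_one_linear_constraint(measured, sigmas, coeffs, target=0.0):
    """Least-squares adjustment of measured values so that
    sum(coeffs[i] * fitted[i]) == target, assuming uncorrelated errors."""
    variances = [s * s for s in sigmas]
    # How badly the raw measurements violate the constraint.
    violation = sum(b * y for b, y in zip(coeffs, measured)) - target
    # Variance of the constraint expression under the measurement errors.
    constraint_var = sum(b * b * v for b, v in zip(coeffs, variances))
    lam = violation / constraint_var  # Lagrange multiplier
    # Poorly measured quantities (large variance) absorb more of the correction.
    fitted = [y - v * b * lam for y, b, v in zip(measured, coeffs, variances)]
    chi2 = violation * violation / constraint_var
    # Chi-square survival probability, valid for ONE degree of freedom only.
    confidence = math.erfc(math.sqrt(chi2 / 2.0))
    return fitted, chi2, confidence

# Hypothetical numbers: three momentum components that should sum to zero.
fitted, chi2, confidence = fit_one_linear_constraint(
    measured=[1.0, 2.0, -2.7], sigmas=[0.1, 0.2, 0.2], coeffs=[1.0, 1.0, 1.0])
```

The fitted values satisfy the constraint exactly, which is the sense in which the fit improves measured quantities. A small confidence level flags an event that is hard to reconcile with the assumed reaction, so a cut on it is one way to reject background.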

Experiments

- WEKA
- Characteristics of Data
- Feature Selection
- Initial Classification
- Cost Sensitive Classification

Characteristics of Data

- 1000 MC signal samples
- ~6000 MC background samples
- ~13,500 real samples
- 45 attributes
  - Attributes 1–4: Confidence levels
  - Attribute 5: Total energy level
  - Attributes 6–44: (3 measured + 5 derived) four-vectors + mass²
  - Attribute 45: Class (signal/background)

Feature Selection

- Analysis using Information Gain
- Comparison of top 5/10/15 attributes
- Final selection = top 5
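The ranking step above can be sketched as follows. This is an illustrative pure-Python version, not the WEKA implementation: it assumes the attributes have already been discretized into bins, and the attribute names and toy values are hypothetical.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def information_gain(values, labels):
    """Reduction in class entropy obtained by splitting on a discrete attribute."""
    n = len(labels)
    groups = {}
    for value, label in zip(values, labels):
        groups.setdefault(value, []).append(label)
    # Entropy that remains after the split, weighted by group size.
    remainder = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - remainder

def top_k(columns, labels, k):
    """Names of the k attributes with the highest information gain."""
    ranked = sorted(columns,
                    key=lambda name: information_gain(columns[name], labels),
                    reverse=True)
    return ranked[:k]

# Hypothetical toy data: one informative attribute and one uninformative one.
labels = ["signal", "signal", "background", "background"]
columns = {
    "noise_bin": ["a", "b", "a", "b"],
    "conf_level_bin": ["high", "high", "low", "low"],
}
best = top_k(columns, labels, 1)
```

Keeping only the top-ranked attributes, as in the final selection of the top 5, corresponds to calling `top_k` with `k=5` on the full attribute set.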

Initial Classification

| Algorithm | Accuracy (%) | False Positive Rate |
|---|---|---|
| Naive Bayes | 85.59 | 0.201 |
| Support Vector | 87.69 | 0.187 |
| Multilayer Perceptron | 88.57 | 0.143 |
| ADTree | 88.90 | 0.115 |
| C4.5 | 89.23 | 0.127 |
| Random Forest | 90.02 | 0.116 |
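For reference, the two reported metrics can be computed from confusion-matrix counts as in the sketch below. It assumes that "positive" means the signal class, so a false positive is a background event labeled as signal. The counts are hypothetical and are not the experiment's results.

```python
def accuracy(tp, fp, tn, fn):
    """Fraction of all events that were classified correctly."""
    return (tp + tn) / (tp + fp + tn + fn)

def false_positive_rate(fp, tn):
    """Fraction of true background events that were labeled as signal."""
    return fp / (fp + tn)

# Hypothetical counts, chosen only to make the arithmetic easy to follow.
tp, fn = 900, 100    # signal events: correctly / incorrectly classified
fp, tn = 600, 5400   # background events: incorrectly / correctly classified

acc = accuracy(tp, fp, tn, fn)        # 6300 / 7000
fpr = false_positive_rate(fp, tn)     # 600 / 6000
```

Because background samples outnumber signal samples, a classifier can reach high accuracy while still letting a noticeable share of background through, which is why the false positive rate is reported alongside accuracy.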

Cost Sensitive Classification

| Algorithm | Accuracy (%) | False Positive Rate |
|---|---|---|
| Naive Bayes | 86.79 | 0.068 |
| Support Vector | 88.29 | 0.016 |
| Multilayer Perceptron | 90.58 | 0.03 |
| ADTree | 90.81 | 0.037 |
| C4.5 | 91.97 | 0.047 |
| Random Forest | 92.34 | 0.043 |
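One common way to apply a cost matrix is to predict the class with the lowest expected cost instead of the most probable class. The sketch below shows that rule only. The slides do not state the cost matrix or the exact mechanism used, so the costs here (a false positive costing five times a false negative) are hypothetical.

```python
def min_expected_cost_class(probs, cost):
    """Pick the prediction with the lowest expected cost.

    probs: dict mapping class -> estimated probability for this event.
    cost:  cost[actual][predicted], with zero cost on the diagonal.
    """
    classes = list(probs)

    def expected_cost(predicted):
        return sum(probs[actual] * cost[actual][predicted] for actual in classes)

    return min(classes, key=expected_cost)

# Hypothetical cost matrix: calling background "signal" is 5x worse
# than calling signal "background".
cost = {
    "signal":     {"signal": 0.0, "background": 1.0},
    "background": {"signal": 5.0, "background": 0.0},
}

# An event that is only 70% likely to be signal is now rejected...
borderline = min_expected_cost_class({"signal": 0.7, "background": 0.3}, cost)
# ...while a 90% likely signal event is still accepted.
confident = min_expected_cost_class({"signal": 0.9, "background": 0.1}, cost)
```

Raising the cost of a false positive makes the classifier demand more evidence before labeling an event as signal, which is consistent with the lower false positive rates in the table above.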

Comparison

| Algorithm | FP Rate (Initial) | FP Rate (Cost Sensitive) |
|---|---|---|
| Naive Bayes | 0.201 | 0.068 |
| Support Vector | 0.187 | 0.016 |
| Multilayer Perceptron | 0.143 | 0.03 |
| ADTree | 0.115 | 0.037 |
| C4.5 | 0.127 | 0.047 |
| Random Forest | 0.116 | 0.043 |

Random Forest

- Grows many classification trees
- Voting among trees
- Growing a tree
  - Sampling on N cases
  - M input variables
  - No pruning
- Error rate
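The ingredients listed above (bootstrap sampling of the N cases, a random subset of the M input variables, and voting) can be sketched as below. This is a simplified illustration, not the WEKA implementation: each "tree" is a single-split stump standing in for a fully grown, unpruned tree, and the data and function names are hypothetical.

```python
import random
from collections import Counter

def train_stump(rows, labels, features):
    """Fit a one-split tree using only the given feature indices."""
    majority = Counter(labels).most_common(1)[0][0]
    best = None  # (errors, feature, threshold, label_above, label_below)
    for f in features:
        values = sorted({r[f] for r in rows})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2.0
            above = Counter(l for r, l in zip(rows, labels) if r[f] > t)
            below = Counter(l for r, l in zip(rows, labels) if r[f] <= t)
            la = above.most_common(1)[0][0]
            lb = below.most_common(1)[0][0]
            errors = (sum(above.values()) - above[la]) + (sum(below.values()) - below[lb])
            if best is None or errors < best[0]:
                best = (errors, f, t, la, lb)
    if best is None:  # no usable split: always predict the majority class
        return lambda row: majority
    _, f, t, la, lb = best
    return lambda row: la if row[f] > t else lb

def train_forest(rows, labels, n_trees, m, rng):
    """Grow n_trees stumps, each on a bootstrap sample and m random features."""
    n, total_features = len(rows), len(rows[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]    # N cases, with replacement
        feats = rng.sample(range(total_features), m)  # m of the M input variables
        forest.append(train_stump([rows[i] for i in idx],
                                  [labels[i] for i in idx], feats))
    return forest

def predict(forest, row):
    """Majority vote among the trees."""
    return Counter(tree(row) for tree in forest).most_common(1)[0][0]

# Hypothetical toy data with three input variables.
rows = [(0.9, 0.8, 0.7), (0.8, 0.9, 0.8), (0.7, 0.7, 0.9), (0.9, 0.9, 0.8),
        (0.1, 0.2, 0.3), (0.2, 0.1, 0.2), (0.3, 0.3, 0.1), (0.1, 0.1, 0.2)]
labels = ["signal"] * 4 + ["background"] * 4
forest = train_forest(rows, labels, n_trees=25, m=2, rng=random.Random(0))
```

In a full random forest the feature subset is redrawn at every node of each tree; with a single split per tree, drawing it once per tree is equivalent. The cases left out of each bootstrap sample can also be used to estimate the error rate, which this sketch omits.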

Histograms

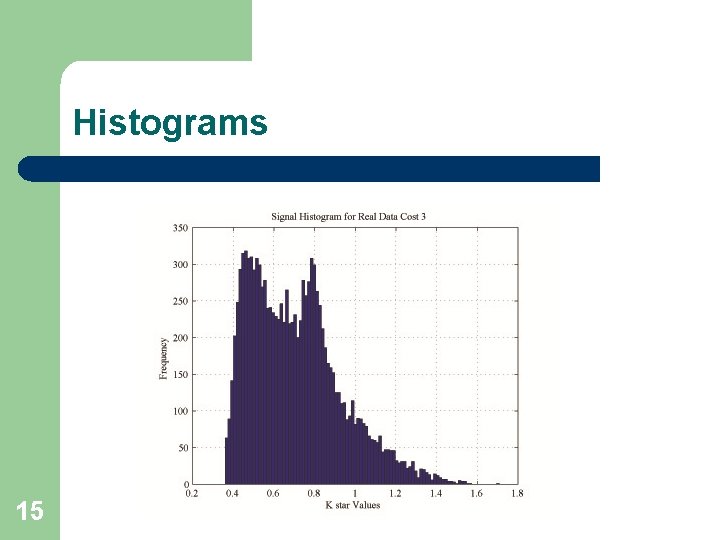

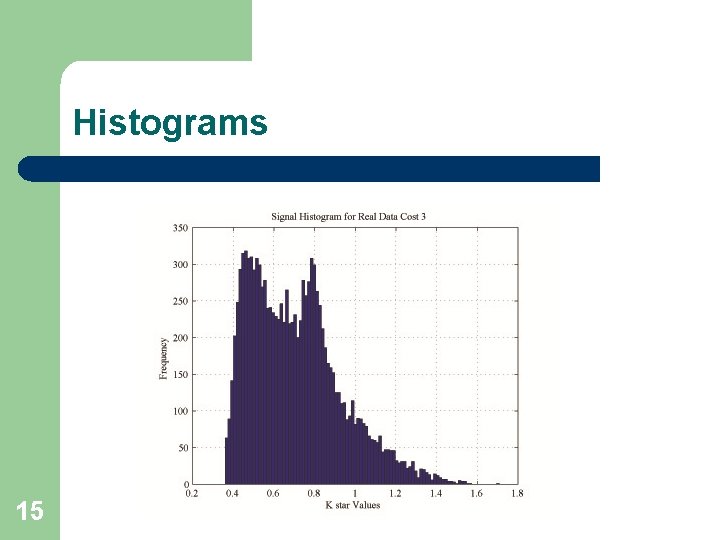

Histograms

Histograms

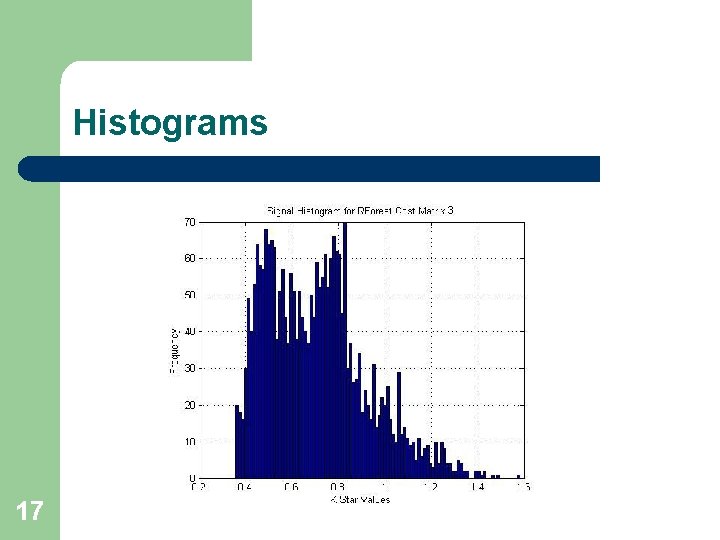

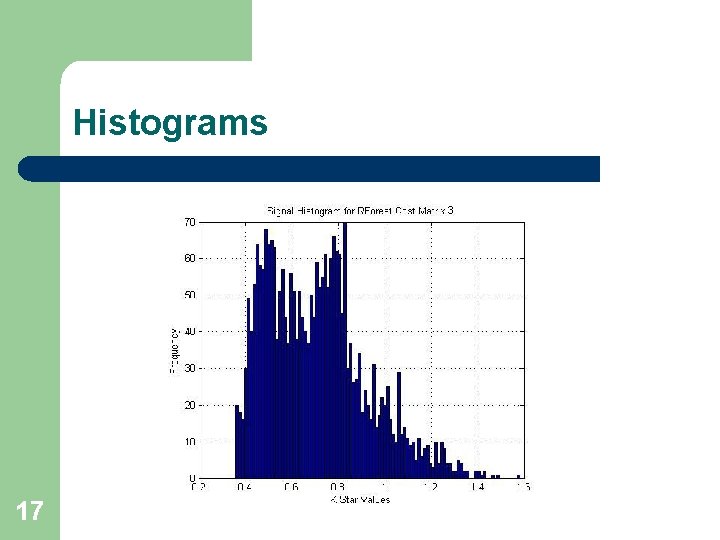

Histograms

Summary

- Monte Carlo Data
- Kinematic Fitting
- Learning Algorithm
- Real Data
- Signal Enhancement