Shared Memory Programming with Pthreads Critical section & Thread Synchronization

Outline
• Critical section & thread synchronization
  § Mutexes
  § Semaphores
  § Producer-consumer synchronization
  § Barriers and condition variables
• Thread safety

Copyright © 2010, Elsevier Inc. All rights reserved.

Synchronization & Critical Sections

Why Do Parallel Computing?
• Limits of single-CPU computing
  § Performance
  § Available memory
• Parallel computing allows one to:
  § Solve problems that don't fit on a single CPU
  § Solve problems that can't be solved in a reasonable time
• We can solve…
  § larger problems
  § faster
  § more cases

TYPES OF PARALLELISM

Multicore Programming: Types of Parallelism
• Data parallelism: distributes subsets of the same data across multiple cores and performs the same operation on each subset.
  – Example: summing the contents of an array of size N, with each core summing one slice.
• Task parallelism: distributes threads/processes across cores, each performing a unique operation. Different threads may operate on the same data or on different data.
  – Example: computing different statistics (max, min, average, etc.) over the same array of elements, one statistic per thread.
  – The threads again run in parallel on separate computing cores, but each performs a unique operation.
• Fundamentally, data parallelism distributes data across multiple cores, and task parallelism distributes tasks across multiple cores.

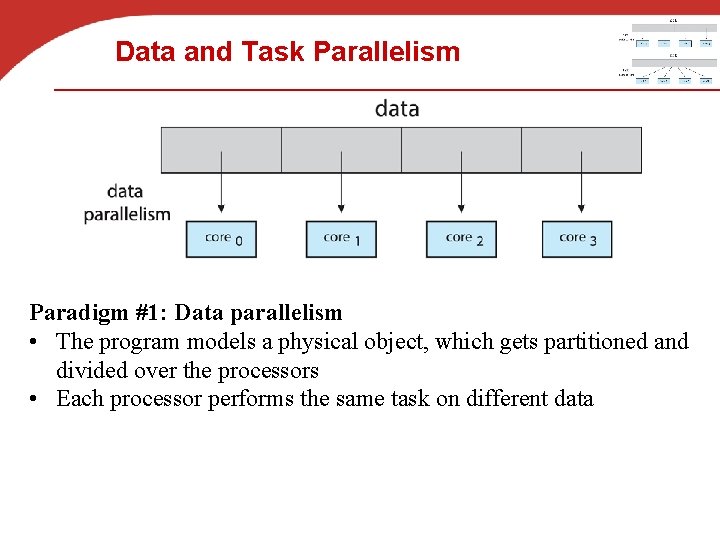

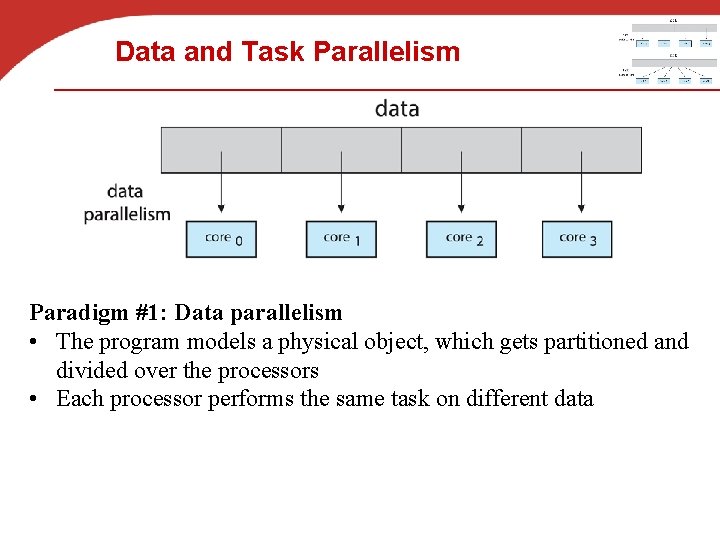

Data and Task Parallelism
Paradigm #1: Data parallelism
• The program models a physical object, which gets partitioned and divided over the processors.
• Each processor performs the same task on different data.

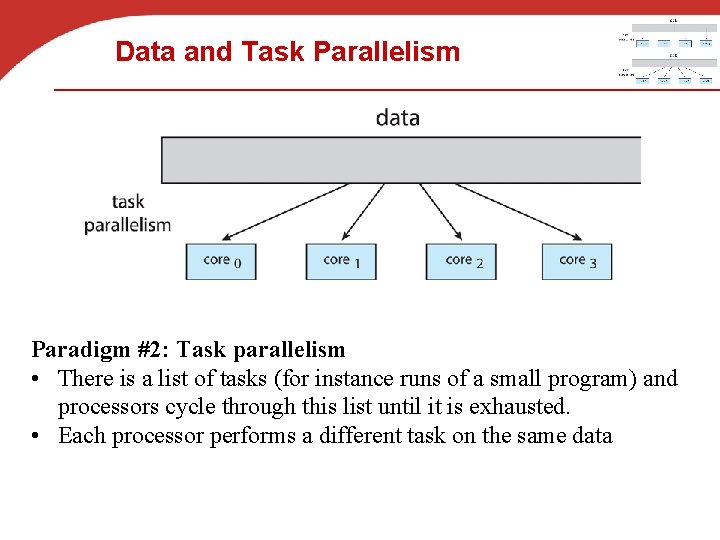

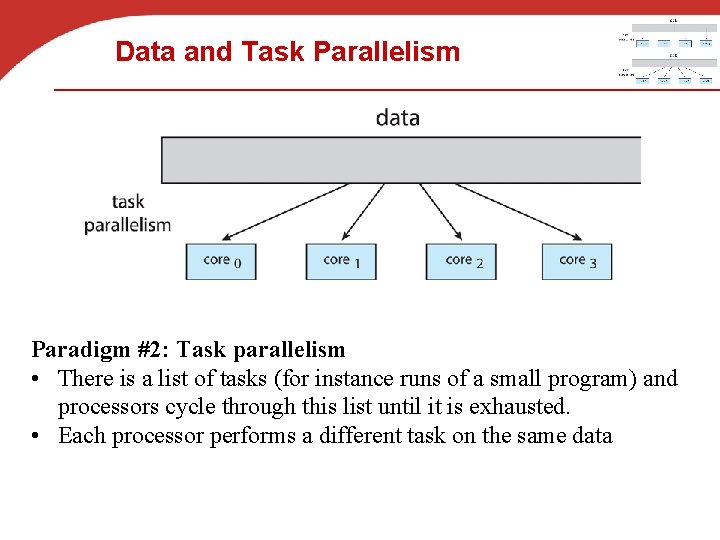

Data and Task Parallelism
Paradigm #2: Task parallelism
• There is a list of tasks (for instance, runs of a small program), and processors cycle through this list until it is exhausted.
• Each processor performs a different task on the same data.

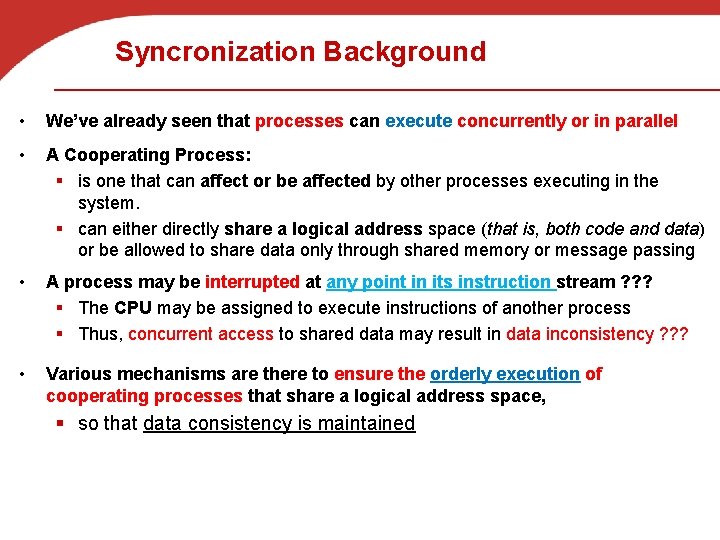

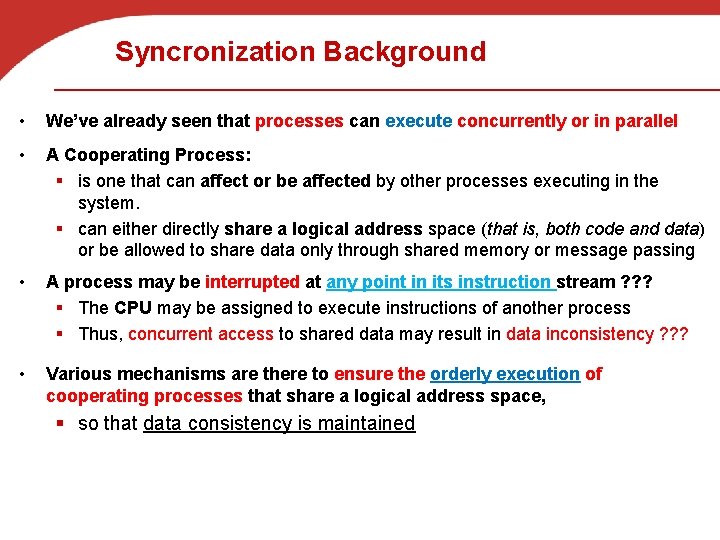

Synchronization Background
• We've already seen that processes can execute concurrently or in parallel.
• A cooperating process:
  § is one that can affect or be affected by other processes executing in the system;
  § can either directly share a logical address space (that is, both code and data) or be allowed to share data only through shared memory or message passing.
• A process may be interrupted at any point in its instruction stream.
  § The CPU may be assigned to execute instructions of another process.
  § Thus, concurrent access to shared data may result in data inconsistency.
• Various mechanisms exist to ensure the orderly execution of cooperating processes that share a logical address space, so that data consistency is maintained.

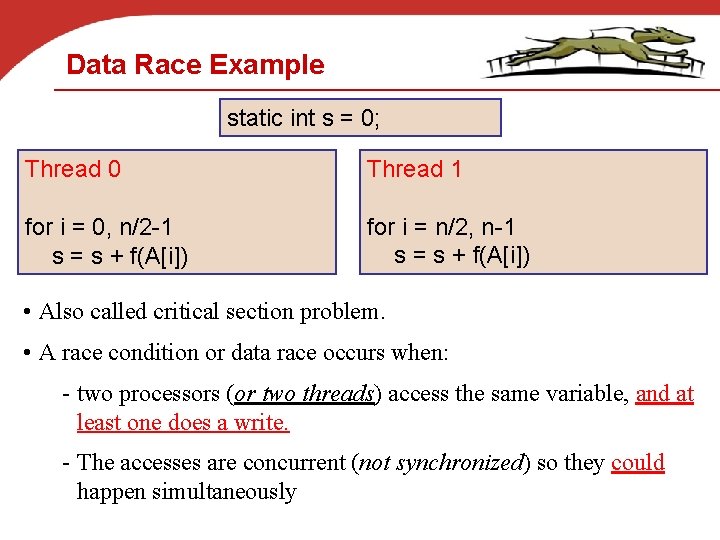

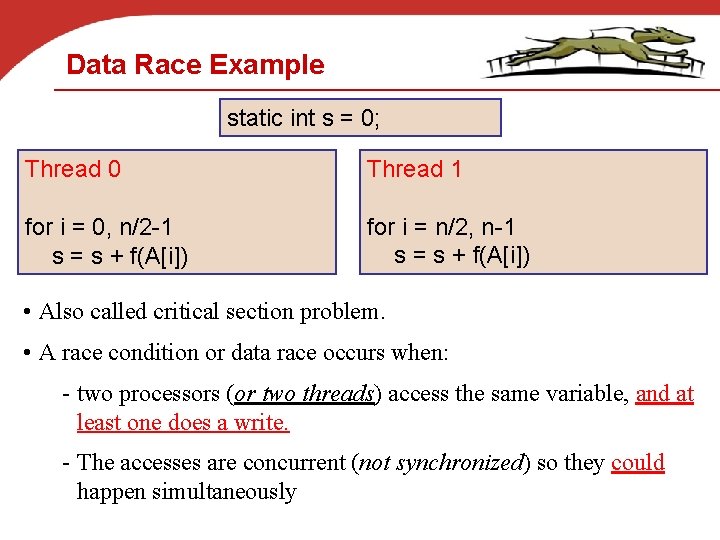

Data Race Example

    static int s = 0;

    Thread 0:                     Thread 1:
      for i = 0, n/2-1              for i = n/2, n-1
        s = s + f(A[i])               s = s + f(A[i])

• Also called the critical section problem.
• A race condition or data race occurs when:
  – two processors (or two threads) access the same variable, and at least one does a write;
  – the accesses are concurrent (not synchronized), so they could happen simultaneously.
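One way to remove the race on `s` without any lock is to give each thread a private partial sum and combine the results after both threads have been joined. The sketch below assumes `f` is the identity function and uses hypothetical names (`sum_task`, `sum_range`, `parallel_sum`) that do not appear in the slides.

```c
#include <pthread.h>
#include <stddef.h>

/* Hypothetical work descriptor: one per thread, never shared between threads. */
typedef struct {
    const long *a;      /* shared input, only read */
    size_t begin, end;  /* half-open index range [begin, end) */
    long partial;       /* private result, written by one thread only */
} sum_task;

static void *sum_range(void *arg) {
    sum_task *t = arg;
    long local = 0;
    for (size_t i = t->begin; i < t->end; i++)
        local += t->a[i];          /* stands in for s = s + f(A[i]) */
    t->partial = local;
    return NULL;
}

/* Sums a[0..n-1] with two threads. Returns 0 on success, -1 if a thread
   could not be created. */
int parallel_sum(const long *a, size_t n, long *out) {
    sum_task tasks[2] = { { a, 0, n / 2, 0 }, { a, n / 2, n, 0 } };
    pthread_t tid[2];
    int started = 0;

    while (started < 2 &&
           pthread_create(&tid[started], NULL, sum_range, &tasks[started]) == 0)
        started++;
    for (int k = 0; k < started; k++)
        pthread_join(tid[k], NULL);
    if (started < 2)
        return -1;

    *out = tasks[0].partial + tasks[1].partial;  /* combined after both joins */
    return 0;
}
```

Because no variable is written by more than one thread, there is no critical section left to protect; `pthread_join` provides the only synchronization needed before the partial results are read.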

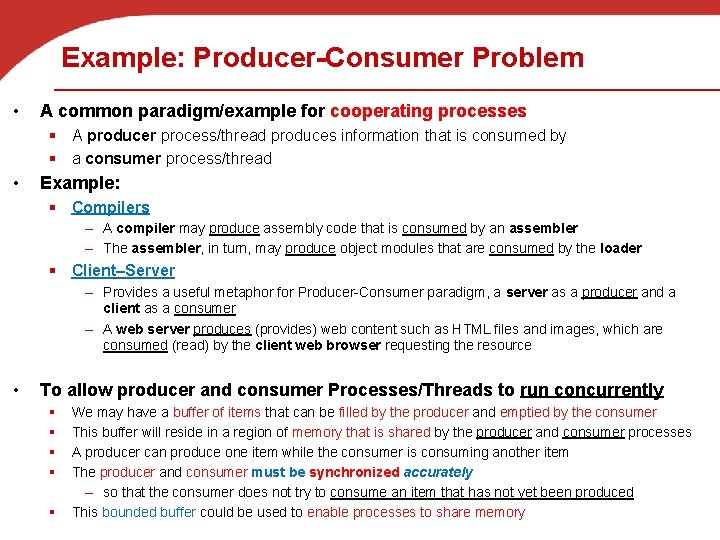

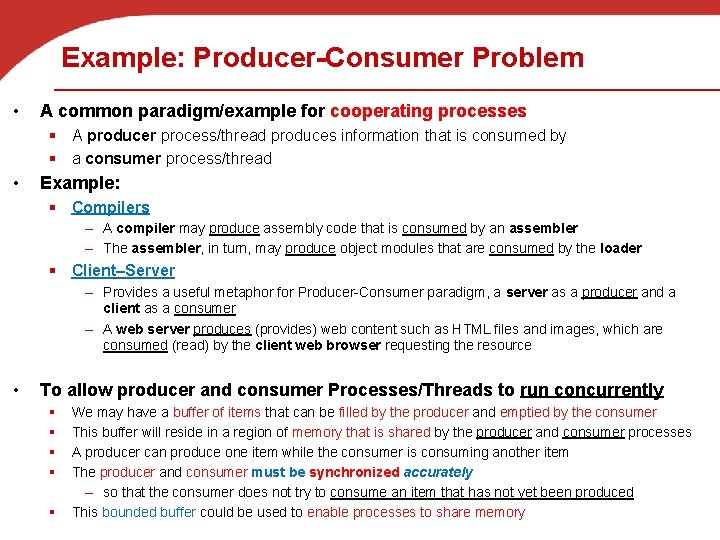

Example: Producer-Consumer Problem
• A common paradigm for cooperating processes: a producer process/thread produces information that is consumed by a consumer process/thread.
• Examples:
  § Compilers
    – A compiler may produce assembly code that is consumed by an assembler.
    – The assembler, in turn, may produce object modules that are consumed by the loader.
  § Client–server
    – Provides a useful metaphor for the producer-consumer paradigm: a server as a producer and a client as a consumer.
    – A web server produces (provides) web content such as HTML files and images, which are consumed (read) by the client web browser requesting the resource.
• To allow producer and consumer processes/threads to run concurrently:
  § We may have a buffer of items that can be filled by the producer and emptied by the consumer.
  § This buffer resides in a region of memory that is shared by the producer and consumer processes.
  § A producer can produce one item while the consumer is consuming another item.
  § The producer and consumer must be synchronized so that the consumer does not try to consume an item that has not yet been produced.
  § This bounded buffer can be used to enable processes to share memory.

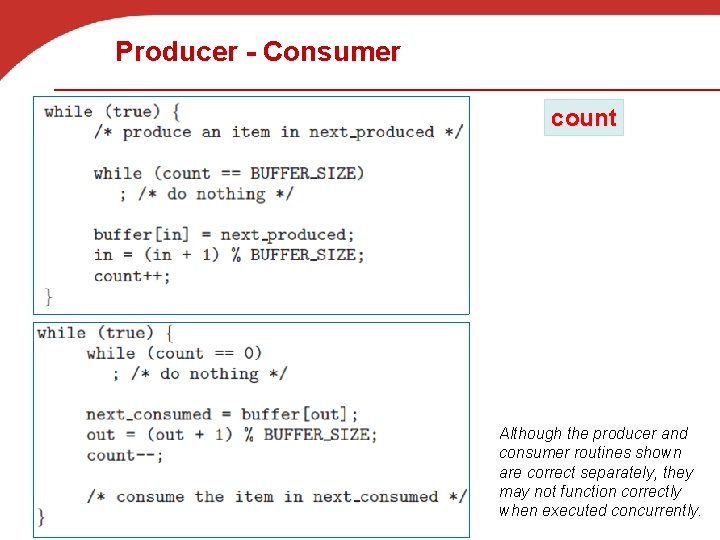

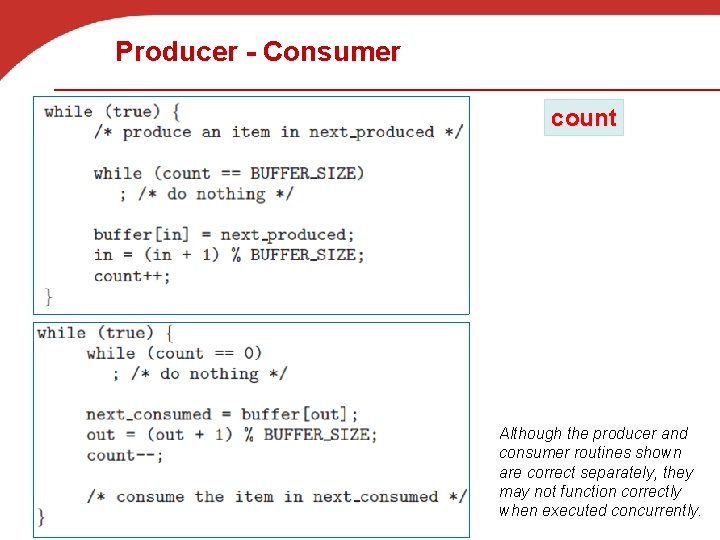

Producer-Consumer Problem
• Suppose that we want a solution to the producer-consumer problem in which two processes/threads work on the same bounded buffer.
• We can do so with an integer variable count that keeps track of the number of items in the buffer.
  § Initially, count is set to 0.
  § count is incremented by the producer after it adds a new item to the buffer.
  § count is decremented by the consumer after it removes an item from the buffer.

Producer - Consumer count Although the producer and consumer routines shown are correct separately, they may not function correctly when executed concurrently.

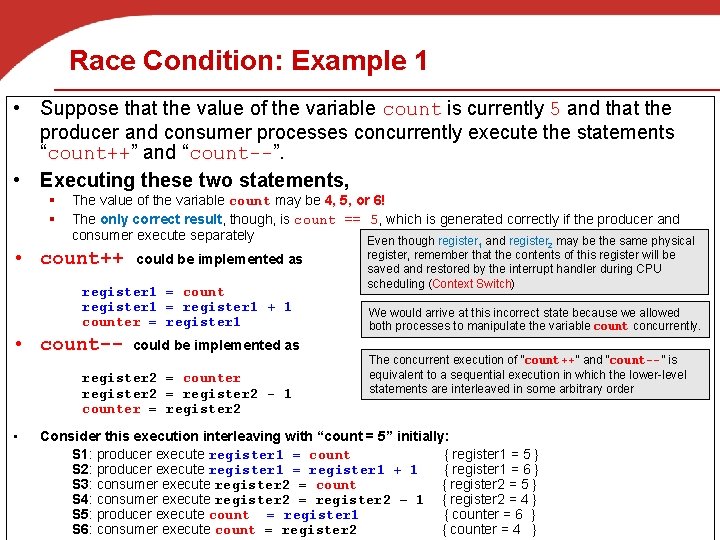

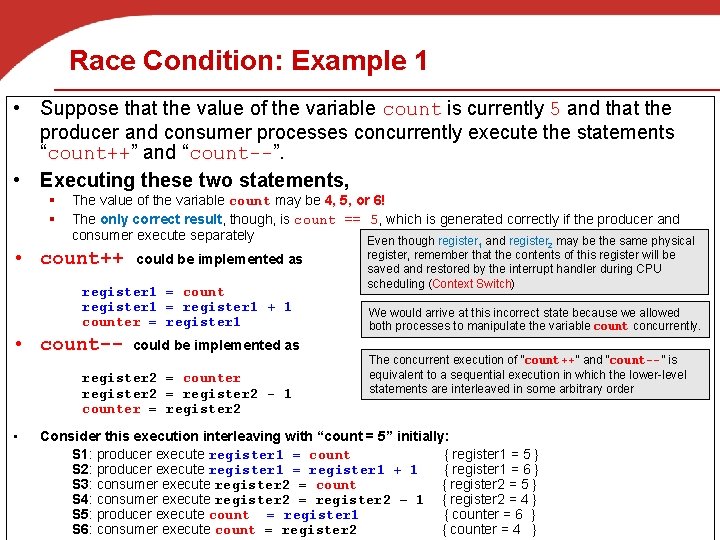

Race Condition: Example 1
• Suppose that the value of the variable count is currently 5 and that the producer and consumer processes concurrently execute the statements "count++" and "count--".
• After these two statements execute, the value of count may be 4, 5, or 6! The only correct result is count == 5, which is produced if the producer and consumer execute separately.
• count++ could be implemented as:
    register1 = count
    register1 = register1 + 1
    count = register1
• count-- could be implemented as:
    register2 = count
    register2 = register2 - 1
    count = register2
• Even though register1 and register2 may be the same physical register, the contents of this register are saved and restored by the interrupt handler during CPU scheduling (context switch).
• The concurrent execution of "count++" and "count--" is equivalent to a sequential execution in which the lower-level statements are interleaved in some arbitrary order.
• Consider this interleaving with count = 5 initially:
    S1: producer executes register1 = count           { register1 = 5 }
    S2: producer executes register1 = register1 + 1   { register1 = 6 }
    S3: consumer executes register2 = count           { register2 = 5 }
    S4: consumer executes register2 = register2 - 1   { register2 = 4 }
    S5: producer executes count = register1           { count = 6 }
    S6: consumer executes count = register2           { count = 4 }
• We arrive at this incorrect state (count == 4) because we allowed both processes to manipulate the variable count concurrently. If S5 and S6 were reversed, the result would be count == 6 instead.

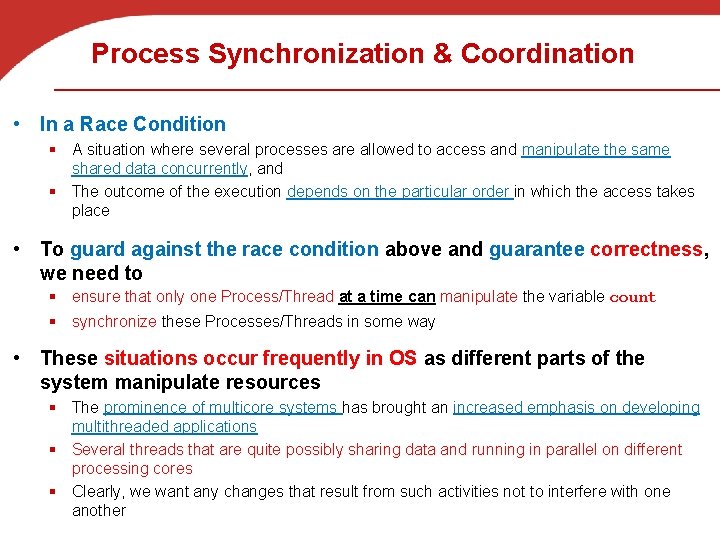

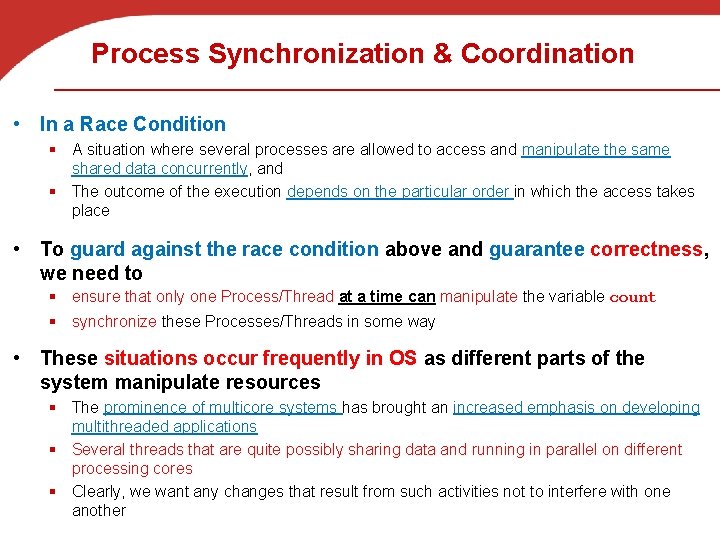

Process Synchronization & Coordination
• A race condition is a situation where:
  § several processes are allowed to access and manipulate the same shared data concurrently, and
  § the outcome of the execution depends on the particular order in which the accesses take place.
• To guard against the race condition above and guarantee correctness, we need to:
  § ensure that only one process/thread at a time can manipulate the variable count;
  § synchronize these processes/threads in some way.
• These situations occur frequently in operating systems, as different parts of the system manipulate resources.
  § The prominence of multicore systems has brought an increased emphasis on developing multithreaded applications.
  § Several threads may well be sharing data while running in parallel on different processing cores.
  § Clearly, we want any changes that result from such activities not to interfere with one another.

Synchronization Solutions
1. Busy waiting
2. Mutex (lock)
3. Semaphore
4. Condition variables

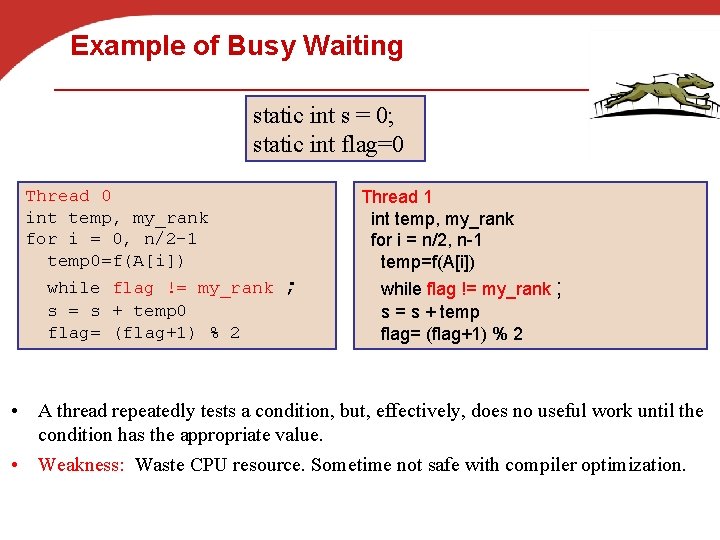

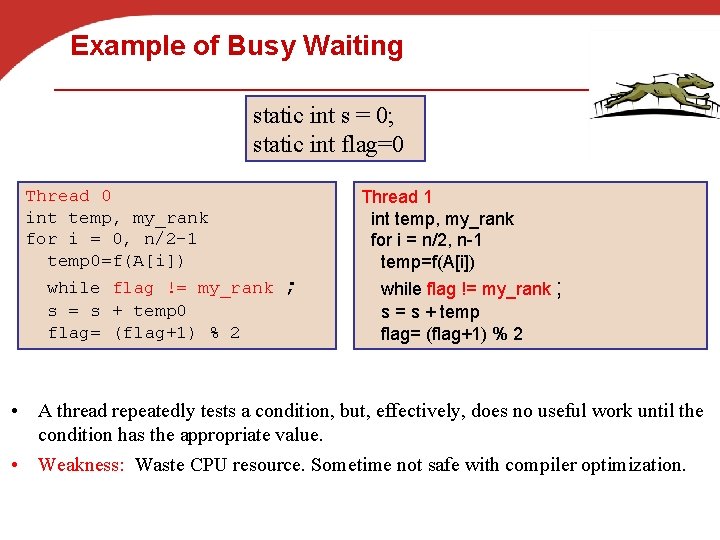

Example of Busy Waiting

    static int s = 0;
    static int flag = 0;

    Thread 0 (my_rank = 0):
      int temp;
      for i = 0, n/2-1
        temp = f(A[i])
        while flag != my_rank ;   /* busy wait */
        s = s + temp
        flag = (flag+1) % 2

    Thread 1 (my_rank = 1):
      int temp;
      for i = n/2, n-1
        temp = f(A[i])
        while flag != my_rank ;   /* busy wait */
        s = s + temp
        flag = (flag+1) % 2

• A thread repeatedly tests a condition but, effectively, does no useful work until the condition has the appropriate value.
• Weaknesses: it wastes CPU resources, and it is sometimes not safe under compiler optimization, because the compiler may reorder or cache the accesses to flag.

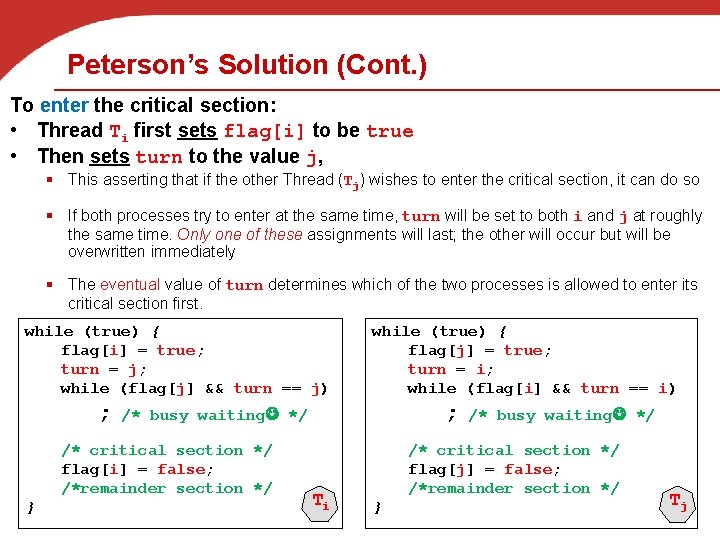

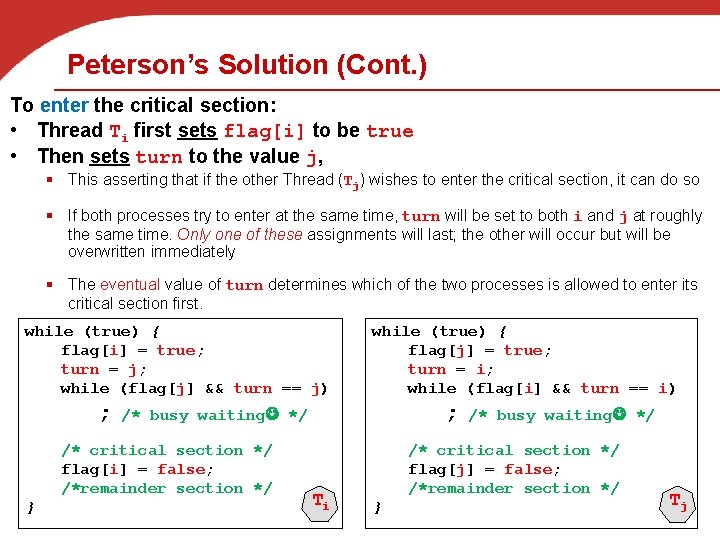

Peterson's Solution (Cont.)
To enter the critical section:
• Thread Ti first sets flag[i] to true.
• It then sets turn to the value j.
  § This asserts that if the other thread (Tj) wishes to enter the critical section, it can do so.
  § If both threads try to enter at the same time, turn will be set to both i and j at roughly the same time. Only one of these assignments will last; the other will occur but will be overwritten immediately.
  § The eventual value of turn determines which of the two threads is allowed to enter its critical section first.

    Ti:
    while (true) {
        flag[i] = true;
        turn = j;
        while (flag[j] && turn == j)
            ; /* busy waiting */
        /* critical section */
        flag[i] = false;
        /* remainder section */
    }

    Tj:
    while (true) {
        flag[j] = true;
        turn = i;
        while (flag[i] && turn == i)
            ; /* busy waiting */
        /* critical section */
        flag[j] = false;
        /* remainder section */
    }
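On modern compilers and CPUs, the plain loads and stores in the pseudocode above can be reordered, so a direct transcription is not reliable. A minimal sketch of the same protocol using C11 sequentially consistent atomics might look as follows; the names `peterson_lock`, `peterson_unlock`, `peterson_demo`, and `ITERS` are illustrative assumptions, and `sched_yield()` is added only to make the spin loop less wasteful.

```c
#include <pthread.h>
#include <sched.h>
#include <stdatomic.h>
#include <stdbool.h>

enum { ITERS = 50000 };

static atomic_bool flag[2];   /* flag[i]: thread i wants to enter */
static atomic_int turn;       /* which thread must wait on a tie */
static long shared_count;     /* protected by the Peterson lock */

static void peterson_lock(int i) {
    int j = 1 - i;
    atomic_store(&flag[i], true);
    atomic_store(&turn, j);
    while (atomic_load(&flag[j]) && atomic_load(&turn) == j)
        sched_yield();        /* still busy waiting, just more politely */
}

static void peterson_unlock(int i) {
    atomic_store(&flag[i], false);
}

static void *peterson_worker(void *arg) {
    int id = *(const int *)arg;
    for (int k = 0; k < ITERS; k++) {
        peterson_lock(id);
        shared_count++;       /* critical section */
        peterson_unlock(id);
    }
    return NULL;
}

/* Runs two workers and returns the final counter, or -1 on thread failure. */
long peterson_demo(void) {
    static int ids[2] = { 0, 1 };
    pthread_t tid[2];

    shared_count = 0;
    if (pthread_create(&tid[0], NULL, peterson_worker, &ids[0]) != 0)
        return -1;
    if (pthread_create(&tid[1], NULL, peterson_worker, &ids[1]) != 0) {
        pthread_join(tid[0], NULL);
        return -1;
    }
    pthread_join(tid[0], NULL);
    pthread_join(tid[1], NULL);
    return shared_count;
}
```

The algorithm only works for exactly two threads, which is one reason general-purpose code normally uses a mutex instead.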

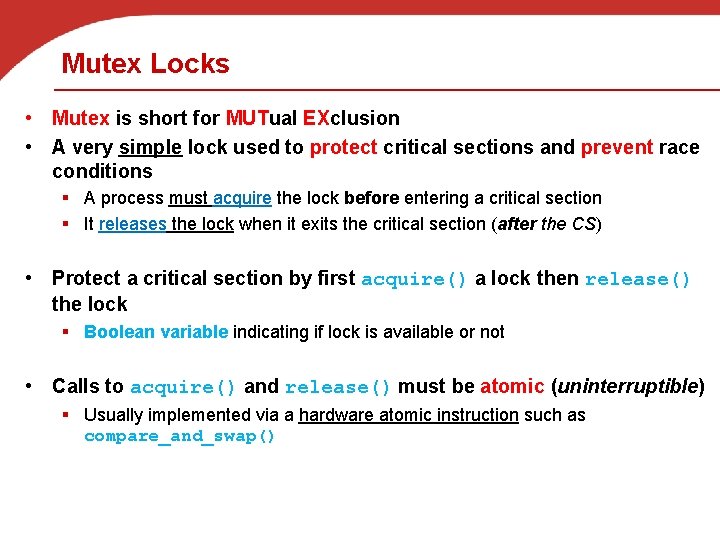

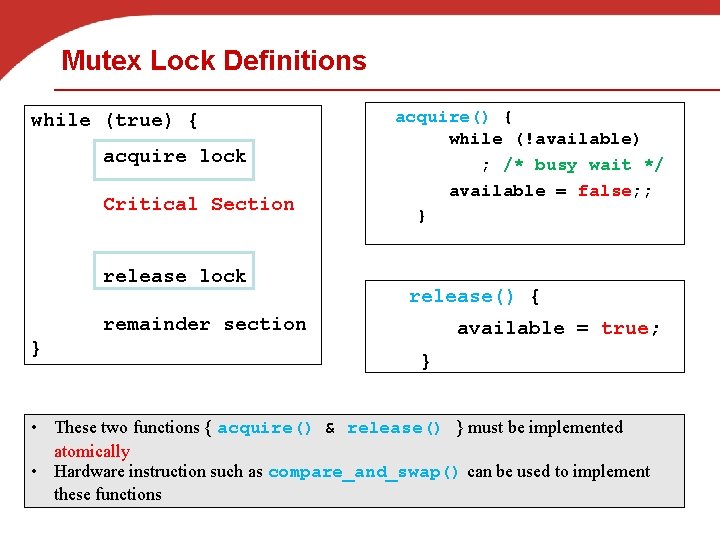

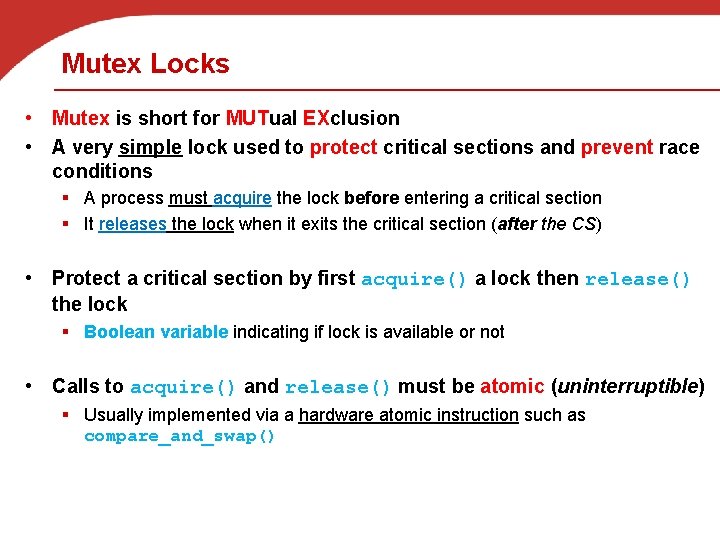

Mutex Locks
• Mutex is short for MUTual EXclusion.
• A very simple lock used to protect critical sections and prevent race conditions.
  § A process must acquire the lock before entering a critical section.
  § It releases the lock when it exits the critical section.
• Protect a critical section by first calling acquire() on a lock and then release() on it.
  § A Boolean variable indicates whether the lock is available.
• Calls to acquire() and release() must be atomic (uninterruptible).
  § Usually implemented via a hardware atomic instruction such as compare_and_swap().

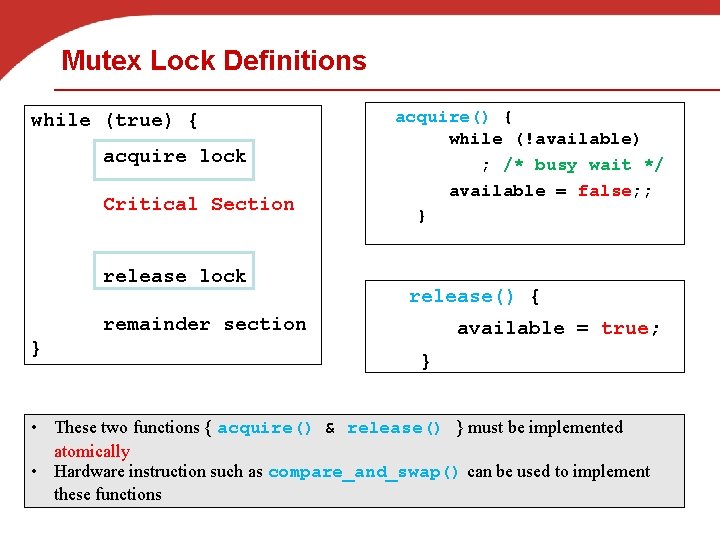

Mutex Lock Definitions

    while (true) {
        acquire lock
            critical section
        release lock
            remainder section
    }

    acquire() {
        while (!available)
            ; /* busy wait */
        available = false;
    }

    release() {
        available = true;
    }

• These two functions, acquire() and release(), must be implemented atomically.
• A hardware instruction such as compare_and_swap() can be used to implement them.
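As a rough illustration of how compare-and-swap makes acquire() atomic, the sketch below uses C11 `atomic_compare_exchange_weak` in place of a raw hardware instruction. The type `spin_mutex` and the functions `spin_init`, `spin_acquire`, `spin_release`, and `spin_demo` are hypothetical names chosen for this example.

```c
#include <pthread.h>
#include <sched.h>
#include <stdatomic.h>
#include <stdbool.h>

typedef struct { atomic_bool available; } spin_mutex;

void spin_init(spin_mutex *m) { atomic_init(&m->available, true); }

void spin_acquire(spin_mutex *m) {
    bool expected = true;
    /* Atomically: if available == true, set it to false and stop looping. */
    while (!atomic_compare_exchange_weak(&m->available, &expected, false)) {
        expected = true;   /* a failed exchange overwrote expected */
        sched_yield();
    }
}

void spin_release(spin_mutex *m) { atomic_store(&m->available, true); }

enum { SPIN_THREADS = 4, SPIN_ITERS = 20000 };
static spin_mutex counter_lock;
static long counter;       /* protected by counter_lock */

static void *spin_worker(void *arg) {
    (void)arg;
    for (int k = 0; k < SPIN_ITERS; k++) {
        spin_acquire(&counter_lock);
        counter++;         /* critical section */
        spin_release(&counter_lock);
    }
    return NULL;
}

/* Returns the final counter, or -1 if a thread could not be created. */
long spin_demo(void) {
    pthread_t tid[SPIN_THREADS];
    int started = 0;

    spin_init(&counter_lock);
    counter = 0;
    while (started < SPIN_THREADS &&
           pthread_create(&tid[started], NULL, spin_worker, NULL) == 0)
        started++;
    for (int k = 0; k < started; k++)
        pthread_join(tid[k], NULL);
    return started == SPIN_THREADS ? counter : -1;
}
```

The test of `available` and the write of `false` happen in one indivisible step, which is exactly what the plain `while (!available) ; available = false;` version lacks.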

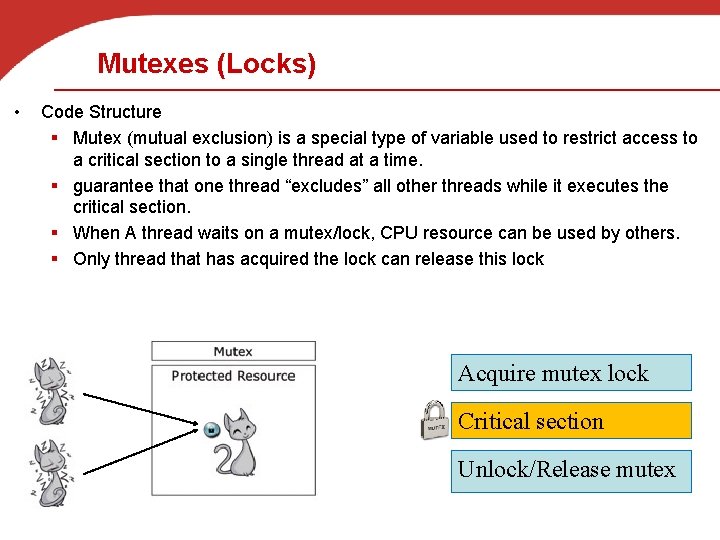

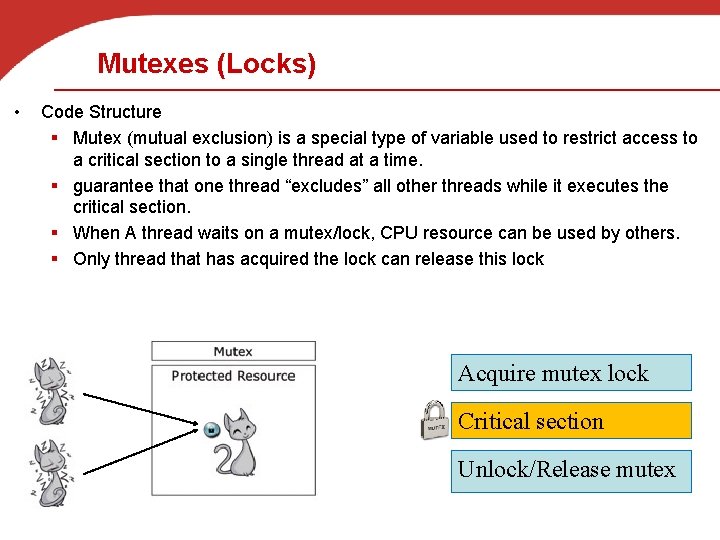

Mutexes (Locks)
• A mutex (mutual exclusion) is a special type of variable used to restrict access to a critical section to a single thread at a time.
• It guarantees that one thread "excludes" all other threads while it executes the critical section.
• When a thread waits on a mutex/lock, the CPU can be used by other threads.
• Only the thread that has acquired the lock can release it.
• Code structure:
    Acquire mutex lock
    Critical section
    Unlock/Release mutex

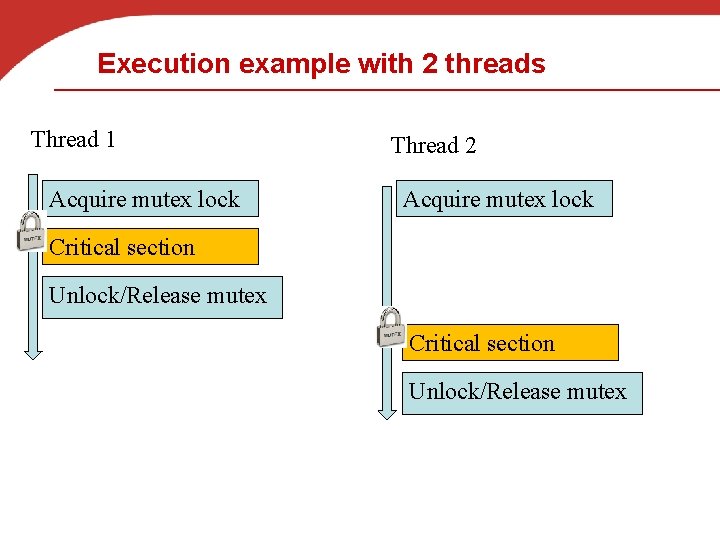

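Execution example with 2 threads
• Thread 1 and Thread 2 both run the same sequence: acquire mutex lock, critical section, unlock/release mutex.
• Whichever thread acquires the lock first enters the critical section; the other thread's acquire blocks until the lock is released.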

Mutexes in Pthreads
• A special type for mutexes: pthread_mutex_t. Initialize it with pthread_mutex_init() before use.
• To gain access to a critical section, call pthread_mutex_lock().
• To release the lock, call pthread_mutex_unlock().
• When finished using a mutex, call pthread_mutex_destroy().

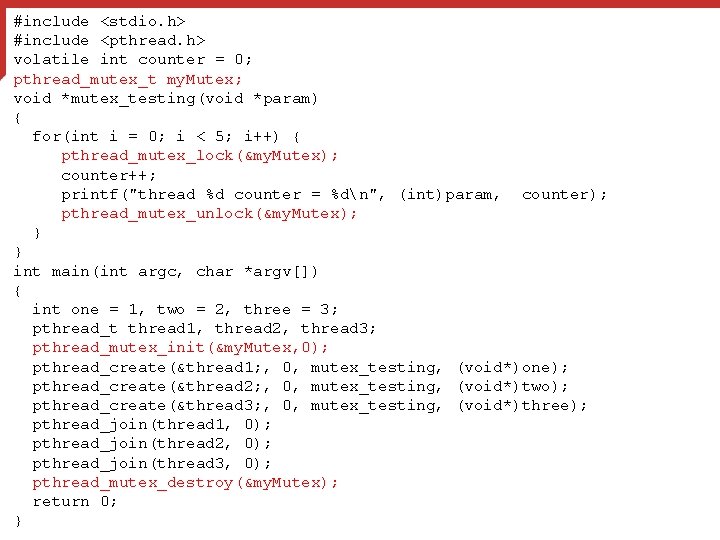

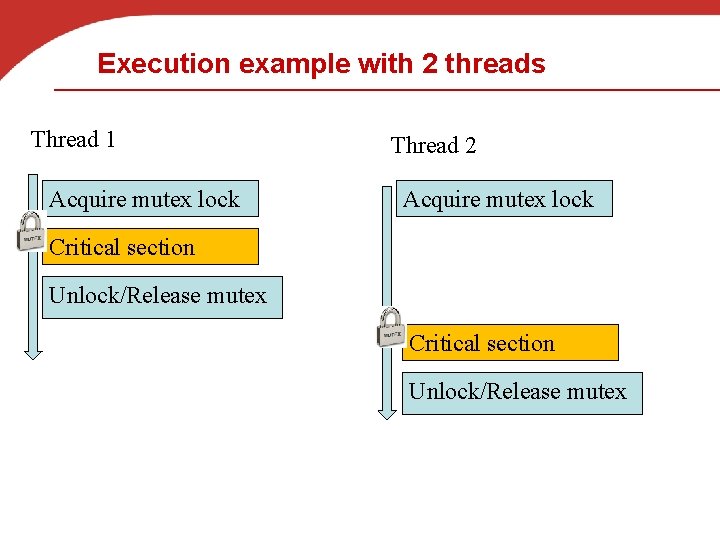

Example

    #include <stdio.h>
    #include <stdint.h>
    #include <pthread.h>

    int counter = 0;              /* shared; protected by myMutex */
    pthread_mutex_t myMutex;

    void *mutex_testing(void *param) {
        int id = (int)(intptr_t)param;   /* thread number passed by value */
        for (int i = 0; i < 5; i++) {
            pthread_mutex_lock(&myMutex);
            counter++;
            printf("thread %d counter = %d\n", id, counter);
            pthread_mutex_unlock(&myMutex);
        }
        return NULL;
    }

    int main(int argc, char *argv[]) {
        int one = 1, two = 2, three = 3;
        pthread_t thread1, thread2, thread3;
        pthread_mutex_init(&myMutex, NULL);
        pthread_create(&thread1, NULL, mutex_testing, (void *)(intptr_t)one);
        pthread_create(&thread2, NULL, mutex_testing, (void *)(intptr_t)two);
        pthread_create(&thread3, NULL, mutex_testing, (void *)(intptr_t)three);
        pthread_join(thread1, NULL);
        pthread_join(thread2, NULL);
        pthread_join(thread3, NULL);
        pthread_mutex_destroy(&myMutex);
        return 0;
    }

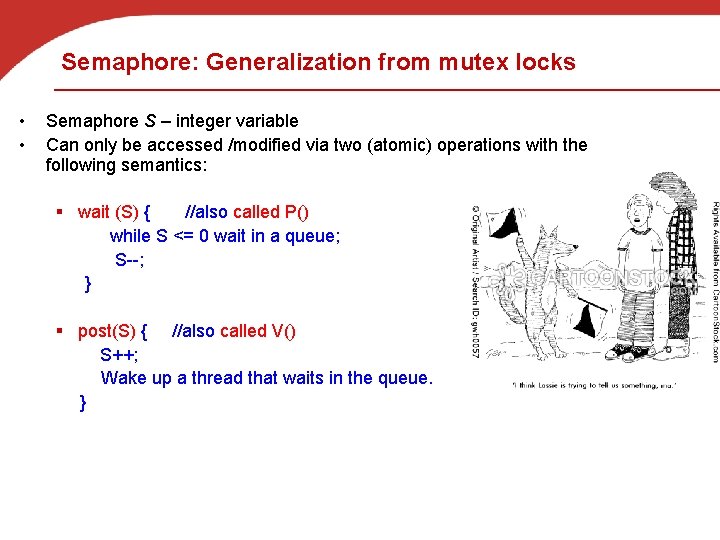

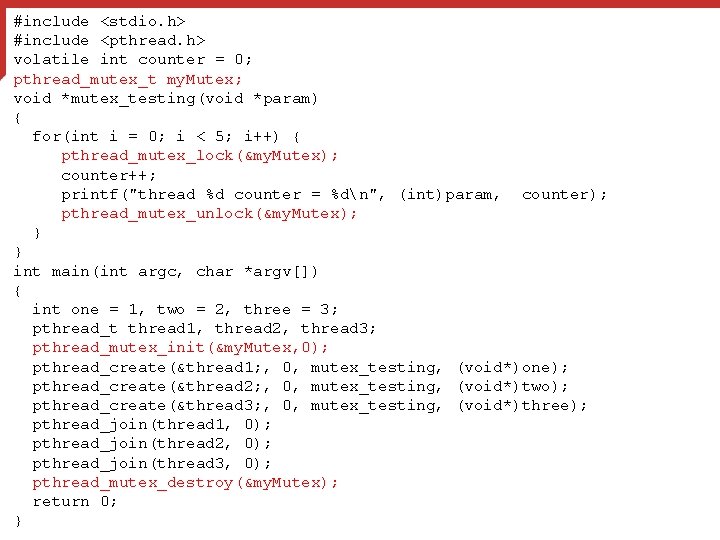

Semaphore: Generalization from mutex locks
• Semaphore S: an integer variable.
• It can only be accessed/modified via two atomic operations with the following semantics:

    wait(S) {      /* also called P() */
        while S <= 0
            wait in a queue;
        S--;
    }

    post(S) {      /* also called V() */
        S++;
        wake up a thread that waits in the queue;
    }

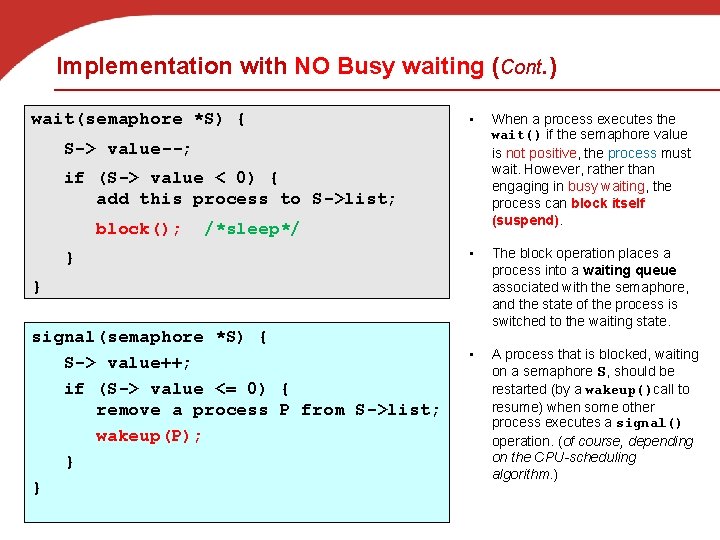

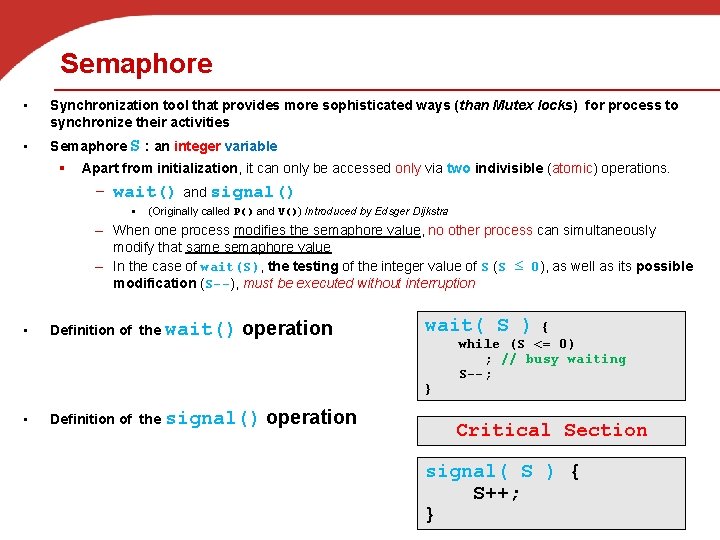

Semaphore
• Synchronization tool that provides more sophisticated ways (than mutex locks) for processes to synchronize their activities.
• Semaphore S: an integer variable.
  § Apart from initialization, it can be accessed only via two indivisible (atomic) operations: wait() and signal().
  § These were originally called P() and V(), and were introduced by Edsger Dijkstra.
    – When one process modifies the semaphore value, no other process can simultaneously modify that same semaphore value.
    – In the case of wait(S), the testing of the integer value of S (S ≤ 0), as well as its possible modification (S--), must be executed without interruption.
• Definition of the wait() operation:

    wait(S) {
        while (S <= 0)
            ; // busy waiting
        S--;
    }

• Definition of the signal() operation:

    signal(S) {
        S++;
    }

• The critical section sits between the call to wait(S) and the call to signal(S).

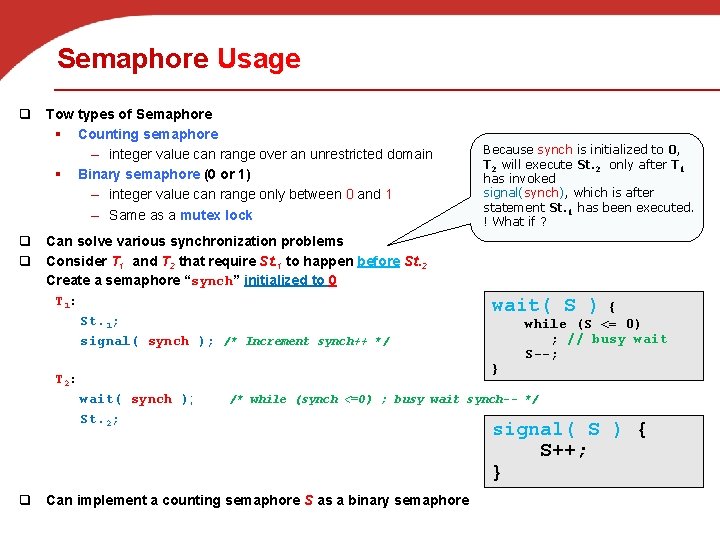

Semaphore Usage
• Two types of semaphore
  § Counting semaphore: the integer value can range over an unrestricted domain.
  § Binary semaphore: the integer value can range only between 0 and 1; it behaves like a mutex lock.
• Semaphores can solve various synchronization problems.
• Consider T1 and T2 that require St.1 to happen before St.2. Create a semaphore "synch" initialized to 0:

    T1:
        St.1;
        signal(synch);   /* synch++ */

    T2:
        wait(synch);     /* while (synch <= 0) ; busy wait, then synch-- */
        St.2;

• Because synch is initialized to 0, T2 will execute St.2 only after T1 has invoked signal(synch), which is after St.1 has been executed.
• A counting semaphore S can be implemented using binary semaphores.

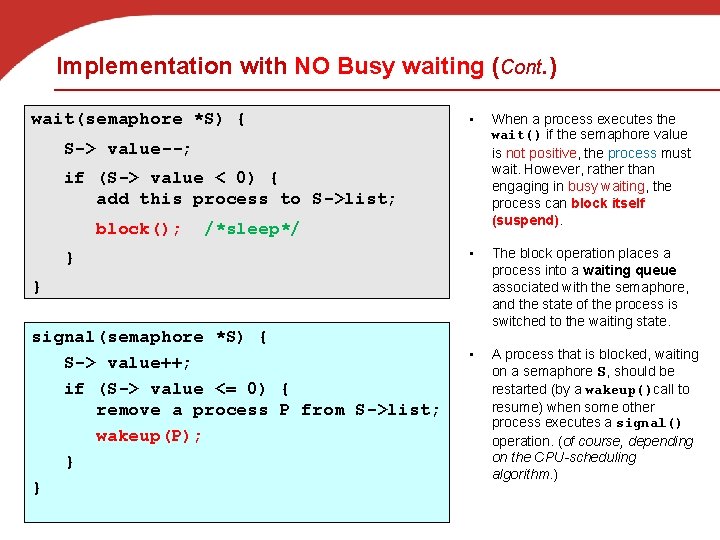

Implementation with No Busy Waiting (Cont.)
• When a process executes wait() and the semaphore value is not positive, the process must wait. However, rather than busy waiting, the process can block itself (suspend).
• The block operation places a process into a waiting queue associated with the semaphore, and the state of the process is switched to the waiting state.
• A process that is blocked, waiting on a semaphore S, should be restarted (by a wakeup() call) when some other process executes a signal() operation. Whether it then runs immediately depends on the CPU-scheduling algorithm.

    wait(semaphore *S) {
        S->value--;
        if (S->value < 0) {
            add this process to S->list;
            block();   /* sleep */
        }
    }

    signal(semaphore *S) {
        S->value++;
        if (S->value <= 0) {
            remove a process P from S->list;
            wakeup(P);
        }
    }
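User-level code has no direct access to block() and wakeup(), but a blocking semaphore can be approximated with a Pthreads mutex and condition variable. The sketch below is a substitute technique, not the kernel implementation above: the value never goes negative, and the condition variable's internal queue plays the role of S->list. The names `csem`, `csem_wait`, `csem_signal`, and `csem_demo` are assumptions made for this example.

```c
#include <pthread.h>

typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t  positive;  /* signaled when value becomes positive */
    int value;                 /* never negative in this variant */
} csem;

int csem_init(csem *s, int initial) {
    s->value = initial;
    if (pthread_mutex_init(&s->lock, NULL) != 0)
        return -1;
    if (pthread_cond_init(&s->positive, NULL) != 0) {
        pthread_mutex_destroy(&s->lock);
        return -1;
    }
    return 0;
}

void csem_destroy(csem *s) {
    pthread_cond_destroy(&s->positive);
    pthread_mutex_destroy(&s->lock);
}

void csem_wait(csem *s) {
    pthread_mutex_lock(&s->lock);
    while (s->value <= 0)                       /* sleeps instead of spinning */
        pthread_cond_wait(&s->positive, &s->lock);
    s->value--;
    pthread_mutex_unlock(&s->lock);
}

void csem_signal(csem *s) {
    pthread_mutex_lock(&s->lock);
    s->value++;
    pthread_cond_signal(&s->positive);          /* wake one waiter, if any */
    pthread_mutex_unlock(&s->lock);
}

static csem synch;
static int shared_data;

static void *t1_body(void *arg) {
    (void)arg;
    shared_data = 42;        /* St.1 */
    csem_signal(&synch);
    return NULL;
}

/* Orders St.1 before St.2; returns the value St.2 observed, or -1 on error. */
int csem_demo(void) {
    pthread_t t1;
    shared_data = 0;
    if (csem_init(&synch, 0) != 0)
        return -1;
    if (pthread_create(&t1, NULL, t1_body, NULL) != 0) {
        csem_destroy(&synch);
        return -1;
    }
    csem_wait(&synch);
    int seen = shared_data;  /* St.2: runs only after St.1 */
    pthread_join(t1, NULL);
    csem_destroy(&synch);
    return seen;
}
```

The `while` loop around `pthread_cond_wait` matters because POSIX permits spurious wakeups, so the condition has to be rechecked after every return.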

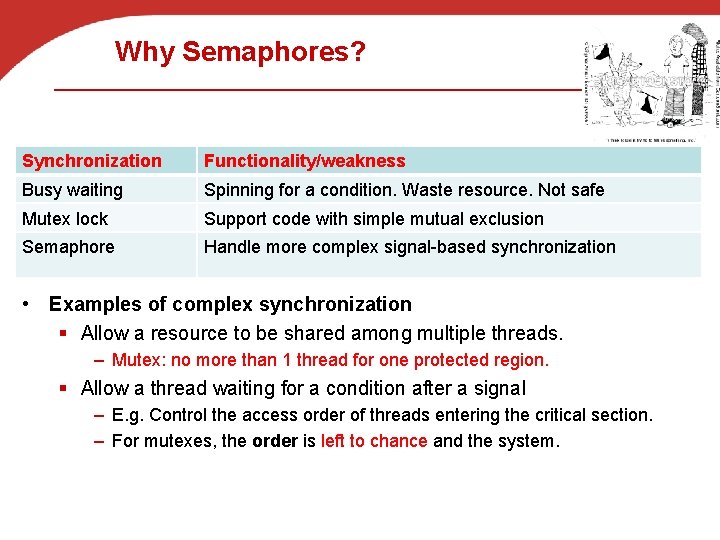

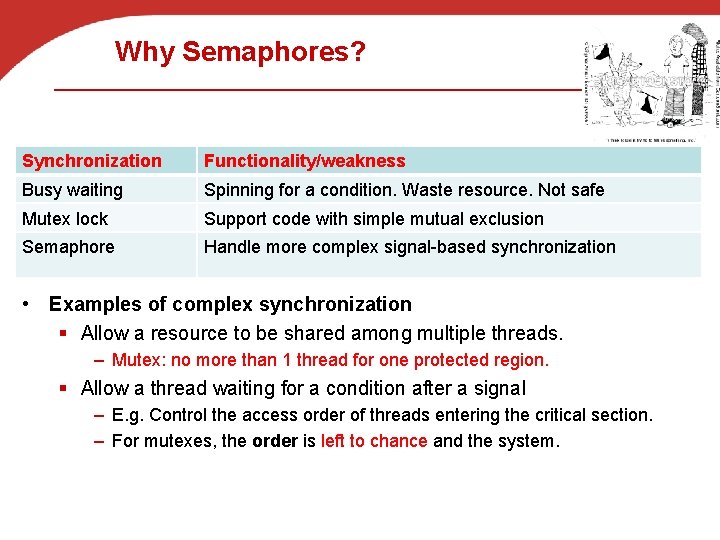

Why Semaphores?

| Synchronization | Functionality/weakness |
|---|---|
| Busy waiting | Spinning for a condition. Wastes resources. Not safe. |
| Mutex lock | Supports code with simple mutual exclusion |
| Semaphore | Handles more complex signal-based synchronization |

• Examples of complex synchronization
  § Allow a resource to be shared among multiple threads.
    – Mutex: no more than 1 thread for one protected region.
  § Allow a thread to wait for a condition until it receives a signal.
    – E.g., control the order in which threads enter the critical section.
    – For mutexes, the order is left to chance and the system.

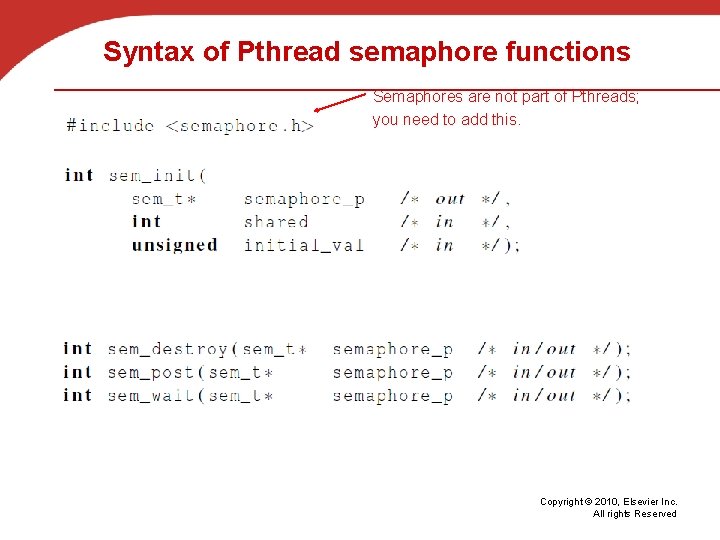

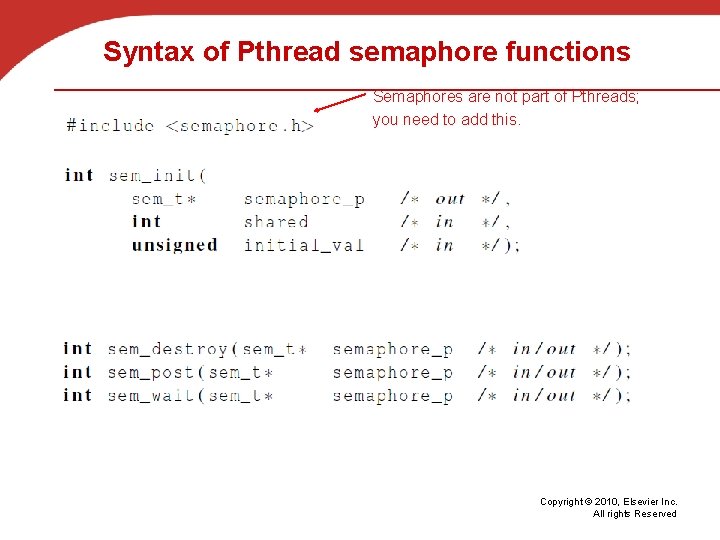

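Syntax of Pthread semaphore functions
• Semaphores are not part of Pthreads; you need to add #include <semaphore.h>.
  § int sem_init(sem_t *sem, int pshared, unsigned int value);
  § int sem_destroy(sem_t *sem);
  § int sem_post(sem_t *sem);
  § int sem_wait(sem_t *sem);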

PRODUCER-CONSUMER SYNCHRONIZATION AND SEMAPHORES

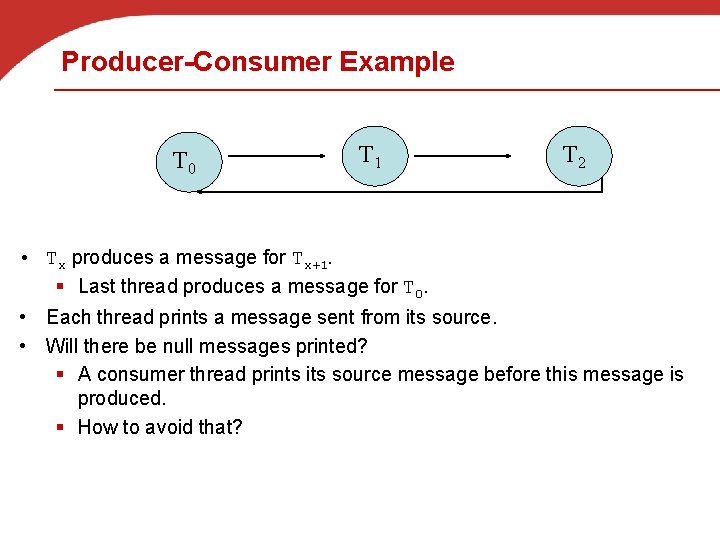

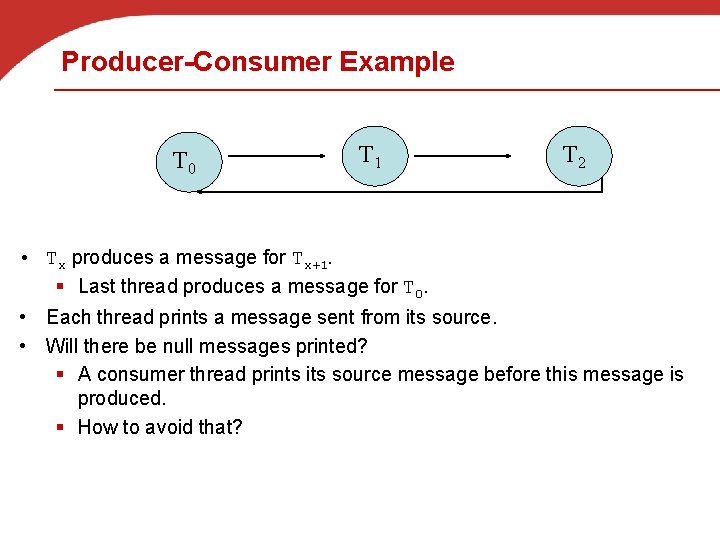

Producer-Consumer Example (threads T0, T1, T2)
• Thread Tx produces a message for thread Tx+1.
  § The last thread produces a message for T0.
• Each thread prints the message sent from its source.
• Will there be null messages printed?
  § Yes, if a consumer thread prints its source message before this message is produced.
  § How can we avoid that?

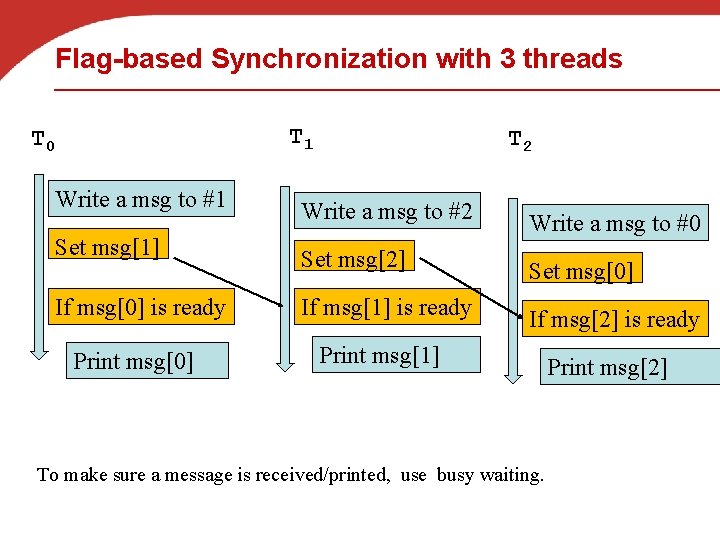

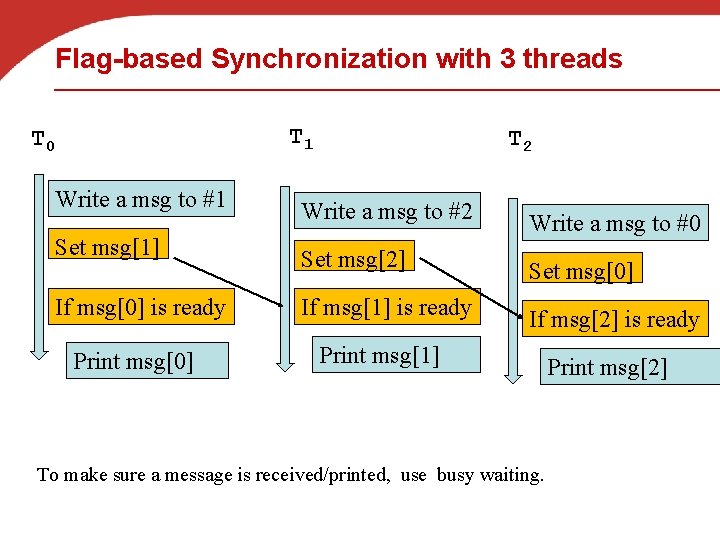

Flag-based Synchronization with 3 threads
    T0: write a msg to #1; set msg[1]; if msg[0] is ready, print msg[0]
    T1: write a msg to #2; set msg[2]; if msg[1] is ready, print msg[1]
    T2: write a msg to #0; set msg[0]; if msg[2] is ready, print msg[2]
To make sure a message is received/printed, use busy waiting.

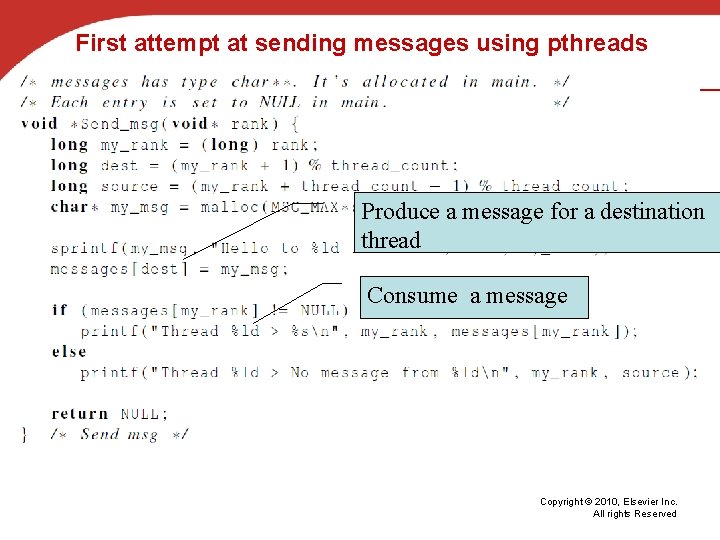

First attempt at sending messages using Pthreads: produce a message for a destination thread, then consume a message.

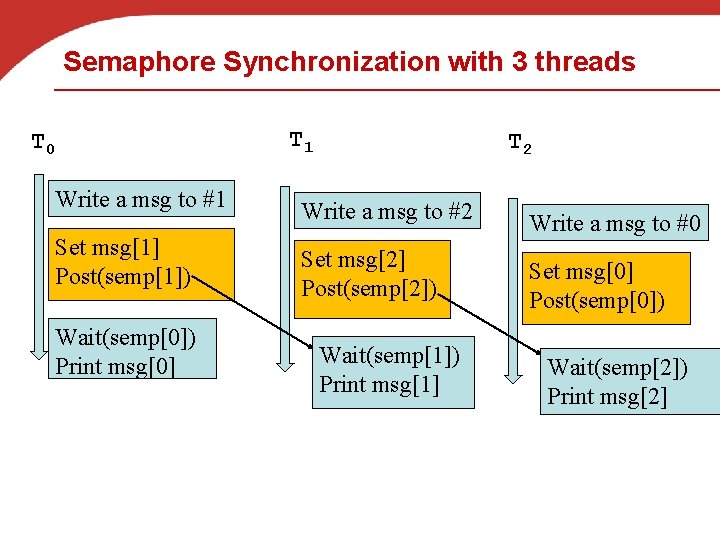

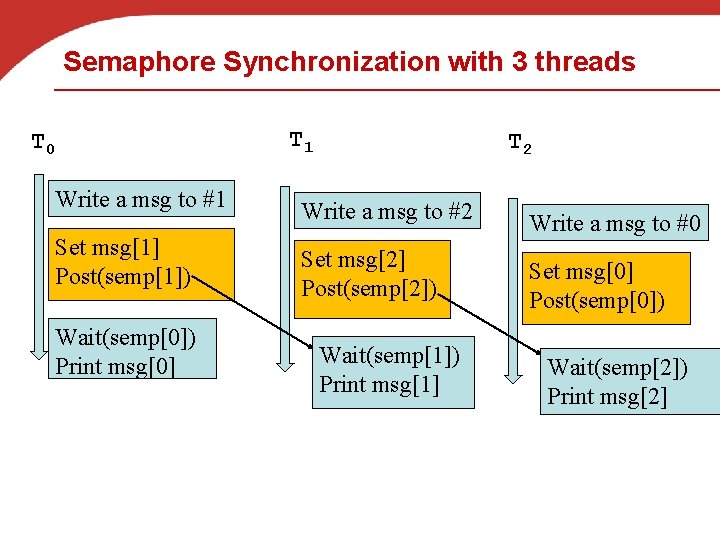

Semaphore Synchronization with 3 threads
    T0: write a msg to #1; set msg[1]; Post(semp[1]); Wait(semp[0]); print msg[0]
    T1: write a msg to #2; set msg[2]; Post(semp[2]); Wait(semp[1]); print msg[1]
    T2: write a msg to #0; set msg[0]; Post(semp[0]); Wait(semp[2]); print msg[2]

![Message sending with semaphores sprintfmymsg Hello to ld from ld dest myrank messagesdest Message sending with semaphores sprintf(my_msg, "Hello to %ld from %ld", dest, my_rank); messages[dest] =](https://slidetodoc.com/presentation_image_h/1bebb81ed95b10854e3d212493f496c6/image-36.jpg)

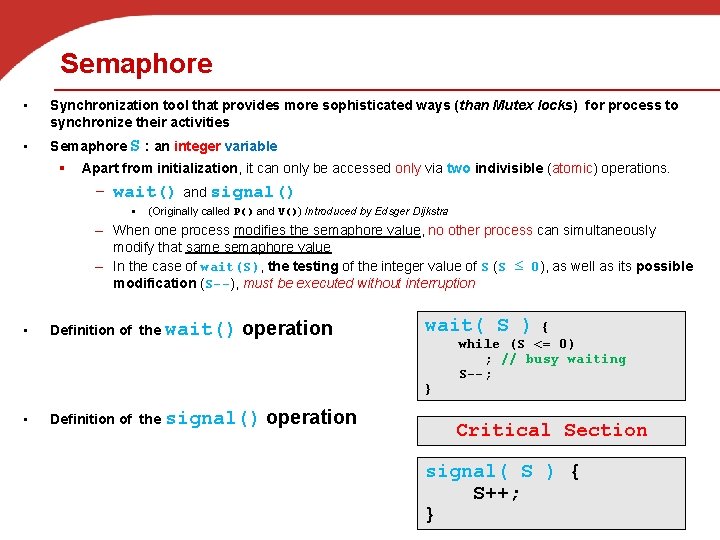

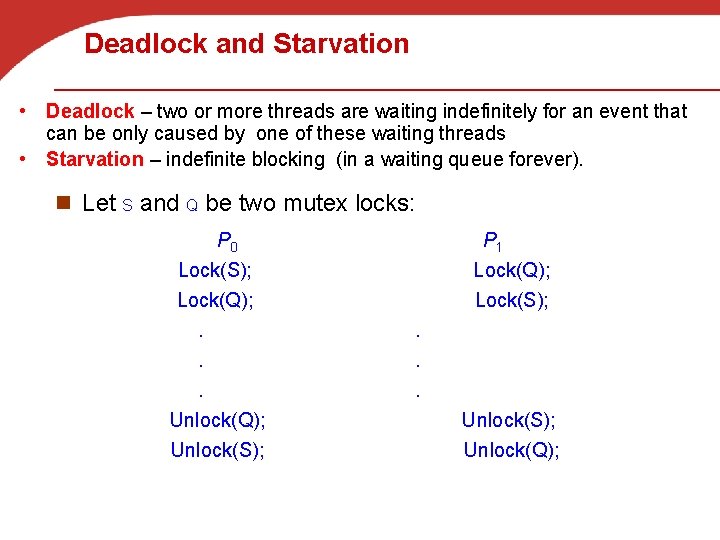

Message sending with semaphores

    sprintf(my_msg, "Hello to %ld from %ld", dest, my_rank);
    messages[dest] = my_msg;
    sem_post(&semaphores[dest]);      /* signal the dest thread */
    sem_wait(&semaphores[my_rank]);   /* wait until the source message is created */
    printf("Thread %ld > %s\n", my_rank, messages[my_rank]);
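The fragment above can be placed in a complete thread function roughly as follows. This is a sketch under stated assumptions: three threads, unnamed POSIX semaphores created with `sem_init` (available on Linux, not supported on macOS), and the hypothetical names `THREAD_COUNT`, `MSG_MAX`, `received`, `send_msg`, and `run_message_ring`. The `received` array exists only so the result can be inspected after the threads finish.

```c
#include <pthread.h>
#include <semaphore.h>
#include <stdio.h>
#include <stdlib.h>

enum { THREAD_COUNT = 3, MSG_MAX = 64 };

static char *messages[THREAD_COUNT];          /* messages[i]: message for thread i */
static sem_t semaphores[THREAD_COUNT];        /* posted once messages[i] is set */
static char received[THREAD_COUNT][MSG_MAX];  /* copy of what each thread printed */

static void *send_msg(void *arg) {
    long my_rank = *(const long *)arg;
    long dest = (my_rank + 1) % THREAD_COUNT;
    char *my_msg = malloc(MSG_MAX);

    if (my_msg != NULL)
        snprintf(my_msg, MSG_MAX, "Hello to %ld from %ld", dest, my_rank);
    messages[dest] = my_msg;
    sem_post(&semaphores[dest]);      /* signal the dest thread */

    sem_wait(&semaphores[my_rank]);   /* wait until the source message exists */
    snprintf(received[my_rank], MSG_MAX, "%s",
             messages[my_rank] != NULL ? messages[my_rank] : "(no message)");
    printf("Thread %ld > %s\n", my_rank, received[my_rank]);
    return NULL;
}

/* Returns 0 on success, -1 if a semaphore or thread could not be created. */
int run_message_ring(void) {
    pthread_t tid[THREAD_COUNT];
    long ranks[THREAD_COUNT];
    int started = 0;

    for (int i = 0; i < THREAD_COUNT; i++) {
        messages[i] = NULL;
        if (sem_init(&semaphores[i], 0, 0) != 0) {
            while (i-- > 0)
                sem_destroy(&semaphores[i]);
            return -1;
        }
    }
    for (; started < THREAD_COUNT; started++) {
        ranks[started] = started;
        if (pthread_create(&tid[started], NULL, send_msg, &ranks[started]) != 0)
            break;
    }
    if (started < THREAD_COUNT)       /* unblock threads whose source never ran */
        for (int i = 0; i < THREAD_COUNT; i++)
            sem_post(&semaphores[i]);
    for (int i = 0; i < started; i++)
        pthread_join(tid[i], NULL);
    for (int i = 0; i < THREAD_COUNT; i++) {
        free(messages[i]);
        sem_destroy(&semaphores[i]);
    }
    return started == THREAD_COUNT ? 0 : -1;
}
```

Each semaphore starts at 0, so a thread that reaches `sem_wait` before its source has posted simply blocks instead of printing a null message.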

Barriers
• Synchronizing the threads to make sure that they all are at the same point in a program is called a barrier.
• No thread can cross the barrier until all the threads have reached it.
• Availability:
  § Many Pthreads implementations do not provide a barrier, so portable code often needs a custom implementation. (POSIX defines pthread_barrier_t, but it was originally an optional feature.)
  § A barrier is implicit in OpenMP (at the end of parallel constructs) and is available in MPI (MPI_Barrier).

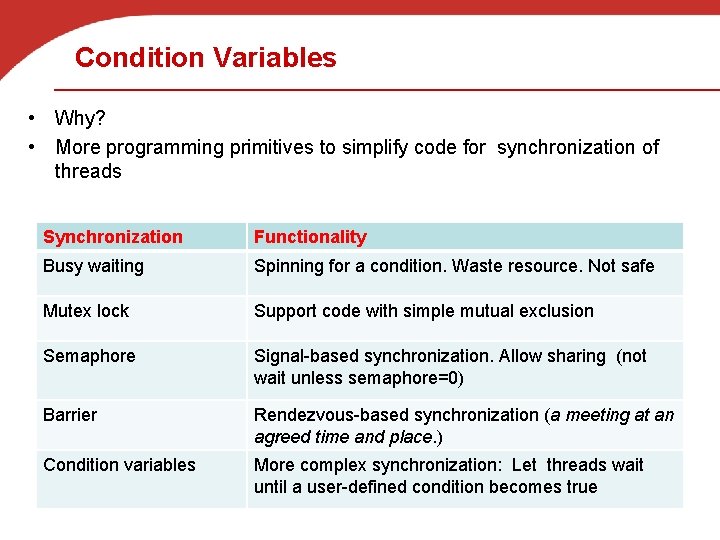

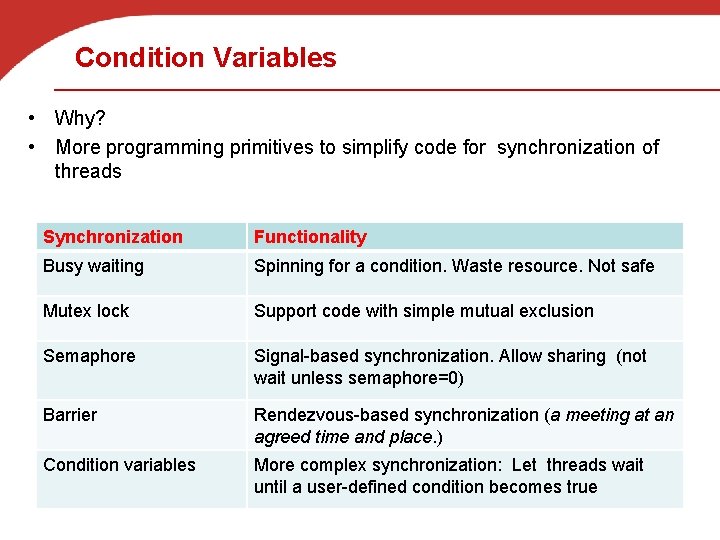

Condition Variables
• Why? More programming primitives simplify the code for synchronizing threads.

| Synchronization | Functionality |
|---|---|
| Busy waiting | Spinning for a condition. Wastes resources. Not safe. |
| Mutex lock | Supports code with simple mutual exclusion |
| Semaphore | Signal-based synchronization. Allows sharing (no wait unless semaphore = 0) |
| Barrier | Rendezvous-based synchronization (a meeting at an agreed time and place) |
| Condition variables | More complex synchronization: let threads wait until a user-defined condition becomes true |

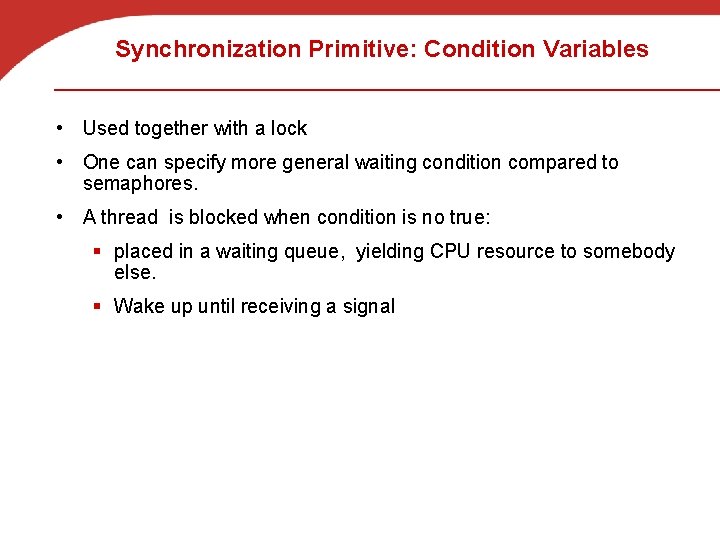

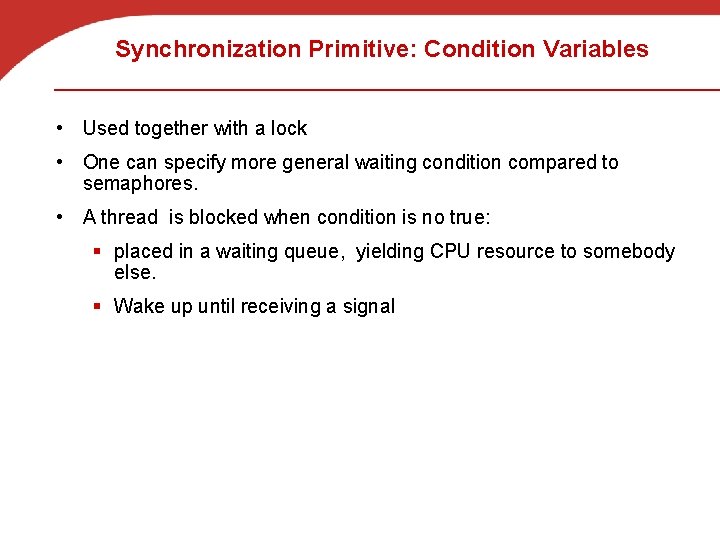

Synchronization Primitive: Condition Variables
• Used together with a lock.
• One can specify a more general waiting condition than with semaphores.
• A thread is blocked when the condition is not true:
  § It is placed in a waiting queue, yielding the CPU to other threads.
  § It wakes up when it receives a signal.

Pthread synchronization: Condition variables

    int status;
    pthread_cond_t cond;
    pthread_condattr_t attr;
    pthread_mutex_t mutex;

    status = pthread_cond_init(&cond, &attr);
    status = pthread_cond_destroy(&cond);
    status = pthread_cond_wait(&cond, &mutex);
        /* release the mutex and wait in a queue until another thread wakes
           this one up; the mutex is reacquired before the call returns */
    status = pthread_cond_signal(&cond);      /* wake up one waiting thread */
    status = pthread_cond_broadcast(&cond);   /* wake up all threads waiting on that condition */
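A common use of these calls is a reusable barrier built from one mutex and one condition variable. The sketch below is one possible design, not a library API: the type `cv_barrier`, its functions, and the `round` generation counter (which stops a fast thread from slipping through the next round) are all assumptions of this example. The demo calls `abort()` if a thread cannot be created, since the workers already started would otherwise wait forever.

```c
#include <pthread.h>
#include <stdatomic.h>
#include <stdlib.h>

typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t  all_here;
    int expected;      /* number of threads that must arrive */
    int arrived;       /* threads that have arrived in this round */
    unsigned round;    /* generation counter, bumped when a round completes */
} cv_barrier;

int cv_barrier_init(cv_barrier *b, int expected) {
    b->expected = expected;
    b->arrived = 0;
    b->round = 0;
    if (pthread_mutex_init(&b->lock, NULL) != 0)
        return -1;
    if (pthread_cond_init(&b->all_here, NULL) != 0) {
        pthread_mutex_destroy(&b->lock);
        return -1;
    }
    return 0;
}

void cv_barrier_destroy(cv_barrier *b) {
    pthread_cond_destroy(&b->all_here);
    pthread_mutex_destroy(&b->lock);
}

void cv_barrier_wait(cv_barrier *b) {
    pthread_mutex_lock(&b->lock);
    unsigned my_round = b->round;
    if (++b->arrived == b->expected) {
        b->arrived = 0;                 /* last arrival resets the barrier */
        b->round++;
        pthread_cond_broadcast(&b->all_here);
    } else {
        while (my_round == b->round)    /* guards against spurious wakeups */
            pthread_cond_wait(&b->all_here, &b->lock);
    }
    pthread_mutex_unlock(&b->lock);
}

enum { BARRIER_THREADS = 4 };
static cv_barrier barrier;
static atomic_int checked_in, saw_everyone;

static void *phase_worker(void *arg) {
    (void)arg;
    atomic_fetch_add(&checked_in, 1);            /* phase 1 */
    cv_barrier_wait(&barrier);
    if (atomic_load(&checked_in) == BARRIER_THREADS)
        atomic_fetch_add(&saw_everyone, 1);      /* phase 2 */
    return NULL;
}

/* Returns how many threads saw all check-ins after crossing the barrier. */
int cv_barrier_demo(void) {
    pthread_t tid[BARRIER_THREADS];
    atomic_store(&checked_in, 0);
    atomic_store(&saw_everyone, 0);
    if (cv_barrier_init(&barrier, BARRIER_THREADS) != 0)
        return -1;
    for (int i = 0; i < BARRIER_THREADS; i++)
        if (pthread_create(&tid[i], NULL, phase_worker, NULL) != 0)
            abort();
    for (int i = 0; i < BARRIER_THREADS; i++)
        pthread_join(tid[i], NULL);
    cv_barrier_destroy(&barrier);
    return atomic_load(&saw_everyone);
}
```

`pthread_cond_broadcast` is used rather than `pthread_cond_signal` because every waiting thread, not just one, must be released when the last thread arrives.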

ISSUES WITH THREADS: FALSE SHARING, DEADLOCKS, THREAD SAFETY

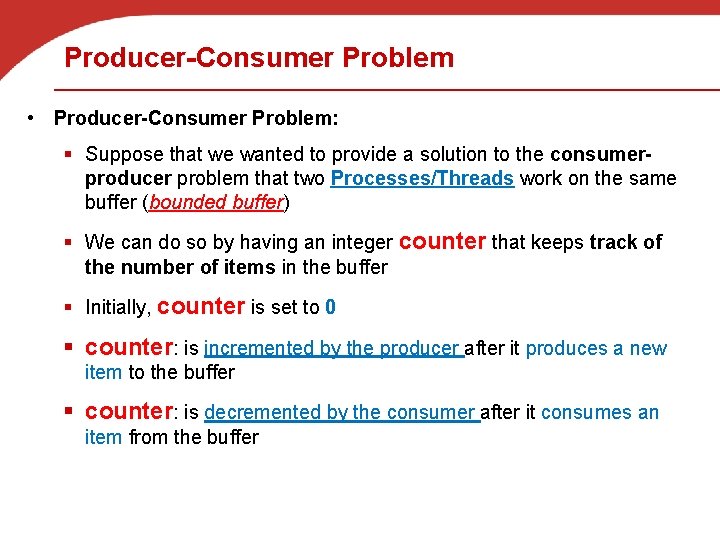

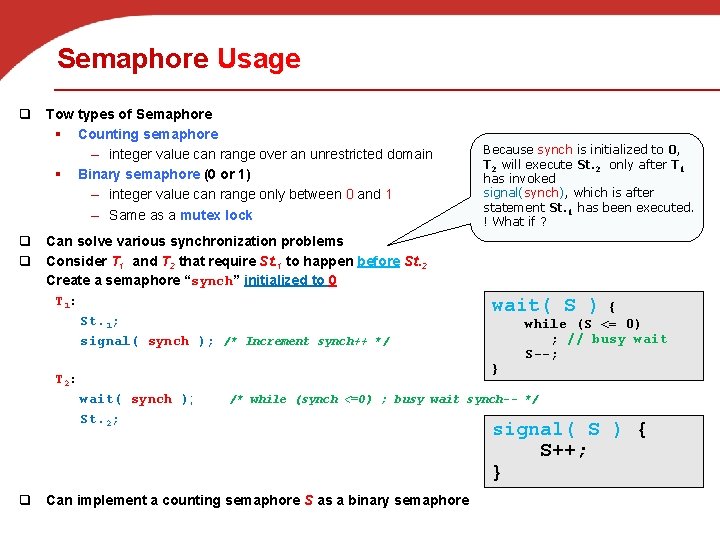

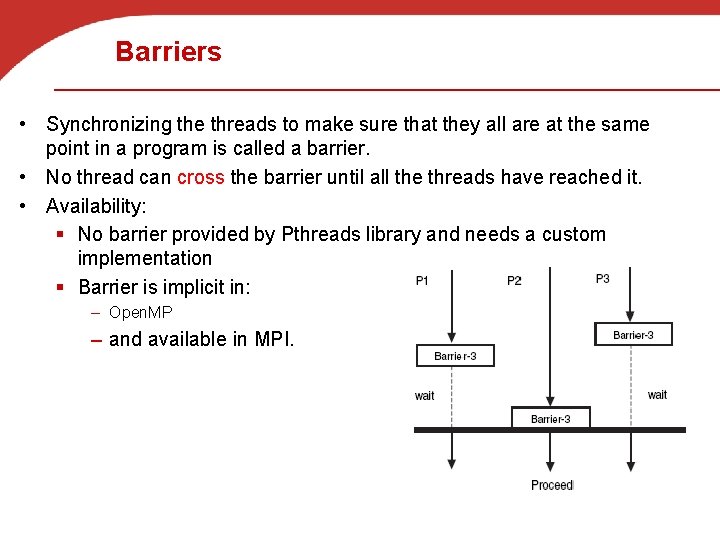

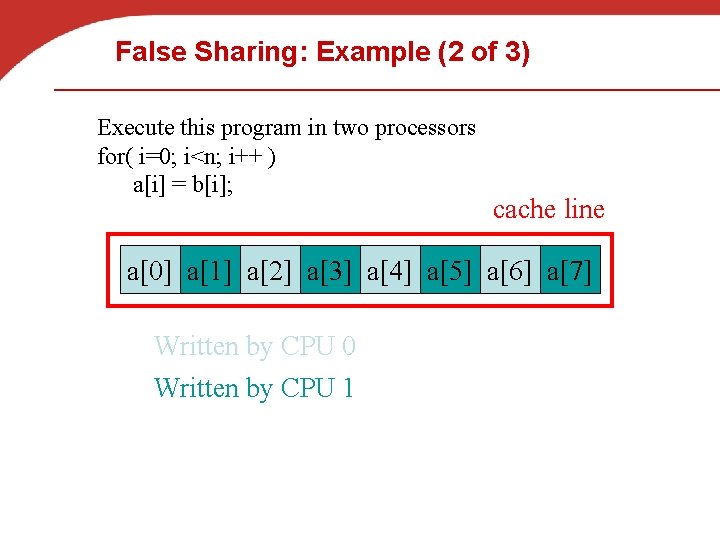

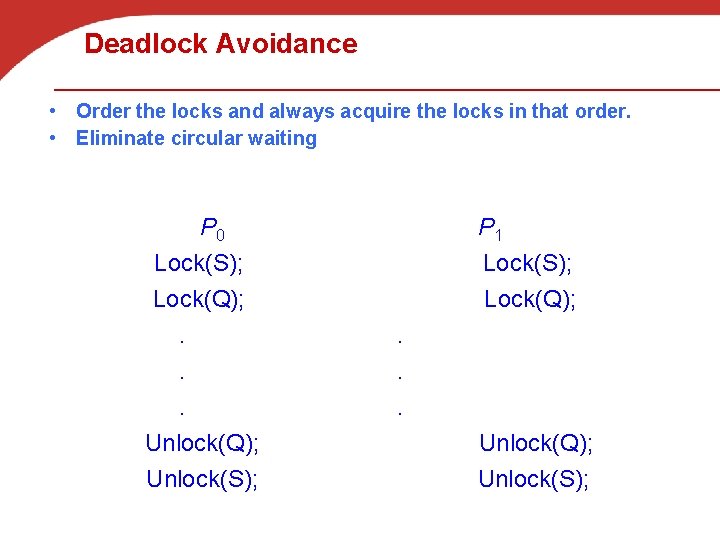

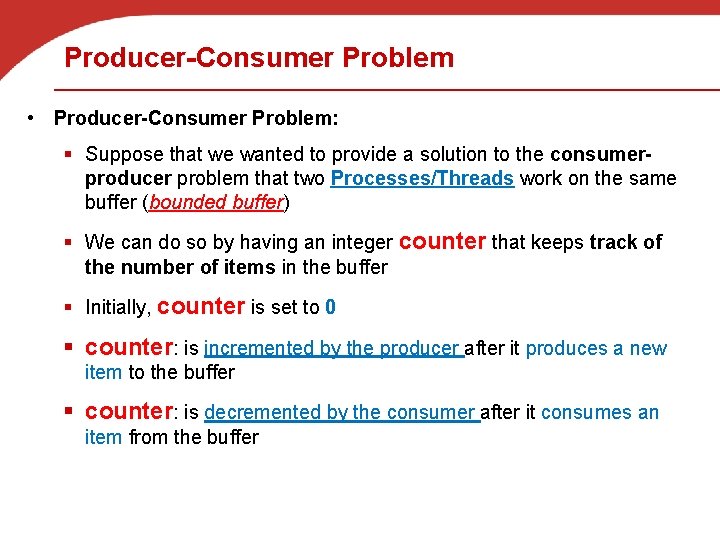

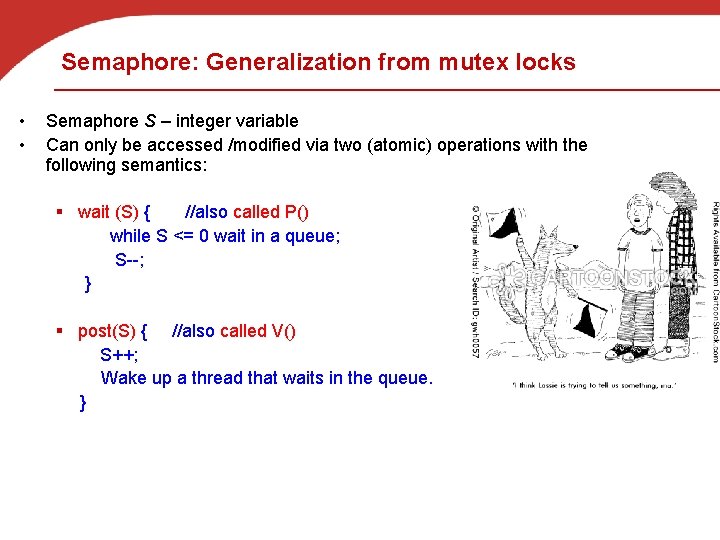

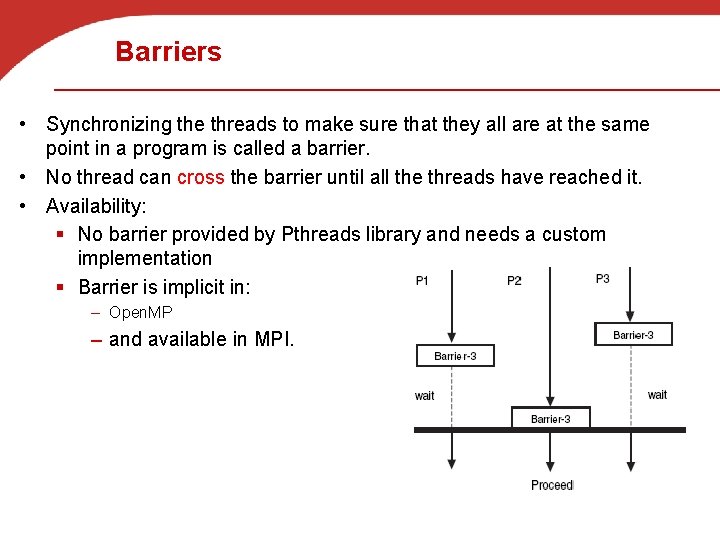

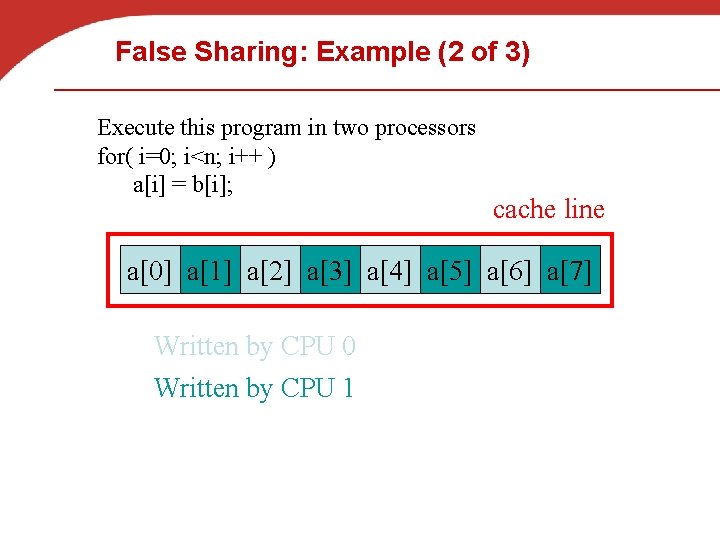

Problem: False Sharing
• Occurs when two or more processors/cores access different data in the same cache line, and at least one of them writes.
  § Leads to a ping-pong effect: the cache line bounces between the cores' caches.
• Let's assume we parallelize this code with p = 2:
    for( i=0; i<n; i++ ) a[i] = b[i];
  § Each array element takes 8 bytes.
  § A cache line has 64 bytes (8 numbers).

False Sharing: Example (2 of 3)
Execute this program on two processors:
    for( i=0; i<n; i++ ) a[i] = b[i];
Cache line: a[0] a[1] a[2] a[3] a[4] a[5] a[6] a[7]
Legend: some elements are written by CPU 0, the others by CPU 1.

![False Sharing Example Two CPUs execute for i0 in i ai bi False Sharing: Example Two CPUs execute: for( i=0; i<n; i++ ) a[i] = b[i];](https://slidetodoc.com/presentation_image_h/1bebb81ed95b10854e3d212493f496c6/image-44.jpg)

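False Sharing: Example
Two CPUs execute:
    for( i=0; i<n; i++ ) a[i] = b[i];
Cache line: a[0] a[1] a[2] a[3] a[4] a[5] a[6] a[7]
• CPU 0 writes a[0], a[2], a[4], …
• CPU 1 writes a[1], a[3], a[5], …
• Each write by one CPU invalidates (inv) the copy of the line in the other CPU's cache, so the data must be transferred again before the next write.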
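A common mitigation is to pad or align per-thread data so that each thread's writes land on a different cache line. The sketch below assumes a 64-byte line, as in the slides; the names `padded_counter`, `count_up`, and `padded_demo` are hypothetical, and `volatile` is used only to keep the compiler from collapsing the loop into a single addition.

```c
#include <pthread.h>
#include <stddef.h>

enum { CACHE_LINE = 64, PAD_ITERS = 1000000 };

/* Aligning the member to the cache-line size forces sizeof(padded_counter)
   up to 64, so neighbouring array elements never share a line. */
typedef struct {
    _Alignas(CACHE_LINE) volatile long value;
} padded_counter;

static padded_counter counters[2];   /* counters[k] is written by thread k only */

static void *count_up(void *arg) {
    padded_counter *c = arg;
    for (long i = 0; i < PAD_ITERS; i++)
        c->value++;                  /* private to this thread: no lock needed */
    return NULL;
}

/* Returns the sum of both counters, or -1 if a thread could not be created. */
long padded_demo(void) {
    pthread_t tid[2];
    int started = 0;

    counters[0].value = 0;
    counters[1].value = 0;
    while (started < 2 &&
           pthread_create(&tid[started], NULL, count_up, &counters[started]) == 0)
        started++;
    for (int k = 0; k < started; k++)
        pthread_join(tid[k], NULL);
    if (started < 2)
        return -1;
    return counters[0].value + counters[1].value;
}
```

For the array copy in the slides, the analogous fix is to give each CPU a contiguous block of `a` rather than interleaved elements, so that at most one cache line at the block boundary is shared.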

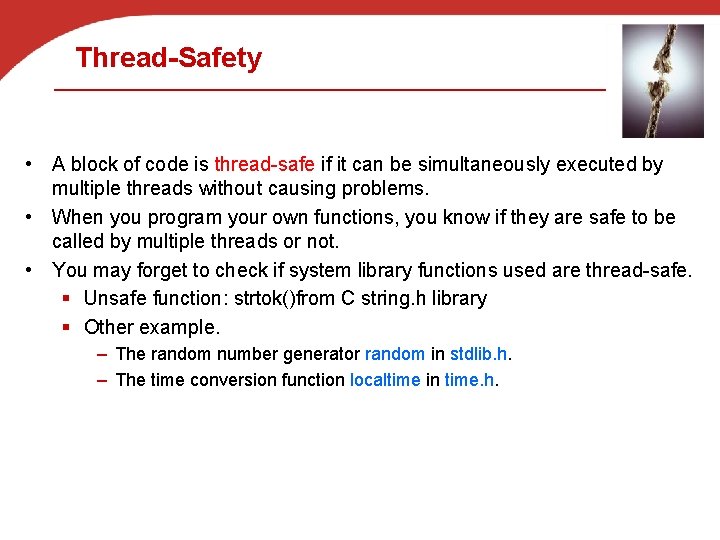

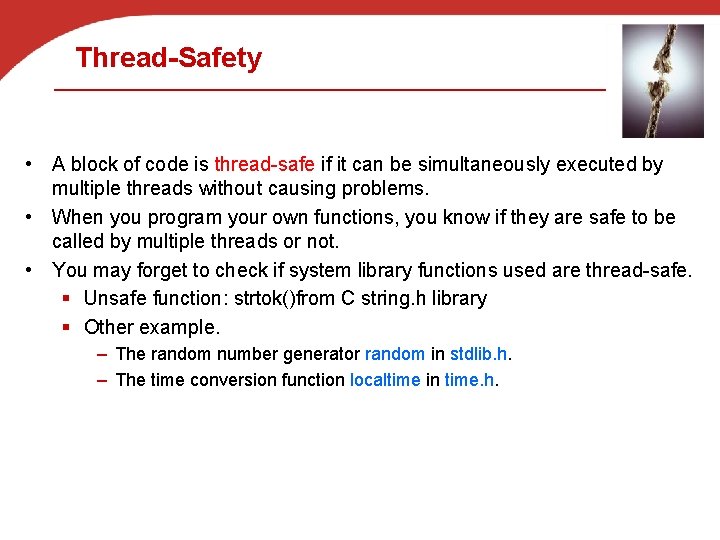

Deadlock and Starvation
• Deadlock: two or more threads are waiting indefinitely for an event that can be caused only by one of these waiting threads.
• Starvation: indefinite blocking (in a waiting queue forever).
• Let S and Q be two mutex locks:

    P0:                P1:
      Lock(S);           Lock(Q);
      Lock(Q);           Lock(S);
      . . .              . . .
      Unlock(Q);         Unlock(S);
      Unlock(S);         Unlock(Q);

Deadlock Avoidance
• Order the locks and always acquire the locks in that order.
• This eliminates circular waiting.

    P0:                P1:
      Lock(S);           Lock(S);
      Lock(Q);           Lock(Q);
      . . .              . . .
      Unlock(Q);         Unlock(Q);
      Unlock(S);         Unlock(S);
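When the two locks are only known at run time, a fixed order can still be imposed, for example by comparing addresses. The sketch below uses that convention; the `account` type and the functions `lock_pair`, `transfer`, and `ordering_demo` are illustrative assumptions, and `transfer` requires that the two accounts be distinct.

```c
#include <pthread.h>
#include <stdint.h>

typedef struct {
    pthread_mutex_t lock;
    long balance;          /* protected by lock */
} account;

/* Always locks the lower-addressed account first, whatever the argument order. */
static void lock_pair(account *x, account *y) {
    if ((uintptr_t)x > (uintptr_t)y) {
        account *tmp = x;
        x = y;
        y = tmp;
    }
    pthread_mutex_lock(&x->lock);
    pthread_mutex_lock(&y->lock);
}

static void unlock_pair(account *x, account *y) {
    pthread_mutex_unlock(&x->lock);   /* unlock order does not matter */
    pthread_mutex_unlock(&y->lock);
}

/* Requires from != to. */
void transfer(account *from, account *to, long amount) {
    lock_pair(from, to);
    from->balance -= amount;
    to->balance += amount;
    unlock_pair(from, to);
}

enum { TRANSFERS = 100000 };
static account acct_s, acct_q;

static void *s_to_q(void *arg) {
    (void)arg;
    for (int k = 0; k < TRANSFERS; k++)
        transfer(&acct_s, &acct_q, 1);
    return NULL;
}

static void *q_to_s(void *arg) {
    (void)arg;
    for (int k = 0; k < TRANSFERS; k++)
        transfer(&acct_q, &acct_s, 1);
    return NULL;
}

/* Two threads transfer in opposite directions; returns the total balance,
   or -1 if a thread could not be created. */
long ordering_demo(void) {
    pthread_t tid[2];
    int started = 0;

    pthread_mutex_init(&acct_s.lock, NULL);
    pthread_mutex_init(&acct_q.lock, NULL);
    acct_s.balance = 1000;
    acct_q.balance = 1000;
    if (pthread_create(&tid[0], NULL, s_to_q, NULL) == 0) {
        started = 1;
        if (pthread_create(&tid[1], NULL, q_to_s, NULL) == 0)
            started = 2;
    }
    for (int k = 0; k < started; k++)
        pthread_join(tid[k], NULL);
    long total = acct_s.balance + acct_q.balance;
    pthread_mutex_destroy(&acct_s.lock);
    pthread_mutex_destroy(&acct_q.lock);
    return started == 2 ? total : -1;
}
```

Without `lock_pair`, the two workers would request the locks in opposite orders, which is the P0/P1 pattern from the deadlock slide.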

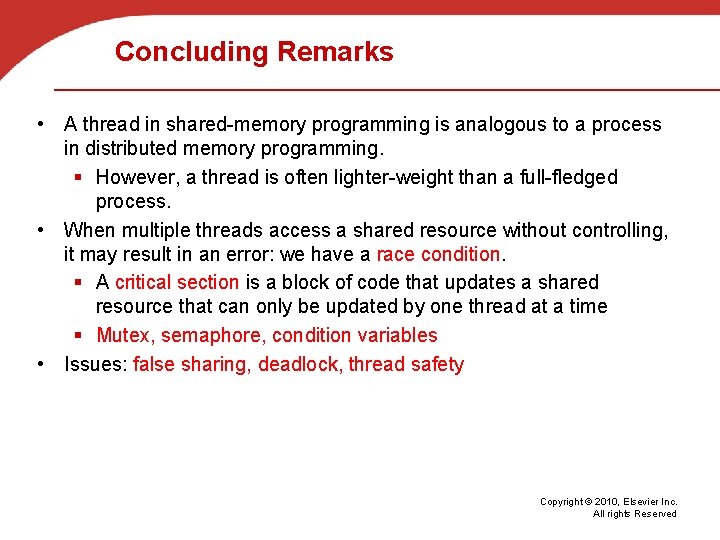

Thread-Safety
• A block of code is thread-safe if it can be simultaneously executed by multiple threads without causing problems.
• When you write your own functions, you know whether they are safe to be called by multiple threads.
• You may forget to check whether the system library functions you use are thread-safe.
  § Unsafe function: strtok() from the C string.h library, which keeps its position in hidden static state.
  § Other examples:
    – The random number generator random in stdlib.h.
    – The time conversion function localtime in time.h.
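POSIX provides reentrant counterparts for several of these functions, such as `strtok_r`, which stores its position in a caller-supplied pointer instead of static state. A minimal sketch, with the hypothetical helper `count_tokens`:

```c
#define _POSIX_C_SOURCE 200809L   /* expose strtok_r under strict -std=c11 */
#include <stdlib.h>
#include <string.h>

/* Counts whitespace-separated tokens in line. Safe to call from several
   threads at once because all state lives in local variables.
   Returns -1 if memory allocation fails. */
int count_tokens(const char *line) {
    size_t len = strlen(line);
    char *copy = malloc(len + 1);     /* strtok_r modifies its input */
    if (copy == NULL)
        return -1;
    memcpy(copy, line, len + 1);

    int count = 0;
    char *saveptr = NULL;             /* per-call position, replaces strtok's static */
    for (char *tok = strtok_r(copy, " \t\n", &saveptr);
         tok != NULL;
         tok = strtok_r(NULL, " \t\n", &saveptr))
        count++;

    free(copy);
    return count;
}
```

The same pattern applies to `localtime_r` for `localtime`; in each case the caller owns the storage that the unsafe version keeps in a shared static buffer.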

Concluding Remarks
• A thread in shared-memory programming is analogous to a process in distributed-memory programming.
  § However, a thread is often lighter-weight than a full-fledged process.
• When multiple threads access a shared resource without synchronization, the result may be an error: we have a race condition.
  § A critical section is a block of code that updates a shared resource that can only be updated by one thread at a time.
  § Tools: mutexes, semaphores, condition variables.
• Issues: false sharing, deadlock, thread safety.