Shared Memory Multiprocessors

- Slides: 21


Symmetric Multiprocessors

- SMPs are the most prevalent form of parallel architecture
  - Provide global access to a global physical address space
  - Dominate the server market
  - On their way to dominating the desktop
- “Throughput engines” for multiple sequential jobs
- Also attractive for parallel programming
  - Uniform access via ordinary loads/stores
  - Automatic movement/replication of shared data in local caches
- Can support the message passing programming model
  - No operating system involvement needed; address translation and buffer protection are provided by hardware

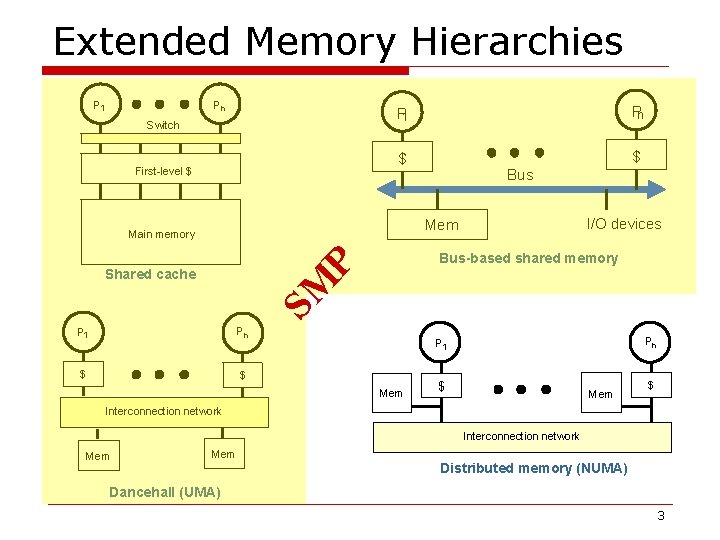

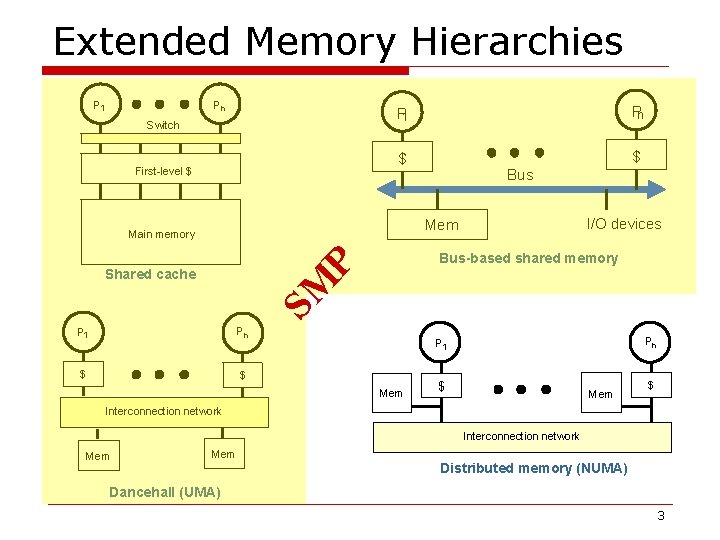

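Extended Memory Hierarchies

- Shared cache: processors P1 ... Pn connect through a switch to a shared first-level cache ($) and main memory
- Bus-based shared memory: P1 ... Pn, each with a private cache ($), share a bus with memory and I/O devices
- Dancehall (UMA): P1 ... Pn, each with a private cache ($), reach the memory modules across an interconnection network
- Distributed memory (NUMA): each processor has a private cache ($) and a local memory; the nodes are connected by an interconnection network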

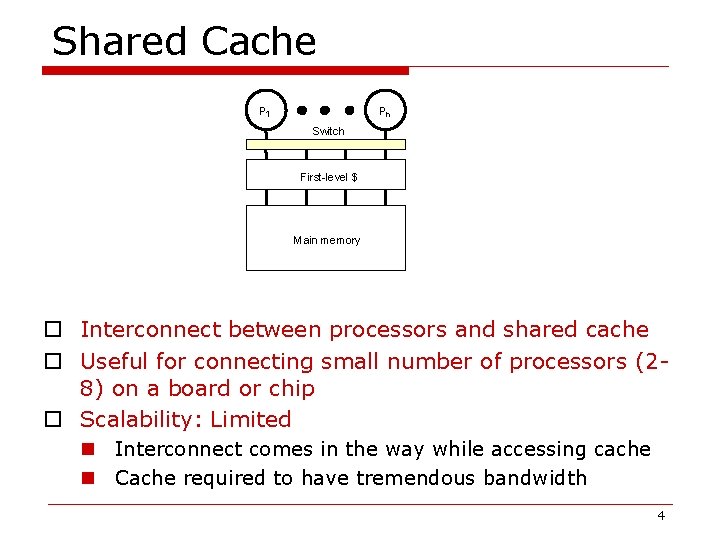

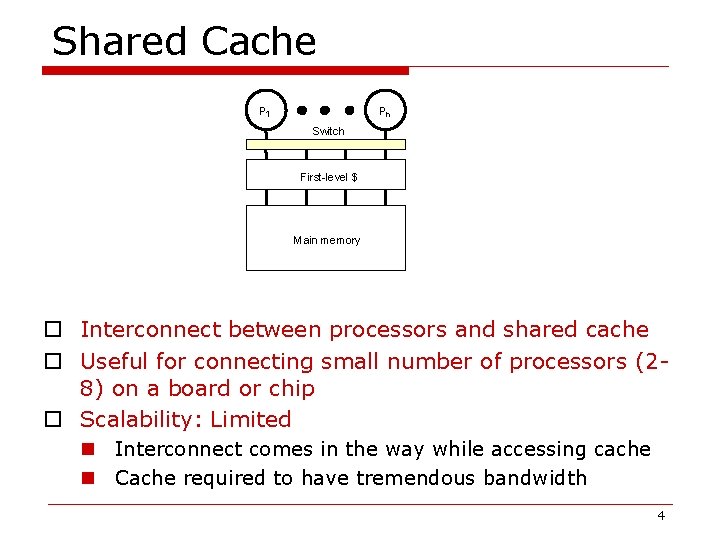

Shared Cache

Figure: processors P1 ... Pn connected through a switch to a shared first-level cache ($), backed by main memory.

- Interconnect sits between the processors and the shared cache
- Useful for connecting a small number of processors (2-8) on a board or chip
- Scalability: limited
  - Interconnect gets in the way of every cache access
  - Cache must provide tremendous bandwidth

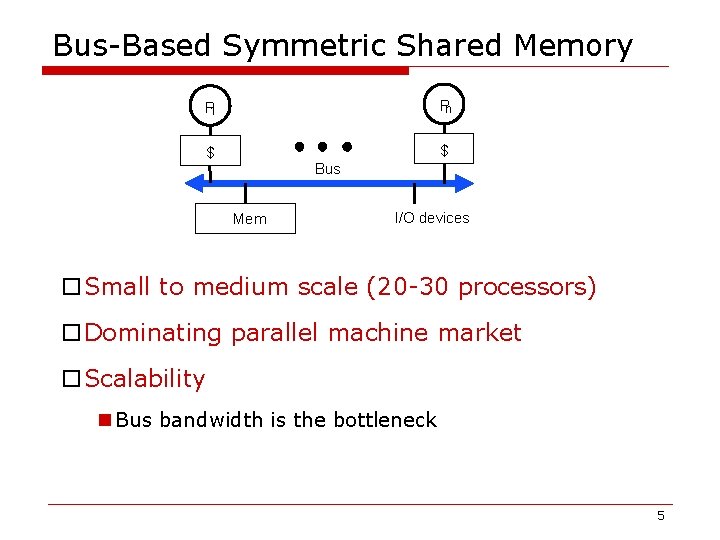

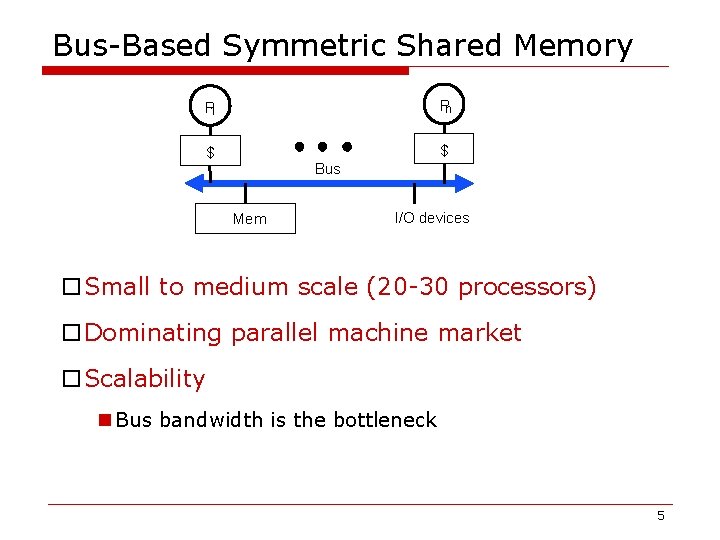

Bus-Based Symmetric Shared Memory

Figure: processors P1 ... Pn, each with a private cache ($), sharing a bus with memory and I/O devices.

- Small to medium scale (20-30 processors)
- Dominates the parallel machine market
- Scalability
  - Bus bandwidth is the bottleneck

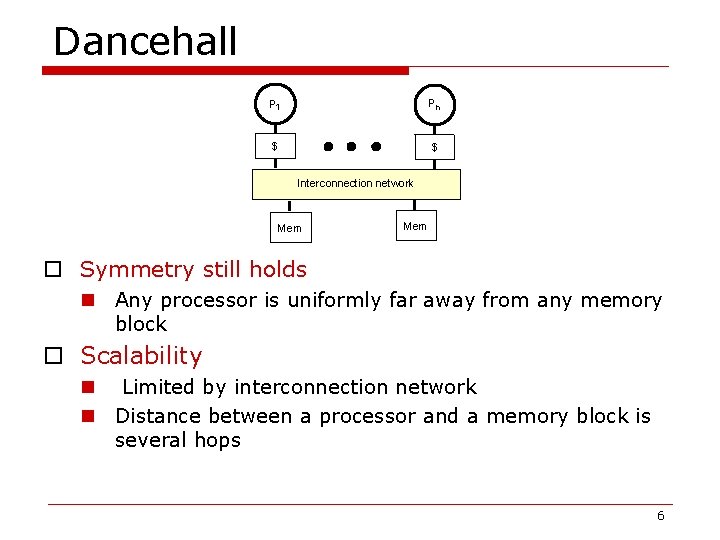

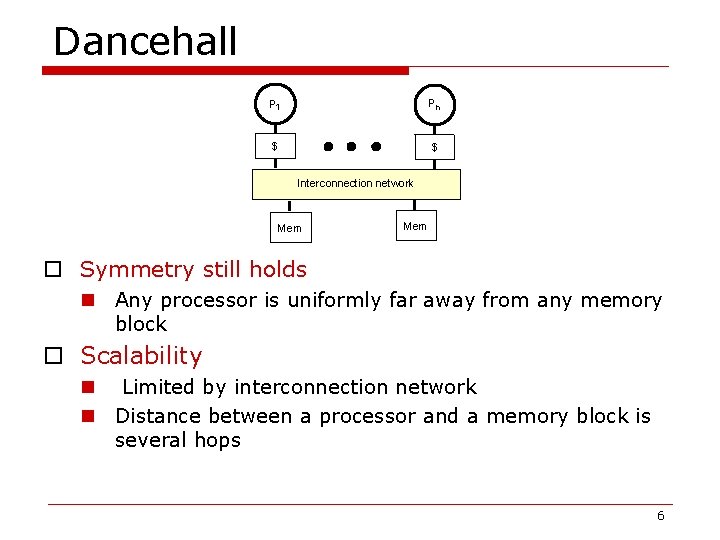

Dancehall

Figure: processors P1 ... Pn, each with a private cache ($), connected to the memory modules through an interconnection network.

- Symmetry still holds
  - Every processor is uniformly far away from every memory block
- Scalability
  - Limited by the interconnection network
  - A processor is several hops away from any memory block

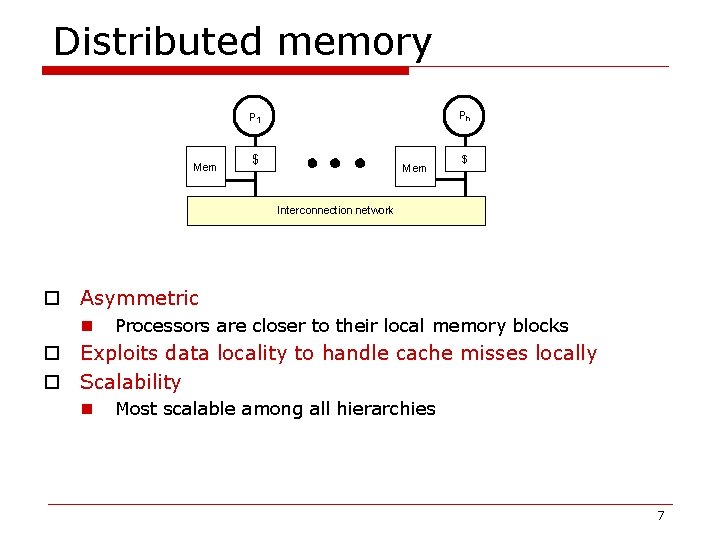

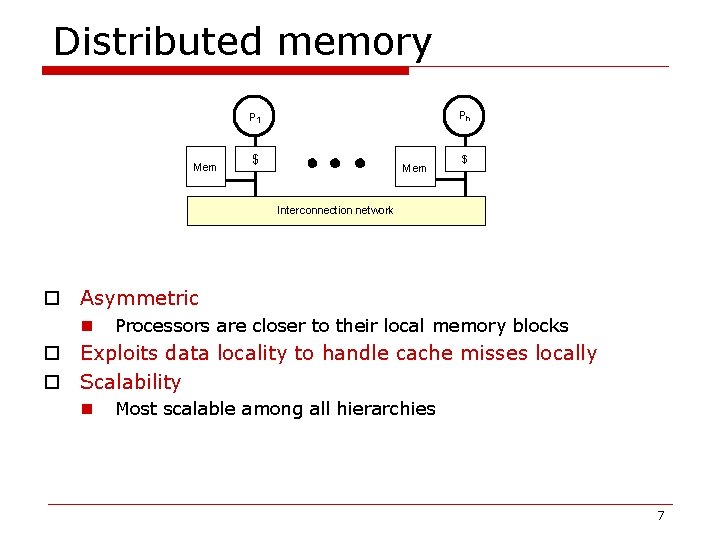

Distributed Memory

Figure: processors P1 ... Pn, each with a private cache ($) and a local memory, connected by an interconnection network.

- Asymmetric
  - Processors are closer to their local memory blocks
- Exploits data locality to handle cache misses locally
- Scalability
  - Most scalable among all hierarchies

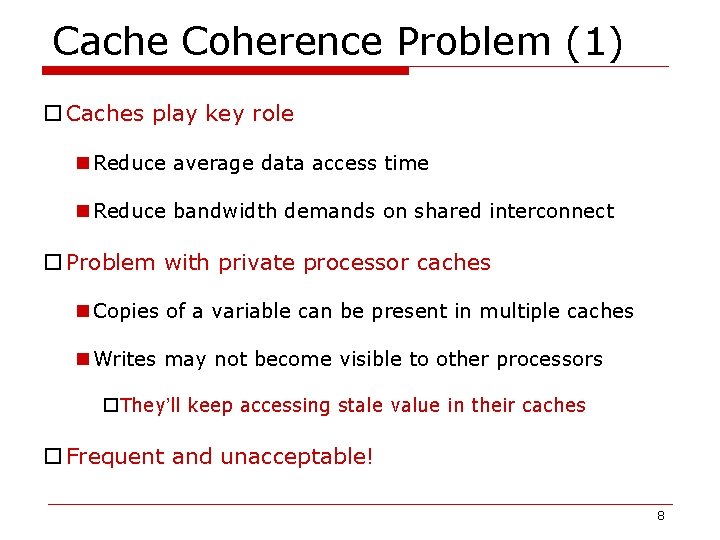

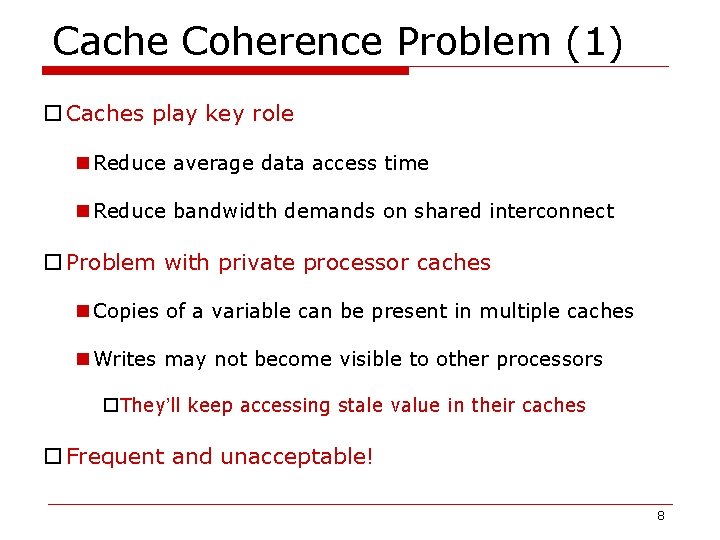

Cache Coherence Problem (1)

- Caches play a key role
  - Reduce average data access time
  - Reduce bandwidth demands on the shared interconnect
- Problem with private processor caches
  - Copies of a variable can be present in multiple caches
  - Writes may not become visible to other processors
    - They’ll keep accessing the stale value in their caches
    - Frequent and unacceptable!

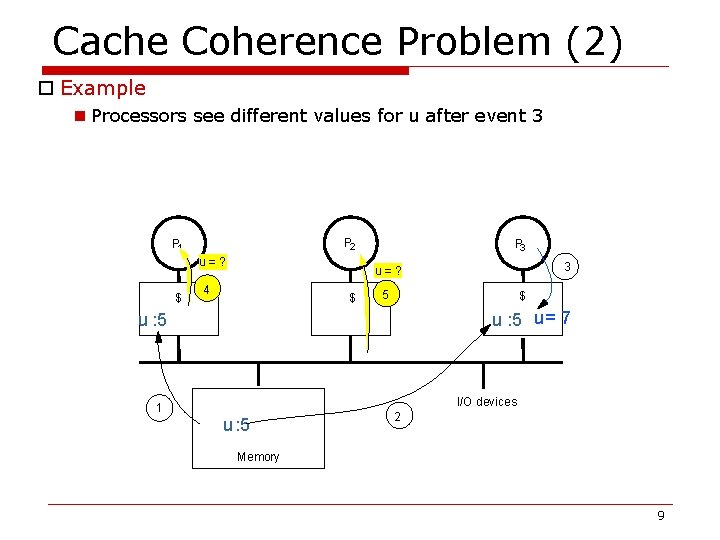

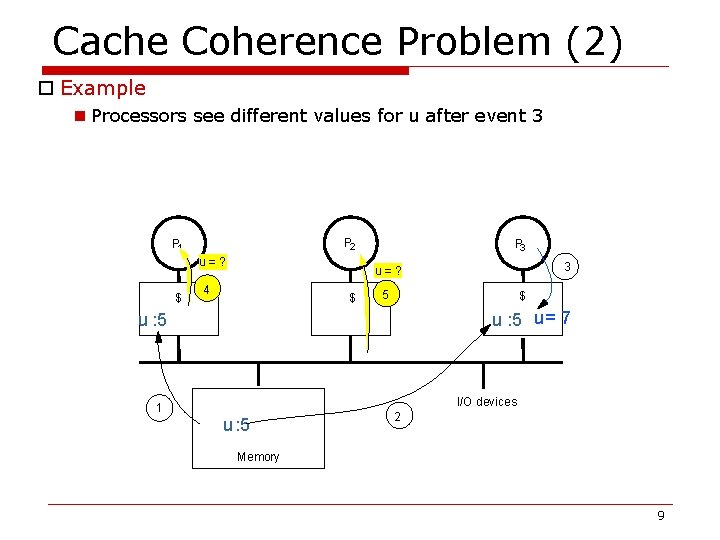

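Cache Coherence Problem (2)

- Example
  - Processors see different values for u after event 3

Figure: processors P1, P2, and P3, each with a private cache ($), share a bus with memory and I/O devices. Memory holds u: 5 and two caches hold copies u: 5; events are numbered 1 to 5, with the write u = 7 followed by two reads marked u = ?.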
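The stale-value scenario can be replayed in a few lines of Python. This is only a minimal sketch under stated assumptions: the caches are write-back with no coherence mechanism at all, and the event order (P1 reads, P3 reads, P3 writes u = 7, P1 reads, P2 reads) follows the usual textbook version of this example rather than anything the garbled figure confirms. The names `PrivateCache` and `replay_example` are hypothetical.

```python
class PrivateCache:
    """Write-back cache with no coherence mechanism (deliberately broken)."""

    def __init__(self, memory):
        self.memory = memory   # shared dict: variable name -> value
        self.lines = {}        # private copies held by this cache

    def read(self, name):
        if name not in self.lines:            # miss: fetch from memory
            self.lines[name] = self.memory[name]
        return self.lines[name]               # hit: the copy may be stale

    def write(self, name, value):
        self.lines[name] = value              # write-back: memory is not updated


def replay_example():
    """Replay the five events (assumed order) and record what each read sees."""
    memory = {"u": 5}
    p1, p2, p3 = (PrivateCache(memory) for _ in range(3))
    seen = {}
    seen["event 1: P1 reads u"] = p1.read("u")
    seen["event 2: P3 reads u"] = p3.read("u")
    p3.write("u", 7)                          # event 3: P3 writes u = 7
    seen["event 4: P1 reads u"] = p1.read("u")
    seen["event 5: P2 reads u"] = p2.read("u")
    seen["afterwards: P3 reads u"] = p3.read("u")
    return seen, memory
```

In this model P1 hits on its old copy and P2 fetches the old value from memory, so both still read 5 while P3 reads 7. Making the cache write-through would fix the read by P2 but not the one by P1, which is why a coherence mechanism is needed in either case.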

Cache Memory: Background

Background

- Block placement
- Block identification
- Block replacement
- Write policy
- Performance

Block Placement

- Three categories
  - Direct mapped
  - Fully associative
  - Set associative

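Direct Mapped Cache

- A memory block has only one place to go in the cache
  - Cache block = (memory block address) MOD (cache size in blocks)
- Can also be called
  - One-way set associative (a set associative cache in which each set holds exactly one block)

Figure: a memory of 32 blocks (Block 0 to Block 31) mapped onto a cache of 8 blocks (Block 0 to Block 7).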

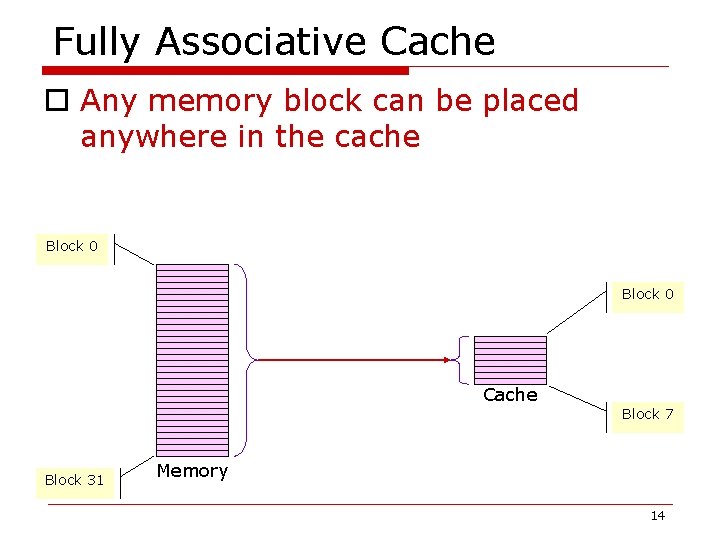

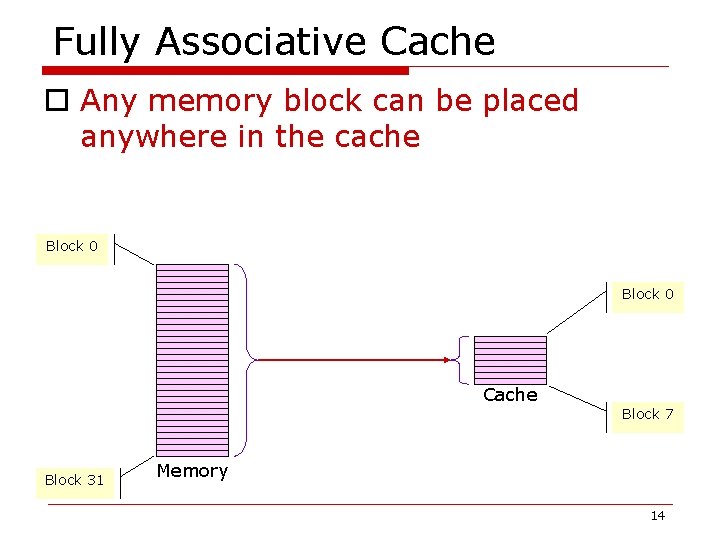

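Fully Associative Cache

- Any memory block can be placed anywhere in the cache

Figure: a memory of 32 blocks (Block 0 to Block 31) and a cache of 8 blocks (Block 0 to Block 7); any memory block may occupy any cache block.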

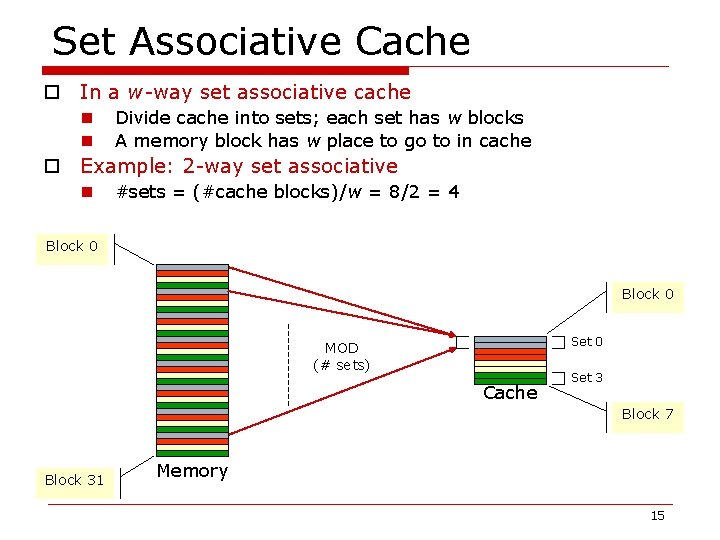

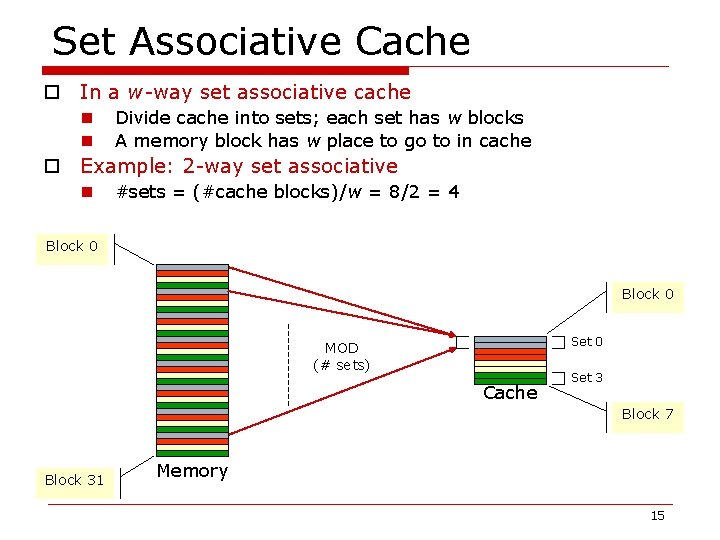

Set Associative Cache

- In a w-way set associative cache
  - Divide the cache into sets; each set has w blocks
  - A memory block has w places to go in the cache
  - Set = (memory block address) MOD (#sets)
- Example: 2-way set associative
  - #sets = (#cache blocks)/w = 8/2 = 4

Figure: a memory of 32 blocks (Block 0 to Block 31) mapped onto a cache of 8 blocks organized as 4 sets (Set 0 to Set 3).

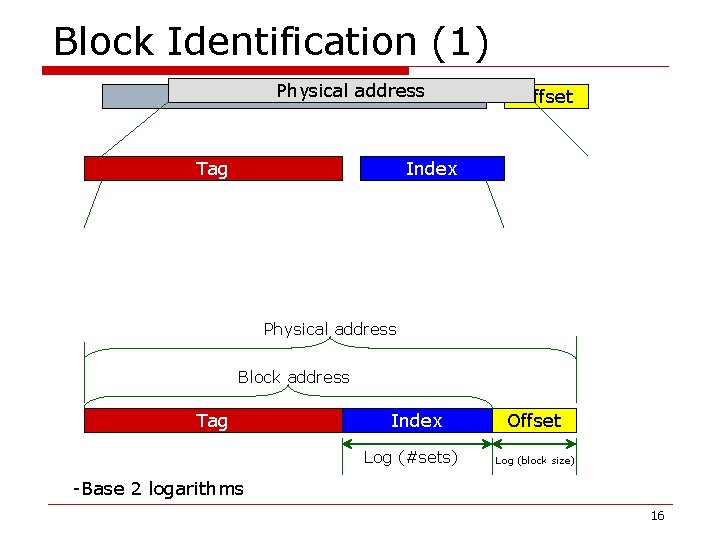

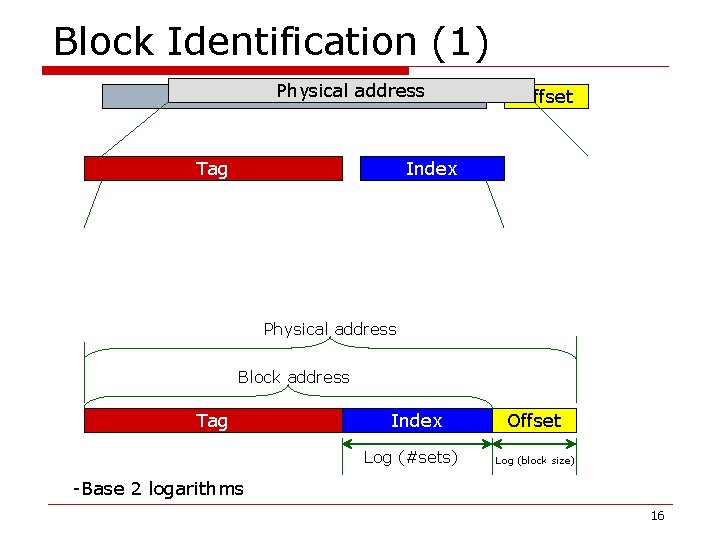
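The three placement schemes differ only in the associativity w, which a short Python sketch can make concrete. The function name `candidate_frames` is hypothetical, and the sketch assumes that the blocks of set s occupy the contiguous cache positions s*w through s*w + w - 1, which is just one convenient numbering.

```python
def candidate_frames(block_addr, num_cache_blocks, ways):
    """Return the cache positions where a memory block may be placed.

    ways == 1                -> direct mapped (exactly one position)
    ways == num_cache_blocks -> fully associative (every position)
    otherwise                -> w-way set associative
    """
    if ways <= 0 or num_cache_blocks % ways != 0:
        raise ValueError("ways must be positive and divide the number of cache blocks")
    num_sets = num_cache_blocks // ways
    set_index = block_addr % num_sets        # block address MOD #sets
    first = set_index * ways                 # assumed contiguous layout of a set
    return list(range(first, first + ways))
```

For memory block 12 and the 8-block cache used on these slides, `candidate_frames(12, 8, 1)` gives `[4]`, `candidate_frames(12, 8, 2)` gives `[0, 1]` (set 0), and `candidate_frames(12, 8, 8)` gives all eight positions.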

Block Identification (1)

- Physical address = Block address + Offset
- Block address = Tag + Index
- Field widths (base-2 logarithms)
  - Index: log2(#sets) bits
  - Offset: log2(block size) bits
  - Tag: the remaining high-order bits

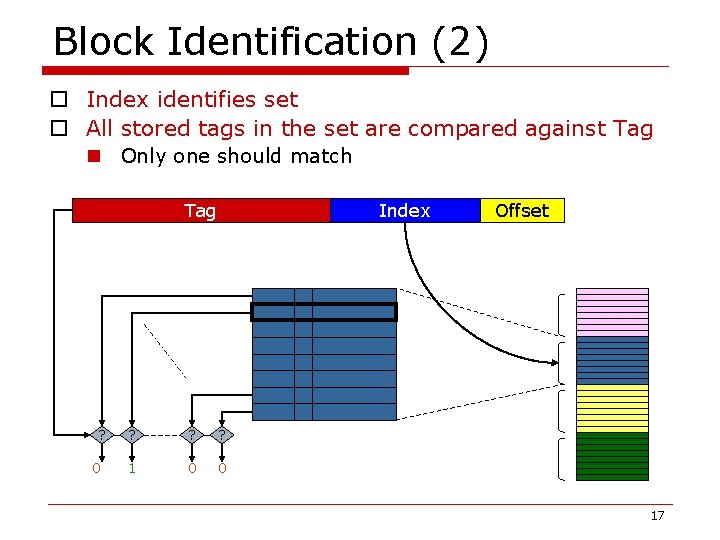

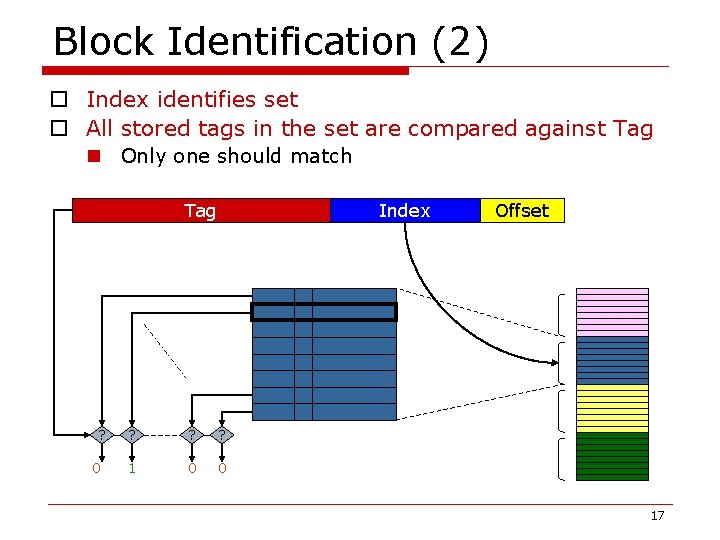
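The address split can be sketched with shifts and masks. This is a minimal illustration that assumes the block size and the number of sets are both powers of two; the function name `split_address` and the example parameters are hypothetical.

```python
def split_address(addr, block_size, num_sets):
    """Split a physical address into (tag, index, offset)."""
    for n in (block_size, num_sets):
        if n <= 0 or n & (n - 1):
            raise ValueError("block_size and num_sets must be powers of two")
    offset_bits = block_size.bit_length() - 1   # log2(block size)
    index_bits = num_sets.bit_length() - 1      # log2(#sets)
    offset = addr & (block_size - 1)            # low-order bits
    block_addr = addr >> offset_bits            # drop the offset
    index = block_addr & (num_sets - 1)         # selects the set
    tag = block_addr >> index_bits              # remaining high-order bits
    return tag, index, offset
```

With 32-byte blocks and 4 sets, `split_address(0x1234, 32, 4)` returns `(36, 1, 20)`: block address 145 falls in set 1 with tag 36, and the byte sits at offset 20 within the block.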

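Block Identification (2)

- Index identifies the set
- All stored tags in the set are compared against Tag
  - At most one should match (none on a miss)

Figure: an address split into Tag, Index, and Offset; the Index selects a set, and each of the four tags stored in that set is compared against the Tag, producing a 1 for the matching block and 0 for the others.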
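The lookup step can be sketched as follows. This is an illustration under assumptions, not a hardware description: each cache entry is modeled as a `(valid, tag)` pair, and the valid bit is an addition that the slide does not mention. Hardware compares all tags of the set in parallel, whereas this sketch scans them in a loop. The name `lookup` is hypothetical.

```python
def lookup(cache_sets, tag, index):
    """Return the way that holds the block, or None on a miss.

    cache_sets[index] is a list of (valid, stored_tag) pairs, one per way.
    """
    matches = [way
               for way, (valid, stored_tag) in enumerate(cache_sets[index])
               if valid and stored_tag == tag]      # one comparison per way
    assert len(matches) <= 1, "a block must not be cached twice in one set"
    return matches[0] if matches else None
```

For a 4-way cache with 4 sets in which only way 1 of set 1 is valid and holds tag 36, `lookup(cache_sets, 36, 1)` returns 1 and any other tag misses.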

Block Replacement

- FIFO: first in, first out
- LRU: least recently used
- Random
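The three policies differ only in which block of a full set becomes the victim. The sketch below models a single set; the class name `CacheSet`, the helper `evictions`, and the seeded random generator are hypothetical choices made for illustration.

```python
import random
from collections import OrderedDict


class CacheSet:
    """One set of a set associative cache with a selectable replacement policy."""

    def __init__(self, ways, policy="LRU", rng=None):
        if policy not in ("FIFO", "LRU", "RANDOM"):
            raise ValueError(f"unknown policy: {policy}")
        self.ways = ways
        self.policy = policy
        self.rng = rng or random.Random(0)   # seeded so runs are repeatable
        self.blocks = OrderedDict()          # tag -> None, oldest entry first

    def access(self, tag):
        """Touch a block; return the evicted tag, or None if nothing was evicted."""
        if tag in self.blocks:
            if self.policy == "LRU":
                self.blocks.move_to_end(tag)  # only LRU refreshes order on a hit
            return None
        victim = None
        if len(self.blocks) == self.ways:     # set is full: pick a victim
            if self.policy == "RANDOM":
                victim = self.rng.choice(list(self.blocks))
                del self.blocks[victim]
            else:
                # Front of the order is the first-in (FIFO) or least recent (LRU) block.
                victim, _ = self.blocks.popitem(last=False)
        self.blocks[tag] = None
        return victim


def evictions(policy, trace, ways=2):
    """Return the victim (or None) produced by each access in the trace."""
    cache_set = CacheSet(ways, policy)
    return [cache_set.access(tag) for tag in trace]
```

On the access sequence A, B, A, C with two ways, FIFO evicts A (the first block brought in) while LRU evicts B (A was touched more recently); Random may evict either one.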

Write Policy

- Write-through caches
  - Values are updated in main memory immediately
  - Main memory always has up-to-date values
  - Leads to slower performance
  - Easier to implement
- Write-back caches
  - Values are not updated in main memory immediately; a modified block is written back when it is replaced
  - Main memory may contain outdated values
  - Leads to faster performance
  - Harder to implement
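The difference between the two policies shows up in how often memory is written and in when it becomes up to date. The following is a toy model under loud assumptions: the cache holds a single block, a block is one value, and writes allocate the block in the cache (write-allocate), none of which comes from the slide. The class name `OneBlockCache` is hypothetical.

```python
class OneBlockCache:
    """Single-block cache in front of a dict that stands in for main memory."""

    def __init__(self, memory, write_through):
        self.memory = memory
        self.write_through = write_through
        self.addr = None         # address of the cached block, if any
        self.value = None
        self.dirty = False       # only meaningful for write-back
        self.memory_writes = 0   # number of writes that reached memory

    def _evict(self):
        if self.addr is not None and self.dirty:
            self.memory[self.addr] = self.value   # the write-back happens here
            self.memory_writes += 1
        self.addr, self.value, self.dirty = None, None, False

    def _fill(self, addr):
        if self.addr != addr:                     # miss: replace the cached block
            self._evict()
            self.addr, self.value = addr, self.memory[addr]

    def read(self, addr):
        self._fill(addr)
        return self.value

    def write(self, addr, value):
        self._fill(addr)                          # write-allocate (an assumption)
        self.value = value
        if self.write_through:
            self.memory[addr] = value             # memory updated immediately
            self.memory_writes += 1
        else:
            self.dirty = True                     # memory updated on replacement
```

Writing address 0 three times costs three memory writes with write-through. With write-back, memory keeps the old value and sees no writes until another address is accessed and the dirty block is replaced, at which point a single write brings it up to date.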

Cache Performance

- Average access time
  - hit time + miss rate × miss penalty
- Miss penalty
  - Time taken to access memory
  - On the order of hundreds of times the hit time
- Miss rate
  - Depends on several factors
    - Design
    - Program
  - If the miss rate is very small, the average access time approaches the hit time
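The formula is easy to try with numbers. In the sketch below the function name `average_access_time` is hypothetical, and the figures used in the usage note (a 1-cycle hit time and a 100-cycle miss penalty) are illustrative assumptions, not measurements.

```python
def average_access_time(hit_time, miss_rate, miss_penalty):
    """Average access time = hit time + miss rate x miss penalty."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time + miss_rate * miss_penalty
```

With a 1-cycle hit time and a 100-cycle miss penalty, a 5% miss rate gives about 6 cycles per access, while a 0.1% miss rate gives about 1.1 cycles, which is close to the hit time.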

More on Cache Memory

- For more, read Sections 5.1 through 5.3 of
  - J. L. Hennessy and D. A. Patterson, Computer Architecture: A Quantitative Approach, Morgan Kaufmann Publishers, Inc., Palo Alto, CA, third edition, 2002.