- Slides: 23

Session 7: Planning for Evaluation

Session Overview

- Key definitions: monitoring, evaluation
- Process monitoring and process evaluation
- Outcome monitoring and outcome evaluation
- Impact monitoring and impact evaluation
- Evaluation study designs

Session Learning Objectives

By the end of the session, the participant will be able to:

- plan for program-level monitoring and evaluation activities;
- understand basic principles of evaluation study design; and
- link evaluation design to the type of decisions that need to be made.

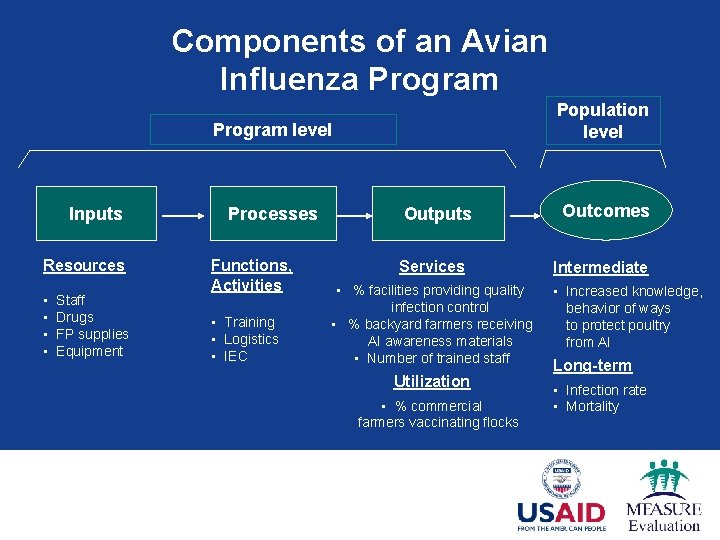

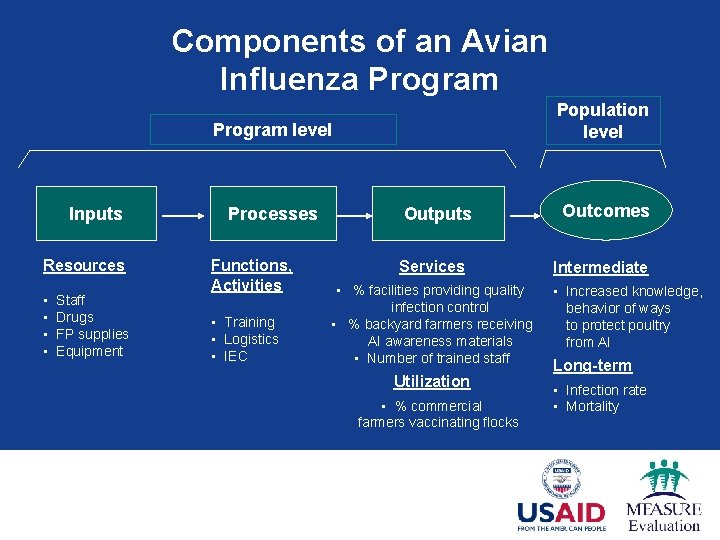

Components of an Avian Influenza Program

Program level:

- Inputs (resources): staff, drugs, FP supplies, equipment
- Processes (functions, activities): training, logistics, IEC
- Outputs
  - Services: % facilities providing quality infection control; % backyard farmers receiving AI awareness materials; number of trained staff
  - Utilization: % commercial farmers vaccinating flocks

Population level:

- Outcomes
  - Intermediate: improved knowledge and behavior regarding ways to protect poultry from AI
  - Long-term: infection rate, mortality
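Output indicators such as "% facilities providing quality infection control" are simple proportions computed from routine monitoring records. The sketch below shows one way to calculate such an indicator. The record layout, the field name `meets_infection_control_standard`, and the facility data are hypothetical and serve only as an illustration.

```python
def percent(numerator: int, denominator: int) -> float:
    """Return numerator/denominator as a percentage, rounded to one decimal."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return round(100.0 * numerator / denominator, 1)


# Hypothetical monitoring records: one entry per facility visited.
facilities = [
    {"name": "Facility A", "meets_infection_control_standard": True},
    {"name": "Facility B", "meets_infection_control_standard": False},
    {"name": "Facility C", "meets_infection_control_standard": True},
    {"name": "Facility D", "meets_infection_control_standard": True},
]

# Numerator: facilities meeting the standard; denominator: all facilities assessed.
meeting = sum(1 for f in facilities if f["meets_infection_control_standard"])
pct_quality_infection_control = percent(meeting, len(facilities))
print(pct_quality_infection_control)
```

Stating the numerator and denominator explicitly, as the function does, helps keep an indicator comparable from one reporting period to the next.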

Monitoring involves:

- routine tracking of information about a program and its intended outputs, outcomes and impacts;
- measurement of progress toward achieving program objectives;
- tracking cost and program/project functioning; and
- providing a basis for program evaluation, when linked to a specific program.

Evaluation:

- involves activities designed to determine the value or worth of a specific program;
- uses epidemiological or social research methods to systematically investigate program effectiveness;
- may include the examination of performance against defined standards, an assessment of actual and expected results, and/or the identification of relevant lessons; and
- should be planned from the beginning of a project.

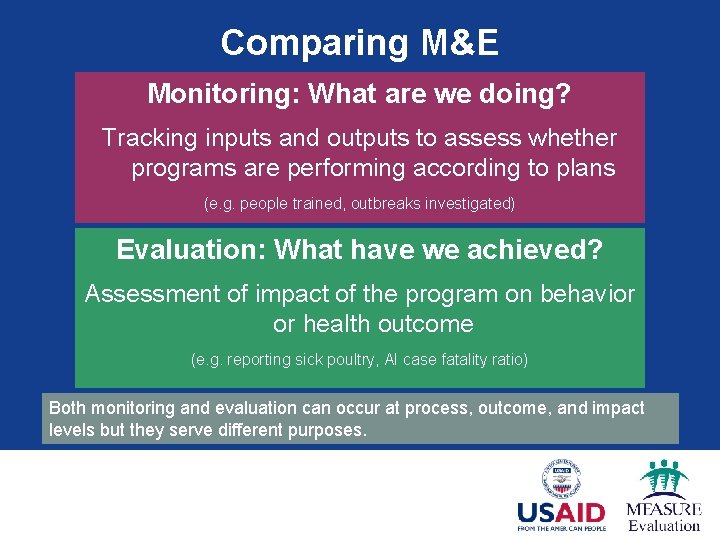

Comparing M&E

Monitoring: What are we doing? Tracking inputs and outputs to assess whether programs are performing according to plans (e.g., people trained, outbreaks investigated).

Evaluation: What have we achieved? Assessment of the impact of the program on behavior or health outcomes (e.g., reporting sick poultry, AI case fatality ratio).

Both monitoring and evaluation can occur at the process, outcome, and impact levels, but they serve different purposes.

Process Monitoring

Every program should engage in process monitoring. Process monitoring:

- collects and analyzes data on inputs, processes, and outputs;
- answers such questions as what staffing/resources are being used, what services are being delivered, or what populations are being served; and
- provides information for program planning and management.

Process Evaluation

Most programs, even small ones, should engage in process evaluation. Process evaluation:

- occurs at specific points in time during the life of the program;
- provides insights into the operations of the program, barriers and lessons learned; and
- can help inform decisions as to whether conducting an outcome evaluation would be worthwhile.

Outcome Monitoring

Outcome monitoring is basic tracking of indicators related to desired program/project outcomes. It answers the question:

- Did the expected outcomes occur (e.g., was the expected knowledge gained, did the expected change in behavior occur, did the expected client use of services occur)?

Example outcome indicators for AI programs include:

- knowledge of appropriate poultry handling procedures
- quality of infection control in hospitals
- rapid response team coverage
- biosecurity levels at wet markets

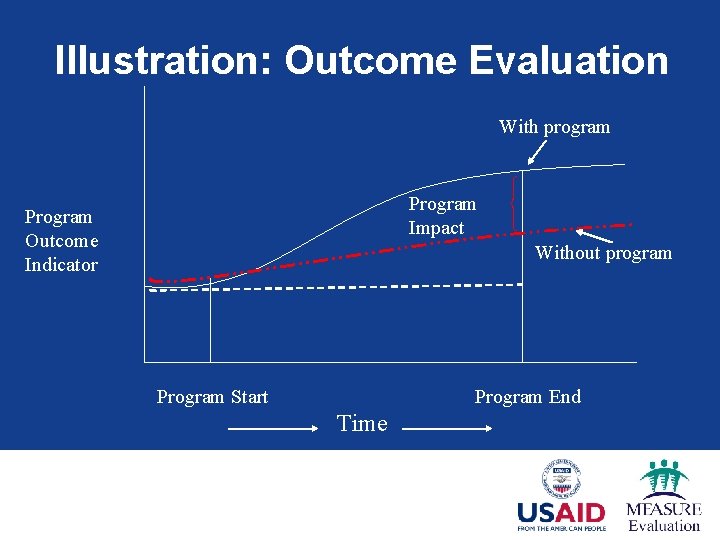

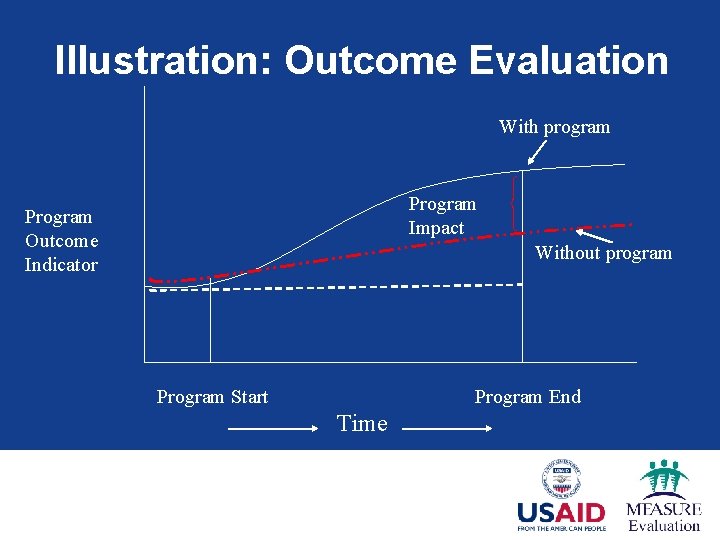

Outcome Evaluation

Some programs, particularly larger ones, conduct outcome evaluations. Outcome evaluations collect and analyze data used to determine whether, and by how much, an intervention achieved its intended outcomes. An outcome evaluation answers the question:

- Did the intervention cause the expected outcomes?

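Illustration: Outcome Evaluation

[Figure: the program outcome indicator (vertical axis) plotted against time (horizontal axis) from program start to program end, with one line "with program" and one line "without program"; the gap between the two lines is labeled "program impact".]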
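The illustration can be summarized in one expression: program impact is the difference, at program end, between the outcome indicator observed with the program and the value it would have had without the program. The symbols $Y$ (outcome indicator) and $t_{\text{end}}$ (program end) are notation introduced here for illustration; they do not appear on the original slide.

```latex
\text{Program impact}
  = Y_{\text{with program}}(t_{\text{end}})
  - Y_{\text{without program}}(t_{\text{end}})
```

The "without program" value cannot be observed directly for the same group, which is why evaluation designs rely on control or comparison groups, or on repeated observations, to approximate it.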

Impact Monitoring

Impact monitoring, a variation on outcome monitoring, focuses on long-term outcomes, including AI infection and mortality rates. Impact monitoring answers the question:

- What long-term changes in AI infection and mortality can we observe?

Impact monitoring is usually conducted by national authorities, such as the ministries of health or agriculture.

Impact Evaluation

Impact evaluation is a variation on outcome evaluation. It focuses on the increase or decrease in disease incidence as a result of a program. Impact evaluation usually addresses changes in an entire population; it is therefore appropriate for large, well-established programs, such as the national AI program. Very few projects/programs conduct impact evaluations.

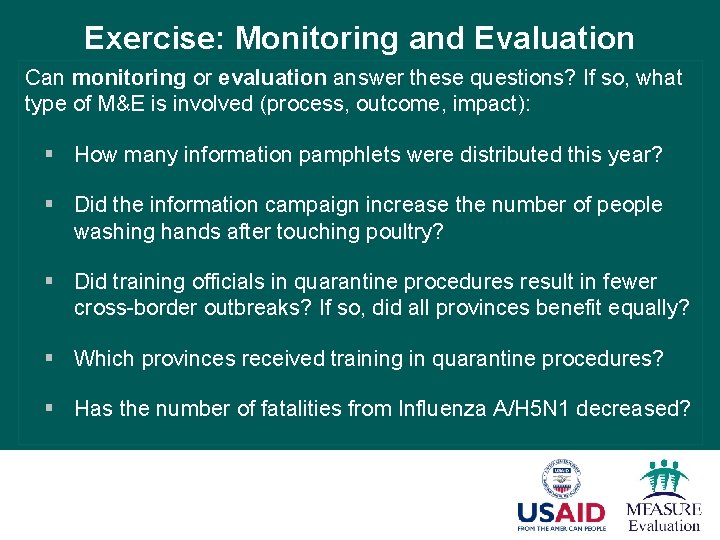

Exercise: Monitoring and Evaluation

Can monitoring or evaluation answer these questions? If so, what type of M&E is involved (process, outcome, impact)?

- How many information pamphlets were distributed this year?
- Did the information campaign increase the number of people washing hands after touching poultry?
- Did training officials in quarantine procedures result in fewer cross-border outbreaks? If so, did all provinces benefit equally?
- Which provinces received training in quarantine procedures?
- Has the number of fatalities from Influenza A/H5N1 decreased?

Evaluation Designs

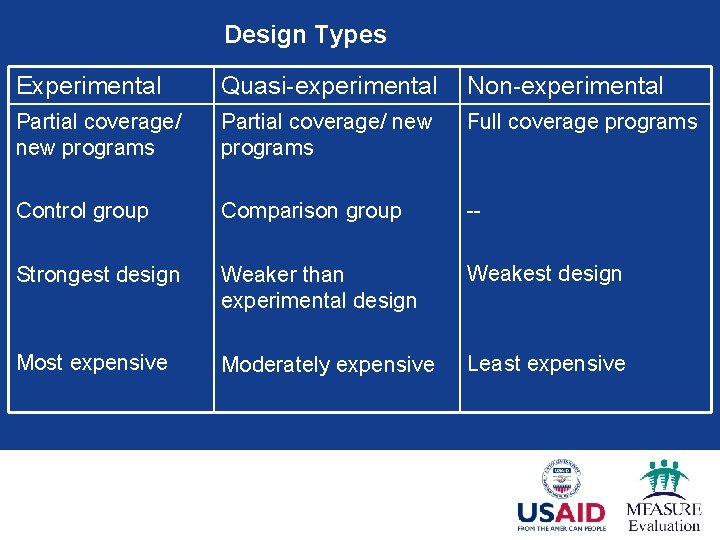

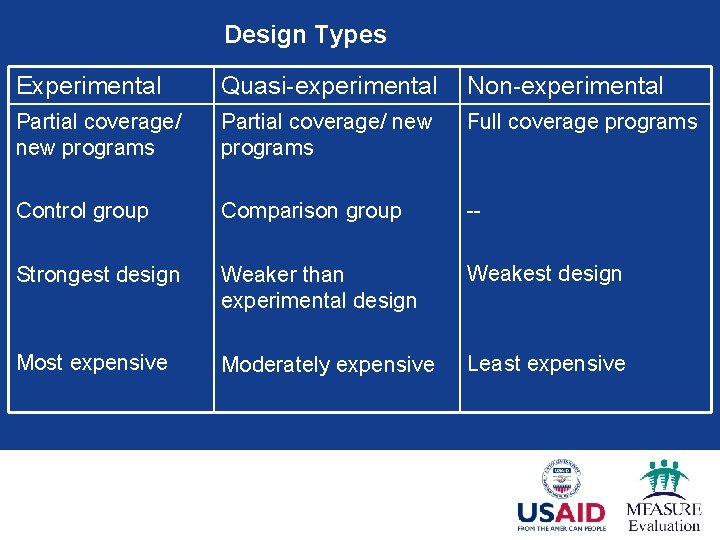

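Design Types

| | Experimental | Quasi-experimental | Non-experimental |
|---|---|---|---|
| Suited to | Partial coverage/new programs | Partial coverage/new programs | Full coverage programs |
| Reference group | Control group | Comparison group | -- |
| Strength | Strongest design | Weaker than experimental design | Weakest design |
| Cost | Most expensive | Moderately expensive | Least expensive |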

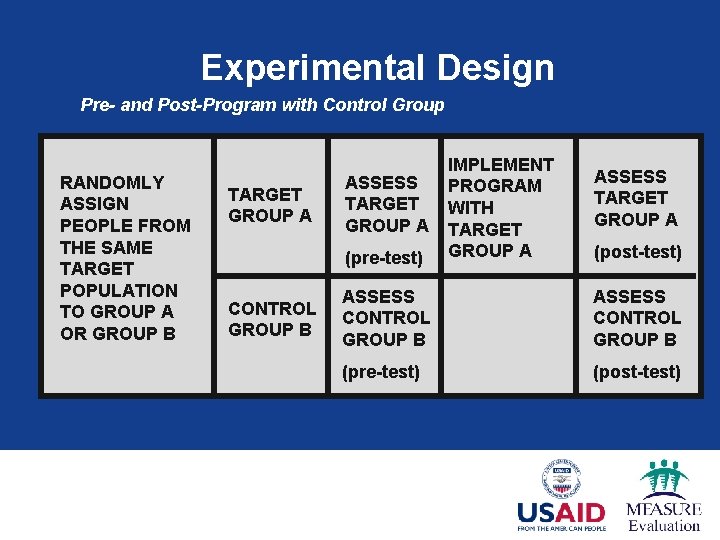

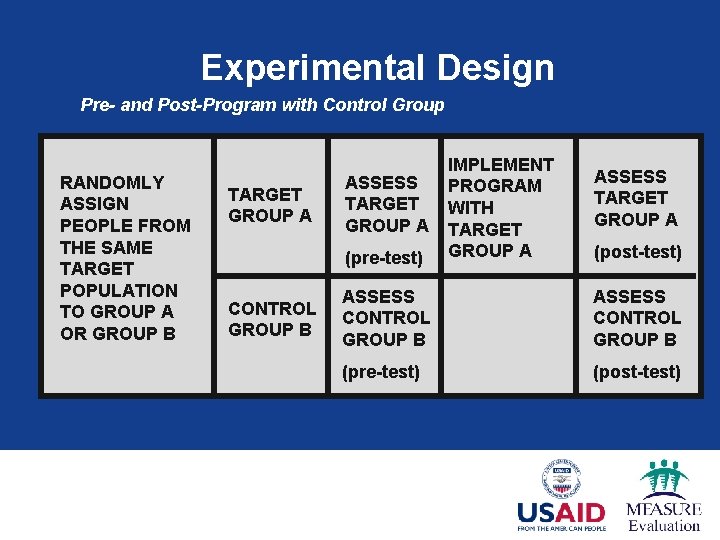

Experimental Design: Pre- and Post-Program with Control Group

1. Randomly assign people from the same target population to Group A (target) or Group B (control).
2. Assess Target Group A and Control Group B (pre-test).
3. Implement the program with Target Group A.
4. Assess Target Group A and Control Group B (post-test).
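The random assignment step of the experimental design can be sketched in a few lines. This is a minimal illustration, not a prescribed procedure: the function name `randomly_assign`, the farm identifiers, and the fixed seed are all hypothetical, and a real study would also need to consider sample size and stratification.

```python
import random


def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into two groups of (near) equal size.

    Returns (target_group_a, control_group_b). A seed makes the
    assignment reproducible so it can be documented and audited.
    """
    rng = random.Random(seed)
    shuffled = list(participants)  # copy so the caller's list is not modified
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Hypothetical target population: ten farms drawn from the same area.
farms = [f"farm_{i:02d}" for i in range(1, 11)]
target_group_a, control_group_b = randomly_assign(farms, seed=7)
print("Target group A:", target_group_a)
print("Control group B:", control_group_b)
```

Because chance alone decides who lands in each group, the two groups are expected to be similar on average before the program starts, which is what makes this the strongest design.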

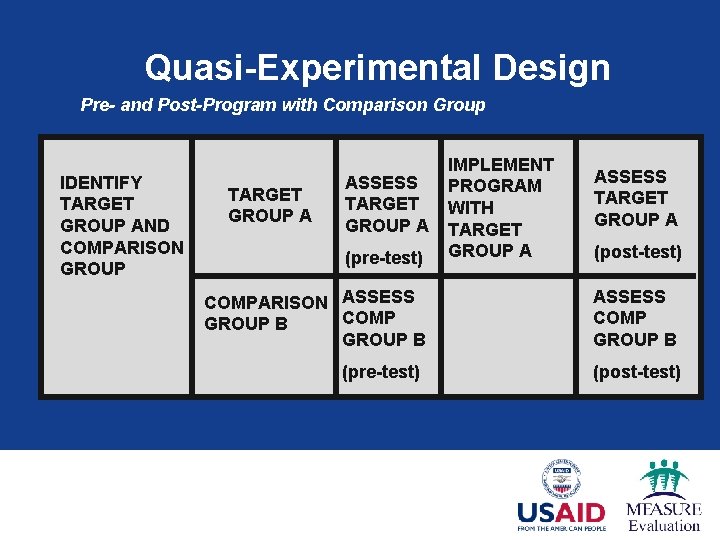

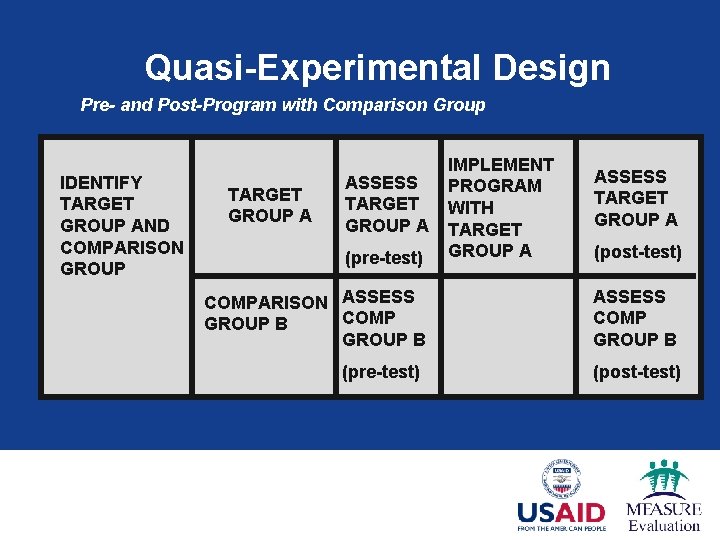

Quasi-Experimental Design: Pre- and Post-Program with Comparison Group

1. Identify the target group (Group A) and a comparison group (Group B).
2. Assess Target Group A and Comparison Group B (pre-test).
3. Implement the program with Target Group A.
4. Assess Target Group A and Comparison Group B (post-test).
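One common way to analyze a pre- and post-test design with a comparison (or control) group is a difference-in-differences calculation: the change in the comparison group is subtracted from the change in the target group. The slides do not name this method; it is offered here as a sketch, and the indicator values below are hypothetical. It assumes that both groups would have followed the same trend had there been no program.

```python
def difference_in_differences(target_pre, target_post,
                              comparison_pre, comparison_post):
    """Estimate program effect as (target change) minus (comparison change).

    Assumption (not testable from these four numbers alone): without the
    program, the target group would have changed as much as the comparison group.
    """
    target_change = target_post - target_pre
    comparison_change = comparison_post - comparison_pre
    return target_change - comparison_change


# Hypothetical indicator: % of farmers who report sick poultry.
estimate = difference_in_differences(
    target_pre=20.0, target_post=45.0,          # Target Group A
    comparison_pre=22.0, comparison_post=30.0,  # Comparison Group B
)
print(estimate)  # 25.0 - 8.0 = 17.0 percentage points
```

Here the target group improved by 25 points, but 8 points of improvement also occurred without the program, so only the remaining 17 points are attributed to it.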

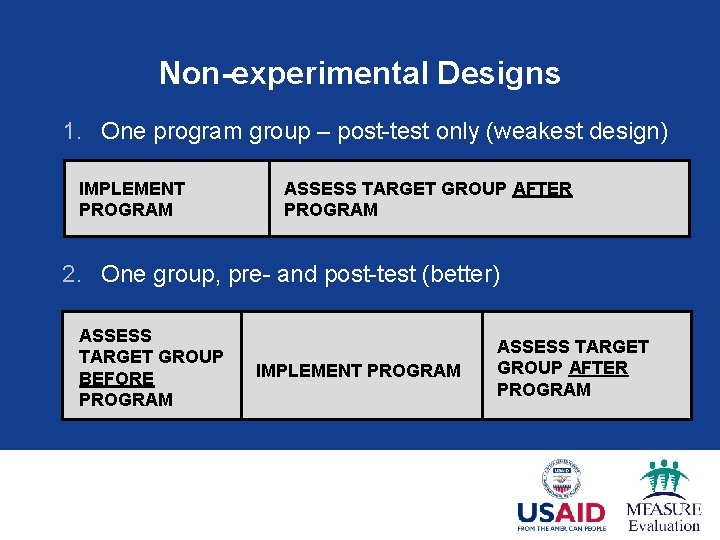

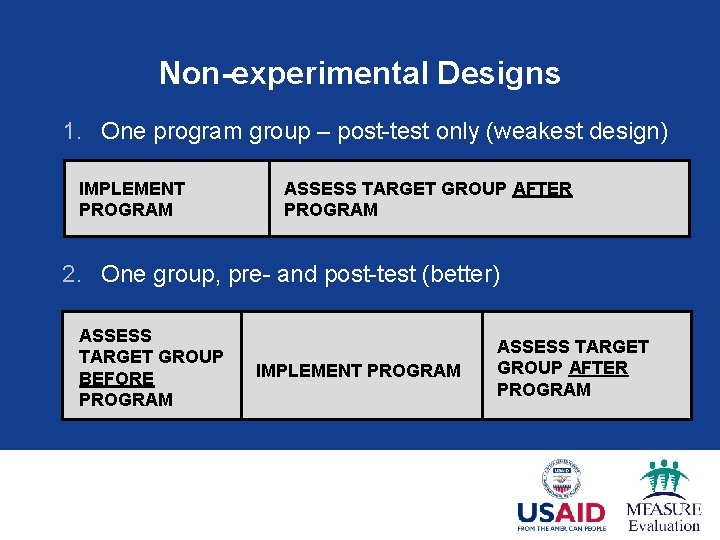

Non-experimental Designs

1. One program group, post-test only (weakest design)
   - Implement the program.
   - Assess the target group after the program.
2. One group, pre- and post-test (better)
   - Assess the target group before the program.
   - Implement the program.
   - Assess the target group after the program.

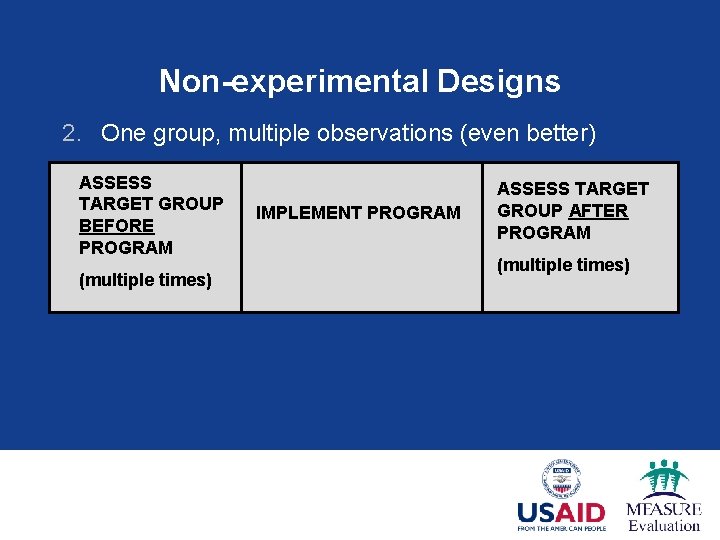

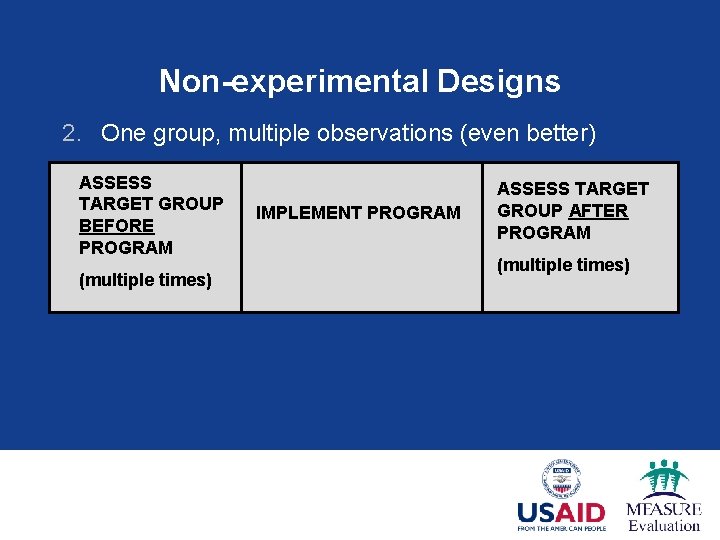

Non-experimental Designs (continued)

3. One group, multiple observations (even better)
   - Assess the target group before the program (multiple times).
   - Implement the program.
   - Assess the target group after the program (multiple times).
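Multiple observations before the program make it possible to see the pre-existing trend and to ask whether post-program values depart from it. The sketch below fits a straight line to the pre-program observations, projects it forward, and averages the gap between observed and projected post-program values. This is one simple approach chosen for illustration, not the method prescribed by the slides; the function names and the data are hypothetical, and it assumes the pre-program trend is roughly linear.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    if len(xs) < 2 or len(set(xs)) < 2:
        raise ValueError("need at least two observations at distinct times")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def mean_gap_from_projected_trend(pre, post):
    """Average of (observed - projected) over the post-program observations.

    pre and post are lists of (time, value) pairs; the projection extends
    the line fitted to the pre-program observations only.
    """
    slope, intercept = fit_line([t for t, _ in pre], [v for _, v in pre])
    gaps = [value - (slope * time + intercept) for time, value in post]
    return sum(gaps) / len(gaps)


# Hypothetical indicator measured in four rounds before and three after the program.
pre_program = [(1, 10.0), (2, 12.0), (3, 14.0), (4, 16.0)]
post_program = [(5, 25.0), (6, 27.0), (7, 29.0)]
gap = mean_gap_from_projected_trend(pre_program, post_program)
print(gap)
```

With a single pre-test, the steady rise already under way before the program would be mistaken for a program effect; repeated observations let that trend be separated out.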

Choosing the Right Design for You

- Non-experimental designs will allow you to link program outcomes to program activities.
- If you want to have more certainty, try to use a pre- and post-test design.
- If you want to be more confident still, try to use a comparison group or control group, though it will be more costly.
- If you have the resources, hire an evaluator to help determine which design will maximize your program’s resources and answer your team’s evaluation questions with the greatest degree of certainty.
- Disseminate your findings and share lessons learned!

Exercise: Evaluation Plan

In your groups: Using your M&E plan project, begin thinking about the evaluation plan for your program:

- Identify one or two program evaluation questions for each level of evaluation (process, outcome, impact).
- Suggest the type of design you would like to use to answer those questions, considering the type of data you’ll need to collect and the resources/timeline of the project.