Sequence Labeling

IP notice: slides from Dan Jurafsky

Outline

- Sequence Labeling
- Markov Chains
- Hidden Markov Models
- Two Algorithms for HMMs
  - The Forward Algorithm
  - The Viterbi Algorithm
- Applications:
  - The Ice Cream Task
  - Part of Speech Tagging

Sequence Labeling

- A classifier is enough to handle independent problems
  - E.g. text classification, image classification
- The result for each input is independent of the other inputs: $y = C(x)$
- When dealing with sequences of inputs, in either space or time, a sequence labeler is needed that takes previous or later inputs into account: $y_1, y_2, \dots, y_n = C(x_1, x_2, \dots, x_n)$
  - E.g. POS tagging, NER tagging, genomic sequences, speech
- A trivial solution might be to concatenate the inputs: $y_1, y_2, \dots, y_n = C([x_1, x_2, \dots, x_n])$
- However, sequences may vary in length

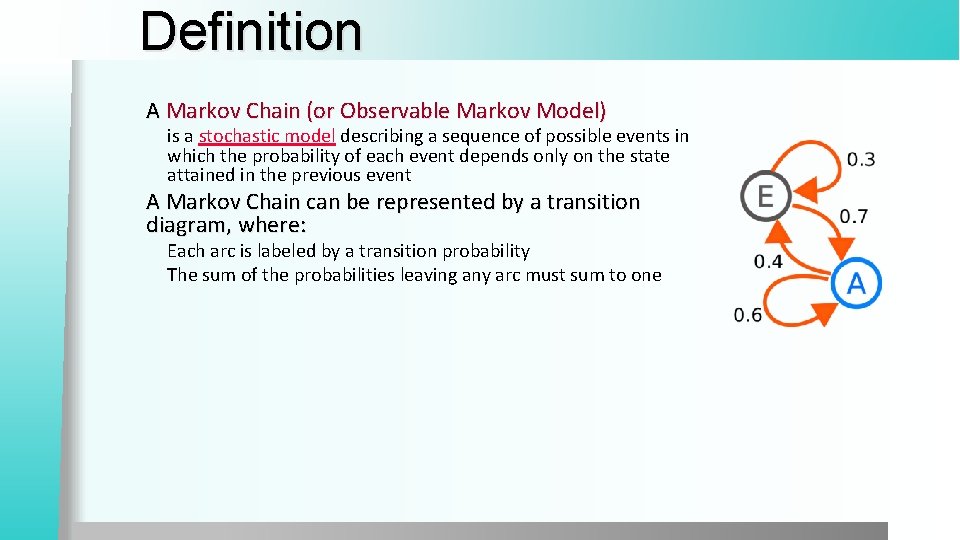

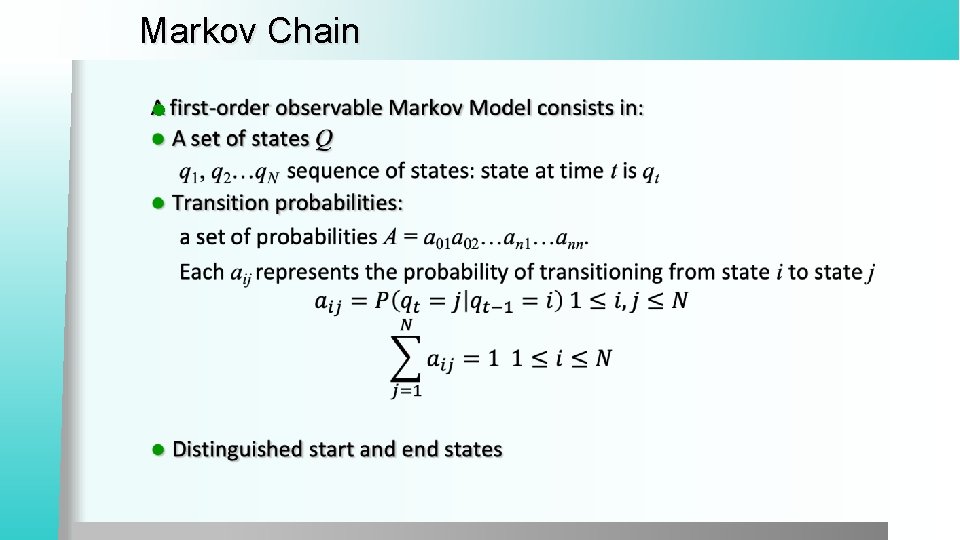

Definition

- A Markov Chain (or Observable Markov Model) is a stochastic model describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event
- A Markov Chain can be represented by a transition diagram, where:
  - Each arc is labeled with a transition probability
  - The probabilities on the arcs leaving any state must sum to one

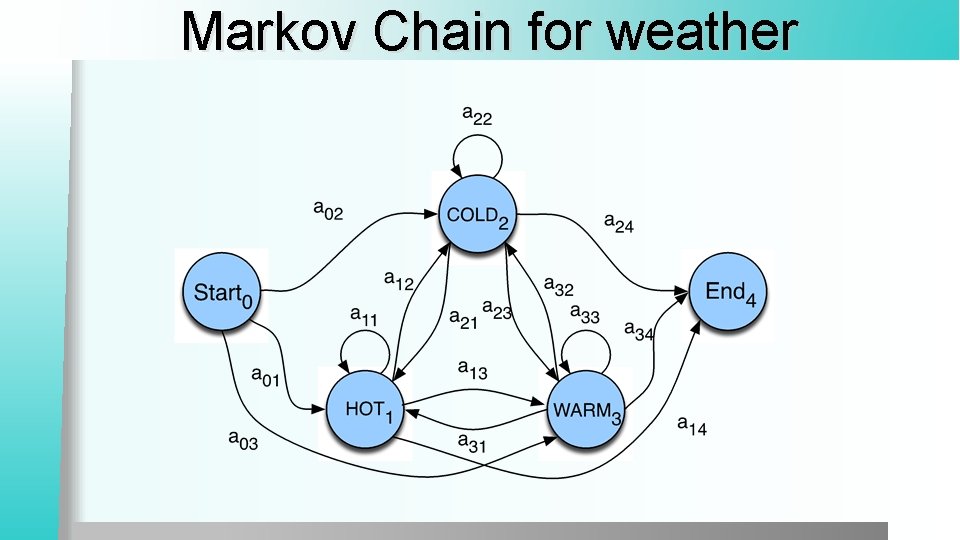

Markov Chain for weather

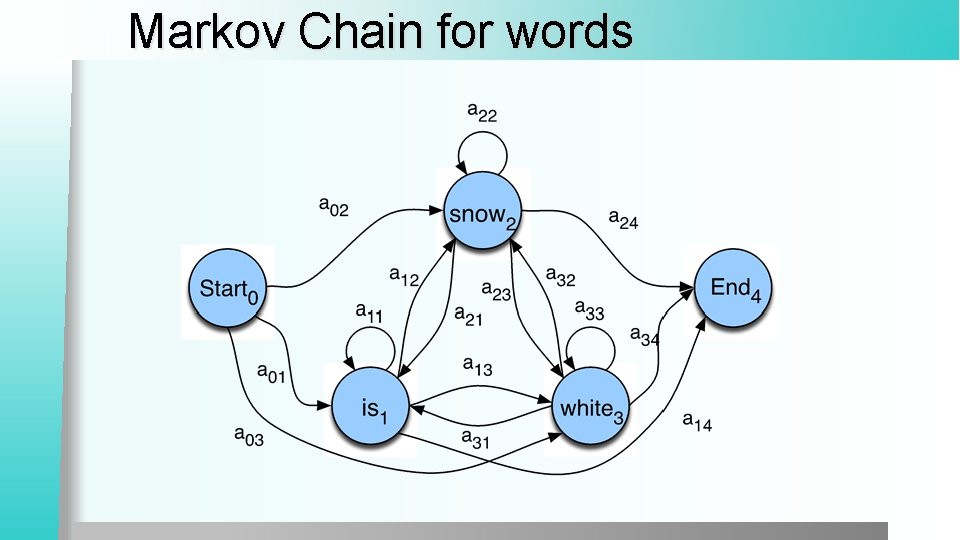

Markov Chain for words

Markov Chain

- Markov Assumption: the current state depends only on the previous state
- $P(q_i \mid q_1 \dots q_{i-1}) = P(q_i \mid q_{i-1})$

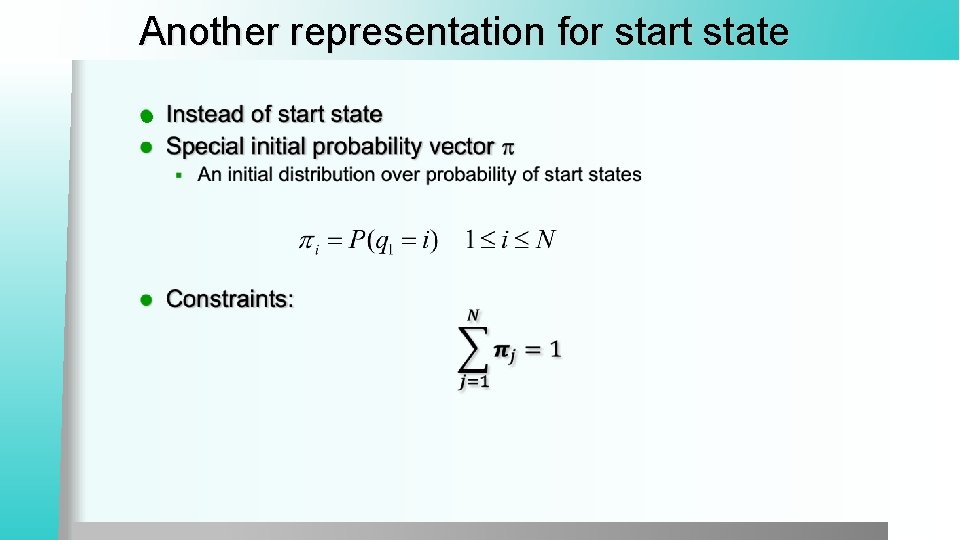

Another representation for start state

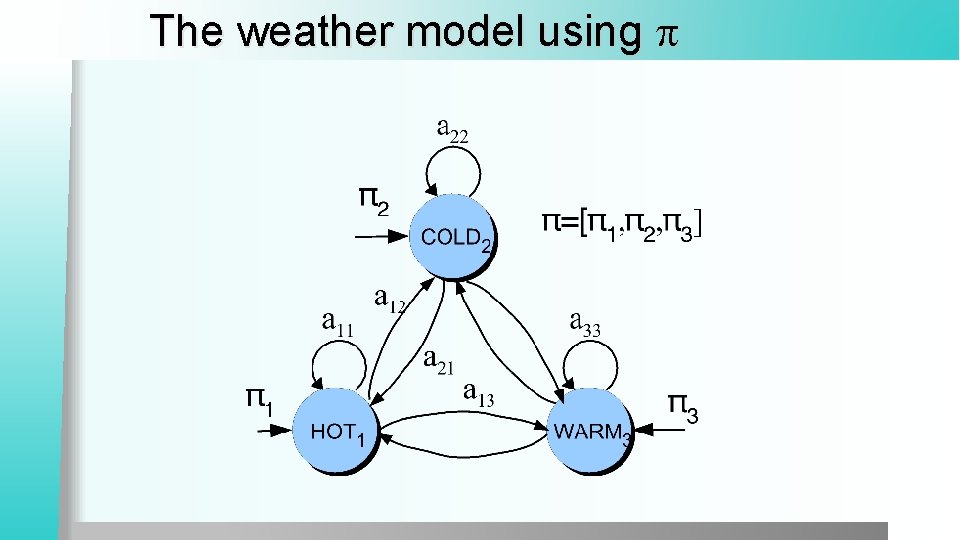

The weather model using π

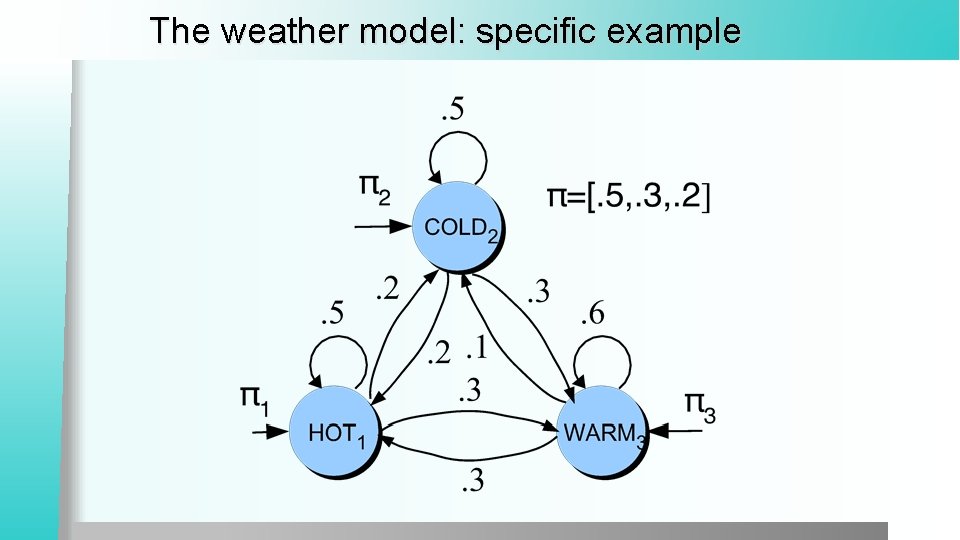

The weather model: specific example

Markov chain for weather

- What is the probability of 4 consecutive warm days?
- The sequence is warm-warm-warm-warm
- i.e., the state sequence is 3-3-3-3
- $P(3, 3, 3, 3) = \pi_3 \, a_{33} \, a_{33} \, a_{33} = 0.2 \cdot (0.6)^3 = 0.0432$

How about?

- Hot hot hot
- Cold hot cold hot
- What does the difference in these probabilities tell you about the real-world weather information encoded in the figure?
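The sequence probabilities asked about above can be checked with a few lines of Python. This is a minimal sketch, not the slides' own code: only `pi["warm"] = 0.2` and `A["warm"]["warm"] = 0.6` come from the slide text, and every other number is an illustrative assumption standing in for the values shown in the figure.

```python
# Initial probabilities and transition table for the weather chain.
# Only pi["warm"] = 0.2 and A["warm"]["warm"] = 0.6 come from the slide text;
# all other numbers are illustrative assumptions.
pi = {"hot": 0.1, "cold": 0.7, "warm": 0.2}
A = {
    "hot":  {"hot": 0.6, "cold": 0.1, "warm": 0.3},
    "cold": {"hot": 0.1, "cold": 0.8, "warm": 0.1},
    "warm": {"hot": 0.3, "cold": 0.1, "warm": 0.6},
}


def sequence_probability(states):
    """Return P(q_1 ... q_n) = pi[q_1] * product of A[q_{i-1}][q_i]."""
    prob = pi[states[0]]
    for prev, cur in zip(states, states[1:]):
        prob *= A[prev][cur]
    return prob


# Four consecutive warm days: 0.2 * 0.6 ** 3, roughly 0.0432.
print(sequence_probability(["warm"] * 4))
print(sequence_probability(["cold", "hot", "cold", "hot"]))
```

Swapping in the values from the figure lets the two "How about?" sequences be compared directly.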

Fun with Markov Chains

- Markov Chains “Explained Visually”: http://setosa.io/ev/markov-chains
- Snakes and Ladders: http://datagenetics.com/blog/november12011/
- Candyland: http://www.datagenetics.com/blog/december12011/
- Yahtzee: http://www.datagenetics.com/blog/january42012/
- Chess pieces returning home and K-pop vs. ska: https://www.youtube.com/watch?v=63HHmjlh794

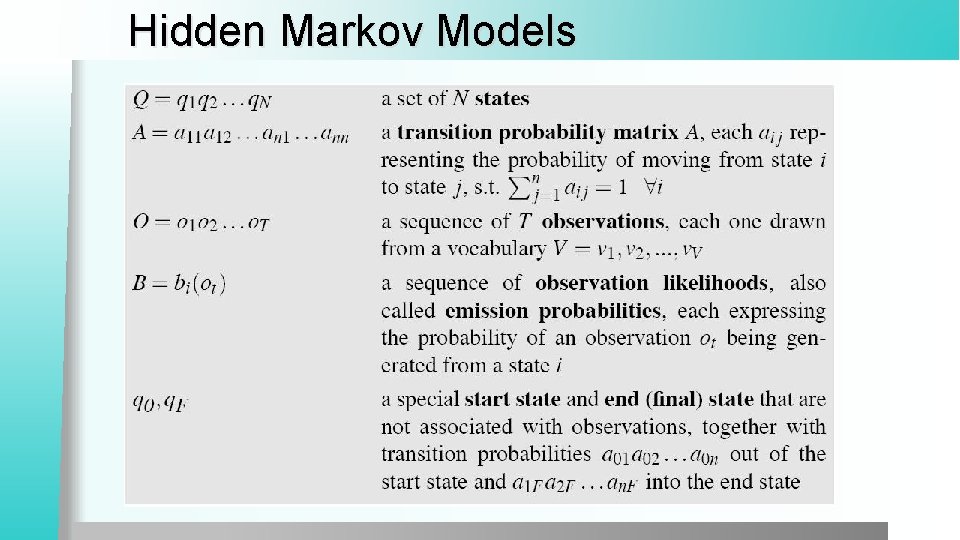

Hidden Markov Models

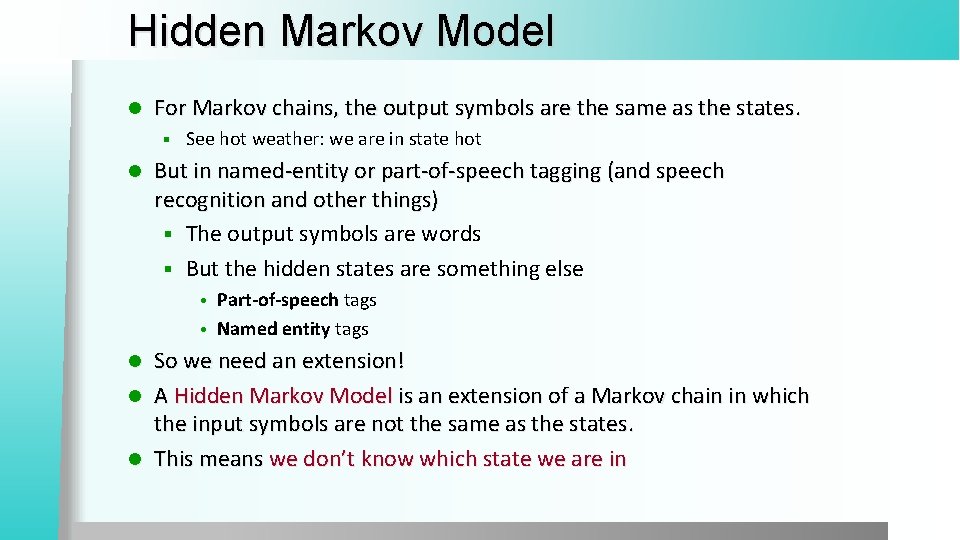

Hidden Markov Model

- For Markov chains, the output symbols are the same as the states
  - See hot weather: we are in state hot
- But in named-entity or part-of-speech tagging (and speech recognition and other things)
  - The output symbols are words
  - But the hidden states are something else
    - Part-of-speech tags
    - Named entity tags
- So we need an extension!
- A Hidden Markov Model is an extension of a Markov chain in which the output symbols are not the same as the states
- This means we don’t know which state we are in

Hidden Markov Models

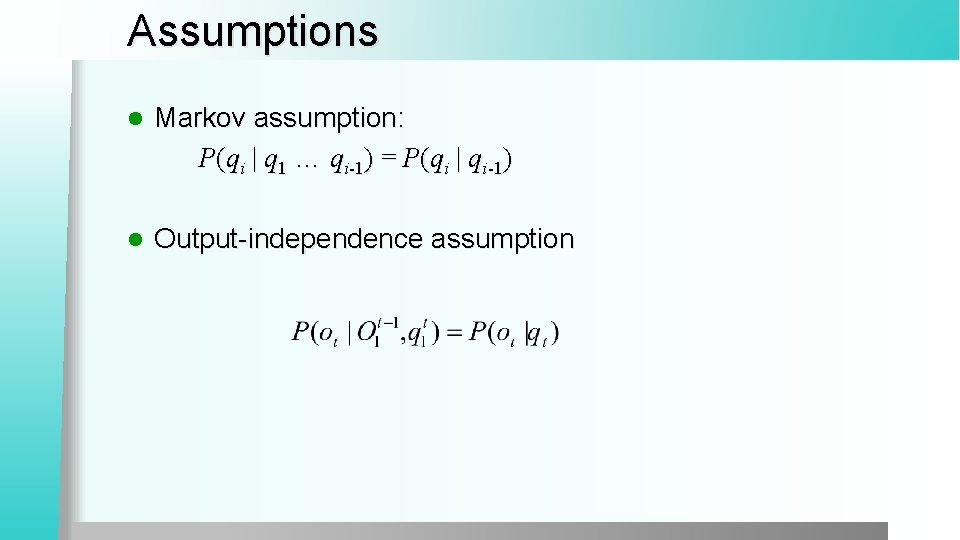

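Assumptions

- Markov assumption: $P(q_i \mid q_1 \dots q_{i-1}) = P(q_i \mid q_{i-1})$
- Output-independence assumption: each observation depends only on the state that produced it, $P(o_i \mid q_1 \dots q_T, o_1 \dots o_T) = P(o_i \mid q_i)$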

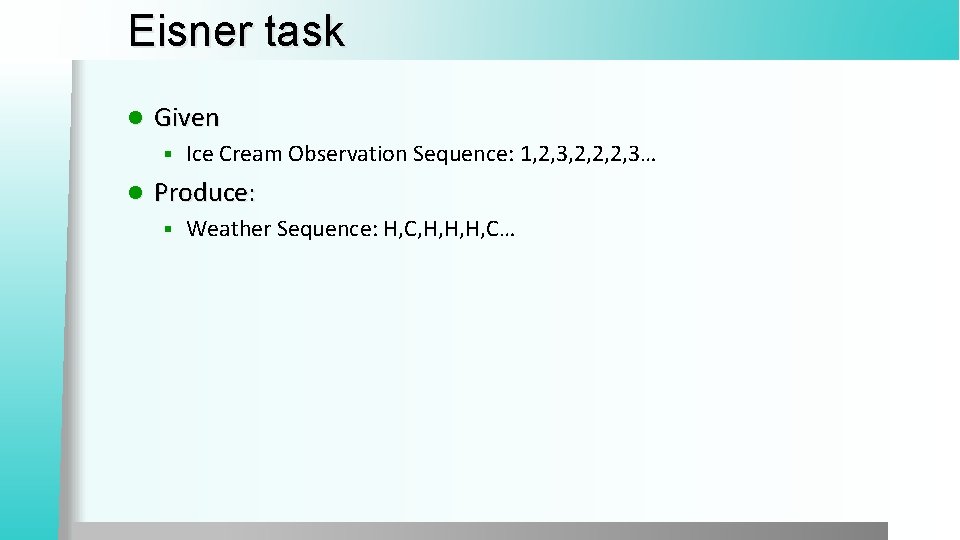

Toy Problem

- You are a climatologist in the year 2799
- Studying global warming
- You can’t find any records of the weather in Baltimore, MD for the summer of 2008
- But you find Jason Eisner’s diary
- Which lists how many ice creams Jason ate every day that summer
- Our job: figure out how hot it was

Eisner task

- Given
  - Ice Cream Observation Sequence: 1, 2, 3, 2, 2, 2, 3…
- Produce:
  - Weather Sequence: H, C, H, H, H, C…

HMM for ice cream

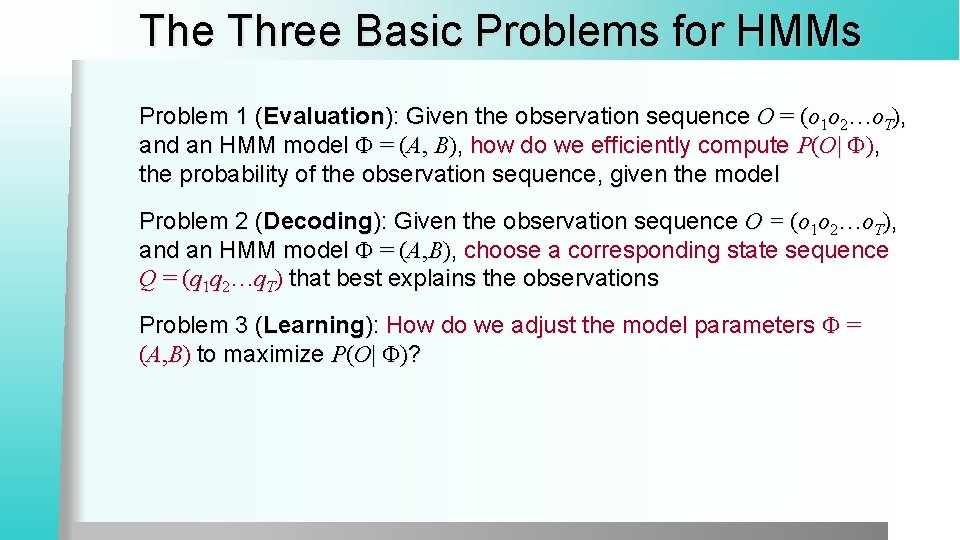

The Three Basic Problems for HMMs

- Problem 1 (Evaluation): Given the observation sequence $O = (o_1 o_2 \dots o_T)$ and an HMM model $\lambda = (A, B)$, how do we efficiently compute $P(O \mid \lambda)$, the probability of the observation sequence given the model?
- Problem 2 (Decoding): Given the observation sequence $O = (o_1 o_2 \dots o_T)$ and an HMM model $\lambda = (A, B)$, how do we choose a corresponding state sequence $Q = (q_1 q_2 \dots q_T)$ that best explains the observations?
- Problem 3 (Learning): How do we adjust the model parameters $\lambda = (A, B)$ to maximize $P(O \mid \lambda)$?

Problem 1: computing the observation likelihood

- Computing Likelihood: Given the observation sequence $O = (o_1 o_2 \dots o_T)$ and an HMM model $\lambda = (A, B)$, compute the likelihood $P(O \mid \lambda)$
- Given the following HMM:
- How likely is the sequence 3 1 3?

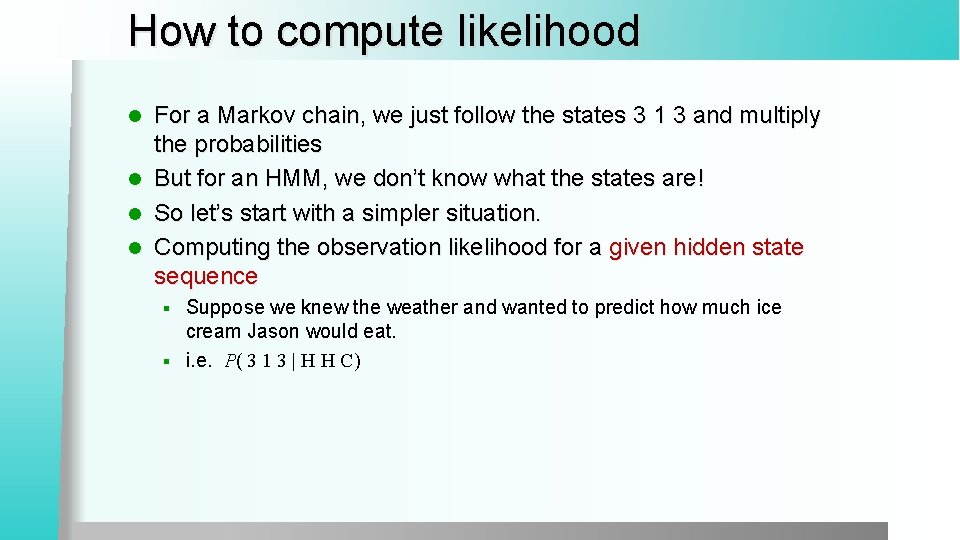

How to compute likelihood

- For a Markov chain, we just follow the states 3 1 3 and multiply the probabilities
- But for an HMM, we don’t know what the states are!
- So let’s start with a simpler situation.
- Computing the observation likelihood for a given hidden state sequence
  - Suppose we knew the weather and wanted to predict how much ice cream Jason would eat.
  - i.e. P(3 1 3 | H H C)

Computing likelihood of 3 1 3 given hidden state sequence

Computing joint probability of observation and state sequence

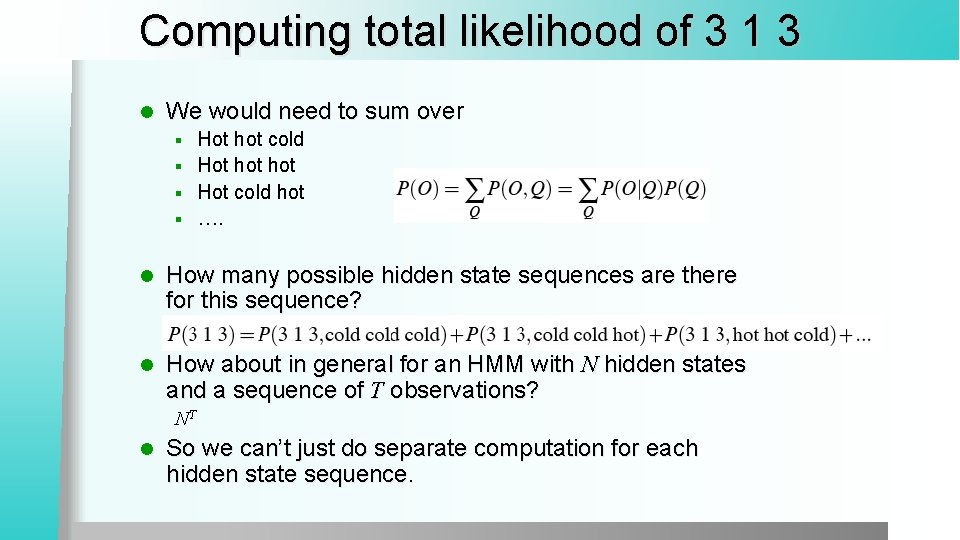
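The two computations named in the slide titles above can be written out as follows. This is a sketch in standard HMM notation rather than a copy of the slide figures; $q_0$ stands for the start state and is an assumption about how the initial transition is written.

```latex
% Likelihood of the observations given a known hidden state sequence
% (uses the output-independence assumption):
P(O \mid Q) = \prod_{i=1}^{T} P(o_i \mid q_i)
% e.g. P(3\ 1\ 3 \mid H\ H\ C) = P(3 \mid H) \cdot P(1 \mid H) \cdot P(3 \mid C)

% Joint probability of observations and state sequence
% (adds the Markov assumption for the state sequence):
P(O, Q) = P(O \mid Q) \, P(Q)
        = \prod_{i=1}^{T} P(o_i \mid q_i) \times \prod_{i=1}^{T} P(q_i \mid q_{i-1})
```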

Computing total likelihood of 3 1 3

- We would need to sum over
  - Hot hot cold
  - Hot hot hot
  - Hot cold hot
  - …
- How many possible hidden state sequences are there for this sequence?
- How about in general for an HMM with N hidden states and a sequence of T observations? $N^T$
- So we can’t just do a separate computation for each hidden state sequence.

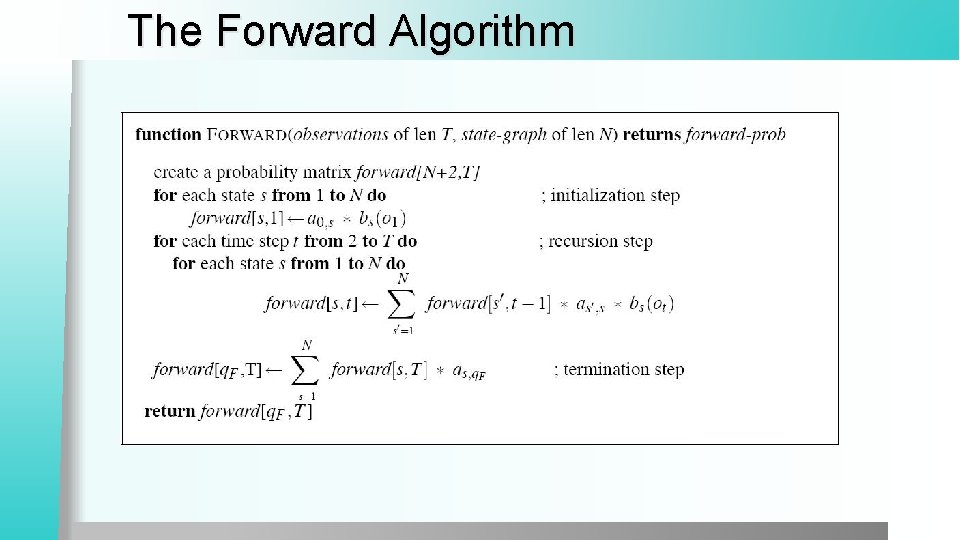
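To make the $N^T$ blow-up concrete, the sum over every hidden state sequence can be written as a brute-force enumeration. This is a minimal sketch: the names `START`, `TRANS` and `EMIT` and all the probabilities in them are illustrative assumptions, since the slides keep the real ice-cream HMM values in a figure.

```python
from itertools import product

STATES = ["H", "C"]
# All probabilities below are illustrative assumptions, not the slides' values.
START = {"H": 0.8, "C": 0.2}
TRANS = {"H": {"H": 0.7, "C": 0.3}, "C": {"H": 0.4, "C": 0.6}}
EMIT = {"H": {1: 0.2, 2: 0.4, 3: 0.4}, "C": {1: 0.5, 2: 0.4, 3: 0.1}}


def joint(obs, path):
    """P(O, Q) for one observation sequence and one hidden state sequence."""
    p = START[path[0]] * EMIT[path[0]][obs[0]]
    for t in range(1, len(obs)):
        p *= TRANS[path[t - 1]][path[t]] * EMIT[path[t]][obs[t]]
    return p


def brute_force_likelihood(obs):
    """Sum P(O, Q) over all N**T hidden state sequences Q."""
    paths = list(product(STATES, repeat=len(obs)))
    return sum(joint(obs, path) for path in paths), len(paths)


likelihood, n_paths = brute_force_likelihood([3, 1, 3])
print(n_paths)  # 2 states, 3 observations: 2 ** 3 = 8 sequences
print(likelihood)
```

With 2 states the enumeration is harmless, but the number of paths grows exponentially in the sequence length.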

Instead: the Forward algorithm

- A kind of dynamic programming algorithm
  - Uses a table to store intermediate values
- Idea:
  - Compute the likelihood of the observation sequence
  - By summing over all possible hidden state sequences
  - But doing this efficiently
    - By folding all the sequences into a single trellis

The forward algorithm

- The goal of the forward algorithm is to compute $P(o_1, o_2 \dots o_T, q_T = q_F \mid \lambda)$
- We’ll do this by recursion

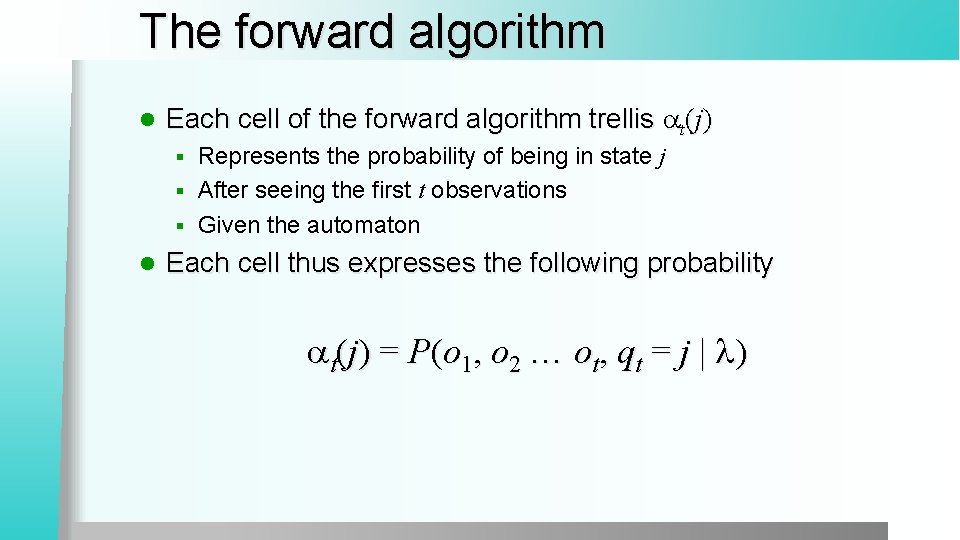

The forward algorithm

- Each cell of the forward algorithm trellis, $\alpha_t(j)$,
  - Represents the probability of being in state j
  - After seeing the first t observations
  - Given the automaton
- Each cell thus expresses the following probability: $\alpha_t(j) = P(o_1, o_2 \dots o_t, q_t = j \mid \lambda)$

The Forward Recursion

The Forward Trellis

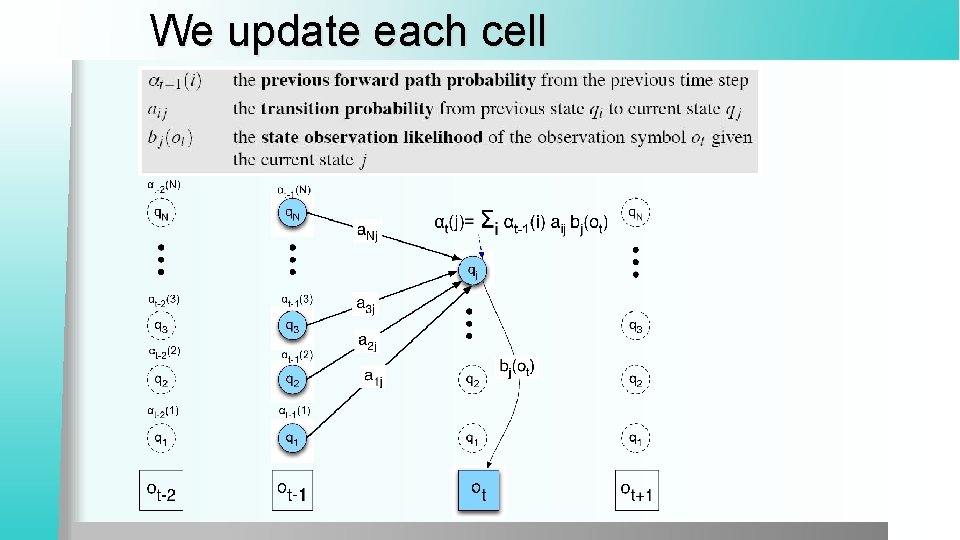

We update each cell

The Forward Algorithm

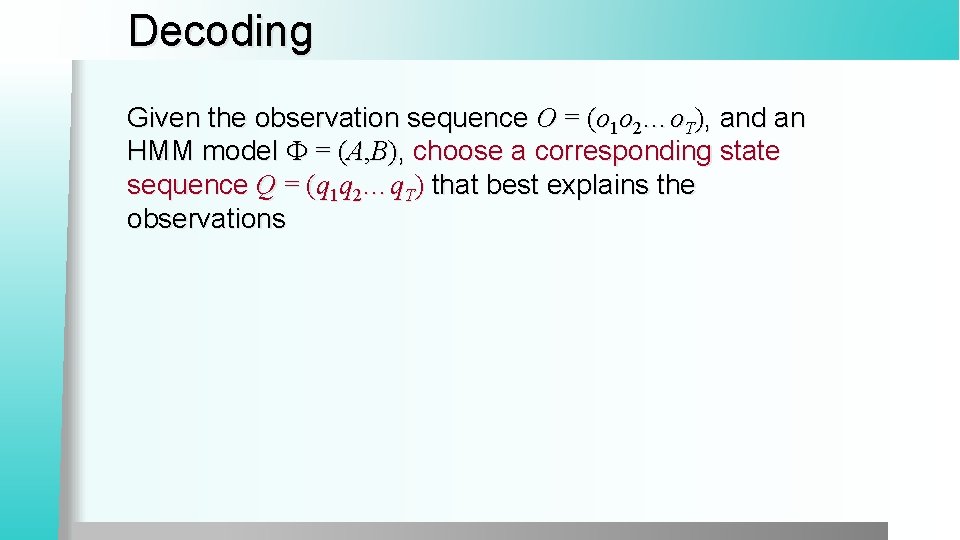
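The trellis update can be sketched in Python as follows. This is a hedged illustration, not the slides' pseudocode: it uses an initial distribution `START` in place of explicit start and final states, so it returns the sum of the last trellis column rather than a transition into $q_F$, and all probabilities are illustrative assumptions.

```python
STATES = ["H", "C"]
# All probabilities below are illustrative assumptions, not the slides' values.
START = {"H": 0.8, "C": 0.2}
TRANS = {"H": {"H": 0.7, "C": 0.3}, "C": {"H": 0.4, "C": 0.6}}
EMIT = {"H": {1: 0.2, 2: 0.4, 3: 0.4}, "C": {1: 0.5, 2: 0.4, 3: 0.1}}


def forward(obs):
    """Return (P(O | model), trellis), where trellis[t][j] = alpha_{t+1}(j)."""
    # Initialisation: alpha_1(j) = pi_j * b_j(o_1)
    alpha = [{j: START[j] * EMIT[j][obs[0]] for j in STATES}]
    # Recursion: alpha_t(j) = sum_i alpha_{t-1}(i) * a_ij * b_j(o_t)
    for o in obs[1:]:
        prev = alpha[-1]
        alpha.append({
            j: sum(prev[i] * TRANS[i][j] for i in STATES) * EMIT[j][o]
            for j in STATES
        })
    # Termination: sum over the states in the last column
    return sum(alpha[-1].values()), alpha


likelihood, trellis = forward([3, 1, 3])
print(likelihood)
```

Each column costs on the order of N² multiplications, so the whole table takes about N²·T operations instead of the $N^T$ paths of the brute-force sum.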

Decoding

Given the observation sequence $O = (o_1 o_2 \dots o_T)$ and an HMM model $\lambda = (A, B)$, choose a corresponding state sequence $Q = (q_1 q_2 \dots q_T)$ that best explains the observations

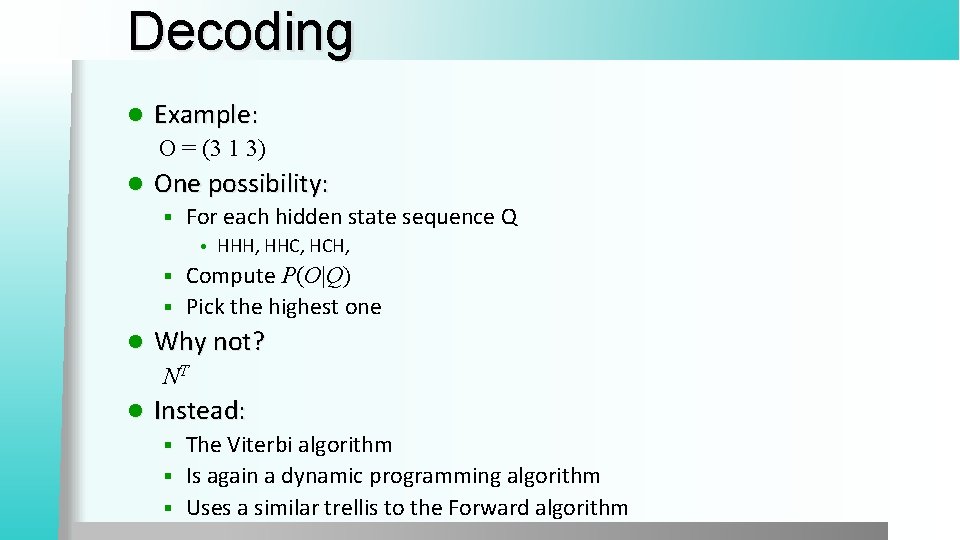

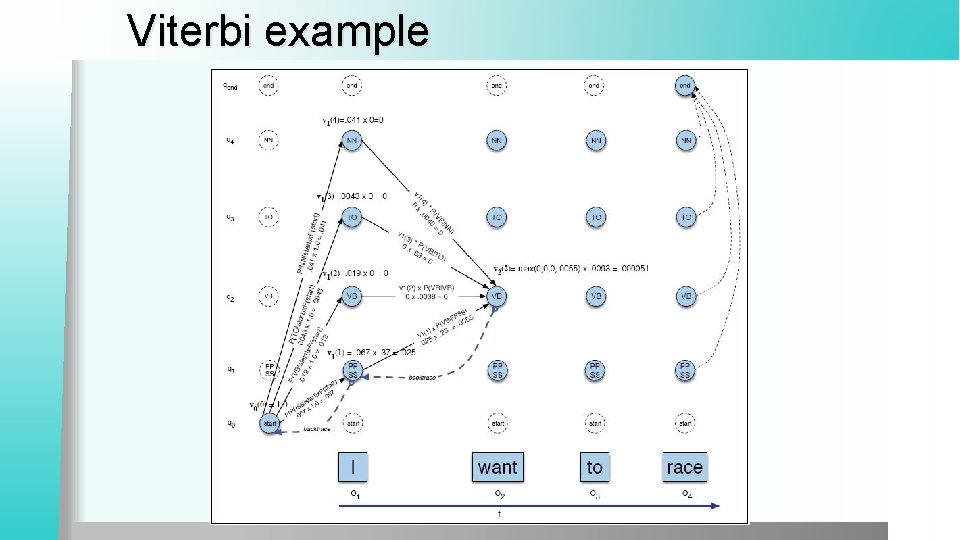

Decoding

- Example: O = (3 1 3)
- One possibility:
  - For each hidden state sequence Q (HHH, HHC, HCH, …)
  - Compute P(O|Q)
  - Pick the highest one
- Why not? There are $N^T$ sequences
- Instead: the Viterbi algorithm
  - Is again a dynamic programming algorithm
  - Uses a similar trellis to the Forward algorithm

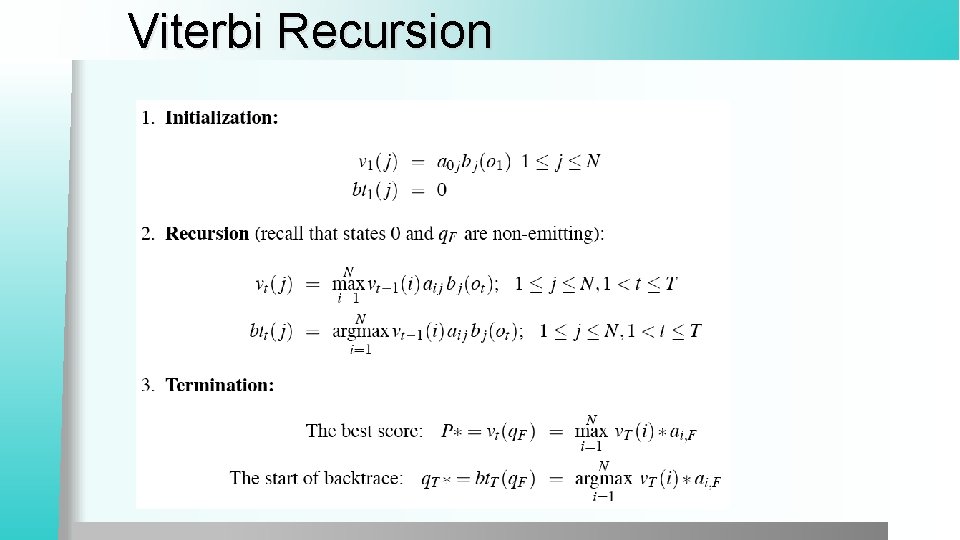

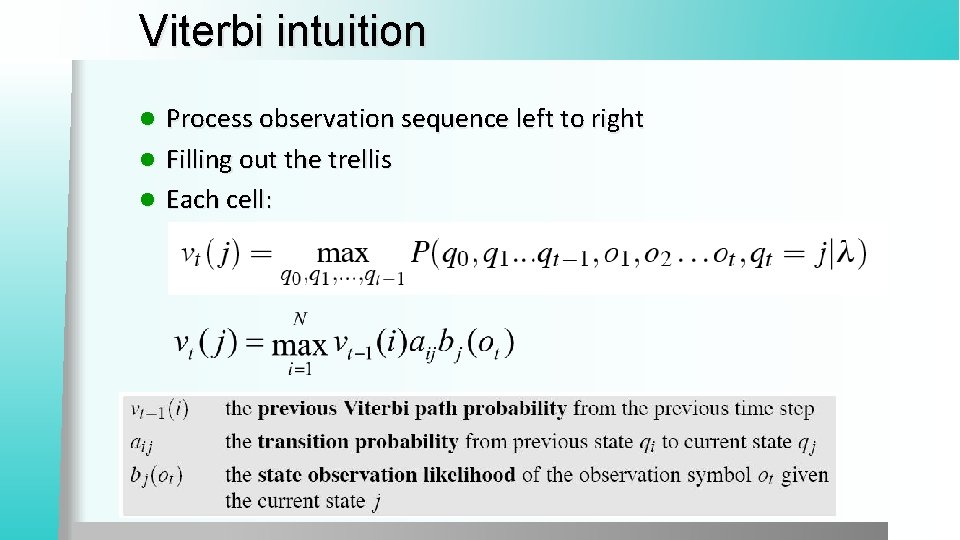

Viterbi intuition

- We want to compute the joint probability of the observation sequence together with the best state sequence

Viterbi Recursion

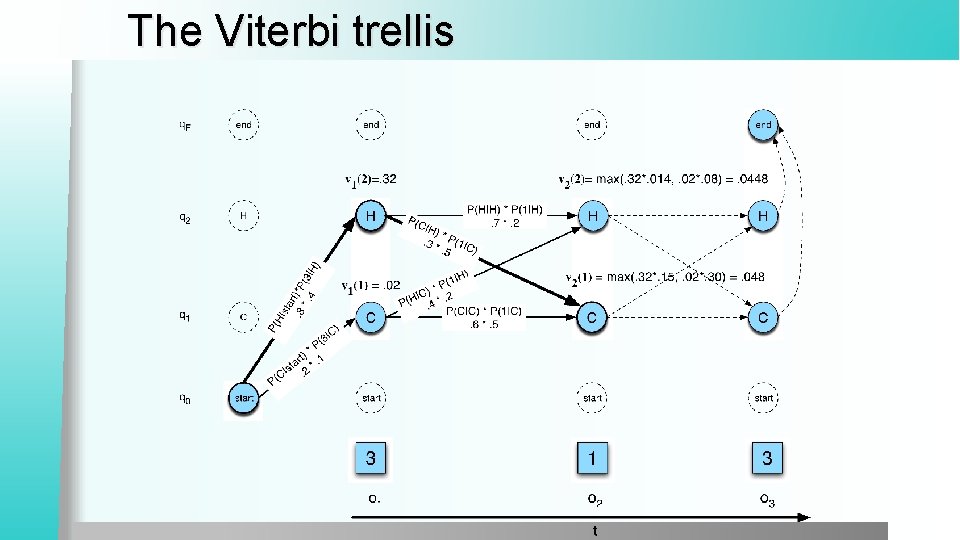
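In text form, the Viterbi recursion is usually written as below. This is a sketch in standard notation, which may differ in detail from the slide figure: $\pi_j$ is the initial probability of state $j$, $a_{ij}$ a transition probability and $b_j(o_t)$ an emission probability.

```latex
% Initialisation
v_1(j) = \pi_j \, b_j(o_1)

% Recursion: same shape as the forward recursion, with max in place of sum
v_t(j) = \max_{i} \; v_{t-1}(i) \, a_{ij} \, b_j(o_t)

% Backpointer: remember which previous state achieved the max
bt_t(j) = \operatorname*{argmax}_{i} \; v_{t-1}(i) \, a_{ij} \, b_j(o_t)
```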

The Viterbi trellis

Viterbi intuition

- Process the observation sequence left to right
- Filling out the trellis
- Each cell:

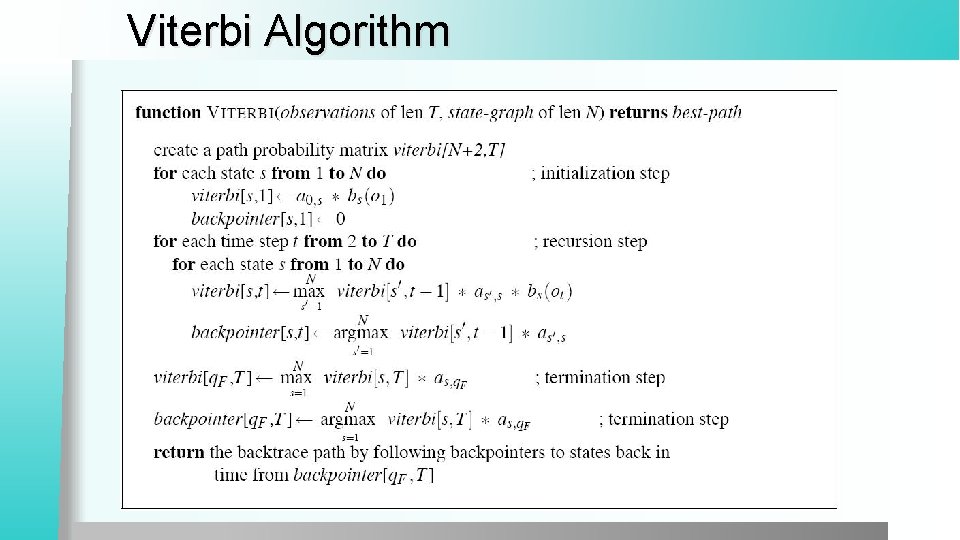

Viterbi Algorithm

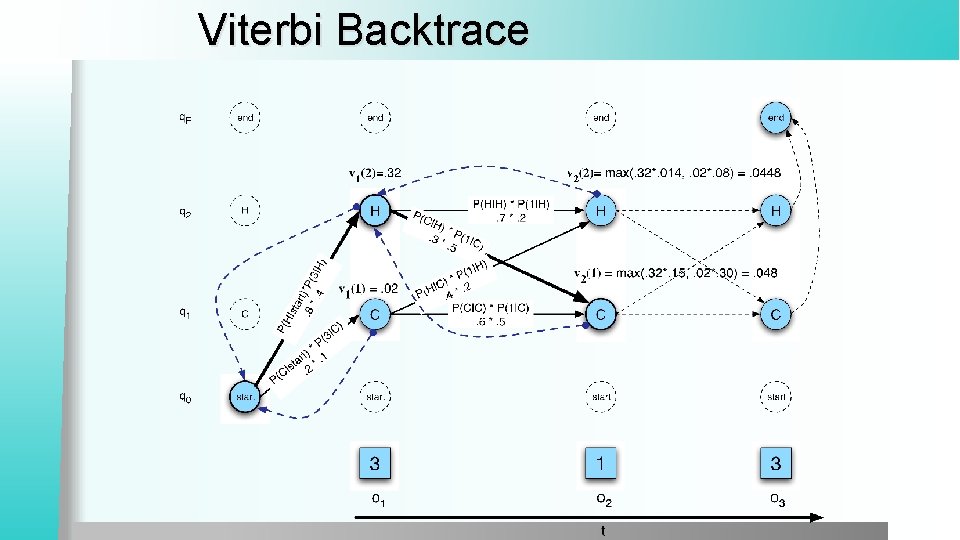

Viterbi Backtrace
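A compact Python sketch of Viterbi decoding with a backtrace is shown below. It is an illustration under assumptions rather than the slides' pseudocode: it uses an initial distribution `START` in place of explicit start and final states, and all probabilities are illustrative.

```python
STATES = ["H", "C"]
# All probabilities below are illustrative assumptions, not the slides' values.
START = {"H": 0.8, "C": 0.2}
TRANS = {"H": {"H": 0.7, "C": 0.3}, "C": {"H": 0.4, "C": 0.6}}
EMIT = {"H": {1: 0.2, 2: 0.4, 3: 0.4}, "C": {1: 0.5, 2: 0.4, 3: 0.1}}


def viterbi(obs):
    """Return (best state sequence, its joint probability with obs)."""
    # Initialisation: v_1(j) = pi_j * b_j(o_1)
    v = [{j: START[j] * EMIT[j][obs[0]] for j in STATES}]
    backptr = [{}]
    for o in obs[1:]:
        prev = v[-1]
        scores, pointers = {}, {}
        for j in STATES:
            # Best predecessor for state j at this time step
            best_i = max(STATES, key=lambda i: prev[i] * TRANS[i][j])
            scores[j] = prev[best_i] * TRANS[best_i][j] * EMIT[j][o]
            pointers[j] = best_i
        v.append(scores)
        backptr.append(pointers)
    # Backtrace: start from the best final state and follow the pointers
    last = max(STATES, key=lambda j: v[-1][j])
    path = [last]
    for pointers in reversed(backptr[1:]):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, v[-1][last]


best_path, best_prob = viterbi([3, 1, 3])
print(best_path, best_prob)
```

The only differences from the forward computation are the `max` in place of the sum and the stored backpointers, which make it possible to recover the path itself and not just its probability.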

Training an HMM

- Baum-Welch unsupervised learning algorithm (Expectation Maximization)
- ML estimates of the probabilities

Hidden Markov Models for Part of Speech Tagging

Part of speech tagging

- 8 (ish) traditional English parts of speech
  - Noun, verb, adjective, preposition, adverb, article, interjection, pronoun, conjunction, etc.
  - This idea has been around for over 2000 years (Dionysius Thrax of Alexandria, c. 100 B.C.)
  - Called: parts of speech, lexical categories, word classes, morphological classes, lexical tags, POS
  - We’ll use POS most frequently
  - Assuming that you know what these are

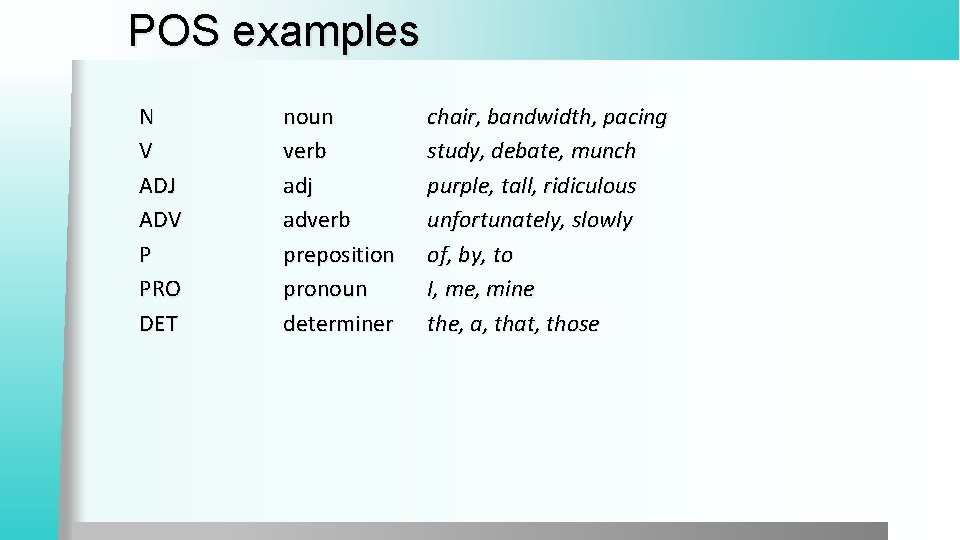

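POS examples

| Tag | Part of speech | Examples |
| --- | --- | --- |
| N | noun | chair, bandwidth, pacing |
| V | verb | study, debate, munch |
| ADJ | adjective | purple, tall, ridiculous |
| ADV | adverb | unfortunately, slowly |
| P | preposition | of, by, to |
| PRO | pronoun | I, me, mine |
| DET | determiner | the, a, that, those |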

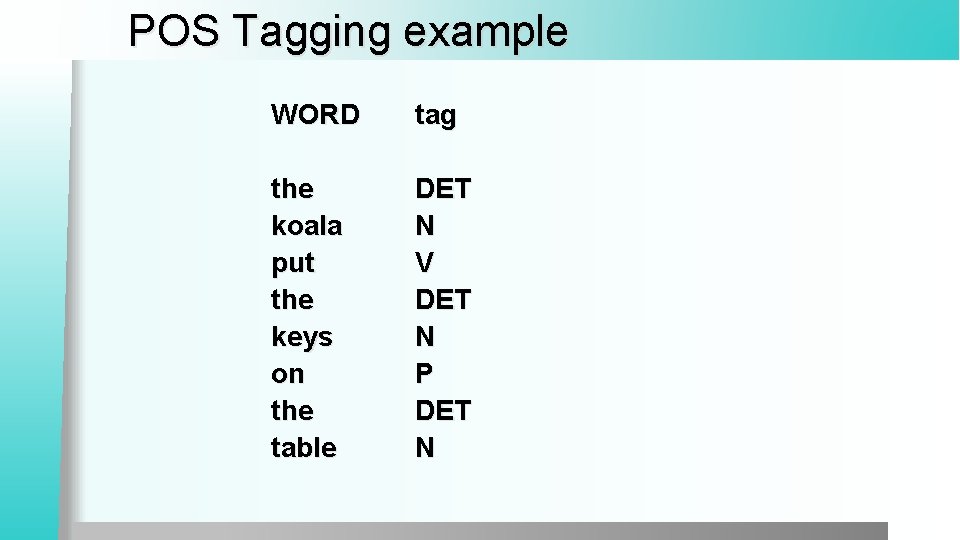

POS Tagging example

| WORD | the | koala | put | the | keys | on | the | table |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| tag | DET | N | V | DET | N | P | DET | N |

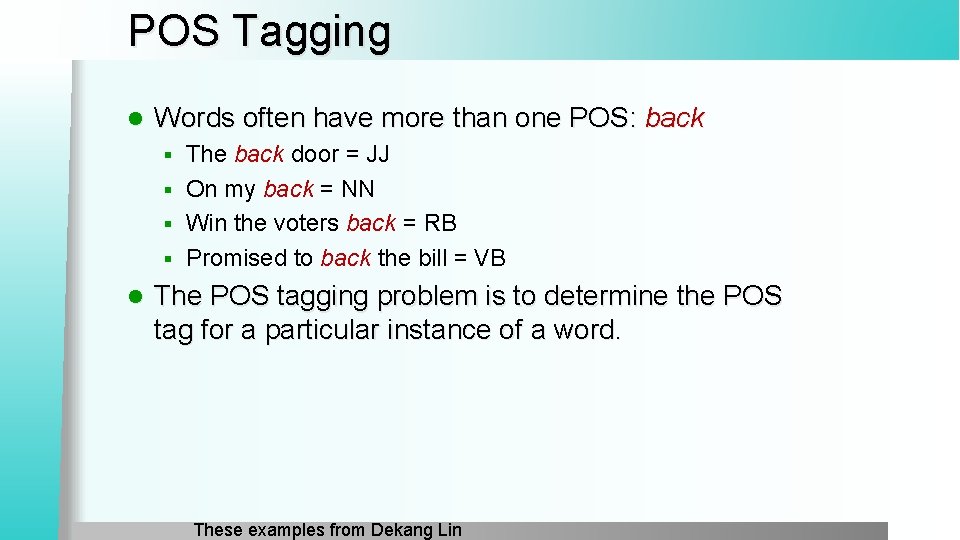

POS Tagging

- Words often have more than one POS: back
  - The back door = JJ
  - On my back = NN
  - Win the voters back = RB
  - Promised to back the bill = VB
- The POS tagging problem is to determine the POS tag for a particular instance of a word.

These examples are from Dekang Lin

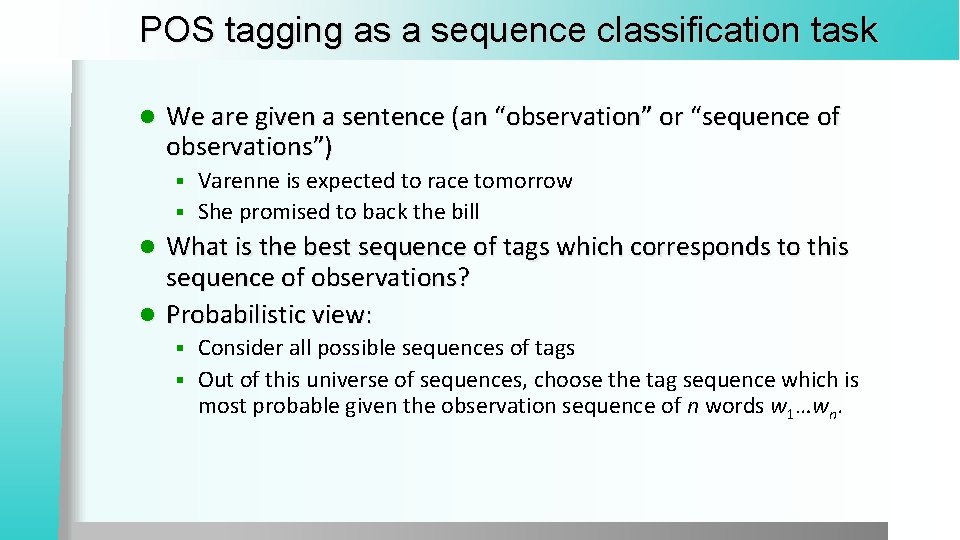

POS tagging as a sequence classification task

- We are given a sentence (an “observation” or “sequence of observations”)
  - Varenne is expected to race tomorrow
  - She promised to back the bill
- What is the best sequence of tags which corresponds to this sequence of observations?
- Probabilistic view:
  - Consider all possible sequences of tags
  - Out of this universe of sequences, choose the tag sequence which is most probable given the observation sequence of n words $w_1 \dots w_n$.

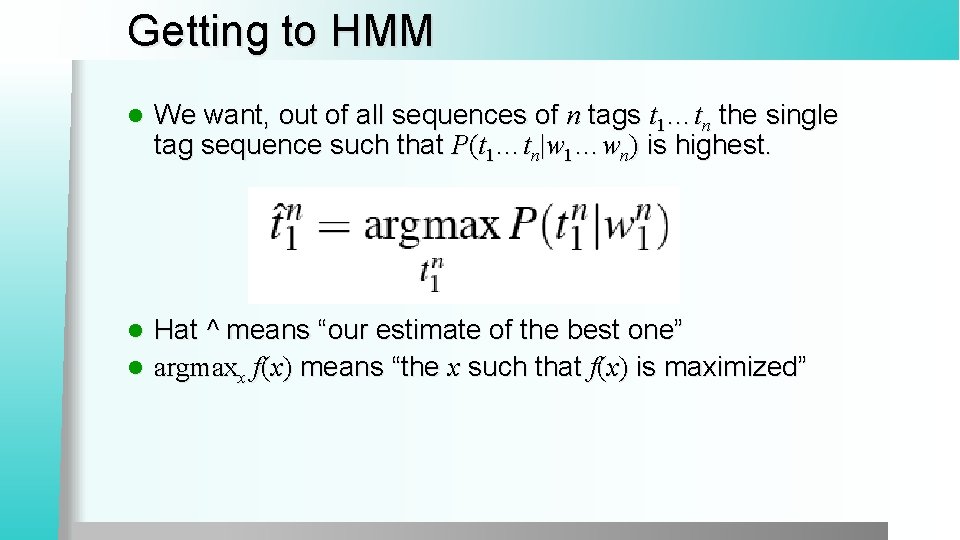

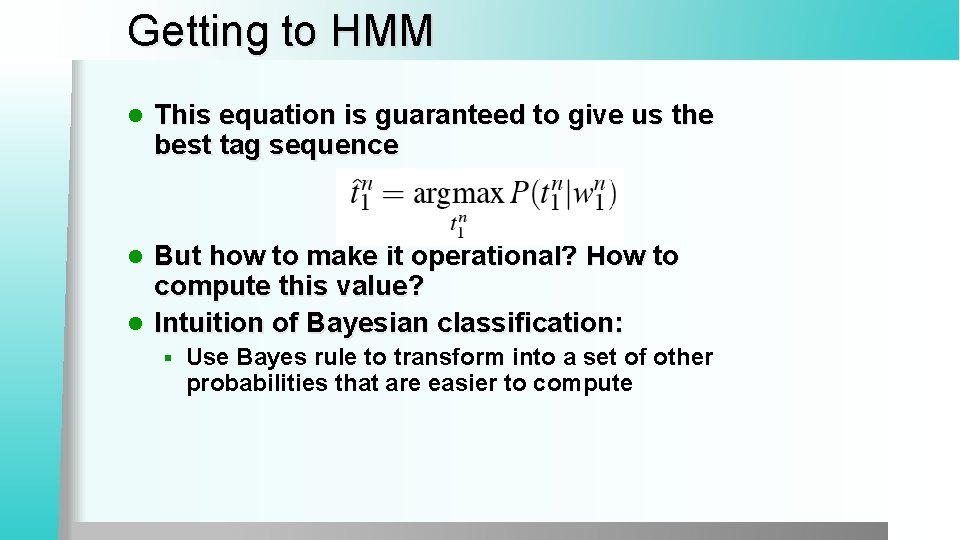

Getting to HMM

- We want, out of all sequences of n tags $t_1 \dots t_n$, the single tag sequence such that $P(t_1 \dots t_n \mid w_1 \dots w_n)$ is highest.
- Hat ^ means “our estimate of the best one”
- $\operatorname{argmax}_x f(x)$ means “the x such that f(x) is maximized”

Getting to HMM

- This equation is guaranteed to give us the best tag sequence
- But how to make it operational? How to compute this value?
- Intuition of Bayesian classification:
  - Use Bayes rule to transform it into a set of other probabilities that are easier to compute

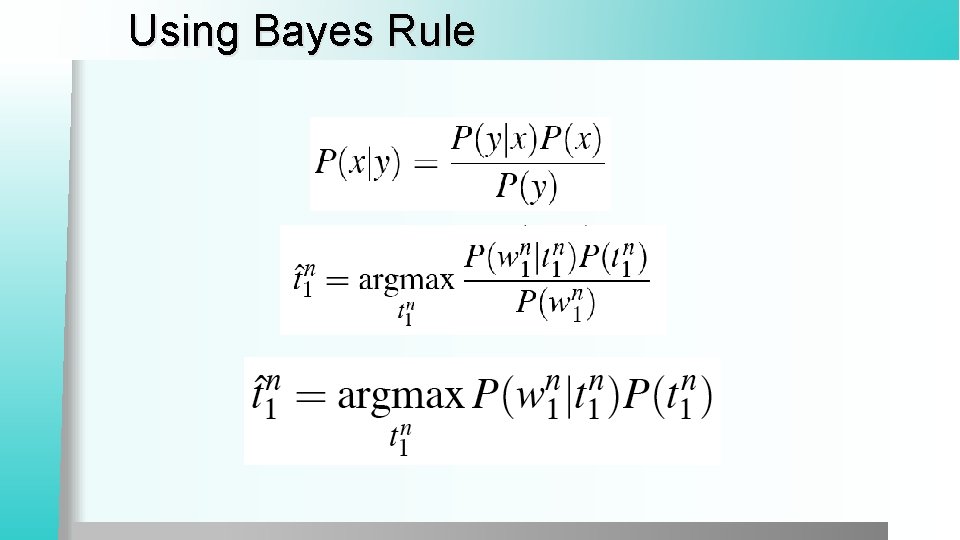

Using Bayes Rule

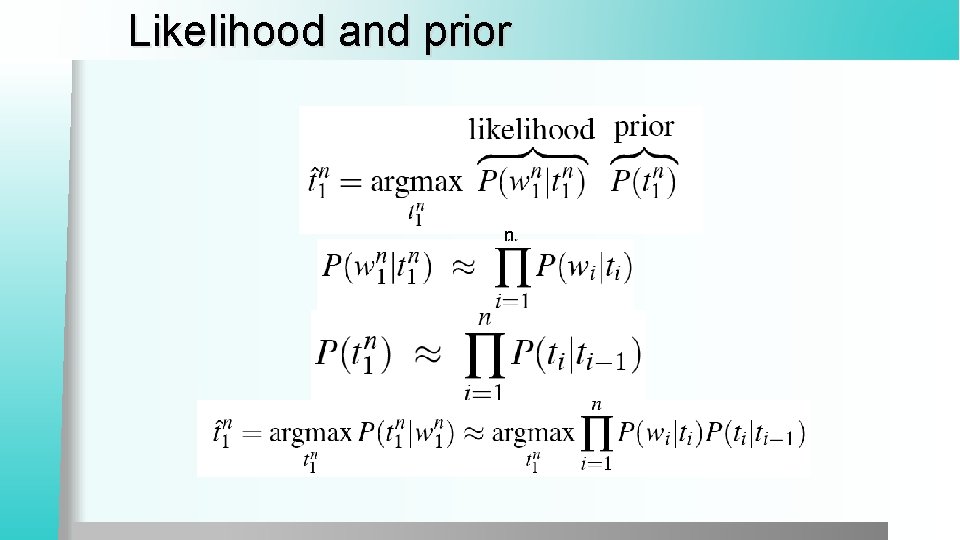

Likelihood and prior

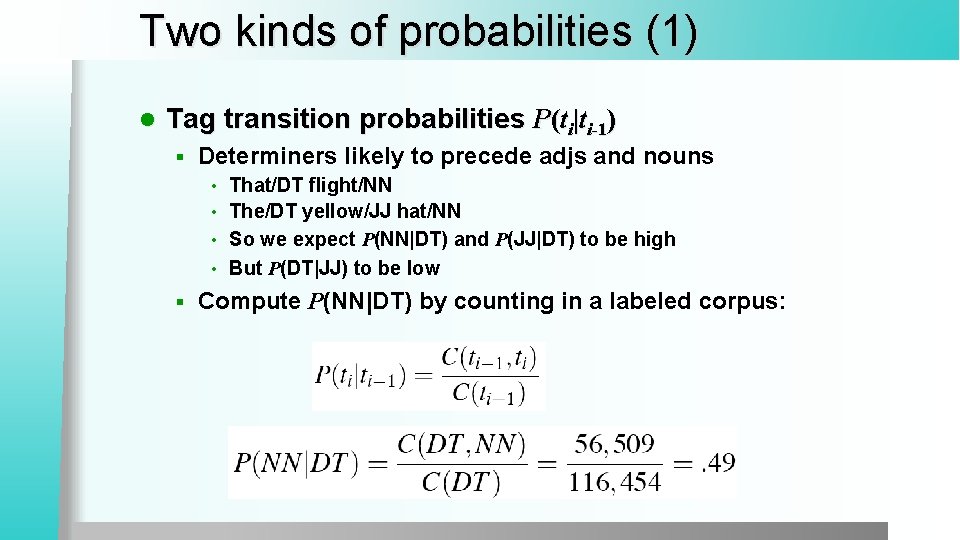
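Written out in text, the derivation from the argmax to the HMM tagging equation usually runs as follows. This is a sketch in standard notation and may differ in detail from the slide figures; $t_1^n$ abbreviates $t_1 \dots t_n$ and $w_1^n$ abbreviates $w_1 \dots w_n$.

```latex
% Goal: the most probable tag sequence given the words
\hat{t}_1^n = \operatorname*{argmax}_{t_1^n} P(t_1^n \mid w_1^n)

% Bayes rule; the denominator P(w_1^n) is the same for every tag sequence
\hat{t}_1^n = \operatorname*{argmax}_{t_1^n} \frac{P(w_1^n \mid t_1^n) \, P(t_1^n)}{P(w_1^n)}
            = \operatorname*{argmax}_{t_1^n} \underbrace{P(w_1^n \mid t_1^n)}_{\text{likelihood}} \; \underbrace{P(t_1^n)}_{\text{prior}}

% HMM assumptions: each word depends only on its own tag,
% and each tag depends only on the previous tag
\hat{t}_1^n \approx \operatorname*{argmax}_{t_1^n} \prod_{i=1}^{n} P(w_i \mid t_i) \, P(t_i \mid t_{i-1})
```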

Two kinds of probabilities (1)

- Tag transition probabilities $P(t_i \mid t_{i-1})$
  - Determiners are likely to precede adjectives and nouns
    - That/DT flight/NN
    - The/DT yellow/JJ hat/NN
    - So we expect P(NN|DT) and P(JJ|DT) to be high
    - But P(DT|JJ) to be low
  - Compute P(NN|DT) by counting in a labeled corpus:

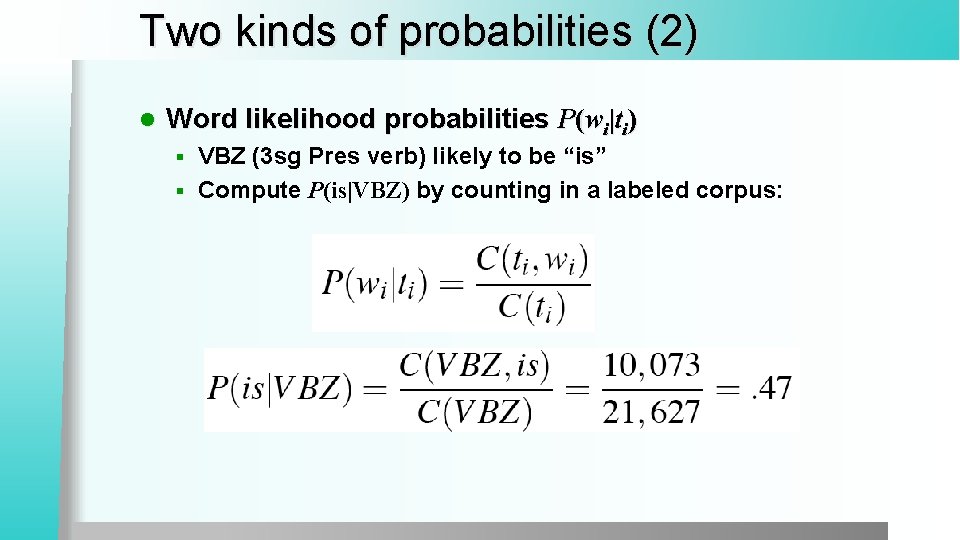

Two kinds of probabilities (2)

- Word likelihood probabilities $P(w_i \mid t_i)$
  - VBZ (3sg Pres verb) likely to be “is”
  - Compute P(is|VBZ) by counting in a labeled corpus:

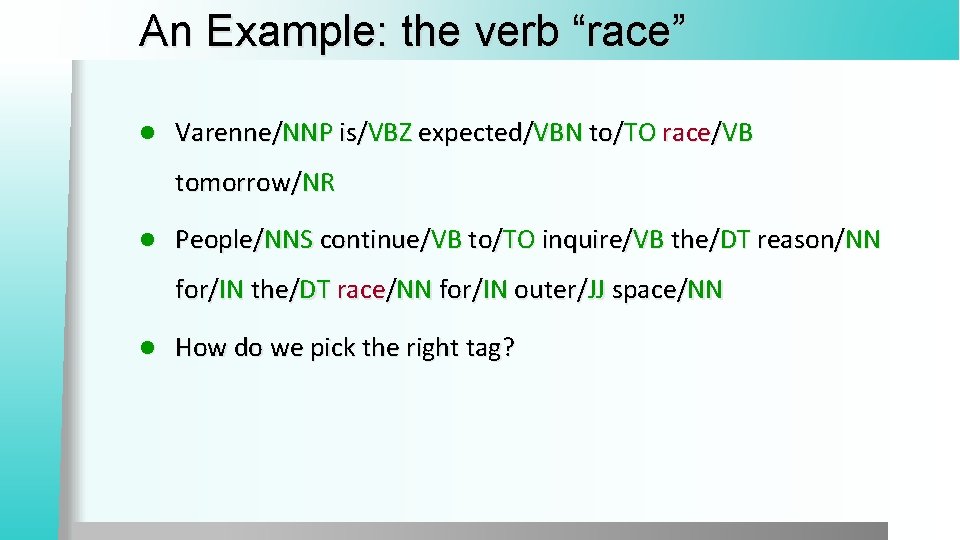
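Both kinds of probability are relative frequencies, $P(t_i \mid t_{i-1}) = C(t_{i-1}, t_i) / C(t_{i-1})$ and $P(w_i \mid t_i) = C(t_i, w_i) / C(t_i)$, so they can be sketched with plain counters. The three-sentence corpus below is a hypothetical toy, not data from the slides, and the function names are made up for illustration.

```python
from collections import Counter

# Tiny hand-made tagged corpus, purely hypothetical.
corpus = [
    [("the", "DT"), ("flight", "NN")],
    [("that", "DT"), ("flight", "NN"), ("is", "VBZ"), ("late", "JJ")],
    [("the", "DT"), ("yellow", "JJ"), ("hat", "NN")],
]

tag_counts = Counter()       # C(t)
bigram_counts = Counter()    # C(t_prev, t)
emission_counts = Counter()  # C(t, w)
for sentence in corpus:
    tags = [tag for _, tag in sentence]
    for word, tag in sentence:
        tag_counts[tag] += 1
        emission_counts[(tag, word)] += 1
    for prev, cur in zip(tags, tags[1:]):
        bigram_counts[(prev, cur)] += 1


def transition_prob(prev, cur):
    """P(cur | prev) = C(prev, cur) / C(prev); sentence boundaries are ignored."""
    return bigram_counts[(prev, cur)] / tag_counts[prev]


def emission_prob(word, tag):
    """P(word | tag) = C(tag, word) / C(tag)."""
    return emission_counts[(tag, word)] / tag_counts[tag]


print(transition_prob("DT", "NN"))  # 2 of the 3 DT tokens are followed by NN
print(emission_prob("is", "VBZ"))
```

A real tagger would also model the sentence-start transition and smooth the counts so that unseen tag pairs and words do not get probability zero; both are left out here to keep the sketch short.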

An Example: the verb “race”

- Varenne/NNP is/VBZ expected/VBN to/TO race/VB tomorrow/NR
- People/NNS continue/VB to/TO inquire/VB the/DT reason/NN for/IN the/DT race/NN for/IN outer/JJ space/NN
- How do we pick the right tag?

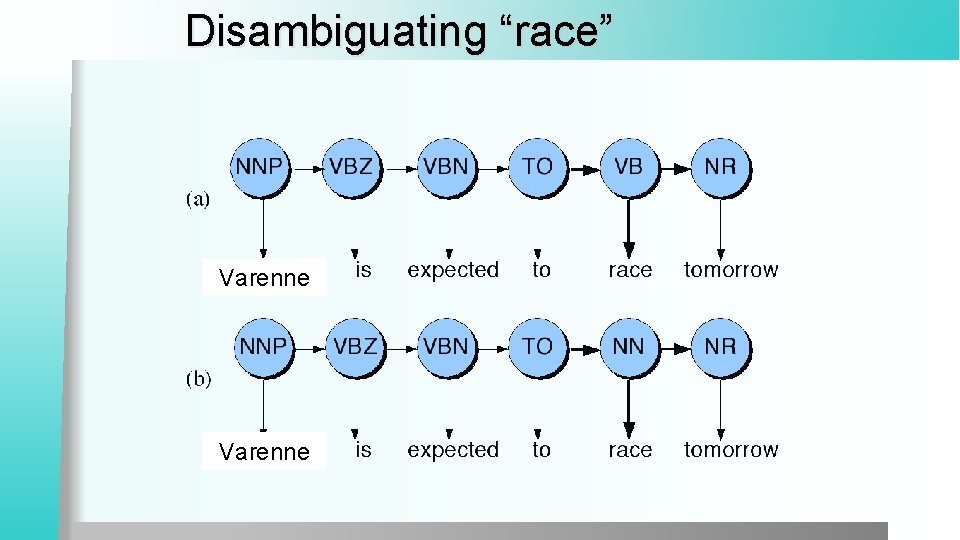

Disambiguating “race”

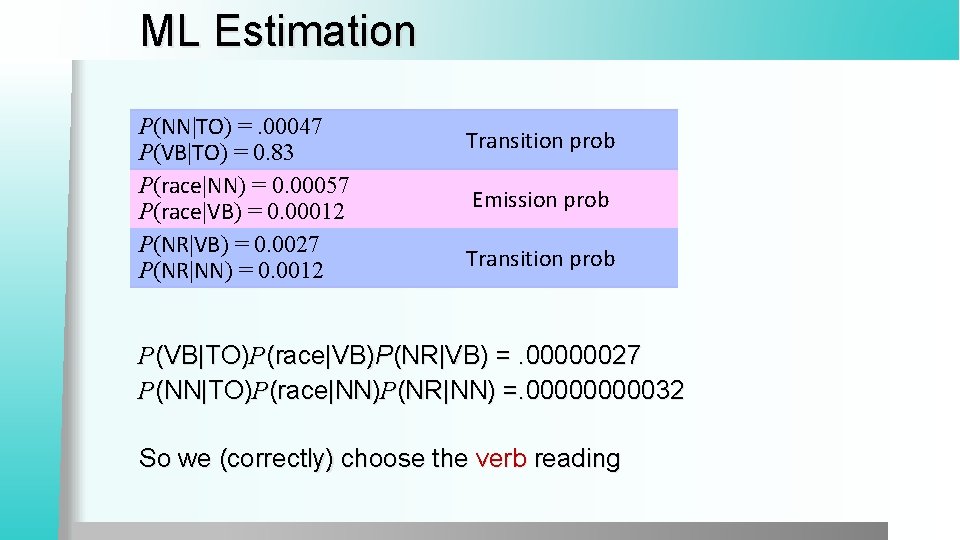

ML Estimation

- Transition probabilities: P(NN|TO) = 0.00047, P(VB|TO) = 0.83
- Emission probabilities: P(race|NN) = 0.00057, P(race|VB) = 0.00012
- Transition probabilities: P(NR|VB) = 0.0027, P(NR|NN) = 0.0012
- P(VB|TO) P(race|VB) P(NR|VB) = 0.00000027
- P(NN|TO) P(race|NN) P(NR|NN) = 0.00000000032
- So we (correctly) choose the verb reading
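The comparison between the two readings is just two three-way products, which can be checked directly. The probabilities below are the ones listed on the slide; the variable names are illustrative.

```python
# Probabilities as listed on the slide.
p_vb_given_to, p_nn_given_to = 0.83, 0.00047          # transition from TO
p_race_given_vb, p_race_given_nn = 0.00012, 0.00057   # emission of "race"
p_nr_given_vb, p_nr_given_nn = 0.0027, 0.0012         # transition to NR

# Score of each reading of "race" in "to race tomorrow".
score_vb = p_vb_given_to * p_race_given_vb * p_nr_given_vb
score_nn = p_nn_given_to * p_race_given_nn * p_nr_given_nn

print(f"VB reading: {score_vb:.2e}")  # about 2.7e-07
print(f"NN reading: {score_nn:.2e}")  # about 3.2e-10
print("chosen tag:", "VB" if score_vb > score_nn else "NN")
```

The verb reading wins by roughly three orders of magnitude, driven almost entirely by the difference between P(VB|TO) and P(NN|TO).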

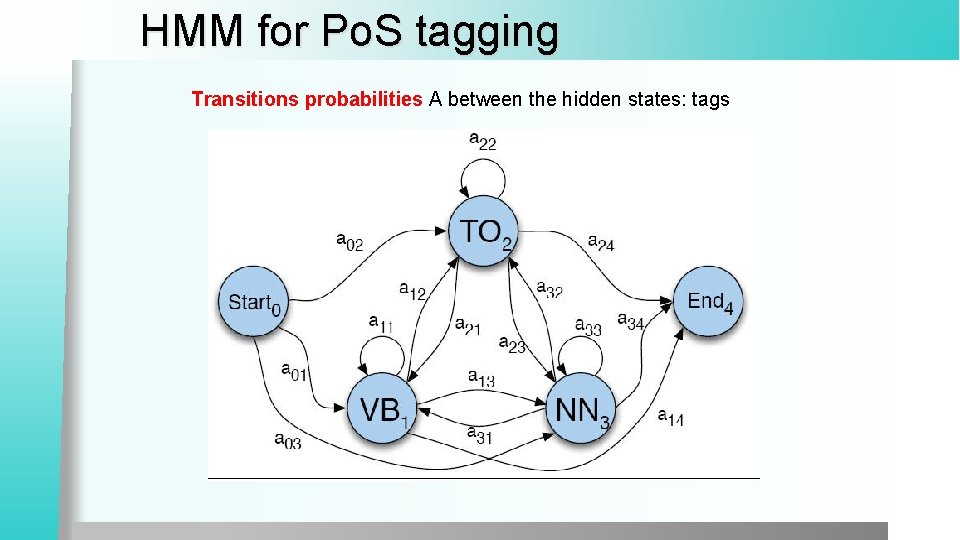

HMM for PoS tagging

Transition probabilities A between the hidden states: tags

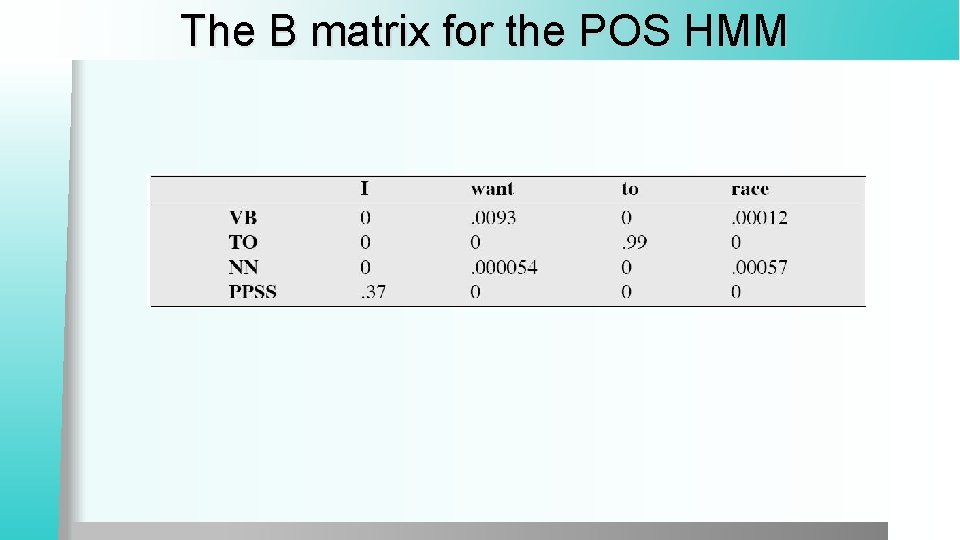

B observation likelihoods for POS HMM

Emission probabilities B: words

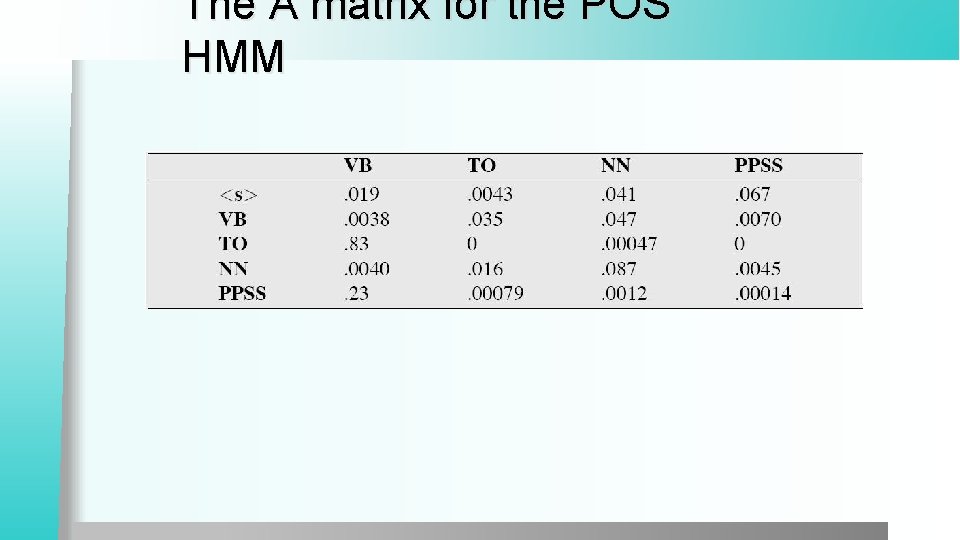

The A matrix for the POS HMM

The B matrix for the POS HMM

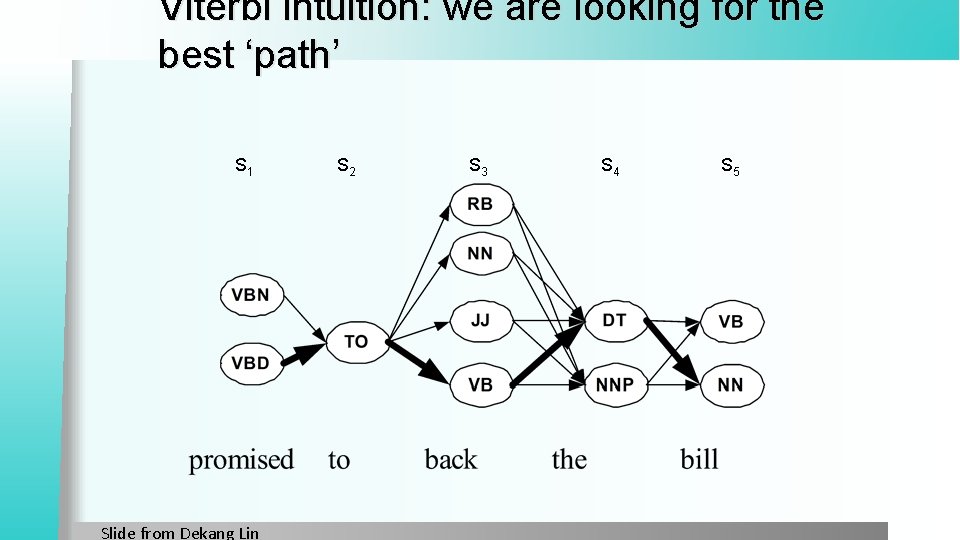

Viterbi intuition: we are looking for the best ‘path’

Figure: trellis over states S1 to S5 (slide from Dekang Lin)

Viterbi example

Outline

- Sequence Labeling
- Markov Chains
- Hidden Markov Models
- Two Algorithms for HMMs
  - The Forward Algorithm
  - The Viterbi Algorithm
- Applications:
  - The Ice Cream Task
  - Part of Speech Tagging
  - Next time: Named Entity Tagging
