Sentiment analysis using deep learning methods Antti Keurulainen


Sentiment analysis using deep learning methods

Antti Keurulainen, 14.2.2017

Sentiment analysis using deep learning methods

Two main approaches:

- Convolutional neural networks (CNN)
- Recurrent neural networks (RNN), which can be enhanced by using LSTM

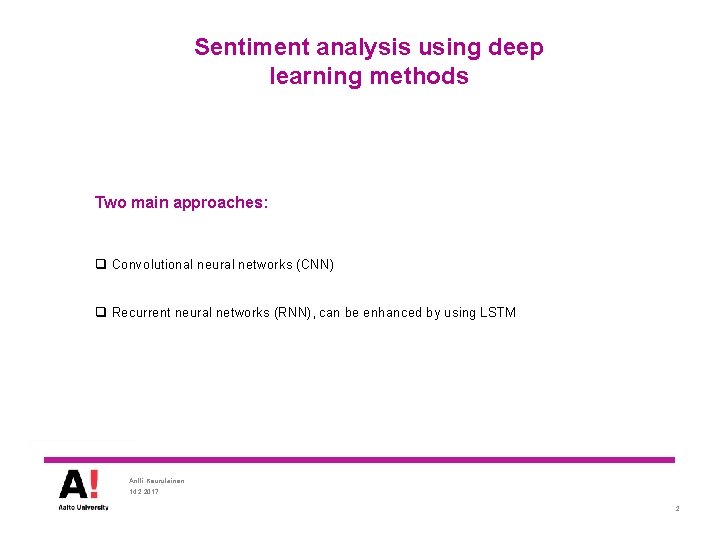

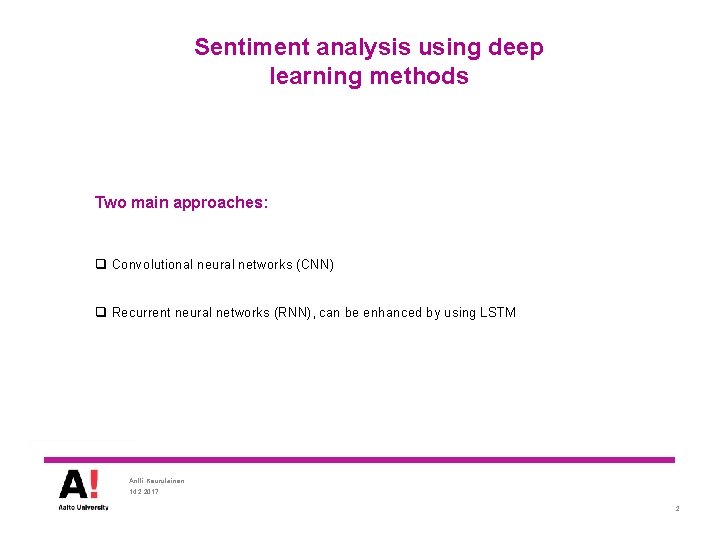

Deep Learning

- One or more hidden layers, with trainable parameters in these layers
- An artificial neural network that is organized in hierarchical layers can build hierarchical representations of the input data

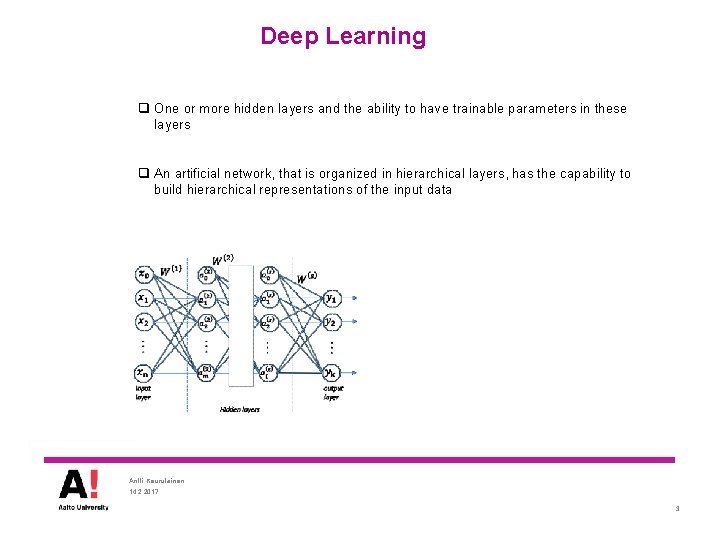

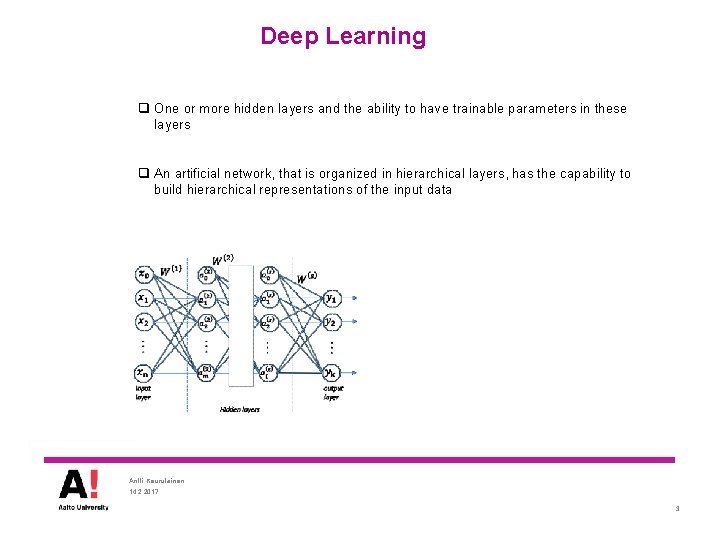

Convolutional neural network (CNN)

Simple example of the convolution operation. Input, e.g. an image:

$$
\begin{bmatrix}
1 & 3 & 5 & 2 & 4 \\
6 & 0 & 2 & 1 & 3 \\
6 & 3 & 1 & 3 & 6 \\
7 & 3 & 2 & 1 & 3 \\
5 & 3 & 0 & 0 & 2
\end{bmatrix}
$$

Filter (kernel):

$$
\begin{bmatrix}
0.2 & 0.7 \\
-0.5 & 0.7
\end{bmatrix}
$$

Feature map, filled in one entry at a time as the filter slides over the input: $f(-0.7)$, $f(5.5)$, …

For example, the first entry comes from the top-left 2×2 patch: $1 \cdot 0.2 + 3 \cdot 0.7 + 6 \cdot (-0.5) + 0 \cdot 0.7 = -0.7$. Moving the filter one step to the right gives $3 \cdot 0.2 + 5 \cdot 0.7 + 0 \cdot (-0.5) + 2 \cdot 0.7 = 5.5$.

Here $f$ represents some nonlinear activation function. Note: bias terms are omitted.
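The convolution example can be checked with a few lines of NumPy. This is a minimal sketch: it assumes the 25 input values form a 5×5 grid read row by row and the four filter values a 2×2 grid, with stride 1 and no padding. The slides leave the activation f unspecified, so tanh is used here purely as an assumed stand-in.

```python
import numpy as np

# Input and filter values taken from the slide (5x5 and 2x2 layouts are assumed).
image = np.array([
    [1, 3, 5, 2, 4],
    [6, 0, 2, 1, 3],
    [6, 3, 1, 3, 6],
    [7, 3, 2, 1, 3],
    [5, 3, 0, 0, 2],
], dtype=float)
kernel = np.array([
    [0.2, 0.7],
    [-0.5, 0.7],
])


def conv2d_valid(x, k):
    """Slide k over x (stride 1, no padding) and sum the elementwise products."""
    kh, kw = k.shape
    out_h = x.shape[0] - kh + 1
    out_w = x.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * k)
    return out


pre_activation = conv2d_valid(image, kernel)  # bias omitted, as on the slide
feature_map = np.tanh(pre_activation)         # tanh is an assumed choice for f

print(pre_activation[0, :2])  # first two entries of the first row
```

The first two pre-activation values come out at approximately -0.7 and 5.5, matching the arguments of f shown on the slide.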

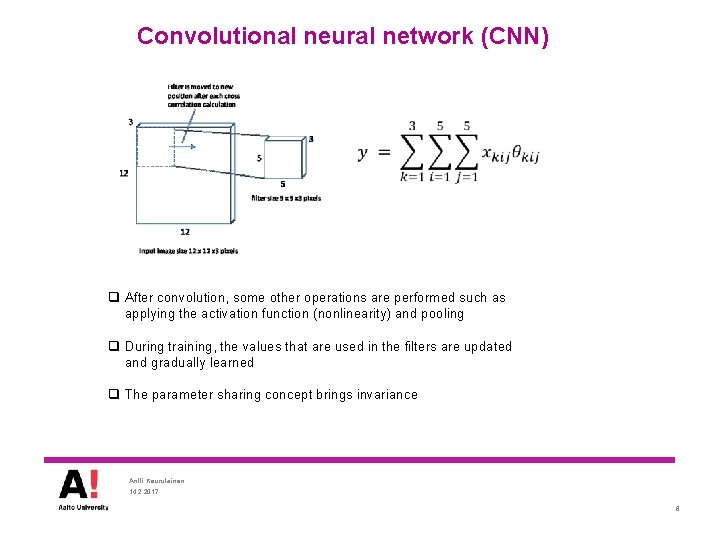

Convolutional neural network (CNN)

- After convolution, further operations are performed, such as applying the activation function (nonlinearity) and pooling
- During training, the values in the filters are updated and gradually learned
- Parameter sharing means the same filter is applied at every position, so a pattern is detected wherever it occurs in the input; together with pooling this gives approximate invariance to translation

Recurrent Neural Network (RNN)

- Shallow RNN (figure: unrolled network with weight matrices U, W and V). Source: Goodfellow, I., Bengio, Y., Courville, A., Deep Learning

Recurrent Neural Network (RNN)

- Deep RNN example (figure: unrolled network with weight matrices U, W1, W2, V1 and V2)

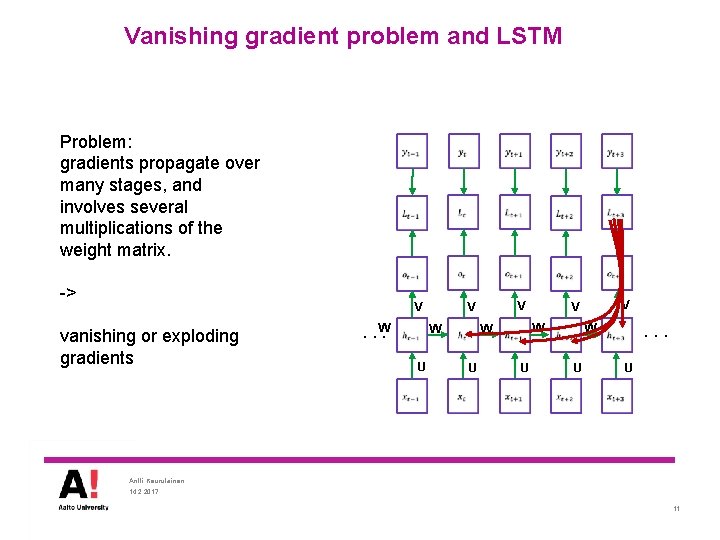

Vanishing gradient problem and LSTM

Problem: gradients propagate over many time steps, which involves repeated multiplication by the same weight matrix W -> vanishing or exploding gradients.
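The effect of repeated multiplication by the weight matrix can be illustrated numerically. The sketch below is a simplification under stated assumptions: it ignores the activation-function derivative and uses a scaled random orthogonal matrix as a hypothetical recurrent weight matrix, so the gradient norm changes by exactly the scale factor at every step.

```python
import numpy as np

rng = np.random.default_rng(0)
hidden_size = 20
steps = 50

# Random orthogonal matrix: multiplying by it preserves the vector norm,
# so any growth or shrinkage below comes from the scale factor alone.
Q, _ = np.linalg.qr(rng.normal(size=(hidden_size, hidden_size)))


def gradient_norm_after(scale, steps):
    """Norm of a unit gradient after `steps` multiplications by (scale * Q)^T."""
    W = scale * Q
    grad = np.zeros(hidden_size)
    grad[0] = 1.0
    for _ in range(steps):
        grad = W.T @ grad
    return np.linalg.norm(grad)


vanishing = gradient_norm_after(0.9, steps)  # shrinks like 0.9 ** 50
exploding = gradient_norm_after(1.1, steps)  # grows like 1.1 ** 50
print(vanishing, exploding)
```

With a factor slightly below 1 the gradient has all but disappeared after 50 steps, and with a factor slightly above 1 it has grown by about two orders of magnitude.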

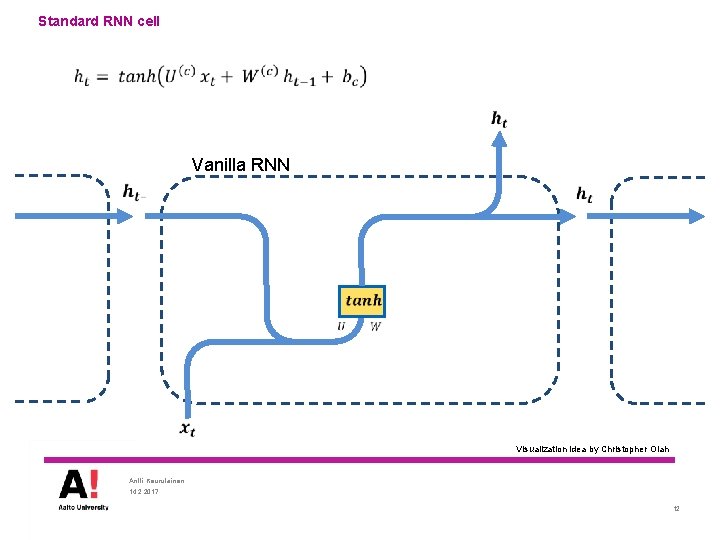

Standard (vanilla) RNN cell. Visualization idea by Christopher Olah.

LSTM cell. Visualization idea by Christopher Olah.

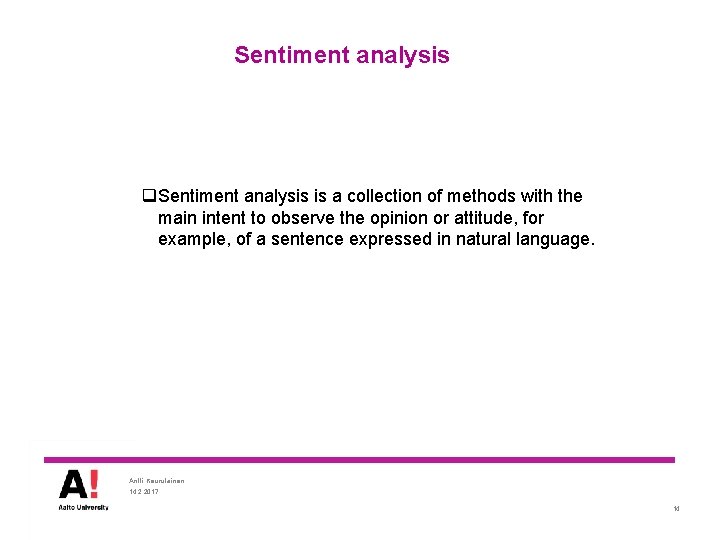

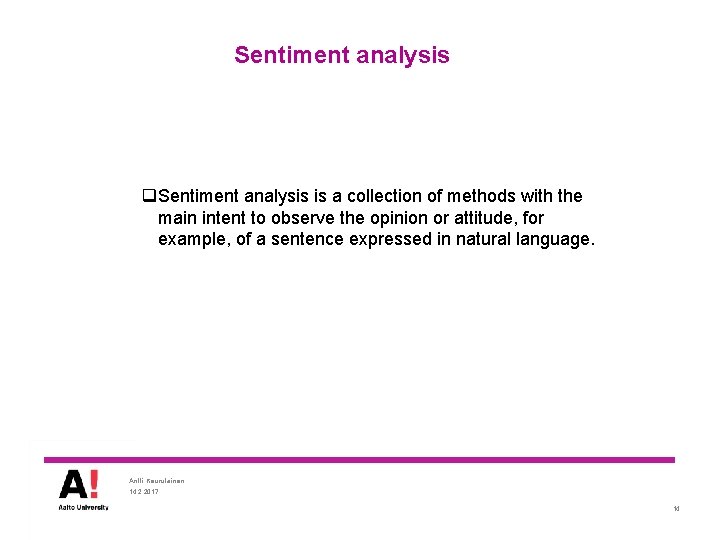

Sentiment analysis

- Sentiment analysis is a collection of methods whose main aim is to detect the opinion or attitude expressed in natural language, for example in a sentence.

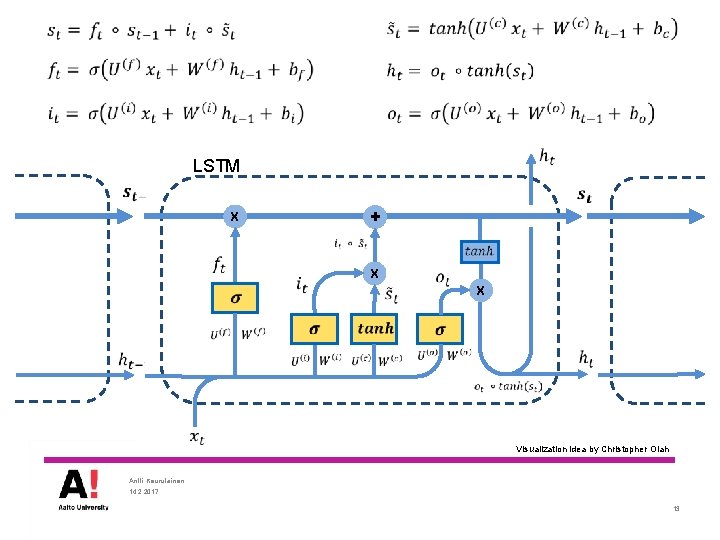

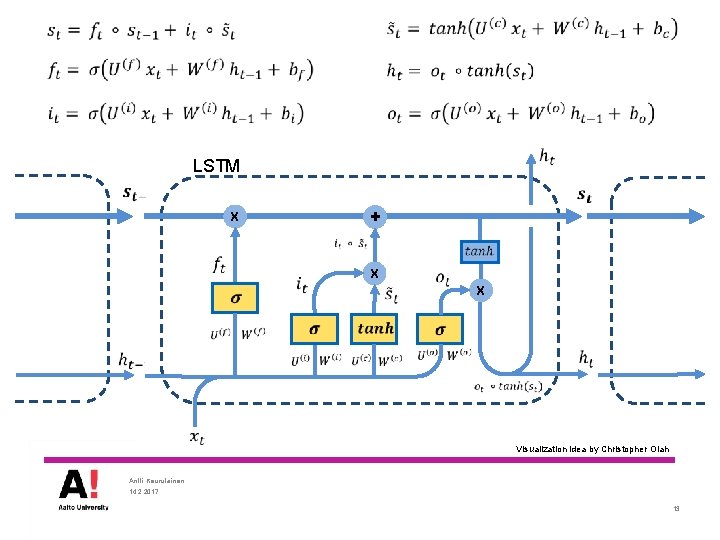

Sentiment analysis using CNNs

- Analysis based on Kim, Y. (2014). Convolutional Neural Networks for Sentence Classification. Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP 2014), 1746–1751. http://aclweb.org/anthology/D/D14-1181.pdf
- A simple CNN with one layer of convolution on top of word vectors obtained from an unsupervised neural language model.
- Good results are obtained with pre-trained word vectors. Results improve further when the word vectors are fine-tuned for the specific task.

Sentiment analysis using CNNs

- Simple CNN model for sentiment analysis. Kim, Y. (2014). Convolutional Neural Networks for Sentence Classification.

Sentiment analysis using CNNs

- Multiple filter sizes (3, 4, 5) produce several feature maps (100 of each size) -> on the order of 0.3–0.4 M parameters
- Max-over-time pooling is used to select the most important feature from each feature map
- Two input channels are used, one with static word vectors and the other with trainable vectors
- A fully connected softmax layer on top produces probabilities for each class
- Dropout is used in the fully connected layer for regularization, together with an L2-norm constraint on the weight vectors. Early stopping is used.
- Stochastic gradient descent with the Adadelta update rule
- Pre-trained word2vec vectors are used, trained on 100 billion words from Google News, 300 dimensions

Kim, Y. (2014). Convolutional Neural Networks for Sentence Classification.
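The data flow through such a model (filters of several widths, max-over-time pooling, softmax output) can be sketched in NumPy. This is a single-channel, forward-pass-only illustration and not the implementation from [Kim 2014]: the weights are random placeholders, tanh is an assumed nonlinearity, and dropout and training are left out.

```python
import numpy as np

rng = np.random.default_rng(0)
EMBED_DIM = 300            # word vector dimension
FILTER_SIZES = (3, 4, 5)   # filter widths in words
MAPS_PER_SIZE = 100        # feature maps per filter width
NUM_CLASSES = 2

# Randomly initialised placeholder parameters (hypothetical, untrained).
filters = {h: rng.normal(scale=0.01, size=(MAPS_PER_SIZE, h * EMBED_DIM))
           for h in FILTER_SIZES}
filter_biases = {h: np.zeros(MAPS_PER_SIZE) for h in FILTER_SIZES}
W_out = rng.normal(scale=0.01,
                   size=(NUM_CLASSES, len(FILTER_SIZES) * MAPS_PER_SIZE))
b_out = np.zeros(NUM_CLASSES)


def pooled_features(sentence):
    """sentence: (n_words, EMBED_DIM) -> one max-pooled value per feature map."""
    pooled = []
    for h in FILTER_SIZES:
        # Every window of h consecutive word vectors, flattened to one row.
        windows = np.stack([sentence[i:i + h].ravel()
                            for i in range(len(sentence) - h + 1)])
        feature_maps = np.tanh(windows @ filters[h].T + filter_biases[h])
        pooled.append(feature_maps.max(axis=0))  # max-over-time pooling
    return np.concatenate(pooled)


def predict_proba(sentence):
    """Softmax class probabilities for one sentence."""
    logits = W_out @ pooled_features(sentence) + b_out
    exp = np.exp(logits - logits.max())
    return exp / exp.sum()


sentence = rng.normal(size=(10, EMBED_DIM))  # 10 random stand-in word vectors
features = pooled_features(sentence)
probs = predict_proba(sentence)
print(features.shape, probs)
```

Because each feature map is reduced to its maximum, the pooled feature vector has a fixed length regardless of sentence length, which is what allows a fixed-size softmax layer on top.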

![Sentiment analysis using CNNs: models in Kim 2014](https://slidetodoc.com/presentation_image_h/910cdc2b968e903975e20136901ddcbc/image-18.jpg)

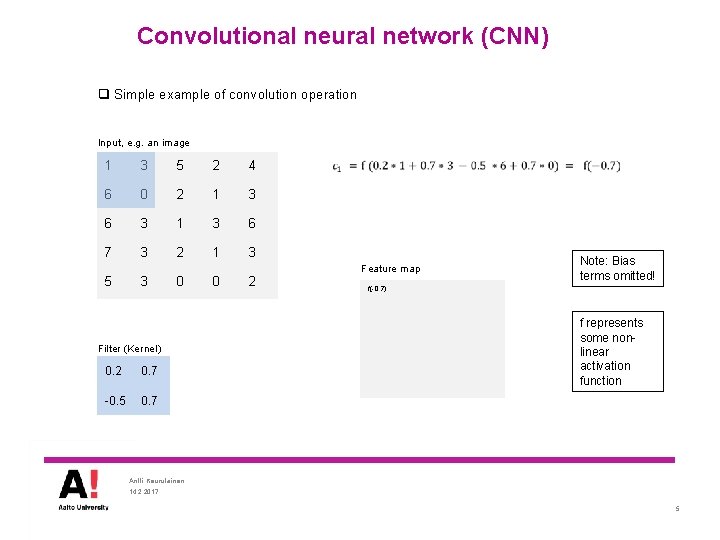

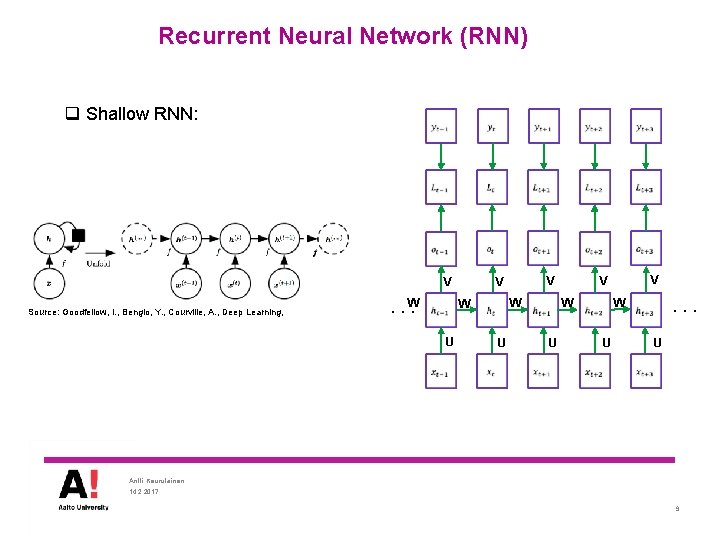

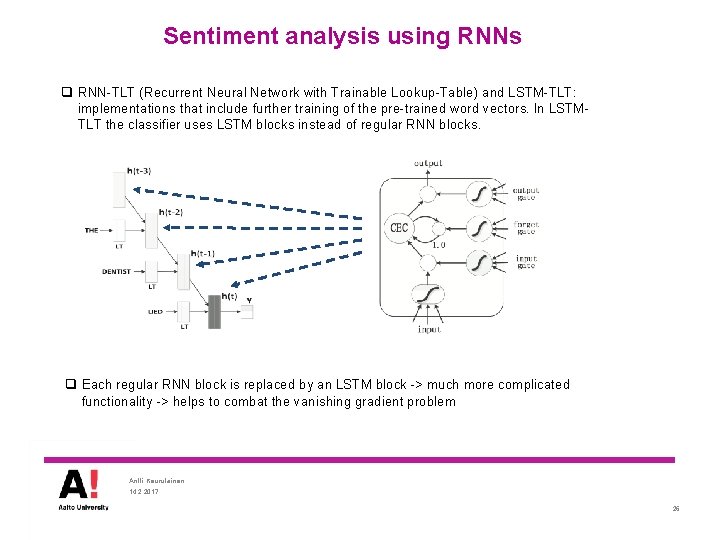

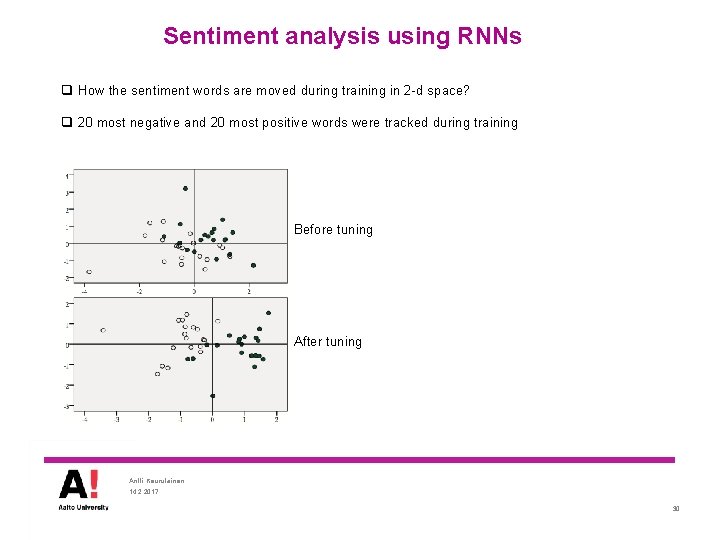

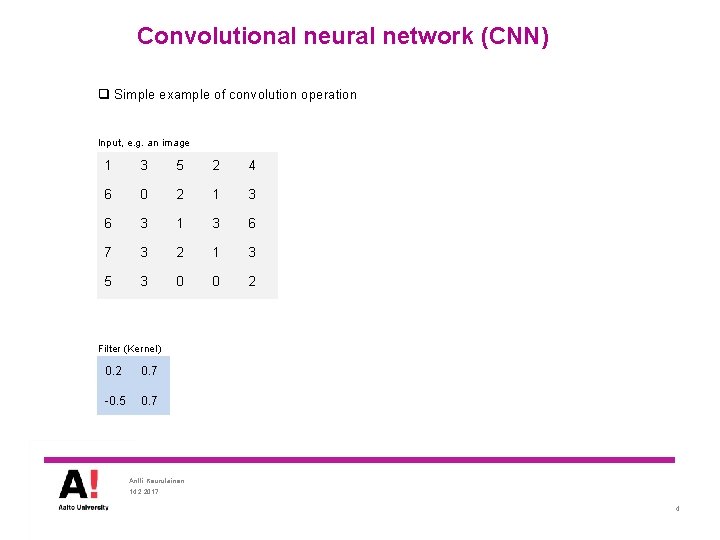

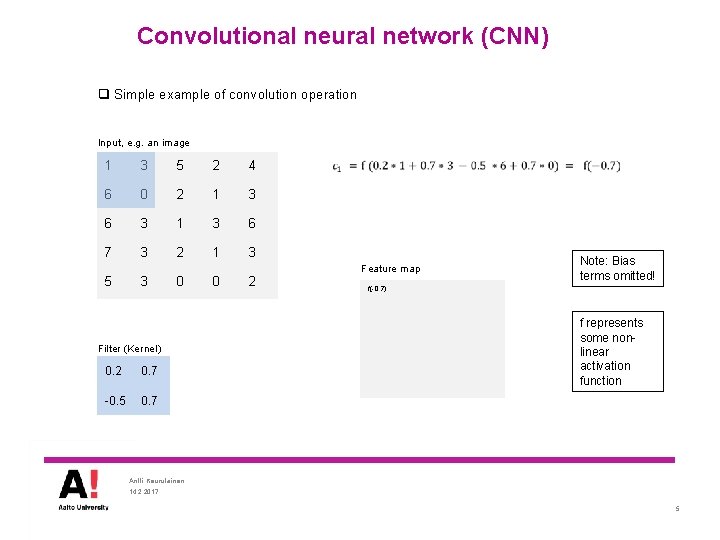

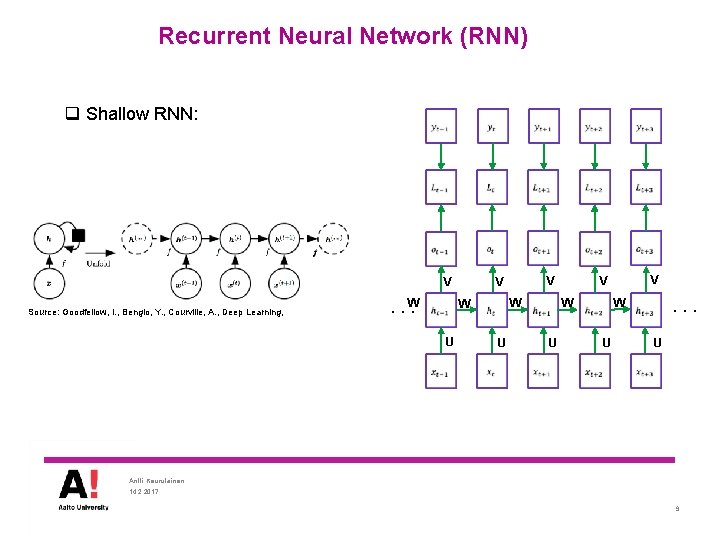

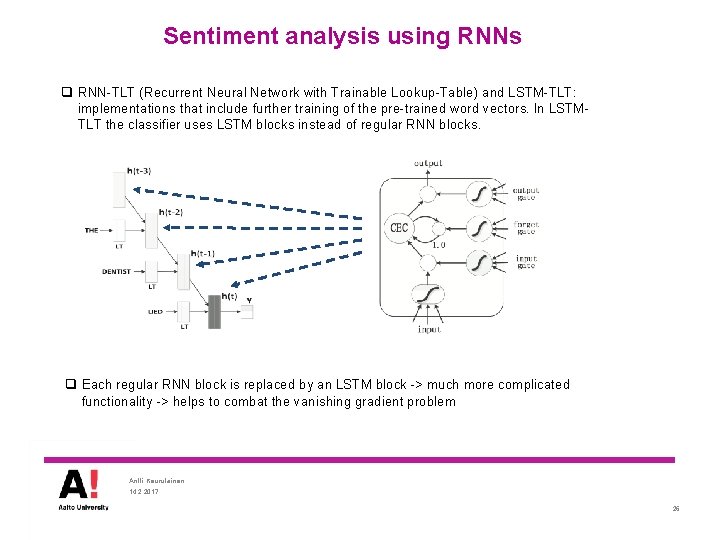

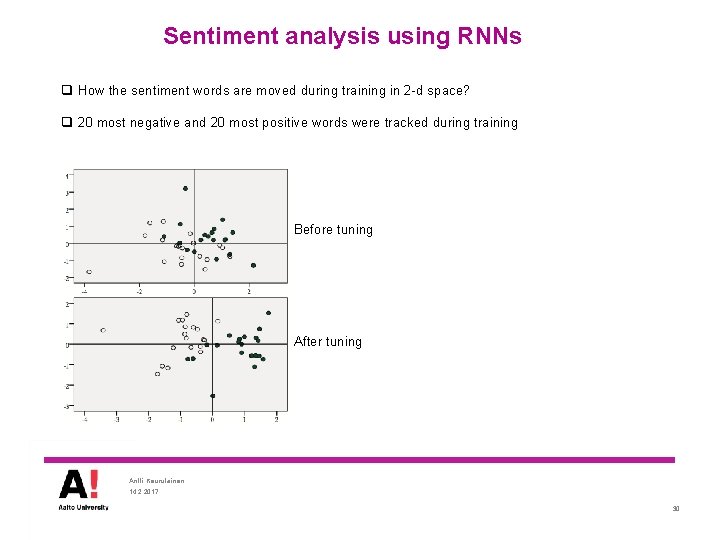

Sentiment analysis using CNNs

Models in [Kim 2014]:

- CNN-rand: all words are initialized randomly and then trained
- CNN-static: initialized with word2vec (unknown words initialized randomly) and kept static
- CNN-non-static: initialized with word2vec and trained further
- CNN-multichannel: initialized with word2vec; one channel stays static and the other channel is trained further

Kim, Y. (2014). Convolutional Neural Networks for Sentence Classification.

![Sentiment analysis using CNNs: datasets in Kim 2014](https://slidetodoc.com/presentation_image_h/910cdc2b968e903975e20136901ddcbc/image-19.jpg)

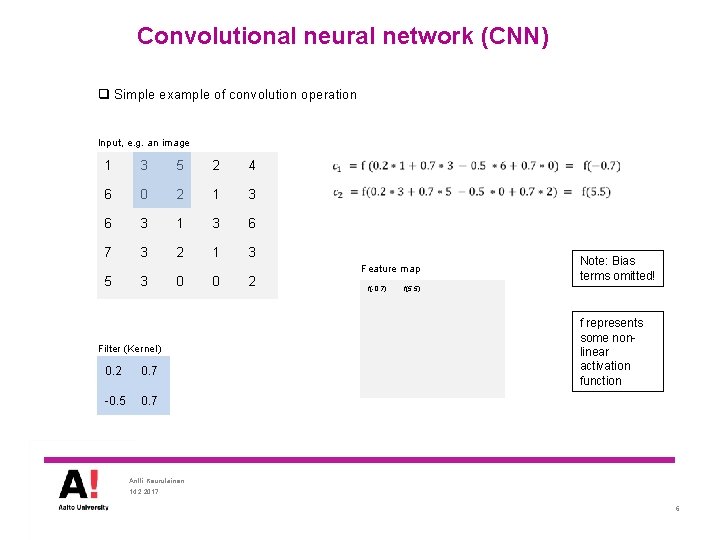

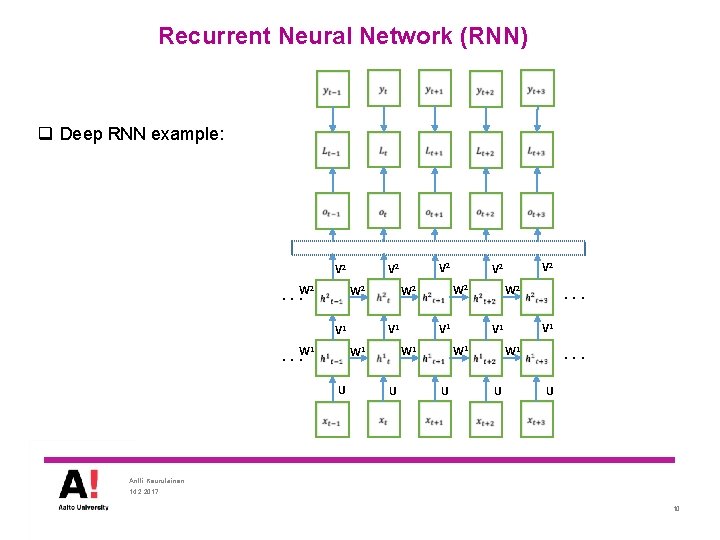

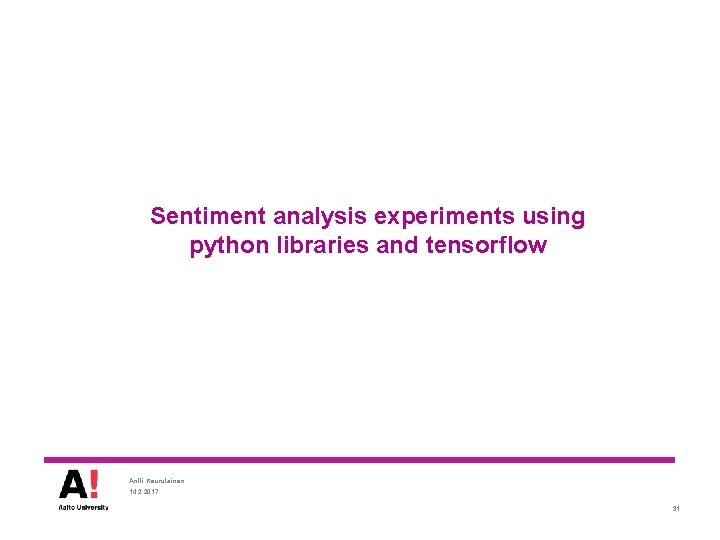

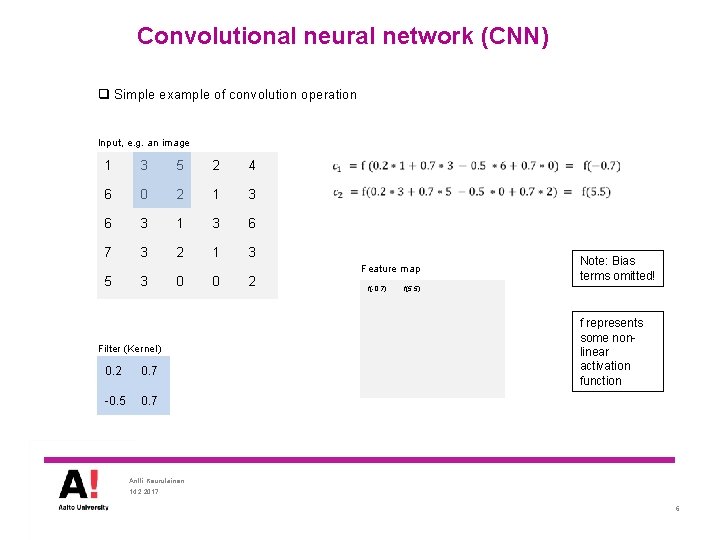

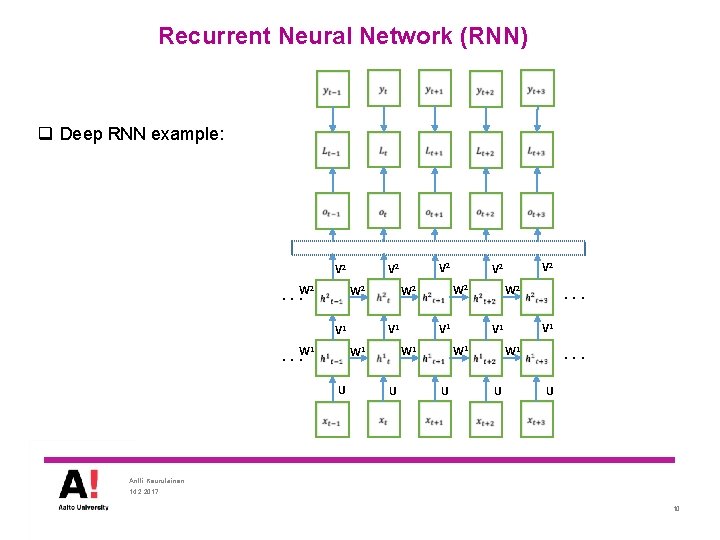

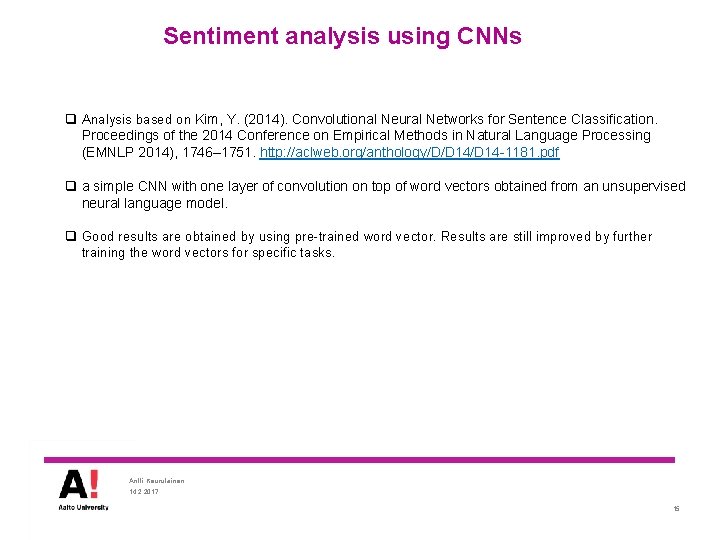

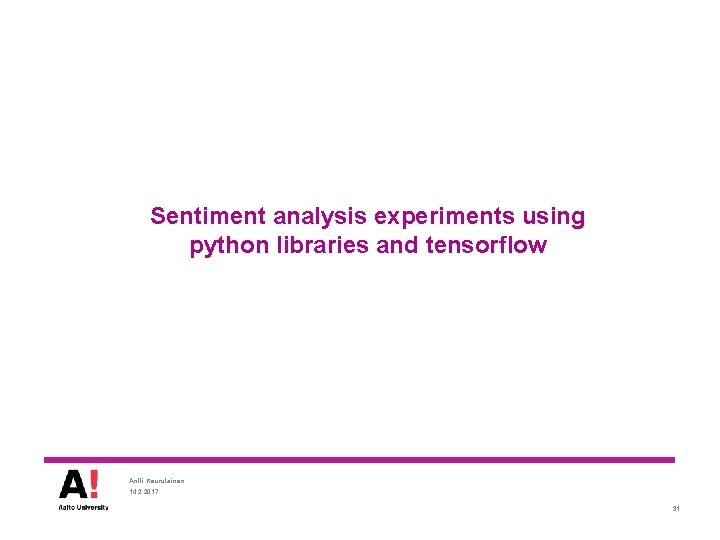

Sentiment analysis using CNNs

Datasets in [Kim 2014]:

- "Movie review data". Bo Pang, Lillian Lee, and Shivakumar Vaithyanathan, EMNLP 2002. Binary classification.
- "Stanford sentiment treebank 1". Extension of the above with fine-grained labels added, Socher et al. 2013.
- "Stanford sentiment treebank 2". Same as above but with neutral reviews removed and binary labels.
- "Subjectivity dataset". 5000 subjective and 5000 objective processed sentences. Pang/Lee, ACL 2004.
- TREC question dataset. Classifying a question into 6 question types.
- Customer review dataset. Reviews of various products such as cameras and MP3 players. Hu & Liu 2004.
- MPQA dataset. Opinion polarity subtask of the MPQA dataset. Wiebe et al. 2005.

![Sentiment analysis using CNNs: results in Kim 2014](https://slidetodoc.com/presentation_image_h/910cdc2b968e903975e20136901ddcbc/image-20.jpg)

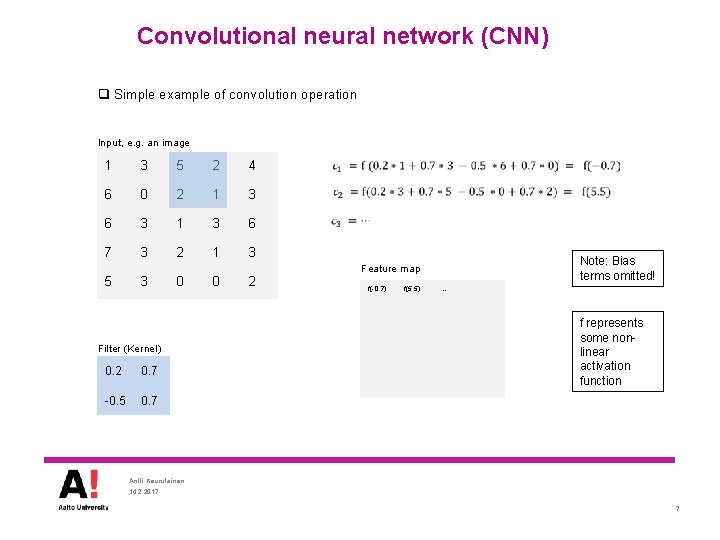

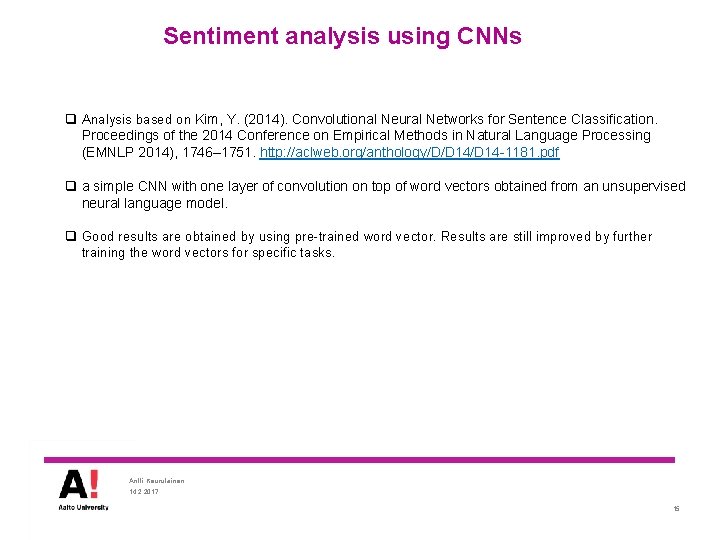

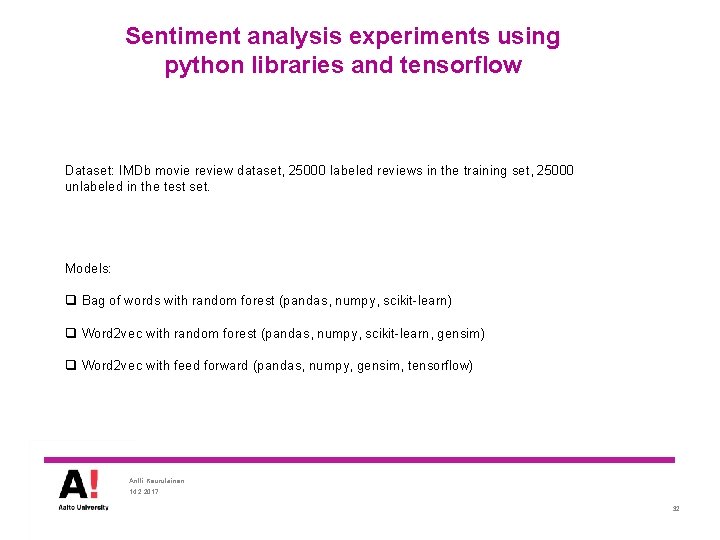

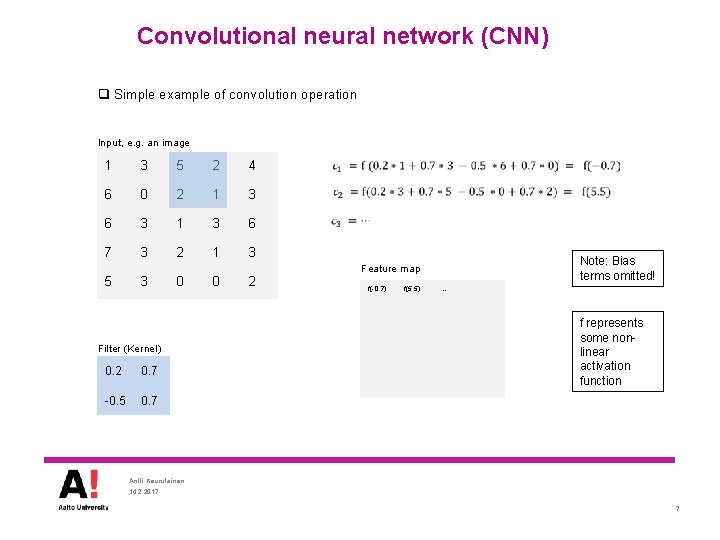

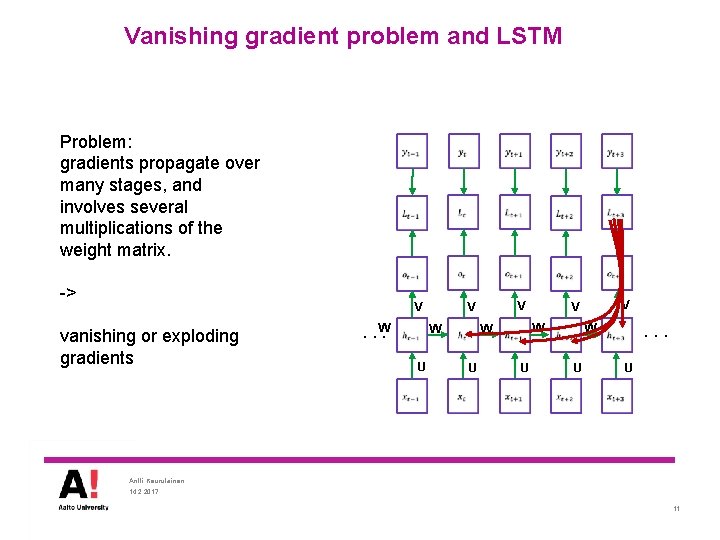

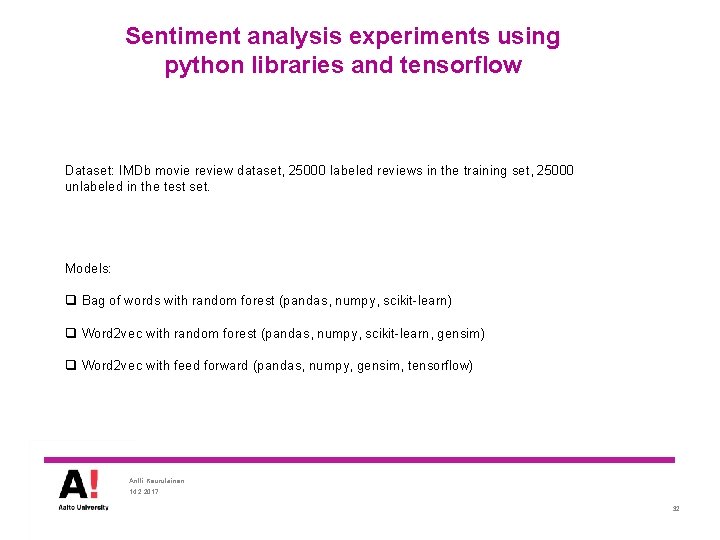

Sentiment analysis using CNNs: results [Kim 2014]

Sentiment analysis using RNNs

![Sentiment analysis using RNNs: analysis based on Wan 2015](https://slidetodoc.com/presentation_image_h/910cdc2b968e903975e20136901ddcbc/image-22.jpg)

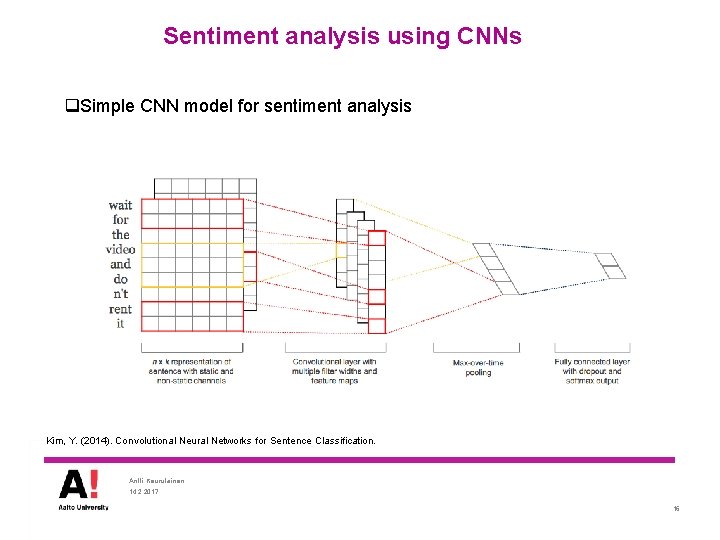

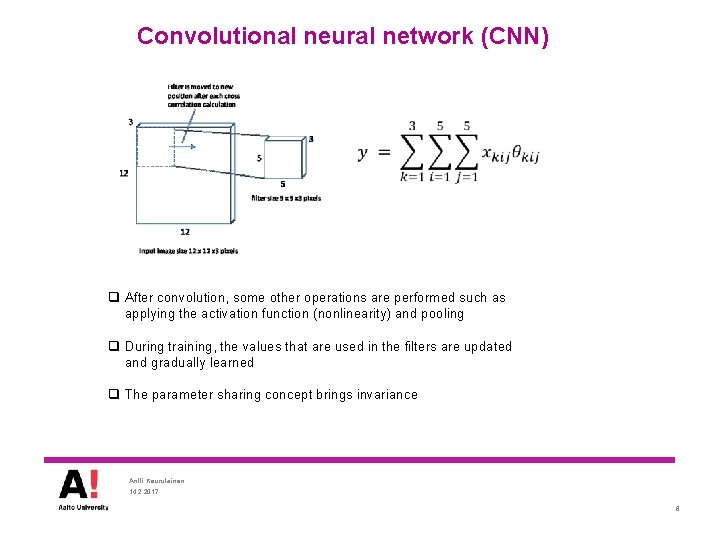

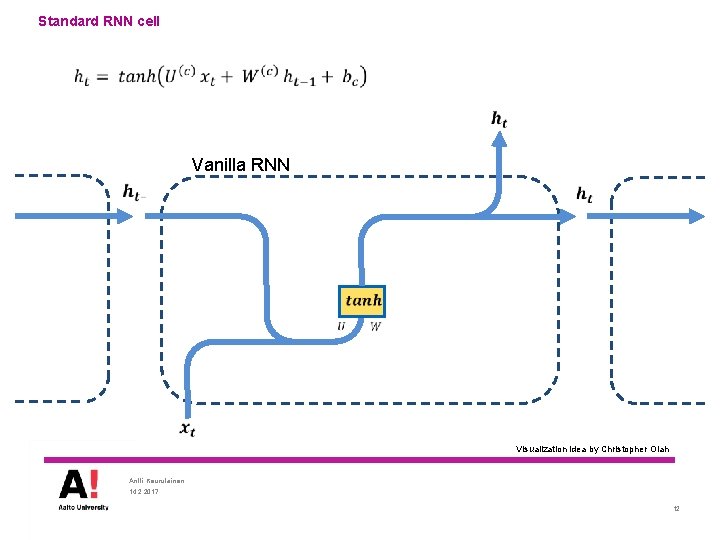

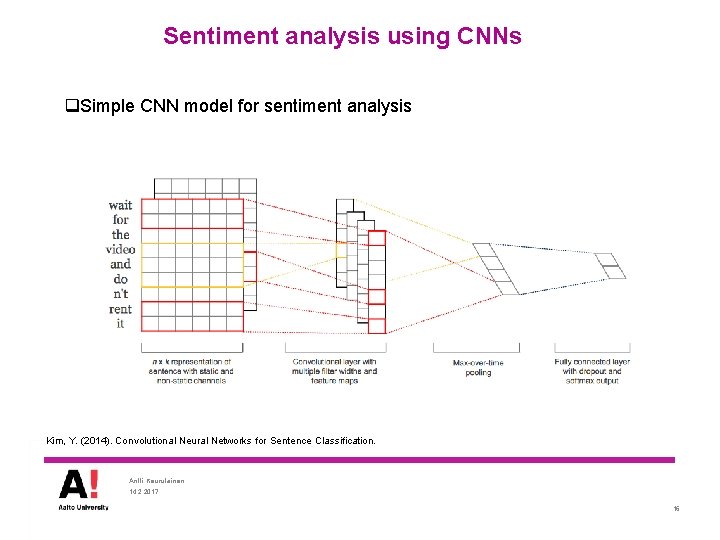

Sentiment analysis using RNNs

- Analysis based on [Wan 2015]: Wang, X., Liu, Y., Sun, C., Wang, B., & Wang, X. (2015). Predicting Polarities of Tweets by Composing Word Embeddings with Long Short-Term Memory. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), pp. 1343–1353, Beijing, China. Association for Computational Linguistics. http://www.aclweb.org/anthology/P15-1130
- Twitter sentiment prediction, using a simple RNN or an LSTM recurrent network.

Sentiment analysis using RNNs

- Word vectors created from co-occurrence statistics are not always suitable for sentiment analysis (e.g. the words "good" and "bad" are close to each other in word2vec representations)
- Sentiment is expressed by phrases rather than individual words -> how can the representation of the whole sentence be captured?
- Additional challenge: a recurrent neural network (RNN) has difficulty maintaining long-range dependencies -> LSTM networks
- It has been shown that further task-specific training of the pre-trained word vectors helps capture the polarity information of the sentences

![Sentiment analysis using RNNs: basic RNN architecture in Wan 2015](https://slidetodoc.com/presentation_image_h/910cdc2b968e903975e20136901ddcbc/image-24.jpg)

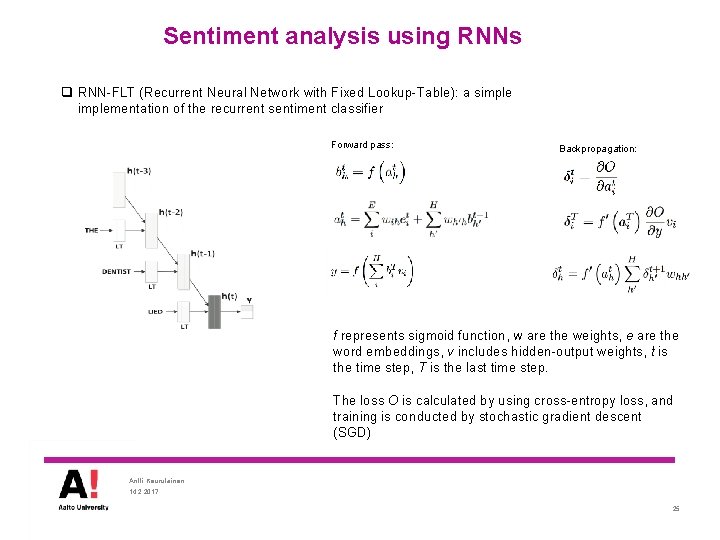

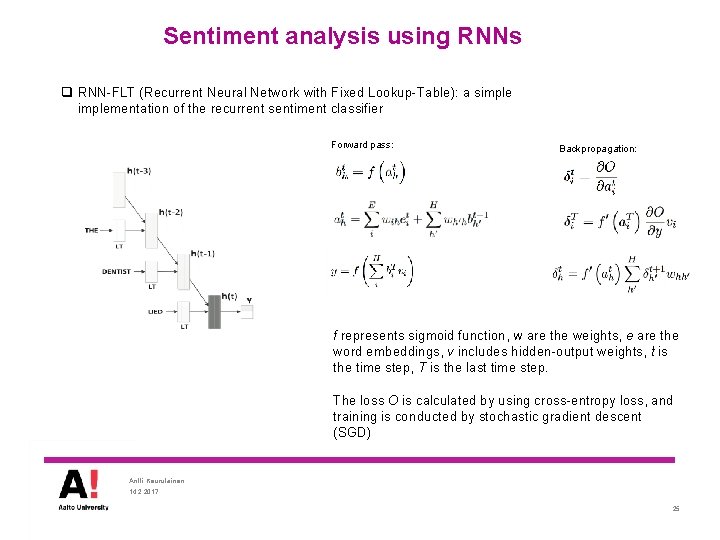

Sentiment analysis using RNNs

Basic RNN architecture that is used in [Wan 2015]:

- In [Wan 2015], the sentence is represented by the hidden state of the last time step.

Sentiment analysis using RNNs

- RNN-FLT (Recurrent Neural Network with Fixed Lookup-Table): a simple implementation of the recurrent sentiment classifier

Notation used in the forward pass and backpropagation: f represents the sigmoid function, w are the weights, e are the word embeddings, v contains the hidden-to-output weights, t is the time step and T is the last time step. The loss O is the cross-entropy loss, and training is conducted by stochastic gradient descent (SGD).
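A recurrent classifier of this kind can be sketched as follows. This is an illustrative forward pass under assumptions, not the exact equations of [Wan 2015]: the toy vocabulary, the variable names, the random weights and the single sigmoid output unit are all hypothetical. What it does take from the slides is the sigmoid nonlinearity, a fixed embedding lookup table, prediction from the hidden state at the last time step T, and a cross-entropy loss.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab = {"i": 0, "love": 1, "hate": 2, "this": 3}  # toy vocabulary (hypothetical)
embed_dim, hidden_dim = 25, 60

E = rng.normal(scale=0.1, size=(len(vocab), embed_dim))       # fixed lookup table
W_in = rng.normal(scale=0.1, size=(hidden_dim, embed_dim))    # embedding -> hidden
W_rec = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))  # hidden -> hidden
v = rng.normal(scale=0.1, size=hidden_dim)                    # hidden -> output


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def predict_positive(tokens):
    """Probability that the token sequence is positive."""
    h = np.zeros(hidden_dim)
    for tok in tokens:
        e_t = E[vocab[tok]]                   # look up the word embedding
        h = sigmoid(W_in @ e_t + W_rec @ h)   # recurrent hidden-state update
    return sigmoid(v @ h)                     # uses only the last hidden state


def cross_entropy(p, label):
    """Binary cross-entropy for one example (label is 0 or 1)."""
    return -(label * np.log(p) + (1 - label) * np.log(1 - p))


p = predict_positive(["i", "love", "this"])
loss = cross_entropy(p, label=1)
print(p, loss)
```

In the fixed-lookup-table setting only W_in, W_rec and v would receive gradient updates, while E stays as pre-trained.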

Sentiment analysis using RNNs

- RNN-TLT (Recurrent Neural Network with Trainable Lookup-Table) and LSTM-TLT: implementations that include further training of the pre-trained word vectors. In LSTM-TLT the classifier uses LSTM blocks instead of regular RNN blocks.
- Each regular RNN block is replaced by an LSTM block -> much more complicated functionality -> helps to combat the vanishing gradient problem
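To give an idea of what an LSTM block computes, here is a minimal sketch of one time step in the commonly used formulation with input, forget and output gates and no peephole connections. Whether [Wan 2015] uses exactly this variant is not stated in the slides, so treat it as an assumption. The weights and inputs are random placeholders.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM time step.

    W has shape (4 * hidden, input + hidden) and b has shape (4 * hidden,);
    the four row blocks hold the input gate, forget gate, output gate and
    candidate update, in that order.
    """
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([x, h_prev]) + b
    i = sigmoid(z[0 * hidden:1 * hidden])  # input gate
    f = sigmoid(z[1 * hidden:2 * hidden])  # forget gate
    o = sigmoid(z[2 * hidden:3 * hidden])  # output gate
    g = np.tanh(z[3 * hidden:4 * hidden])  # candidate cell update
    c = f * c_prev + i * g                 # additive cell-state update
    h = o * np.tanh(c)                     # new hidden state
    return h, c


rng = np.random.default_rng(0)
input_dim, hidden_dim = 25, 60
W = rng.normal(scale=0.1, size=(4 * hidden_dim, input_dim + hidden_dim))
b = np.zeros(4 * hidden_dim)

h = np.zeros(hidden_dim)
c = np.zeros(hidden_dim)
for x in rng.normal(size=(5, input_dim)):  # five random stand-in word vectors
    h, c = lstm_step(x, h, c, W, b)
print(h.shape, c.shape)
```

The cell state is updated additively (`f * c_prev + i * g`), which is the part that lets gradient information flow across many time steps more easily than in a plain RNN block.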

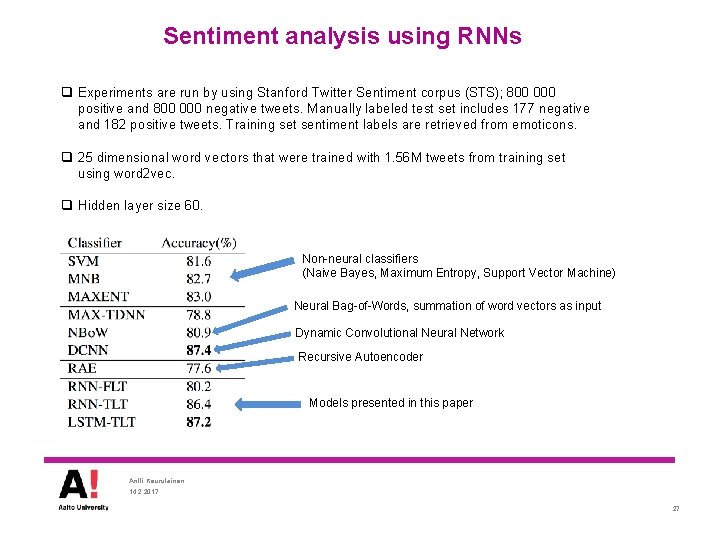

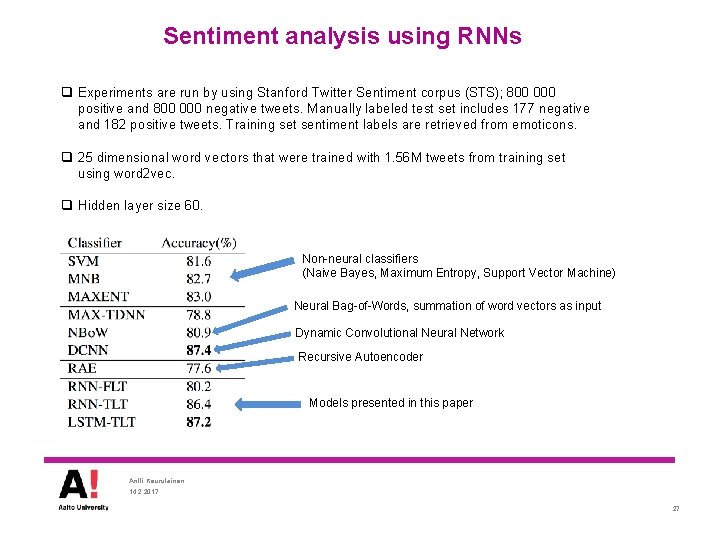

Sentiment analysis using RNNs

- Experiments are run on the Stanford Twitter Sentiment corpus (STS): 800 000 positive and 800 000 negative tweets. The manually labeled test set includes 177 negative and 182 positive tweets. Training-set sentiment labels are derived from emoticons.
- 25-dimensional word vectors, trained with word2vec on 1.56 M tweets from the training set.
- Hidden layer size 60.

Groups of methods compared:

- Non-neural classifiers (Naive Bayes, Maximum Entropy, Support Vector Machine)
- Neural Bag-of-Words (summation of word vectors as input)
- Dynamic Convolutional Neural Network
- Recursive Autoencoder
- Models presented in this paper

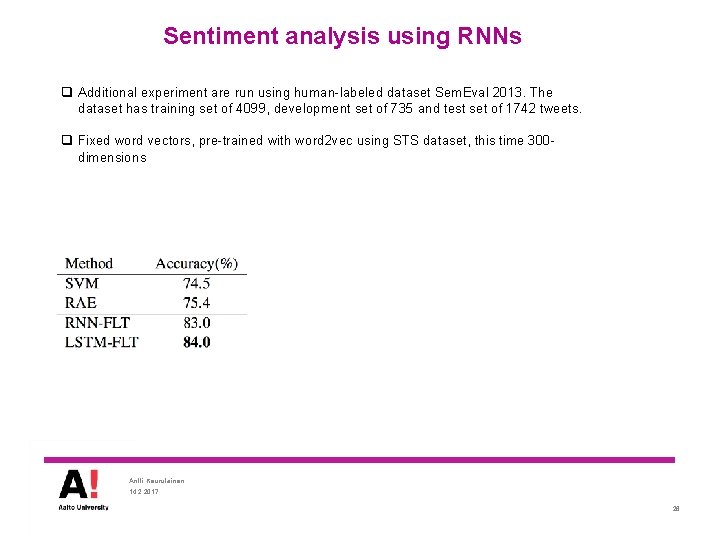

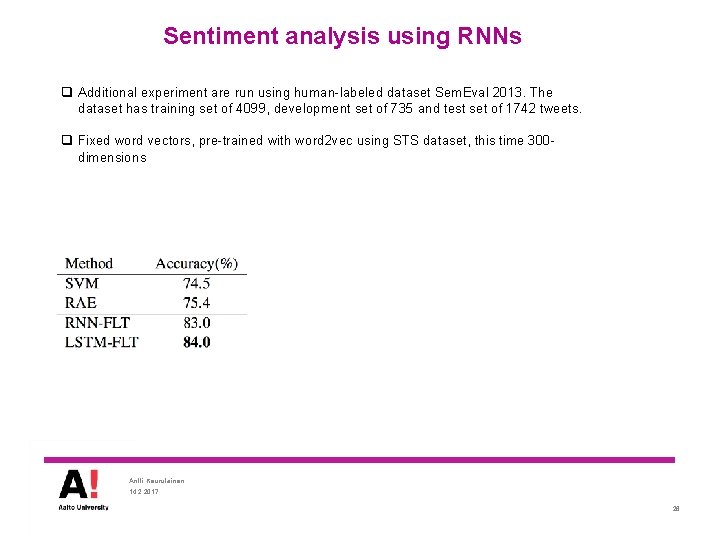

Sentiment analysis using RNNs

- Additional experiments are run on the human-labeled SemEval 2013 dataset. The dataset has a training set of 4099, a development set of 735 and a test set of 1742 tweets.
- Fixed word vectors, pre-trained with word2vec on the STS dataset, this time with 300 dimensions

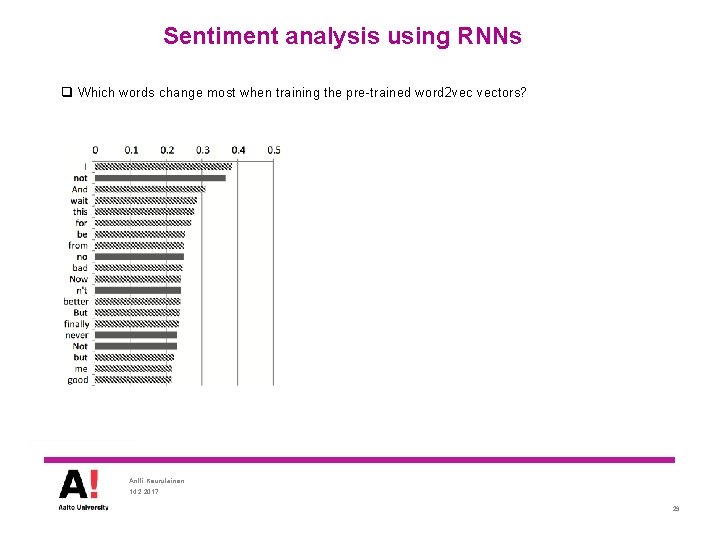

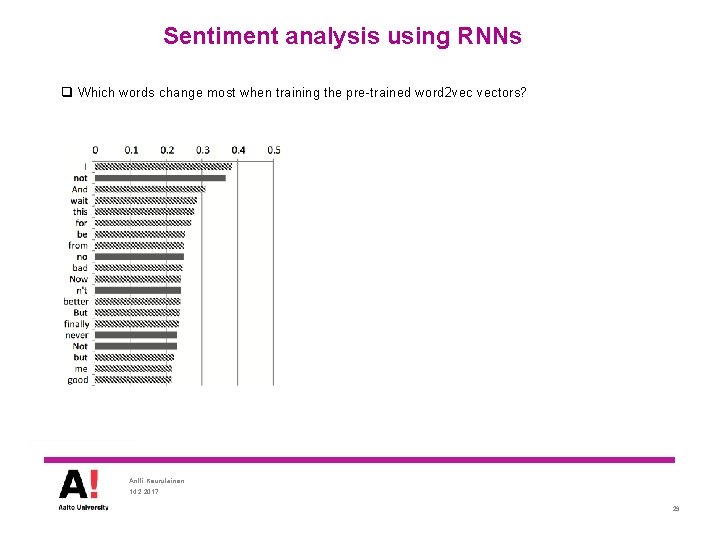

Sentiment analysis using RNNs

- Which words change most when training the pre-trained word2vec vectors?

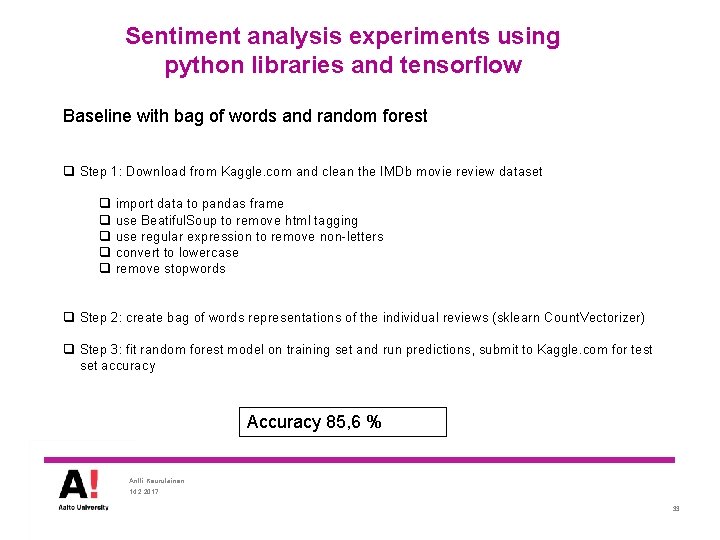

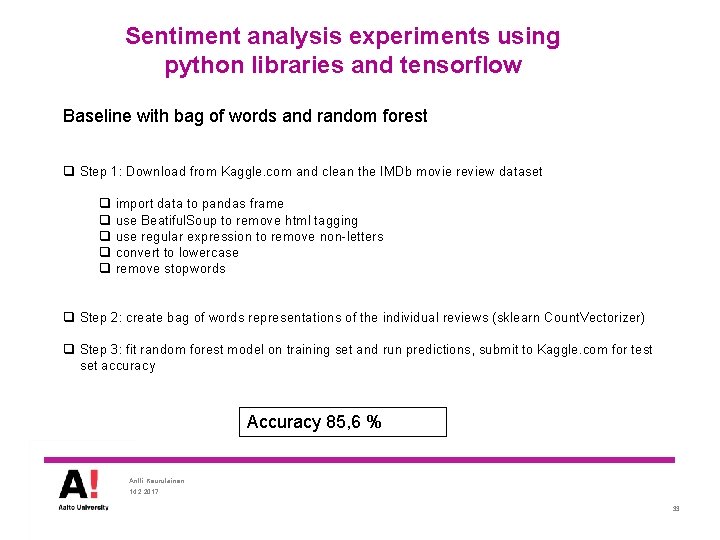

Sentiment analysis using RNNs

- How do the sentiment words move in 2-D space during training?
- The 20 most negative and the 20 most positive words were tracked during training (figure panels: before tuning, after tuning)

Sentiment analysis experiments using Python libraries and TensorFlow

Sentiment analysis experiments using Python libraries and TensorFlow

Dataset: IMDb movie review dataset, 25000 labeled reviews in the training set, 25000 unlabeled reviews in the test set.

Models:

- Bag of words with random forest (pandas, numpy, scikit-learn)
- word2vec with random forest (pandas, numpy, scikit-learn, gensim)
- word2vec with feed-forward network (pandas, numpy, gensim, tensorflow)

Sentiment analysis experiments using Python libraries and TensorFlow

Baseline with bag of words and random forest

- Step 1: download the IMDb movie review dataset from Kaggle.com and clean it
  - import the data into a pandas data frame
  - use BeautifulSoup to remove HTML tags
  - use a regular expression to remove non-letters
  - convert to lowercase
  - remove stopwords
- Step 2: create bag-of-words representations of the individual reviews (sklearn CountVectorizer)
- Step 3: fit a random forest model on the training set, run predictions and submit them to Kaggle.com for the test set accuracy

Accuracy: 85.6 %
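The baseline steps could look roughly like the sketch below. It is not the code behind the reported accuracy: the four training reviews are hypothetical toy data standing in for the Kaggle IMDb files, stopword removal is delegated to CountVectorizer's built-in English list (a swapped-in simplification), and the `max_features` and `n_estimators` values are arbitrary choices.

```python
import re

from bs4 import BeautifulSoup
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_extraction.text import CountVectorizer


def clean_review(raw_html):
    """Strip HTML tags, keep letters only, lowercase and collapse whitespace."""
    text = BeautifulSoup(raw_html, "html.parser").get_text()
    letters_only = re.sub(r"[^a-zA-Z]", " ", text)
    return " ".join(letters_only.lower().split())


# Toy stand-in data (hypothetical); the slides use the Kaggle IMDb reviews.
train_reviews = [
    "<br />A wonderful, moving film!",
    "Terrible plot and awful acting...",
    "Great cast; wonderful story.",
    "Awful. Boring and terrible.",
]
train_labels = [1, 0, 1, 0]  # 1 = positive, 0 = negative
test_reviews = ["Wonderful acting!", "Boring, awful film."]

# Step 2: bag-of-words counts; stopwords removed via the built-in English list.
vectorizer = CountVectorizer(stop_words="english", max_features=5000)
X_train = vectorizer.fit_transform([clean_review(r) for r in train_reviews])
X_test = vectorizer.transform([clean_review(r) for r in test_reviews])

# Step 3: fit the random forest and predict labels for the test reviews.
forest = RandomForestClassifier(n_estimators=100, random_state=0)
forest.fit(X_train, train_labels)
predictions = forest.predict(X_test)
print(predictions)
```

With the real dataset, the reviews would be read into a pandas data frame first and the predictions written to a submission file for Kaggle.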

Sentiment analysis experiments using Python libraries and TensorFlow

word2vec with random forest

- Step 1: download the IMDb movie review dataset from Kaggle.com and clean it
  - import the data into a pandas data frame
  - use BeautifulSoup to remove HTML tags
  - use a regular expression to remove non-letters
  - convert to lowercase
- Step 2: create word2vec representations of the individual words (gensim word2vec)
- Step 3: average all word vectors in a review to form one single vector for the review
- Step 4: fit a random forest model on the training set, run predictions and submit them to Kaggle.com for the test set accuracy

Accuracy: 83.3 %
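The averaging step (Step 3) is simple enough to show in isolation. In this sketch a plain dictionary of random vectors stands in for the trained gensim word2vec model, and the function name and the zero-vector fallback for reviews without known words are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
dim = 300

# Hypothetical stand-in for a trained word2vec model: word -> vector.
word_vectors = {w: rng.normal(size=dim)
                for w in ["great", "movie", "boring", "plot"]}


def review_vector(tokens, word_vectors, dim):
    """Average the vectors of all known words; zeros if none are known."""
    known = [word_vectors[t] for t in tokens if t in word_vectors]
    if not known:
        return np.zeros(dim)
    return np.mean(known, axis=0)


vec = review_vector(["a", "great", "movie"], word_vectors, dim)
empty = review_vector(["unknown", "words"], word_vectors, dim)
print(vec.shape)
```

Every review ends up as one fixed-length vector, which is what lets a random forest or a feed-forward network take reviews of any length as input. Word order is lost in the process.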

Sentiment analysis experiments using Python libraries and TensorFlow

word2vec with deep learning

- Step 1: download the IMDb movie review dataset from Kaggle.com and clean it
  - import the data into a pandas data frame
  - use BeautifulSoup to remove HTML tags
  - use a regular expression to remove non-letters
  - convert to lowercase
- Step 2: create word2vec representations of the individual words (gensim word2vec)
- Step 3: average all word vectors in a review to form one single vector for the review
- Step 4: fit a feed-forward deep learning model on the training set, run predictions and submit them to Kaggle.com for the test set accuracy

Accuracy: 87.0 %

A lot of hyperparameters and other decisions

- Remove stopwords? (yes for word2vec, no for sentiment analysis)
- Remove punctuation? (yes)
- Dimension of word vectors (300)
- word2vec window size (10)
- Downsampling for frequent words (1e-3)
- Minimum word count for word2vec (40)
- Deep learning (DL) number of layers (3)
- DL width of the layers (300-150-50-2)
- DL activation functions (ReLU)
- DL use dropout (tried, did not help -> no)
- DL initialization (random uniform between 0 and 1)
- DL optimizer (Adam)
- DL Adam optimizer parameters (learning rate + 3 others)
- DL number of training steps
- DL regularization method and its parameters (none)
- DL loss function (cross entropy)
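The network shape implied by these choices can be sketched as a NumPy forward pass. This is one reading of the list, not the TensorFlow code used in the experiments: the widths 300-150-50-2 are taken as input, two hidden layers and output, the weights are drawn from a small symmetric uniform range (an assumption made here to keep the toy numbers well behaved, not the initialization listed above), and training with Adam is left out.

```python
import numpy as np

rng = np.random.default_rng(0)
layer_sizes = [300, 150, 50, 2]  # input -> hidden -> hidden -> output (assumed reading)

# Small symmetric uniform initialisation (an assumption for this sketch).
weights = [rng.uniform(-0.05, 0.05, size=(n_in, n_out))
           for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]
biases = [np.zeros(n_out) for n_out in layer_sizes[1:]]


def forward(x):
    """x: (batch, 300) averaged word vectors -> (batch, 2) class probabilities."""
    a = x
    for W, b in zip(weights[:-1], biases[:-1]):
        a = np.maximum(0.0, a @ W + b)  # ReLU hidden layers
    logits = a @ weights[-1] + biases[-1]
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)  # softmax


def cross_entropy(probs, labels):
    """Mean cross-entropy loss for integer class labels."""
    return -np.mean(np.log(probs[np.arange(len(labels)), labels]))


batch = rng.normal(size=(4, 300))  # four random stand-in review vectors
labels = np.array([0, 1, 1, 0])
probs = forward(batch)
loss = cross_entropy(probs, labels)
print(probs.shape, loss)
```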

Homework

Consider the CNN-based single-channel version of the model architecture presented in [Kim 2014]. Consider a scenario where the pre-trained static word vectors have 300 dimensions, and three different filter sizes are used that span 3, 4 and 5 words. Each filter size produces 100 feature maps. The feature maps are calculated by using formula (2) of the paper:

$$c_i = f(\mathbf{w} \cdot \mathbf{x}_{i:i+h-1} + b)$$

meaning that the filter weights are multiplied with the input vectors of a window of h words, a bias is added, and the result is passed through a nonlinearity such as tanh. Then the feature maps are max-pooled, and these results are connected to a final two-dimensional output layer in a fully connected manner.

Calculate the number of trainable parameters (weights and biases) in this model.