Sentence Parsing 3 Dynamic Programming

Acknowledgement § Lecture based on § Jurafsky and Martin Ch. 13 (2nd Edition) § J & M Lecture Notes Jan 2009 Speech and Language Processing - Jurafsky and Martin 2

Avoiding Repeated Work § Dynamic Programming § CKY Parsing § Earley Algorithm § Chart Parsing

Dynamic Programming § DP search methods fill tables with partial results and thereby § Avoid repeating work that has already been done § Solve exponential problems in polynomial time (well, not really: there can still be exponentially many parses, but the table that stores them has only polynomially many cells) § Efficiently store ambiguous structures with shared sub-parts. § We'll cover two approaches that roughly correspond to bottom-up and top-down parsing. § CKY (after its inventors Cocke, Kasami and Younger): bottom-up § Earley (often referred to as chart parsing, because it uses a data structure called a chart): top-down

CKY Parsing § First we'll limit our grammar to epsilon-free, binary rules (more later) § Key intuition: consider the rule A → B C § If there is an A somewhere in the input, then there must be a B followed by a C in the input. § If the A spans from i to j in the input, then there must be some k such that i < k < j § i.e., the B spans i to k and the C spans k to j: the B splits from the C somewhere. § This intuition plays a role in both the CKY and Earley methods.

Problem § What if your grammar isn't binary? § As in the case of the TreeBank grammar? § Convert it to binary: any CFG can be rewritten into Chomsky Normal Form (CNF) automatically. § What does this mean? § The resulting grammar accepts (and rejects) the same set of strings as the original grammar. § But the resulting derivations (trees) are different.

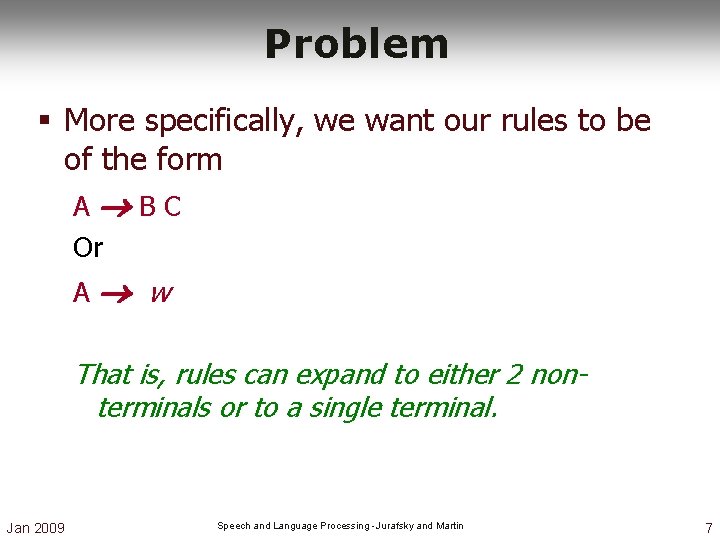

Problem § More specifically, we want our rules to be of the form A → B C or A → w. That is, rules can expand either to two non-terminals or to a single terminal.
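A quick way to test whether a grammar already has this form, as a Python sketch: rules are assumed to be (LHS, RHS-tuple) pairs, and any symbol outside the given non-terminal set is assumed to be a terminal. The function name `is_cnf` and the rule encoding are illustrative, not from the lecture.

```python
from typing import Iterable, Set, Tuple

Rule = Tuple[str, Tuple[str, ...]]  # (LHS, RHS symbols)

def is_cnf(rules: Iterable[Rule], nonterminals: Set[str]) -> bool:
    """True if every rule is A -> B C (two non-terminals) or A -> w (one terminal).

    Assumption: any symbol not in `nonterminals` is a terminal.
    """
    for _lhs, rhs in rules:
        if len(rhs) == 2 and all(sym in nonterminals for sym in rhs):
            continue  # A -> B C
        if len(rhs) == 1 and rhs[0] not in nonterminals:
            continue  # A -> w
        return False  # unit production, epsilon rule, long rule or mixed RHS
    return True

print(is_cnf([("S", ("NP", "VP")), ("Det", ("the",))], {"S", "NP", "VP", "Det"}))  # True
print(is_cnf([("S", ("VP",))], {"S", "VP"}))  # False: a unit production
```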

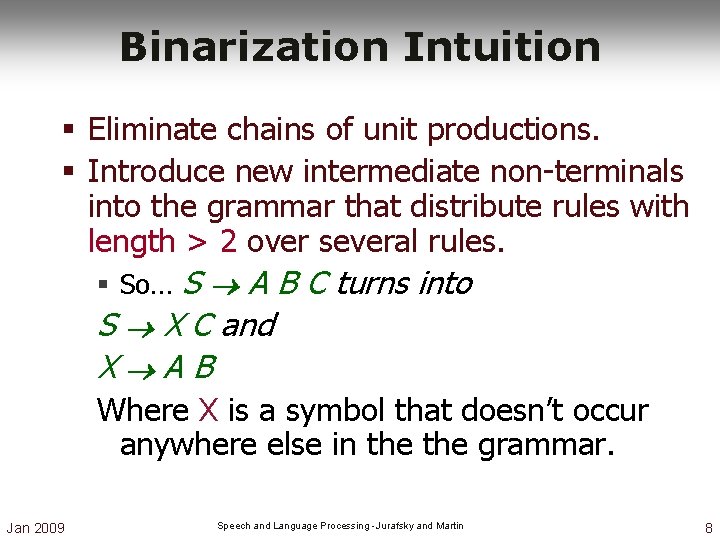

Binarization Intuition § Eliminate chains of unit productions. § Introduce new intermediate non-terminals into the grammar that distribute rules with length > 2 over several rules. § So… S → A B C turns into S → X C and X → A B, where X is a symbol that doesn't occur anywhere else in the grammar.
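The second bullet (splitting long rules) can be sketched in Python as follows. This is a minimal sketch under stated assumptions: rules are (LHS, RHS-tuple) pairs, and the fresh names `X1`, `X2`, ... (hypothetical) do not already occur in the grammar. It covers only the long-rule step, not the elimination of unit productions.

```python
from typing import List, Tuple

Rule = Tuple[str, Tuple[str, ...]]  # (LHS, RHS symbols)

def binarize(rules: List[Rule]) -> List[Rule]:
    """Replace each rule with more than two RHS symbols by a chain of binary rules.

    S -> A B C becomes X1 -> A B and S -> X1 C, as on the slide.
    Assumes the fresh names X1, X2, ... do not already occur in the grammar.
    Unit productions are not eliminated here.
    """
    result: List[Rule] = []
    fresh = 0
    for lhs, rhs in rules:
        if len(rhs) <= 2:
            result.append((lhs, rhs))       # already short enough
            continue
        left = rhs[0]
        for symbol in rhs[1:-1]:            # fold the RHS from the left
            fresh += 1
            new_symbol = f"X{fresh}"
            result.append((new_symbol, (left, symbol)))
            left = new_symbol
        result.append((lhs, (left, rhs[-1])))
    return result

print(binarize([("S", ("A", "B", "C"))]))
# [('X1', ('A', 'B')), ('S', ('X1', 'C'))]
```

Folding from the left reproduces the slide's S → X C, X → A B; folding from the right would accept the same strings, only the trees would differ.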

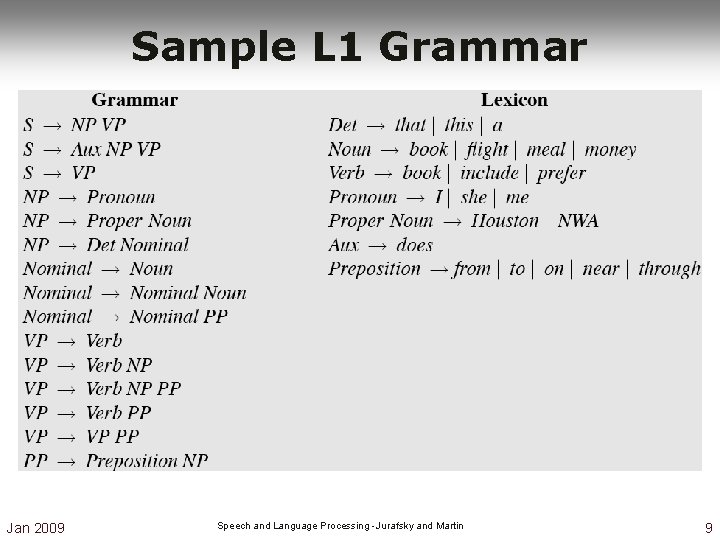

Sample L1 Grammar

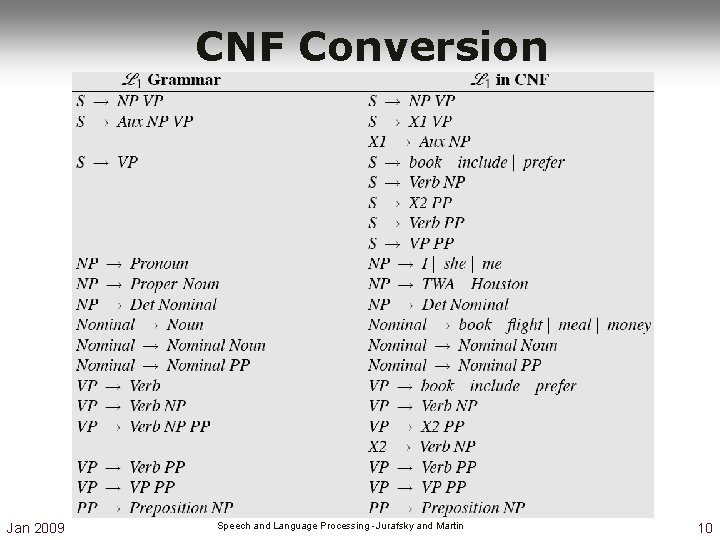

CNF Conversion

CKY § Build a table so that an A spanning from i to j in the input is placed in cell [i, j] in the table. § So a non-terminal spanning an entire string will sit in cell [0, n] § Hopefully an S § If we build the table bottom-up, we'll know that the parts of the A must go from i to k and from k to j, for some k.

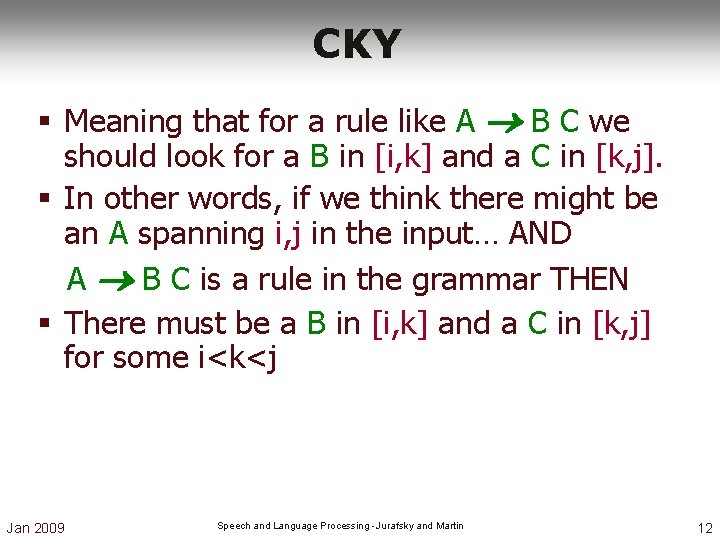

CKY § Meaning that for a rule like A → B C we should look for a B in [i, k] and a C in [k, j]. § In other words, if we think there might be an A spanning i, j in the input… AND A → B C is a rule in the grammar THEN § There must be a B in [i, k] and a C in [k, j] for some i < k < j

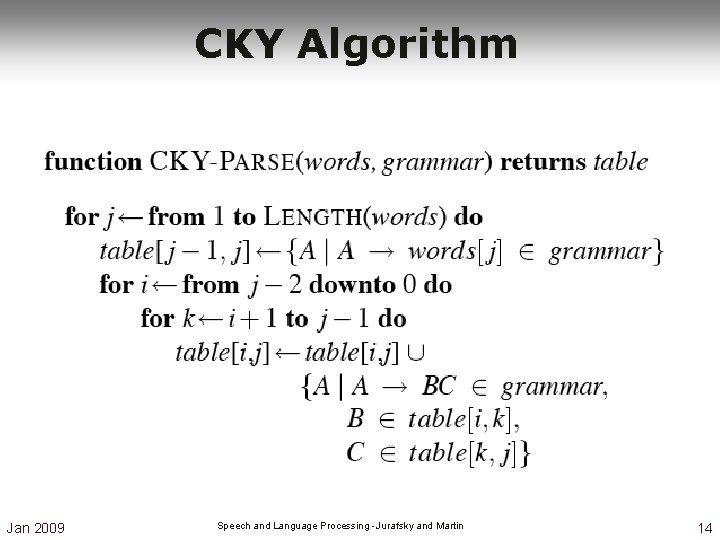

CKY § So, to fill the table, loop over the cell [i, j] values in some systematic way § What constraint should we put on that systematic search? § For each cell, loop over the appropriate k values to search for things to add.

CKY Algorithm

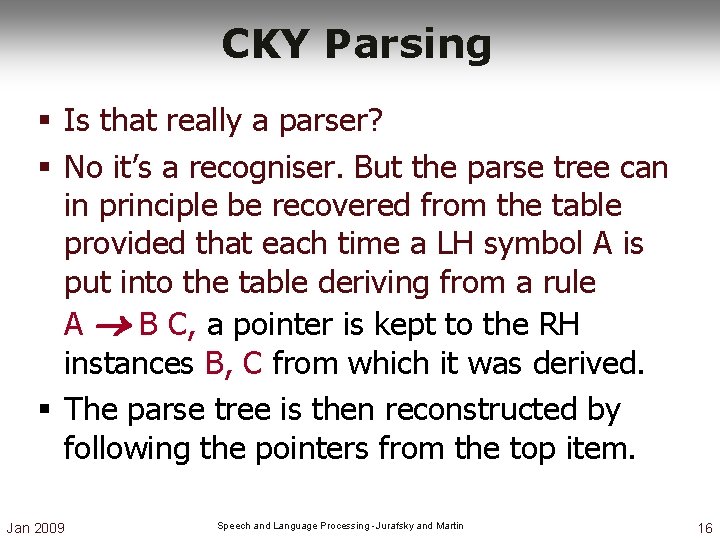
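A minimal Python sketch of the CKY recogniser, assuming a grammar already in CNF and split into a lexicon (word → set of A with A → w) plus a list of binary rules (A, B, C) for A → B C. The toy grammar and the names `cky_recognize`, `LEXICAL` and `BINARY` are hypothetical. The loops fill the table a column at a time, left to right, and each column bottom to top.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical toy CNF grammar (not from the slides).
LEXICAL: Dict[str, Set[str]] = {        # A -> w rules, indexed by word
    "the": {"Det"}, "a": {"Det"},
    "dog": {"N"}, "cat": {"N"}, "saw": {"V"},
}
BINARY: List[Tuple[str, str, str]] = [  # (A, B, C) means A -> B C
    ("S", "NP", "VP"), ("VP", "V", "NP"), ("NP", "Det", "N"),
]

def cky_recognize(words, lexical, binary, start="S"):
    """Return (accepted, table); table[i][j] is the set of non-terminals
    spanning fenceposts i..j of the input."""
    n = len(words)
    table = [[set() for _ in range(n + 1)] for _ in range(n + 1)]
    for j in range(1, n + 1):                    # columns, left to right
        table[j - 1][j] = set(lexical.get(words[j - 1], ()))
        for i in range(j - 2, -1, -1):           # rows, bottom to top
            for k in range(i + 1, j):            # every split point i < k < j
                for a, b, c in binary:
                    if b in table[i][k] and c in table[k][j]:
                        table[i][j].add(a)
    return start in table[0][n], table

accepted, table = cky_recognize("the dog saw a cat".split(), LEXICAL, BINARY)
print(accepted, table[0][5])  # True {'S'}
```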

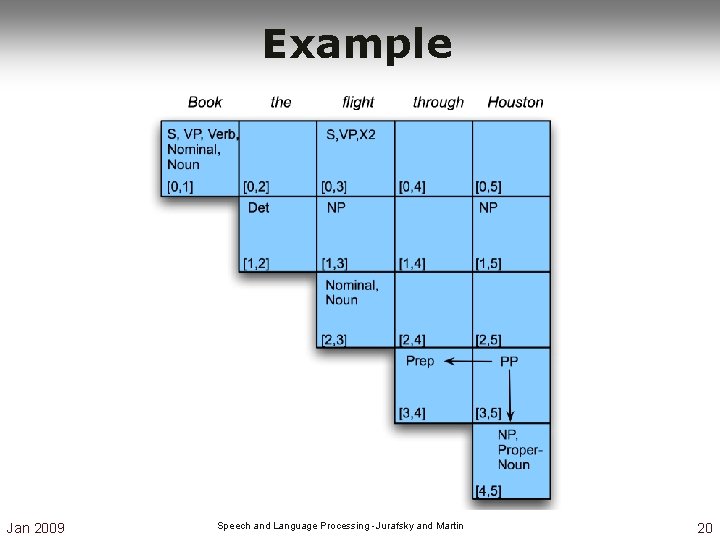

CKY Parsing § Is that really a parser? § No, it's a recogniser. But the parse tree can in principle be recovered from the table, provided that each time a left-hand-side symbol A is entered in the table via a rule A → B C, a pointer is kept to the right-hand-side instances of B and C from which it was derived. § The parse tree is then reconstructed by following the pointers down from the top item (an S in cell [0, n]).
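One way to follow that suggestion, sketched in Python under the same CNF assumptions as a plain CKY recogniser: each cell maps a non-terminal to a backpointer, either `None` for a word or `(k, B, C)` for A → B C. For simplicity it keeps only the first derivation found for each (A, i, j), so it returns a single tree; storing every backpointer would preserve all parses. The names and the toy grammar are hypothetical.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical toy CNF grammar (not from the slides).
LEXICAL: Dict[str, Set[str]] = {
    "the": {"Det"}, "a": {"Det"}, "dog": {"N"}, "cat": {"N"}, "saw": {"V"},
}
BINARY: List[Tuple[str, str, str]] = [
    ("S", "NP", "VP"), ("VP", "V", "NP"), ("NP", "Det", "N"),
]

def cky_parse(words, lexical, binary, start="S"):
    n = len(words)
    # back[i][j][A] is None if A -> words[i] (a lexical entry),
    # or (k, B, C) if A was built from B in [i, k] and C in [k, j].
    back = [[{} for _ in range(n + 1)] for _ in range(n + 1)]
    for j in range(1, n + 1):
        for a in lexical.get(words[j - 1], ()):
            back[j - 1][j][a] = None
        for i in range(j - 2, -1, -1):
            for k in range(i + 1, j):
                for a, b, c in binary:
                    if b in back[i][k] and c in back[k][j] and a not in back[i][j]:
                        back[i][j][a] = (k, b, c)   # first derivation wins

    def build(a, i, j):
        """Follow backpointers from A in [i, j] down to the words."""
        pointer = back[i][j][a]
        if pointer is None:
            return (a, words[i])
        k, b, c = pointer
        return (a, build(b, i, k), build(c, k, j))

    return build(start, 0, n) if start in back[0][n] else None

print(cky_parse("the dog saw a cat".split(), LEXICAL, BINARY))
# ('S', ('NP', ('Det', 'the'), ('N', 'dog')), ('VP', ('V', 'saw'), ('NP', ('Det', 'a'), ('N', 'cat'))))
```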

Note § We arranged the loops to fill the table a column at a time, from left to right, bottom to top. § This assures us that whenever we're filling a cell, the parts needed to fill it are already in the table (to the left and below). § It's somewhat natural in that it processes the input left to right, one word at a time. § This is known as online processing. § Other ways of filling the table are possible.
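The ordering claim can be checked mechanically. The hypothetical helpers here list cells in the column-wise, bottom-to-top order and verify that both parts [i, k] and [k, j] needed for a cell [i, j] have been visited before it; filling the top row first fails the same check.

```python
from typing import List, Tuple

Cell = Tuple[int, int]

def cky_fill_order(n: int) -> List[Cell]:
    """Cells [i, j] column by column (j = 1..n), each column bottom to top (i = j-1..0)."""
    return [(i, j) for j in range(1, n + 1) for i in range(j - 1, -1, -1)]

def all_parts_ready(order: List[Cell]) -> bool:
    """Check that [i, k] and [k, j] are filled before [i, j] for every split k."""
    filled = set()
    for i, j in order:
        if any((i, k) not in filled or (k, j) not in filled for k in range(i + 1, j)):
            return False
        filled.add((i, j))
    return True

print(cky_fill_order(3))                   # [(0, 1), (1, 2), (0, 2), (2, 3), (1, 3), (0, 3)]
print(all_parts_ready(cky_fill_order(6)))  # True

# Filling row 0 first (top row, left to right) breaks the guarantee.
top_rows_first = [(i, j) for i in range(6) for j in range(i + 1, 7)]
print(all_parts_ready(top_rows_first))     # False
```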

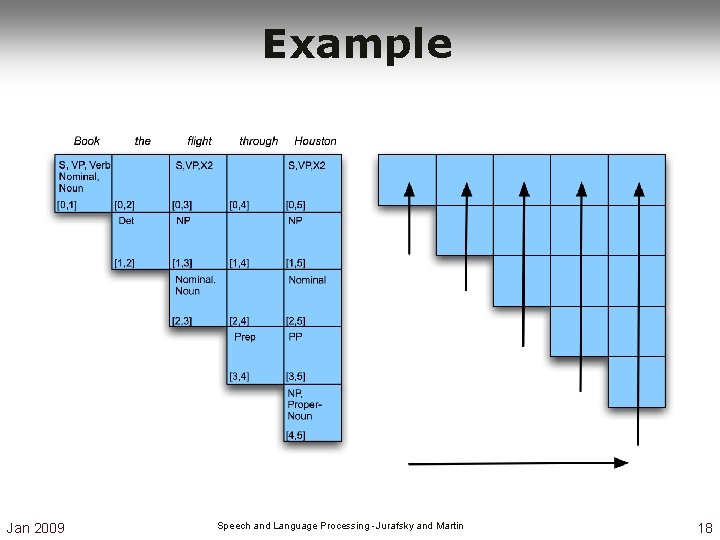

Example

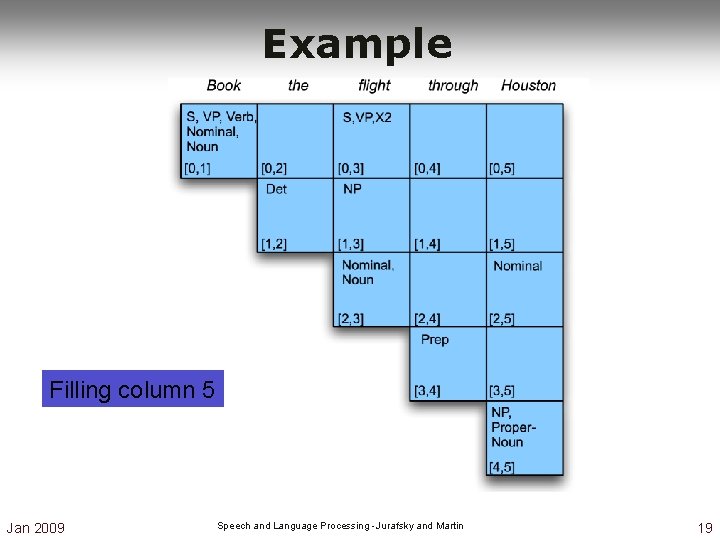

Example: Filling column 5

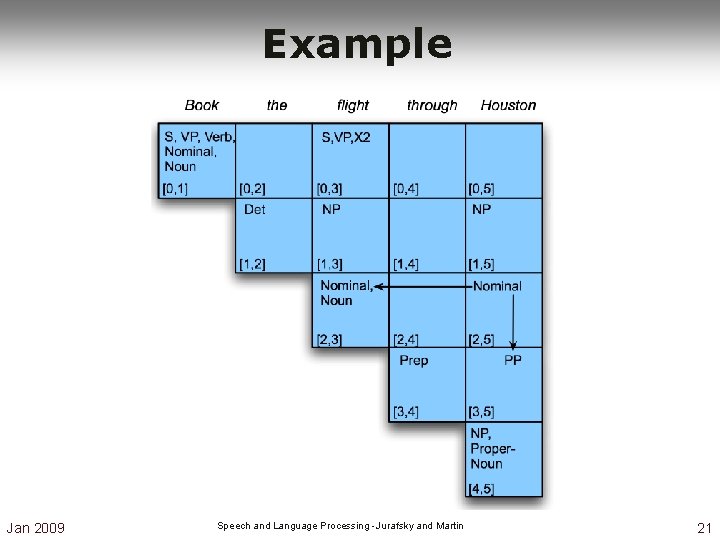

Example

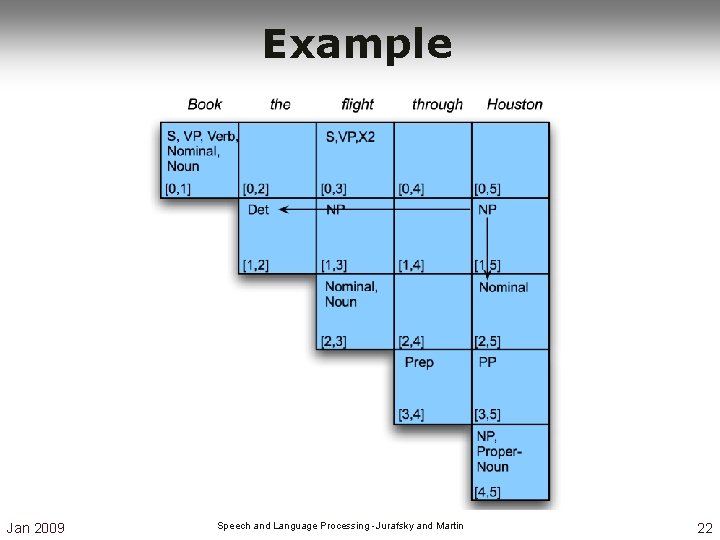

Example

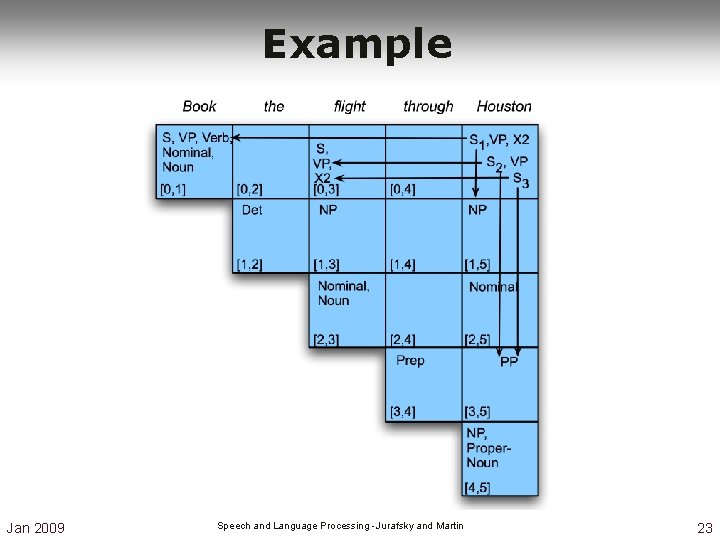

Example

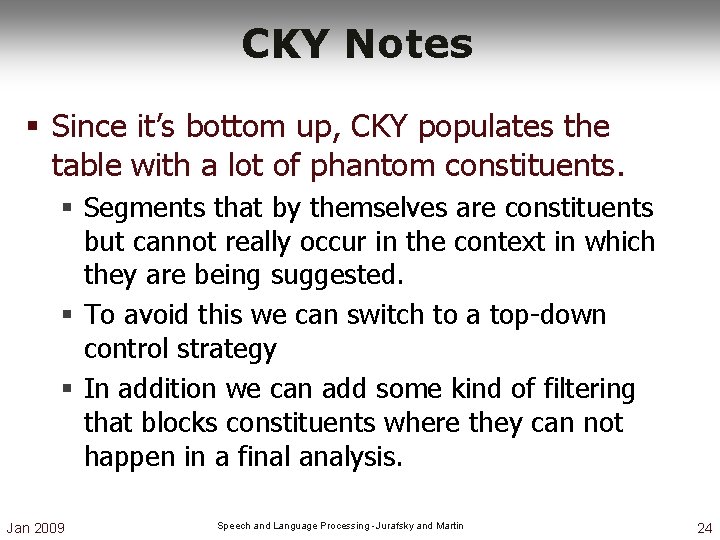

CKY Notes § Since it's bottom-up, CKY populates the table with a lot of phantom constituents. § Segments that by themselves are constituents but cannot really occur in the context in which they are being suggested. § To avoid this we can switch to a top-down control strategy § In addition, we can add some kind of filtering that blocks constituents where they cannot occur in a final analysis.

Earley Parsing § Allows arbitrary CFGs (not just binary ones). § This requires dotted rules § Some top-down control § Uses a prediction operation § Parallel top-down search § Fills a table in a single sweep over the input § The table has length N+1, where N is the number of words § Table entries consist of dotted rules

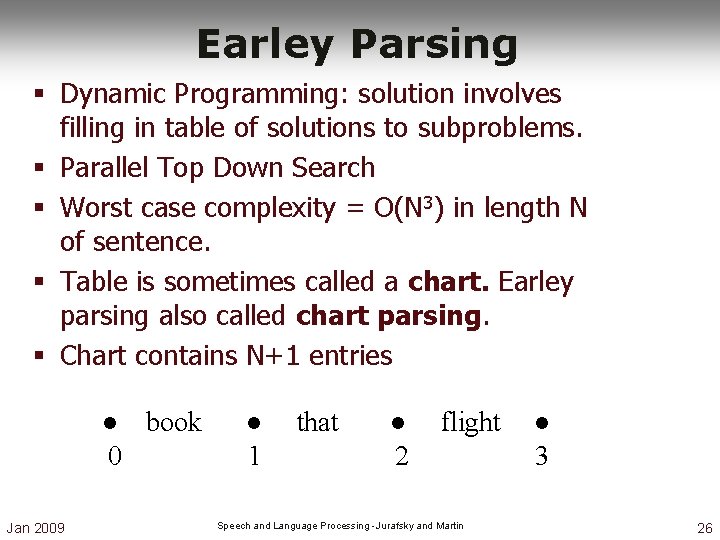

Earley Parsing § Dynamic Programming: the solution involves filling in a table of solutions to subproblems. § Parallel Top Down Search § Worst case complexity = O(N^3) in the length N of the sentence. § The table is sometimes called a chart, so Earley parsing is also called chart parsing. § The chart contains N+1 entries, one for each position between words: 0 book 1 that 2 flight 3

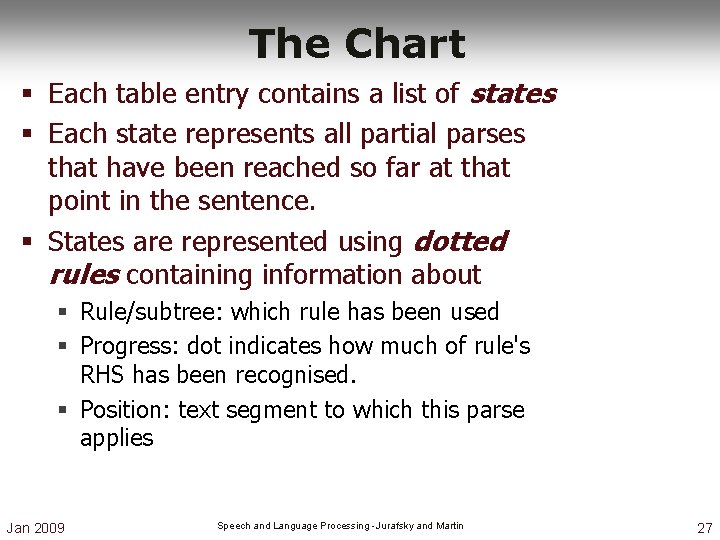

The Chart § Each table entry contains a list of states § Together, the states in an entry represent all partial parses that have been reached so far at that point in the sentence. § States are represented using dotted rules containing information about § Rule/subtree: which rule has been used § Progress: the dot indicates how much of the rule's RHS has been recognised. § Position: the text segment to which this parse applies

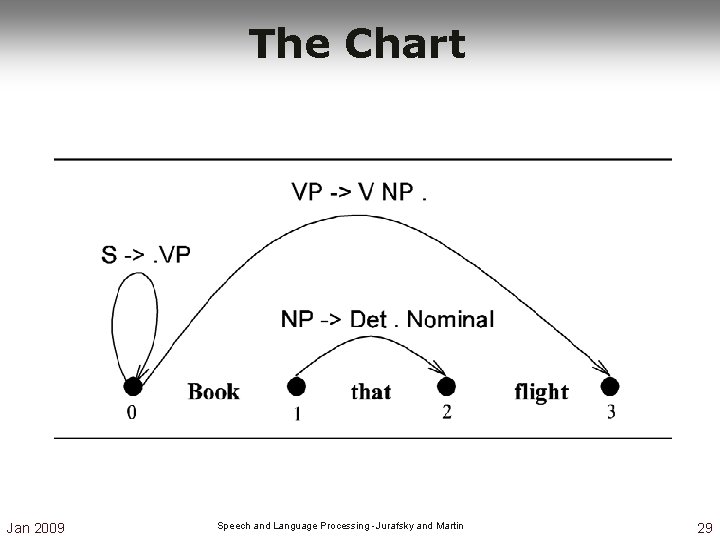

Examples of Dotted Rules § Initial S rule (incomplete): S -> • VP, [0, 0] § Partially recognised NP (incomplete): NP -> Det • Nominal, [1, 2] § Fully recognised VP (complete): VP -> V NP •, [0, 3] § These states can also be represented graphically on the chart
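A dotted rule plus its span is a small record. The Python dataclass here is a sketch (the class and field names are our own choice, not J&M's) that stores the rule, the dot position and the span, and prints states in dotted notation.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass(frozen=True)
class State:
    lhs: str                 # rule: left-hand side
    rhs: Tuple[str, ...]     # rule: right-hand side
    dot: int                 # progress: number of RHS symbols recognised so far
    start: int               # position: span [start, end] in the input
    end: int

    def is_complete(self) -> bool:
        return self.dot == len(self.rhs)

    def next_symbol(self) -> Optional[str]:
        """Category just after the dot, or None if the state is complete."""
        return None if self.is_complete() else self.rhs[self.dot]

    def __str__(self) -> str:
        symbols = list(self.rhs)
        symbols.insert(self.dot, "•")
        return f"{self.lhs} -> {' '.join(symbols)}, [{self.start}, {self.end}]"

print(State("S", ("VP",), 0, 0, 0))              # S -> • VP, [0, 0]
print(State("NP", ("Det", "Nominal"), 1, 1, 2))  # NP -> Det • Nominal, [1, 2]
print(State("VP", ("V", "NP"), 2, 0, 3))         # VP -> V NP •, [0, 3]
```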

The Chart

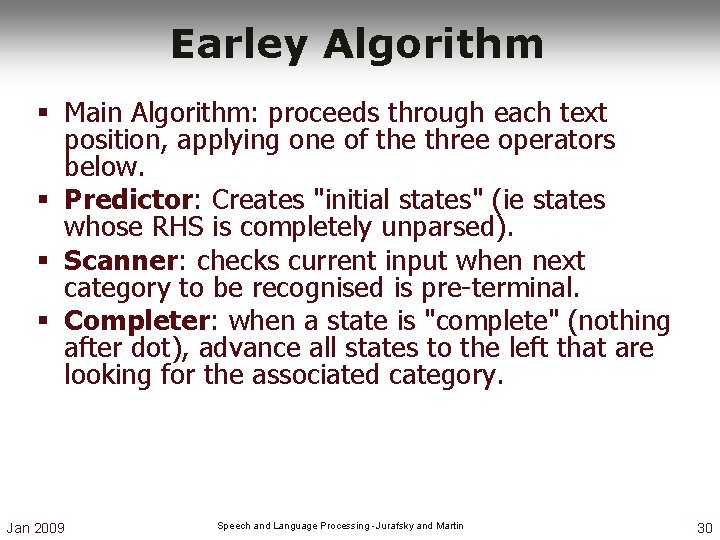

Earley Algorithm § Main algorithm: proceeds through each text position, applying to each state in that position's chart entry whichever of the three operators below is appropriate. § Predictor: creates "initial states" (i.e., states whose RHS is completely unparsed). § Scanner: checks the current input word when the next category to be recognised is a pre-terminal (part of speech). § Completer: when a state is "complete" (nothing after the dot), advances all states in the chart entry where that constituent began that are looking for its category.

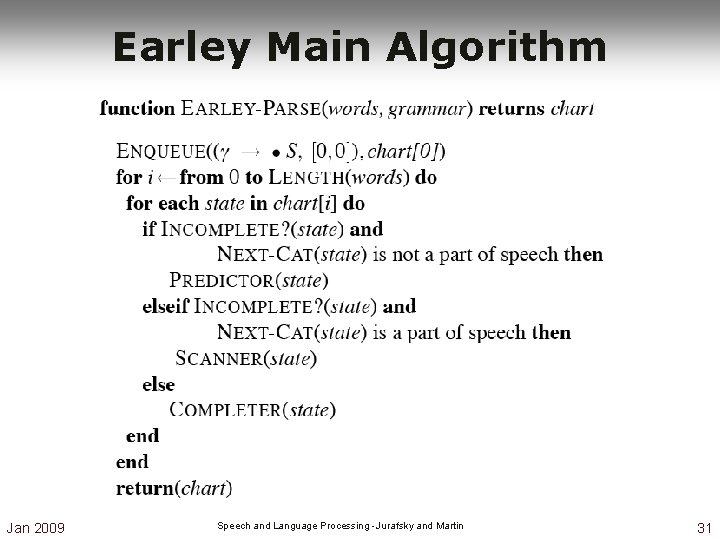

Earley Main Algorithm

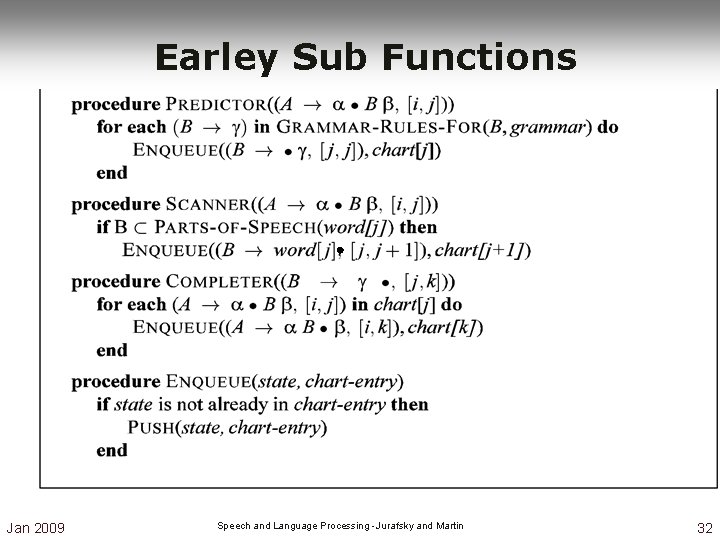

Earley Sub Functions

Earley Algorithm – Sub Functions
Predictor(A -> alpha • B beta, [i, j]): for each B -> gamma in Grammar(B): enqueue((B -> • gamma, [j, j]), chart[j])
Scanner(A -> alpha • B beta, [i, j]): if B in PartOfSpeech(word[j]) then enqueue((B -> word[j] •, [j, j+1]), chart[j+1])
Completer(B -> gamma •, [j, k]): for each (A -> alpha • B beta, [i, j]) in chart[j]: enqueue((A -> alpha B • beta, [i, k]), chart[k])
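The three sub-functions combine into a recogniser. This is a compact Python sketch, assuming an epsilon-free grammar given as a dict from non-terminal to a list of RHS tuples, plus a lexicon from word to a set of part-of-speech tags; a dummy start state GAMMA -> • S (a hypothetical name) seeds chart[0]. States are (lhs, rhs, dot, start) tuples stored in chart[end]. The toy grammar is an assumption loosely modelled on the lecture's grammar, not a transcription of it; categories missing from the lexicon (Aux, Proper-Noun here) simply predict nothing.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical toy grammar, loosely modelled on the lecture's grammar slide.
GRAMMAR: Dict[str, List[Tuple[str, ...]]] = {
    "S": [("NP", "VP"), ("Aux", "NP", "VP"), ("VP",)],
    "NP": [("Det", "Nominal"), ("Proper-Noun",)],
    "Nominal": [("Noun",), ("Noun", "Nominal")],
    "VP": [("Verb",), ("Verb", "NP")],
}
LEXICON: Dict[str, Set[str]] = {"book": {"Verb", "Noun"}, "that": {"Det"}, "flight": {"Noun"}}

State = Tuple[str, Tuple[str, ...], int, int]  # (lhs, rhs, dot, start); end = chart index

def earley_recognize(words, grammar, lexicon, start="S"):
    pos_tags = {tag for tags in lexicon.values() for tag in tags}
    n = len(words)
    chart: List[List[State]] = [[] for _ in range(n + 1)]

    def enqueue(state: State, j: int) -> None:
        if state not in chart[j]:          # never add a duplicate state
            chart[j].append(state)

    enqueue(("GAMMA", (start,), 0, 0), 0)  # dummy start state: GAMMA -> • S, [0, 0]
    for j in range(n + 1):
        idx = 0
        while idx < len(chart[j]):         # chart[j] grows while we process it
            lhs, rhs, dot, i = chart[j][idx]
            idx += 1
            if dot < len(rhs):
                nxt = rhs[dot]
                if nxt in pos_tags:        # Scanner
                    if j < n and nxt in lexicon.get(words[j], ()):
                        enqueue((nxt, (words[j],), 1, j), j + 1)
                else:                      # Predictor
                    for gamma in grammar.get(nxt, []):
                        enqueue((nxt, gamma, 0, j), j)
            else:                          # Completer: this state spans [i, j]
                for a, a_rhs, a_dot, a_start in list(chart[i]):
                    if a_dot < len(a_rhs) and a_rhs[a_dot] == lhs:
                        enqueue((a, a_rhs, a_dot + 1, a_start), j)
    return ("GAMMA", (start,), 1, 0) in chart[n], chart

accepted, chart = earley_recognize("book that flight".split(), GRAMMAR, LEXICON)
print(accepted)  # True
```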

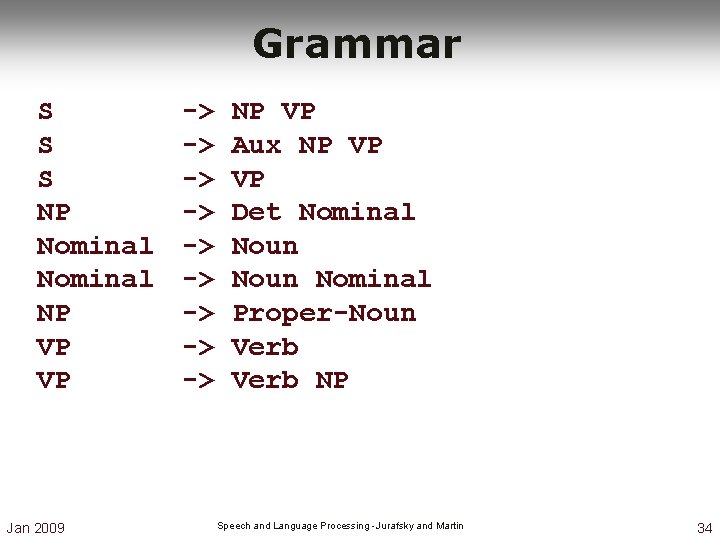

Grammar S S S NP Nominal NP VP VP -> -> -> NP VP Aux NP VP VP Det Nominal Noun Nominal Proper-Noun Verb NP

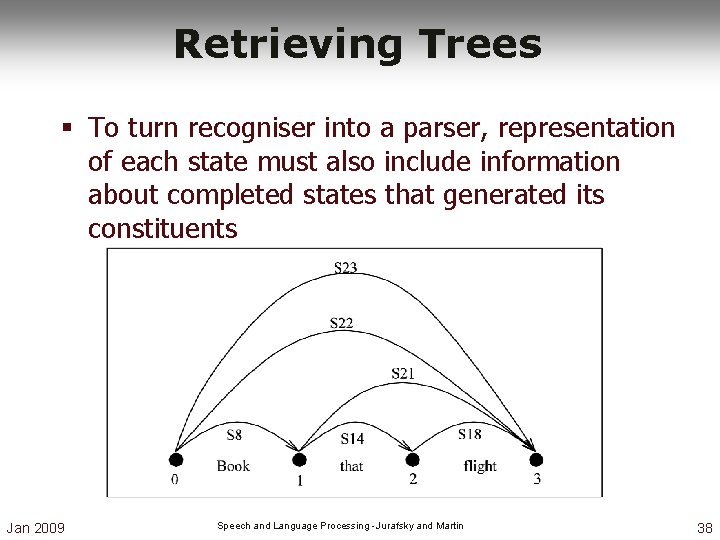

Retrieving Trees § To turn the recogniser into a parser, the representation of each state must also include information about the completed states that generated its constituents.
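A minimal sketch of that extra field in Python, under the same assumptions as a plain Earley recogniser (epsilon-free grammar, dict-based grammar and lexicon, hypothetical dummy start symbol GAMMA). Here the field is kept in a side dictionary that maps each state to the tuple of completed child states that advanced its dot; the Completer extends that tuple each time it advances a waiting state. Only the first derivation of each state is kept, so one tree comes back. All names and the toy grammar are hypothetical.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical toy grammar, loosely modelled on the lecture's grammar slide.
GRAMMAR: Dict[str, List[Tuple[str, ...]]] = {
    "S": [("NP", "VP"), ("Aux", "NP", "VP"), ("VP",)],
    "NP": [("Det", "Nominal"), ("Proper-Noun",)],
    "Nominal": [("Noun",), ("Noun", "Nominal")],
    "VP": [("Verb",), ("Verb", "NP")],
}
LEXICON: Dict[str, Set[str]] = {"book": {"Verb", "Noun"}, "that": {"Det"}, "flight": {"Noun"}}

def earley_parse(words, grammar, lexicon, start="S"):
    """Earley recogniser whose states carry an extra field of completed child states."""
    pos_tags = {tag for tags in lexicon.values() for tag in tags}
    n = len(words)
    chart = [[] for _ in range(n + 1)]  # states are (lhs, rhs, dot, start, end)
    children = {}                       # extra field: state -> tuple of completed child states

    def enqueue(state, kids):
        if state not in children:       # first derivation of a state wins
            children[state] = kids
            chart[state[4]].append(state)

    enqueue(("GAMMA", (start,), 0, 0, 0), ())
    for j in range(n + 1):
        idx = 0
        while idx < len(chart[j]):
            state = chart[j][idx]
            idx += 1
            lhs, rhs, dot, i, _ = state
            if dot < len(rhs):
                nxt = rhs[dot]
                if nxt in pos_tags:                         # Scanner
                    if j < n and nxt in lexicon.get(words[j], ()):
                        enqueue((nxt, (words[j],), 1, j, j + 1), ())
                else:                                       # Predictor
                    for gamma in grammar.get(nxt, []):
                        enqueue((nxt, gamma, 0, j, j), ())
            else:                                           # Completer
                for waiting in list(chart[i]):
                    a, a_rhs, a_dot, a_start, _ = waiting
                    if a_dot < len(a_rhs) and a_rhs[a_dot] == lhs:
                        enqueue((a, a_rhs, a_dot + 1, a_start, j),
                                children[waiting] + (state,))

    def tree(state):
        lhs, rhs = state[0], state[1]
        if lhs in pos_tags:             # only the Scanner builds POS states: (POS, word)
            return (lhs, rhs[0])
        return (lhs,) + tuple(tree(kid) for kid in children[state])

    final = ("GAMMA", (start,), 1, 0, n)
    return tree(children[final][0]) if final in children else None

print(earley_parse("book that flight".split(), GRAMMAR, LEXICON))
# ('S', ('VP', ('Verb', 'book'), ('NP', ('Det', 'that'), ('Nominal', ('Noun', 'flight')))))
```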

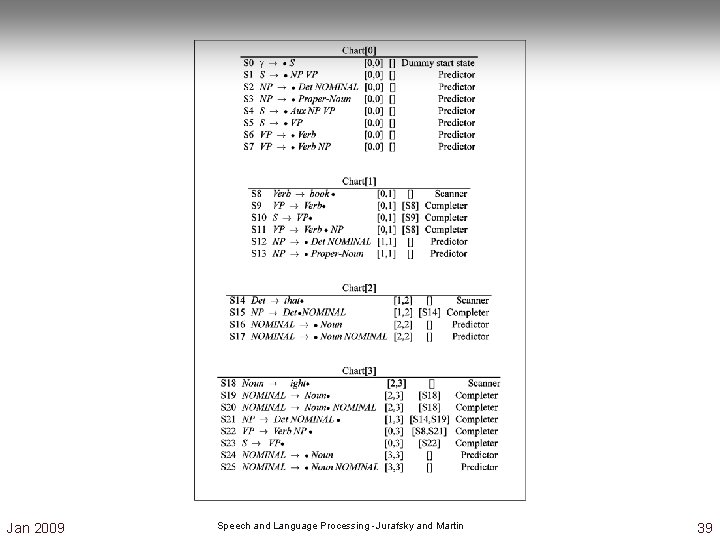

Chart[3] ↑ Extra Field

Back to Ambiguity § Did we solve it?

Ambiguity § No… § Both CKY and Earley will produce multiple S structures for the [0, N] table entry. § They both efficiently store the sub-parts that are shared between multiple parses. § And they avoid re-deriving those sub-parts. § But neither can tell us which parse is right.
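To see why shared storage matters, the hypothetical helper here reuses the CKY table layout but stores, for each non-terminal in each cell, the number of distinct subtrees instead of a set. With the ambiguous CNF grammar S → S S, S → a (chosen purely for illustration), the number of parses grows as the Catalan numbers while the table needs only O(n²) cells: 5 parses for four words and 4862 for ten. Counting all parses is still not choosing the right one.

```python
from collections import defaultdict

def count_parses(words, lexical, binary, start="S"):
    """CKY table where cell [i, j] maps each non-terminal to its number of distinct subtrees."""
    n = len(words)
    count = [[defaultdict(int) for _ in range(n + 1)] for _ in range(n + 1)]
    for j in range(1, n + 1):
        for a in lexical.get(words[j - 1], ()):
            count[j - 1][j][a] += 1
        for i in range(j - 2, -1, -1):
            for k in range(i + 1, j):
                for a, b, c in binary:
                    # Every B-subtree over [i, k] combines with every C-subtree over [k, j].
                    count[i][j][a] += count[i][k][b] * count[k][j][c]
    return count[0][n][start]

LEXICAL = {"a": {"S"}}       # S -> a
BINARY = [("S", "S", "S")]   # S -> S S
for n in (4, 10):
    print(n, count_parses(["a"] * n, LEXICAL, BINARY))  # prints "4 5", then "10 4862"
```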
