Sentence completion: Korinna Grabski, Tobias Scheffer

Korinna Grabski & Tobias Scheffer. Sentence completion. In the 27th Annual International ACM SIGIR Conference (SIGIR 2004), 2004.
Presenter: Suhan Yu

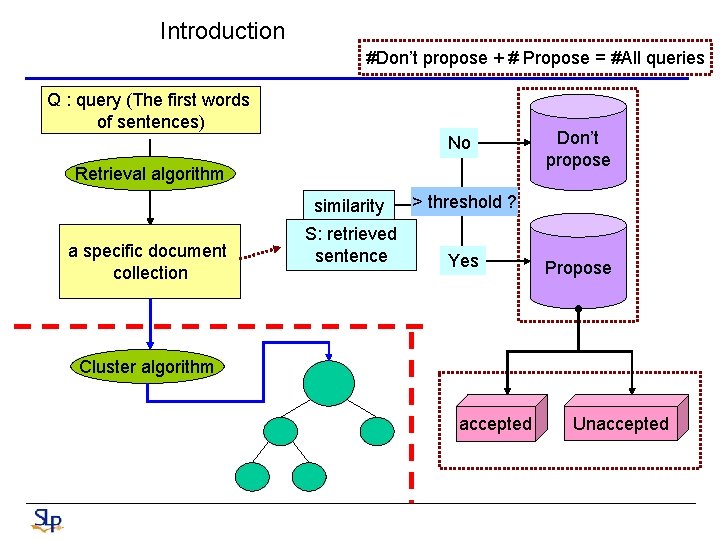

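Introduction
[Figure: sentence completion pipeline]
• Q: the query, i.e. the first words of a sentence.
• A retrieval algorithm (or, alternatively, a cluster algorithm) searches a specific document collection and returns a retrieved sentence S.
• If the similarity between Q and S exceeds a threshold, S is proposed as the completion; otherwise nothing is proposed.
• A proposed completion is either accepted or not accepted by the user.
• #Don’t propose + #Propose = #All queries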
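
The propose/don't-propose decision in the pipeline can be sketched as follows. This is a minimal illustration, not the authors' code: `propose`, `count_decisions` and the retriever signature (a function returning a candidate sentence and its similarity) are hypothetical names, and the toy similarities are made up.

```python
from typing import Callable, Iterable, Optional, Tuple

# Hypothetical retriever: maps a query fragment Q to its best candidate
# sentence S together with the similarity between Q and S.
Retriever = Callable[[str], Tuple[str, float]]


def propose(query: str, retrieve: Retriever, threshold: float) -> Optional[str]:
    """Return S if its similarity exceeds the threshold, else None (don't propose)."""
    sentence, similarity = retrieve(query)
    return sentence if similarity > threshold else None


def count_decisions(queries: Iterable[str], retrieve: Retriever,
                    threshold: float) -> Tuple[int, int]:
    """Return (#Propose, #Don't propose); together they cover all queries."""
    proposed = not_proposed = 0
    for query in queries:
        if propose(query, retrieve, threshold) is None:
            not_proposed += 1
        else:
            proposed += 1
    return proposed, not_proposed


if __name__ == "__main__":
    # Toy retriever with made-up similarities, for illustration only.
    toy = {"your order": ("Your order will be shipped on Monday.", 0.9),
           "thank you": ("Thank you for your email.", 0.4)}

    def retrieve(q: str) -> Tuple[str, float]:
        return toy.get(q.lower(), ("", 0.0))

    print(propose("Your order", retrieve, threshold=0.5))
    print(count_decisions(["Your order", "Thank you", "Hello"], retrieve, 0.5))
```

Every query ends in exactly one of the two branches, which is why the two counts always add up to the number of queries.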

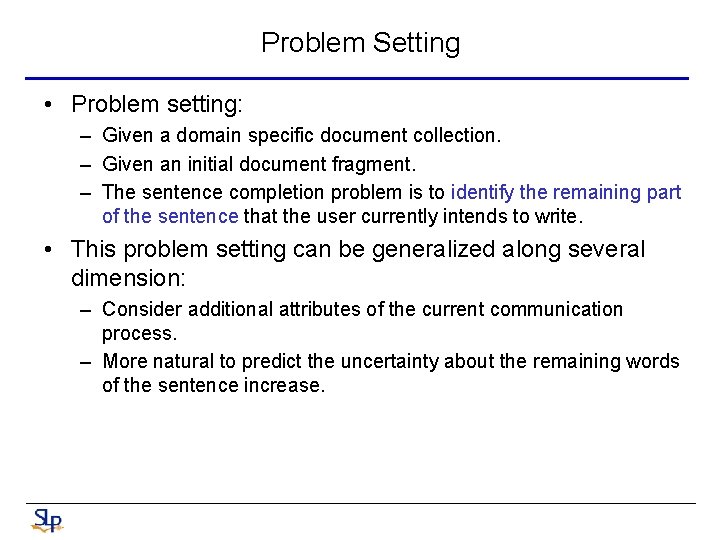

Problem Setting
• Problem setting:
  – Given a domain-specific document collection.
  – Given an initial document fragment.
  – The sentence completion problem is to identify the remaining part of the sentence that the user currently intends to write.
• This problem setting can be generalized along several dimensions:
  – Consider additional attributes of the current communication process.
  – As the uncertainty about the remaining words of the sentence increases, it becomes more natural to predict only some of the succeeding words rather than the whole remainder.

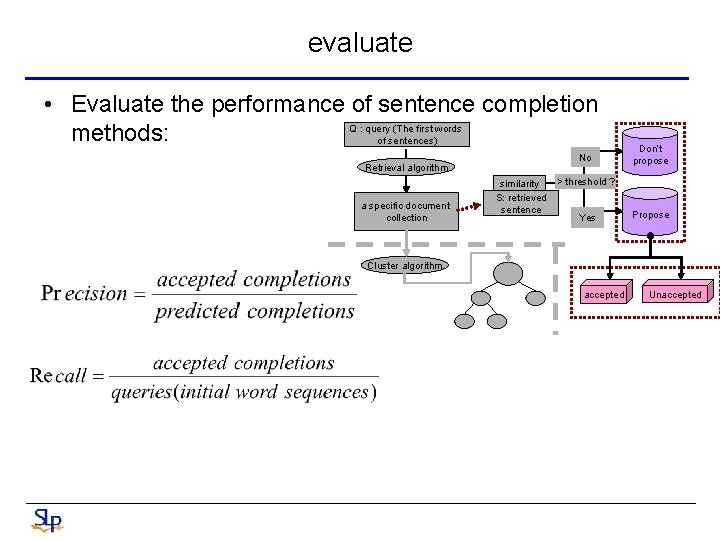

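Evaluation
• Evaluate the performance of sentence completion methods.
[Figure: the pipeline from the introduction. The query Q (the first words of a sentence) is processed by the retrieval or cluster algorithm over a specific document collection; if the similarity of the retrieved sentence S exceeds the threshold, S is proposed, otherwise nothing is proposed; each proposal is either accepted or not accepted.]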
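
The slide's formulas are not recoverable from the text. Assuming the usual definitions in terms of the pipeline outcomes (precision as the fraction of proposals that are accepted, recall as the fraction of all queries that end in an accepted proposal), the two measures could be computed as below. The class name `Outcomes` and the convention of returning 0.0 when nothing was proposed are assumptions of this sketch.

```python
from dataclasses import dataclass


@dataclass
class Outcomes:
    accepted: int       # proposals the user accepted
    unaccepted: int     # proposals the user did not accept
    not_proposed: int   # queries for which nothing was proposed

    @property
    def proposed(self) -> int:
        return self.accepted + self.unaccepted

    @property
    def all_queries(self) -> int:
        # #Don't propose + #Propose = #All queries
        return self.not_proposed + self.proposed

    def precision(self) -> float:
        """Accepted proposals / all proposals (0.0 if nothing was proposed)."""
        return self.accepted / self.proposed if self.proposed else 0.0

    def recall(self) -> float:
        """Accepted proposals / all queries (0.0 if there were no queries)."""
        return self.accepted / self.all_queries if self.all_queries else 0.0


if __name__ == "__main__":
    o = Outcomes(accepted=30, unaccepted=10, not_proposed=60)
    print(o.precision(), o.recall())  # 0.75 0.3
```

Raising the similarity threshold typically trades recall for precision, since fewer but more confident completions are proposed.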

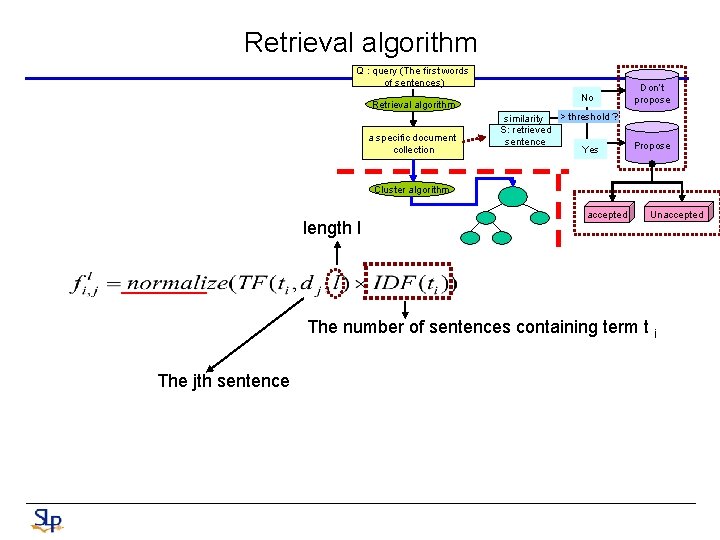

Retrieval algorithm
[Figure: the pipeline from the introduction with the retrieval branch highlighted, annotated with the quantities used by the retrieval algorithm: the length l, the number of sentences containing term t_i, and the jth sentence.]
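
The annotations on the retrieval slide (the number of sentences containing term t_i, the jth sentence) point to a TF-IDF weighting over sentences. A minimal sketch, assuming the standard form idf(t_i) = log(N / n_i) with N sentences, n_i of which contain t_i, and raw term counts as tf; the exact formula on the slide, including how the length l enters, is not recoverable, and the function names are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List


def idf_weights(sentences: List[List[str]]) -> Dict[str, float]:
    """idf(t_i) = log(N / n_i), where n_i = number of sentences containing t_i."""
    n = len(sentences)
    sentence_freq: Counter = Counter()
    for tokens in sentences:
        sentence_freq.update(set(tokens))  # count each term once per sentence
    return {term: math.log(n / nf) for term, nf in sentence_freq.items()}


def tfidf_vector(tokens: List[str], idf: Dict[str, float]) -> Dict[str, float]:
    """Sparse TF-IDF vector of one sentence s_j (raw counts times idf).

    Terms that are unseen in the collection, or occur in every sentence
    (idf = 0), get weight 0 and are left out of the sparse vector.
    """
    tf = Counter(tokens)
    return {term: count * idf[term]
            for term, count in tf.items() if idf.get(term, 0.0) > 0.0}


if __name__ == "__main__":
    collection = [["your", "order", "will", "be", "shipped"],
                  ["your", "email", "was", "received"],
                  ["thank", "you"]]
    idf = idf_weights(collection)
    print(tfidf_vector(["your", "order"], idf))
```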

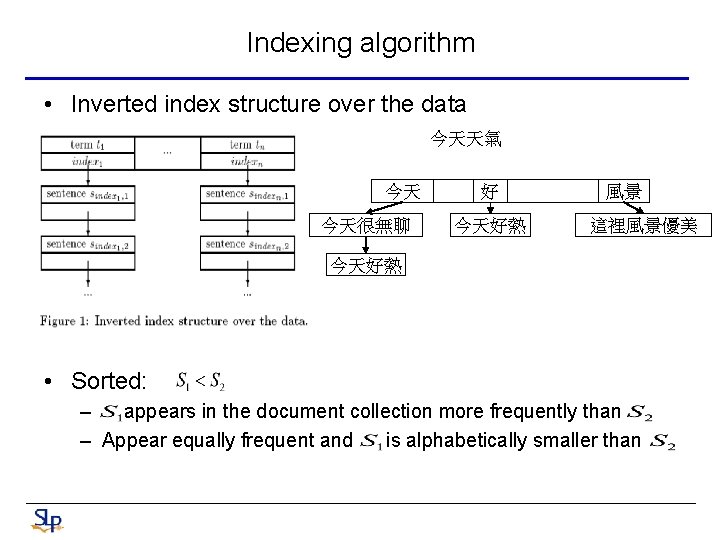

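Indexing algorithm
• Inverted index structure over the data. Example (terms on the left, sentences containing them on the right):
  – 今天 (“today”) → 今天天氣 (“today’s weather”), 今天很無聊 (“today is boring”), 今天好熱 (“today is very hot”)
  – 好 (“very”) → 今天好熱 (“today is very hot”)
  – 風景 (“scenery”) → 這裡風景優美 (“the scenery here is beautiful”)
• Sorted: a term t_i comes before a term t_j if
  – t_i appears in the document collection more frequently than t_j, or
  – both appear equally frequently and t_i is alphabetically smaller than t_j.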
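
A minimal inverted index with this ordering rule might look like this. It assumes whitespace tokenisation of English text (the slide's example uses Chinese terms, which would need a word segmenter) and measures how frequently a term appears by the number of sentences containing it; `build_index` and `term_order` are hypothetical names.

```python
from collections import defaultdict
from typing import Dict, List, Set


def build_index(sentences: List[str]) -> Dict[str, Set[int]]:
    """Inverted index: map each term to the ids of the sentences containing it."""
    index: Dict[str, Set[int]] = defaultdict(set)
    for sentence_id, sentence in enumerate(sentences):
        for term in sentence.lower().split():
            index[term].add(sentence_id)
    return dict(index)


def term_order(index: Dict[str, Set[int]]) -> List[str]:
    """More frequent terms first; equally frequent terms in alphabetical order."""
    return sorted(index, key=lambda term: (-len(index[term]), term))


if __name__ == "__main__":
    # Sentences loosely based on the English glosses of the slide's example.
    sentences = ["the weather today", "today is boring",
                 "today is very hot", "the scenery here is beautiful"]
    index = build_index(sentences)
    print(index["today"])         # {0, 1, 2}
    print(term_order(index)[:3])  # ['is', 'today', 'the']
```

Here "is" and "today" both occur in three sentences, so the alphabetical tie-break places "is" first.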

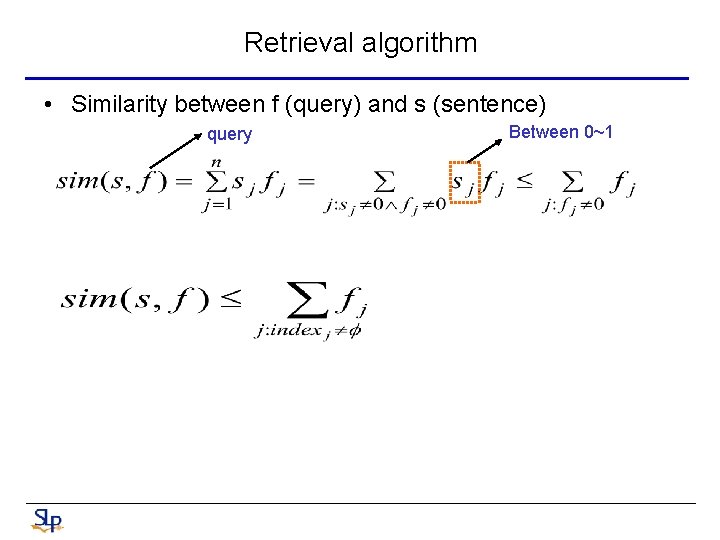

Retrieval algorithm
• Similarity between the query f (the initial fragment) and a sentence s; the value lies between 0 and 1.
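
Cosine similarity is one common measure with the stated range: for vectors with non-negative weights, such as TF-IDF vectors, it always lies between 0 and 1. The sketch below assumes this measure and sparse dictionary vectors; whether the paper uses exactly this formula is not confirmed by the slide text.

```python
import math
from typing import Dict


def cosine(f: Dict[str, float], s: Dict[str, float]) -> float:
    """Cosine similarity of two sparse term vectors (query f, sentence s).

    With non-negative weights the result lies between 0 and 1.
    """
    dot = sum(weight * s.get(term, 0.0) for term, weight in f.items())
    norm_f = math.sqrt(sum(w * w for w in f.values()))
    norm_s = math.sqrt(sum(w * w for w in s.values()))
    if norm_f == 0.0 or norm_s == 0.0:
        return 0.0  # an empty vector is treated as dissimilar to everything
    return dot / (norm_f * norm_s)


if __name__ == "__main__":
    query = {"your": 0.4, "order": 1.1}
    sentence = {"your": 0.4, "order": 1.1, "shipped": 1.1}
    print(round(cosine(query, sentence), 3))
```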

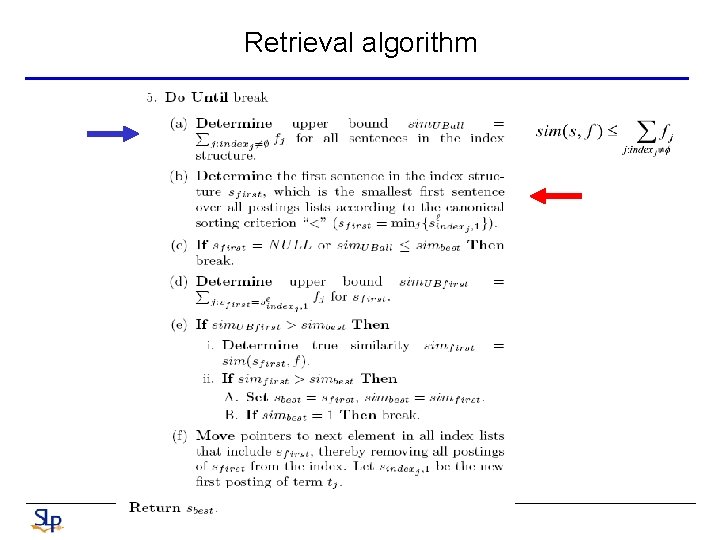

Retrieval algorithm

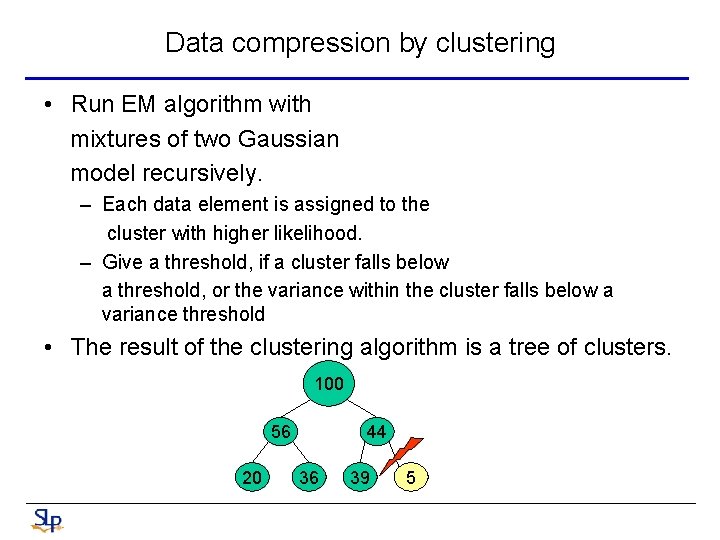

Data compression by clustering
• Run the EM algorithm with a mixture of two Gaussian models recursively.
  – Each data element is assigned to the cluster with the higher likelihood.
  – The recursion stops when the number of elements in a cluster falls below a size threshold, or the variance within the cluster falls below a variance threshold.
• The result of the clustering algorithm is a tree of clusters.
[Figure: tree of clusters labelled with cluster sizes; the root cluster of 100 elements is split into clusters of 56 and 44.]
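
The recursive splitting can be sketched with a hand-written EM fit of two 1-D Gaussians. This is a simplification under stated assumptions: real data elements would be sentence vectors rather than single numbers, the means are initialised at the minimum and maximum value, and `min_size` and `min_var` are hypothetical names for the size and variance thresholds.

```python
import math
from typing import List, Tuple


class Node:
    """A cluster in the tree; leaves have no children."""

    def __init__(self, data: List[float]):
        self.data = data
        self.children: List["Node"] = []


def variance(xs: List[float]) -> float:
    mean = sum(xs) / len(xs)
    return sum((x - mean) ** 2 for x in xs) / len(xs)


def gauss(x: float, mu: float, var: float) -> float:
    """Density of a 1-D normal distribution N(mu, var) at x."""
    return math.exp(-(x - mu) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)


def em_two_gaussians(xs: List[float], iters: int = 50
                     ) -> Tuple[List[float], List[float], List[float]]:
    """Fit a two-component 1-D Gaussian mixture with EM; return (pi, mu, var)."""
    mu = [min(xs), max(xs)]               # assumed initialisation
    v = max(variance(xs), 1e-6)
    var, pi = [v, v], [0.5, 0.5]
    for _ in range(iters):
        # E-step: posterior probability that each point belongs to component 0.
        resp = []
        for x in xs:
            a = pi[0] * gauss(x, mu[0], var[0])
            b = pi[1] * gauss(x, mu[1], var[1])
            resp.append(a / (a + b) if a + b > 0 else 0.5)
        # M-step: re-estimate weight, mean and variance of each component.
        for k, w in enumerate((resp, [1.0 - r for r in resp])):
            nk = sum(w)
            if nk < 1e-9:
                continue                   # collapsed component: keep old values
            pi[k] = nk / len(xs)
            mu[k] = sum(wi * x for wi, x in zip(w, xs)) / nk
            var[k] = max(sum(wi * (x - mu[k]) ** 2 for wi, x in zip(w, xs)) / nk, 1e-6)
    return pi, mu, var


def build_tree(xs: List[float], min_size: int = 5, min_var: float = 0.5) -> Node:
    """Split recursively until a cluster is too small or too homogeneous."""
    node = Node(xs)
    if len(xs) < min_size or variance(xs) < min_var:
        return node
    _, mu, var = em_two_gaussians(xs)
    left, right = [], []
    for x in xs:
        # Hard assignment to the component with the higher likelihood.
        if gauss(x, mu[0], var[0]) >= gauss(x, mu[1], var[1]):
            left.append(x)
        else:
            right.append(x)
    if not left or not right:
        return node                        # EM found no split; stop here
    node.children = [build_tree(left, min_size, min_var),
                     build_tree(right, min_size, min_var)]
    return node


if __name__ == "__main__":
    data = [0.0, 0.1, 0.2, 0.3, 0.4, 10.0, 10.1, 10.2, 10.3, 10.4]
    root = build_tree(data)
    print([len(child.data) for child in root.children])
```

Because every accepted split produces two non-empty, strictly smaller clusters, the recursion always terminates.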

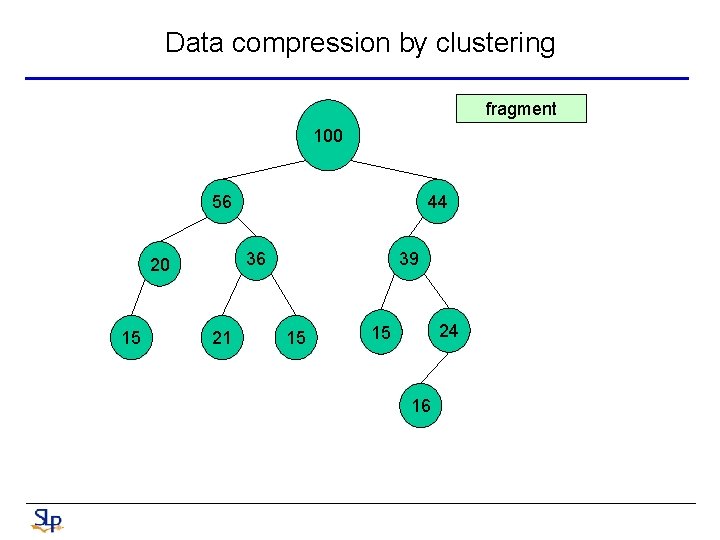

Data compression by clustering
[Figure: a sentence fragment matched against the tree of clusters; nodes are labelled with cluster sizes.]

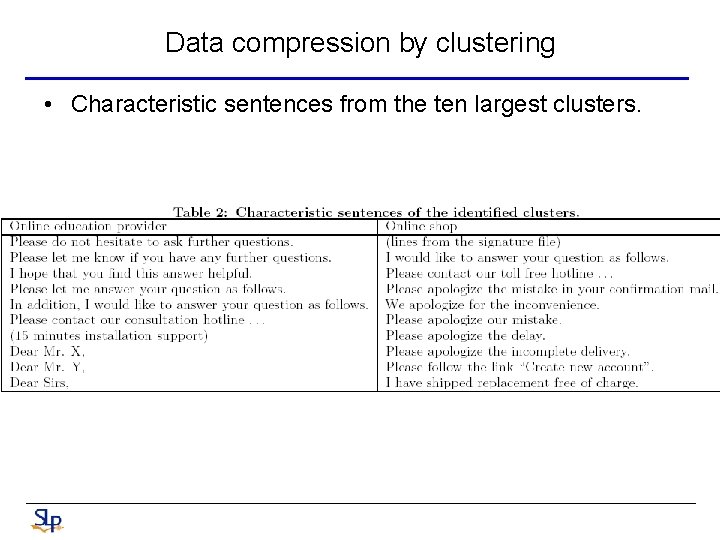

Data compression by clustering • Characteristic sentences from the ten largest clusters.

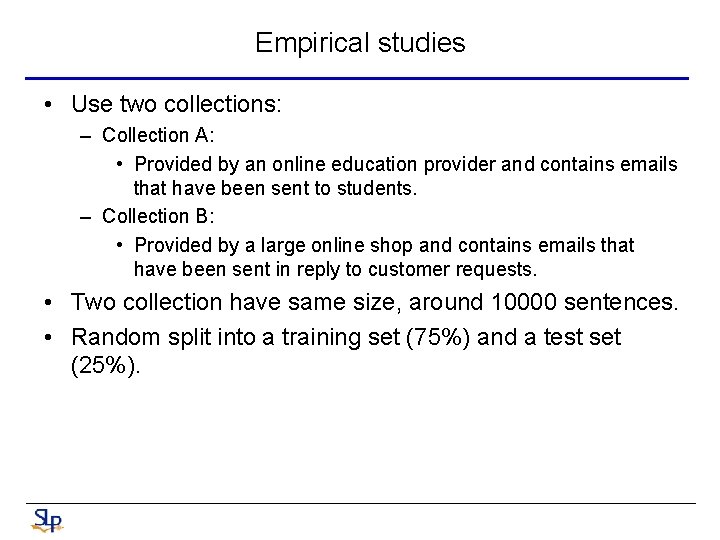

Empirical studies
• Two collections are used:
  – Collection A: provided by an online education provider; contains emails that have been sent to students.
  – Collection B: provided by a large online shop; contains emails that have been sent in reply to customer requests.
• Both collections have about the same size, around 10,000 sentences each.
• Each collection is randomly split into a training set (75%) and a test set (25%).
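
The 75%/25% split can be reproduced with a seeded shuffle. `split_collection` and the fixed seed are assumptions for illustration; the slides do not say how the random split was seeded or whether it was done per sentence or per email.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_collection(items: Sequence[T], train_fraction: float = 0.75,
                     seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle a copy of the items and cut it into (training set, test set)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded, so the split is reproducible
    cut = round(train_fraction * len(shuffled))
    return shuffled[:cut], shuffled[cut:]


if __name__ == "__main__":
    sentences = [f"sentence {i}" for i in range(10000)]
    train, test = split_collection(sentences)
    print(len(train), len(test))  # 7500 2500
```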

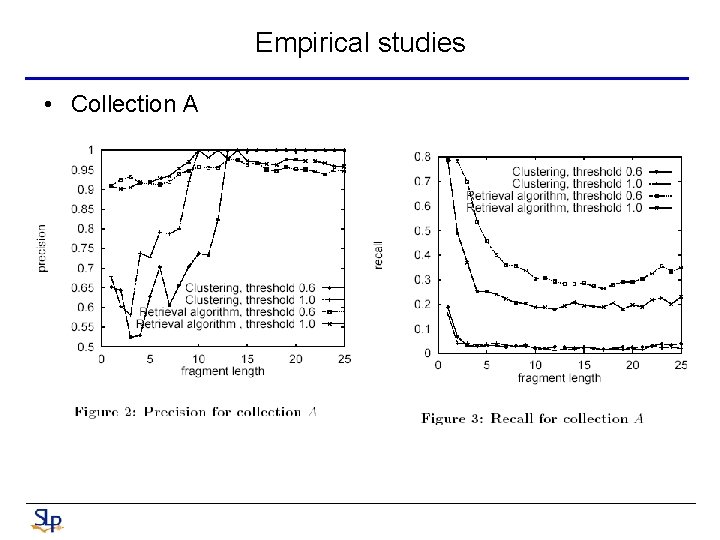

Empirical studies • Collection A

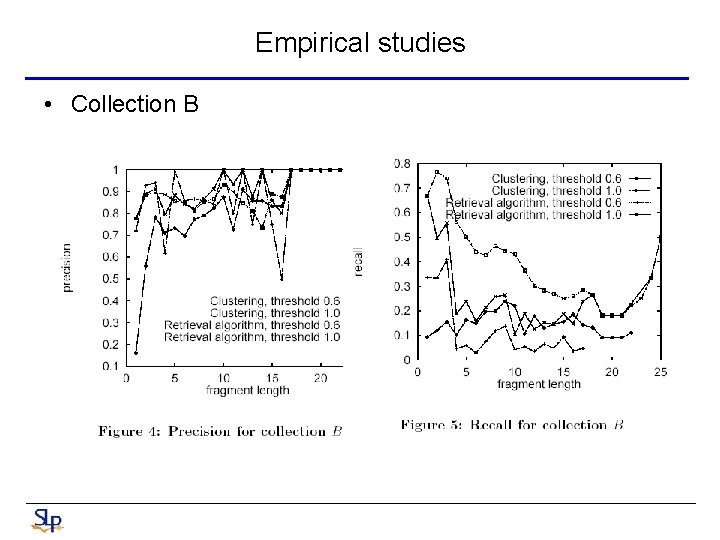

Empirical studies • Collection B

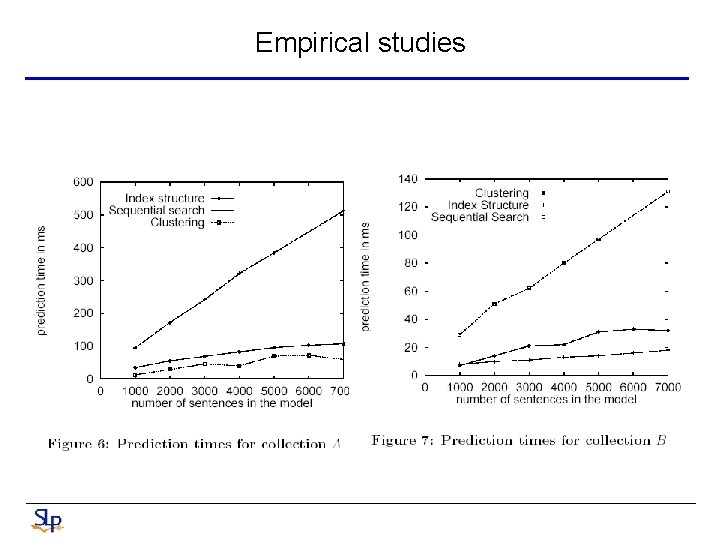

Empirical studies

Conclusion
• Comparing the retrieval approach with the clustering approach, we conclude that the retrieval method, on average, has higher precision and recall.
• This paper investigates methods that may predict some succeeding words, but not necessarily the complete remainder of the current sentence.
  – For example, “Your order” continues as “will be shipped on”, but it may not be possible to predict whether the final word is “Monday” or “Tuesday”.
