Senior Project Computer Science 2008 Machine Learning in Football


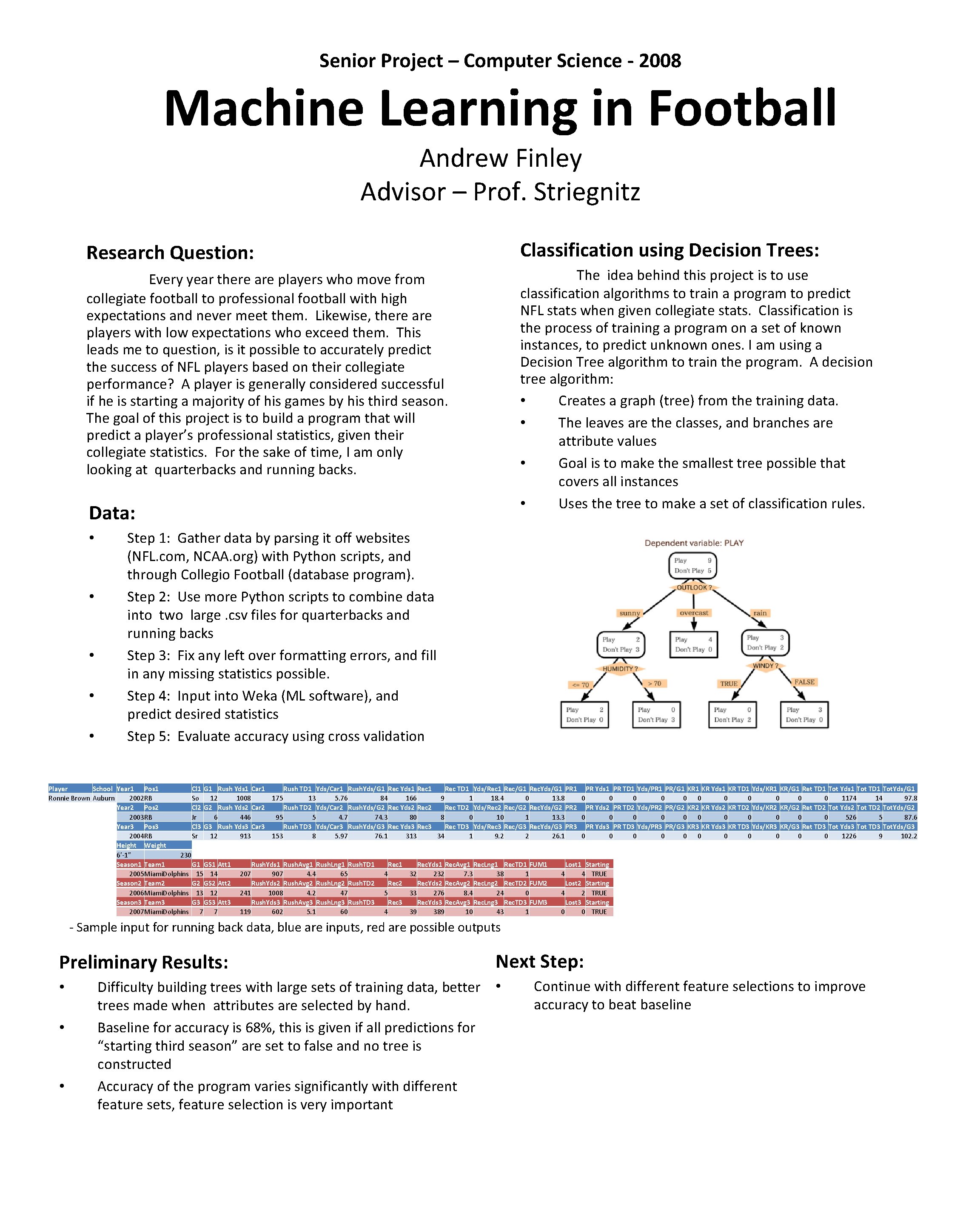

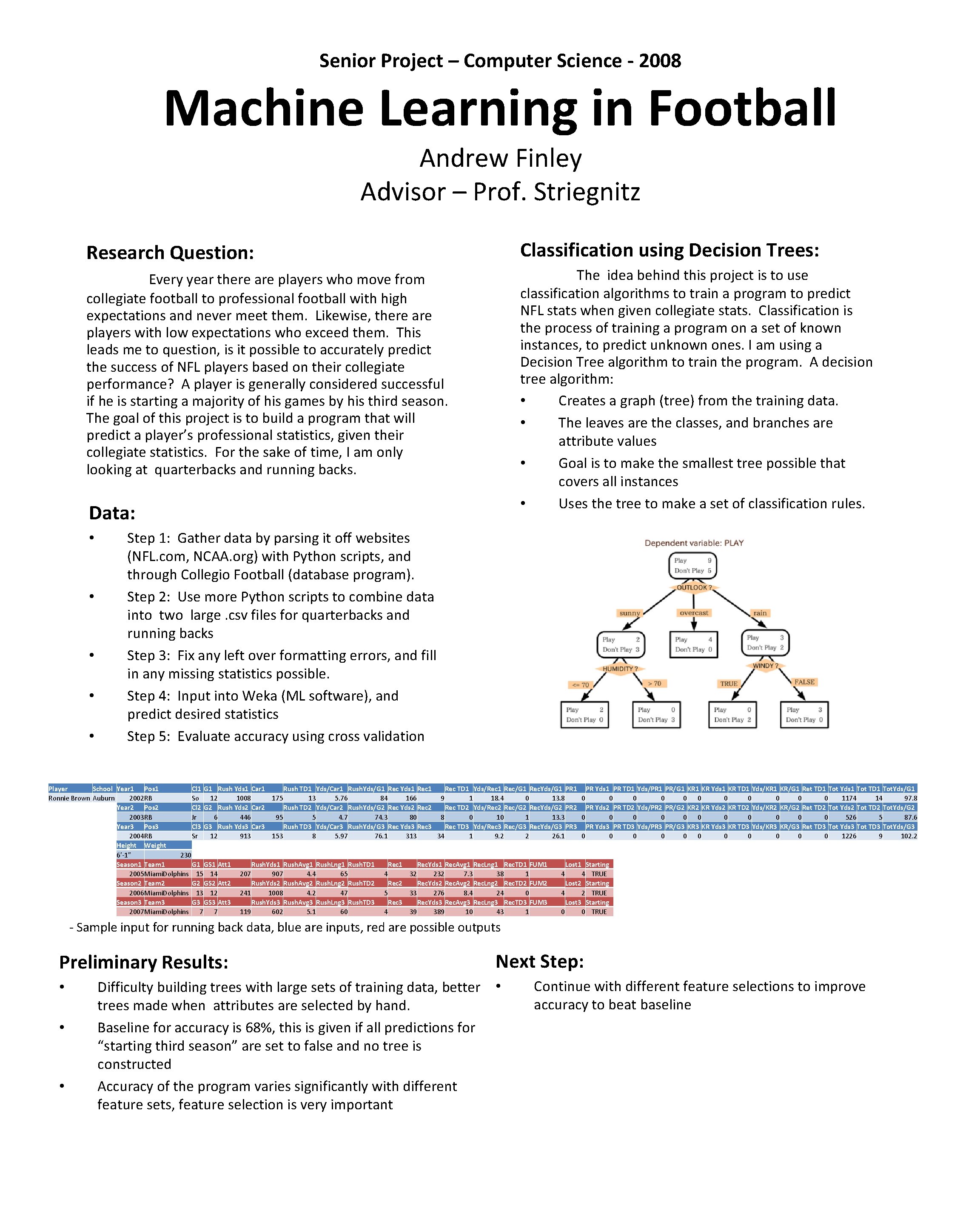

Senior Project - Computer Science - 2008
Machine Learning in Football
Andrew Finley
Advisor - Prof. Striegnitz

Research Question:

Every year there are players who move from collegiate football to professional football with high expectations and never meet them. Likewise, there are players with low expectations who exceed them. This leads me to ask: is it possible to accurately predict the success of NFL players based on their collegiate performance? A player is generally considered successful if he is starting a majority of his games by his third season. The goal of this project is to build a program that will predict a player's professional statistics, given his collegiate statistics. For the sake of time, I am only looking at quarterbacks and running backs.

Classification using Decision Trees:

The idea behind this project is to use classification algorithms to train a program to predict NFL stats when given collegiate stats. Classification is the process of training a program on a set of known instances in order to predict unknown ones. I am using a Decision Tree algorithm to train the program.

A decision tree algorithm:
• Creates a graph (tree) from the training data.
• The leaves are the classes, and the branches are attribute values.
• The goal is to make the smallest tree possible that covers all instances.
• Uses the tree to make a set of classification rules.

Data:

Step 1: Gather data by parsing it off websites (NFL.com, NCAA.org) with Python scripts, and through Collegio Football (database program).
Step 2: Use more Python scripts to combine the data into two large .csv files, one for quarterbacks and one for running backs.
Step 3: Fix any leftover formatting errors, and fill in any missing statistics where possible.
Step 4: Input into Weka (ML software), and predict the desired statistics.
Step 5: Evaluate accuracy using cross validation.

Sample input for running back data; blue are inputs, red are possible outputs:

College, year 1
Columns: Player, School, Year 1, Pos 1, Cl 1, G 1, Rush Yds 1, Car 1, Rush TD 1, Yds/Car 1, Rush.Yds/G 1, Rec Yds 1, Rec TD 1, Yds/Rec 1, Rec/G 1, Rec.Yds/G 1, PR Yds 1, PR TD 1, Yds/PR 1, PR/G 1, KR Yds 1, KR TD 1, Yds/KR 1, KR/G 1, Ret TD 1, Tot Yds 1, Tot TD 1, Tot.Yds/G 1
Values: Ronnie Brown, Auburn, 2002, RB, So, 12, 1008, 175, 13, 5.76, 84, 166, 9, 1, 18.4, 0, 13.8, 0, 0, 0, 1174, 14, 97.8

College, year 2
Columns: Year 2, Pos 2, Cl 2, G 2, Rush Yds 2, Car 2, Rush TD 2, Yds/Car 2, Rush.Yds/G 2, Rec Yds 2, Rec TD 2, Yds/Rec 2, Rec/G 2, Rec.Yds/G 2, PR Yds 2, PR TD 2, Yds/PR 2, PR/G 2, KR Yds 2, KR TD 2, Yds/KR 2, KR/G 2, Ret TD 2, Tot Yds 2, Tot TD 2, Tot.Yds/G 2
Values: 2003, RB, Jr, 6, 446, 95, 5, 4.7, 74.3, 80, 8, 0, 10, 1, 13.3, 0, 0, 0, 526, 5, 87.6

College, year 3
Columns: Year 3, Pos 3, Cl 3, G 3, Rush Yds 3, Car 3, Rush TD 3, Yds/Car 3, Rush.Yds/G 3, Rec Yds 3, Rec TD 3, Yds/Rec 3, Rec/G 3, Rec.Yds/G 3, PR Yds 3, PR TD 3, Yds/PR 3, PR/G 3, KR Yds 3, KR TD 3, Yds/KR 3, KR/G 3, Ret TD 3, Tot Yds 3, Tot TD 3, Tot.Yds/G 3
Values: 2004, RB, Sr, 12, 913, 153, 8, 5.97, 76.1, 313, 34, 1, 9.2, 2, 26.1, 0, 0, 0, 1226, 9, 102.2

Physical
Columns: Height, Weight
Values: 6'-1'', 230

NFL, season 1
Columns: Season 1, Team 1, GS 1, Att 1, Rush.Yds 1, Rush.Avg 1, Rush.Lng 1, Rush.TD 1, Rec.Yds 1, Rec.Avg 1, Rec.Lng 1, Rec.TD 1, FUM 1, Lost 1, Starting
Values: 2005, Miami.Dolphins, 15, 14, 207, 907, 4.4, 65, 4, 32, 232, 7.3, 38, 1, 4, 4, TRUE

NFL, season 2
Columns: Season 2, Team 2, GS 2, Att 2, Rush.Yds 2, Rush.Avg 2, Rush.Lng 2, Rush.TD 2, Rec.Yds 2, Rec.Avg 2, Rec.Lng 2, Rec.TD 2, FUM 2, Lost 2, Starting
Values: 2006, Miami.Dolphins, 13, 12, 241, 1008, 4.2, 47, 5, 33, 276, 8.4, 24, 0, 4, 2, TRUE

NFL, season 3
Columns: Season 3, Team 3, GS 3, Att 3, Rush.Yds 3, Rush.Avg 3, Rush.Lng 3, Rush.TD 3, Rec.Yds 3, Rec.Avg 3, Rec.Lng 3, Rec.TD 3, FUM 3, Lost 3, Starting
Values: 2007, Miami.Dolphins, 7, 7, 119, 602, 5.1, 60, 4, 39, 389, 10, 43, 1, 0, 0, TRUE

Preliminary Results:
• Building trees from large sets of training data is difficult; better trees are made when attributes are selected by hand.
• The baseline for accuracy is 68%. This is the accuracy obtained if all predictions for "starting third season" are set to false and no tree is constructed.
• Accuracy of the program varies significantly with different feature sets, so feature selection is very important.

Next Step:
• Continue with different feature selections to improve accuracy and beat the baseline.
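Illustration: the majority-class baseline and the cross-validation step can be sketched in a few lines of Python. This is not the project's code (the project used Weka); it is a minimal sketch under stated assumptions. The feature name `tot_yds_per_g`, the toy rows, and all function names are hypothetical, and a one-level decision tree (a "stump") stands in for a full decision tree algorithm.

```python
from collections import Counter


def majority_baseline(labels):
    """Return (most common label, accuracy of always predicting it)."""
    label, count = Counter(labels).most_common(1)[0]
    return label, count / len(labels)


def train_stump(rows, labels, feature):
    """One-level decision tree on a single numeric feature.

    Tries every observed value as a threshold, in both directions,
    and keeps the rule with the fewest training errors.
    """
    best = None
    for threshold in sorted({r[feature] for r in rows}):
        for above in (True, False):
            preds = [(r[feature] >= threshold) == above for r in rows]
            errors = sum(p != y for p, y in zip(preds, labels))
            if best is None or errors < best[0]:
                best = (errors, threshold, above)
    _, threshold, above = best
    return lambda row: (row[feature] >= threshold) == above


def cross_validate(rows, labels, feature, k=5):
    """k-fold cross-validation accuracy of the stump (interleaved folds)."""
    correct = 0
    for fold in range(k):
        test_idx = set(range(fold, len(rows), k))
        train_rows = [r for i, r in enumerate(rows) if i not in test_idx]
        train_labels = [y for i, y in enumerate(labels) if i not in test_idx]
        predict = train_stump(train_rows, train_labels, feature)
        correct += sum(predict(rows[i]) == labels[i] for i in test_idx)
    return correct / len(rows)


# Hypothetical toy data: college total yards per game, and whether the
# player was starting by his third NFL season. Not real project data.
toy_rows = [{"tot_yds_per_g": v} for v in range(40, 140, 10)]
toy_labels = [r["tot_yds_per_g"] >= 85 for r in toy_rows]

baseline_label, baseline_acc = majority_baseline(toy_labels)
cv_acc = cross_validate(toy_rows, toy_labels, "tot_yds_per_g", k=5)
```

A learned tree is only useful if its cross-validated accuracy (`cv_acc` here) exceeds the baseline accuracy, which is why the 68% figure is the number to beat.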