
- Slides: 32

Send and Receive

MPI Send and Receive

• Sending and receiving are two foundational concepts of MPI
• Almost every single function in MPI can be implemented with basic send and receive calls

Overview of Sending and Receiving

• MPI’s send and receive calls operate in the following manner
  - Process 0 tries to send a message to process 1
  - Process 0 packages the data into a buffer
  - The message is routed to the right location
  - The location is defined by the process’s rank
  - Process 1 must acknowledge that it wants to receive process 0’s data
  - Once process 1 acknowledges this, the data is transmitted
  - Process 0 may go back to work

Overview of Sending and Receiving

• Process 0 may have to send different types of messages to process 1
  - MPI allows senders and receivers to specify message IDs (tags) with each message
  - Process 1 can request a message with a certain tag number
  - Messages with different tag numbers are buffered until process 1 is ready to receive them

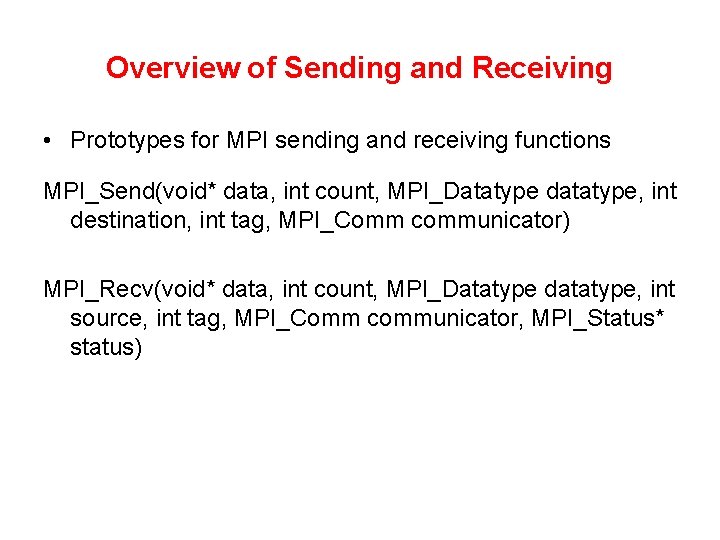

Overview of Sending and Receiving

• Prototypes for MPI sending and receiving functions

    MPI_Send(void* data, int count, MPI_Datatype datatype,
             int destination, int tag, MPI_Comm communicator)

    MPI_Recv(void* data, int count, MPI_Datatype datatype,
             int source, int tag, MPI_Comm communicator,
             MPI_Status* status)

Overview of Sending and Receiving

• The first argument is the data buffer
• The second and third arguments describe the count and type of the elements in the buffer
  - MPI_Send sends exactly count elements
  - MPI_Recv receives at most count elements
• The fourth and fifth arguments specify the rank of the destination (MPI_Send) or source (MPI_Recv) process and the tag of the message
• The sixth argument specifies the communicator
• The last argument (for MPI_Recv only) provides information about the received message

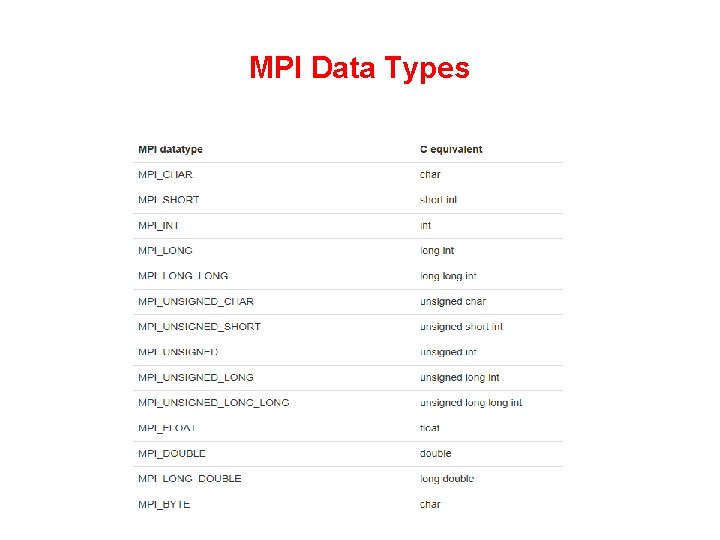

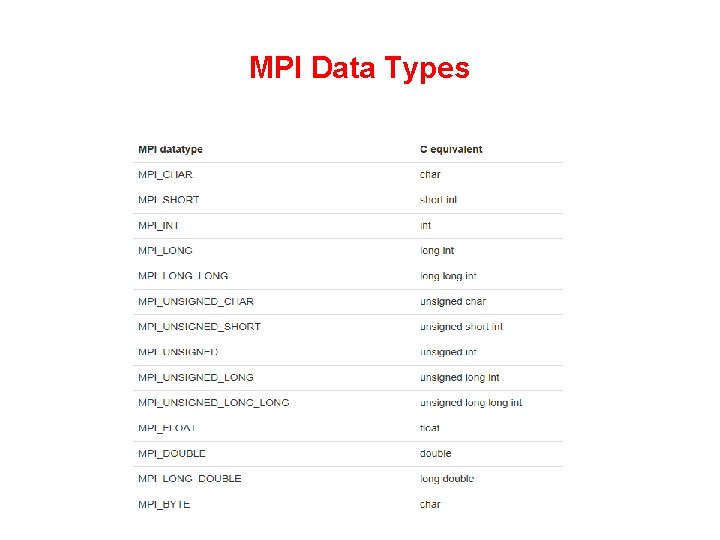

MPI Data Types

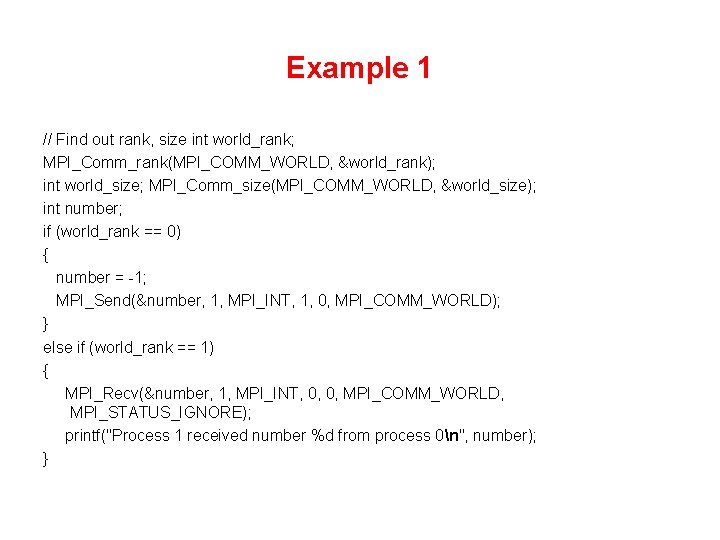

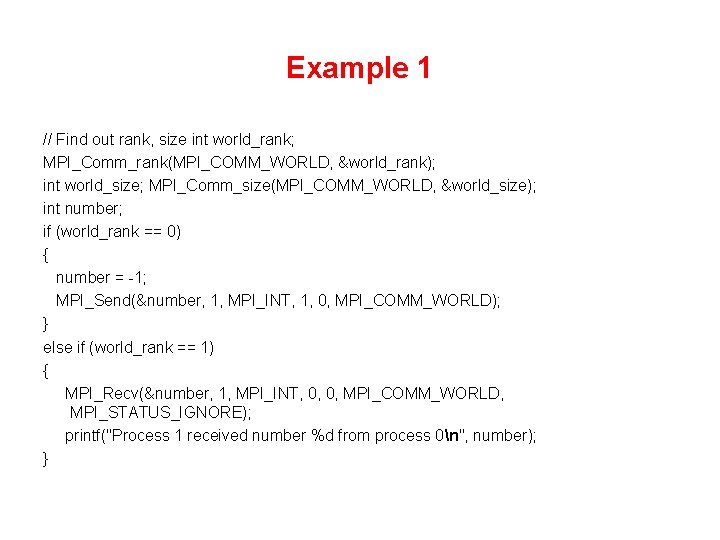

Example 1

    // Find out rank, size
    int world_rank;
    MPI_Comm_rank(MPI_COMM_WORLD, &world_rank);
    int world_size;
    MPI_Comm_size(MPI_COMM_WORLD, &world_size);

    int number;
    if (world_rank == 0) {
        // Process 0 sends a single integer to process 1
        number = -1;
        MPI_Send(&number, 1, MPI_INT, 1, 0, MPI_COMM_WORLD);
    } else if (world_rank == 1) {
        // Process 1 receives the integer from process 0
        MPI_Recv(&number, 1, MPI_INT, 0, 0, MPI_COMM_WORLD,
                 MPI_STATUS_IGNORE);
        printf("Process 1 received number %d from process 0\n", number);
    }

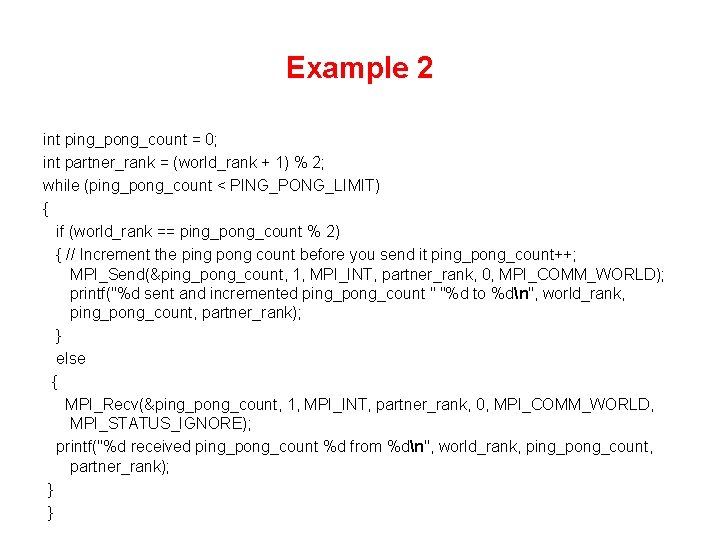

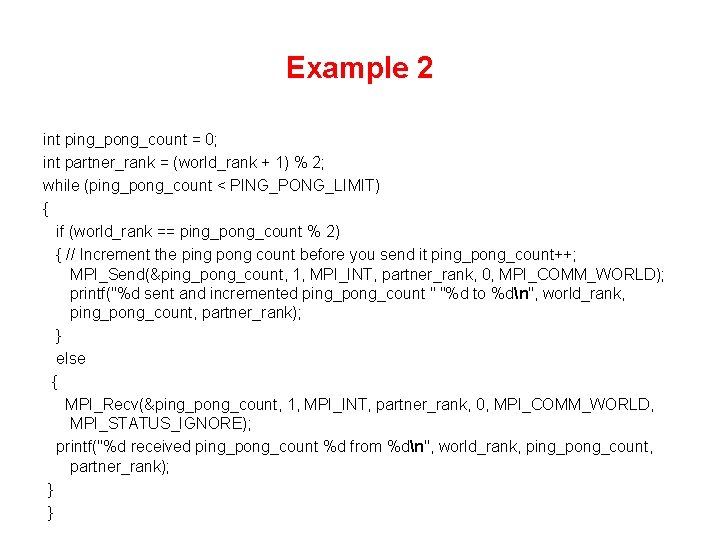

Example 2

    int ping_pong_count = 0;
    // With exactly two processes, the partner of rank 0 is rank 1 and vice versa
    int partner_rank = (world_rank + 1) % 2;
    while (ping_pong_count < PING_PONG_LIMIT) {
        if (world_rank == ping_pong_count % 2) {
            // Increment the ping pong count before you send it
            ping_pong_count++;
            MPI_Send(&ping_pong_count, 1, MPI_INT, partner_rank, 0,
                     MPI_COMM_WORLD);
            printf("%d sent and incremented ping_pong_count "
                   "%d to %d\n", world_rank, ping_pong_count, partner_rank);
        } else {
            MPI_Recv(&ping_pong_count, 1, MPI_INT, partner_rank, 0,
                     MPI_COMM_WORLD, MPI_STATUS_IGNORE);
            printf("%d received ping_pong_count %d from %d\n",
                   world_rank, ping_pong_count, partner_rank);
        }
    }

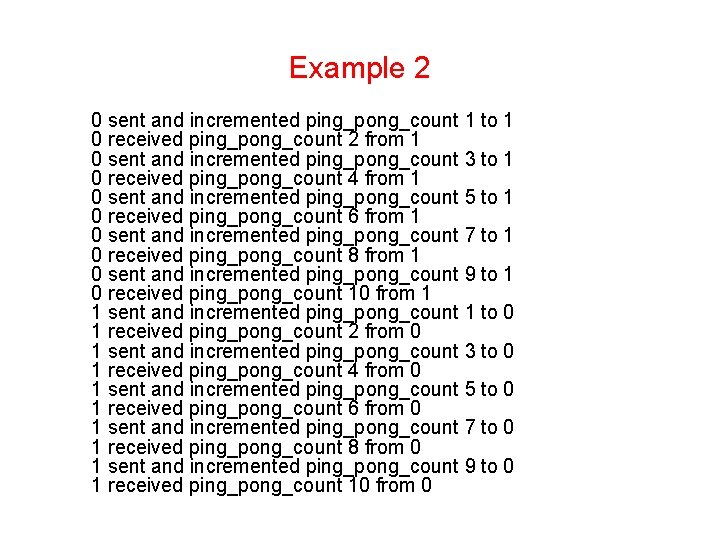

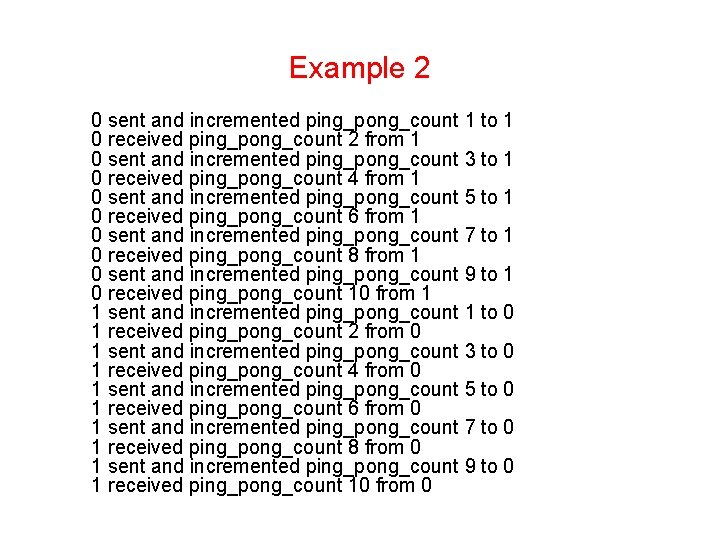

Example 2

Output with PING_PONG_LIMIT set to 10 (shown grouped by process; lines from the two processes may interleave differently):

    0 sent and incremented ping_pong_count 1 to 1
    0 received ping_pong_count 2 from 1
    0 sent and incremented ping_pong_count 3 to 1
    0 received ping_pong_count 4 from 1
    0 sent and incremented ping_pong_count 5 to 1
    0 received ping_pong_count 6 from 1
    0 sent and incremented ping_pong_count 7 to 1
    0 received ping_pong_count 8 from 1
    0 sent and incremented ping_pong_count 9 to 1
    0 received ping_pong_count 10 from 1
    1 received ping_pong_count 1 from 0
    1 sent and incremented ping_pong_count 2 to 0
    1 received ping_pong_count 3 from 0
    1 sent and incremented ping_pong_count 4 to 0
    1 received ping_pong_count 5 from 0
    1 sent and incremented ping_pong_count 6 to 0
    1 received ping_pong_count 7 from 0
    1 sent and incremented ping_pong_count 8 to 0
    1 received ping_pong_count 9 from 0
    1 sent and incremented ping_pong_count 10 to 0

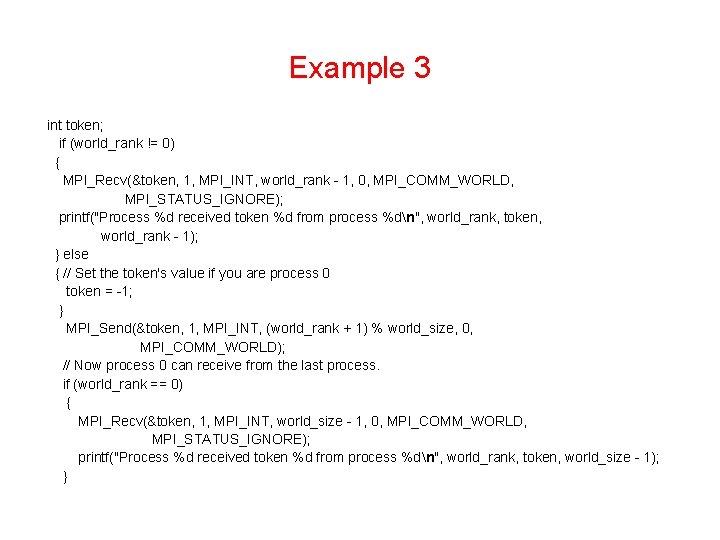

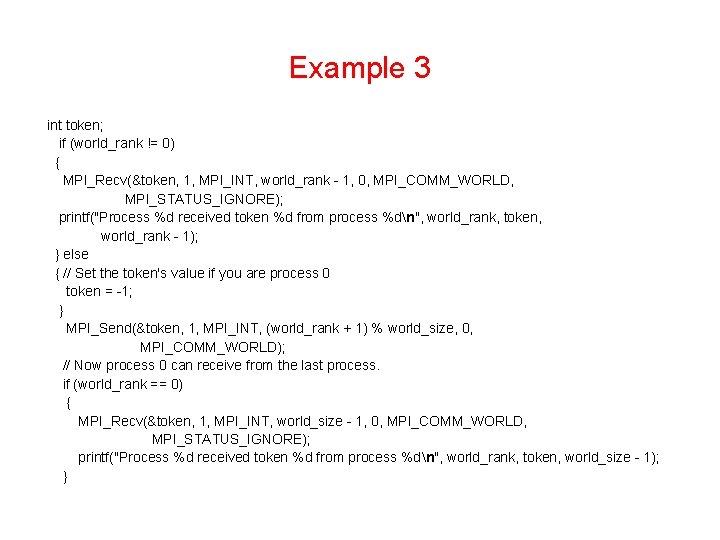

Example 3

    int token;
    if (world_rank != 0) {
        // Every process except 0 first waits for the token from its lower neighbor
        MPI_Recv(&token, 1, MPI_INT, world_rank - 1, 0, MPI_COMM_WORLD,
                 MPI_STATUS_IGNORE);
        printf("Process %d received token %d from process %d\n",
               world_rank, token, world_rank - 1);
    } else {
        // Set the token's value if you are process 0
        token = -1;
    }
    // Pass the token to the next process in the ring (the last wraps to 0)
    MPI_Send(&token, 1, MPI_INT, (world_rank + 1) % world_size, 0,
             MPI_COMM_WORLD);

    // Now process 0 can receive from the last process.
    if (world_rank == 0) {
        MPI_Recv(&token, 1, MPI_INT, world_size - 1, 0, MPI_COMM_WORLD,
                 MPI_STATUS_IGNORE);
        printf("Process %d received token %d from process %d\n",
               world_rank, token, world_size - 1);
    }

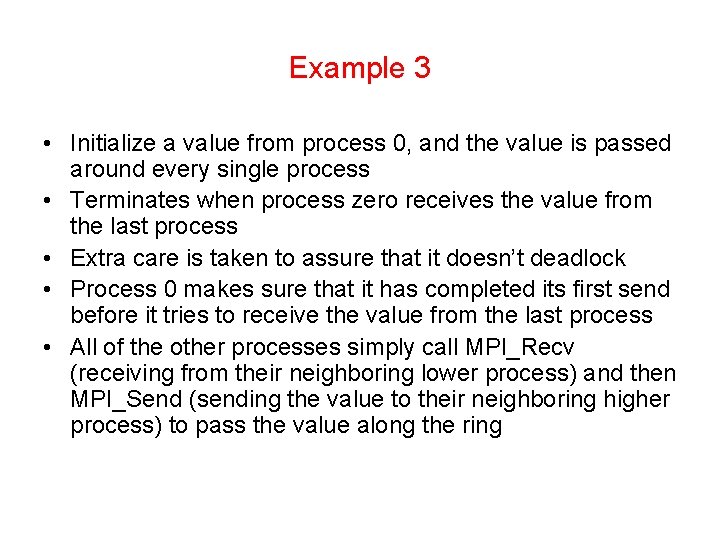

Example 3

• Process 0 initializes a value, which is then passed around the ring through every process
• The program terminates when process 0 receives the value from the last process
• Extra care is taken to ensure that it doesn’t deadlock
  - Process 0 makes sure that it has completed its first send before it tries to receive the value from the last process
  - All of the other processes simply call MPI_Recv (receiving from their neighboring lower process) and then MPI_Send (sending the value to their neighboring higher process) to pass the value along the ring

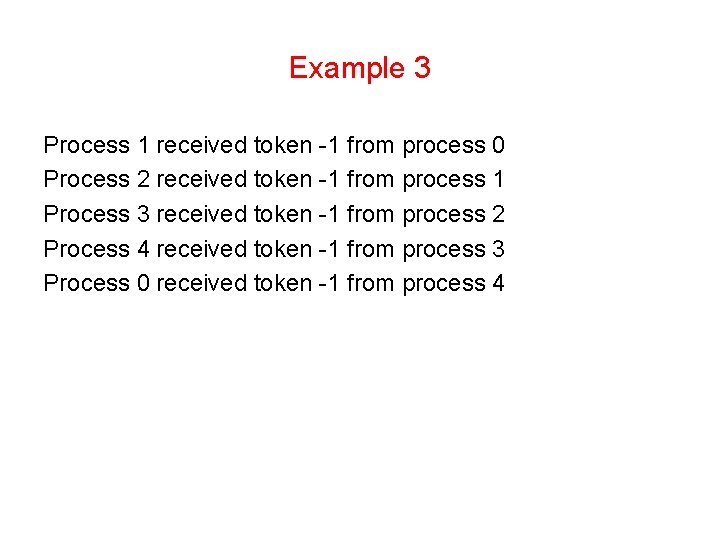

Example 3

    Process 1 received token -1 from process 0
    Process 2 received token -1 from process 1
    Process 3 received token -1 from process 2
    Process 4 received token -1 from process 3
    Process 0 received token -1 from process 4

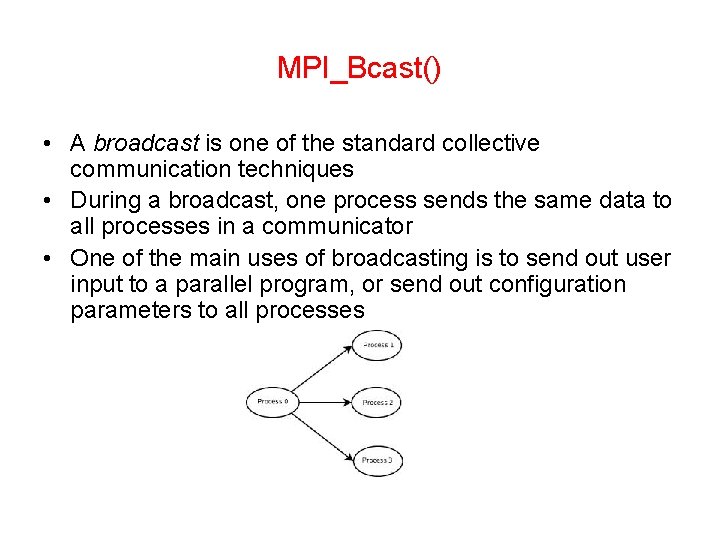

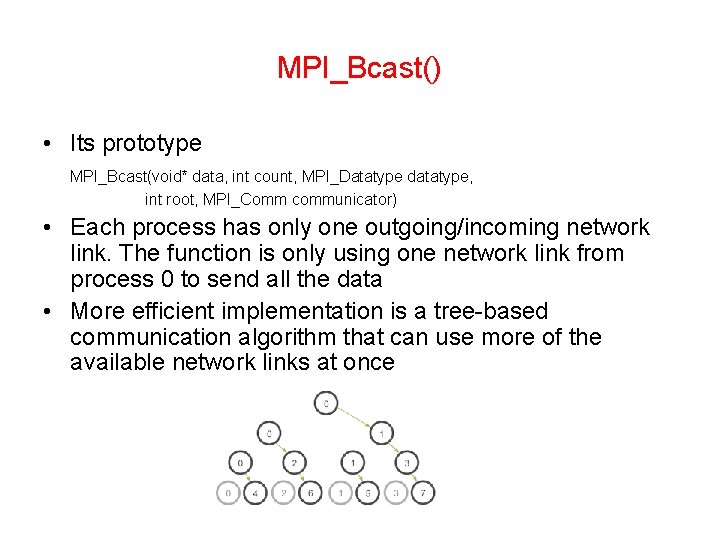

MPI_Bcast()

• A broadcast is one of the standard collective communication techniques
• During a broadcast, one process sends the same data to all processes in a communicator
• One of the main uses of broadcasting is to send out user input to a parallel program, or send out configuration parameters to all processes

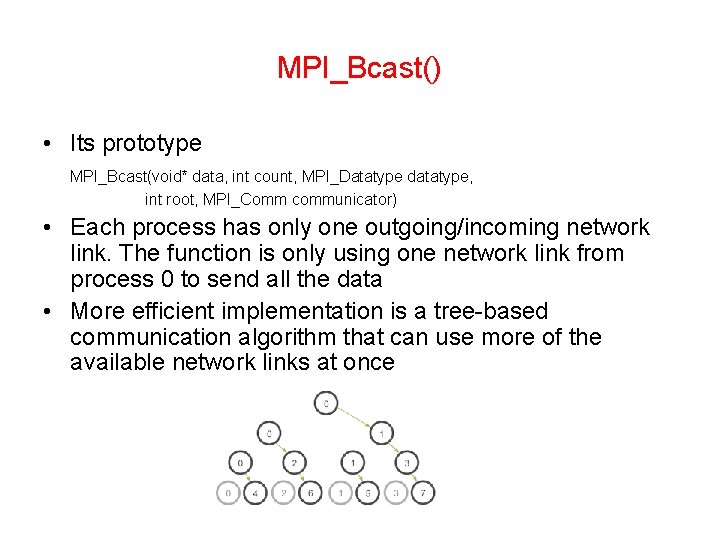

MPI_Bcast()

• Its prototype

    MPI_Bcast(void* data, int count, MPI_Datatype datatype,
              int root, MPI_Comm communicator)

• A naive broadcast, in which the root sends the data to every other process in turn, is inefficient: if each process has only one outgoing/incoming network link, it uses only the link from process 0 to send all the data
• A more efficient implementation is a tree-based communication algorithm that can use more of the available network links at once

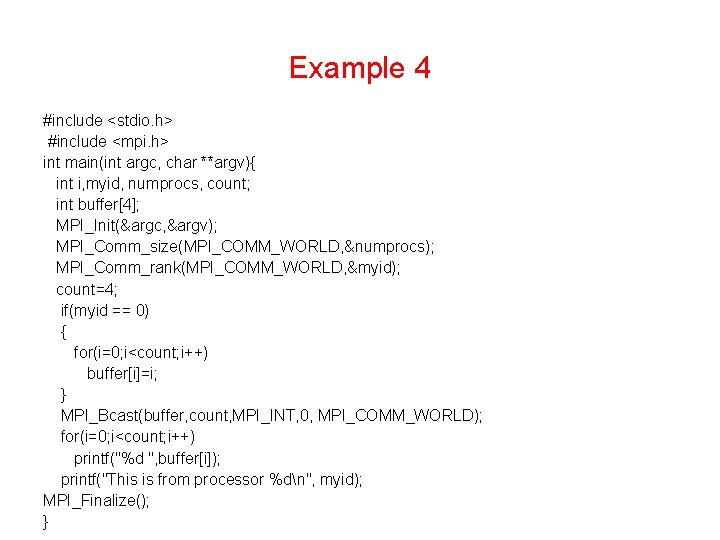

Example 4

    #include <stdio.h>
    #include <mpi.h>

    int main(int argc, char **argv) {
        int i, myid, numprocs, count;
        int buffer[4];

        MPI_Init(&argc, &argv);
        MPI_Comm_size(MPI_COMM_WORLD, &numprocs);
        MPI_Comm_rank(MPI_COMM_WORLD, &myid);

        count = 4;
        // Only the root fills the buffer before the broadcast
        if (myid == 0) {
            for (i = 0; i < count; i++)
                buffer[i] = i;
        }
        // Every process calls MPI_Bcast; afterwards all buffers match the root's
        MPI_Bcast(buffer, count, MPI_INT, 0, MPI_COMM_WORLD);

        for (i = 0; i < count; i++)
            printf("%d ", buffer[i]);
        printf("This is from processor %d\n", myid);

        MPI_Finalize();
        return 0;
    }

Example 4

• With 4 processes, the output should be (the order of the lines may vary)

    0 1 2 3 This is from processor 0
    0 1 2 3 This is from processor 2
    0 1 2 3 This is from processor 1
    0 1 2 3 This is from processor 3

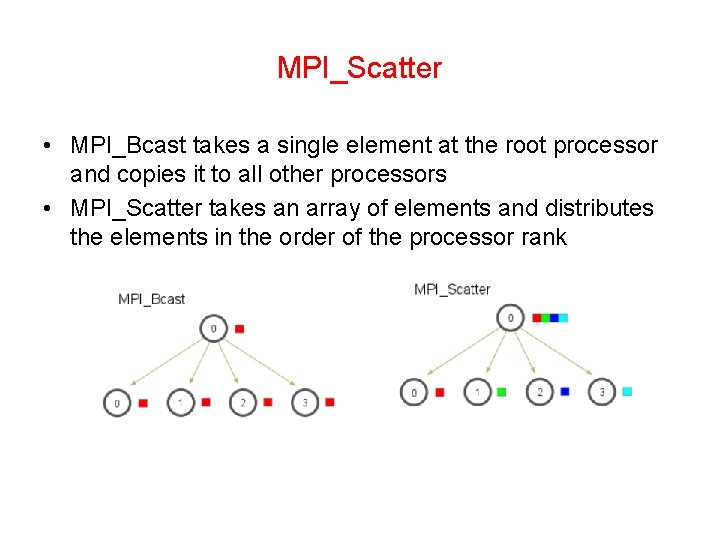

MPI_Scatter

• MPI_Scatter is a collective routine that is very similar to MPI_Bcast
• A root process sends data to all processes in a communicator
  - MPI_Bcast sends the same piece of data to all processes
  - MPI_Scatter sends chunks of an array to different processes

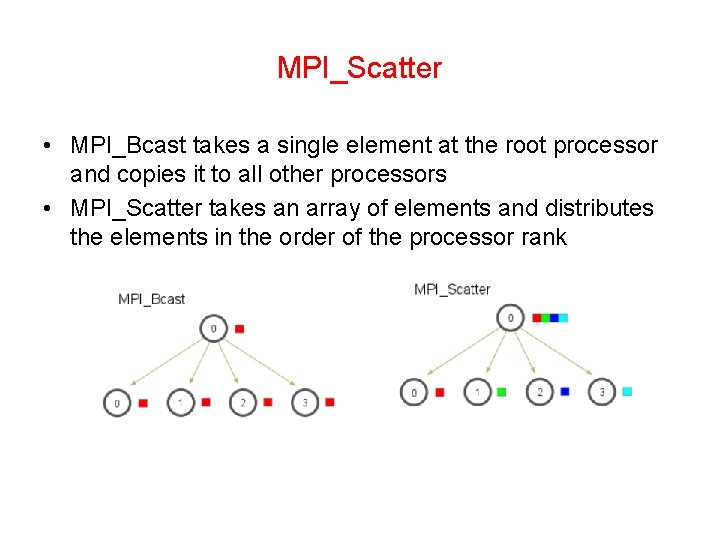

MPI_Scatter

• MPI_Bcast takes a single element at the root process and copies it to all other processes
• MPI_Scatter takes an array of elements and distributes the elements in order of process rank

MPI_Scatter

• Its prototype

    MPI_Scatter(void* send_data, int send_count, MPI_Datatype send_datatype,
                void* recv_data, int recv_count, MPI_Datatype recv_datatype,
                int root, MPI_Comm communicator)

• send_data: an array of data on the root process
• send_count and send_datatype: how many elements of which MPI datatype will be sent to each process
• recv_data: a buffer that can hold recv_count elements
• root: the rank of the root process
• communicator: the communicator in which the processes reside

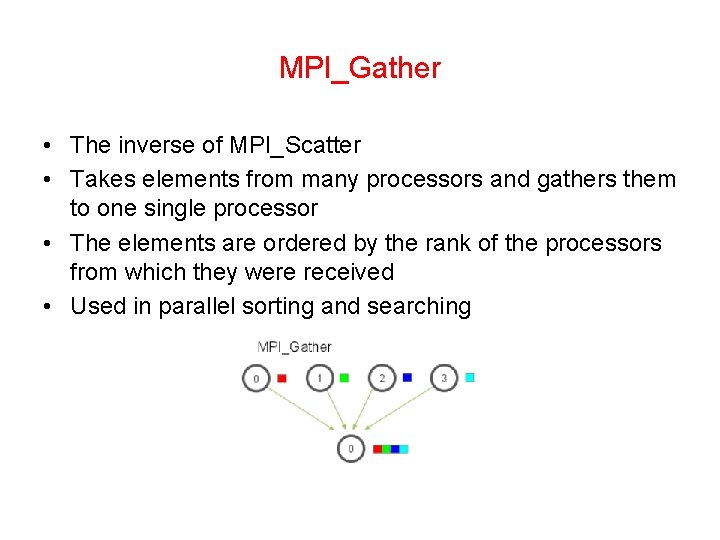

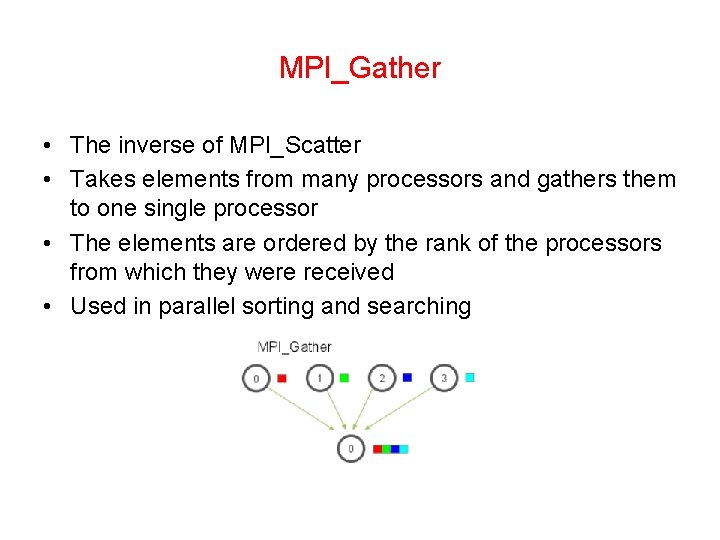

MPI_Gather

• The inverse of MPI_Scatter
• Takes elements from many processes and gathers them to one single process
• The elements are ordered by the rank of the processes from which they were received
• Used in parallel sorting and searching

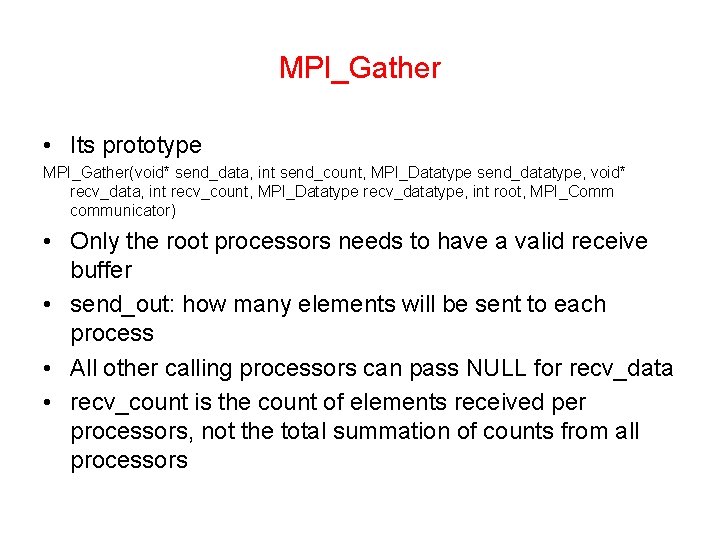

MPI_Gather

• Its prototype

    MPI_Gather(void* send_data, int send_count, MPI_Datatype send_datatype,
               void* recv_data, int recv_count, MPI_Datatype recv_datatype,
               int root, MPI_Comm communicator)

• send_count: how many elements each process sends to the root
• Only the root process needs to have a valid receive buffer
• All other calling processes can pass NULL for recv_data
• recv_count is the count of elements received per process, not the total of the counts from all processes

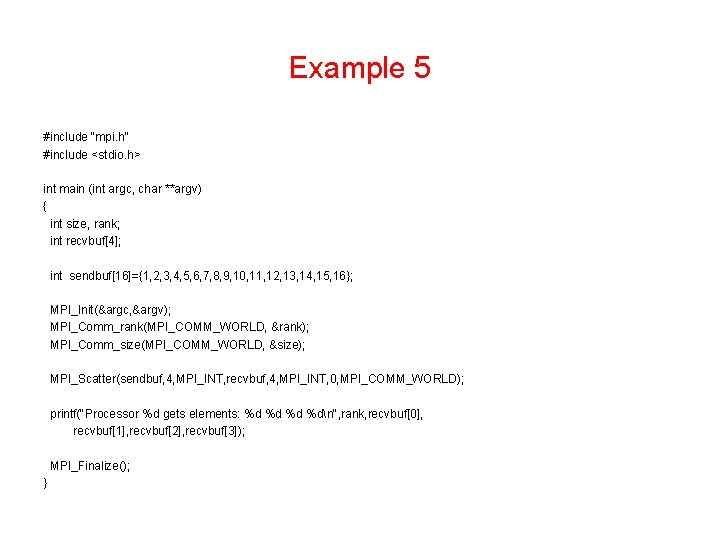

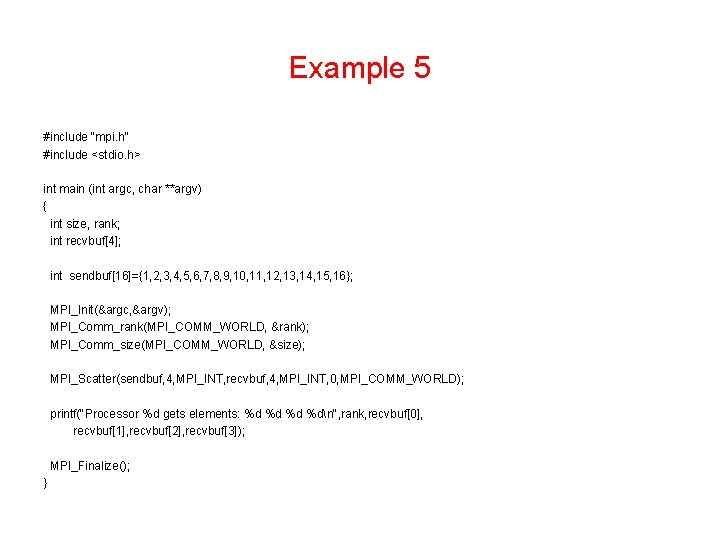

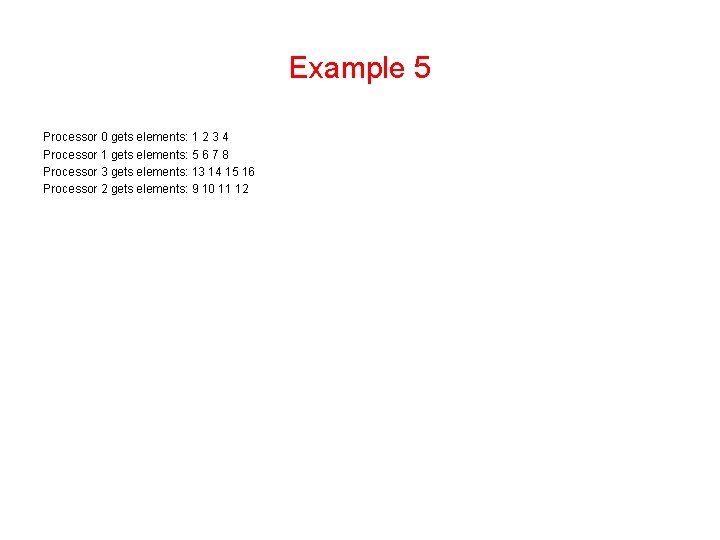

Example 5

    #include "mpi.h"
    #include <stdio.h>

    int main(int argc, char **argv) {
        int size, rank;
        int recvbuf[4];
        int sendbuf[16] = {1, 2, 3, 4, 5, 6, 7, 8,
                           9, 10, 11, 12, 13, 14, 15, 16};

        MPI_Init(&argc, &argv);
        MPI_Comm_rank(MPI_COMM_WORLD, &rank);
        MPI_Comm_size(MPI_COMM_WORLD, &size);

        // The root sends 4 consecutive elements to each process (run with 4 processes)
        MPI_Scatter(sendbuf, 4, MPI_INT, recvbuf, 4, MPI_INT, 0,
                    MPI_COMM_WORLD);

        printf("Processor %d gets elements: %d %d %d %d\n", rank,
               recvbuf[0], recvbuf[1], recvbuf[2], recvbuf[3]);

        MPI_Finalize();
        return 0;
    }

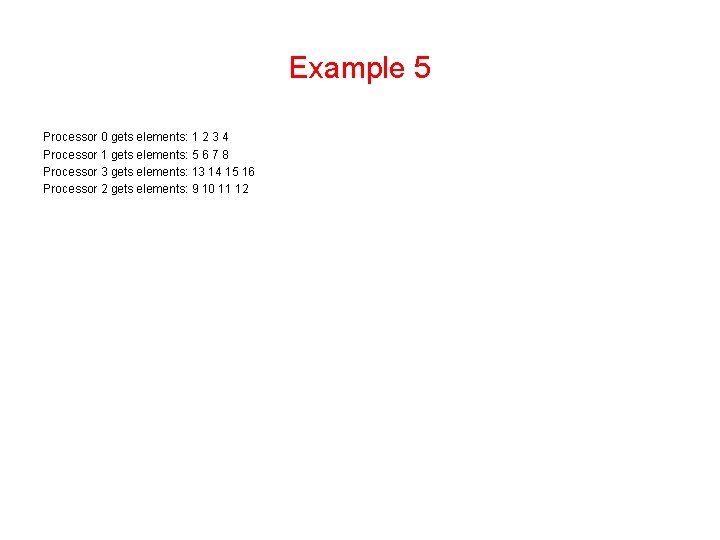

Example 5

    Processor 0 gets elements: 1 2 3 4
    Processor 1 gets elements: 5 6 7 8
    Processor 3 gets elements: 13 14 15 16
    Processor 2 gets elements: 9 10 11 12

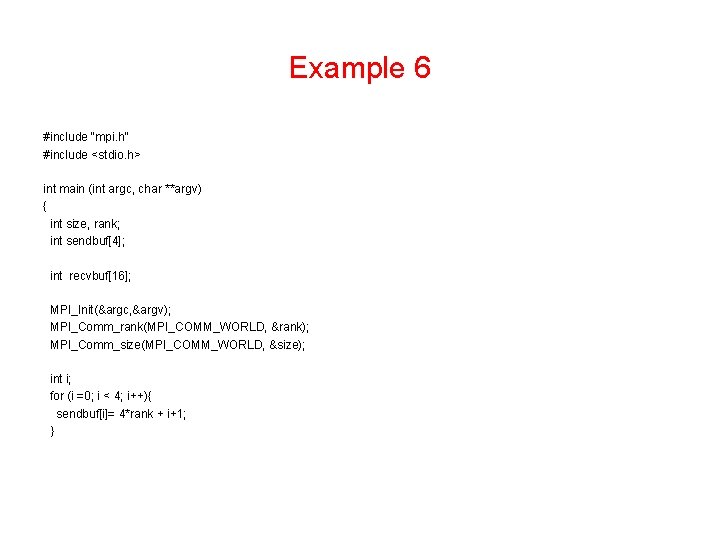

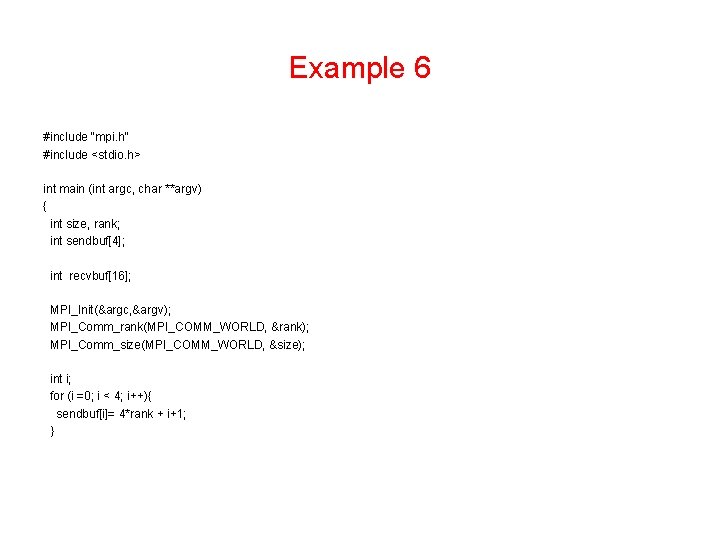

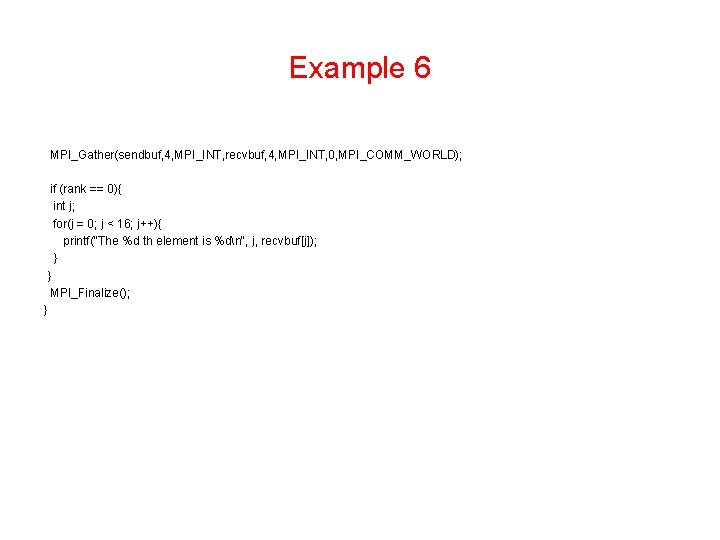

Example 6

    #include "mpi.h"
    #include <stdio.h>

    int main(int argc, char **argv) {
        int size, rank;
        int sendbuf[4];
        int recvbuf[16];

        MPI_Init(&argc, &argv);
        MPI_Comm_rank(MPI_COMM_WORLD, &rank);
        MPI_Comm_size(MPI_COMM_WORLD, &size);

        // Each process fills its 4 elements: rank 0 gets 1..4, rank 1 gets 5..8, ...
        int i;
        for (i = 0; i < 4; i++) {
            sendbuf[i] = 4 * rank + i + 1;
        }

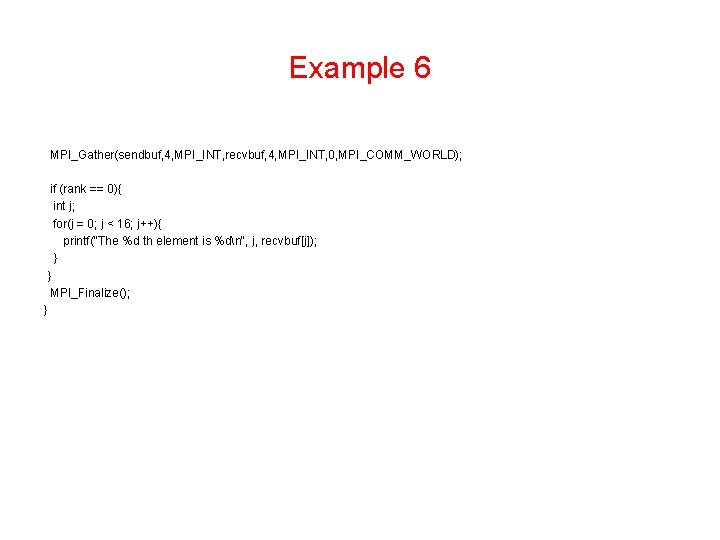

Example 6 (continued)

        // The root collects 4 elements from each process, in rank order
        MPI_Gather(sendbuf, 4, MPI_INT, recvbuf, 4, MPI_INT, 0,
                   MPI_COMM_WORLD);

        if (rank == 0) {
            int j;
            for (j = 0; j < 16; j++) {
                printf("The %d th element is %d\n", j, recvbuf[j]);
            }
        }

        MPI_Finalize();
        return 0;
    }

Example 6

    The 0 th element is 1
    The 1 th element is 2
    The 2 th element is 3
    The 3 th element is 4
    The 4 th element is 5
    The 5 th element is 6
    The 6 th element is 7
    The 7 th element is 8
    The 8 th element is 9
    The 9 th element is 10
    The 10 th element is 11
    The 11 th element is 12
    The 12 th element is 13
    The 13 th element is 14
    The 14 th element is 15
    The 15 th element is 16

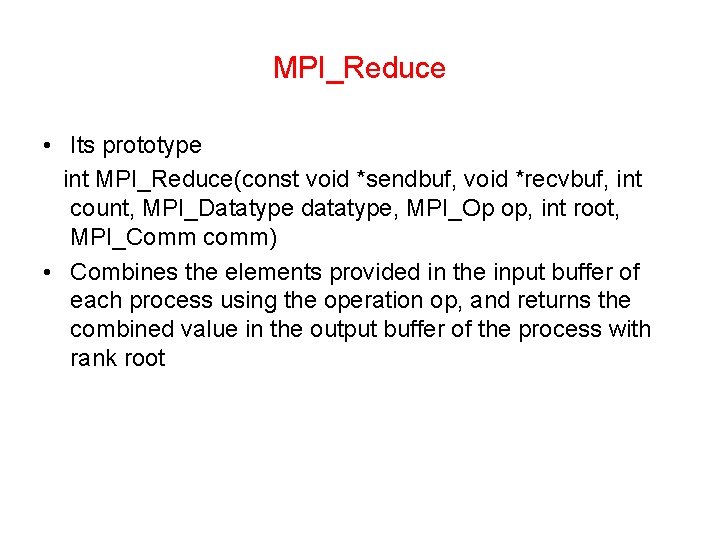

MPI_Reduce

• Its prototype

    int MPI_Reduce(const void *sendbuf, void *recvbuf, int count,
                   MPI_Datatype datatype, MPI_Op op, int root,
                   MPI_Comm comm)

• Combines the elements provided in the input buffer of each process using the operation op, and returns the combined value in the output buffer of the process with rank root

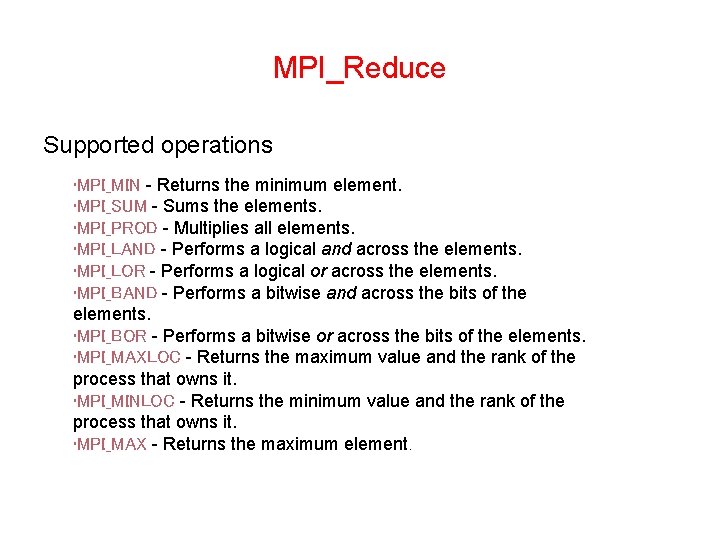

MPI_Reduce Supported operations

• MPI_MAX - Returns the maximum element.
• MPI_MIN - Returns the minimum element.
• MPI_SUM - Sums the elements.
• MPI_PROD - Multiplies all elements.
• MPI_LAND - Performs a logical and across the elements.
• MPI_LOR - Performs a logical or across the elements.
• MPI_BAND - Performs a bitwise and across the bits of the elements.
• MPI_BOR - Performs a bitwise or across the bits of the elements.
• MPI_MAXLOC - Returns the maximum value and the rank of the process that owns it.
• MPI_MINLOC - Returns the minimum value and the rank of the process that owns it.

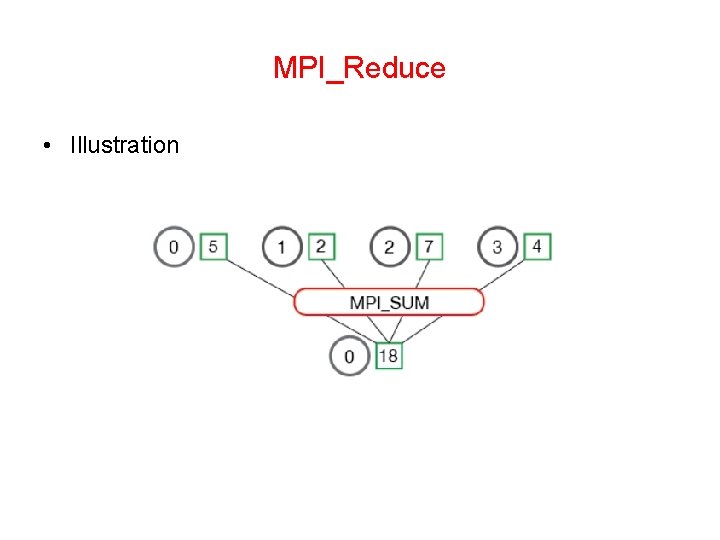

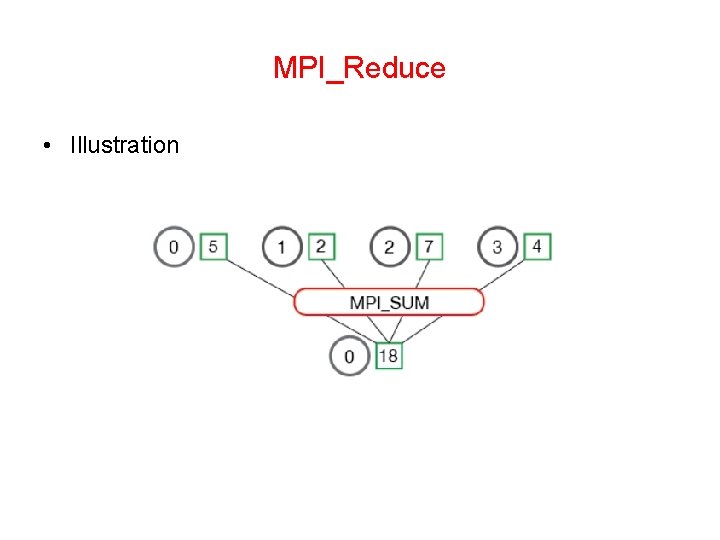

MPI_Reduce • Illustration

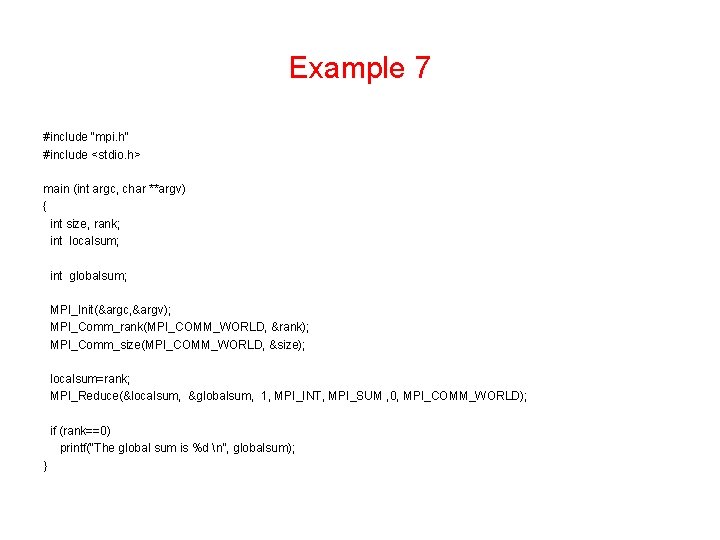

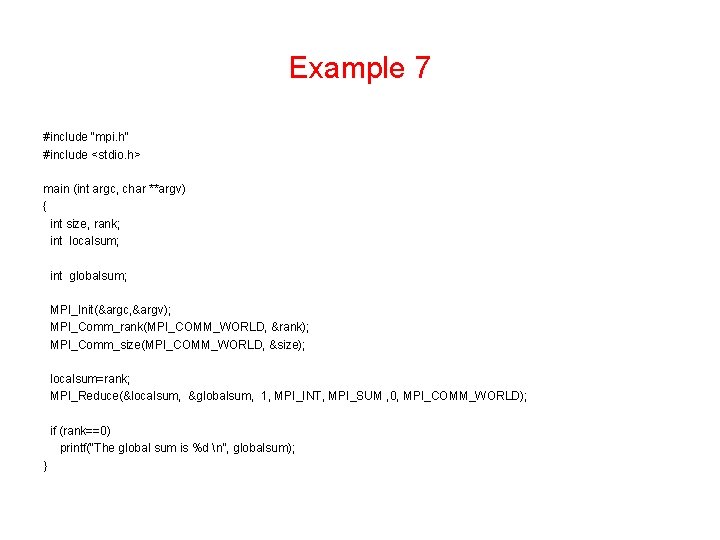

Example 7

    #include "mpi.h"
    #include <stdio.h>

    int main(int argc, char **argv) {
        int size, rank;
        int localsum;
        int globalsum;

        MPI_Init(&argc, &argv);
        MPI_Comm_rank(MPI_COMM_WORLD, &rank);
        MPI_Comm_size(MPI_COMM_WORLD, &size);

        // Each process contributes its own rank
        localsum = rank;
        // The sum of all contributions is delivered to process 0
        MPI_Reduce(&localsum, &globalsum, 1, MPI_INT, MPI_SUM, 0,
                   MPI_COMM_WORLD);

        if (rank == 0)
            printf("The global sum is %d\n", globalsum);

        MPI_Finalize();
        return 0;
    }

Example 7

Run the program using 4 processors:

    The global sum is 6