Semisupervised Structured Prediction Models Ulf Brefeld Joint work

Semi-supervised Structured Prediction Models Ulf Brefeld Joint work with… Christoph Büscher Thomas Gärtner Peter Haider Tobias Scheffer Stefan Wrobel Alexander Zien

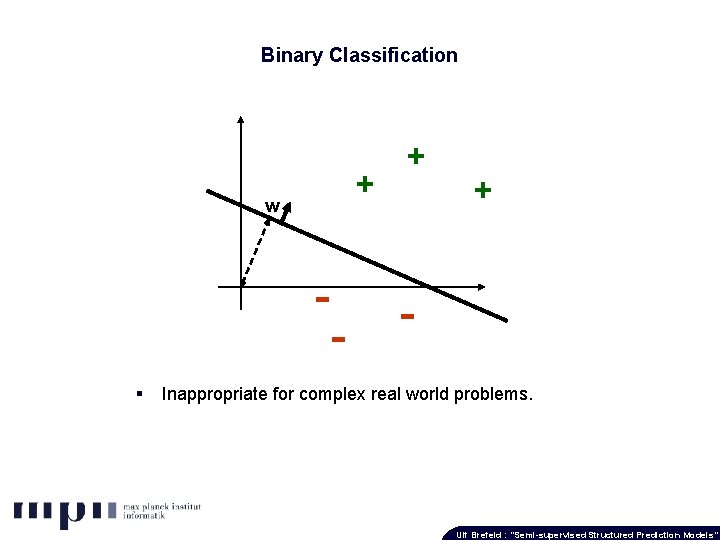

Binary Classification + + + w - - § Inappropriate for complex real world problems. Ulf Brefeld : “Semi-supervised Structured Prediction Models”

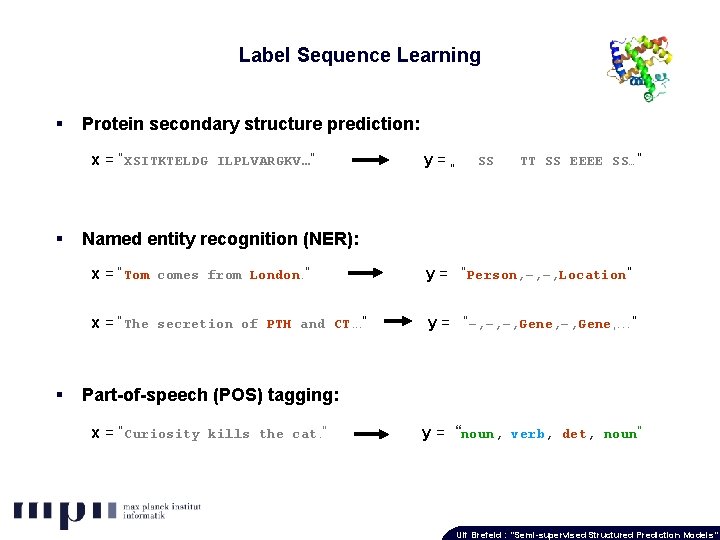

Label Sequence Learning § Protein secondary structure prediction: x = “XSITKTELDG ILPLVARGKV…” § § y = „ SS TT SS EEEE SS…“ Named entity recognition (NER): x = “Tom comes from London. ” y = “Person, –, –, Location” x = “The secretion of PTH and CT. . . ” y = “–, –, –, Gene, …” Part-of-speech (POS) tagging: x = “Curiosity kills the cat. ” y = “noun, verb, det, noun” Ulf Brefeld : “Semi-supervised Structured Prediction Models”

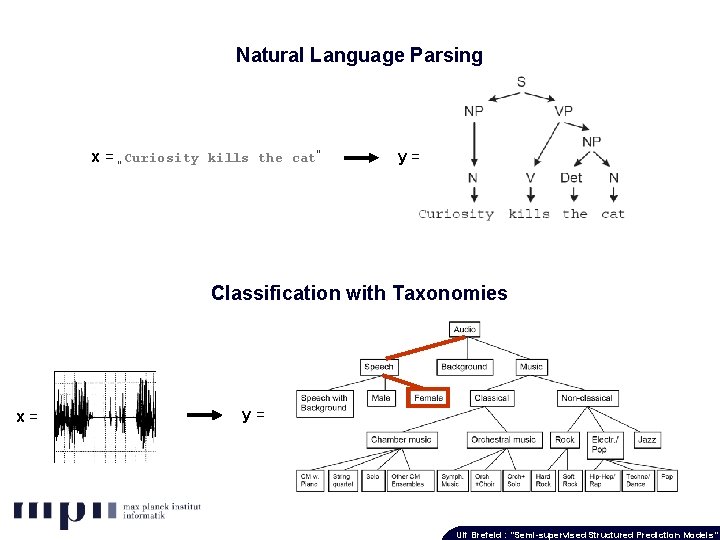

Natural Language Parsing x = „Curiosity kills the cat“ y= Classification with Taxonomies x= y= Ulf Brefeld : “Semi-supervised Structured Prediction Models”

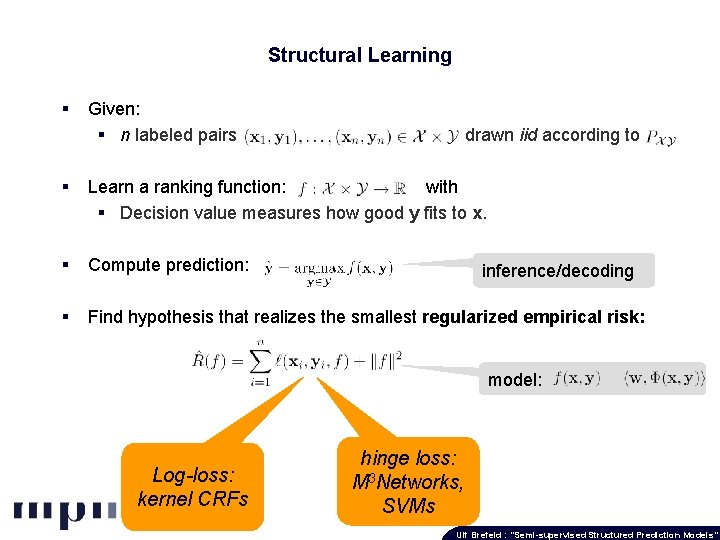

Structural Learning § Given: § n labeled pairs (x 1, y 1), …, (xn, yn) Xx. Y, drawn iid according to § Learn a ranking function: with § Decision value measures how good y fits to x. § Compute prediction: § Find hypothesis that realizes the smallest regularized empirical risk: inference/decoding model: Log-loss: kernel CRFs hinge loss: M 3 Networks, SVMs Ulf Brefeld : “Semi-supervised Structured Prediction Models”

Semi-supervised Discriminative Learning § Labeled training data is scarce and expensive. § Eg. , experiments in computational biology. § Need for expert knowledge. § Tedious and time consuming. § Unclassified instances are abundant and cheap. § Extract texts/sentences from www (POS-tagging, NER, NLP). § Assess primary structure of proteins from DNA/RNA. § … There is a need for semi-supervised techniques in structural learning! Ulf Brefeld : “Semi-supervised Structured Prediction Models”

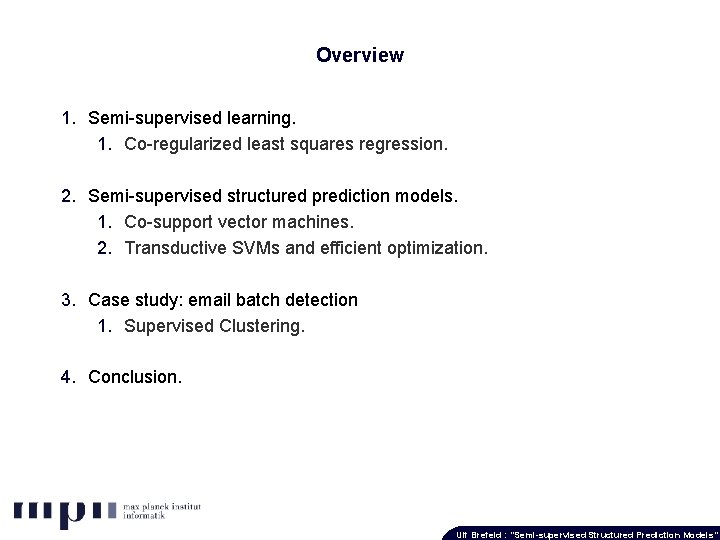

Overview 1. Semi-supervised learning. 1. Co-regularized least squares regression. 2. Semi-supervised structured prediction models. 1. Co-support vector machines. 2. Transductive SVMs and efficient optimization. 3. Case study: email batch detection 1. Supervised Clustering. 4. Conclusion. Ulf Brefeld : “Semi-supervised Structured Prediction Models”

Overview 1. Semi-supervised learning techniques. 1. Co-regularized least squares regression. 2. Semi-supervised structured prediction models. 1. Co-support vector machines. 2. Transductive SVMs and efficient optimization. 3. Email batch detection 1. Supervised Clustering. 4. Conclusion. Ulf Brefeld : “Semi-supervised Structured Prediction Models”

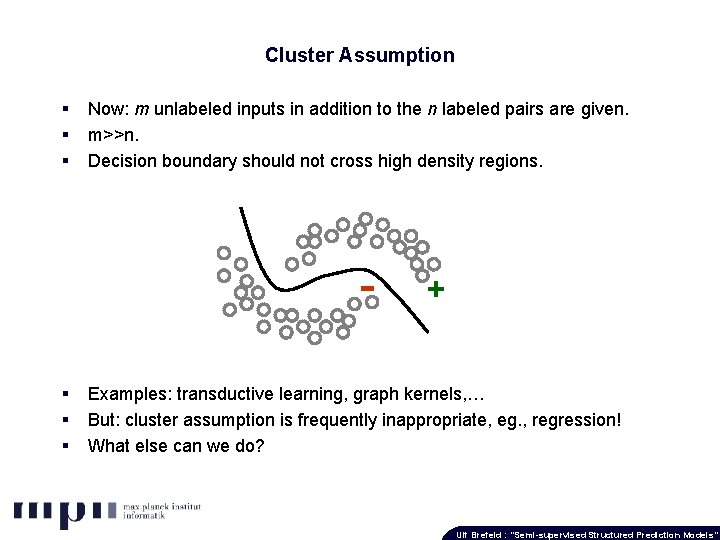

Cluster Assumption Now: m unlabeled inputs in addition to the n labeled pairs are given. m>>n. Decision boundary should not cross high density regions. § § § Examples: transductive learning, graph kernels, … But: cluster assumption is frequently inappropriate, eg. , regression! What else can we do? + § § § Ulf Brefeld : “Semi-supervised Structured Prediction Models” -

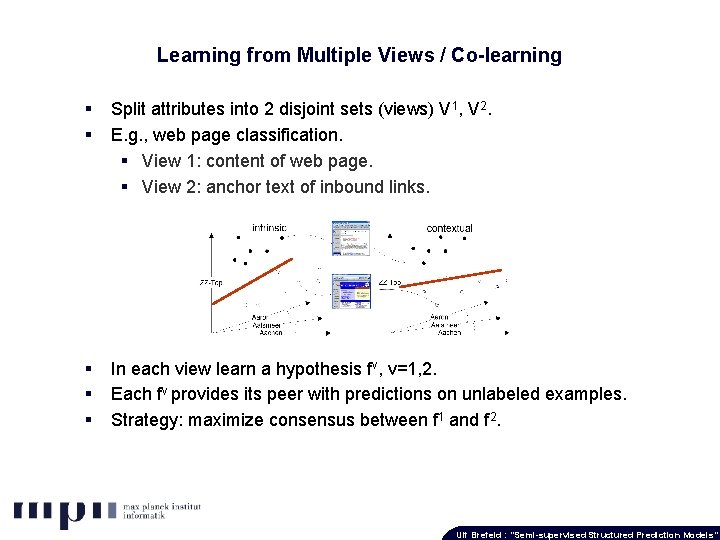

Learning from Multiple Views / Co-learning § § Split attributes into 2 disjoint sets (views) V 1, V 2. E. g. , web page classification. § View 1: content of web page. § View 2: anchor text of inbound links. § § § In each view learn a hypothesis fv, v=1, 2. Each fv provides its peer with predictions on unlabeled examples. Strategy: maximize consensus between f 1 and f 2. Ulf Brefeld : “Semi-supervised Structured Prediction Models”

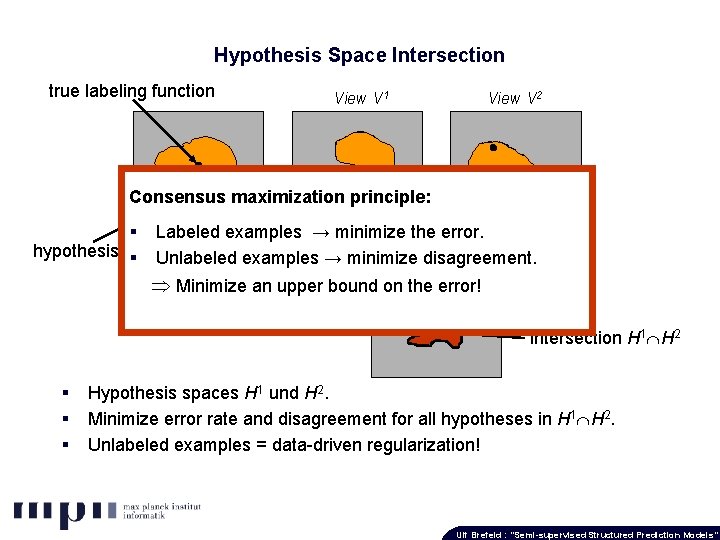

Hypothesis Space Intersection true labeling function View V 1 View V 2 Consensus maximization principle: § Labeled examples → minimize the error. hypothesis space § Unlabeled versionexamples space → minimize disagreement. Minimize an upper bound on the error! intersection H 1 H 2 § § § Hypothesis spaces H 1 und H 2. Minimize error rate and disagreement for all hypotheses in H 1 H 2. Unlabeled examples = data-driven regularization! Ulf Brefeld : “Semi-supervised Structured Prediction Models”

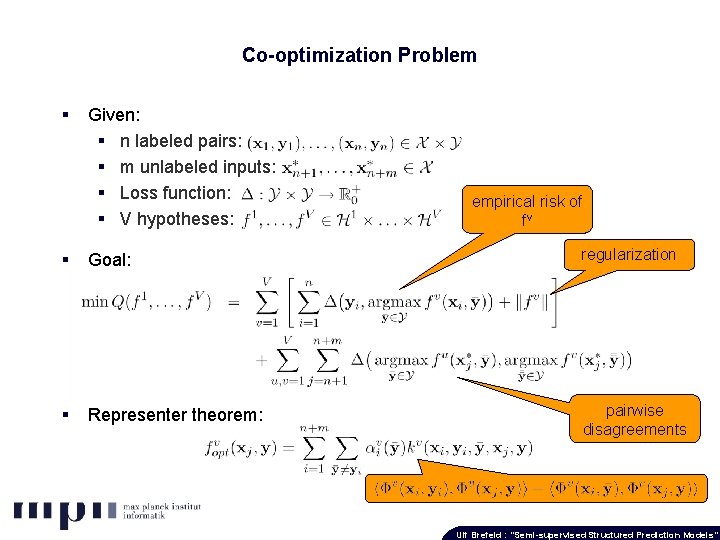

Co-optimization Problem § § Given: § n labeled pairs: (x 1, y 1), …, (xn, yn) Xx. Y § m unlabeled inputs: xn+1, …, xn+m X § Loss function: Δ: Yx. Y→R+ § V hypotheses: f 1, …, f. V H 1 x…x HV regularization Goal: V min Q(f 1, …f. V) = n v=1 i=1 V +λ § empirical risk of fv Representer theorem: Δ(yi, argmaxy’ fv(xi, y’)) + η ||fv||2 n+m u, v=1 j=n+1 Δ(argmaxy’ fu(xj, y’), argmaxy’’fv(xj, y’’)) pairwise disagreements Ulf Brefeld : “Semi-supervised Structured Prediction Models”

Overview 1. Semi-supervised learning techniques. 1. Co-regularized least squares regression. 2. Semi-supervised structured prediction models. 1. Co-support vector machines. 2. Transductive SVMs and efficient optimization. 3. Email batch detection 1. Supervised Clustering. 4. Conclusion. Ulf Brefeld : “Semi-supervised Structured Prediction Models”

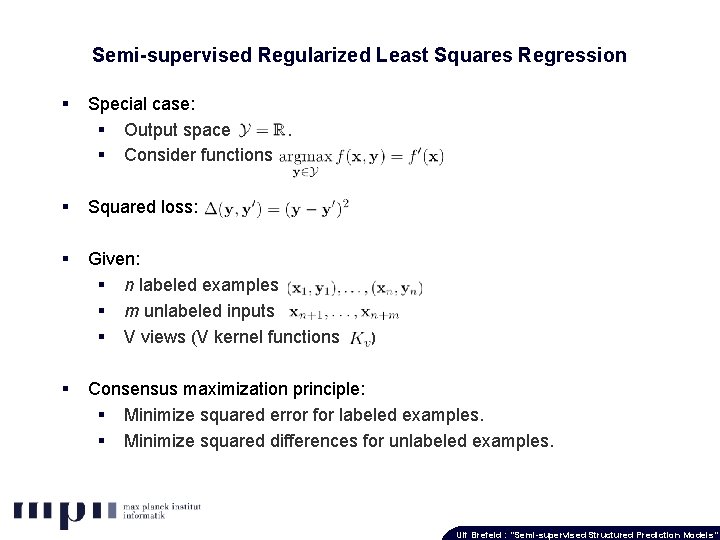

Semi-supervised Regularized Least Squares Regression § Special case: § Output space Y=R. § Consider functions § Squared loss: § Given: § n labeled examples § m unlabeled inputs § V views (V kernel functions § ) Consensus maximization principle: § Minimize squared error for labeled examples. § Minimize squared differences for unlabeled examples. Ulf Brefeld : “Semi-supervised Structured Prediction Models”

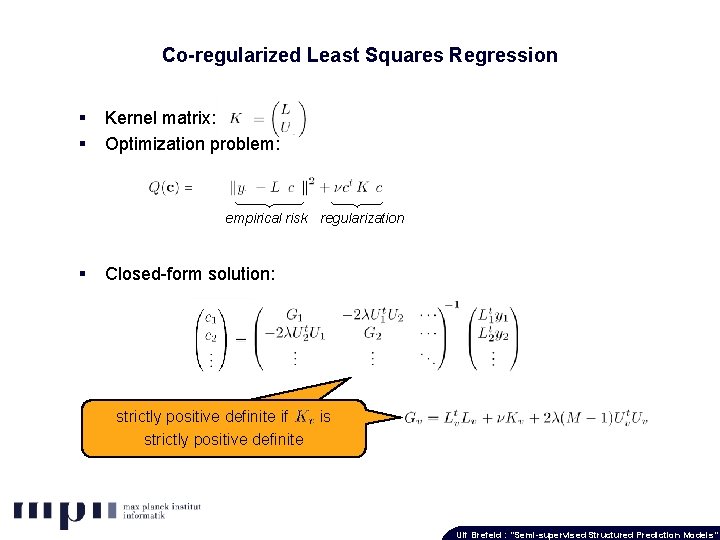

Co-regularized Least Squares Regression § § Kernel matrix: Optimization problem: empirical risk regularization § disagreement Closed-form solution: strictlypositive definite ifif K_v is is strictly positive definite strictly definite Ulf Brefeld : “Semi-supervised Structured Prediction Models”

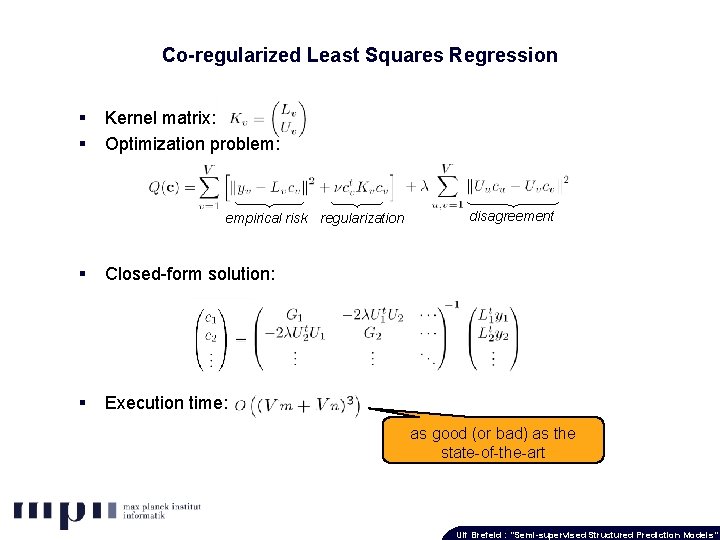

Co-regularized Least Squares Regression § § Kernel matrix: Optimization problem: empirical risk regularization § Closed-form solution: § Execution time: disagreement as good (or bad) as the state-of-the-art Ulf Brefeld : “Semi-supervised Structured Prediction Models”

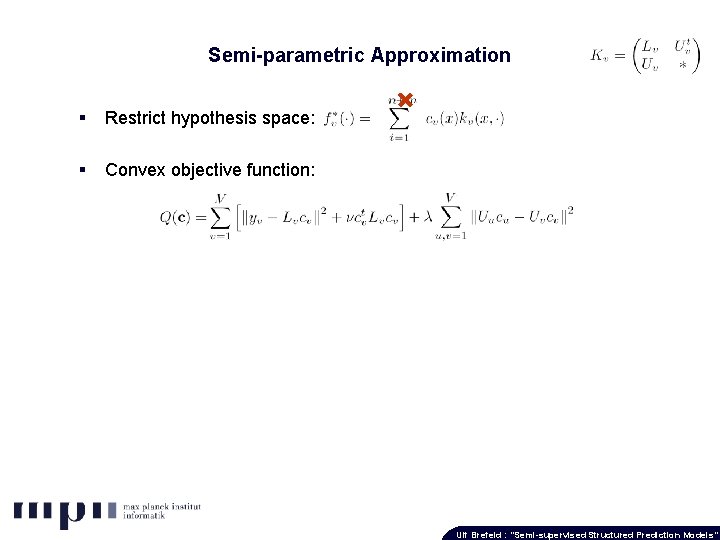

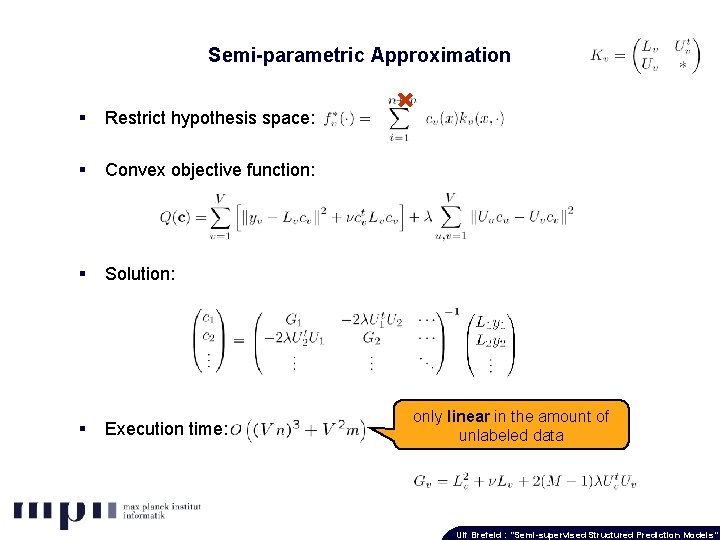

Semi-parametric Approximation § Restrict hypothesis space: § Convex objective function: Ulf Brefeld : “Semi-supervised Structured Prediction Models”

Semi-parametric Approximation § Restrict hypothesis space: § Convex objective function: § Solution: § Execution time: only linear in the amount of unlabeled data Ulf Brefeld : “Semi-supervised Structured Prediction Models”

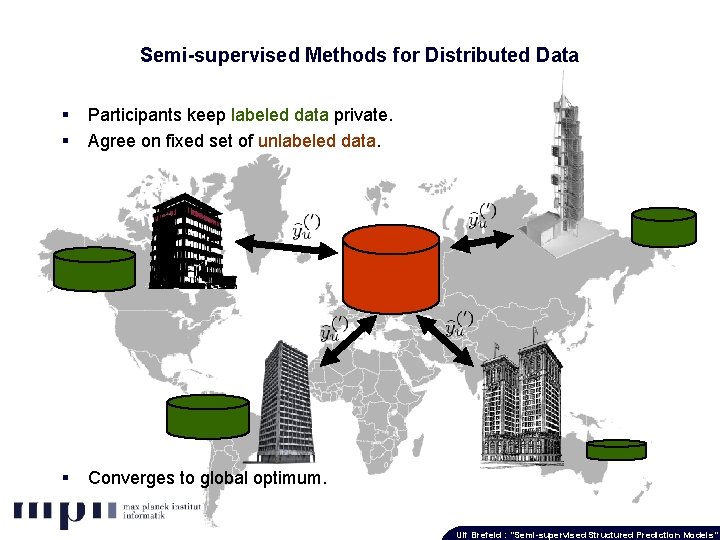

Semi-supervised Methods for Distributed Data § § Participants keep labeled data private. Agree on fixed set of unlabeled data. § Converges to global optimum. Ulf Brefeld : “Semi-supervised Structured Prediction Models”

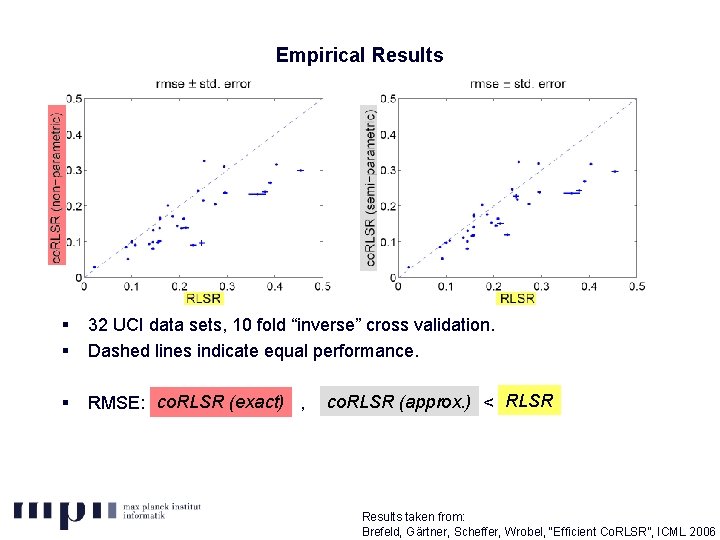

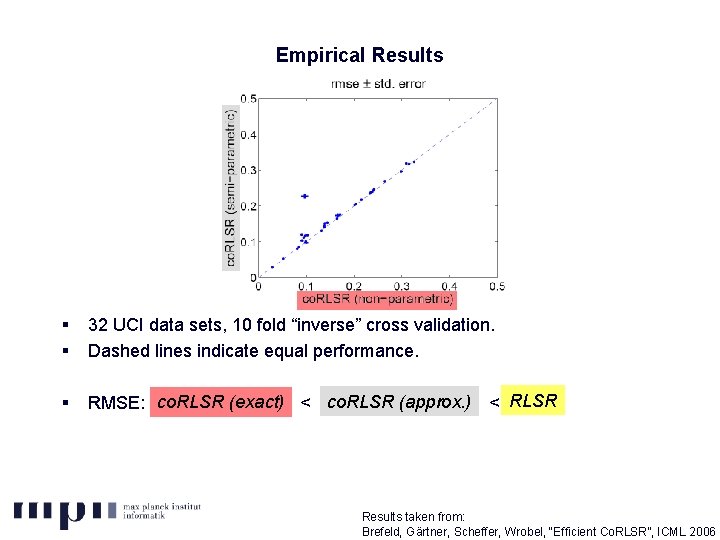

Empirical Results § § 32 UCI data sets, 10 fold “inverse” cross validation. Dashed lines indicate equal performance. § RLSR co. RLSR (approx. ) co. RLSR (exact) , semi-parametric RMSE: exact co. RLSR c < RLSR Results taken from: Brefeld, Gärtner, Scheffer, “Efficient Co. RLSR”, ICMLModels” 2006 Ulf Brefeld : Wrobel, “Semi-supervised Structured Prediction

Empirical Results § § 32 UCI data sets, 10 fold “inverse” cross validation. Dashed lines indicate equal performance. § RLSR co. RLSR (approx. ) co. RLSR (exact) < semi-parametric RMSE: exact co. RLSR c < RLSR Results taken from: Brefeld, Gärtner, Scheffer, “Efficient Co. RLSR”, ICMLModels” 2006 Ulf Brefeld : Wrobel, “Semi-supervised Structured Prediction

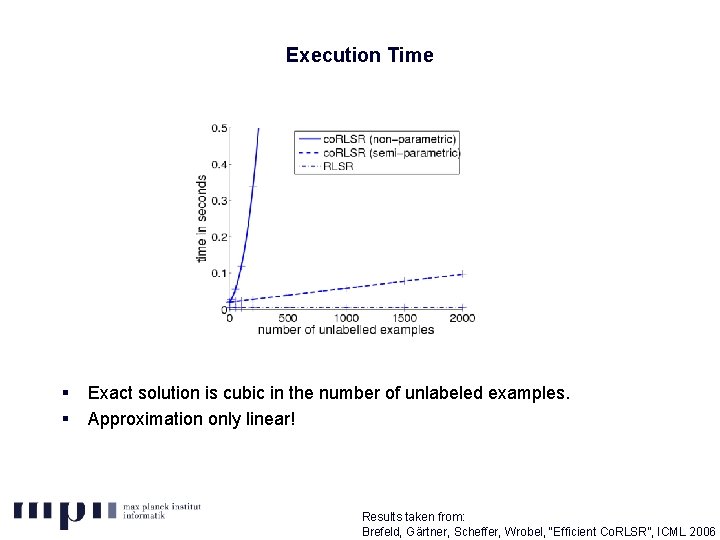

Execution Time § § Exact solution is cubic in the number of unlabeled examples. Approximation only linear! Results taken from: Brefeld, Gärtner, Scheffer, “Efficient Co. RLSR”, ICMLModels” 2006 Ulf Brefeld : Wrobel, “Semi-supervised Structured Prediction

Overview 1. Semi-supervised learning techniques. 1. Co-regularized least squares regression. 2. Semi-supervised structured prediction models. 1. Co-support vector machines. 2. Transductive SVMs and efficient optimization. 3. Email batch detection 1. Supervised Clustering. 4. Conclusion. Ulf Brefeld : “Semi-supervised Structured Prediction Models”

Semi-supervised Learning for Structured Output Variables § Given § n labeled examples § m unlabeled inputs § Joint decision function: § where Distinct joint feature mappings in V 1 and V 2 § Apply consensus maximization principle. § Minimize the error for labeled examples. § Minimize the disagreement for unlabeled examples. § Compute argmax § Viterbi algorithm (sequential output) § CKY algorithm (recursive grammar) Ulf Brefeld : “Semi-supervised Structured Prediction Models”

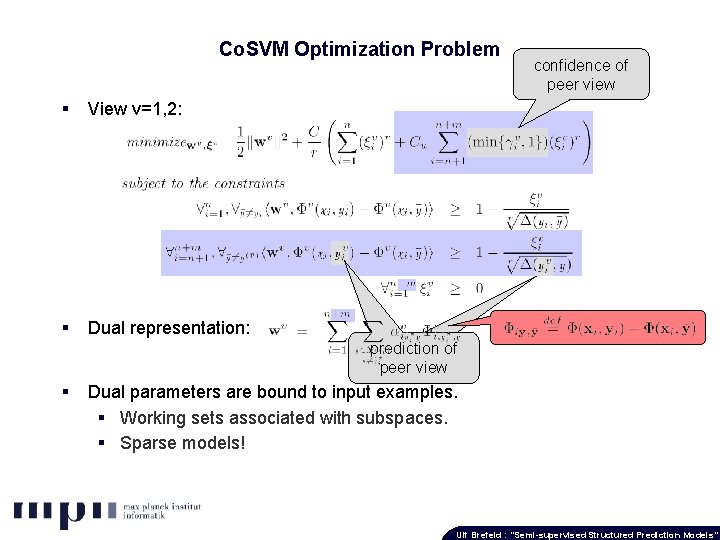

Co. SVM Optimization Problem § View v=1, 2: § Dual representation: § prediction of peer view Dual parameters are bound to input examples. § Working sets associated with subspaces. § Sparse models! confidence of peer view Ulf Brefeld : “Semi-supervised Structured Prediction Models”

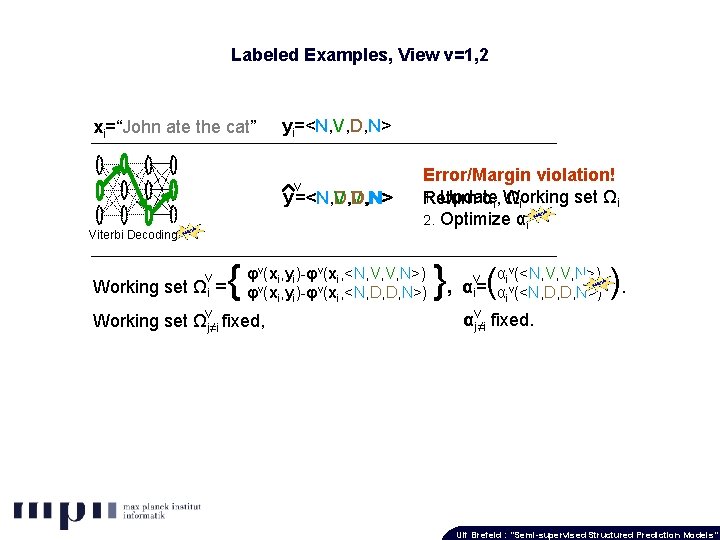

Labeled Examples, View v=1, 2 xi=“John ate the cat” yi=<N, V, D, N> v y =<N, D, D, N> =<N, V, V, N> =<N, V, D, N> Viterbi Decoding v Working set Ωi = v { Error/Margin violation! 1. Update set Ωi Return αi, Working Ωi 2. Optimize αi φv(xi, yi)-φv(xi, <N, V, V, N>) φv(xi, yi)-φv(xi, <N, D, D, N>) Working set Ωj≠i fixed, } ( αiv(<N, V, V, N>) , αi= α v(<N, D, D, N>) i v ). v αj≠i fixed. Ulf Brefeld : “Semi-supervised Structured Prediction Models”

Unlabeled Examples xi=“John went home” View 1 1 αj≠i fixed, 1 Working set Ωj≠i fixed. { } ( ) 1 1 1 Working set Ωi = φ (xi, <N, V, V>)-φ (xi, <D, V, N>) , αi= αi (<D, V, N>) , 1 y =<D, V, N> =<N, V, N> Viterbi Decoding 2 Disagreement / margin Consensus: return αi 1, αviolation! i , Ωi View 2 Update working sets Ωi 1, Ωi 2 2. Optimize αi 1, αi 2 1. 2 y =<N, V, V> =<N, V, N> Viterbi Decoding { } ( 2 2 2 Working set Ωi = φ (xi, <D, V, N>)-φ (xi, <N, V, V>) , αi= αi (<N, V, V>) 2 Working set Ωj≠i fixed. ). 2 αj≠i fixed, Ulf Brefeld : “Semi-supervised Structured Prediction Models”

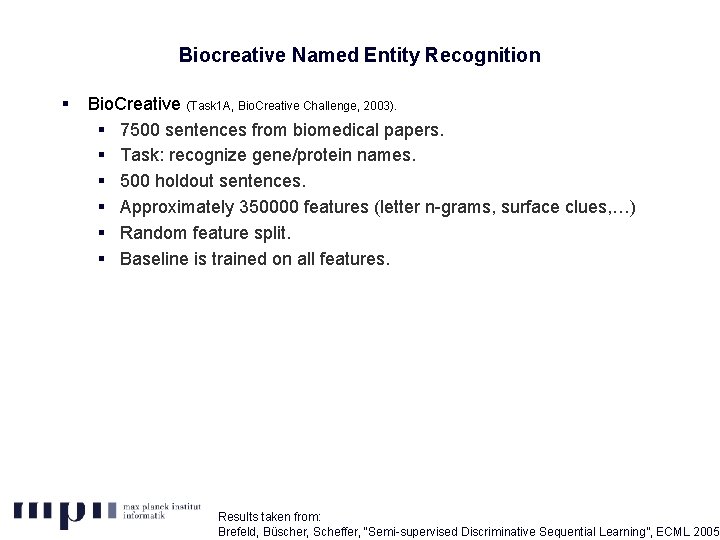

Biocreative Named Entity Recognition § Bio. Creative (Task 1 A, Bio. Creative Challenge, 2003). § 7500 sentences from biomedical papers. § Task: recognize gene/protein names. § 500 holdout sentences. § Approximately 350000 features (letter n-grams, surface clues, …) § Random feature split. § Baseline is trained on all features. Results taken from: Brefeld, Büscher, Scheffer, “Semi-supervised. Ulf Discriminative Sequential Learning”, ECML 2005 Brefeld : “Semi-supervised Structured Prediction Models”

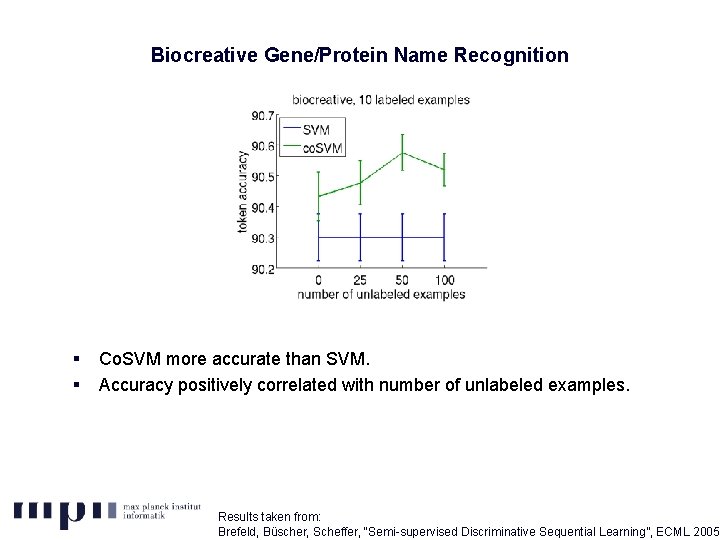

Biocreative Gene/Protein Name Recognition § § Co. SVM more accurate than SVM. Accuracy positively correlated with number of unlabeled examples. Results taken from: Brefeld, Büscher, Scheffer, “Semi-supervised. Ulf Discriminative Sequential Learning”, ECML 2005 Brefeld : “Semi-supervised Structured Prediction Models”

Natural Language Parsing § Wall Street Journal corpus (Penn tree bank). § Subsets 2 -21. § 8, 666 sentences of length ≤ 15 tokens. § Contex free grammar contains > 4, 800 production rules. § Negra corpus. § German news paper archive. § 14, 137 sentences of between 5 and 25 tokens. § Cf. G contains >26, 700 production rules. § Experimental setup: § Local features (rule identity, rule at border, span width, …). § Loss: (ya, yb) = 1 - F 1(ya, yb). § 100 holdout examples. § CKY parser by Mark Johnson. Results taken from: Brefeld, Scheffer, “Semi-supervised Learning for Structured Ouptut. Structured Variables”, ICMLModels” 2006 Ulf Brefeld : “Semi-supervised Prediction

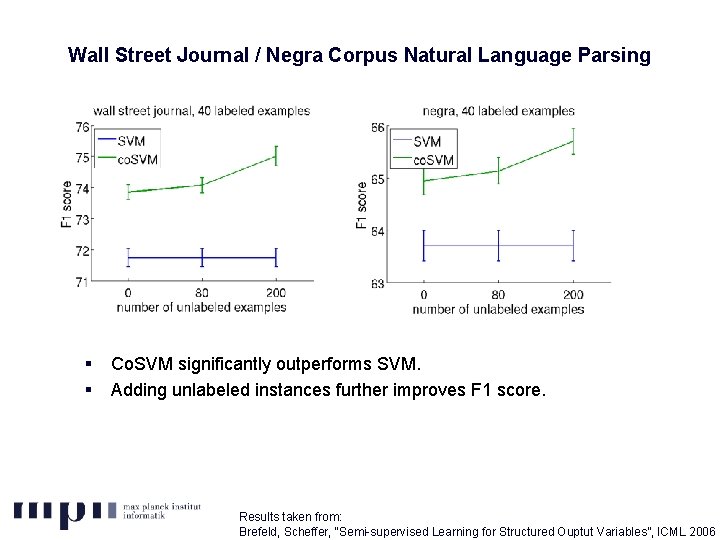

Wall Street Journal / Negra Corpus Natural Language Parsing § § Co. SVM significantly outperforms SVM. Adding unlabeled instances further improves F 1 score. Results taken from: Brefeld, Scheffer, “Semi-supervised Learning for Structured Ouptut. Structured Variables”, ICMLModels” 2006 Ulf Brefeld : “Semi-supervised Prediction

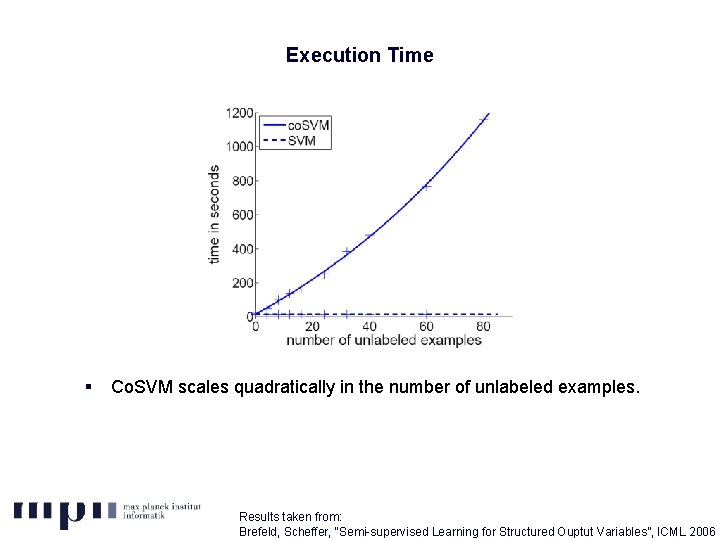

Execution Time § Co. SVM scales quadratically in the number of unlabeled examples. Results taken from: Brefeld, Scheffer, “Semi-supervised Learning for Structured Ouptut. Structured Variables”, ICMLModels” 2006 Ulf Brefeld : “Semi-supervised Prediction

Overview 1. Semi-supervised learning techniques. 1. Co-regularized least squares regression. 2. Semi-supervised structured prediction models. 1. Co-support vector machines. 2. Transductive SVMs and efficient optimization. 3. Email batch detection 1. Supervised Clustering. 4. Conclusion. Ulf Brefeld : “Semi-supervised Structured Prediction Models”

Transductive Support Vector Machines for Structured Variables § Binary transductive SVMs: § Cluster assumption. § Discrete variables for unlabeled instances. § Optimization is expensive even for binary tasks! § Structural transductive SVMs. § Decoding = combinatorial optimization of discrete variables. § Intractable! § Efficient optimization: § Transform, remove discrete variables. § Differentiable, continuous optimization. § Apply gradient-based, unconstraint optimization techniques. Ulf Brefeld : “Semi-supervised Structured Prediction Models”

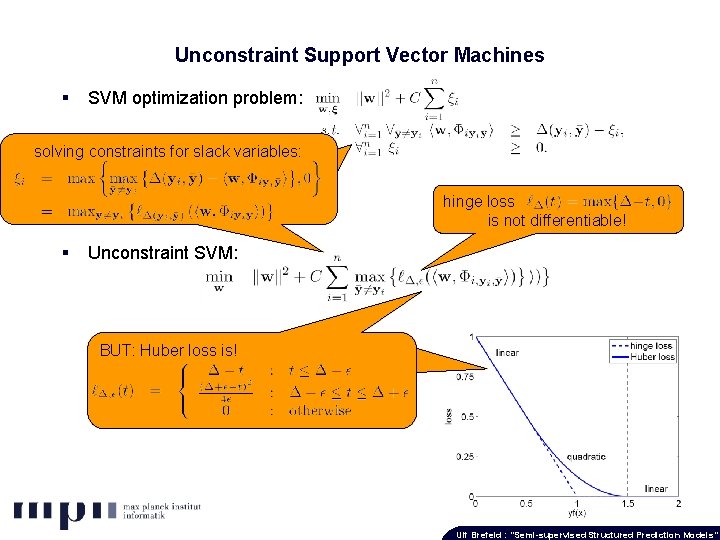

Unconstraint Support Vector Machines § SVM optimization problem: solving constraints for slack variables: hinge loss is not differentiable! § Unconstraint SVM: BUT: Huber loss is! Ulf Brefeld : “Semi-supervised Structured Prediction Models”

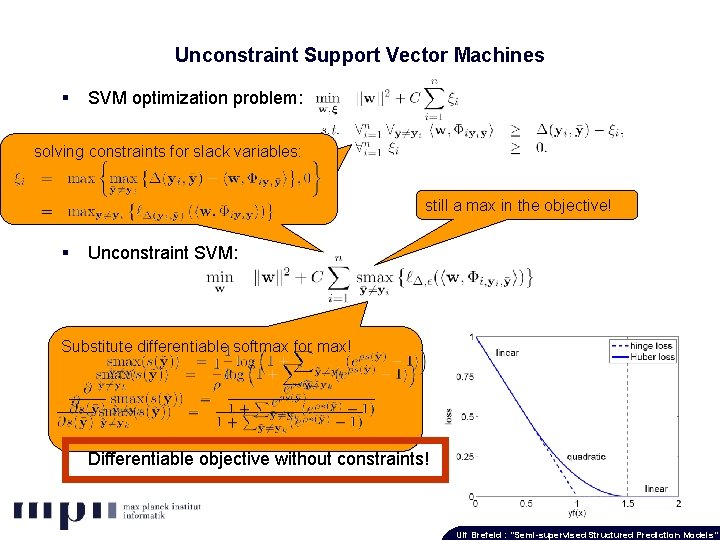

Unconstraint Support Vector Machines § SVM optimization problem: solving constraints for slack variables: still a max in the objective! § Unconstraint SVM: Substitute differentiable softmax for max! § Differentiable objective without constraints! Ulf Brefeld : “Semi-supervised Structured Prediction Models”

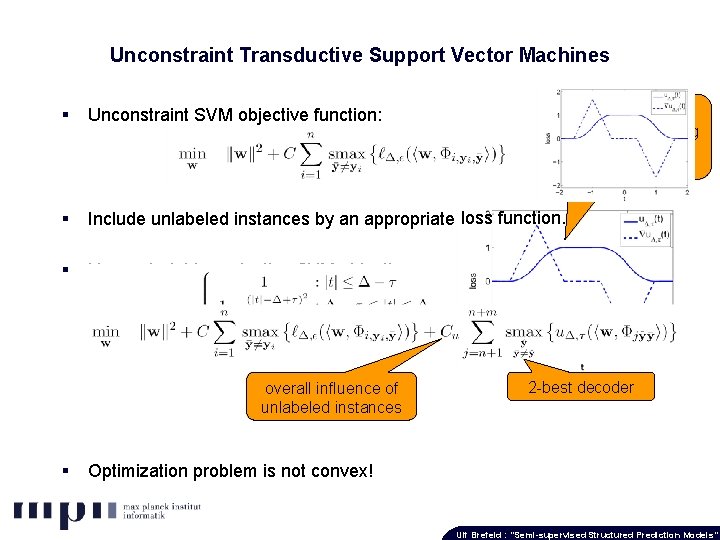

Unconstraint Transductive Support Vector Machines Mitigate margin violations by moving w in two symmetric ways § Unconstraint SVM objective function: § Include unlabeled instances by an appropriate loss function. Unconstraint transductive SVM objective: § overall influence of unlabeled instances § 2 -best decoder Optimization problem is not convex! Ulf Brefeld : “Semi-supervised Structured Prediction Models”

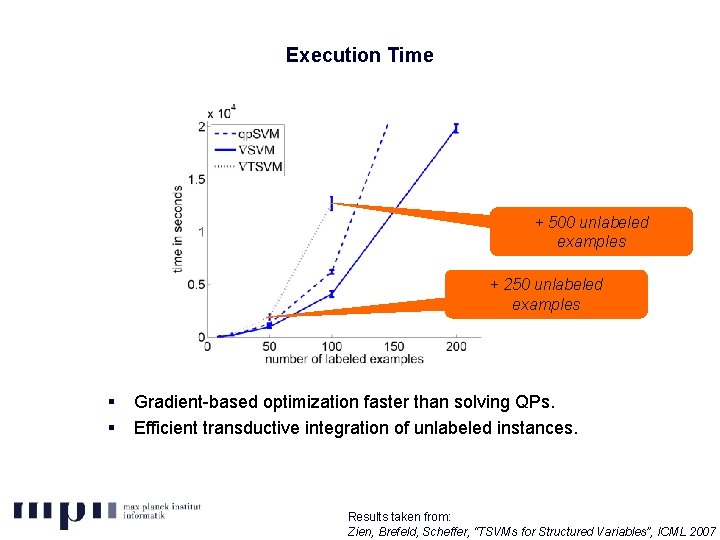

Execution Time + 500 unlabeled examples + 250 unlabeled examples § § Gradient-based optimization faster than solving QPs. Efficient transductive integration of unlabeled instances. Results taken from: Zien, Brefeld, Scheffer, “TSVMs for Structured Variables”, ICMLModels” 2007 Ulf Brefeld : “Semi-supervised Prediction

Spanish News Wire Named Entity Recognition § Spanish News Wire (Special Session of Co. NLL, 2002). § 3100 sentences of between 10 and 40 tokens. § Entities: person, location, organization and misc. names (9 labels). § Window of size 3 around each token. § Approximately 120, 000 features (token itself, surface clues. . . ). § 300 holdout sentences. Results taken from: Zien, Brefeld, Scheffer, “TSVMs for Structured Variables”, ICMLModels” 2007 Ulf Brefeld : “Semi-supervised Prediction

![token error [%] Spanish News Named Entity Recognition number of unlabeled examples § § token error [%] Spanish News Named Entity Recognition number of unlabeled examples § §](http://slidetodoc.com/presentation_image_h/a51c9c1b9fc6dc2e0806780cf76e2c02/image-40.jpg)

token error [%] Spanish News Named Entity Recognition number of unlabeled examples § § TSVM has significantly lower error rates than SVMs. Error decreases in terms of the number of unlabeled instances. Results taken from: Zien, Brefeld, Scheffer, “TSVMs for Structured Variables”, ICMLModels” 2007 Ulf Brefeld : “Semi-supervised Prediction

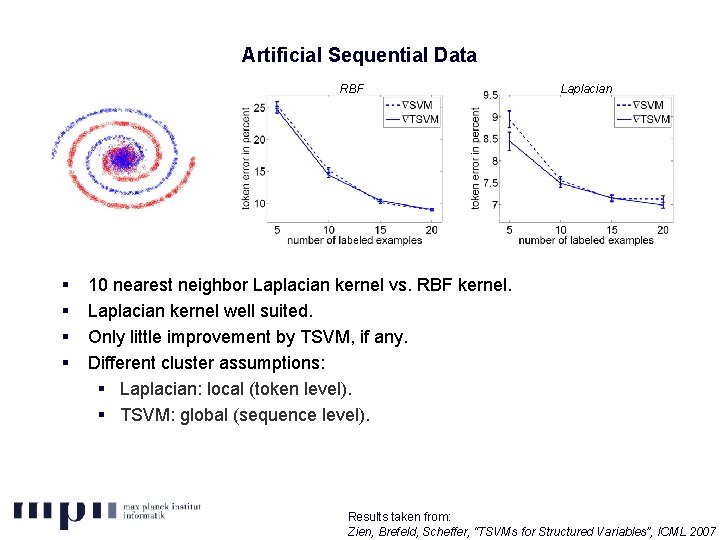

Artificial Sequential Data RBF § § Laplacian 10 nearest neighbor Laplacian kernel vs. RBF kernel. Laplacian kernel well suited. Only little improvement by TSVM, if any. Different cluster assumptions: § Laplacian: local (token level). § TSVM: global (sequence level). Results taken from: Zien, Brefeld, Scheffer, “TSVMs for Structured Variables”, ICMLModels” 2007 Ulf Brefeld : “Semi-supervised Prediction

Overview 1. Semi-supervised learning techniques. 1. Co-regularized least squares regression. 2. Semi-supervised structured prediction models. 1. Co-support vector machines. 2. Transductive SVMs and efficient optimization. 3. Email batch detection. 1. Supervised Clustering. 4. Conclusion. Ulf Brefeld : “Semi-supervised Structured Prediction Models”

Supervised Clustering of Data Streams for Email Batch Detection § Spam characteristics: § Amount of spam messages in electronic messaging is ~80%. § Approximately 80 -90% of these spams are generated by only a few spammers. § Spammers maintain templates and exchange them rapidly. § Many emails generated by the same template (=batch) in short time frames. § Goal: § Detect batches in the data stream. § Ground-truth of exact clusterings exist! § Batch information: § Black/white listing. § Improve spam/non-spam classification. Ulf Brefeld : “Semi-supervised Structured Prediction Models”

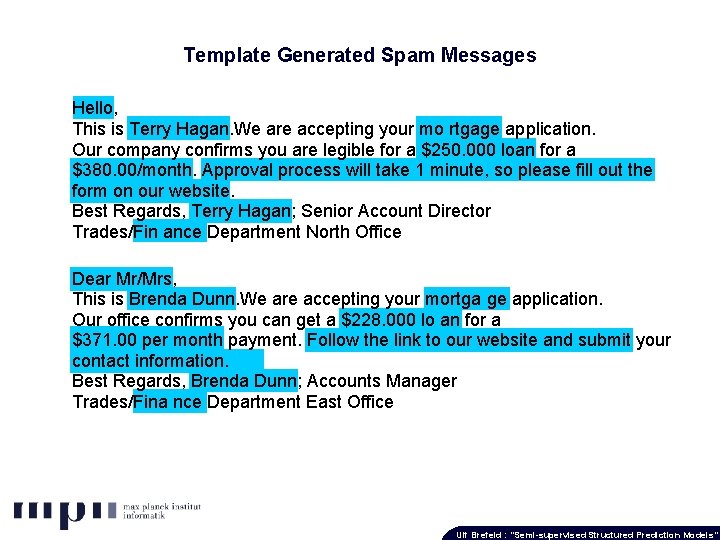

Template Generated Spam Messages Hello, This is Terry Hagan. We are accepting your mo rtgage application. Our company confirms you are legible for a $250. 000 loan for a $380. 00/month. Approval process will take 1 minute, so please fill out the form on our website. Best Regards, Terry Hagan; Senior Account Director Trades/Fin ance Department North Office Dear Mr/Mrs, This is Brenda Dunn. We are accepting your mortga ge application. Our office confirms you can get a $228. 000 lo an for a $371. 00 per month payment. Follow the link to our website and submit your contact information. Best Regards, Brenda Dunn; Accounts Manager Trades/Fina nce Department East Office Ulf Brefeld : “Semi-supervised Structured Prediction Models”

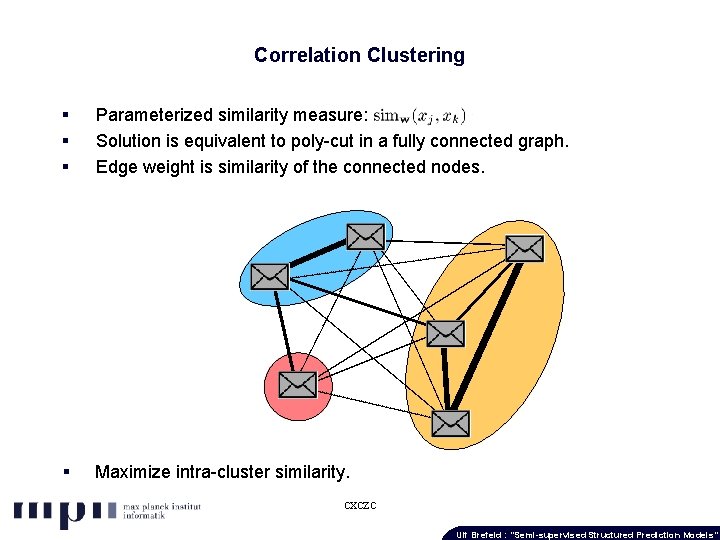

Correlation Clustering § § § Parameterized similarity measure: Solution is equivalent to poly-cut in a fully connected graph. Edge weight is similarity of the connected nodes. § Maximize intra-cluster similarity. cxczc Ulf Brefeld : “Semi-supervised Structured Prediction Models”

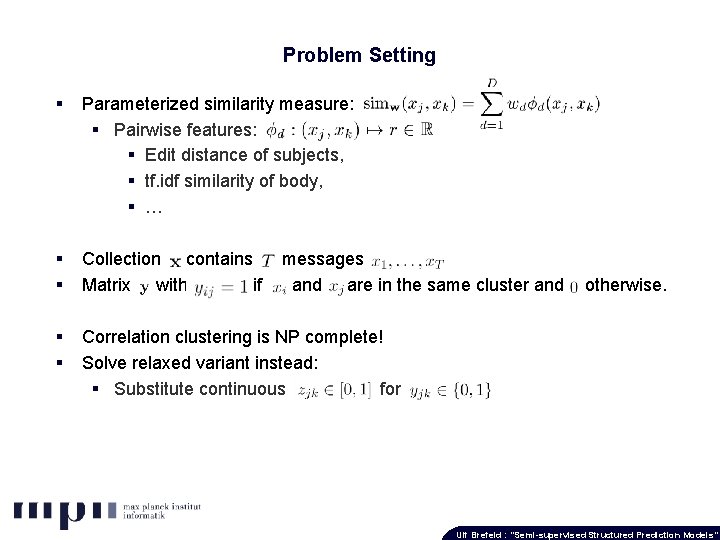

Problem Setting § Parameterized similarity measure: § Pairwise features: § Edit distance of subjects, § tf. idf similarity of body, § … § § Collection x contains Ti messages x 1(i), …, x. Ti. Matrix with if and are in the same cluster and 0 otherwise. § § Correlation clustering is NP complete! Solve relaxed variant instead: § Substitute continuous for Ulf Brefeld : “Semi-supervised Structured Prediction Models”

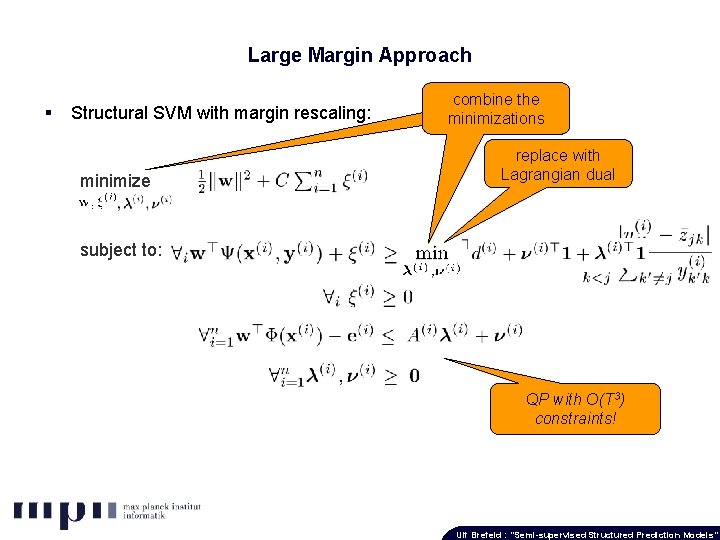

Large Margin Approach § Structural SVM with margin rescaling: minimize combine the minimizations replace with Lagrangian dual subject to: QP with O(T 3) constraints! Ulf Brefeld : “Semi-supervised Structured Prediction Models”

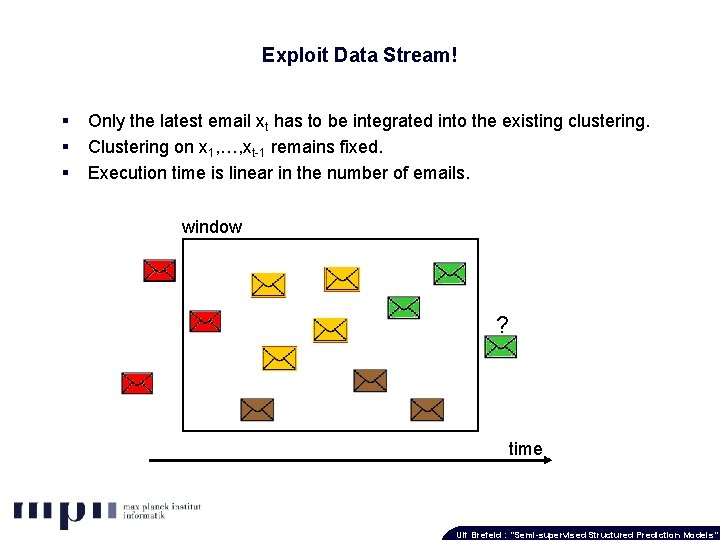

Exploit Data Stream! § § § Only the latest email xt has to be integrated into the existing clustering. Clustering on x 1, …, xt-1 remains fixed. Execution time is linear in the number of emails. window ? time Ulf Brefeld : “Semi-supervised Structured Prediction Models”

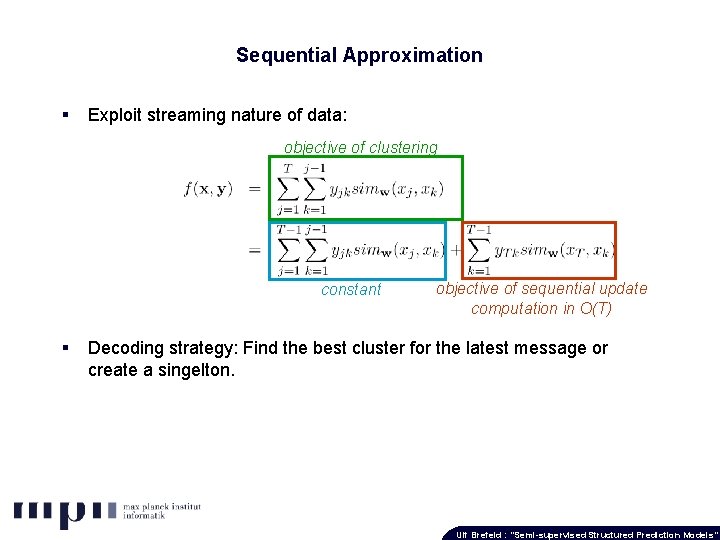

Sequential Approximation § Exploit streaming nature of data: objective of clustering constant § objective of sequential update computation in O(T) Decoding strategy: Find the best cluster for the latest message or create a singelton. Ulf Brefeld : “Semi-supervised Structured Prediction Models”

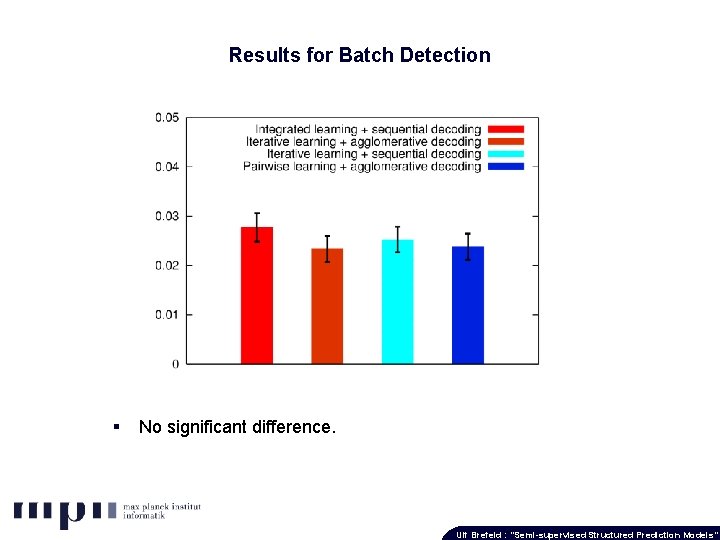

Results for Batch Detection § No significant difference. Ulf Brefeld : “Semi-supervised Structured Prediction Models”

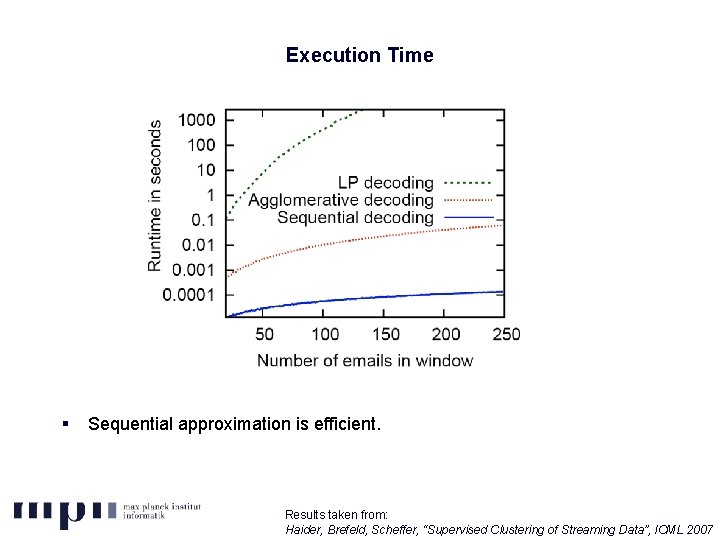

Execution Time § Sequential approximation is efficient. Results taken from: Haider, Brefeld, Scheffer, “Supervised Clustering of Streaming Data”, ICMLModels” 2007 Ulf Brefeld : “Semi-supervised Structured Prediction

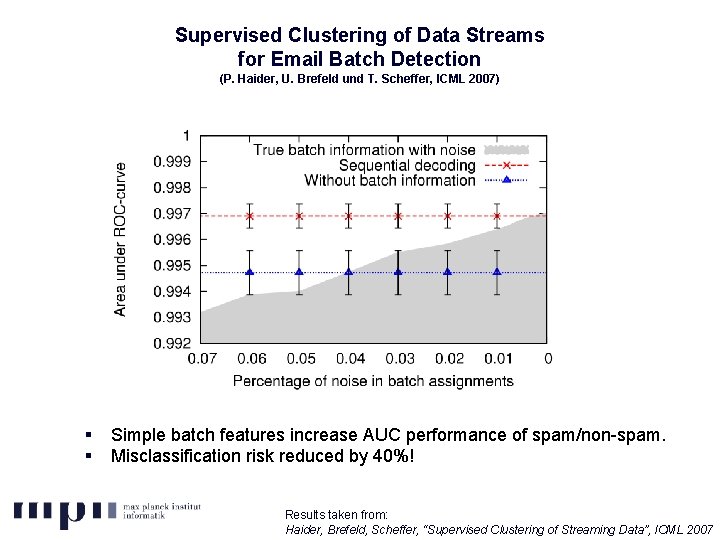

Supervised Clustering of Data Streams for Email Batch Detection (P. Haider, U. Brefeld und T. Scheffer, ICML 2007) § § Simple batch features increase AUC performance of spam/non-spam. Misclassification risk reduced by 40%! Results taken from: Results Haider, Brefeld, “Supervised Clustering of Streaming Data”, ICML 2007 Zien, Scheffer, Brefeld, Scheffer, “TSVMs for Structured Variables”, ICMLModels” 2007 Ulf Brefeld : “Semi-supervised Structured Prediction

Overview 1. Semi-supervised learning techniques. 1. Co-regularized least squares regression. 2. Semi-supervised structured prediction models. 1. Co-support vector machines. 2. Transductive SVMs and efficient optimization. 3. Email batch detection. 1. Supervised Clustering. 4. Conclusion. Ulf Brefeld : “Semi-supervised Structured Prediction Models”

Conclusion § Semi-supervised learning. § Consensus maximization principle vs. cluster assumption. § Co-regularized Least Squares Regression. § Semi-supervised structured prediction models: § Co. SVMs and TSVMs. § Efficient optimization. § Empirical results: § Semi-supervised variants have lower error than baselines. § Adding unlabeled data further improves accuracy. § Supervised Clustering: § Efficient optimization. § Batch features reduce misclassification risk. Ulf Brefeld : “Semi-supervised Structured Prediction Models”

Overview 1. Semi-supervised learning techniques. 1. Co-regularized least squares regression. 2. Semi-supervised structured prediction models. 1. Co-support vector machines. 2. Transductive SVMs and efficient optimization. 3. Email batch detection. 1. Supervised Clustering. 4. Conclusion. Ulf Brefeld : “Semi-supervised Structured Prediction Models”

Conclusion § Semi-supervised learning. § Consensus maximization principle vs. cluster assumption. § Co-regularized Least Squares Regression. § Semi-supervised structured prediction models: § Co. SVMs and TSVMs. § Efficient optimization. § Empirical results: § Semi-supervised variants have lower error than baselines. § Adding unlabeled data further improves accuracy. Ulf Brefeld : “Semi-supervised Structured Prediction Models”

- Slides: 56