Semantic Similarity for Music Retrieval

- Slides: 1

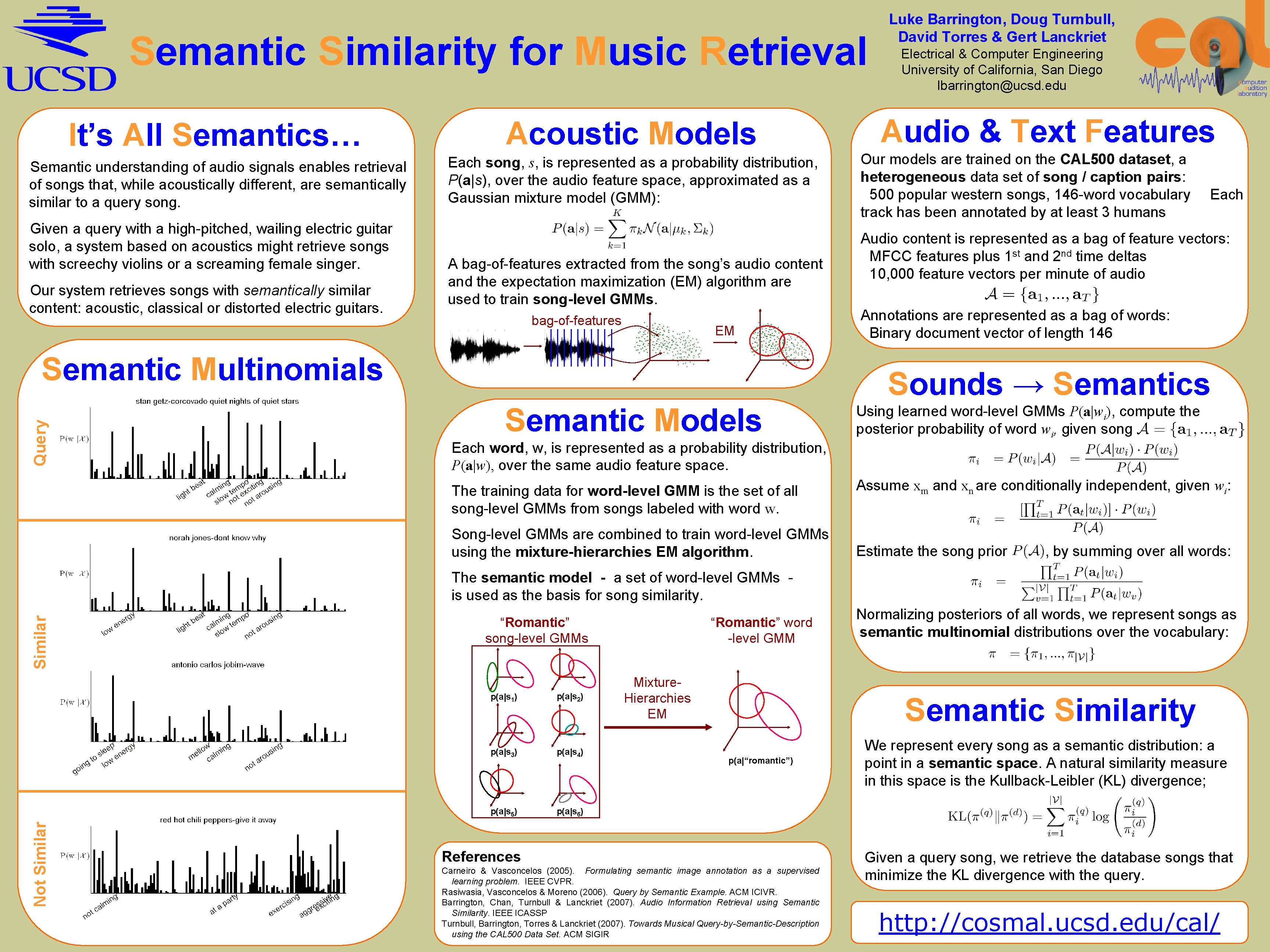

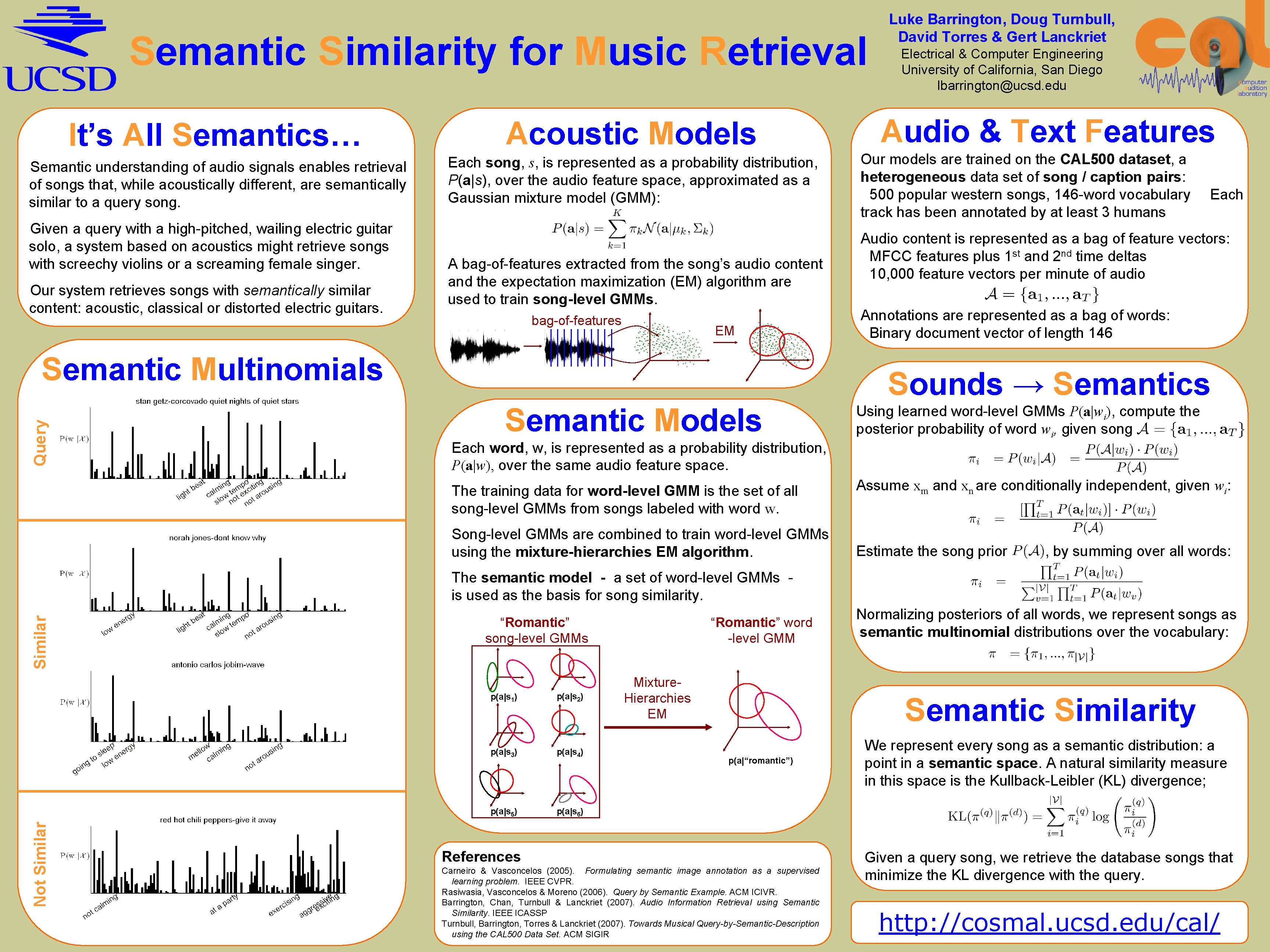

Luke Barrington, Doug Turnbull, David Torres & Gert Lanckriet
Electrical & Computer Engineering, University of California, San Diego
lbarrington@ucsd.edu

It’s All Semantics…

Semantic understanding of audio signals enables retrieval of songs that, while acoustically different, are semantically similar to a query song. Given a query with a high-pitched, wailing electric guitar solo, a system based on acoustics might retrieve songs with screechy violins or a screaming female singer. Our system retrieves songs with semantically similar content: acoustic, classical or distorted electric guitars.

Acoustic Models

Each song, s, is represented as a probability distribution, P(a|s), over the audio feature space, approximated as a Gaussian mixture model (GMM). Song-level GMMs are trained with the expectation maximization (EM) algorithm on a bag-of-features extracted from the song’s audio content.

[Figure: a bag-of-features is fit with EM. Other figure labels: "Semantic Multinomials", "Query".]

Semantic Models

Each word, w, is represented as a probability distribution, P(a|w), over the same audio feature space. The training data for a word-level GMM is the set of all song-level GMMs from songs labeled with word w. Song-level GMMs are combined to train word-level GMMs using the mixture-hierarchies EM algorithm.
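The song-level training step described under Acoustic Models can be illustrated with a small EM loop. This is a minimal sketch under strong simplifying assumptions, not the authors' implementation: it fits a one-dimensional mixture, whereas the poster's features are multi-dimensional MFCC vectors, and it does not cover the mixture-hierarchies EM used for word-level models. The function names `gaussian_pdf` and `fit_gmm_1d`, the quantile initialisation, and the variance floor are all hypothetical choices made for this example.

```python
import math


def gaussian_pdf(x, mean, var):
    """Density of a 1-D Gaussian with the given mean and variance."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def fit_gmm_1d(samples, n_components=2, n_iters=50, var_floor=1e-6):
    """Fit a 1-D Gaussian mixture to `samples` with plain EM.

    Returns (weights, means, variances), one entry per component.
    """
    ordered = sorted(samples)
    n = len(ordered)
    # Initialise the means at evenly spaced quantiles of the data.
    means = [ordered[(2 * k + 1) * n // (2 * n_components)]
             for k in range(n_components)]
    mean_all = sum(samples) / n
    var_all = max(sum((x - mean_all) ** 2 for x in samples) / n, var_floor)
    variances = [var_all] * n_components
    weights = [1.0 / n_components] * n_components

    for _ in range(n_iters):
        # E-step: responsibility of each component for each sample.
        resp = []
        for x in samples:
            joint = [w * gaussian_pdf(x, m, v)
                     for w, m, v in zip(weights, means, variances)]
            total = sum(joint) or 1e-300  # guard against numerical underflow
            resp.append([j / total for j in joint])

        # M-step: re-estimate weights, means and variances.
        for k in range(n_components):
            nk = sum(r[k] for r in resp)
            if nk <= 0.0:
                continue  # leave an empty component unchanged
            weights[k] = nk / n
            means[k] = sum(r[k] * x for r, x in zip(resp, samples)) / nk
            variances[k] = max(
                sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, samples)) / nk,
                var_floor,
            )
    return weights, means, variances
```

In practice each song's bag of feature vectors would be passed to a multivariate version of this routine, giving one GMM per song.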
Audio & Text Features

Our models are trained on the CAL500 dataset, a heterogeneous data set of song/caption pairs:

- 500 popular western songs
- 146-word vocabulary
- Each track has been annotated by at least 3 humans

Audio content is represented as a bag of feature vectors:

- MFCC features plus 1st and 2nd time deltas
- 10,000 feature vectors per minute of audio

Annotations are represented as a bag of words:

- Binary document vector of length 146

Sounds → Semantics

The semantic model, a set of word-level GMMs, is used as the basis for song similarity.

[Figure: “Romantic” song-level GMMs p(a|s1), p(a|s2), p(a|s3), p(a|s4), p(a|s5), p(a|s6) are combined by mixture-hierarchies EM into the “Romantic” word-level GMM p(a|“romantic”).]

Using the learned word-level GMMs P(a|wi), we compute the posterior probability of word wi, given a song. We assume that feature vectors xm and xn are conditionally independent, given wi, and estimate the song prior by summing over all words. Normalizing the posteriors of all words, we represent songs as semantic multinomial distributions over the vocabulary.

Semantic Similarity

We represent every song as a semantic distribution: a point in a semantic space. A natural similarity measure in this space is the Kullback-Leibler (KL) divergence. Given a query song, we retrieve the database songs that minimize the KL divergence with the query.

[Figure label: "Not Similar"]

References

- Carneiro & Vasconcelos (2005). Formulating semantic image annotation as a supervised learning problem. IEEE CVPR.
- Rasiwasia, Vasconcelos & Moreno (2006). Query by Semantic Example. ACM ICIVR.
- Barrington, Chan, Turnbull & Lanckriet (2007). Audio Information Retrieval using Semantic Similarity. IEEE ICASSP.
- Turnbull, Barrington, Torres & Lanckriet (2007). Towards Musical Query-by-Semantic-Description using the CAL500 Data Set. ACM SIGIR.

http://cosmal.ucsd.edu/cal/
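The equations that accompanied the Sounds → Semantics and Semantic Similarity text did not survive extraction. The block below is a reconstruction consistent with the surrounding sentences, not a copy of the poster's formulas. It assumes notation that does not appear in the extracted text: a song's feature vectors are written as a set X = {x_1, ..., x_T}, the vocabulary size is V (146 for CAL500), π_i denotes the i-th entry of a song's semantic multinomial, and q and d are the semantic multinomials of the query and a database song.

```latex
\begin{align}
  P(w_i \mid \mathcal{X})
    &= \frac{P(\mathcal{X} \mid w_i)\, P(w_i)}{P(\mathcal{X})}
    && \text{(posterior of word } w_i \text{ given the song)} \\
  P(\mathcal{X} \mid w_i)
    &= \prod_{t=1}^{T} P(x_t \mid w_i)
    && \text{(conditional independence given } w_i \text{)} \\
  P(\mathcal{X})
    &= \sum_{j=1}^{V} P(\mathcal{X} \mid w_j)\, P(w_j)
    && \text{(song prior, summed over all words)} \\
  \pi_i
    &= \frac{P(w_i \mid \mathcal{X})}{\sum_{j=1}^{V} P(w_j \mid \mathcal{X})}
    && \text{(semantic multinomial)} \\
  \mathrm{KL}(q \,\|\, d)
    &= \sum_{i=1}^{V} q_i \log \frac{q_i}{d_i}
    && \text{(query-to-database divergence)}
\end{align}
```

With the song prior defined as the sum over all words, the posteriors already sum to one, so the normalization step leaves them unchanged in this idealised form.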
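The pipeline described under Sounds → Semantics and Semantic Similarity (word posteriors, semantic multinomials, KL-based ranking) can be sketched in a few lines. This is an illustration under stated assumptions rather than the authors' code: the per-word log-likelihoods are taken as given inputs (in the poster's system they would come from the word-level GMMs), the word prior defaults to uniform, and the small `eps` smoothing in the KL divergence is added here only to avoid division by zero. All function names are hypothetical.

```python
import math


def log_sum_exp(values):
    """Numerically stable log(sum(exp(v) for v in values))."""
    peak = max(values)
    return peak + math.log(sum(math.exp(v - peak) for v in values))


def semantic_multinomial(log_likelihoods, priors=None):
    """Turn per-word song log-likelihoods into word posteriors.

    log_likelihoods[i] is assumed to be the sum over the song's feature
    vectors of log P(x_t | w_i), i.e. the conditional-independence product
    taken in the log domain. Returns a list of posteriors that sums to 1.
    """
    n_words = len(log_likelihoods)
    if priors is None:
        priors = [1.0 / n_words] * n_words  # assumed uniform word prior
    log_joint = [ll + math.log(p) for ll, p in zip(log_likelihoods, priors)]
    log_evidence = log_sum_exp(log_joint)  # log of the song prior
    return [math.exp(lj - log_evidence) for lj in log_joint]


def kl_divergence(q, d, eps=1e-12):
    """KL(q || d) between two multinomials, with eps smoothing (an assumption)."""
    return sum(qi * math.log((qi + eps) / (di + eps))
               for qi, di in zip(q, d) if qi > 0.0)


def retrieve(query, database, top_k=None):
    """Rank database songs by increasing KL divergence from the query.

    `database` maps a song name to its semantic multinomial.
    Returns a list of (name, divergence) pairs, most similar first.
    """
    ranked = sorted(
        ((name, kl_divergence(query, multinomial))
         for name, multinomial in database.items()),
        key=lambda pair: pair[1],
    )
    return ranked if top_k is None else ranked[:top_k]
```

A call such as `retrieve(query, database, top_k=10)` would return the ten database songs whose semantic multinomials are closest to the query's. Working in the log domain matters here because a product over thousands of feature-vector likelihoods underflows quickly in floating point.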