Self Organization of a massive document collection IEEE

- Slides: 20

Self Organization of a massive document collection IEEE Transactions on Neural Networks VOL. 11 No. 3 3, May 2000 Teuvo Kohonen Helsinki University of Technology, Espoo, Finland Presented by Chu Huei-Ming 2004/12/01

Reference • A Scalable Self-organizing Map Algorithm for Textual Classification: A Neural Network Approach to Thesaurus Generation – Dmitri G. Roussinov – Hsinchun Chen – Department of MIS, Karl Eller Graduate School of Management, University of Arizona • http: //dlist. sir. arizona. edu/archive/00000460/01/ A_Scalable-98. htm 2

Outline • • • Introduction The Self-Organizing Map Statistical Models of Documents Rapid Construction of Large Document Maps Conclusion 3

Introduction • From simple search to browsing of selforganized data collections – Document collections could be automatically organized in some meaningful way. – The context of the other related documents residing nearby would then help in understanding the true meaning 4

Introduction • SOM can first be computed using any representative subset of old input data • New input items can be mapped straight into the most similar models without re-computation of the whole mapping 5

The Self-Organizing Map • Unsupervised-learning neural-network method • Similarity graph of input data • Arranged as a regular, usually two-dimensional grid 6

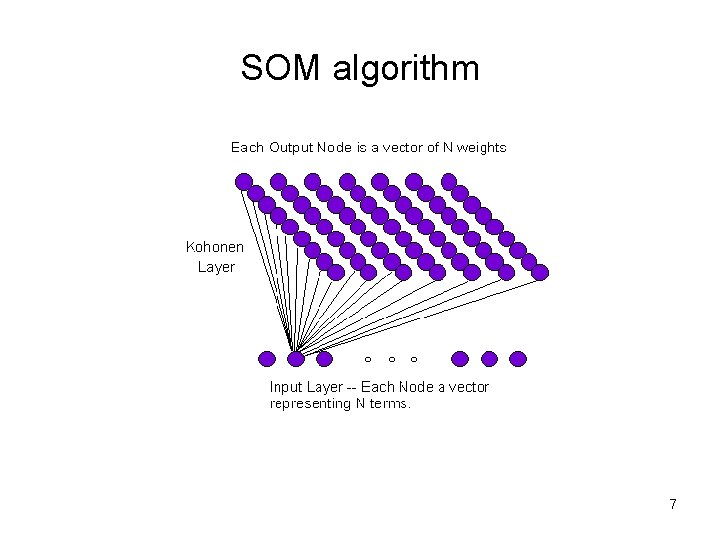

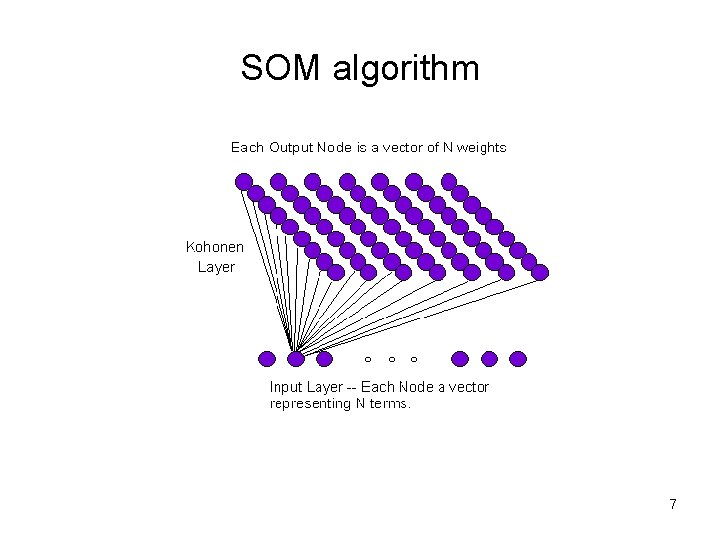

SOM algorithm 7

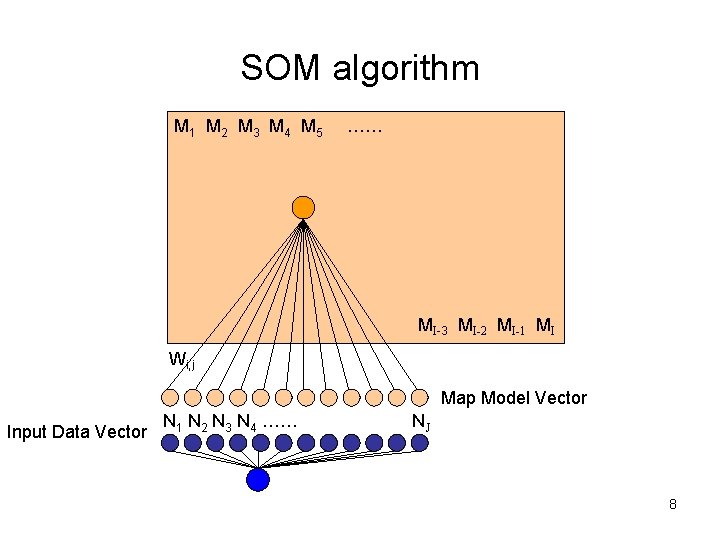

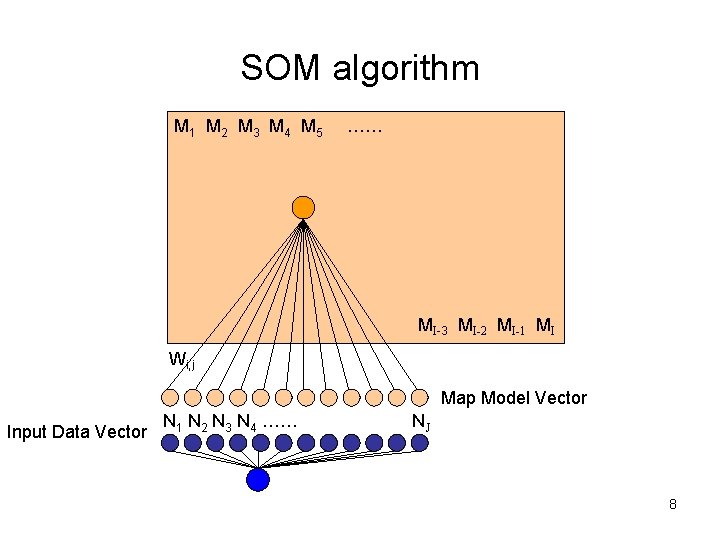

SOM algorithm M 1 M 2 M 3 M 4 M 5 …… MI-3 MI-2 MI-1 MI Wi, j Map Model Vector Input Data Vector N 1 N 2 N 3 N 4 …… NJ 8

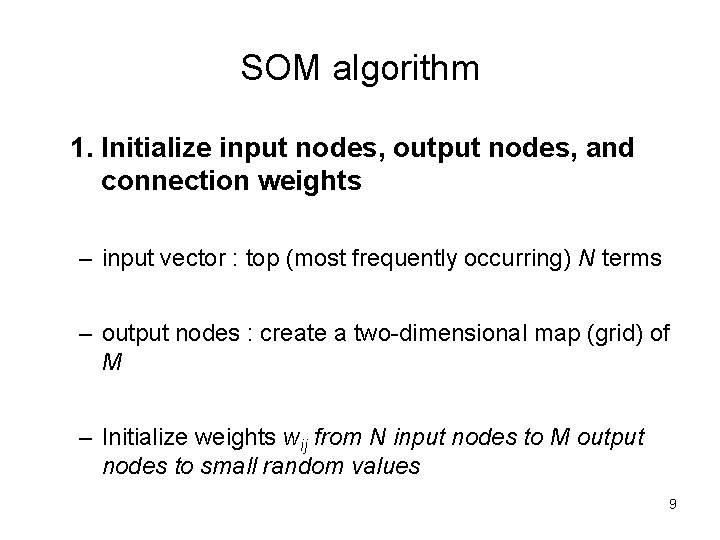

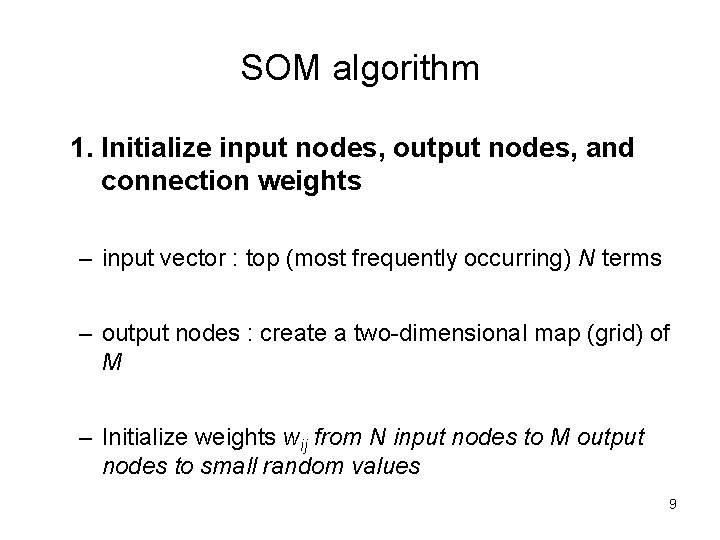

SOM algorithm 1. Initialize input nodes, output nodes, and connection weights – input vector : top (most frequently occurring) N terms – output nodes : create a two-dimensional map (grid) of M – Initialize weights wij from N input nodes to M output nodes to small random values 9

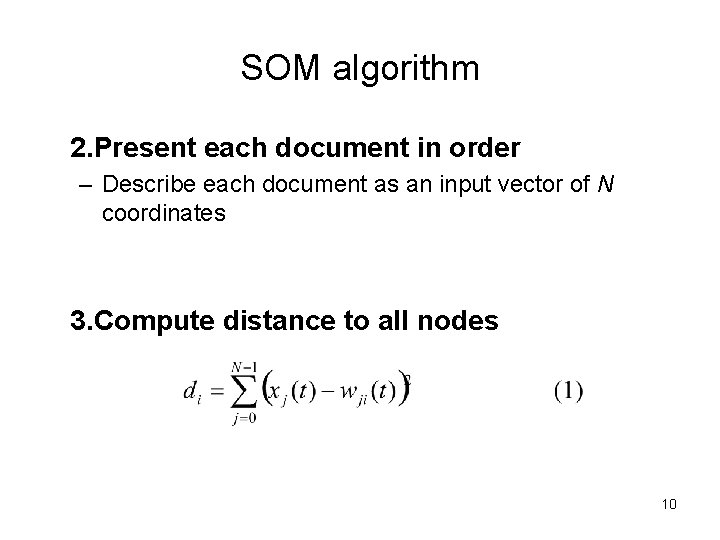

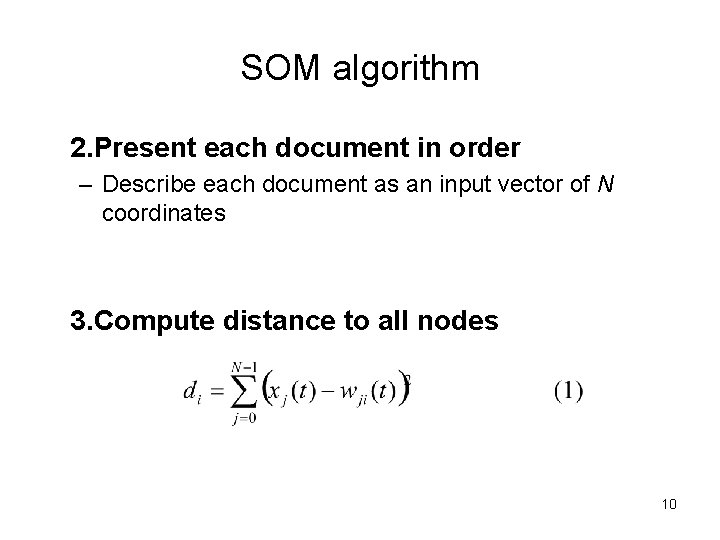

SOM algorithm 2. Present each document in order – Describe each document as an input vector of N coordinates 3. Compute distance to all nodes 10

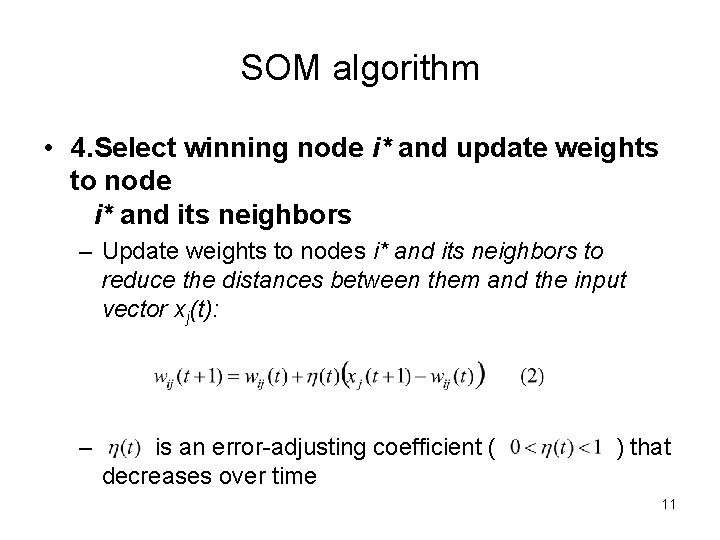

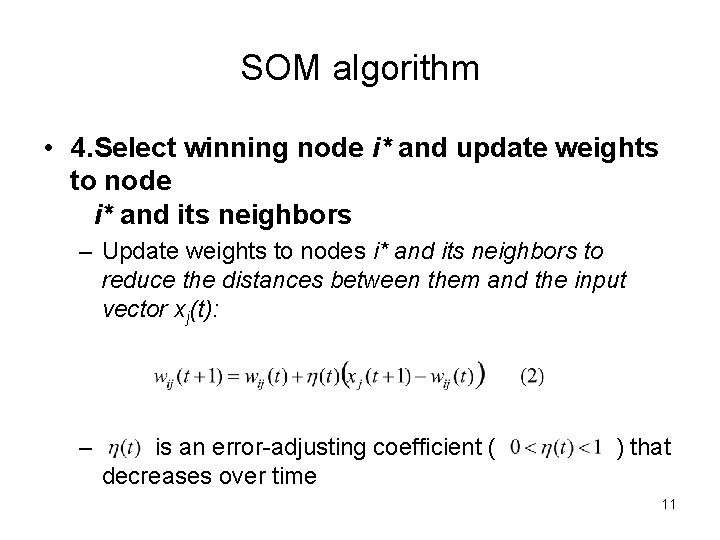

SOM algorithm • 4. Select winning node i* and update weights to node i* and its neighbors – Update weights to nodes i* and its neighbors to reduce the distances between them and the input vector xj(t): – is an error-adjusting coefficient ( decreases over time ) that 11

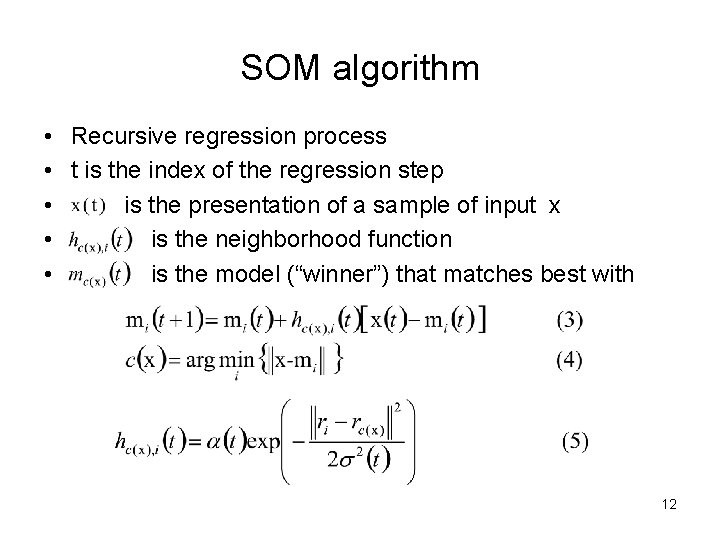

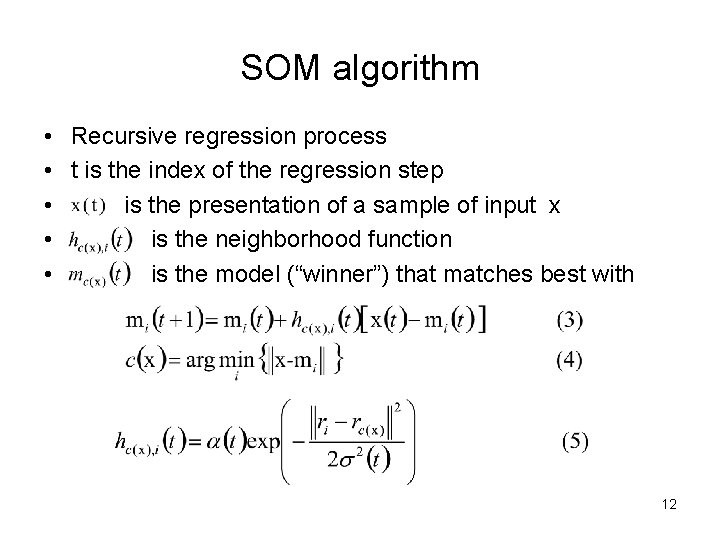

SOM algorithm • Recursive regression process • t is the index of the regression step • is the presentation of a sample of input x • is the neighborhood function • is the model (“winner”) that matches best with 12

SOM algorithm • is the learning-rate factor which decreases monotonically with the regression steps • corresponds to the width of the neighborhood function, which is also decreasing monotonically with the regression steps 13

Statistical Models of Documents • • The primitive vector space model Latent semantic indexing (LSI) Randomly projection histograms Histograms on the word category map 14

Rapid Construction of Large Document Maps • Fast distance computation • Estimation of larger maps based on carefully constructed smaller ones • Rapid fine-tuning of the large maps 15

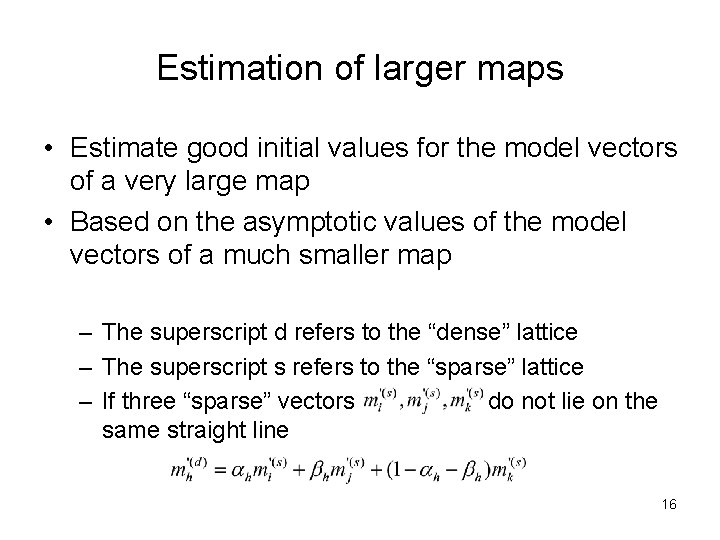

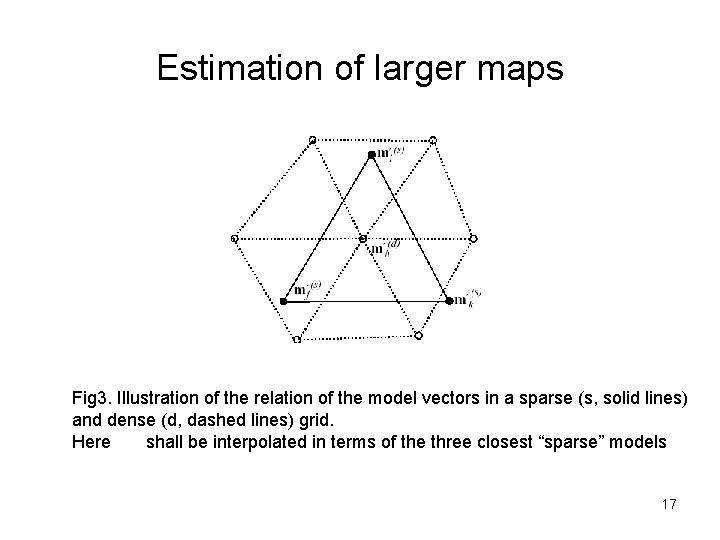

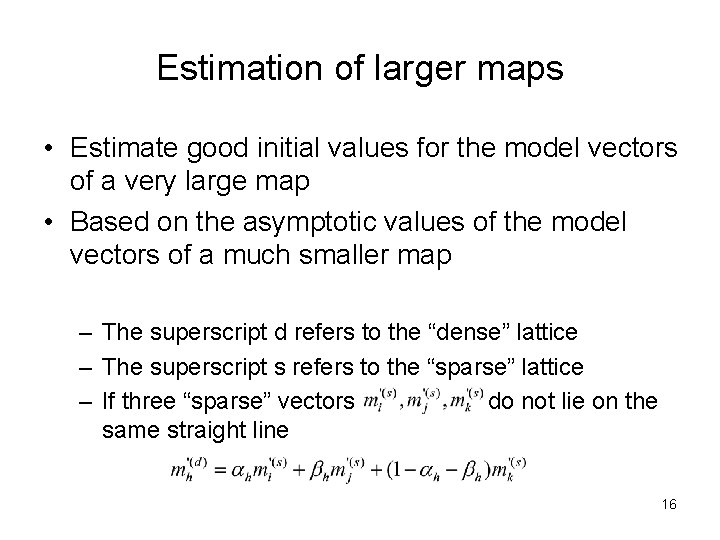

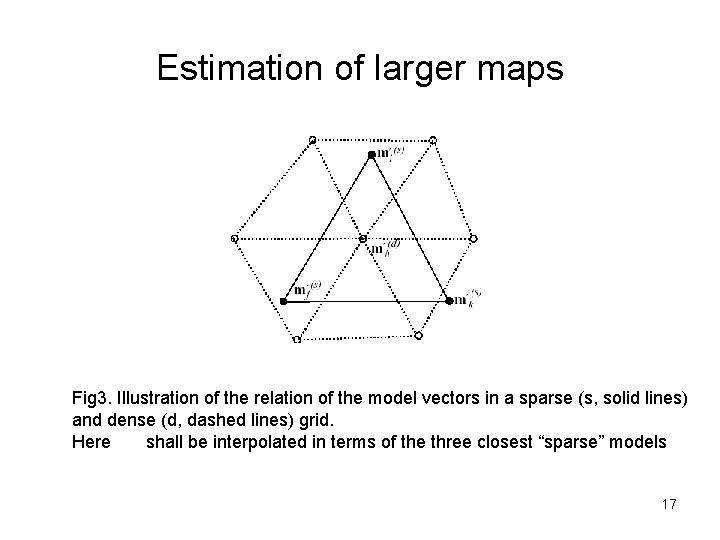

Estimation of larger maps • Estimate good initial values for the model vectors of a very large map • Based on the asymptotic values of the model vectors of a much smaller map – The superscript d refers to the “dense” lattice – The superscript s refers to the “sparse” lattice – If three “sparse” vectors do not lie on the same straight line 16

Estimation of larger maps Fig 3. Illustration of the relation of the model vectors in a sparse (s, solid lines) and dense (d, dashed lines) grid. Here shall be interpolated in terms of the three closest “sparse” models 17

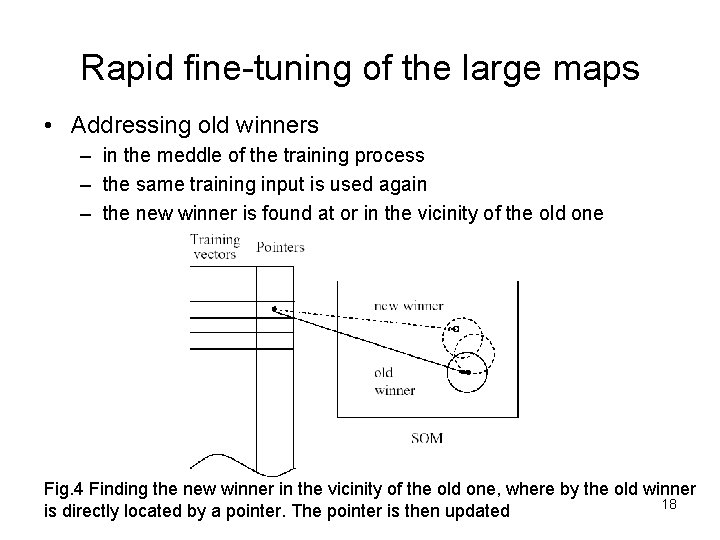

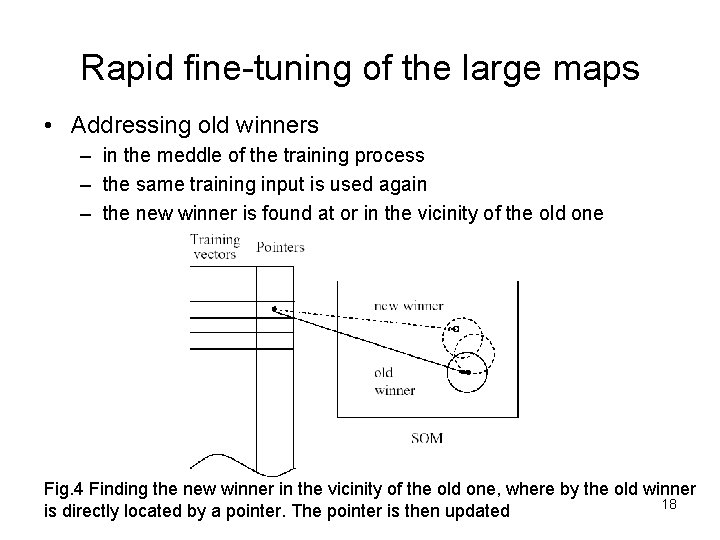

Rapid fine-tuning of the large maps • Addressing old winners – in the meddle of the training process – the same training input is used again – the new winner is found at or in the vicinity of the old one Fig. 4 Finding the new winner in the vicinity of the old one, where by the old winner 18 is directly located by a pointer. The pointer is then updated

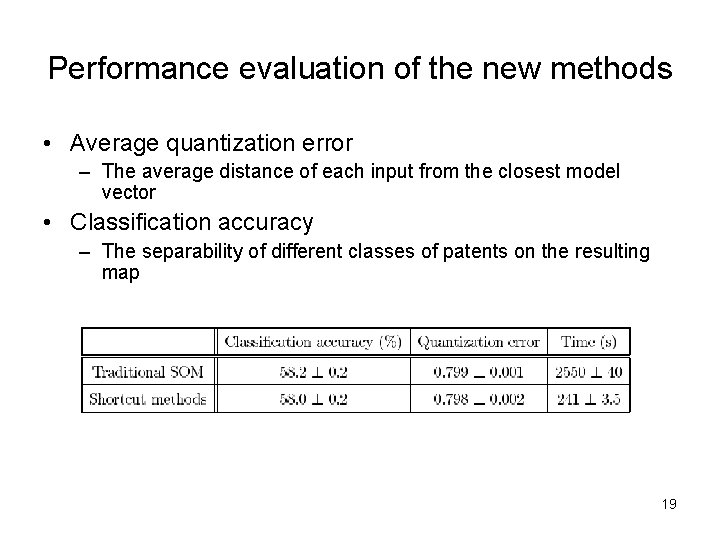

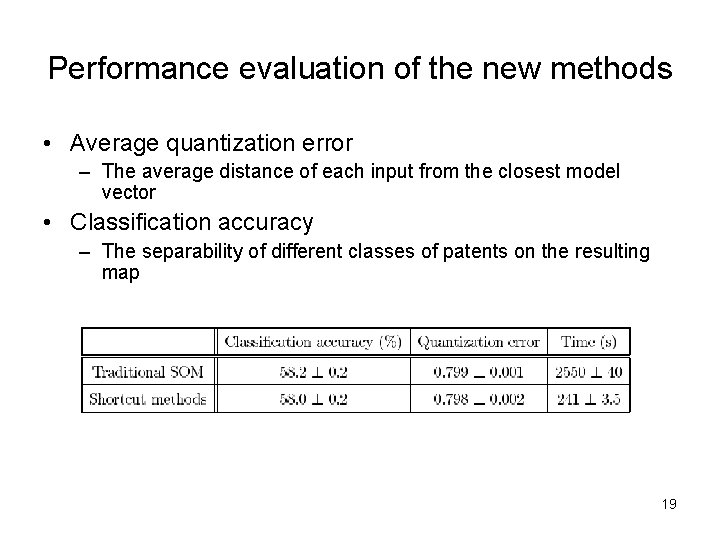

Performance evaluation of the new methods • Average quantization error – The average distance of each input from the closest model vector • Classification accuracy – The separability of different classes of patents on the resulting map 19

Conclusion • The graph is suitable for interactive data mining or exploration tasks. • A new method of forming statistical models of documents • Several new fast computing methods (shortcuts) 20