Selecting a Process: Factors That Influence Process Selection

- Slides: 45


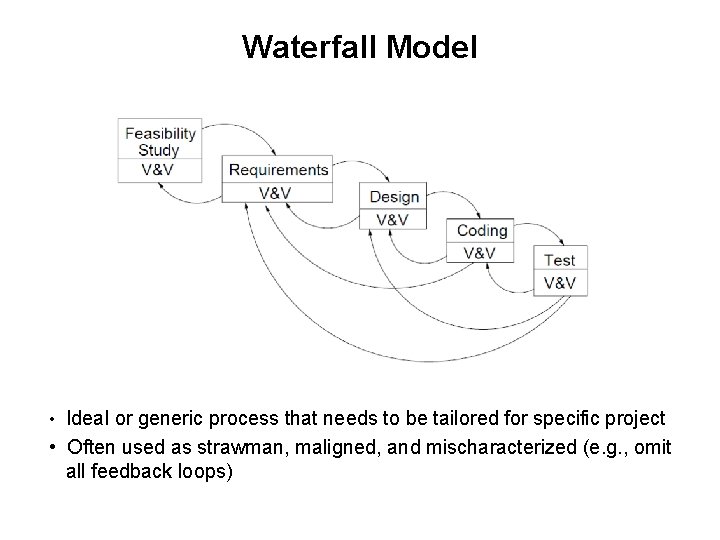

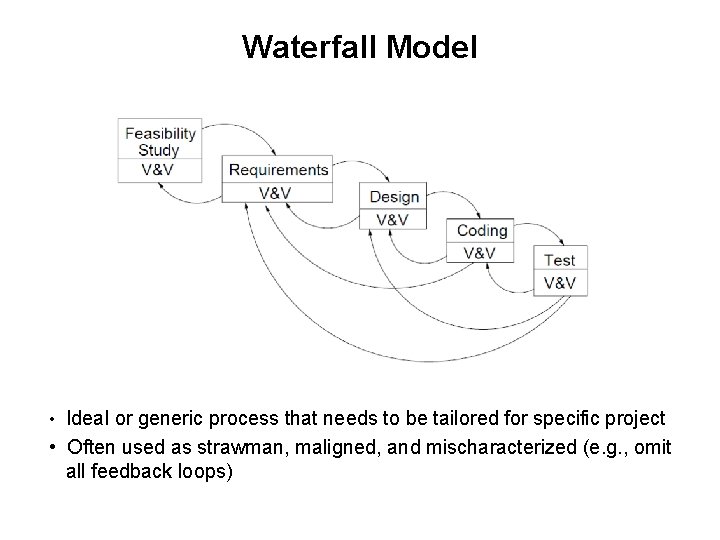

Waterfall Model

- An ideal or generic process that needs to be tailored for a specific project
- Often used as a strawman: maligned and mischaracterized (e.g., drawn with all feedback loops omitted)

Features of the Waterfall Model

- A phased development process with clearly identified milestones
- Deliverables and baselines for each phase; the results of each phase are “frozen” (but can be changed later if necessary)
- Reviews at each phase
- Document-driven process (really deliverable-driven, but the deliverables in early phases are often documents)
- Testing: “Big Bang” testing vs. stubs vs. daily build and smoke test
- “A Rational Design Process and How to Fake It” (Parnas and Clements)
  - Strict sequencing between activities is not usually obeyed
  - But at the end, the design should look as if it had been
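As a minimal sketch of the “stubs” option (every name here is hypothetical), a stub stands in for a lower-level module that has not been written yet, so the higher-level module can be exercised before integration instead of waiting for one “Big Bang” test at the end:

```python
from typing import Protocol


class TaxService(Protocol):
    """Interface the higher-level module depends on (hypothetical)."""

    def rate_for(self, region: str) -> float: ...


class TaxServiceStub:
    """Stub: returns canned answers until the real module is integrated."""

    def __init__(self, canned_rates: dict[str, float]) -> None:
        self.canned_rates = canned_rates
        self.calls: list[str] = []  # record calls so tests can inspect them

    def rate_for(self, region: str) -> float:
        self.calls.append(region)
        return self.canned_rates.get(region, 0.0)


def invoice_total(amount: float, region: str, taxes: TaxService) -> float:
    """Higher-level module under test; it only sees the TaxService interface."""
    return round(amount * (1.0 + taxes.rate_for(region)), 2)


stub = TaxServiceStub({"north": 0.10})
print(invoice_total(100.0, "north", stub))
print(stub.calls)
```

When the real tax module is finished, it replaces the stub behind the same interface, and the tests written against the stub can be rerun as integration tests.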

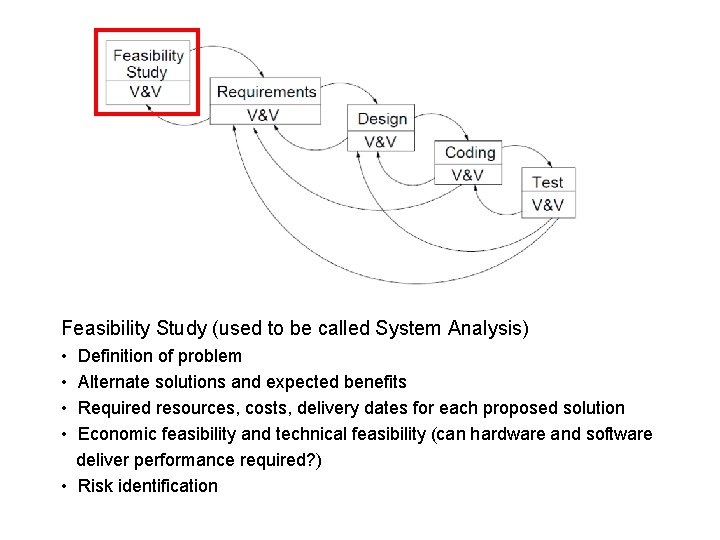

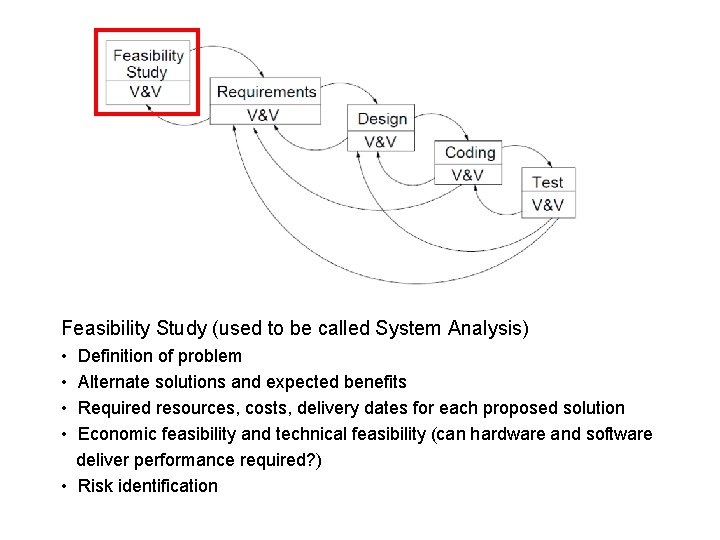

Feasibility Study (formerly called System Analysis)

- Definition of the problem
- Alternative solutions and their expected benefits
- Required resources, costs, and delivery dates for each proposed solution
- Economic feasibility and technical feasibility (can the hardware and software deliver the required performance?)
- Risk identification

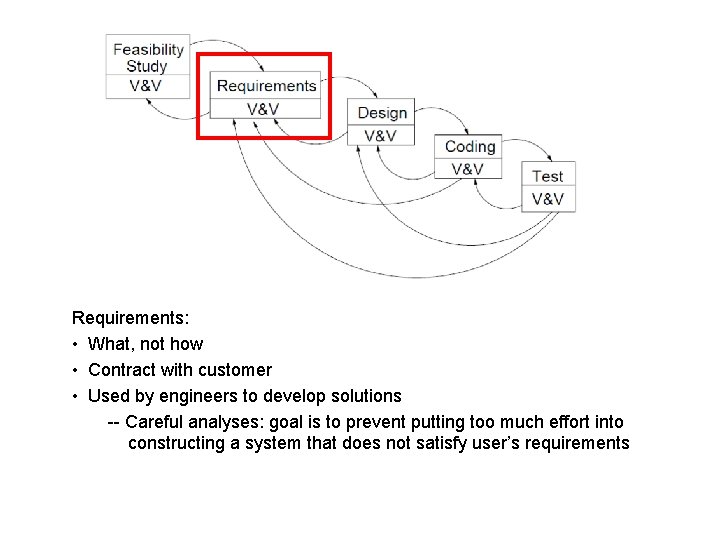

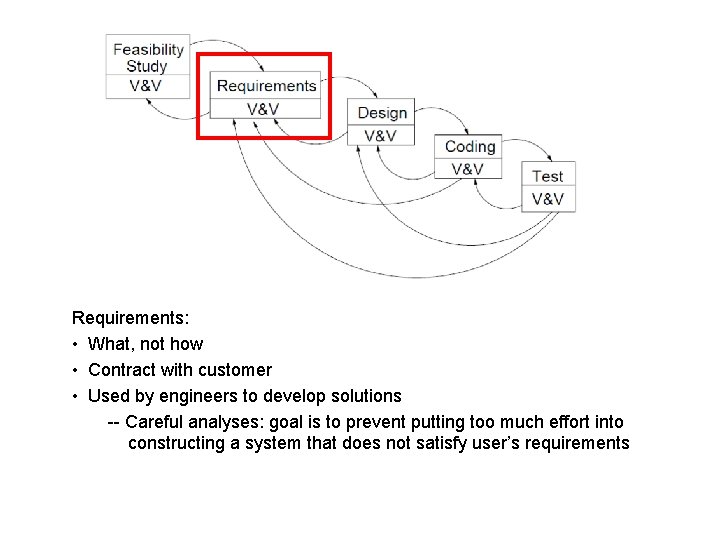

Requirements

- What, not how
- Contract with the customer
- Used by engineers to develop solutions
- Careful analysis: the goal is to avoid putting too much effort into constructing a system that does not satisfy the user’s requirements

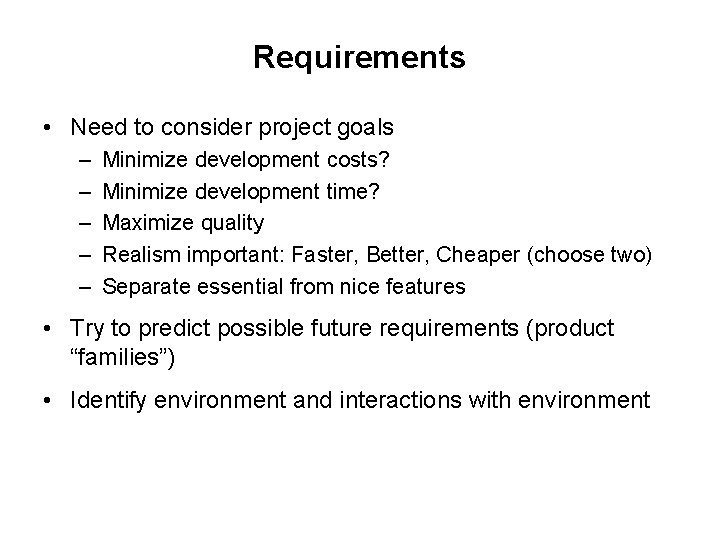

Requirements (2)

- Need to consider project goals:
  - Minimize development costs?
  - Minimize development time?
  - Maximize quality?
  - Realism is important: Faster, Better, Cheaper (choose two)
  - Separate essential features from nice-to-have features
- Try to predict possible future requirements (product “families”)
- Identify the environment and the interactions with that environment

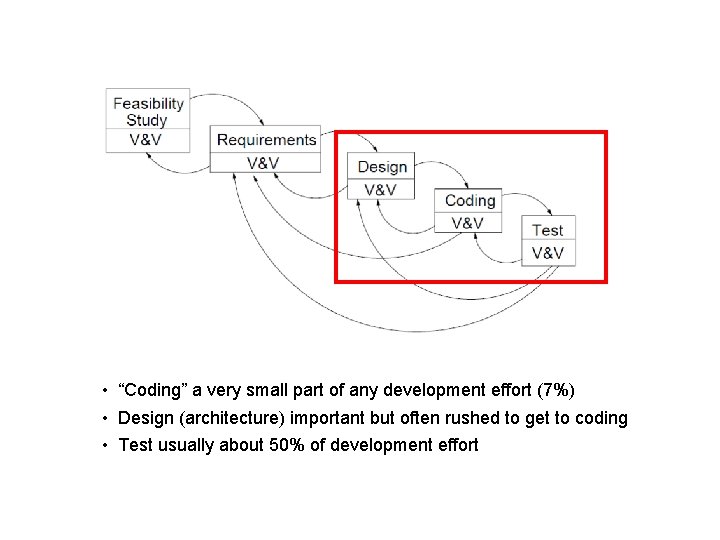

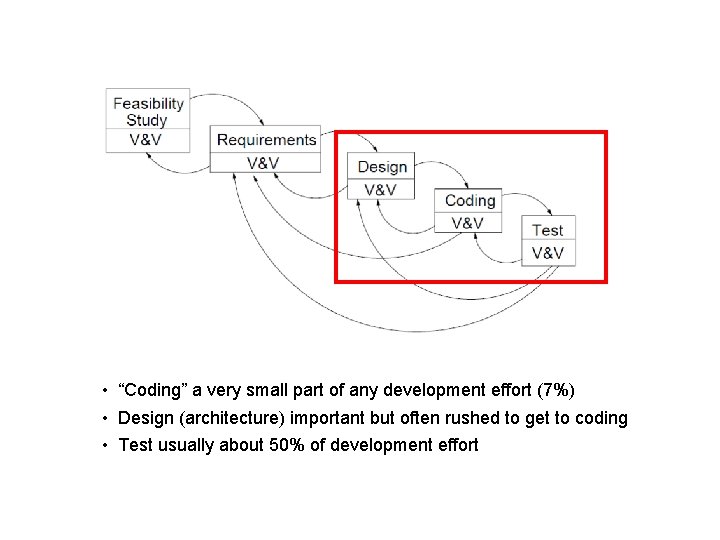

- “Coding” is a very small part of any development effort (about 7%)
- Design (architecture) is important but often rushed in order to get to coding
- Testing is usually about 50% of the development effort
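To make these proportions concrete, the sketch below splits a total effort using the slide’s approximate shares (7% coding, 50% testing). The 1,000 person-month total is a hypothetical figure, and “other” simply lumps together everything else (requirements, design, and so on):

```python
def effort_breakdown(total_person_months: float,
                     coding_share: float = 0.07,
                     testing_share: float = 0.50) -> dict[str, float]:
    """Split a total effort using the approximate shares quoted on the slide."""
    if not 0.0 <= coding_share + testing_share <= 1.0:
        raise ValueError("shares must add up to between 0% and 100%")
    coding = total_person_months * coding_share
    testing = total_person_months * testing_share
    # Remaining effort: requirements, design, and all other activities.
    other = total_person_months - coding - testing
    return {name: round(value, 2)
            for name, value in (("coding", coding), ("testing", testing), ("other", other))}


print(effort_breakdown(1000))
```

With these shares, testing takes roughly seven times as much effort as coding (50 / 7 ≈ 7.1), which is why rushing design to “get to coding” rarely saves time overall.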

- What is the difference between the waterfall model and the V-model?

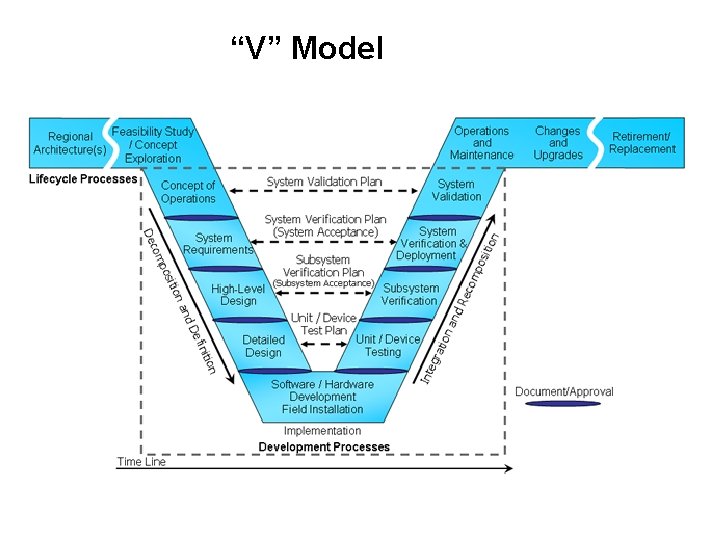

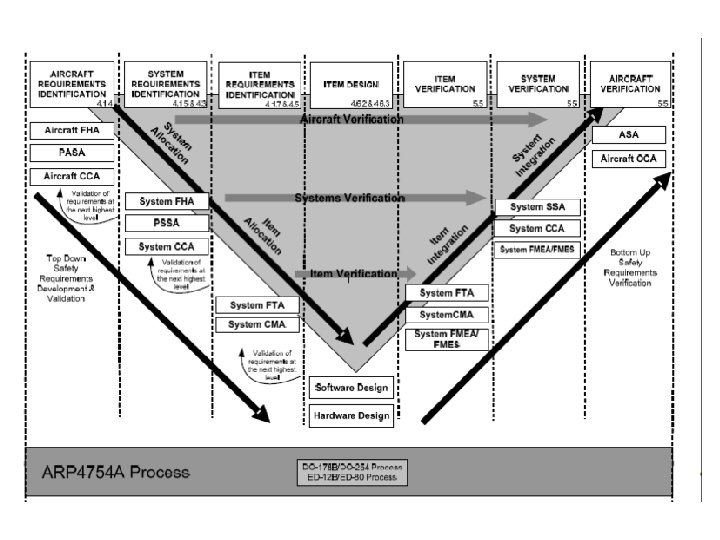

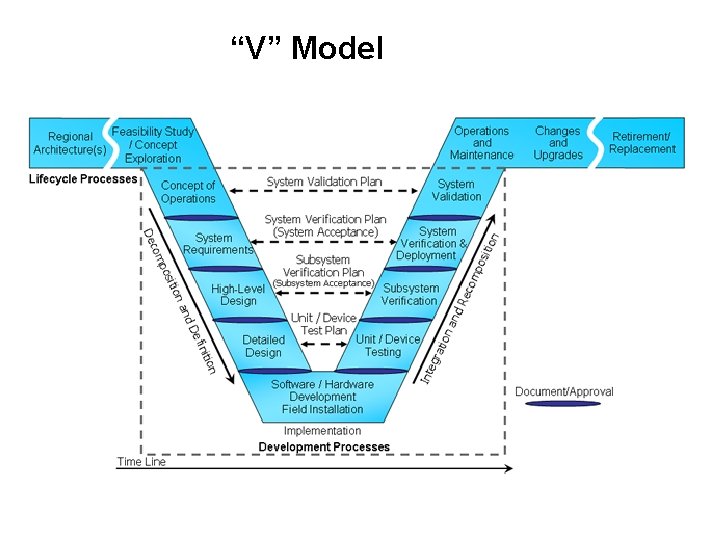

“V” Model

“V” Model / Waterfall Model

- Phases are delineated by review processes
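One common way to read the V is as a pairing between each development phase on the left leg and the verification activity on the right leg that checks it. The phase names below follow a typical textbook depiction and are assumptions, since the slide’s figure is not reproduced here:

```python
# Left leg of the V (specification) paired with the right leg (verification).
# Phase names are a typical textbook depiction, assumed for illustration.
V_MODEL_PAIRS: list[tuple[str, str]] = [
    ("Requirements analysis", "Acceptance testing"),
    ("System design", "System testing"),
    ("Architecture design", "Integration testing"),
    ("Module design", "Unit testing"),
]


def walk_v_model(pairs: list[tuple[str, str]]) -> list[str]:
    """Return phases in execution order: down the left leg, then up the right."""
    down = [spec for spec, _ in pairs]
    up = [test for _, test in reversed(pairs)]
    return down + ["Coding"] + up


def verifier_of(phase: str, pairs: list[tuple[str, str]]) -> str:
    """Which right-leg activity checks the output of a given left-leg phase."""
    return dict(pairs)[phase]


print(walk_v_model(V_MODEL_PAIRS))
print(verifier_of("System design", V_MODEL_PAIRS))
```

Flattened into execution order, the sequence is the same as the waterfall’s; what the V shape adds is the explicit link between each specification and the test activity that checks it.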

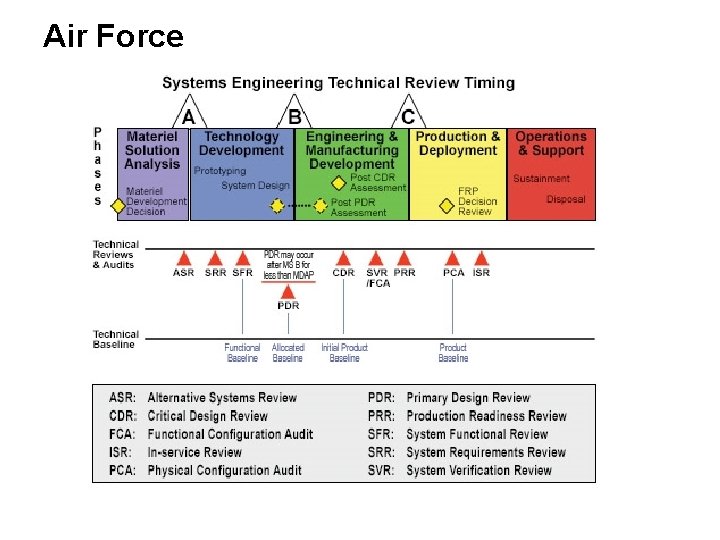

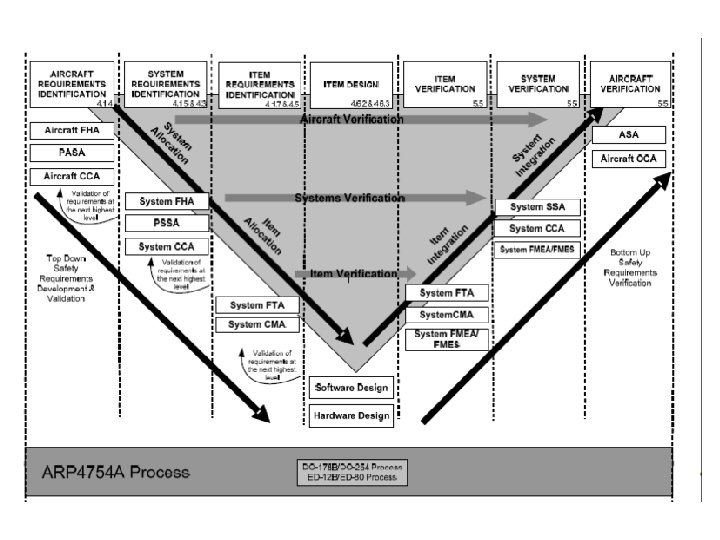

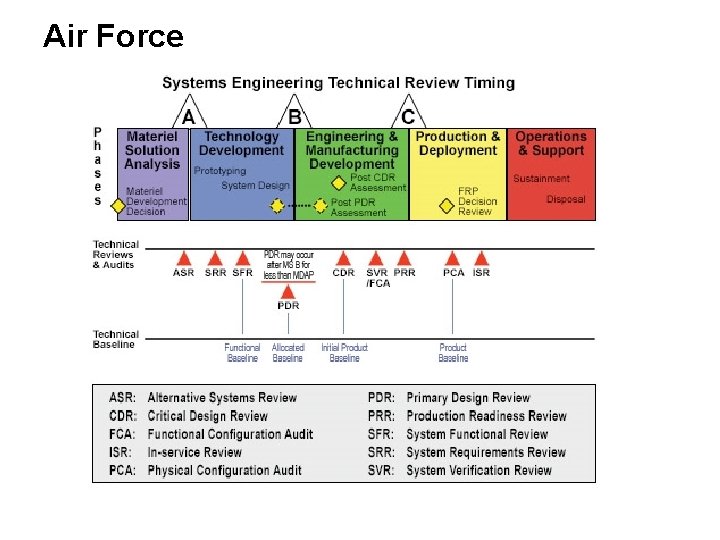

Air Force

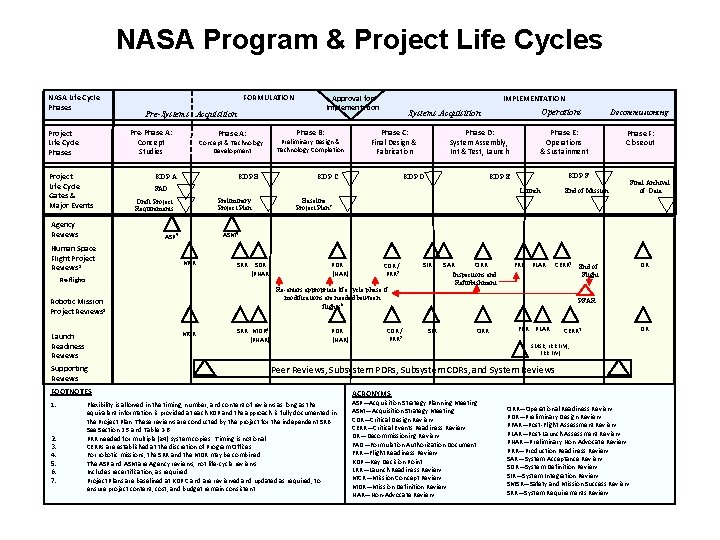

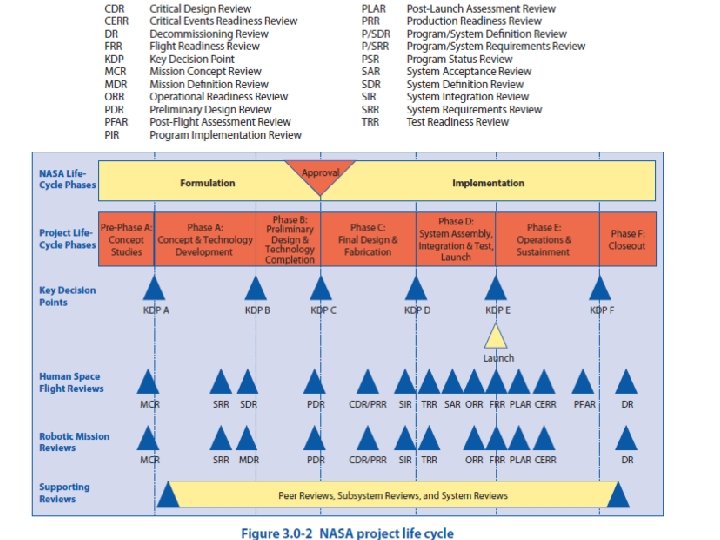

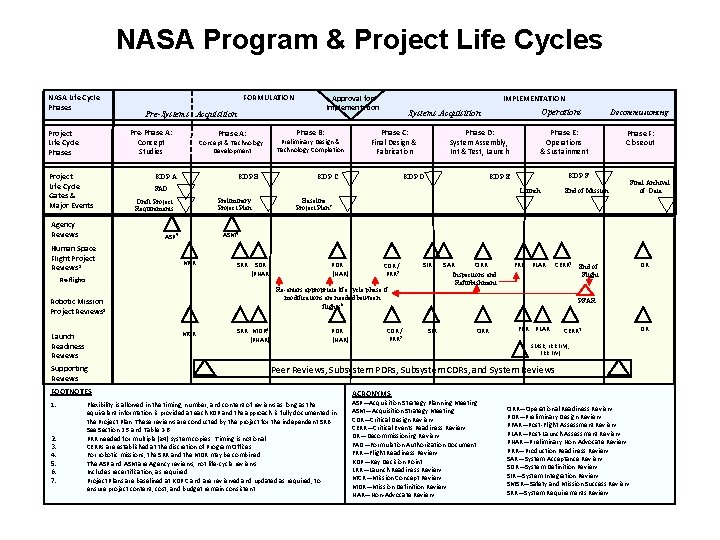

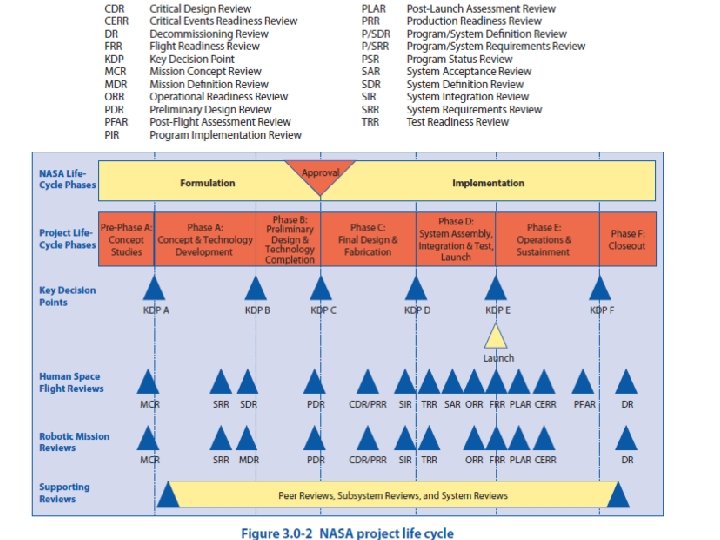

NASA Program & Project Life Cycles (figure: the Formulation phases Pre-Phase A: Concept Studies, Phase A: Concept & Technology Development, and Phase B: Preliminary Design & Technology Completion, followed by the Implementation phases Phase C: Final Design & Fabrication, Phase D: System Assembly, Integration & Test, Launch, Phase E: Operations & Sustainment, and Phase F: Closeout; Key Decision Points KDP A through KDP F separate the phases, which are punctuated by life-cycle reviews such as MCR, SRR, PDR, CDR, SIR, ORR, FRR, and PLAR for robotic and human space flight projects)

Review of Where We Are

- What are the major characteristics of the waterfall/V-model?
- What alternatives have been suggested?

Evolutionary Model

- Prototyping
  - “Do it twice”
  - To assess feasibility
  - To verify requirements
- May only be:
  - A front end or executable specification (“very high level” languages or a front end for the user interface)
  - Or a version of the system with less functionality or fewer quality attributes (speed, robustness, etc.)
- What potential problems do you see with this so far?

Differences Between Hardware and Software Prototyping?

- Why are hardware prototypes built?
- What happens to them?
- Are there differences between hardware and software that may make prototyping in one different from prototyping in the other?
- Have any of you been on a project that used software prototyping?

Evolutionary Model (2)

- Three approaches:
  1. Use prototyping as a tool for requirements analysis.
  2. Use it to accommodate design uncertainty:
     - The prototype evolves into the final product
     - Documentation may be sacrificed
     - The product may be less robust
     - Quality defects may cause problems later (during operations and maintenance); incorporating quality after the system is built is impossible or extremely costly
  3. Use it to experiment with proposed solutions before large investments are made.

Evolutionary Model (3)

- Drawbacks
  - Can be expensive to build
  - Can take on a life of its own and turn out to be the product itself
  - Hard to change basic decisions made early
  - Can be an excuse for poor programming practices

Experimental Evaluation

- Boehm: prototyping vs. waterfall for software
  - Waterfall:
    - Addressed product and process control risks better
    - Resulted in a more robust product that was easier to maintain
    - Fewer problems in debugging and integration, due to a more thought-out design
  - Prototyping:
    - Addressed user interfaces better
- Alavi: prototyping vs. waterfall for an information system
  - Prototyping: users were more positive and more involved
  - Waterfall: more robust and efficient data structures

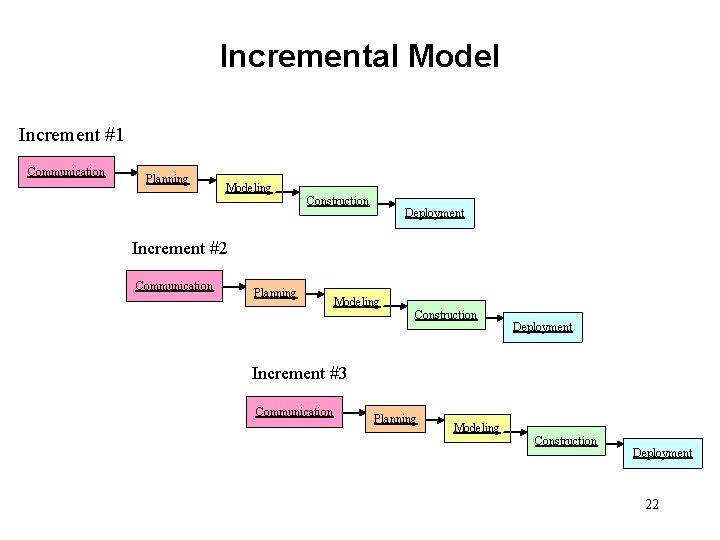

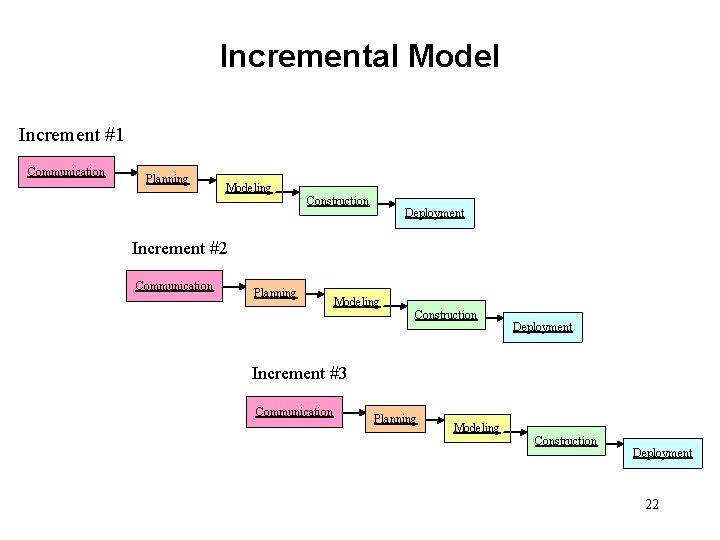

Incremental Model (figure: Increments #1, #2, and #3, each passing through Communication, Planning, Modeling, Construction, and Deployment)

Incremental Model

- Functionality is produced and delivered in small increments
- Focus attention first on essential features, and add functionality only if and when it is needed
- Systems tend to be leaner; this fights the “overfunctionality” syndrome
- May be hard to add features later
  - CLCS (tried to add fault tolerance later)
  - Need to be careful about what is put off; doing this right requires very complex analysis and deep knowledge
- Does this work for large, complex systems? Why or why not?

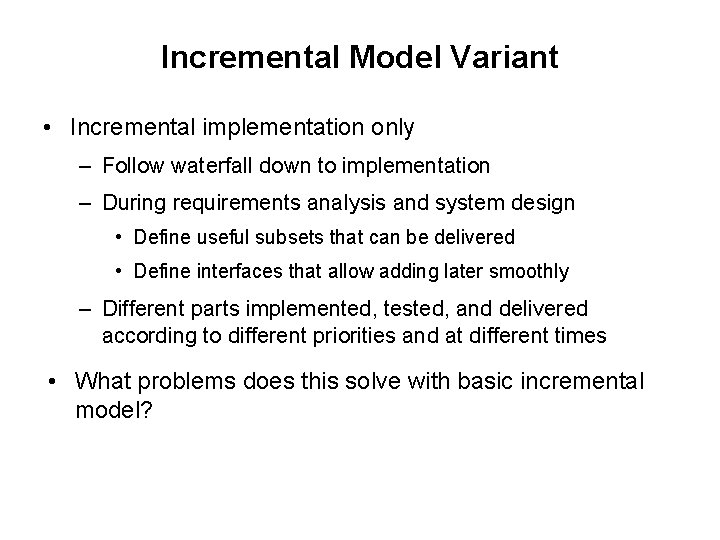

Incremental Model Variant

- Incremental implementation only
  - Follow the waterfall down to implementation
  - During requirements analysis and system design:
    - Define useful subsets that can be delivered
    - Define interfaces that allow later additions to be made smoothly
  - Different parts are implemented, tested, and delivered according to different priorities and at different times
- What problems with the basic incremental model does this solve?
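The key move in this variant, defining during system design the interfaces that later increments will plug into, can be sketched as follows. The report-exporter example and every name in it are hypothetical: increment #1 delivers one implementation behind a stable interface, and a later increment adds another without changing the calling code.

```python
from abc import ABC, abstractmethod


class ReportExporter(ABC):
    """Interface fixed during system design (hypothetical example)."""

    @abstractmethod
    def export(self, rows: list[dict[str, str]]) -> str: ...


class CsvExporter(ReportExporter):
    """Delivered in increment #1: the essential feature."""

    def export(self, rows: list[dict[str, str]]) -> str:
        if not rows:
            return ""
        header = list(rows[0])
        lines = [",".join(header)]
        lines += [",".join(row[col] for col in header) for row in rows]
        return "\n".join(lines)


class MarkdownExporter(ReportExporter):
    """Added in a later increment, behind the same interface."""

    def export(self, rows: list[dict[str, str]]) -> str:
        if not rows:
            return ""
        header = list(rows[0])
        lines = ["| " + " | ".join(header) + " |", "|" + "---|" * len(header)]
        lines += ["| " + " | ".join(row[col] for col in header) + " |" for row in rows]
        return "\n".join(lines)


# Registry that later increments extend; the caller below never changes.
EXPORTERS: dict[str, ReportExporter] = {"csv": CsvExporter()}
EXPORTERS["markdown"] = MarkdownExporter()  # registered by increment #2


def export_report(fmt: str, rows: list[dict[str, str]]) -> str:
    return EXPORTERS[fmt].export(rows)


rows = [{"item": "checkout", "status": "done"}]
print(export_report("csv", rows))
```

The point of the design is that the interface is decided up front, during system design, so each increment only adds an implementation and a registry entry rather than reworking existing code.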

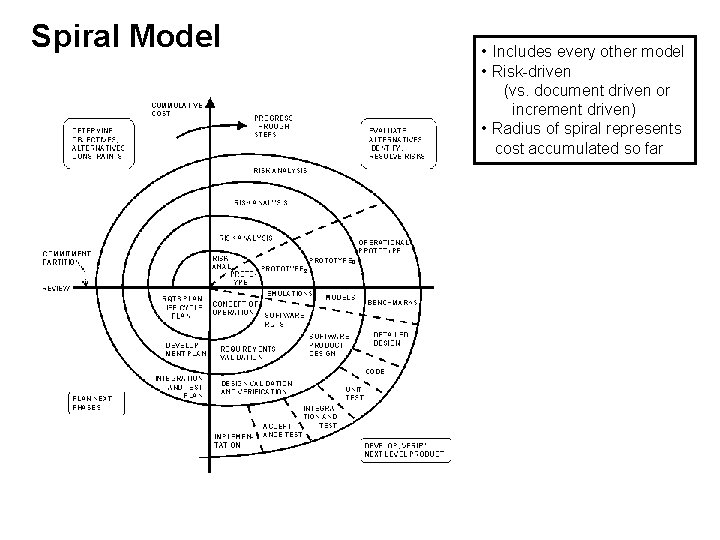

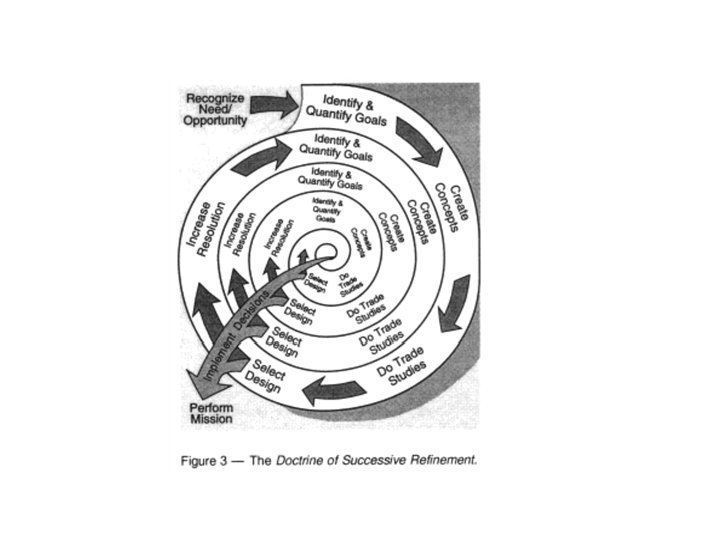

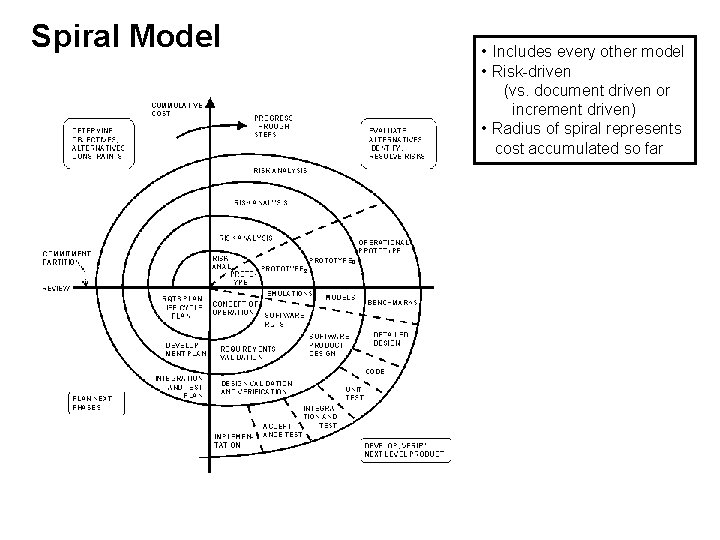

Spiral Model

- Includes every other model
- Risk-driven (vs. document-driven or increment-driven)
- The radius of the spiral represents the cost accumulated so far
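To illustrate what “risk-driven” can mean in practice, here is a minimal sketch in which each cycle of the spiral tackles the highest remaining risk, while the accumulated cost (the spiral’s radius) grows with every cycle. Ranking risks by exposure (probability × loss) is one common approach and is an assumption here, as are the risk items and numbers:

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    probability: float  # chance the risk materializes, 0..1
    loss: float  # cost if it does (arbitrary units)
    mitigation_cost: float  # cost of the spiral cycle that resolves it

    @property
    def exposure(self) -> float:
        return self.probability * self.loss


def plan_spiral(risks: list[Risk]) -> list[tuple[str, float]]:
    """Order cycles by risk exposure and track cumulative cost (the radius)."""
    cumulative = 0.0
    cycles = []
    for risk in sorted(risks, key=lambda r: r.exposure, reverse=True):
        cumulative += risk.mitigation_cost
        cycles.append((risk.name, cumulative))
    return cycles


risks = [
    Risk("Unclear user interface needs", 0.6, 50.0, 5.0),
    Risk("Database performance", 0.3, 80.0, 8.0),
    Risk("Third-party API changes", 0.1, 40.0, 2.0),
]
for name, radius in plan_spiral(risks):
    print(f"{name}: cumulative cost {radius}")
```

Contrast this with a document-driven process, where the order of work is fixed by the phase sequence rather than by which uncertainty is currently most dangerous.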

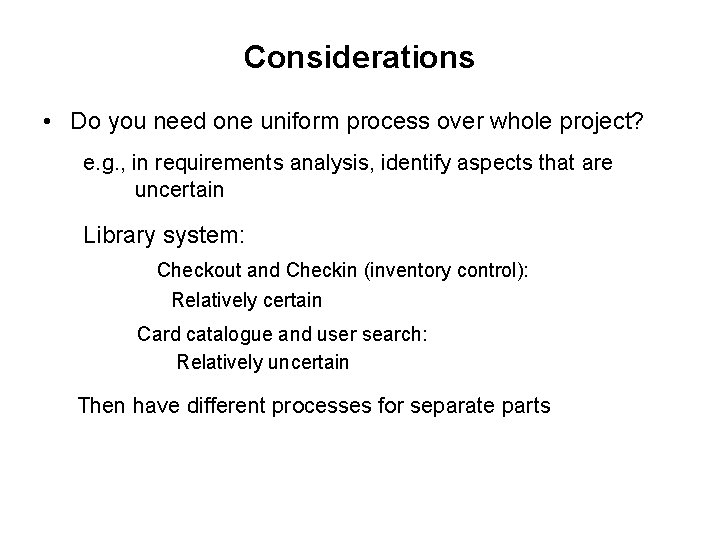

Considerations

- Do you need one uniform process over the whole project?
- E.g., in requirements analysis, identify the aspects that are uncertain. In a library system:
  - Checkout and check-in (inventory control): relatively certain
  - Card catalogue and user search: relatively uncertain
- Then use different processes for the separate parts
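The library example can be expressed as a per-component decision: components whose requirements are relatively certain follow a plan-driven (waterfall-style) process, while uncertain ones are explored with prototyping first. The two-way rule and the names below are simplifying assumptions for illustration, not a prescription:

```python
from enum import Enum


class Certainty(Enum):
    RELATIVELY_CERTAIN = "relatively certain"
    RELATIVELY_UNCERTAIN = "relatively uncertain"


def choose_process(certainty: Certainty) -> str:
    """Hypothetical rule: prototype where requirements are uncertain."""
    if certainty is Certainty.RELATIVELY_UNCERTAIN:
        return "evolutionary (prototype to verify requirements)"
    return "waterfall-style (specify, design, build, test)"


library_system = {
    "Checkout and check-in (inventory control)": Certainty.RELATIVELY_CERTAIN,
    "Card catalogue and user search": Certainty.RELATIVELY_UNCERTAIN,
}

for component, certainty in library_system.items():
    print(f"{component}: {choose_process(certainty)}")
```

A real decision would weigh more of the factors listed later in this deck (size, lifetime, cost of failure, and so on); the sketch only shows that the process can be chosen per part rather than once for the whole project.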

Software Factory

- Most software organizations strictly separate initial development from later maintenance
  - No incentive to produce a system that can be easily maintained
  - No incentive to produce reusable components
- Project management vs. product management
- Extend management responsibility to cover a family of products rather than an individual product (product families)

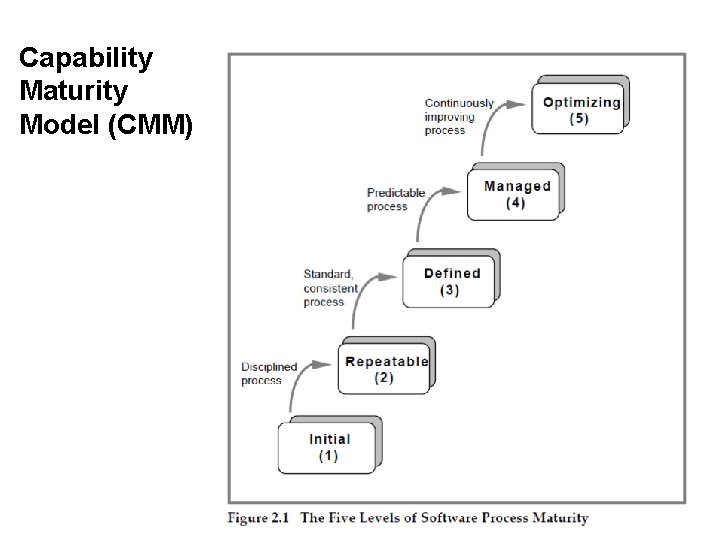

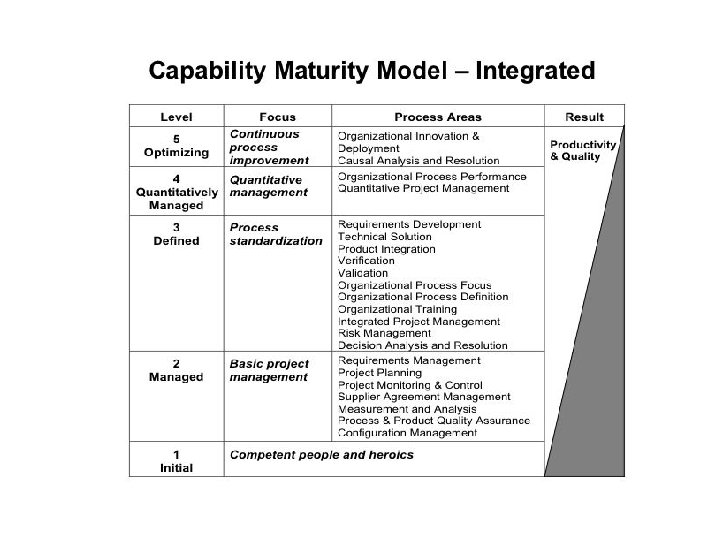

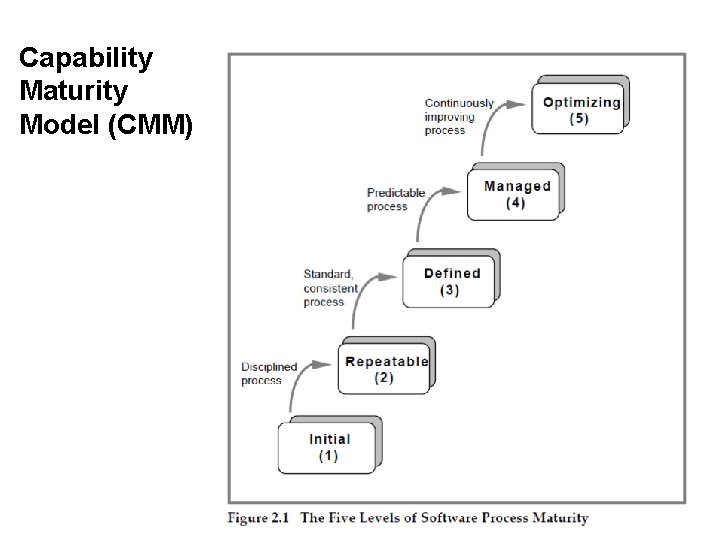

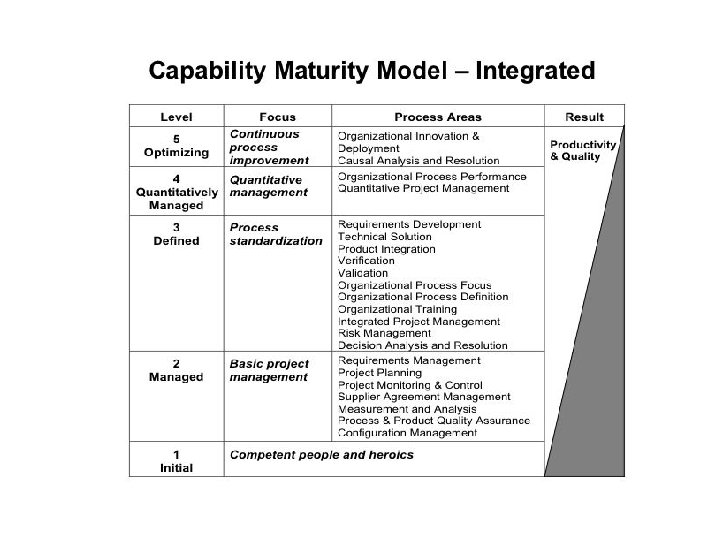

Capability Maturity Model (CMM)

What is CMMI? Consultant Money Making Initiative

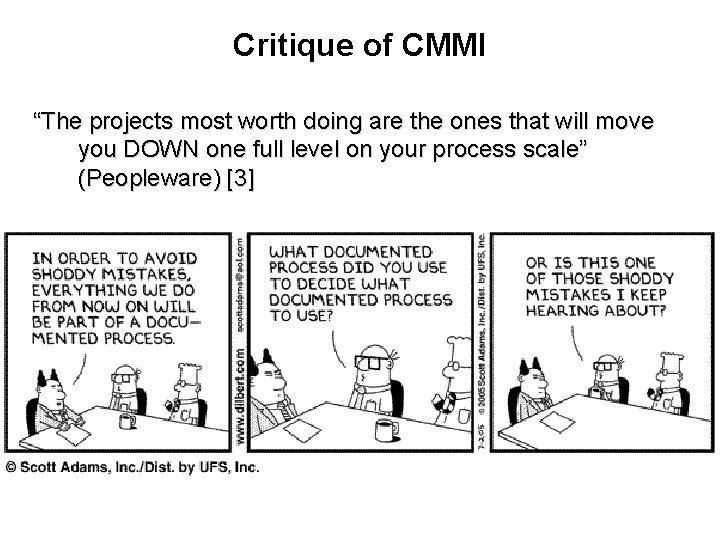

Critique of CMMI

“The projects most worth doing are the ones that will move you DOWN one full level on your process scale” (Peopleware) [3]

CMM (Capability Maturity Model)

- Comes from Taylorism (“scientific management”, statistical quality control)
  - Maximize efficiency by rational planning procedures
  - Assembly lines, time and motion studies (Henry Ford)
  - The behavior of the individual is controlled by normative rules and preplanned by system design
  - Projects achieve control over their products and processes by narrowing the variation in process performance to fall within acceptable quantitative boundaries

CMM (Capability Maturity Model) (2)

- Despite the rhetoric, does not follow TQM
  - TQM emphasizes flexibility and learning
  - A learning orientation seeks to increase variation in order to explore opportunities
- CMM focuses on narrowing variation
  - Formal bureaucratic control undermines the intrinsic motivation needed for creative and flexible responses to uncertainty
- Senge: Humanistic values of caring and individual freedom are essential to building learning organizations
- Carroll: “In too many TQM programs, it is the difficult-to-implement portions of the program that are being finessed or ignored and the rhetoric that is being retained.”

CMM Criticisms

- Treats people as assembly-line workers, i.e., replaceable and unreliable
- Humans are subordinated to defined processes
- Why the emphasis on “repeatable”?
- Why five levels? Why a rigid order?
  - Peer reviews at level 3? Defect prevention at level 5?
- Creates inflexible organizations and the illusion of control?
- Places the focus on the wrong things
- Does this just ensure mediocrity?
  - Great software/hardware projects have done the opposite
  - Skunkworks, Apple (Mac), some Microsoft products

CMM Criticisms (2): Bollinger

- Process improvement is more than simply adding good measurement and controls to a project
- It must address the much deeper and uniquely difficult issue of how to distribute creative, intelligent problem solving across a group of heterogeneous individuals
- Make group IQ improvement a major issue
  - Group “stupification” occurs when process metrics are structured around repetition
  - Use people for problem solving; don’t try to turn people into machines

More

- Experimental validation?
- Industry experience?

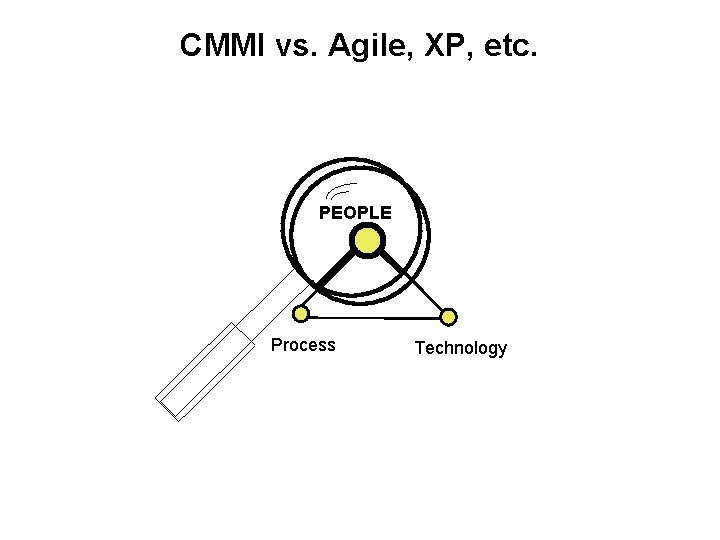

CMMI vs. Agile, XP, etc. (figure labels: People, Process, Technology)

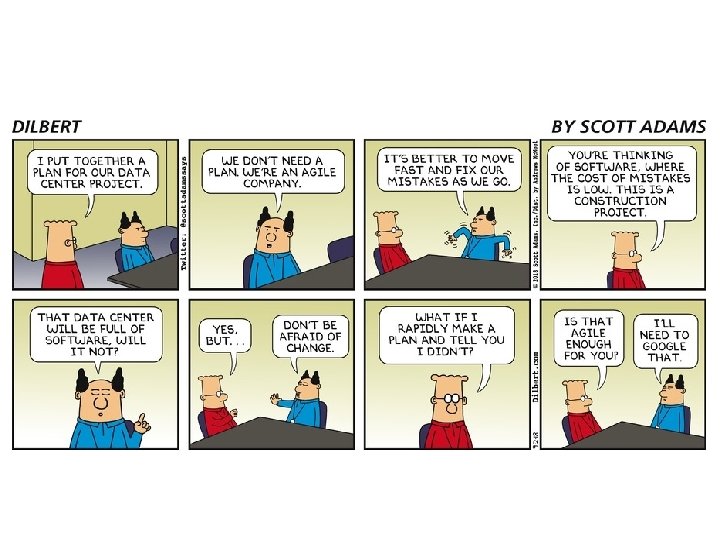

Discussion

- What were some of the things you read about Agile and the other processes? Good and bad?
- What are your personal views on process vs. people, heroes, etc.?
- What is the best development process to use? How do you choose?
- [Story of Kessel Run] What factors made this project so successful?

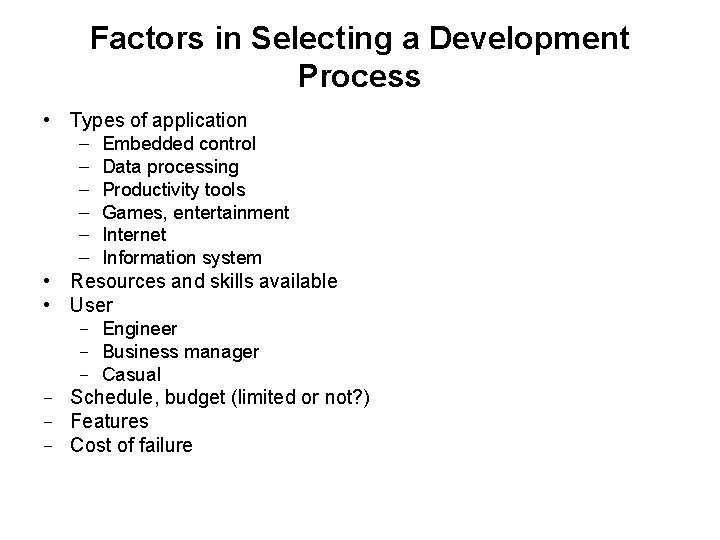

Factors in Selecting a Development Process

- Types of application
  - Embedded control
  - Data processing
  - Productivity tools
  - Games, entertainment
  - Internet
  - Information system
- Resources and skills available
- User
  - Engineer
  - Business manager
  - Casual
- Schedule, budget (limited or not?)
- Features
- Cost of failure

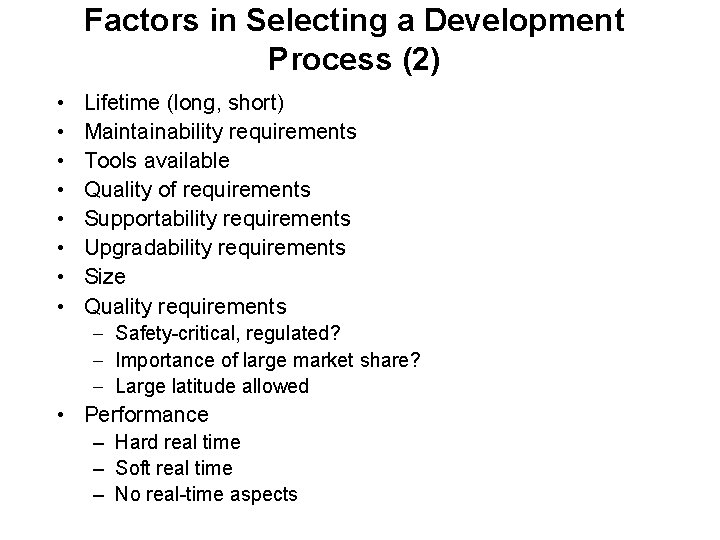

Factors in Selecting a Development Process (2)

- Lifetime (long, short)
- Maintainability requirements
- Tools available
- Quality of requirements
- Supportability requirements
- Upgradability requirements
- Size
- Quality requirements
  - Safety-critical, regulated?
  - Importance of large market share?
  - Large latitude allowed
- Performance
  - Hard real time
  - Soft real time
  - No real-time aspects

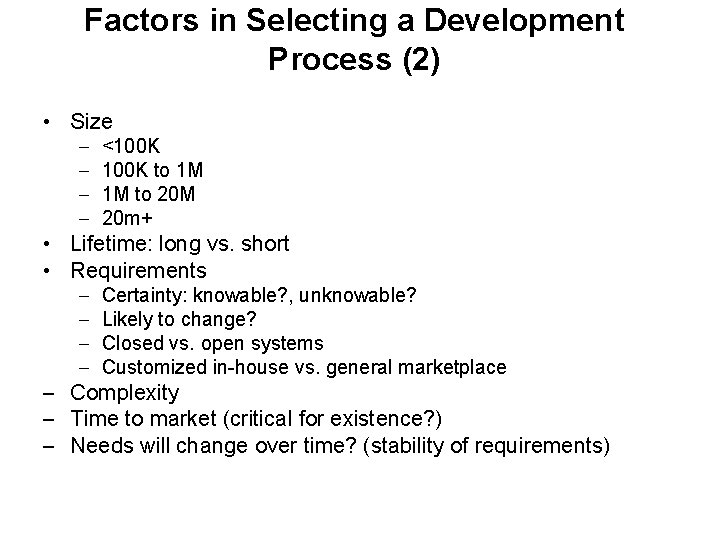

Factors in Selecting a Development Process (3)

- Size
  - <100 K to 1 M
  - 1 M to 20 M
  - 20 M+
- Lifetime: long vs. short
- Requirements
  - Certainty: knowable? Unknowable? Likely to change?
  - Closed vs. open systems
  - Customized in-house vs. general marketplace
  - Complexity
  - Time to market (critical for existence?)
  - Will needs change over time? (stability of requirements)

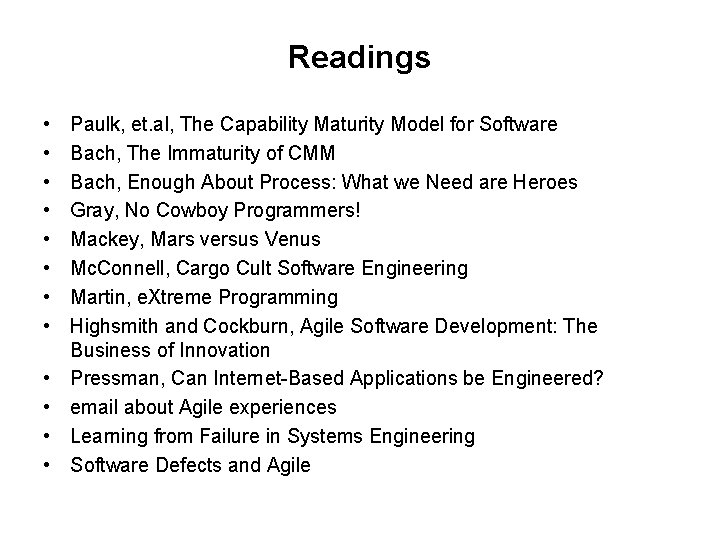

Readings

- Paulk et al., The Capability Maturity Model for Software
- Bach, The Immaturity of CMM
- Bach, Enough About Process: What We Need Are Heroes
- Gray, No Cowboy Programmers!
- Mackey, Mars versus Venus
- McConnell, Cargo Cult Software Engineering
- Martin, eXtreme Programming
- Highsmith and Cockburn, Agile Software Development: The Business of Innovation
- Pressman, Can Internet-Based Applications Be Engineered?
- Email about Agile experiences
- Learning from Failure in Systems Engineering
- Software Defects and Agile

Takeaways

- There is no one “best” process model. Match your project’s characteristics with the model that best fits them.
- You do not have to use the same model for all parts of the project.
- Consider maintenance from the beginning.
- Beware of overselling, fads, etc.
- Don’t let the “latest” process distract you from the basics: specification, requirements, etc.
- Don’t stifle innovation with a rigid process and useless metrics based on the wrong goals (e.g., repeatability).
- The V-model equals the waterfall model.