SEG 4630 2009 2010 Tutorial 3 Clustering Yang

- Slides: 12

SEG 4630 2009-2010 Tutorial 3 – Clustering, Yang ZHOU

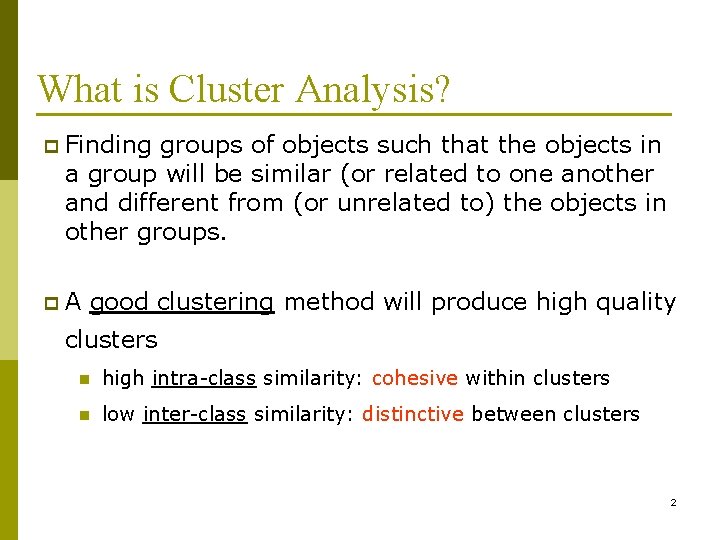

What is Cluster Analysis?
- Finding groups of objects such that the objects in a group will be similar (or related) to one another and different from (or unrelated to) the objects in other groups.
- A good clustering method will produce high-quality clusters
  - high intra-class similarity: cohesive within clusters
  - low inter-class similarity: distinctive between clusters

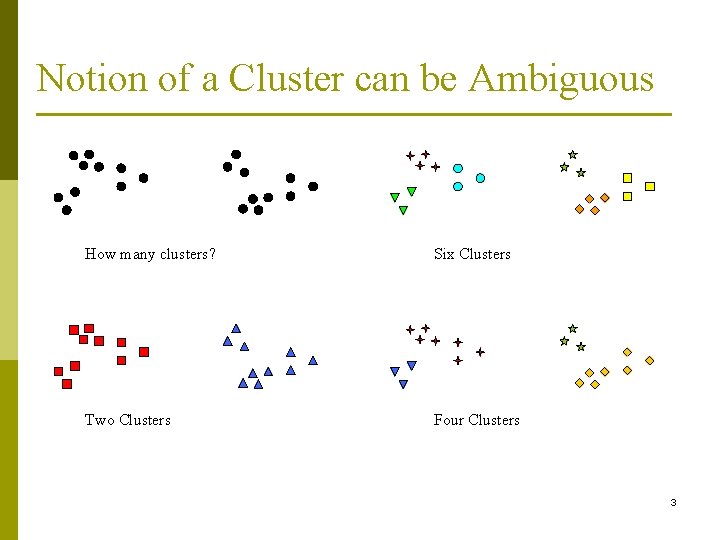

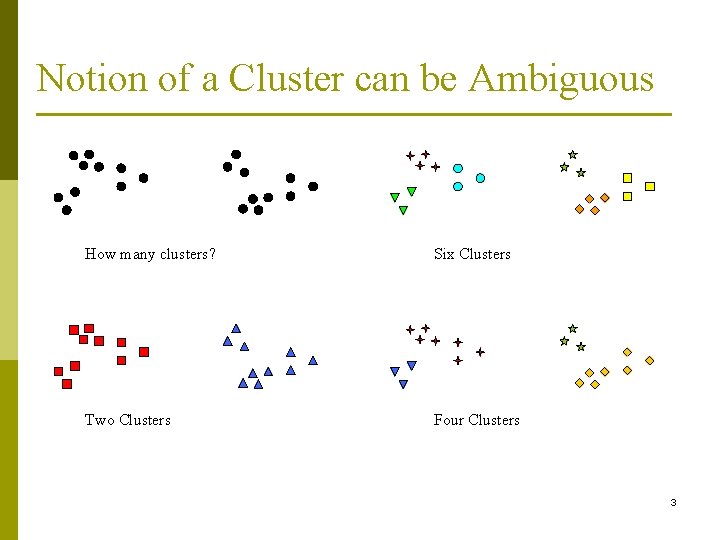

Notion of a Cluster can be Ambiguous
- How many clusters?
- Figure panels: Six Clusters, Two Clusters, Four Clusters

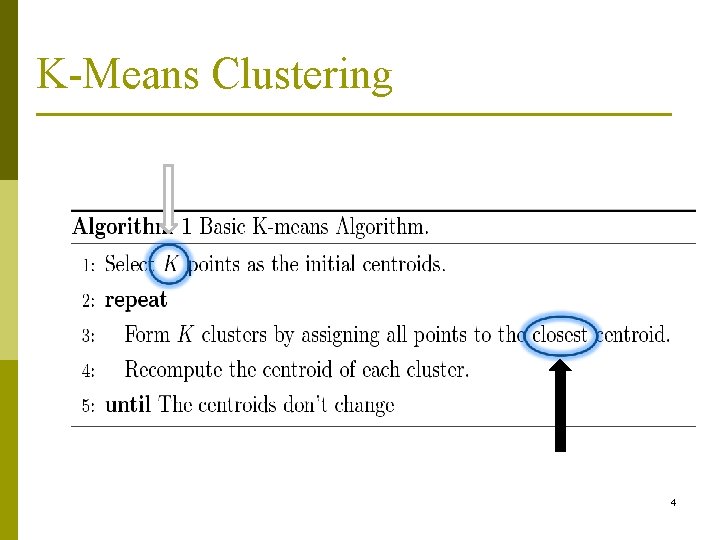

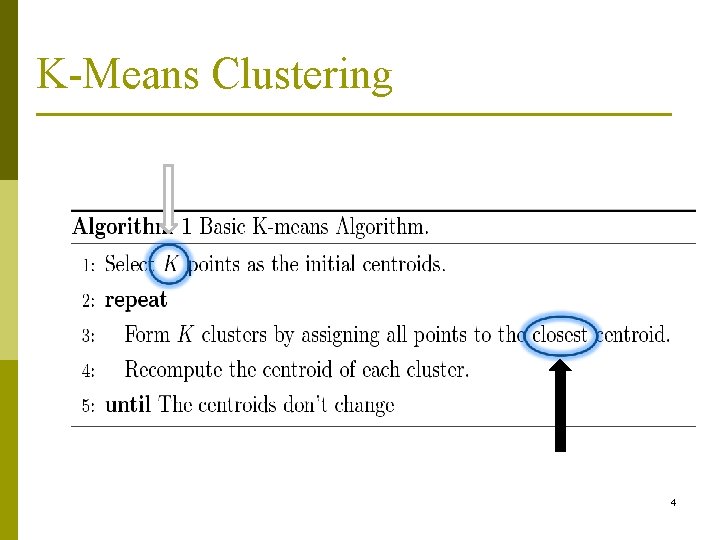

K-Means Clustering

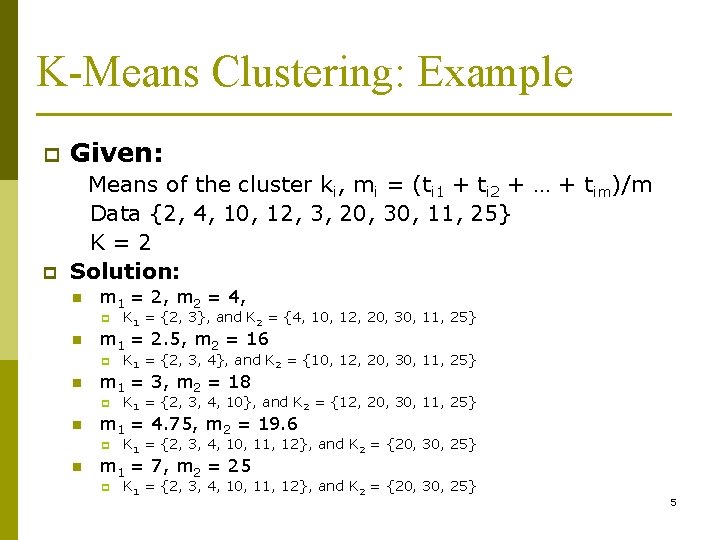

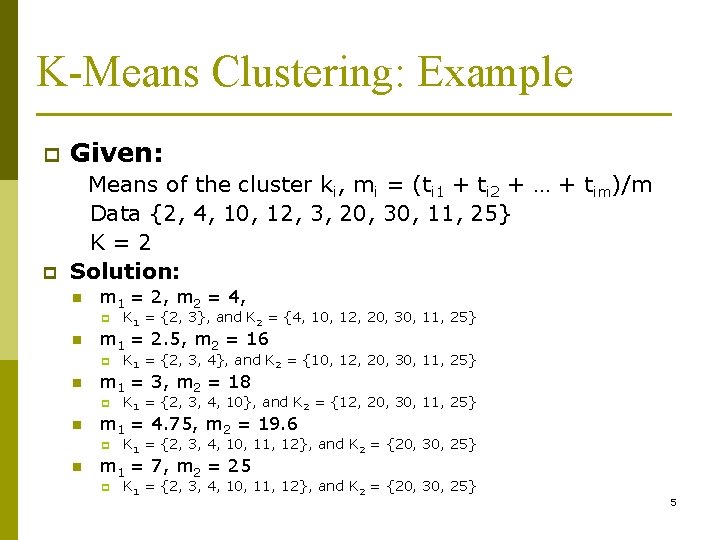

K-Means Clustering: Example
- Given:
  - Data {2, 4, 10, 12, 3, 20, 30, 11, 25}, K = 2
  - Mean of the cluster $K_i = \{t_{i1}, t_{i2}, \dots, t_{im}\}$: $m_i = (t_{i1} + t_{i2} + \dots + t_{im})/m$
- Solution:
  - $m_1 = 2$, $m_2 = 4$: $K_1 = \{2, 3\}$, and $K_2 = \{4, 10, 12, 20, 30, 11, 25\}$ (3 is equidistant from both means and is assigned to $K_1$)
  - $m_1 = 2.5$, $m_2 = 16$: $K_1 = \{2, 3, 4\}$, and $K_2 = \{10, 12, 20, 30, 11, 25\}$
  - $m_1 = 3$, $m_2 = 18$: $K_1 = \{2, 3, 4, 10\}$, and $K_2 = \{12, 20, 30, 11, 25\}$
  - $m_1 = 4.75$, $m_2 = 19.6$: $K_1 = \{2, 3, 4, 10, 11, 12\}$, and $K_2 = \{20, 30, 25\}$
  - $m_1 = 7$, $m_2 = 25$: $K_1 = \{2, 3, 4, 10, 11, 12\}$, and $K_2 = \{20, 30, 25\}$ (the clusters no longer change, so the algorithm stops)
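
The iterations in this example can be replayed with a short script. This is a minimal sketch, not the tutorial's own code: the function name `kmeans_1d` and the `max_iter` cap are illustrative assumptions, and a point that is equidistant from two means is assumed to go to the lower-numbered cluster, which is how the example treats the value 3.

```python
def kmeans_1d(data, means, max_iter=100):
    """K-means on scalar data (hypothetical helper for illustration)."""
    means = list(means)
    clusters = []
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster with the nearest mean.
        # min() returns the first minimum, so ties go to the lower index.
        clusters = [[] for _ in means]
        for x in data:
            nearest = min(range(len(means)), key=lambda i: abs(x - means[i]))
            clusters[nearest].append(x)
        # Update step: recompute each mean (an empty cluster keeps its old mean).
        new_means = [sum(c) / len(c) if c else m
                     for c, m in zip(clusters, means)]
        if new_means == means:  # nothing moved, so the clustering is stable
            break
        means = new_means
    return clusters, means


data = [2, 4, 10, 12, 3, 20, 30, 11, 25]
clusters, means = kmeans_1d(data, means=[2, 4])
print(sorted(clusters[0]), sorted(clusters[1]), means)
```

Starting from the means 2 and 4, the loop passes through the same intermediate means as the example before it stops.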

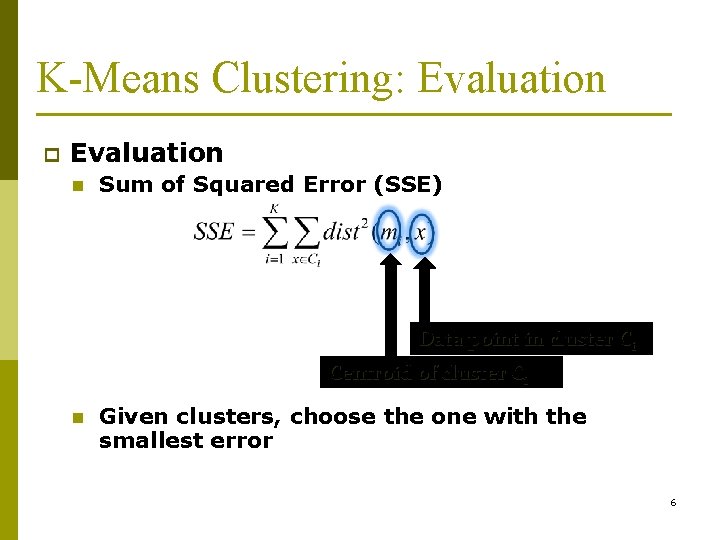

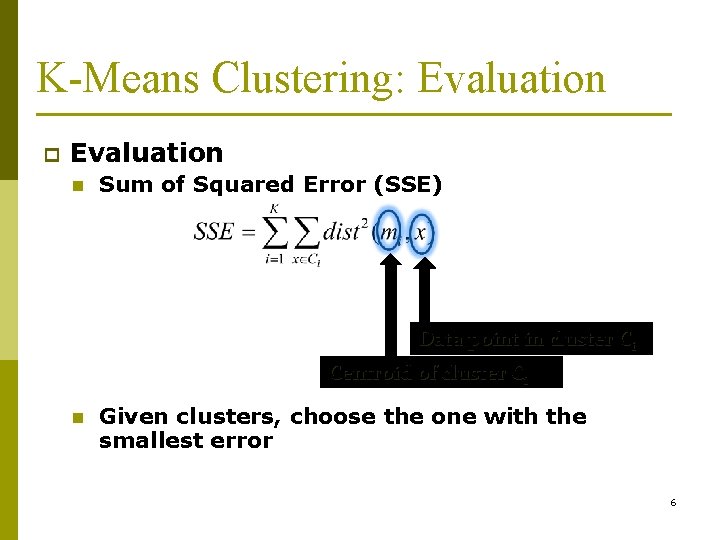

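K-Means Clustering: Evaluation
- Evaluation
  - Sum of Squared Error (SSE):

    $$\mathrm{SSE} = \sum_{i=1}^{K} \sum_{x \in C_i} \operatorname{dist}(m_i, x)^2$$

    where $x$ is a data point in cluster $C_i$ and $m_i$ is the centroid of cluster $C_i$
  - Given several candidate clusterings, choose the one with the smallest error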
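
As a rough illustration of SSE, the sketch below scores two of the clusterings from the K-means example: the final one and the intermediate one with K1 = {2, 3, 4}. The function name `sse` is an assumption made for this sketch, and the data is one-dimensional, so the distance is an absolute difference.

```python
def sse(clusters):
    """Sum of squared distances from each point to its cluster centroid."""
    total = 0.0
    for points in clusters:
        centroid = sum(points) / len(points)
        total += sum((x - centroid) ** 2 for x in points)
    return total


final_clustering = [[2, 3, 4, 10, 11, 12], [20, 30, 25]]
earlier_clustering = [[2, 3, 4], [10, 12, 20, 30, 11, 25]]

sse_final = sse(final_clustering)
sse_earlier = sse(earlier_clustering)
print(sse_final, sse_earlier)
```

The final clustering has the lower SSE of the two, so it is the one to prefer under this criterion.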

Limitations of K-means
- It is hard to determine a good
  - K value
  - set of initial K centroids
- K-means has problems when the data contains outliers.
  - Outliers can be handled better by hierarchical clustering and density-based clustering
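
The sensitivity to the initial centroids can be seen on a small made-up data set. Everything in this sketch is an assumption for illustration: the six data values, the two starting pairs, and the helper names `kmeans_1d` and `sse`. The same algorithm is run twice and settles on two different clusterings with different SSE.

```python
def kmeans_1d(data, means, max_iter=100):
    """K-means on scalar data; ties go to the lower-numbered cluster."""
    means = list(means)
    clusters = []
    for _ in range(max_iter):
        clusters = [[] for _ in means]
        for x in data:
            nearest = min(range(len(means)), key=lambda i: abs(x - means[i]))
            clusters[nearest].append(x)
        new_means = [sum(c) / len(c) if c else m
                     for c, m in zip(clusters, means)]
        if new_means == means:
            break
        means = new_means
    return clusters


def sse(clusters):
    """Sum of squared distances from each point to its cluster mean."""
    return sum((x - sum(c) / len(c)) ** 2 for c in clusters for x in c)


toy = [0, 1, 10, 11, 25, 26]  # hypothetical data, not from the tutorial
run_a = kmeans_1d(toy, means=[0, 1])    # both seeds in the leftmost group
run_b = kmeans_1d(toy, means=[25, 26])  # both seeds in the rightmost group
sse_a, sse_b = sse(run_a), sse(run_b)
print(run_a, sse_a)
print(run_b, sse_b)
```

A common workaround, under the same assumptions, is to run K-means from several starting points and keep the result with the smallest SSE.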

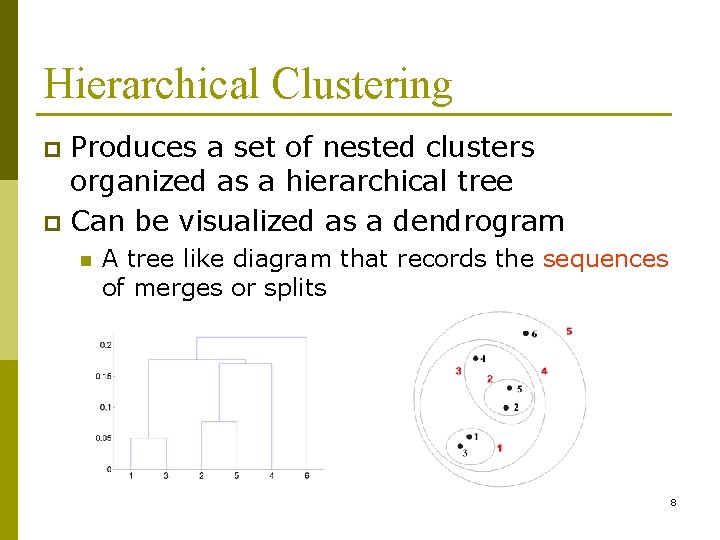

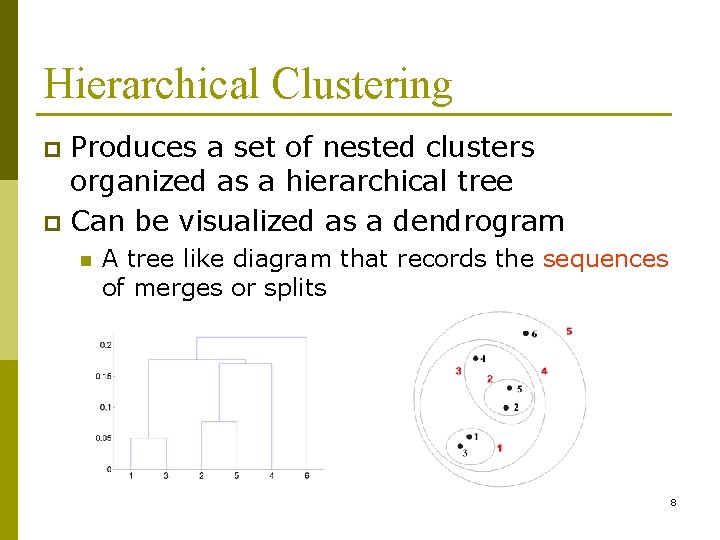

Hierarchical Clustering
- Produces a set of nested clusters organized as a hierarchical tree
- Can be visualized as a dendrogram
  - A tree-like diagram that records the sequences of merges or splits

Strengths of Hierarchical Clustering
- Does not require assuming any particular number of clusters
  - Any desired number of clusters can be obtained by 'cutting' the dendrogram at the proper level

Agglomerative Clustering Algorithm
- More popular hierarchical clustering technique
- Basic algorithm is straightforward
  1. Compute the proximity matrix
  2. Let each data point be a cluster
  3. Repeat
  4. Merge the two closest clusters
  5. Update the proximity matrix
  6. Until only a single cluster remains
- Key operation is the computation of the proximity of two clusters
  - Different approaches to defining the distance between clusters distinguish the different algorithms
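
The six steps can be sketched as follows. This is one possible reading of the algorithm, not the tutorial's implementation: the function name `agglomerative`, the dictionary used as proximity matrix, and the five sample points are assumptions. The `linkage` argument combines the two old distances when clusters merge; passing `min` gives single link and `max` gives complete link, while group average would need the cluster sizes as well and is not covered here.

```python
from math import dist


def agglomerative(points, linkage=min):
    """Naive agglomerative clustering; returns (merged members, distance) pairs."""
    # Steps 1 and 2: every point is its own cluster; store pairwise proximities.
    clusters = {i: (i,) for i in range(len(points))}
    prox = {(i, j): dist(points[i], points[j])
            for i in clusters for j in clusters if i < j}
    merges = []
    next_id = len(points)
    while len(clusters) > 1:                      # steps 3 and 6
        # Step 4: find and merge the two closest clusters.
        (a, b), d = min(prox.items(), key=lambda kv: kv[1])
        merged = clusters.pop(a) + clusters.pop(b)
        merges.append((merged, d))
        # Step 5: drop rows for a and b, add a row for the merged cluster.
        new_prox = {k: v for k, v in prox.items()
                    if a not in k and b not in k}
        for k in clusters:
            d_ak = prox[(min(a, k), max(a, k))]
            d_bk = prox[(min(b, k), max(b, k))]
            new_prox[(k, next_id)] = linkage(d_ak, d_bk)
        clusters[next_id] = merged
        next_id += 1
        prox = new_prox
    return merges


sample = [(0, 0), (1, 0), (5, 0), (7, 0), (20, 0)]  # hypothetical points
single = [d for _, d in agglomerative(sample, linkage=min)]
complete = [d for _, d in agglomerative(sample, linkage=max)]
print(single, complete)
```

The two runs merge the same pairs first and then diverge in the reported merge distances, which is the effect of the cluster-distance definition mentioned above.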

Hierarchical Clustering
- Define Inter-Cluster Similarity
  - Min
  - Max
  - Group Average
  - Distance between Centroids
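
The four definitions can be written down directly for clusters of points. The sketch below assumes Euclidean distance and uses two made-up clusters `A` and `B`; the function names are illustrative only.

```python
from itertools import product
from math import dist


def single_link(a, b):
    """Min: distance between the closest pair of points, one from each cluster."""
    return min(dist(p, q) for p, q in product(a, b))


def complete_link(a, b):
    """Max: distance between the farthest pair of points."""
    return max(dist(p, q) for p, q in product(a, b))


def group_average(a, b):
    """Group average: mean distance over all cross-cluster pairs."""
    return sum(dist(p, q) for p, q in product(a, b)) / (len(a) * len(b))


def centroid_distance(a, b):
    """Distance between the two cluster centroids."""
    def centroid(points):
        return tuple(sum(c) / len(points) for c in zip(*points))
    return dist(centroid(a), centroid(b))


A = [(0, 0), (0, 2)]  # hypothetical clusters
B = [(3, 0), (3, 2)]
print(single_link(A, B), complete_link(A, B),
      group_average(A, B), centroid_distance(A, B))
```

For these two clusters the group average falls between the Min and Max values, as it must, since it averages over the same set of pairs.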

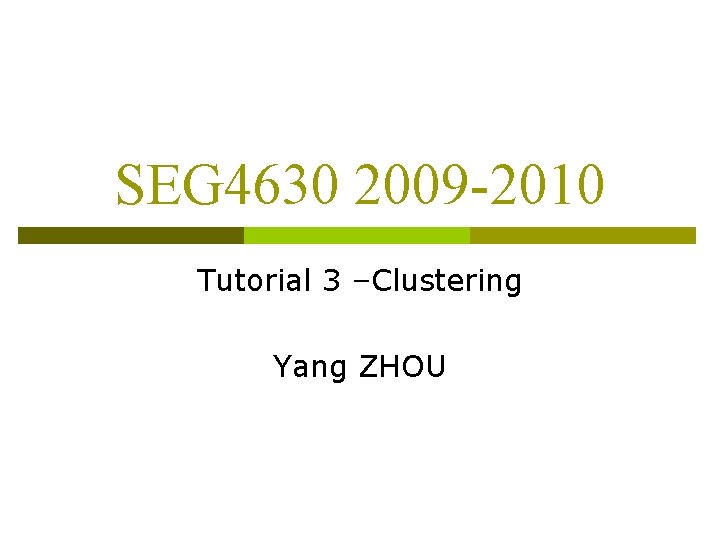

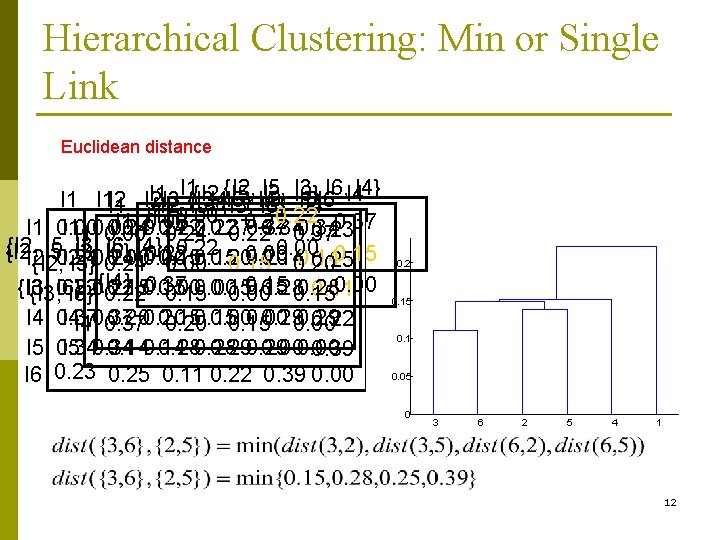

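Hierarchical Clustering: Min or Single Link

Euclidean distance

|    | I1   | I2   | I3   | I4   | I5   | I6   |
|----|------|------|------|------|------|------|
| I1 | 0.00 | 0.24 | 0.22 | 0.37 | 0.34 | 0.23 |
| I2 | 0.24 | 0.00 | 0.15 | 0.20 | 0.14 | 0.25 |
| I3 | 0.22 | 0.15 | 0.00 | 0.15 | 0.28 | 0.11 |
| I4 | 0.37 | 0.20 | 0.15 | 0.00 | 0.29 | 0.22 |
| I5 | 0.34 | 0.14 | 0.28 | 0.29 | 0.00 | 0.39 |
| I6 | 0.23 | 0.25 | 0.11 | 0.22 | 0.39 | 0.00 |

After merging I3 and I6:

|          | I1   | I2   | {I3, I6} | I4   | I5   |
|----------|------|------|----------|------|------|
| I1       | 0.00 | 0.24 | 0.22     | 0.37 | 0.34 |
| I2       | 0.24 | 0.00 | 0.15     | 0.20 | 0.14 |
| {I3, I6} | 0.22 | 0.15 | 0.00     | 0.15 | 0.28 |
| I4       | 0.37 | 0.20 | 0.15     | 0.00 | 0.29 |
| I5       | 0.34 | 0.14 | 0.28     | 0.29 | 0.00 |

After merging I2 and I5:

|          | I1   | {I2, I5} | {I3, I6} | I4   |
|----------|------|----------|----------|------|
| I1       | 0.00 | 0.24     | 0.22     | 0.37 |
| {I2, I5} | 0.24 | 0.00     | 0.15     | 0.20 |
| {I3, I6} | 0.22 | 0.15     | 0.00     | 0.15 |
| I4       | 0.37 | 0.20     | 0.15     | 0.00 |

After merging {I2, I5} and {I3, I6}:

|                  | I1   | {I2, I5, I3, I6} | I4   |
|------------------|------|------------------|------|
| I1               | 0.00 | 0.22             | 0.37 |
| {I2, I5, I3, I6} | 0.22 | 0.00             | 0.15 |
| I4               | 0.37 | 0.15             | 0.00 |

After merging {I2, I5, I3, I6} and I4:

|                      | I1   | {I2, I5, I3, I6, I4} |
|----------------------|------|----------------------|
| I1                   | 0.00 | 0.22                 |
| {I2, I5, I3, I6, I4} | 0.22 | 0.00                 |

Dendrogram: leaf order 3, 6, 2, 5, 4, 1; height axis from 0 to 0.2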
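
The single-link merges for this six-point example can be checked with a short script. This is a sketch under stated assumptions: the distance values are the Euclidean distance matrix for I1 to I6 as read from this slide, the variable names are illustrative, and cluster distances are recomputed from the original matrix at every step instead of updating a reduced matrix. Two candidate merges tie at 0.15, and this code may resolve that tie in a different order than the slide, although the merge heights come out the same.

```python
labels = ["I1", "I2", "I3", "I4", "I5", "I6"]
D = [
    [0.00, 0.24, 0.22, 0.37, 0.34, 0.23],
    [0.24, 0.00, 0.15, 0.20, 0.14, 0.25],
    [0.22, 0.15, 0.00, 0.15, 0.28, 0.11],
    [0.37, 0.20, 0.15, 0.00, 0.29, 0.22],
    [0.34, 0.14, 0.28, 0.29, 0.00, 0.39],
    [0.23, 0.25, 0.11, 0.22, 0.39, 0.00],
]


def single_link(a, b):
    """Smallest original distance between a member of a and a member of b."""
    return min(D[i][j] for i in a for j in b)


clusters = [[i] for i in range(len(labels))]
merges = []
while len(clusters) > 1:
    # Pick the closest pair of clusters (ties go to the lowest list positions).
    d, x, y = min((single_link(clusters[x], clusters[y]), x, y)
                  for x in range(len(clusters))
                  for y in range(x + 1, len(clusters)))
    merged = clusters[x] + clusters[y]
    merges.append(([labels[i] for i in merged], d))
    clusters = [c for k, c in enumerate(clusters) if k not in (x, y)]
    clusters.append(merged)

heights = [d for _, d in merges]
for members, d in merges:
    print(members, d)
```

The recorded heights are the levels at which the dendrogram joins its branches, so cutting it at a given height returns the clusters formed by all merges below that height.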